Abstract

Metal additive manufacturing (AM) typically suffers from high degrees of variability in the properties/performance of the fabricated parts, particularly due to the lack of understanding and control over the physical mechanisms that govern microstructure formation during fabrication. This paper directly addresses an important problem in metal AM: the determination of the thermal history of the deposited material. Any attempts to link process to microstructure in AM would need to consider the thermal history of the material. In situ monitoring only provides partial information and simulations may be necessary to have a comprehensive understanding of the thermo-physical conditions to which the deposited material is subjected. We address this in the present work through linking thermal models to experiments via a computationally efficient surrogate modeling approach based on multivariate Gaussian processes (MVGPs). The MVGPs are then used to calibrate the free parameters of the multi-physics models against experiments, sidestepping the use of prohibitively expensive Monte Carlo-based calibration. This framework thus makes it possible to efficiently evaluate the impact of varying process parameter inputs on the characteristics of the melt pool during AM. We demonstrate the framework on the calibration of a thermal model for laser powder bed fusion AM of Ti-6Al-4V against experiments carried out over a wide window in the process parameter space. While this work deals with problems related to AM, its applicability is wider as the proposed framework could potentially be used in many other ICME-based problems where it is essential to link expensive computational materials science models to available experimental data.

Two-stage multi-variate statistical calibration of the finite element thermal model


Introduction

Integrated computational materials engineering (ICME) prescribes a framework for the acceleration in the development and deployment of materials through the establishment and exploitation of process-structure-property-performance (PSPP) relationships. PSPPs in turn can be established through linking materials models at multiple length (and possibly time) scales. The goal in ICME is to optimize the materials, manufacturing process, and component designs prior to part fabrication [1]. Inherently, ICME involves utilization of physics-based simulation models that aid in understanding the behavior of complex systems. These models use the system governing equations to compute and predict specific quantities of interest (QoIs). As a well-established fact, all of these simulation models are imperfect and thus their predictions will differ from the actual physical phenomena they are trying to describe.

The disagreement between the real-world and the model outputs can be attributed to one or more of the following factors: (1) incomplete understanding of the physical system, (2) incomplete information about model parameters, (3) incorrect values for the model inputs, (4) natural stochastic behavior of the system, and (5) uncertainties associated with available numerical simulation algorithms [1,2,3,4,5]. Hence, identification, characterization, and quantification of the uncertainties associated with these models become necessary in order to strengthen the robustness of model predictions, which is in turn essential if one is to use the models to guide the design/optimization of the systems (in this case, materials).

As an independent field of study, uncertainty quantification (UQ) seeks to address the challenges associated with the (unknown) uncertainties in models used to describe the behavior of complex systems. UQ is an established field that has been successfully applied to many areas including climate models [6], computational fluid dynamics [7], forestry [8], nuclear engineering [9], and econometrics [10]. Although UQ is a key need for computational materials models [11, 12], there is a literature gap in this area [13]. Reference [3] presented a review of the few existing works on UQ of multi-scale simulation models. More recently, reference [13] conducted UQ for a physics-based precipitation model of nickel-titanium shape memory alloys through combining experimental and computer simulation data.

Additive manufacturing (AM) is an area that can potentially benefit substantially from the ICME framework. First, AM processes such as laser powder bed fusion (L-PBF) are recognized for the high variability in the performance, composition, and microstructure of parts [14]. This variability is due to our incomplete understanding of the many complex and coupled phenomena that occur as energy interacts with raw material to produce a solid part [15]. Currently, many researchers work on developing simulation models at different scales to help understand different aspects of a specific AM process. For example for the L-PBF process, different simulation models exist that focus on different physical aspects, including characteristics of the powder bed, evolution of the melt pool, solidification process, and generation of residual stresses. Inevitably, these models introduce various sources of uncertainty [16]. Second, ICME provides the necessary infrastructure to support and accelerate qualification and certification [17], a key technological barrier of AM. Recently, references [18, 19] discussed the opportunities and challenges of how state-of-the-art UQ techniques can be used for predicting materials properties in AM processes.

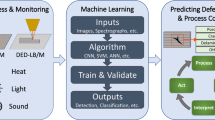

The purpose of this work is to conduct formal UQ for a computational materials model used to predict melt pool characteristics in L-PBF metal AM processes. More specifically, we perform statistical calibration of an FEM-based thermal model via surrogate (or reduced order) modeling and Bayesian inference. The statistical calibration problem (also known as the inverse UQ problem) refers to making inference on the posterior distributions of a set of calibration parameters such that model predictions are in agreement with experimental observations [20]. To the best of the authors’ knowledge, the current work is the first to conduct such rigorous calibration using a multivariate Gaussian process (GP)-based surrogate model. While the focus of the work is on specific physical phenomena associated with L-PBF AM, the overall framework can be readily adapted to address similar problems that involve systematic calibration of complex multiple-output computational materials models.

Prior to this work, UQ of ICME simulation models has classically been conducted using Monte Carlo methods (see [21,22,23,24,25]). However, for many computationally expensive models, Monte Carlo methods are impractical, and sometimes infeasible, because they require large numbers of simulation runs to acquire the statistics needed to adequately characterize model uncertainty. This is especially true of computational models for AM, which tend to be computationally demanding. To address this, we construct a surrogate model (also known as an emulator or meta-model): a statistical approximation that can be used in lieu of the original computationally expensive simulation model without sacrificing too much accuracy. Although surrogate modeling has been studied in prior works, one important distinguishing feature of the surrogate model developed in the present study is its ability to approximate simulation models that have multiple outputs or QoIs. This is an important feature since multi-output simulation models are quite common in science and engineering applications [26, 27]. Conventional UQ approaches for multi-output models typically ignore correlations that might exist among model outputs and conduct a separate UQ analysis for each output. This de-coupling overlooks the interdependence that may exist among multiple outputs of a single model.

The remainder of this paper is organized as follows. “Proposed Framework” has two subsections: “Melt Pool Modeling Through FEM-Based Thermal Modeling” introduces the FEM-based simulation model used in this work, and “Multivariate Statistical Calibration” describes the statistical framework used for building the surrogate and calibration models. “Results: Calibration of FEM Based Thermal Model using Ti-6Al-4V Tracks” reports the results of implementing the proposed calibration procedure for the melt pool FEM-based simulation model using both simulations and experimental characterization. “Conclusions and Future Work Directions” concludes the paper with a summary of findings and directions for future research.

Proposed Framework

This section first presents the physics-based FEM thermal model, followed by the multivariate statistical framework used to calibrate it. Readers who are less interested in the details of the mathematical and statistical developments can skip “Multivariate Statistical Calibration” and proceed directly to the results and discussion in “Results: Calibration of FEM Based Thermal Model using Ti-6Al-4V Tracks”.

Melt Pool Modeling Through FEM-Based Thermal Modeling

L-PBF processes offer attractive advantages and capabilities over conventional manufacturing techniques. These include, for example, greater geometric freedom, flexibility to customize parts, and, more recently, the potential to tailor the microstructures (and hence the properties) of fabricated parts. At the same time, they are very complex processes that involve several physical mechanisms, most of which are not yet fully understood. It is therefore crucial to develop a better understanding of the mechanisms that drive the thermal history within the part during fabrication. Ideally, in situ thermal monitoring can be used to capture information about thermal histories during fabrication. However, experimental measurement of the thermal field in L-PBF is extremely difficult due to challenges such as very high thermal gradients and cooling rates, micro-scale melt pool size, and emissivity variations. Consequently, numerical methods are needed to complement experiments in understanding the thermal history during the fabrication of L-PBF parts.

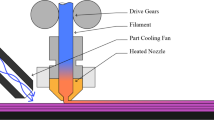

Formally, we define the melt pool as the region at the laser-powder interface in which metal powder particles fuse to form a pool of molten metal that solidifies after the laser beam moves to another location. In this paper, we develop a three-dimensional FEM-based thermal model, implemented in COMSOL Multiphysics, to study melt pool characteristics, including geometry and thermal profiles, during the fabrication of single tracks printed in a thin layer of powder on top of a solid substrate. The powder layer was modeled as a 30-μm-thick continuum medium over a 1-mm-thick substrate. Ti-6Al-4V alloy was selected as the material for both the powder layer and the substrate.

To ensure accurate analysis, second-order quadrilateral Lagrange elements with a size of 30 μm were used for the laser-powder interaction zone, while coarser tetrahedral elements were employed for the rest of the simulation domain. Because the second-order elements carry nodes at the midpoints of the sides and at the element center, the solution in the interaction zone is more accurate than that obtained with first-order elements of the same size, which are most commonly used in the literature for similar models. In addition, the mesh convergence study indicated that further reducing the fine element size greatly increases the computational cost while having a negligible influence on the melt pool size. Single-track simulations were run for a 3-mm-long track. Figure 1 shows a sample output of the model with melt pool temperature profiles. To reduce the computational cost, the simulation domain is smaller than that of the real experiments. A small domain might cause the solution to be adversely affected by the ambient-temperature boundary condition. To check this, trial simulations with varying domain size were conducted; as shown in Table 1, varying the domain size changes the melt pool dimensions by less than 1 μm.

An appreciable number of FEM-based thermal models have been developed to predict the thermal history and melt pool geometry during L-PBF. In these works, the effects of process parameters (e.g., laser power, scanning velocity, hatch spacing), material properties, and powder properties (e.g., particle size distribution, layer thickness) have been investigated. For these melt pool models, an appropriate powder bed model should be employed. Modeling of the powder bed has been done in two different ways: powder scale (refer to [28,29,30,31,32,33,34,35]) and continuum scale (refer to [36,37,38,39,40,41,42,43]). Although the first approach enables simulating the size variations and the local changes in the melt pool such as incomplete melting or formation of pores [28, 29], it is computationally expensive such that it is almost impossible to use it for full-part simulation. The latter approach, on the other hand, has been widely employed due to its relatively low computational cost and ease of implementation. While some studies have taken fluid dynamics effects in the melt pool into account (e.g., Marangoni convection in [33, 44, 45]), a significant number of works in the literature have neglected those effects to simplify the model (see for example [38,39,40, 43]). The change in volume during melting of the powder [42, 46, 47] and layer built-up were modeled in some studies [37, 41, 48, 49]. We refer the interested readers to review papers on numerical modeling and simulation of AM for more information [50,51,52].

The model used in this work accounts for several heat transfer mechanisms that take place during metal L-PBF. In particular, conduction, convection, radiation, phase transitions (namely, solid-to-liquid and liquid-to-gas transitions), latent heat of melting/evaporation, temperature dependent material properties, and the effective thermo-physical properties for the powder layer were considered. The heat conduction equation is given by the following:

where \(\rho (T)\) is the density, \(C_{p}(T)\) is the specific heat capacity, \(k(T)\) is the thermal conductivity, T is the temperature, t is the time, and Q is the volumetric heat flux. The variation in the material properties during phase change is described based on apparent heat capacity method in which the latent heat is accounted for as an extra term in the heat capacity description:

where \(k_{i} (T)\), \(\rho _{i} (T)\), \(C_{p,i} (T)\), and \(\phi _{i}\) denote the thermal conductivity, density, specific heat capacity, and volume fraction of phase i, respectively. During phase change, the value of \(\phi _{i}\) smoothly varies from 1 to 0. In this model, solid (i = 1), liquid (i = 2), and vapor (i = 3) phases are considered; hence, M is set to 3. \(L_{j\rightarrow j + 1}\) represents the latent heat and \(\alpha _{m,j \rightarrow j + 1}\) describes the mass fraction of the phase.
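For orientation, the transient heat conduction equation that matches the symbols defined above has the standard form below. This is a reconstruction from the surrounding definitions; the exact notation and arrangement of terms in the original equation are assumptions.

```latex
\[
\rho(T)\, C_{p}(T)\, \frac{\partial T}{\partial t}
  = \nabla \cdot \bigl( k(T)\, \nabla T \bigr) + Q
\]
```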

The effective density (ρeff) and the effective thermal conductivity (keff) of the powder are defined as follows [53]:

where \(\rho _{\text {solid}}\) and \(k_{\text {solid}}\) are the density and the thermal conductivity of the bulk solid. The decrease in the porosity of the powder during melting is described by \(\emptyset (T)\), where \(\emptyset (T)= 0\) implies full melting. n is an empirical parameter, set to 4 according to [53].
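The effective-property relations can be illustrated with a short sketch. The functional forms used here, \(\rho _{\text {eff}} = \rho _{\text {solid}}(1-\emptyset )\) and \(k_{\text {eff}} = k_{\text {solid}}(1-\emptyset )^{n}\), are a commonly used choice that is consistent with the definitions above, but they are an assumption rather than a transcription of the original equations. The function name and the numerical values are purely illustrative.

```python
def effective_powder_properties(rho_solid, k_solid, porosity, n=4):
    """Return (rho_eff, k_eff) for a powder layer treated as a continuum.

    Assumed forms (not confirmed by the text):
        rho_eff = rho_solid * (1 - porosity)
        k_eff   = k_solid   * (1 - porosity) ** n
    A porosity of 0 recovers the bulk solid values (full melting).
    """
    if not 0.0 <= porosity < 1.0:
        raise ValueError("porosity must lie in [0, 1)")
    solid_fraction = 1.0 - porosity
    rho_eff = rho_solid * solid_fraction
    k_eff = k_solid * solid_fraction ** n
    return rho_eff, k_eff


# Illustrative inputs only: density in kg/m^3, conductivity in W/(m K).
rho_eff, k_eff = effective_powder_properties(
    rho_solid=4420.0, k_solid=7.0, porosity=0.4
)
print(f"rho_eff = {rho_eff:.1f} kg/m^3, k_eff = {k_eff:.3f} W/(m K)")
```

With n = 4, the conductivity falls off much faster with porosity than the density does, which is one reason the porosity has a strong effect on the predicted thermal field.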

Temperature-dependent material properties of Ti-6Al-4V as reported in [54] are used for the bulk solid phase. Equations 6 and 7 are used to calculate the effective density and thermal conductivity of the powder, while the specific heat capacity of the powder was assumed to be the same as that of the bulk solid, as suggested in the majority of previous works in the literature (see [41,42,43, 53, 55,56,57,58,59]). The density and heat capacity of the liquid were extrapolated according to [59]. Note that for simplification and to reduce the computational time, the model only accounts for conduction mode melting and neglects fluid dynamics effects in the melt pool. However, to account for the effect of Marangoni convection on the melt pool size and geometry, the thermal conductivity of the liquid was increased according to [41, 56, 59]. This was achieved by multiplying the thermal conductivity of the bulk solid phase at the melting temperature (29 W/(m·K)) by a constant multiplier, denoted by \(\theta _{3}\) in “Results: Calibration of FEM Based Thermal Model using Ti-6Al-4V Tracks”.

The laser beam was defined as a two-dimensional Gaussian distributed moving heat source. The initial temperature of the build was set to the ambient temperature (298 K). Natural convection and radiation were applied as boundary conditions on the powder surface:

where h is the convective heat transfer coefficient, set to 14 W/(m²·K); \(T_{\text {amb}}\) is the ambient temperature (298 K); T is the current temperature; \(\epsilon \) is the emissivity (0.7 according to [57]); and \(\sigma _{\mathrm {B}}\) is the Stefan–Boltzmann constant. To account for evaporation, a heat flux on the powder surface, as described in Eq. 10, was applied as a boundary condition:

where \(m_{\mathrm {v}}\) and \(L_{\mathrm {v}}\) stand for the mass of vapor and the latent heat of evaporation, respectively. The mass of vapor is calculated based on the volume fraction of the vapor phase (ϕ3). A symmetry boundary condition was applied along the scanning path to reduce the computational cost. All other boundaries were maintained at the ambient temperature. Finally, it is worth mentioning that the keyhole effect is not observed in the experimental settings employed in this study (see “Experimental Measurements”). Hence, it is not accounted for in the model. More details regarding the physical description of the model can be found in [58].
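The surface terms described above can be sketched as follows. The convective and radiative losses use the standard Newton cooling and Stefan–Boltzmann forms with the parameter values quoted in the text. The Gaussian source uses a common \(1/e^{2}\)-radius convention, which is an assumption, as are the function names and the choice of the x axis as the scan direction.

```python
import math

SIGMA_B = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def surface_heat_loss(T, T_amb=298.0, h=14.0, emissivity=0.7):
    """Heat flux leaving the surface (W/m^2) by natural convection and radiation."""
    convection = h * (T - T_amb)
    radiation = emissivity * SIGMA_B * (T ** 4 - T_amb ** 4)
    return convection + radiation


def gaussian_laser_flux(x, y, t, power, absorptivity, speed, beam_radius):
    """Absorbed surface flux (W/m^2) of a Gaussian beam moving along +x.

    Assumption: beam_radius is the 1/e^2 radius, so the flux integrates to
    absorptivity * power over the whole surface.
    """
    r_squared = (x - speed * t) ** 2 + y ** 2
    peak = 2.0 * absorptivity * power / (math.pi * beam_radius ** 2)
    return peak * math.exp(-2.0 * r_squared / beam_radius ** 2)


# Illustrative evaluation: loss from a surface at 2000 K.
print(f"{surface_heat_loss(2000.0):.3e} W/m^2")
```

The absorptivity enters the source term as a direct multiplier on the laser power, which is why it is a natural candidate for calibration.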

Since the thermal model takes different physical mechanisms into account, multiple material parameters influence the model results and thus its predictive capability. Preliminary simulation experiments identified the following parameters as contributing most to the variability in model outputs: (1) laser absorptivity, (2) powder bed porosity, and (3) thermal conductivity of the liquid. Laser absorptivity is a function of temperature and takes different values for powder, solid, and liquid material. Several factors (e.g., beam intensity, wavelength, temperature, oxidation, and powder size and distribution) affect the absorptivity, which makes it difficult to measure experimentally. Note that while low values of absorptivity result in insufficient energy input and incomplete melting of the powder particles, very high values lead to overheating of the particles and hence overestimation of the melt pool size.

As noted above, the thermal conductivity of the liquid is increased to account for the effect of Marangoni convection on the melt pool size and geometry [39, 59, 60]. However, there is no consensus in the community on the magnitude of this increase. Powder porosity is used as an input to predict the effective thermo-physical properties (thermal conductivity, density, heat capacity) of the powder layer and therefore has a significant influence on the predicted thermal distribution. For these reasons, all three parameters are treated as calibration parameters.

It is worth pointing out the key simplifying assumptions of the FEM thermal model used in this work. First, in order to reduce the computation time, the fluid dynamic phenomena occurring within the melt pool are neglected. Second, the powder bed is assumed as a continuum layer with effective thermo-physical properties. The porosity of the powder bed will be later treated as a calibration parameter in the remainder of the work. Finally, the volume shrinkage resulting from melting of the powder is neglected.

Multivariate Statistical Calibration

Having described the melt pool FEM-based simulation model in “Melt Pool Modeling Through FEM-Based Thermal Modeling”, we now describe the multivariate statistical framework that will be employed to calibrate that model. This approach is referred to as calibration of computer models by [61]. We emphasize that although AM is our focus application platform, the framework developed in this section can be readily generalized to other problems.

Before we introduce the mathematical formulations, we establish notation and some definitions. Since the word model will be employed to refer to different types of models that constitute the building blocks of the framework, we clearly define specific cases to avoid misinterpretation and ambiguity. We use computer model to denote a computational model implemented via a computer code that simulates and recreates any process (physical, social, mathematical, etc.) by a set of calculations derived from proper study of the process. One example of a computer model is the thermal model explained in Section “Melt Pool Modeling Through FEM-Based Thermal Modeling”. The term statistical model will refer to the calibration methodology presented in this section, and is sub-divided into two key components: the surrogate model and the calibration model, which will be defined in the following paragraphs.

Previous approaches for the calibration of computer models using rigorous statistics rely on Monte Carlo (MC) methods. While MC methods are extremely valuable and well studied, they require large numbers of simulations (sometimes on the order of 15,000–20,000), which makes them impractical for calibrating computationally expensive models. One way to overcome this challenge is a two-stage approach based on surrogate modeling (also called meta-modeling or emulation), as suggested in a series of works [61,62,63,64]. The surrogate model is thus the computationally efficient statistical approximation of the original computer model.

In the calibration problem, whether or not a surrogate model is used, we distinguish between two different types of inputs to the computer model [13]:

- Control inputs (denoted by \(\boldsymbol {x}\)) are inputs to the computer model that are directly set to known pre-determined values by the user. Examples of control inputs in some computer models include temperature, pressure, or velocity.

- Calibration parameters (denoted by \(\boldsymbol {\theta }\)) are inputs or parameters to the computer model that are unknown with certainty, or not measurable, at the time of simulation, but do influence the results of the computations. Examples include material properties or unknown physical constants.

The goal of the calibration model is thus to estimate the calibration parameters such that the computer model simulations agree with experimental observations of the real process being simulated [13]. Formally, the statistical model follows the equation:

where the experimental observation \(\boldsymbol {y}^{E}\) of the real process run at some values of control inputs \(\boldsymbol {x}\) is equal to the sum of the response of the computer model \(\boldsymbol {y}^{S}\), a discrepancy (or inadequacy) function \(\boldsymbol {\delta }\), and some measurement error \(\boldsymbol {\epsilon }\). The objective is to estimate the values of the calibration parameters \(\boldsymbol {\theta }^{\star }\). Definitions for each term in Eq. 11 will be provided as we briefly describe the two stages of the statistical model in the following subsections. More details can be found in the Appendices. It is worth mentioning that parameter calibration for our FEM-based thermal model is specific to the material system being processed using AM. Hence, if a new material system is being considered, the parameters must be re-calibrated, which necessitates generating a new set of experimental data.
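Written out from the verbal description above, the statistical model takes the additive form below. The notation is reconstructed from the surrounding definitions and may differ in detail from the original display of Eq. 11.

```latex
\[
\boldsymbol{y}^{E}(\boldsymbol{x})
  = \boldsymbol{y}^{S}\left(\boldsymbol{x}, \boldsymbol{\theta}^{\star}\right)
  + \boldsymbol{\delta}(\boldsymbol{x})
  + \boldsymbol{\epsilon}(\boldsymbol{x})
\]
```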

Multivariate Surrogate Model

In this section, we build a multivariate surrogate model that replaces the computer model \(\boldsymbol {y}^{S}\left (\boldsymbol {x},\boldsymbol {\theta }\right )\) in Eq. 11. For detailed derivations, the interested reader is encouraged to study the work by [65]. Note that we define the computer model as a function \(\boldsymbol {y}^{S}\) \(=\) \(\boldsymbol {f}\left (\cdot \right )\) that takes as input the control inputs \(\boldsymbol {x}\) and the calibration parameters \(\boldsymbol {\theta }\), and returns a q-dimensional response vector \(\boldsymbol {y}^{S}\in \mathbb {R}^{q}\). The inputs \(\boldsymbol {x}\) and parameters \(\boldsymbol {\theta }\) lie in some multidimensional spaces \(\mathcal {X}\subseteq \mathbb {R}^{p}\) and \(\mathcal {T}\subseteq \mathbb {R}^{t}\), respectively. Thus, the computer model \(\boldsymbol {f}\) is essentially a mapping \(\boldsymbol {f}:\mathcal {X}\times \mathcal {T}\mapsto \mathbb {R}^{q}\). Although \(\boldsymbol {f}\) is a deterministic function (that is, if run multiple times at the same input values, it returns the same responses), in order to approximate it with a surrogate model, we can regard \(\boldsymbol {f}\) as a stochastic process [65].

As mentioned in “Introduction”, we employ GP models that are known for their attractive mathematical and computational properties [66]. The GP model assumes that given any finite collection of inputs \( \boldsymbol {x}_{1},\ldots ,\boldsymbol {x}_{n} \) the model outputs \(\boldsymbol {f}(\boldsymbol {x}_{1}),\ldots ,\boldsymbol {f}(\boldsymbol {x}_{n})\) will follow a q-dimensional Gaussian process. Hence, their joint probability distribution becomes a matrix-variate normal distribution

with mean matrix \(\boldsymbol {m}\) and cross-covariance matrix \(\boldsymbol {C}\), which is fully defined by some set of hyperparameters Φ. The multivariate q-dimensional GP is denoted as

where \(c\left (\cdot ,\cdot \right )\) is a positive definite correlation function accounting for correlation in the input space with \(c\left (\boldsymbol {x},\boldsymbol {x}\right )= 1\), and \(\boldsymbol {{\Sigma }}\in \mathbb {R}_{+}^{q,q}\) is a positive definite matrix accounting for correlations between outputs.
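One common way to write a multivariate GP with this separable structure is shown below. It is a sketch consistent with the roles of \(\boldsymbol {m}\), \(c\) and \(\boldsymbol {{\Sigma }}\) described above; the conditioning notation and the explicit covariance identity are assumptions rather than a transcription of the original equation.

```latex
\[
\boldsymbol{f}(\cdot) \mid \boldsymbol{m}, c, \boldsymbol{\Sigma}
  \sim \mathcal{GP}_{q}\bigl( \boldsymbol{m}(\cdot),\; c(\cdot,\cdot)\,\boldsymbol{\Sigma} \bigr),
\qquad
\operatorname{Cov}\bigl[ f_{i}(\boldsymbol{x}),\, f_{j}(\boldsymbol{x}') \bigr]
  = c(\boldsymbol{x}, \boldsymbol{x}')\, \Sigma_{ij}
\]
```

Under this form, \(c\) controls how quickly outputs decorrelate as the inputs move apart, while \(\Sigma _{ij}\) carries the coupling between outputs i and j at a fixed input.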

The first step to build the GP surrogate model is to run the computer model for several simulations in order to gather a set of data that will be used to train the surrogate model. We choose to employ the Latin hypercube sampling (LHS) method given its ability to explore the input space uniformly and homogeneously. The training data set consists of N points and is denoted by

where \(\boldsymbol {X}^{S}\) is an \(N\times \left (p+t\right )\) input matrix and \(\boldsymbol {Y}^{S}\) is an \(N\times q\) output matrix.
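The construction of the training design can be sketched with a small Latin hypercube sampler. This is a minimal standard-library illustration, not the authors' implementation. The variable ordering (two control inputs followed by the three calibration parameters) and all numerical ranges are hypothetical placeholders.

```python
import random


def latin_hypercube(n_points, bounds, seed=None):
    """Return n_points samples; each dimension has one point per stratum.

    bounds is a list of (low, high) pairs, one per input dimension.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        strata = list(range(n_points))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        column = [
            low + (s + rng.random()) / n_points * (high - low) for s in strata
        ]
        columns.append(column)
    return [list(row) for row in zip(*columns)]


# Hypothetical ranges: the first two columns stand for control inputs x,
# the last three for calibration parameters theta.
bounds = [
    (50.0, 500.0),   # laser power, W (assumed control input)
    (0.1, 2.5),      # scan speed, m/s (assumed control input)
    (0.3, 0.9),      # laser absorptivity
    (0.2, 0.6),      # powder bed porosity
    (1.0, 15.0),     # liquid thermal conductivity multiplier
]
X_S = latin_hypercube(n_points=20, bounds=bounds, seed=0)
print(len(X_S), len(X_S[0]))
```

Each row of `X_S` would then be passed to the computer model to obtain the corresponding row of \(\boldsymbol {Y}^{S}\). A library sampler such as `scipy.stats.qmc.LatinHypercube` could be used instead of the hand-written routine.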

Appendix A describes how to estimate the GP hyperparameters. Specifically, the procedure to find the posterior distribution of the roughness parameters \(\boldsymbol {r}\) through a Bayesian approach is explained. After estimation of the hyperparameters, we assess the performance of the GP surrogate model through k-fold cross validation (CV). CV is a common technique to evaluate the adequacy of predictive models, including surrogate models, by computing a metric that captures the deviation between the predictions obtained using the predictive model (the surrogate model in our case) and the true quantity being predicted (computer model predictions in our case). Put simply, our target is to ensure that predictions obtained using the surrogate model are close to those obtained using the original computer model. In a CV procedure, we partition the training dataset \(\left (\boldsymbol {X}^{S},\boldsymbol {Y}^{S}\right )\) into k disjoint partitions. \(k-1\) of these partitions are used to train the surrogate model, and then predictions are made on the left-out partition using Eq. 21. These predictions are then compared with the computer model predictions. This process is iterated k times, such that at every iteration a different partition is left out, and after all k iterations every partition has been left out exactly once. Finally, the performance metric is computed. Many metrics have been reported in the literature on predictive modeling and machine learning. We utilize the well-known mean absolute percentage error (MAPE) defined as

where \(y_{i,j}^{S}\) is j-th element of the computer model output at input \(\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i}\), and \(\hat {y}_{i,j}^{S}\) is the j-th element of the surrogate model prediction evaluated at the same input \(\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i}\) using the estimated values for \(\boldsymbol {r}\).
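The cross-validation loop and the error metric can be sketched as follows. The `fit` and `predict` callables are hypothetical stand-ins for training the surrogate and evaluating its predictor. Reporting one MAPE value per output, and the particular way the folds are formed, are assumptions, since the exact normalization of the original definition is not reproduced here.

```python
def kfold_indices(n_points, k):
    """Split indices 0..n_points-1 into k disjoint folds of near-equal size."""
    return [list(range(start, n_points, k)) for start in range(k)]


def mape_per_output(y_true, y_pred):
    """MAPE in percent for each output column of two N x q nested lists."""
    n, q = len(y_true), len(y_true[0])
    return [
        100.0 / n
        * sum(abs((y_true[i][j] - y_pred[i][j]) / y_true[i][j]) for i in range(n))
        for j in range(q)
    ]


def cross_validate(X, Y, k, fit, predict):
    """k-fold CV: every point is predicted exactly once by a model not trained on it.

    fit(X_train, Y_train) -> model
    predict(model, X_test) -> list of q-dimensional predictions
    """
    n = len(X)
    Y_hat = [None] * n
    for fold in kfold_indices(n, k):
        held_out = set(fold)
        train = [i for i in range(n) if i not in held_out]
        model = fit([X[i] for i in train], [Y[i] for i in train])
        predictions = predict(model, [X[i] for i in fold])
        for i, row in zip(fold, predictions):
            Y_hat[i] = row
    return mape_per_output(Y, Y_hat)
```

A per-output metric makes it easy to see whether one QoI is emulated noticeably worse than the others.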

If the CV results are unsatisfactory (high MAPE), more data is required. Appendix B provides guidelines for an efficient way of acquiring more training data using adaptive sampling for the GP surrogate model.

Multivariate Calibration Model

Once the surrogate model has been adequately constructed, it can be used in lieu of the original computer model in Eq. 11 to generate the sufficiently large number of simulations needed to calibrate the parameters \(\boldsymbol {\theta }\). Some of the steps in developing the following calibration procedure follow the work of [67].

We start by elucidating the two remaining terms of the statistical model given in Eq. 11. The term \(\delta \left (\boldsymbol {x}\right )\) is a discrepancy or model inadequacy function. This function accounts for factors that result in deviation between the computer model predictions and the real process being simulated, including missing physics, simplifying assumptions, and numerical errors. The term \(\epsilon \left (\boldsymbol {x}\right )\) models the measurement error associated with experimental observations. Note that both of these terms depend only on control inputs \(\boldsymbol {x}\), since the calibration parameters are not changed or controlled in experiments.

Similar to what was done with the surrogate model in “Multivariate Surrogate Model”, we model \(\delta \left (\cdot \right )\) as a multivariate q-dimensional GP,

with mean function that is equal to 0 for all elements, and a stationary squared exponential correlation function

where \(R_{\delta }=\text {diag}\left (\boldsymbol {r}_{\delta }\right )\) is a diagonal matrix of positive roughness parameters with \(\boldsymbol {r}_{\delta }=\left [r_{1}^{\left (\delta \right )},\ldots ,r_{p}^{\left (\delta \right )}\right ]\in \mathbb {R}_{+}^{p}\), and the covariance matrix of the model outputs \(\boldsymbol {{\Sigma }}_{\delta }=\text {diag}\left (\boldsymbol {\sigma }_{\delta }\right )\) is a diagonal matrix with positive variances \(\boldsymbol {\sigma }_{\delta }=\left [\sigma _{1},\ldots ,\sigma _{q}\right ]\in \mathbb {R}_{+}^{q}\).

The measurement error term \(\epsilon \left (\cdot \right )\) is also modeled as a multivariate q-dimensional GP,

with mean function equal to 0 for all elements, and correlation function given by the Kronecker delta function

and noise matrix \(\boldsymbol {{\Sigma }}_{\epsilon }=\text {diag}\left (\boldsymbol {\psi }\right )\) with positive noise variances \(\boldsymbol {\psi }=\left [\psi _{1},\ldots ,\psi _{q}\right ]\in \mathbb {R}_{+}^{q}\).
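The two correlation structures described above can be combined into a per-output covariance matrix, as in the sketch below. Because \(\boldsymbol {{\Sigma }}_{\delta }\) and \(\boldsymbol {{\Sigma }}_{\epsilon }\) are diagonal, each output j can be handled separately with variance \(\sigma _{j}\) and noise variance \(\psi _{j}\). The exact parameterization of the squared exponential (for example, whether a factor of 1/2 appears in the exponent) is an assumption, and the function names are illustrative.

```python
import math


def sq_exp_correlation(x1, x2, roughness):
    """Stationary squared exponential: exp(-sum_k r_k * (x1_k - x2_k)^2)."""
    return math.exp(-sum(r * (a - b) ** 2 for r, a, b in zip(roughness, x1, x2)))


def kronecker_delta(x1, x2):
    """1 if the two input points coincide, 0 otherwise."""
    return 1.0 if list(x1) == list(x2) else 0.0


def discrepancy_plus_noise_cov(X, roughness, sigma_j, psi_j):
    """n x n covariance of delta_j(x) + epsilon_j(x) for a single output j."""
    return [
        [
            sigma_j * sq_exp_correlation(a, b, roughness)
            + psi_j * kronecker_delta(a, b)
            for b in X
        ]
        for a in X
    ]


# Illustrative inputs: three experiments with p = 2 control inputs each.
X_E = [[0.0, 0.0], [0.5, 0.2], [1.0, 1.0]]
K = discrepancy_plus_noise_cov(X_E, roughness=[2.0, 1.0], sigma_j=0.3, psi_j=0.05)
```

The measurement noise only adds to the diagonal, whereas the discrepancy term links experiments run at nearby process settings.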

In order to build the calibration model, we need another data set which is constructed from experimental observations. The procedure to obtain the experimental data is explained in “Experimental Measurements”. We denote this data set as

where \(\boldsymbol {X}^{E}\) is an \(n\times p\) controllable input matrix and \(\boldsymbol {y}^{E}\left (\boldsymbol {x}\right )\) is the result of the experiment observed at \(\boldsymbol {x}\); thus, \(\boldsymbol {Y}^{E}\) is an \(n\times q\) matrix. It is worth mentioning that the size n of this data set may differ from the simulation data set size N, and that only control inputs \(\boldsymbol {x}\) are used in the context of physical experiments (as opposed to \(\left (\boldsymbol {x},\boldsymbol {\theta }\right )\) tuples for both the computer and surrogate models).

Having the dataset \(\left (\boldsymbol {X}^{E},\boldsymbol {Y}^{E}\right )\), we estimate the posterior distributions for the calibration parameters and hyperparameters. We follow a Bayesian approach, in which the posterior distribution of the hyperparameters \(\boldsymbol {{\Phi }}_{\text {cal}}\) is given by

The distributions are computed using the Metropolis-Hastings algorithm after adequate selection of the prior distributions \(\pi \left (\boldsymbol {\theta },\boldsymbol {r}_{\delta },\boldsymbol {\sigma }_{\delta },\boldsymbol {\psi }\right )\). The formulations required for estimating the hyperparameters \(\boldsymbol {{\Phi }}_{\text {cal}}\) can be found in Appendix C.

After determining these posterior distributions, the last remaining step is to construct a predictor that can be used to compute model predictions at input settings that have not been previously simulated or experimentally measured. For this we rely on the Kriging technique, also known as the best linear unbiased predictor (BLUP) [68]. Details of the Kriging technique are provided in Appendix D.

To assess the performance of the calibrated model, a cross validation (CV) procedure similar to the one described for the surrogate model in “Multivariate Surrogate Model” can be used. The mean absolute percentage error (MAPE) can be computed using Eqs. 27 and 28. The key difference is the fact that in this case, simulations from the calibrated surrogate model are compared with experimental measurements, in contrast to comparing surrogate model predictions with the computer model predictions.

Results: Calibration of FEM-Based Thermal Model Using Ti-6Al-4V Tracks

The statistical calibration procedure is conducted on the FEM-based thermal model described in Section “Melt Pool Modeling Through FEM Based Thermal Modeling”. Following our definitions, the thermal model represents the computer model, and the two terms will be used interchangeably in the remainder of the text. This computer model predicts the three-dimensional thermal profiles of the moving melt pool during L-PBF AM. It is reported in the literature that the melt pool temperature and geometry (depth and width) are important factors influencing the outcome of the L-PBF process [69]. The inputs and outputs of the computer model are described as follows:

- Two control inputs
  - \(x_{1}\): laser power (W)
  - \(x_{2}\): laser scan speed (mm/s)
- Three calibration parameters
  - \(\theta _{1}\): powder bed porosity (%)
  - \(\theta _{2}\): laser absorptivity (%)
  - \(\theta _{3}\): coefficient of thermal conductivity for liquid (\({\frac {\mathrm {W}}{\mathrm {m} \cdot \mathrm {K}}}\))
- Three model outputs (or quantities of interest, QoIs)
  - \({y_{1}^{S}},{y_{1}^{E}}\): melt pool depth into the solid substrate (μm)
  - \({y_{2}^{S}},{y_{2}^{E}}\): melt pool width (μm)
  - \({y_{3}^{S}},{y_{3}^{E}}\): melt pool peak temperature (°C)

The chosen control inputs (laser power and speed) are known to have the most significant effect on the melt pool characteristics, and are commonly studied by AM researchers (see for example [68]). In terms of the notation defined in “Multivariate Statistical Calibration”, we have the length of the control inputs vector \(p = 2\), and lengths of the calibration parameters vector and computer model outputs vector \(t=q = 3\). We test the performance of the proposed multivariate calibration procedure by studying the melt pool conditions while fabricating single tracks of Ti-6Al-4V. We derive the posterior distributions of the calibration parameters \(\boldsymbol {\theta }\) using a synthesis of computer model simulations denoted by matrices \(\boldsymbol {X}^{S}\) and \(\boldsymbol {Y}^{S}\), and experimental observations denoted by matrices \(\boldsymbol {X}^{E}\) and \(\boldsymbol {Y}^{E}\). We start by constructing a GP-based surrogate model using a data set of computer model simulations (“Building the Surrogate Model”). Next, we conduct manufacturing and characterization experiments to collect the data required for calibrating the model (“Experimental Measurements”). Finally, we conduct model calibration and prediction (see “Prediction Using the Calibrated Model”).

Building the Surrogate Model

Since L-PBF processes involve complicated physical phenomena with different forms of heat and mass transfer and material phase transitions, the run-times for computer simulation models are typically long. This necessitates the use of computationally efficient surrogate models, both for conducting calibration and for process planning and optimization. In the present case, the execution time for the FEM-based thermal model depended on the model inputs (control inputs and calibration parameters). From initial test simulation runs, execution times ranged from 30 min to 5 h. Hence, performing a traditional Markov chain Monte Carlo (MCMC) with 50,000 iterations would take approximately 800 weeks. Furthermore, MCMC sampling strategies preclude the use of embarrassingly parallel modes of execution to improve computational time. Instead, we use the two-stage surrogate-modeling approach explained in “Multivariate Surrogate Model” to address this challenge.
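The order of magnitude of this estimate can be checked with a short back-of-the-envelope calculation. The sketch below assumes one FEM run per MCMC iteration, executed serially, and takes the midpoint of the reported run-time range (2.75 h) as a representative run time; that midpoint is an assumption made here for illustration, not a figure stated in the text.

```python
# Rough serial cost of running MCMC directly on the FEM thermal model.
HOURS_PER_WEEK = 24 * 7


def serial_mcmc_weeks(n_iterations, hours_per_run):
    """Weeks needed if every MCMC iteration requires one serial FEM run."""
    return n_iterations * hours_per_run / HOURS_PER_WEEK


n_iter = 50_000
weeks_fastest = serial_mcmc_weeks(n_iter, 0.5)    # every run takes 30 min
weeks_slowest = serial_mcmc_weeks(n_iter, 5.0)    # every run takes 5 h
weeks_midpoint = serial_mcmc_weeks(n_iter, 2.75)  # assumed representative run time

print(f"fastest runs:  {weeks_fastest:7.0f} weeks")
print(f"midpoint runs: {weeks_midpoint:7.0f} weeks")
print(f"slowest runs:  {weeks_slowest:7.0f} weeks")
```

Even in the most favorable case, the serial cost is on the order of years, which is why the chain is run on the surrogate rather than on the FEM model itself.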

To build the surrogate model, a training data set from the original FEM-based thermal model is first needed. This data set consists of the two matrices \(\boldsymbol {X}^{S}\) and \(\boldsymbol {Y}^{S}\) introduced earlier, representing simulation inputs and outputs, respectively. We use the Latin hypercube sampling (LHS) strategy to uniformly select design points from the control input and calibration parameters space, \(\mathcal {X}\times \mathcal {T}\). The lower and upper bounds for the control input space \(\mathcal {X}\) were chosen as \(\mathcal {X}_{\min }=\left \lbrace 30~\mathrm {W},80\text { mm}/\mathrm {s}\right \rbrace \) and \(\mathcal {X}_{\max }=\left \lbrace 50~\mathrm {W},400\text { mm}/\mathrm {s}\right \rbrace \). These bounds were determined based on prior knowledge of the commercial metal L-PBF system used in this study and machine specifications. The lower and upper bounds for the calibration parameter space were chosen as \(\mathcal {T}_{\min }=\left \lbrace 20 \%, 40 \%, 1 \right \rbrace \) and \(\mathcal {T}_{\max }=\left \lbrace 70 \%, 90 \%, 25 \right \rbrace \). These bounds were specified by the AM researchers based on previous values reported in the literature to construct an initial region within which the true values of \(\boldsymbol {\theta }\) are believed to lie. A simulation data set of size \(N = 130\) was generated over the \(\mathcal {X}\times \mathcal {T}\) space. Hence, \(\boldsymbol {X}^{S}\) is an \(N \times (p+t)\) matrix with 130 different and uniformly selected \((\boldsymbol {x},\boldsymbol {\theta })\) combinations, and \(\boldsymbol {Y}^{S}\) is an \(N \times q\) matrix whose elements are the outputs of the thermal model for input \(\boldsymbol {X}^{S}\). To accelerate the process, simulations were conducted using the Texas A&M 843-node high-performance computing cluster. Each simulation was conducted on a single node with 20 cores. Multiple nodes were used for cross validation purposes (see “Multivariate Surrogate Model”).
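The design step above can be illustrated with a minimal Latin hypercube sampler. This is a sketch using only the Python standard library, not the authors' implementation; the function name `latin_hypercube` and the seed are hypothetical, and the upper bound on laser power is taken as the 50 W system maximum quoted in the experimental section.

```python
import random


def latin_hypercube(n_points, lower, upper, seed=0):
    """Draw n_points Latin hypercube samples inside the box [lower, upper].

    Each dimension is split into n_points equal strata and every stratum
    is used exactly once, which spreads the design over the whole range.
    """
    rng = random.Random(seed)
    columns = []
    for lo, hi in zip(lower, upper):
        strata = list(range(n_points))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        width = (hi - lo) / n_points
        # One uniformly placed point inside each stratum.
        columns.append([lo + (s + rng.random()) * width for s in strata])
    # Transpose: one tuple (x1, x2, theta1, theta2, theta3) per design point.
    return list(zip(*columns))


# Bounds on (power W, speed mm/s, porosity %, absorptivity %, conductivity coefficient).
# Assumption: the power upper bound is the 50 W maximum of the L-PBF system.
LOWER = (30.0, 80.0, 20.0, 40.0, 1.0)
UPPER = (50.0, 400.0, 70.0, 90.0, 25.0)

design = latin_hypercube(130, LOWER, UPPER)
print(len(design), "design points; first:", [round(v, 2) for v in design[0]])
```

Each row of `design` would correspond to one FEM simulation, so the 130 rows together play the role of \(\boldsymbol {X}^{S}\).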

Recall from Eq. 20 that the conditional posterior distribution of \(\boldsymbol {f}(\cdot )\) given the simulation training data \((\boldsymbol {X}^{S},\boldsymbol {Y}^{S})\) and roughness parameters \(\boldsymbol {r}\) is a q-variate T process. The Bayesian approach was then used to estimate the roughness parameters. To ensure their positivity, log-logistic prior distributions for the elements of \(\boldsymbol {r}\) with both scale and shape parameters equal to 1 were used (see Eq. 24). Next, using the single-component Metropolis-Hastings algorithm, the posterior distributions of \(\boldsymbol {r}\) were generated after 50,000 iterations with 25% burn-in period and thinning every fifth sample. Figure 2 shows the histograms and kernel density estimates of the posterior distributions for the roughness parameters. We observe that the posteriors are informative, i.e., unimodal and suggesting specific values for the roughness parameters. The modes of these distributions were used as the estimates for the roughness parameters \(\boldsymbol {r}\). At this stage, the surrogate model is built and ready to use: once the roughness parameters have been estimated, the output of the computer model at any given combination of \((\boldsymbol {x},\boldsymbol {\theta })\) can be estimated using Eq. 21. A confidence interval for this estimate can also be determined using Eq. 22. The surrogate model allows for significant savings in computational time, since predictions can be made almost instantaneously using the GP after the hyperparameters have been estimated. By contrast, a prediction run of the simulation model would take 1–4 h depending on the process parameter inputs.
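The sampling scheme described above can be sketched as follows. This is an illustrative, standard-library-only version: the function `toy_log_likelihood` is a hypothetical placeholder for the GP likelihood of the roughness parameters (it is not the model's actual likelihood), the log-scale random-walk proposal and its step size are assumptions, and the chain length is shortened to 5,000 iterations so the example runs quickly.

```python
import math
import random


def log_loglogistic_prior(r):
    """Log-density of a log-logistic prior with scale = shape = 1: p(r) = 1 / (1 + r)^2."""
    return -2.0 * math.log1p(r) if r > 0 else -math.inf


def toy_log_likelihood(r):
    """Hypothetical placeholder likelihood, peaked near r = (2.0, 0.5)."""
    target = (2.0, 0.5)
    return -sum((math.log(ri) - math.log(ti)) ** 2 / (2 * 0.3 ** 2)
                for ri, ti in zip(r, target))


def log_posterior(r):
    return toy_log_likelihood(r) + sum(log_loglogistic_prior(ri) for ri in r)


def single_component_mh(log_post, start, n_iter, step=0.3, seed=0):
    """Update one component at a time with a log-scale random-walk proposal."""
    rng = random.Random(seed)
    current = list(start)
    current_lp = log_post(current)
    chain = []
    for _ in range(n_iter):
        for j in range(len(current)):
            proposal = list(current)
            # Multiplicative move keeps the roughness parameter positive.
            proposal[j] = current[j] * math.exp(step * rng.gauss(0.0, 1.0))
            proposal_lp = log_post(proposal)
            # Hastings correction for the asymmetric (log-normal) proposal.
            log_alpha = (proposal_lp - current_lp
                         + math.log(proposal[j]) - math.log(current[j]))
            if rng.random() < math.exp(min(0.0, log_alpha)):
                current, current_lp = proposal, proposal_lp
        chain.append(tuple(current))
    return chain


def burn_and_thin(chain, burn_fraction=0.25, thin=5):
    """Discard the burn-in portion, then keep every `thin`-th sample."""
    return chain[int(len(chain) * burn_fraction)::thin]


chain = single_component_mh(log_posterior, start=(1.0, 1.0), n_iter=5000)
kept = burn_and_thin(chain)
posterior_means = [sum(s[j] for s in kept) / len(kept) for j in range(2)]
print(len(kept), "retained samples; posterior means:", posterior_means)
```

Updating one component per step keeps each proposal one-dimensional, which tends to make step-size tuning simpler than for a joint proposal. The retained samples can then be summarized by a histogram or kernel density estimate, from which a mode would be read off as the point estimate.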

It is necessary to validate and assess the performance of the surrogate model once the hyperparameters \(\boldsymbol {{\Phi }}_{\text {sim}}\) are estimated. A tenfold cross validation was performed for the surrogate model and the results are displayed in Fig. 3a, b, c, corresponding to the three model outputs: melt pool depth, width, and peak temperature, respectively. In the plots, the horizontal axes represent the outputs of the computer simulation model, while the vertical axes show the predicted outputs using the surrogate model with the bars representing confidence intervals for these predictions. In other words, the red line represents the ideal case with surrogate model predictions \(\mathbb {E}[f(\boldsymbol {x})|\boldsymbol {X}^{S},\boldsymbol {Y}^{S},\boldsymbol {\theta }]\) being in full agreement with computer model simulations \(\boldsymbol {y}^{S}(\boldsymbol {x},\boldsymbol {\theta })\).

It can be visually seen that the predictive performance of the surrogate model is satisfactory. For a quantitative assessment, the computed MAPE values for the three outputs are reported in Table 2, also indicating satisfactory performance. Note that since the predictive accuracy, represented by MAPE, was deemed acceptable, there was no need for further sampling using the adaptive sampling technique described in “Multivariate Surrogate Model”.
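The cross-validation loop behind these results can be sketched generically. The example below is an illustration only: it uses the standard definition of MAPE (the paper's own error equations may be normalized differently), a hypothetical one-dimensional nearest-neighbor predictor in place of the GP surrogate, and synthetic data standing in for the 130 simulation runs.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def mape(actual, predicted):
    """Mean absolute percentage error (standard definition, in percent)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def cross_validate(inputs, outputs, fit, k):
    """Hold out each fold in turn, refit on the rest, and pool the predictions."""
    actual, predicted = [], []
    for fold in k_fold_indices(len(inputs), k):
        held_out = set(fold)
        train_x = [x for i, x in enumerate(inputs) if i not in held_out]
        train_y = [y for i, y in enumerate(outputs) if i not in held_out]
        model = fit(train_x, train_y)
        for i in fold:
            actual.append(outputs[i])
            predicted.append(model(inputs[i]))
    return mape(actual, predicted)


def fit_nearest_neighbor(train_x, train_y):
    """Hypothetical stand-in for the surrogate: predict the nearest training output."""
    def predict(x):
        j = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
        return train_y[j]
    return predict


# Synthetic data in place of the simulation inputs and one model output.
xs = [i / 129 for i in range(130)]
ys = [50.0 + 100.0 * x for x in xs]

cv_mape = cross_validate(xs, ys, fit_nearest_neighbor, k=10)
print(f"tenfold CV MAPE: {cv_mape:.2f}%")
```

With 130 points and ten folds, each fold holds out 13 points, so every simulation is predicted exactly once by a model that did not see it during fitting.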

Experimental Measurements

As mentioned in “Multivariate Statistical Calibration”, experimental data is needed to calibrate the computer model. LHS design was also used to uniformly explore the control input space \(\mathcal {X}\). A total of \(n = 24\) different configurations of \(\boldsymbol {x}\) were determined, which constitute \(\boldsymbol {X}^{E}\). Next, the fabrication and characterization were conducted to obtain the corresponding outputs \(\boldsymbol {Y}^{E}\).

Melt Pool Depth and Width

Single tracks of length 20 mm were fabricated on a 30-μm powder bed using a ProX 100 DMP commercial L-PBF system by 3D Systems. The system is equipped with a Gaussian profile fiber laser beam with wavelength \(\lambda = 1070\text { nm}\) and beam spot size of approximately 70-μm diameter. Argon was used as inert protective atmosphere during fabrication. The raw Ti-6Al-4V powder was produced by LPW Technology. Single tracks were built on a Ti-6Al-4V substrate, which was subsequently cut with a Buehler precision saw and mounted for cross-sectional analysis. Metallographic grinding was performed with silicon carbide papers (320 to 600 grit size) followed by manual polishing with 1-μm diamond suspension and final precision polishing with colloidal silica suspension. To make melt pool boundary lines more visible, chemical etching was performed using a 3:1 volume mixture of HCl and HNO3 solution. Melt pool depth and width were measured using optical microscopy (Nikon Optiphot - POL) and verified with scanning electron microscopy (FEG-SEM/FIB TESCAN LYRA3). Representative SEM images that were used for measuring the melt pool depth and width are shown in Fig. 4. We visually ascertain from the figure that both higher laser power and lower scan speed increase the melt pool size; however, the impact of laser speed on the melt pool dimensions is higher, primarily due to the low maximum power on the system (50 W).

Melt Pool Peak Temperature

The L-PBF system was custom integrated with a thermal imaging sensor to conduct in situ monitoring of melt pool temperature during fabrication. The sensor is a two-wavelength imaging pyrometer (ThermaVIZ® by Stratonics Inc.) that consists of two high-resolution CMOS imaging detectors. Both detectors have a field of view (FOV) with \(1300\times 1000\) pixel resolution mapped to a \(30\times 27\) mm area, which yields a resolution of approximately 24 \({\mu }\)m per pixel. Figure 5 shows the pyrometer integrated inside the ProX 100 DMP build chamber. Experimental calibration of the pyrometer (which is to be distinguished from statistical calibration of the model) was performed in situ after integration using a tungsten filament (halogen tungsten lamp) for a range of temperatures between 1500 and 2500 °C. By fabricating the single tracks within the FOV of the pyrometer, thermal images of the melt pools were taken at approximately \(250\) Hz. These images were used to compute the melt pool peak temperature.

A sample melt pool temperature map taken from a representative thermal image is shown in Fig. 6, where the X and Y coordinates are pixels resolved by the pyrometer and the color scale represents temperature. The temperature map shows zero for temperature values below \(1500~^{\circ }\mathrm {C}\), which fall outside the calibration range of the pyrometer.

Prediction Using the Calibrated Model

With the surrogate model fully defined and the experimental measurements conducted, we are now able to estimate the calibration parameters \(\boldsymbol {\theta }\), as well as the remaining hyperparameters \(\boldsymbol {{\Phi }}_{\text {cal}}\) required for the statistical model (\(\boldsymbol {r}_{\delta }\), \(\boldsymbol {\sigma }_{\delta }\), and \(\boldsymbol {\psi }\), introduced in Eqs. 11, 14, and 15). As described in “Multivariate Calibration Model”, we use the Bayesian framework and Metropolis-Hastings MCMC to estimate the set of hyperparameters \(\boldsymbol {{\Phi }}_{\text {cal}}=\left \lbrace \boldsymbol {\theta }, \boldsymbol {r}_{\delta }, \boldsymbol {\sigma }_{\delta }, \boldsymbol {\psi } \right \rbrace \). The following prior distributions are selected for the hyperparameters:

Note that the priors for the calibration parameters \(\theta _{i}\) are all uniform and hence non-informative, both to avoid bias in estimation and because no information beyond the suggested lower and upper bounds was available. Examples of constructing informative prior distributions using additional prior knowledge can be found in [70, 71]. The lower and upper bounds for these prior distributions, \((\alpha ^{\theta }_{i}, \beta ^{\theta }_{i})\), were set equal to the lower and upper bounds of the parameter space \(\mathcal {T}_{\min }=\left \lbrace 20 \%, 40 \%, 1 \right \rbrace \) and \(\mathcal {T}_{\max }=\left \lbrace 70 \%, 90 \%, 25 \right \rbrace \). For the roughness parameters \(r_{\delta _{i}}\), log-logistic priors were used as recommended by [65]. For the variance parameters \(\sigma _{i}\) and \(\psi _{i}\), inverse gamma priors are selected because they represent conjugate priors for the multivariate normal likelihood function in our model.
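The three prior families named above can be combined into a single log-prior function, sketched below with the standard library only. The uniform bounds come from the text; the log-logistic scale and shape of 1 are carried over from the surrogate stage as an assumption, and the inverse gamma shape and scale (`ig_shape`, `ig_scale`) are purely hypothetical placeholders, since their values are not given here.

```python
import math

# Bounds on (porosity %, absorptivity %, conductivity coefficient) from the text.
THETA_LOWER = (20.0, 40.0, 1.0)
THETA_UPPER = (70.0, 90.0, 25.0)


def log_uniform(x, lo, hi):
    """Log-density of a uniform prior on [lo, hi]."""
    return -math.log(hi - lo) if lo <= x <= hi else -math.inf


def log_loglogistic(r, scale=1.0, shape=1.0):
    """Log-density of a log-logistic prior (positive support)."""
    if r <= 0:
        return -math.inf
    z = r / scale
    return (math.log(shape / scale) + (shape - 1.0) * math.log(z)
            - 2.0 * math.log1p(z ** shape))


def log_inverse_gamma(v, shape, scale):
    """Log-density of an inverse gamma prior (positive support)."""
    if v <= 0:
        return -math.inf
    return (shape * math.log(scale) - math.lgamma(shape)
            - (shape + 1.0) * math.log(v) - scale / v)


def log_prior(theta, r_delta, sigma_delta, psi, ig_shape=2.0, ig_scale=1.0):
    """Sum of independent log-priors; ig_shape and ig_scale are hypothetical values."""
    total = sum(log_uniform(t, lo, hi)
                for t, lo, hi in zip(theta, THETA_LOWER, THETA_UPPER))
    total += sum(log_loglogistic(r) for r in r_delta)
    total += sum(log_inverse_gamma(s, ig_shape, ig_scale) for s in sigma_delta)
    total += sum(log_inverse_gamma(p, ig_shape, ig_scale) for p in psi)
    return total


inside = log_prior((45.0, 75.0, 10.0), (1.0, 1.0), (1.0, 1.0, 1.0), (1.0, 1.0, 1.0))
outside = log_prior((80.0, 75.0, 10.0), (1.0, 1.0), (1.0, 1.0, 1.0), (1.0, 1.0, 1.0))
print("log-prior inside bounds:", inside)
print("log-prior outside bounds:", outside)
```

A value of \(\boldsymbol {\theta }\) outside the uniform bounds receives a log-prior of negative infinity, so a Metropolis-Hastings sampler using this function would always reject such proposals.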

Similar to “Building the Surrogate Model”, single-component Metropolis-Hastings procedure was used to compute the posterior distributions for the hyperparameters. Figure 7 shows the histograms and kernel density estimates for these parameters after 100,000 MCMC iterations with 25% burn-in period and thinning every fifth sample. In the plots, we observe unimodal and well-informative posteriors for all of the calibration parameters with \(\theta _{1}\) and \(\theta _{3}\) showing symmetric density functions and \(\theta _{2}\) showing a density function skewed to the right. Table 3 reports the posterior mean, mode, and standard deviation for the posterior distributions of the calibration parameters.

Porosity, \(\theta _{1}\), is used to calculate the effective thermo-physical properties of the powder bed (i.e., thermal conductivity and density). It was observed during simulations that by changing the porosity from 0.3 to 0.5 the thermal conductivity of the powder changes up to \(2\frac {\mathrm {W}}{\mathrm {m} \cdot \mathrm {K}}\), which leads to an insignificant change in the thermal history and only a few microns change in the melt pool size. Thus, by considering the variability in experimental measurements for melt pool dimensions, this change becomes negligible, and the wide nature of the posterior distribution for \(\theta _{1}\) is physically consistent. Furthermore, a posterior mean of 0.423 is reasonable since it agrees with the reported range of porosity for similar powder sizes and layer thicknesses (see [59, 72]).

The posterior distribution of absorptivity (\(\theta _{2}\)) is more informative, with a mean of 0.782. This value demonstrates reasonable agreement with reported experimental results in the literature [73, 74]. However, considering the difficulties associated with experimentally measuring absorptivity due to its dependence on multiple parameters (i.e., wavelength, temperature, oxidation, powder size, powder distribution, powder porosity), these experimental results might involve high uncertainty. We therefore consider the estimated distribution for absorptivity to be consistent with the underlying physical phenomena controlling the interactions between the laser and the powder bed.

The narrow range of the posterior distribution of \(\theta _{3}\) can be attributed to its significant effect on the thermal profile and the melt pool size. A unit increase in the liquid thermal conductivity coefficient might lead to a change on the order of 100 K in the thermal history and a change between 5 and 10 μm in the melt pool size. Additionally, if extremely high values are used for this parameter, the applied energy would be rapidly transferred to the surroundings and the effective energy input would drop, so the melt pool peak temperature would decrease in an unrealistic manner. Therefore, only a small region of this parameter results in physically meaningful simulations, explaining the narrow posterior distribution. Note that the calibration parameter \(\theta _{3}\) refers to the coefficient used for estimating the effective thermal conductivity of the liquid. It is simply a multiplier applied to the thermal conductivity of the bulk at the melting temperature (29 W/(m·K)).

Next, we use the predictive distributions from Eq. 27 to assess the performance of the calibrated model via a sixfold cross validation. Figure 8 displays the results of the sixfold cross validation for each of the three outputs \(y_{i}\). In the plots, the horizontal axes represent experimental measurements, while the vertical axes are the predicted outputs using the calibrated model with the bars representing the confidence intervals for the predictions. In other words, each point on the plots compares the experiment \(\boldsymbol {y}^{E}(\boldsymbol {x})\) versus the calibrated model prediction \(\mathbb {E}[y^{P}(\boldsymbol {x})|\boldsymbol {X}^{E},\boldsymbol {Y}^{E},\boldsymbol {X}^{S},\boldsymbol {Y}^{S},\boldsymbol {\theta }^{*}]\), and the red straight line is a reference line representing ideal predictions.

Upon visual inspection, the plots qualitatively show acceptable predictive performance for \(y_{1}\) (melt pool depth) and \(y_{2}\) (melt pool width), but less accurate predictions for \(y_{3}\) (peak temperature), particularly at very low and very high values of \(y_{3}\). Quantitatively, the error metric MAPE for each output is reported in Table 4. We notice that the MAPEs for melt pool depth and width, \(y_{1}\) and \(y_{2}\), are relatively low compared to the full range of simulations: 5% and 3%, respectively. These results show that the calibration model is effectively correcting the simulation model output when we use the Kriging technique in Eq. 27. However, the predictions for melt pool peak temperature, \(y_{3}\), show a higher value of MAPE (12% of the simulation range) compared to the predictions for melt pool depth and width. We believe that this is not likely to be due to the approximation provided in the surrogate model, since cross validation showed that the surrogate model gives reasonably accurate predictions. Instead, this error is likely due to the inherent high uncertainty associated with experimental temperature measurements using contactless methods (pyrometry) [75]. The uncertainty in the temperature data can be quantified by computing its standard deviation. The average standard deviations of the experimental measurements for \(y_{1}\), \(y_{2}\), and \(y_{3}\) are 3.03 μm, 8.14 μm, and \(306.3~^{\circ }\mathrm {C}\), respectively. We notice low standard deviations for \(y_{1}\) and \(y_{2}\) (6% of the simulation ranges), in contrast to a relatively high standard deviation for \(y_{3}\) (24% of the simulation range). High measurement noise is therefore the likely explanation for the high MAPE associated with the predictions of \(y_{3}\), which signals the need for improving existing measurement techniques or developing new sensors with lower measurement noise.

To support our argument that uncertainty in experimental temperature measurements explains the high reported value of MAPE for \(y_{3}\), we re-implemented the multivariate calibration procedure with only the melt pool depth and width (\(y_{1}\) and \(y_{2}\), respectively) as model outputs. In other words, we excluded the melt pool temperature \(y_{3}\) as a model output. Figure 9 shows the results of the sixfold cross validation for this calibrated model. We observe that the cross validation plots show improvement in predictive performance, indicated by closer proximity of the blue data points to the red line, narrower confidence intervals, and lower MAPE values of 2.42 and 4.96 μm for \(y_{1}\) and \(y_{2}\), respectively. This both supports our claim regarding measurement errors associated with temperature measurements and demonstrates satisfactory performance of the calibrated model.

Conclusions and Future Work Directions

We developed an efficient procedure for conducting formal calibration (also known as inverse uncertainty quantification (UQ) analysis) of computational materials models. In addition to providing one of the first efforts to systematically perform UQ analysis for ICME models, we also present a versatile multivariate statistical framework to perform such analysis in the case of models with multiple quantities of interest (QoIs), in contrast to many previous research efforts that typically focus on a univariate scalar QoI. The proposed framework involves a two-step procedure that starts with constructing a computationally efficient multivariate Gaussian process-based surrogate model that can be used in lieu of the original expensive computational model. The surrogate model can then be used to generate sufficiently large numbers of simulations needed to conduct calibration through a synthesis with experimental measurements.

We implemented the proposed multivariate statistical framework to calibrate a finite element method (FEM)-based thermal model for laser powder bed fusion metal additive manufacturing (L-PBF AM). The model predicts the thermal history and melt pool geometry during fabrication, and can potentially become one of the core elements of an ICME platform for the L-PBF process. Our results indicate that the multivariate surrogate model approximates the original FEM-based thermal model with good accuracy. Furthermore, predictions made using the calibrated model showed good agreement with experimental measurements conducted in a case study on fabricating single tracks of Ti-6Al-4V on a commercial L-PBF system instrumented with in situ temperature monitoring capability.

The current work represents a foundation for numerous future investigations. First, coupling the calibrated melt pool model with other physics-based models in a complete multi-model ICME platform for laser-based AM would be of great value. Second, calibration of ICME simulation models with high-dimensional output (e.g., fully explicit microstructure simulations) will be very useful but has not been conducted yet. Third, more validation experiments with other measurement instruments can be carried out to achieve better accuracy for predicting melt pool temperature.

References

National Research Council (2008) Integrated computational materials engineering: a transformational discipline for improved competitiveness and national security. National Academies Press, Washington, D.C.

O’Hagan A (2006) Bayesian analysis of computer code outputs: a tutorial. Reliab Eng Syst Safety 91 (10):1290–1300

Chernatynskiy A, Phillpot SR, LeSar R (2013) Uncertainty quantification in multiscale simulation of materials: a prospective. Annual Rev Mater Res 43:157–182

Panchal JH, Kalidindi SR, McDowell DL (2013) Key computational modeling issues in integrated computational materials engineering. Comput Aided Des 45(1):4–25

McDowell DL, Kalidindi SR (2016) The materials innovation ecosystem: a key enabler for the materials genome initiative. MRS Bull 41(4):326–337

James M, Murphy D, Sexton MH, Barnett DN, Jones GS et al (2004) Quantification of modelling uncertainties in a large ensemble of climate change simulations. Nature 430(7001):768

Witteveen JAS, Sarkar S, Bijl H (2007) Modeling physical uncertainties in dynamic stall induced fluid–structure interaction of turbine blades using arbitrary polynomial chaos. Comput Struct 85(11):866–878

Van Oijen M, Rougier J, Smith R (2005) Bayesian calibration of process-based forest models: bridging the gap between models and data. Tree Physiology 25(7):915–927

Avramova MN, Ivanov KN (2010) Verification, validation and uncertainty quantification in multi-physics modeling for nuclear reactor design and safety analysis. Prog Nucl Energy 52(7):601–614

Kilian L, Zha T (2002) Quantifying the uncertainty about the half-life of deviations from PPP. J Appl Econ 17(2):107–125

Modeling Across Scales (2016) A roadmapping study for connecting materials models and simulations across length and time scales (the minerals, metals and materials society 2015)

Howe D, Goodlet B, Weaver J, Spanos G (2016) Insights from the 3rd World Congress on Integrated Computational Materials Engineering. JOM 68(5):1378–1384

Tapia G, Johnson L, Franco B, Karayagiz K, Ma J, Arroyave R, Karaman I, Elwany A (2017) Bayesian calibration and uncertainty quantification for a physics-based precipitation model of nickel–titanium shape-memory alloys. J Manuf Sci Eng 139(7):071002

Franco BE, Ma J, Loveall B, Tapia GA, Karayagiz K, Liu J, Elwany A, Arroyave R, Karaman I (2017) A sensory material approach for reducing variability in additively manufactured metal parts. Scientific Reports, 7

Bauereiß A, Scharowsky T, Körner C (2014) Defect generation and propagation mechanism during additive manufacturing by selective beam melting. J Mater Process Technol 214(11):2522–2528

Lopez F, Witherell P, Lane B (2016) Identifying uncertainty in laser powder bed fusion additive manufacturing models. J Mech Des 138(11):114502

Seifi M, Salem A, Beuth J, Harrysson O, Lewandowski JJ (2016) Overview of materials qualification needs for metal additive manufacturing. JOM 68(3):747–764

Zhen H, Mahadevan S (2017a) Uncertainty quantification in prediction of material properties during additive manufacturing. Scr Mater 135:135–140

Zhen H, Mahadevan S (2017) Uncertainty quantification and management in additive manufacturing: current status, needs, and opportunities. The International Journal of Advanced Manufacturing Technology, 1–20

Timothy G, Trucano LP, Swiler TI, Oberkampf WL, Pilch M (2006) Calibration, validation, and sensitivity analysis: what’s what. Reliab Eng Syst Saf 91(10):1331–1357

Liu B, Qingyan X (2004) Advances on microstructure modeling of solidification process of shape casting. Tsinghua Sci Technol 9(5):497–505

Liu P, Lusk MT (2002) Parametric links among Monte Carlo, phase-field, and sharp-interface models of interfacial motion. Phys Rev E 66(6):061603

Holm EA, Srolovitz DJ, Cahn JW (1993) Microstructural evolution in two-dimensional two-phase polycrystals. Acta metallurgica et materialia 41(4):1119–1136

Spittle JA, Brown SGR (1989) Computer simulation of the effects of alloy variables on the grain structures of castings. Acta Metall 37(7):1803–1810

Zhu P, Smith RW (1992) Dynamic simulation of crystal growth by Monte Carlo method I. Model description and kinetics. Acta metallurgica et materialia 40(4):683–692

Higdon D, Gattiker J, Williams B, Rightley M (2008) Computer model calibration using high-dimensional output. J Am Stat Assoc 103(482):570–583

Paulo R, García-donato G, Palomo J (2012) Calibration of computer models with multivariate output. Comput Stat Data Anal 56(12):3959–3974

Khairallah SA, Anderson AT, Rubenchik A, King WE (2016) Laser powder-bed fusion additive manufacturing: physics of complex melt flow and formation mechanisms of pores, spatter, and denudation zones. Acta Mater 108:36–45

Khairallah SA, Anderson A (2014) Mesoscopic simulation model of selective laser melting of stainless steel powder. J Mater Process Technol 214(11):2627–2636

Ganeriwala R, Zohdi TI (2016) A coupled discrete element-finite difference model of selective laser sintering. Granul Matter 18(2):21

Qiu C, Panwisawas C, Ward M, Basoalto HC, Brooks JW, Attallah MM (2015) On the role of melt flow into the surface structure and porosity development during selective laser melting. Acta Mater 96:72–79

Leitz KH, Singer P, Plankensteiner A, Tabernig B, Kestler H, Sigl LS (2015) Multi-physical simulation of selective laser melting of molybdenum. Proceedings of Euro PM, 4–7

Panwisawas C, Qiu C, Anderson MJ, Sovani Y, Turner RP, Attallah MM, Brooks JW, Basoalto HC (2017) Mesoscale modelling of selective laser melting thermal fluid dynamics and microstructural evolution. Comput Mater Sci 126:479–490

Ly S, Rubenchik AM, Khairallah SA, Guss G, Matthews MJ (2017) Metal vapor micro-jet controls material redistribution in laser powder bed fusion additive manufacturing. Scientific Reports, 7

Pei W, Zhengying W, Zhen C, Li J, Shuzhe Z, Jun D (2017) Numerical simulation and parametric analysis of selective laser melting process of AlSi10Mg powder. Appl Phys A 123(8):540

Matsumoto M, Shiomi M, Osakada K, Abe F (2002) Finite element analysis of single layer forming on metallic powder bed in rapid prototyping by selective laser processing. Int J Mach Tools Manuf 42(1):61–67

Roberts IA, Wang CJ, Esterlein R, Stanford M, Mynors DJ (2009) A three-dimensional finite element analysis of the temperature field during laser melting of metal powders in additive layer manufacturing. Int J Mach Tools Manuf 49(12):916–923

Verhaeghe F, Craeghs T, Heulens J, Pandelaers L (2009) A pragmatic model for selective laser melting with evaporation. Acta Mater 57(20):6006–6012

Shen N, Chou K (2012) Thermal modeling of electron beam additive manufacturing process. powder sintering effects ASME Paper No MSEC2012-7253

Hussein A, Hao L, Yan C, Everson R (2013) Finite element simulation of the temperature and stress fields in single layers built without-support in selective laser melting. Mater Des 52:638–647

Fu CH, Guo YB (2014) 3-dimensional finite element modeling of selective laser melting Ti-6Al-4V alloy. In: 25th Annual International Solid Freeform Fabrication Symposium

Loh L-E, Chua C-K, Yeong W-Y, Song J, Mapar M, Sing S-L, Liu Z-H, Zhang D-Q (2015) Numerical investigation and an effective modelling on the selective laser melting (SLM) process with aluminium alloy 6061. Int J Heat Mass Transfer 80:288–300

Romano J, Ladani L, Sadowski M (2015) Thermal modeling of laser based additive manufacturing processes within common materials. Procedia Manuf 1:238–250

Heeling T, Cloots M, Wegener K (2017) Melt pool simulation for the evaluation of process parameters in selective laser melting. Additive Manuf 14:116–125

Xia M, Dongdong G, Guanqun Y, Dai D, Chen H, Shi Q (2017) Porosity evolution and its thermodynamic mechanism of randomly packed powder-bed during selective laser melting of Inconel 718 alloy. Int J Mach Tools Manuf 116:96–106

Huang Y, Yang LJ, Du XZ, Yang YP (2016) Finite element analysis of thermal behavior of metal powder during selective laser melting. Int J Therm Sci 104:146–157

Dai K, Shaw L (2001) Thermal and stress modeling of multi-material laser processing. Acta Mater 49(20):4171–4181

Van Belle L, Vansteenkiste G, Boyer JC (2012) Comparisons of numerical modelling of the selective laser melting. In: Key engineering materials, volume 504, pages 1067–1072. Trans Tech Publ

Liu Y, Zhang J, Pang Z (2018) Numerical and experimental investigation into the subsequent thermal cycling during selective laser melting of multi-layer 316L stainless steel. Opt Laser Technol 98:23–32

Zeng K, Pal D, Stucker B (2012) A review of thermal analysis methods in laser sintering and selective laser melting. In: Proceedings of Solid Freeform Fabrication Symposium Austin, TX, pp 796–814

Schoinochoritis B, Chantzis D, Salonitis K (2017) Simulation of metallic powder bed additive manufacturing processes with the finite element method: a critical review. Proc Instit Mech Eng, Part B: J Eng Manuf 231(1):96–117

Markl M, Körner C (2016) Multiscale modeling of powder bed–based additive manufacturing. Annu Rev Mater Res 46:93–123

Yin J, Zhu H, Ke L, Lei W, Dai C, Zuo D (2012) Simulation of temperature distribution in single metallic powder layer for laser micro-sintering. Comput Mater Sci 53(1):333–339

Mills KC (2002) Recommended values of thermophysical properties for selected commercial alloys. Woodhead Publishing, Sawston

Masmoudi A, Bolot R, Coddet C (2015) Investigation of the laser–powder–atmosphere interaction zone during the selective laser melting process. J Mater Process Technol 225:122–132

Criales LE, Arısoy YM, Özel T (2015) A sensitivity analysis study on the material properties and process parameters for selective laser melting of Inconel 625. In: ASME 2015 International Manufacturing Science and Engineering Conference, V001T02A062. American Society of Mechanical Engineers

Yang J, Sun S, Brandt M, Yan W (2010) Experimental investigation and 3D finite element prediction of the heat affected zone during laser assisted machining of Ti6Al4V alloy. J Mater Process Technol 210(15):2215–2222

Karayagiz K, Elwany A, Tapia G, Franco B, Johnson L, Ji M, Karaman I, Arroyave R (2018) Numerical and experimental analysis of heat distribution in the laser powder bed fusion of Ti-6Al-4V. IISE Transactions, (just-accepted), 1–44

Cheng B, Price S, Lydon J, Cooper K, Chou K (2014) On process temperature in powder-bed electron beam additive manufacturing: model development and validation. J Manuf Sci Eng 136(6):061018

Vastola G, Zhang G, Pei QX, Zhang Y-W (2016) Modeling the microstructure evolution during additive manufacturing of Ti6Al4V: a comparison between electron beam melting and selective laser melting. JOM 68(5):1370–1375

Kennedy MC, O’Hagan A (2001) Bayesian calibration of computer models. Journal of the Royal Statistical Society. Series B, Statistical Methodology, 425–464

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Statistical science, 409–423

Haylock RG, O’Hagan A (1996) On inference for outputs of computationally expensive algorithms with uncertainty on the inputs. Bayesian Stat 5:629–637

Oakley J, O’Hagan A (2002) Bayesian inference for the uncertainty distribution of computer model outputs. Biometrika 89(4):769–784

Conti S, O’Hagan A (2010) Bayesian emulation of complex multi-output and dynamic computer models. J Stat Plan Inference 140(3):640–651

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning. The MIT Press, Cambridge

Bhat K, Haran M, Goes M (2010) Computer model calibration with multivariate spatial output: a case study. Frontiers of Statistical Decision Making and Bayesian Analysis, 168–184

Tapia G, Khairallah S, Matthews M, King WE, Elwany A (2017) Gaussian process-based surrogate modeling framework for process planning in laser powder-bed fusion additive manufacturing of 316L stainless steel. The International Journal of Advanced Manufacturing Technology, 1–13

Mani M, Feng S, Lane B, Donmez A, Moylan S, Fesperman R (2015) Measurement science needs for real-time control of additive manufacturing powder bed fusion processes. US Department of Commerce, National Institute of Standards and Technology

Boluki S, Esfahani MS, Qian X, Dougherty ER (2017a) Incorporating biological prior knowledge for Bayesian learning via maximal knowledge-driven information priors. BMC Bioinforma 18(14):1–1. https://doi.org/10.1186/s12859-017-1893-4

Boluki S, Esfahani MS, Qian X, Dougherty ER (2017b) Constructing pathway-based priors within a Gaussian mixture model for Bayesian regression and classification. IEEE/ACM Trans Comput Biol Bioinformatics PP(99):1–1. https://doi.org/10.1109/TCBB.2017.2778715

Romano J, Ladani L, Sadowski M (2016) Laser additive melting and solidification of Inconel 718: finite element simulation and experiment. JOM 68(3):967–977

Boley CD, Khairallah SA, Rubenchik AM (2015) Calculation of laser absorption by metal powders in additive manufacturing. Appl Opt 54(9):2477–2482

Rubenchik A, Wu S, Mitchell S, Golosker I, LeBlanc M, Peterson N (2015) Direct measurements of temperature-dependent laser absorptivity of metal powders. Appl Opt 54(24):7230–7233

Grasso M, Colosimo BM (2017) Process defects and in situ monitoring methods in metal powder bed fusion: a review. Measure Sci Technol 28(4):044005

Gelfand AE, Diggle P, Guttorp P, Fuentes M (2010) Handbook of spatial statistics. CRC Press, Boca Raton

Casella G, Berger RL (2002) Statistical inference, vol 2. Duxbury, Pacific Grove, CA

Mahmoudi M, Tapia G (2017) Multivariate statistical calibration of computer simulation models. https://github.com/mahmoudi-tapia/MVcalibration

Acknowledgements

R.A. also acknowledges the support of NSF through the NSF Research Traineeship (NRT) program under Grant No. NSF-DGE-1545403, “NRT-DESE: Data-Enabled Discovery and Design of Energy Materials (D3EM).” R.A. and I.K. also acknowledge the partial support from NSF through Grant No. NSF-CMMI-1534534.

Funding

This work was supported by an Early Stage Innovations grant from NASA’s Space Technology Research Grants Program, Grant No. NNX15AD71G. Portions of this research were conducted with High Performance Research Computing resources provided by Texas A&M University (https://hprc.tamu.edu).

Additional information

Supplementary Data

The complete dataset for building the surrogate model and calibrating the parameters, as well as the MATLAB source codes, have been shared and are freely available on GitHub [78]. The repository includes the codes for loading the data, Monte Carlo simulation, and visualization of the outputs. The community can access and reproduce all of our results, in addition to using our models for their own data. Instructions on how to run the codes are given in the main scripts identified in the GitHub repository.

Appendices

Appendix A: Mathematical Details of the Multivariate Surrogate Model

Considering the simple univariate case, a GP model is a non-parametric statistical model in which a stochastic process \(f\left (\cdot \right )\) is assumed to have all of its finite-dimensional distributions as multivariate normal [76]. Therefore, the joint probability distribution of the outputs from the stochastic process at any finite set of inputs \(\left \{ \boldsymbol {x}_{1},\ldots ,\boldsymbol {x}_{n}\right \} \) (assuming for now that f takes only x as input) is modeled as an n-dimensional multivariate normal distribution

\[\left [f\left (\boldsymbol {x}_{1}\right ),\ldots ,f\left (\boldsymbol {x}_{n}\right )\right ]^{\top }\mid \boldsymbol {{\Phi }}\sim \mathcal {N}_{n}\left (\boldsymbol {m},\boldsymbol {C}\right ),\]

where the mean vector \(\boldsymbol {m}\) is defined by a mean function \(m\left (\cdot \right )\), and the covariance matrix \(\boldsymbol {C}\) is defined by a covariance function \(c\left (\cdot ,\cdot \right )\), with \(\mathbb {E}\left [f\left (\boldsymbol {x}\right )\middle |\boldsymbol {{\Phi }}\right ]=m\left (\boldsymbol {x}\right )\) and \(\text {cov}\left [f\left (\boldsymbol {x}_{i}\right ),f\left (\boldsymbol {x}_{j}\right )\middle |\boldsymbol {{\Phi }}\right ]=c\left (\boldsymbol {x}_{i},\boldsymbol {x}_{j}\right )\). The whole distribution is fully defined by a set of hyperparameters \(\boldsymbol {{\Phi }}\). Hence, we denote a univariate GP by

\[f\left (\cdot \right )\mid \boldsymbol {{\Phi }}\sim \mathcal {GP}\left (m\left (\cdot \right ),c\left (\cdot ,\cdot \right )\right ).\]

We now rewrite the multivariate GP as

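and translate all these definitions into our original context, \(\boldsymbol {y}^{S}=f\left (\boldsymbol {x},\boldsymbol {\theta }\right )\). We build a multivariate GP based on Eq. 16, with the i-th input tuple denoted as \(\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i}=\left [x_{i,1},\ldots ,x_{i,p},\theta _{i,1},\ldots ,\theta _{i,t}\right ]^{\top }\). Mean and correlation functions are defined as follows:

\[m\left (\boldsymbol {x},\boldsymbol {\theta }\right )=\boldsymbol {B}^{\top }h\left (\boldsymbol {x},\boldsymbol {\theta }\right ),\tag{17}\]

\[c\left (\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i},\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{j}\right )=\exp \left \{ -\left [\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i}-\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{j}\right ]^{\top }R\left [\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{i}-\left (\boldsymbol {x},\boldsymbol {\theta }\right )_{j}\right ]\right \} ,\tag{18}\]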

where \(h:\mathcal {X}\times \mathcal {T}\rightarrow \mathbb {R}^{m}\) is a function (defined by the modeler) that maps the input space to m basis functions, \(\boldsymbol {B}=\left [\boldsymbol {\beta }_{1},\ldots ,\boldsymbol {\beta }_{q}\right ]\in \mathbb {R}^{m,q}\) is a matrix of regression coefficients, and \(R=\text {diag}\left (\boldsymbol {r}\right )\) is a diagonal matrix of positive roughness parameters with \(\boldsymbol {r}=\left [r_{1},\ldots ,r_{p},r_{p + 1},\ldots ,r_{p+t}\right ]\in \mathbb {R}_{+}^{p+t}\). The roughness parameter vector \(\boldsymbol {r}\) explains how rough (or smooth) the function is, i.e., how quickly its values change across the input domain.
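As a minimal sketch of these two ingredients, the Python snippet below evaluates a linear-regression basis and a squared exponential correlation with a diagonal roughness matrix \(R=\text {diag}\left (\boldsymbol {r}\right )\). The function names `basis` and `sq_exp_corr`, the choice \(h\left (\boldsymbol {x},\boldsymbol {\theta }\right )=\left [1,\boldsymbol {x},\boldsymbol {\theta }\right ]\) (so that \(m=1+p+t\)), and all numerical values are assumptions made for illustration; they are not taken from the shared MATLAB codes.

```python
import numpy as np

def basis(Z):
    """Assumed linear basis h(z) = [1, z_1, ..., z_{p+t}] for each row z of Z."""
    Z = np.atleast_2d(Z)
    return np.hstack([np.ones((Z.shape[0], 1)), Z])

def sq_exp_corr(Z1, Z2, r):
    """Squared exponential correlation exp{-(z - z')^T R (z - z')} with R = diag(r)."""
    Z1, Z2 = np.atleast_2d(Z1), np.atleast_2d(Z2)
    diff = Z1[:, None, :] - Z2[None, :, :]        # pairwise differences, shape (n1, n2, p+t)
    return np.exp(-np.sum(np.asarray(r) * diff**2, axis=-1))

# Two toy inputs that differ by 0.5 along the first coordinate only
z_a = np.array([[0.0, 0.0]])
z_b = np.array([[0.5, 0.0]])
smooth = sq_exp_corr(z_a, z_b, r=[1.0, 1.0])[0, 0]    # low roughness along coordinate 1
rough = sq_exp_corr(z_a, z_b, r=[20.0, 1.0])[0, 0]    # high roughness along coordinate 1
print(smooth, rough)
```

In this toy comparison, the larger roughness value makes the correlation between the same pair of inputs decay much faster, which is the sense in which \(\boldsymbol {r}\) controls how quickly the function values change across the input domain.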

With the choices of linear regression mean function in Eq. 17, stationary squared exponential correlation function in Eq. 18, and separable covariance structure (with \(\boldsymbol {{\Sigma }}\in \mathbb {R}_{+}^{q,q}\) accounting for correlations between outputs), the model is fully defined by

It can then be shown, as presented in [65], that the conditional posterior distribution of f given \(\boldsymbol {r}\), after integrating out \(\boldsymbol {B}\) and \(\boldsymbol {{\Sigma }}\), is a multivariate q-dimensional T process, such that the probability distribution it yields is a matrix-variate T distribution:

with \(N-m\) degrees of freedom (denoted as dof henceforth), and

where

To summarize, the T process defined in Eqs. 20–22 can be used as a fast surrogate model for the simulation model. Its mean function \(m^{\star }\) interpolates the training data \(\left (\boldsymbol {X}^{S},\boldsymbol {Y}^{S}\right )\) exactly and provides an approximation to \(f\left (\cdot \right )\). For the surrogate model to depend only on the data, we need to integrate out the roughness parameters \(\boldsymbol {r}\). This step is achieved through a Bayesian approach, for which the posterior distribution of the roughness parameters (again after proper integration of \(\boldsymbol {B}\) and \(\boldsymbol {{\Sigma }}\), see [65]) is given by the following:

with

Subsequently, we set the prior distribution for \(\boldsymbol {r}\) to follow a joint log-logistic distribution as below:

We estimate posterior distributions of these roughness parameters using the Metropolis-Hastings algorithm and select their mode as the values to be used in the surrogate model defined in Eqs. 20, 21, and 22. Once the roughness parameters \(\boldsymbol {r}\) have been estimated, the surrogate model in Eq. 20 is fully defined.
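A minimal Python sketch of this estimation step is given below. It assumes the standard expressions of the multi-output emulator in [65], written with symbols introduced here only for illustration: \(H\) is the matrix of basis functions at the training inputs, \(A\) is the training correlation matrix, \(\hat {B}\) is the generalized least-squares estimate of \(\boldsymbol {B}\), and the log posterior of \(\boldsymbol {r}\) is taken, up to a constant, as \(\log \pi (\boldsymbol {r})-\frac {q}{2}\log |A|-\frac {q}{2}\log |H^{\top }A^{-1}H|-\frac {N-m}{2}\log |(Y-H\hat {B})^{\top }A^{-1}(Y-H\hat {B})|\). The prior form \(\pi (\boldsymbol {r})\propto \prod _{i}(1+r_{i}^{2})^{-1}\), the linear basis, the random-walk proposal on \(\log \boldsymbol {r}\), the bounds on \(\boldsymbol {r}\), the jitter term, and the toy data are all assumptions; the sketch is not a transcription of the shared MATLAB codes [78].

```python
import numpy as np

def corr(Z1, Z2, r):
    """Squared exponential correlation with diagonal roughness R = diag(r)."""
    diff = Z1[:, None, :] - Z2[None, :, :]
    return np.exp(-np.sum(np.asarray(r) * diff**2, axis=-1))

def basis(Z):
    """Assumed linear basis h(z) = [1, z]."""
    return np.hstack([np.ones((Z.shape[0], 1)), Z])

def fit(Z, Y, r, jitter=1e-12):
    """Quantities shared by the surrogate mean and the roughness posterior."""
    H = basis(Z)
    A = corr(Z, Z, r) + jitter * np.eye(Z.shape[0])   # jitter: numerical safeguard (assumption)
    HtAinvH = H.T @ np.linalg.solve(A, H)
    B_hat = np.linalg.solve(HtAinvH, H.T @ np.linalg.solve(A, Y))  # GLS coefficients
    resid = Y - H @ B_hat
    alpha = np.linalg.solve(A, resid)
    return {"A": A, "HtAinvH": HtAinvH, "B_hat": B_hat, "resid": resid, "alpha": alpha}

def predict_mean(Z_new, Z, r, model):
    """Surrogate mean: regression trend plus correlation-weighted residuals."""
    return basis(Z_new) @ model["B_hat"] + corr(Z_new, Z, r) @ model["alpha"]

def log_post_r(r, Z, Y):
    """Unnormalized log posterior of r under the assumed prior prod_i 1 / (1 + r_i^2)."""
    r = np.asarray(r, dtype=float)
    if np.any(r < 1e-2) or np.any(r > 1e3):           # assumed bounds for numerical stability
        return -np.inf
    n, q = Y.shape
    model = fit(Z, Y, r)
    m = model["B_hat"].shape[0]
    logdet_A = np.linalg.slogdet(model["A"])[1]
    logdet_H = np.linalg.slogdet(model["HtAinvH"])[1]
    logdet_S = np.linalg.slogdet(model["resid"].T @ model["alpha"])[1]
    log_prior = -np.sum(np.log1p(r**2))
    return log_prior - 0.5 * q * (logdet_A + logdet_H) - 0.5 * (n - m) * logdet_S

def metropolis_hastings(Z, Y, r0, n_iter=500, step=0.3, seed=0):
    """Random-walk Metropolis-Hastings on log r; returns samples and a crude mode estimate."""
    rng = np.random.default_rng(seed)
    log_r = np.log(np.asarray(r0, dtype=float))
    # Working with log r keeps r positive; sum(log r) is the change-of-variables Jacobian
    cur = log_post_r(np.exp(log_r), Z, Y) + log_r.sum()
    samples, scores = [], []
    for _ in range(n_iter):
        prop = log_r + step * rng.standard_normal(log_r.size)
        new = log_post_r(np.exp(prop), Z, Y) + prop.sum()
        if np.log(rng.uniform()) < new - cur:         # accept or reject the proposal
            log_r, cur = prop, new
        samples.append(np.exp(log_r))
        scores.append(cur - log_r.sum())              # log posterior density of r itself
    samples = np.array(samples)
    return samples, samples[int(np.argmax(scores))]

# Toy training set: 16 inputs on a 4 x 4 grid, q = 2 outputs (illustrative only)
g = np.linspace(0.0, 1.0, 4)
Z = np.array([[a, b] for a in g for b in g])
Y = np.column_stack([np.sin(3 * Z[:, 0]) + Z[:, 1], Z[:, 0] * np.cos(2 * Z[:, 1])])
samples, r_mode = metropolis_hastings(Z, Y, r0=[10.0, 10.0])
model = fit(Z, Y, r_mode)
print("roughness estimate:", r_mode)
print("surrogate mean at (0.5, 0.5):", predict_mean(np.array([[0.5, 0.5]]), Z, r_mode, model))
```

Taking the visited sample with the highest posterior density is only a crude stand-in for the posterior mode; a longer chain or a density estimate over the samples may be preferable in practice.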

Appendix B: Guidelines for Adaptive Sampling

If CV results are satisfactory (i.e., MAPE is low), then we can move to the next step of the calibration procedure in “Multivariate Calibration Model”. Otherwise, we seek to improve the predictive power of the surrogate model. More specifically, we run additional computer model simulations, such that a larger training data set results in a better surrogate model. We achieve this through an adaptive sampling (AS) technique that selects new data points to sample based on present results. The algorithm devised for this purpose is similar to a grid search, where we subdivide each dimension of the \(\left (p+t\right )\)-dimensional input domain into grids (possibly with a different number of divisions per dimension), yielding \(N_{\text {AS}}\) distinct data points within the grid,

and calculate the predictive variance for each point based on the probability distribution from Eq. 20:

where \(\hat {\boldsymbol {{\Sigma }}}_{j,j}\) is the j-th element in the diagonal of matrix \(\hat {\boldsymbol {{\Sigma }}}\).

Elements of the vector AS represent the average predictive variance among all outputs at a specific input point. The vector is then sorted in descending order, and the corresponding points for the first 20 elements (with the largest average predictive variance) are selected to be evaluated using the expensive computer model. The underlying hypothesis is that adding these points that showed high predictive variance to the training data set \(\boldsymbol {X}^{S}\) will improve the predictive power of the surrogate model. We denote the set with these new appended data points by \(\boldsymbol {X}^{\text {AS}}\).
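The grid construction and ranking described above can be sketched in Python as follows. The helper names `make_grid`, `select_candidates`, and `toy_pred_var` are hypothetical, and the toy variance function is only a placeholder for the per-output predictive variances that would be obtained from Eq. 20; the bounds, divisions, and training inputs are illustrative values.

```python
import itertools
import numpy as np

def make_grid(bounds, divisions):
    """Full-factorial grid; bounds[d] = (low, high), divisions[d] = points along dimension d."""
    axes = [np.linspace(lo, hi, k) for (lo, hi), k in zip(bounds, divisions)]
    return np.array(list(itertools.product(*axes)))       # shape (N_AS, p + t)

def select_candidates(grid, pred_var, n_select=20):
    """Rank grid points by predictive variance averaged over the q outputs, largest first."""
    avg_var = pred_var.mean(axis=1)                        # one entry of the vector AS per point
    order = np.argsort(avg_var)[::-1]                      # descending order
    top = order[:n_select]
    return grid[top], avg_var[top]

def toy_pred_var(grid, Z_train, sigma_diag):
    """Placeholder variance that grows with squared distance to the nearest training input."""
    d2 = ((grid[:, None, :] - Z_train[None, :, :]) ** 2).sum(axis=-1).min(axis=1)
    return d2[:, None] * np.asarray(sigma_diag)[None, :]   # shape (N_AS, q)

bounds = [(0.0, 1.0), (0.0, 1.0), (0.0, 1.0)]             # p + t = 3 inputs (toy values)
grid = make_grid(bounds, divisions=[5, 4, 3])              # N_AS = 5 * 4 * 3 = 60 grid points
Z_train = np.array([[0.1, 0.1, 0.1], [0.9, 0.2, 0.5]])
var = toy_pred_var(grid, Z_train, sigma_diag=[1.0, 0.5])
new_points, new_scores = select_candidates(grid, var)      # 20 candidates for the expensive model
print(new_points.shape, new_scores[:3])
```

Using a different number of divisions per dimension, as in this toy grid, lets the modeler spend more grid points on the inputs expected to matter most.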

In implementing the adaptive sampling procedure as outlined above, we include two filters that ensure better sampling of new points. The first filter flags points that are very close to one another. This filter essentially avoids sampling more than one point from within a small subset of the input space, since doing so is not likely to provide more information. The filter takes the first 20 elements of the vector AS with the largest predictive variance, and selects those that are at least some distance threshold \(\tau _{\text {AS}}\) apart from one another. When a point is flagged and excluded, the next point from vector AS becomes a candidate to be added to \(\boldsymbol {X}^{\text {AS}}\) if it satisfies the filter criterion.
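One way to realize this first filter is a greedy pass over the candidates in order of decreasing predictive variance, sketched below in Python. The function name `filter_by_distance`, the use of the Euclidean distance, and the toy candidate list are assumptions; the text does not specify the distance metric or whether the inputs are rescaled before distances are computed.

```python
import numpy as np

def filter_by_distance(ranked_points, tau, n_select=20):
    """Keep candidates, in ranked order, that lie at least tau away from every kept point."""
    selected = []
    for z in ranked_points:
        # A candidate closer than tau to an already kept point is flagged and skipped,
        # so the next candidate in the ranking gets its turn
        if all(np.linalg.norm(z - s) >= tau for s in selected):
            selected.append(z)
        if len(selected) == n_select:
            break
    return np.array(selected)

# Toy candidates already sorted by decreasing average predictive variance
ranked = np.array([[0.0, 0.0], [0.01, 0.0], [1.0, 1.0], [1.0, 1.02], [0.5, 0.5]])
kept = filter_by_distance(ranked, tau=0.1, n_select=3)
print(kept.tolist())   # [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
```

In this toy run the second and fourth candidates are dropped because each lies within the threshold of a point that ranked higher, and the fifth candidate fills the remaining slot.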