Abstract

Diet and lifestyle play a significant role in the development of colorectal cancer, but the full complexity of the association is not yet understood. Dietary pattern analysis is an important new technique that may help to elucidate the relationship. This review examines the most common techniques for deriving dietary patterns and reviews dietary pattern/colorectal cancer studies published between September 2011 and August 2012. The studies reviewed are consistent with prior research but include a more diverse international population. Results from investigations using a priori dietary patterns (e.g., diet quality scores) and a posteriori methods, which identify existing eating patterns (e.g., principal component analysis), continue to support the benefits of a plant-based diet with some dairy as a means to lower the risk of colorectal cancer, whereas a diet high in meats, refined grains, and added sugar appears to increase risk. The association between colorectal cancer and alcohol remains unclear.

Introduction

Colorectal cancer is the third most commonly diagnosed cancer in men and the second most commonly diagnosed cancer in women worldwide [1]. Previous reports have concluded that diet and lifestyle choices play a significant role in the development of colorectal cancer [2]. In 2007, the World Cancer Research Fund (WCRF) and the American Institute for Cancer Research (AICR) jointly issued a groundbreaking report entitled “Food, Nutrition, Physical Activity, and the Prevention of Cancer: a Global Perspective,” commonly referred to as the “Second Expert Report” [3]. Convincing evidence in the report connects several specific diet and lifestyle behaviors to the development of colorectal cancer. However, the report also noted several areas where associations were inconclusive. The authors highlighted the need for new methods of investigation to further the understanding of the causal relationship between diet, lifestyle, and cancer development. One such area cited was examination of “broad patterns of diets, and the interrelationship between elements of diets…” [4].

A 2010 update of the colorectal cancer section of the report included many new studies that took the broader approach of examining diet through dietary pattern investigation [5]. The 2010 report reclassified certain dietary factors previously linked at the probable level to the convincing level; however, many suspected associations continued to be ambiguous, necessitating continued research and review. Since the 2010 update, several new dietary pattern studies have been published, adding to the current knowledge base. The goal of this review is to provide an overview of the benefits of applying dietary pattern analysis to the investigation of colorectal cancer, briefly describe the most common techniques for deriving dietary patterns, and update the evidence linking colorectal cancer to dietary patterns published since 2010.

Dietary Patterns

Use of dietary patterns to investigate the impact of diet on chronic disease risk has become increasingly common during the past 15 years. Dietary patterns have gained favor as a comprehensive method of examining diet that better captures the in vivo interrelationship of nutrients as they are consumed within a population. Dietary patterns are particularly useful for the investigation of diet and chronic disease development, because chronic disease often is influenced by many interacting variables that modify each other’s impact [6]. Unlike the traditional single-nutrient model, measures of total diet quality take into account undiscovered and undefined characteristics of foods and account for interaction between all nutrients at the levels they are present in the diet.

The term dietary pattern is used in the literature in reference to several techniques of defining total diet quality [7]. Two primary approaches to derivation of dietary patterns have been identified. The first is the creation of an a priori algorithm that computes a dietary index or score, a single number indicating total dietary quality. The second approach derives dietary patterns empirically by applying statistical techniques a posteriori to existing dietary data to elucidate distinct patterns as they exist within a given cohort. Both techniques have been shown to validly reflect population dietary patterns but differ in what they seek to evaluate [8].

A Priori Dietary Patterns

A priori dietary patterns generally take the form of indices and scores designed to compare an individual’s intake to a set of expert guidelines defining dietary quality. Expert guidance on optimal dietary behavior for disease prevention often is the synthesis of multiple lines of research, suggesting a relationship between consumption or avoidance of individual foods or nutrients and disease development. When guidelines are created recommending consumption of nutrients and foods in a systematic manner, they reflect the informed opinions of the experts involved, which may or may not have the predicted impact on disease risk. Creation of an index or score based on expert guidelines seeks to evaluate the realistic effect of greater adherence to the dietary pattern created from guidelines based on single nutrient/food data [9]. Several new studies that employed this technique, including a study examining adherence to the WCRF 2007 recommendations and risk of colorectal cancer development [23], are discussed later in this review.
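As a minimal sketch of how such an index can be computed, the example below counts how many guideline criteria an individual's reported intake meets. The component names, cutoffs, and intake values are hypothetical and chosen only for illustration; they are not the scoring rules of any study reviewed here.

```python
def adherence_score(intake, criteria):
    """Count how many guideline criteria a person's intake meets."""
    return sum(1 for name, meets in criteria.items() if meets(intake[name]))

# Hypothetical components and cutoffs, for illustration only.
criteria = {
    "fruit_veg_g_per_day": lambda g: g >= 400,
    "red_meat_g_per_week": lambda g: g <= 500,
    "sugary_drinks_per_day": lambda n: n == 0,
    "fiber_g_per_day": lambda g: g >= 25,
}

# Invented intake for one participant.
person = {
    "fruit_veg_g_per_day": 450,
    "red_meat_g_per_week": 700,
    "sugary_drinks_per_day": 0,
    "fiber_g_per_day": 28,
}

score = adherence_score(person, criteria)  # one point per criterion met
```

Published scores often award partial points or weight components differently; a simple count is used here only because it makes the single-number nature of an a priori score easy to see.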

A Posteriori Dietary Patterns

Empirically derived dietary patterns are statistically extrapolated from observed patterns of consumption within a given cohort. The objective of empirically derived dietary patterns is to identify favorable profiles associated with disease prevention, as well as unfavorable profiles as they exist in a given community [6–9].

There are several strengths of examining a posteriori dietary patterns. First, data-driven analysis can identify many different types of dietary patterns as they exist within different populations, whereas a nutrition index or risk score only ranks on a continuum or indicates in a linear manner whether recommendations are met. It is desirable to be able to identify multiple dietary patterns, because it is probable that multiple dietary patterns found within diverse populations are beneficial for disease prevention. Whereas index- and score-based methods of summarizing dietary patterns are helpful to evaluate specific dietary goals or questions, they do not capture the full range of beneficial diets, because they are limited by predetermined hypotheses. Second, empirically derived dietary patterns are very useful when there is little or no information to inform expert guidance for the creation of indexes and scores. Finally, a significant advantage of examining empirically derived dietary patterns within a population is that in addition to quantifying healthful diets, they are effective for identification of dietary patterns associated with increased risk of disease. Once identified, these high-risk patterns can then be targeted for prevention efforts.

Several techniques have been applied to the derivation of a posteriori dietary patterns. Factor analysis has become the most common method of deriving patterns, and within the broader technique, principal component analysis (PCA) has been predominantly used. Factor analysis has been used in many different cohorts internationally and usually results in identification of two or three main patterns. Because factor analysis scores each individual on a continuous scale for each pattern, tertiles, quartiles, or quintiles of each pattern score are usually examined so that comparisons are not blurred by the similar dietary habits of individuals near the center of the continuum. Any cutoff that divides the groups near the center is artificial, because individuals whose diets fall in the middle of the range are more alike than they are different.
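The steps described above (derive a pattern, score each individual, then split the scores into quantiles) can be sketched as follows. This is an illustrative outline only: the intake data are invented, the function names are hypothetical, only the first principal component is extracted (by power iteration on the correlation matrix), and the factor rotation that published analyses often apply is omitted.

```python
import math

def standardize(rows):
    """Convert each column (food group) to z-scores."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
           for j in range(p)]
    return [[(r[j] - means[j]) / sds[j] for j in range(p)] for r in rows]

def correlation_matrix(z):
    """Correlation matrix of already-standardized columns."""
    n, p = len(z), len(z[0])
    return [[sum(r[a] * r[b] for r in z) / (n - 1) for b in range(p)]
            for a in range(p)]

def first_component(matrix, iterations=500):
    """Leading eigenvector (first principal component) by power iteration."""
    p = len(matrix)
    v = [1.0] + [0.0] * (p - 1)  # start on the first food group
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def quantile_groups(scores, k):
    """Rank-based groups 0..k-1, where 0 holds the lowest scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    groups = [0] * len(scores)
    for rank, i in enumerate(order):
        groups[i] = rank * k // len(scores)
    return groups

# Invented servings per day for ten participants.
foods = ["fruit", "vegetables", "red_meat", "refined_grains"]
intake = [
    [5, 6, 1, 1], [4, 5, 2, 1], [3, 3, 3, 3], [2, 2, 4, 4], [1, 1, 5, 6],
    [4, 4, 2, 2], [2, 3, 4, 3], [5, 5, 1, 2], [1, 2, 6, 5], [3, 4, 3, 2],
]

z = standardize(intake)
loadings = first_component(correlation_matrix(z))  # one weight per food group
scores = [sum(l * x for l, x in zip(loadings, row)) for row in z]
tertiles = quantile_groups(scores, 3)  # 0 = lowest adherence, 2 = highest
```

In this toy data the first component loads positively on fruit and vegetables and negatively on red meat and refined grains, so a high score marks a plant-based pattern. Risk is then typically compared between the highest and lowest quantile of the score.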

A less commonly used technique known as cluster analysis also has been used. Unlike factor analysis, which sorts the cohort based on input factors, such as foods and food groups, cluster analysis determines groups by focusing on differences in intake among individuals [9, 10]. Factor analysis and similar techniques result in groups that overlap each other; conversely, cluster analysis determines discrete patterns of intake among subgroups, creating nonoverlapping clusters.
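To illustrate the nonoverlapping nature of clusters, the sketch below applies plain k-means, one common clustering algorithm, to invented intake data. The choice of k-means, the starting centroids, and the data are assumptions made for illustration; the studies reviewed here may have used other clustering procedures.

```python
import math

def kmeans(points, centroids, iterations=20):
    """Plain k-means: assign each person to the nearest centroid, then update."""
    centroids = [list(c) for c in centroids]
    labels = []
    for _ in range(iterations):
        # Each person receives exactly one cluster label.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Move each centroid to the mean intake of its current members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

# Invented servings per day: (fruit and vegetables, red meat).
people = [[6, 1], [5, 1], [6, 2], [1, 5], [2, 6], [1, 6]]

# Start from two participants as initial centroids (an arbitrary choice).
labels, centers = kmeans(people, [people[0], people[3]])
```

Unlike a factor score, which places every person somewhere on each pattern's continuum, each person here belongs to exactly one cluster.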

During the past several years, a lesser known a posteriori method known as reduced rank regression (RRR) also has emerged. Reduced rank regression is somewhat similar to principal component factor analysis in that linear functions of predictor variables, such as food groups, are identified, but instead of explaining variance in intake directly, the functions are chosen to explain variance in an intermediate response variable, such as a biomarker (or biomarkers) [11]. The technique has been promoted as having the combined strengths of both a priori and a posteriori methods, because RRR uses two information sources: prior information, which allows the method to account for current scientific evidence, and data from the study, which allows it to represent the in vivo correlation of dietary components. The major limitation of RRR concerns the use of a biomarker in the model. If the biomarker is not a good proxy measure, the model will contain a significant source of error that may influence findings. Due to this limitation, few studies using RRR have been published to date. However, one manuscript, published in 2011, that used a related technique [18] is reviewed later.
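The contrast between the two methods can be written as a pair of optimization problems. The notation below is an assumption introduced for illustration and is not taken from the cited studies: X is the standardized matrix of food group intakes, y_1, ..., y_q are the standardized response variables (biomarkers), and w is the vector of food group weights that defines a pattern score Xw.

```latex
\begin{aligned}
\text{PCA:}\quad & \mathbf{w}_{\mathrm{PCA}}
  = \arg\max_{\lVert \mathbf{w} \rVert = 1} \operatorname{Var}(X\mathbf{w}) \\
\text{RRR:}\quad & \mathbf{w}_{\mathrm{RRR}}
  = \arg\max_{\operatorname{Var}(X\mathbf{w}) = 1}
    \sum_{j=1}^{q} \operatorname{Cov}(X\mathbf{w}, \mathbf{y}_j)^{2}
\end{aligned}
```

Under this formulation, PCA picks the weights that explain the most variation in intake itself, whereas RRR picks the weights whose score explains the most variation in the responses. With a single response (q = 1), the RRR score is proportional to the fitted values from an ordinary linear regression of the response on the food groups.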

A Posteriori Dietary Patterns and Colorectal Cancer

Since the 2010 WCRF/AICR updated literature review, several important studies have applied a posteriori methods of investigating dietary patterns to risk of colorectal cancer development. In early 2012, Magalhães et al. published a systematic review and meta-analysis of dietary patterns derived by principal component analysis and the development of colorectal cancer. The review included literature through August 2010 [12••]. Studies available for inclusion in the meta-analysis were primarily from North American and European populations. The two most common dietary pattern types identified within the body of literature reviewed were a healthy pattern characterized by high fruit and vegetable intake and an unhealthy “western” pattern with a predominance of meat and refined grains. A few studies also identified a high alcohol “drinker” pattern. The healthy pattern was associated with a lower risk of colon cancer, whereas the western pattern was associated with a higher risk. Neither pattern was associated with rectal cancer. Within the meta-analysis, the drinker pattern was associated with neither colon nor rectal cancer.

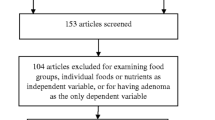

Between September 2011 and August 2012, five studies used a posteriori dietary patterns to examine risk of colorectal cancer development. Results from these studies are described in more detail in Table 1. Of these studies, three came from South America, one from Europe, and one from a cohort of black American women. All three South American studies were case-control designs.

One small study (45 cases, 95 controls) from the Cordoba province in Argentina identified three major dietary patterns [13], including a “Southern Cone” pattern, which was characterized predominantly by red and processed meats, starchy vegetables, wine, fats, and oil; a “high sugar drinks” pattern with high intake of sugar-sweetened beverages, foods with added sugar, fats and oil, fish and poultry; and a “prudent pattern” high in dairy foods, fruits, vegetables, poultry, and fish. The high sugar drinks pattern was strongly associated with development of colorectal cancer, followed by the Southern Cone pattern. In contrast with the other two dietary patterns, the prudent pattern was associated with lower odds of colorectal cancer development.

Another study by De Stefani et al. looked at 610 cases and more than 1,200 controls in Uruguay [14]. Application of factor analysis derived four food-based dietary patterns, including a “prudent pattern” high in fruits, vegetables, and white meat intake; a “western pattern” high in red meat, grains, and tubers intake; a “drinker pattern” high in alcohol and processed meat consumption; and in men only, a “traditional pattern” high in eggs, vegetables, fruits, and grains. The prudent pattern was inversely associated with colorectal cancer in both men and women. The western pattern was associated only with increased risk for colon cancer, and the drinker pattern was marginally associated with total colorectal cancer only in men. The men-only traditional pattern, which resembles the prudent pattern in being high in fruits and vegetables, also was inversely associated with colorectal cancer.

De Stefani et al. also examined dietary patterns derived from nutrients rather than foods within the same study population, resulting in three patterns [15]. The meat-based pattern resembled the food-based western pattern and was high in animal protein and fat from red and processed meats, dairy, and eggs. The plant-based pattern was similar to the food-based prudent pattern and was high in vitamin C and carotenoids. The carbohydrate pattern consisted of high intakes of both refined and unrefined grains. Similar to their findings with food-based patterns, in the nutrient-based analysis the meat-based pattern was associated with higher odds of colorectal cancer and the plant-based pattern with lower odds of colorectal cancer. The carbohydrate pattern showed no association with risk.

A hospital-based, case-control study in Portugal used both principal component analysis and cluster analysis to derive dietary patterns [16]. Magalhães et al. first derived ten dietary patterns with principal component analysis and then, based on these patterns, grouped participants into three final patterns according to their intake similarities. The first pattern was a typical healthy pattern with high intake of fruits, vegetables, whole grains, poultry, fish, and dairy products. The second was a western-like pattern high in red and processed meat, refined grains, sweets, and alcoholic beverages. The last pattern was characterized by moderate consumption of foods commonly found in both of the previous two patterns. In comparison with the healthy pattern, the moderate consumption pattern was most strongly associated with rectal cancer and more weakly associated with colon cancer. The western-like pattern was strongly associated with total colorectal cancer and colon cancer. Although there was a suggestion of a specific association between the western-like pattern and rectal cancer, it did not reach statistical significance.

In a large cohort of U.S. black women focused on colorectal adenoma incidence, two dietary patterns were derived [17•]. The “prudent pattern” extrapolated in this population is similar to the “healthy” pattern derived in other populations and is characterized by a plant-based diet with low-fat animal products. The “western” pattern was similar to a typical unhealthy pattern of animal protein, fat, refined grains, and sugar. After 10 years of follow-up, a higher risk for colorectal adenomas was observed with increasing adherence to the western pattern and a lower risk was observed with the prudent pattern.

Another approach to deriving dietary patterns is to identify foods that are correlated with biomarkers of colorectal cancer risk. When the goal is to identify foods that are simultaneously correlated with several biomarkers, the reduced rank regression procedure outlined earlier is used. If only one biomarker is being considered, linear regression can be employed. This process was applied in the Nurses’ Health Study, a cohort of U.S. women, with fasting C-peptide as the biomarker of colorectal cancer risk [18•]. A diet high in red meat, fish, and sugar-sweetened beverages but low in dairy, coffee, and whole grains was correlated with a higher C-peptide level. Adherence to this pattern appeared to increase colon cancer risk only among those with a body mass index (BMI) > 25 or who were inactive.
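For the single-biomarker case, the idea can be sketched as an ordinary least-squares fit of the biomarker on food group intakes, with the fitted coefficients then used as weights for a pattern score. The data, variable names, and helper functions below are invented for illustration and do not reproduce the procedure or results of the cited study.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def fit_ols(X, y):
    """Least-squares coefficients [intercept, b1, ..., bp] via normal equations."""
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)

# Invented servings per day: (red meat, whole grains) for five participants.
intake = [[1, 4], [2, 3], [3, 1], [4, 2], [5, 0]]
# Invented biomarker values, constructed as 1.0 + 0.5*meat - 0.3*grains.
biomarker = [0.3, 1.1, 2.2, 2.4, 3.5]

coefs = fit_ols(intake, biomarker)
weights = coefs[1:]  # food group weights; the intercept is dropped

# Pattern score: higher values mean a diet predicted to raise the biomarker.
pattern_scores = [sum(w * x for w, x in zip(weights, row)) for row in intake]
```

The resulting score can then be related to disease risk in the same way as a factor score, for example by comparing quantiles.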

Conclusions from a Posteriori Dietary Pattern Studies

Studies from the past 2 years continue to indicate the benefits of a plant-based pattern with some dairy products as a means to lower the risk of colorectal cancer, whereas a diet high in meats, refined grains, and added sugar appears to increase the risk. There was remarkable consistency across these studies in the patterns derived by principal component analysis, as well as in the results across different geographic and ethnic populations. Regardless of the name given to the patterns by the investigators, a plant-based pattern and an animal food and refined grains pattern emerged from each population. It is notable that the number of food groups, the exact type of foods within each food group, and the quantity of consumption differed between populations. However, the consistency of results suggests that specific differences in foods are less important than consuming an overall plant-based diet in colorectal cancer prevention. Although there were relatively few studies in Asian and South American populations, existing data appeared to indicate that results do not differ between different ethnic backgrounds.

In interpreting the results, one must keep in mind that both principal component analysis and cluster analysis identify existing eating patterns. Therefore, even when an association is detected, it does not represent the most beneficial or the most detrimental eating pattern. On the other hand, because patterns derived by reduced rank regression or linear regression focus on associations with selected biomarker(s), the food groups identified may not be intuitively logical and may contain a combination of food groups that may not represent known common eating patterns.

A Priori Diet Quality Scores and Colorectal Cancer

A priori diet quality scores measure adherence to dietary recommendations for general health or specific disease prevention. Previous studies have examined a variety of dietary quality scores that were not originally constructed specifically for colorectal cancer prevention but have observed a lower risk of colorectal cancer with better adherence to these guidelines [19–21]. Guidelines evaluated included the DASH score (Dietary Approaches to Stop Hypertension) [19, 21], which is a set of general healthy eating guidelines, the Healthy Eating Index 2005, and its modified version the Alternate Healthy Eating Index [20]. They share similar features in emphasizing fruits, vegetables, and whole grains intake, and discouraging excessive animal products consumption. Adherence scores also have been developed for regional diets, such as the Mediterranean diet, which include characteristics that have been shown to be beneficial [22].

Since 2010, several studies have looked at the association between total diet quality as measured by an a priori diet quality score and development of colorectal cancer. Studies are summarized in Table 2. As with prior research, no score was specifically developed for colorectal cancer prevention, but one study published in the past year investigated a score based on the 2007 World Cancer Research Fund and American Institute for Cancer Research Second Expert Report guidelines [3].

Besides emphasizing fruits and vegetables and discouraging red meats, the WCRF/AICR guidelines promote unprocessed cereals and recommend lower intake of energy-dense foods, refined starch, sugary drinks, and foods preserved with salt. Romaguera et al. constructed an adherence score to these guidelines with a maximum of 6 points for men and 7 points for women, including an additional breastfeeding recommendation [23••]. This set of guidelines was tested using data from the EPIC cohort, which is a multi-country European follow-up study. Among almost 39,000 adults followed for a median of 11 years, adherence to these guidelines was clearly associated with a lower risk for colorectal cancer. Each one-point increase in the score conferred a 12% risk reduction (relative risk = 0.88; 95% confidence interval = 0.84, 0.91).
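A per-point relative risk converts to a percent change in risk as (1 - RR) x 100, so a relative risk of 0.88 corresponds to about a 12% lower risk per point. The short sketch below shows the arithmetic. The function names are illustrative, and the compounding across several points assumes a log-linear trend, which is a simplifying assumption rather than a result reported by the study.

```python
def percent_risk_reduction(relative_risk):
    """Percent reduction in risk implied by a relative risk below 1."""
    return (1.0 - relative_risk) * 100.0

def compounded_relative_risk(rr_per_point, points):
    """Relative risk for a multi-point difference, assuming a log-linear trend."""
    return rr_per_point ** points

rr = 0.88  # per-point relative risk reported in the text
reduction = percent_risk_reduction(rr)          # about 12 percent per point
three_points = compounded_relative_risk(rr, 3)  # about 0.68 under the assumption
```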

It is not surprising that adherence to these guidelines resulted in a lower colorectal cancer risk, because many of the WCRF guidelines were shown individually to influence colorectal cancer risk. Red and processed meats and being overweight are well-established risk factors [24, 25]. High fiber intake has been shown to potentially reduce risk [26]. Quickly digested carbohydrates in the form of refined grains and sugary drinks may increase colorectal cancer risk through the insulin-IGF (insulin-like growth factor) axis, although evidence for an in vivo effect is limited [27].

The European Food Safety Authority also established a set of seven guidelines on carbohydrates, total fat, linoleic acid, alpha-linolenic acid, eicosapentaenoic acid (EPA), docosahexaenoic acid (DHA), fiber, and water. An adherence score of 0 to 6 was constructed for an Italian hospital-based, case-control study (1,953 cases, 4,154 controls) [28]. Whereas this score was not associated with overall colorectal cancer risk, among men, there was a suggestion that the top score (6 points) was inversely associated with risk of colon cancer (relative risk = 0.78; 95% confidence interval = 0.31-1.54, not statistically significant). Among women, a higher score actually conferred a higher risk of rectal cancer, and there was no association with colon cancer. When the authors analyzed individual components of the score, there was a suggestion that linoleic acid and alpha-linolenic acid were weakly associated with colorectal cancer risk. However, there is no evidence from the existing literature to suggest that these two fatty acids, or polyunsaturated fatty acids, are associated with colorectal cancer or specifically rectal cancer risk.

In this case-control study, dietary information was obtained after the participants were informed of their diagnosis. Therefore, recall bias may have occurred; however, it is difficult to speculate what types of foods the cases and controls would report differently. Among the components of this score, only fiber has been clearly shown to reduce the risk of colorectal cancer [26]. Therefore, it is not surprising that the score was not associated with colorectal cancer.

The traditional Mediterranean diet of the 1960s consists of high intakes of plant foods and olive oil, moderate amounts of dairy, and low quantities of red meat and sweets [29]. Previous studies have shown that adherence to the Mediterranean diet was associated with a lower risk of colorectal cancer [20, 21]. A cohort study from the Italian arm of the EPIC study adds to that body of evidence [30]. A Mediterranean diet score specific to the Italian population, the Italian Mediterranean Index, was constructed. The 12-point score emphasized high intakes of pasta, common Mediterranean vegetables, fish, olive oil, and legumes and discouraged intakes of red meat, sugary beverages, potatoes, and butter. After a mean follow-up of 11 years, individuals in the top half of the score range had half the risk of colorectal cancer of those who scored 0 or 1 point. A lower risk was observed for both colon and rectal cancers.

In addition, data from a hospital-based, case-control study in Greece with 250 cases and 250 controls also supported the benefits of the Mediterranean diet [31]. This study utilized a 15-component adherence score with a maximum of 75 points. For each point increase in this Modified Mediterranean Diet score, a 12% reduction of colorectal cancer odds was observed (odds ratio = 0.88; 95% confidence interval = 0.84-0.92). Individuals with or without metabolic syndrome benefited equally. Just as with the WCRF recommendations, the Mediterranean diet also included many of the dietary features that have been shown to reduce colorectal cancer risk, including low red meat and sweets intake, high consumption of plant foods, and moderate dairy intake [32].

A six-point healthy Nordic food scale consisting of common Scandinavian foods, including apples and pears, cabbage and root vegetables, rye bread and oatmeal, and fish, was constructed for a Danish study [33]. After a median of 13 years of follow-up, a significant colorectal cancer risk reduction of 35% (confidence interval 6-54%) was observed in women, but not in men. The food items in this index generally fit into the known beneficial food groups of fruits, vegetables, and whole grains. However, the score does not emphasize low intakes of detrimental food groups, such as red meats and added sugar, which may partly explain the inconsistent results between men and women, because men may consume more red meat than women.

Conclusions

Both previous and recent data indicate that a plant-based diet with abundant fruits and vegetables, moderate amounts of dairy, and limited red and processed meats, refined grains, and added sugar is associated with lower risk of colorectal cancer. Results from a priori methods of defining dietary patterns (e.g., diet quality scores) and a posteriori methods of identifying existing eating patterns (e.g., principal component analysis) are remarkably consistent in supporting the benefits of that combination of foods. In addition, data are consistent despite the wide variety of specific types of fruits and vegetables within different regions. The cohort studies reviewed have reasonable follow-up durations that allowed for adequate time to capture potential dietary influences.

However, despite observed patterns associated with clear risk reduction, current data do not necessarily identify a single optimal diet for colorectal cancer prevention. None of the sets of dietary characteristics, whether a priori recommendations or existing consumption patterns, was developed specifically for colorectal cancer prevention. Both previous and recent data are mostly from Caucasian populations. Although confirmation in other ethnicities is worthwhile, there is little reason to expect that the same dietary pattern would not be beneficial. In conclusion, there is strong evidence that a minimally processed, plant-based diet with moderate dairy consumption and low intake of red and processed meat is associated with lower risk of colorectal cancer.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

1. International Agency for Research on Cancer. Globocan 2008: Cancer Fact Sheet. Available at http://globocan.iarc.fr/factsheets/cancers/colorectal.asp. Accessed August 2012.

2. World Cancer Research Fund/American Institute for Cancer Research. Chapter 7: Cancers. In Food, Nutrition, Physical Activity and the Prevention of Cancer: a Global Perspective. Washington DC: AICR; 2007.

3. World Cancer Research Fund and American Institute for Cancer Research. Food, Nutrition, Physical Activity and the Prevention of Cancer: a Global Perspective. Washington DC: AICR; 2007.

4. World Cancer Research Fund and American Institute for Cancer Research. Chapter 11: Research issues. In Food, Nutrition, Physical Activity and the Prevention of Cancer: a Global Perspective. Page 360. Washington DC: AICR; 2007.

5. World Cancer Research Fund and American Institute for Cancer Research. WCRF/AICR Systematic Literature Review Continuous Update Project Report: The Associations between Food, Nutrition and Physical Activity and the Risk of Colorectal Cancer. Completed Oct 2010. Available at http://www.dietandcancerreport.org/cancer_resource_center/downloads/cu/Colorectal%20cancer%20CUP%20report%20Oct%202010.pdf. Accessed September 2012.

6. Hu FB. Dietary pattern analysis: a new direction in nutritional epidemiology. Curr Opin Lipidol. 2002;13:3–9.

7. Michels KB, Schulze MB. Can dietary patterns help us detect diet-disease associations? Nutr Res Rev. 2005;18:241–8.

8. Moeller SM, Reedy J, Millen AE, et al. Dietary patterns: challenges and opportunities in dietary patterns research, an Experimental Biology workshop, April 1, 2006. J Am Diet Assoc. 2007;107:1233–9.

9. Kant AK. Dietary patterns and health outcomes. J Am Diet Assoc. 2004;104:615–35.

10. Quatromoni PA, Copenhafer DL, Demissie S, et al. The internal validity of a dietary pattern analysis. The Framingham Nutrition Studies. J Epidemiol Community Health. 2002.

11. Hoffmann K, Boeing H, Boffetta P, et al. Comparison of two statistical approaches to predict all-cause mortality by dietary patterns in German elderly subjects. Br J Nutr. 2005;93:709–16.

12. •• Magalhães B, Peleteiro B, Lunet N. Dietary patterns and colorectal cancer: systematic review and meta-analysis. Eur J Cancer Prev. 2012;21:15–23. This review and meta-analysis examines dietary pattern studies that used principal component analysis to identify patterns and summarizes three main patterns that emerge from the literature. The patterns identified are a “healthy” plant-based diet, a “western” animal protein, refined grain pattern, and in fewer studies a “drinker” pattern identified by relatively higher alcohol intake. The patterns are consistent across studies despite differences in the foods that comprise the patterns.

13. Pou SA, Díaz MdP, Osella AR. Applying multilevel model to the relationship of dietary patterns and colorectal cancer: an ongoing case-control study in Córdoba, Argentina. Eur J Nutr. 2012;51:755–64.

14. De Stefani E, Deneo-Pellegrini H, Ronco AL, et al. Dietary patterns and risk of colorectal cancer: a factor analysis in Uruguay. Asian Pac J Cancer Prev. 2011;12:753–9.

15. De Stefani E, Ronco AL, Boffetta P, et al. Nutrient-derived dietary patterns and risk of colorectal cancer: a factor analysis in Uruguay. Asian Pac J Cancer Prev. 2012;13:231–5.

16. Magalhães B, Bastos J, Lunet N. Dietary patterns and colorectal cancer: a case-control study from Portugal. Eur J Cancer Prev. 2011;20:389–95.

17. • Makambi KH, Agurs-Collins T, Bright-Gbebry M, et al. Dietary patterns and the risk of colorectal adenomas: the black women's health study. Cancer Epidemiol Biomarkers Prev. 2011;20:818–25. This study examined a posteriori dietary patterns in black American women. Patterns identified were similar to those found in white populations in America and Europe. Associations were similar in this population to those observed in more studied cohorts.

18. • Fung TT, Hu FB, Schulze M, et al. A dietary pattern that is associated with C-peptide and risk of colorectal cancer in women. Cancer Causes Control. 2012;23:959–65. This study uses a relatively new method of deriving dietary patterns using linear regression and a biomarker intermediary, a technique similar to reduced rank regression. The strengths of both a priori hypotheses and a posteriori observation are utilized.

19. Dixon LB, Subar AF, Peters U, et al. Adherence to the USDA Food Guide, DASH Eating Plan, and Mediterranean dietary pattern reduces risk of colorectal adenoma. J Nutr. 2007;137:2443–50.

20. Reedy J, Mitrou PN, Krebs-Smith SM, et al. Index-based dietary patterns and risk of colorectal cancer: the NIH-AARP Diet and Health Study. Am J Epidemiol. 2008;168:38–48.

21. Fung TT, Hu FB, Wu K, et al. The Mediterranean and Dietary Approaches to Stop Hypertension (DASH) diets and colorectal cancer. Am J Clin Nutr. 2010;92:1429–35.

22. Sofi F, Abbate R, Gensini GF, et al. Accruing evidence on benefits of adherence to the Mediterranean diet on health: an updated systematic review and meta-analysis. Am J Clin Nutr. 2010;92:1189–96.

23. •• Romaguera D, Vergnaud AC, Peeters PH, et al. Is concordance with World Cancer Research Fund/American Institute for Cancer Research guidelines for cancer prevention related to subsequent risk of cancer? Results from the EPIC study. Am J Clin Nutr. 2012;96:150–63. This study applied a risk score based on the WCRF/AICR guidelines to a cohort to test the ability of the guidelines to act in concert as a dietary pattern that is effective for prevention of colorectal cancer. The guidelines were created for cancer prevention in general; testing against specific forms of cancer is an important step in verifying their validity.

24. Chan DS, Lau R, Aune D, et al. Red and processed meat and colorectal cancer incidence: meta-analysis of prospective studies. PLoS One. 2011;6:e20456.

25. Okabayashi K, Ashrafian H, Hasegawa H, et al. Body mass index category as a risk factor for colorectal adenomas: a systematic review and meta-analysis. Am J Gastroenterol. 2012;107:1175–85.

26. Aune D, Chan DS, Lau R, et al. Dietary fibre, whole grains, and risk of colorectal cancer: systematic review and dose-response meta-analysis of prospective studies. BMJ. 2011;343:d6617.

27. Aune D, Chan DS, Lau R, et al. Carbohydrates, glycemic index, glycemic load, and colorectal cancer risk: a systematic review and meta-analysis of cohort studies. Cancer Causes Control. 2012;23:521–35.

28. Turati F, Edefonti V, Bravi F, et al. Adherence to the European food safety authority's dietary recommendations and colorectal cancer risk. Eur J Clin Nutr. 2012;66:517–22.

29. Nestle M. Mediterranean diets: historical and research overview. Am J Clin Nutr. 1995;61:1313S–20S.

30. Agnoli C, Grioni S, Sieri S, et al. Italian Mediterranean Index and risk of colorectal cancer in the Italian section of the EPIC cohort. Int J Cancer. 2012. http://onlinelibrary.wiley.com

31. Kontou N, Psaltopoulou T, Soupos N, et al. Metabolic Syndrome and Colorectal Cancer: The Protective Role of Mediterranean Diet-A Case-Control Study. Angiology. 2012;63:390–6.

32. Aune D, Lau R, Chan DS, et al. Dairy products and colorectal cancer risk: a systematic review and meta-analysis of cohort studies. Ann Oncol. 2012;23:37–45.

33. Kyrø C, Skeie G, Loft S, et al. Adherence to a healthy Nordic food index is associated with a lower incidence of colorectal cancer in women: The Diet, Cancer and Health cohort study. Br J Nutr. 2012:1–8. http://journals.cambridge.org

Acknowledgments

T.T. Fung is supported by a grant from the National Institutes of Health (NIH).

Disclosure

No potential conflicts of interest relevant to this article were reported.

About this article

Cite this article

Fung, T.T., Brown, L.S. Dietary Patterns and the Risk of Colorectal Cancer. Curr Nutr Rep 2, 48–55 (2013). https://doi.org/10.1007/s13668-012-0031-1

DOI: https://doi.org/10.1007/s13668-012-0031-1