Abstract

Thoughtful clinical trial design is critical for efficient therapeutic development, particularly in the field of amyotrophic lateral sclerosis (ALS), where trials often aim to detect modest treatment effects among a population with heterogeneous disease progression. Appropriate outcome measure selection is necessary for trials to provide decisive and informative results. Investigators must consider the outcome measure’s reliability, responsiveness to detect change when change has actually occurred, clinical relevance, and psychometric performance. ALS clinical trials can also be performed more efficiently by utilizing statistical enrichment techniques. Innovations in ALS prediction models allow for selection of participants with less heterogeneity in disease progression rates without requiring a lead-in period, or participants can be stratified according to predicted progression. Statistical enrichment can reduce the needed sample size and improve study power, but investigators must find a balance between optimizing statistical efficiency and retaining generalizability of study findings to the broader ALS population. Additional progress is still needed for biomarker development and validation to confirm target engagement in ALS treatment trials. Selection of an appropriate biofluid biomarker depends on the treatment mechanism of interest, and biomarker studies should be incorporated into early phase trials. Inclusion of patients with ALS as advisors and advocates can strengthen clinical trial design and study retention, but more engagement efforts are needed to improve diversity and equity in ALS research studies. Another challenge for ALS therapeutic development is identifying ways to respect patient autonomy and improve access to experimental treatment, something that is strongly desired by many patients with ALS and ALS advocacy organizations. 
Expanded access programs that run concurrently to well-designed and adequately powered randomized controlled trials may provide an opportunity to broaden access to promising therapeutics without compromising scientific integrity or rushing regulatory approval of therapies without adequate proof of efficacy.

Introduction

Improved therapeutics for patients with amyotrophic lateral sclerosis (ALS) are desperately needed to reduce functional decline, improve quality of life, and extend survival. The history of ALS therapeutic development includes a long list of negative and failed clinical trials, necessitating a critical review of past clinical trial designs and an innovative approach for study design methodologies moving forward [1,2,3]. While negative trials are an inevitable part of drug development, it is hoped that improved clinical trial designs can avoid failed or ambiguous clinical trials that do not provide decisive results, allowing trials of ineffective or unsafe therapeutics to be terminated faster and allowing effective therapeutics to be identified more efficiently [4].

A multifaceted approach is needed to optimize ALS clinical trial design. Careful outcome measure selection is necessary to leverage psychometric and statistical advantages while also capturing clinically meaningful results that are relevant to the mechanism of the therapeutic agent of interest [5]. The use of ALS prediction algorithms and statistical enrichment techniques can serve as a valuable tool to improve trial efficiency and ability to detect a treatment effect [6, 7]. Continued development and validation of biofluid biomarkers will serve as a valuable and necessary adjunct to clinical outcome measures by assessing target engagement, and the importance of biomarker incorporation into early phase clinical trials is increasingly recognized [8, 9]. Other recent innovations such as at-home technologies for measuring progression are promising tools for future trials that could reduce participant burden while increasing frequency of outcome measurement [10]. Another promising development in the ALS research landscape is the increased inclusion of patient advocates and advisors in all stages of ALS clinical trial development [11].

This review will discuss past, present, and future approaches in ALS clinical trial design and will highlight areas of recent progress and innovation, focusing in particular on clinical trials for sporadic ALS.

ALS Outcome Measures

Strategic outcome measure selection and clinical trial design are particularly important for ALS studies, where investigators often hope to detect modest treatment effects in a patient population with variable rates of disease progression and heterogeneous phenotypes. Considerations for outcome measures include reliability and reproducibility of the outcome measure; responsiveness, or the ability of the outcome measure to detect change when change has actually occurred; and clinical relevance. The optimal outcome measure will vary for each study depending on the goals of the study, the patient population, and the mechanism or target of the therapeutic intervention, and therefore, a one-size-fits-all approach cannot be applied when selecting an outcome measure. Here, we will review the strengths and weaknesses of the commonly used ALS outcome measures (summarized in Table 1) and highlight recent innovations and outcome tools that are available or in development for future use.

Survival

Many early ALS clinical trials used survival as the primary outcome measure [12, 13]. Survival as a primary outcome measure is appealing at face value because it is unequivocally objective with clear clinical relevance. The specific survival endpoint used in most ALS trials is time to tracheostomy-free survival or time to permanent assisted ventilation [14], although there is no single universally accepted approach for the best way to define the survival endpoint in an ALS trial. The use of tracheostomy-free survival or time to permanent assisted ventilation allows more study participants to reach the survival endpoint for data analysis, but this approach introduces unintended factors in the analysis besides time to death due to ALS, such as variation in clinician treatment practices or participant acceptance of ventilatory interventions [15]. The disadvantage of survival as a primary outcome measure is reduced efficiency, due to limited ability to analyze outcomes for participants that do not meet the mortality endpoint during the follow-up period. As a result, studies with survival as a primary outcome measure have typically required large sample sizes and long trial durations. As an example, the confirmatory ALS study of riluzole enrolled over 900 participants for an 18-month study [16]. The current pipeline of ALS drug development demands a more efficient approach.

Some recent ALS studies, including AMX0035 (NCT03127514, NCT03488524) [17] and edaravone (NCT01492686) [18], have included open-label extension studies that allow for longer-term survival analysis as an exploratory outcome measure. This is an appealing strategy that uses alternate, more efficient primary outcome measures for the randomized controlled trial portion of the study, and then allows long-term survival analysis in support of the primary study findings. The AMX0035 study also utilized a firm, OmniTrace, to obtain complete survival data in study participants using public records and databases, allowing for a more complete and robust survival analysis [17]. Inclusion of an open-label extension study also offers a patient-centric study design where participants initially assigned to placebo will later get definite access to the experimental agent, which is expected to help with recruitment and retention. It is important to note that the FDA has expressed concerns about considering the survival data from open-label extension studies as sufficient for supporting efficacy, and thus, the primary data from the randomized controlled trials remains critical for drug approval [19].

The Revised ALS Functional Rating Scale

Many contemporary ALS clinical trials rely on the revised ALS Functional Rating Scale (ALSFRS-R) as the primary outcome measure [14, 20]. This is an ordinal scale that includes 12 questions, each rated 0 through 4, that assess an ALS patient’s ability and need for assistance in various activities or functions. The scale provides a total score (best of 48) from four subscores which assess speech and swallowing (bulbar function), use of upper extremities (cervical function), gait and turning in bed (lumbar function), and breathing (respiratory function) [20]. A slower rate of ALSFRS-R decline correlates with longer survival [21]. In the clinical trial setting, the ALSFRS-R is typically administered and scored by a trained staff member based on the patient’s self-report, and the scale has also been validated for administration over the telephone [22] or as a self-administered scale [23]. Test–retest reliability has been reported at values between 0.87 and 0.96, with higher reliability when a consistent evaluator administers the scale [24, 25].
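
The scoring structure described above can be illustrated with a minimal sketch. The function name and the assignment of item numbers to the four domains are assumptions for illustration (they follow the commonly used item order, which the text does not spell out), and this is not an official scoring implementation.

```python
from typing import Dict, List

# Assumed item-to-domain grouping (items numbered 1-12 in the usual order).
# The text names the four domains but does not list which items belong to each.
DOMAINS: Dict[str, List[int]] = {
    "bulbar": [1, 2, 3],
    "cervical": [4, 5, 6],
    "lumbar": [7, 8, 9],
    "respiratory": [10, 11, 12],
}


def score_alsfrs_r(items: Dict[int, int]) -> Dict[str, int]:
    """Return the four domain subscores and the total (0-48) for one assessment."""
    if sorted(items) != list(range(1, 13)):
        raise ValueError("expected ratings for items 1 through 12")
    for number, rating in items.items():
        if rating not in range(0, 5):
            raise ValueError(f"item {number} must be rated 0-4, got {rating}")
    scores = {name: sum(items[i] for i in nums) for name, nums in DOMAINS.items()}
    # The total is the plain sum of the ordinal item ratings.
    scores["total"] = sum(scores.values())
    return scores


# Hypothetical assessment: full function except items 4 and 8.
example = {i: 4 for i in range(1, 13)}
example[4] = 2
example[8] = 3
result = score_alsfrs_r(example)
```

Because the total is a plain sum of ordinal ratings, two participants with the same total can have very different patterns of impairment across the four domains.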

There are several important limitations with the ALSFRS-R as an outcome measure. A one-point change can represent a small or a large amount of functional change depending on the question and the item, and thus, a one-point change is not a quantifiable unit of functional change across the scale [26]. Additionally, analyses of the ALSFRS-R show that the scale is not unidimensional, meaning that items on the scale measure domains other than functional status [27]. These properties create mathematical limitations when the ALSFRS-R sum score is used as an outcome measure. In other words, each one-point change on the scale represents a different quantity of functional change, and furthermore, some one-point changes on the scale represent a change in a domain other than functional status, and thus, the sum score as a primary measure of functional status is flawed. These measurement discrepancies also create ambiguity when determining the clinical significance of small ALSFRS-R changes. A survey study of ALS clinicians showed that the majority of clinicians surveyed believe that a 20% change in ALSFRS-R slope is clinically meaningful [28], but more rigorous or universal definitions of clinically meaningful change for the ALSFRS-R are lacking. This definition is particularly limited when considering that a 20% change in slope represents different levels of functional change across the scale due to its scoring structure.

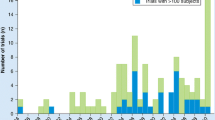

Additionally, decline in the ALSFRS-R score is often assumed to be linear for the purposes of statistical analysis, but in reality, the scale declines in a curvilinear manner, with difficulty detecting changes at the extremes of the scale [29]. While overall average decline on the ALSFRS-R is often reasonably linear over the course of a clinical trial period for the treatment and/or placebo groups, linear decline in the pre-treatment period cannot be inferred, and decline on the individual level often follows a non-linear trajectory (Fig. 1) [30]. The ALSFRS-R may also lack responsiveness, which is the ability of a scale to detect change when change has actually occurred. A study of the ALSFRS-R from a pooled clinical trial database showed that 25% of placebo-group patients showed no change in the ALSFRS-R score over a 6-month period, while 16% of placebo patients had no change in ALSFRS-R over a 12-month period [31]. These measurement plateaus have significant implications for clinical trials investigating agents that aim to slow disease progression, because plateaus in the placebo group reduce the ability to detect treatment effects. In the setting of a clinical trial where a difference of several points in the ALSFRS-R can determine the success or failure of an ALS therapeutic [32, 33], these psychometric considerations are of practical importance.

Fig. 1 Individual ALSFRS-R trajectories from the Emory ALS Center clinic population. Note the significant heterogeneity in disease progression rates as well as the non-linear decline at the individual level [126]

The Combined Assessment of Function and Survival

The Combined Assessment of Function and Survival (CAFS) is a non-parametric tool that allows analysis of both survival and functional status in a single outcome measure. Participants who survive the duration of the study period are ranked as having a higher score/better outcome than participants who do not survive the study period. Within the surviving cohort, participants are placed in rank order based on rate of progression according to the ALSFRS-R. For participants who die or reach permanent mechanical ventilation during the study period, outcome rank order is based on survival time. This tool not only allows survival data to be prioritized as the most important outcome for deceased participants, but also allows all participants to be analyzed by ranking surviving participants according to functional decline, improving study efficiency compared to a study using survival alone as the primary outcome [34]. The FDA has voiced support for a joint assessment of function and survival compared to analyzing function alone [19]. Because ALSFRS-R is the tool used to measure functional decline for this composite measure, the limitations that apply to ALSFRS-R also apply to the CAFS, and the addition of survival analysis may not be helpful in shorter studies where few participants meet the death endpoint. Due to the non-parametric rank-order approach of the CAFS, this outcome can also be challenging to interpret clinically, as it does not provide a rate of functional decline or a measure of treatment effect.
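
The rank-ordering logic described above can be sketched as follows. This is a simplified illustration, not the published CAFS scoring procedure: the `Participant` class and its field names are hypothetical, ALSFRS-R change from baseline stands in for rate of progression, and ties are simply broken by input order.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Participant:
    pid: str
    # Days to death or permanent ventilation; None if neither occurred in the study.
    days_to_event: Optional[float]
    # ALSFRS-R change from baseline (negative means decline); assumed stand-in
    # for rate of progression, and ignored when an event occurred.
    alsfrs_change: float


def cafs_ranks(participants: List[Participant]) -> Dict[str, int]:
    """Assign ranks 1..n, where a higher rank means a better outcome."""

    def sort_key(p: Participant):
        if p.days_to_event is not None:
            # Non-survivors rank below all survivors, ordered by survival time.
            return (0, p.days_to_event)
        # Survivors are ordered by functional change (less decline ranks higher).
        return (1, p.alsfrs_change)

    ordered = sorted(participants, key=sort_key)
    return {p.pid: rank for rank, p in enumerate(ordered, start=1)}


# Hypothetical four-person cohort.
cohort = [
    Participant("A", None, -3.0),
    Participant("B", 120.0, -10.0),
    Participant("C", None, -8.0),
    Participant("D", 200.0, -6.0),
]
ranks = cafs_ranks(cohort)
```

In this toy cohort, B (earliest event) receives the lowest rank and A (survivor with the least decline) the highest. Treatment arms would then be compared on these ranks with a non-parametric test, which is why the result does not translate directly into a rate of decline.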

The Rasch-Built Overall ALS Disability Scale

The Rasch-Built Overall ALS Disability Scale (ROADS) is a 28-item patient-reported outcome measure assessing overall disability level for patients with ALS, and this scale was created to overcome the psychometric limitations of the ALSFRS-R [35]. Unlike ordinal scales, this scale is linearly weighted, meaning that a one-point change is a consistent unit of disability measurement across the scale (and a two-point change indicates twice the amount of disability). Rasch analyses indicate that the scale is unidimensional, meaning that the items on the scale are all measures of disability and not other domains, and thus, the sum score is indeed a valid measure of overall disability. The ROADS questions have improved item targeting compared to the ALSFRS-R, meaning that a broader range of ability levels is assessed, which is expected to help with scale responsiveness. The scale is also more reliable than the ALSFRS-R, with a test–retest reliability of 0.97. These psychometric advantages are encouraging and are expected to confer statistical advantages when ROADS is used as an outcome measure in a clinical trial, but longitudinal data and real-world clinical trial data are still needed for confirmation. The scale has been translated and validated in Chinese [36] and Italian [37], but additional translations of the scale are required before this tool can be utilized in broader international clinical trials.

Neurophysiologic Outcome Measures

A variety of neurophysiologic outcome measures have been tested in ALS research studies [38, 39], with the hope that these tools can serve as objective, quantitative markers of motor neuron loss. Motor unit number estimation (MUNE) [40] and motor unit number index (MUNIX) [41] are two techniques that have shown promise as biomarkers of lower motor neuron loss in ALS research trials, as these measures decline over time in patients with ALS and correlate with other ALS clinical and functional outcome measures [42]. Reproducibility varies depending on the protocol used as well as the skill, experience, and training of the examiner, and real-world use of these tools has proved to be challenging, particularly as a mainstream measure for larger studies [43, 44]. There is certainly theoretical appeal to these neurophysiologic measures, but refinement of techniques to improve the reproducibility and accessibility of testing protocols is needed before they become accepted surrogate markers of lower motor neuron loss [45]. In addition, because these techniques predominantly allow for testing on distal limb muscles, they may not serve as an overall measure of motor neuron loss.

Another electrophysiologic measure of interest is electrical impedance myography (EIM). EIM testing involves application of a painless electrical current through surface electrodes to measure the compositional properties of muscle [46, 47]. Recent advances in EIM technology have improved the ease and accessibility of testing, and at-home testing could be an option for future trials that broadens real-world use of this technique [10]. One study has suggested that using EIM as the primary outcome measure could result in a fivefold reduction in needed sample size [48], but to date, EIM has only been used as a secondary or exploratory outcome in ALS clinical trials. A disadvantage of EIM is that the data obtained from this testing is not accessible or interpretable in real time to the clinician or investigator, and thus, it is less familiar to many ALS investigators and not used as a mainstream clinical tool.

Transcranial magnetic stimulation (TMS) methods have also been explored as biomarkers to measure cortical motor excitability and upper motor neuron dysfunction in ALS. However, studies to date have not led to consistent results [49,50,51], and some studies have demonstrated technical limitations with inability to record responses in a significant number of study participants [52]. These techniques may serve as useful adjunct measures to show target engagement in the future, but more work is needed to refine these tools.

Measures of Muscle Strength

Quantitative measures of muscle strength are appealing as an ALS outcome measure given that loss of muscle strength is a clinical hallmark of disease progression [53]. Measuring limb strength using a portable hand-held dynamometer is a common approach used for ALS trials, where individual muscle measurements are standardized based on data from healthy controls, and then, an overall combined megascore is utilized as the outcome of interest [54, 55]. This approach has good reliability with adequate training, but floor and ceiling effects are observed with this measurement tool, and strength is not adequately quantified when muscle strength of the study participant exceeds the strength of the examiner. Fixed dynamometry, as is performed with the Accurate Test of Limb Isometric Strength (ATLIS) device, can overcome the floor and ceiling effects and reliance on examiner strength to improve sensitivity [56], but the equipment required for this approach in past trials is large and cumbersome, limiting more widespread use and practical application of this technique. Recent work has been done to validate a portable fixed dynamometer, which could be a promising tool that combines the convenience of hand-held dynamometry with the measurement range of ATLIS [57].
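
The standardize-then-combine step behind a strength megascore can be sketched as below. The muscle names and reference values are hypothetical placeholders rather than published norms, and averaging the standardized scores is an assumed way of combining them.

```python
from statistics import mean
from typing import Dict, Tuple

# Hypothetical healthy-control reference values per muscle: (mean, SD) in kg.
REFERENCE: Dict[str, Tuple[float, float]] = {
    "elbow_flexion": (25.0, 5.0),
    "knee_extension": (40.0, 8.0),
    "ankle_dorsiflexion": (30.0, 6.0),
}


def megascore(measurements: Dict[str, float],
              reference: Dict[str, Tuple[float, float]] = REFERENCE) -> float:
    """Average of per-muscle z-scores relative to healthy-control norms."""
    z_scores = []
    for muscle, value in measurements.items():
        ref_mean, ref_sd = reference[muscle]
        # Standardize so that muscles with different absolute strength
        # contribute on a common scale.
        z_scores.append((value - ref_mean) / ref_sd)
    return mean(z_scores)


# Hypothetical visit: each muscle is 1 to 2 SD below the reference mean.
visit = {"elbow_flexion": 20.0, "knee_extension": 32.0, "ankle_dorsiflexion": 18.0}
score = megascore(visit)
```

A more negative megascore indicates greater weakness relative to healthy controls, and the change in megascore between visits would serve as the trial outcome.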

Measures of ventilatory strength are commonly captured in ALS trials, as these outcomes are of clinical relevance and correlate with survival [58,59,60]. Vital capacity is typically the measure of interest [61, 62], although other parameters such as inspiratory force and voluntary cough parameters have also been studied [63,64,65]. Slow (SVC) and forced vital capacity (FVC) measurements correlate highly with each other and also correlate with survival and functional measures [58, 66]. FVC requires a fast expiratory effort, which may be impeded by spasticity, and both tests can be impaired by bulbar weakness due to upper airway collapse during maximal exhalation [67]. SVC has served as the primary outcome measure in several recent ALS clinical trials of fast skeletal muscle troponin activators, where the drug is hoped to improve diaphragm contractility (NCT03160898, NCT02496767) [68, 69]. The COVID-19 pandemic created logistical challenges with obtaining spirometry measurements due to infection prevention concerns and limitations with in-person study assessments [70], but at-home spirometry measurements have emerged as a viable alternative [71]. Ventilatory measures have been noted to have a higher coefficient of variation and decreased sensitivity compared to other typically used primary outcomes for ALS [53], so for many studies, spirometry measures are more useful as a secondary or exploratory outcome measure.

At-Home Outcome Measures

With advances in technology, at-home assessments are increasingly becoming a strategy of interest for measuring disease progression [10]. At-home measurement offers the possibility of increasing the frequency of outcome measurement, which should in turn improve responsiveness and reduce the impact of measurement error. Traditional patient-reported outcomes can be captured on a smartphone or computer, but other more novel approaches include assessments of speech, motion analysis using wearable sensors, and GPS tracking to assess travel patterns [72,73,74,75]. However, the analysis of these parameters is typically complex and requires sophisticated machine learning techniques. Additional research is needed to refine the analysis algorithms for these technologies, to determine the best candidates or composite measures to serve as trial outcome measures, and to translate the complex data output into clinically understandable data points.

Statistical Enrichment

Prediction algorithms for anticipating expected ALS disease progression serve as valuable tools for improving clinical trial efficiency and reducing sample size. Simulated ALS clinical trials that incorporate prediction algorithms into the study design show the potential to reduce sample size by 15–20% [76, 77]. Models for disease progression can also be used for participant stratification or as part of study inclusion/exclusion criteria to select an appropriate study cohort [6, 78]. Applying prediction algorithms that rely on only baseline variables to design inclusion and exclusion criteria for a selected population can also avoid the need for an observational lead-in period, allowing earlier initiation of treatment and creating more patient-centric study designs [79]. Prediction algorithms are particularly valuable for exploratory analyses, for example, when trying to identify subgroups of treatment responders by comparing predicted progression to actual progression [80], or even to evaluate individual-level disease progression and compare observed vs expected progression [81]. Current prediction algorithms rely on ALSFRS-R as a predictor variable and predict ALSFRS-R or survival as the outcome of interest based on currently available data in existing databases, but the same mathematical prediction techniques can be used to incorporate novel outcomes in the future as more data on newer tools become available [76, 81].
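
One way to see why accounting for predicted progression can lower the required sample size is the standard normal-approximation formula for comparing two group means, in which the sample size per arm scales with the outcome variance. The sketch below uses hypothetical numbers; the assumption that a prediction model explains 20% of the outcome variance is purely illustrative and is not taken from the cited simulations.

```python
import math
from statistics import NormalDist


def n_per_arm(sd: float, delta: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per arm for a two-sided, two-sample comparison of means
    (normal approximation): n = 2 * (z_alpha + z_beta)^2 * sd^2 / delta^2."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sd ** 2 / delta ** 2)


# Hypothetical outcome SD of 1.0 and a treatment difference of 0.5 (same units).
n_unadjusted = n_per_arm(sd=1.0, delta=0.5)

# Assumption: adjusting for predicted progression removes 20% of the variance.
n_adjusted = n_per_arm(sd=math.sqrt(0.8), delta=0.5)
```

Under these assumptions the requirement falls from 63 to 51 participants per arm, a reduction of roughly the same proportion as the variance explained.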

One approach that has been increasingly utilized to improve statistical power in ALS clinical trials is selecting a more homogeneous patient population in terms of rate of disease progression so that treatment effects can be observed using a smaller sample size over a shorter duration. As an example, early studies of edaravone (NCT00330681) in heterogeneous ALS populations did not identify statistically significant treatment benefits [82], but post hoc subgroup analyses identified a cohort of potential responders. A new trial of edaravone was designed to study a targeted cohort of ALS patients with well-defined, more uniform rates of progression to improve statistical power [32]. Specifically, study participants had to be in relatively early stages of disease with a high baseline functional status, have a vital capacity > 80% predicted, have scores of at least 2 on each individual ALSFRS-R item, and have disease duration of less than 2 years. Additionally, participants with moderate levels of progression were selected based on a decline of the ALSFRS-R of 1–4 points over a 12-week lead-in observation period, excluding participants with the slowest and fastest progression rates from participation. This targeted study of only 137 participants showed a statistically significant difference in the primary outcome measure and led to FDA approval of edaravone. Disease prediction models were also used to support the notion of generalizability of edaravone efficacy to a broader population [83] and to provide additional evidence of AMX0035 efficacy in the open-label extension study [84]. The phase 2 study of AMX0035 also utilized statistical enrichment techniques to improve statistical power [85], in this case utilizing past data from a pooled clinical trial database (PRO-ACT) [86] to identify participants early in their disease course who were predicted to have fast disease progression. This study enrolled participants with disease duration of 18 months or less meeting El Escorial criteria for definite ALS. This approach did not require a lead-in period, which allows earlier initiation of treatment and is certainly preferred by study participants. Run-in periods also have the potential to introduce bias, as excluding participants in the run-in period may decrease the generalizability of study results to the broader treatment population, and inaccurate capture of lead-in outcome measures might occur due to desire to qualify for active treatment [87]. Additionally, it is possible that delayed initiation of some therapeutic agents could reduce treatment efficacy.
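
The enrichment criteria described above for the targeted edaravone trial can be expressed as a simple screening filter. This is a sketch using only the thresholds stated in the text; the `Candidate` class, its field names, and the example values are hypothetical, and the real protocol contained additional criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    pid: str
    vital_capacity_pct: float      # percent of predicted vital capacity
    item_scores: List[int]         # the 12 ALSFRS-R item ratings at screening
    disease_duration_years: float
    lead_in_decline: int           # ALSFRS-R points lost over the 12-week lead-in


def is_eligible(c: Candidate) -> bool:
    """Apply the four enrichment thresholds stated in the text."""
    return (
        c.vital_capacity_pct > 80
        and min(c.item_scores) >= 2
        and c.disease_duration_years < 2
        and 1 <= c.lead_in_decline <= 4   # excludes slowest and fastest progressors
    )


# Hypothetical screening cohort.
candidates = [
    Candidate("moderate", 92.0, [3] * 12, 1.2, 2),
    Candidate("slow", 95.0, [4] * 12, 1.0, 0),
    Candidate("fast", 85.0, [3] * 12, 0.8, 6),
    Candidate("low_item", 88.0, [3] * 11 + [1], 1.5, 3),
]
enrolled = [c.pid for c in candidates if is_eligible(c)]
```

Only the moderate progressor passes all four checks, which illustrates both the gain in homogeneity and the cost to generalizability: three of the four hypothetical patients would not be represented in the trial.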

Given the regulatory success of edaravone and the promising data on AMX0035, other recent ALS clinical trials have followed by designing studies seeking to reduce heterogeneity in baseline functional status and rates of disease progression. However, this approach has resulted in some ambiguity for clinical use of ALS treatments in the real-world setting [88,89,90,91]. It is not certain that drugs that are beneficial at early stages of ALS still have the same beneficial effects when initiated in late stages of disease, and questions have also been raised about clinical significance of these treatments, even in the setting of statistical significance. Additionally, there is no consensus about whether or not positive findings observed in smaller targeted trials require confirmation in a larger sample size to confirm reproducibility and generalizability of results. This is a challenging issue when trying to balance the dire and time-sensitive need for better ALS treatments with concerns for scientific rigor and avoidance of unnecessary risks, burdens, and costs.

Platform Trial

Recently, an innovative platform trial (NCT04297683) approach has been launched for ALS [92]. This trial creates a shared clinical trial infrastructure where different ALS drugs can be tested on an ongoing basis using a shared protocol. This design allows for improved efficiency owing to the ongoing trial infrastructure, and fewer participants are assigned to the placebo arm because placebo groups are pooled across regimens for analysis. Platform trials for other diseases, particularly in the cancer field, offer additional adaptive advantages, such as disease subtype stratification based on biomarkers or phenotype and response-adaptive randomization, where frequent efficacy analyses allow randomization allocations to be adjusted in real time [93, 94]. The current ALS platform trial does not yet incorporate this adaptive agility due to current limitations in biomarkers and inability to quickly measure treatment response. The current ALS platform trial also enrolls a relatively heterogeneous ALS population and utilizes the ALSFRS-R as a primary outcome measure, which may reduce study power compared to other current trial approaches that are using updated outcome measures or statistical enrichment techniques.
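
The effect of pooling placebo groups can be illustrated with simple arithmetic. The regimen count, arm sizes, and allocation ratios below are hypothetical and are not taken from the platform trial protocol; the sketch also assumes that placebo participants from different regimens are comparable enough to be pooled.

```python
def placebo_fraction(active_per_regimen: int, placebo_per_regimen: int) -> float:
    """Share of a regimen's participants who are assigned to placebo."""
    return placebo_per_regimen / (active_per_regimen + placebo_per_regimen)


def pooled_placebo(n_regimens: int, placebo_per_regimen: int) -> int:
    """Size of the shared comparator group when placebo arms are pooled."""
    return n_regimens * placebo_per_regimen


# Hypothetical standalone design: four separate 1:1 trials, 120 active each.
standalone_fraction = placebo_fraction(120, 120)
standalone_placebo_total = 4 * 120

# Hypothetical platform design: four regimens randomized 3:1, placebo pooled.
platform_fraction = placebo_fraction(120, 40)
platform_comparator = pooled_placebo(4, 40)
```

In this illustration each platform participant has a 25% rather than 50% chance of receiving placebo, yet each regimen is compared against a pooled group of 160 placebo participants, and total placebo enrollment falls from 480 to 160.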

Biomarkers

ALS researchers are increasingly recognizing the importance of ALS biofluid biomarkers in clinical trials. For many past ALS trials with negative results, it is unknown whether the drug failed to engage the intended target or if the mechanism of interest is not an effective strategy for ALS treatment. Biomarkers of target engagement are critical for informative and decisive clinical trials and should be incorporated into early phases of drug development. The ideal biomarker candidate would show response to treatment faster than currently used clinical measures, which would greatly improve trial efficiency and allow for more adaptive trial designs. For candidate biomarkers to serve as a surrogate measure of clinical response, research is needed to define how changes in biomarker levels correlate with clinical outcome measures and how biomarker levels or changes predict clinically relevant outcomes.

Neurofilaments have emerged as promising biomarkers to assess treatment response. Neurofilament light chains and heavy chains in both CSF and serum have been shown to be elevated in patients with ALS compared to controls and correlate with disease progression as well as survival [95, 96]. Neurofilament light chain levels may have a stronger association with poorer prognosis [97, 98]. Studies have also shown relative stability of neurofilament levels over time, a promising feature for a biomarker that potentially could be used as an indicator of treatment response [98], assuming a correlative relationship between neurofilament levels and disease progression. Neurofilament levels are currently a useful adjunct measure for many clinical trials, but it is still unknown what magnitude of neurofilament change should be considered meaningful or how to define a treatment response. Recent studies highlight these uncertainties; the phase 1–2 study of tofersen in SOD1 ALS showed reduction of neurofilament light chains with treatment without a corresponding benefit on clinical outcome measures [99], while the AMX0035 study showed benefit on its primary clinical outcome measure without a significant reduction in neurofilament heavy chains [85]. While the future of neurofilaments as a marker of treatment response is unclear, research in pre-symptomatic ALS gene carriers has shown that neurofilaments could serve as a promising biomarker of conversion to symptomatic ALS, preceding the detection of clinical ALS symptoms [100]. This novel approach is being employed in the ongoing ATLAS study (NCT04856982), where pre-symptomatic SOD1 gene carriers will initiate treatment after detection of elevated neurofilament levels but before onset of clinical symptoms [101].

Selection of appropriate biomarkers depends on the mechanism of the therapeutic agent and the specific patient population being tested. Other potential biomarkers that warrant further study include measures of oxidative stress or neuroinflammation [102]. PET imaging has been considered as a biomarker of target engagement for drugs targeting CNS neuroinflammation due to its ability to characterize microglial activation and correlations with rate of disease progression [103, 104]. However, there are some practical limitations including cost, limited availability at select tertiary imaging centers, and need for participants to lie flat for testing [105]. In the future, it is hoped that biomarkers can be used to guide a precision medicine treatment approach; for example, ALS patients with specific inflammatory profiles might be responsive to treatment with a targeted anti-inflammatory agent. This type of approach has not been successful to date [106] but warrants further exploration, particularly as the scientific community furthers the understanding of underlying ALS disease mechanisms. Even in ALS therapeutic development for seemingly sporadic ALS, it is possible that genetic modifiers may play a role in differential response to treatment, as suggested by a post hoc analysis of the pooled lithium trials showing that UNC13A carriers demonstrated a beneficial treatment response [107].

Diversity, Equity, and Inclusion in ALS Research

Future research studies must prioritize equity and diverse participant recruitment to ensure that treatment advances will benefit all patients with ALS. According to the Centers for Disease Control National ALS Registry, which compiles data from three national administrative databases and includes self-reported data, 83% of ALS incident cases in the USA occur in white patients [108]. However, the Pooled Resource Open-Access ALS Clinical Trials (PRO-ACT) database, which combines pooled clinical trial data from more than 17 representative ALS clinical trials, shows that 95% of ALS clinical trial participants are white [86]. The reasons for this discrepancy are likely multifactorial. Many ALS trials recruit participants with shorter disease durations, but black patients have on average a diagnostic delay that is 8 months longer than that of white patients, reducing the window of clinical trial eligibility [109]. Broader studies examining barriers to research participation for people from minority communities identified a weak relationship with the medical and research community as well as a high cost of study participation as common concerns that limit inclusion in research [110]. Future ALS clinical trials must consider ways to engage minority and underserved communities for inclusion in research to improve equitable access to trials and the generalizability of study results.
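
The size of the gap between the registry and PRO-ACT figures can be made explicit with a short calculation. The sketch below is illustrative arithmetic only: it uses the two percentages quoted above, and the function name and the "representation ratio" framing are assumptions introduced here rather than a method from the cited sources. The registry and the trial database may also differ in time period and source population, so the result should be read as a rough indication, not a formal estimate.

```python
# Illustrative arithmetic only; both percentages are taken from the paragraph above.
registry_white_pct = 83.0  # share of incident US ALS cases in white patients (registry)
trial_white_pct = 95.0     # share of PRO-ACT trial participants who are white


def representation_ratio(population_pct: float, trial_pct: float) -> float:
    """Hypothetical helper: a group's share among trial participants
    divided by its share among incident cases (1.0 = proportional)."""
    return trial_pct / population_pct


# Complementary shares for patients not recorded as white.
nonwhite_registry_pct = 100.0 - registry_white_pct
nonwhite_trial_pct = 100.0 - trial_white_pct

ratio = representation_ratio(nonwhite_registry_pct, nonwhite_trial_pct)

print(f"Non-white share of incident cases: {nonwhite_registry_pct:.0f}%")
print(f"Non-white share of trial participants: {nonwhite_trial_pct:.0f}%")
print(f"Representation ratio: {ratio:.2f}")
```

Under these figures, patients not recorded as white make up roughly 17% of incident cases but about 5% of trial participants, which corresponds to a ratio well below 1.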

ALS Patient Advocacy and Patient-Centric Trials

A recent trial of Lunasin (NCT02709330) serves as an interesting example of a patient-centric trial [111]. The trial had very broad inclusion criteria, including participants with long disease duration or on mechanical ventilation who are not typically eligible for interventional trials. The study used an open-label design with outcome measures collected online, and it achieved rapid recruitment and high adherence and retention rates. As this study was for a non-prescription supplement, the protocol was published so that interested participants could follow along at home without formal study enrollment. This study also included biomarker analysis of histone acetylation, which showed a lack of target engagement. The Lunasin ALS study provided an efficient design to evaluate for the presence or absence of a large treatment effect and could be used as a model for studying other supplements or medications that are already FDA-approved and have known favorable safety profiles. It is important to note that this study design would not allow detection of modest treatment effects and would be insufficient when robust safety monitoring is needed. In addition, the use of historical controls, as in this trial, is specifically discouraged by the FDA due to scientific limitations, and thus, placebo-controlled trials are required for FDA approval of new drugs [112].

Many recent ALS clinical trials have benefited from increased input from patient advisors and advocates. Both ALS Clinical Trial Guidelines and FDA guidance for drug development recognize the importance of patient input in clinical trial design [113]. Patient input into study protocols can help reduce unnecessary or intolerable burden of study activities, and advocacy efforts have led to more frequent open-label extension programs, which in addition to helping recruitment efforts also add to scientific discovery by providing additional long-term safety and exploratory efficacy data. The ALS Clinical Research Learning Institute (ALS-CRLI), a patient-driven program that trains ALS research ambassadors about research and clinical trials, serves as a valuable template for building partnerships between the scientific community and the patients it serves. Graduates of the ALS-CRLI have gone on to serve as trial advisors and patient advocates, strengthening the design of trials and building trust within the ALS community [11]. ALS advocates have called for increased access to experimental treatments, citing higher risk tolerance in the setting of an incurable fatal disease and principles of autonomy. However, ALS clinicians and scientists have concerns about the risks of bypassing appropriate regulatory oversight and the potential for predatory practices to harm ALS patients who are in a vulnerable position [89, 114].

Past experience with diaphragm pacing systems (DPSs) for the treatment of ALS serves as a cautionary tale for unproven treatments. Initial use of DPS in ALS was based on results of small, uncontrolled studies in the early 2000s [115–117]. The process for FDA approval of devices through a Humanitarian Use Device Exemption requires little scientific justification and does not require the same level of testing as drug approval, and as a result, DPS was approved by the FDA for use in ALS in 2011 [118]. While some ALS clinicians were wary of the safety profile and scientific rationale for DPS in ALS [119], other ALS clinicians presumed that DPS was safe and possibly effective and offered DPS as part of standard treatment at the request of interested patients. Years later, well-designed randomized controlled trials showed that these devices were actually harmful in patients with ALS. A UK randomized controlled trial was terminated early due to safety concerns when patients implanted with DPS were found to have a median survival of 11 months compared to a median survival of 22.5 months in the group using non-invasive ventilation alone [120], and these findings were replicated in a second, French randomized controlled trial that also terminated enrollment early due to accelerated mortality in the DPS-treated group [121]. This experience served as a reminder of the importance of well-designed randomized controlled trials and the harms of relying on uncontrolled or anecdotal data. While predictive modeling and well-matched historical controls can be considered as supplementary approaches for randomized controlled trials, and statistical techniques can be used to reduce the size of placebo groups, typical ALS trials will require a placebo arm to adequately assess safety and detect efficacy given the modest effects of most candidate treatments and the variability of disease progression. Moreover, FDA guidance currently discourages reliance on historical controls to support drug development [112].

ALS stakeholders and advocates have brought increasing attention to expanded access programs and “Right-to-Try” laws as a means of accessing promising experimental therapies before regulatory approval occurs. To date, practical and logistical barriers have limited utilization of these programs. It is hoped that the passage of the Accelerating Access to Critical Therapies for ALS Act (ACT for ALS) in December 2021 [122] will allow more patients to access investigational drugs while still promoting concurrent high-quality clinical trials to obtain decisive safety and efficacy results. This bill establishes a grant program to support expanded access programs, which it is hoped will overcome the time and resource barriers that have prevented adoption of these programs in the past. The impact of this bill on reducing barriers and improving participation in expanded access programs is still unknown, but this is a promising approach for meeting the priorities of the ALS advocacy community without compromising scientific rigor or the ability to complete decisive phase 3 clinical trials.

Conclusion

ALS clinical trial design is complex and depends on the specific goals of the study and the mechanism of the therapeutic agents. Future ALS trials will be strengthened by combining the strategies discussed in this review: refining clinical outcome measures, applying thoughtful statistical enrichment techniques, reducing infrastructure barriers, and utilizing drug-specific biomarkers of target engagement. ALS investigators and pharmaceutical companies should select outcome measures and power studies carefully based on mechanism of action, expected variability and statistical power, and psychometric properties of the measurement tool. Investigators should strongly consider the inclusion of promising novel outcome measures as secondary or exploratory outcome measures to facilitate faster validation of new tools. Biomarker development is critical to assess target engagement and should be incorporated in early phase studies. However, current biomarkers are only able to serve as adjunct tools and are not adequate for predicting clinically relevant outcomes. As biomarker and mechanistic research improves, so will the ability to pursue precision-medicine treatment approaches for ALS. Investigators are urged to prioritize diversity, equity, and inclusion to improve validity and generalizability of their research findings. Ongoing partnerships with patient advocates and stakeholders will continue the development of patient-centric approaches that serve the needs of the ALS community while still maintaining scientific rigor. Current ALS therapeutic development relies on stepwise incremental progress, and optimizing clinical trial design will improve the efficiency and reliability of this process.

References

Hergesheimer R, et al. Advances in disease-modifying pharmacotherapies for the treatment of amyotrophic lateral sclerosis. Expert Opin Pharmacother. 2020;21(9):1103–10.

Katyal N, Govindarajan R. Shortcomings in the current amyotrophic lateral sclerosis trials and potential solutions for improvement. Front Neurol. 2017;8:521.

Mitsumoto H, Brooks BR, Silani V. Clinical trials in amyotrophic lateral sclerosis: why so many negative trials and how can trials be improved? Lancet Neurol. 2014;13(11):1127–38.

Goyal NA, et al. Addressing heterogeneity in amyotrophic lateral sclerosis clinical trials. Muscle Nerve. 2020;62(2):156–66.

Hartmaier SL, et al. Qualitative measures that assess functional disability and quality of life in ALS. Health Qual Life Outcomes. 2022;20(1):12.

Berry JD, et al. Improved stratification of ALS clinical trials using predicted survival. Ann Clin Transl Neurol. 2018;5(4):474–85.

Goutman SA, et al. Recent advances in the diagnosis and prognosis of amyotrophic lateral sclerosis. Lancet Neurol. 2022;21(5):480–93.

Wilkins HM, Dimachkie MM, Agbas A. Blood-based biomarkers for amyotrophic lateral sclerosis. In Amyotrophic Lateral Sclerosis, T. Araki, Editor. Brisbane (AU); 2021.

Kiernan MC, et al. Improving clinical trial outcomes in amyotrophic lateral sclerosis. Nat Rev Neurol. 2021;17(2):104–18.

Rutkove SB, et al. Improved ALS clinical trials through frequent at-home self-assessment: a proof of concept study. Ann Clin Transl Neurol. 2020;7(7):1148–57.

Bedlack R, et al. ALS clinical research learning institutes (ALS-CRLI): empowering people with ALS to be research ambassadors. Amyotroph Lateral Scler Frontotemporal Degener. 2020;21(3–4):216–21.

UKMND-LiCALS Study Group, et al. Lithium in patients with amyotrophic lateral sclerosis (LiCALS): a phase 3 multicentre, randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2013;12(4):339–45.

Groeneveld GJ, et al. A randomized sequential trial of creatine in amyotrophic lateral sclerosis. Ann Neurol. 2003;53(4):437–45.

Tornese P, et al. Review of disease-modifying drug trials in amyotrophic lateral sclerosis. J Neurol Neurosurg Psychiatry. 2022.

Gordon PH, et al. Defining survival as an outcome measure in amyotrophic lateral sclerosis. Arch Neurol. 2009;66(6):758–61.

Lacomblez L, et al. Dose-ranging study of riluzole in amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis/Riluzole Study Group II. Lancet. 1996;347(9013):1425–31.

Paganoni S, et al. Long-term survival of participants in the CENTAUR trial of sodium phenylbutyrate-taurursodiol in amyotrophic lateral sclerosis. Muscle Nerve. 2021;63(1):31–9.

Shefner J, et al. Long-term edaravone efficacy in amyotrophic lateral sclerosis: post-hoc analyses of Study 19 (MCI186-19). Muscle Nerve. 2020;61(2):218–21.

PCNS Advisory Committee. Combined FDA and Applicant Briefing Document, NDA# 216660, Drug Name: AMX0035/ sodium phenylbutyrate (PB) and taurursodiol (TURSO), U.S. Food and Drug Administration. 2022. https://www.fda.gov/media/157186/download. Accessed June 2022.

Cedarbaum JM, et al. The ALSFRS-R: a revised ALS functional rating scale that incorporates assessments of respiratory function. BDNF ALS Study Group (Phase III). J Neurol Sci. 1999;169(1–2):13–21.

Kimura F, et al. Progression rate of ALSFRS-R at time of diagnosis predicts survival time in ALS. Neurology. 2006;66(2):265–7.

Kasarskis EJ, et al. Rating the severity of ALS by caregivers over the telephone using the ALSFRS-R. Amyotroph Lateral Scler Other Motor Neuron Disord. 2005;6(1):50–4.

Montes J, et al. Development and evaluation of a self-administered version of the ALSFRS-R. Neurology. 2006;67(7):1294–6.

Miano B, et al. Inter-evaluator reliability of the ALS functional rating scale. Amyotroph Lateral Scler Other Motor Neuron Disord. 2004;5(4):235–9.

Brooks BR. Natural history of ALS: symptoms, strength, pulmonary function, and disability. Neurology. 1996;47(4 Suppl 2):S71–82.

Franchignoni F, et al. A further Rasch study confirms that ALSFRS-R does not conform to fundamental measurement requirements. Amyotroph Lateral Scler Frontotemporal Degener. 2015;16(5–6):331–7.

Franchignoni F, et al. Evidence of multidimensionality in the ALSFRS-R Scale: a critical appraisal on its measurement properties using Rasch analysis. J Neurol Neurosurg Psychiatry. 2013;84(12):1340–5.

Castrillo-Viguera C, et al. Clinical significance in the change of decline in ALSFRS-R. Amyotroph Lateral Scler. 2010;11(1–2):178–80.

Gordon PH, et al. Progression in ALS is not linear but is curvilinear. J Neurol. 2010;257(10):1713–7.

Hu N, et al. The frequency of ALSFRS-R reversals and plateaus in patients with limb-onset amyotrophic lateral sclerosis: a cohort study. Acta Neurol Belg. 2022.

Bedlack RS, et al. How common are ALS plateaus and reversals? Neurology. 2016;86(9):808–12.

The Writing Group on behalf of the Edaravone (MCI-186) ALS 19 Study Group. Safety and efficacy of edaravone in well defined patients with amyotrophic lateral sclerosis: a randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2017;16(7):505–12.

Committee for Medicinal Products for Human Use (CHMP). European Medicines Agency Withdrawal Assessment Report, Radicava, EMA. 2019. https://www.ema.europa.eu/en/documents/withdrawal-report/withdrawal-assessment-report-radicava_en.pd. Accessed July 2022.

Berry JD, et al. The Combined Assessment of Function and Survival (CAFS): a new endpoint for ALS clinical trials. Amyotroph Lateral Scler Frontotemporal Degener. 2013;14(3):162–8.

Fournier CN, et al. Development and validation of the Rasch-Built Overall Amyotrophic Lateral Sclerosis Disability Scale (ROADS). JAMA Neurol. 2019.

Sun C, et al. Chinese validation of the Rasch-Built Overall Amyotrophic Lateral Sclerosis Disability Scale. Eur J Neurol. 2021;28(6):1876–83.

Manera U, et al. Validation of the Italian version of the Rasch-Built Overall Amyotrophic Lateral Sclerosis Disability Scale (ROADS) administered to patients and their caregivers. Amyotroph Lateral Scler Frontotemporal Degener. 2021;1–6.

Ahmed N, Baker MR, Bashford J. The landscape of neurophysiological outcome measures in ALS interventional trials: a systematic review. Clin Neurophysiol. 2022;137:132–41.

Vucic S, Rutkove SB. Neurophysiological biomarkers in amyotrophic lateral sclerosis. Curr Opin Neurol. 2018;31(5):640–7.

Shefner JM, et al. Multipoint incremental motor unit number estimation as an outcome measure in ALS. Neurology. 2011;77(3):235–41.

Neuwirth C, et al. Motor unit number index (MUNIX): a novel neurophysiological technique to follow disease progression in amyotrophic lateral sclerosis. Muscle Nerve. 2010;42(3):379–84.

Boekestein WA, et al. Motor unit number index (MUNIX) versus motor unit number estimation (MUNE): a direct comparison in a longitudinal study of ALS patients. Clin Neurophysiol. 2012;123(8):1644–9.

Neuwirth C, et al. Implementing Motor Unit Number Index (MUNIX) in a large clinical trial: Real world experience from 27 centres. Clin Neurophysiol. 2018;129(8):1756–62.

Shefner JM, et al. The use of statistical MUNE in a multicenter clinical trial. Muscle Nerve. 2004;30(4):463–9.

de Carvalho M, et al. Motor unit number estimation (MUNE): where are we now? Clin Neurophysiol. 2018;129(8):1507–16.

Rutkove SB. Electrical impedance myography: background, current state, and future directions. Muscle Nerve. 2009;40(6):936–46.

Rutkove SB, et al. Electrical impedance myography as a biomarker to assess ALS progression. Amyotroph Lateral Scler. 2012;13(5):439–45.

Shefner JM, et al. Reducing sample size requirements for future ALS clinical trials with a dedicated electrical impedance myography system. Amyotroph Lateral Scler Frontotemporal Degener. 2018;19(7–8):555–61.

Schanz O, et al. Cortical hyperexcitability in patients with C9ORF72 mutations: relationship to phenotype. Muscle Nerve. 2016;54(2):264–9.

Menon P, Kiernan MC, Vucic S. Cortical hyperexcitability precedes lower motor neuron dysfunction in ALS. Clin Neurophysiol. 2015;126(4):803–9.

Chervyakov AV, et al. Navigated transcranial magnetic stimulation in amyotrophic lateral sclerosis. Muscle Nerve. 2015;51(1):125–31.

Wainger BJ, et al. Effect of ezogabine on cortical and spinal motor neuron excitability in amyotrophic lateral sclerosis: a randomized clinical trial. JAMA Neurol. 2021;78(2):186–96.

Shefner JM, et al. Quantitative strength testing in ALS clinical trials. Neurology. 2016;87(6):617–24.

Cudkowicz ME, et al. Dexpramipexole versus placebo for patients with amyotrophic lateral sclerosis (EMPOWER): a randomised, double-blind, phase 3 trial. Lancet Neurol. 2013;12(11):1059–67.

Cudkowicz ME, et al. Safety and efficacy of ceftriaxone for amyotrophic lateral sclerosis: a multi-stage, randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2014;13(11):1083–91.

Andres PL, et al. Fixed dynamometry is more sensitive than vital capacity or ALS rating scale. Muscle Nerve. 2017;56(4):710–5.

Bakers JNE, et al. Portable fixed dynamometry: towards remote muscle strength measurements in patients with motor neuron disease. J Neurol. 2021;268(5):1738–46.

Heiman-Patterson TD, et al. Pulmonary function decline in amyotrophic lateral sclerosis. Amyotroph Lateral Scler Frontotemporal Degener. 2021;22(sup1):54–61.

Enache I, et al. Ability of pulmonary function decline to predict death in amyotrophic lateral sclerosis patients. Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(7–8):511–8.

Calvo A, et al. Prognostic role of slow vital capacity in amyotrophic lateral sclerosis. J Neurol. 2020;267(6):1615–21.

Goyal NA, Mozaffar T. Experimental trials in amyotrophic lateral sclerosis: a review of recently completed, ongoing and planned trials using existing and novel drugs. Expert Opin Investig Drugs. 2014;23(11):1541–51.

Paganoni S, Cudkowicz M, Berry JD. Outcome measures in amyotrophic lateral sclerosis clinical trials. Clin Investig (Lond). 2014;4(7):605–18.

Tabor-Gray LC, et al. Characteristics of impaired voluntary cough function in individuals with amyotrophic lateral sclerosis. Amyotroph Lateral Scler Frontotemporal Degener. 2019;20(1–2):37–42.

Shefner JM, et al. A phase III trial of tirasemtiv as a potential treatment for amyotrophic lateral sclerosis. Amyotroph Lateral Scler Frontotemporal Degener. 2019;1–11.

de Carvalho M, et al. Respiratory function tests in amyotrophic lateral sclerosis: the role of maximal voluntary ventilation. J Neurol Sci. 2022;434:120143.

Pinto S, de Carvalho M. Comparison of slow and forced vital capacities on ability to predict survival in ALS. Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(7–8):528–33.

Lechtzin N, et al. Respiratory measures in amyotrophic lateral sclerosis. Amyotroph Lateral Scler Frontotemporal Degener. 2018;19(5–6):321–30.

Shefner JM, et al. A phase 2, double-blind, randomized, dose-ranging trial of reldesemtiv in patients with ALS. Amyotroph Lateral Scler Frontotemporal Degener. 2021;22(3–4):287–99.

Andrews JA, et al. VITALITY-ALS, a phase III trial of tirasemtiv, a selective fast skeletal muscle troponin activator, as a potential treatment for patients with amyotrophic lateral sclerosis: study design and baseline characteristics. Amyotroph Lateral Scler Frontotemporal Degener. 2018;19(3–4):259–66.

Andrews JA, et al. Amyotrophic lateral sclerosis care and research in the United States during the COVID-19 pandemic: challenges and opportunities. Muscle Nerve. 2020;62(2):182–6.

Helleman J, et al. Home-monitoring of vital capacity in people with a motor neuron disease. J Neurol. 2022.

Stegmann GM, et al. Early detection and tracking of bulbar changes in ALS via frequent and remote speech analysis. NPJ Digit Med. 2020;3:132.

Garcia-Gancedo L, et al. Objectively monitoring amyotrophic lateral sclerosis patient symptoms during clinical trials with sensors: observational study. JMIR Mhealth Uhealth. 2019;7(12):e13433.

Beukenhorst AL, et al. Smartphone data during the COVID-19 pandemic can quantify behavioral changes in people with ALS. Muscle Nerve. 2021;63(2):258–62.

Baxi EG, et al. Answer ALS, a large-scale resource for sporadic and familial ALS combining clinical and multi-omics data from induced pluripotent cell lines. Nat Neurosci. 2022;25(2):226–37.

Kuffner R, et al. Crowdsourced analysis of clinical trial data to predict amyotrophic lateral sclerosis progression. Nat Biotechnol. 2015;33(1):51–7.

Zhou N, Manser P. Does including machine learning predictions in ALS clinical trial analysis improve statistical power? Ann Clin Transl Neurol. 2020;7(10):1756–65.

Beaulieu D, et al. Development and validation of a machine-learning ALS survival model lacking vital capacity (VC-Free) for use in clinical trials during the COVID-19 pandemic. Amyotroph Lateral Scler Frontotemporal Degener. 2021;22(sup1):22–32.

Taylor AA, et al. Predicting disease progression in amyotrophic lateral sclerosis. Ann Clin Transl Neurol. 2016;3(11):866–75.

Chang C, et al. A permutation test for assessing the presence of individual differences in treatment effects. Stat Methods Med Res. 2021;30(11):2369–81.

Westeneng HJ, et al. Prognosis for patients with amyotrophic lateral sclerosis: development and validation of a personalised prediction model. Lancet Neurol. 2018;17(5):423–33.

Abe K, et al. Confirmatory double-blind, parallel-group, placebo-controlled study of efficacy and safety of edaravone (MCI-186) in amyotrophic lateral sclerosis patients. Amyotroph Lateral Scler Frontotemporal Degener. 2014;15(7–8):610–7.

Brooks BR, et al. Evidence for generalizability of edaravone efficacy using a novel machine learning risk-based subgroup analysis tool. Amyotroph Lateral Scler Frontotemporal Degener. 2022;23(1–2):49–57.

Paganoni S, et al. Survival analyses from the CENTAUR trial in amyotrophic lateral sclerosis: evaluating the impact of treatment crossover on outcomes. Muscle Nerve. 2022.

Paganoni S, et al. Trial of sodium phenylbutyrate-taurursodiol for amyotrophic lateral sclerosis. N Engl J Med. 2020;383(10):919–30.

Atassi N, et al. The PRO-ACT database: design, initial analyses, and predictive features. Neurology. 2014;83(19):1719–25.

Laursen DRT, Paludan-Muller AS, Hrobjartsson A. Randomized clinical trials with run-in periods: frequency, characteristics and reporting. Clin Epidemiol. 2019;11:169–84.

Turnbull J. Is edaravone harmful? (A placebo is not a control). Amyotroph Lateral Scler Frontotemporal Degener. 2018;19(7–8):477–82.

Glass JD, Fournier CN. Unintended consequences of approving unproven treatments-hope, hype, or harm? JAMA Neurol. 2022;79(2):117–8.

Al-Chalabi A, et al. July 2017 ENCALS statement on edaravone. Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(7–8):471–4.

Witzel S, et al. Safety and effectiveness of long-term intravenous administration of edaravone for treatment of patients with amyotrophic lateral sclerosis. JAMA Neurol. 2022;79(2):121–30.

Paganoni S, et al. Adaptive platform trials to transform amyotrophic lateral sclerosis therapy development. Ann Neurol. 2022;91(2):165–75.

Adaptive Platform Trials Coalition. Adaptive platform trials: definition, design, conduct and reporting considerations. Nat Rev Drug Discov. 2019;18(10):797–807.

Alexander BM, et al. Individualized screening trial of innovative glioblastoma therapy (INSIGhT): a Bayesian adaptive platform trial to develop precision medicines for patients with glioblastoma. JCO Precis Oncol. 2019;3.

Halbgebauer S, et al. Comparison of CSF and serum neurofilament light and heavy chain as differential diagnostic biomarkers for ALS. J Neurol Neurosurg Psychiatry. 2022;93(1):68–74.

Poesen K, Van Damme P. Diagnostic and prognostic performance of neurofilaments in ALS. Front Neurol. 2018;9:1167.

Gaiani A, et al. Diagnostic and prognostic biomarkers in amyotrophic lateral sclerosis neurofilament light chain levels in definite subtypes of disease. JAMA Neurol. 2017;74(5):525–32.

Skillback T, et al. Cerebrospinal fluid neurofilament light concentration in motor neuron disease and frontotemporal dementia predicts survival. Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(5–6):397–403.

Miller T, et al. Phase 1–2 trial of antisense oligonucleotide tofersen for SOD1 ALS. N Engl J Med. 2020;383(2):109–19.

Benatar M, et al. Neurofilaments in pre-symptomatic ALS and the impact of genotype. Amyotroph Lateral Scler Frontotemporal Degener. 2019;20(7–8):538–48.

Benatar M, et al. Design of a randomized, placebo-controlled, phase 3 trial of tofersen initiated in clinically presymptomatic SOD1 variant carriers: the ATLAS study. Neurotherapeutics. 2022.

Verber NS, et al. Biomarkers in motor neuron disease: a state of the art review. Front Neurol. 2019;10.

Corcia P, et al. Molecular imaging of microglial activation in amyotrophic lateral sclerosis. PLoS ONE. 2012;7(12):e52941.

Zurcher NR, et al. Increased in vivo glial activation in patients with amyotrophic lateral sclerosis: assessed with [(11)C]-PBR28. Neuroimage Clin. 2015;7:409–14.

Chew S, Atassi N. Positron emission tomography molecular imaging biomarkers for amyotrophic lateral sclerosis. Front Neurol. 2019;10:135.

Miller RG, et al. Randomized phase 2 trial of NP001-a novel immune regulator: safety and early efficacy in ALS. Neurol Neuroimmunol Neuroinflamm. 2015;2(3):e100.

van Eijk RPA, et al. Meta-analysis of pharmacogenetic interactions in amyotrophic lateral sclerosis clinical trials. Neurology. 2017;89(18):1915–22.

Mehta P, et al. Incidence of amyotrophic lateral sclerosis in the United States, 2014–2016. Amyotroph Lateral Scler Frontotemporal Degener. 2022;1–5.

Brand D, et al. Comparison of phenotypic characteristics and prognosis between black and white patients in a tertiary ALS clinic. Neurology. 2021;96(6):e840–4.

Le D, et al. Improving African American women's engagement in clinical research: a systematic review of barriers to participation in clinical trials. J Natl Med Assoc. 2022.

Bedlack RS, et al. Lunasin does not slow ALS progression: results of an open-label, single-center, hybrid-virtual 12-month trial. Amyotroph Lateral Scler Frontotemporal Degener. 2019;20(3–4):285–93.

Center for Drug Evaluation and Research. Guidance Document: Amyotrophic Lateral Sclerosis: Developing Drugs for Treatment Guidance for Industry, U.S. Food and Drug Administration. 2019. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/amyotrophic-lateral-sclerosis-developing-drugs-treatment-guidance-industry. Accessed June 2022.

Andrews JA, Bruijn LI, Shefner JM. ALS drug development guidances and trial guidelines: consensus and opportunities for alignment. Neurology. 2019;93(2):66–71.

Simmons Z. Right-to-try investigational therapies for incurable disorders. Continuum (Minneap Minn). 2017;23(5, Peripheral Nerve and Motor Neuron Disorders):1451–7.

Scherer K, Bedlack RS. Diaphragm pacing in amyotrophic lateral sclerosis: a literature review. Muscle Nerve. 2012;46(1):1–8.

Onders RP, et al. Complete worldwide operative experience in laparoscopic diaphragm pacing: results and differences in spinal cord injured patients and amyotrophic lateral sclerosis patients. Surg Endosc. 2009;23(7):1433–40.

Schmiesing CA, et al. Laparoscopic diaphragmatic pacer placement—a potential new treatment for ALS patients: a brief description of the device and anesthetic issues. J Clin Anesth. 2010;22(7):549–52.

Center for Devices and Radiological Health. Summary of Safety and Probable Benefit (SSPB): Diaphragm Pacing Stimulator, U.S. Food and Drug Administration. 2011. https://www.accessdata.fda.gov/cdrh_docs/pdf10/H100006b.pdf. Accessed June 2022.

Amirjani N, et al. Is there a case for diaphragm pacing for amyotrophic lateral sclerosis patients? Amyotroph Lateral Scler. 2012;13(6):521–7.

DiPALS Writing Committee, DiPALS Study Group Collaborators. Safety and efficacy of diaphragm pacing in patients with respiratory insufficiency due to amyotrophic lateral sclerosis (DiPALS): a multicentre, open-label, randomised controlled trial. Lancet Neurol. 2015;14(9):883–92.

Gonzalez-Bermejo J, et al. Early diaphragm pacing in patients with amyotrophic lateral sclerosis (RespiStimALS): a randomised controlled triple-blind trial. Lancet Neurol. 2016;15(12):1217–27.

H.R. 3537: Accelerating Access to Critical Therapies for ALS Act.

Lacomblez L, et al. A confirmatory dose-ranging study of riluzole in ALS. ALS/Riluzole Study Group-II. Neurology. 1996;47(6 Suppl 4):S242–50.

Cudkowicz ME, et al. A randomized placebo-controlled phase 3 study of mesenchymal stem cells induced to secrete high levels of neurotrophic factors in amyotrophic lateral sclerosis. Muscle Nerve. 2022;65(3):291–302.

Glass JD, et al. Lumbar intraspinal injection of neural stem cells in patients with amyotrophic lateral sclerosis: results of a phase I trial in 12 patients. Stem Cells. 2012;30(6):1144–51.

Fournier C, Glass JD. Modeling the course of amyotrophic lateral sclerosis. Nat Biotechnol. 2015;33(1):45–7.

Required Author Forms

Disclosure forms provided by the authors are available with the online version of this article.

Invited Review, Motor neuron disease therapy for the twenty-first century.

Cite this article

Fournier, C.N. Considerations for Amyotrophic Lateral Sclerosis (ALS) Clinical Trial Design. Neurotherapeutics 19, 1180–1192 (2022). https://doi.org/10.1007/s13311-022-01271-2
