Abstract

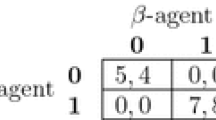

We analyze reinforcement learning under so-called “dynamic reinforcement.” In reinforcement learning, each agent repeatedly interacts with an unknown environment (i.e., other agents), receives a reward, and updates the probabilities of its next action based on its own previous actions and received rewards. Unlike standard reinforcement learning, dynamic reinforcement combines long-term rewards with recent rewards to construct myopically forward-looking action selection probabilities. We analyze the long-term stability of the learning dynamics for general games with pure-strategy Nash equilibria and specialize the results to coordination games and distributed network formation. In this class of problems, more than one stable equilibrium (i.e., coordination configuration) may exist. We demonstrate equilibrium selection under dynamic reinforcement. In particular, we show how a single agent can destabilize an equilibrium in favor of another by appropriately adjusting its dynamic reinforcement parameters. We contrast these conclusions with prior game-theoretic results, according to which the risk-dominant equilibrium is the only robust equilibrium when agents’ decisions are subject to small randomized perturbations. The analysis throughout is based on the ODE method for stochastic approximations, where a special form of perturbation in the learning dynamics allows us to analyze their behavior at the boundary points of the state space.
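The abstract gives no formulas, so the following Python sketch is only one plausible reading of the scheme it describes, not the authors' algorithm. It assumes a linear reward-inaction update for the long-term strategy `x`, a low-pass filtered copy `y` of that strategy, and selection probabilities formed by projecting `x + gain * (x - y)` onto the probability simplex. The names `step`, `filter_rate`, `gain`, and the payoff table are hypothetical, and the perturbation used in the boundary analysis is not modeled.

```python
import random


def project_to_simplex(v):
    """Euclidean projection of a vector onto the probability simplex."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for k, u_k in enumerate(u, start=1):
        cumulative += u_k
        t = (cumulative - 1.0) / k
        if u_k - t > 0:
            theta = t  # keep the threshold of the last index that qualifies
    return [max(x - theta, 0.0) for x in v]


class Agent:
    """Reinforcement learner with an assumed 'dynamic reinforcement' term."""

    def __init__(self, n_actions, step=0.01, filter_rate=0.01, gain=0.0):
        self.x = [1.0 / n_actions] * n_actions  # long-term strategy
        self.y = list(self.x)                   # low-pass filtered strategy
        self.step = step                # assumed learning step size
        self.filter_rate = filter_rate  # assumed filter rate for y
        self.gain = gain                # assumed weight on the recent trend

    def selection_probs(self):
        # Long-term strategy plus a term that extrapolates its recent change.
        raw = [x + self.gain * (x - y) for x, y in zip(self.x, self.y)]
        return project_to_simplex(raw)

    def act(self, rng):
        probs = self.selection_probs()
        return rng.choices(range(len(probs)), weights=probs)[0]

    def update(self, action, reward):
        # Linear reward-inaction: move x toward the played action,
        # scaled by the reward (assumed to lie in (0, 1]).
        for j in range(len(self.x)):
            target = 1.0 if j == action else 0.0
            self.x[j] += self.step * reward * (target - self.x[j])
        for j in range(len(self.y)):
            self.y[j] += self.filter_rate * (self.x[j] - self.y[j])


# Hypothetical 2x2 coordination game: matching actions pay more than mismatches.
PAYOFF = {(0, 0): 1.0, (1, 1): 0.6, (0, 1): 0.1, (1, 0): 0.1}


def simulate(rounds=2000, gain=0.0, seed=0):
    rng = random.Random(seed)
    agents = [Agent(2, gain=gain), Agent(2)]
    for _ in range(rounds):
        a0, a1 = agents[0].act(rng), agents[1].act(rng)
        reward = PAYOFF[(a0, a1)]  # both players receive the same reward
        agents[0].update(a0, reward)
        agents[1].update(a1, reward)
    return agents
```

Calling `simulate(gain=5.0)` gives only the first agent a nonzero `gain`, which is a crude way to experiment with the abstract's claim that a single agent's parameters can influence which equilibrium is selected. The sketch makes no claim about which outcome results.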




Cite this article

Chasparis, G.C., Shamma, J.S. Distributed Dynamic Reinforcement of Efficient Outcomes in Multiagent Coordination and Network Formation. Dyn Games Appl 2, 18–50 (2012). https://doi.org/10.1007/s13235-011-0038-z
