Abstract

Artificial intelligence (AI) is finding more uses in human society, resulting in a need to scrutinise the relationship between humans and AI. The technology itself has advanced from merely encoding human knowledge into a machine to designing machines that “know how” to acquire autonomously the knowledge they need, learn from it and act independently in the environment. Fortunately, this need is not new; it has scientific grounds that can be traced back to the inception of computers. This paper uses a multi-disciplinary lens to explore how the natural cognitive intelligence of a human could interface with the artificial cognitive intelligence of a machine. The scientific journey over the last 50 years will be examined to understand the human-AI relationship and to present the nature of, and the role of trust in, this relationship. Risks and opportunities at the human-AI interface will be studied to reveal some of the fundamental technical challenges for a trustworthy human-AI relationship. The critical assessment of the literature leads to the conclusion that any social integration of AI into the human social system would necessitate some form of relationship at one level or another of society, meaning that humans will “always” actively participate in certain decision-making loops, either in-the-loop or on-the-loop, that will influence the operations of AI, regardless of how sophisticated it is.

Background

Since the inception of the human race, technologies have been an integral part of human society. The Oldowan stone tools [5] assisted early humans some 2.6 million years ago in tasks such as butchering carcasses and processing food. Simple tools created opportunities for humans, providing them with more resources to support their families and enabling them to improve their quality of life. For millions of years, these technologies physically augmented the human. Only a thousand years ago, when the Chinese invented the Suanpan [6] (one of the first forms of calculator known in history), did humankind start to see the birth of cognitive augmentation: tools that help humans to think faster and to perform complex counting and arithmetic operations that are difficult for the human brain alone. This form of augmentation enabled humans to be more efficient in trade.

Calculating devices then added greater functionality, ranging from complex calculations to the ability to store and memorise information. As humanity started to develop computing machines, beginning with Babbage’s mechanical designs in 1821 [7], new extensions to human cognition began to appear. Present-day technology enables a computer to augment humans’ planning abilities, as in the case of a GPS planner in a car, and to extend human memory, as in the case of a calendar that memorises appointments. Sensor technologies that complement natural biological sensors enable humans to see, hear and feel things they could not see, hear or feel before. Robotic actuators allow humans to extend their body [8, 9] when they lose an arm or a leg or, when they need extra strength, by using an exoskeleton to carry more weight than they could normally support. The technological landscape has evolved steadily from simple automation to advanced automation that can respond better than a human in specific situations. For example, a collision avoidance system in a car can sense danger faster than a human and can execute a resolution strategy in a time-critical, life-or-death situation. Despite this long history of physical and basic-level cognitive augmentation, never before have humans been able to augment their intelligence. Tools, including computers, have always been under significant human control: the human is the master, and the tool has always been the slave, with no mind of its own to challenge its human master.

For many years, artificial intelligence (AI) promised to revolutionise this form of augmentation. Since its inception, AI has promised to solve problems independently on behalf of humans, to understand humans and communicate with them, and even to challenge humans in their unique characteristic: natural intelligence. The Turing test [10] has been the primary test for AI, challenging AI developers to design an AI that is indistinguishable from humans [11]. However, for decades, the promise of AI was bigger than its reality, creating valleys of AI death [11] in which capabilities reflecting the more complex properties of humans remained in their infancy.

Over the last decade, the fortunes of AI have started to turn, together with those of its enabling technologies, such as sensors, communication, the Internet, computing speed and storage. Micro-services [12], which break large tasks down into many small programs that can be distributed anywhere and summoned on demand, offered industry an opportunity to replace a single giant AI program with many independent services performing specialised functions [13]. Many companies, including Microsoft, use micro-services today, and the concept has been the basis for some recent media stunts, such as those associated with the robot Sophia becoming the first robot to be granted citizenship by a country [14]. Micro-services will not only conceal the true state of AI today but will also allow sudden, unanticipated tipping points to appear in the technological landscape of AI. As we approach these tipping points, we need to ask: what exactly is AI, and what roles should humans play in safeguarding society against AI?

What Is Artificial Intelligence?

There are many definitions of AI [15, 16], owing to the difficulty of expressing the concept of intelligence in finite words and to the blend of beauty and ambiguity in human language. These attempts spent little time arguing what “artificial” refers to in AI and much time arguing what “intelligence” is. Strictly, computational intelligence is a more adequate term: it avoids the philosophical question of what is and is not artificial and focuses, more technologically, on the fact that the AI I discuss in this article is computational in nature. Nevertheless, I start by offering my own definitions of AI, for two reasons. First, they communicate to the reader what AI means to me, which will facilitate an understanding of the remainder of this paper. Second, they offer a structure for this paper that naturally unfolds the relationship between AI and humans, as well as that between AI and other concepts and research areas, such as autonomy, smart autonomous systems, trusted autonomy and robotics.

I begin with my functional definition of AI:

Definition 2.1

Artificial intelligence is concerned with the design of computer algorithms, methods and methodologies that enable machines to: understand the world and assess themselves and their context to identify hazards, threats and opportunities affecting their goals; generate, choose and execute appropriate courses of action to achieve their goals; learn to improve their performance and adapt to changes in their surroundings; and educate and transfer their knowledge to others (humans and machines).

I like to simplify the above definition technologically to the following:

Definition 2.2

Artificial intelligence aims to design algorithms to provide computers with cognitive skills and competencies for sense-making and decision-making.

These two definitions are anchored in the underlying philosophy of this paper. The first lists the abilities that different AI algorithms need in order to:

-

interpret data, represent and understand context and situations

-

assess opportunities and risks in contexts and situations

-

design, plan and generate courses of action, select and execute one or more of them, and reason about and explain the choices they make

-

learn and adapt

-

share knowledge by transferring it to other AIs or, through explanation, to a human.

The second definition posits AI as the automation of cognition (machine cognition) to develop skills and competencies to perform tasks. It highlights the two major streams of applications we see in today’s world of AI: data analytics, which focuses on analysing, interpreting and transforming data into knowledge, and autonomy, which focuses on producing actions that assist the AI in achieving its own design objectives.

In data analytics, a core step for sense-making, the results of the analysis inform humans or another AI in identifying an appropriate course of action. The classic cycle of data mining and knowledge discovery in databases commences with an objective for the analysis and a set of data, which then goes through a series of steps: cleaning, feature selection, application of a machine learning technique, and identification of an appropriate model for point/class prediction, or some form of summarisation and understanding of the data through association rules, clustering and/or other summarisation techniques. The output of data analytics enables the decision maker (human or AI) to understand the environment, make predictions and anticipate the consequences of options.
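The classic cycle above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed method: the toy loan-style records, the variance threshold used for feature selection and the nearest-centroid classifier are all choices made here for brevity, and feature scaling is deliberately omitted.

```python
from statistics import mean, pvariance

# Hypothetical toy records: x = (income in $k, debt ratio, constant flag), y = label.
RECORDS = [
    {"x": (80, 0.2, 1), "y": "low_risk"},
    {"x": (75, 0.3, 1), "y": "low_risk"},
    {"x": (30, 0.8, 1), "y": "high_risk"},
    {"x": (25, 0.9, 1), "y": "high_risk"},
    {"x": (60, None, 1), "y": "low_risk"},  # missing value: removed by cleaning
]


def clean(rows):
    """Cleaning: drop records with a missing feature or label."""
    return [r for r in rows if None not in r["x"] and r["y"] is not None]


def select_features(rows, min_var=1e-9):
    """Feature selection: keep indices of features whose variance exceeds min_var."""
    n = len(rows[0]["x"])
    return [j for j in range(n) if pvariance([r["x"][j] for r in rows]) > min_var]


def fit(rows, feats):
    """Modelling: a nearest-centroid classifier (mean feature vector per class)."""
    groups = {}
    for r in rows:
        groups.setdefault(r["y"], []).append([r["x"][j] for j in feats])
    return {label: [mean(col) for col in zip(*vecs)] for label, vecs in groups.items()}


def predict(model, feats, x):
    """Class prediction: the label whose centroid is nearest in squared distance."""
    v = [x[j] for j in feats]
    return min(model, key=lambda c: sum((a - b) ** 2 for a, b in zip(v, model[c])))


rows = clean(RECORDS)
feats = select_features(rows)  # the constant third feature is dropped
model = fit(rows, feats)
print(feats, predict(model, feats, (70, 0.35, 1)))
```

The output is a prediction that informs a decision maker; nothing in the pipeline acts on it, which is what distinguishes analytics from decision-making in the discussion that follows.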

Since data analytics does not produce decisions per se, the risk of that AI needs to be managed by those who use its output to generate actions. For example, a panel display in a vehicle shows the result of the analysis the AI performs to transform the raw signals received by the car into information for the driver, such as the external temperature and navigation information. The human can choose to ignore this information or use it as appropriate.

Decision-making produces actions based on the data and understanding provided to the agent (a human or an AI). If the decision-making agent acts directly on the environment, part of the risk of that decision is transferred to the inputs to that agent. A loan assessment tool, for example, makes decisions by itself and acts on the information it receives. If the tool guarantees to make the right decision, this guarantee would likely be conditional on receiving correct information with an appropriate level of accuracy; the tool could still make the wrong decision if a human feeds it incorrect information. Alternatively, the tool might simply make a recommendation to a human who has the authority to accept or reject it; hence, the risk of the decision can still be managed.

Autonomy requires an AI with both sense-making and decision-making abilities, as well as the ability and authority to execute its decisions. This form of AI senses information from its environment, assesses context, makes decisions, executes them and is authorised to do so. An autonomous loan agent would collect information about the borrower, analyse the borrower’s risk profile and financial abilities, decide the size of loan the borrower may obtain, initiate the loan in the system and authorise it. When an autonomous loan agent works together with a human financial adviser, the human-AI relationship needs to be considered at the design stage of the AI and during human training. Since this relationship has long been studied, it is pertinent to discuss the literature so that the reader can appreciate that the relationship can be designed at a fine level of granularity that manages the risk to society. While challenges exist, as I discuss later in this article, an important piece of enabling science revolves around the concept of function allocation.
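The three modes discussed above (analytics that only informs, a tool that recommends to a human with authority, and an autonomous agent that decides, executes and is authorised) can be contrasted in a small sketch. The `Mode`, `Applicant`, `risk_score` and `loan_offer` names and the debt-to-income rule are hypothetical and stand in for whatever assessment a real loan tool would perform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Mode(Enum):
    ANALYTICS = "analytics"      # AI informs; the human decides and acts
    RECOMMENDER = "recommender"  # AI recommends; a human accepts or rejects
    AUTONOMOUS = "autonomous"    # AI decides, executes and is authorised to execute


@dataclass
class Applicant:
    income: float
    debts: float


def risk_score(a: Applicant) -> float:
    """Sense-making: a hypothetical debt-to-income ratio used as the risk indicator."""
    return a.debts / a.income


def loan_offer(a: Applicant, max_ratio: float = 0.4) -> float:
    """Decision-making: hypothetical rule capping total debt at max_ratio of income."""
    return max(0.0, max_ratio * a.income - a.debts)


def process(a: Applicant, mode: Mode,
            human_approves: Optional[Callable[[float, float], bool]] = None) -> dict:
    score = risk_score(a)
    if mode is Mode.ANALYTICS:
        return {"risk": score}  # no decision: risk is managed by whoever acts on it
    offer = loan_offer(a)
    if mode is Mode.RECOMMENDER:
        if human_approves is None:
            raise ValueError("recommender mode needs a human with authority to decide")
        # The human retains authority: the offer executes only if they approve.
        return {"risk": score, "offer": offer, "executed": human_approves(offer, score)}
    # Autonomous mode: the agent executes its own decision.
    return {"risk": score, "offer": offer, "executed": offer > 0}


a = Applicant(income=100_000, debts=20_000)
print(process(a, Mode.AUTONOMOUS))
print(process(a, Mode.RECOMMENDER, lambda offer, risk: risk < 0.1))
```

The autonomous result is only as good as its inputs: a misreported `debts` value flows straight into an executed decision, which is the transfer of risk to the agent’s inputs described above.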

Function Allocation to Humans and Machines

At the design stage of a new technology, the potential missions and contexts for which the technology will be used are analysed to identify the functions these missions require. If the mission is to drive a car from one place to another, we could have a function to observe the environment, a function to evaluate the observations to determine the car’s current location, a function to detect hazards, a function to plan the car’s next location, a function to decide on appropriate acceleration and deceleration rates to control the car’s velocity, and a function to steer. These suffice for our purpose; a complete enumeration would be unnecessarily cumbersome. When a car has some level of automation, which functions should we delegate to automation, and when and how? These questions have been the subject of a long history of research on function allocation: the process by which functions are allocated to humans and machines. It is a form of division of labour. Function allocation raises three key questions:

-

Methodology: How should functions between humans and machines be allocated?

-

Responsibility: Who is doing the allocation?

-

Authority: Who can authorise an allocation?

Function Allocation Methodologies

Two distinct categories of methods exist for function allocation: static and adaptive allocation. Rouse and Rouse [17] defined three classes of static allocation:

-

comparison allocation, where the better performer is chosen; that is, if the human is better than the machine at a function, the human is chosen; otherwise, the machine is chosen

-

leftover allocation, where every function that could be automated is allocated to automation and only those functions for which no automation is possible are allocated to humans

-

economic allocation, which uses cost-benefit analysis: if automating a function is not cost-effective, the function is assigned to a human even if it could be automated.
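The three static classes of Rouse and Rouse can be expressed as simple allocation rules. In the sketch below, the driving functions echo the car example given earlier, but every performance score, cost and benefit is a made-up number, and treating steering as non-automatable is an assumption for this hypothetical vehicle.

```python
from dataclasses import dataclass


@dataclass
class Function:
    name: str
    human_perf: float    # hypothetical performance score in [0, 1]
    machine_perf: float  # hypothetical; 0 if no automation exists
    automatable: bool
    cost: float          # hypothetical cost of automating the function
    benefit: float       # hypothetical benefit of automating it


def comparison(fs):
    """Choose the better performer; the human only if strictly better."""
    return {f.name: "human" if f.human_perf > f.machine_perf else "machine" for f in fs}


def leftover(fs):
    """Automate everything possible; humans get whatever is left over."""
    return {f.name: "machine" if f.automatable else "human" for f in fs}


def economic(fs):
    """Automate only when it is both possible and cost-effective."""
    return {f.name: "machine" if f.automatable and f.benefit > f.cost else "human"
            for f in fs}


DRIVING = [
    Function("observe", 0.7, 0.9, True, cost=5, benefit=4),
    Function("detect_hazards", 0.8, 0.75, True, cost=9, benefit=6),
    Function("plan_next_location", 0.6, 0.95, True, cost=2, benefit=7),
    Function("steer", 0.9, 0.0, False, cost=0, benefit=0),
]

for rule in (comparison, leftover, economic):
    print(rule.__name__, rule(DRIVING))
```

All three rules are evaluated once, before the mission, and none of them looks at workload, boredom or the operator’s preferences, which is exactly the weakness discussed next.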

Static allocation presents a multitude of problems. First, it assumes that the suitability of a human or a machine for a function does not change over the course of the mission. This is clearly not the case, because the states of the environment, the AI and the humans all influence the suitability of a human or a machine for a particular function in a particular context. In a situation with severe consequences, a function performed by a machine might need to be performed by a human; conversely, a function that a human does well under normal workload conditions might need to be switched to a machine if the human is overloaded.

Second, none of the three classes of static allocation described above considers the human element. Each treats humans as machines, and the allocation is based purely on factors such as performance and cost. This could lead to humans being assigned uninteresting functions, causing boredom and demotivation. A third problem is the underlying assumption that the designer decides what to allocate to humans and machines. Under this form of “technologically centred design”, the human operator has no say and is assumed to “listen” to whatever the designer has decided.

The problems described above gave birth to a new category of function allocation, called adaptive allocation, developed to serve a “human-centred design”. Different names for this category emerged, including adaptive aiding, adaptive automation and adaptive allocation, but its origin can be traced back to the early 1960s [18, 19]. The concept was popularised by a United States Air Force project on cockpit automation in the 1970s [20]. Adaptive allocation changes function allocation during a mission. Rouse [21] discussed three strategies (called automation allocation logic [AAL]) to control when a change in function allocation needs to be triggered:

-

critical event logic, where automation hands over a function to a human if there is a critical event or vice versa

-

measurement-based logic, where the handover could occur in any direction at any point in time based on a continuous measurement of a state such as human workload level

-

modelling-based logic, where human performance is captured in a model that predicts future human workload and makes decisions about when a different function allocation is needed.
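Rouse’s three AAL strategies can be sketched as functions that return which agent should perform a function next. The workload scale in [0, 1], the thresholds `high` and `low`, the hysteresis band between them and the linear-trend workload model are all illustrative assumptions; the literature uses far richer human-performance models.

```python
def critical_event_logic(current: str, critical_event: bool) -> str:
    """Swap the performer of a function when a critical event occurs."""
    if not critical_event:
        return current
    return "human" if current == "machine" else "machine"


def measurement_logic(current: str, workload: float,
                      high: float = 0.8, low: float = 0.3) -> str:
    """Allocate from a measured workload in [0, 1]; the band between low and high
    keeps the current allocation to avoid rapid back-and-forth switching."""
    if workload > high:
        return "machine"
    if workload < low:
        return "human"
    return current


def predict_workload(history: list, horizon: int) -> float:
    """Hypothetical workload model: linear extrapolation of the average trend."""
    if len(history) < 2:
        return history[-1]
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + slope * horizon


def modelling_logic(current: str, history: list, horizon: int = 3,
                    high: float = 0.8, low: float = 0.3) -> str:
    """Allocate on the predicted, rather than the currently measured, workload."""
    return measurement_logic(current, predict_workload(history, horizon), high, low)


print(measurement_logic("human", 0.7), modelling_logic("human", [0.5, 0.6, 0.7]))
```

With a workload history of `[0.5, 0.6, 0.7]`, measurement-based logic keeps the function with the human because 0.7 is below the upper threshold, whereas modelling-based logic extrapolates the trend to roughly 1.0 and hands the function to the machine in advance.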

The earlier versions of adaptive allocation focused on simple models of human performance that were built through observational research. This was true for adaptive aiding [19] and adaptive automation [18]. Research areas such as human-machine interaction [22], man-machine symbiosis [23], human-machine teaming [5] and human-autonomy teaming [24] have largely relied on simple models to either analyse or model the relationship. Meanwhile, a second evolutionary path has been growing steadily since the 1970s, which has led to the newer concepts of augmented cognition [25] and cognitive-cyber symbiosis (CoCyS; [26]).

However, dynamic function allocation could distract humans and disturb their situational awareness, consequently degrading safety and performance in the tasks assigned to them. Moreover, if the allocation is based on subjective data, or on objective data whose collection interferes with the task in which a human is involved, allocation errors could increase, resulting in more errors in the human-AI relationship. Augmented cognition and CoCyS attempt to overcome these limitations.

Augmented cognition [27, 27] is electroencephalography (EEG)-based adaptive allocation. EEG records the electrical signals generated by the brain activity that underlies brain functions. In 1875, Richard Caton demonstrated that fluctuations in brain activity follow mental activity. This finding suggested that brain signals could indicate the effect of the mental processing a human experiences while performing certain functions. However, Caton’s work (as cited in [28]) was conducted on animals. It was not until 1929 that Hans Berger [29] published the first study to record EEG data from humans. In 1934, Adrian and Matthews [30] demonstrated that brain waves are triggered not only by mental activity but also by external stimuli, which can influence how they are triggered. This was possibly the first scientific evidence for neurofeedback, a recent field in clinical psychology [28] that retrains the human brain to produce signals that correct physiological causes of some psychological illnesses, such as attention deficit disorder.

In 1970, the United States Department of Defense (DoD) recognised the potential of this maturing line of research and embarked on two projects, on biocybernetics [31] and on brain-computer interfaces [32]. The ability to record and interpret human EEG opened a variety of opportunities, including the potential to derive objective indicators of workload, attention and situational awareness from EEG to guide AAL, even in real time in an operating environment. Another DoD activity in the early 2000s led to the new concept of augmented cognition. As EEG sensors developed and became more reliable, the science reached a reasonable level of technological maturity, and function allocation research using EEG became well established. In this line of research, the function allocation logic has arguably been the simplest form of AI: it was pre-designed, it lacked flexibility because it did not change its behaviour once deployed and, simply put, it lacked the intelligence and autonomy to match the complexity of the AI it was attempting to manage in the first place.

This led to the Australian-born CoCyS [26] concept, which revolutionised the adaptation process, shifting it from a machine adapting to a human to smart adaptive agents (called “e-cookies”) that act as autonomous relationship managers between humans and machines. In essence, one could imagine standardising all AI systems by adding to each AI a front-end that communicates with the human, assesses the human’s trustworthiness and, most importantly, presents information to the human at a pace and in a form suitable for the human to understand and act on, while simultaneously translating this information back and forth with the AI’s internal components. CoCyS offers an alternative that is more design-friendly and cost-effective, and that could be designed and added to any existing system. Like human relationship managers, e-cookies are AI relationship managers whose sole responsibility is to manage the relationship between humans and other AIs or autonomous systems. For example, e-cookies do not play chess; they interface between the human who is playing chess and the AI recommender system that is supporting this human. E-cookies sit in between to ensure that the human and the AI recommender system work in harmony and in a trustworthy manner.

Each e-cookie analyses different data sources (e.g. EEG, muscle movements, heart rate, skin temperature, eye tracking, voice, images) measured from the human under observation, objectively inferring human states (e.g. workload, attention, emotion). It equally analyses different data sources from the automation itself to understand autonomously the state of the task and predict its future states. An e-cookie then learns from this information to identify when and how to adapt the function allocation strategy. An e-cookie also acts as a smart regulator and/or smart safety net to ensure the trustworthiness of the relationship.

CoCyS opened the way to a new and more sophisticated, trust-based form of AAL, allowing an e-cookie to reallocate functions between humans and machines based on trust indicators. If a machine is hacked or spoofed, some functions are retracted from the machine and allocated to the human. If there is a suspicious change in human identity, some functions that were performed by the human are reallocated to the machine. Adaptive allocation created a control agent between humans and machines. To avoid confusion between the automation discussed so far and the automation of the agent responsible for adaptive allocation, I refer to the latter as the allocation agent. The e-cookie discussed above is an example of an AI-based allocation agent. This naturally raises two questions: who performs the allocation agent’s job, and who has the authority to decide to execute the allocation agent’s recommended course of action? These two questions are addressed next.
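Trust-based AAL can be illustrated with a rule that moves trust-sensitive functions away from whichever agent is no longer trusted. This is a hypothetical sketch, not the e-cookie implementation: the function names, the `sensitive` set and the `"hold"` outcome for the case where neither agent is trusted are assumptions.

```python
def trust_based_reallocation(allocation: dict, sensitive: set,
                             machine_compromised: bool,
                             human_identity_suspicious: bool) -> dict:
    """Reallocate trust-sensitive functions away from an agent that is no longer trusted.

    If both agents are untrusted, the sensitive functions are put on 'hold'
    for escalation (a hypothetical choice; the text does not cover this case).
    """
    new = dict(allocation)
    for f in sensitive:
        if machine_compromised and human_identity_suspicious:
            new[f] = "hold"
        elif machine_compromised and new[f] == "machine":
            new[f] = "human"  # machine hacked or spoofed: retract to the human
        elif human_identity_suspicious and new[f] == "human":
            new[f] = "machine"  # suspicious human identity: reallocate to the machine
    return new


ALLOCATION = {"navigate": "machine", "approve_payment": "human", "log_events": "machine"}
SENSITIVE = {"navigate", "approve_payment"}
print(trust_based_reallocation(ALLOCATION, SENSITIVE, True, False))
```

Functions outside the sensitive set, such as `log_events` here, stay where they are, so a trust alarm changes only the part of the division of labour that the alarm actually concerns.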

Function Allocation Responsibility

Humans can control the allocation agent; that is, they can assess a situation and decide when to push a function to the AI and when to retract it. Equally, control of the allocation agent can be automated. For example, when the system detects a critical event, it can reallocate a function from a human to a machine, or vice versa, based on a set of predefined rules. The e-cookie is an example of advanced automation of the allocation agent. But what intervention triggers are needed for the human or the AI to intervene, and when and how? The answer depends on the level of automation and the role the human plays in the system.

Levels of automation (LOA) describe the degree to which a task is automated. Sheridan [33, 34] defined 10 levels using a “who-centred” approach, while Endsley and Kaber [35] defined another 10 levels from an information-sharing and situation awareness perspective. The latter were then refined, with Endsley [36, 37] increasing the number of levels to 12. The two approaches are presented in Table 1.
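For reference, Sheridan’s ten levels, as they are commonly summarised in the human-factors literature, can be encoded as an ordered enumeration; the wording in Table 1 may differ. The helper functions are illustrative additions that make explicit who executes the action and whether the human can still stop it.

```python
from enum import IntEnum


class SheridanLOA(IntEnum):
    NO_ASSISTANCE = 1          # the human takes all decisions and actions
    OFFERS_ALTERNATIVES = 2    # computer offers a complete set of alternatives
    NARROWS_SELECTION = 3      # computer narrows the selection down to a few
    SUGGESTS_ONE = 4           # computer suggests one alternative
    EXECUTES_IF_APPROVED = 5   # computer executes the suggestion if the human approves
    VETO_WINDOW = 6            # human gets a restricted time to veto before execution
    EXECUTES_THEN_INFORMS = 7  # computer executes, then necessarily informs the human
    INFORMS_IF_ASKED = 8       # computer informs the human only if asked
    INFORMS_IF_IT_DECIDES = 9  # computer informs the human only if it decides to
    FULL_AUTONOMY = 10         # computer decides everything and acts, ignoring the human


def machine_executes(level: SheridanLOA) -> bool:
    """From level 5 upwards, the computer carries out the chosen action."""
    return level >= SheridanLOA.EXECUTES_IF_APPROVED


def human_can_stop_action(level: SheridanLOA) -> bool:
    """Up to level 6, the human executes, approves or can veto before execution."""
    return level <= SheridanLOA.VETO_WINDOW


for level in SheridanLOA:
    print(level.value, level.name, machine_executes(level), human_can_stop_action(level))
```

Levels 5 and 6 are where execution passes to the machine while the human still holds a gate on it, which is the region where questions of function allocation authority become most delicate.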

LOA have evolved in a culture of decision-making in safety-critical systems, where the physical body of the AI in the form of a robot is not part of the operator’s responsibility and is, therefore, irrelevant to the relationship between humans and machines. Nonetheless, in the field of human-robot interaction, the relationship between the human and the robot can take different forms.

Scholtz [38] defined five roles for humans in human-robot interaction: supervisor, operator, teammate, bystander and mechanic. In a supervisory role, the human oversees what the machine does and advises accordingly. A supervisor does not become involved in the low-level tasks for which the machine is responsible; instead, the supervisor takes meta-actions and makes plans and high-level decisions. An operator, however, monitors and controls low-level, action-level tasks; teleoperation is an example of a human operator performing low-level tasks. A teammate works collaboratively with the machine to perform the mission; the two can issue advice to each other and delegate tasks to one another. As a mechanic, the human corrects any abnormal behaviour the machine displays or fixes a mechanical problem with the machine. A bystander acts as a facilitator between the robot and the environment; for example, a bystander might remove an obstacle from the robot’s path if the robot is not designed to manage obstacles, or if the robot is so expensive and fragile that a collision would be costly.

In summary, a supervisor guides the robot, an operator controls low-level actions, a teammate collaborates with the robot to perform the mission, a mechanic fixes the robot or its mistakes, and a bystander modulates the relationship between the robot and the environment. Other forms of human-machine relationship can be seen in the LOA of Table 1, such as shared control, supervisory control, mixed initiative or blended decision-making.

These different forms of human-AI relationships have a significant impact on function allocation. A static function allocation works in simple tasks where the exact division of labour between the AI and the human is clear. In more complex tasks, where the environment is naturally uncertain, it becomes more difficult to follow a static function allocation. The authority for function allocation becomes more important than ever and should be considered in the design of the AI. Who should authorise a reallocation? Should the function allocation agent itself be allowed to change? These questions will be addressed next.

Function Allocation Authority

The allocation agent could be a human or an AI. It might be delegated the authority to change the function allocation itself, or another human or AI might authorise the change. In the latter case, the allocation agent makes a recommendation, and the authoriser accepts or rejects it. Regardless of the nature of the allocation agent, the question remains: under what conditions should a machine authorise a change in function allocation? Inagaki [39] offered examples of situations that could justify the machine authorising such a decision and noted:

“The automation may be given the right to take an automatic action for maintaining system safety, even when an explicit directive may not have been given by an operator at that moment, providing the operators have a clear understanding about the circumstances and corresponding actions which will be taken by the automation.” [39, p. 17]

While safety is indeed an important factor, it seems more appropriate to generalise Inagaki’s perspective through a risk lens. I distil from the literature three factors that should influence this decision: time criticality, skills to judge and severity of consequences. In a situation where the time available to act is insufficient for a human to make the decision, either the machine needs to decide or the consequences of inaction need to be evaluated.

A function is time-critical when the difference between the time by which a decision must be made to be effective and the time required to make that decision shrinks towards zero. An allocation agent in an aircraft flying on autopilot might foresee a weather cell five minutes ahead. The allocation agent assesses that the situation is too complex for the autopilot to fly the aircraft and hands control over to a human. The five-minute window allows the human to overcome the effect of surprise, comprehend the situation and prepare for action. If the window were five seconds instead, the human would not have sufficient time to perform the function. Therefore, time criticality depends not only on the decision to be made but also on the capabilities of the agent that will make it, the context and the exact situation the agent is facing.
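The definition of time criticality reduces to a slack computation: the time by which the decision must be made, minus the time the deciding agent needs. The takeover times below are hypothetical; the point is that the same five-second window is critical for one agent and not for another.

```python
def slack(deadline_s: float, time_required_s: float) -> float:
    """Time left over: deadline for an effective decision minus time the agent needs."""
    return deadline_s - time_required_s


def is_time_critical(deadline_s: float, time_required_s: float,
                     margin_s: float = 0.0) -> bool:
    """Time-critical once the slack shrinks to margin_s or below."""
    return slack(deadline_s, time_required_s) <= margin_s


# Hypothetical times each agent needs to take over the function, in seconds.
HUMAN_TAKEOVER_S = 60.0
MACHINE_TAKEOVER_S = 0.5

for deadline in (300.0, 5.0):  # weather cell five minutes vs five seconds ahead
    print(deadline,
          is_time_critical(deadline, HUMAN_TAKEOVER_S),
          is_time_critical(deadline, MACHINE_TAKEOVER_S))
```

A positive `margin_s` would flag a function as time-critical before the slack actually reaches zero, which may be preferable when the agent’s takeover time is itself uncertain.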

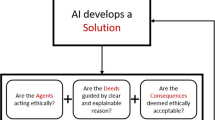

The second influencing factor, skills to judge, relates to when the human or machine is not sufficiently skilled to authorise; that is, the human or machine lacks the skill to judge whether a change in function allocation should take place or not. The third factor concerns whether the severity of consequences is high or not. Figure 1 summarises all possible combinations of these factors and the recommended authority for the decision.

The red areas in Figure 1 highlight the importance of pre-design analysis to ensure that either the human or the machine has the skills necessary to judge whether a change in function allocation is appropriate.

This scheme could be further adapted to the specific context in which adaptation occurs. For example, while a human could authorise the action when time is not critical, even if the machine is skilled, a skilled machine could equally authorise the action if the human is overloaded.
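The prose above can be condensed into a hypothetical decision rule. It is not a reproduction of Figure 1, whose cells are not given in the text; in particular, severity of consequences is omitted because the text does not state how it changes the recommendation, and the `"redesign"` and `"evaluate_inaction"` outcomes are labels chosen here for the red areas and for the time-critical case without a skilled machine.

```python
def recommended_authority(time_critical: bool, human_skilled: bool,
                          machine_skilled: bool, human_overloaded: bool = False) -> str:
    """Hypothetical rule distilled from the prose, not a reproduction of Figure 1."""
    if not human_skilled and not machine_skilled:
        return "redesign"  # neither can judge: the red areas needing pre-design analysis
    if time_critical:
        # Too little time for the human: the machine decides if it is skilled;
        # otherwise the consequences of inaction must be evaluated.
        return "machine" if machine_skilled else "evaluate_inaction"
    if human_skilled and not (human_overloaded and machine_skilled):
        return "human"  # time is not critical: the human may authorise
    return "machine"  # skilled machine, and the human is unskilled or overloaded


print(recommended_authority(False, True, True))
print(recommended_authority(False, True, True, human_overloaded=True))
```

Adding severity would mean deciding, for example, whether a skilled but overloaded human should still authorise when consequences are severe; that choice belongs to the pre-design analysis rather than to this sketch.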

Relationships of Equals: Why Is Teaming Hard for AI Agents?

The discussion so far has highlighted the function allocation problem and described the relationship between humans and machines purely in terms of function allocation. As the LOA of machines approach Level 12, the nature of the relationship between humans and machines changes. Function allocation focuses effort on well-engineered concepts, leaving out situations where machines could have intents different from those of humans and could even “supervise” the human. Take, for example, a robot teacher supervising a child learning multiplication, or a robot coach supervising a group of swimmers. In these cases, current LOA are insufficient to describe such future AI systems; hence, it would be restrictive to discuss the challenges in the human-AI relationship using LOA alone.

Scholtz’s roles [38], discussed above in the “Function Allocation Responsibility” section, could be useful here; functionally, they can be summarised as to guide, to control, to collaborate, to fix and to modulate. With smart (advanced AI-based) autonomous systems, we should assume that these functions are available to both humans and automation. In one situation, the human might supervise a robot performing low-level control, while in another, the human might perform the low-level control task while the robot supervises the human. A first-aid robot could take on the mechanic’s role, treating humans who are injured.

When the AI takes an equal role to a human, the level of control could take a multitude of forms, as shown in Table 2.

Clearly, before AI takes more control in the world, it should reach a level of maturity that makes it, at least, able to collaborate with humans. Human-AI collaboration is a non-trivial problem. Klien et al. [43] listed 10 different challenges that the technology faces before AI can reach the level of maturity required to collaborate with humans as equals. I list these challenges here for completeness (Table 3):

Scrutinising the 10 challenges above, one can easily observe the gap between AI technology as it stands today and what is required for AI to collaborate with humans as an equal. For example, the second challenge calls for AI to be able to model humans’ intentions and for humans to be able to model AI’s intentions. Much research is still under way to infer human intention even in simple human-robot interaction tasks, where problems remain unsolved, let alone in complex interaction tasks. The research community is still developing solutions for AI to be interpretable [2,3,4], transparent and explainable [1], so that humans can understand the intention of an AI and develop mutual predictability and shared understanding. It is tempting for some to claim that taking the human out of the loop will make collaboration easier, because different AIs could exchange intentions more efficiently in their own computer language than by attempting to learn intentions from, or communicate them to, a human. Even in this situation, challenges 1, 7, 8, 9 and 10 impose significant burdens on today’s most advanced AI systems. For example, negotiation alone is a computationally expensive, mostly intractable problem [46].

The discussion above demonstrates that an AI that could truly collaborate with humans as an equal teammate is technologically distant from today. Even if an AI existed that was sophisticated enough to claim to be more intelligent than a human, it would struggle significantly to work as a team member with other AIs because of the computational complexity of scaling the AI for team negotiation and self-synchronisation of actions. Most of the challenges above could have solutions once the context has been appropriately limited. The technological reality discussed above for AIs working in open-ended contexts does not preclude our having, in the near future, very advanced AI systems that truly outperform humans in specific real-world tasks. These advanced AI systems will require effective methodologies for their social integration into the human system and a level of trust that allows them autonomy in their specific operating context. These AI systems form the context for the discussion in the next section.

Human-AI Trust

Trust is the glue of social systems because it helps humans manage and reduce complexity in the world [47]. Factors that allow a trustor to trust a trustee include the trustee’s ability to carry out a given task, benevolence towards the trustor, and integrity, such as fairness and honesty [48]. Giffin [49] identified listeners’ perceptions of a speaker’s expertness, reliability, intentions, activeness and personal attractiveness, together with the majority opinion of the listener’s associates, as elements of trust. Trust thus blends a complex array of interaction factors, including attitude, beliefs, control, emotion, risk and power.

Behavioural psychologists see trust through a social-dilemma lens. Deutsch [50, 51], for example, viewed trust as choosing an ambiguous path that could lead to one of two possible outcomes, where the negative valency perceived for one outcome is greater than the positive valency perceived for the other. The trustee controls the outcome and decides which event will occur. If the trustor chooses this path, the trustor is said to trust the trustee; otherwise, the trustor distrusts the trustee. The literature on trust is immense, with definitions from many perspectives; two common definitions are selected here. Mayer et al. defined trust as the “willingness of a party to be vulnerable to the actions of another party based on the expectation that the other will perform a particular action important to the trustor, irrespective of the ability to monitor or control that other party” [48, p. 712]. Lee and See defined it as “the attitude that an agent will help achieve an individual’s goals in a situation characterised by uncertainty and vulnerability” [52, p. 51].

The common thread in these two definitions, and many other definitions in the literature, is that trust exposes a person to vulnerability [26]. Borrowing definitions of vulnerabilities from the risk assessment literature could help us better understand human-AI trust. A vulnerability could be broken down as follows [53]:

The above relationship explains the three dimensions affecting a vulnerability. The first is the capability of the trustee: the more capable a trustee is, the more serious a vulnerability could become, because the trustee could cause more damage if it defects. The second is the opportunity that the trustor gives to the trustee: by trusting the trustee, the trustor gives the trustee an opportunity to defect. The third is the intent of the trustee: a capable trustee that is given an opportunity (that is, trusted) will not defect if its intent is good.

This explanation fits AI well. The capability of an AI represents its skills and competency levels and, thus, the level of automation of that AI. The opportunity is the level of autonomy: the degrees of freedom within which the AI is permitted to execute its actions and the authority delegated to it. The intent of simple AI agents is the design intent, whereas the intent of AI agents that learn and adapt could change as they interact with the environment. Human-AI trust could therefore be mapped across the two dimensions of level of automation and level of autonomy, assuming the AI’s intent is aligned with the human’s.

Figure 2 summarises the human-AI relationship through a vulnerability lens. If the intent of the AI is not aligned with that of the human, the AI is likely to make decisions that disappoint the human and lead the human to suspect the AI’s intent, resulting in human mistrust regardless of the AI’s level of automation (capability) and level of autonomy (opportunity). If the intent of the AI is aligned with the human’s, automation and autonomy start to moderate the relationship. When the level of automation is lower than the level of autonomy, the AI is given opportunities that exceed its abilities; the human over-relies on the AI, which culminates in disappointment, distrust and an untrustworthy AI. When the level of automation is greater than the level of autonomy, the AI is more capable than the opportunities it is given. In this underutilisation case, the trustworthy AI performs its tasks successfully despite being underused, but the high cost of producing it is not justified by its use. When the level of automation matches the level of autonomy, the AI is trustworthy and the overall system is balanced.
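The four cases summarised in Figure 2 can be written as a small decision rule. The sketch below is an illustration under stated assumptions, not the paper’s method: the levels of automation and autonomy are treated as numbers on a common scale (for example, 0 to 1), and the tolerance `tol` within which the two levels count as “matched” is a hypothetical parameter.

```python
from enum import Enum


class Relationship(Enum):
    MISTRUST = "intent not aligned: mistrust"
    OVER_RELIANCE = "autonomy exceeds automation: over-reliance"
    UNDERUTILISATION = "automation exceeds autonomy: underutilisation"
    BALANCED = "automation matches autonomy: balanced"


def classify(intent_aligned: bool, automation: float, autonomy: float,
             tol: float = 0.0) -> Relationship:
    """Map the cases described for Figure 2 onto a label.

    automation: level of automation (the AI's capability)
    autonomy:   level of autonomy (the opportunity it is given)
    tol:        hypothetical tolerance for treating the two levels as matched
    """
    if not intent_aligned:
        # Misaligned intent leads to mistrust whatever the other two levels are.
        return Relationship.MISTRUST
    if abs(automation - autonomy) <= tol:
        return Relationship.BALANCED
    if automation < autonomy:
        # Opportunity exceeds ability: the human over-relies on the AI.
        return Relationship.OVER_RELIANCE
    # Ability exceeds opportunity: a trustworthy but underused AI.
    return Relationship.UNDERUTILISATION


print(classify(True, automation=0.4, autonomy=0.8).name)  # OVER_RELIANCE
```

Checking intent first mirrors the text: once intent is misaligned, capability and opportunity no longer moderate the outcome.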

The risk in the human-AI relationship could be traced to who is doing what in each of the four components (sense-making, decision-making, execution ability and execution authority), resulting in a number of situations as shown in Table 4.
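If each of the four components is assigned wholly to the human or wholly to the AI, there are at most 2^4 = 16 combinations. The sketch below simply enumerates them as a starting point for this kind of analysis; it does not reproduce the labels or risk assessments in Table 4, which may cover only a subset of these combinations.

```python
from itertools import product

# The four components named in the text; each is given wholly to one agent.
COMPONENTS = ("sense-making", "decision-making",
              "execution ability", "execution authority")
AGENTS = ("human", "AI")


def full_block_allocations():
    """Return every assignment of the four components to the human or the AI."""
    return [dict(zip(COMPONENTS, owners))
            for owners in product(AGENTS, repeat=len(COMPONENTS))]


allocations = full_block_allocations()
print(len(allocations))  # 16
# Example query: the AI senses, decides and acts, but the human holds authority.
print([a for a in allocations
       if a["execution authority"] == "human"
       and all(a[c] == "AI" for c in COMPONENTS[:3])])
```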

Table 4 assumes that each full block is assigned to either the AI or the human; for example, the overall sense-making functional block is the responsibility of the human alone or of the AI alone. In practice, the human-AI relationship is normally designed at a finer level of granularity. Since the interaction of a human with an AI invokes multiple functions, Lee and See [52] referred to this fine level of granularity as function specificity, where the human interacts with specific functions. One function may be trustworthy while another is not, and this may cause the human to distrust the overall system. This naturally takes us back to function allocation and the need for smart allocation logic, such as an e-Cookie, to manage the relationship and ensure trustworthy human-AI interaction.

A central point in the discussion above is the ability to assess the level of autonomy. The literature relies on indirect indicators to assess it, and performance has been a central focus in designing these indicators.

Neglect tolerance [54] represents the reduction in an autonomous system’s performance when it is neglected by its human supervisor. A fully autonomous system will continue to function at the same level, while a semi-autonomous system will suffer severe drops in performance. In effect, neglect tolerance measures how skilled a system is.

Interaction efficiency [54] complements the above metric. A fully autonomous system could successfully undertake a task without human intervention; however, its performance may improve once a human interacts with it. In effect, interaction efficiency measures the level of competency a skilled system has.
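The two indicators are described above in words only. One hypothetical way to express them as bounded percentages, assuming a scalar performance measure can be recorded under each condition, is as ratios of observed performance. These formulas are illustrative assumptions, not the definitions used in [54].

```python
def neglect_tolerance(perf_supervised: float, perf_neglected: float) -> float:
    """Hypothetical NT score: % of supervised performance kept while neglected.

    100 means no drop (the fully autonomous case in the text); values near
    0 mean a severe drop (the semi-autonomous case).
    """
    if perf_supervised <= 0:
        raise ValueError("supervised performance must be positive")
    ratio = max(perf_neglected, 0.0) / perf_supervised
    return 100.0 * min(ratio, 1.0)


def interaction_efficiency(perf_alone: float, perf_with_human: float) -> float:
    """Hypothetical IE score: % of human-assisted performance reached alone.

    100 means interacting with a human adds nothing; lower values mean the
    system still gains noticeably from human interaction.
    """
    if perf_with_human <= 0:
        raise ValueError("human-assisted performance must be positive")
    ratio = max(perf_alone, 0.0) / perf_with_human
    return 100.0 * min(ratio, 1.0)
```

Clipping the ratios to [0, 1] keeps both scores within 0 to 100%, in line with the bounded metrics the text calls for later.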

Neglect benevolence [55] represents the time an autonomous system needs to stabilise between two successive instructions. This time is akin to the cost of task switching: it is the time the system needs after a switch before it is able to perform each task at its best.
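Read as a stabilisation time, this indicator resembles the settling time used in control engineering. The sketch below assumes a performance trace is sampled from the moment a new instruction arrives (for NB_ik, after switching from function i to function k) and returns the time until performance enters, and then stays within, a relative band around its final value. The 5% band is a hypothetical default, and converting this time into the bounded percentage mentioned later would need a normalisation that the text does not specify.

```python
from typing import Sequence


def settling_time(times: Sequence[float], performance: Sequence[float],
                  band: float = 0.05) -> float:
    """Time from the first sample (the instruction switch) until performance
    enters, and then stays within, +/- band of its final value.

    band is a relative tolerance (hypothetical default of 5%).
    """
    if len(times) != len(performance) or len(times) == 0:
        raise ValueError("need equal-length, non-empty sequences")
    final = performance[-1]
    tol = band * abs(final) if final != 0 else band
    settle_idx = len(performance) - 1
    # Walk backwards while samples remain inside the band around the final value.
    for i in range(len(performance) - 1, -1, -1):
        if abs(performance[i] - final) > tol:
            break
        settle_idx = i
    return times[settle_idx] - times[0]
```

Scanning backwards from the end ensures that a trace which briefly touches the band and then leaves it again is not counted as settled.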

The primary problem with the indicators above is that they are post-development; that is, they were proposed in the human-robot interaction literature for systems that have already been designed and built. Therefore, they cannot guide the design of a system efficiently. The discussion in this paper suggests another set of indicators, based on functional analysis, that could guide system design. By decomposing the mission into functions and weighting each function according to its criticality and the complexity of the skills required to perform it, the level of autonomy could be measured as the weighted sum of the functions implemented, expressed as a percentage of the weighted sum of all functions required for the mission.
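The level-of-autonomy measure described above can be sketched as follows. It reads the measure as 100 × (sum of W_i over the M implemented functions) / (sum of W_i over all N required functions). That reading, and the example function names and weights, are assumptions for illustration. How the weights are derived from criticality and skill complexity is left open here, as it is in the text.

```python
from typing import Iterable, Mapping


def level_of_autonomy(weights: Mapping[str, float],
                      implemented: Iterable[str]) -> float:
    """Weighted share (%) of the mission's required functions implemented.

    weights:     W_i for each of the N functions the mission requires
    implemented: names of the M functions the system actually implements
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    implemented = set(implemented)
    unknown = implemented.difference(weights)
    if unknown:
        raise KeyError(f"not required by the mission: {sorted(unknown)}")
    return 100.0 * sum(weights[f] for f in implemented) / total


# Hypothetical mission: weights reflect criticality and skill complexity.
mission = {"navigate": 3.0, "avoid_obstacles": 5.0, "plan_route": 2.0}
print(level_of_autonomy(mission, ["navigate", "avoid_obstacles"]))  # 80.0
```

Because the implemented functions are a subset of the required ones and the weights are non-negative, the result always lies between 0 and 100%.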

The post-development indicators could then be used as modifiers when testing each function. For example, neglect tolerance and interaction efficiency could be measured at the level of individual functions. Similarly, neglect benevolence could be evaluated between each pair of functions that a mission may require to operate serially. From this discussion, we could formalise the metrics as follows:

where N is the total number of functions required to complete a mission effectively and efficiently, M is the number of functions that have been implemented, W_i is the weight of function i, reflecting its criticality and skill complexity, NT_i is the neglect tolerance of function i, IE_i is the interaction efficiency of function i, and NB_ik is the neglect benevolence of function i when followed by function k.

All metrics above generate values between 0 and 100% and are thus bounded. Their range could be mapped to categories such as low, medium and high autonomy level, based on the specifics of the mission and the design requirements.
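A minimal sketch of how per-function scores such as NT_i or IE_i might be aggregated and then mapped to categories follows. It assumes the aggregate is a W_i-weighted mean over all N required functions, with unimplemented functions contributing zero so that the result stays within 0 to 100%. The equal-thirds cut-offs for low, medium and high are hypothetical placeholders for mission-specific thresholds.

```python
from typing import Mapping


def weighted_metric(weights: Mapping[str, float],
                    scores: Mapping[str, float]) -> float:
    """Weighted mean (0-100) of per-function scores such as NT_i or IE_i.

    Weights cover all N required functions; a function with no score (not
    implemented) contributes 0, so the result stays within [0, 100].
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    for name, score in scores.items():
        if name not in weights:
            raise KeyError(name)
        if not 0.0 <= score <= 100.0:
            raise ValueError(f"score for {name} outside [0, 100]")
    return sum(w * scores.get(name, 0.0) for name, w in weights.items()) / total


def autonomy_category(value: float, low_max: float = 100 / 3,
                      medium_max: float = 200 / 3) -> str:
    """Map a bounded 0-100 metric to low/medium/high (hypothetical cut-offs)."""
    if not 0.0 <= value <= 100.0:
        raise ValueError("metric must lie in [0, 100]")
    if value <= low_max:
        return "low"
    if value <= medium_max:
        return "medium"
    return "high"


weights = {"navigate": 2.0, "avoid_obstacles": 3.0, "plan_route": 5.0}
nt_scores = {"navigate": 100.0, "avoid_obstacles": 50.0}  # plan_route not implemented
nt = weighted_metric(weights, nt_scores)
print(nt, autonomy_category(nt))  # 35.0 medium
```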

Many Questions, Few Answers

The paper has drawn on a large transdisciplinary body of literature to demonstrate that there has been a wealth of research in which scientists, technologists and engineers have thought about the relationship between humans and AI. While methodologies exist, new fundamental and challenging questions continue to emerge. The complexity of AI is increasing: what used to work only in structured, safety-critical environments, such as air traffic control, now needs to be used in unstructured environments, in the hands of the public, and in situations where it is not necessarily possible to think through responses in advance. This calls for new test and evaluation methods [56] and leaves us with more questions than the current state of science can readily answer. I will focus on a few.

Should humans have control over AI? AI operates in a social context: whatever role it plays as a technology, it will be serving a role in society. Some aspects of this role are better performed by AI, and human intervention in them could be undesirable, while other aspects need human approval. For example, a self-driving car should manage itself when an obstacle suddenly appears. In this situation, any delegation to a human could be fatal, since the human does not have the cognitive capacity to respond in time. The destination of the self-driving car, by contrast, is a human choice, which the human needs to approve. The answer, therefore, is not a straightforward yes or no; it is a matter of when and how. Function allocation gives us the scientific foundations to search for an answer. As AI becomes more integrated into society, we need to simultaneously dig deep to explore the functions and design the required function allocation logic.
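The self-driving example can be turned into a toy allocation rule to show the kind of “when and how” logic the text calls for. Everything here is a hypothetical illustration: the `Function` record, the 1.5 s human response time and the three outcomes are assumptions, not a validated function-allocation method.

```python
from dataclasses import dataclass

# Hypothetical placeholder for how quickly a human could take over (seconds).
HUMAN_RESPONSE_S = 1.5


@dataclass(frozen=True)
class Function:
    name: str
    required_response_s: float  # how quickly a response is needed
    human_choice: bool          # True if the decision belongs to the human


def allocate(f: Function, human_response_s: float = HUMAN_RESPONSE_S) -> str:
    """Toy allocation rule for the self-driving example (an assumption)."""
    if f.required_response_s < human_response_s:
        # Delegating to a human who cannot respond in time could be fatal.
        return "AI"
    if f.human_choice:
        # Choices such as the destination need the human's approval.
        return "human"
    # Otherwise the AI acts while the human stays on the loop.
    return "AI, human on the loop"


for f in (Function("avoid sudden obstacle", 0.3, False),
          Function("choose destination", 60.0, True),
          Function("adjust cabin temperature", 30.0, False)):
    print(f.name, "->", allocate(f))
```

The rule checks time-criticality before ownership. As a result, a function that must be handled faster than a human can respond goes to the AI even when it would otherwise be a human choice. Whether that ordering is acceptable is exactly the kind of design decision the allocation logic has to make explicit.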

What are the risks associated with becoming over-reliant on AI? Under-reliance represents inefficiency, while over-reliance represents risk. This question highlights the importance of understanding the true trustworthiness and capability of an AI, and of continuously assessing its behaviour to calibrate levels of trustworthiness. Through a risk lens, the answer would also lie in the frequency of over-reliance, the magnitude of its consequences and the trustworthiness of the AI. Over-reliance will lead to negative consequences, and the more negative consequences we see, the more likely we are to distrust an AI and remove it from service. Can we design triggers for intervention? Should humans intervene in the functioning of the AI? If so, when? A good starting point for answering these questions is the literature on function allocation. Different forms of human-AI relationships call for different answers to these questions. If the AI is skilled in a task in which it supervises a human, it would be inefficient for the human to intervene in the AI’s task. In a supervisory control task in which the human supervises the AI, the human is clearly authorised, and is present, to intervene with the AI when needed.

Recall that e-Cookies are AI agents that act as relationship managers between humans and autonomous systems. An e-Cookie aims to encode triggers for intervention in the allocation agent and needs to be designed as a smart watchdog that ensures the AI is performing correctly. However, this is not a simple expectation. There are fundamental challenges in the design of e-Cookies that need rigorous science to address them, for example, how to assess the safety of an AI that continues to learn and evolve new behaviours that did not exist at the design stage.
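As a deliberately simple illustration of what an encoded trigger for intervention might look like, the sketch below flags the need for human intervention when the rolling mean of a monitored performance signal falls below a threshold. The class name, the threshold and the window size are hypothetical. Because both the rule and the monitored signal are fixed at design time, the sketch does not address the open problem noted above of an AI whose behaviour keeps evolving.

```python
from collections import deque


class InterventionTrigger:
    """Hypothetical watchdog rule: raise a flag for human intervention when the
    rolling mean of a monitored performance signal drops below a threshold."""

    def __init__(self, threshold: float, window: int = 5):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.threshold = threshold
        self.recent = deque(maxlen=window)

    def observe(self, performance: float) -> bool:
        """Record one observation; return True if a human should intervene."""
        self.recent.append(performance)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough evidence yet
        return sum(self.recent) / len(self.recent) < self.threshold


trigger = InterventionTrigger(threshold=0.7, window=3)
print([trigger.observe(p) for p in [0.9, 0.9, 0.9, 0.5, 0.4, 0.3]])
# [False, False, False, False, True, True]
```

Averaging over a window trades responsiveness for robustness: a single poor observation does not trigger intervention, but a sustained decline does.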

Conclusion

In this article, I have discussed the human-AI relationship through scientific and technological lenses. The function allocation literature has shown that the human-AI relationship is not only about humans using AI or interacting with one thing called the AI, but also about different forms of micro-relationships that involve functional interactions. In a semi-autonomous car, the human interacts with the displays inside the car, the steering wheel when required, the navigation system and so on. Similarly, in fully autonomous cars, the human will interact with the car using voice, receive audio and visual information, and possibly receive haptic feedback. Each of these interactions performs a different function and engages services offered to the human, and the human’s trust in the car will be influenced by all of them.

I have argued that the human-AI relationship should not be studied from a mere use perspective alone, as this could lead to severe negative consequences. I discussed the allocation agent: an AI that dynamically reallocates functions between the human and the AI. The allocation agent is itself an AI and could cause the human to distrust or mistrust the AI in the car if it is not skilled enough to reallocate functions effectively and efficiently. It is important to design the allocation agent and to clearly articulate the level of delegation to that agent and its authority to act in different contexts. The AI of the future could turn out to be AIs within an AI, making it difficult to trace the root causes of distrust or mistrust between the human and the machine.

I conclude that any social integration of AI into the human social system would necessitate a form of relationship on one level or another between the human and the AI in society, meaning that humans will always actively participate in some decision-making loops that will influence the operations of AI. Even the most autonomous and clever AI will exist within a social system in which it needs to interact with humans and other AI systems. An AI must become socially integrated.

References

Cambria E, Poria S, Hazarika D, Kwok K. SenticNet 5: discovering conceptual primitives for sentiment analysis by means of context embeddings. Proceedings of AAAI; 2018.

Ma Y, Peng H, Khan T, Cambria E, Hussain A. Sentic LSTM: a hybrid network for targeted aspect-based sentiment analysis. Cogn Comput 2018;10(4):639–50.

Sun X, Peng X, Ding S. Emotional human-machine conversation generation based on long short-term memory. Cogn Comput 2018;10(3):389–97.

Ding G, Chen M, Zhao S, Chen H, Han J, Liu Q. Neural image caption generation with weighted training and reference. Cogn Comput 2018:1–15.

Susman RL. Who made the Oldowan tools? Fossil evidence for tool behavior in Plio-Pleistocene hominids. J Anthropol Res 1991;47(2):129–51.

Flegg G. Numbers through the ages. Macmillan International Higher Education; 1989.

Randell B. The origins of digital computers: selected papers. Berlin: Springer; 2013.

Controzzi M, Cipriani C, Carrozza MC. Design of artificial hands: a review. The Human Hand as an Inspiration for Robot Hand Development. Springer; 2014. p. 219–246.

Goh S, Abbass H, Tan K, Al-Mamun A, Thakor N, Bezerianos A, Li J. Spatio-spectral representation learning for electroencephalographic gait pattern classification. IEEE Trans Neural Syst Rehabil Eng 2018;26(9):1858–67.

Turing A. Computing machinery and intelligence. Mind 1950;59(236):433–60.

Warwick K, Shah H. Passing the Turing test does not mean the end of humanity. Cogn Comput 2016;8(3):409–19.

Smallegange JAP, Bastiaansen HJM, Venema AP, Bronkhorst AW. Big data and artificial intelligence for decision making: Dutch position paper. Technical Report STO-MP-IST-160. NATO; 2018.

Dragoni N, Giallorenzo S, Lafuente AL, Mazzara M, Montesi F, Mustafin R, Safina L. Microservices: yesterday, today, and tomorrow. Present and Ulterior Software Engineering. Springer; 2017. p. 195–216.

Abbass HA. An AI professor explains: three concerns about granting citizenship to robot Sophia. The Conversation; 2017. Retrieved from https://theconversation.com/an-ai-professor-explains-three-concerns-about-granting-citizenship-to-robot-sophia-86479.

Bringsjord S, Schimanski B. What is artificial intelligence? Psychometric AI as an answer. IJCAI, Citeseer; 2003. p. 887–893.

Fetzer JH. What is artificial intelligence? Artificial Intelligence: Its Scope and Limits. Springer; 1990. p. 3–27.

Rouse W. Design for success: a human-centered approach to designing successful products and systems. New York: Wiley; 1991.

Babcock ML. Reorganization by adaptive automation. PhD thesis, University of Illinois at Urbana-Champaign; 1960.

Berry PC. Psychological study of decision making. Technical Report NAVTRADEVCEN 797-1. Arlington, VA: Psychological Research Associates; 1961.

Pope AT, Bogart EH, Bartolome DS. Biocybernetic system evaluates indices of operator engagement in automated task. Biol Psychol 1995;40(1-2):187–95.

Rouse W. Twenty years of adaptive aiding: origins of the concept and lessons learned. Human performance in automated systems: current research and trends; 1994. p. 28–32.

Fitts PM, Viteles M, Barr N, Brimhall D, Finch G, Gardner E, Grether W, Kellum W, Stevens S. Human engineering for an effective air-navigation and traffic-control system and appendixes 1 thru 3. Technical report, Ohio State University Research Foundation, Columbus; 1951.

Licklider JC. Man-computer symbiosis. IRE Transactions on Human Factors in Electronics 1960;(1):4–11.

Sheridan T. Human supervisory control. Handbook of human factors and ergonomics. Hoboken, New Jersey: Wiley; 2012. p. 990–1015.

Schmorrow D, Stanney KM, Wilson G, Young P. Augmented cognition in human-system interaction. Handbook of Human Factors and Ergonomics 2006;3:1364–83.

Abbass H, Petraki E, Merrick K, Harvey J, Barlow M. Trusted autonomy and cognitive cyber symbiosis: open challenges. Cogn Comput 2016;8(3):385–408.

Schmorrow D, Kruse AA. DARPA’s augmented cognition program: tomorrow’s human computer interaction from vision to reality: building cognitively aware computational systems. Proceedings of the 2002 IEEE 7th Conference on Human Factors and Power Plants. IEEE; 2002. p. 7–7.

Demos JN. Getting started with neurofeedback. WW Norton & Company; 2005.

Berger H. Electroencephalogram in humans. Archiv für Psychiatrie und Nervenkrankheiten 1929;87:527–70.

Adrian ED, Matthews BH. The Berger rhythm: potential changes from the occipital lobes in man. Brain 1934;57(4):355–85.

Hill JM. Biocybernetics project. Technical report, Computer Corp of America, Cambridge, MA; 1973.

Vidal JJ. Toward direct brain-computer communication. Annu Rev Biophys Bioeng 1973;2(1):157–80.

Sheridan T. Telerobotics, automation, and human supervisory control. Cambridge: MIT Press; 1992.

Sheridan T, Verplank W. Human and computer control of undersea teleoperators. Technical report, Massachusetts Inst of Tech, Man-Machine Systems Lab, Cambridge; 1978.

Endsley MR. Level of automation effects on performance, situation awareness and workload in a dynamic control task. Ergonomics 1999;42(3):462–92.

Endsley MR. From here to autonomy: lessons learned from human–automation research. Human Factors 2017;59(1):5–27.

Endsley MR. Automation and situation awareness. Automation and human performance. Routledge; 2018. p. 183–202.

Scholtz J. Theory and evaluation of human robot interactions. Proceedings of the 36th Annual Hawaii International Conference on System Sciences, 2003. IEEE; 2003. p. 10.

Inagaki T. Adaptive automation: design of authority for system safety. IFAC Proceedings 2003;36(14):13–22.

Taylor RH, Menciassi A, Fichtinger G, Fiorini P, Dario P. Medical robotics and computer-integrated surgery. In: Siciliano B, Khatib O, editors. Springer handbook of robotics. Cham, Switzerland: Springer; 2016. p. 1657–1684.

Abbass H, Tang J, Amin R, Ellejmi M, Kirby S. The computational air traffic control brain: computational red teaming and big data for real-time seamless brain-traffic integration. J Air Traffic Control 2014;56(2):10–7.

Hainsworth DW. Teleoperation user interfaces for mining robotics. Auton Robot 2001;11(1):19–28.

Klein G, Woods DD, Bradshaw JM, Hoffman RR, Feltovich PJ. Ten challenges for making automation a team player in joint human-agent activity. IEEE Intell Syst 2004;19(6):91–5.

Kovatchev B, Cheng P, Anderson SM, Pinsker JE, Boscari F, Buckingham BA, Doyle FJ III, Hood KK, Brown SA, Breton MD, et al. Feasibility of long-term closed-loop control: a multicenter 6-month trial of 24/7 automated insulin delivery. Diabetes Technology & Therapeutics 2017;19(1):18–24.

Reddy R. Robotics and intelligent systems in support of society. IEEE Intell Syst 2006;21(3):24–31.

Dunne PE, Wooldridge M, Laurence M. The complexity of contract negotiation. Artif Intell 2005;164(1-2):23–46.

Luhmann N. Trust and power. New York: John Wiley & Sons; 2018.

Mayer RC, Davis JH, Schoorman FD. An integrative model of organizational trust. Academy of Management Review 1995;20(3):709–34.

Giffin K. The contribution of studies of source credibility to a theory of interpersonal trust in the communication process. Psychol Bull 1967;68(2):104.

Deutsch M. Cooperation and trust: some theoretical notes; 1962.

Deutsch M. The resolution of conflict: constructive and destructive processes. New Haven: Yale University Press; 1977.

Lee JD, See KA. Trust in automation: designing for appropriate reliance. Human factors 2004;46(1):50–80.

Abbass H. Computational red teaming. Berlin: Springer; 2015.

Olsen DR, Goodrich MA. Metrics for evaluating human-robot interactions. Proceedings of PERMIS. Volume 2003; 2003. p. 4.

Nagavalli S, Luo L, Chakraborty N, Sycara K. Neglect benevolence in human control of robotic swarms. 2014 IEEE International Conference on Robotics and automation (ICRA), IEEE; 2014. p. 6047–6053.

Joiner KF, Efatmaneshnik M, Tutty M. Test and evaluation evolutions. Evolving toolbox for complex project management, number 23. Taylor and Francis Group LLC; 2018.

Funding

This study was funded by the Australian Research Council (grant number DP160102037).

Ethics declarations

Conflict of Interest

The author declares that he has no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Abbass, H.A. Social Integration of Artificial Intelligence: Functions, Automation Allocation Logic and Human-Autonomy Trust. Cogn Comput 11, 159–171 (2019). https://doi.org/10.1007/s12559-018-9619-0
