Abstract

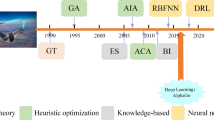

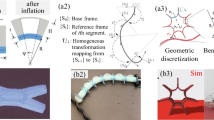

To improve the performance of a robotic arm, this study established a velocity planning model for the arm, developed with artificial intelligence in a simulation system. The model not only considers the dynamic factors of the robotic arm but can also accommodate customized conditions such as machining accuracy and rotation angle. The study is divided into three parts. First, the simulation environment was constructed around the ABB IRB140 six-axis multipurpose industrial robot. To stay consistent with real-world conditions, the Vortex physics engine was applied to the simulation to supply varying motion parameters; friction, kinematics, and inertia were considered. Second, artificial intelligence was introduced to the robotic arm by connecting V-REP with Python, and the proposed model was developed in the Python environment using the deep deterministic policy gradient (DDPG) algorithm. Finally, the design of an appropriate reward function, which governs the final results, was presented. Compared with traditional velocity planning, the proposed method reduces the motion error by 0.05 degrees when the dynamic factors of the robotic arm are taken into account. In addition, the proposed velocity planning strategy can be obtained after a training time of one hour, which meets the industry's requirements for time cost.
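The abstract names DDPG and a task-specific reward but gives no formulas, so the following is only a minimal sketch of how those pieces might fit together. The two update rules (the bootstrapped critic target and the soft target-network update) follow the DDPG formulation of Lillicrap et al.; the reward shape, the function names, the 0.1 degree tolerance, and the step penalty are illustrative assumptions, not the authors' design.

```python
from typing import List


def angle_error_reward(target_deg: float, reached_deg: float,
                       tolerance_deg: float = 0.1,
                       step_penalty: float = 0.01) -> float:
    """Hypothetical reward: penalize joint-angle error and elapsed steps,
    with a bonus when the error is within an (assumed) accuracy tolerance."""
    error = abs(target_deg - reached_deg)
    bonus = 1.0 if error <= tolerance_deg else 0.0
    return bonus - error - step_penalty


def critic_target(reward: float, next_q: float, done: bool,
                  gamma: float = 0.99) -> float:
    """DDPG critic target y = r + gamma * Q'(s', mu'(s')).
    next_q is the target critic's value at the target actor's action;
    no bootstrapping is applied on terminal transitions."""
    return reward if done else reward + gamma * next_q


def soft_update(target: List[float], online: List[float],
                tau: float = 0.001) -> List[float]:
    """DDPG soft update: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online, target)]


if __name__ == "__main__":
    r = angle_error_reward(target_deg=90.0, reached_deg=89.98)
    y = critic_target(r, next_q=2.0, done=False)
    new_target = soft_update([0.0, 1.0], [1.0, 1.0], tau=0.5)
    print(r, y, new_target)
```

In a full agent, the weights would be neural network parameters rather than plain lists, and the reward would be computed from joint states read back from the simulator at every control step. The scalar version above only shows where the reward enters the learning update.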

Acknowledgements

We would like to thank Delta corp. for providing valuable discussions.

Funding

The authors have no relevant financial or non-financial interests to disclose. All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript. The authors have no financial or proprietary interests in any material discussed in this article.

Ethics declarations

Competing interest

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Huang, HH., Cheng, CK., Chen, YH. et al. The Robotic Arm Velocity Planning Based on Reinforcement Learning. Int. J. Precis. Eng. Manuf. 24, 1707–1721 (2023). https://doi.org/10.1007/s12541-023-00880-x

DOI: https://doi.org/10.1007/s12541-023-00880-x