Abstract

Since its introduction in the early 2000s, robust optimization with budget uncertainty has received a lot of attention. This is due to the intuitive construction of the uncertainty sets and the existence of a compact robust reformulation for (mixed-integer) linear programs. However, despite its compactness, the reformulation performs poorly when solving robust integer problems due to its weak linear relaxation. To overcome the problems arising from the weak formulation, we propose a bilinear formulation for robust binary programming, which is as strong as theoretically possible. From this bilinear formulation, we derive strong linear formulations as well as structural properties for robust binary optimization problems, which we use within a tailored branch and bound algorithm. We test our algorithm’s performance together with other approaches from the literature on a diverse set of “robustified” real-world instances from the MIPLIB 2017. Our computational study, which is the first to compare many sophisticated approaches on a broad set of instances, shows that our algorithm outperforms existing approaches by far. Furthermore, we show that the fundamental structural properties proven in this paper can be used to substantially improve the approaches from the literature. This highlights the relevance of our findings, not only for the tested algorithms, but also for future research on robust optimization. To encourage the use of our algorithms for solving robust optimization problems and our instances for benchmarking, we make all materials freely available online.

1 Introduction

Dealing with uncertainties is inevitable when considering real-world optimization problems. A classical approach to include uncertainties into the optimization process is robust optimization, where different realizations of the uncertain parameters are modeled via an uncertainty set. A robust optimal solution remains feasible for all considered scenarios in the uncertainty set and minimizes the worst-case cost occurring under these scenarios. The concept was first introduced by Soyster [40] in the early 1970s, was later considered for combinatorial optimization problems and discrete uncertainty sets by Kouvelis and Yu [30] in the 1990s, and was analyzed in detail by Ben-Tal and Nemirovski [10,11,12] and Bertsimas and Sim [15, 16] at the beginning of this century. Overviews of robust optimization are given in [9, 13, 21]. The approach by Bertsimas and Sim has proven to be the most popular, with the introductory paper [16] being the most cited document on robust optimization in the literature databases Scopus and Web of Science (searching for “robust optimization” in title, keywords, and abstract). The approach’s popularity is primarily based on the intuitive definition of the uncertainty set and the existence of a compact reformulation for the robust counterpart. However, instances from practice can still pose a considerable challenge for modern MILP solvers, even if the non-robust problem is relatively easy to solve, as observed, e.g., by Kuhnke et al. [31]. In this paper, we address this challenge by studying the structure of robust binary problems and proposing a new branch and bound algorithm. Throughout, we restrict ourselves to problems with uncertain objective functions. However, most of our results carry over to general robust optimization.

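We start by formally defining a standard, so-called nominal, binary program

\[ \text {NOM}:\quad \min \left\{ c^{T}x\,\middle|\, Ax\le b,\; x\in \left\{ 0,1\right\} ^{n}\right\} \]
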
with an objective vector \(c\in {\mathbb {R}}^{n}\), a constraint matrix \(A\in {\mathbb {R}}^{m\times n}\), and a right-hand side \(b\in {\mathbb {R}}^{m}\). Instead of assuming the objective coefficients \(c_{i}\) to be certain, we consider uncertain coefficients \(c'_{i}\) that lie in an interval \(c'_{i}\in \left[ c_{i},c_{i}+{\hat{c}}_{i}\right] \) and can deviate from their nominal value \(c_{i}\) by up to the deviation \({\hat{c}}_{i}\). In the worst case, all coefficients \(c_{i}'\) are equal to their maximum value \(c_{i}+{\hat{c}}_{i}\), as this maximizes the optimal solution value. However, for practical problems it is in general very unlikely that all coefficients deviate to their maximum value. Bertsimas and Sim [16] propose a robust counterpart to \(\text {NOM}\) with an adjustable level of conservatism by defining a budget \(\varGamma \in \left[ 0,n\right] \) on the set of considered uncertain scenarios. For this robust counterpart, we do not consider all scenarios, but only those in which at most \(\left\lfloor \varGamma \right\rfloor \) coefficients \(c_{i}'\) deviate to their maximum \(c_{i}+{\hat{c}}_{i}\) and one further coefficient \(c'_{t}\) deviates by the fraction \(\left( \varGamma -\left\lfloor \varGamma \right\rfloor \right) \) of its deviation \({\hat{c}}_{t}\). The robust counterpart can be written as

\[ \min \; c^{T}x+\max _{S\subseteq \left[ n\right] ,\,\left| S\right| \le \left\lfloor \varGamma \right\rfloor ,\,t\in \left[ n\right] \setminus S}\left\{ \sum _{i\in S}{\hat{c}}_{i}x_{i}+\left( \varGamma -\left\lfloor \varGamma \right\rfloor \right) {\hat{c}}_{t}x_{t}\right\} \quad \text {s.t.}\; Ax\le b,\; x\in \left\{ 0,1\right\} ^{n} \]

with \(\left[ n\right] :=\left\{ 1,\ldots ,n\right\} \). The above problem is non-linear and thus impractical, but can be reformulated by dualizing the inner maximization problem, as shown by Bertsimas and Sim [16]. This results in the compact robust problem

with

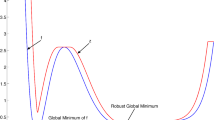
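The step from the non-linear counterpart to the compact problem rests on LP duality: for a fixed x, the inner maximization is a continuous knapsack problem whose dual is \(\min \left\{ \varGamma z+\sum _{i}p_{i}\,\middle|\, p_{i}+z\ge {\hat{c}}_{i}x_{i},\; p,z\ge 0\right\} \). The Python sketch below, with helper names of our own choosing (not taken from the paper's code), evaluates both sides for given data so that one can check they agree. It assumes \(0\le \varGamma \le n\) and uses `fractions.Fraction` so that comparisons are exact.

```python
import math
from fractions import Fraction


def worst_case_deviation(c_hat, x, gamma):
    """Inner maximization for a fixed x (assumes 0 <= gamma <= len(x)):
    the floor(gamma) largest products c_hat[i] * x[i] deviate fully and the
    next largest one deviates by the fraction gamma - floor(gamma)."""
    d = sorted((ch * xi for ch, xi in zip(c_hat, x)), reverse=True)
    k = math.floor(gamma)
    value = sum(d[:k], Fraction(0))
    if k < len(d):
        value += (gamma - k) * d[k]
    return value


def dualized_deviation(c_hat, x, gamma):
    """min { gamma*z + sum(p) : p_i + z >= c_hat[i]*x[i], p >= 0, z >= 0 }.

    For fixed z the smallest feasible p_i is max(c_hat[i]*x[i] - z, 0). The
    remaining function of z is convex and piecewise linear, so it suffices
    to evaluate it at z = 0 and at its breakpoints c_hat[i]*x[i]."""
    d = [ch * xi for ch, xi in zip(c_hat, x)]
    return min(
        gamma * z + sum((max(di - z, 0) for di in d), Fraction(0))
        for z in [Fraction(0)] + d
    )


# c_hat = (3, 1, 2), x = (1, 1, 1), gamma = 3/2
print(worst_case_deviation([3, 1, 2], [1, 1, 1], Fraction(3, 2)))  # 4
print(dualized_deviation([3, 1, 2], [1, 1, 1], Fraction(3, 2)))    # 4
```

Restricting z to the breakpoints in `dualized_deviation` mirrors the observation, discussed below, that an optimal z can be chosen among the deviations.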

Unfortunately, solving \(\text {ROB}\) as an MILP may require much more time than solving the nominal problem \(\text {NOM}\). For example, we observed in our computational study that Gurobi [26] already struggles to solve robust knapsack instances with only 100 items within a time limit of an hour (see Sect. 8.5). This is because the integrality gap of the formulation \({\mathscr {P}}^{\text {ROB}}\) may be arbitrarily large, even if the integrality gap of the corresponding nominal problem is zero (see Sect. 2). This is problematic, since a large integrality gap implies that optimal solutions to the linear relaxation are most likely far from being integer feasible, i.e., many variables that should be integer take fractional values. However, primal heuristics in MILP solvers, like the feasibility pump [19], perform better for solutions that are nearly integer feasible. Furthermore, even if we find an optimal solution, we probably have to spend much more time proving that it is indeed optimal.

There exist several approaches and studies in the literature on how to solve \(\text {ROB}\) in practice. Bertsimas et al. [14] as well as Fischetti and Monaci [20] evaluate whether it is more efficient to solve \(\text {ROB}\) over the compact reformulation \({\mathscr {P}}^{\text {ROB}}\) or using a separation approach over an alternative formulation with exponentially many inequalities, each of which corresponds to a scenario from the uncertainty set. Although the alternative formulation is exponentially large, it is theoretically exactly as strong (or as weak) as the compact reformulation. Atamtürk [5] addresses the issue of the weak formulation \({\mathscr {P}}^{\text {ROB}}\) and proposes four different strong, although considerably larger, formulations for solving \(\text {ROB}\). Atamtürk even proves that the strongest of the four formulations describes the convex hull of the set of robust solutions if the linear relaxation

\[ \left\{ x\in \left[ 0,1\right] ^{n}\,\middle|\, Ax\le b\right\} \]

is the convex hull of the set of nominal solutions. Another approach for solving \(\text {ROB}\) is to resort to its nominal counterpart. Bertsimas and Sim [15] show that there always exists an optimal solution \(\left( x,p,z\right) \) to \(\text {ROB}\) such that \(z\in \left\{ {\hat{c}}_{0},{\hat{c}}_{1},\ldots ,{\hat{c}}_{n}\right\} \), with \({\hat{c}}_{0}=0\). Note that the ideal choice for \(p_{i}\) is always \(\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\), with \(\left( a\right) ^{+}:=\max \left\{ a,0\right\} \) for \(a\in {\mathbb {R}}\). When fixing \(z\in \left\{ {\hat{c}}_{0},{\hat{c}}_{1},\ldots ,{\hat{c}}_{n}\right\} ,\) the term \(\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) becomes linear, and thus \(\text {ROB}\) can be written as an instance of its nominal counterpart

\[ \text {NOS}\left( z\right) :\quad \varGamma z+\min \left\{ \sum _{i\in \left[ n\right] }\left( c_{i}+\left( {\hat{c}}_{i}-z\right) ^{+}\right) x_{i}\,\middle|\, Ax\le b,\; x\in \left\{ 0,1\right\} ^{n}\right\} \]

Hence, solving \(\text {ROB}\) reduces to solving up to \(\left| \left\{ {\hat{c}}_{0},{\hat{c}}_{1},\ldots ,{\hat{c}}_{n}\right\} \right| \le n+1\) nominal subproblems \(\text {NOS}\left( z\right) \), implying that the robust counterpart of polynomially solvable nominal problems is again polynomially solvable. However, if the number of distinct deviations \(\left| \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \right| \) is large, then solving all nominal subproblems may require too much time. Hence, it is beneficial to discard as many non-optimal choices for z as possible. For \(\varGamma \in {\mathbb {Z}}\), Álvarez-Miranda et al. [4] as well as Park and Lee [38] showed independently that there exists a subset \({\mathscr {Z}}\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) containing an optimal choice for z with \(\left| {\mathscr {Z}}\right| \le n+2-\varGamma \), or \(\left| {\mathscr {Z}}\right| \le n+1-\varGamma \) respectively. This result was later improved by Lee and Kwon [33], who prove that \({\mathscr {Z}}\) can be chosen such that \(\left| {\mathscr {Z}}\right| \le \left\lceil \frac{n-\varGamma }{2}\right\rceil +1\) holds. Hansknecht et al. [27] propose a divide and conquer approach for the robust shortest path problem that also aims to reduce the number of nominal subproblems to be solved. Their algorithm, which can also be used to solve general problems \(\text {ROB}\), successively divides the set of deviations \(\left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) into intervals and chooses in each iteration a value z from the most promising interval for solving the nominal subproblem \(\text {NOS}\left( z\right) \). After each iteration, given the optimal objective values of the previously considered subproblems, non-optimal choices of z are identified and discarded by using a relation between the optimal objective values of \(\text {NOS}\left( z\right) \) for different z.
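To make the reduction to nominal subproblems concrete, here is a brute-force Python sketch for tiny instances. It solves \(\text {ROB}\) once by evaluating the worst-case cost of every feasible binary x and once as the minimum of \(\text {NOS}\left( z\right) \) over \(z\in \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \). All function names and the toy instance are hypothetical, and enumeration stands in for the MILP solver one would use in practice.

```python
import itertools
import math


def feasible_points(A, b):
    """All x in {0,1}^n with A x <= b (brute force, tiny instances only)."""
    n = len(A[0])
    for x in itertools.product((0, 1), repeat=n):
        if all(sum(a * xi for a, xi in zip(row, x)) <= bi for row, bi in zip(A, b)):
            yield x


def robust_cost(c, c_hat, gamma, x):
    """c^T x plus the worst-case deviation under the budget gamma."""
    d = sorted((ch * xi for ch, xi in zip(c_hat, x)), reverse=True)
    k = math.floor(gamma)
    dev = sum(d[:k]) + ((gamma - k) * d[k] if k < len(d) else 0)
    return sum(ci * xi for ci, xi in zip(c, x)) + dev


def nos_cost(c, c_hat, gamma, z, x):
    """Objective of NOS(z): gamma*z + sum_i (c_i + (c_hat_i - z)^+) x_i."""
    return gamma * z + sum((ci + max(ch - z, 0)) * xi for ci, ch, xi in zip(c, c_hat, x))


def solve_rob_brute_force(A, b, c, c_hat, gamma):
    return min(robust_cost(c, c_hat, gamma, x) for x in feasible_points(A, b))


def solve_rob_via_nos(A, b, c, c_hat, gamma):
    # z only needs to range over {c_hat_0 = 0, c_hat_1, ..., c_hat_n}
    candidates = sorted(set([0] + list(c_hat)))
    return min(
        min(nos_cost(c, c_hat, gamma, z, x) for x in feasible_points(A, b))
        for z in candidates
    )


# Choose at least two of four items: -x_1 - x_2 - x_3 - x_4 <= -2
A, b = [[-1, -1, -1, -1]], [-2]
c, c_hat = [1, 2, 3, 4], [5, 1, 1, 0]
print(solve_rob_brute_force(A, b, c, c_hat, 1))  # 6
print(solve_rob_via_nos(A, b, c, c_hat, 1))      # 6
```

The approaches surveyed above aim to shrink the list `candidates` so that fewer nominal subproblems have to be solved.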

Roughly summarized, there are two general directions for solving \(\text {ROB}\) in the literature: strong formulations on the one hand and fixing z on the other hand. In this paper, we take a middle course between these directions by proposing a branch and bound algorithm that combines restrictions on z with strong formulations. The general idea of the branch and bound paradigm, which was first proposed by Land and Doig [32], for solving general optimization problems \(\min \left\{ v\left( x\right) \big |x\in {\mathscr {X}}\right\} \) is to partition (branch) the set of feasible solutions \({\mathscr {X}}=\bigcup _{i=1}^{k}X_{i}\) and then solve the corresponding subproblems \(\min \left\{ v\left( x\right) \big |x\in X_{i}\right\} \) recursively. In order to avoid a complete enumeration, an easily computable dual bound \({\underline{v}}\left( X\right) \le \min \left\{ v\left( x\right) \big |x\in X\right\} \) is computed for every considered \(X\subseteq {\mathscr {X}}\) and compared with a primal bound, which is the value of the best solution known so far. In our case, we partition the set of solutions \({\mathscr {X}}={\mathscr {P}}^{\text {ROB}}\cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{n}\times {\mathscr {Z}}\right) \), where \({\mathscr {Z}}\) contains an optimal choice for z, into subsets \({\mathscr {P}}^{\text {ROB}}\cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{n}\times Z\right) \) with \(Z\subseteq {\mathscr {Z}}\). For the corresponding robust subproblems \(\text {ROB}\left( Z\right) \), we introduce improved formulations \({\mathscr {P}}\left( Z\right) \) and prove structural properties, from which we derive strong dual bounds on the optimal objective value \(v\left( \text {ROB}\left( Z\right) \right) \). This enables us to prune subsets \(Z\subseteq {\mathscr {Z}}\) containing non-optimal values for z. 
Furthermore, once the not yet pruned sets Z are sufficiently small, our findings enable us to solve \(\text {ROB}\left( Z\right) \) efficiently as an MILP, sparing us from considering many nominal subproblems \(\text {NOS}\left( z\right) \) separately.

The fourfold contribution of this paper is summarized in the following.

- We propose a branch and bound algorithm to solve \(\text {ROB}\) and show in an extensive computational study that it outperforms all existing approaches from the literature by far. The code of all tested algorithms is available online [23].
- For developing the branch and bound algorithm, we first introduce different strong formulations and prove several structural properties for \(\text {ROB}\).
- We show that these structural properties can also be used to substantially improve existing approaches from the literature, highlighting the relevance of our findings for future research.
- To conduct the computational study, we carefully construct a set of hard robust instances on the basis of real-world nominal problems from MIPLIB 2017 [25]. We make these instances freely available online for future benchmarking in the field of robust optimization [24].

Outline. Before we introduce the basic framework of our branch and bound algorithm, we provide the theoretical foundations in Sects. 2 and 3. In Sect. 2, we discuss the weakness of \({\mathscr {P}}^{\text {ROB}}\) and propose a bilinear formulation \({\mathscr {P}}^{\text {BIL}}\) for \(\text {ROB}\), which is as strong as theoretically possible. Although the bilinearity limits the practical use of this formulation, \({\mathscr {P}}^{\text {BIL}}\) will play a critical role in the design of our branch and bound algorithm. Based on the bilinear formulation, we introduce the strong linear formulations \({\mathscr {P}}\left( Z\right) \) for restricted \(z\in Z\) in Sect. 3. Using formulation \({\mathscr {P}}\left( Z\right) \), we present a basic framework of our branch and bound algorithm in Sect. 4, which will then be improved in the subsequent sections by gaining more insight into the structure of \(\text {ROB}\). In Sect. 5, we show how to improve the formulations by using cliques in the so-called conflict graph of the nominal problem. In Sect. 6, we characterize optimal choices of p and z, establishing the theoretical background for many components of the branch and bound algorithm, which we describe in detail in Sect. 7. Finally, in Sect. 8 we conduct our computational study.

2 A strong bilinear formulation

To better understand why formulation \({\mathscr {P}}^{\text {ROB}}\) is problematic, we start by considering an example showing that the integrality gap of \(\text {ROB}\) can be arbitrarily large, even if the integrality gap of the corresponding nominal problem is zero.

Example 1

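Consider the trivial task of choosing the smallest out of n elements

\[ \min \left\{ \sum _{i\in \left[ n\right] }c_{i}x_{i}\,\middle|\, \sum _{i\in \left[ n\right] }x_{i}=1,\; x\in \left\{ 0,1\right\} ^{n}\right\} , \]

whose integrality gap is zero for all \(c\in {\mathbb {R}}^{n}\). Now, consider an instance of the robust counterpart \(\text {ROB}\) with \(c=0\), \({\hat{c}}=1\), and \(\varGamma =1\)

\[ \min \left\{ z+\sum _{i\in \left[ n\right] }p_{i}\,\middle|\, \sum _{i\in \left[ n\right] }x_{i}=1,\; p_{i}+z\ge x_{i}\;\forall i\in \left[ n\right] ,\; x\in \left\{ 0,1\right\} ^{n},\; p\ge 0,\; z\ge 0\right\} . \]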
Let \(v\left( \text {ROB}\right) \) be the optimal objective value of \(\text {ROB}\) and \(v^{\text {R}}\left( \text {ROB}\right) \) be the optimal value of the linear relaxation. For the above problem, we have \(v\left( \text {ROB}\right) =1\). However, \(\left( x,p,z\right) =\left( \frac{1}{n},\ldots ,\frac{1}{n},0,\ldots ,0,\frac{1}{n}\right) \) is an optimal fractional solution with \(v^{\text {R}}\left( \text {ROB}\right) =\frac{1}{n}\). Thus, the integrality gap is \(\frac{v\left( \text {ROB}\right) -v^{\text {R}}\left( \text {ROB}\right) }{\left| v^{\text {R}}\left( \text {ROB}\right) \right| }=n-1\).

The example shows that choosing fractional values of x in the linear relaxation enables us to meet the constraints \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\) with a relatively low value of z, which marginalizes the influence of the deviations on the objective value. To overcome these problems, we will discuss alternative formulations for modeling \(\text {ROB}\).
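The numbers in Example 1 can be checked mechanically. The following Python sketch (our own helper, not from the paper) confirms that the stated fractional point lies in \({\mathscr {P}}^{\text {ROB}}\) with objective value \(\frac{1}{n}\) and that the integer optimum is 1, which gives the gap \(n-1\). It verifies the objective value of that point only; it does not re-prove that the point is optimal for the relaxation.

```python
from fractions import Fraction


def example1(n):
    """Example 1 with c = 0, c_hat = 1, gamma = 1 and constraint sum(x) = 1."""
    # Integer solutions are the unit vectors e_j; for each, minimize
    # z + sum_i max(x_i - z, 0) over the candidate values z in {0, 1}.
    integer_opt = min(
        min(z + sum(max(xi - z, 0) for xi in e) for z in (0, 1))
        for e in ([int(i == j) for i in range(n)] for j in range(n))
    )
    # Fractional point from the text: x_i = 1/n, p = 0, z = 1/n.
    x = [Fraction(1, n)] * n
    p = [Fraction(0)] * n
    z = Fraction(1, n)
    assert sum(x) == 1 and all(pi + z >= xi for pi, xi in zip(p, x))  # in P^ROB
    lp_value = z + sum(p)
    gap = (integer_opt - lp_value) / abs(lp_value)
    return integer_opt, lp_value, gap


print(*example1(4))  # 1 1/4 3
```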

Formally, we call \({\mathscr {P}}\subseteq {\mathbb {R}}^{n_{1}+n_{2}}\) a formulation for the problem \(\min \left\{ c^{T}x\big |x\in {\mathscr {X}}\right\} \) with a set of solutions \({\mathscr {X}}\subseteq {\mathbb {Z}}^{n_{1}}\times {\mathbb {R}}^{n_{2}}\) if \({\mathscr {P}}\cap \left( {\mathbb {Z}}^{n_{1}}\times {\mathbb {R}}^{n_{2}}\right) ={\mathscr {X}}\) holds [42]. Using a formulation, we can solve the original problem by solving \(\min \left\{ c^{T}x\big |x\in {\mathscr {P}}\right\} \) and branching on the integer variables. Additionally, \({\mathscr {P}}'\subseteq {\mathbb {R}}^{n+n'}\) is called an extended formulation for a problem if its projection \({\text {proj}}\left( {\mathscr {P}}'\right) \subseteq {\mathbb {R}}^{n}\) into the original solution space is a formulation for that problem. For two formulations \({\mathscr {P}}_{1}\) and \({\mathscr {P}}_{2}\) with \({\mathscr {P}}_{1}\subseteq {\mathscr {P}}_{2}\), we say that \({\mathscr {P}}_{1}\) is at least as strong as \({\mathscr {P}}_{2}\). When considering extended formulations, we compare their projections instead.

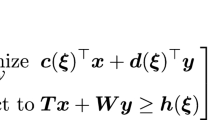

To the best of our knowledge, the only results directly targeting the weakness of \({\mathscr {P}}^{\text {ROB}}\) are presented by Atamtürk [5], who proposes four problems \(\text {RP1}\)–\(\text {RP4}\) that are equivalent to \(\text {ROB}\), using different (extended) formulations \({\mathscr {P}}^{\text {RP1}},\ldots ,{\mathscr {P}}^{\text {RP4}}\). The theoretical strength of the four formulations exceeds that of \({\mathscr {P}}^{\text {ROB}}\) by far. More precisely, we have \({\text {proj}}\left( {\mathscr {P}}^{\text {RP4}}\right) \subsetneq {\text {proj}}\left( {\mathscr {P}}^{\text {RP1}}\right) ={\mathscr {P}}^{\text {RP2}}={\text {proj}}\left( {\mathscr {P}}^{\text {RP3}}\right) \subsetneq {\mathscr {P}}^{\text {ROB}}\) for non-trivial cases. The problem

over the strongest formulation

with \(\left[ n\right] _{0}:=\left\{ 0,\ldots ,n\right\} \), is especially interesting. For every vertex \(\left( x,p,z,\omega ,\lambda \right) \) of the polyhedron \({\mathscr {P}}^{\text {RP4}}\), it holds \(\lambda _{k^{*}}=1\) for a \(k^{*}\in \left[ n\right] _{0}\) and \(\lambda _{k}=0\) for \(k\ne k^{*}\). Choosing \(\lambda \) in such a way reduces \(\text {RP4}\) to solving the nominal subproblem \(\text {NOS}\left( {\hat{c}}_{k^{*}}\right) \). Thus, \(\text {RP4}\) essentially combines the nominal subproblems \(\text {NOS}\left( z\right) \) that are solved in the Bertsimas and Sim approach for all possible values \(z\in \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) into one problem.

Formulation \({\mathscr {P}}^{\text {RP4}}\) is not only the strongest proposed by Atamtürk, but can be seen as the strongest possible polyhedral formulation overall. This is because it preserves the integrality gap of the nominal problem [5]. However, the disadvantage of all formulations \({\mathscr {P}}^{\text {RP1}},\ldots ,{\mathscr {P}}^{\text {RP4}}\) is that they may become too large for practical purposes, as we will see in the computational study in Sect. 8.

To deal with this issue, we introduce a smaller, although bilinear, formulation for \(\text {ROB}\). For this, we multiply z in the constraints \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\) of the original formulation \({\mathscr {P}}^{\text {ROB}}\) with \(x_{i}\) for all \(i\in \left[ n\right] \). The resulting constraint \(p_{i}+zx_{i}\ge {\hat{c}}_{i}x_{i}\) is valid for all solutions of \(\text {ROB}\), since the inequality becomes \(p_{i}\ge 0\) for \(x_{i}=0\) and is equivalent to the original inequality for \(x_{i}=1\). The new bilinear formulation

\[ {\mathscr {P}}^{\text {BIL}}=\left\{ \left( x,p,z\right) \in \left[ 0,1\right] ^{n}\times {\mathbb {R}}_{\ge 0}^{n}\times {\mathbb {R}}_{\ge 0}\,\middle|\, Ax\le b,\; p_{i}+zx_{i}\ge {\hat{c}}_{i}x_{i}\;\; \forall i\in \left[ n\right] \right\} \]

is at least as strong as \({\mathscr {P}}^{\text {RP4}}\), as stated in the following theorem.

Theorem 1

It holds \({\mathscr {P}}^{\text {BIL}}\subseteq {\text {proj}}\left( {\mathscr {P}}^{\text {RP4}}\right) \).

Proof

Let \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\) and assume without loss of generality that \(0={\hat{c}}_{0}\le {\hat{c}}_{1}\le \cdots \le {\hat{c}}_{n}\) holds. First, consider the case in which we have \(z\le {\hat{c}}_{n}\). Then there exists an index \(j\in \left[ n-1\right] _{0}\) and a value \(\varepsilon \in \left[ 0,1\right] \) with \(z=\varepsilon {\hat{c}}_{j}+\left( 1-\varepsilon \right) {\hat{c}}_{j+1}\). We define \(\lambda _{k}=0\) for \(k\notin \left\{ j,j+1\right\} \) and \(\lambda _{j}=\varepsilon \) as well as \(\lambda _{j+1}=1-\varepsilon \). Furthermore, we set \(\omega _{i}^{k}=\lambda _{k}x_{i}\) for all \(i\in \left[ n\right] ,k\in \left[ n\right] _{0}\) and show that \(\left( x,p,z,\omega ,\lambda \right) \in {\mathscr {P}}^{\text {RP4}}\). The first five constraints of formulation \({\mathscr {P}}^{\text {RP4}}\) are trivially satisfied by the definition of \(\varepsilon ,\ \lambda \) and \(\omega \). For the last constraint, we have

for all \(i\in \left[ n\right] \), where equality \(\left( *\right) \) holds since \(\left( {\hat{c}}_{i}-{\hat{c}}_{j}\right) \) and \(\left( {\hat{c}}_{i}-{\hat{c}}_{j+1}\right) \) are either both non-positive if we have \(i\le j\) or both non-negative if we have \(i\ge j+1\).

For the case \(z>{\hat{c}}_{n}\), we define \(\lambda _{k}=0\) for \(k\in \left[ n-1\right] _{0}\) and \(\lambda _{n}=1\). Furthermore, let \(\omega _{i}^{k}=\lambda _{k}x_{i}\) for all \(i\in \left[ n\right] \) and \(k\in \left[ n\right] _{0}\). Again, \(\left( x,p,z,\omega ,\lambda \right) \) satisfies the first five constraints trivially. Moreover, we have

and thus \(\left( x,p,z,\omega ,\lambda \right) \in {\mathscr {P}}^{\text {RP4}}\), which completes the proof.\(\square \)
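The key step in the proof of Theorem 1 is equality \(\left( *\right) \), which relies only on the sorted order of the deviations. As we read it, the identity behind it is \(\varepsilon \left( {\hat{c}}_{i}-{\hat{c}}_{j}\right) ^{+}+\left( 1-\varepsilon \right) \left( {\hat{c}}_{i}-{\hat{c}}_{j+1}\right) ^{+}=\left( {\hat{c}}_{i}-z\right) ^{+}\). The Python sketch below (hypothetical helper names) computes \(j\) and \(\varepsilon \) as in the proof and checks this identity for every index, using exact rational arithmetic.

```python
from fractions import Fraction


def interpolate(c_hat_sorted, z):
    """For 0 = c_hat[0] <= ... <= c_hat[n] and z in [0, c_hat[n]], return (j, eps)
    with z = eps * c_hat[j] + (1 - eps) * c_hat[j + 1], as in the proof."""
    for j in range(len(c_hat_sorted) - 1):
        lo, hi = c_hat_sorted[j], c_hat_sorted[j + 1]
        if lo <= z <= hi:
            eps = Fraction(1) if hi == lo else Fraction(hi - z) / (hi - lo)
            return j, eps
    raise ValueError("z must lie in [c_hat[0], c_hat[n]]")


def check_equality_star(c_hat_sorted, z):
    """True if eps*(c_i - c_j)^+ + (1 - eps)*(c_i - c_{j+1})^+ == (c_i - z)^+ for all i."""
    j, eps = interpolate(c_hat_sorted, z)
    cj, cj1 = c_hat_sorted[j], c_hat_sorted[j + 1]
    assert eps * cj + (1 - eps) * cj1 == z
    return all(
        eps * max(ci - cj, 0) + (1 - eps) * max(ci - cj1, 0) == max(ci - z, 0)
        for ci in c_hat_sorted
    )


print(check_equality_star([0, 1, 1, 4, 6], Fraction(5, 2)))  # True
```

Shuffling `c_hat_sorted` before the check can break the identity, which reflects the role the sorting assumption plays in the proof.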

Although formulation \({\mathscr {P}}^{\text {BIL}}\) is strong and compact, its bilinearity is rather a hindrance when solving instances in practice. To understand how we can still make practical use of it, we first consider \({\mathscr {P}}^{\text {BIL}}\) with z fixed to a single value. The formulation then becomes linear and, moreover, \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) holds for all \(i\in \left[ n\right] \) in an optimal (fractional) solution \(\left( x,p,z\right) \). Hence, the problem of optimizing over the set \({\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ z\right\} \right) \) is equivalent to

\[ \varGamma z+\min \left\{ \sum _{i\in \left[ n\right] }\left( c_{i}+\left( {\hat{c}}_{i}-z\right) ^{+}\right) x_{i}\,\middle|\, Ax\le b,\; x\in \left[ 0,1\right] ^{n}\right\} , \]

which is the linear relaxation of the nominal subproblem \(\text {NOS}\left( z\right) \). This is noteworthy, since this equivalence does not hold for \({\mathscr {P}}^{\text {ROB}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ z\right\} \right) \). The strength of the linearization for fixed z suggests that we may also derive strong linearizations of \({\mathscr {P}}^{\text {BIL}}\) for general restrictions on z, that is \(z\in Z\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \). In the next section, we introduce such a linearization, which will be a key component of our branch and bound algorithm.
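The difference between fixing z in \({\mathscr {P}}^{\text {ROB}}\) and in \({\mathscr {P}}^{\text {BIL}}\) can be seen on the fractional point of Example 1. The Python sketch below (hypothetical helper names) computes, for fixed \(\left( x,z\right) \), the smallest p that each formulation permits and the resulting objective value \(c^{T}x+\varGamma z+\sum _{i}p_{i}\).

```python
from fractions import Fraction


def min_p_rob(c_hat, x, z):
    """Smallest p in P^ROB for fixed (x, z): p_i = max(c_hat_i * x_i - z, 0)."""
    return [max(ch * xi - z, 0) for ch, xi in zip(c_hat, x)]


def min_p_bil(c_hat, x, z):
    """Smallest p in P^BIL for fixed (x, z): p_i = (c_hat_i - z)^+ * x_i."""
    return [max(ch - z, 0) * xi for ch, xi in zip(c_hat, x)]


def relaxed_objective(c, c_hat, gamma, x, z, min_p):
    """c^T x + gamma * z + sum(p) with p as small as the formulation allows."""
    p = min_p(c_hat, x, z)
    return sum(ci * xi for ci, xi in zip(c, x)) + gamma * z + sum(p)


# Example 1 with n = 4: c = 0, c_hat = 1, gamma = 1, x_i = 1/4
n = 4
c, c_hat, x = [0] * n, [1] * n, [Fraction(1, n)] * n
for z in (Fraction(0), Fraction(1, 4), Fraction(1, 2), Fraction(1)):
    print(z, relaxed_objective(c, c_hat, 1, x, z, min_p_rob),
          relaxed_objective(c, c_hat, 1, x, z, min_p_bil))
```

For every printed z, the bilinear value equals 1, the integer optimum of Example 1, whereas the \({\mathscr {P}}^{\text {ROB}}\) value drops to \(\frac{1}{4}\) at \(z=\frac{1}{4}\).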

3 Strong linear formulations for bounded z

Consider a subset \(Z\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) and let \({\underline{z}}=\min \left( Z\right) \) and \({\overline{z}}=\max \left( Z\right) \) for the remainder of this paper. Assuming that there exists an optimal solution \(\left( x,p,z\right) \) to \(\text {ROB}\) with \(z\in Z,\) we can restrict ourselves to the domain \({\mathbb {R}}^{2n}\times \left[ {\underline{z}},{\overline{z}}\right] \). We use this to obtain a linear relaxation of the restricted bilinear formulation \({\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left[ {\underline{z}},{\overline{z}}\right] \right) \).

Lemma 1

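The linear constraints

\[ p_{i}+z\ge {\underline{z}}+\left( {\hat{c}}_{i}-{\underline{z}}\right) ^{+}x_{i}\quad \forall i\in \left[ n\right] \tag{1} \]

and

\[ p_{i}\ge \left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}x_{i}\quad \forall i\in \left[ n\right] \tag{2} \]

are valid for all \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left[ {\underline{z}},{\overline{z}}\right] \right) \).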

Proof

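Since \(p_{i}+zx_{i}\ge {\hat{c}}_{i}x_{i}\) and \(p_{i}\ge 0\) hold for all \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\), the restriction \({\underline{z}}\le z\) implies

\[ p_{i}+z=\left( p_{i}+zx_{i}\right) +z\left( 1-x_{i}\right) \ge {\hat{c}}_{i}x_{i}+{\underline{z}}\left( 1-x_{i}\right) ={\underline{z}}+\left( {\hat{c}}_{i}-{\underline{z}}\right) x_{i}\quad \text {and}\quad p_{i}+z\ge z\ge {\underline{z}}, \]

which together yield Constraint (1), since \(x_{i}\ge 0\). Furthermore, due to \(z\le {\overline{z}}\), we obtain

\[ p_{i}\ge \left( {\hat{c}}_{i}-z\right) x_{i}\ge \left( {\hat{c}}_{i}-{\overline{z}}\right) x_{i}, \]

which together with \(p_{i}\ge 0\) yields Constraint (2).
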
\(\square \)

Note that Constraints (1) and (2) strictly dominate the inequalities \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\) and \(p_{i}\ge 0\) of \({\mathscr {P}}^{\text {ROB}}\) in the case of \({\underline{z}}>0\) and \({\hat{c}}_{i}>{\overline{z}}\), respectively. Both constraints address the problem of the original formulation, which is that one can decrease \(x_{i}\) in a fractional solution down to \(x_{i}\le \frac{z}{{\hat{c}}_{i}}\) in order to choose \(p_{i}=0\), even if we have \({\hat{c}}_{i}>z\). Given a lower bound \(z\ge {\underline{z}}\), Constraint (1) reduces the benefit of decreasing \(x_{i}\), as the right-hand side only decreases with the factor \(\left( {\hat{c}}_{i}-{\underline{z}}\right) ^{+}\) instead of \({\hat{c}}_{i}\). For an upper bound \(z\le {\overline{z}}\), Constraint (2) guarantees that \(p_{i}\) is not zero for \({\hat{c}}_{i}>{\overline{z}}\) and \(x_{i}>0\) by using the fact that the value of \(p_{i}\) is at least \({\hat{c}}_{i}-{\overline{z}}\) if we have \({\hat{c}}_{i}>{\overline{z}}\) and \(x_{i}=1\).
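The effect of Constraints (1) and (2) on a single fractional variable can be illustrated with a short Python sketch; the function names and numbers are our own. For fixed \(x_{i}\) and \(z\in \left[ {\underline{z}},{\overline{z}}\right] \), it returns the smallest \(p_{i}\) admitted by \({\mathscr {P}}^{\text {ROB}}\) and by Constraints (1) and (2) together with \(p_{i}\ge 0\), respectively.

```python
from fractions import Fraction


def min_p_rob(c_hat_i, x_i, z):
    # P^ROB: p_i + z >= c_hat_i * x_i and p_i >= 0
    return max(c_hat_i * x_i - z, 0)


def min_p_pz(c_hat_i, x_i, z, z_low, z_up):
    """Smallest p_i allowed by Constraints (1) and (2) and p_i >= 0."""
    assert z_low <= z <= z_up
    from_1 = z_low + max(c_hat_i - z_low, 0) * x_i - z   # Constraint (1)
    from_2 = max(c_hat_i - z_up, 0) * x_i                # Constraint (2)
    return max(from_1, from_2, 0)


# c_hat_i = 4, fractional x_i = 1/4, bounds [z_low, z_up] = [2, 3], z = 2
x_i = Fraction(1, 4)
print(min_p_rob(4, x_i, 2))        # 0
print(min_p_pz(4, x_i, 2, 2, 3))   # 1/2
```

Here the weak formulation lets \(p_{i}\) drop to zero, while Constraint (1) forces \(p_{i}\ge \frac{1}{2}\).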

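Using these strengthened constraints, we obtain the robust subproblem

\[ \text {ROB}\left( Z\right) :\quad \min \; c^{T}x+\varGamma z+\sum _{i\in \left[ n\right] }p_{i}\quad \text {s.t.}\; \left( x,p,z\right) \in {\mathscr {P}}\left( Z\right) ,\; x\in \left\{ 0,1\right\} ^{n} \]

over the linear formulation

\[ {\mathscr {P}}\left( Z\right) =\left\{ \left( x,p,z\right) \in \left[ 0,1\right] ^{n}\times {\mathbb {R}}_{\ge 0}^{n}\times \left[ {\underline{z}},{\overline{z}}\right] \,\middle|\, Ax\le b,\; p_{i}+z\ge {\underline{z}}+\left( {\hat{c}}_{i}-{\underline{z}}\right) ^{+}x_{i},\; p_{i}\ge \left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}x_{i}\;\; \forall i\in \left[ n\right] \right\} . \]
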
As shown in Lemma 1, \({\mathscr {P}}\left( Z\right) \) is a relaxation of the restricted bilinear formulation \({\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left[ {\underline{z}},{\overline{z}}\right] \right) \). Note that \({\mathscr {P}}\left( Z\right) \) becomes stronger the narrower the bounds of Z are, i.e., for \(Z,Z'\) with \(\left[ {\underline{z}},{\overline{z}}\right] \subsetneq \left[ {\underline{z}}',{\overline{z}}'\right] \) it holds \({\mathscr {P}}\left( Z\right) \subsetneq {\mathscr {P}}\left( Z'\right) \cap \left( {\mathbb {R}}^{2n}\times \left[ {\underline{z}},{\overline{z}}\right] \right) \) for non-trivial cases. The following statement shows that \({\mathscr {P}}\left( Z\right) \) is even as strong as \({\mathscr {P}}^{\text {BIL}}\) in the case where z equals one of the bounds \({\underline{z}},{\overline{z}}\).

Proposition 1

It holds \({\mathscr {P}}\left( Z\right) \cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) ={\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \).

Proof

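Consider a solution \(\left( x,p,z\right) \in {\mathscr {P}}\left( Z\right) \cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \). For \(z={\underline{z}}\), Constraint (1) reduces to \(p_{i}\ge \left( {\hat{c}}_{i}-{\underline{z}}\right) ^{+}x_{i}\), so it holds

\[ p_{i}+zx_{i}=p_{i}+{\underline{z}}x_{i}\ge \left( {\hat{c}}_{i}-{\underline{z}}\right) ^{+}x_{i}+{\underline{z}}x_{i}\ge {\hat{c}}_{i}x_{i}\quad \forall i\in \left[ n\right] , \]

and for \(z={\overline{z}}\), Constraint (2) yields

\[ p_{i}+zx_{i}=p_{i}+{\overline{z}}x_{i}\ge \left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}x_{i}+{\overline{z}}x_{i}\ge {\hat{c}}_{i}x_{i}\quad \forall i\in \left[ n\right] . \]
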
Hence, \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\) and thus \({\mathscr {P}}\left( Z\right) \cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \subseteq {\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \). The statement follows together with Lemma 1.\(\square \)
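Proposition 1 can also be checked numerically. For fixed x and z, both \({\mathscr {P}}\left( Z\right) \) and \({\mathscr {P}}^{\text {BIL}}\) impose only a lower bound on each \(p_{i}\) on top of their common constraints, so it suffices to compare these bounds. The Python sketch below (hypothetical helpers, random test data) does so at \(z\in \left\{ {\underline{z}},{\overline{z}}\right\} \) and then shows a point strictly between the bounds where \({\mathscr {P}}\left( Z\right) \) is weaker.

```python
import random
from fractions import Fraction


def min_p_pz(c_hat_i, x_i, z, z_low, z_up):
    # Smallest p_i allowed by P(Z): Constraints (1), (2) and p_i >= 0
    return max(z_low + max(c_hat_i - z_low, 0) * x_i - z,
               max(c_hat_i - z_up, 0) * x_i, 0)


def min_p_bil(c_hat_i, x_i, z):
    # Smallest p_i allowed by P^BIL: p_i + z * x_i >= c_hat_i * x_i and p_i >= 0
    return max((c_hat_i - z) * x_i, 0)


def bounds_agree(trials=1000, seed=0):
    """Proposition 1: at z in {z_low, z_up} both formulations give the same bound."""
    rng = random.Random(seed)
    for _ in range(trials):
        z_low = rng.randint(0, 6)
        z_up = z_low + rng.randint(0, 6)
        c_hat_i = rng.randint(0, 15)
        x_i = Fraction(rng.randint(0, 8), 8)
        for z in (z_low, z_up):
            if min_p_pz(c_hat_i, x_i, z, z_low, z_up) != min_p_bil(c_hat_i, x_i, z):
                return False
    return True


print(bounds_agree())  # True
# Strictly between the bounds, P(Z) can be weaker than P^BIL:
z_mid = Fraction(5, 2)
print(min_p_pz(4, Fraction(1, 2), z_mid, 2, 3), min_p_bil(4, Fraction(1, 2), z_mid))  # 1/2 3/4
```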

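Note that the improved formulation \({\mathscr {P}}\left( Z\right) \) comes at the cost of a larger constraint matrix compared to \({\mathscr {P}}^{\text {ROB}}\), as we have \(p_{i}\ge \left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}x_{i}\) instead of \(p_{i}\ge 0\). This can be a hindrance in practice, as smaller constraint matrices tend to be computationally beneficial. We overcome this issue by substituting \(p_{i}=p_{i}'+\left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}x_{i}\) and \(z=z'+{\underline{z}}\). We then obtain the equivalent substituted problem

\[ \text {ROB}^{\text {S}}\left( Z\right) :\quad \min \; \sum _{i\in \left[ n\right] }\left( c_{i}+\left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}\right) x_{i}+\varGamma {\underline{z}}+\varGamma z'+\sum _{i\in \left[ n\right] }p'_{i}\quad \text {s.t.}\; \left( x,p',z'\right) \in {\mathscr {P}}^{\text {S}}\left( Z\right) ,\; x\in \left\{ 0,1\right\} ^{n} \]

over the substituted formulation

\[ {\mathscr {P}}^{\text {S}}\left( Z\right) =\left\{ \left( x,p',z'\right) \in \left[ 0,1\right] ^{n}\times {\mathbb {R}}_{\ge 0}^{n}\times \left[ 0,{\overline{z}}-{\underline{z}}\right] \,\middle|\, Ax\le b,\; p'_{i}+z'\ge \left( \min \left\{ {\hat{c}}_{i},{\overline{z}}\right\} -{\underline{z}}\right) ^{+}x_{i}\;\; \forall i\in \left[ n\right] \right\} . \]
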
The substituted problem \(\text {ROB}^{\text {S}}\left( Z\right) \) is also interesting from a theoretical point of view. Since \(z'\le {\overline{z}}-{\underline{z}}\) holds for all optimal solutions, \(\text {ROB}^{\text {S}}\left( Z\right) \) is equivalent to \(\text {ROB}\) for an instance with objective coefficients \(c_{i}+\left( {\hat{c}}_{i}-{\overline{z}}\right) ^{+}\), deviations \({\hat{c}}'_{i}=\left( \min \left\{ {\hat{c}}_{i},{\overline{z}}\right\} -{\underline{z}}\right) ^{+}\), and an added constant \(\varGamma {\underline{z}}\). This will be useful in subsequent sections, since properties that we prove for \(\text {ROB}\) carry over directly to \(\text {ROB}^{\text {S}}\left( Z\right) \) and \(\text {ROB}\left( Z\right) \).
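The equivalence between \(\text {ROB}^{\text {S}}\left( Z\right) \) and a modified instance of \(\text {ROB}\) amounts to a data transformation. The Python sketch below (function names are ours) builds the modified objective coefficients, deviations, and constant. It then checks, for binary x and \(z\in \left[ {\underline{z}},{\overline{z}}\right] \), that the nominal-subproblem objective of the original data at z matches that of the transformed data at \(z'=z-{\underline{z}}\).

```python
import random
from fractions import Fraction


def substituted_instance(c, c_hat, gamma, z_low, z_up):
    """Data (c', c_hat', constant) of the ROB instance equivalent to ROB^S(Z)."""
    assert 0 <= z_low <= z_up
    c_new = [ci + max(ch - z_up, 0) for ci, ch in zip(c, c_hat)]
    c_hat_new = [max(min(ch, z_up) - z_low, 0) for ch in c_hat]
    return c_new, c_hat_new, gamma * z_low


def nos_objective(c, c_hat, gamma, z, x, constant=0):
    # constant + gamma * z + sum_i (c_i + (c_hat_i - z)^+) x_i for binary x
    return constant + gamma * z + sum((ci + max(ch - z, 0)) * xi
                                      for ci, ch, xi in zip(c, c_hat, x))


print(substituted_instance([1, 1, 1], [0, 2, 5], 1, 1, 3))  # ([1, 1, 3], [0, 1, 2], 1)

# Spot check of the equivalence on random data
rng = random.Random(4)
n = 5
c = [rng.randint(-5, 5) for _ in range(n)]
c_hat = [rng.randint(0, 10) for _ in range(n)]
z_low, z_up = 2, 6
c2, ch2, const = substituted_instance(c, c_hat, 1, z_low, z_up)
x = [rng.randint(0, 1) for _ in range(n)]
print(all(nos_objective(c, c_hat, 1, z, x) == nos_objective(c2, ch2, 1, z - z_low, x, const)
          for z in range(z_low, z_up + 1)))  # True
```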

In the next section, we show how to use formulation \({\mathscr {P}}\left( Z\right) \) in a branch and bound algorithm for solving \(\text {ROB}\).

4 The basic branch and bound framework

The general idea of our branch and bound framework, which is sketched in Algorithm 1, is to solve \(\text {ROB}\) by branching the set \(\left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) of possible values for z into subsets \(Z\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \), for which we then consider the robust subproblem \(\text {ROB}\left( Z\right) \). For each considered subset Z, we store a dual bound \({\underline{v}}\left( Z\right) \) based on the linear relaxation value \(v^{\text {R}}\left( \text {ROB}^{\text {S}}\left( Z'\right) \right) \) for a superset \(Z'\supseteq Z\) using the strong formulation from the previous section. If the dual bound \({\underline{v}}\left( Z\right) \) is greater than or equal to the current primal bound \({\overline{v}}\), then we can prune Z. If Z cannot be pruned, we first assess the strength of formulation \({\mathscr {P}}^{\text {S}}\left( Z\right) \), which converges towards the strength of \({\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \) and achieves equality at the latest for \(\left| Z\right| =1\) according to Proposition 1. If \({\mathscr {P}}^{\text {S}}\left( Z\right) \) is almost as strong as \({\mathscr {P}}^{\text {BIL}}\cap \left( {\mathbb {R}}^{2n}\times \left\{ {\underline{z}},{\overline{z}}\right\} \right) \), then we may directly solve the substituted robust subproblem \(\text {ROB}^{\text {S}}\left( Z\right) \), sparing us from considering further subsets of Z. Otherwise, if \({\mathscr {P}}^{\text {S}}\left( Z\right) \) is too weak, we continue solving the linear relaxation and branching into subsets \(Z=Z_{1}\cup Z_{2}\).

Note that the framework given in Algorithm 1 only serves to convey a basic intuition, as many components are described vaguely. For example, we leave open for now how to evaluate whether we can stop branching Z due to \({\mathscr {P}}^{\text {S}}\left( Z\right) \) being “strong enough”. We will describe all components of our algorithm in detail in Sect. 7. There, we will not only discuss whether and how we should branch Z (Sect. 7.5) and how to choose the next \(Z\in {\mathscr {N}}\) (Sect. 7.4), but also improve on the computation of dual bounds (Sect. 7.1) and primal bounds (Sect. 7.2) and discuss an efficient pruning strategy (Sect. 7.3).
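The loop of the basic framework can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical rendering, not the authors' Algorithm 1. The dual bound of a set Z is computed from Z itself by a cheap bound (the objective of \(\text {ROB}^{\text {S}}\left( Z\right) \) with \(z'\) and \(p'\) set to zero, minimized by enumeration) instead of the linear relaxation of \(\text {ROB}^{\text {S}}\left( Z'\right) \). Subproblems are solved by enumeration, Z is branched by halving the sorted list, and open sets are processed best-first by dual bound.

```python
import heapq
import itertools


def branch_and_bound_over_z(candidates, lower_bound, solve_subproblem, small_enough):
    """Best-first branching on sets Z of candidate values for z.

    lower_bound(Z) must not exceed v(ROB(Z)); solve_subproblem(Z) must return
    v(ROB(Z)); small_enough(Z) decides when to stop branching."""
    best = float("inf")                      # primal bound
    tie = itertools.count()                  # keeps heapq from comparing lists
    root = sorted(set(candidates))
    open_sets = [(lower_bound(root), next(tie), root)]
    while open_sets:
        bound, _, Z = heapq.heappop(open_sets)
        if bound >= best:
            continue                         # prune Z
        if len(Z) == 1 or small_enough(Z):
            best = min(best, solve_subproblem(Z))
            continue
        mid = len(Z) // 2                    # branch Z = Z[:mid] u Z[mid:]
        for part in (Z[:mid], Z[mid:]):
            heapq.heappush(open_sets, (lower_bound(part), next(tie), part))
    return best


# Hypothetical toy instance: choose at least two of four items.
FEASIBLE = [x for x in itertools.product((0, 1), repeat=4) if sum(x) >= 2]
C, C_HAT, GAMMA = [1, 2, 3, 4], [5, 1, 1, 0], 1


def nos(z, x):
    return GAMMA * z + sum((c + max(ch - z, 0)) * xi for c, ch, xi in zip(C, C_HAT, x))


def solve_subproblem(Z):
    # Exact v(ROB(Z)) by enumerating z in Z and all feasible x
    return min(nos(z, x) for z in Z for x in FEASIBLE)


def lower_bound(Z):
    # Valid for all z in [min Z, max Z]: gamma*z >= gamma*min Z and
    # (c_hat_i - z)^+ >= (c_hat_i - max Z)^+
    z_low, z_up = min(Z), max(Z)
    return min(GAMMA * z_low + sum((c + max(ch - z_up, 0)) * xi
                                   for c, ch, xi in zip(C, C_HAT, x)) for x in FEASIBLE)


print(branch_and_bound_over_z([0] + C_HAT, lower_bound, solve_subproblem,
                              small_enough=lambda Z: len(Z) <= 2))  # 6
```

Setting `small_enough` to `lambda Z: False` branches down to single values of z, which amounts to solving individual nominal subproblems; larger leaves trade fewer nodes for harder subproblems.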

Before doing so, we first establish some theoretical background in the following two sections that will be crucial for the design of our algorithm.

5 A reformulation using cliques in conflict graphs

In this section, we propose a stronger formulation for \(\text {ROB}\) that depends on so-called conflicts between variables and can also be used to solve the robust subproblems \(\text {ROB}\left( Z\right) \). We already considered the concept of extended formulations in Sect. 2. We now propose a reformulation that is likewise not in the original variable space but, unlike those, is not an extended formulation. To generalize the concept, we call a problem \(v'=\min \left\{ c'^{T}x'\big |x'\in {\mathscr {X}}'\right\} \) over a polyhedron \({\mathscr {P}}'\subseteq {\mathbb {R}}^{n_{1}'+n{}_{2}'}\) with \({\mathscr {P}}'\cap \left( {\mathbb {Z}}^{n_{1}'}\times {\mathbb {R}}^{n_{2}'}\right) ={\mathscr {X}}'\) a reformulation in a different variable space of \(v=\min \left\{ c^{T}x\big |x\in {\mathscr {X}}\right\} \) if both have the same optimal objective value, i.e., \(v=v'\), and there exists a polynomial-time computable, cost-preserving mapping \(\phi :{\mathscr {P}}'\rightarrow {\mathbb {R}}^{n_{1}+n{}_{2}}\) with \(\phi \left( {\mathscr {X}}'\right) \subseteq {\mathscr {X}}\). Then, instead of solving the original problem, we can solve the problem over \({\mathscr {X}}'\) and map an optimal solution \(x'\in {\mathscr {X}}'\) to an optimal solution \(\phi \left( x'\right) \in {\mathscr {X}}\). To generalize the concept of strong formulations, we say that \({\mathscr {P}}'_{1}\) is at least as strong as \({\mathscr {P}}'_{2}\) if \(\phi _{1}\left( {\mathscr {P}}'_{1}\right) \subseteq \phi _{2}\left( {\mathscr {P}}'_{2}\right) \) holds, where \(\phi _{1}\) and \(\phi _{2}\) are the mappings of the respective reformulations.

Here, we reformulate \(\text {ROB}\) in a different variable space by aggregating variables p in a tailored preprocessing step. Preprocessing routines, which aim to reduce the size and improve the strength of a given problem formulation, are key components of modern MILP solvers and critical to their performance [1, 3, 17]. One of these preprocessing routines involves the search for logical implications between binary variables, e.g., \(x_{i}\!=\!1 \!\!\Rightarrow x_{j}\!=\! 0\) for every solution x. These implications can be modeled within a so-called conflict graph, consisting of a node for every binary variable \(x_{i}\) and its complement \({\overline{x}}_{i}=\left( 1-x_{i}\right) \) [6]. There exists an edge between two nodes in the conflict graph if there exists no solution where the corresponding literals are both equal to one. Since every solution to the original problem corresponds to an independent set within the conflict graph, all valid inequalities for the independent set problem on the conflict graph are also valid for the original problem. An interesting type of valid inequalities are set-packing constraints, which are defined by cliques in the conflict graph, i.e., subsets of nodes forming a complete subgraph. For a clique \(\left\{ x_{i_{1}},\ldots ,x_{i_{q}}\right\} \cup \left\{ {\overline{x}}_{j_{1}},\ldots ,{\overline{x}}_{j_{{\overline{q}}}}\right\} \), at most one of the literals can be equal to one, which yields the corresponding set-packing constraint \(\sum _{k=1}^{q}x_{i_{k}}+\sum _{k=1}^{{\overline{q}}}\left( 1-x_{j_{k}}\right) \le 1\).
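One simple way to find conflicts between positive literals is to examine each linear constraint \(a^{T}x\le b\) over binary variables on its own. The Python sketch below is illustrative and rests on our own assumptions; it is not the heuristic from Appendix A, and the names `pairwise_conflicts` and `set_packing_ok` are hypothetical. It declares \(x_{i}\) and \(x_{j}\) conflicting if setting both to one violates the constraint even when all other variables take their activity-minimizing values, and it checks the set-packing constraint of a clique of literals.

```python
from itertools import combinations


def pairwise_conflicts(a, b):
    """Pairs (i, j) of positive literals that cannot both equal 1 in any
    binary x with sum_k a[k] * x[k] <= b.

    x_i = x_j = 1 is impossible iff even the smallest possible activity of
    the remaining variables (x_k = 1 for negative a_k, else x_k = 0) pushes
    the left-hand side above b.
    """
    neg_total = sum(min(ak, 0) for ak in a)  # minimal activity of all variables
    conflicts = set()
    for i, j in combinations(range(len(a)), 2):
        # minimal activity of all variables except i and j
        rest = neg_total - min(a[i], 0) - min(a[j], 0)
        if a[i] + a[j] + rest > b:
            conflicts.add((i, j))
    return conflicts


def set_packing_ok(x, positive, negative=()):
    """Set-packing constraint of a clique of literals:
    sum_{i in positive} x_i + sum_{j in negative} (1 - x_j) <= 1."""
    return sum(x[i] for i in positive) + sum(1 - x[j] for j in negative) <= 1


print(sorted(pairwise_conflicts([3, 4, 5, 1], 7)))  # [(0, 2), (1, 2)]
print(set_packing_ok([1, 0, 0, 1], positive=[0, 2]))  # True
```

Pairwise tests of this kind only detect conflicts implied by a single constraint, which is one reason why in practice only a subgraph of the full conflict graph is obtained.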

Here, we are less interested in adding set-packing constraints to our formulations, as they are already used in modern MILP solvers. Instead, we focus on the structural implications of set-packing constraints consisting of positive literals \(x_{i}\) on the variables p and the robustness constraints \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\). To ease notation, we call a subset \(Q\subseteq \left[ n\right] \) a clique if the variables \(\left\{ x_{i}\big |i\in Q\right\} \) form a clique in the conflict graph. The following proposition shows that we can use a partitioning \({\mathscr {Q}}\) of \(\left[ n\right] \) into cliques to obtain a stronger reformulation of \(\text {ROB}\) in a smaller variable space.

Proposition 2

Let \({\mathscr {Q}}\) be a partitioning of \(\left[ n\right] \) into cliques. Then the problem

over the formulation

is a reformulation in a different variable space of \(\text {ROB}\) that is at least as strong as \({\mathscr {P}}^{\text {ROB}}\).

Proof

First, note that if \(p'_{Q}=\sum _{i\in Q}p_{i}\) holds for all \(Q\in {\mathscr {Q}}\), then \(\left( x,p,z\right) \) and \(\left( x,p',z\right) \) have the same objective value for their respective problems. Hence, in order to show \(v\left( \text {ROB}\right) =v\left( \text {ROB}\left( {\mathscr {Q}}\right) \right) \), we construct corresponding solutions fulfilling this property.

Let \(\left( x,p,z\right) \) be a solution to \(\text {ROB}\) and consider \(\left( x,p',z\right) \) with \(p'\in {\mathbb {R}}_{\ge 0}^{{\mathscr {Q}}}\) such that \(p'_{Q}=\sum _{i\in Q}p_{i}\). For all cliques \(Q\in {\mathscr {Q}}\), we have \(\sum _{i\in Q}x_{i}\le 1\) and thus there exists an index \(j\in Q\) such that \(x_{i}=0\) for all \(i\in Q\backslash \left\{ j\right\} \). It follows

for all \(Q\in {\mathscr {Q}}\), proving \(\left( x,p',z\right) \in {\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \), and thus \(v\left( \text {ROB}\left( {\mathscr {Q}}\right) \right) \le v\left( \text {ROB}\right) \).

It remains to show that every \(\left( x,p',z\right) \in {\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{\left| {\mathscr {Q}}\right| +1}\right) \) has a corresponding solution \(\phi \left( x,p',z\right) \in {\mathscr {P}}^{\text {ROB}}\cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{n+1}\right) \) of the same cost. Note that such a mapping \(\phi :{\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \rightarrow {\mathbb {R}}^{2n+1}\) already implies \(v\left( \text {ROB}\left( {\mathscr {Q}}\right) \right) \ge v\left( \text {ROB}\right) \), and thus \(v\left( \text {ROB}\right) =v\left( \text {ROB}\left( {\mathscr {Q}}\right) \right) \). We define the image of \(\left( x,p',z\right) \in {\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \) as \(\phi \left( x,p',z\right) =\left( x,p,z\right) \) and consider two different cases for the definition of \(p\in {\mathbb {R}}^{n}\). For cliques \(Q\in {\mathscr {Q}}\) with \(\sum _{j\in Q}{\hat{c}}_{j}x_{j}>0\), we define \(p_{i}=\frac{{\hat{c}}_{i}x_{i}p'_{Q}}{\sum _{j\in Q}{\hat{c}}_{j}x_{j}}\) for all \(i\in Q\). Then \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\) holds, since we have

For cliques \(Q\in {\mathscr {Q}}\) with \(\sum _{j\in Q}{\hat{c}}_{j}x_{j}=0\), we can choose \(p_{i}\) arbitrarily as long as \(p'_{Q}=\sum _{j\in Q}p_{j}\), since \(p_{i}+z\ge 0={\hat{c}}_{i}x_{i}\) holds for any \(p_{i}\ge 0\). This shows not only that \(\phi \left( {\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{\left| {\mathscr {Q}}\right| +1}\right) \right) \subseteq {\mathscr {P}}^{\text {ROB}}\cap \left( {\mathbb {Z}}^{n}\times {\mathbb {R}}^{n+1}\right) \) holds, but also proves the strength of \(\text {ROB}\left( {\mathscr {Q}}\right) \), because we did not use the integrality of x and thus have \(\phi \left( {\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \right) \subseteq {\mathscr {P}}^{\text {ROB}}\).\(\square \)
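The mapping \(\phi \) from the proof can be sketched in Python as follows. The sketch assumes that the aggregated robustness constraints of \({\mathscr {P}}^{\text {ROB}}\left( {\mathscr {Q}}\right) \) read \(p'_{Q}+z\ge \sum _{i\in Q}{\hat{c}}_{i}x_{i}\), which is consistent with the argument above; the function names are hypothetical, and indices are 0-based.

```python
def disaggregate(x, chat, cliques, p_agg, z):
    """Map (x, p', z) to (x, p, z) as in the proof of Proposition 2.

    Assumption: the aggregated constraint of clique Q reads
    p'_Q + z >= sum_{i in Q} chat_i * x_i.
    """
    p = [0.0] * len(x)
    for Q, pQ in zip(cliques, p_agg):
        s = sum(chat[j] * x[j] for j in Q)
        if s > 0:
            # split p'_Q proportionally to chat_i * x_i
            for i in Q:
                p[i] = chat[i] * x[i] * pQ / s
        else:
            # every chat_i * x_i is 0, so any non-negative split works
            p[Q[0]] = pQ
    return p


def is_robust_feasible(x, p, z, chat, tol=1e-9):
    """Check p_i >= 0 and p_i + z >= chat_i * x_i for all i."""
    return all(pi >= -tol and pi + z >= ci * xi - tol
               for xi, pi, ci in zip(x, p, chat))


x = [0.5, 0.5, 1.0]            # fractional, clique {0, 1} has x_0 + x_1 <= 1
chat = [4.0, 2.0, 3.0]
cliques = [[0, 1], [2]]
z = 1.0
p_agg = [2.0, 2.0]             # smallest values with p'_Q + z >= sum chat_i x_i
p = disaggregate(x, chat, cliques, p_agg, z)
print(p)                                   # approximately [1.333, 0.667, 2.0]
print(is_robust_feasible(x, p, z, chat))   # True
```

The example deliberately uses a fractional x, in line with the observation that the construction does not rely on the integrality of x.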

Reconsider Example 1 from Sect. 2 to see that the reformulation \(\text {ROB}\left( {\mathscr {Q}}\right) \) is not merely at least as strong as \(\text {ROB}\) but can be strictly stronger. In the example, \(\left[ n\right] \) is a clique and we thus have

compared to \(v^{\text {R}}\left( \text {ROB}\right) =\frac{1}{n}\).

As mentioned in Sect. 3, this improvement of \(\text {ROB}\) can also be applied to \(\text {ROB}^{\text {S}}\left( Z\right) \). Given a clique partitioning \({\mathscr {Q}}\) of \(\left[ n\right] \), we obtain the stronger reformulation

over

Obviously, in order to obtain these strong reformulations, we first have to compute a conflict graph and a clique partitioning \({\mathscr {Q}}\) of \(\left[ n\right] \). Ideally, this partitioning consists of few cliques that are as large as possible. However, finding a partitioning of minimum cardinality is equivalent to computing a minimum clique cover, which was shown to be \({{\mathscr {N}}}{{\mathscr {P}}}\)–hard by Karp [28]. Moreover, building the whole conflict graph itself is also \({{\mathscr {N}}}{{\mathscr {P}}}\)–hard [18]. Consequently, we have to restrict ourselves to a subgraph of the whole conflict graph. If our algorithm were natively implemented in a MILP solver, we could use the conflict graph that is computed during the solver’s preprocessing without spending additional time searching for conflicts. Unfortunately, we cannot access the conflict graph in Gurobi [26], the solver we use for our implementation. Thus, we implement our own heuristics, which check for each constraint of the nominal problem whether it implies conflicts between variables. Afterwards, we use these conflicts to partition \(\left[ n\right] \) greedily into cliques. As the construction of conflict graphs and clique partitionings is not the focus of this paper, we refer to Appendix A for a detailed description of our implementation. For related work on the construction and handling of conflict graphs, we refer to Achterberg et al. [1], Atamtürk et al. [6], as well as Brito and Santos [18].
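A greedy partitioning of this kind can be sketched as follows. The degree-based ordering and the first-fit rule are our own illustrative choices and not necessarily those of Appendix A; `greedy_clique_partition` is a hypothetical name, and indices are 0-based. Since a singleton is trivially a clique, the result is always a valid partitioning of the index set.

```python
def greedy_clique_partition(n, edges):
    """Greedily partition {0, ..., n-1} into cliques of a conflict graph.

    Indices are processed by non-increasing degree; each index joins the
    first existing clique whose members are all adjacent to it, otherwise
    it opens a new singleton clique.
    """
    adj = [set() for _ in range(n)]
    for i, j in edges:
        adj[i].add(j)
        adj[j].add(i)
    order = sorted(range(n), key=lambda i: (-len(adj[i]), i))
    cliques = []
    for i in order:
        for clique in cliques:
            if all(j in adj[i] for j in clique):
                clique.append(i)
                break
        else:  # no existing clique fits
            cliques.append([i])
    return cliques


print(greedy_clique_partition(5, [(0, 1), (0, 2), (1, 2), (3, 4)]))
# [[0, 1, 2], [3, 4]]
```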

Note that our approach of aggregating constraints and variables depends on the variable z being shared by all constraints \(p_{i}+z\ge {\hat{c}}_{i}x_{i}\). For the mixed vertex packing problem, Atamtürk et al. [7] propose a similar approach for aggregating constraints that contain conflicting binary variables and a common continuous variable. Their mixed clique inequalities are analogous to our clique inequalities, and their strengthened star inequalities could be adapted to generalize them. For now, we leave this adaptation for future research and stick to clique inequalities based on clique partitionings, as we otherwise cannot benefit from the reduced number of variables. We will see in our computational study in Sect. 8 that using clique partitionings already yields a substantially stronger reformulation for many instances and improves the performance of our branch and bound algorithm. Before describing the branch and bound algorithm in detail in Sect. 7, we establish further theoretical background in the next section by characterizing optimal solutions of \(\text {ROB}\). Note that although we solve \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \) in practice, for the sake of simplicity, we mostly refer to the equivalent problem \(\text {ROB}\left( Z\right) \) in the remainder of this paper and only refer to \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \) when necessary, e.g., when considering its linear relaxation or the strength of the formulation \({\mathscr {P}}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \).

6 Characterization of optimal values for p and z

The central idea of our branch and bound algorithm for solving \(\text {ROB}\) is to restrict the value of z and to find an optimal corresponding nominal solution \(x\in {\mathscr {P}}^{\text {NOM}}\). In this section, however, we consider the opposite direction: given a nominal solution \(x\in {\mathscr {P}}^{\text {NOM}}\), what are the optimal values for p and z? The answer to this question deepens our understanding of the structural properties of \(\text {ROB}\) and is of practical use in several ways. First, we will generalize the result of Lee and Kwon [33], who showed for \(\varGamma \in {\mathbb {Z}}\) that there exists a subset \({\mathscr {Z}}\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) with \(\left| {\mathscr {Z}}\right| \le \left\lceil \frac{n-\varGamma }{2}\right\rceil +1\) containing an optimal choice for z. This reduction is relevant for our branch and bound algorithm, as we only have to consider subsets \(Z\subseteq {\mathscr {Z}}\). Second, given a choice of z, we will be able to restrict our search for a corresponding nominal solution \(x\in {\mathscr {P}}^{\text {NOM}}\) to those for which the chosen z is optimal. We will use this idea extensively within our branch and bound algorithm, especially in Sect. 7.1, where we describe further dual bounding strategies. Third, as we prove the characterization for (potentially fractional) solutions within \({\mathscr {P}}^{\text {BIL}}\), we can compute for any \(x\in {\mathscr {P}}^{\text {NOM}}\) the corresponding objective value for the optimization problem over \({\mathscr {P}}^{\text {BIL}}\). This provides an upper bound on the optimal objective value over \({\mathscr {P}}^{\text {BIL}}\), which we compare to \(v^{\text {R}}\left( \text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \right) \) in order to obtain an indicator of the strength of \({\mathscr {P}}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \). We use this indicator in our branch and bound algorithm to decide whether \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \) should be solved directly as an MILP or whether Z needs to be shrunk further, as explained in Sect. 7.5. The following theorem states the characterization of optimal values for p and z.

Theorem 2

Let \(x\in {\mathscr {P}}^{\text {NOM}}\) be a (fractional) solution to \(\text {NOM}\). We define

and

Together with \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) for \(i\in \left[ n\right] \), the values \(z\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \) are exactly the optimal values that satisfy \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\) and minimize \(\varGamma z+\sum _{i=1}^{n}p_{i}\).

For integer solutions \(x\in {\mathscr {P}}^{\text {NOM}}\), the theorem states that z should be large enough such that there are at most \(\varGamma \) indices \(i\in \left[ n\right] \) with \(x_{i}=1\) and \({\hat{c}}_{i}>z\). Otherwise, we could increase z while simultaneously decreasing \(p_{i}\) for more than \(\varGamma \) indices, leading to an improvement of the objective value. Conversely, z should be small enough such that there exist at least \(\varGamma \) indices \(i\in \left[ n\right] \) with \(x_{i}=1\) and \({\hat{c}}_{i}\ge z\). Otherwise, we could decrease z and would have to increase \(p_{i}\) for fewer than \(\varGamma \) indices, also improving the objective value. Obviously, if \(\varGamma \) is so large that \(\sum _{i\in \left[ n\right] }x_{i}<\varGamma \) holds, then we need to choose z as small as possible, i.e., \(z=0\).
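The criterion can be checked numerically on a small binary example. The Python sketch below uses hypothetical helper names; it evaluates \(\varGamma z+\sum _{i}\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\), i.e., the part of the objective that depends on z and p once \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) is chosen, on the candidate values \(\left\{ 0\right\} \cup \left\{ {\hat{c}}_{i}\right\} \), and compares the minimizers with the candidates that pass the counting criterion described above.

```python
def robust_cost(z, x, chat, gamma):
    """gamma*z + sum_i (chat_i - z)^+ x_i: the z- and p-dependent part of the
    objective once p_i = (chat_i - z)^+ x_i is chosen."""
    return gamma * z + sum(max(c - z, 0) * xi for c, xi in zip(chat, x))


def satisfies_criterion(z, x, chat, gamma):
    """For binary x: at most gamma selected indices with chat_i > z and,
    unless z = 0, at least gamma selected indices with chat_i >= z."""
    above = sum(xi for c, xi in zip(chat, x) if c > z)
    at_least = sum(xi for c, xi in zip(chat, x) if c >= z)
    return above <= gamma and (z == 0 or at_least >= gamma)


chat = [1, 2, 4, 7, 9]
x = [1, 1, 1, 1, 1]
gamma = 2
candidates = [0] + chat
costs = {z: robust_cost(z, x, chat, gamma) for z in candidates}
best = min(costs.values())
print([z for z in candidates if costs[z] == best])                        # [4, 7]
print([z for z in candidates if satisfies_criterion(z, x, chat, gamma)])  # [4, 7]
```

With `gamma = 1.5`, the same data leaves only the candidate 7 on both lists, in line with the later remark that the optimal interval collapses to a single point for \(\varGamma \notin {\mathbb {Z}}\).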

Before proving Theorem 2, we characterize the bounds \({\underline{z}}\left( x\right) \) and \({\overline{z}}\left( x\right) \) in an additional way. The proof of the following lemma can be found in Appendix B.

Lemma 2

For \(x\in {\mathbb {R}}^{n}\), we have

and

Using the above lemma, we are able to prove Theorem 2.

Proof of Theorem 2

First, note that the interval \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \) is well-defined, since \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>z}x_{i}\le \varGamma \) is a weaker requirement than \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>z}x_{i}<\varGamma \) and we thus have \({\underline{z}}\left( x\right) \le {\overline{z}}\left( x\right) \) by definition of \({\underline{z}}\left( x\right) \) and Eq. (4). Furthermore, \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) is optimal for a given x and z, as we minimize and have \(p_{i}\ge \left( {\hat{c}}_{i}-z\right) x_{i}\) and \(p_{i}\ge 0\) for all \(\left( x,p,z\right) \in {\mathscr {P}}^{\text {BIL}}\).

Now, let \(z\ge {\underline{z}}\left( x\right) \) and consider another value \(z'>z\) together with an appropriate \(p'\) such that \(\left( x,p',z'\right) \in {\mathscr {P}}^{\text {BIL}}\). By definition of \({\underline{z}}\left( x\right) \), it holds

and thus

Hence, the objective value is non-decreasing for \(z\ge {\underline{z}}\left( x\right) \). Moreover, for \({\overline{z}}\left( x\right) <\infty \), we even have \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>z}x_{i}<\varGamma \) in the case of \(z={\overline{z}}\left( x\right) \) by Eq. (4). Then \((*)\) is a strict inequality, and it follows that all choices \(z'>{\overline{z}}\left( x\right) \) are non-optimal.

Now, let \(z\le {\overline{z}}\left( x\right) \) and consider \(z'<z\). This implies \({\overline{z}}\left( x\right) >0\) and together with the definition of \({\overline{z}}\left( x\right) \), we obtain

It follows

Therefore, the objective value is non-increasing for \(z\le {\overline{z}}\left( x\right) \), which shows that all \(z\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \) are optimal. Furthermore, if \(z'<{\underline{z}}\left( x\right) \) holds, then we have \(0<{\underline{z}}\left( x\right) \) and thus \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}\ge {\underline{z}}\left( x\right) }x_{i}>\varGamma \) by Eq. (3). Then, for \(z={\underline{z}}\left( x\right) \), it follows that \((**)\) is again a strict inequality and all choices \(z'<{\underline{z}}\left( x\right) \) are non-optimal.\(\square \)

As already mentioned, Lee and Kwon [33] showed for \(\varGamma \in {\mathbb {Z}}\) that the number of different values for z to be considered can be reduced from \(n+1\) to \(\left\lceil \frac{n-\varGamma }{2}\right\rceil +1\). To see this, it is helpful to sort the deviations \({\hat{c}}_{i}\). Therefore, for the remainder of this paper, we assume without loss of generality that \({\hat{c}}_{0}\le \cdots \le {\hat{c}}_{n}\) holds. The first observation leading to the reduction of Lee and Kwon is that the values \(z\in \left\{ {\hat{c}}_{n+1-\varGamma },\ldots ,{\hat{c}}_{n}\right\} \) are no better than the value \(z={\hat{c}}_{n-\varGamma }\), i.e., \({\hat{c}}_{n-\varGamma }\ge {\underline{z}}\left( x\right) \) for all solutions \(x\in {\mathscr {P}}^{\text {NOM}}\). The second observation is that if the value \(z={\hat{c}}_{i}\) is optimal, then \(\left\{ {\hat{c}}_{i-1},{\hat{c}}_{i+1}\right\} \) also contains an optimal choice. In terms of Theorem 2: if \({\hat{c}}_{i}\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \) holds, then we also have \(\left\{ {\hat{c}}_{i-1},{\hat{c}}_{i+1}\right\} \cap \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \ne \emptyset \). Hence, \({\mathscr {Z}}=\left\{ {\hat{c}}_{0},{\hat{c}}_{2},{\hat{c}}_{4},\ldots ,{\hat{c}}_{n-\varGamma }\right\} \) contains an optimal choice for z. The following statement generalizes the first observation to \(\varGamma \in {\mathbb {R}}_{\ge 0}\). Furthermore, both observations are strengthened by using conflicts and a clique partitioning, which we already compute to obtain the strengthened formulations from Sect. 5, to reduce the set \({\mathscr {Z}}\).
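The reduction of Lee and Kwon can be checked by brute force on a small instance. The sketch below assumes \({\hat{c}}_{0}=0\) for the extra value in \(\left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \), integer \(\varGamma \), and binary x; the helper names are hypothetical. It builds the index set \(\left\{ 0,2,4,\ldots \right\} \cup \left\{ n-\varGamma \right\} \) and verifies for every \(x\in \left\{ 0,1\right\} ^{n}\) that minimizing over the reduced set yields the same value as minimizing over all \(n+1\) candidates.

```python
from itertools import product
from math import ceil


def lee_kwon_indices(n, gamma):
    """Indices {0, 2, 4, ...} up to n - gamma, plus n - gamma itself."""
    return sorted(set(range(0, n - gamma + 1, 2)) | {n - gamma})


def best_value(x, chat, gamma, indices):
    """min over z = chat[k], k in indices, of gamma*z + sum_i (chat_i - z)^+ x_i.
    chat[0] is the extra value chat_0; x refers to chat[1], ..., chat[n]."""
    return min(
        gamma * chat[k] + sum(max(c - chat[k], 0) * xi for c, xi in zip(chat[1:], x))
        for k in indices
    )


chat = [0, 1, 3, 4, 6, 8, 9]  # sorted; chat_0 = 0 is an assumption
n, gamma = len(chat) - 1, 2
reduced = lee_kwon_indices(n, gamma)
print(reduced, len(reduced) == ceil((n - gamma) / 2) + 1)  # [0, 2, 4] True
print(all(best_value(x, chat, gamma, reduced) == best_value(x, chat, gamma, range(n + 1))
          for x in product((0, 1), repeat=n)))  # True
```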

Proposition 3

Let \({\mathscr {Q}}\) be a partitioning of \(\left[ n\right] \) into cliques and \(q:\left[ n\right] \rightarrow {\mathscr {Q}}\) be the mapping that assigns to each index \(j\in \left[ n\right] \) the clique \(Q\in {\mathscr {Q}}\) with \(j\in Q\). For

it holds \({\hat{c}}_{i^{\max }}\ge {\underline{z}}\left( x\right) \) for all solutions \(x\in {\mathscr {P}}^{\text {NOM}}\cap \left\{ 0,1\right\} ^{n}\) and there exists an optimal solution \(\left( x,p,z\right) \) to \(\text {ROB}\) with \(z\in \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{i^{\max }}\right\} \).

Now, let \(G=\left( \left[ n\right] ,E\right) \) be a conflict graph for \(\text {ROB}\) and \(\varGamma \in {\mathbb {Z}}\). Furthermore, let \({\mathscr {Z}}\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{i^{\max }}\right\} \) such that \({\hat{c}}_{i^{\max }}\in {\mathscr {Z}}\) and for every \(i\in \left[ i^{\max }-1\right] _{0}\) one of the following holds:

- \({\hat{c}}_{i}\in {\mathscr {Z}}\), or
- there exists an index \(k<i\) with \({\hat{c}}_{k}\in {\mathscr {Z}}\) such that for all \(j\in \left\{ k+1,\ldots ,i-1\right\} \) there exists an edge \(\left\{ j,i\right\} \in E\) in the conflict graph G.

Then there exists an optimal solution \(\left( x,p,z\right) \) to \(\text {ROB}\) with \(z\in {\mathscr {Z}}\).

A proof of the above proposition and an algorithm for computing a set \({\mathscr {Z}}\) meeting the required criteria can be found in Appendix C. Note that the second part of the proposition only holds for \(\varGamma \in {\mathbb {Z}}\). This is because the statement relies on the fact that, for \({\hat{c}}_{i}\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \) and \(\varGamma \in {\mathbb {Z}}\), we also have \({\hat{c}}_{k}\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \). However, for \(\varGamma \notin {\mathbb {Z}}\), we always have \({\underline{z}}\left( x\right) ={\overline{z}}\left( x\right) \), which implies that \({\hat{c}}_{i}\) always needs to be contained in \({\mathscr {Z}}\).

After paving the way with the theoretical results of the previous sections, we now describe the components of our branch and bound algorithm in detail in the next section.

7 The branch and bound algorithm

In the following sections, we will describe our approach for computing dual and primal bounds, our pruning rules as well as our node selection and branching strategies. A summary of the components, merged into one algorithm, is given in Sect. 7.6. An overview of different strategies regarding the components of branch and bound algorithms is provided by Morrison et al. [37].

For the remainder of this paper, \({\mathscr {Z}}\subseteq \left\{ {\hat{c}}_{0},\ldots ,{\hat{c}}_{n}\right\} \) will be a set of possible values for z, as constructed by Algorithm 6 from Appendix C. To ease notation, we will refer to the considered subsets \(Z\subseteq {\mathscr {Z}}\) as nodes in a rooted branching tree, where \({\mathscr {Z}}\) is the root node and \(Z'\) is a child node of Z if it emerges directly from Z via branching. Furthermore, we denote by \({\mathscr {N}}\subseteq 2^{{\mathscr {Z}}}\) the set of active nodes, i.e., the leaves of our branching tree that have not yet been pruned and are still to be considered.

7.1 Dual bounding

The focus of this paper is primarily on the computation of strong dual bounds \({\underline{v}}\left( Z\right) \). We already paved the way for these in the previous sections by introducing the strong reformulation \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) ,\) yielding dual bounds \({\underline{v}}\left( Z\right) =v^{\text {R}}\left( \text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \right) \). In the following, we show that we can obtain even better bounds by restricting ourselves to solutions fulfilling the optimality criterion in Theorem 2.

7.1.1 Deriving dual bounds from \(\text {ROB}\left( Z\right) \)

Imagine that we have just solved a robust subproblem \(\text {ROB}\left( Z\right) \), using the equivalent problem \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \), and observed that the optimal objective value \(v\left( \text {ROB}\left( Z\right) \right) \) is significantly higher than the current primal bound \({\overline{v}}\). Furthermore, imagine that there exists a yet-to-be-considered value \(z'\) in an active node \(Z'\in {\mathscr {N}}\) that is very close to one of the just considered values \(z\in Z\). Note that the objective function \(\varGamma z+\sum _{i=1}^{n}\left( c_{i}+\left( {\hat{c}}_{i}-z\right) ^{+}\right) x_{i}\) of the nominal subproblem \(\text {NOS}\left( z\right) \), which arises from fixing z, differs only slightly from that of the nominal subproblem \(\text {NOS}\left( z'\right) \). This suggests that the objective value \(v\left( \text {NOS}\left( z'\right) \right) \) is probably not too far from \(v\left( \text {NOS}\left( z\right) \right) \). Since \(v\left( \text {ROB}\left( Z\right) \right) \) is higher than \({\overline{v}}\) and also a dual bound on \(v\left( \text {NOS}\left( z\right) \right) \), we might be able to prune \(z'\) without considering \(\text {ROB}\left( Z'\right) \) if we are able to carry over some information from \(\text {NOS}\left( z\right) \) to \(\text {NOS}\left( z'\right) \). In fact, Hansknecht et al. [27] showed that there exists a relation between the optimal objective values \(v\left( \text {NOS}\left( z\right) \right) \) for different values z.

Lemma 3

[27] For \(z'\le z\), it holds \(v\left( \text {NOS}\left( z'\right) \right) \ge v\left( \text {NOS}\left( z\right) \right) -\varGamma \left( z-z'\right) \).

Proof

The objective function \(\varGamma z+\sum _{i=1}^{n}\left( c_{i}+\left( {\hat{c}}_{i}-z\right) ^{+}\right) x_{i}\) of \(\text {NOS}\left( z\right) \) is non-increasing in z when omitting the constant term \(\varGamma z\). This implies \(v\left( \text {NOS}\left( z'\right) \right) -\varGamma z'\ge v\left( \text {NOS}\left( z\right) \right) -\varGamma z\), which proves the statement.\(\square \)
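Lemma 3 can be sanity-checked by enumeration on a toy instance. In the Python sketch below, the instance (choose exactly two of four items), the value \(\varGamma =1\) and all helper names are hypothetical. Besides Lemma 3, it checks the well-known fact that minimizing \(v\left( \text {NOS}\left( z\right) \right) \) over \(z\in \left\{ 0\right\} \cup \left\{ {\hat{c}}_{i}\right\} \) recovers the robust optimum, where for integer \(\varGamma \) the robust cost of a binary x is its nominal cost plus its \(\varGamma \) largest deviations.

```python
from itertools import product


def nos_value(z, c, chat, gamma, feasible):
    """v(NOS(z)) = min over feasible x of gamma*z + sum_i (c_i + (chat_i - z)^+) x_i."""
    return min(
        gamma * z + sum((ci + max(hi - z, 0)) * xi for ci, hi, xi in zip(c, chat, x))
        for x in feasible
    )


def robust_value(x, c, chat, gamma):
    """Nominal cost plus the gamma largest deviations of the selected items
    (integer gamma)."""
    devs = sorted((hi * xi for hi, xi in zip(chat, x)), reverse=True)
    return sum(ci * xi for ci, xi in zip(c, x)) + sum(devs[:gamma])


# Hypothetical toy instance: choose exactly two of four items.
c, chat, gamma = [2, 3, 1, 4], [5, 1, 4, 2], 1
feasible = [x for x in product((0, 1), repeat=4) if sum(x) == 2]
zs = sorted({0, *chat})
v = {z: nos_value(z, c, chat, gamma, feasible) for z in zs}

# Lemma 3: v(NOS(z')) >= v(NOS(z)) - gamma * (z - z') for all z' <= z
print(all(v[zp] >= v[z] - gamma * (z - zp) for z in zs for zp in zs if zp <= z))  # True
# minimizing over the candidate values of z recovers the robust optimum
print(min(v.values()) == min(robust_value(x, c, chat, gamma) for x in feasible))  # True
```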

Accordingly, in addition to the dual bound \({\underline{v}}\left( Z'\right) \) for a node \(Z'\in {\mathscr {N}}\), we can also maintain individual dual bounds \({\underline{v}}\left( z'\right) \) on the optimal objective value \(v\left( \text {NOS}\left( z'\right) \right) \) with \({\underline{v}}\left( z'\right) =v\left( \text {ROB}\left( Z\right) \right) -\varGamma \left( {\underline{z}}-z'\right) \) for \(z'<{\underline{z}}\) after solving \(\text {ROB}\left( Z\right) \). The dual bound for a node \(Z'\) is then the combination of the linear relaxation value \(v^{\text {R}}\left( \text {ROB}^{\text {S}}\left( Z',{\mathscr {Q}}\right) \right) \) and the minimum of all individual bounds \(\min \left\{ {\underline{v}}\left( z'\right) \big |z'\in Z'\right\} \), i.e., we have

While this already strengthens the dual bounds in our branch and bound algorithm, we can improve on the results of Hansknecht et al. even further by using the optimality criterion from Theorem 2 and the clique partitioning \({\mathscr {Q}}\) from Sect. 5. Since we are solely interested in optimal solutions to \(\text {ROB}\), it suffices to consider only solutions to \(\text {NOS}\left( z'\right) \) that fulfill the optimality criterion, i.e., solutions \(x'\) with \(z'\in \left[ {\underline{z}}\left( x'\right) ,{\overline{z}}\left( x'\right) \right] \). If an optimal solution to \(\text {NOS}\left( z'\right) \) does not fulfill this property, then \(z'\) is not an optimal choice in the first place and can therefore be pruned. Accordingly, we establish an improved bound that is not a dual bound on \(v\left( \text {NOS}\left( z'\right) \right) \), but a dual bound on the objective value of all solutions to \(\text {NOS}\left( z'\right) \) fulfilling the optimality criterion.

Let \(x'\) be such a solution to \(\text {NOS}\left( z'\right) \) with objective value \(v'\). Note that \(x'\) is also a feasible solution to \(\text {NOS}\left( z\right) \) and let \(v\ge v\left( \text {NOS}\left( z\right) \right) \) be the corresponding objective value. For \(z'<z\), the value \(v'\) is decreased by \(\delta ^{\text {dec}}=\varGamma \left( z-z'\right) \) compared to \(v\), but increased by \(\delta ^{\text {inc}}=\sum _{i=1}^{n}\left( \left( {\hat{c}}_{i}-z'\right) ^{+}-\left( {\hat{c}}_{i}-z\right) ^{+}\right) x_{i}'\). This yields the estimation

on the objective value \(v'\). Note that the decrease by \(\delta ^{\text {dec}}\) is taken into account in the estimation of Lemma 3, but the increase \(\delta ^{\text {inc}}\) is not. Obviously, \(\delta ^{\text {inc}}\) can be zero if we have \(x_{i}'=0\) for all \(i\in \left[ n\right] \) with \({\hat{c}}_{i}>z'\). However, if \(x'\) fulfills the optimality criterion then we know from Theorem 2 that there exist at least \(\varGamma \) indices with \({\hat{c}}_{i}\ge z'\) and \(x_{i}'=1\). Assuming that there do not exist \(\varGamma \) indices with \({\hat{c}}_{i}=z'\), there must exist at least one \(i\in \left[ n\right] \) with \({\hat{c}}_{i}>z'\) and \(x_{i}'=1\), yielding a positive lower bound on \(\delta ^{\text {inc}}\). Taking conflicts between variables \(x_{i}\) into account, we might even deduce that there must exist some indices with \(x_{i}'=1\) and very high \({\hat{c}}_{i}\), which improves the bound on \(\delta ^{\text {inc}}\).
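Written out term by term, the estimation follows directly from the definitions of \(\delta ^{\text {dec}}\) and \(\delta ^{\text {inc}}\); the derivation below is reconstructed from these definitions:

```latex
\begin{aligned}
v' &= \varGamma z' + \sum_{i=1}^{n}\left(c_{i} + \left(\hat{c}_{i} - z'\right)^{+}\right) x_{i}' \\
   &= \underbrace{\varGamma z + \sum_{i=1}^{n}\left(c_{i} + \left(\hat{c}_{i} - z\right)^{+}\right) x_{i}'}_{=\,v}
      \;-\; \underbrace{\varGamma\left(z - z'\right)}_{=\,\delta^{\text{dec}}}
      \;+\; \underbrace{\sum_{i=1}^{n}\left(\left(\hat{c}_{i} - z'\right)^{+} - \left(\hat{c}_{i} - z\right)^{+}\right) x_{i}'}_{=\,\delta^{\text{inc}}} \\
   &\ge v\left(\text{NOS}\left(z\right)\right) - \delta^{\text{dec}} + \delta^{\text{inc}}.
\end{aligned}
```

The last step only uses \(v\ge v\left( \text {NOS}\left( z\right) \right) \), so the identity in the second line holds for \(z'<z\) and \(z'>z\) alike.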

Note that for \(z'>z\), Lemma 3 provides no bound on \(\text {NOS}\left( z'\right) \), although we can apply similar arguments to this case. Observe that Inequality (5) still holds, with \(\delta ^{\text {inc}}\le 0\) and \(\delta ^{\text {dec}}<0\). Unfortunately, if we have \(x_{i}'=1\) for all \(i\in \left[ n\right] \) with \({\hat{c}}_{i}>z\), then \(\delta ^{\text {inc}}<0\) might have a large absolute value, leading to a weak estimation. However, if \(x'\) fulfills the optimality criterion, then we know from Theorem 2 that there exist at most \(\varGamma \) indices with \({\hat{c}}_{i}>z'\) and \(x_{i}'=1\). From this, we can again deduce a lower bound on \(\delta ^{\text {inc}}\), which can also be improved by taking conflicts between variables \(x_{i}\) into account.

Theorem 3

Let \({\mathscr {Q}}\) be a partitioning of \(\left[ n\right] \) into cliques, \(z,z'\in {\mathbb {R}}_{\ge 0}\), and \(x'\) be an arbitrary solution to \(\text {NOS}\left( z'\right) \) of value \(v'\) that satisfies \(z'\in \left[ {\underline{z}}\left( x'\right) ,{\overline{z}}\left( x'\right) \right] \). Then we have \(v'\ge v\left( \text {NOS}\left( z\right) \right) -\delta _{z}\left( z'\right) \), where the estimator \(\delta _{z}\left( z'\right) \) is defined as

Proof

For \(z'=0\), the statement follows from Lemma 3. Otherwise, we obtain an estimation

as in Inequality (5), by considering the difference in the objectives of \(\text {NOS}\left( z'\right) \) and \(\text {NOS}\left( z\right) \). Consider the case \(z'>z\). Since \(z'\ge {\underline{z}}\left( x'\right) \) holds, it follows from the definition of \({\underline{z}}\left( x'\right) \) in Theorem 2 that we have

We obtain

where the last equality holds since \({\mathscr {Q}}\) is a partitioning of \(\left[ n\right] \).

Now, let \(0<z'<z\). Since \(z'\le {\overline{z}}\left( x'\right) \) holds, Theorem 2 implies

We obtain

which concludes the proof.\(\square \)

The above statement now enables us not only to compute bounds for \(z'>z\), but also stronger bounds for \(0<z'<z\). Note that for \(z'=0\), we have to use the dual bound from Lemma 3, since Theorem 2 provides no statement on the required structure of \(x'\) in this case.

In our branch and bound algorithm, we use the estimators \(\delta _{{\underline{z}}}\left( z'\right) \) for all \(z'<{\underline{z}}\) and \(\delta _{{\overline{z}}}\left( z'\right) \) for \(z'>{\overline{z}}\) after solving \(\text {ROB}\left( Z\right) \). Accordingly, we define for \(Z\subseteq {\mathscr {Z}}\) the estimators

The improved bounds \(v\left( \text {ROB}\left( Z\right) \right) -\delta _{Z}\left( z'\right) \) come at the cost of a higher computational effort compared to the bounds from Lemma 3. However, the additional overhead is marginal, as we can solve the involved maximization problems in linear time and compute all estimators \(\delta _{{\underline{z}}}\left( z'\right) \) (respectively, \(\delta _{{\overline{z}}}\left( z'\right) \)) simultaneously. Algorithm 2 describes our approach for computing the estimators for a set \({\mathscr {Z}}'\subseteq {\mathscr {Z}}\) of remaining values \(z'\).

We first compute \(\delta _{{\overline{z}}}\left( z'\right) \) for \(z'\in \left\{ z'\in {\mathscr {Z}}'\big |z'>{\overline{z}}\right\} \) (lines 1 to 9). For computing \(\delta _{{\overline{z}}}\left( z'_{j}\right) \), we consider all deviations \({\hat{c}}_{k}\) with \({\overline{z}}<{\hat{c}}_{k}\le z'_{j}\) (line 4) and add the corresponding value \({\hat{c}}_{k}-{\overline{z}}\) (line 8). Furthermore, we mark the clique \(q\left( k\right) \) containing k as considered by adding it to the set \({\mathscr {Q}}'\), and we associate the clique \(q\left( k\right) \) with the index k by maintaining a mapping \(q^{-1}:{\mathscr {Q}}'\rightarrow \left[ n\right] \) (line 7). However, if \(q\left( k\right) \) is already contained in \({\mathscr {Q}}'\), then we have considered an index \(k'=q^{-1}\left( q\left( k\right) \right) \) with \(q\left( k\right) =q\left( k'\right) \) before k and counted the value \({\hat{c}}_{k'}-{\overline{z}}\) towards \(\delta _{{\overline{z}}}\left( z'\right) \). Hence, either \({\hat{c}}_{k}-{\overline{z}}\) or \({\hat{c}}_{k'}-{\overline{z}}\) has to be subtracted, as we only count the highest value per clique. Since we iterate over the deviations in non-decreasing order, we have \({\hat{c}}_{k}-{\overline{z}}\ge {\hat{c}}_{k'}-{\overline{z}}\), which is why we subtract \({\hat{c}}_{k'}-{\overline{z}}\) (line 6). Note that we do not have to consider all values \(\left\{ {\hat{c}}_{k}\big |{\overline{z}}<{\hat{c}}_{k}\le z'_{j}\right\} \) for computing \(\delta _{{\overline{z}}}\left( z'_{j}\right) \) if we already considered the values \(\left\{ {\hat{c}}_{k}\big |{\overline{z}}<{\hat{c}}_{k}\le z'_{j-1}\right\} \) for \(\delta _{{\overline{z}}}\left( z'_{j-1}\right) \). Instead, we construct \(\delta _{{\overline{z}}}\left( z'_{j}\right) \) on the basis of \(\delta _{{\overline{z}}}\left( z'_{j-1}\right) \) and only iterate over \(\left\{ {\hat{c}}_{k}\big |z'_{j-1}<{\hat{c}}_{k}\le z'_{j}\right\} \).
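The sweep for \(z'>{\overline{z}}\) can be sketched in Python as follows. This is our reading of the prose above rather than a transcription of Algorithm 2: the function name is hypothetical, cliques are given as ids via `clique_of` (playing the role of q), and the accumulated quantity is assumed to be the sum over cliques of the largest \({\hat{c}}_{k}-{\overline{z}}\) with \({\overline{z}}<{\hat{c}}_{k}\le z'\). Each deviation is visited once across all targets, so the work after sorting is linear.

```python
def delta_upper(chat, clique_of, z_bar, targets):
    """Incremental sweep for the targets z' > z_bar.

    For each target z' (processed in increasing order), returns the sum over
    cliques of the largest value chat_k - z_bar with z_bar < chat_k <= z'.
    """
    order = sorted((k for k in range(len(chat)) if chat[k] > z_bar),
                   key=lambda k: chat[k])
    counted = {}  # clique id -> index currently counted for it (the map q^{-1})
    total, pos, result = 0, 0, {}
    for zp in sorted(targets):
        # only visit deviations in (previous target, zp]
        while pos < len(order) and chat[order[pos]] <= zp:
            k = order[pos]
            q = clique_of[k]
            if q in counted:  # clique already counted: drop its smaller value
                total -= chat[counted[q]] - z_bar
            total += chat[k] - z_bar
            counted[q] = k
            pos += 1
        result[zp] = total
    return result


chat = [1, 3, 4, 6, 7, 9]
clique_of = [0, 0, 1, 1, 2, 0]
print(delta_upper(chat, clique_of, 2, [3, 6, 9]))  # {3: 1, 6: 5, 9: 16}
```

A brute-force evaluation of the per-clique maxima for each target yields the same values, which makes a convenient test for an implementation.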

The computation of \(\delta _{{\underline{z}}}\left( z'\right) \) for \(z'\in \left\{ z'\in {\mathscr {Z}}'\big |z'<{\underline{z}}\right\} \) is almost analogous (lines 10 to 24). The difference here is that we only consider up to \(\varGamma \) values \({\underline{z}}-{\hat{c}}_{k}\). Hence, we not only maintain the set \({\mathscr {Q}}'\) and the mapping \(q^{-1}\), but also a list L containing the indices of the currently added values \({\underline{z}}-{\hat{c}}_{k}\). The list is updated every time we subtract (line 18) or add (line 20) a value \({\underline{z}}-{\hat{c}}_{k}\). Furthermore, since we iterate in reverse order over \(\left\{ {\hat{c}}_{k}\big |z'_{j}\le {\hat{c}}_{k}<{\underline{z}}\right\} \), the list is sorted in non-decreasing order with respect to \({\underline{z}}-{\hat{c}}_{k}\). Hence, before assigning \(\delta _{{\underline{z}}}\left( {\hat{c}}_{i_{j}}\right) \), we check whether L contains more than \(\varGamma \) elements and, if necessary, remove the first \(\left| L\right| -\varGamma \) indices together with their values \({\underline{z}}-{\hat{c}}_{k}\) and their cliques \(q\left( k\right) \) (lines 21 to 23).

7.1.2 Optimality-cuts

Consider a node \(Z\subseteq {\mathscr {Z}}\) of our branching tree and assume that \(\left( x,p,z\right) \) is a solution to \(\text {ROB}\left( Z\right) \) with \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap Z=\emptyset \). We know from Theorem 2 that there is no need to consider x for the subset Z: if x is part of a globally optimal solution, then there is a different set \(Z'\subseteq {\mathscr {Z}}\) with \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap Z'\ne \emptyset \). Nevertheless, \(\left( x,p,z\right) \) may be an optimal solution to \(\text {ROB}\left( Z\right) \), resulting in an unnecessarily weak dual bound \({\underline{v}}\left( Z\right) \). Using the following theorem, we can strengthen our formulations such that we only consider solutions \(\left( x,p,z\right) \) with \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap Z\ne \emptyset \), and thus raise the dual bound \({\underline{v}}\left( Z\right) \).

Theorem 4

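Let \(x\in {\mathscr {P}}^{\text {NOM}}\cap \left\{ 0,1\right\} ^{n}\) be a solution to \(\text {NOM}\) and \(\underline{\text {c}}\le \overline{\text {c}}\) bounds on z. Then \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap \left[ \underline{\text {c}},\overline{\text {c}}\right] \ne \emptyset \) holds if and only if x satisfies

\[\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>\overline{\text {c}}}x_{i}\le \left\lfloor \varGamma \right\rfloor \tag{6}\]

and, in the case of \(\underline{\text {c}}>0\), also

\[\sum _{i\in \left[ n\right] :{\hat{c}}_{i}\ge \underline{\text {c}}}x_{i}\ge \left\lceil \varGamma \right\rceil . \tag{7}\]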

Proof

We have \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap \left[ \underline{\text {c}},\overline{\text {c}}\right] \ne \emptyset \) if and only if \({\underline{z}}\left( x\right) \le \overline{\text {c}}\) and \(\underline{\text {c}}\le {\overline{z}}\left( x\right) \) hold. We first show that \({\underline{z}}\left( x\right) \le \overline{\text {c}}\) holds if and only if x fulfills Inequality (6). We know from Theorem 2 that \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>{\underline{z}}\left( x\right) }x_{i}\le \varGamma \). Then \({\underline{z}}\left( x\right) \le \overline{\text {c}}\) implies

\[\sum _{i\in \left[ n\right] :{\hat{c}}_{i}>\overline{\text {c}}}x_{i}\le \sum _{i\in \left[ n\right] :{\hat{c}}_{i}>{\underline{z}}\left( x\right) }x_{i}\le \varGamma \]

and thus Inequality (6), since x is binary. Conversely, x cannot fulfill Inequality (6) if \(\overline{\text {c}}<{\underline{z}}\left( x\right) \), as this would contradict the minimality in the definition of \({\underline{z}}\left( x\right) \).

Clearly, \(\underline{\text {c}}\le {\overline{z}}\left( x\right) \) holds if \(\underline{\text {c}}=0\). Hence, it remains to show that for \(0<\underline{\text {c}}\), we have \(\underline{\text {c}}\le {\overline{z}}\left( x\right) \) if and only if x fulfills Inequality (7). We know from Theorem 2 that \(\sum _{i\in \left[ n\right] :{\hat{c}}_{i}\ge {\overline{z}}\left( x\right) }x_{i}\ge \varGamma \) holds. Then \(\underline{\text {c}}\le {\overline{z}}\left( x\right) \) implies

\[\sum _{i\in \left[ n\right] :{\hat{c}}_{i}\ge \underline{\text {c}}}x_{i}\ge \sum _{i\in \left[ n\right] :{\hat{c}}_{i}\ge {\overline{z}}\left( x\right) }x_{i}\ge \varGamma \]

and thus Inequality (7), again since x is binary. Conversely, x cannot fulfill Inequality (7) if \({\overline{z}}\left( x\right) <\underline{\text {c}}\) holds, as this would contradict the maximality in the definition of \({\overline{z}}\left( x\right) \).\(\square \)
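The equivalence in Theorem 4 can be checked numerically on small instances with the following sketch. It rests on assumptions of ours: \({\underline{z}}\left( x\right) \) and \({\overline{z}}\left( x\right) \) are taken as the smallest and largest minimizers over \(z\ge 0\) of \(\varGamma z+\sum _{i\in \left[ n\right] }\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\), with \(\varGamma >0\) and \({\hat{c}}\ge 0\), and the cuts are read as \(\sum _{i:{\hat{c}}_{i}>\overline{\text {c}}}x_{i}\le \left\lfloor \varGamma \right\rfloor \) and, for \(\underline{\text {c}}>0\), \(\sum _{i:{\hat{c}}_{i}\ge \underline{\text {c}}}x_{i}\ge \left\lceil \varGamma \right\rceil \). The helper names `z_range` and `satisfies_optimality_cuts` are hypothetical.

```python
import math
from fractions import Fraction
from typing import Sequence, Tuple


def z_range(x: Sequence[int], c_hat: Sequence[int], gamma: Fraction) -> Tuple[int, int]:
    """Smallest and largest minimizer z >= 0 of gamma*z + sum_i max(c_hat[i] - z, 0)*x[i].

    Assumed reading of (z_lower(x), z_upper(x)); requires gamma > 0 and c_hat >= 0.
    """
    d = sorted((c for c, xi in zip(c_hat, x) if xi), reverse=True)
    lo, hi = math.floor(gamma), math.ceil(gamma)
    # At most floor(gamma) selected deviations may exceed z_lower(x) ...
    z_lower = d[lo] if len(d) > lo else 0
    # ... and at least ceil(gamma) selected deviations must reach z_upper(x).
    z_upper = d[hi - 1] if len(d) >= hi else 0
    return z_lower, z_upper


def satisfies_optimality_cuts(x: Sequence[int], c_hat: Sequence[int], gamma: Fraction,
                              c_low: Fraction, c_up: Fraction) -> bool:
    """Check Inequality (6), and Inequality (7) when c_low > 0 (floor/ceil form assumed)."""
    if sum(xi for c, xi in zip(c_hat, x) if c > c_up) > math.floor(gamma):
        return False
    if c_low > 0 and sum(xi for c, xi in zip(c_hat, x) if c >= c_low) < math.ceil(gamma):
        return False
    return True
```

Exact `Fraction` arithmetic avoids rounding issues when \(\varGamma \) is fractional, which is exactly the case where the floor and ceiling in the cuts matter.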

In our branch and bound algorithm, we add the above Inequalities (6) and (7), with \(\underline{\text {c}}={\underline{z}}\) and \(\overline{\text {c}}={\overline{z}}\), as optimality-cuts to the formulation \({\mathscr {P}}^{\text {S}}\left( Z,{\mathscr {Q}}\right) \) when solving the corresponding linear problem. However, the optimality-cuts can cause several problems when added to a robust subproblem \(\text {ROB}\left( Z\right) \), especially with respect to the dual bounds of the last section. Let \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) be the corresponding problem with added optimality-cuts for bounds \(\underline{\text {c}}\le {\underline{z}}\) and \({\overline{z}}\le \overline{\text {c}}\). Note that in the proof of Theorem 3, we require \(x'\), the solution to \(\text {NOS}\left( z'\right) \), to be feasible for \(\text {NOS}\left( z\right) \) in order to show that \(v\left( \text {NOS}\left( z\right) \right) -\delta _{z}\left( z'\right) \) is a dual bound. Analogously, we require \(x'\) to be a feasible solution to \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) in order to derive a dual bound from \(v\left( \text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \right) \). That is, if \(x'\) violates the optimality-cuts, then we cannot derive any dual bound from \(v\left( \text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \right) \). However, if \(\left[ {\underline{z}}\left( x'\right) ,{\overline{z}}\left( x'\right) \right] \cap \left[ \underline{\text {c}},\overline{\text {c}}\right] \ne \emptyset \) holds, then \(x'\) is, by Theorem 4, a feasible solution to \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \), which leads to the following generalization of Theorem 3.

Corollary 1

Let \(Z\subseteq {\mathbb {R}}_{\ge 0}\) and \(\underline{\text {c}}\le \overline{\text {c}}\) with \(Z\subseteq \left[ \underline{\text {c}},\overline{\text {c}}\right] \). Furthermore, let \(z'\in \left[ \underline{\text {c}},\overline{\text {c}}\right] \) and \(x'\) be an arbitrary solution to \(\text {NOS}\left( z'\right) \) of value \(v'\) satisfying \(z'\in \left[ {\underline{z}}\left( x'\right) ,{\overline{z}}\left( x'\right) \right] \). Then \(v'\ge v\left( \text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \right) -\delta _{Z}\left( z'\right) \) holds.
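Corollary 1 suggests a simple bookkeeping step, sketched below under our own assumptions: a dictionary `dual` (hypothetical) stores, for each candidate value \(z'\), the best lower bound derived so far; a solved subproblem \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) only contributes to values inside \(\left[ \underline{\text {c}},\overline{\text {c}}\right] \); and the values \(\delta _{Z}\left( z'\right) \) are taken as given input rather than computed here.

```python
from typing import Dict, Mapping


def update_dual_bounds(dual: Dict[float, float], v_rob: float,
                       delta_Z: Mapping[float, float],
                       c_low: float, c_up: float) -> None:
    """Apply Corollary 1: for z' in [c_low, c_up], v(ROB(Z, c_low, c_up)) - delta_Z(z')
    bounds from below every solution for which z' is an optimal choice.
    Keep the best (largest) bound seen so far for each z'."""
    for z, d in delta_Z.items():
        if c_low <= z <= c_up:  # outside the cut interval no bound can be derived
            dual[z] = max(dual.get(z, float("-inf")), v_rob - d)
```

For example, a subproblem with value 10 and \(\delta _{Z}\left( 1\right) =2\) yields the bound 8 for \(z'=1\), provided that \(1\in \left[ \underline{\text {c}},\overline{\text {c}}\right] \).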

Accordingly, there is a trade-off in the choice of \(\underline{\text {c}},\overline{\text {c}}\). On the one hand, the optimal objective value \(v\left( \text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \right) \), and thus the derived dual bounds for other \(z'\in \left[ \underline{\text {c}},\overline{\text {c}}\right] \), increase if the bounds \(\underline{\text {c}},\overline{\text {c}}\) are close together. On the other hand, we want to derive dual bounds for as many \(z'\) as possible. Furthermore, the optimality-cuts can hinder the search for good primal bounds. We resolve this trade-off by adding loose optimality-cuts, corresponding to wide bounds \(\underline{\text {c}},\overline{\text {c}}\), in the beginning and gradually strengthening them as we consider more robust subproblems.

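Let \({\mathscr {Z}}^{*}\subseteq {\mathscr {Z}}\) be the union of all nodes \(Z^{*}\subseteq {\mathscr {Z}}\) for which we have already solved a robust subproblem \(\text {ROB}\left( Z^{*},\underline{\text {c}}^{*},\overline{\text {c}}^{*}\right) \), and let \({\mathscr {Z}}'=\bigcup _{Z\in {\mathscr {N}}}Z\) be the union of all active nodes. In our branch and bound algorithm, for a node \(Z\in {\mathscr {N}}\), we choose \(\underline{\text {c}},\overline{\text {c}}\in {\mathscr {Z}}'\) as wide as possible around Z such that there exists no \(z^{*}\in {\mathscr {Z}}^{*}\) in between, i.e.,

\[\underline{\text {c}}=\min \left\{ z'\in {\mathscr {Z}}'\,\big |\,z'\le {\underline{z}},\ \nexists z^{*}\in {\mathscr {Z}}^{*}:z'<z^{*}<{\underline{z}}\right\} \]

and

\[\overline{\text {c}}=\max \left\{ z'\in {\mathscr {Z}}'\,\big |\,z'\ge {\overline{z}},\ \nexists z^{*}\in {\mathscr {Z}}^{*}:{\overline{z}}<z^{*}<z'\right\} .\]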

In order to see that it is not reasonable to expand the interval \(\left[ \underline{\text {c}},\overline{\text {c}}\right] \), consider a value \(z'\in {\mathscr {Z}}'\backslash \left[ \underline{\text {c}},\overline{\text {c}}\right] \). By definition, we have already considered a subproblem \(\text {ROB}\left( Z^{*},\underline{\text {c}}^{*},\overline{\text {c}}^{*}\right) \) with \(z'\in \left[ \underline{\text {c}}^{*},\overline{\text {c}}^{*}\right] \) for a node \(Z^{*}\) containing a value \(z^{*}\) with \(z'<z^{*}<{\underline{z}}\) or \({\overline{z}}<z^{*}<z'\). Since \(\delta _{Z^{*}}\left( z'\right) \le \delta _{z^{*}}\left( z'\right) <\delta _{Z}\left( z'\right) \) holds, we have already computed a dual bound \({\underline{v}}\left( z'\right) =v\left( \text {ROB}\left( Z^{*},\underline{\text {c}}^{*},\overline{\text {c}}^{*}\right) \right) -\delta _{Z^{*}}\left( z'\right) \) that is likely better than a potential dual bound derived from \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \). Thus, expanding \(\left[ \underline{\text {c}},\overline{\text {c}}\right] \) tends to be useless for obtaining new dual bounds. Now, assume that there exists a nominal solution x with \(\left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \cap \left[ \underline{\text {c}},\overline{\text {c}}\right] =\emptyset \) such that \(\left( x,p,z\right) \) is feasible for \(\text {ROB}\left( Z\right) \) and also defines an improving primal bound \({\overline{v}}\). In this case, it would be beneficial to expand \(\left[ \underline{\text {c}},\overline{\text {c}}\right] \) such that x fulfills the optimality-cuts and we obtain a new incumbent. However, we have seen in the proof of Theorem 2 that the objective value of \(\left( x,p,z\right) \) is non-increasing for \(z\le {\overline{z}}\left( x\right) \) and non-decreasing for \(z\ge {\underline{z}}\left( x\right) \), with the corresponding \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\).
Using the arguments from above, we should have already found a solution \(\left( x,p^{*},z^{*}\right) \) that is at least as good as \(\left( x,p,z\right) \) for a previous subproblem \(\text {ROB}\left( Z^{*},\underline{\text {c}}^{*},\overline{\text {c}}^{*}\right) \). Accordingly, expanding \(\left[ \underline{\text {c}},\overline{\text {c}}\right] \) is also uninteresting for obtaining new primal bounds.
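The choice of \(\underline{\text {c}},\overline{\text {c}}\) described in this subsection can be sketched as follows, assuming that \({\mathscr {Z}}'\) and \({\mathscr {Z}}^{*}\) are available as sorted lists of candidate values, that the node bounds \({\underline{z}},{\overline{z}}\) are themselves contained in \({\mathscr {Z}}'\), and that "in between" means strictly between. The function name and argument layout are hypothetical; the search uses Python's standard `bisect` module.

```python
import bisect
from typing import Sequence, Tuple


def widest_cut_bounds(z_low: float, z_up: float, active: Sequence[float],
                      solved: Sequence[float]) -> Tuple[float, float]:
    """Choose c_low <= z_low and c_up >= z_up from the active values as far out
    as possible such that no already solved value lies strictly in between.

    active: sorted values of Z' (must contain z_low and z_up).
    solved: sorted values of Z*.
    """
    # Largest solved value strictly below z_low; smallest strictly above z_up.
    i = bisect.bisect_left(solved, z_low)
    left_block = solved[i - 1] if i > 0 else float("-inf")
    j = bisect.bisect_right(solved, z_up)
    right_block = solved[j] if j < len(solved) else float("inf")
    # c_low: smallest active value >= left_block; c_up: largest active value <= right_block.
    c_low = active[bisect.bisect_left(active, left_block)]
    c_up = active[bisect.bisect_right(active, right_block) - 1]
    return c_low, c_up
```

For instance, with active values \(\left\{ 0,1,2,4,5,7,9\right\} \), solved values \(\left\{ 3,8\right\} \) and a node spanning \(\left[ 4,5\right] \), the sketch returns \(\underline{\text {c}}=4\) and \(\overline{\text {c}}=7\).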

In the next section, we show how, beyond choosing appropriate bounds \(\underline{\text {c}},\overline{\text {c}}\), we can guide the branch and bound algorithm in its search for primal bounds.

7.2 Primal bounding

We already stated in the introduction that the potentially large optimality gap of \(\text {ROB}\) can cause problems for MILP solvers when trying to compute feasible solutions. Hence, we have to guide the solver in order to consistently obtain strong primal bounds. As the focus of this paper is on the robustness structure of \(\text {ROB}\) and not on the corresponding nominal problem \(\text {NOM}\), we do not implement heuristics that explicitly compute feasible solutions x to \(\text {NOM}\). Nevertheless, our branch and bound algorithm naturally aids in the search for optimal solutions by quickly identifying non-promising values of z. This allows us to focus early on nodes \(Z\subseteq {\mathscr {Z}}\) containing (nearly) optimal choices for z, for which solving \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) is much easier, using the equivalent problem \(\text {ROB}^{\text {S}}\left( Z,{\mathscr {Q}},\underline{\text {c}},\overline{\text {c}}\right) \), and yields (nearly) optimal solutions to \(\text {ROB}\).

Furthermore, even when considering \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) for a node \(Z\subseteq {\mathscr {Z}}\) that contains no optimal choice for z, we can potentially derive good primal bounds or even optimal solutions to \(\text {ROB}\). In many cases, an optimal solution \(\left( x,p,z\right) \) to \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \) does not meet the optimality criterion \(z\in \left[ {\underline{z}}\left( x\right) ,{\overline{z}}\left( x\right) \right] \), which leaves room for improving the primal bound provided by \(v\left( \text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \right) \). Since \({\overline{z}}\left( x\right) \) is easily computable, we can obtain a better primal bound \({\overline{v}}\left( x\right) \), namely the objective value of \(\left( x,p',{\overline{z}}\left( x\right) \right) \) with \(p_{i}'=\left( {\hat{c}}_{i}-{\overline{z}}\left( x\right) \right) ^{+}x_{i}\). Moreover, we can compute \({\overline{v}}\left( x\right) \) not only for an optimal solution x to \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \), but for any feasible solution the solver reports while solving \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \). This increases the chance of finding good primal bounds, as an improved sub-optimal solution may provide an even better bound than an optimal solution to \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \). We will see in our computational study that our branch and bound algorithm quickly finds optimal solutions to \(\text {ROB}\), often while considering the very first robust subproblem. Additionally, the possibility to derive strong primal bounds from sub-optimal solutions, which may be found early on while solving \(\text {ROB}\left( Z,\underline{\text {c}},\overline{\text {c}}\right) \), will be relevant for our pruning strategy in the next section.
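The primal improvement step can be sketched for a single solution x as follows. We assume the compact objective \(c^{\top }x+\varGamma z+\sum _{i}p_{i}\) with \(p_{i}=\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\), take \({\overline{z}}\left( x\right) \) as the largest minimizer over \(z\ge 0\) of \(\varGamma z+\sum _{i}\left( {\hat{c}}_{i}-z\right) ^{+}x_{i}\) (with \(\varGamma >0\)), and call the helper `improved_primal_bound`; all of these are assumptions for illustration, and the sketch does not interact with any solver API.

```python
import math
from typing import Sequence


def improved_primal_bound(x: Sequence[int], c: Sequence[float],
                          c_hat: Sequence[float], gamma: float) -> float:
    """Objective value of (x, p', z_upper(x)) under the assumed objective
    c^T x + gamma*z + sum_i p_i with p_i = max(c_hat_i - z, 0) * x_i."""
    # z_upper(x): the ceil(gamma)-th largest selected deviation, or 0 if too few.
    d = sorted((ch for ch, xi in zip(c_hat, x) if xi), reverse=True)
    k = math.ceil(gamma)
    z_up = d[k - 1] if len(d) >= k else 0.0
    p = [max(ch - z_up, 0.0) * xi for ch, xi in zip(c_hat, x)]
    return sum(ci * xi for ci, xi in zip(c, x)) + gamma * z_up + sum(p)
```

Because \({\overline{z}}\left( x\right) \) minimizes the robust cost of a fixed x over z, the returned value is never worse than that of the solution \(\left( x,p,z\right) \) reported by the solver, which is why the step can be applied to every incumbent candidate.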

7.3 Pruning