Abstract

Purpose of Review

This review of the literature aims to present potential applications of radiomics in cardiovascular radiology and, in particular, in cardiac imaging.

Recent Findings

Radiomics and machine learning represent a technological innovation that may be used to extract and analyze quantitative features from medical images. They may help detect hidden patterns in medical data, possibly leading to new insights into the pathophysiology of different medical conditions. In the recent literature, radiomics and machine learning have been investigated for numerous potential applications in cardiovascular imaging. They have been proposed to improve image acquisition and reconstruction, for automated segmentation of anatomical structures, and for automated characterization of cardiac diseases.

Summary

The number of applications for radiomics and machine learning continues to rise, even though methodological and implementation issues still limit their use in daily practice. In the long term, they may have a positive impact on patient management.



Introduction

Recently, there has been growing interest in possible applications of data mining and artificial intelligence (AI) in medicine. The field of radiomics includes a collection of techniques used to automatically extract large amounts of quantitative features from medical images by analyzing the distribution of pixel grey levels, possibly leading to new insights into the pathophysiological mechanisms underlying different medical conditions [1]. Texture analysis (TA) is one of the main areas of radiomics; it evaluates patterns of grey-level values in images that cannot be detected by qualitative visual assessment. It therefore plays an important role in analyzing the features of different tissues or organs in radiology, contributing to the potential development of new biomarkers [2]. For example, texture features may have histopathologic correlates that could help in evaluating patient prognosis [3].
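To make the idea of texture features more concrete, the following minimal sketch computes a grey-level co-occurrence matrix (GLCM), one common family of texture features, and two features derived from it (contrast and energy) using NumPy. It is a simplified, hypothetical example (a single pixel offset and images already quantized to a few grey levels), not the pipeline used in the cited studies; dedicated packages such as PyRadiomics implement standardized feature definitions.

```python
import numpy as np

def glcm(image: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Symmetric, normalized grey-level co-occurrence matrix for one pixel offset (dx, dy)."""
    m = np.zeros((levels, levels), dtype=float)
    rows, cols = image.shape
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r, c], image[r2, c2]
                m[i, j] += 1
                m[j, i] += 1  # count each neighbor pair in both directions (symmetric GLCM)
    return m / m.sum()

def contrast(p: np.ndarray) -> float:
    """High when neighboring pixels tend to have very different grey levels."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))

def energy(p: np.ndarray) -> float:
    """High when a few grey-level pairings dominate (homogeneous texture)."""
    return float(np.sum(p ** 2))

# Two hypothetical 4x4 images with 2 grey levels: uniform vs. checkerboard.
flat = np.zeros((4, 4), dtype=int)
checker = np.indices((4, 4)).sum(axis=0) % 2
for name, img in (("flat", flat), ("checker", checker)):
    p = glcm(img, levels=2)
    print(name, contrast(p), energy(p))
```

The uniform image yields zero contrast and maximal energy, whereas the checkerboard yields the opposite pattern, even though both contain only two grey levels; this is the kind of spatial information that a simple histogram of grey levels would miss.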

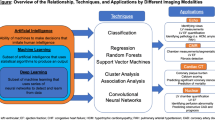

AI is frequently used to develop classification or regression models from radiomics data [4]. In particular, machine learning (ML) is the subfield of AI that enables predictive modeling through automated recognition of patterns in the data (Fig. 1) [5]. ML relies on different types of algorithms, which can be broadly classified by their training mechanism into supervised, unsupervised, and reinforcement learning [6]. Supervised learning requires labeled data to guide the training process, whereas unsupervised learning does not, as the software automatically searches for structure in the data instead. Unsupervised learning usually results in data clustering, which requires subsequent analysis to correlate its findings with outcomes of interest. Finally, in reinforcement learning, positive and negative feedback progressively improves the predictive ability of the algorithm, increasing its accuracy through “experience.” ML applications may also combine these types of learning, although supervised learning is the most common approach in medical imaging. Among the different ML algorithms, neural networks (NNs) are frequently used in radiology because of their intrinsic ability to analyze images. These models are loosely inspired by the human brain, as they are based on a network of nodes, also called “neurons.” Each node stores a numeric value, and each connection between two nodes carries a weight, which represents the strength of that connection. This architecture results in a multilayer network in which each layer works at a progressively higher degree of abstraction, with the final layer encoding the desired output. Deep learning (DL) uses NNs that contain multiple hidden layers, which can detect complex, non-linear relationships between image features [7]. Thus, DL allows high-level abstractions of the data contained in medical images [8].
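The description of nodes and weighted connections can be illustrated with a minimal NumPy sketch of a forward pass through a small fully connected network. The layer sizes, the hand-picked weights, and the ReLU activation are arbitrary illustrative assumptions; in practice the weights are learned from training data, and image models typically use convolutional rather than fully connected layers.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    """Rectified linear unit: keeps positive values, sets negative ones to zero."""
    return np.maximum(x, 0.0)

def forward(x: np.ndarray, layers) -> np.ndarray:
    """Propagate input x through a list of (weights, bias) pairs.

    Each node's value is a weighted sum of the previous layer's values plus a bias.
    Hidden layers apply a ReLU non-linearity; the final layer is left linear so that
    its values encode the raw output scores.
    """
    a = x
    for k, (w, b) in enumerate(layers):
        z = w @ a + b
        a = relu(z) if k < len(layers) - 1 else z
    return a

# Hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output, with hand-picked weights.
layers = [
    (np.array([[0.5, 0.5, 0.0], [-1.0, 0.0, 1.0]]), np.array([0.0, -1.0])),
    (np.array([[2.0, -1.0]]), np.array([0.5])),
]
output = forward(np.array([1.0, 2.0, 3.0]), layers)
print(output)  # [2.5]
```

Adding more hidden layers to the `layers` list is, conceptually, what turns such a network into a "deep" one.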

In the past few years, ML has proved potentially useful in multiple healthcare subspecialties, and several ML tools are now approved for clinical practice [9,10,11]. Radiology is one of the most promising fields for radiomics and ML applications, as these may be used for automatic detection and characterization of lesions or for segmentation of medical images [12, 13]. In particular, a growing number of scientific works show ML to be a powerful tool in the imaging of cardiovascular diseases [14]. For instance, it may improve image acquisition and reduce reconstruction time [15]. ML tools have also shown promising results in automated segmentation of anatomical structures and classification of diseases [16, 17]. Finally, ML may provide new understanding of known diseases through its ability to uncover hidden patterns in the data, thus improving their future management [18].

This review aims to provide an overview of promising applications of radiomics and ML in cardiovascular imaging, sorted by imaging modality. Specifically, we will focus on cardiac imaging, given its essential role in diagnosing numerous cardiac diseases and the consequently growing number of AI tools in this field [19].

Echocardiography

Echocardiography is a widely used imaging modality in cardiology, particularly for the assessment and measurement of heart chambers and in the study of valvular disease [20]. It may greatly benefit from ML tools, as these could provide automated and accurate measurements, reducing the inter- and intra-rater variability typical of ultrasound examinations. For example, ML-based software could automatically calculate clinically relevant echocardiographic parameters, such as left ventricular ejection fraction. Asch et al. developed an ML model, trained on more than 50,000 echocardiographic exams, which automatically calculates left ventricular ejection fraction with high consistency (mean absolute deviation = 2.9%) and high sensitivity and specificity (0.90 and 0.92, respectively) [21]. These solutions may improve the imaging workflow and increase the accuracy of measurements, particularly for less-experienced operators [22]. Similarly, AI may be used to automatically calculate global longitudinal strain and left atrial volume (LAV) [23]. As shown by Mor-Avi and colleagues in 92 patients, LAV values obtained from echocardiography correlate highly with those derived from cardiac magnetic resonance (CMR), particularly when using the real-time 3D technique (r = 0.93 vs. r = 0.74 for maximal LAV; r = 0.88 vs. r = 0.82 for minimal LAV) [24].
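The two agreement measures mentioned above, mean absolute deviation and Pearson's r, answer different questions. The following minimal sketch computes both on hypothetical paired ejection fraction values (automated vs. reference); the numbers are invented for illustration and are not taken from the cited studies.

```python
import numpy as np

def mean_absolute_deviation(automated, reference) -> float:
    """Mean absolute difference between paired automated and reference measurements."""
    a = np.asarray(automated, dtype=float)
    r = np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(a - r)))

def pearson_r(x, y) -> float:
    """Pearson correlation coefficient between two paired measurement series."""
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical ejection fraction values (%) for five patients.
auto_ef = [55, 40, 62, 35, 50]
ref_ef = [57, 38, 60, 36, 53]
print(mean_absolute_deviation(auto_ef, ref_ef))  # 2.0
print(round(pearson_r(auto_ef, ref_ef), 2))

# A method with a constant +5% bias still correlates perfectly with the reference.
biased_ef = [v + 5 for v in ref_ef]
print(mean_absolute_deviation(biased_ef, ref_ef), round(pearson_r(biased_ef, ref_ef), 6))
```

The biased example shows why a high correlation alone does not demonstrate agreement between two measurement methods, and why absolute-difference metrics are often reported alongside r.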

AI may also enable automatic detection of wall motion abnormalities from echocardiography, as shown by Huang and colleagues, who developed an accurate convolutional NN model using a training dataset of 10,638 echocardiography exams performed in two tertiary care hospitals [25]. The model achieved an area under the receiver operating characteristic curve (AUC) of 0.891, a sensitivity of 0.818, and a specificity of 0.816 [25]. The potential value of ML is also emerging in the management of aortic valve stenosis, again through automated measurements and image analysis [26].
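Because AUC, sensitivity, and specificity are reported throughout this review, a short sketch may help clarify how they relate. The code below computes the AUC as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, and sensitivity and specificity at a chosen score threshold. The labels and scores are hypothetical.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity (true-positive rate) and specificity (true-negative rate) for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(y_true, scores):
    """AUC as the fraction of positive-negative pairs ranked correctly (ties count as 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels (1 = disease present) and model scores for six patients.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]

print(round(auc(labels, scores), 3))  # 0.889 (8 of 9 pairs ranked correctly)
for threshold in (0.5, 0.25):
    preds = [1 if s >= threshold else 0 for s in scores]
    print(threshold, sensitivity_specificity(labels, preds))
```

The AUC summarizes ranking performance across all thresholds, whereas sensitivity and specificity depend on the threshold chosen: lowering it here raises sensitivity to 1.0 at the cost of specificity, the same trade-off seen in studies that report high sensitivity with low specificity.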

Another avenue for radiomics and ML in echocardiography is the characterization of myocardial tissue abnormalities. This type of analysis may be challenging, as changes are often subtle. Kagiyama et al. used both supervised and unsupervised learning approaches to develop an ML tool, using a training dataset of 534 echocardiography scans with corresponding CMR images as the reference standard. The resulting model predicted the presence of myocardial fibrosis with an AUC of 0.84, a sensitivity of 86.4%, and a specificity of 83.3% [27].

Finally, ML may identify functional phenotypes from echocardiography acquired over the whole cardiac cycle. In particular, Loncaric et al. applied unsupervised learning to a dataset of 189 patients with known hypertension and 97 healthy controls and found that their software could automatically identify velocity and deformation patterns that correlate with specific structural and functional remodeling [28]. Similarly, AI has been used to analyze diastolic parameters correlating with specific phenotypes, potentially enabling more personalized patient management [29].
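Unsupervised phenotyping groups patients by similarity without using outcome labels. As a minimal illustration, the sketch below implements k-means clustering (Lloyd's algorithm), one simple clustering method, on hypothetical two-dimensional feature vectors. It is not presented as the method used in the cited study; real echocardiographic features would be high-dimensional and usually standardized first.

```python
import numpy as np

def kmeans(x: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Lloyd's k-means: alternate nearest-centroid assignment and centroid update."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)]  # random samples as initial centroids
    for _ in range(n_iter):
        # Assign each sample to its nearest centroid (Euclidean distance).
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its samples (keep it if its cluster is empty).
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids

# Hypothetical features (e.g., two standardized deformation indices) for six subjects.
features = np.array([[0.0, 0.1], [0.2, 0.0], [0.1, 0.2],
                     [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]])
labels, centroids = kmeans(features, k=2)
print(labels)
```

The resulting cluster labels carry no clinical meaning by themselves; as noted above, they must subsequently be related to outcomes or known phenotypes.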

Coronary Computed Tomography Angiography

Coronary computed tomography angiography (CCTA) has become one of the most important diagnostic exams in cardiology in multiple settings. Indeed, it plays a pivotal role in the diagnosis of chronic coronary syndrome, as it is recommended as the initial test for diagnosing coronary artery disease, especially when this condition cannot be excluded by clinical assessment alone [30]. Radiomics has proved useful in identifying vulnerable coronary atherosclerotic plaques. For instance, it was used to extract features from CCTAs performed in 624 individuals from the Framingham Heart Study cohort with an Agatston score higher than 0. These subjects were clinically followed for more than 9 years, and ML accurately identified those at risk of major cardiovascular events [31]. Furthermore, Kolossváry et al. developed a tool that detects the napkin-ring sign, an imaging feature of atherosclerotic plaques that correlates with major adverse cardiac events [32]. They enrolled 2674 patients who underwent CCTA for stable chest pain. Twenty patients with the napkin-ring sign were identified within this cohort and matched with 30 healthy controls. More than 4000 radiomics features were extracted from each exam, and the model showed excellent discriminatory power, with a reported AUC > 0.80. In addition, van Hamersvelt and colleagues used DL to identify patients with significant coronary artery stenosis among those classified as having an intermediate degree of stenosis (25–69% vessel caliber reduction) [33]. The approach showed good potential, with an AUC of 0.76 and a sensitivity of 92.6%, although the specificity was only 31.1% [33]. Radiomics may also aid in detecting coronary inflammation, which has been associated with a higher risk of major cardiovascular events [34].

CCTA is known to have a high negative predictive value for excluding acute coronary syndrome, particularly in patients with low-to-intermediate pre-test probability [35]. Some ML tools have been developed to improve CCTA’s performance in the setting of acute coronary syndrome. For instance, Hinzpeter et al. created an ML model based on TA data using the CCTAs of 20 patients with acute myocardial infarction and 20 healthy controls. It accurately distinguished healthy individuals from those with acute myocardial infarction (AUC of 0.90), albeit in a small sample of cases [36]. Hu and colleagues used radiomics to predict major adverse cardiovascular events from CCTA features [37]. The training set included 105 lesions from 88 CCTAs, and 31 CCTAs were used as the validation set. A total of 1409 radiomics features were extracted, and the final model achieved an AUC of 0.762 in the training set and 0.671 in the validation set. These results are promising, although this tool also requires further validation before it can be considered for introduction into clinical practice.

Recently, imaging of pericoronary adipose tissue on routine CCTA has been shown to be a good way to measure coronary inflammation [38]. Building on this, Lin et al. created a model integrating CCTA and clinical features that uses radiomic data of pericoronary adipose tissue to distinguish patients with myocardial infarction from those with stable or absent coronary artery disease (CAD) (AUC = 0.87) [39]. Interestingly, Mannil et al. found that AI could also be helpful with non-contrast-enhanced, low-radiation-dose cardiac CT. They investigated different models (NN, decision tree, naïve Bayes, random forest, and sequential minimal optimization) based on TA radiomic data. These proved effective in detecting myocardial infarction on non-contrast-enhanced, low-dose cardiac CT, with the best model (naïve Bayes) achieving a sensitivity of 83% and a specificity of 84% [40].
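Naïve Bayes, the best-performing model in the study above, assumes that features are conditionally independent given the class. The variant used in the cited study is not specified here, so the Gaussian form below is an assumption: a minimal from-scratch sketch on hypothetical two-feature texture data, not the authors' implementation.

```python
import numpy as np

class GaussianNaiveBayes:
    """Naïve Bayes with one Gaussian likelihood per class and per feature."""

    def fit(self, x, y, var_smoothing: float = 1e-9):
        x = np.asarray(x, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.array([x[y == c].mean(axis=0) for c in self.classes_])
        # A small constant keeps variances strictly positive.
        self.vars_ = np.array([x[y == c].var(axis=0) for c in self.classes_]) + var_smoothing
        self.log_priors_ = np.log(np.array([np.mean(y == c) for c in self.classes_]))
        return self

    def predict(self, x):
        x = np.asarray(x, dtype=float)
        diff = x[:, None, :] - self.means_[None, :, :]  # shape (samples, classes, features)
        # Sum of per-feature Gaussian log-likelihoods (the independence assumption).
        log_lik = -0.5 * (np.log(2 * np.pi * self.vars_)[None] + diff ** 2 / self.vars_[None]).sum(axis=2)
        return self.classes_[np.argmax(log_lik + self.log_priors_[None, :], axis=1)]

# Hypothetical texture features; label 1 = myocardial infarction, 0 = no infarction.
x_train = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [3.0, 0.5], [3.2, 0.7], [2.8, 0.3]]
y_train = [0, 0, 0, 1, 1, 1]
model = GaussianNaiveBayes().fit(x_train, y_train)
print(model.predict([[1.1, 2.1], [2.9, 0.6]]))  # [0 1]
```

Its simplicity and small number of parameters are one plausible reason such a model can compete with more complex ones on small datasets.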

Radiomics can also be used to identify features predictive of higher cardiovascular risk. For instance, Oikonomou and colleagues used ML to find features of perivascular adipose tissue associated with major cardiovascular events in three experiments [41]: the first compared adipose tissue biopsies obtained from patients undergoing cardiac surgery with CT images; the second used random forest to distinguish patients who suffered major cardiovascular events from healthy controls; and the third focused on patients with acute myocardial infarction. Radiomics can also detect features of perivascular adipose tissue other than inflammation that are associated with CAD [42]. Furthermore, ML may accurately identify patients who require coronary intervention. Liu et al. enrolled 296 patients with symptomatic CAD and > 50% stenosis to create a training dataset for a DL tool that automatically calculates fractional flow reserve [43]. The tool proved accurate, possibly reducing the need for invasive coronary procedures. Automated computation of fractional flow reserve with ML may also be useful in the emergency setting in patients with acute chest pain [44].

AI may also be useful in the differential diagnosis process in particular settings. For example, radiomics can accurately differentiate left atrial appendage artifacts from thrombi, as shown by Ebrahimian and colleagues, who developed an accurate tool (AUC = 0.85) that only requires early-phase contrast-enhanced CT images [45]. Similarly, an ML model may be used in suspected prosthetic valve obstruction to differentiate pannus from thrombi or vegetations [46].

CCTA may also be useful in evaluating the myocardium when CMR is not available. For example, Qin and colleagues used radiomics to detect myocardial fibrosis in hypertrophic cardiomyopathy, using CMR as the reference standard [47]. They enrolled 161 patients and used logistic regression to create a classification model with good diagnostic performance (AUC = 0.81 in the training set and 0.78 in the testing cohort). Esposito et al. used TA of late iodine enhancement images to detect extracellular matrix changes in the myocardium of patients with ventricular tachycardia, identifying different remodeling phenotypes [48]. Similarly, ML analysis of late iodine enhancement may help distinguish cardiac sarcoidosis from other non-ischemic cardiomyopathies [49].
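Logistic regression, used for the classification model described above, estimates the probability of a binary outcome (here, presence of fibrosis) from a weighted combination of features. The following minimal sketch fits it by batch gradient descent on the mean log-loss; the single feature, the data, the learning rate, and the small L2 penalty are hypothetical choices, and this is not the authors' code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(x, y, lr: float = 0.1, n_iter: int = 5000, l2: float = 0.01):
    """Fit logistic regression weights (intercept first) by batch gradient descent."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xb = np.hstack([np.ones((len(x), 1)), x])  # prepend an intercept column
    w = np.zeros(xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(xb @ w)
        grad = xb.T @ (p - y) / len(y)  # gradient of the mean log-loss
        grad[1:] += l2 * w[1:]          # L2 penalty, not applied to the intercept
        w -= lr * grad
    return w

def predict_proba(w, x):
    x = np.asarray(x, dtype=float)
    xb = np.hstack([np.ones((len(x), 1)), x])
    return sigmoid(xb @ w)

# Hypothetical standardized radiomic feature; label 1 = fibrosis on CMR.
x_train = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]
y_train = [0, 0, 0, 1, 1, 1]
w = fit_logistic(x_train, y_train)
print(predict_proba(w, [[-1.5], [1.5]]))
```

The fitted coefficients are directly interpretable as log-odds per unit of feature, one reason logistic regression remains common in radiomics studies alongside more complex models.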

Radiomics and ML could also identify patients at high risk of major cardiovascular events among those with left ventricular hypertrophy using non-contrast cardiac computed tomography, with good discriminative performance (AUC > 0.70) [50].

Cardiac Magnetic Resonance Imaging

Cardiac magnetic resonance (CMR) is an essential modality in cardiovascular imaging, as it allows evaluation of both cardiac function and structure, and it is crucial in the diagnosis and management of many diseases. An increasing number of AI tools have been developed for CMR, aimed at reducing acquisition and reading time and improving reproducibility. As previously mentioned, they may also help in the automated classification of lesion phenotypes. For instance, Cetin and colleagues extracted radiomic features from CMR images to build models for the classification and diagnosis of cardiovascular diseases [51]. The same research group also used different types of ML algorithms (support vector machine, random forest, and logistic regression) to identify specific CMR features in patients with cardiovascular risk factors (hypertension, diabetes, high cholesterol, and current or previous smoking) [52]. This approach identified cardiac tissue textures specific to each risk group, with moderate discriminative performance (AUC > 0.6).

Regarding myocardial infarction, Chen et al. used TA to extract features from native and post-contrast T1 maps and extracellular volume fraction maps to detect irreversible changes after myocardial infarction [53]. Their approach achieved an AUC of 0.91, and their pipeline may therefore be helpful in predicting adverse left ventricular remodeling. In this setting, TA may also be used to extract features from late-gadolinium enhancement (LGE) images that correlate with a higher risk of developing arrhythmias, which may in turn improve the selection of patients who would benefit from an implantable cardioverter defibrillator [54]. Radiomics may also be employed to differentiate non-viable, viable, and remote myocardial segments by analyzing LGE patterns [55]. It may also extract important additional information from unenhanced images, which is relevant when contrast agents cannot be used (e.g., in renal impairment, a common condition in CAD patients). Quanmei et al. used radiomics features from unenhanced T1 mapping and T1 values to diagnose myocardial injury in ST-segment elevation myocardial infarction with high accuracy (AUC = 0.88 in the training set and 0.86 in the test set) [56]. Similarly, Zhang and colleagues developed a DL tool to automatically detect and delineate chronic myocardial infarction on unenhanced CMR, with an AUC of 0.94 [57]. Eftestøl et al. investigated the ability of TA to identify, among patients with myocardial infarction, those who would require an implantable cardioverter defibrillator, reporting a specificity of 84% [58]. ML may also be useful in the differential diagnosis between myocarditis with an acute clinical presentation and acute myocardial infarction. In this setting, Baessler et al. performed TA of T1 and T2 maps from 39 CMRs, achieving an AUC of 0.88, a sensitivity of 89%, and a specificity of 92% [59].

In clinical practice, CMR also plays a role in the diagnosis and management of cardiomyopathies, and ML may help in this domain as well. For example, TA may help discriminate between hypertensive heart disease and hypertrophic cardiomyopathy: Neisius et al. used it to analyze global native T1 maps from 232 subjects, achieving an overall accuracy of 0.86 [60]. Alis et al. used both TA and ML to identify patients with tachyarrhythmia in a population of subjects affected by hypertrophic cardiomyopathy [61]. They enrolled 64 patients and tested different ML algorithms (support vector machines, naïve Bayes, k-nearest neighbors, and random forest) to analyze LGE patterns, achieving a sensitivity of 95.2%, a specificity of 92.0%, and an accuracy of 95%. The analysis of LGE patterns may also predict the risk of adverse events in hypertrophic cardiomyopathy with systolic dysfunction [62]. Furthermore, TA may allow the extraction of features correlating with tachyarrhythmia in patients with hypertrophic cardiomyopathy from non-contrast T1 images [63].

Interestingly, radiomics may help associate specific genetic mutations with imaging phenotypes. Wang et al. developed an image analysis pipeline to classify hypertrophic cardiomyopathy patients with MYH7 or MYBPC3 mutations using native T1 maps alone, achieving an AUC higher than 0.90 [64]. Similarly, TA of T1 maps may help differentiate patients with dilated cardiomyopathy from healthy controls, as shown by Shao et al., who implemented a support vector machine model with an accuracy higher than 0.85 [65]. In dilated cardiomyopathy, DL and ML may also help identify specific phenotypes and predict prognosis [66].

Finally, DL may reduce or even eliminate the need for gadolinium in CMR, as proposed by Bustamante et al. using cardiovascular 4D flow MRI. This approach may be useful, for example, in patients with congenital heart disease, as pediatric subjects are likely to require long-term follow-up [67, 68]. Additionally, the analysis of native T1 maps may help identify patients with a low likelihood of LGE, thus avoiding contrast administration in selected cases [69].

Nuclear Cardiology

Single-photon emission computed tomography (SPECT) is an important imaging modality for assessing significant CAD and the risk of major cardiovascular events [70]. As with other modalities, ML may be useful for the automated segmentation of SPECT images [71]. Furthermore, ML and DL may also be used to classify SPECT images and identify patients with CAD. Apostolopoulos et al. used different ML algorithms (NNs and random forest) to analyze a dataset of 566 patients who underwent gated SPECT with 99mTc-tetrofosmin, reporting a diagnostic accuracy of 79.15% [72]. Deep convolutional NNs could also predict CAD and obstructive disease from 99mTc-tetrofosmin SPECT with high accuracy (AUC = 0.80) [73].

ML may be employed to reduce scan time and radiation dose by avoiding the acquisition of one or more phases of SPECT studies. For instance, Eisenberg et al. used ML to potentially avoid acquiring the rest phase in SPECT, developing an algorithm based exclusively on the stress phase combined with multiple clinical features. They reported accurate prediction of obstructive and high-risk CAD, with an AUC of 0.84 [74]. Hu and colleagues developed an ML tool that can predict per-vessel coronary revascularization within 90 days after stress/rest 99mTc-sestamibi/tetrofosmin SPECT (AUC = 0.79), outperforming the interpretation of expert nuclear cardiologists [75].

ML may also improve the automatic detection of myocardial perfusion abnormalities. In particular, a deep convolutional NN improved the detection of myocardial perfusion abnormalities on stress/rest SPECT performed with 99mTc-tetrofosmin or 99mTc-sestamibi, with an AUC of 0.872 [76]. ML may also be used to analyze PET myocardial perfusion data to predict the risk of adverse cardiovascular events [77].

Image Quality Improvement

Another application of ML, especially DL, is the improvement of image acquisition. Specifically, DL models may be trained to reduce image noise, artifacts, radiation dose, and inter- and intra-observer variability of measurements [78]. For instance, ML has been used to improve echocardiography acquisition, facilitating access to this imaging modality in the emergency setting. Narang et al. developed DL software that helps non-expert users acquire exams of acceptable quality. They evaluated 240 exams from two academic hospitals, obtaining diagnostic-quality echocardiography scans in 92.5–98.8% of patients [79].

For cardiac CT, the main aim of ML is to obtain good-quality images while reducing radiation dose. This may be achieved by creating synthetic contrast-enhanced images from non-enhanced acquisitions, thus also avoiding contrast injection [80]. Another possibility is to use low-dose protocols, which, however, typically increase image noise [81]. For example, Wolterink et al. used a convolutional NN to convert low-dose CT images into higher-quality images comparable to routine-dose CT, enabling accurate coronary calcium scoring [82].
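The size of the noise penalty can be estimated with a common rule of thumb that is not stated in the cited works: assuming quantum-noise-limited acquisition with all other scan and reconstruction parameters held constant, image noise σ scales approximately with the inverse square root of dose D.

```latex
\sigma_{\text{low}} \approx \sigma_{\text{ref}} \sqrt{\frac{D_{\text{ref}}}{D_{\text{low}}}},
\qquad
D_{\text{low}} = \tfrac{1}{2} D_{\text{ref}}
\;\Rightarrow\;
\sigma_{\text{low}} \approx \sqrt{2}\,\sigma_{\text{ref}} \approx 1.41\,\sigma_{\text{ref}}
```

Under this approximation, halving the dose raises noise by roughly 41%, which is the kind of quality gap that DL-based denoising of low-dose images aims to close.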

ML may also improve CMR image quality. In this setting, it can be used to reduce motion artifacts, which may severely degrade diagnostic image quality. In particular, Küstner et al. used DL to retrospectively obtain high-quality images from low-quality ones affected by motion artifacts. Notably, although high-quality images were obtained, some anatomical structures were erased or altered; therefore, this type of image processing requires further evaluation before introduction into clinical practice [83]. Furthermore, ML may be used to improve image reconstruction, improving quality and shortening scan time [84]. For instance, Hauptmann et al. used ML to reduce acquisition time in patients with congenital heart disease, achieving good image quality and automated measurements of heart chambers comparable with those of expert radiologists [85].

Discussion

As shown in our review, radiomics and AI have numerous potential applications in cardiovascular imaging. These range from improved image acquisition and higher inter-reader reproducibility to better diagnostic accuracy and more personalized patient management. In the future, they may also enable automated and accurate prognosis prediction, while shorter-term implementations could reduce artifacts, radiation dose, and scan time. Even though the number of studies using these tools is constantly growing, few of these tools are actually approved for clinical practice [86]. Indeed, some issues remain to be overcome, and physicians and patients, as end users, should be aware of them.

First, the methodological quality of radiomics and ML studies is frequently low, as demonstrated by multiple systematic reviews in other fields of medical imaging [12, 87,88,89]. Unfortunately, this finding has recently been confirmed in cardiovascular imaging as well, particularly in CT and CMR research [90]. Across the 53 papers reviewed, the median quality score was only 19.4% (interquartile range = 11.1–33.3%), which is not satisfactory. On the other hand, median quality showed a positive trend over the years, even though it peaked at approximately 25%. This systematic review and quality assessment highlights the need for journals, reviewers, and readers to expect higher standards in this area of research.
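Quality scores of this kind are typically summarized as a median and interquartile range of per-study totals expressed as a percentage of the maximum achievable score. The reported percentages are consistent with a 36-point maximum, such as that of the Radiomics Quality Score (for example, 7/36 ≈ 19.4%); treating the scale as 36 points is an assumption here. The minimal sketch below performs this summary on hypothetical point totals for five studies.

```python
import numpy as np

MAX_SCORE = 36  # assumption: per-study totals on a 36-point scale (e.g., Radiomics Quality Score)

def summarize_quality(points):
    """Convert per-study point totals to % of the maximum and return (median, Q1, Q3)."""
    pct = 100.0 * np.asarray(points, dtype=float) / MAX_SCORE
    q1, median, q3 = np.percentile(pct, [25, 50, 75])  # linear interpolation between ranks
    return float(median), float(q1), float(q3)

# Hypothetical point totals for five studies.
median, q1, q3 = summarize_quality([3, 5, 9, 14, 20])
print(f"median {median:.1f}% (IQR {q1:.1f}-{q3:.1f}%)")  # median 25.0% (IQR 13.9-38.9%)
```

Note that different software packages use slightly different quartile conventions, so IQR values computed from the same data may differ marginally between tools.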

Specific limitations that lower the quality of studies in this area are also tied to inconsistencies in study design or reporting. For example, image acquisition protocols and preprocessing steps are frequently not described in detail. The limited scope of most radiomics research also restricts its potential value, as exams are usually performed in a single institution, which hinders assessment of model reproducibility and generalization to new data [91]. Another common issue is that almost all ML studies are retrospective, which increases the risk of reporting bias [92]. These points lead to another concern: overfitting. Overfitting may result from excessive tailoring of the ML model to the training population, poor data quality, or ineffective preprocessing, and it leads to a low ability to generalize [93]. In other words, results obtained in one institution may not be replicable at another site, hindering clinical applicability. The ideal solution to overfitting is to train ML models on large multi-institutional datasets with appropriate data processing [94]. Finally, as highlighted by audits of public imaging datasets, it is also crucial to evaluate the quality of the medical images used for training [95, 96]. Low-quality input data can only result in low-quality models.
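One practical way to probe generalization when multi-institutional data are available is leave-one-site-out validation: the model is trained on all institutions but one and tested on the held-out institution, so that no site contributes to both training and testing. The minimal sketch below generates such splits from hypothetical site labels; scikit-learn's `LeaveOneGroupOut` class offers equivalent functionality.

```python
from collections import defaultdict

def leave_one_site_out(site_ids):
    """Yield (held_out_site, train_indices, test_indices), holding out one institution at a time."""
    by_site = defaultdict(list)
    for idx, site in enumerate(site_ids):
        by_site[site].append(idx)
    for held_out in sorted(by_site):
        test_idx = by_site[held_out]
        # Every exam from the other institutions goes into the training set.
        train_idx = sorted(i for site, idxs in by_site.items() if site != held_out for i in idxs)
        yield held_out, train_idx, test_idx

# Hypothetical institution label for each of seven exams.
sites = ["A", "A", "B", "C", "B", "C", "A"]
for site, train_idx, test_idx in leave_one_site_out(sites):
    print(site, train_idx, test_idx)
```

A large drop in performance on held-out sites compared with internal cross-validation is a typical sign that a model has overfitted to site-specific characteristics such as scanner or protocol.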

In ML, model interpretability and explainability remain open issues, particularly for highly complex algorithms such as DL. Ideally, the decision process of a predictive model should be clearly presented, facilitating its adoption by physicians. This would also allow greater involvement of the end user in evaluating the correctness of the model’s output and timely identification of biases or other issues [93]. However, current technology does not make this type of information available, and this is not realistically expected to change in the next few years. Some domain experts have already proposed that attention should focus not on “understanding” DL models but rather on requiring strong validation alone [97]. In any case, a consensus should be reached on the actual requirements for radiomics and ML software before its approval for clinical use. Unfortunately, many products are becoming commercially available with insufficient supporting evidence [9].

Conclusions

The number of radiomics and ML-based tools will probably continue to increase. Even in light of the current issues limiting their effective implementation in clinical practice, they still have the potential to positively impact cardiovascular imaging and improve patient outcomes. Physicians must become well-versed in the basics of radiomics and familiar with good data science practices to be confident end users and retain a leadership role in this emerging domain of medical imaging.

Abbreviations

- AI: Artificial intelligence
- TA: Texture analysis
- ML: Machine learning
- NN: Neural network
- DL: Deep learning
- LAV: Left atrial volume
- CMR: Cardiac magnetic resonance
- AUC: Area under the receiver operating characteristic curve
- CAD: Coronary artery disease
- LGE: Late-gadolinium enhancement
- SPECT: Single-photon emission computed tomography

References

Lambin P, Rios-Velazquez E, Leijenaar R, Carvalho S, van Stiphout RGPM, Granton P, Zegers CML, Gillies R, Boellard R, Dekker A, Aerts HJWL. Radiomics: Extracting more information from medical images using advanced feature analysis. Eur J Cancer. 2012;48:441–6. https://doi.org/10.1016/j.ejca.2011.11.036.

Scalco E, Rizzo G. Texture analysis of medical images for radiotherapy applications. Br J Radiol. 2017;90. https://doi.org/10.1259/bjr.20160642.

Lubner MG, Smith AD, Sandrasegaran K, Sahani DV, Pickhardt PJ. CT Texture analysis: definitions, applications, biologic correlates, and challenges. Radiographics. 2017;37:1483–503. https://doi.org/10.1148/rg.2017170056.

Kocak B, Durmaz ES, Ates E, Kilickesmez O. Radiomics with artificial intelligence: a practical guide for beginners. Diagnostic Interv Radiol. 2019;25:485–95. https://doi.org/10.5152/dir.2019.19321.

Choy G, Khalilzadeh O, Michalski M, Do S, Samir AE, Pianykh OS, Geis JR, Pandharipande PV, Brink JA, Dreyer KJ. Current applications and future impact of machine learning in radiology. Radiology. 2018;288:318–28. https://doi.org/10.1148/radiol.2018171820.

Cuocolo R, Ugga L. Imaging applications of artificial intelligence. Heal J. 2018;18.

Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, van der Laak JAWM, van Ginneken B, Sánchez CI. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. https://doi.org/10.1016/j.media.2017.07.005.

Zaharchuk G, Gong E, Wintermark M, Rubin D, Langlotz CP. Deep learning in neuroradiology. Am J Neuroradiol. 2018;39:1776–84. https://doi.org/10.3174/ajnr.A5543.

Tsuneta S, Oyama-Manabe N, Hirata K, Harada T, Aikawa T, Manabe O, Ohira H, Koyanagawa K, Naya M, Kudo K. Texture analysis of delayed contrast-enhanced computed tomography to diagnose cardiac sarcoidosis. Jpn J Radiol. 2021;39:442–50. https://doi.org/10.1007/s11604-020-01086-1.

Cuocolo R, Perillo T, De Rosa E, Ugga L, Petretta M. Current applications of big data and machine learning in cardiology. J Geriatr Cardiol. 2019;16:601–7. https://doi.org/10.11909/j.issn.1671-5411.2019.08.002.

Cuocolo R, Caruso M, Perillo T, Ugga L, Petretta M. Machine learning in oncology: a clinical appraisal. Cancer Lett. 2020;481:55–62. https://doi.org/10.1016/j.canlet.2020.03.032.

Ugga L, Perillo T, Cuocolo R, Stanzione A, Romeo V, Green R, Cantoni V, Brunetti A. Meningioma MRI radiomics and machine learning: systematic review, quality score assessment, and meta-analysis. Neuroradiology. 2021;63:1293–304. https://doi.org/10.1007/s00234-021-02668-0.

Perillo T, Cuocolo R, Ugga L. Radiomics in the imaging of brain gliomas: current role and future perspectives. Heal J. 2020;20:747–9.

Haq I-U, Haq I, Xu B. Artificial intelligence in personalized cardiovascular medicine and cardiovascular imaging. Cardiovasc Diagn Ther. 2021;11:911–23. https://doi.org/10.21037/cdt.2020.03.09.

Sermesant M, Delingette H, Cochet H, Jaïs P, Ayache N. Applications of artificial intelligence in cardiovascular imaging. Nat Rev Cardiol. 2021;18:600–9. https://doi.org/10.1038/s41569-021-00527-2.

Ghorbani A, Ouyang D, Abid A, He B, Chen JH, Harrington RA, Liang DH, Ashley EA, Zou JY. Deep learning interpretation of echocardiograms. NPJ Digit Med. 2020;3:10. https://doi.org/10.1038/s41746-019-0216-8.

Bruse JL, Zuluaga MA, Khushnood A, McLeod K, Ntsinjana HN, Hsia TY, Sermesant M, Pennec X, Taylor AM, Schievano S. Detecting clinically meaningful shape clusters in medical image data: metrics analysis for hierarchical clustering applied to healthy and pathological aortic arches. IEEE Trans Biomed Eng. 2017;64:2373–83. https://doi.org/10.1109/TBME.2017.2655364.

Bagheri A, Groenhof TKJ, Asselbergs FW, Haitjema S, Bots ML, Veldhuis WB, de Jong PA, Oberski DL. Automatic prediction of recurrence of major cardiovascular events: a text mining study using chest X-ray reports. J Healthc Eng. 2021;2021:1–11. https://doi.org/10.1155/2021/6663884.

Narula J, Chandrashekhar Y, Ahmadi A, Abbara S, Berman DS, Blankstein R, Leipsic J, Newby D, Nicol ED, Nieman K, Shaw L, Villines TC, Williams M, Hecht HS. 2021 SCCT expert consensus document on coronary computed tomographic angiography: a report of the Society of Cardiovascular Computed Tomography. J Cardiovasc Comput Tomogr. 2021;15:192–217. https://doi.org/10.1016/j.jcct.2020.11.001.

Italiano G, Fusini L, Mantegazza V, Tamborini G, Muratori M, Ghulam Ali S, Penso M, Garlaschè A, Gripari P, Pepi M. Novelties in 3D transthoracic echocardiography. J Clin Med. 2021;10:408. https://doi.org/10.3390/jcm10030408.

Asch FM, Poilvert N, Abraham T, Jankowski M, Cleve J, Adams M, Romano N, Hong H, Mor-Avi V, Martin RP, Lang RM. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging. 2019;12:1–9. https://doi.org/10.1161/CIRCIMAGING.119.009303.

Scalco E, Rizzo G. Texture analysis of medical images for radiotherapy applications. Br J Radiol. 2017;90:20160642. https://doi.org/10.1259/bjr.20160642.

Salte IM, Østvik A, Smistad E, Melichova D, Nguyen TM, Karlsen S, Brunvand H, Haugaa KH, Edvardsen T, Lovstakken L, Grenne B. Artificial intelligence for automatic measurement of left ventricular strain in echocardiography. JACC Cardiovasc Imaging. 2021;14:1918–28. https://doi.org/10.1016/j.jcmg.2021.04.018.

Mor-Avi V, Yodwut C, Jenkins C, Kühl H, Nesser HJ, Marwick TH, Franke A, Weinert L, Niel J, Steringer-Mascherbauer R, Freed BH, Sugeng L, Lang RM. Real-time 3D echocardiographic quantification of left atrial volume: multicenter study for validation with CMR. JACC Cardiovasc Imaging. 2012;5:769–77. https://doi.org/10.1016/j.jcmg.2012.05.011.

Huang M-S, Wang C-S, Chiang J-H, Liu P-Y, Tsai W-C. Automated recognition of regional wall motion abnormalities through deep neural network interpretation of transthoracic echocardiography. Circulation. 2020;142:1510–20. https://doi.org/10.1161/CIRCULATIONAHA.120.047530.

Thoenes M, Agarwal A, Grundmann D, Ferrero C, McDonald A, Bramlage P, Steeds RP. Narrative review of the role of artificial intelligence to improve aortic valve disease management. J Thorac Dis. 2021;13:396–404. https://doi.org/10.21037/jtd-20-1837.

Kagiyama N, Shrestha S, Cho JS, Khalil M, Singh Y, Challa A, Casaclang-Verzosa G, Sengupta PP. A low-cost texture-based pipeline for predicting myocardial tissue remodeling and fibrosis using cardiac ultrasound. EBioMedicine. 2020;54:102726. https://doi.org/10.1016/j.ebiom.2020.102726.

Loncaric F, Marti Castellote P-M, Sanchez-Martinez S, Fabijanovic D, Nunno L, Mimbrero M, Sanchis L, Doltra A, Montserrat S, Cikes M, Crispi F, Piella G, Sitges M, Bijnens B. Automated pattern recognition in whole-cardiac cycle echocardiographic data: capturing functional phenotypes with machine learning. J Am Soc Echocardiogr. 2021;34:1170–83. https://doi.org/10.1016/j.echo.2021.06.014.

Fletcher AJ, Lapidaire W, Leeson P. Machine learning augmented echocardiography for diastolic function assessment. Front Cardiovasc Med. 2021;8:1–11. https://doi.org/10.3389/fcvm.2021.711611.

Neumann FJ, Sechtem U, Banning AP, Bonaros N, Bueno H, Bugiardini R, Chieffo A, Crea F, Czerny M, Delgado V, Dendale P, Knuuti J, Wijns W, Flachskampf FA, Gohlke H, Grove EL, James S, Katritsis D, Landmesser U, Lettino M, Matter CM, Nathoe H, Niessner A, Patrono C, Petronio AS, Pettersen SE, Piccolo R, Piepoli MF, Popescu BA, Räber L, Richter DJ, Roffi M, Roithinger FX, Shlyakhto E, Sibbing D, Silber S, Simpson IA, Sousa-Uva M, Vardas P, Witkowski A, Zamorano JL, Achenbach S, Agewall S, Barbato E, Bax JJ, Capodanno D, Cuisset T, Deaton C, Dickstein K, Edvardsen T, Escaned J, Funck-Brentano C, Gersh BJ, Gilard M, Hasdai D, Hatala R, Mahfoud F, Masip J, Muneretto C, Prescott E, Saraste A, Storey RF, Svitil P, Valgimigli M, Aboyans V, Baigent C, Collet JP, Dean V, Fitzsimons D, Gale CP, Grobbee DE, Halvorsen S, Hindricks G, Iung B, Jüni P, Katus HA, Leclercq C, Lewis BS, Merkely B, Mueller C, Petersen S, Touyz RM, Benkhedda S, Metzler B, Sujayeva V, Cosyns B, Kusljugic Z, Velchev V, Panayi G, Kala P, Haahr-Pedersen SA, Kabil H, Ainla T, Kaukonen T, Cayla G, Pagava Z, Woehrle J, Kanakakis J, Toth K, Gudnason T, Peace A, Aronson D, Riccio C, Elezi S, Mirrakhimov E, Hansone S, Sarkis A, Babarskiene R, Beissel J, Cassar Maempel AJ, Revenco V, de Grooth GJ, Pejkov H, Juliebø V, Lipiec P, Santos J, Chioncel O, Duplyakov D, Bertelli L, Dikic AD, Studencan M, Bunc M, Alfonso F, Back M, Zellweger M, Addad F, Yildirir A, Sirenko Y, Clapp B. ESC Guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J. 2020;41:407–77. https://doi.org/10.1093/eurheartj/ehz425.

Zeleznik R, Foldyna B, Eslami P, Weiss J, Alexander I, Taron J, Parmar C, Alvi RM, Banerji D, Uno M, Kikuchi Y, Karady J, Zhang L, Scholtz J, Mayrhofer T, Lyass A, Mahoney TF, Massaro JM, Vasan RS, Douglas PS, Hoffmann U, Lu MT, Aerts HJWL. Deep convolutional neural networks to predict cardiovascular risk from computed tomography. Nat Commun. 2021;12:715. https://doi.org/10.1038/s41467-021-20966-2.

Kolossváry M, Karády J, Szilveszter B, Kitslaar P, Hoffmann U, Merkely B, Maurovich-Horvat P. Radiomic features are superior to conventional quantitative computed tomographic metrics to identify coronary plaques with napkin-ring sign. Circ Cardiovasc Imaging. 2017;10. https://doi.org/10.1161/CIRCIMAGING.117.006843.

van Hamersvelt RW, Zreik M, Voskuil M, Viergever MA, Išgum I, Leiner T. Deep learning analysis of left ventricular myocardium in CT angiographic intermediate-degree coronary stenosis improves the diagnostic accuracy for identification of functionally significant stenosis. Eur Radiol. 2019;29:2350–9. https://doi.org/10.1007/s00330-018-5822-3.

Cheng K, Lin A, Yuvaraj J, Nicholls SJ, Wong DTL. Cardiac computed tomography radiomics for the non-invasive assessment of coronary inflammation. Cells. 2021;10:879. https://doi.org/10.3390/cells10040879.

Collet J-P, Thiele H, Barbato E, Barthélémy O, Bauersachs J, Bhatt DL, Dendale P, Dorobantu M, Edvardsen T, Folliguet T, Gale CP, Gilard M, Jobs A, Jüni P, Lambrinou E, Lewis BS, Mehilli J, Meliga E, Merkely B, Mueller C, Roffi M, Rutten FH, Sibbing D, Siontis GCM, Kastrati A, Mamas MA, Aboyans V, Angiolillo DJ, Bueno H, Bugiardini R, Byrne RA, Castelletti S, Chieffo A, Cornelissen V, Crea F, Delgado V, Drexel H, Gierlotka M, Halvorsen S, Haugaa KH, Jankowska EA, Katus HA, Kinnaird T, Kluin J, Kunadian V, Landmesser U, Leclercq C, Lettino M, Meinila L, Mylotte D, Ndrepepa G, Omerovic E, Pedretti RFE, Petersen SE, Petronio AS, Pontone G, Popescu BA, Potpara T, Ray KK, Luciano F, Richter DJ, Shlyakhto E, Simpson IA, Sousa-Uva M, Storey RF, Touyz RM, Valgimigli M, Vranckx P, Yeh RW. ESC Guidelines for the management of acute coronary syndromes in patients presenting without persistent ST-segment elevation. Eur Heart J. 2021;42:1289–367. https://doi.org/10.1093/eurheartj/ehaa575.

Hinzpeter R, Wagner MW, Wurnig MC, Seifert B, Manka R, Alkadhi H. Texture analysis of acute myocardial infarction with CT: First experience study. PLoS ONE. 2017;12:e0186876. https://doi.org/10.1371/journal.pone.0186876.

Hu W, Wu X, Dong D, Cui L-B, Jiang M, Zhang J, Wang Y, Wang X, Gao L, Tian J, Cao F. Novel radiomics features from CCTA images for the functional evaluation of significant ischaemic lesions based on the coronary fractional flow reserve score. Int J Cardiovasc Imaging. 2020;36:2039–50. https://doi.org/10.1007/s10554-020-01896-4.

Antonopoulos AS, Sanna F, Sabharwal N, Thomas S, Oikonomou EK, Herdman L, Margaritis M, Shirodaria C, Kampoli A-M, Akoumianakis I, Petrou M, Sayeed R, Krasopoulos G, Psarros C, Ciccone P, Brophy CM, Digby J, Kelion A, Uberoi R, Anthony S, Alexopoulos N, Tousoulis D, Achenbach S, Neubauer S, Channon KM, Antoniades C. Detecting human coronary inflammation by imaging perivascular fat. Sci Transl Med. 2017;9. https://doi.org/10.1126/scitranslmed.aal2658.

Lin A, Kolossváry M, Yuvaraj J, Cadet S, McElhinney PA, Jiang C, Nerlekar N, Nicholls SJ, Slomka PJ, Maurovich-Horvat P, Wong DTL, Dey D. Myocardial infarction associates with a distinct pericoronary adipose tissue radiomic phenotype. JACC Cardiovasc Imaging. 2020;13:2371–83. https://doi.org/10.1016/j.jcmg.2020.06.033.

Mannil M, Kato K, Manka R, von Spiczak J, Peters B, Cammann VL, Kaiser C, Osswald S, Nguyen TH, Horowitz JD, Katus HA, Ruschitzka F, Ghadri JR, Alkadhi H, Templin C. Prognostic value of texture analysis from cardiac magnetic resonance imaging in patients with Takotsubo syndrome: a machine learning based proof-of-principle approach. Sci Rep. 2020;10:20537. https://doi.org/10.1038/s41598-020-76432-4.

Knuuti J, Wijns W, Saraste A, Capodanno D, Barbato E, Funck-Brentano C, Prescott E, Storey RF, Deaton C, Cuisset T, Agewall S, Dickstein K, Edvardsen T, Escaned J, Gersh BJ, Svitil P, Gilard M, Hasdai D, Hatala R, Mahfoud F, Masip J, Muneretto C, Valgimigli M, Achenbach S, Bax JJ, Neumann F-J, Sechtem U, Banning AP, Bonaros N, Bueno H, Bugiardini R, Chieffo A, Crea F, Czerny M, Delgado V, Dendale P, Flachskampf FA, Gohlke H, Grove EL, James S, Katritsis D, Landmesser U, Lettino M, Matter CM, Nathoe H, Niessner A, Patrono C, Petronio AS, Pettersen SE, Piccolo R, Piepoli MF, Popescu BA, Räber L, Richter DJ, Roffi M, Roithinger FX, Shlyakhto E, Sibbing D, Silber S, Simpson IA, Sousa-Uva M, Vardas P, Witkowski A, Zamorano JL, Achenbach S, Agewall S, Barbato E, Bax JJ, Capodanno D, Cuisset T, Deaton C, Dickstein K, Edvardsen T, Escaned J, Funck-Brentano C, Gersh BJ, Gilard M, Hasdai D, Hatala R, Mahfoud F, Masip J, Muneretto C, Prescott E, Saraste A, Storey RF, Svitil P, Valgimigli M, Windecker S, Aboyans V, Baigent C, Collet J-P, Dean V, Delgado V, Fitzsimons D, Gale CP, Grobbee D, Halvorsen S, Hindricks G, Iung B, Jüni P, Katus HA, Landmesser U, Leclercq C, Lettino M, Lewis BS, Merkely B, Mueller C, Petersen S, Petronio AS, Richter DJ, Roffi M, Shlyakhto E, Simpson IA, Sousa-Uva M, Touyz RM, Benkhedda S, Metzler B, Sujayeva V, Cosyns B, Kusljugic Z, Velchev V, Panayi G, Kala P, Haahr-Pedersen SA, Kabil H, Ainla T, Kaukonen T, Cayla G, Pagava Z, Woehrle J, Kanakakis J, Tóth K, Gudnason T, Peace A, Aronson D, Riccio C, Elezi S, Mirrakhimov E, Hansone S, Sarkis A, Babarskiene R, Beissel J, Maempel AJC, Revenco V, de Grooth GJ, Pejkov H, Juliebø V, Lipiec P, Santos J, Chioncel O, Duplyakov D, Bertelli L, Dikic AD, Studenčan M, Bunc M, Alfonso F, Bäck M, Zellweger M, Addad F, Yildirir A, Sirenko Y, Clapp B. ESC Guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J. 2020;41:407–77. https://doi.org/10.1093/eurheartj/ehz425.

Libby P, Ridker PM, Maseri A. Inflammation and atherosclerosis. Circulation. 2002;105:1135–43. https://doi.org/10.1161/hc0902.104353.

Liu X, Mo X, Zhang H, Yang G, Shi C, Hau WK. A 2-year investigation of the impact of the computed tomography–derived fractional flow reserve calculated using a deep learning algorithm on routine decision-making for coronary artery disease management. Eur Radiol. 2021;31:7039–46. https://doi.org/10.1007/s00330-021-07771-7.

Martin SS, Mastrodicasa D, van Assen M, De Cecco CN, Bayer RR, Tesche C, Varga-Szemes A, Fischer AM, Jacobs BE, Sahbaee P, Griffith LP, Matuskowitz AJ, Vogl TJ, Schoepf UJ. Value of Machine Learning–based coronary CT fractional flow reserve applied to triple-rule-out CT angiography in acute chest pain. Radiol Cardiothorac Imaging. 2020;2:e190137. https://doi.org/10.1148/ryct.2020190137.

Ebrahimian S, Digumarthy SR, Homayounieh F, Primak A, Lades F, Hedgire S, Kalra MK. Use of radiomics to differentiate left atrial appendage thrombi and mixing artifacts on single-phase CT angiography. Int J Cardiovasc Imaging. 2021;37:2071–8. https://doi.org/10.1007/s10554-021-02178-3.

Nam K, Suh YJ, Han K, Park SJ, Kim YJ, Choi BW. Value of computed tomography radiomic features for differentiation of periprosthetic mass in patients with suspected prosthetic valve obstruction. Circ Cardiovasc Imaging. 2019;12:1–10. https://doi.org/10.1161/CIRCIMAGING.119.009496.

Qin L, Chen C, Gu S, Zhou M, Xu Z, Ge Y, Yan F, Yang W. A radiomic approach to predict myocardial fibrosis on coronary CT angiography in hypertrophic cardiomyopathy. Int J Cardiol. 2021;337:113–8. https://doi.org/10.1016/j.ijcard.2021.04.060.

Esposito A, Palmisano A, Antunes S, Colantoni C, Rancoita PMV, Vignale D, Baratto F, Della Bella P, Del Maschio A, De Cobelli F. Assessment of remote myocardium heterogeneity in patients with ventricular tachycardia using texture analysis of late iodine enhancement (LIE) cardiac computed tomography (cCT) images. Mol Imaging Biol. 2018;20:816–25. https://doi.org/10.1007/s11307-018-1175-1.

Tsuneta S, Oyama-Manabe N, Hirata K, Harada T, Aikawa T, Manabe O, Ohira H, Koyanagawa K, Naya M, Kudo K. Texture analysis of delayed contrast-enhanced computed tomography to diagnose cardiac sarcoidosis. Jpn J Radiol. 2021;39:442–50. https://doi.org/10.1007/s11604-020-01086-1.

Kay FU, Abbara S, Joshi PH, Garg S, Khera A, Peshock RM. Identification of high-risk left ventricular hypertrophy on calcium scoring cardiac computed tomography scans. Circ Cardiovasc Imaging. 2020;13:1–11. https://doi.org/10.1161/CIRCIMAGING.119.009678.

Cetin I, Sanroma G, Petersen SE, Napel S, Camara O, Ballester M-AG, Lekadir K. A radiomics approach to computer-aided diagnosis with cardiac cine-MRI. In: International Workshop on Statistical Atlases and Computational Models of the Heart. 2018. p. 82–90. https://doi.org/10.1007/978-3-319-75541-0_9.

Cetin I, Raisi-Estabragh Z, Petersen SE, Napel S, Piechnik SK, Neubauer S, Gonzalez Ballester MA, Camara O, Lekadir K. Radiomics signatures of cardiovascular risk factors in cardiac MRI: results from the UK Biobank. Front Cardiovasc Med. 2020;7:1–12. https://doi.org/10.3389/fcvm.2020.591368.

Chen B, An D, He J, Wu C-W, Yue T, Wu R, Shi R, Eteer K, Joseph B, Hu J, Xu J-R, Wu L-M, Pu J. Myocardial extracellular volume fraction radiomics analysis for differentiation of reversible versus irreversible myocardial damage and prediction of left ventricular adverse remodeling after ST-elevation myocardial infarction. Eur Radiol. 2021;31:504–14. https://doi.org/10.1007/s00330-020-07117-9.

Engan K, Eftestol T, Orn S, Kvaloy JT, Woie L. Exploratory data analysis of image texture and statistical features on myocardium and infarction areas in cardiac magnetic resonance images. In: 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology. IEEE; 2010. p. 5728–31. https://doi.org/10.1109/IEMBS.2010.5627866.

Larroza A, López-Lereu MP, Monmeneu JV, Gavara J, Chorro FJ, Bodí V, Moratal D. Texture analysis of cardiac cine magnetic resonance imaging to detect nonviable segments in patients with chronic myocardial infarction. Med Phys. 2018;45:1471–80. https://doi.org/10.1002/mp.12783.

Ma Q, Ma Y, Yu T, Sun Z, Hou Y. Radiomics of non-contrast-enhanced T1 mapping: diagnostic and predictive performance for myocardial injury in acute ST-segment-elevation myocardial infarction. Korean J Radiol. 2021;22:535. https://doi.org/10.3348/kjr.2019.0969.

Zhang N, Yang G, Gao Z, Xu C, Zhang Y, Shi R, Keegan J, Xu L, Zhang H, Fan Z, Firmin D. Deep learning for diagnosis of chronic myocardial infarction on nonenhanced cardiac cine MRI. Radiology. 2019;291:606–17. https://doi.org/10.1148/radiol.2019182304.

Eftestøl T, Woie L, Engan K, Kvaløy JT, Nilsen DWT, Ørn S. Texture analysis to assess risk of serious arrhythmias after myocardial infarction. 2012. p. 365–8.

Baessler B, Luecke C, Lurz J, Klingel K, Das A, von Roeder M, de Waha-Thiele S, Besler C, Rommel K-P, Maintz D, Gutberlet M, Thiele H, Lurz P. Cardiac MRI and texture analysis of myocardial T1 and T2 maps in myocarditis with acute versus chronic symptoms of heart failure. Radiology. 2019;292:608–17. https://doi.org/10.1148/radiol.2019190101.

Neisius U, El-Rewaidy H, Nakamori S, Rodriguez J, Manning WJ, Nezafat R. Radiomic analysis of myocardial native T1 imaging discriminates between hypertensive heart disease and hypertrophic cardiomyopathy. JACC Cardiovasc Imaging. 2019;12:1946–54. https://doi.org/10.1016/j.jcmg.2018.11.024.

Alis D, Guler A, Yergin M, Asmakutlu O. Assessment of ventricular tachyarrhythmia in patients with hypertrophic cardiomyopathy with machine learning-based texture analysis of late gadolinium enhancement cardiac MRI. Diagn Interv Imaging. 2020;101:137–46. https://doi.org/10.1016/j.diii.2019.10.005.

Cheng S, Fang M, Cui C, Chen X, Yin G, Prasad SK, Dong D, Tian J, Zhao S. LGE-CMR-derived texture features reflect poor prognosis in hypertrophic cardiomyopathy patients with systolic dysfunction: preliminary results. Eur Radiol. 2018;28:4615–24. https://doi.org/10.1007/s00330-018-5391-5.

Baeßler B, Mannil M, Maintz D, Alkadhi H, Manka R. Texture analysis and machine learning of non-contrast T1-weighted MR images in patients with hypertrophic cardiomyopathy—Preliminary results. Eur J Radiol. 2018;102:61–7. https://doi.org/10.1016/j.ejrad.2018.03.013.

Wang J, Yang F, Liu W, Sun J, Han Y, Li D, Gkoutos GV, Zhu Y, Chen Y. Radiomic analysis of native T1 mapping images discriminates between MYH7 and MYBPC3-related hypertrophic cardiomyopathy. J Magn Reson Imaging. 2020;52:1714–21. https://doi.org/10.1002/jmri.27209.

Shao X, Sun Y, Xiao K, Zhang Y, Zhang W, Kou Z, Cheng J. Texture analysis of magnetic resonance T1 mapping with dilated cardiomyopathy. Medicine (Baltimore). 2018;97:e12246. https://doi.org/10.1097/MD.0000000000012246.

Sammani A, Baas AF, Asselbergs FW, te Riele ASJM. Diagnosis and risk prediction of dilated cardiomyopathy in the era of big data and genomics. J Clin Med. 2021;10:921. https://doi.org/10.3390/jcm10050921.

Bustamante M, Viola F, Carlhäll C, Ebbers T. Using deep learning to emulate the use of an external contrast agent in cardiovascular 4D Flow MRI. J Magn Reson Imaging. 2021;54:777–86. https://doi.org/10.1002/jmri.27578.

Saeed M. Editorial for “Reduction of contrast agent dose in cardiovascular MR angiography using deep learning”. J Magn Reson Imaging. 2021;54:806–7. https://doi.org/10.1002/jmri.27618.

Neisius U, El-Rewaidy H, Kucukseymen S, Tsao CW, Mancio J, Nakamori S, Manning WJ, Nezafat R. Texture signatures of native myocardial T1 as novel imaging markers for identification of hypertrophic cardiomyopathy patients without scar. J Magn Reson Imaging. 2020;52:906–19. https://doi.org/10.1002/jmri.27048.

van Dijk JD, Mouden M, Ottervanger JP, van Dalen JA, Knollema S, Slump CH, Jager PL. Value of attenuation correction in stress-only myocardial perfusion imaging using CZT-SPECT. J Nucl Cardiol. 2017;24:395–401. https://doi.org/10.1007/s12350-015-0374-2.

Betancur J, Rubeaux M, Fuchs TA, Otaki Y, Arnson Y, Slipczuk L, Benz DC, Germano G, Dey D, Lin CJ, Berman DS, Kaufmann PA, Slomka PJ. Automatic valve plane localization in myocardial perfusion SPECT/CT by machine learning: anatomic and clinical validation. J Nucl Med. 2017;58:961–7. https://doi.org/10.2967/jnumed.116.179911.

Apostolopoulos ID, Apostolopoulos DI, Spyridonidis TI, Papathanasiou ND, Panayiotakis GS. Multi-input deep learning approach for cardiovascular disease diagnosis using myocardial perfusion imaging and clinical data. Phys Medica. 2021;84:168–77. https://doi.org/10.1016/j.ejmp.2021.04.011.

Betancur J, Commandeur F, Motlagh M, Sharir T, Einstein AJ, Bokhari S, Fish MB, Ruddy TD, Kaufmann P, Sinusas AJ, Miller EJ, Bateman TM, Dorbala S, Di Carli M, Germano G, Otaki Y, Tamarappoo BK, Dey D, Berman DS, Slomka PJ. Deep learning for prediction of obstructive disease from fast myocardial perfusion SPECT. JACC Cardiovasc Imaging. 2018;11:1654–63. https://doi.org/10.1016/j.jcmg.2018.01.020.

Eisenberg E, Miller RJH, Hu L, Rios R, Betancur J, Azadani P, Han D, Sharir T, Einstein AJ, Bokhari S, Fish MB, Ruddy TD, Kaufmann PA, Sinusas AJ, Miller EJ, Bateman TM, Dorbala S, Di Carli M, Liang JX, Otaki Y, Tamarappoo BK, Dey D, Berman DS, Slomka PJ. Diagnostic safety of a machine learning-based automatic patient selection algorithm for stress-only myocardial perfusion SPECT. J Nucl Cardiol. 2021. https://doi.org/10.1007/s12350-021-02698-4.

Hu L, Betancur J, Sharir T, Einstein AJ, Bokhari S, Fish MB, Ruddy TD, Kaufmann PA, Sinusas AJ, Miller EJ, Bateman TM, Dorbala S, Di Carli M, Germano G, Commandeur F, Liang JX, Otaki Y, Tamarappoo BK, Dey D, Berman DS, Slomka PJ. Machine learning predicts per-vessel early coronary revascularization after fast myocardial perfusion SPECT: results from multicentre REFINE SPECT registry. Eur Heart J Cardiovasc Imaging. 2020;21:549–59. https://doi.org/10.1093/ehjci/jez177.

Liu H, Wu J, Miller EJ, Liu C, Liu Y, Liu Y. Diagnostic accuracy of stress-only myocardial perfusion SPECT improved by deep learning. Eur J Nucl Med Mol Imaging. 2021;48:2793–800. https://doi.org/10.1007/s00259-021-05202-9.

Slart RHJA, Williams MC, Juarez-Orozco LE, Rischpler C, Dweck MR, Glaudemans AWJM, Gimelli A, Georgoulias P, Gheysens O, Gaemperli O, Habib G, Hustinx R, Cosyns B, Verberne HJ, Hyafil F, Erba PA, Lubberink M, Slomka P, Išgum I, Visvikis D, Kolossváry M, Saraste A. Position paper of the EACVI and EANM on artificial intelligence applications in multimodality cardiovascular imaging using SPECT/CT, PET/CT, and cardiac CT. Eur J Nucl Med Mol Imaging. 2021;48:1399–413. https://doi.org/10.1007/s00259-021-05341-z.

van den Oever LB, Vonder M, van Assen M, van Ooijen PMA, de Bock GH, Xie XQ, Vliegenthart R. Application of artificial intelligence in cardiac CT: from basics to clinical practice. Eur J Radiol. 2020;128:108969. https://doi.org/10.1016/j.ejrad.2020.108969.

Narang A, Bae R, Hong H, Thomas Y, Surette S, Cadieu C, Chaudhry A, Martin RP, McCarthy PM, Rubenson DS, Goldstein S, Little SH, Lang RM, Weissman NJ, Thomas JD. Utility of a deep-learning algorithm to guide novices to acquire echocardiograms for limited diagnostic use. JAMA Cardiol. 2021;6:624. https://doi.org/10.1001/jamacardio.2021.0185.

Santini G, Zumbo LM, Martini N, Valvano G, Leo A, Ripoli A, Avogliero F, Chiappino D, Della Latta D. Synthetic contrast enhancement in cardiac CT with deep learning. arXiv. 2018. p. 1–8. http://arxiv.org/abs/1807.01779.

Padgett J, Biancardi AM, Henschke CI, Yankelevitz D, Reeves AP. Local noise estimation in low-dose chest CT images. Int J Comput Assist Radiol Surg. 2014;9:221–9. https://doi.org/10.1007/s11548-013-0930-7.

Wolterink JM, Leiner T, Viergever MA, Išgum I. Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging. 2017;36:2536–45. https://doi.org/10.1109/TMI.2017.2708987.

Küstner T, Armanious K, Yang J, Yang B, Schick F, Gatidis S. Retrospective correction of motion-affected MR images using deep learning frameworks. Magn Reson Med. 2019;82:1527–40. https://doi.org/10.1002/mrm.27783.

Montalt-Tordera J, Muthurangu V, Hauptmann A, Steeden JA. Machine learning in magnetic resonance imaging: image reconstruction. Phys Medica. 2021;83:79–87. https://doi.org/10.1016/j.ejmp.2021.02.020.

Hauptmann A, Arridge S, Lucka F, Muthurangu V, Steeden JA. Real-time cardiovascular MR with spatio-temporal artifact suppression using deep learning–proof of concept in congenital heart disease. Magn Reson Med. 2019;81:1143–56. https://doi.org/10.1002/mrm.27480.

van Timmeren JE, Cester D, Tanadini-Lang S, Alkadhi H, Baessler B. Radiomics in medical imaging—“how-to” guide and critical reflection. Insights Imaging. 2020;11:91. https://doi.org/10.1186/s13244-020-00887-2.

Stanzione A, Gambardella M, Cuocolo R, Ponsiglione A, Romeo V, Imbriaco M. Prostate MRI radiomics: a systematic review and radiomic quality score assessment. Eur J Radiol. 2020;129:109095. https://doi.org/10.1016/j.ejrad.2020.109095.

Chetan MR, Gleeson FV. Radiomics in predicting treatment response in non-small-cell lung cancer: current status, challenges and future perspectives. Eur Radiol. 2021;31:1049–58. https://doi.org/10.1007/s00330-020-07141-9.

Zhong J, Hu Y, Si L, Jia G, Xing Y, Zhang H, Yao W. A systematic review of radiomics in osteosarcoma: utilizing radiomics quality score as a tool promoting clinical translation. Eur Radiol. 2021;31:1526–35. https://doi.org/10.1007/s00330-020-07221-w.

Ponsiglione A, Stanzione A, Cuocolo R, Ascione R, Gambardella M, De Giorgi M, Nappi C, Cuocolo A, Imbriaco M. Cardiac CT and MRI radiomics: systematic review of the literature and radiomics quality score assessment. Eur Radiol. 2021. https://doi.org/10.1007/s00330-021-08375-x.

Lambin P, Leijenaar RTH, Deist TM, Peerlings J, de Jong EEC, van Timmeren J, Sanduleanu S, Larue RTHM, Even AJG, Jochems A, van Wijk Y, Woodruff H, van Soest J, Lustberg T, Roelofs E, van Elmpt W, Dekker A, Mottaghy FM, Wildberger JE, Walsh S. Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol. 2017;14:749–62. https://doi.org/10.1038/nrclinonc.2017.141.

Sauerbrei W, Taube SE, McShane LM, Cavenagh MM, Altman DG. Reporting recommendations for tumor marker prognostic studies (REMARK): an abridged explanation and elaboration. JNCI J Natl Cancer Inst. 2018;110:803–11. https://doi.org/10.1093/jnci/djy088.

Challen R, Denny J, Pitt M, Gompels L, Edwards T, Tsaneva-Atanasova K. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. 2019;28:231–7. https://doi.org/10.1136/bmjqs-2018-008370.

Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–8. https://doi.org/10.1038/nature21056.

Oakden-Rayner L. Exploring large-scale public medical image datasets. Acad Radiol. 2020;27:106–12. https://doi.org/10.1016/j.acra.2019.10.006.

Cuocolo R, Stanzione A, Castaldo A, De Lucia DR, Imbriaco M. Quality control and whole-gland, zonal and lesion annotations for the PROSTATEx challenge public dataset. Eur J Radiol. 2021;138:109647. https://doi.org/10.1016/j.ejrad.2021.109647.

Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health. 2021;3:e745–50. https://doi.org/10.1016/S2589-7500(21)00208-9.

Ethics declarations

Human and Animal Rights and Informed Consent

All reported studies/experiments with human or animal subjects performed by the authors have been previously published and complied with all applicable ethical standards (including the Helsinki declaration and its amendments, institutional/national research committee standards, and international/national/institutional guidelines).

Conflict of Interest

The authors declare no conflict of interest.

Additional information

This article is part of the Topical Collection on Cardiac Nuclear Imaging

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Spadarella, G., Perillo, T., Ugga, L. et al. Radiomics in Cardiovascular Disease Imaging: from Pixels to the Heart of the Problem. Curr Cardiovasc Imaging Rep 15, 11–21 (2022). https://doi.org/10.1007/s12410-022-09563-z

DOI: https://doi.org/10.1007/s12410-022-09563-z