Abstract

Being aware of mutual influences between individuals is a major requirement a robot to efficiently operate in human populated environments. This is especially true for the navigation among humans with its mutual avoidance maneuvers. While humans easily manage this task, robotic systems are still facing problems. Most of the recent approaches concentrate on predicting the motions of humans individually and deciding afterwards. Thereby, interactivity is mostly neglected. In this work, we go one step back and focus on understanding the underlying principle of human decision making in the presence of multiple humans. Non-cooperative game theory is applied to formulate the problem of predicting the decisions of multiple humans that interact which each other during navigation. Therefore, we use the theory of Nash equilibria in static and dynamic games where different cost functions from literature rate the payoffs of the individual humans. The approach anticipates collisions and additionally reasons about several avoidance maneuvers of all humans. For the evaluation of the game theoretic approach we recorded trajectories of humans passing each other. The evaluation shows that game theory is able to reproduce the decision process of humans more accurately than a decision model that predicts humans individually.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Robots have evolved immensely in the past decades, but there is still a long way to go in terms of enabling them to permanently operate in human populated environments. First achievements include robots that navigate autonomously to unknown places [7, 53] or guide people at fairs [62]. Apart from that, autonomous vehicles already navigate in urban environments among human-driven vehicles [81]. Other robots interact physically with humans, for example they hand over objects [72] or assist elderly people [63]. These applications show that the barrier between robots and humans has been fading, which leads to a major challenge in robotics: ensuring reliable and socially accepted motion in order to realize the human-robot coexistence. A vital factor for achieving this goal is the awareness of the mutual influence between human individuals and the robotic systems. Modern robotic systems have to consider that humans are interaction-aware: they reason about the impact of possible future actions on the surrounding and expect similar anticipation from everyone else [6, 55, 61]. Of our particular interest is the interaction-aware navigation of humans, meaning the conditionally cooperative behavior that leads to mutual avoidance maneuvers. Common motion planners neglect this notion and focus on independent motion prediction of individuals. Thereby, the prediction can be unreliable because it is indifferent to humans that might avoid the robot. It is important, that the future trajectory of the human depends on the motion of the surrounding humans and on the motion of the robot. Consequences are unnecessary detours, inefficient stop and go motions, or a complete standstill if the planner fails to identify a collision-free trajectory. The worst case is a collision with a human. Trautman et al. [78] argue that these problems would still exist with perfect prediction and that they could only be resolved by anticipating the human mutual collision avoidance. This clarifies that the individual motion prediction of humans and a consecutive planning of the robot motion is a poor model for human decision making. Hence, a main reason of robotic problems lies in an insufficient model of the human decision process. That is why we model human decision making in the presence of multiple humans during navigation. We formulate a decision model that considers interaction-awareness and reasoning. On top, the model can cope with human diversity: humans decide individually (decentralized planning) and have different preferences (e.g., speed, goal).

In this paper, the problem formulation of interaction-aware decision making is based on game theory. Game theory is “the study of mathematical models of conflict and cooperation between intelligent rational decision-makers” [51, p. 1]. It extends the traditional optimal control theory to a decentralized multi-agent decision problem [3, p. 3]. Thus, prediction and planning are considered simultaneously. We specifically choose to use game theory because it is a mathematical formulation and incorporates reasoning about possible actions of others and consequences of interdependencies, i.e. interaction-awareness. It further allows for individual decision making and individual utility functions that capture preferences. Its strength lies within its generalizability. Accordingly, a variety of modeling approaches exists, as well as diverse solution concepts that aim to predict the decision of agents, for example which trajectory they will take. Within this work, our focus lies on the solution concept of Nash equilibria in non-cooperative games. These are equilibria where no one gains anything by only changing the own decision.

General Idea: Approximating the decision making of humans during interaction-aware navigation with the theory of Nash equilibria in non-cooperative games.

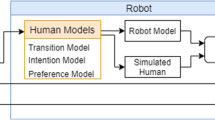

In the following, two possible ways to model human navigation are presented. One model assumes simultaneous decision making, the other one sequential decision making. Both models are combined with cost functions from literature. We evaluate for which of these combinations, Nash’s theory reproduces the navigational decisions of humans best. Thereby, the evaluation is based on captured human motion data to ensure real human behavior. Additionally, the game theoretic approach is compared with a common prediction based decision model. Our intention is to draw further conclusions about human navigational behavior and to highlight the potential of game theory for this problem. Note, that the presented work focuses on humans and on modeling their decisions. We do not yet present a motion prediction or motion planning algorithm for robots. However, the derived knowledge can improve motion prediction of humans and robot motion planning, as well as the social acceptance of robots. If the behavior of a robot is based on a human-like decision process, its intentions are far easier to interpret and as a result, a human interaction partner feels more secure [12, 77] (Fig. 1).

This paper is organized as follows. Sect. 2 surveys the work related to human motion analysis and interaction-aware navigation. The next section gives an outline of the game theoretic method used to analyze human motion (Sect. 3); two different models and five possible cost functions are presented. The experimental setup and the evaluation method are discussed in Sect. 4, followed by the results in Sect. 5, and possible extensions in Sect. 6.

2 Related Work

The problem of modeling interaction-awareness of several agents has been addressed in different areas including human motion analysis, computer animation and robot motion planning. It is particularly attractive for a branch within the latter field—the socially-aware robot navigation [39, 66]. Related experiments and motion planners that consider interactivity are presented in this section. Additionally, the section elaborates about applications of game theory in motion planning and decision making problems.

Various groups of researches have studied human collision avoidance during walking [5, 6, 15, 16, 31, 54, 55, 61]. They have been interested in when, where, as well as to which extent humans adjust their path or velocity to avoid a collision with another dynamic object. All studies agree that humans anticipate the future motion of dynamic objects and possible collisions. That means that humans include prediction into their own motion planning and do not solely react. However, parts of these studies neglect the interaction-awareness of humans during walking by only considering avoidance maneuvers with a passive, dynamic object. For example, the subjects of the studies by Cinelli and Patla [15, 16] had to avoid a human-shaped doll that was moving towards them; Basili et al. [5] and Huber et al. [31] asked their participants to cross paths with a non-reacting human.

In contrast are the studies from Pettré et al. [61], van Basten et al. [6], and Olivier et al. [54, 55]. These authors told two participants to avoid a collision, which revealed that humans collaboratively adjust their gait. Interestingly, the amount of adjustment was unequally distributed [55, 61]: the person passing first had less effort because s/he mainly adopted the velocity, whereas the one giving way adjusted both, velocity and path. In summary, analyzing human locomotor trajectories shows up the important characteristics of human collision avoidance. This can be used to evaluate or enhance the human-likeness of motion models. Unfortunately, it does not reveal how to reproduce human avoidance behavior in order to use it for motion prediction or motion planning.

Researchers have often based human motion models on repulsive forces acting on particles. That has been especially popular for crowd simulations: Pelechano et al. [59] or Sud et al. [76] employ the social forces model [28] where the agents are exposed to different repulsive and attractive forces depending on their relative distances; Heïgeas et al. [27] define forces in analogy to a spring-damper system with varying stiffness and viscosity values; and Treuille et al. [79] use a potential field approach. However, we refrain from elaborating this field deeper because most works are based on reactive approaches and neglect that humans include prediction in their motion planning. While this may be appropriate for high density crowds, reactive approaches struggle—according to [5, 31, 61]—with creating locally realistic motions in low or medium density crowds.

Trautman et al. [78] focus on these medium density crowds and plan further ahead by relying on Gaussian processes. The authors define the “Freezing Robot Problem”: once the environment gets to crowded, the planner rates all possible maneuvers as unsafe due to increasing prediction uncertainty. As a result, the robot “freezes” or performs unnecessary detours. They argue that this problem would still exist, even without uncertainty and with perfect prediction. It could be resolved by anticipating the human collaborative collision avoidance. They developed a non-parametric statistical model based on Gaussian processes that estimates crowd interaction from data. Thereby, independent Gaussian processes are coupled by a repulsive force between the agents. Experiments verified that the interactive algorithm outperforms a merely reactive one.

An earlier approach was shown by Reynolds [65]. He uses different steering behaviors to simulate navigating agents. One of these behaviors—the unaligned collision avoidance—predicts future collisions based on a constant velocity assumption and gets the agents to adjust steering and velocity to avoid state-time space leading to a collision.

In contrast to this rule based method are approaches based on velocity obstacles [22], like its probabilistic extensions [37]. Van den Berg et al. [9] combine a precomputed roadmap with so-called reciprocal velocity obstacles. This approach is updated in [10] to the optimal reciprocal collision avoidance that guarantees collision-free navigation for multiple robots assuming a holonomic motion model. Further assumptions are that each robot possesses perfect knowledge about the shape, position and velocity of other robots and objects in the environment. Extensions that incorporate kinematic and dynamic constraints exist in [1, 74].

However, Pettré et al. [61] state that the works in [65] and [9] lack to simulate the large variety of the human behavior because they rely on near-constant anticipation-times and on the common knowledge that all agents apply the same avoidance strategy. Instead, they presented an approach which produces more human-like trajectories: they solve pairwise interactions with a geometrical model based on the relative positions and velocities of the agents. It is tuned with experimental data and analyzed according its validity for crowds by Ondřej et al. [56]. The authors state to perform better, in a sense that the travel duration of the agents is shorter, when compared to [9] or [28].

Shiomi et al. [71] developed a robot that successfully navigates within a shopping mall. It relies on an extended social force model that includes the time to collision as a parameter to compute an elliptic repulsion field around an agent [85]. Mutual collision avoidance is implicitly introduced by calibrating the repulsion force with human avoidance trajectories. A field trial revealed that the robot using this method was perceived as safer than if it used a time varying dynamic window approach [70]. Nevertheless, most of the mentioned methods assume that an agent’s behavior can be described by a limited set of rules.

Recently, learning based approaches are becoming increasingly popular. Lerner et al. [43] extract trajectories from video data to simulate human crowds. They create a database containing example navigation behaviors that are described by the spatio-temporal relationship between nearby persons and objects. During the simulation the current state of the environment is compared with the entries of the database. The most similar entry defines the trajectory of the agent, thus, implicitly creates reactive behavior. This means that the variety of the behaviors is limited to the size of the database. Moreover, all individuals need to be controlled globally.

Luber et al. [46] proposed an unsupervised learning approach based on clustering observed, pairwise navigation behaviors into different motion prototypes. These prototypes are defined by the relative distance of two agents over time and their approaching angle. They are used to derive a dynamic cost map for a Theta\(^*\) planner that generates a path at each time step.

Apart from that, many researchers rely on inverse reinforcement learning techniques. Kuderer et al. [40] learn a certain navigation policy while a robot is tele-operated. The principle of maximum entropy [88] is used to learn the weights of the feature functions. In particular, homotopy classes (i.e., on which side to pass an object) are considered. Kretzschmar et al. [38] improve this method further; they used a joint mixture distribution that consists on one hand of a discrete distribution over these homotopy classes, and on the other hand of continuous distributions over the trajectories in each homotopy class. An experiment revealed that the resulting trajectories are perceived as more human-like than the one produced by their previous method [40] or the social force model [28].

An alternative is given by Henry et al. [29] who learn how a simulated agent has to join a pedestrian flow by using the density and average flow directions as features. Similarly, Kim and Pineau [36] proposed to use the population density and velocity of the surrounding objects. The effect of the different features in [29] and [36] were investigate by Vasquez et al. [82] and compared with social force features [28]. Results showed that the social force features perform best when applied specifically for the learned scene, but seem to generalize worst to other scenes. The features in [29] and [36] are more generalizable and manage similarly well.

A new approach to consider interaction-awareness is to model the navigational decision problem with game theory. By providing the language to formulate decision problems, game theory has already found some ways into robotics. It is used in robust control [4, 23, 58], for example for landing an aircraft [23]. Also the task planning of a planetary surface rover runs with game theoretic tools [32].

The use of game theory is also growing within the robotic research community, in particular in the fields of motion planning and coordination. LaValle and Hutchinson [42] were among the first who proposed game theory for the high-level planning of multiple robot coordination. Specific applications are a multi-robot search for several targets [48], the shared exploration of structured workspaces like building floors [73], or coalition formation [24]. Closely related to these coordination tasks is the family of pursuit-evasion problems. For example in [3, 49, 83], and can be formulated as a zero-sum or differential game. Zhang et al. [86] introduced a control policy for a motion planner that enables a robot to avoid static obstacles and to coordinate its motion with other robots. Their policy is based on zero-sum games and assigning priorities to the different robots. Thus, it eludes possible mutual avoidance maneuvers by treating robots with a higher priority as static obstacles. The motion of multiple robots with the same priority are coordinated within the work of Roozbehani et al. [67]. They focused on crossings and developed cooperative strategies to resolve conflicts among autonomous vehicles. Recently, Zhu et al. [87] discussed a game theoretic controller synthesis for multi-robot motion planning. So far, there has been almost no attempts to connect game theory with models for human motion—with the exception of Hoogendoorn and Bovy [30]. They focus on simulating crowd movements and generated promising results, especially for pedestrian flows, by formulating the walking behavior as a differential game. However, they do not solve the original problem or discuss a common solution concept like equilibrium solutions. They eventually transform the problem into an independent optimal control problem based on interactive cost terms. They specifically payed attention on reproducing human-like crowd behavior, hence, their simulation based evaluation is qualitative and assesses the macroscopic group behavior.

Our approach is to analyze human motion—in particular, the human avoidance behavior—from a game theoretic perspective. Using game theory provides several advantages over existing approaches. Compared to merely reactive methods, the key factor of game theory is the mutual anticipation of the influence of other agents’ possible motions on oneself and vice versa; costs (or payoffs) of own actions can depend on decisions of others. Thus, future interactions are predicted and incorporated which corresponds to human behavior. Moreover, individual cost functions can be assigned to each agent if desired. As a result, agents can behave asymmetrically which overcomes the restrictions of most of the mentioned algorithms with anticipated collisions avoidance. Learning based methods are very promising because of their inherent usage of real, human motion data. This is at the same time a drawback because their validity is dependent on the versatility of the their experimental data. In contrast, game theory offers a more general formulation with a variety of extensions and can apply learned cost functions as well.

In this paper, interaction-aware decision making is formulated as a non-cooperative game, whereas a static and a dynamic representation is proposed. Navigational decisions are predicted by calculating Nash equilibria dependent on alternative cost functions form literature. We further evaluate which combination of model and cost function approximates recorded human navigation experiment best. Note, that we do not present an operational motion planner or prediction algorithm in this paper. The presented approach is based on our previous work [80]. We extend our analysis by also regarding the Nash equilibria of dynamic games and by examining four additional cost functions, which mainly perform better than the previously used one. Further, a comparison between the game theoretic decision model and a commonly used prediction based one is conducted.

3 Analyzing Interaction-Aware Navigation with Game Theory

For a game theoretic formulation of human decision making during navigation we consider two game formulations with five different cost functions each. Then the solution concept of Nash equilibria is used to predict possible outcomes of the game. In the following, game theoretic terms and tools are briefly explained and an illustrative example is given.

3.1 Defining Static and Dynamic Games

The two considered models are finite, non-cooperative, nonzero-sum, perfect information games. However, one game is static, the other one is dynamic. In the static case, decisions are taken simultaneously, in contrast to deciding sequentially in dynamic games. The term non-cooperative refers to a game theoretic branch wherein all agents aim to minimize their cost individually.Footnote 1 One speaks of a nonzero-sum game if the sum of each agent’s costs can differ from zero. Keeping that in mind, the components of the static game are defined by:

Definition 1

(Static Game) Finite, static, non-cooperative, nonzero-sum game [44]:

-

1.

Finite set of N players \(\mathcal {P}= \{P_1,\dots ,P_N\}\).

-

2.

Finite action set \(\mathcal {A}= \mathcal {A}_1\times \dots \times \mathcal {A}_N\), where \(\mathcal {A}_i\) is defined for each player \(P_i \in \mathcal {P}\). Each \(a_i^j \in \mathcal {A}_i\) is referred to as an action of \(P_i\), with \(j = \{1,2,\dots ,M_i\}\) and \(M_i\) being the number of actions of \(P_i\).

-

3.

Cost function \(J_i\): \(\mathcal {A}_1 \times \mathcal {A}_2 \times \dots \times \mathcal {A}_N \rightarrow \mathbb {R} \cup \{\infty \}\) for each player \(P_i \in \mathcal {P}\).

The game is finite if the number of actions is bounded for all players. The subscript i always refers to the addressed player. Each player \(P_i\) has different actions \(a_i^j \in \mathcal {A}_i\) and a cost function \(J_i\). The superscript j refers to an action, and \(a_i^j\) is the jth action out of \(M_i\) actions of player \(P_i\).

In a static game, as defined above, the players decide once and simultaneously. Hence, navigating agents are modeled as if they observe the situation first, and then decide instinctively. This assumption of humans using default collision avoidance strategies is supported by Huber et al. [31].

Other studies [55, 61] in turn state that the amount of shared effort during the avoidance maneuvers is unequal, depending on who is first. This indicates that humans observe and react, which may be more accurately modeled by considering sequential decisions. Dynamic games model these situations where decisions during navigation are taken sequentially. In this case, the property of perfect information in games becomes important to get a complete definition of the game. A game is a perfect information game if each player perfectly knows about the actions of all players that happened previously. Thus for the dynamic model of navigation, it is assumed that the agents choose consecutively, and an instant after they observe the actions of the agents acting before them. It is unclear if the static or dynamic model is more accurate. Both models are evaluated and compared in this work.

A mathematical description of a dynamic game is the extensive form. This form emphasizes the sequential decision making. The components are given by:

Definition 2

(Dynamic Game) Finite, perfect information, dynamic, non-cooperative, nonzero-sum game [44]:

-

1.

Finite set of N players \(\mathcal {P}= \{P_1,\dots ,P_N\}\).

-

2.

Finite action set \(\mathcal {A}= \mathcal {A}_1\times \dots \times \mathcal {A}_N\).

-

3.

Set of terminal nodes \(\mathcal {Z}\).

-

4.

Cost function \(J_i: \mathcal {Z} \rightarrow \mathbb {R} \cup \{\infty \} \) for each \(P_i \in \mathcal {P}\).

-

5.

Set of choice nodes \(\mathcal {H}\).

-

6.

Action function \(\chi :\,\mathcal {H} \rightarrow 2^{\mathcal {A}}\); assigns each choice node a set of possible actions.

-

7.

Player function \(\rho :\,\mathcal {H} \rightarrow \mathcal {P}\); assigns each choice node a player \(P_i \in \mathcal {P}\) who chooses the action at the node.

-

8.

Successor function \(\sigma :\,\mathcal {H}\times \mathcal {A} \rightarrow \mathcal {H}\cup \mathcal {Z}\); uniquely assigns a choice node and an action a subsequent node.

Informally speaking, a dynamic game is a (graph theoretic) tree, in which each node depicts a decision of one of the agents, each edge depicts an action, and each leaf depicts a final game outcome.

3.2 Solving Games: Strategies and the Nash Equilibrium

The definitions introduced before withhold which actions a player should choose. Game theorists introduced diverse solution concepts that can be interpreted as an advice or used as prediction of what is likely to happen. One of the most famous solution concepts is the Nash equilibrium: it is an allocation where no player can reduce the own cost by changing the strategy if the other players stick to their strategies. Thus, a Nash equilibrium is a best response for everyone. It implies that agents aim to minimize their own cost. This is corroborated by existing literature stating that humans execute their motions by following a minimization principle. Accordingly, humans minimize for example the energy consumption of their gait [47, 75], or the global length of their paths [11]. Also psychologists claim that even infants expect a moving agent as having goals which it aims to achieve in a rational way, like by taking the shortest path [19]. Because these statements bring the Nash equilibrium into focus, we concentrate on this solution concept. Nevertheless, another solution concept—the Pareto optimality—is discussed and briefly evaluated in Sect. 6.1.

Before presenting a mathematical definition of a Nash equilibrium, the notion strategy is introduced because it differs for static and dynamic games. Similar for both types is that each player \(P_i\) has to play a strategy \(s_i^j\) out of the strategy set \(\mathcal {S}_i\). This strategy can be pure or mixed. A pure strategy is deterministic; whenever a pure strategy is played in a game, the same actions are chosen. If a mixed strategy is played, a pure strategy is chosen stochastically with a fixed probability. Thus, a game may reach different outcomes with the same, mixed strategy. In a static game, for a player \(P_i\) choosing a pure strategy \(s_i^j \in \mathcal {S}_i\) is equivalent to choosing an action \(a_i^j \in \mathcal {A}_i\), hence, \(s_i^j = a_i^j\). Defining a strategy in the dynamic case is more complex because one has to define for each choice node of a player in \(\mathcal {H}\) which action is to be played, whether or not the choice node is reached during the game. Thus, the pure strategies of a player \(P_i\) in a dynamic game consist of the Cartesian product \(\prod _{h\in \mathcal {H},\rho (h) = P_i}\chi (h) \) [44]. For further explanation an example is given in Sect. 3.4.

Keeping that in mind, we denote a combination of strategies of each player as an allocation \(s = (s_1^{j},\dots , s_N^{j})\). A Nash equilibrium \(s^* = (s_1^{j^*},\dots , s_N^{j^*})\) is marked with an asterisk and is a best response for everyone, assuming possible strategies and cost functions are common knowledge. It is defined by:

Definition 3

(Nash equilibrium) The N-tuple of strategies \((s_1^{j^*}, \dots , s_N^{j^*})\), with \(s_i^{j^*} \in \mathcal {S}_i\), constitutes a non-cooperative Nash equilibrium for a N-player game if the following N inequalities are satisfied for all \(s_i^j \in \mathcal {S}_i\):

Note that the existence of a Nash equilibrium is guaranteed given that mixed strategies are allowed [52]. Choosing a specific form for the cost function \(J_i\) even ensures the existence of a Nash equilibrium in pure strategies (see Sect. 3.3). Possible choices for such a \(J_i\) regarding human navigation are discussed in the next section.

It is further important that the Nash equilibrium is bounded to two main assumptions: common knowledge of all players, and strictly rational behavior of all players. Common knowledge implies that all players know about the whole action set and the cost functions. We assume that humans gain their (common) knowledge through experience and their ability to take perspective. Humans learn in their everyday life what alternatives exist to reach a goal and how other people behave while walking. At the same time humans are able to view a situation from another’s point-of-view and infer about their possible actions and intentions. Rational behavior is defined as a behavior that maximizes an expected utility [17] (i.e., minimize expected cost). Section 6.1 discusses rationality more detailed, as well as to which extent both assumptions are justified for interaction-aware decision making during navigation. In case either of these assumptions is violated, game theory extensions are proposed.

3.3 Determining Cost Functions for Human Navigation

The mathematical definition of a Nash equilibrium in Definition 3 demonstrates that the accuracy of the game theoretic prediction is dependent on both the choice of the solution concept and the cost function. This section presents five different choices to rate the cost for human navigation. The evaluation is presented in Sect. 4. Thereby, each cost function \(J_i\) consists of an independent component \(\hat{J}\) and an interactive component \(\tilde{J}_i\):

Note that this partitioning clarifies that the game theoretic formulation results in an independent set of optimal control problems if no interaction occurs. \(\hat{J}\) is only dependent on the action \(a_i^j\) that player \(P_i\) considers. It rates for example the length or time of the trajectory. The interactive component \(\tilde{J}_i\) namely contains the interactive cost. It is not only dependent on the own choice of action but also on the other players’ actions.

Four of the considered cost functions (I–IV) assume that humans prefer trajectories that are, above all, without collision and otherwise minimize their cost with respect to their free-space motion. The fifth cost function (V) contains an additional cost term that rates how close humans pass each other, hence, shares some characteristics with the social force model. This cost function will be discussed last. For the other four cost functions a common interactive component \(\tilde{J}_i\) can be defined.

Cost Function

I–IV The cost functions consider a collision to be the only possible interaction.

\(\tilde{J}^{\mathrm{I-IV}}_i \) becomes infinity in case that action \(a_i^j\) leads to a collision with the strategy of another player, otherwise the term is zero. An action corresponds to a discrete trajectory with the states \(\mathbf {x}(t)\), with \(a_i^j = (\mathbf {x}(1), \mathbf {x}(2), \dots , \mathbf {x}(T))\). Two trajectories collide if the Euclidean distance between two positions is smaller than a threshold R at any time t.

By choosing a cost function in the form of Eq. (2) with Eq. (3), the existence of a Nash equilibrium in pure strategies is guaranteed: a player’s cost is either \(\hat{J}(a_i^j)\) or infinity. If it is infinity for a special allocation, all other players with whom a collision would occur also have infinite cost. But this is not a best response for any player (only in case all actions of a player would result in a collision).

In the following, the choices for the independent component \(\hat{J}\) of the first four cost functions are presented. Two of them need a trajectory as input, the other ones merely need the path information.

Cost Function

I A frequently used cost function in motion planning [41] is the length \(\mathcal {L}\) of the path.

Another cost function using path input is given by Papadopoulos et al. [57]. They learned the parameters of a cost function by studying the geometry of the path in free-space and by using inverse optimal control. Their model bases on non-holonomic motions along a path that is approximated by line segments with the state vector \({\mathbf {x}(k) = [x, y, \varphi ]^T}\) at the kth segment of the path. x and y denote positions and \(\varphi \) the orientation. Their cost function depends only on the shape of the path and is invariant to changes of the velocity.

with \(\kappa \) being the curvature and \(\lambda (k)\) being the length of the kth segment. Let K be the total number of segments. Then a possible cost function is:

Cost Function

II The cost function is based on Eq. (5) and accounts for the energy related to the curvature, and for the distance between the current state and the goal state.

with \(\varDelta \mathbf {x}^2 = [(x(k) - x(K))^2, (y(k) - y(K))^2 ,(\varphi (k) - \varphi (K))^2]^T\) and \(\mathbf {c}^T = [125, 42, 190]\). The distances from the current state to the goal state can be interpreted as space-varying weights on the curvature [57].

Both mentioned cost functions are path and thus not velocity dependent. Another possibility is to use trajectory information. Consequently, we also consider the following cost functions.

Cost Function

III The function rates the duration \(\mathcal {T}\) needed for the player \(P_i\) to play an action, meaning to walk along the trajectory.

A more complex cost function is given by Mombaur et al. [50] who studied the run of human locomotor trajectories during goal-directed walking in free-space. They state that human trajectories are optimized according to an underlying principle which was learned with inverse optimal control. In contrast to cost function \({\hat{J}}^\mathrm{II}\), they assume the motion model to be holonomic with the state vector \(\mathbf {x}(t) = [x, y, \varphi , v_{\text {forw}}, v_{\text {ang}}, v_{\text {orth}}]^T\) and the control vector \(\mathbf {u}(t) = [u_{\text {forw}}, u_{\text {ang}}, u_{\text {orth}}]^T\) (forward, angular, orthogonal acceleration).

Cost Function

IV The cost function assigns a cost for the execution time \(\mathcal T\) needed (as in Eq. (7)). Additionally, it favors sparse accelerations and the human to be oriented towards the goal.

with \(\mathbf {u}(t)^2 = [u_{\text {forw}}^2, u_{\text {ang}}^2, u_{\text {orth}}^2, \psi ^2]_t^{T}\), and \(\psi \) being the difference between the angular difference to the goal denoted as \(\mathbf {z} = [x(T), y(T)]^{T}\) and the human body orientation \(\varphi \);

The parameter vector is \(\mathbf {c}^T = [1.2, 1.7, 0.7, 5.2]\) [50].

The last cost function is adopted in large parts from Pellegrini et al. [60]. They showed that a tracking algorithm performs better if it takes social interactions between pedestrians into account as well as their orientation towards a goal. Minimizing a learned cost function allowed for calculating the next expected velocity of the tracked object. This cost function is used here to rate a whole trajectory. A holonomic motion model with the state vector \(\mathbf {x}(t) = [x,y]^T\) and the control vector \(\mathbf {u}(t) = [v_x, v_y]^T\) is chosen.

Cost Function

V The cost function rates if a trajectory leads towards its goal and maintains a desired speed. Additionally, it rewards trajectories that steer an agent away from an expected point of closest approach to another agent.

\(\mathcal G(t)\) is dependent on the goal \(\mathbf z = [x(T), y(T)]^T\), and \(\mathcal V(t)\) depends on a desired speed \(v_{\text {des}}\), with

Each fellow player of \(P_i\) gets assigned a weight \(w_n(t)\) determined by distances and angular displacements of players to each other.Footnote 2 The additional interactive cost resulting from player \(P_i\) approaching player \(P_n\) is denoted by:

where \(d^2_{in}(t)\) is the square distance between the positions of \(P_i\) and \(P_n\) at the expected point of closest approach. The calculation of that point is based on a constant velocity assumption. It is similar to the social force model, but differs in a crucial way: instead of modeling humans at their current positions, the expected point of closest approach is predicted and used as origin of the repulsion. This implies that humans include prediction into the motion planning, rather than being reactive particles [60]. The parameter vector is \(\mathbf c^T = [c_1, c_2, \sigma ] = [2.073, 2.330, 0.361]\) [60].

3.4 Formulating Interaction-Aware Decision Making with Game Theory

In this section, the game theoretic tools described above are applied to a navigational decision problem. A static and dynamic game is set up and their Nash equilibria are calculated. Thereby, a mapping between game theoretic terms and navigational components is given with the aid of an example illustrating the navigation on a pavement. The example is depicted in Fig. 2 where two agents want to pass each other.

First, we map the components of a static game in Definition 1 to the pavement scenario. The two agents are the players \(P_1\) and \(P_2\). Choosing an action \(a_i^j\) is equivalent to choosing a trajectory. In the example in Fig. 2, each player can choose one out of five trajectories, thus the action set of \(P_i\) is \(\mathcal {A}_i = \{a_i^1, a_i^2, \dots , a_i^5\}\). In the static case, this mirrors directly the set of pure strategies \(\mathcal {S}_i = \{s_i^1, \dots , s_i^5 \} = \{a_i^1, \dots , a_i^5 \}\). Figure 3 assigns each action a trajectory and shows the cost component \(\hat{J}\), that choosing a trajectory entails (assuming it is collision-free). The cost is chosen such that it is proportional to the length of the path and that passing right is favorable to passing left. The cost \(J_i(s_1^j, s_2^j)\) of a player depending on the allocation are written down in a matrix as shown in Table 1, where each cell contains a cost pair \(J_1|J_2\).

Illustration of Fig. 2 as static game. The actions of each player and the cost of the trajectories are shown (assuming it is collision-free)

After all components are mapped, the pure Nash equilibria of the game are computed. In the static, two-player case the inequalities in Eq. (1) reduce to:

Informally speaking, a cell in Table 1 (i.e., an allocation) is a pure Nash equilibrium if a) the cost entry \(J_1\) is less or equal than all other costs \(J_1\) in its column and b) the cost entry \(J_2\) is less or equal than all other costs \(J_2\) in its row. Four allocations satisfy both conditions. They are circled in Table 1. For example, the allocation \(s^* = (s_1^{3*}, s_2^{5*})\) is a Nash equilibrium. Choosing this equilibrium means that the players decide simultaneously to play trajectory \(a_1^3\) and \(a_2^5\), respectively.

In case that the agents decide sequentially, the dynamic game is used. Definition 2 is applied on the pavement situation in Fig. 2. The dynamic game is depicted as a tree. However, its illustration gets confusing with too many branch-offs. For that reason the example is altered by only regarding three possible trajectories for each agent as shown in Fig. 4. This leads to the action set \(\mathcal {A}= A_1 \times A_2 = \{a_1^L, a_1^M, a_1^R, a_2^L, a_2^M, a_2^R\} \). Similar to the static game, we have two players \(P_1\) and \(P_2\). However, a player function \(\rho \) is needed that states the agent acting first. For this example \(P_1\) is chosen. After observing one out of three actions of \(P_1\), \(P_2\) reacts by playing one of his actions in turn. This results in the tree shown in Fig. 4. The terminal nodes—the leafs—assign the cost for each player as defined by the cost function \(J_i\). The cost functions are the same as in the static game.

Dynamic representation of the pavement example in Fig. 2. The upper part shows the actions of the players with its corresponding cost without collision. The lower part is the tree representation of the dynamic game with the cost pairs \(J_1|J_2\) at the leafs (the first entry refers to \(P_1\), the second to \(P_2\), respectively). The subgame-perfect Nash equilibrium is circled

One major difference between static and dynamic games lies in their strategy space. \(P_1\) has one choice node and three actions, thus \(3^1=3\) different strategies with with \(\mathcal {S}_1 = \{s_1^1, s_1^2, s_1^3\} = \{a_1^L, a_1^M, a_1^R\}\). More interesting, player \(P_2\) has three choice nodes, thus already \(3^3 = 27\) strategies, \(\mathcal {S}_2 = \{s_2^1, \dots , s_2^{27}\} = \{(a_2^L, a_2^L,a_2^L), (a_2^R, a_2^L,a_2^L), \dots \}\). The number of strategies is that high because one has to define a choice of action for each choice node. The reason why this refinement is necessary is that a Nash equilibrium was originally defined for static games. For the definition in Eq. (1) to be still valid for the dynamic game, one has to define the strategies such that they state for each choice node of a player which action is to be played—whether or not the choice node is reached during the game [44]. However, this definition allows for unlikely equilibrium solutions in a dynamic game. For example, one of the Nash equilibria in the dynamic case is the allocation \(s^* = (a_1^{L*}, (a_2^{M}, a_2^M, a_2^L)^*)\). It fulfills the conditions in Eq. (1): none of the players would benefit from changing only the own strategy. However, this would be merely the case if \(P_2\) ‘threatens’ to provoke a collision by playing \(a_2^M\) as reaction to \(a_1^M\), and \(a_2^L\) as reaction to \(a_1^R\). Actually carrying out this threat would yet not be the best response of \(P_2\). \(P_1\) can assume this to be an unlikely behavior and thus a non-credible threat. Also experience proves that humans rarely collide. They avoid collisions rather than provoking them. This example shows that in dynamic games the notion of a Nash equilibrium can be too weak [44]. For that reason, the stricter subgame-perfect equilibria is used for dynamic games in this work. Thus, equilibria that imply the threat to provoke a collision are excluded. A Nash equilibrium is subgame-perfect if it constitutes a Nash equilibrium in every subgame of the complete game. An example of a subgame is shown in Fig. 4. It consists of a node within the tree and all its subsequent nodes. In our example, the only subgame-perfect Nash equilibrium is \(s^* = (a_1^{M*}, (a_2^M, a_2^R, a_2^M)^*)\).

From now on, we will slightly abuse the notation of strategies in dynamic games. Instead of giving a combination of actions for each choice node, only the best response trajectory of the second player to the first player’s decision is stated. For example the subgame-perfect equilibrium reduces to \(s^* = (a_1^{M*}, a_2^{R*}) = (s_1^{M*}, s_2^{R*})\), where \(a_2^{R}\) it the best response to \(a_1^{M}\) (circled cost pair in Fig. 4).

In this context we want to discuss the link between game theory and optimal control. As mentioned before, the problem formulation of game theory results in a set of coupled optimal control problems. Nevertheless, the specific combination of a dynamic game that contains only the binary component \(\tilde{J}^{\mathrm{I}-\mathrm{IV}}_i\) in Eq. (3) as interactive part can also be reformulated by several independent optimal control problems. Therefore, all actions of the agent choosing first that inevitably lead to a collision with a following agent are removed. After that, it is sufficient that the agent choosing first only minimizes its independent cost component \(\hat{J}^{\mathrm{I}-\mathrm{IV}}\), which is independent of the actions of the other agents. The agents acting afterwards choose their trajectory based on independent optimizations with the already played actions as constraints.

4 Evaluation Method

We evaluate if the Nash equilibria in either of the proposed game setups sufficiently reproduce the decision process during human navigation. Or differently phrased: we are interested in whether or not Nash’s solution mirrors a human-like navigation behavior. We consider a Nash equilibrium allocation to be human-like if it proposes the same—or at least an alike—solution as a human would choose. The validity of the Nash solution is assessed separately for both game models, each in combination with one of the five cost functions.

This section presents the experimental setup used to capture the motion data, the game setup, and the statistical analysis used to test the presented approach. The steps with their inputs and outputs are shown in Fig. 9. It illustrates that the purpose of the experiment is to capture human trajectories which can be used as action sets for the game setup. Importantly, the validation is based entirely on human motion data to ensure that the action set contains valid human behavior. The next step—the game theoretic analysis—calculates all allocations that constitute a Nash equilibrium. Then the validity of the Nash solution is tested by comparing their similarity to human solutions by applying a Bootstrap test.

At the end of this section, an alternative decision model to game theory based on independent prediction is introduced. It serves as a further baseline for the performance evaluation of the proposed decision model.

4.1 Experimental Setup

In order to acquire a set of human walking trajectories, we repeatedly recorded the motion of two persons passing each other. Thereby, the start and goal positions changed partly. As preparation three possible start and end positions were marked with colored DinA4 papers on the floor and at eye level. The setup of the papers as well as the dimensions of the recording area is shown in Figs. 5 and 6. The distance between the edges of the papers was chosen to be 0.4 m.

Illustration of the actions of game 1C-A3. Actions that are incorporated into action set \(\mathcal {A}\) are drawn solid, others are dashed. All trajectories going from 1 to C out of the scenes 1C-A3 and 1C-A2 constitute \(\mathcal {A}_1\). The set \(\mathcal {A}_2\) consists of all trajectories going from A to 3 out of the scenes 1C-A3 and 2B-A3. Table 2 shows the corresponding bi-matrix, the grey area maps actions to entries in the matrix

During the experiment two participants facing each other were advised to walk over previously selected start and goal positions. Thereby, they started one meter in front of the actual starting marker such that the acceleration phase was beyond the recorded area (compare Fig. 6). Start and goal positions were known to both participants. Overall, 17 different start/goal configurations were chosen such that the covered distance in a whole was the same for each person. Thereby, the paths crossed in 10 out of 17 cases. All chosen configurations are listed in Fig. 10. Here, a configuration of start/goal positions including the two recorded trajectories is called scene and named according to the start and goal positions. For example, the scene shown in Fig. 6 on the left is scene 1C-A3. In our previous work [80], we already showed the existence of interaction in most of these scenes by comparing them to free-space motions.

In order to create our database, eight healthy subjects (mean age \(\pm \) SD: \(27 \pm 2.7\) years) were recorded. Each of the 17 scenes was repeated ten times for two pairs of subjects, and five times for the other pairs leading to [in total: \((4\cdot 10\cdot 17)+(4\cdot 5\cdot 17)\)] 1020 trajectories. The sequence of scenes was randomized differently for all subjects.

The human motion was recorded with the vision based motion capture system Qualisys.Footnote 3 Thereby, reflective markers were put on each person and the positions of these markers were recorded over time (at 204 Hz). After that, the mean position of the markers was calculated at each time step for each person and smoothed with a Butterworth filter (4th order, 0.01 cutoff frequency [50]) in order to filter the torso oscillations caused by the steps. The distribution of the five markers is shown in Fig. 5. It was chosen by following the setup in [50]. However, we neglected the markers on the knee and feet such that the mean constitutes approximately the center of mass of the torso. The resulting discrete trajectories constitute the actions in the game theoretic setup.

4.2 Game Theoretic Setup

Each of the 17 scenes with different start/end configurations can be represented as an individual game. They differ from each other by the action sets. In the following, the game setup is shown by the example of game 1C-A3 (Fig. 6). First, the static game is discussed. A bi-matrix is set up (Table 2) and the components in Definition 1 are mapped:

-

1.

The player set \(\mathcal {P}\) consists of the two subjects.

-

2.

The action set \(\mathcal {A}\) consists of the action set \(\mathcal {A}_1\) and \(\mathcal {A}_2\). In the game 1C-A1, \(\mathcal {A}_1\) contains all trajectories \(a_1^{j,\text {1C}}\) that \(P_1\) walked during the experiment starting at ‘1’ going to ‘C’—not just the trajectories of all scenes 1C-A3. For a better understanding compare with Fig. 7 and the corresponding bi-matrix in Table 2. Here a subset of \(\mathcal {A}_1\) is drawn as solid lines in green (left, scenes 1C-A3) and black (middle, scenes 1C-A2). This means, all the trajectories of other scenes with ’1C’, as 1C-A2 or 1C-B1, are also part of the action set because they constitute valid ways to get from ‘1’ to ‘C’.

Likewise, the action set \(\mathcal {A}_2\) contains all trajectories \(a_2^{j,\text {A3}}\) that were captured while \(P_2\) was walking from ‘A’ to ‘3’. They are drawn as solid lines in green (left, scenes 1C-A3) and black (right, scenes 2B-A3).

-

3.

Each allocation \(s = (s_1^j, s_2^j) = (a_1^{j,\text {1C}}, a_2^{j,\text {A3}})\) is rated with a cost function as in Eq. (2) for each player. The bi-matrix in Table 2 is constructed by using cost function \({\hat{J}}^\mathrm{IV}\). The collision radius R is chosen individually for each pair of subjects by identifying the minimum recorded distance from all simultaneous walks.

Second, we model the game 1C-A3 dynamically (Definition 2). The players, action sets and cost functions remain the same as for the static game. In contrast, the strategy space changes because dynamic games can have a sequence of actions. However, this sequence needs to be defined beforehand. According to the human avoidance studies in [55, 61], the agent coming first adopts the trajectory less. This indicates that this agent chooses first while the other one reacts. Thus, we determine for each scene and each pair of subjects which subject was more often the one entering the recorded area first. In Sect. 5, both options, that this player acts either first or second, are evaluated.

After setting up the game, the Nash equilibria are calculated. In Table 2 the bi-matrix of the game 1C-A3 is shown, for clarity the action set is reduced. The only Nash equilibrium of the (reduced) static game is circled: it is the allocation \(s^* = (a^{4*,\text {1C}}_1,a^{2*,\text {A3}}_2)\). This corresponds to a pair of trajectories, one trajectory for \(P_1\) and one trajectory for \(P_2\), respectively. It is denoted as equilibrium trajectory pair.

We assume that human interaction-aware decision making during navigation can be approximated with the Nash equilibrium solutions from game theory. This is only true if these equilibrium trajectory pairs constitute a provable human-like solution. In order to test the assumption, real human solution pairs are needed to serve as ground truth to which the equilibrium pairs are compared. Such pairs will be called reference trajectory pairs. They are the simultaneously recorded trajectories of a scene. For example, the subjects were asked to walk from ’1’ to ’C’ and from ’A’ to ’3’, respectively. This leads to a captured trajectory pair \((\tilde{a}_1^{j,\text {1C}}, \tilde{a}_2^{j,\text {A3}})\). This trajectory pair can be used as ground truth for game 1C-A3. In the following, reference trajectory pairs are tagged with a tilde. As each scene was repeatedly captured during the experiment, several reference trajectory pairs exist for each game. The set containing all reference trajectory pairs \((\tilde{a}_1^{j,\text {1C}}, \tilde{a}_2^{j,\text {A3}})\) is denoted as \(\mathcal {R}^{\text {1C-A3}} \). The elements of its complement \(\mathcal {\overline{R}}^{\text {1C-A3}} \) are \((a_1^{j,\text {1C}}, {a}_2^{j,\text {A3}})\). Additionally, the set \(\mathcal {E}^{\text {1C-A3}} \) is defined that contains all equilibrium pairs \((a_1^{j*,\text {1C}}, a_2^{j*,\text {A3}})\) of a game. Its elements can be elements of both \(\mathcal {R}^{\text {1C-A3}} \) and \(\overline{\mathcal {R}}^{\text {1C-A3}}\). By applying this to our example in Table 2, the sets are \(\mathcal {R}^{\text {1C-A3}} = \{ (\tilde{a}_1^{1,\text {1C}}, \tilde{a}_2^{1,\text {A3}}), (\tilde{a}_1^{2,\text {1C}}, \tilde{a}_2^{2,\text {A3}}), (\tilde{a}_1^{3,\text {1C}}, \tilde{a}_2^{3,\text {A3}})\}\) (bold pairs) and \(\mathcal {E}^{\text {1C-A3}} = \{(a^{4*,\text {1C}}_1,a^{2*,\text {A3}}_2)\}\) (circled).

For further illustration, the recorded trajectories (i.e., actions) of another game (1C-B2) are drawn in Fig. 8. The actions are assigned different colors that represent the different trajectory pair sets. In this example one equilibrium trajectory pair exists (drawn in violet). The reference trajectories are green and the remaining trajectories are black.

4.3 Similarity Measurement

In order to test the proposed approach, we check if the equilibrium trajectory pairs in \(\mathcal {E}^{\text {1C-A3}}\) are more similar to the reference pairs in \(\mathcal {R}^{\text {1C-A3}}\) than the other pairs in \(\mathcal {\overline{R}}^{\text {1C-A3}}\). The similarity is measured with the Dynamic Time Warping distance \(\delta \). The distance \(\delta \) is zero if two time series are identical, hence, the smaller the value of the distance the more similar they are. The algorithm is used because it can compare trajectories that differ in the number of time steps. Gillian et al. [25] explain how to apply it for multi-dimensional time series (e.g., trajectories).

The Dynamic Time Warping distance between two trajectories is denoted as \(\delta (\tilde{a}^l_i, a^j_i)\), where \(\tilde{a}^l_i\) is the tested reference trajectory and \(a_i^j\) the one that is tested against. In order to compare a reference trajectory pair to another pair of trajectories, the two Dynamic Time Warping distances are calculated separately and summed up. This leads to a trajectory pair distance d:

Note that only trajectories of the same player are compared. All trajectory pair distances between the elements of \(\mathcal {R}^{\text {1C-A3}}\) and \(\mathcal {E}^{\text {1C-A3}}\), \(\mathcal {R}^{\text {1C-A3}}\) and \(\mathcal {\overline{R}}^{\text {1C-A3}}\) are calculated respectively, leading to two additional sets. They are the output of the similarity measurement step (compare Fig. 9):

-

Set \(\mathcal {D}_{\mathcal {E}}^{\text {1C-A3}}\); contains all trajectory pair distances d of the possible comparison between the elements of \(\mathcal {E}^{\text {1C-A3}}\) and \(\mathcal {R}^{\text {1C-A3}}\).

-

Set \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {\overline{R}}}\); contains all trajectory pair distances d of the possible comparison between the elements of \(\mathcal {\overline{R}}^{\text {1C-A3}}\) and \(\mathcal {R}^{\text {1C-A3}}\).

As mentioned above, only one pair of subjects has been considered so far. Considering all pairs of subjects is done by repeating the game setup and similarity measurement step for each pair of subjects. The resulting distance sets are merged. For simplicity we denoted the merged sets as \(\mathcal {D}_{\mathcal {E}}^{\text {1C-A3}}\) and \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {\overline{R}}}\), too.

4.4 Statistical Validation Method

After calculating the sets \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {E}}\) and \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {\overline{R}}}\), we are interested in whether or not the values in \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {E}}\) are mostly smaller than the values in \(\mathcal {D}^{\text {1C-A3}}_{\mathcal {\overline{R}}}\). Therefore, the null hypothesis \(\text {H}_0\) is defined to be:

Null hypothesis \(\text {H}_0\): The median values of the distributions from which the two samples \(\mathcal {D}_{\mathcal {E}}\) and \(\mathcal {D}_{\mathcal {\overline{R}}}\) are obtained are the same.

We test against this null hypothesis by computing the p value with a one-sided Bootstrap test [21] using a \(5 \,\%\) significance level. We use Bootstrap, a resampling method widely used in statistical inference, because the true distributions are unknown and the considered sample sizes of some scenes are too small (in some cases \(<\)30) for inference based on the t-distribution.

Since 17 different scenes are regarded, 17 Bootstrap tests are necessary. In order to overcome the multiple testing problem, the Benjamini-Hochberg procedure [8] was used to adjust the significance level of the p values.

4.5 Baseline Comparison: Prediction Based Decision

In order to further evaluate the game theoretic approach, its performance is compared to the performance of a prediction based decision model. Therefore, a model is used where each agent independently predicts the future motions of surrounding agents first and decides afterwards which trajectory to take based on the prediction. It is a model that—like the presented one—anticipates collisions and assumes humans to be more than merely reacting particles. However, it omits the reasoning about possible other motions of agents.

For the experimental setup of two persons passing each other, the prediction based decision model is realized as follows. Again the setup of the game 1C-A3 is used as example. Both persons know the current position, velocity and goal of the other person. Based on this knowledge and the assumption that persons move with constant velocity to their goal, person \(P_1\) predicts the future trajectory of \(P_2\), and vice versa. Note, that this differs from a merely constant velocity approach because the goal is known. Only the way to the goal is predicted. The predictions are denoted as \(a_1^{\text {1C},\text {pred}}\) and \(a_2^{\text {A3,pred}}\). Then \(P_1\) chooses a trajectory that minimizes the cost function \(\min (J_1(a_1^{j,\text {1C}}, a_2^{\text {A3,pred}}))\), \(\forall a_1^{j,\text {1C}} \in \mathcal {A}_1\). \(P_2\) does likewise for \(\min (J_2(a_1^{\text {1C,pred}},a_2^{j,\text {A3}}))\), \(\forall a_2^{j,\text {A3}} \in \mathcal {A}_2\). The output will be the trajectory pair \((a_1^{j^*,\text {1C}},a_2^{j^*,\text {A3}})\). The human-likeness of this decision is validated with the same approach as for the equilibrium trajectory pairs as illustrated in Fig. 9. The only difference is that, instead of using game theory to decide on which trajectory pair to take, the prediction based decision models is used.

5 Results

The results of the statistical validation with the Bootstrap tests will be presented in this section. It is followed by the results of the alternative prediction based decision model and a discussion and potential shortcomings.

5.1 Static Game Model

First, the results of the evaluation of the Nash equilibria in static games are presented. Table 3 shows for how many tests (out of 17) the null hypothesis (see Sect. 4.4) was rejected after using the Benjamini-Hochberg procedure. All five choices of the cost function \({J}_i\) are listed. Additionally, the corresponding p values are shown in Table 6.

The best result was achieved using \({J}_i^{\mathrm{I}}\), the length of a trajectory. In this case, the equilibrium pairs are more similar to the reference pairs than other possible solutions for all 17 tests. All median values of the trajectory pair distances related to the equilibrium pairs are significantly smaller. For illustration, the confidence intervals of the medians are shown in Fig. 10. They are also calculated with a Bootstrap test using a 5 % significance level. Note that none of the confidence intervals overlap. This means, that the theory of Nash equilibria in static games using \({J}_i^{\mathrm{I}}\) is indeed suitable to approximate the decision process behind human avoidance maneuvers because it chooses the same, or at least similar, trajectories as the subjects did. Almost as good performed the other path based cost function \({J}_i^{\mathrm{II}}\) rating curvature; the null hypothesis was rejected in 16 cases.

Static game modeling. The 95 % confidence intervals of the medians of the trajectory pair distances are shown for cost function \(J_i^{\mathrm{I}}\) (Length) (compare Table 3). \(\mathcal {D}_{\mathcal {E}}\) referes to the similarity of the Nash equilibrium trajectory pairs to the ground truth, \(\mathcal {D}_{\mathcal {\overline{R}}}\) referes to the set of remaining possible trajectory pairs, respectively. If they do not overlap the difference is significant and \(\text{ H }_0\) is rejected

Table 3 further reveals that the two cost functions regarding the path seem to represent the human behavior more accurately than the ones regarding trajectory cost. Moreover, the elemental cost functions—i.e., cost for length \({J}_i^{\mathrm{I}}\) or time \({J}_i^{\mathrm{III}}\)—tend to achieve better results than the respective learning based cost functions \(J_i^{\mathrm{II}}\), \({J}_i^{\mathrm{IV}}\). Since they were learned in free-space, these functions may be too specific for the direct usage in an environment populated by humans. Both learned cost functions include either a cost for time or length, however, also add a goal dependent cost. For example, \({J}_i^{\mathrm{IV}}\) charges an additional cost if the agent is not facing the goal. One could argue that this goal driven behavior becomes less important in such environments while minimizing time/length is still a prevalent aim of humans.

The worst result was achieved for \({J}_i^{\mathrm{V}}\), which shares characteristics with the social force model. This cost function often leads to equilibrium pairs that are further apart than the respective pairs of the cost functions that merely consider collisions in the interactive component. Apparently, \({J}_i^{\mathrm{V}}\) sets to much emphasis on proximity when applied in a game theoretic setup for the presented scenario.

5.2 Dynamic Game Model

If a scene is modeled as a dynamic game, we assume that the subject who was more often the first one to enter the recorded area is allowed to choose first. In the tables this player will be marked with a circled one, hence, \(P_i^{\textcircled {\tiny 1}}\). In order to assess if our assumption holds and if the order makes a difference, each dynamic game is played twice; ones with the player \(P_i^{\textcircled {\tiny 1}}\) choosing first and ones with \(P_i^{\textcircled {\tiny 1}}\) choosing second. After that, the human-likeness of the Nash solution is validated as described previously. Table 4 summarizes the results and the corresponding p values are listed in detail in Table 6.

The null hypothesis is rejected for the majority of the tests if \(P_i^{\textcircled {\tiny 1}}\) chooses first. The sequence of which cost performs best is similar to the static model, however, the number of rejected null hypothesis is lower in each case. Only \(J_i^{\mathrm{V}}\) performs similarly well in the static and dynamic setup, but is still the weakest. The best result was again achieved with cost function \({J}_i^{\mathrm{I}}\) (Length). Additionally, the results indicate that games with \({J}_i^{\mathrm{I}}\) are the ones being the most affected by changing the playing order. This result is in line with the studies from Pettré et al. [61] which state that the person giving way needs to make a larger avoidance maneuver. Opposed to that is cost function \({J}_i^{\mathrm{III}}\); here the number of rejected hypotheses slightly increases if \(P_i^{\textcircled {\tiny 1}}\) chooses second.

5.3 Prediction Based Decision Model

The results for the validation of the decision model based on constant velocity to the goal prediction is summarized in Tables 5 and 6. Similar to the static game, the path based cost functions perform better than the trajectory based ones, however, all cost functions perform clearly worse than within the static or dynamic model. Especially clear is the difference for \(J_i^{\mathrm{V}}\): only for two scenes, out of 17, the suggested trajectory pairs are more similar to the reference pairs than a randomly picked pair.

5.4 Discussion

The result that the prediction based decision model performed worse than the game theoretic decision model is in line with Trautman et al. [78]: it indicates that it is insufficient to merely include prediction, even if the goal of the surrounding person is known. It is advantageous and more human-like to consider interaction-awareness. Game theory is a suitable way to formulate the reasoning about possible interaction of actions and approximates the human decision process during navigation more accurately than the prediction based model.

The numbers in Tables 3 and 4 further imply that the static game is more accurate than the dynamic one. Nevertheless, there is no significant difference between any of the medians of the two \(\mathcal D_{\mathcal {E}}\) sets in the static and dynamic case, respectively. A reason why the null hypothesis was less rejected in the dynamic case may be that the set \(\mathcal {E}\) is smaller if only the sub-game perfect Nash equilibria are considered. This results in a smaller sample size and thus in a lower confidence by determining the median. This may be resolved by recording more subjects.

Notwithstanding the above, another reason why the dynamic model is less accurate may be the policy for evaluating \(P_i^{\textcircled {\tiny 1}}\). It may be too restricted in cases where the player choosing first is not fixed but swaps repeatedly, thus is independent from the scene. This is omitted in the current validation method. On the one hand, \(P_i^{\textcircled {\tiny 1}}\) is merely the subject who was more often the first within the recorded area, but not always. On the other hand, the equilibrium trajectory pairs in \(\mathcal {E}\) are compared to all reference pairs in \(\mathcal {R}\) of a game. This issue can be addressed by reducing the set \(\mathcal {R}\) such that it only contains the trajectory pairs of a scene wherein the player choosing first is indeed the one who entered the recorded area first. We yet refrained from doing so because for some tests the sample size would shrink too much to make a reliable statement.

Surprisingly, the performance of the only cost function that includes proximity cost, i.e. social cost, is always the weakest. As mentioned above, this is probably because it favors trajectories that are too far apart. However, social force features in cost functions are still worth to be considered. As mentioned in Sect. 2, Vasquez et al. [82] investigated different cost features. They concluded that social forces showed the best results for the learned scenes while being at the same time the ones generalizing worst for unknown scenes. This may have also happened for the used data set.

Finally, a number of potential shortcomings need to be considered. First, the study was limited to two person games. Extending it to several persons should still maintain the results because more persons means less collision-free trajectories. Only those are chosen as Nash equilibrium and are hence more similar to the ground truth. Second, the presented models assume that humans only decide ones and, thus, a sequence of decisions or changing a decision is not captured in the model yet. An exact model should always choose one the reference pairs. However, building an exact model would only work if every detail of decision making during navigation is known and included. A game may also have several equilibria and the theory of Nash is indifferent towards the question which equilibrium the players should choose eventually. Therefore, the accuracy of the model can be further enhanced and potential extensions are discussed in the next section.

6 Extensions and Applications

The following section looks beyond the horizon of the presented method. The first part of the section calls attention to possible extensions of the presented game theoretic models. The second part discusses if the results can be further applied to robots since the evaluation focuses on humans.

6.1 Further Analysis and Potential Extensions

Apart from comparing static and dynamic games, we additionally looked into another game theoretic solution concept for static games, the Pareto optimality [44]. A Pareto optimal outcome is an allocation in which it is impossible to reduce the cost of any player without raising the cost of at least one other player. Thus, Pareto optimality has some notion of ‘fairness’, while the Nash concept assumes rational players, which merely minimize their own cost. Nash equilibria are mostly Pareto inefficient [34]. However, choosing a cost function of the form as in Eq. (2) with Eq. (3) results in the set of Pareto optimal allocations being a subset of the Nash equilibrium allocations. For example, in the game in Table 1 the cost of the Pareto optimal allocations are (3|1), (2|2) and (1|3). As a consequence, the statistical comparison between the two sets of trajectory pair distances—one set using the Nash concept, and one set using Pareto optimality—revealed no significant results for the four cost function using the interactive component as defined in Eq. (3). Nevertheless, Pareto optimality is worth to be considered if further interactive components are added to the cost function (e.g., keeping a comfortable distance). In this case the Nash equilibria and Pareto optimal allocations coincide less and a comparison is more expressive. Accordingly, additional tests reveal that the performance increases for \(J_i^{\mathrm{V}}\) if Pareto efficiency is used as solution concept: in 15 cases the null hypothesis can be rejected (instead of 10 cases with Nash, see Table 3).

A consequence of the presented model is that players need to coordinate their choices and “agree upon” an equilibrium. Rules on how humans come to an agreement are studied in coordination games [18]. The agreement can be based on facts like in cases where one of the equilibria is payoff superior or less risky, but also on social rules. An especially interesting extension for navigation is to include traffic rules into the formulation.

The mentioned models and extensions all assume that the agents behave rationally in a sense that they minimize their expected cost. They also imply common knowledge (see Sect. 3.2). Researchers within different sub-fields of game theory yet argue that the common knowledge assumption and the conventionally defined rationality “is not characteristic of human social interaction in general” [17]. An apparent case where common knowledge is hard to imply is if the number of agents is too large. The conditions may also be violated if it is required to plan far ahead in the future or if the problem is complex (e.g., for games like Chess or Go). These are different facets of bounded rationality, which is deliberately omitted in this work. So far we focus on well-known and studied game theoretic approaches. They are evaluated for the presented task and compared among each other and to prior work. Thinking at the application of navigating humans in populated environments, we assume the number of potential players to be uncritical. Also the planning horizon while walking is within seconds rather than minutes. Nevertheless, game theoretic approaches that consider bounded rationality may further improve our model and are highly applicable for crowd simulations. For an overview see [68]. Other relevant concerns are raised by behavioral/psychological game theorists [2, 13]. They refer to various experimental results wherein people seemingly do not decide rational, meaning act differently as predicted by the theory of Nash. The rationality assumption is often questioned in social decision problems wherein prior beliefs or emotions influence the decision. Both are hard to pin down in utility functions since in the world we can usually only measure pecuniary payoffs or cost [17]. It is one of the greatest challenges to define a perfectly correct analytic model of the human decision process. Camerer yet comments in [17] that “many weaknesses of game theory are cured by new models that embody simple cognitive principles, while maintaining the formalism and generality that make game theory useful”. We want to introduce game theory as an effective method to model interactivity during navigation, doing so by starting with elementary game models that are step-wise refined in future work, among others with approaches from behavioral game theory. A promising next step is for example the fairness equilibrium [13] that namely includes human sense of fairness into the utilities.

6.2 Relevance for Robot Motion Planning among Humans

This paper focuses on how humans navigate among humans, yet with the intent to apply gained insights to improve the navigation of robots among humans. It needs to be investigated if the results of this work can be directly applied to the robot navigation problem in populated environments since humans may react differently towards a robot than towards a human. Nevertheless, existing literature indicates that humans perceive and treat robots similar as humans to some extent. Accordingly, humans already associate human features with inanimate devices. For example, they attribute emotions with robot motion patterns [69] and ascribe intentions to moving objects, even if they are merely geometric shapes [14, 26, 33]. Intriguingly, researches also exposed that a robot action is represented in a similar manner within the human brain as the respective human action, if only for hand gestures and humanoid grippers [84]. But even if a robot resembles a human only to some degree, human-like behaviors or features are still advantageous. Thus, the Media Equation Theory [64] and the associated research field of social robotics show that the performance of human-robot collaboration is enhanced when robots employ human-like behaviors [12, 20, 35, 45, 77]. Thus, we are confident that our conclusions can enhance the human-robot cooperation during navigation.

7 Conclusion

The understanding and correct modeling of interaction-aware decision making of humans during navigation is crucial to further evolve robotic systems that operate in human populated workspaces. This paper introduces non-cooperative game theory and the Nash equilibrium as a framework to model the decision process behind human interaction-aware behavior. A condition for the suitability of Nash’s theory is that humans behave rationally in a sense that they aim to minimize their own cost. In this work, this assumption was implicitly validated for five different cost functions. The game theoretic approach was first proposed formally and was then applied and validated for the problem of predicting the decision of multiple agents passing each other. We showed that the solution concept of Nash equilibria in games picks trajectories that are similar to the humans’ choice of trajectories. Thereby, the best results were achieved with a static game model in combination with a length based cost function. Moreover, using elemental cost functions—based solely on the length of the trajectory or the time needed—tended to be more accurate than the respective learning based cost functions. The game theoretic approach was additionally compared with a prediction based decision model. It anticipates collisions but omits the reasoning about possible other motions of persons, i.e. the interaction-awareness. The results show that both presented game theoretic models outperform the prediction based decision model. This further highlights the need to include interaction-awareness into the decision modeling.

The derived knowledge is helpful for a variety of robotic systems, like future service robots that need to predict human motion more accurately or that need to move in a human-like way. It is equally usable for the autonomous automobile navigation or for modeling the interaction during arm movement coordination tasks.

Future work includes improving the results by using a cost function that considers further interaction parameters like social or traffic rules. Thus, other solution concepts (Pareto optimality, fairness equilibrium) can be validated and compared to the Nash equilibrium. Additionally, one has to analyze if humans converge mainly to a specific equilibrium. Coordination games would supply a promising framework for this analysis. In order to consider uncertainties in the game formulation (e.g., the cost function of the players or their goals) we plan to apply Bayesian games and run experiments in which the players do have imperfect information about the intentions of the other players. Moreover, we intend to implement a game theory based motion planner and to conduct human-human/human-robot experiments. They further evaluate the future approach and the question on how humans perceive robots during navigation.

Notes

Non-cooperative in contrast to cooperative/coalitional games where the focus is set on what groups of agents—rather than individuals—can gain by forming coalitions. In a nutshell, coalitional game theory answers two questions: which coalition will form, and how should that coalition divide its payoff among its members [44, p. 70].

For a detailed calculation please refer to [60].

References

Alonso-Mora J, Breitenmoser A, Rufli M, Beardsley P, Siegwart R (2013) Optimal reciprocal collision avoidance for multiple non-holonomic robots. In: Martinoli A, Mondada F, Correll N, Mermoud G, Egerstedt M, Hsieh MA, Parker LE, Sty K (eds) Distributed autonomous robotic systems. Springer, Berlin Heidelberg, pp 203–216

Attanasi G, Nagel R (2008) A survey of psychological games: theoretical findings and experimental evidence. In: Innocenti A, Sbriglia P (eds) Games, rationality and behavior. Essays on behavioral game theory and experiments. Palgrave Macmillan, New York, pp 204–232

Başar T, Olsder GJ (1999) Dynamic noncooperative game theory, 2nd edn. SIAM, Philadelphia

Başar T, Bernhard P (1995) H-infinity optimal control and related minimax design problems. Birkhäuser, Basel

Basili P, Sağlam M, Kruse T, Huber M, Kirsch A, Glasauer S (2013) Strategies of locomotor collision avoidance. Gait Posture 37(3):385–390

van Basten B, Jansen S, Karamouzas I (2009) Exploiting motion capture to enhance avoidance behaviour in games. In: Egges A, Geraerts R, Overmars M (eds) Motion in Games. Springer, Berlin, pp 29–40