Abstract

This article provides an overview of the current state of agent-based modeling in managerial science. In particular, it aims to illustrate major lines of development of agent-based modeling in the field and to highlight the opportunities and limitations of this research approach. The article takes a twofold approach. First, it surveys research that employs agent-based simulation models in domains of managerial science which have benefited considerably from this method. Second, it presents an illustrative study in management accounting research, a domain which, so far, has rarely seen agent-based modeling efforts. In particular, we introduce an agent-based model that allows us to investigate the relation between intra-firm interdependencies, performance measures used in incentive schemes, and accounting accuracy. We compare this model to a study which uses both a principal-agent model and an empirical analysis. We find that the three approaches arrive at similar major findings but suffer from rather different limitations and provide different perspectives on the subject. In particular, agent-based modeling allows us to capture complex organizational structures and provides insights into the processual features of the system under investigation.

1 Introduction

In the last two decades a new research approach has emerged in the social sciences: agent-based modeling (ABM), often referred to synonymously as agent-based computational models, agent-based simulations, multi-agent systems or multi-agent simulations (e.g. Squazzoni 2010). According to Gilbert (2008), ABM “is a computational method that enables a researcher to create, analyze, and experiment with models composed of agents that interact within an environment” (p. 2). ABM is used to derive findings about the system’s behavior (“macro level”) from the agents’ behavior (“micro level”) (Bonabeau 2002; Epstein 2006a). This gives reason to believe that ABM could lead to a “generative social science” (Epstein 2006a) or to “social science from the bottom-up” (Epstein and Axtell 1996).

ABM involves constructing a computational model of the system under investigation and “observing” the behavior of the agents and the evolving properties of the system over time through extensive computer-simulation experiments. Agent-based simulation in the social sciences can serve several purposes, for example, predicting consequences, performing certain tasks (which is typically the case in artificial intelligence), or discovering theory (Axelrod 1997a, b). The latter means that simulation is used to develop structural insights and to gain an understanding of fundamental processes within a certain area of interest (e.g. Davis et al. 2007; Dooley 2002; Harrison et al. 2007; Gilbert and Troitzsch 2005). Axelrod regards simulation as “a third way of doing science” (1997a, p. 3) or a “third research methodology” (1997b, p. 17): like deduction, it starts from an explicit set of assumptions (though it does not prove theorems with “classical” mathematical techniques), and it generates data from a set of rules (rather than from measurements of real-world data, as is typical for induction). According to Gilbert and Troitzsch (2005), to develop a model the researcher has “to iterate between a deductive and inductive strategy” (p. 26), starting with a set of assumptions, generating data by an experimental method and analyzing the data inductively. Ostrom (1988) regards simulation as a third symbol system, besides natural language and mathematics, for representing and communicating (theoretical) ideas; in particular, he argues that any theory which can be formulated in either mathematics or natural language can also be expressed by means of a programming language.

ABM is a rather interdisciplinary field of research: researchers from various disciplines such as sociology, economics, managerial science, computer science, and evolutionary biology have contributed to the development of methods for ABM and have applied agent-based models to their own domains to gain theoretical insights. In economics, for instance, the term “Agent-based Computational Economics” has been established for the “computational study of economies modeled as evolving systems of autonomous interacting agents” (Tesfatsion 2001, p. 281). It is worth mentioning, however, that ABM is characterized not only by a particular methodological approach but also by the relaxation of some behavioral assumptions, in particular those of neoclassical economics. For example, in ABM it is common to assume that agents show some form of bounded rationality in terms of limited information and limited computing power (e.g. Epstein 2006a; Axelrod 1997a). As a consequence, agents in agent-based models are usually not able to find the global optimum of a solution space “instantaneously”; rather, they explore the solution space stepwise in a process of searching for better solutions (e.g. Safarzyńska and van den Bergh 2010; Chang and Harrington 2006). Hence, processes that arise from the behavior of interacting agents, such as the evolution of organizations or the diffusion of technological change, are a major concern in ABM.

This article attempts to provide an overview of the current state of ABM as a research approach for developing theory in managerial science and, in particular, to illustrate and discuss the potential contributions and limitations of ABM in this field. For this, we take a twofold approach. On the one hand, we focus on research topics in managerial science which have been studied rather extensively with agent-based models. On the other hand, there are also areas of managerial science which, to the very best of the author’s knowledge, have rarely employed ABM so far, such as management accounting research. Hence, in order to explore the prospects of ABM in this area, we apply ABM to a well-studied question in management accounting, namely when to use aggregate (i.e. firm-level) performance measures rather than measures related to the business unit. In particular, we compare a “classical” economic approach, based on a principal-agent model and a related empirical study, both conducted by Bushman et al. (1995), with an agent-based simulation model.

The remainder of the paper is organized as follows. In Sect. 2 we give a short overview of the main properties of agent-based computational models. Section 3 provides a brief review of the use of ABM in various fields of managerial science, while Sect. 4 compares an analytical, an empirical and an agent-based research approach in the area of management accounting. Section 4 is intended to illustrate, by way of example, potential contributions and shortcomings of ABM as a research approach in managerial science and serves as a basis for Sect. 5, where we discuss the opportunities and limitations of ABM for managerial science from a broader perspective.

2 Structural features of agent-based models

At their core, agent-based computational models consist of three central building blocks: (1) the agents, (2) the environment in which the agents reside, and (3) the interactions among the agents (e.g. Tesfatsion 2006; Epstein and Axtell 1996). Section 2.1 describes these in more detail, followed by a short overview of typical setups for simulation experiments in ABM in Sect. 2.2.

2.1 Building blocks of agent-based models

Agent-based models are intended to derive insights into a system’s behavior from the micro level, i.e. from the agents’ behavior. Hence, agents are at the heart of agent-based modeling. Agents are autonomous, decision-making entities pursuing certain objectives (e.g. Bonabeau 2002; Safarzyńska and van den Bergh 2010; Tesfatsion 2006). Autonomy in this sense means that the individual behavior of the agents is not determined directly (“top-down”) by a central authority, even though agents may interact with a central unit, if one exists, and may receive feedback from the macro to the micro level (Epstein 2006a; Chang and Harrington 2006). The agents receive information from their environment and about other agents and react to this information; however, they also pro-actively initiate actions in order to achieve their objectives (Wooldridge and Jennings 1995). Hence, from an implementation perspective, an agent is a set of data reflecting the agent’s knowledge about the environment and other agents, together with a set of methods describing the agent’s behavior (Tesfatsion 2006).
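Tesfatsion's view of an agent as data plus methods can be sketched as a small Python class. The class name `Agent`, its fields, and the belief-updating rule (exponential smoothing with a hypothetical `weight`) are illustrative assumptions, not part of any model discussed here; the point is only the split into the state an agent holds and the behavior it executes, with `perceive` as the reactive part and `act` as the pro-active part.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    # --- data: what the agent knows and wants (hypothetical fields) ---
    agent_id: int
    target: float                 # the objective the agent pursues
    estimate: float = 0.0         # current belief about the environment
    history: list = field(default_factory=list)  # signals observed so far

    # --- methods: how the agent behaves ---
    def perceive(self, signal: float, weight: float = 0.5) -> None:
        """React to information from the environment by blending it into the belief."""
        self.estimate = (1 - weight) * self.estimate + weight * signal
        self.history.append(signal)

    def act(self) -> float:
        """Pro-actively propose an adjustment that closes the gap to the target."""
        return self.target - self.estimate


agent = Agent(agent_id=1, target=10.0)
agent.perceive(4.0)   # estimate becomes 0.5 * 0.0 + 0.5 * 4.0 = 2.0
print(agent.act())    # 8.0
```

A full model would hold many such objects and let them read from and write to a shared environment; keeping data and behavior together in one object is what makes heterogeneous agents easy to instantiate.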

In an agent-based model, agents may represent individuals, for example, workers, business unit managers or board members of a firm or, in other contexts, consumers or family members. They may also represent groups of individuals; for example, it might be appropriate to regard a department or a family as a single agent. Grouping individual agents into “aggregate” agents is particularly interesting in managerial science since, for example, it allows hierarchical structures to be mapped (Chang and Harrington 2006; Anderson 1999). However, agents do not have to be human or composed solely of humans; they can also be biological entities (e.g. animals, flocks or forests) or technical entities (e.g. robots).

With respect to agents’ behavior, a common assumption is that agents show some form of bounded rationality (Simon 1955). In particular, agents are assumed to decide on the basis of limited information, i.e. they lack global information about the entire search space, and to have limited computational power (Epstein 2006a; Safarzyńska and van den Bergh 2010; Anderson 1999). Hence, although agents are usually modeled as pursuing certain goals, they are not global optimizers. Instead, agents merely conduct local search, meaning that they discover and consider as alternative options only solutions that differ slightly from their current one. Thus, agents are assumed to carry out “myopic” actions to achieve their goals (Axtell 2007; Safarzyńska and van den Bergh 2010).
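A minimal sketch of such myopic local search, assuming solutions are encoded as bit vectors and that “differing slightly” means exactly one flipped bit. The agent never surveys the whole search space: in each step it inspects a single randomly chosen neighbor of its current solution and moves only if that neighbor performs better. The function names and the toy performance measure (`sum`, i.e. the number of 1-bits) are hypothetical choices for illustration.

```python
import random
from typing import Callable, List


def local_search_step(current: List[int],
                      performance: Callable[[List[int]], float],
                      rng: random.Random) -> List[int]:
    """Inspect one neighbor (one bit flipped) and keep it only if it is better."""
    i = rng.randrange(len(current))
    candidate = current.copy()
    candidate[i] = 1 - candidate[i]
    return candidate if performance(candidate) > performance(current) else current


def run(n_bits: int = 8, steps: int = 500, seed: int = 1) -> List[int]:
    rng = random.Random(seed)
    state = [0] * n_bits
    for _ in range(steps):
        # Toy performance measure: the number of 1-bits (a single-peaked landscape).
        state = local_search_step(state, sum, rng)
    return state


print(run())
```

On this single-peaked toy measure the walk reaches the all-ones vector; on a rugged landscape the same rule can stall at a local peak, which is why bounded rationality matters for the outcomes of such models.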

Another important feature of ABM is that the models typically comprise agents which differ in their characteristics, i.e. which are heterogeneous (Safarzyńska and van den Bergh 2010; Epstein 2006a). Agents may differ with respect to several dimensions such as knowledge, objectives, rules for forming expectations, decision rules or information processing capabilities.Footnote 1 Thus, ABM reflects agents in their diversity (Kirman 1992; Hommes 2006; Epstein 2006a; Axtell 2007) rather than relying on a representative agent, i.e. the “representative” individual, as often employed in economic models, whose utility-maximizing choices coincide with the aggregate choice of the heterogeneous population of individuals (Kirman 1992). According to Stirling (2007), the “diversity” or heterogeneity of agents has three dimensions: variety, balance, and disparity. Variety relates to the number of categories into which the agents are subdivided, balance refers to the shares of the population of agents that fall into each category, and disparity qualifies how distinct the categories are from one another (Safarzyńska and van den Bergh 2010; Stirling 2007).
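Stirling's three dimensions can be made operational in several ways. The sketch below assumes one common choice for each: variety as the number of categories, balance as Shannon evenness (entropy divided by its maximum, so 1.0 means all categories are equally common), and disparity as the mean pairwise distance between the categories present, using a distance function supplied by the modeler. These measures and the example of agents categorized by a hypothetical “search radius” are illustrative assumptions, not prescriptions from the cited sources.

```python
import math
from collections import Counter
from itertools import combinations


def diversity_profile(categories, distance):
    """Return (variety, balance, disparity) for a population of agents.

    categories: one category label per agent
    distance:   function(a, b) -> float, how distinct categories a and b are
    """
    counts = Counter(categories)
    n = len(categories)
    variety = len(counts)
    shares = [c / n for c in counts.values()]
    if variety > 1:
        entropy = -sum(p * math.log(p) for p in shares)
        balance = entropy / math.log(variety)  # Shannon evenness in [0, 1]
    else:
        balance = 0.0  # undefined for a single category; reported as 0.0 here by convention
    pairs = list(combinations(counts, 2))
    disparity = sum(distance(a, b) for a, b in pairs) / len(pairs) if pairs else 0.0
    return variety, balance, disparity


def radius_distance(a, b):
    return abs(a - b)


# Agents categorized by a hypothetical search radius (1, 2 or 5 bits).
even = diversity_profile([1, 1, 2, 2, 5, 5], radius_distance)
skewed = diversity_profile([1, 1, 1, 1, 1, 5], radius_distance)
print(even)    # variety 3, balance ~1.0, disparity ~2.67
print(skewed)  # variety 2, balance below 1, disparity 4.0
```

Keeping the three numbers separate, rather than collapsing them into one index, makes it visible which dimension of heterogeneity a simulation experiment actually varies.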

In agent-based models, the agents “reside” in an environment, which is the second core component of ABM. In a rather abstract formulation, the environment characterizes the tasks or problems the agents face and, in this sense, also represents the constraints the agents have to respect when fulfilling their tasks (Chang and Harrington 2006). Depending on the subject of the model, the environment might be given by physical space (i.e. the geographical location and time of agents and artifacts) or by conceptual space (i.e. “location” in a figurative sense, such that agents that are “neighbors” are more likely to interact) (Axelrod and Cohen 1999). Inspired by evolutionary biology, other authors use the term “fitness landscape” to characterize the environment: “A fitness landscape consists of a multidimensional space in which each attribute (gene) of an organism is represented by a dimension of the space and a final dimension indicates the fitness level of the organism” (Levinthal 1997, p. 935). These landscapes may be highly rugged with numerous peaks, and the agents (e.g. organisms or organizations) search for higher levels of fitness. We will return to this aspect in Sects. 2.2 and 4.2.

Hence, the term environment is used in a rather broad sense in ABM and could denote, for example, a landscape of renewable resources (Epstein and Axtell 1996), a dynamic social network, a set of interrelated tasks or just an n-dimensional lattice in which agents are located. Two aspects, however, should be stated clearly. First, the environment determines whether agents are “neighbors”, either literally or in a figurative sense, and, thus, whether agents interact “locally” and search “locally” for better solutions to their problems. Second, in ABM the environment is usually represented in an explicit space, i.e. the environment is explicitly given (Epstein 2006a), and the agents, due to bounded rationality, usually do not have perfect knowledge of the entire environment.
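When the environment is a lattice, “neighborhood” becomes a concrete computation. A sketch, assuming a two-dimensional grid whose edges wrap around (a torus, so border cells are not special) and the two neighborhood definitions most often used on grids: Moore (all cells within Chebyshev distance `radius`) and von Neumann (cells within Manhattan distance `radius`). The function names are hypothetical, and the sketch assumes the grid is larger than the neighborhood so that no cell is counted twice.

```python
def moore_neighbors(x, y, width, height, radius=1):
    """Cells within Chebyshev distance `radius` of (x, y) on a wrap-around grid."""
    return [((x + dx) % width, (y + dy) % height)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)
            if (dx, dy) != (0, 0)]


def von_neumann_neighbors(x, y, width, height, radius=1):
    """Cells within Manhattan distance `radius` of (x, y) on a wrap-around grid."""
    return [((x + dx) % width, (y + dy) % height)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)
            if (dx, dy) != (0, 0) and abs(dx) + abs(dy) <= radius]


# A corner cell on a 10 x 10 torus has neighbors on the opposite edges.
print(moore_neighbors(0, 0, 10, 10))
print(von_neumann_neighbors(0, 0, 10, 10))  # [(9, 0), (0, 9), (0, 1), (1, 0)]
```

Widening `radius` is the lattice counterpart of letting agents interact and search less “locally”.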

Since it captures the tasks and the constraints the agents have to meet, the environment can be characterized with respect to various aspects. For example, the task might be simple or complex in terms of being decomposable or not (Chang and Harrington 2006), and the environment could be stable or dynamic over the observation period (Siggelkow and Rivkin 2005). For dynamic environments, various causes of change could be modeled, such as the arrival of new agents (e.g. new firms entering a market and intensifying competition) or the invention of new technologies (Chang and Harrington 2006).

Interactions among agents constitute the third core building block of agent-based models and can be categorized along different dimensions. With respect to the mode of communication, agent-based models may comprise direct and/or indirect interactions (Safarzyńska and van den Bergh 2010; Weiss 1999; Tesfatsion 2001). Direct interaction requires some kind of communication between the agents; for example, agents might inform each other about the actions they intend to take. In the indirect case, agents interact with each other through the environment: for example, agents may observe each other, note how the environment is affected by the actions of other agents, and react to these changes. By observing other agents, they may also learn from and imitate one another.
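Indirect interaction through observation and imitation can be sketched without any message passing: each agent only sees how well another agent is doing in the environment and copies that agent's strategy if its outcome is strictly better. The payoff function, population size, strategy range and synchronous updating are hypothetical assumptions chosen for illustration.

```python
import random


def payoff(strategy: int) -> int:
    # Hypothetical environment: outcomes are best for strategies close to 7.
    return -(strategy - 7) ** 2


def imitation_round(strategies, rng):
    """Each agent observes one other agent's outcome and copies its strategy if better.

    Updating is synchronous: all agents react to last round's observable outcomes.
    """
    n = len(strategies)
    updated = strategies.copy()
    for i in range(n):
        j = rng.randrange(n - 1)
        j = j if j < i else j + 1  # a random agent other than i
        if payoff(strategies[j]) > payoff(strategies[i]):
            updated[i] = strategies[j]
    return updated


rng = random.Random(42)
initial = [rng.randint(0, 14) for _ in range(20)]
strategies = initial
for _ in range(500):
    strategies = imitation_round(strategies, rng)
print("initial:", sorted(initial))
print("final:  ", sorted(strategies))
```

Because agents only copy, no new strategies appear; imitation spreads the best strategies already present in the population, which is one reason models of learning by imitation often add some form of experimentation.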

Interactions could also be classified according to the form of coordination (Tesfatsion 2001; Weiss 1999): for example, agents could cooperate (e.g. share their knowledge and other resources) in order to achieve a common goal, or they could compete due to conflicting goals. ABM also allows the exploration of more complex cooperative interactions, such as friendship (Klügl 2000).

In agent-based models, the local interactions among agents induce effects at the macro level of the system which cannot be directly linked to the individual behavior of the agents. Hence, properties of the system “emerge” from the local interactions of agents and cannot be derived as a “functional relationship” from the individual behaviors of those agents (Epstein 2006a; Epstein and Axtell 1996; Tesfatsion 2006). To explain macroscopic regularities, such as norms or price equilibria, the question is whether and how they might result from simple, decentralized local interactions between heterogeneous autonomous agents. In this sense, Epstein and Axtell (1996, p. 33) define the rather multifaceted term “emergent structure” as “a stable macroscopic or aggregate pattern induced by the local interaction of the agents”. Self-organization might evolve from interactions of both the agent-to-agent and the agent-to-environment type (Tesfatsion 2001; Epstein and Axtell 1996).

2.2 Simulation approaches in ABM

A characteristic feature of ABM is the extensive use of computational tools, such as object-oriented programming, and of computing power to carry out the simulation experiments (Tesfatsion 2001; Chang and Harrington 2006). Hence, even though ABM is sometimes regarded as “a mindset more than a technology” (Bonabeau 2002, p. 7280), some remarks from a more technical simulation perspective seem appropriate.

Following Law (2007), simulation models can be classified along three dimensions: (1) whether the system under investigation is represented at a certain point in time or can evolve over time (i.e. static vs. dynamic); (2) whether the model contains random components or not (i.e. stochastic vs. deterministic); and (3) whether the state variables of the modeled system change continuously over time or instantaneously at certain points in time (i.e. continuous vs. discrete), where, in the latter case, different time-advance mechanisms have to be distinguished.

Agent-based simulation models usually include probabilistic components and are dynamic and discrete in nature. They are dynamic because agent-based models are often employed to study processes of, for example, adaptation, diffusion, imitation or learning, and to analyze in detail the processes that lead to increased performance, e.g. with respect to the speed of improvement or the diversity of search processes. The processes under study are represented in discrete periods. In particular, in each period of the observation time, agents assess the current situation, search for options, evaluate the options and make their choices as defined in the behavioral rules, all potentially in interaction with each other. These actions and events are then aggregated into that period’s end state of the system, which is the initial state for the next period. Stochastic components in agent-based models might be, for example, the initial state of the system, external shocks (e.g. the appearance of a new competitor or radical innovations), the options which the agents discover in a certain period (recall that in agent-based models decision-makers are not able to survey the entire search space at once and, thus, discover new solutions stepwise) or the agents’ choice among options based on propensities (rather than definite preferences), i.e. modeled via the probability of taking a certain option, which may, for example, be subject to learning (Duffy 2006).
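The period structure described above can be sketched as a small discrete-time loop. Everything model-specific here is a hypothetical assumption: agents hold a single “performance” level, agents below the population average search more widely, each agent discovers one random option per period and adopts it only if it is better, and a rare shock lowers everyone's level. The stochastic components named in the text (initial state, discovered options, external shocks) all come from one seeded random generator, so a run can be reproduced exactly.

```python
import random
from statistics import mean


def simulate(n_agents=10, periods=50, shock_prob=0.05, seed=0):
    """Return the average performance level at the end of each period."""
    rng = random.Random(seed)  # one seeded generator drives every stochastic component
    levels = [rng.uniform(0.0, 1.0) for _ in range(n_agents)]  # stochastic initial state
    trajectory = []
    for _ in range(periods):
        system_state = mean(levels)  # 1. assess the current situation
        chosen = []
        for level in levels:
            width = 0.2 if level < system_state else 0.05  # laggards search more widely
            option = level + rng.gauss(0.0, width)          # 2. search: discover one option
            chosen.append(option if option > level else level)  # 3./4. evaluate and choose
        levels = chosen  # aggregate individual choices into the period's end state
        if rng.random() < shock_prob:  # stochastic external shock, e.g. a new competitor
            levels = [level - 0.5 for level in levels]
        trajectory.append(mean(levels))  # end state = initial state of the next period
    return trajectory


print([round(x, 2) for x in simulate()[:5]])
```

Reproducibility through the seed matters in practice: results are usually reported as averages over many runs with different seeds, and fixing a seed lets any single run be inspected again.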

In agent-based simulations, time is mostly modeled in discrete steps with a fixed-increment time advance, which leads to equidistant time steps (Gilbert 2008, p. 28). According to Gilbert (2008), event-driven simulation is an alternative, in particular when not all agents need to have the chance to act at each time step: a next-event time-advance mechanism moves the simulation clock forward to the next event, for example, a decision made by an agent.
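The two time-advance mechanisms can be contrasted in a few lines. The fixed-increment version gives every agent a turn at every equidistant step; the next-event version keeps a priority queue of scheduled decisions and jumps the clock straight to the earliest one. The exponential waiting times between an agent's decisions are a hypothetical assumption for illustration; any rule for scheduling the next event would do.

```python
import heapq
import random


def fixed_increment(n_agents=3, steps=5):
    """Every agent may act at every equidistant time step t = 1, 2, ..., steps."""
    return [(float(t), agent) for t in range(1, steps + 1) for agent in range(n_agents)]


def next_event(n_agents=3, horizon=5.0, seed=0):
    """Advance the clock to the next scheduled decision instead of by fixed steps."""
    rng = random.Random(seed)
    # Schedule each agent's first decision (exponential waiting times: an assumption).
    queue = [(rng.expovariate(1.0), agent) for agent in range(n_agents)]
    heapq.heapify(queue)
    log = []
    while queue:
        clock, agent = heapq.heappop(queue)  # jump directly to the earliest event
        if clock > horizon:
            break
        log.append((clock, agent))           # the agent makes a decision now
        heapq.heappush(queue, (clock + rng.expovariate(1.0), agent))  # schedule its next one
    return log


print(len(fixed_increment()))  # 15 decisions: 3 agents x 5 steps
for clock, agent in next_event():
    print(f"t={clock:.2f}: agent {agent} decides")
```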

Beyond these rather “formal” classifications, Davis et al. (2007), in studying the potential contributions and pitfalls of simulation methods for theory development, argue that the choice of the simulation approach is crucial “because of its framing of research questions, key assumptions, and theoretical logic” (p. 485). The authors distinguish between structured and non-structured simulation approaches. The former contain some built-in properties, for example, key assumptions about the system to be modeled. In contrast, non-structured approaches are customizable and offer more flexibility to the modeler. However, a structured approach has the advantage that it has been used and, thus, vetted by other researchers, and that it does not represent “an idiosyncratic world tailor-made by the modeler. This reduces the fear that the model is rigged to produce the desired result” (Rivkin 2001, p. 280). Davis et al. (2007) provide an extensive overview of structured simulation approaches used in managerial science (for simulation studies in general, not only for ABM) and, in particular, mention system dynamics, NK fitness landscapes, genetic algorithms, cellular automata and stochastic processes. Of these approaches, two are outlined in more detail in the following: cellular automata since, according to Dooley (2002; see also Fioretti 2013), they can be regarded as a rather simple form of ABM and thus help to demonstrate the idea of ABM; and NK fitness landscapes since they have been widely used in agent-based models in managerial science (see Sect. 3) and serve as the basis of our illustrative study in Sect. 4.

Cellular automata (Wolfram 1986) consist of a grid in which each agent “resides” in a cell; hence, the lattice reflects a spatial distribution of the agents. The cells can take various states (in the simplest case just “0” or “1”). The state $s_{j,t}$ of cell $j$ at time $t$ depends on its own state in the previous period, $s_{j,t-1}$, and on the previous states of the neighboring cells, for example, $s_{j-1,t-1}$ and $s_{j+1,t-1}$. This reveals the two main aspects of cellular automata for ABM (Walker and Dooley 1999). First, a central idea is that the impact of agents on each other depends on the distance between them, i.e. the closer the neighborhood, the greater the mutual influence. Second, the way in which the state of a cell (agent) evolves is specified by rules representing the agent’s behavior. For example, one rule might be “if the sum of left and right neighbors is two, change to a 1” and another “if the sum of left and right neighbors is lower than 2, change to a 0”. Hence, starting from a randomly chosen initial configuration, the states of the cells in the grid change over time according to the specified rules. From the macro perspective of the grid, i.e. the system under investigation, the researcher is interested in whether and, if so, how certain patterns arise from the spatial interaction processes. In the simulation experiments, the researcher can mainly vary two things: the behavioral rules of the agents and the “radius” of the neighborhood (e.g. whether only direct neighbors affect each other). Agent-based models implemented via cellular automata mainly seek to study the emergence of macro-level patterns resulting from local interactions, such as competition, diffusion or segregation, in a set of agents (Davis et al. 2007). For example, Lomi and Larsen (1996) apply cellular automata to investigate the characteristics of global dynamics in an industry (e.g. founding or mortality rates) resulting from local competition among organizations.
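The example rules can be run directly. The sketch below assumes a one-dimensional ring of cells (the first and last cells are neighbors) and synchronous updating; `radius` and `threshold` are hypothetical generalizations so that the two experimental levers named in the text, the rules and the neighborhood radius, can both be varied. With `radius=1` and `threshold=2` it reproduces the two quoted rules exactly, since the sum of the left and right neighbors can only be 0, 1 or 2.

```python
import random


def ca_step(cells, radius=1, threshold=2):
    """One synchronous update of a one-dimensional cellular automaton on a ring.

    A cell becomes 1 if at least `threshold` of the cells within `radius` on either
    side were 1 in the previous period, and 0 otherwise.
    """
    n = len(cells)
    new = []
    for j in range(n):
        active = sum(cells[(j + d) % n] for d in range(-radius, radius + 1) if d != 0)
        new.append(1 if active >= threshold else 0)
    return new


rng = random.Random(3)
cells = [rng.randint(0, 1) for _ in range(40)]  # random initial configuration
for t in range(6):
    print("".join("#" if c else "." for c in cells))
    cells = ca_step(cells)
```

Under these particular rules most active cells die out, while a fully active ring and strictly alternating 1-0 patterns (on a ring of even length) persist; which patterns survive from random starts is the kind of macro-level question the researcher would study across many runs.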

While cellular automata mainly allow evolutionary processes to be mapped, NK fitness landscapes may be regarded as an approach for representing adaptive search and optimization processes (Davis et al. 2007). NK fitness landscapes were originally developed in evolutionary biology (Kauffman 1993; Kauffman and Levin 1987) to study how effectively and how quickly biological systems adapt toward an optimum. They were introduced to managerial science by Levinthal (1997). Since the exemplary agent-based model presented in Sect. 4 is based on NK fitness landscapes and includes further details of the formal specification, here we confine our remarks to the main ideas of this approach. The name NK refers to the number $N$ of attributes (e.g. genes, nodes, activities, decisions) and the level $K$ of interactions among these attributes. Each attribute $i$ can take two states, $d_i \in \{0, 1\}$, $i = 1, \dots, N$; hence, the overall configuration $d$ is given by an $N$-dimensional binary vector. The state $d_i$ of attribute $i$ contributes $C_i$ to the overall fitness $V(d)$ of configuration $d$. However, depending on the interactions among attributes, $C_i$ is affected not only by $d_i$ but also by the states of $K$ other attributes $d_j$, $j \neq i$. In the case of $K = 0$, the fitness landscape is single-peaked. If $K$ is raised to its maximum, i.e. $K = N - 1$, altering a single state $d_j$ affects the fitness contributions of all other attributes, which usually leads to highly rugged fitness landscapes with numerous local maxima of $V(d)$ (Altenberg 1997; Rivkin and Siggelkow 2007).
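A minimal sketch of an NK landscape, assuming the standard construction from the NK literature: each attribute interacts with K other attributes chosen at random, each contribution C_i is drawn uniformly from [0, 1) for every combination of the states it depends on, and V(d) is the mean of the N contributions. The exhaustive count of local optima (configurations that no single-bit change can improve) is only feasible for small N but makes the effect of K visible. Function names are hypothetical.

```python
import itertools
import random


def make_nk_landscape(n, k, seed=0):
    """Return V(d) for a random NK landscape with n attributes and k interactions each."""
    rng = random.Random(seed)
    # Attribute i depends on its own state and on k other randomly chosen attributes.
    partners = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # One random contribution C_i for every combination of the k + 1 relevant states.
    tables = [{combo: rng.random() for combo in itertools.product((0, 1), repeat=k + 1)}
              for _ in range(n)]

    def fitness(d):
        total = 0.0
        for i in range(n):
            key = (d[i],) + tuple(d[j] for j in partners[i])
            total += tables[i][key]
        return total / n  # V(d): mean of the N contributions

    return fitness


def count_local_optima(n, fitness):
    """Count configurations that no single-bit flip can improve (exhaustive, small n only)."""
    count = 0
    for d in itertools.product((0, 1), repeat=n):
        v = fitness(d)
        if all(fitness(d[:i] + (1 - d[i],) + d[i + 1:]) <= v for i in range(n)):
            count += 1
    return count


n = 8
for k in (0, 2, n - 1):
    print(f"K={k}: {count_local_optima(n, make_nk_landscape(n, k))} local optima")
```

With K = 0 there is exactly one optimum, since each attribute can be optimized on its own. With K = N − 1 every configuration receives an independent random value, so each is a local optimum with probability 1/(N + 1), giving an expected count of 2^N/(N + 1), about 28 for N = 8.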

The explicit modeling of interactions among attributes might be regarded as the core feature of NK fitness landscapes and explains their value for research in managerial science: by controlling parameter K, the approach allows researchers to study systems of varying complexity in terms of interdependencies among subsystems (e.g. among subunits or decisions in an organization) with respect to overall fitness. In particular, with the fitness landscape over all possible configurations d specified according to the structure of interactions, the system under investigation searches for higher levels of fitness (performance) by applying certain search strategies such as incremental moves (i.e. switching only one of the N bits) or long jumps (i.e. changing many or all bits at once). Agent-based models using NK fitness landscapes mainly allow the study of the speed and effectiveness of adaptation processes of modular systems, controlling for the interactions among the modules. In managerial science there have been numerous applications of this simulation approach (for overviews see, e.g. Ganco and Hoetker 2009; Sorenson 2002), as the next section also illustrates.
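The two search strategies can be sketched as functions that work on any fitness function over bit tuples. The incremental walk here assumes the searcher can evaluate all one-bit neighbors of its current configuration and moves to a randomly chosen improving one, stopping at a local optimum; the long-jump search draws completely new random configurations and keeps the best. To stay self-contained, the usage example does not build a full NK model but assigns each configuration an independent random fitness, which mimics the maximally rugged case K = N − 1; all names are hypothetical.

```python
import random


def flip(d, i):
    """Return configuration d with bit i switched."""
    return d[:i] + (1 - d[i],) + d[i + 1:]


def incremental_walk(d, fitness, rng, max_steps=10_000):
    """Incremental moves: step to a random improving one-bit neighbor until none exists."""
    for _ in range(max_steps):
        improving = [flip(d, i) for i in range(len(d)) if fitness(flip(d, i)) > fitness(d)]
        if not improving:
            break  # local optimum reached
        d = rng.choice(improving)
    return d


def long_jumps(d, fitness, rng, tries=50):
    """Long jumps: sample entirely new random configurations and keep the best one seen."""
    best = d
    for _ in range(tries):
        candidate = tuple(rng.randint(0, 1) for _ in range(len(d)))
        if fitness(candidate) > fitness(best):
            best = candidate
    return best


landscape_rng = random.Random(1)
values = {}


def rugged_fitness(d):
    # Stand-in for K = N - 1: each configuration gets its own independent random value.
    if d not in values:
        values[d] = landscape_rng.random()
    return values[d]


n = 10
start = (0,) * n
search_rng = random.Random(7)
local_peak = incremental_walk(start, rugged_fitness, search_rng)
jump_best = long_jumps(start, rugged_fitness, search_rng)
print("incremental:", round(rugged_fitness(local_peak), 3))
print("long jumps: ", round(rugged_fitness(jump_best), 3))
```

Because every accepted step strictly increases fitness, the walk visits no configuration twice and ends at a local optimum; in rugged landscapes that optimum is usually close to the starting point, whereas long jumps can escape it but rarely improve once a good configuration is held.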

3 Selected applications of ABM in managerial science

3.1 Some remarks on the selection of studies

In this section we seek to illustrate, by way of example, the potential of agent-based modeling for research in managerial science. Given the rather vast literature on ABM in managerial science, we have to decide which studies to consider. For this, we find it helpful to start with the particular strengths that simulation-based research is considered to have in managerial science. It has been argued that simulation in general, not only the agent-based approach, is particularly effective for developing theory “when the research question involves a fundamental tension or trade-off” (Davis et al. 2007, p. 485), for example, short- versus long-term or chaos versus order, and when “multiple interdependent processes operating simultaneously” occur (Harrison et al. 2007, p. 1229; in a similar vein Davis et al. 2007, p. 495).

Against this background, we decided to base our selectionFootnote 2 on whether the research question captures one or both of two rather prominent tensions in managerial science: “exploration versus exploitation” (March 1991) and “differentiation versus integration” (Lawrence and Lorsch 1967). We focus on these tensions since they have been widely studied in managerial science, show up in various domains of the field and have found numerous manifestations in agent-based models.Footnote 3

In particular, we selected agent-based simulation studies addressing “exploration versus exploitation” and/or “differentiation versus integration” from those fields of managerial science which (a) have seen a remarkable stream of agent-based modeling efforts and (b) are of general interest in managerial science (and, for example, are not related to a certain functional specializationFootnote 4). Given these criteria, we focus on the domains of strategic management, innovation, and organizational structuring and design, each of which has seen a vast number of studies employing ABM.Footnote 5

Moreover, we decided to address agent-based modeling efforts related to the inner workings of organizations, e.g. decision-making or structures within organizations, rather than to entire organizations (firms). More concretely, in the research efforts introduced, with only very few exceptions,Footnote 6 the agents represent entities within an organization (e.g. departments, managers) and not entire firms. Besides further narrowing the set of studies covered in this survey, this decision allows us to keep a level of analysis similar to that of our illustrative study in Sect. 4. This also motivates a further decision: we limitFootnote 7 the exemplary presentation of modeling efforts to those employing NK fitness landscapes (Sect. 2.2), which is also the simulation approach chosen in our illustrative study.

The research efforts described in more detail below are also summarized in Table 5 in the “Appendix”, including the publishing journal, the research question, the core tensions addressed (and how they appear), the main explanatory variables and parameters, and the major outcome variable under investigation.

3.2 Agent-based models addressing the tension of “exploration versus exploitation”

Since the seminal work of March (1991), the terms “exploration” and “exploitation” have been increasingly used in domains such as organizational learning, innovation, competitive advantage, organizational design, and technological diffusion. According to March (1991), the “essence of exploitation is the refinement and extension of existing competences, technologies, and paradigms”, while the “essence of exploration is experimentation with new alternatives” (p. 85). Exploitation is associated, for instance, with incremental innovation, local search, and stepwise improvement around known solutions, whereas exploration includes radical innovation, distant search, and the identification of new solutions. After reviewing numerous definitions and conceptualizations of the two terms, Gupta et al. (2006), building on March (1991), conclude that both exploitation and exploration require some kind of learning, improvement and acquisition of new knowledge, but while exploitation stays on the same “trajectory”, exploration is directed towards entirely different trajectories. As March (1991) points out, there is a fundamental tension between exploration and exploitation since both compete for scarce resources, and organizations choose between the two either explicitly or implicitly: explicit choices show up in investment decisions or in decisions between alternative competitive strategies; implicit choices are embedded, for example, in organizational structures and procedures which affect the balance between the two.

In his seminal work, March (1991) also presents a simulation-based study on “exploration versus exploitation” which, according to the classification of Davis et al. (2007, p. 486), is based on stochastic processes (and not on NK landscapes, which are the focus here). In the model, agents learn from the organizational code (i.e. the wisdom accepted in the organization about how to do things) through socialization and education; in turn, the code evolves according to the behavior of those agents within the organization whose beliefs correspond better to reality. The diversity of agents’ beliefs and the speed of learning are critical for the dynamics of the organizational code and of agents’ behavior, which in turn determine to what extent the organizational code reflects reality.
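A compact sketch of March's mutual-learning model, reconstructed from its usual description rather than taken from the original article: reality is a vector of m dimensions valued +1 or −1, the organizational code starts neutral (all 0), and individuals hold beliefs of +1, 0 or −1. Each period, individuals adopt the code's non-zero view on a dimension with probability p1 (socialization), and the code adopts the majority view of the “superior group”, i.e. those individuals whose beliefs match reality on more dimensions than the code does, with probability p2 per dimension. March's own probability for code updating depends on the size of that majority; the constant p2 used here, the sequential order of the two steps, and the parameter defaults are simplifying assumptions.

```python
import random


def march_model(m=30, n=50, p1=0.1, p2=0.5, periods=100, seed=0):
    """Return the share of dimensions on which the organizational code matches reality."""
    rng = random.Random(seed)
    reality = [rng.choice((-1, 1)) for _ in range(m)]
    code = [0] * m
    beliefs = [[rng.choice((-1, 0, 1)) for _ in range(m)] for _ in range(n)]

    def knowledge(vector):
        return sum(v == r for v, r in zip(vector, reality))

    for _ in range(periods):
        # Socialization: individuals learn from the code with probability p1 per dimension.
        for belief in beliefs:
            for dim in range(m):
                if code[dim] != 0 and belief[dim] != code[dim] and rng.random() < p1:
                    belief[dim] = code[dim]
        # Code learning: the code learns from individuals who know more than it does.
        code_knowledge = knowledge(code)
        superior = [b for b in beliefs if knowledge(b) > code_knowledge]
        for dim in range(m):
            net = sum(b[dim] for b in superior)  # majority of +1 vs -1 beliefs (0s abstain)
            if net != 0 and rng.random() < p2:
                code[dim] = 1 if net > 0 else -1
    return knowledge(code) / m


for p1 in (0.1, 0.9):
    runs = [march_model(p1=p1, seed=s) for s in range(10)]
    print(f"p1={p1}: mean code knowledge {sum(runs) / len(runs):.2f}")
```

March reports that slower socialization (lower p1) tends to leave the code more knowledgeable in the long run because it preserves the diversity of beliefs longer; the sketch allows one to check whether that pattern appears under these simplifications.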

March’s (1991) model yields the general finding that exploitation is beneficial in the short run but problematic in the long run. However, it has been argued that the model lacks some richness, particularly with respect to the properties of the underlying landscape (i.e. the “reality” as more or less accurately reflected in the organizational code and agents’ beliefs) (Chang and Harrington 2006). In the following, we illustrate the agent-based stream of research on the balance of exploration versus exploitation that builds on NK fitness landscapes (Kauffman and Levin 1987; Kauffman 1993); in this stream, the complexity of interactions within the underlying fitness landscape is inevitably a core issue.

The idea of NK fitness landscapes was introduced into management science by Levinthal (1997) (see Footnote 8). In Levinthal’s model, the tension of “exploration versus exploitation” shows up as “local search versus long jumps” or “local adaptation versus radical organizational change” of organizations searching for superior levels of fitness (or likelihood of survival). Hence, from a rather general perspective, organizations are regarded as agents acting in and reacting to their competitive environment and seeking to generate competitive advantages, which can be described in terms of selection and fitness (Hodgson and Knudsen 2010). Levinthal’s analysis aims to find out whether the initial configuration of an organization persists and thereby affects firm heterogeneity. In line with the idea of NK fitness landscapes, the fitness of an organization in Levinthal’s model depends on its N characteristics (“genes”), which could represent, for example, features of its organizational form or strategic policy; moreover, the model takes into account the K interactions between the firm’s characteristics, where highly interactive characteristics lead to highly rugged fitness landscapes with multiple peaks of similar fitness. The organizations conduct a form of local search: in each period they can either keep the status quo of their N characteristics or choose one of the N neighboring configurations in which exactly one of the N features is changed. Additionally, in each period the organizations discover one radically different configuration in which each of the N dimensions is drawn anew at random. Whether the status quo is abandoned in favor of one of these N + 1 alternatives depends on whether that alternative yields a higher level of fitness than the status quo.

At the beginning of their adaptation, organizations undergo radical changes rather frequently; but once they have reached a rather high level of fitness in a highly rugged landscape (i.e. with complex interrelations among the N dimensions), radical changes become rare, since it is relatively unlikely that a more promising, radically different alternative is discovered. The results suggest that the initial characteristics of an organization have a persistent effect on its future form if the fitness landscape is highly rugged. Hence, firm heterogeneity, rather than being induced by the different environments in which firms operate, emerges from processes of local search and adaptation starting from the configuration the firm had when it was founded. Levinthal (1997) concludes that tightly coupled organizations (i.e. organizations with highly interrelated characteristics or high levels of K) “can not engage in exploration without foregoing the benefits of exploitation”, while “more loosely coupled organizations can exploit the fruits of past wisdom while exploring alternative bases of future viability” (p. 949).
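The search procedure just described can be sketched in a few lines of Python. The NK landscape below draws each decision's contribution from a uniform distribution and lets it depend on K randomly chosen other decisions; this is a common textbook construction, and the function names (`make_nk`, `levinthal_step`, `simulate`) and default parameters are hypothetical, chosen for illustration only.

```python
import random


def make_nk(n, k, seed):
    """Random NK landscape: each of the n binary decisions contributes a value that
    depends on itself and on k other randomly chosen decisions; fitness is the mean."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def fitness(config):
        total = 0.0
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]  # encode the relevant bits as a table index
            total += tables[i][idx]
        return total / n

    return fitness


def levinthal_step(config, fitness, rng):
    """One period: status quo vs. its n one-bit neighbours vs. one random long jump."""
    n = len(config)
    local = [config] + [config[:i] + [1 - config[i]] + config[i + 1:] for i in range(n)]
    local_best = max(local, key=fitness)  # status quo is listed first, so ties keep it
    jump = [rng.randint(0, 1) for _ in range(n)]  # radically different configuration
    if fitness(jump) > fitness(local_best):
        return jump, True
    return local_best, False


def simulate(n=10, k=5, periods=50, seed=0):
    """Return the fitness path and the periods in which a long jump was adopted."""
    fitness = make_nk(n, k, seed)
    rng = random.Random(seed + 1)
    config = [rng.randint(0, 1) for _ in range(n)]
    history, jumps = [fitness(config)], []
    for t in range(periods):
        config, jumped = levinthal_step(config, fitness, rng)
        if jumped:
            jumps.append(t)
        history.append(fitness(config))
    return history, jumps
```

Recording the periods in `jumps` for a high and a low `k` is one way to see whether, under these assumptions, adopted long jumps cluster in the early periods, as described above.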

Building on Levinthal’s seminal work (1997), Rivkin (2000) aims to identify the optimal level of complexity of a firm’s strategy with respect to preventing imitation. Inimitability is regarded as a major source of competitive advantage in prominent schools of thought in strategic management. For instance, according to the “resource-based view of the firm”, inimitability could result from causal ambiguity or tacit knowledge (e.g. Powell et al. 2006); according to the “market-based view of the firm”, which is based on industrial economics, high entry barriers could keep competitors out of a market even if imitation is feasible in principle (e.g. Caves and Porter 1977). In contrast to these hypotheses, Rivkin (2000), employing an agent-based model, argues that imitation can be effectively hampered by the sheer complexity of a strategy. In this study, the tension of exploration versus exploitation takes the form of different imitation strategies: incremental improvement, i.e. stepwise adoption of the strategy to be imitated, versus informed copying, in which the imitator radically reconfigures its own strategy in the direction of the leading firm’s strategy. Based on the framework of NK landscapes, Rivkin (2000) provides evidence on the imitation-preventing effect of complexity in three steps. First, he shows that complex interactions among elements make finding the global optimum intractable: the problem is NP-complete, so even if evaluating each newly discovered configuration takes only moderate time, an exhaustive search is too time-consuming. Second, a simulation shows that an imitator who engages in incremental improvements in order to copy a successful firm is doomed to fail in the face of complexity. Third, Rivkin shows by simulation that even a more advanced form of imitation, i.e. copying on the basis of careful observation of the high-performing leader, is prone to failure if complexity is high. The reason is that, when decisions are highly coupled, some of the leader’s decisions tend to catapult the imitator into a performance basin rather than onto the desired performance peak.

Another strand of research that relies on NK fitness landscapes is directed towards the design and structure of new products and processes. In particular, researchers have analyzed the level of modularity (complexity) of designs and its implications for firm performance and for the persistence of competitive advantages. Ethiraj and Levinthal (2004), regarding modularization as a way to deal with complexity, study the implications of over- or under-modularity relative to some “true” level of modularity given by the structure of interdependencies within the design problem. The tension between exploration and exploitation appears in the form of two distinct key processes through which a product design can evolve: local search and/or recombination, i.e. replacing one module with another (either from within the organization or by copying a module from another firm). Recombination obviously offers the opportunity of new (“distant”) solutions in terms of exploration. The authors find that excessive modularization may lead designers to ignore relevant interdependencies between modules and may result in inferior designs. Moreover, potential speed gains from a modular (i.e. parallelized) design might be offset by an inefficient integration and testing phase, cyclic behavior, and small performance improvements.

In a similar vein, Ethiraj et al. (2008) analyze how the modularity of new products affects the trade-off between innovation and imitation by competitors. In this modeling effort, the tension of “exploration versus exploitation” appears as a trade-off between the incremental innovation of an innovation leader and the imitation by a low-performing firm. In particular, innovation is represented as a process of incremental local search in which managers seek to enhance the performance of product modules by incremental intra-module changes; imitation by competitors takes the form of distant search: the imitator replaces a subset of its own choices and/or interdependencies among choices with an equivalent set of choices and/or interdependencies copied from the innovation leader. The authors find that modularity of design induces a trade-off between innovation performance and deterrence of imitation. The experimental results indicate that nearly modular structures yield persistent performance differences between innovation leaders and imitators.

The studies presented so far focus mainly on “exploration versus exploitation” in the context of the complexity versus modularity of strategies, products, or processes, without examining in detail how managers make their choices. The studies that we briefly present in the following address managerial decision-making in more detail.

In this sense, Gavetti and Levinthal (2000) address the tension between exploration and exploitation in the form of different modes of strategic decision-making. In particular, the authors distinguish forward-looking from backward-looking (experiential) search processes. In forward-looking search, the decision maker relies on “beliefs about the linkage between the choice of actions and the impact of those actions on outcomes” (Gavetti and Levinthal 2000, p. 113). The decision maker has a (simplified) cognitive representation of the (true) fitness landscape, which allows them to identify the areas of the landscape that promise high fitness levels and to “move” to these areas at once in a “long jump” (i.e. altering many characteristics of the N-dimensional configuration of an organization’s strategy at once). In contrast, experiential search is represented as a process of local search: only one or a few attributes of the current state, i.e. of the actual experience, are changed, and, if this change appears to be productive, the new state serves as the basis for the next local search. Gavetti and Levinthal (2000) focus on situations in which the cognitive representation is a simplified version of the true landscape; in particular, it includes fewer dimensions of action than the true landscape. Hence, by applying forward-looking search in the perceived landscape, the decision maker seeks to identify a superior region of the true landscape and then tries to exploit this region with experiential (local) search. Gavetti and Levinthal find that even simplified representations of the actual landscape provide powerful guidance for subsequent experiential search in the actual landscape.
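A minimal sketch of the two search modes, under explicit assumptions: the cognitive representation covers only the first `m` of the `n` dimensions, a cell of this simplified map is valued at the mean true fitness of all configurations consistent with it, and experiential search is first-improvement hill climbing over one-bit changes. These modeling choices, the helper names, and the NK construction are our illustrative assumptions rather than Gavetti and Levinthal's exact specification.

```python
import itertools
import random


def make_nk(n, k, seed):
    """Random NK landscape; fitness is the mean of n interacting contributions."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def fitness(config):
        total = 0.0
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]
            total += tables[i][idx]
        return total / n

    return fitness


def local_search(config, fitness):
    """Experiential search: take improving one-bit changes until none is left."""
    config = list(config)
    improved = True
    while improved:
        improved = False
        for i in range(len(config)):
            trial = config[:i] + [1 - config[i]] + config[i + 1:]
            if fitness(trial) > fitness(config):
                config, improved = trial, True
    return config


def perceived_value(cell, n, fitness):
    # Assumption: a cell of the simplified map is valued at the mean true fitness
    # of all full configurations that agree with it on the cognized dimensions.
    rest = itertools.product((0, 1), repeat=n - len(cell))
    values = [fitness(list(cell) + list(r)) for r in rest]
    return sum(values) / len(values)


def cognitive_then_local(n, m, fitness, rng):
    """Forward-looking long jump on the first m dimensions, then local search."""
    best_cell = max(itertools.product((0, 1), repeat=m),
                    key=lambda cell: perceived_value(cell, n, fitness))
    start = list(best_cell) + [rng.randint(0, 1) for _ in range(n - m)]
    return local_search(start, fitness)


def compare(n=10, k=4, m=3, trials=20, seed=0):
    """Mean fitness gain of cognitively guided search over purely local search."""
    rng = random.Random(seed)
    gains = []
    for t in range(trials):
        fitness = make_nk(n, k, seed + t)
        guided = fitness(cognitive_then_local(n, m, fitness, rng))
        blind = fitness(local_search([rng.randint(0, 1) for _ in range(n)], fitness))
        gains.append(guided - blind)
    return sum(gains) / trials
```

`compare()` returns the average fitness advantage of cognitively guided search over purely experiential search on a set of random landscapes; a positive value would be in line with the finding reported above, but the sketch makes no claim about its magnitude.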

In a similar vein, the “exploration versus exploitation” tension shows up in the study of Gavetti et al. (2005). The study addresses the question of how effective so-called analogical reasoning is in situations of novelty and complexity compared with “deductive reasoning and rational choice” on the one hand and with the idea that “firms discover effective positions through local, boundedly rational search and luck” (p. 692) on the other. While the former enables decision makers to identify and move directly to the global optimum, however “distant” it is from the status quo, the latter allows only stepwise improvements relative to the status quo. Analogical reasoning is regarded as something in between: when facing a new situation, decision makers apply insights developed in one context to the new setting (p. 693). The authors study how certain managerial characteristics affect the contribution of analogical reasoning to firm performance. They find that analogical reasoning contributes to firm performance especially when managers are able to distinguish effectively between similar and dissimilar industries. Moreover, analogical reasoning appears to become less effective with the depth of managerial experience but more effective with its breadth.

Sommer and Loch (2004), focusing on complexity and unforeseeable uncertainty in innovation projects, investigate the contributions of trial-and-error learning in contrast to so-called “selectionism”. Complexity refers to the number of parts (variables) and the interactions among them, while unforeseeable uncertainty is defined “as the inability to recognize and articulate relevant variables and their functional relationships” (p. 1334). Two approaches to dealing with complexity and unforeseeable uncertainty are studied with respect to their effects on project payoff. Trial-and-error learning means “flexibly adjusting project activities and targets to new information, as it becomes available” (p. 1335) via local search. Under selectionism, several solutions are developed in parallel and the most appropriate one is selected ex post. However, identifying the most appropriate solution ex post requires testing the solutions developed; hence, apart from the complexity of the system to be designed, the quality of the test is a second factor to consider. The results indicate that trial-and-error learning is the more robust approach, since it yields higher payoffs when tests are imperfect, particularly when the project involves many interactions; moreover, trial-and-error learning turns out to be more advantageous even with a perfect ex ante test when the system is large.
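The role of test quality in selectionism can be illustrated with a small Python sketch. We assume, for illustration only, that the parallel solutions are random configurations on an NK landscape, that an imperfect test adds Gaussian noise with standard deviation `test_noise` to each solution's true payoff, and that trial-and-error learning is a sequential local search that observes realized payoffs; none of this reproduces Sommer and Loch's (2004) model, and all names are hypothetical.

```python
import random


def make_nk(n, k, seed):
    """Random NK landscape; fitness (payoff) is the mean of n interacting contributions."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def fitness(config):
        total = 0.0
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]
            total += tables[i][idx]
        return total / n

    return fitness


def selectionism(n, fitness, n_parallel, test_noise, rng):
    """Develop n_parallel designs in parallel and keep the one that scores best on an
    imperfect test. Returns (realized payoff, best payoff among the candidates)."""
    designs = [[rng.randint(0, 1) for _ in range(n)] for _ in range(n_parallel)]
    scores = [fitness(d) + rng.gauss(0.0, test_noise) for d in designs]
    chosen = designs[scores.index(max(scores))]
    return fitness(chosen), max(fitness(d) for d in designs)


def trial_and_error(n, fitness, steps, rng):
    """Sequential local search: try one random change per step, keep it if payoff rises."""
    config = [rng.randint(0, 1) for _ in range(n)]
    for _ in range(steps):
        i = rng.randrange(n)
        trial = config[:i] + [1 - config[i]] + config[i + 1:]
        if fitness(trial) > fitness(config):
            config = trial
    return fitness(config)
```

With `test_noise=0.0` the selected solution is always the best of the parallel candidates; as the noise grows, the realized payoff of the selected solution can fall below the best payoff available, which is the effect of imperfect tests discussed above.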

Ghemawat and Levinthal (2008) argue that a strategy is specified through two complementary approaches, i.e. ex ante design and ex post adjustment. The former represents a top-down pre-specification of some major principles and some particular policy choices; the latter describes the emergence of strategic positions and tactical alignment. In terms of fitness landscapes, top-down pre-specification means strategic guidance that positions an organization in one or another region of the landscape, whereas ex post adjustment relates to stepwise improvement. Hence, top-down pre-specification is associated with long jumps (exploration), whereas tactical alignment relates to local search (exploitation). The balance between these two elements of strategy specification also shapes the requirements for strategic planning: if, for example, a few higher-level choices make subsequent lower-level decisions more or less self-evident, then strategic planning faces relatively modest requirements compared with a situation in which the strategic action plan has to be completely specified in advance. Modifying the symmetric structure of the standard NK model, Ghemawat and Levinthal (2008) take into account that some choices might be more influential (“strategic”) than others. The simulation results reveal, first, that for long-term performance it is beneficial to focus ex ante on the more influential choices rather than on a random selection of choices; second, tactical adjustments can compensate for mis-specified strategic choices if these are highly interactive with other strategic decisions, but not if they interact little with other policy choices. Hence, in this model, the tension of “exploration versus exploitation” is studied with respect to the temporal sequence in which the two are considered in decision-making.

Another strand of research addresses the role of attitudes that favor innovation. Denrell and March (2001), in a simulation-based study (employing stochastic processes), show that adaptive processes tend to reproduce success and thus lead to a bias against risky and novel alternatives unless, for example, adaptation is based on some erroneous information. Baumann and Martignoni (2011) extend this idea by analyzing whether a systematic pro-innovation bias could increase firm performance. The “exploration versus exploitation” tension is addressed in such a way that decision-makers intend to conduct exploitation in terms of incremental improvement, but exploration happens “accidentally” due to a systematic pro-innovation bias. The authors motivate their analysis with the observation that several “mechanisms” at the individual as well as the organizational level tend to prevent rather than foster innovation and change, for example, the status quo bias in individual decision making (Kahneman et al. 1982) or inadequate applications of standard financial tools such as the discounted cash flow method (Christensen et al. 2008). Building on the idea of NK fitness landscapes, Baumann and Martignoni (2011) find that a moderate systematic bias in favor of innovation can increase long-run performance in complex and stable environments, since a pro-innovation bias enhances exploration; in most cases, however, an unbiased evaluation of options turns out to be most effective.
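The idea of an evaluation bias in local search can be sketched as follows. We assume, purely for illustration, that the status quo's payoff is known exactly, while a novel one-bit alternative is judged by its true payoff plus an additive `bias` (a positive value meaning a pro-innovation bias) and Gaussian evaluation noise with standard deviation `noise`; this is not Baumann and Martignoni's (2011) exact specification, and the function names are hypothetical.

```python
import random


def make_nk(n, k, seed):
    """Random NK landscape; fitness is the mean of n interacting contributions."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def fitness(config):
        total = 0.0
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]
            total += tables[i][idx]
        return total / n

    return fitness


def biased_search(n=10, k=5, bias=0.0, noise=0.0, periods=100, seed=0):
    """Local search in which each period one novel alternative is evaluated with
    a systematic bias and random noise. Returns the true performance path."""
    fitness = make_nk(n, k, seed)
    rng = random.Random(seed + 1)
    config = [rng.randint(0, 1) for _ in range(n)]
    path = [fitness(config)]
    for _ in range(periods):
        i = rng.randrange(n)
        alternative = config[:i] + [1 - config[i]] + config[i + 1:]
        # perceived value of the novel option: true payoff + systematic bias + noise
        perceived = fitness(alternative) + bias + rng.gauss(0.0, noise)
        if perceived > fitness(config):  # status quo payoff assumed to be known exactly
            config = alternative
        path.append(fitness(config))
    return path
```

Setting `bias=0.0` and `noise=0.0` recovers plain hill climbing, whose performance path never declines; comparing final performance for small positive and zero biases across many seeds is one way to probe the trade-off described above.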

3.3 Agent-based models addressing the tension of “differentiation versus integration”

“Differentiation versus integration” is the second prominent tension we consider in our illustrative survey. These notions are widely used in managerial science and date back to the seminal work of Lawrence and Lorsch (1967), although the issues they capture have always been inseparable from organizational structuring. In particular, differentiation denotes “the state of segmentation of the organizational system into subsystems, each of which tends to develop particular attributes in relation to the requirements posed by its relevant external environment” (Lawrence and Lorsch 1967, pp. 3–4); integration “is defined as the process of achieving unity of effort among the various subsystems in the accomplishment of the organization’s task”, where a task is regarded as “a complete input-transformation-output cycle involving at least the design, production, and distribution of some goods or services” (p. 4). Differentiation is associated with issues such as division of labor, specialization, and delegation; integration relates to, for example, coordination by hierarchies, incentives, or shared norms. Lawrence and Lorsch (1967) point out that “differentiation and integration are essentially antagonistic, and that one can be obtained only at the expense of the other” (p. 47); hence, the need for specialization has to be balanced against the need for coordination. Several studies apply an agent-based approach to examine core issues of the “differentiation versus integration” tension. In the following, we report on some research efforts that build on the framework of NK fitness landscapes.

In their agent-based approach, Dosi et al. (2003) construe differentiation as a “division of cognitive labour” (p. 413), meaning that it affects how new solutions are generated (e.g. how broad the search for new solutions is and who searches); integration is regarded as determining how and which solutions are selected. At the heart of Dosi et al.’s paper is the relation between decomposition (i.e. how search in the organization is configured) on the one hand and, on the other hand, incentives (related to individual, team, or firm performance) and the power to veto other agents’ decisions as a selection mechanism. In this modeling effort, the N “genes” of the N-dimensional “genome” of the NK framework represent the decisions which an organization has to make in order to fulfill its task; hence, the organization faces an N-dimensional binary decision problem which, in the course of differentiation, is partitioned, with the partitions assigned to organizational sub-units. Interactions within the decomposed overall decision problem are mapped according to the framework of NK fitness landscapes. Of particular interest is whether interactions occur across the partitions assigned to different sub-units. If the organizational decomposition and assignment to sub-units do not perfectly reflect the true interactions between decisions (meaning that cross-unit decisional interactions occur), incentives could, according to Dosi et al. (2003), induce sub-units to mutually perturb each other’s search processes; in this case, hierarchical or lateral veto power turns out to be useful in preventing endless perturbations.

How well different organizational forms can cope with changes in the environment while searching for higher levels of performance is a major issue in Siggelkow and Rivkin (2005). They introduce an agent-based model which has some features in common with the model of Dosi et al. (2003) sketched above. However, two distinctive features of Siggelkow and Rivkin’s model deserve closer attention. First, to represent environmental turbulence, the authors subject the fitness landscapes to correlated shocks at periodic intervals. Second, with respect to coordination (or integration, in terms of Lawrence and Lorsch 1967), Siggelkow and Rivkin not only take the incentive system (firm-wide versus departmental incentives) and veto power into account but also distinguish a variety of intermediate coordination mechanisms between centralized and completely decentralized decision-making. Keeping the decomposition of decisions fixed (two sub-units of equal decisional scope), they vary the turbulence and the complexity of the environment (in terms of interactions between the decisions) for the different coordination modes. Complexity stresses the importance of a broad search for superior solutions, while turbulence raises the relevance of speedy adjustment. The results indicate that in the most demanding case of highly turbulent and complex environments, two organizational forms strike the best balance between speedy and broad search: first, an organization relying on lateral communication and firm-wide incentives and, second, a centralized organization; both forms require considerable information-processing capabilities to evaluate alternatives.
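The mutual perturbation of decentralized search can be sketched by splitting the decisions of an NK landscape between two units. In this hypothetical setup, each unit evaluates every one-bit change to its own decisions, assuming the other unit keeps its current choices, and judges them either by firm-wide performance (`incentive="firm"`) or by the mean contribution of its own decisions (any other value, e.g. `"department"`); both units then implement their choices at the same time. The equal split, the simultaneous implementation, and all names are our assumptions, not the specification of Dosi et al. (2003) or Siggelkow and Rivkin (2005).

```python
import random


def make_nk_contributions(n, k, seed):
    """NK landscape returning each decision's performance contribution."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def contributions(config):
        out = []
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]  # encode the relevant bits as a table index
            out.append(tables[i][idx])
        return out

    return contributions


def firm_performance(contrib, config):
    return sum(contrib(config)) / len(config)


def unit_performance(contrib, config, unit):
    values = contrib(config)
    return sum(values[i] for i in unit) / len(unit)


def best_move(contrib, config, unit, incentive):
    """Best one-bit change to the unit's own decisions (or none), holding the rest fixed."""
    def score(cfg):
        if incentive == "firm":
            return firm_performance(contrib, cfg)
        return unit_performance(contrib, cfg, unit)

    best = config
    for i in unit:
        trial = config[:i] + [1 - config[i]] + config[i + 1:]
        if score(trial) > score(best):
            best = trial
    return best


def decentralized_run(n, k, incentive, periods, seed):
    """Firm performance path of two units that search and implement simultaneously."""
    contrib = make_nk_contributions(n, k, seed)
    rng = random.Random(seed + 1)
    units = [list(range(n // 2)), list(range(n // 2, n))]
    config = [rng.randint(0, 1) for _ in range(n)]
    path = [firm_performance(contrib, config)]
    for _ in range(periods):
        new_config = list(config)
        for unit in units:
            proposal = best_move(contrib, config, unit, incentive)  # based on old config
            for i in unit:
                new_config[i] = proposal[i]
        config = new_config
        path.append(firm_performance(contrib, config))
    return path
```

With `k=0` there are no cross-unit interactions and the performance path cannot decline under either incentive; with a high `k` and departmental incentives, inspecting the path for oscillations is a simple way to look for the mutual perturbation discussed above.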

This brings us to the third focal aspect of this section: information processing in organizations as represented in agent-based models. In the two models sketched so far, the decision makers have limited information about the entire landscape of solutions in which they operate and thus have to explore the solution space stepwise in order to find superior configurations. Moreover, the information-processing capacities of organizational members enter the model in terms of how many alternatives can be evaluated at once (e.g. Siggelkow and Rivkin 2005). A further aspect, however, is that decision makers might have difficulty evaluating the consequences of alternative solutions once these are discovered.

Some agent-based models have been introduced which seek to fill this gap; in these, the tension of “differentiation versus integration” is analyzed from the angle of information-processing capabilities in organizations. Notable among them is the research of Knudsen and Levinthal (2007), who build on the seminal works of Sah and Stiglitz (1985, 1986, 1988); these works provide fundamental insights into the robustness of different organizational structures against Type I errors (accepting inferior options) and Type II errors (rejecting superior options) in a project-selection framework. In particular, Knudsen and Levinthal (2007) introduce path dependence and interactions into the project-selection framework: while in the original framework the projects to be decided on are drawn randomly from a fixed distribution of options, in Knudsen and Levinthal’s model the availability of alternative project proposals depends on the current state of the organization. Relying on the idea of local search and NK fitness landscapes, the authors draw alternative projects from the “neighborhood” of current practice, i.e. alternative options differ from the current state in only a few attributes. The so-called “task environment” describes whether changing one attribute of the current state in favor of an alternative option also affects the performance contributions of other attributes. Knudsen and Levinthal (2007) investigate how imperfect screening capabilities of evaluators, i.e. imperfect capabilities to assess the consequences of alternatives, affect the performance achieved in search processes under different organizational forms ranging between hierarchies and polyarchies.

With respect to the tension of “differentiation versus integration”, the study of Knudsen and Levinthal (2007) reveals that, when decision-making is delegated, the mode of integration (polyarchies versus hierarchies) should be considered in light of the accuracy of the screening capabilities. For example, hierarchies tend to be efficient when evaluations are rather inaccurate and are particularly prone to getting stuck at local maxima when evaluators are perfect.
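The baseline logic of the Sah and Stiglitz project-selection framework can be written down directly. Assuming that evaluators judge a project independently and accept a superior project with probability `p_good` and an inferior one with probability `p_bad` (our notation), a hierarchy accepts only if every evaluator accepts, whereas a polyarchy accepts if any evaluator does. This static sketch omits the path dependence and NK interactions that Knudsen and Levinthal (2007) add.

```python
def acceptance(p_accept, n_evaluators, structure):
    """Probability that a project is accepted when each evaluator independently
    accepts it with probability p_accept."""
    if structure == "hierarchy":
        return p_accept ** n_evaluators  # the project must pass every level
    if structure == "polyarchy":
        return 1 - (1 - p_accept) ** n_evaluators  # one acceptance suffices
    raise ValueError(f"unknown structure: {structure}")


def error_rates(p_good, p_bad, n_evaluators, structure):
    """Return (Type I, Type II) error rates as defined in the text:
    Type I = accepting an inferior project, Type II = rejecting a superior one."""
    type_one = acceptance(p_bad, n_evaluators, structure)
    type_two = 1 - acceptance(p_good, n_evaluators, structure)
    return type_one, type_two
```

For two evaluators with `p_good=0.8` and `p_bad=0.3`, the hierarchy's error rates are about 0.09 (Type I) and 0.36 (Type II), and the polyarchy's about 0.51 and 0.04: the hierarchy screens out inferior projects at the price of rejecting more superior ones.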

These findings motivated further agent-based simulation studies on imperfect ex ante evaluations of alternatives under various organizational arrangements, where the imperfections are either unsystematic (Wall 2010, 2011) or result from systematic errors (biases; Tversky and Kahneman 1974), as in Baumann and Martignoni (2011), reported above, and Behrens et al. (2014).

3.4 Agent-based models addressing both tensions

In this section we introduce studies which employ ABM to address the “exploration versus exploitation” and the “differentiation versus integration” tensions in conjunction with each other. This is relevant, for example, for investigating which organizational forms foster innovation.

In their widely recognized paper, Rivkin and Siggelkow (2003) raise a related question, i.e. how an organization can balance the search for good solutions with stability around good solutions once these are discovered, where the former addresses “exploration” and the latter “exploitation” in the sense of March (1991; see also Sect. 3.2). The authors identify five organizational components affecting organizational performance: (1) the allocation (decomposition) of decisional tasks to sub-units, (2) the authority of a central office, (3) the alternative solutions that the sub-units discover and report to the central authority, (4) the incentive system, which might reward sub-units for firm performance or for their departmental performance, and (5) the information-processing abilities of the central authority. Rivkin and Siggelkow’s (2003) analysis proceeds in four steps. First, the authors investigate the effects of an active central authority on the search process and on performance. They find that, in line with conventional wisdom, central authority is not helpful when interactions between sub-units’ decisions are low, but that for moderate levels of interaction it appears to increase organizational performance. However, contrary to conventional wisdom, if interactions are dense, centralization turns out to be harmful, since the central authority tends to lead the organization to one of the many poor local optima, in which the organization is then likely to be trapped. In the second step, the effects of the sub-unit managers’ capabilities for a broad search of solutions are investigated. The results show that, contrary to conventional wisdom, centralization is more valuable when managers are highly capable of extensive search and interactions are dense, since the central authority then has a stabilizing effect. This corresponds to the results of Dosi et al. (2003) reported in Sect. 3.3.

The third step of the analysis reveals results that run counter to intuition about the “differentiation versus integration” tension: intuition suggests that central authority (i.e. refraining from delegation) and firm-wide incentives (i.e. increasing integration) could serve as substitutes for each other; however, the results indicate that they are rather complements: firm-wide incentives can align the intentions of sub-units with the firm’s best interests (integration) but do not necessarily coordinate decentralized choices: “Capable subordinates can engage in aggressive, well-intentioned search that results in mutually destructive ‘improvement’” (Rivkin and Siggelkow 2003, p. 306). The fourth step of the analysis confirms the conventional wisdom that centralization provides no additional benefit if there are no interactions between sub-units’ decisions; however, decomposing the overall organizational task in such a way that some interactions remain induces additional search efforts on the sub-units’ side, which a capable central authority can exploit beneficially.

Another question of organizational structure that might be particularly relevant for innovation is raised by Siggelkow and Rivkin (2006): does extensive search at lower levels of an organization increase exploration? Building on the framework of NK landscapes, the overall N-dimensional decision problem of the organization is split into two parts of equal size, each of which is assigned to one of two sub-units. In this structure, a sub-unit’s capability for exploration is captured by the number of alternatives it is able to evaluate (which, in particular, is given by the number of single decisions in the sub-unit’s decision vector that can be changed at once). Siggelkow and Rivkin (2006) find that extensive decentralized exploration does not universally increase exploration at the firm level. In particular, if cross-departmental interactions exist, departmental managers tend to screen out solutions that are not in line with their own preferences. Consequently, innovative solutions that would be preferable from the firm’s perspective might remain unknown at the company level. Marengo and Dosi (2005), as well as Rivkin and Siggelkow (2006), also apply agent-based simulations and come to similar conclusions.

In the models sketched so far, the structural settings of the organizations are kept stable over time. Siggelkow and Levinthal (2003) investigate whether it might be useful to change the organizational form temporarily, i.e. to modify the configuration of differentiation and integration transiently. In particular, the authors compare a permanently centralized organization (all decisions of an N-dimensional binary decision problem are made by a central authority without any delegation) and a permanently decentralized organization (the firm’s decisions are decomposed into two parts of equal size and delegated to two sub-units) with an organization which starts with a decentralized structure and, after a certain time, is reintegrated into a centralized one. In non-decomposable settings (meaning that decisions assigned to one sub-unit also affect the outcome of decisions of the other sub-unit), the reintegrated form outperforms both the permanently decentralized and the permanently centralized structure. Its advantage over the permanently decentralized form stems from two patterns in the search process. First, cyclic “self-perturbance” may occur: each sub-unit modifies its decisions to improve performance and, because of cross-unit interactions, affects the performance of the other unit, which then starts modifying its choices, and so on. In this situation, reintegration can stop the cycling. Second, each sub-unit may have found its best solution given the choices of the other, mutually dependent department, so that the sub-units are in a Nash equilibrium which might, however, be low-performing with respect to firm performance. Reintegration can then “disturb” this situation and lead to higher levels of overall performance.

Moreover, Siggelkow and Levinthal (2003) view the “differentiation versus integration” tension from the angle of environmental dynamics by asking which organizational structure is able to achieve high levels of performance after an external shock. A centralized organization is then likely to become stuck at an inferior local maximum, especially when the “distance” between its starting point and the optimal solution is large. By comparison, starting with a decentralized search that allows for local exploration and switching to a centralized organization after some periods, which allows for refinement and improvement (exploitation), leads to higher long-term performance on average. The more general conclusion is that organizations facing a highly complex decision problem could be centralized in a steady state but should temporarily adopt a decentralized form when they go through major environmental changes. Hence, in the temporarily decentralized organization, the tension between exploration and exploitation is “resolved” into a temporal sequence, i.e. local exploration first and global exploitation afterwards, and for each of these phases the appropriate balance between differentiation and integration is implemented.
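The comparison between permanent and temporary decentralization can be sketched with a two-unit NK setup. Under our assumptions, a central office evaluates all one-bit changes by firm performance, while decentralized units evaluate changes to their own decisions by departmental performance and implement them simultaneously; the `"reintegrated"` structure is decentralized until period `switch_at` and centralized afterwards. The structure names, `switch_at`, and all other parameters are hypothetical, and the external shock studied by Siggelkow and Levinthal (2003) is not modeled.

```python
import random


def make_nk_contributions(n, k, seed):
    """NK landscape returning each decision's performance contribution."""
    rng = random.Random(seed)
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def contributions(config):
        out = []
        for i in range(n):
            idx = 0
            for j in deps[i]:
                idx = (idx << 1) | config[j]
            out.append(tables[i][idx])
        return out

    return contributions


def firm(contrib, config):
    return sum(contrib(config)) / len(config)


def unit_score(contrib, config, unit):
    values = contrib(config)
    return sum(values[i] for i in unit) / len(unit)


def flip(config, i):
    return config[:i] + [1 - config[i]] + config[i + 1:]


def centralized_step(contrib, config):
    """Central office picks the best one-bit change (or none) for the whole firm."""
    best = config
    for i in range(len(config)):
        trial = flip(config, i)
        if firm(contrib, trial) > firm(contrib, best):
            best = trial
    return best


def decentralized_step(contrib, config, units):
    """Each unit picks its best own change by departmental performance; all are implemented."""
    new_config = list(config)
    for unit in units:
        best = config
        for i in unit:
            trial = flip(config, i)
            if unit_score(contrib, trial, unit) > unit_score(contrib, best, unit):
                best = trial
        for i in unit:
            new_config[i] = best[i]
    return new_config


def run(structure, n=12, k=6, periods=40, switch_at=20, seed=0):
    """structure: 'centralized', 'decentralized' or 'reintegrated'."""
    contrib = make_nk_contributions(n, k, seed)
    rng = random.Random(seed + 1)
    units = [list(range(n // 2)), list(range(n // 2, n))]
    config = [rng.randint(0, 1) for _ in range(n)]
    path = [firm(contrib, config)]
    for t in range(periods):
        central = structure == "centralized" or (structure == "reintegrated" and t >= switch_at)
        if central:
            config = centralized_step(contrib, config)
        else:
            config = decentralized_step(contrib, config, units)
        path.append(firm(contrib, config))
    return path
```

Averaging the final entry of each path over many random seeds, for low and high `k`, is one way to check whether the pattern reported above appears under these simplified assumptions.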

4 ABM in management accounting research: firm-wide versus business unit-related performance measures in the compensation of business unit managers

In the previous sections we gave an exemplary overview of how agent-based modeling contributed to research on two fundamental issues (“tensions”) of managerial science. In this section, by employing an example related to the “differentiation versus integration” tension, we seek to illustrate how agent-based simulation could contribute to research in the domain of management accounting.

There is some evidence that simulation as a research method is still of only minor relevance in management accounting. Hesford et al. (2007) analyzed 916 articles published from 1981 to 2000 in the ten most influential management accounting journals with respect to research topic and method, and only three of these papers were based on a simulation approach. Although some further simulation-based studies have been conducted since then (e.g. Labro and Vanhoucke 2007; Leitner 2014), simulation in general, and agent-based simulation in particular, still appears to be rarely used in management accounting research.

Against this background, the purpose of this section is to illustrate the potential benefits as well as the possible shortcomings of ABM in management accounting research. As an illustration, we refer to the study by Bushman et al. (1995), which analyzes whether interactions between business units affect the (optimal) incentive system of business unit managers, in particular with respect to basing compensation on firm performance versus business unit performance.

First, the relation of this topic to the “differentiation versus integration” tension discussed in Sects. 3.3 and 3.4 merits a comment: the tension arises in the question of how the reward structure, which serves as a means of integration and is inevitably based on noisy performance measures, should be designed given the different levels of interdependencies between business units that result from differentiation.

For our purpose, the Bushman et al. study is of particular interest since it applies a twofold research method: a closed-form model is introduced first, and afterwards the results of an empirical study are presented. Hence, the idea behind our procedure is to indicate how agent-based simulation models could contribute to filling the “sweet spot” between analytical modeling and empirical studies in management accounting, as Davis et al. (2007, p. 497) expect simulation techniques to do.

In the following, we briefly report on the analytical and the empirical part of the Bushman et al. study (Sect. 4.1). In Sect. 4.2 we introduce an agent-based simulation model which takes up major aspects of the closed-form model by Bushman et al. (1995). Section 4.3 presents and discusses the results of our agent-based model. In Sect. 4.4 we discuss the agent-based approach as applied to our exemplary topic in comparison to the two research approaches employed by Bushman et al.

4.1 Study by Bushman et al. (1995)

4.1.1 Theoretical part

By means of a principal-agent model, Bushman et al. “show that the use of aggregate performance measures is an increasing function of intra-firm interdependencies” (1995, p. 101). The model considers the contracts between a risk-neutral firm (principal) and a number m of risk-averse business unit managers k (agents), each with a negative exponential utility function. To map cross-unit interdependencies, manager k’s effort affects not only the performance of the manager’s own business unit \(D_{k}\) but also the performance of other units. In particular, the performance \(x_{k}\) of unit k is given by an additive function as

$$x_{k} = \sum_{l = 1}^{m} f_{kl} e_{l} + \theta_{k} \tag{1}$$

with \(f_{kl} \ge 0\) denoting the marginal product of manager l’s effort \(e_{l}\) on unit k’s performance, \(f_{kk} > 0\) for all k = 1, …, m, and with \(\theta_{k}\) being a normally distributed random variable with mean zero, variance \(\sigma_{k}\), and \(\operatorname{cov}(\theta_{k}, \theta_{l}) = \sigma_{kl}\). Note that, since \(f_{kl} \ge 0\), the model incorporates only positive, not negative, side effects on other business units. As a measure of the possibilities for spillover effects caused by manager k, the authors define the set \(M_{k} = \left\{ {\left. l \right|f_{kl} > 0{\text{ for any }} l \ne k} \right\}\). Then \(|M_{k}| = m - 1\) means that manager k can affect the performance of all other business units, while \(|M_{k}| = 0\) means that the manager can influence only the performance of his/her own unit. The performance of the firm is given as

$$A = \sum_{k = 1}^{m} x_{k} \tag{2}$$

The model considers a linear incentive scheme with compensation \(w_{k}\) given by

$$w_{k} = \alpha_{k} + \beta_{k} x_{k} + \gamma_{k} A \tag{3}$$

where \(\alpha_{k}\) denotes a fixed component, and \(\beta_{k}\) and \(\gamma_{k}\) denote the weights on the unit’s own performance and on the aggregate performance A, respectively.
Hence, given these components of interdependencies and incentives, the model is built from the “familiar ingredients” of principal-agent models: the principal maximizes his/her utility, given by the difference between overall performance A and the sum of compensation payments \(w_{k}\), subject to the managers’ opportunity wage (participation) constraints and rational action choice (incentive compatibility) constraints (e.g. Lambert 2001). The solution of the model leads to the following findings (Bushman et al. 1995, pp. 108):

(1) The relative use \(\gamma_{k}/(\gamma_{k} + \beta_{k})\) of the aggregate performance measure A in the optimal incentive system for manager k increases

- with the increasing possibilities \(|M_{k}|\) of the manager to affect the performance of other units,
- for a given \(|M_{k}| \ge 1\), with an increasing marginal impact \(f_{kl}\) on other units,
- for a given \(|M_{k}| \ge 1\), with a decreasing number of business units m.

(2) The ratio \(\gamma_{k}/\beta_{k}\) of the weights on the aggregate and on the business unit performance measure in the optimal contract depends

- on the correlation between business unit k and the rest of the firm: if the units’ performances are correlated with each other, noise can be filtered out of the manager’s contract by putting more weight on the “non-k” performance measures,
- on the noise of the overall performance A: with increasing noise in A, its relative weight \(\gamma_{k}\) decreases.
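To make the additive structure behind these findings concrete, the following Python sketch computes unit performance as the sum of effort contributions \(f_{kl} e_{l}\) plus noise \(\theta_{k}\), firm performance A as the sum of unit performances, a linear compensation with a fixed component, and the relative use \(\gamma_{k}/(\gamma_{k} + \beta_{k})\) of the aggregate measure from finding (1). The function names, the fixed pay component `alpha`, the independent noise terms, and the numbers in the example are our own illustrative assumptions. The sketch does not derive the optimal weights, which requires solving the principal-agent problem.

```python
import random

def unit_performance(f, effort, noise):
    """Performance of each unit k: sum over managers l of f[k][l] * effort[l], plus noise[k].

    f[k][l] >= 0 is the marginal product of manager l's effort on unit k's performance,
    so only positive spillovers are possible.
    """
    m = len(effort)
    return [sum(f[k][l] * effort[l] for l in range(m)) + noise[k] for k in range(m)]

def firm_performance(x):
    """Aggregate performance A: the sum of the units' performances."""
    return sum(x)

def compensation(alpha, beta, gamma, x_k, a):
    """Linear scheme (assumed form): fixed part alpha, weight beta on x_k, weight gamma on A."""
    return alpha + beta * x_k + gamma * a

def relative_use_of_aggregate(beta, gamma):
    """Relative use gamma / (gamma + beta) of the aggregate measure in manager k's contract."""
    return gamma / (gamma + beta)

# Illustrative two-unit firm: manager 1's effort spills over to unit 0 (f[0][1] > 0).
f = [[1.0, 0.5],
     [0.0, 2.0]]
effort = [1.0, 2.0]
rng = random.Random(42)
noise = [rng.gauss(0.0, 0.1) for _ in f]  # theta_k drawn independently here for simplicity
x = unit_performance(f, effort, noise)
a = firm_performance(x)
w0 = compensation(1.0, 0.5, 0.25, x[0], a)
print(round(w0, 3), relative_use_of_aggregate(0.5, 0.25))
```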

4.1.2 Empirical part

The empirical part of Bushman et al.’s study is based on a sample of 246 firms that participated in a survey on compensation plans. With respect to the explanatory variables, note that the authors do not examine the second part of their theoretical findings, i.e. those related to the information quality (noise) of the performance measures, because appropriate data were unavailable.

To measure the level of intra-firm interdependencies, Bushman et al. (1995) use firm-wide characteristics such as diversification and intersegment sales. In particular, high diversification across product lines is assumed to lead to business units that are rather independent of each other. The authors distinguish between related diversification (within an industry) and unrelated diversification (sales distributed across unrelated industries). Accordingly, they hypothesize that the use of aggregate performance measures is negatively related to product-line diversification, with an even stronger negative relation to unrelated diversification. The same is predicted for geographical diversification, which is assumed to be negatively related to the use of aggregate performance measures in compensation plans. Furthermore, intra-firm sales are regarded as a proxy for intra-firm interdependencies, since they arise when the output of one unit serves as the input of other units. Consequently, it is hypothesized that the use of aggregate performance measures is positively related to intra-firm sales.

To capture empirically the use of aggregate versus business unit performance measures, Bushman et al. (1995) consider two distinct aspects: first, the hierarchical level to which a manager is assigned and, second, the level of aggregation of the performance measures. For the first aspect, based on the underlying survey, Bushman et al. distinguish four hierarchical levels, namely “Corporate CEO”, “Group CEO”, “Division CEO” and “Plant Manager”; correspondingly, for the second aspect, the authors differentiate between corporate, group, division, and plant performance. The survey data reflect the relative weights that firms assign to these performance measures at each managerial level. This makes it possible to sum, for a given hierarchical level, the weights of those performance measures in the annual bonus plans that are “more aggregate” than the organizational level of the respective manager. With respect to long-term incentives, Bushman et al. analyze the extent to which incentives such as stock options are tied to performance measures above the organizational level of the managers.
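The summation of weights on “more aggregate” measures can be sketched as follows. The level labels follow the four levels named above, but the dictionary layout, the function name and the weights in the example are hypothetical; the exact coding of the survey data in Bushman et al. (1995) may differ.

```python
# Performance-measure levels ordered from most to least aggregate.
LEVELS = ["corporate", "group", "division", "plant"]

# Hierarchical level of each manager type, mapped onto the same ordering.
MANAGER_LEVEL = {
    "Corporate CEO": "corporate",
    "Group CEO": "group",
    "Division CEO": "division",
    "Plant Manager": "plant",
}

def aggregate_weight(weights, manager):
    """Sum of bonus-plan weights on measures more aggregate than the manager's own level.

    weights: dict mapping a measure level (e.g. "corporate") to its relative weight in the plan.
    """
    own = LEVELS.index(MANAGER_LEVEL[manager])
    return sum(weights.get(level, 0.0) for level in LEVELS[:own])

# Hypothetical bonus plan of a Division CEO: 20% corporate, 30% group, 50% division performance.
plan = {"corporate": 0.2, "group": 0.3, "division": 0.5}
print(aggregate_weight(plan, "Division CEO"))
```

For a Corporate CEO the measure is zero by construction, since no performance measure lies above the corporate level.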

The empirical part of Bushman et al.’s (1995) study is more differentiated than the theoretical part in at least two respects: the number of hierarchical levels (four vs. two) and the time structure of the incentives (annual and long-term incentives vs. an unspecified time horizon). Nevertheless, a general finding of the theoretical part, namely that with increasing cross-unit interactions, all else being equal, it is more useful to base compensation on aggregate performance measures, is broadly supported by the empirical results. In particular, intra-firm sales and geographical diversification, serving as proxies for intra-firm interdependencies, turn out to be highly relevant for the annual bonuses in the predicted manner. In contrast, product-line diversification appears to be only marginally relevant for the annual compensation plans, and distinguishing between related and unrelated product-line diversification does not improve the explanatory power. The general finding of the theoretical part is also supported with respect to the long-term incentives, which turn out to be tied almost entirely to corporate performance (i.e. to an aggregate performance measure): they are positively associated with intra-firm sales and negatively associated with product-line and geographic diversification. While the general finding of Bushman et al.’s theoretical part also holds for the different hierarchical levels captured in the empirical part, the proxies for intra-firm interactions have different explanatory power at different hierarchical levels.

4.2 An agent-based simulation model

In this section, we describe an agent-based model which reflects major features of Bushman et al.’s (1995) principal-agent model, namely interdependencies between business units, linear additive incentive schemes based on business unit or more aggregate performance measures, and linear additive errors in the performance measures. However, given the characteristic properties of agents in ABM (see Sect. 2.1), there are also major differences from Bushman et al.’s formal model, for example, the “solution strategy” of the unit managers (i.e. stepwise improvement rather than maximization), which results from their limited information about the solution space.

The central issue of our illustrative subject of investigation is the intersection of interdependencies between business units on the one hand and performance measures used in the incentive scheme for business unit managers on the other. Hence, the simulation model has to allow for the representation of different structures of interdependencies. For this, the concept of NK fitness landscapes (Kauffman 1993; Kauffman and Levin 1987, see Sect. 2.2) provides an appropriate simulation approach (Davis et al. 2007) as it allows interactions between attributes to be mapped in a highly flexible and controllable way.

In our model, artificial organizations, consisting of business units and a central office, including an accounting department, search for solutions providing superior levels of organizational performance for an N-dimensional decision problem. In particular, at each time step t (t = 1, …, T) in the observation period, our artificial organizations face an N-dimensional decision problem d !t , d 2t , …, d Nt . Corresponding to the formal platform of the NK model, the N single decisions are binary decisions, i.e. \(d_{it} \in \left\{ {0,1} \right\} , { }(i = 1, \ldots ,N)\). With that, over all configurations of the N single decisions, the search space at each time step consists of 2N different binary vectors \({\mathbf{d}}_{t} \equiv (d_{1t} , \ldots ,d_{Nt} )\). Each of the two states d it ∊ {0; 1} makes a certain contribution C it (with 0 ≤ C it ≤ 1) to the overall performance V t of the organization. However, in accordance with the NK framework, the contribution C it to overall performance may not only depend on the single choice d it ; moreover, C it may also be affected by K other decisions, K ∊ {0, 1, …, N − 1}. Hence, parameter K reflects the level of interactions, i.e. the number of other choices d jt , j ≠ i which also affect the performance contribution of decision d it . For simplicity’s sake, it is assumed that the level of interactions K is the same for all decisions i and stable over observation time T. More formally, contribution C it is a function c i of choice d it and of K other decisions:

In line with the NK model, for each possible vector (d i , d 1 i , …, d K i ) the contribution function c i randomly draws a value from a uniform distribution over the unit interval, i.e. U [0, 1]. The contribution function c i is stable over time. Given Eq. 4, whenever one of the choices d it , d 1 ti , …, d K it is altered, another (randomly chosen) contribution C it becomes effective. The overall performance V(d t ) of a configuration d t of choices is represented as the normalized sumFootnote 10 of contributions C it , which results in

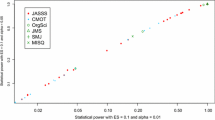

Conversely, depending on the interaction structure, altering \(d_{it}\) might affect not only \(C_{it}\) but also the contributions \(C_{jt}\) of other decisions \(j \ne i\). Hence, altering \(d_{it}\) could provide further positive or negative contributions (i.e. spillover effects) to overall performance \(V_{t}\). In the simplest case, no interactions between the single choices \(d_{it}\) exist, i.e. K = 0, and the performance landscape has a single peak. In contrast, K = N − 1 for all i reflects the maximum level of interactions, and the performance landscape is maximally rugged (e.g. Altenberg 1997; Rivkin and Siggelkow 2007).
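The NK mechanics described above can be sketched in Python as follows. The sketch assumes that each decision’s K interaction partners are drawn at random (a model may instead fix a specific interaction structure) and uses `random.random()`, which draws from [0, 1), as a stand-in for U[0, 1]; the function names are hypothetical. Exhaustively counting the configurations that no single flip can improve shows the single peak for K = 0. For K = N − 1, every configuration receives independent contributions, so each one is a local optimum with probability 1/(N + 1), and about \(2^{N}/(N + 1)\) peaks are expected.

```python
import itertools
import random

def nk_landscape(n, k, seed=0):
    """Return V(d) for a random NK landscape with n binary decisions and k interactions each."""
    rng = random.Random(seed)
    # Decision i's contribution depends on d_i and on k other, randomly chosen decisions.
    partners = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # c_i: one U[0, 1) draw per joint state of (d_i, d_i1, ..., d_iK), fixed over time.
    tables = [{s: rng.random() for s in itertools.product((0, 1), repeat=k + 1)}
              for _ in range(n)]

    def v(d):
        # Normalized sum: V(d) = (1/N) * sum_i c_i(d_i, d_i1, ..., d_iK)
        return sum(tables[i][(d[i],) + tuple(d[j] for j in partners[i])]
                   for i in range(n)) / n

    return v

def local_optima(n, v):
    """All configurations that no single-decision flip can improve (exhaustive: small n only)."""
    optima = []
    for d in itertools.product((0, 1), repeat=n):
        here = v(d)
        neighbors = (d[:i] + (1 - d[i],) + d[i + 1:] for i in range(n))
        if all(v(e) < here for e in neighbors):
            optima.append(d)
    return optima

for k in (0, 7):
    count = len(local_optima(8, nk_landscape(8, k, seed=1)))
    print(f"N=8, K={k}: {count} local optima")
```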