Abstract

Artificial intelligence (AI) continues to transform firm-customer interactions. However, current AI marketing agents are often perceived as cold and uncaring and can be poor substitutes for human-based interactions. Addressing this issue, this article argues that artificial empathy needs to become an important design consideration in the next generation of AI marketing applications. Drawing from research in diverse disciplines, we develop a systematic framework for integrating artificial empathy into AI-enabled marketing interactions. We elaborate on the key components of artificial empathy and how each component can be implemented in AI marketing agents. We further explicate and test how artificial empathy generates value for both customers and firms by bridging the AI-human gap in affective and social customer experience. Recognizing that artificial empathy may not always be desirable or relevant, we identify the requirements for artificial empathy to create value and deduce situations where it is unnecessary and, in some cases, harmful.



Introduction

For the past three decades, novel interactions between firms and customers have often been fueled by new technologies (Grewal et al. 2020a). Various forms of digital marketing arose from the debut of the Web, the rising influence of search engines, and the proliferation of social media. Mobile marketing became an important field due to the popularity and functionalities of smart mobile devices (Grewal et al. 2016). Retail interactions have been significantly enhanced by various in-store technologies such as handheld scanners, digital price tags, and augmented reality (Grewal et al. 2020b, 2020c). More recently, firm-customer interactions are undergoing another transformation with the help of artificial intelligence (AI), representing the latest marketing innovation brought forth by technology. As our review of AI marketing applications in Web Appendix 1 shows, AI technologies have been applied to many areas of firm–customer interactions and across all phases of the customer journey (Hoyer et al. 2020).

Despite diverse AI marketing applications, our review shows that AI technologies have largely focused on improving the cognitive and behavioral dimensions of customer experience (Puntoni et al. 2021). Much less attention has been paid to the emotional and social components of customer experience, even though they are essential to superior customer outcomes (Lemon and Verhoef 2016). This lack of attention is striking in view of the demonstrated negative effect of using AI in place of human agents when interacting with customers. For example, Luo et al.’s (2019) study of AI sales agents found that, when customers knew their conversational partner was a bot, they were curter, purchased less, and perceived the agent as less empathic than human sales agents. In another study of humanoid service robots, Mende et al. (2019) found that these service robots elicited a greater level of psychological discomfort than human service providers, leading to potentially self-detrimental consumption consequences. These studies point to a significant gap between AI-enabled marketing interactions and those managed by humans, impeding the effective use of AI technologies and the consistent management of firm-customer interactions. For AI technologies to be effective in the marketing domain, future AI applications need to go beyond technological efficiency and accuracy to become more sensitive to the emotional and social aspects of customer experience (Huang and Rust 2018; Puntoni et al. 2021).

In this paper, we argue that artificial empathy is key to bridging the human-AI gap in affective and social customer experience and should become an important consideration in future AI-enabled firm-customer interactions. Artificial empathy represents the ability of AI agents to detect and adapt to humans’ cognitive needs and emotional states (Asada 2015). As an important stage in AI evolution (Huang and Rust 2018), empathic AI improves upon less adaptive, traditional non-empathic AI and brings AI one step closer to human intelligence (Dial 2018). The technologies for building empathic AI are already available. Advances in affective computing have made it possible for AI agents to adapt to users’ emotions (Poria et al. 2017; Sekar 2019). Practical empathic AI applications such as Microsoft XiaoIce (footnote 1) and Meta AI’s Blender Bot (footnote 2) are being developed for human-AI interactions. With such technologies, artificial empathy is not a distant reality but can, at least to some extent, be implemented now.

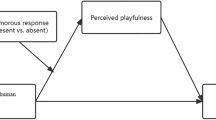

Despite the technological promise and a growing realization that artificial empathy is important to effective AI applications, a detailed examination of artificial empathy as it applies to marketing is lacking. In particular, the questions of when and how artificial empathy should be integrated into AI-enabled marketing interactions remain unanswered (Davenport et al. 2020). Addressing these gaps, this article provides a systematic framework for leveraging artificial empathy in marketing interactions in which consumers are clearly aware that they are interacting with an AI agent (see Fig. 1). Drawing from diverse disciplines such as computer science, psychology, robotics, and communications, we explicate artificial empathy, its key components, and how it can be implemented in marketing interactions through the latest empathic AI technologies. We examine how artificial empathy can generate value by bridging the AI-human customer experience gap and test these ideas empirically in a pilot study. At the same time, we note that artificial empathy is not a panacea for all AI-enabled marketing interactions. Attempts at humanizing AI agents can sometimes create unintended negative consequences (Crolic et al. 2022). It is therefore important that businesses be cognizant of when artificial empathy is beneficial versus unnecessary or detrimental. To this end, we discuss the prerequisites for artificial empathy to create value and identify situations where artificial empathy may be less desirable.

Our research contributes to marketing research and practice in several ways. First, most discussions of empathic AI to date have occurred in computer science and related fields (see Paiva et al. 2017). But as Davenport et al. (2020) argue, the marketing discipline should play an equally important role in addressing AI-related problems, given the vast opportunities and potential gains from AI marketing applications. We respond to this call by combining empathic AI research in computational fields with the psychology and marketing literatures to bring systematic discussions of empathic AI into the marketing field. We identify important research propositions and future research questions to fuel marketing investigations in this area. Second, by centering the discussion of AI marketing applications on artificial empathy, we unite the need for algorithmic optimization and automation with the experiential aspect of the consumer-AI interaction (Puntoni et al. 2021) to create a common purpose and framework for such investigations. As empathy is inherently an intertwined process of cognition and affect, we hope to inspire more dialogue between algorithmic AI research and the study of the human-AI interface. Finally, by considering the most recent technological advances and best practices, our research offers practical guidance on when and how marketing practitioners should realize artificial empathy in their AI applications. As many empathic capabilities already exist at today’s technological level, the potential gains for marketing practice are immediately relevant.

What is artificial empathy?

Empathy in the interpersonal domain

The root of artificial empathy lies in empathy in interpersonal domains such as clinical psychology, social psychology, and ethics (Yalcin and DiPaola 2018). Empathy refers to the capacity to sense, understand, and share the thoughts and feelings of another person (Wieseke et al. 2012). As an important social glue, empathy has been shown to promote rapport (Gremler and Gwinner 2008), increase cooperative behavior (Adam et al. 2021), reduce opportunism, and enhance relationship quality (Ndubisi and Nataraajan 2018). Marketing scholars have demonstrated the importance of empathy in various contexts such as personal selling (Weißhaar and Huber 2016) and customer-employee service interactions (Wieseke et al. 2012).

In the human empathy literature, a commonly adopted approach is a hierarchical three-layer structure of empathic development, ranging from more unconscious emotional empathy to more conscious cognitive empathy (de Waal 2008). This three-layer structure has been frequently applied in previous empathy research. It has been empirically validated in the marketing context (e.g., McBane 1995; Wieseke et al. 2012) and has also been conceptually applied to AI and robotics (Asada 2015). At the core of empathy lies emotional contagion, which involves matching one’s emotional state with that of another person (de Waal 2008). For example, on seeing someone else sad, an individual actually experiences sadness himself or herself. Emotional contagion reflects a perception-action mechanism, an automatic, spontaneous, and unconscious activation of the observer’s own representations of the target’s state.

Built upon the perception-action mechanism, the next layer of empathy consists of empathic concern, which refers to the ability to intuit others’ emotional state and to express concern toward others (Wieseke et al. 2012). In other words, empathic concern is composed of “focus on the plight of another person and feeling compassion-like or sympathetic-like emotions” (Bagozzi et al. 2012, p. 648). The expression of empathy through empathic concern is an important and necessary step in the empathy process (Barrett-Lennard 1981) and is crucial for engendering trust and commitment in customer interactions (Weißhaar and Huber 2016).

The outermost layer of empathy is perspective-taking, which belongs to the cognitive, more effortful domain. Perspective-taking refers to the capacity to understand someone else’s perspectives and needs as distinct from one’s own (de Waal 2008). The idea of perspective-taking is closely related to Theory of Mind, which represents individuals’ ability to explain and predict other people’s behavior by ascribing to them independent mental states such as desires, intentions, and beliefs (Byom and Mutlu 2013). This ability to understand others’ minds and predict others’ behavior can facilitate conversation and enhance social interaction (Smith 2006).

From empathy to artificial empathy

Although empathy is inherent in human interactions to facilitate harmonious relationships among people, the same cannot be said about human-AI interactions. Prior research reports that consumers are often reluctant to use AI for tasks involving subjectivity, intuition, and affect, as AI lacks the empathy required to perform such tasks (Davenport et al. 2020). This mentality suggests a need to better integrate empathy into AI applications, leading some marketing scholars to envision “empathetic AI” as a future evolutionary stage of AI (Huang and Rust 2018). Based on existing research on interpersonal empathy and artificial empathy research in computer science and robotics fields, we define artificial empathy as the codification of human cognitive and affective empathy through computational models in the design and implementation of AI agents (see Table 1 for a list of this and other key construct definitions). Simply put, artificial empathy can be viewed as the coding of empathy into AI algorithms and agents (Asada 2015).

We note two critical differences between human empathy and artificial empathy. First, AI agents cannot feel or experience emotions as humans do, at least at today’s technological level. Therefore, they can only simulate human empathy by displaying pseudo-mental features of empathy (Airenti 2015). This makes artificial empathy a capability codified through computational algorithms. Second, the components of artificial empathy do not follow the same hierarchical developmental order as those of human empathy. For example, while the core layer of empathy, emotional contagion, is natural and automatic in humans, it is challenging to embed such a process in computational machinery (Asada 2015). In contrast, perspective-taking, which is more cognitively effortful for humans, is relatively easier to implement in AI because it is based on logical understanding and can therefore be more readily translated into machine learning from accumulated data. Consequently, given machine algorithms’ greater capability for handling cognitive than emotional tasks, we believe that the hierarchy of human empathy should be reversed in artificial empathy, with perspective-taking at its core, followed by empathic concern, and emotional contagion as the outermost layer. We discuss these components in more detail in the next section.

What are the dimensions of artificial empathy?

Having articulated the meaning of artificial empathy, we now take a closer look at its three components: perspective-taking, empathic concern, and emotional contagion. Among the three components, perspective-taking represents the cognitive aspect of artificial empathy, while the other two represent its affective aspects. The joint contribution of cognitive and affective processes to the realization of artificial empathy provides a richer and more complete view of empathy than cognitive empathy or affective empathy alone (Asada 2015). Together, these three components comprise the higher-order construct of artificial empathy.

Perspective-taking

Perspective-taking in an AI context refers to the computational learning and modeling of individuals’ thoughts and inference processes in a given situation. Considering the developmental history of AI, our view of perspective-taking moves the state of AI from “analytical intelligence” with logical, systematic, and rule-based learning capabilities to more advanced “intuitive intelligence” with more holistic, flexible, and experience-based thinking capabilities (Huang and Rust 2018). Implementing perspective-taking in AI agents can be broken down into three elements with progressive levels of difficulty: preference construction, personality assessment, and goal inference. These elements have all been major considerations in the understanding and prediction of consumer choices using AI (Gal and Simonson 2021).

Preference construction

The first element of perspective-taking, preference construction, is a frequent subject of AI and machine learning research and involves retrospective inference of consumers’ preferences from their behaviors and choices (Brei 2020). For example, Huang and Luo (2016) developed a multi-step method with a fuzzy Support Vector Machine (SVM) active learning algorithm to elicit individual-level preference estimates when consumers consider a large number of product attributes. Liu and Toubia (2018) estimated consumers’ content preferences associated with online search queries by introducing a topic model based on Hierarchically Dual Latent Dirichlet Allocation (HDLDA), which is appropriate when search queries are semantically related to search results. As a practical example, the Audi Intelligence Experience (footnote 3) is equipped with an AI-based navigation system that uses machine learning to explore consumers’ driving habits previously recorded by the brand.
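To make retrospective preference inference concrete, the sketch below fits a simple pairwise logit model to a consumer’s past choices. It is an illustrative stand-in, not the fuzzy SVM or HDLDA methods cited above; the attributes, choice history, and hyperparameters are hypothetical.

```python
import math

def fit_pairwise_logit(choices, n_attrs, lr=0.5, epochs=500, l2=0.01):
    """Estimate attribute weights from observed pairwise choices.

    Each choice is a (chosen, rejected) pair of attribute vectors. The model
    assumes P(chosen beats rejected) = sigmoid(w . (chosen - rejected)) and
    fits w by gradient ascent on an L2-penalized log-likelihood.
    """
    w = [0.0] * n_attrs
    for _ in range(epochs):
        grad = [-l2 * wk for wk in w]  # gradient of the L2 penalty
        for chosen, rejected in choices:
            diff = [c - r for c, r in zip(chosen, rejected)]
            utility = sum(wk * dk for wk, dk in zip(w, diff))
            p = 1.0 / (1.0 + math.exp(-utility))
            for k in range(n_attrs):
                grad[k] += (1.0 - p) * diff[k] / len(choices)
        w = [wk + lr * gk for wk, gk in zip(w, grad)]
    return w

# Hypothetical history: attributes are (quality, price), both scaled to [0, 1].
history = [
    ([0.8, 0.5], [0.4, 0.5]),  # same price, higher quality chosen
    ([0.6, 0.3], [0.6, 0.7]),  # same quality, cheaper option chosen
    ([0.7, 0.4], [0.5, 0.9]),  # better and cheaper option chosen
    ([0.9, 0.6], [0.3, 0.4]),  # paid a little more for much higher quality
]
weights = fit_pairwise_logit(history, n_attrs=2)
```

A positive weight on quality and a negative weight on price would describe a quality-seeking but price-sensitive consumer; the same idea extends to many attributes given more data.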

More advanced preference construction is exemplified by conversational AI, which identifies consumer interests through real-time conversations to assist consumers with problem-solving (Musto et al. 2019). One example is 1-800-Flowers’ Gwyn (footnote 4), a personal gifting concierge chatbot with conversational capability. Gwyn asks customers 5–6 questions about the gift recipient to recommend the perfect product based on the customer’s shopping needs. Another example is Humana’s AI-enabled call center (footnote 5), which quickly analyzes the nuances of customer calls using natural language processing. Using user input data collected by Humana, its AI agents can process heterogeneous voices and inquiries to understand customer and call center employee content, distinguish between sub-intents, and serve real-time answers to inquiries.
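A minimal sketch of question-based preference construction, in the spirit of (but not reproducing) a gifting chatbot: each answer fills a slot, and catalog items are ranked by how many slots they match. The catalog, questions, and scoring rule are hypothetical.

```python
# Hypothetical catalog: each gift is tagged with the answers it suits.
CATALOG = [
    {"name": "Rose bouquet", "occasion": "romance", "budget": "high", "recipient": "partner"},
    {"name": "Succulent trio", "occasion": "thanks", "budget": "low", "recipient": "colleague"},
    {"name": "Fruit basket", "occasion": "sympathy", "budget": "medium", "recipient": "family"},
    {"name": "Tulip vase", "occasion": "thanks", "budget": "medium", "recipient": "family"},
]

# Each question fills one "slot" of the customer's shopping need.
QUESTIONS = [
    ("recipient", "Who is the gift for?"),
    ("occasion", "What is the occasion?"),
    ("budget", "What budget do you have in mind?"),
]

def recommend(answers):
    """Rank items by how many of the customer's answers they match."""
    scored = []
    for item in CATALOG:
        score = sum(1 for slot, value in answers.items() if item.get(slot) == value)
        if score > 0:
            scored.append((score, item["name"]))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored]

def run_dialogue(ask):
    """Ask each question in turn; `ask` returns the customer's answer."""
    answers = {slot: ask(question) for slot, question in QUESTIONS}
    return recommend(answers)
```

In a live agent, `ask` would send the question to the customer and return the parsed reply; passing it in as a function keeps the ranking logic testable.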

Personality assessment

The second element of perspective-taking involves deducing consumers’ personality traits. Compared with preference construction, which usually applies to specific contexts, personality assessment takes a more holistic view of the individual and identifies pervasive individual idiosyncrasies that generalize across contexts. This more holistic assessment of the consumer using AI is less prevalent than preference construction, but recent research has demonstrated the potential of integrating personality traits to improve the personalization and persuasiveness of AI-based marketing (Shumanov et al. 2021). More importantly, AI has shown great promise in deciphering consumers’ personality traits from a variety of data. For example, a recent study shows that AI can infer people’s “Big Five” personality traits (openness, conscientiousness, extraversion, agreeableness, and neuroticism) from selfies more accurately than human raters (Neuroscience News 2020). AI can also infer personality traits from text, as when virtual interviewers automatically infer an individual’s personality traits from a text-based conversation (Zhou et al. 2019).

A practical application of personality assessment is the Empathy-Based Affective Portrait Painter (EBAPP; footnote 6). The EBAPP processes visual, vocal, and natural language data to infer the emotional state and Big Five personality traits of the interacting user, which are then rendered into an artistic portrait of the user. Such inferences about individuals’ personality can play an important role in future AI-enabled marketing. For example, future smart stores could adapt their ambience to a consumer’s personality traits. As another example, given the importance of self-brand personality congruence (Aaker 1997), AI agents with personality assessment capabilities could recommend brands that fit the user’s personality.
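One way an agent could act on self-brand congruence is to compare a user’s inferred Big Five profile with brand profiles by cosine similarity. This sketch assumes, hypothetically, that brands have been scored on the same five trait axes (Aaker’s brand personality framework uses different dimensions), and all scores are invented.

```python
import math

TRAITS = ("openness", "conscientiousness", "extraversion", "agreeableness", "neuroticism")

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank_brands(user, brands):
    """Rank brands by trait-profile similarity to the user.

    Scores are in [0, 1]; centering at 0.5 makes the similarity reflect whether
    the user and a brand deviate from the midpoint in the same directions.
    """
    user_vec = [user[t] - 0.5 for t in TRAITS]
    scores = {name: cosine(user_vec, [profile[t] - 0.5 for t in TRAITS])
              for name, profile in brands.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Invented example data.
user = {"openness": 0.9, "conscientiousness": 0.4, "extraversion": 0.8,
        "agreeableness": 0.6, "neuroticism": 0.3}
brands = {
    "Adventure Co": {"openness": 0.85, "conscientiousness": 0.4, "extraversion": 0.9,
                     "agreeableness": 0.5, "neuroticism": 0.4},
    "Steady Inc": {"openness": 0.2, "conscientiousness": 0.9, "extraversion": 0.3,
                   "agreeableness": 0.6, "neuroticism": 0.4},
}
ranking = rank_brands(user, brands)
```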

Goal inference

The third element of perspective-taking, goal inference, refers to AI’s more advanced capability to discover the motivation behind a consumer’s actions and decisions. This aspect of perspective-taking recognizes that individual decision-making is driven not only by stable preferences and traits but also by the goals in a situation or during a specific period in life. For example, buying shoes to become healthier vs. to get ready for a new job leads to very different perspectives and purchase decisions. Being able to infer a consumer’s goals in a given situation can add significantly to understanding the consumer’s perspective. It can help identify consumer needs not only in known scenarios but also in novel situations.

Computationally, expectations about others’ behaviors can be captured by reinforcement learning, which identifies actions that maximize known rewards (Sutton and Barto 2018). In contrast, expectations about others’ goals can be formed through inverse reinforcement learning, which first tries to recover the hidden reward function from observed actions and then derives a policy that decides which actions to take given those rewards (Jara-Ettinger 2019). Under this approach, the AI is aware that it does not know what people want to achieve and tries to infer their goals by observing their behaviors, instead of inferring behaviors from known goals. Although inverse reinforcement learning is more computationally expensive than reinforcement learning, it can successfully produce human-like judgments when inferring people’s goals, beliefs, and desires (Jara-Ettinger 2019). For example, Zhi-Xuan et al. (2020) developed a “sequential inverse plan search” algorithm based on Bayesian inverse reinforcement learning to infer the goals of others, allowing for the possibility that people plan and act sub-optimally, fail to achieve their goals, or make mistakes due to the difficulty of planning. As another example, Wu et al. (2020) applied maximum entropy inverse reinforcement learning to autonomous driving, learning arbitrarily complex human driving factors from real traffic data, such as uncertainties in vehicle trajectories and interactions with the environment.
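Goal inference can be sketched as Bayesian inverse planning over a small set of candidate goals, a simplified relative of the inverse reinforcement learning methods above rather than any of the cited algorithms. Here a shopper moves along a hypothetical store aisle whose two ends stand for the two shoe-buying goals in the text; a Boltzmann-rational likelihood (rationality parameter `beta`, an assumption) allows the shopper to act sub-optimally at times.

```python
import math

ACTIONS = (-1, 0, 1)  # step left, stay, step right along a store aisle

def action_likelihood(state, action, goal, beta):
    """Boltzmann-rational policy: actions that bring the shopper closer to the
    goal are exponentially more likely, but mistakes remain possible."""
    def utility(a):
        return -abs((state + a) - goal)
    weights = {a: math.exp(beta * utility(a)) for a in ACTIONS}
    return weights[action] / sum(weights.values())

def infer_goal(start, actions, goals, beta=1.5):
    """Posterior over candidate goals (name -> aisle position) given actions."""
    posterior = {name: 1.0 / len(goals) for name in goals}  # uniform prior
    state = start
    for action in actions:
        for name, position in goals.items():
            posterior[name] *= action_likelihood(state, action, position, beta)
        state += action
    total = sum(posterior.values())
    return {name: p / total for name, p in posterior.items()}

# Hypothetical layout: running shoes at position 0, formal shoes at position 10.
posterior = infer_goal(start=5, actions=[1, 1, 0, 1],
                       goals={"get_healthier": 0, "new_job": 10})
```

Lower values of `beta` make the observer more forgiving of detours and pauses, echoing the assumption of sub-optimal planning in Zhi-Xuan et al. (2020).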

Empathic concern

Empathic concern in an AI context involves algorithmically recognizing an individual’s distress and creating the impression that the AI agent cares about and is concerned for the individual. As an affective dimension of artificial empathy, empathic concern falls in a domain in which AI, and machines in general, are considered less competent because of the inherent complexity of emotions (Ho et al. 2018; Longoni and Cian 2022). However, recent advances in affective computing are making emotionally adaptive AI increasingly promising. Programming empathic concern into an AI agent involves two essential steps. The first is emotion recognition, which involves detecting a consumer’s emotional state at different points in time. Without first recognizing emotions, the AI agent would have no basis for empathic concern. The second step is the adaptive communication of such concern, which is an important and necessary step in empathy (Barrett-Lennard 1981; Weißhaar and Huber 2016). In AI-enabled marketing interactions, the AI agent needs to formulate an appropriate response to the consumer’s emotions identified in the first step to create the impression of empathic concern.

AI and emotion recognition

Recognizing emotions is a key research topic in affective computing, an interdisciplinary field that “enables intelligent systems to recognize, feel, infer, and interpret human emotions” (Poria et al. 2017, p. 98). Marketing can now draw on a variety of methods and data to identify consumers’ emotions. To date, the most commonly used data for automated emotion detection have been textual (e.g., social media postings). Much of the existing literature on detecting emotions in textual data has employed sentiment analysis, which combines natural language processing and machine learning to extract the valence of the authors’ emotions (Berger et al. 2020). Sample applications include detecting negative brand events (Herhausen et al. 2019), understanding consumer responses in online communities (Homburg et al. 2015), and identifying sentiment in consumer chatter after an experiential event (Meire et al. 2019).
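Production sentiment analysis relies on trained models, but the basic idea of extracting valence from text can be sketched with a tiny lexicon and a simple negation rule. The word list and weights below are hypothetical.

```python
import re

# Tiny illustrative lexicon (hypothetical weights); real systems use trained
# models or large validated lexicons.
LEXICON = {"love": 2.0, "great": 1.5, "happy": 1.5, "slow": -1.0,
           "broken": -2.0, "terrible": -2.5, "frustrated": -2.0}
NEGATORS = {"not", "never", "no"}

def valence(text):
    """Average polarity of the sentiment-bearing words in `text` (0.0 if none)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score, hits = 0.0, 0
    for i, tok in enumerate(tokens):
        if tok in LEXICON:
            weight = LEXICON[tok]
            # Flip polarity when the previous token is a negator ("not happy").
            if i > 0 and tokens[i - 1] in NEGATORS:
                weight = -weight
            score += weight
            hits += 1
    return score / hits if hits else 0.0
```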

Another type of data for emotion recognition is voice data. Emotion detection in voice leverages acoustic features such as pitch, talking speed, and intensity of the speech to identify the speaker’s emotions (Poria et al. 2017). Commercial solutions are being developed in this area, such as SoundNet’s detection of anger in audio (Elshaer et al. 2019) and Amazon Alexa’s research on identifying speaker emotions (Parthasarathy et al. 2019). So far, marketing scholars have paid limited attention to emotion detection in consumers’ voices. One exception is Cavanaugh et al. (2016), who used layered voice analysis to detect embarrassment in digitally recorded consumer responses. As AI-powered voice assistants such as Siri and Alexa become widely adopted, the examination of consumer vocal data will likely increase in the coming years.
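The sketch below extracts two of the acoustic features mentioned above, intensity (root-mean-square energy) and pitch (the autocorrelation peak), from a raw audio frame. Mapping such features to emotion categories would require a trained classifier, which is not shown; the 75–400 Hz search range is an assumption about typical speaking pitch, and the test signal is a synthetic tone.

```python
import math

def rms_intensity(samples):
    """Root-mean-square energy, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def estimate_pitch(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak
    among lags corresponding to fmin..fmax."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag

# Synthetic 0.1-second frame: a 200 Hz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
frame = [0.5 * math.sin(2 * math.pi * 200 * i / SAMPLE_RATE) for i in range(800)]
```

Because the unnormalized autocorrelation sums fewer terms at longer lags, it tends to favor the true period over its multiples, which helps avoid octave errors in this simple setting.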

Finally, consumers’ emotions can also be extracted from visual data, such as user-generated images and videos and interactions with service bots. Emotion recognition in these circumstances relies mainly on two forms of visual data: facial expressions and body movements. On the former, Liu et al. (2018) used an algorithm to detect emotions in video viewers’ facial expressions and then leveraged the insight to create optimized video clips that increase watch intention. This research shows promise for using automated facial analysis in AI marketing applications. Body movements, the second type of visual data, are typically reduced to low-dimensional vectors that are then used to extract emotional states such as aggression and excitement (e.g., Bartlett et al. 2019). When the analyses leverage dyadic data, the physical distance and relative position between the interacting parties can also reveal their feelings toward each other (e.g., Joo et al. 2019). Automated emotion detection via body movements has yet to make its way into marketing research. However, as customer interactions through service bots become common, the potential for such analyses is significant. For example, a hotel greeting bot could detect a guest’s emotions through body movements and adapt the interaction accordingly.
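As a sketch of reducing body movement to a low-dimensional vector, the functions below summarize a sequence of 2-D pose keypoints as mean keypoint speed and mean bounding-box area (expansiveness), and compute the distance between two people’s pose centroids for dyadic analysis. These hand-crafted features are hypothetical stand-ins for the representations in the cited work, and the keypoint format (lists of (x, y) tuples per frame) is assumed.

```python
import math

def centroid(points):
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def movement_features(frames, fps):
    """Reduce a pose sequence to a 2-D vector: (mean keypoint speed in units
    per second, mean bounding-box area)."""
    speeds = []
    for prev, cur in zip(frames, frames[1:]):
        for (x0, y0), (x1, y1) in zip(prev, cur):
            speeds.append(math.hypot(x1 - x0, y1 - y0) * fps)
    areas = []
    for pts in frames:
        xs, ys = zip(*pts)
        areas.append((max(xs) - min(xs)) * (max(ys) - min(ys)))
    mean_speed = sum(speeds) / len(speeds) if speeds else 0.0
    return (mean_speed, sum(areas) / len(areas))

def interpersonal_distance(pose_a, pose_b):
    """Distance between two people's pose centroids in one frame."""
    (ax, ay), (bx, by) = centroid(pose_a), centroid(pose_b)
    return math.hypot(ax - bx, ay - by)
```

Downstream, a trained model (not shown) would map such vectors to states like excitement or aggression.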

AI and expression of empathic concern

Once a consumer’s emotional state is identified, the next step in empathic concern is to communicate the concern. Although the services and sales literatures have repeatedly emphasized the importance of empathic concern, surprisingly little research has been done on exactly how to express it. We draw instead from healthcare and psychology research to identify three mechanisms that AI agents can leverage to express concern: empathic listening and probing, acknowledgement, and proactive adaptation.

The first mechanism involves empathic listening and probing (Barrett-Lennard 1981). Unlike the implicit recognition of emotions, empathic “listening” is a more active and visible process. When a hint of distress is detected, caring can be signaled by questions and comments that encourage the consumer to elaborate on the situation and to “vent”. This approach is commonly used by healthcare professionals to provide empathic patient care (Bylund and Makoul 2005). Applied to an AI context, empathic listening can be implemented as a series of adaptable sequential dialogues activated by the detection of a distressing emotion. The information collected from the user in this process then serves as further input into the dialogue. The ELSA chatbot from the MIT Media Lab is a good example in this area: it encourages interactive journaling to better understand the user and improve the user’s mental health.
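Empathic listening as an adaptable sequence of dialogues can be sketched as a small state machine: a distress signal from an upstream emotion recognizer switches the agent into listening mode, the agent asks a series of probing questions, and each reply is stored as input for later turns. The valence scale, threshold, and probe wording are hypothetical.

```python
from dataclasses import dataclass, field

PROBES = [
    "That sounds difficult. Could you tell me more about what happened?",
    "How has this been affecting you?",
    "Is there anything that would make this easier right now?",
]

@dataclass
class ListeningSession:
    distress_threshold: float = -0.5  # valence at or below this triggers listening
    probe_index: int = -1             # -1 means listening mode is not active
    notes: list = field(default_factory=list)

    def respond(self, user_text, valence):
        """Return the next probe, a closing reflection, or None to hand the
        turn back to the task dialogue. `valence` comes from an upstream
        emotion recognizer and is assumed to lie in [-1, 1]."""
        if self.probe_index < 0:
            if valence > self.distress_threshold:
                return None  # no distress detected
            self.probe_index = 0
        self.notes.append(user_text)  # the elaboration feeds later turns
        if self.probe_index < len(PROBES):
            probe = PROBES[self.probe_index]
            self.probe_index += 1
            return probe
        self.probe_index = -1  # leave listening mode
        return "Thank you for sharing all of that. Let's see what we can do together."
```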

The second mechanism, acknowledgement, explicitly recognizes and affirms the other person’s distress. This mechanism represents the empathic resonance phase in Barrett-Lennard’s (1981) cyclic empathic model. Existing studies of empathic concern have often manipulated the construct successfully through acknowledgement statements such as “I understand how frustrating this must be to you.” Although seemingly simple, such phrases can increase users’ perception of how much a chatbot understands and supports them (Liu and Sundar 2018).

Finally, AI can show empathic concern through proactive adaptation of the message or the communication interface. This mechanism echoes the consoling behaviors that primates use to express empathic concern (de Waal 2008). An early prototype of such proactive adaptive abilities is a dynamic user interface that uses CSS and JavaScript to adapt to the user’s mood in real time, such as when the user is feeling frustrated with the navigation experience (Märtin et al. 2016). Other examples include automotive interfaces adapted to the driver’s mood (Braun et al. 2020) and ambient music tailored to emotions detected in conversation partners’ facial expressions (Kummer et al. 2012). As AI technology matures, such emotion-based adaptations will play an increasing role in simulating empathic concern in AI-enabled marketing interactions.
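Proactive interface adaptation can be sketched as a mapping from an estimated frustration level to presentation settings that a front end could then apply as CSS. The interaction signals, weights, thresholds, and setting names below are all hypothetical.

```python
def frustration_score(rapid_clicks, back_navigations, seconds_on_page):
    """Hypothetical heuristic turning interaction signals into a score in [0, 1]."""
    raw = 0.15 * rapid_clicks + 0.2 * back_navigations
    if seconds_on_page > 60:  # lingering may signal difficulty finding things
        raw += 0.2
    return min(1.0, raw)

def adapt_interface(score):
    """Map a frustration score to presentation settings for the front end."""
    settings = {"layout": "standard", "accent_color": "#d9480f",
                "font_scale": 1.0, "show_help_chat": False}
    if score >= 0.4:
        # Calmer palette and fewer options to reduce cognitive load.
        settings.update(layout="simplified", accent_color="#1c7ed6")
    if score >= 0.7:
        # Proactively offer help instead of waiting to be asked.
        settings.update(font_scale=1.1, show_help_chat=True)
    return settings
```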

Emotional contagion

Emotional contagion in an interpersonal context involves synchronizing one’s feelings with those of another person. However, because current AI cannot truly experience emotions, emotional contagion in the strictest sense is not possible for AI at present. Instead, artificial emotional contagion is better defined as conveying an artificial sense, or illusion, that an AI agent is experiencing the same emotions as the interacting party, through emotion mirroring and mimicry (e.g., appearing happy in response to the other person’s happiness; Nofz and Vendy 2002). While some may consider this illusion of emotional contagion superficial, neuroscience research has identified imitation and neural mirroring as important mechanisms for emotional contagion and subsequent empathic reactions (Iacoboni 2009). Implementing artificial emotional contagion can be broken down into three steps: (1) recognizing the emotions experienced by the consumer; (2) deciding whether to mirror the emotion; and (3) mirroring the consumer’s emotion if emotional contagion is deemed appropriate (Paiva et al. 2015). Since we discussed emotion recognition in the previous section, below we focus on the second and third steps in creating artificial emotional contagion.

When to mirror emotions

Similar to how human beings often experience emotions following an appraisal process, the decision to mirror an emotion or not in an AI agent can be implemented through an appraisal routine to evaluate the situation and determine the appropriate emotional response (Paiva et al. 2015). Such a routine may be implemented in diverse ways, including computation of the valence of a situation and fuzzy logic that is adapted to the AI agent’s goals (Kowalczuk and Czubenko 2016). An emotion is mirrored if the appraisal routine determines that mimicking the emotion would likely facilitate the goal of the interaction.
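An appraisal routine based on fuzzy logic might look like the following zero-order Sugeno-style rule base, in which the emotion’s valence and an estimate of how well mirroring fits the interaction goal (`goal_fit`, a hypothetical input) produce a mirroring score. The membership functions, rule outputs, and 0.5 decision threshold are assumptions for illustration, not the cited implementations.

```python
def clip01(x):
    return max(0.0, min(1.0, x))

# Hypothetical membership functions: valence in [-1, 1], goal_fit in [0, 1].
def positive(v):
    return clip01(v / 0.5)

def negative(v):
    return clip01(-v / 0.5)

def neutral(v):
    return clip01(1 - abs(v) / 0.5)

def high(c):
    return clip01((c - 0.3) / 0.4)

def low(c):
    return 1.0 - high(c)

def appraise_mirroring(valence, goal_fit):
    """Each rule fires with strength min(antecedents) and proposes a crisp
    mirroring score; the output is the strength-weighted average."""
    rules = [
        (min(positive(valence), high(goal_fit)), 1.0),  # positive and helpful: mirror
        (min(positive(valence), low(goal_fit)), 0.4),   # positive but off-goal: weak
        (neutral(valence), 0.3),                        # neutral: little to mirror
        (negative(valence), 0.0),                       # negative: do not mirror
    ]
    total = sum(strength for strength, _ in rules)
    score = sum(strength * out for strength, out in rules) / total if total else 0.0
    return score, score >= 0.5
```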

Previous research suggests two logical elements that may help appraise the appropriateness of emotion mirroring in a given situation. First, the appraisal should consider the valence of the emotion to be mirrored. Emotional contagion and mirroring in marketing interactions are likely to create a spiraling influence on subsequent interactions (Liu et al. 2019). This has led well-trained frontline employees to commonly refrain from transmitting negative emotions to consumers. It is reasonable to assume that AI agents should likewise mirror positive rather than negative emotions back to consumers. This focus on positive emotion mimicry is supported by the social regulation function of emotional mimicry, which serves an affiliative purpose (Hess and Fischer 2013). Negative emotions, especially externally directed ones such as anger or frustration, often signal the opposite of an affiliative intent. They should therefore have a low likelihood of replication by an interacting party.

Second, the appraisal should consider the expressiveness of a consumer’s emotional state. Individuals are heterogeneous in how they express their emotions, and they follow different feeling rules (Hochschild 1979). One consumer may “wear her heart on her sleeve”, while another may look stoic and conceal her emotional responses most of the time. Recent research shows that in a social exchange involving emotional information, individuals who share similar emotional expressiveness are more satisfied with the interaction than individuals who are mismatched (Kidwell et al. 2020). Consequently, although both of these consumers may be experiencing the same emotion (e.g., happiness), the proper reaction to each should differ. Explicit emotional mimicry is more appropriate for the more expressive consumer, whereas the less expressive consumer may not want to see her emotion reflected back to her explicitly.
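The two appraisal elements discussed above, valence and expressiveness, can be combined in a simple rule: never mirror negative emotions, and scale the displayed intensity of positive emotions to the consumer’s own expressiveness. The expressiveness score and the 0.5 cutoff between explicit and subtle display are hypothetical.

```python
def plan_mirroring(emotion, valence, intensity, expressiveness):
    """Return (emotion, display_intensity, style), or None if the agent should
    not mirror. `valence` and `intensity` come from an upstream recognizer;
    `expressiveness` in [0, 1] is an assumed per-consumer estimate, e.g.,
    averaged over past interactions."""
    if valence <= 0:
        return None  # do not reflect negative emotions back to the consumer
    # Match the consumer's expressiveness rather than the raw detected intensity.
    display = round(intensity * expressiveness, 2)
    style = "explicit" if expressiveness >= 0.5 else "subtle"
    return emotion, display, style
```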

Expression of synthetic emotions

After the appraisal routine determines that it is appropriate for the AI agent to mimic the consumer’s emotion, the next step involves actually expressing that emotion. Such emotions expressed by an artificial agent are called synthetic emotions or artificial emotions, which are a key component of truly social AI agents (Hortensius et al. 2018). To date, research in this area has focused primarily on the six basic emotions of joy, anger, surprise, sadness, fear, and disgust (Paiva et al. 2015). Other discrete emotions have been considered but are far less understood and implemented. This limited set is a major technological shortcoming for marketing interactions, in which the emotions involved in an interaction episode tend to be much richer and more dynamic than these basic emotions.

Mechanisms for expressing synthetic emotions can be classified into three broad categories: verbal, facial, and bodily expressions. Within the verbal category, emotion expression can be accomplished through word choices, paralanguage features such as emoticons, and vocal variations. Word choices can draw from years of research on emotional appeals in marketing and advertising as well as more recent advances in textual sentiment analysis. Paralanguage features have been examined more recently but can be equally powerful in expressing emotions. Luangrath et al. (2017) developed a comprehensive system of paralinguistic features and showed that such features can fulfill rather complex functions, such as indicating motion and touch. Their typology, combined with the psychology literature on human emotion expression, provides a key starting point for investigating how paralinguistic features can be used by an AI agent to mimic a consumer’s emotional state. Finally, vocal features such as loudness, pitch, and steadiness can also be leveraged to convey emotions in an AI agent (Bänziger et al. 2014).

The second broad category, facial expression, is generated through a combination of movements and positions of different facial muscles. Following the emotions literature, facial expression in virtual agents has often been constructed based on either a discrete or a dimensional model of emotions (Ochs et al. 2015). Discrete approaches build specific facial expressions for different discrete emotions, often based on the Facial Action Coding System (FACS; Ekman et al. 2002). This system consists of many “action units” involving different facial muscles such as the frontalis, the buccinator, and the mentalis. Virtual agents use various combinations of these action units to express specific emotions. Dimensional models of facial emotion expression define emotions along dimensions such as pleasure, arousal, and dominance and map changes in facial expressions along these dimensions (e.g., Zhang et al. 2007). Such models allow a larger number of subtler emotions to be expressed.
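To illustrate why dimensional models allow subtler expressions than a fixed set of discrete emotions, the sketch below treats an emotion as a point in pleasure-arousal-dominance (PAD) space and drives a face from that point. It is a hypothetical illustration only: the anchor coordinates and the facial parameters (`smile`, `frown`, `eye_openness`, `head_tilt_up`) are invented for clarity and are not calibrated values from Zhang et al. (2007) or from any animation toolkit.

```python
# Hypothetical PAD anchors in [-1, 1]; illustrative values, not a published calibration.
NEUTRAL = (0.0, 0.0, 0.0)
ANCHORS = {
    "joy": (0.8, 0.5, 0.4),
    "sadness": (-0.6, -0.4, -0.3),
    "anger": (-0.5, 0.6, 0.3),
}


def blend(a, b, t):
    """Linearly interpolate between two PAD states (t = 0 gives a, t = 1 gives b)."""
    return tuple((1 - t) * x + t * y for x, y in zip(a, b))


def face_params(state):
    """Map a PAD state to hypothetical facial-rig parameters in [0, 1]."""
    p, a, d = state
    return {
        "smile": max(0.0, p),           # pleasure raises the lip corners
        "frown": max(0.0, -p),          # displeasure lowers them
        "eye_openness": 0.5 + 0.5 * a,  # arousal widens the eyes
        "head_tilt_up": 0.5 + 0.5 * d,  # dominance lifts the chin
    }


# A "mild joy" state halfway between neutral and joy: a subtler expression
# that a six-emotion discrete model has no dedicated label for.
mild_joy = face_params(blend(NEUTRAL, ANCHORS["joy"], 0.5))
print(mild_joy)
```

Because the face is driven by continuous coordinates rather than a lookup of six prototypes, any intermediate state (for instance, a quarter of the way from neutral to joy) yields its own, proportionally weaker expression.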

Finally, an embodied AI agent can express emotions through bodily postures and movements. For example, the openness of the body can signal emotional potency, while the stretching of the arms and the distance between the heels can express emotional valence (Kleinsmith and Bianchi-Berthouze 2007). A systematic scheme for coding bodily expressions, the Body Action Coding System, has been developed over the last decade (Huis in ’t Veld et al. 2014). Echoing the FACS, the Body Action Coding System links the actions of different bodily muscle groups to emotions. Currently, this system is more developed for negative emotions such as fear and anger than for positive emotions. To become more useful for marketing interactions, the approach needs to be extended to the expression of positive emotions.

The relationship among artificial empathy components

Having articulated the structure and implementation of artificial empathy in AI marketing applications, we briefly comment on the relationships among the three components of artificial empathy. First, perspective-taking, empathic concern, and emotional contagion are not sequential in nature. We pointed out earlier that the computational implementation of each component may vary in difficulty and follow a developmental trajectory (Asada 2015). However, from a marketing interaction standpoint, one element of artificial empathy does not require the presence of another to work. For example, an AI agent taking the perspective of a consumer does not need to mimic the consumer’s emotions. Conversely, an AI agent can detect and mimic a consumer’s emotions without fully understanding the consumer’s innate needs and broader goals that may have triggered those emotions. Second, although not sequential, the three artificial empathy components can work synergistically, particularly the cognitive and affective components. In the example above, being able to take the consumer’s perspective and understand his/her innate needs and goals may help the AI agent better detect the consumer’s emotional state and subsequently identify the optimal response. By integrating the hidden reward function discovered via perspective-taking into emotion detection and appraisal, an AI agent can anticipate a consumer’s emotional reaction (e.g., frustration) before the consumer explicitly manifests it. Finally, the relative need for each artificial empathy component may vary across situations. Some interactions with an AI agent, such as ordinary information acquisition or recommendation, may not need the affective components of artificial empathy as much as other interactions, such as addressing customer service problems. The specific emotions involved in a situation (e.g., anger vs. joy) may also dictate whether emotional mimicry or empathic concern is more appropriate. Overall, the three components of artificial empathy play distinct but complementary roles in creating the overall level of empathy in an AI agent.
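The anticipation mechanism described above can be sketched as follows. This is a hypothetical illustration under strong simplifying assumptions: the consumer's hidden reward function is taken to be an already-inferred linear weighting over interaction features (in practice it might be estimated, for example, with inverse reinforcement learning), and `inferred_reward`, `frustration_risk`, the aspiration level, and the `sensitivity` parameter are invented names and values.

```python
import math


def inferred_reward(weights, state):
    """Linear reward: weighted sum of the state features the consumer cares about.

    `weights` stands in for the hidden reward function inferred through
    perspective-taking; here it is simply given.
    """
    return sum(w * state.get(feature, 0.0) for feature, w in weights.items())


def frustration_risk(weights, trajectory, aspiration, sensitivity=4.0):
    """Estimate frustration risk in (0, 1) before the consumer expresses it.

    Risk rises (logistically) with the average shortfall between the
    consumer's aspiration level and the reward of recent interaction states.
    """
    if not trajectory:
        raise ValueError("trajectory must contain at least one state")
    shortfalls = [aspiration - inferred_reward(weights, s) for s in trajectory]
    mean_shortfall = sum(shortfalls) / len(shortfalls)
    return 1.0 / (1.0 + math.exp(-sensitivity * mean_shortfall))


# Hypothetical return request: the consumer mainly cares about progress,
# but the last two turns barely moved the request forward.
weights = {"progress": 1.0}
stalled = [{"progress": 0.1}, {"progress": 0.1}]
print(round(frustration_risk(weights, stalled, aspiration=0.8), 2))  # 0.94
```

A risk score above some chosen level could then trigger empathic concern (e.g., acknowledging the delay) even though no explicit frustration cue has yet been detected.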

How does artificial empathy create value?

When implemented in the right situations and toward the right individuals, artificial empathy in AI marketing applications can create value for customers, such as better need fulfillment, higher relationship satisfaction, and improved well-being, which in turn increases value for the firm via higher trust in and commitment to the firm, customer loyalty, and customer equity. We theorize that artificial empathy brings about these outcomes through enriched affective and social customer experience. In this section, we focus on how artificial empathy can enhance customer experience; later in the paper, we explore when artificial empathy actually creates value. We build these theoretical discussions on artificial empathy as a higher-order construct rather than on its three individual dimensions, because the dimensions jointly create the sense of empathy, as discussed earlier.

Customer experience refers to a consumer’s subjective responses to interactions with a firm and firm-related stimuli (Brakus et al. 2009; Lemon and Verhoef 2016). Since its emergence in the late 1990s, customer experience has served as a central construct for characterizing firm-customer interactions. Existing research shows that customer experience represents a key value generating mechanism for both customers and firms (Gentile et al. 2007; McColl-Kennedy et al. 2019). It leads to important downstream consequences such as customer loyalty, commitment, retention, and word-of-mouth (Brakus et al. 2009; Lemke et al. 2011).

Customer experience encompasses an interrelated set of physical/sensorial, cognitive, affective, behavioral, and social responses (De Keyser et al. 2020). As our summary of current AI marketing applications in Web Appendix 1 shows, most existing applications and associated research to date have focused on influencing the cognitive and behavioral dimensions of customer experience, such as using AI algorithms to recommend products in order to reduce information overload and increase purchase likelihood. Although the cognitive value provided by existing AI tools is clear (Hoyer et al. 2020), it often comes at the expense of other aspects of the customer experience, such as emotional and social interactions (Puntoni et al. 2021). This creates a significant gap between AI-based and human-based marketing interactions, impeding businesses’ ability to fully incorporate AI into a cohesive brand experience. We argue below that artificial empathy can help bridge this gap by going beyond the cognitive and behavioral components to also improve the affective and social dimensions of AI-enabled customer experience.

Artificial empathy and affective customer experience

The affective dimension of customer experience refers to the experience of moods and emotions in response to interactions with a firm (Brakus et al. 2009). Previous research shows that affective customer experience plays a critical role in customer and firm value outcomes such as customer satisfaction, well-being, willingness to pay, and loyalty (e.g., McColl-Kennedy et al. 2017; Terblanche 2018). Compared with human interactions, current AI interactions frequently fall short on affective customer experience. The perception of AI agents as cold, unfeeling machines and the prevalent implementation of AI agents with pre-programmed choices and responses significantly handicap the ability of AI-enabled marketing interactions to create the same high-quality affective customer experience possible with human agents. Yet the favorable responses users exhibit toward emotionally responsive artificial agents (Hortensius et al. 2018) point to the need for future AI agents to deliver not only intellectual value but also affective benefits. This need for better affective customer experience through AI is echoed by recent research showing that hotel AI tools addressing both the cognitive and the affective needs of customers had significant positive effects on customer loyalty (Prentice et al. 2020).

We propose that the gap in affective customer experience quality between AI- and human-based marketing interactions can be mitigated through artificial empathy, by amplifying positive emotions and regulating negative emotions. Previous research on interpersonal emotional dynamics suggests that the emotions of interacting parties can affect each other in a cyclic and reciprocal fashion (Hareli and Rafaeli 2008). The key mechanisms for creating such an emotional cycle are shared emotional understanding and emotional contagion (Butler 2011). Although artificial empathy involves an AI agent instead of a human employee, preliminary research suggests that people do sense, “catch”, and adapt to emotional expressions by artificial agents (Hortensius et al. 2018). Hence, when an AI agent mimics the positive emotion of a consumer, it can further enhance the consumer’s mood and create a positive emotional cycle.

What happens in negatively valenced situations such as addressing a service failure? Emotional mimicry may not be suitable here due to the threat of a downward spiral. Instead, an empathic AI agent can leverage the perspective-taking and empathic concern components of artificial empathy to alleviate negative emotions. Previous research suggests that individuals faced with negative emotions often engage in one of two emotion regulation strategies: reappraisal, which involves re-evaluating a given situation to reduce or shift the negative emotion; and suppression, which simply inhibits emotion-expressive behaviors (Gross 1998). The specific emotional regulation strategy used has distinct affective consequences, with reappraisal being more helpful than suppression at decreasing negative emotional experiences and promoting individual well-being (Gross and John 2003; Haga et al. 2009). An empathic AI agent can employ perspective-taking to understand the situation and leverage empathic concern to recognize the consumer’s affective state and provide emotional support to the consumer, resembling what an effective human employee may do in such a situation. Instead of suppressing negative emotions, an empathic AI agent encourages consumers to express their emotions through active listening and acknowledgement of such emotions. These actions can shift the consumers’ perspective and facilitate the reappraisal of the situation (Groth and Grandey 2012). Supporting this view, previous research shows that empathy serves as an important interpersonal emotion regulation mechanism (Zaki 2020). Taken together, the ability of artificial empathy to amplify positive emotions and regulate negative emotions should enhance the quality of AI-enabled affective customer experience, bringing it closer to a human-based interaction.


P1 Artificial empathy will moderate the effect of agent type on affective customer experience quality such that the gap in affective customer experience quality between AI-enabled and human-based marketing interactions will be smaller at a higher level of artificial empathy.

Artificial empathy and social customer experience

The social dimension of customer experience refers to consumers’ relational and social identity-related responses to interactions with a firm (Gentile et al. 2007), which carries significant implications for customer engagement, loyalty, and general well-being (Puntoni et al. 2021; van Doorn et al. 2017). Social experience is an essential component of human-AI interaction (van Doorn et al. 2017). While previous research has considered social AI experience as particularly valuable when the alternative is no interaction at all (Puntoni et al. 2021), we recognize the many situations where AI agents are deployed in place of human-to-human interactions, such as in sales and customer service settings. Therefore, the competence of AI agents in creating a good social customer experience still needs to be benchmarked against human-based social interactions. Badly implemented AI-enabled social experience can result in significant customer alienation rather than achieving positive outcomes (Puntoni et al. 2021). We theorize that artificial empathy can bring AI-enabled social customer experience closer to that with human agents in two ways: (1) activating a stronger social customer experience through increased social presence, and (2) enhancing the quality of social customer experience through rapport-building.

Previous research suggests that the dimensions of customer experience may be activated to different degrees (from weak to strong) in different situations (De Keyser et al. 2020). Whereas interaction with another human being is treated as social by default, not all interactions with AI are construed as social interactions. For example, using an AI agent to retrieve hotel booking information may be perceived simply as extracting information from a computer program, which involves a minimal level of social response. This is a marked departure from completing the same task via a human agent. We argue that artificial empathy can enhance the feeling of social presence in AI interaction, which facilitates the activation of social responses and makes the experience more closely resemble that with a human agent.

Social presence refers to “the salience of the interactants and their interpersonal relationship during a mediated conversation” (Oh et al. 2018, p. 2). Existing studies show that empathic behaviors in a virtual agent can increase the user’s feeling of being socially present with the agent (e.g., Adam et al. 2021; Guadagno et al. 2011). As artificial empathy focuses on adapting to and echoing consumers’ needs and mood states, its adaptive nature creates a sense of synchrony and immediacy that is key to social presence (Oh et al. 2018; Short et al. 1976). Being emotionally expressive and simulating human emotions in an interaction can also increase the perceived social presence of the interaction (Bailenson and Yee 2005; Pereira et al. 2014). Taken together, the enhanced sense of social presence due to artificial empathy should increase the likelihood that consumers treat their interaction with AI as a social interaction and hence exhibit social responses at a level more comparable to a human interaction.


P2 Artificial empathy will moderate the effect of agent type on social customer experience activation such that the gap in the activation of social customer experience between AI-enabled and human-based marketing interactions will be smaller at a higher level of artificial empathy.

Besides activating a stronger social experience through increased social presence, artificial empathy can also enhance the quality of the social experience through rapport building. The quality of current social experiences with AI agents lags behind that of experiences with human agents. Artificial empathy can help close this gap by promoting rapport between interacting parties. Rapport is a quality of dyadic social interactions characterized by harmony, accord, and affinity (Bernieri et al. 1996). Within the context of interpersonal communications, empathy has been shown to increase rapport (Bove 2019; Norfolk et al. 2007). For example, empathic behaviors play an important role in rapport building during both retail and business-to-business sales encounters (Gremler and Gwinner 2008; Kaski et al. 2018). Although rapport is typically defined between two humans, it can exist between a human and a technology-based agent (Gremler and Gwinner 2000). Emerging research shows that empathic concern and emotional contagion can effectively establish intimacy and genuine connection between individuals and AI agents (Johanson et al. 2020). Within a marketing context, a recent study by Adam et al. (2021) finds that integrating empathic traits into an AI chatbot increases customers’ compliance with the chatbot’s request for service feedback. This ability of artificial empathy to increase rapport in customer-AI interactions is not trivial, as rapport has been shown to bring about highly beneficial outcomes such as satisfaction, word-of-mouth, and loyalty (Gremler and Gwinner 2000).


P3 Artificial empathy will moderate the effect of agent type on social customer experience quality such that the gap in the quality of social customer experience between AI-enabled and human-based marketing interactions will be smaller at a higher level of artificial empathy.

The effect of artificial empathy on customer experience: A pilot study

To test whether artificial empathy can indeed bridge the gap between AI- and human-based customer experiences as suggested in P1 to P3, we conducted an online pilot study featuring a 2 (high vs. low empathy) × 2 (human vs. AI agent) full-factorial design. A total of 525 US participants (median age = 53; 62.67% female) recruited from the Qualtrics online panel were randomly assigned to one of the four conditions. To ensure that participants understood the identity of an AI agent, all participants were prescreened to have interacted with a service chatbot in the past.

To manipulate empathy level and agent identity, we developed and pretested four versions of a dialogue between a consumer and a customer service agent involving a product return request (see Web Appendix 2). Compared with the low-empathy condition, the high-empathy dialogue incorporated all three empathy components, with the service agent more proactively identifying the consumer’s needs, responding to the consumer’s frustration, and generally acting more emotionally in sync with the consumer. To manipulate agent identity, the dialogue was introduced as a consumer’s chat with either a customer service employee (human) or a customer service chatbot. At the beginning of the dialogue, the service agent also introduced itself as either a customer service representative or a customer service chatbot.

All participants first read the service dialogue for their assigned condition. They then assessed the agent’s empathy level and rated the consumer’s affective and social customer experience. We adapted existing scales to measure the three components of empathy (Davis 1983; McBane 1995; Stiff et al. 1988; Wieseke et al. 2012), affective customer experience quality (Brakus et al. 2009; Verleye 2015), social customer experience activation (Kaptein et al. 2011), and social customer experience quality (Gefen and Straub 2003) (see Web Appendix 3 for the items and measurement model assessment). We also asked “how much empathy did the customer service agent exhibit during the service encounter?” to assess the overall perception of empathy manifested by the agent. Finally, participants rated how human the customer service agent appeared as a manipulation check for agent identity and answered several demographic questions.

Results

To check the success of the empathy manipulation, we first averaged participants’ responses to items within each of the three empathy dimensions to create the agent’s perceived perspective-taking (α = .91), empathic concern (α = .86), and emotional contagion scores (α = .84). We ran a MANCOVA with these scores as dependent variables, and empathy condition, agent condition, and their interaction as independent variables. Age and gender were included as controls. Results showed a significant main effect of empathy condition (Wilks’ Λ = .92; F3,517 = 12.30, p < .001). Table 2(a) shows the mean perspective-taking, empathic concern, and emotional contagion scores under each condition. For both human and AI agents, the average scores for all three empathy components were significantly higher in the high-empathy condition than in the low-empathy condition, suggesting the successful manipulation of empathy. Echoing these findings, the single-item overall empathy measure also showed a significant difference between the two empathy conditions for both human and AI agents (see Table 2(b)). For agent identity manipulation, an ANCOVA with perceived humanness of the agent as the dependent variable showed significant effects of agent condition (F1,519 = 41.88, p < .001), empathy condition (F1,519 = 23.97, p < .001), and their two-way interaction (F1,519 = 8.44, p = .004). Supporting the successful manipulation of agent identity, the human agent was perceived as significantly more human than the chatbot under both low (M = 6.23 vs. 4.52, F1,519 = 106.30, p < .001) and high empathy (M = 6.41 vs. 5.37, F1,519 = 41.88, p < .001) scenarios (see Table 2(c)). This gap in humanness perception was much smaller under the high-empathy condition than under the low-empathy condition, providing preliminary evidence that empathy can bring AI closer to humans.

We now turn to the effect of empathy on customer experience. We first derived each participant’s affective experience quality (α = .85), social experience activation (α = .91), and social experience quality ratings (α = .89) by averaging the items for each construct. To test P1 to P3, we conducted a MANCOVA with these three customer experience ratings as the dependent variables and empathy condition, agent identity, and their interaction as the independent variables. Again, age and gender were included as covariates. The analysis showed a significant main effect of empathy condition (Wilks’ Λ = .93, F3,517 = 12.02, p < .001) and a significant interaction between empathy and agent conditions (Wilks’ Λ = .97, F3,517 = 5.88, p < .001).

As shown in Fig. 2, under the low-empathy condition, affective customer experience quality with the human agent was rated significantly higher than that with the AI agent (M = 6.14 vs. 5.65, F1,519 = 16.12, p < .001). In contrast, when empathy was high, the difference in affective experience quality between the human and AI agents was no longer significant (M = 6.10 vs. 5.96, F1,519 = 1.49, p = .22). These results provide support for P1. Similar results were found for social experience activation and quality. Participants under the low-empathy condition considered the customer experience with the human agent more social than that with the AI chatbot (social activation: M = 5.44 vs. 4.62, F1,519 = 19.72, p < .001). They also rated the social experience with the human agent as higher quality than that with the chatbot (social quality: M = 5.68 vs. 4.60, F1,519 = 43.83, p < .001). These differences disappeared under the high-empathy condition (social activation: M = 5.55 vs. 5.33, F1,519 = 1.81, p = .18; social quality: M = 5.74 vs. 5.59, F1,519 = .97, p = .33). These findings provide support for P2 and P3.

Taken together, increasing agent empathy in our study brought about more comparable affective and social customer experiences between AI-enabled and human-based marketing interactions. Further contrasts between high- and low-empathy conditions within each agent type suggest that empathy significantly improved all three customer experience outcomes for the AI agent but not for the human agent. This improvement was largest for social customer experience quality (M = 4.60 vs. 5.59 for low- vs. high-empathy AI, F = 33.19, p < .001), pointing to potentially high sensitivity to artificial empathy from a social perspective.
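The narrowing of the human-AI gap can be read directly from the cell means reported above. The short script below only reproduces that arithmetic (the human-minus-AI mean within each empathy condition, and the high-minus-low empathy gain within each agent type); it is a descriptive illustration, not a re-analysis, because the means alone carry no variance information and cannot replace the reported MANCOVA and contrast tests. The dictionary layout and the function names are our own.

```python
# Cell means reported in the pilot study, keyed by (empathy condition, agent).
MEANS = {
    "affective experience quality": {
        ("low", "human"): 6.14, ("low", "ai"): 5.65,
        ("high", "human"): 6.10, ("high", "ai"): 5.96,
    },
    "social experience activation": {
        ("low", "human"): 5.44, ("low", "ai"): 4.62,
        ("high", "human"): 5.55, ("high", "ai"): 5.33,
    },
    "social experience quality": {
        ("low", "human"): 5.68, ("low", "ai"): 4.60,
        ("high", "human"): 5.74, ("high", "ai"): 5.59,
    },
}


def human_ai_gap(cells, empathy):
    """Human-agent mean minus AI-agent mean within one empathy condition."""
    return cells[(empathy, "human")] - cells[(empathy, "ai")]


def empathy_gain(cells, agent):
    """High-empathy mean minus low-empathy mean within one agent type."""
    return cells[("high", agent)] - cells[("low", agent)]


for outcome, cells in MEANS.items():
    low_gap, high_gap = human_ai_gap(cells, "low"), human_ai_gap(cells, "high")
    print(f"{outcome}: gap {low_gap:.2f} (low empathy) -> {high_gap:.2f} (high empathy); "
          f"AI gain {empathy_gain(cells, 'ai'):.2f}, "
          f"human gain {empathy_gain(cells, 'human'):.2f}")
```

For social experience quality, for instance, the human-AI gap shrinks from 1.08 to 0.15 scale points, and the AI agent's gain of 0.99 is the largest within-agent change, in line with the contrasts reported in the text.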

When does artificial empathy create value?

Our pilot study supports the positive impact of artificial empathy on affective and social customer experience. But the discussion of artificial empathy is incomplete without considering when artificial empathy is beneficial vs. unnecessary or even detrimental. Logically, the ability of artificial empathy to create value through improved customer experience rests on two conditions: (1) artificial empathy indeed improves customer experience, and (2) such improved customer experience translates into downstream benefits. When either of these conditions is not met, the ability of artificial empathy to create value will be undermined. In this section, we discuss the contingency factors that may reinforce or interfere with each of these conditions for affective and social customer experience, respectively.

Affective customer experience

From artificial empathy to affective customer experience

As an empathic AI agent relies on consumers’ emotional manifestations to determine the proper course of action, the success of these mechanisms depends on the availability of quality emotional signals in the environment. Without such signals, the AI agent may misread the emotional needs of a situation and respond inappropriately (Purdy et al. 2019). Artificial empathy built on such wrong information can backfire and exacerbate consumers’ feeling of being misunderstood (Puntoni et al. 2021), leading to a more negative customer experience (Paiva et al. 2015).

Several factors can affect the availability of quality emotional signals in an interaction. First, previous research suggests a potential advantage for consumers with high emotional intelligence, which refers to “a person’s ability to skillfully use emotional information to achieve a desired consumer outcome” (Kidwell et al. 2008, p. 154). Because these consumers can supply more precise information about their emotional state and tend to be more expressive in their emotional coping (Tsarenko and Strizhakova 2013), they can offer the AI agent better-quality affective signals and improve empathic accuracy (Cuff et al. 2016). Second, the temporal proximity of the consumer and the AI agent during an interaction (i.e., synchronous vs. asynchronous interactions) also matters. Individuals’ emotional states are often transient, moving from one state to another as circumstances change. Capturing emotions in real time, when the consumer and the AI agent are simultaneously present, can increase the accuracy of affective signals and of the resulting empathic actions in the interaction.

Third, the quality of affective signals in an interaction also depends on communication modality. Different modalities convey different types of information: the text modality carries only verbal content (i.e., what is said), voice conveys both verbal content and vocal cues, and the visual modality offers nonverbal information such as facial expressions. Previous research suggests that vocal cues such as pitch and sharpness are more reliable signals of true feelings than visual nonverbal cues such as facial expressions (Kraus 2017), because the voice is less controllable and emotions are harder to mask in one’s voice (Ekman and Friesen 1969). Hence, voice-based AI interactions can potentially yield more accurate portrayals of consumers’ true emotional state.

Finally, situational complexity can affect the availability and quality of emotional signals. When a situation is straightforward (e.g., a one-on-one routine information retrieval), the interaction tends to occur quickly with minimal emotional signals, leaving little room for artificial empathy to enhance it. At the other extreme, in a highly complex situation (e.g., a high-stakes sales interaction involving not only the consumer but also her family), emotional signals in the environment may be abundant but highly ambiguous and difficult to disentangle (Pelaez et al. 2013). Therefore, artificial empathy’s ability to improve affective customer experience may follow an inverted-U-shaped trajectory as situational complexity increases, being most effective in situations of moderate complexity.


P4 The ability of artificial empathy to bridge the human-AI gap in affective customer experience is contingent on the availability of high-quality emotional signals. Quality emotional signals are more likely when the consumer possesses high emotional intelligence, when the interaction is synchronous and voice-based, and when the situation is moderately complex.
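One way to make P4 concrete is a composite signal-quality index. The sketch below is entirely hypothetical: the function names `complexity_fit` and `signal_quality`, the 0 to 1 scales, the modality weights, and the penalty for asynchronous interaction are assumptions chosen only to encode the directions stated in P4 (higher emotional intelligence, synchronous and voice-based interaction, and moderate complexity all raise expected signal quality). The inverted-U term is modeled as a simple quadratic that peaks at moderate complexity.

```python
# Hypothetical modality weights: only the ordering (voice highest) follows the text;
# the relative weights of text and visual are arbitrary placeholders.
MODALITY_WEIGHT = {"text": 0.5, "visual": 0.7, "voice": 1.0}


def complexity_fit(complexity: float) -> float:
    """Inverted-U term on a 0-1 complexity scale: 0 at both extremes, 1 at 0.5."""
    c = min(max(complexity, 0.0), 1.0)
    return 4.0 * c * (1.0 - c)


def signal_quality(emotional_intelligence: float, synchronous: bool,
                   modality: str, complexity: float) -> float:
    """Hypothetical 0-1 index of how usable the consumer's emotional signals are."""
    ei = min(max(emotional_intelligence, 0.0), 1.0)
    ei_term = 0.5 + 0.5 * ei                 # low EI dampens but does not erase signals
    sync_term = 1.0 if synchronous else 0.6  # asynchronous signals may be stale
    return ei_term * sync_term * MODALITY_WEIGHT[modality] * complexity_fit(complexity)


best = signal_quality(0.9, True, "voice", 0.5)
weak = signal_quality(0.9, False, "text", 0.95)
print(f"{best:.2f} vs {weak:.2f}")
```

The multiplicative form is a design choice: it lets a very simple or very complex situation drive the index toward zero regardless of the other factors, mirroring the claim that such situations leave little room for artificial empathy.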

From improved affective customer experience to value creation

When does improved affective customer experience due to artificial empathy add value? This depends on the necessity and compatibility of affective customer experience for a marketing interaction. From the consumers’ perspective, individuals have varying levels of need for affect, which refers to a chronic tendency to approach (vs. avoid) emotional experiences (Maio and Esses 2001). Unlike emotional intelligence, need for affect reflects a motivation toward emotional experiences rather than an ability to handle them. Individuals with high need for affect are open to strong emotions and tend to seek out affective experiences (Maio and Esses 2001). In contrast, individuals with low need for affect prefer less extreme emotional responses and are less likely to engage with emotional stimuli. For such individuals, affective experience is less important and in extreme cases may even be unwelcome. The value of artificial empathy through affective customer experience is therefore likely to be limited for these low need-for-affect individuals.


P5 The ability of artificial empathy to create value by bridging the human-AI affective experience gap depends on consumers’ need for affect. Artificial empathy benefits high need-for-affect consumers but is unnecessary or even detrimental to low need-for-affect consumers.

Besides individual differences, the task context can also influence the need for and value of affective customer experience. Previous research differentiates between more instrumental, goal-oriented contexts and those where the experience can be an end in itself (Fishbach and Choi 2012). In a predominantly experiential context, the enhanced affective customer experience through artificial empathy can directly add value to the interaction by making the experience more pleasant (Jantzen et al. 2012). But in an instrumental, goal-oriented context, the value of improved affective experience is likely secondary to the need for goal completion. Chen et al. (2021) investigated service failures by a human agent vs. a machine and found that individuals were more forgiving of a human agent than of a machine. Furthermore, while empathy from a human employee reduced consumers’ anger and other negative responses in such a situation, empathy from the machine did not. These findings suggest that in an instrumental context, the value of improved affective customer experience due to artificial empathy is contingent on the AI agent’s functional competence in helping consumers to achieve their goal (e.g., recommending the right product to meet a consumer’s need).


P6 The ability of artificial empathy to create value by bridging the human-AI affective experience gap is higher in an experiential context than in an instrumental, goal-oriented context.


P7 In an instrumental, goal-oriented context, the ability of artificial empathy to create value by bridging the human-AI affective experience gap depends on the AI’s functional competence.
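Propositions P5 to P7 can be summarized as a hypothetical scoring rule for the value that an empathy-driven improvement in affective experience adds. The sketch assumes a need-for-affect score centered at zero (negative for consumers who avoid emotional experiences), a functional-competence score between 0 and 1, and a context label; the function name `affective_empathy_value`, the scales, and the 0.5 discount for instrumental contexts are illustrative assumptions, not estimates from any study.

```python
def affective_empathy_value(experience_gain: float, need_for_affect: float,
                            context: str, functional_competence: float = 1.0) -> float:
    """Hypothetical value of an empathy-driven gain in affective experience.

    need_for_affect: -1 (avoids emotion) to +1 (seeks emotion); a negative
        score turns the gain into a cost, reflecting P5.
    context: "experiential" or "instrumental", reflecting P6.
    functional_competence: 0 to 1; in instrumental contexts the value is
        scaled by the agent's competence, reflecting P7.
    """
    base = experience_gain * need_for_affect
    if context == "experiential":
        return base
    if context == "instrumental":
        competence = min(max(functional_competence, 0.0), 1.0)
        # Affective value is secondary to goal completion (illustrative 0.5 discount).
        return 0.5 * competence * base
    raise ValueError(f"unknown context: {context!r}")
```

Under these assumptions, the same affective gain is worth less in a product-return chat than in an experiential interaction, and it is worth nothing if the agent cannot actually resolve the consumer's problem.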

Social customer experience

From artificial empathy to social customer experience

Artificial empathy creates a more human-like social customer experience by establishing the AI agent’s social presence and building rapport between the AI agent and the consumer. The success of these social mechanisms rests on a consumer’s willingness to accept AI agents as social partners. Humans often show biases against machines and algorithms (e.g., Chen et al. 2021; Thomas and Fowler 2021), even when algorithms produce objectively superior outcomes. Schmitt (2020) attributes this bias to speciesism, the view that humans are a superior species, which leads to discrimination against non-human species. Individuals with a high level of speciesism may categorically reject the notion of AI agents as equal social partners. For these individuals, artificial empathy can be perceived as an unwelcome imitation of humans that threatens their self-identity (Mende et al. 2019), particularly in consumption contexts that are central to their identity (Leung et al. 2018). In this sense, greater empathy not only fails to improve the social experience but may lead these consumers to reject AI interactions altogether in favor of a human agent. If a human agent is unavailable, a non-empathic AI that simply gets the job done may work better for them.

P8 The ability of artificial empathy to bridge the human-AI gap in social customer experience is contingent on consumers’ willingness to accept AI as social partners. Artificial empathy can have a detrimental effect for consumers with a high level of speciesism.

Related to the general willingness to accept AI as social partners, artificial empathy must also overcome the hurdle of authenticity to have a meaningful impact on social customer experience. By definition, artificial empathy is the simulation of empathy, coded into AI agents as computational models (Asada 2015). Compared with “real” empathy manifested by humans, it may be perceived as inauthentic, which can diminish the quality of the social interaction, much as a phony interaction partner would. To counter this tendency, the quality of artificial empathy implementation matters, as proficiency and accuracy are considered key components of authenticity (Nunes et al. 2021). Beyond implementation quality, however, the believability of artificial empathy and the general social nature of an AI agent also depend on the agent’s perceived autonomy and agency (Nass and Moon 2000). In a comprehensive discussion of agency in AI, Legaspi et al. (2019) show that AI needs to be adaptive, responsive, and flexible. In this sense, many chatbots in use today that rely on limited, pre-programmed responses (e.g., “select one of the responses below”) likely signal low agency to users. Pairing artificial empathy with such a rigid, mechanical chatbot interface can trigger the perception of inauthenticity and may harm the social experience.

P9 The ability of artificial empathy to bridge the human-AI gap in social customer experience is contingent on the perceived authenticity of the experience. Authenticity is affected by both the quality of empathy implementation and the perceived autonomy and agency of the AI agent.

Lastly, artificial empathy’s ability to bridge the AI-human gap in social customer experience depends on the compatibility between artificial empathy and the anthropomorphic design of an AI agent (Crolic et al. 2022; Mende et al. 2019). Since empathy is an inherently human trait, artificial empathy may be seen as more congruent with a human-like AI agent. At the same time, too much human-likeness combined with artificial empathy can trigger the uncanny valley effect: increasing a robot’s human-likeness initially elicits more positive reactions, but once the robot becomes too human-like, it causes feelings of eeriness (Mori 2012). This suggests a potentially non-linear effect of anthropomorphic design on the ability of artificial empathy to improve social customer experience. Artificial empathy is likely most suitable in a moderately anthropomorphic AI agent.

P10 The ability of artificial empathy to bridge the human-AI gap in social customer experience is contingent on the anthropomorphic design of the AI agent. Artificial empathy is most suitable when paired with a moderately (as opposed to low or high) anthropomorphic AI agent.
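To make the non-linear contingency in P10 more concrete, one possible formalization treats the effect of artificial empathy on social customer experience as a quadratic function of anthropomorphism. This is an illustrative assumption on our part, not a model estimated in this research: SX (social customer experience), E (level of artificial empathy), A (degree of anthropomorphic design), and the β and γ coefficients are hypothetical notation.

```latex
% Hypothetical moderated-quadratic specification (illustrative only)
\begin{aligned}
SX &= \beta_0 + \beta_1 A + \beta_2 A^2 + E\,(\gamma_0 + \gamma_1 A + \gamma_2 A^2) + \varepsilon,\\
\frac{\partial SX}{\partial E} &= \gamma_0 + \gamma_1 A + \gamma_2 A^2,\\
A^{*} &= -\frac{\gamma_1}{2\gamma_2}
  \quad \text{(the level of } A \text{ at which the empathy effect peaks, if } \gamma_2 < 0\text{)}.
\end{aligned}
```

Under this sketch, P10 would correspond to an inverted U: γ2 < 0, with the peak A* falling at a moderate value inside the observed range of anthropomorphism (which, for A measured from zero, also requires γ1 > 0), so that the benefit of artificial empathy is smaller at both low and high levels of anthropomorphic design.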

From improved social customer experience to value creation

Even when artificial empathy narrows the AI-human agent gap in social customer experience, the resulting improvement may not always be valuable. Previous research suggests that social considerations matter more in higher-involvement purchases (e.g., Liu-Thompkins et al. 2022; Vesel and Zabkar 2009), partly because consumers have a greater desire to engage in relational exchanges in higher-involvement situations. In a low-involvement context, such as the straight rebuy of a product, consumers are more concerned with convenience and efficiency and are less interested in extensive interactions with the brand (Melancon, Noble, and Noble 2011). Adding social elements to such a low-involvement situation may be seen as superfluous and unwelcome, as it may get in the way of completing the task efficiently.

P11 The ability of artificial empathy to create value by bridging the human-AI social experience gap is contingent on situational involvement. Artificial empathy creates more value in a high-involvement context but is unnecessary and possibly detrimental in a low-involvement context.

The value of better-quality social customer experience also differs across brands. Consumers often attribute uniquely human characteristics and features to a brand in the form of brand anthropomorphism (Puzakova et al. 2013). Unlike the anthropomorphism of AI agents, brand anthropomorphism imbues the brand entity itself with human traits and mindfulness (Puzakova et al. 2013). This mindset tends to activate human schemas and trigger social interaction goals and social cognition among consumers (Aggarwal and McGill 2012). As an integral touchpoint in the holistic brand experience (Lemon and Verhoef 2016), an AI agent representing an anthropomorphized brand is expected to follow the same social norms that govern the brand. Among such expectations are reduced reliance on instrumental criteria (e.g., utilitarian functionality) and increased emphasis on fulfilling emotional and social needs (Yang et al. 2020). This makes the quality of social interactions involving an anthropomorphized brand potentially more impactful than that of interactions involving a non-anthropomorphized brand.

P12 The ability of artificial empathy to create value by bridging the human-AI social experience gap is higher for brands with high anthropomorphism than for those with low anthropomorphism.

Conclusion, limitations, and further research

AI technologies continue to transform marketing interactions. While the potential gains from AI are evident, the value of AI-enabled marketing interactions is much less clear, which has led to doubts about the short- to medium-term potential of AI in marketing (Davenport et al. 2020; Guha et al. 2021). Compared with firms’ eagerness to jump on the AI bandwagon, consumers are much less enthusiastic about AI-enabled interactions and often opt for “real” interactions with human employees instead of AI agents. Addressing these divergent views of AI held by firms and consumers, we argue that artificial empathy needs to become an important consideration in the next generation of AI-enabled marketing interactions. This infusion of care and understanding from the customer’s perspective will help align firm and customer interests, bridge the AI-human gap, and lead to more consistent customer experiences across marketing touchpoints.

The importance of achieving empathic AI in marketing has been raised by researchers previously (e.g., Huang and Rust 2018). However, the field lacks systematic guidance on how cold and unfeeling AI agents can be made to come across as empathic. Furthermore, despite significant developments in empathic AI in the computer science and robotics disciplines, marketing researchers are often unaware of the extent of those developments. To fill these gaps, we draw from research in diverse disciplines to create a conceptual framework of artificial empathy in the context of AI-enabled marketing interactions. We elaborate on the concept, structure, and implementation of artificial empathy in AI marketing agents. We further explore how artificial empathy can generate value through enriched affective and social customer experience. Recognizing that artificial empathy may not always be desirable, we identify the requirements for artificial empathy to create value and deduce situations in which it is unnecessary or harmful.

As a partial test of our conceptual framework, we conducted a small-scale pilot experiment in the context of a customer service interaction. The results supported the ability of artificial empathy to bridge the customer experience gap between AI and human agents. Specifically, under low empathy, both affective and social customer experiences were rated higher with a human agent than with an AI agent. This difference disappeared under high empathy: interactions with a human agent and with an AI agent produced comparable levels of affective and social customer experience quality. The magnitude of artificial empathy’s impact on social customer experience was especially large, pointing to the particularly high value of artificial empathy from a social interaction perspective.

Our research also adds to the anthropomorphism literature. Recent work by Crolic et al. (2022) showed that adding anthropomorphic features to an AI agent backfired when dealing with angry customers. In that research, anthropomorphism was achieved through visual appearance, a human name, and first-person language. But what makes us human goes deeper than visual appearance and verbal language, with empathy being one of the fundamental traits (Dial 2018). In a negative context, superficially transforming an AI agent into a more human-like form without the underlying empathy expected from a human in such situations may violate social interaction norms. This incongruence between a human-like appearance and low empathy may partly explain why anthropomorphism backfired with angry customers. Our pilot study showed that the high-empathy AI agent was perceived as more human-like than the low-empathy AI agent. Compared with simple visual or verbal changes, artificial empathy may represent an alternative way of achieving anthropomorphism and may be what is needed in negative social encounters.

Taken together, our proposed framework and empirical findings offer guidance on when and how to integrate artificial empathy into marketing interactions. At the same time, we recognize several limitations in our work that warrant further research. First, our pilot study represents a preliminary test of artificial empathy effects using a simple scenario-based design. Simultaneously varying multiple empathy components and the agent type (human vs. AI) may introduce complexities and confounds that need to be teased out in further research. We also did not consider the contingencies that may affect consumer responses to artificial empathy. Much more empirical work is needed to better understand the role of artificial empathy in marketing interactions. One significant challenge in carrying out such work is the technological barrier to operationalizing artificial empathy. We offer some suggestions in Web Appendix 4 on how to manipulate and measure artificial empathy in future empirical studies.

Second, throughout our discussion, we assumed that consumers are aware that they are interacting with an AI agent. However, the disclosure of AI identity is itself an interesting question, and awareness of the AI identity at the onset of an interaction has been shown to cause negative consumer responses (Luo et al. 2019). We believe artificial empathy may be able to change the disclosure dynamics and reduce consumers’ negative reactions even when disclosure is made up front. This solution may be superior to hiding the AI identity until after the interaction, as post-interaction disclosure can lead to feelings of deception and betrayal. What happens in cases where consumers are unaware of the AI identity at all? Assuming the non-disclosure is ethically justified, we conjecture that artificial empathy will likely have a smaller effect in such cases, as consumers would treat the interaction as human-to-human and the corresponding expectation of empathy would be higher. What matters more under such circumstances may be the absence of empathy rather than its presence. How consumer awareness of the AI identity interacts with artificial empathy is a worthwhile question for future research.

Third, although we discussed situations where artificial empathy may be undesirable, we did not consider the full range of unintended or unknown consequences of artificial empathy. For example, artificial empathy can have significant implications for consumer privacy. Fully realizing artificial empathy in AI relies on the availability of customer information, such as voice or facial expression data, to infer consumers’ emotional states. This data requirement can heighten consumers’ privacy concerns, hampering the potential for empathic AI. One might argue that similar information is also revealed when interacting with a human agent. However, the spontaneous processing of such information by a human and the potentially permanent recording and processing of such information by a machine may be perceived quite differently. The trust dynamics in the two situations may also differ. Therefore, even though artificial empathy has the potential to bridge the AI-human gap in customer experience, its privacy implications may differ substantially from those of human empathy and need to be examined in future research.