Abstract

Recently, the efficient numerical solution of Hamiltonian problems has been tackled by defining the class of energy-conserving Runge-Kutta methods named Hamiltonian Boundary Value Methods (HBVMs). Their derivation relies on the expansion of the vector field along a suitable orthonormal basis. Interestingly, this approach can be extended to cope with more general differential problems. In this paper we sketch this fact, by considering some relevant examples.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The numerical solution of Hamiltonian problems has been the subject of many investigations in the last decades, due to the fact that Hamiltonian problems are not structurally stable against generic perturbations like those induced by a general-purpose numerical method used to approximate their solutions.

The main features of a canonical Hamiltonian system are surely the symplecticness of the map and the conservation of energy. It is well-known that these two properties are mutually exclusive when Runge-Kutta or B-series methods are considered [1]. This is the reason why numerical methods have been devised in order of either defining a symplectic discrete map, giving rise to the class of symplectic methods (see, e.g., the monographs [2,3,4,5], and references therein, and the review paper [6]), or being able to conserve the energy, resulting in the class of energy-conserving methods (see, e.g., [7,8,9,10,11,12,13,14] and the monograph [15]).

The methods we shall deal with belong to the class of energy-conserving methods: they are named Hamiltonian Boundary Value Methods (HBVMs) [12, 13, 15, 16]. HBVMs, in turn, can be also regarded as a local projection of the vector field onto a finite-dimensional function space [17]. It is worth mentioning that the same idea has been also named in different ways (e.g., functionally fitted methods [18]). Remarkably, this strategy is even more general and can be adapted to other classes of problems, also allowing the use of the methods as spectral methods in time [19,20,21,22]. This means that the accuracy of the approximation is attained by increasing the dimension of the functional space, rather than reducing the timestep. Test problems, comparing the computational cost of such methods w.r.t. collocation or other standard methods, can be found in [22], showing the good potentialities of the spectral approach. We mention that spectral methods have been also extensively used in other settings [23,24,25,26].

In this paper, we sketch how the approach resulting into HBVMs can be extended to cope with additional differential problems: for some of such instances a thorough analysis is still in progress, but the general framework can be outlined even at this stage.

With this premise, the structure of the paper is as follows: in Sect. 2 we provide a brief introduction to HBVMs; in Sect. 3 the approach is extended to cope with general initial value problems (ODE-IVPs), including special second-order problems and delay differential equations (DDEs); Sect. 4 is devoted to implicit differential algebraic equations (IDEs/DAEs), including linearly implicit DAEs; Sect. 5 concerns the case of fractional differential equations (FDEs); a few conclusions are given in Sect. 6.

2 Hamiltonian problems

A canonical Hamiltonian problem is in the form

with \(H:\mathbb {R}^{2m}\rightarrow \mathbb {R}\) the Hamiltonian function (or energy) of the system.Footnote 1 As is easily understood H is a constant of motion, since

being J skew-symmetric. The simple idea on which HBVMs rely on is that of reformulating the previous conservation property in terms of the line integral of \(\nabla H\) along the path defined by the solution y(t):

Clearly, the integral at the right-hand side of the previous equality vanishes, because the integrand is identically zero, so that the conservation holds true for all \(t>0\). The solution of (1) is the unique function satisfying such a conservation property for all \(t>0\). However, if we consider a discrete-time dynamics, ruled by a timestep h, there exists infinitely many paths \(\sigma \) such that:

The path \(\sigma \) obviously defines a one-step numerical method that conserves the energy, since

even though now the integrand is no more identically zero. The methods derived in this framework have been named line integral methods, due to the fact that the path \(\sigma \) is defined so that the line integral (2) vanishes. Such methods have been thoroughly analyzed in the monograph [15] (see also the review paper [27]). Clearly, in the practical implementation of the methods, the integral is replaced by a quadrature of enough high-order, thus providing a fully discrete method. However, for the sake of brevity, we shall hereafter continue to use the integral, and not the quadrature, so that the exposition is greatly simplified and more concise.Footnote 2

After some initial attempts to derive methods in this class [8,9,10, 12, 30], a systematic way for their derivation was found in [17], which is based on a local Fourier expansion of the vector field in (1). In fact, by setting

and using hereafter the more convenient notation, depending on the needs, one may rewrite problem (1), on the interval [0, h], as:

where \(\{P_j\}_{j\ge 0}\) is the Legendre orthonormal polynomial basis on [0, 1],

\(\Pi _i\) is the vector space of polynomials of degree at most i, \(\delta _{ij}\) is the Kronecker symbol, and

are the corresponding Fourier coefficients. The solution of the problem is formally obtained, in terms of the unknown Fourier coefficients, by integrating both sides of Eq. (4):

A polynomial approximation \(\sigma \in \Pi _s\) can be formally obtained by truncating the previous series to a finite sum,

and

respectively, with \(\gamma _j(\sigma )\) defined according to (6), upon replacing y with \(\sigma \). Whichever the degree \(s\ge 1\) of the polynomial approximation, the following result holds true, where the same notation used above holds.

Theorem 1

Assume that the Hamiltonian H has continuous partial derivatives. Then, \(H(y_1)=H(y_0)\).

Proof

In fact, one has:

due to the fact that J is skew-symmetric. \(\square \)

The next result states that the methods has order 2s.

Theorem 2

Assume that the function (3) is of class \(C^{(2s+1)}[0,h]\). Then, \(y_1-y(h)=O(h^{2s+1})\).

Proof

See [17, Theorem 1]. \(\square \)

Remark 1

It is worth noticing that, due to the fact that (see (5))

the new approximation (see (2) and (9)) is given by

which can be regarded as an approximation of the Fundamental Theorem of Calculus.

3 Initial value problems

The result in Theorem 2 does not depend on the Hamiltonian structure of the problem. This suggests that the above procedure also makes sense for a generic initial value problem which, on the interval [0, h] can be cast, without loss of generality, as

To cope with more general problems, hereafter we consider an alternative but equivalent approach to devise this family of methods. Consider again

We determine the unknown coefficients

in order that the residual function

be orthogonal to \(\Pi _{s-1}\), namely

Substituting the expansion for \(\dot{\sigma }(ch)\) in (12) and taking into account the orthogonality relations (5) again lead to the equations defining the unknown coefficients \(\gamma _i\) (compare with (6)):

Remark 2

As was anticipated above, in order to get a fully discrete problem, the integrals appearing in (14) need to be approximated by suitable quadrature formulae: to this purpose, a Gauss-Legendre quadrature of order 2k is employed. The resulting integrator, first devised in the context of Hamiltonian systems, is denoted by HBVM(k, s) to highlight the dependence on the two discretization parameters. When \(k=s\) the method reduces to the s-stage Gauss-Legendre collocation method but, if necessary, one can choose k large enough to ensure that the integrals are approximated to within machine precision. This, in turn, can be done without increasing too much the overall computational cost: as matter of fact, the discretized problem (14) has (block) dimesion s, independently of k. This fact is an important feature, in the case of Hamiltonian problems, as the next Example 1 will show. The resulting set of s nonlinear equations (14) can be efficiently solved by means of the blended iteration described in [31], adapted from [32, 33] (see also [34] for related approaches): in fact, such iteration only requires the factorization of one matrix of the size of the continuous problem, whichever is the value of s considered. We mention that the state-of-art Matlab code hbvm.m, implementing HBVMs, is available at the website of the monograph [15].

We observe that, in light of (14) and (12), the new approximation can be written as

which is easily evaluated, once the system (14) has been solved.

It is worth mentioning that, in the actual implementation of (12), it is conveniently exploited the known relation between the Legendre polynomials and their integrals:

Example 1

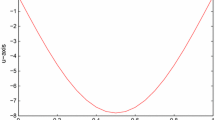

Let us consider the Hamiltonian problem defined by [15]:

When the initial point is chosen as

the solution trajectory, in the phase space, is a Cassini oval, as is shown on the left of Fig. 1, with very close branches near the origin (see the right-plot in the same figure). The solution is then periodic, with period \(T\approx 3.131990057003955\). However, when numerically solving the problem, if the Hamiltonian is not precisely conserved, then the numerical branches may intersect, thus giving a wrong simulation. If we fix \(s=2\) in (14) and we use a Gauss-Legendre formula of order 8 (\(k=4\)), then the HBVM(4,2) method is exactly energy conserving (according to Theorem 1), since the integrals are exact. We use this method and, for comparison, the 2-stage Gauss collocation method (i.e., HBVM(2,2)), with a timestep \(h=10^{-2}\). This latter method, though symplectic, is not energy-conserving. As is clearly shown in Fig. 2, the former method (left-plot) provides a correct numerical solution, whereas the latter (right-plot) does not. The two simulations have been done by using the Matlab code hbvm.m on an Intel 3GHz Intel Xeon W10 core computer, with 64GB of memory, running Matlab 2020b. Despite the very different outcomes, the computational time for the two simulations is approximately the same (about 10 sec.). This fact confirms that using values of k larger than s, for a HBVM(k, s) method, is not a big issue.

3.1 Special second-order problems

A relevant instance of ODE-IVPs is the case of special second-order problems, namely problems in the form

As an example, separable Hamiltonian problems fall in this class. The particular structure of the problem allows to gain efficiency, with respect to just recasting the problem as the following first order problem of doubled size:Footnote 3

Proceeding as before, we introduce the polynomial approximations

satisfying the initial conditions \(\sigma (0)=y_0, \, \mu (0)=z_0\), and require the residual

be orthogonal to \(\Pi _{s-1}\). The s orthogonality conditions imposed on the first component of r(ch) allow us to remove the unknowns \(\hat{\gamma }_i\):

so that, integrating both (21) and (22) from 0 to c, we get the two polynomial approximations of degree s for the solution and its derivative,

involving only the s unknown coefficients \(\gamma _j\), \(j=0,\dots ,s-1\). Such coefficients are determined from the orthogonality conditions imposed on the second component of r(ch):

By taking into account (16) and (23)–(24), the expression of the polynomial \(\sigma \), in terms of the above coefficients, turns out to be:

Once the system (25)–(26) has been solved, the new approximations are obtained, as done before, as

i.e., recalling (10),

Remark 3

Also in this case, there exist efficient iterative procedures for solving the discrete counterpart of (25) (see [31]).Footnote 4 In particular, the modified blended iteration follows from the analysis in [35] (see also [33]).

We conlclude this section by mentioning that the whole approach can be extended, in a straightforward way, to higher-order equations [29].

3.2 Delay differential equations

Delay differential equations have been the subject of many investigations in the past years (we refer to the monographs [36, 37] and references therein for further informations). Here, we extend the approach described in the previous sections to cope with delay differential equations in the form

with \(\tau >0\) a fixed delay, and \(\phi :\mathbb {R}\rightarrow \mathbb {R}^m\) a known function. In fact, by using a timestep

for a suitable \(K\in \mathbb {N}\), and setting

the approximation to the solution over the interval Footnote 5

then, for \(n\ge 1\), the unknown coefficients \(\gamma _i^n\), \(i=0,\dots ,s-1\), solve the system of equations:

with the new approximation given by \(\sigma _n(h)=:y_n\approx y(t_n)\), i.e.,

We refer to [38] for full details on this approach.

4 Implicit differential algebraic equations

The procedure defined above to cope with ODE-IVPs and DDE-IVPs can be quite easily extended to solve implicit differential equations, too. For sake of simplicity we continue to deal with initial value problems, even though different kind of conditions could in principle be used, as seen in [39] in the Hamiltonian case. Let us then consider the problem

with \(F:\mathbb {R}^m\times \mathbb {R}^m\rightarrow \mathbb {R}^m\). We can then look for a polynomial approximation of degree s in the form (12), with the new approximation at h given by \( \sigma (h)=:y_1\approx y(h)\), formally still given by (15). As was done before, by requiring the corresponding residual be orthogonal to \(\Pi _{s-1}\), the unknown coefficients \(\gamma _i\), \(i=0,\dots ,s-1\), are the solution of the system of equations:

This approach, which is still under investigation, will be fully analyzed elsewhere.

4.1 Linearly implicit DAEs

An important simplification is obtained in the case of linearly implicit DAEs, for which the problem is assumed to be in the form

with \(M\in \mathbb {R}^{m\times m}\) a (possibly singular) known matrix. In this case, in fact, one may look again for a polynomial approximation of degree s in the form (12). As a result, we obtain that the unknown coefficients \(\gamma _i\), \(i=0,\dots ,s-1\), solve the system of equations:

Remark 4

It is worth mentioning that the iterative solution of (32) can be carried out via a modified blended iteration, w.r.t. that defined in [31] for HBVMs, by following similar steps as done in [40].

5 Fractional differential equations

Fractional differential equations deserve a separate treatment, due to the fact that the underlying differential operator differs from the usual one (see, e.g., [41]; moreover, we refer to [42] for a review on numerical methods for such problems). Let us then consider, for a given \(\alpha \in (0,1]\), the FDE

where \(D^\alpha \) is the Caputo fractional derivative. This latter derivative is defined, for a given differentiable function \(g:\mathbb {R}\rightarrow \mathbb {R}^m\), as

The corresponding Riemann-Liouville integral of order \(\alpha \) is defined as

It is known that:

With this premise, the polynomial approximation to the solution of (33) is now looked in the form

instead of (12).

Remark 5

Clearly, when \(\alpha =1\) (12) and (34) coincide, due to the fact that

As was done before, by formally plugging \(D^\alpha \sigma (ch)\) and \(\sigma (ch)\) into (33), the unknown coefficients \(\gamma _i\), \(i=0,\dots ,s-1\), are determined by requiring the residual be orthogonal to \(\Pi _{s-1}\), thus leading to the system of equations:

In the actual implementation of (35), it is useful knowing how to compute the fractional integrals of the Legendre polynomials.Footnote 6 For this purpose, the following known result will be useful:

In fact, by recalling the explicit expression of the Legendre polynomials,

from (36) one derives:

This approach for solving FDEs, at first sketched in [19], will be analysized in detail elsewhere. We mention that a related approach can be found in [43].

6 Conclusions

In this paper we have recalled the basic facts about the numerical solution of differential problems via the expansion of the derivative, either integer or fractional, along the Legendre polynomial basis. Integrating such an approximation then provides a polynomial approximation to the solution.Footnote 7 The coefficients of the polynomial approximation, in turn, are determined by imposing the orthogonality of the residual to a suitable polynomial space. In addition, we mention that the obtained methods can be also used as spectral methods in time, as was described in [20,21,22] in the case of Hamiltonian problems (see also [19, 44,45,46]). Future directions of investigation will concern the extension of this framework to different kinds of differential problems. Also the numerical solution of integral equations could be a further subject of investigation.

Notes

In fact, for isolated mechanical systems, H has the physical meaning of the total energy.

Runge-Kutta-Nyström methods are in fact devised for this purpose.

This discrete counterpart, as usual, is obtained by approximating the involved integrals by using a Gauss-Legendre quadrature of order 2k, thus resulting into a HBVM(k, s) method for special second-order problems.

Actually, the solution is known from the initial condition in (27), for \(n=1-K,\dots ,0\).

I.e., we need a result similar to (16) for the problem at hand.

Actually, a polynomial-like approximation, in the case of FDEs.

References

Chartier, P., Faou, E., Murua, A.: An algebraic approach to invariant preserving integrators: the case of quadratic and Hamiltonian invariants. Numer. Math. 103, 575–590 (2006). https://doi.org/10.1007/s00211-006-0003-8

Sanz-Serna, J.M., Calvo, M.P.: Numerical Hamiltonian Problems. Chapman & Hall, London, UK (1994)

Leimkuhler, B., Reich, S.: Simulating Hamiltonian Dynamics. Cambridge University Press, Cambridge, UK (2004)

Hairer, E., Lubich, Ch., Wanner, G.: Geometric Numerical Integration, 2nd edn. Springer, Berlin, Germany (2006)

Blanes, S., Casas, F.: A Concise Introduction to Geometric Numerical Integration. CRC Press, Boca Raton, FL, USA (2016)

Sanz-Serna, J.M.: Symplectic Runge-Kutta schemes for adjoint equations, automatic differentiation, optimal control, and more. SIAM Rev. 58, 3–33 (2016). https://doi.org/10.1137/151002769

McLachlan, R.I., Quispel, G.R.W., Robidoux, N.: Geometric integration using discrete gradients. Phil. Trans. R. Soc. Lond. A 357, 1021–1045 (1999)

Iavernaro, F., Pace, B.: \(s\)-Stage trapezoidal methods for the conservation of Hamiltonian functions of polynomial type. AIP Conf. Proc. 936, 603–606 (2007). https://doi.org/10.1063/1.2790219

Iavernaro, F., Pace, B.: Conservative block-Boundary Value Methods for the solution of polynomial Hamiltonian systems. AIP Conf. Proc. 1048, 888–891 (2008). https://doi.org/10.1063/1.2991075

Iavernaro, F., Trigiante, D.: High-order Symmetric Schemes for the Energy Conservation of Polynomial Hamiltonian Problems. JNAIAM J. Numer. Anal. Ind. Appl. Math. 4, 87–101 (2009)

Celledoni, E., McLachlan, R.I., McLaren, D.I., Owren, B., Quispel, G.R.W., Wright, W.M.: Energy-preserving Runge-Kutta methods. M2AN Math. Model. Numer. Anal. 43, 645–649 (2009). https://doi.org/10.1051/m2an/2009020

Brugnano, L., Iavernaro, F., Trigiante, D.: Hamiltonian BVMs (HBVMs): A family of “drift-free’’ methods for integrating polynomial Hamiltonian systems. AIP Conf. Proc. 1168, 715–718 (2009). https://doi.org/10.1063/1.3241566

Brugnano, L., Iavernaro, F., Trigiante, D.: Hamiltonian Boundary Value Methods (Energy Preserving Discrete Line Integral Methods. JNAIAM J. Numer. Anal. Ind. Appl. Math. 5, 17–37 (2010)

Hairer, E.: . Energy preserving variant of collocation methods. JNAIAM J. Numer. Anal. Ind. Appl. Math. 5, 73–84 (2010)

Brugnano, L., Iavernaro, F.: Line Integral Methods for Conservative Problems. Chapman et Hall/CRC, Boca Raton, FL, USA (2016). http://web.math.unifi.it/users/brugnano/LIMbook/

Brugnano, L., Iavernaro, F., Trigiante, D.: The lack of continuity and the role of infinite and infinitesimal in numerical methods for ODEs: The case of symplecticity. Appl. Math. Comput. 218, 8053–8063 (2012). https://doi.org/10.1016/j.amc.2011.03.022

Brugnano, L., Iavernaro, F., Trigiante, D.: A simple framework for the derivation and analysis of effective one-step methods for ODEs. Appl. Math. Comput. 218, 8475–8485 (2012). https://doi.org/10.1016/j.amc.2012.01.074

Li, Y.-W., Wu, X.: Functionally fitted energy-preserving methods for solving oscillatory nonlinear Hamiltonian systems. SIAM J. Numer. Anal. 54, 2036–2059 (2016). https://doi.org/10.1137/15M1032752

Amodio, P., Brugnano, L., Iavernaro, F.: Spectrally accurate solutions of nonlinear fractional initial value problems. AIP Conf. Proc. 2116, 140005 (2019). https://doi.org/10.1063/1.5114132

Brugnano, L., Montijano, J.I., Rández, L.: On the effectiveness of spectral methods for the numerical solution of multi-frequency highly-oscillatory Hamiltonian problems. Numer. Algorithms 81, 345–376 (2019). https://doi.org/10.1007/s11075-018-0552-9

Brugnano, L., Iavernaro, F., Montijano, J.I., Rández, L.: Spectrally accurate space-time solution of Hamiltonian PDEs. Numer. Algorithms 81, 1183–1202 (2019). https://doi.org/10.1007/s11075-018-0586-z

Amodio, P., Brugnano, L., Iavernaro, F.: Analysis of Spectral Hamiltonian Boundary Value Methods (SHBVMs) for the numerical solution of ODE problems. Numer. Algorithms 83, 1489–1508 (2020). https://doi.org/10.1007/s11075-019-00733-7

Boyd, J.P.: Chebyshev and Fourier Spectral Methods, 2nd edn. Dover Publ. Inc., New York (2000)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods. Springer-Verlag, Berlin (2007)

Gheorghiu, C.I.: Spectral methods for non-standard eigenvalue problems. Fluid and structural mechanics and beyond. SpringerBriefs in Mathematics. Springer, Cham (2014)

Shen, J., Tang, T., Wang, L.-L.: Spectral methods. Algorithms, analysis and applications. Springer Series in Computational Mathematics, vol. 41. Springer, Heidelberg (2011)

Brugnano, L., Iavernaro, F.: Line Integral Solution of Differential Problems. Axioms 7(2), 36 (2018). https://doi.org/10.3390/axioms7020036

Amodio, P., Brugnano, L., Iavernaro, F.: A note on the continuous-stage Runge-Kutta-(Nyström) formulation of Hamiltonian Boundary Value Methods (HBVMs). Appl. Math. Comput. 363, 124634 (2019). https://doi.org/10.1016/j.amc.2019.124634

Amodio, P., Brugnano, L., Iavernaro, F.: Continuous-Stage Runge-Kutta approximation to Differential Problems. Axioms 11, 192 (2022). https://doi.org/10.3390/axioms11050192

Brugnano, L., Iavernaro, F., Trigiante, D.: Analisys of Hamiltonian Boundary Value Methods (HBVMs): A class of energy-preserving Runge-Kutta methods for the numerical solution of polynomial Hamiltonian systems. Commun. Nonlinear Sci. Numer. Simul. 20, 650–667 (2015). https://doi.org/10.1016/j.cnsns.2014.05.030

Brugnano, L., Iavernaro, F., Trigiante, D.: A note on the efficient implementation of Hamiltonian BVMs. J. Comput. Appl. Math. 236, 375–383 (2011). https://doi.org/10.1016/j.cam.2011.07.022

Brugnano, L., Magherini, C.: Blended Implementation of Block Implicit Methods for ODEs. Appl. Numer. Math. 42, 29–45 (2002). https://doi.org/10.1016/S0168-9274(01)00140-4

Brugnano, L., Magherini, C.: Recent advances in linear analysis of convergence for splittings for solving ODE problems. Appl. Numer. Math. 59, 542–557 (2009). https://doi.org/10.1016/j.apnum.2008.03.008

Brugnano, L., Frasca-Caccia, G., Iavernaro, F.: Efficient implementation of Gauss collocation and Hamiltonian Boundary Value Methods. Numer. Algorithms 65, 633–650 (2014). https://doi.org/10.1007/s11075-014-9825-0

Brugnano, L., Magherini, C.: Blended Implicit Methods for solving ODE and DAE problems, and their extension for second order problems. J. Comput. Appl. Math. 205, 777–790 (2007). https://doi.org/10.1016/j.cam.2006.02.057

Bellen, A., Zennaro, M.: Numerical methods for Delay Differential Equations. Clarendon Press, Oxford (2003)

Brunner, H.: Collocation methods for Volterra integral and related functional equations. Cambridge University Press, Cambridge (2004)

Brugnano, L., Frasca-Caccia, G., Iavernaro, F., Vespri, V.: A new framework for polynomial approximation to differential equations. arXiv:2106.01926 [math.NA]. https://doi.org/10.48550/arXiv.2106.01926

Amodio, P., Brugnano, L., Iavernaro, F.: Energy-conserving methods for Hamiltonian Boundary Value Problems and applications in astrodynamics. Adv. Comput. Math. 41, 881–905 (2015). https://doi.org/10.1007/s10444-014-9390-z

Brugnano, L., Magherini, C., Mugnai, F.: Blended Implicit Methods for the Numerical Solution of DAE Problems. J. Comput. Appl. Math. 189, 34–50 (2006). https://doi.org/10.1016/j.cam.2005.05.005

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Garrappa, R.: Numerical solution of fractional differential equations: a survey and a software tutorial. Mathematics 6(2), 16 (2018). https://doi.org/10.3390/math6020016

Zhang, Z., Zeng, F., Karniadiakis, G.E.M.: Optimal error estimates of Petrov-Galerkin and collocation methods for initial value problems of fractional differential equations. SIAM J. Numer. Anal. 53, 2074–2096 (2015). https://doi.org/10.1137/140988218

Brugnano, L., Montijano, J.I., Rández, L.: High-order energy-conserving Line Integral Methods for charged particle dynamics. J. Comput. Phys. 396, 209–227 (2019). https://doi.org/10.1016/j.jcp.2019.06.068

Brugnano, L., Gurioli, G., Zhang, C.: Spectrally accurate energy-preserving methods for the numerical solution of the “Good’’ Boussinesq equation. Numer. Methods Partial Differential Equations 35, 1343–1362 (2019). https://doi.org/10.1002/num.22353

Barletti, L., Brugnano, L., Tang, Y., Zhu, B.: Spectrally accurate space-time solution of Manakov systems. J. Comput. Appl. Math. 377, 112918 (2020). https://doi.org/10.1016/j.cam.2020.112918

Funding

Open access funding provided by Università degli Studi di Firenze within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of Interest

The authors declare no conflict of interests.

Additional information

Dedicated to the memory of professor Ilio Galligani.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brugnano, L., Iavernaro, F. A general framework for solving differential equations. Ann Univ Ferrara 68, 243–258 (2022). https://doi.org/10.1007/s11565-022-00409-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11565-022-00409-6