Abstract

This research addresses issues in the design of online scaffolds for regulation within inquiry learning environments. The learning environment in this study included a physics simulation, data analysis tools, and a model editor for students to create runnable models. A regulative support tool called the Process Coordinator (PC) was designed to assist students in planning, monitoring, and evaluating their investigative efforts within this environment. In an empirical evaluation, 20 dyads (PC+) received a “full” version of the PC with regulative assistance; dyads in the control group (PC−, n = 15) worked with an “empty” PC which contained minimal structures for regulative support. Results showed that both the frequency and duration of regulative tool use differed in favor of the PC+ dyads, who also wrote better lab reports. PC− dyads viewed the content helpfiles more often and produced better domain models. Implications of these differential effects are discussed and suggestions for future research are advanced.

Introduction

This paper addresses issues in the design of online tool support within scientific inquiry learning environments. These environments enable students to learn science by doing science, offering resources to develop sound scientific understanding by engaging in knowledge inference processes such as hypothesis generation, experimentation, and drawing conclusions (De Jong 2006; Swaak et al. 1998). However, knowledge gains through inquiry are also influenced by metacognitive factors such as the learners’ knowledge and regulation of their own cognitions (Chin and Brown 2000; Kuhn et al. 2000; Schoenfeld 1992). Expert learners are thought to employ planning, monitoring, and evaluation processes while utilizing self-knowledge, task requirements, and a repertoire of strategies to achieve academic goals and objectives (Ertmer and Newby 1996; Schraw 1998). Research has demonstrated that students who actively regulate their cognitions through these processes show higher learning gains over students who do not (Azevedo et al. 2004).

Despite these benefits, students typically evidence very few instances of regulation during their inquiry work (De Jong and Van Joolingen 1998; Land 2000; Manlove and Lazonder 2004). This is especially relevant for technology-enhanced inquiry learning where students are offered several software tools to infer domain knowledge, but require guidance for the regulative aspects of their inquiry. In order to compensate for low levels of regulation, researchers have turned to the development of regulative support tools. White and Frederiksen’s (1998) Thinker Tools curriculum promoted regulative skills via scaffolds for planning, monitoring, and evaluation. Veenman et al. (1994) utilized system-generated prompts to direct students’ attention to the regulatory aspects of their inquiry task. Quintana et al. (2004), Kapa (2001), and Zhang et al. (2004) also espoused the value of promoting and supporting regulative skills such as process management, reflection, and meta-level functioning within inquiry learning environments.

Although at face value the potential of regulative tool support is quite compelling, there have been few systematic evaluations of their effectiveness. The current research therefore attempts to offer empirical evidence regarding the potentials of a software tool which supports inquiry learning through planning, monitoring, and evaluation. Prior to explicating the design of the study, a brief overview of a framework of self-regulation is given in order to contextualize the design rationale and the features of the support tool.

Self-regulation in inquiry learning

Self-regulated learning refers to a student’s active and intentional generation of thoughts, feelings, and actions which are planned and adapted cyclically for goal attainment (Zimmerman 2000). Regulation of cognition is an area of self-regulated learning (Pintrich 2000) which focuses on the strategies students use to control and regulate their thinking during learning or task performance. Cognitive regulation is a recursive process which comprises three main phases: planning, monitoring, and evaluation. These phases are consistent with the regulative processes students should engage in during inquiry learning (Njoo and De Jong 1993).

Planning

When highly self-regulating students are first introduced to a learning task, they begin to assimilate and coordinate conditions about the task. The result of this problem orientation process is the students’ definition of what they are to do (Winne 2001). Goals and subgoals formed from this initial task understanding help students decide on specific outcomes of learning or performance (Zimmerman 2000). Goals of highly self-regulated individuals according to Zimmerman “are organized hierarchically, such that process goals operate as proximal regulators of more distal outcome goals” (p. 17). In situations calling for scientific reasoning, the phases of scientific inquiry (orientation, hypothesis formation, experimentation, and drawing conclusions) become these process goals (White and Frederiksen 1998). Key activities within each phase may be transformed into subgoals. The resulting goal hierarchies, once established, become the strategic plans students use to perform an academic task (Winne 2001), and the standards against which they monitor and evaluate their performance. As self-regulation is a recursive process, students will most likely abandon, adapt, or refine plans, task definitions, and standards as they progress through the task.

Monitoring

Once highly self-regulated students begin to execute their strategic plans, they begin to monitor their comprehension and task performance. Monitoring involves a comparison of students’ current knowledge or the current state of a learning product to goal, task, or resource standards (Azevedo et al. 2004; Winne 2001). It is a process which can be triggered either internally (by the student) or externally (by the environment). Highly self-regulated learners are sensitive to both types of stimuli. In case of internal triggering they act on a perceived personal need to check progress and understanding. External triggering occurs when cues in the environment (e.g., contradictory simulation output) alert students to possible comprehension or task performance failures.

Effective strategies for monitoring include self-questioning and elaboration (Lin and Lehman 1999). Self-questioning such as “Do I understand what I am doing?” and “How does this result compare to what the assignment says?” assists students in comparing their current learning state to the goals established during planning. Elaboration strategies such as note taking and self-explaining are also utilized effectively by strong self-regulating students, as the process of elaborating often reveals discrepancies in knowledge which highly self-regulating students attend to (Chi et al. 1994; Schraw 1998). Self-questioning and elaboration strategies generate feedback students can use to make metacognitive judgments about their learning. Only if these judgments point to comprehension or task performance failures will highly self-regulating students enact a new strategy or tactic, or adapt an “in use” strategy or tactic in order to more closely match a goal state (Winne 2001).

Evaluation

Evaluation activities entail assessments of learning processes and learning outcomes, as well as generalizations relating these processes and products to a broader context (De Jong 2006; Ertmer and Newby 1996). Evaluation of learning processes involves any reflection on the quality of the students’ planning, or how well they executed their plans. Issues highly self-regulating students might address to evaluate their learning include hypotheses plausibility, representation effectiveness, experimentation systematicity, exploitation of surprising results, and adequate comparison of predictions with results (Klahr et al. 1990; Lavoie and Good 1988).

Standards such as those illustrated are based on goals and task standards set during planning. Evaluation with them sets itself apart from the monitoring which occurs during task execution by looking at products in relation to the entire task. Strong self-regulators might use their overall goal or the task description as a reference point to determine the quality of their products and knowledge gains. In addition, students who generalize learning outcomes or products to a broader context reflect on the link between past and future actions promoting transfer of both domain and metacognitive knowledge to new situations (Von Wright 1992).

Problems and solutions

When high-school students engage in inquiry learning, they perform very few of the activities discussed above. They often have poorly constructed plans, or no plans at all. Manlove and Lazonder (2004) found that students mostly determined what to do as they went, making only ad-hoc plans to respond to an immediate realization of a current need, rather than taking a systematic or global approach. Such reactive methods of self-regulation are generally ineffective because they fail to provide the necessary goal structure and strategic plans for students to progress consistently and monitor and evaluate their learning effectively (Zimmerman 2000).

A process model might help overcome these planning problems (Lin and Lehman 1999). Process models give students a global understanding of the task by outlining the stages an expert would go through in performing the inquiry task at hand. A process model thus conveys the top level goals in the hierarchy. It can be supplemented with more specific goals within each of the phases, thus providing students with input to establish strategic plans, and standards for monitoring and evaluating their learning. Process models and goal hierarchies were applied successfully in the Thinker Tools curriculum (White and Frederiksen 1998), and learning environments such as ASK Jasper (Williams et al. 1996) and Model It (Jackson et al. 1996).

The problems high-school students have with monitoring are threefold. First, most students fail to recognize that they do not understand something (internal triggering) and often are unaware of environmental cues (external triggering) which can provide feedback points for monitoring (Chi et al. 1994; Davis and Linn 2000; Ertmer and Newby 1996). Secondly, even if students identify comprehension failures, they often cannot articulate in detail what exactly they do not understand (Manlove and Lazonder 2004). Finally, the few students who do become aware at a detailed level that they are experiencing a comprehension or task performance failure often do not have the strategies or tactics needed to fix their problems (Schraw 1998).

Research has shown that both the quantity and the quality of students’ monitoring activities can be enhanced by explicit prompting and direct strategy use feedback. Software cues (e.g., pop-ups) can point students to features of the learning environment which can be used for monitoring, and encourage students to write down the results of monitoring in a note (Butler and Winne 1995). Self-explanation prompts (“What is meant by ‘a relationship’ between two variables?”) can help students detect and elaborate comprehension problems within note content (Davis and Linn 2000). Reasons-justification prompts (“How did you come up with these relationships?”) can assist students in judging the effectiveness and appropriateness of the procedures they utilized in their inquiries (Lin and Lehman 1999). Proposing specific strategies for comprehension and task performance failures will also assist students in the final phase of monitoring (Schraw 1998).

The difficulties high-school students have with evaluating are very similar to the problems encountered during monitoring. Students typically are unaware of the issues they should attend to in evaluating learning processes and outcomes, or the way these issues should be addressed in their evaluations. For example, Schauble et al. (1991) showed that students hardly reflect on the experimental setup or how methodological issues relate to the research question. Chinn and Brewer (1993) reported that students tend to discount results which are inconsistent with their expectations, and Njoo and De Jong (1993) found that students hardly generalize their learning processes and outcomes to different situations.

Prompting has been found to be as successful in advancing evaluation type activities as it has been to promote monitoring. White and Frederiksen (1998) utilized self-reflective assessments to prompt evaluative activities. These assessments included criteria and rating scales to assist students in assessing their learning processes and products. Self-assessments can be integral to report writing. Lab reports have been a common way for students to report conclusions in science classrooms (White et al. 1999). To assist them in writing their reports, students could be given a report template which augments the process model by unpacking the goals and subgoals associated with each step, and provides issues and suggestions against which learning processes and products can be evaluated.

Investigating regulative tool support

The solutions above propose ways to assist high-school students in planning, monitoring, and evaluating their inquiry learning processes and products through online tool support. The effectiveness of these regulative scaffolds was evaluated in an empirical study wherein students performed a scientific inquiry task. Students utilized a support tool called the Process Coordinator (PC) to regulate their inquiry. Students in the experimental condition (PC+) had access to a “full” version of this tool. It included a process model and preset goal hierarchy to support planning, as well as hints, cues, and prompts to promote monitoring through note taking. A separate report template with embedded suggestions for structure and content from which students could develop quality criteria was available for evaluation. Students in the comparison condition (PC−) received an “empty” support tool which contained none of the regulative support measures available to PC+ students. Instead, the PC− equipped students with an electronic facility to set their own goals, take notes, and structure their reports however they deemed appropriate. Given these differences in regulative support, students in the PC+ condition were expected to achieve higher learning outcomes and use the PC more often to plan, monitor, and evaluate than PC− students.

Materials and methods

Participants

Seventy students (30 males and 40 females, aged 16–18) from three international secondary schools in the Netherlands participated in the study. Based on a review of school curricula and teacher statements, none of the students were familiar with fluid dynamics, the topic of the inquiry activity. Students were classified by their teachers as high, average, or low achievers based on their science grades. (Student grades themselves were not available due to student record confidentiality.) Participants of different achievement levels were randomly grouped into medium-range mixed-ability dyads. Medium-range heterogeneous ability grouping promotes more productive conversations during science learning (Gijlers and De Jong 2005a), peer guidance (e.g., Webb et al. 2002), and equality of participation (Webb 1991). Dyads thus comprised either a high and average achiever, or an average and low achiever. Because grouping took place within classes, 6 medium achievers and 4 high achievers had to be grouped homogeneously. All 35 dyads were then randomly assigned to the PC+ condition (n = 20) or the PC− condition (n = 15). Preliminary checks revealed no between-condition differences based on achievement level (Lχ²(4, N = 35) = 2.80, p = 0.59).

Materials

Dyads in both conditions worked on an inquiry task within fluid dynamics which invited them to discover which factors influence the time to empty a water tank. This task was performed within Co-Lab, an inquiry learning environment in which dyads could experiment through a computer simulation of a water tank, and express acquired understanding by making a runnable system dynamics model (see Van Joolingen et al. 2005, for an elaborate description of Co-Lab). By judging model output against the simulation’s results, students could adjust or fine-tune their model and thus build an increasingly elaborate domain understanding. Helpfiles explained the operation of the tools in the environment and presented domain information that was too difficult to infer from interactions with the simulation. These files covered physics topics such as “Torricelli’s Law” and “water volume” as well as information about system dynamics modeling variables and relationships. Within the modeling help files, information about the domain was often mixed with procedural information related to the operation of the model editor. The same helpfiles were available in both conditions.

The Process Coordinator (PC) supported dyads in regulating their inquiry learning process. Dyads in the PC− condition received an “empty” version of this tool which contained no process model, preset goal hierarchy, goal descriptions, hints, cues, prompts, or report template. The PC− was functional in that students could use it to set, monitor, and evaluate their own goals. Dyads in the PC+ condition were given a “full” version of the PC. This tool contained a process model, a preset goal hierarchy, and goal descriptions which outlined the phases students should go through in performing their inquiry (see Fig. 1). Each goal came with one or more hints students could view by clicking the “Show hints” button. Hints proposed strategies for goal attainment. Taking notes required students to click the “Take or edit notes” button to open up a note taking form. As explained in the introduction, prompts were written based on Davis and Linn’s (2000) description of self-explanation prompts (e.g., “Which variables are you most and least sure have an effect on the time it takes to empty a pool?”), and Lin and Lehman’s (1999) description of reason-justification prompts (e.g., “For the variables you are most sure have an effect, why do you think so?”). Each note form contained one of each type of prompt to stimulate students to check their comprehension and provide evidence for it. Cues reminded students to take notes. Cues appeared as pop-ups in the environment, either when students had not taken a note for 10 min or when they switched to a different activity. Since imposed strategy use can be counterproductive and can be seen as an extra cognitive burden (Lan 2005), students were not forced to make a note in response to a cue. Notes were automatically attached to the active goal and could be inspected by clicking the “History” tab. As the right image of Fig. 1 shows, this action changed the appearance of the PC such that it revealed the goals and the notes students attached to them in chronological order.
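The two cue conditions described above (no note for 10 min, or a switch to a different activity) can be illustrated with a minimal sketch. It is not Co-Lab's actual implementation: the `CueState` class, the clock measured in seconds, and the activity labels are assumptions introduced purely for illustration.

```python
from dataclasses import dataclass

# 10 min without a note, as stated in the text
CUE_INTERVAL_SECONDS = 10 * 60


@dataclass
class CueState:
    """Hypothetical bookkeeping for one dyad's session."""
    last_note_time: float   # seconds since session start (assumed clock)
    current_activity: str   # assumed activity label, e.g. "simulation"


def should_show_cue(state: CueState, now: float, new_activity: str) -> bool:
    """Return True when either cue condition from the text holds."""
    no_recent_note = (now - state.last_note_time) >= CUE_INTERVAL_SECONDS
    switched_activity = new_activity != state.current_activity
    return no_recent_note or switched_activity
```

Because students were not forced to respond, such a check would only decide whether a pop-up reminder appears; it would not block further work.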

Students in both conditions received a simple text editing tool to write their final reports. This report editor was embedded within the environment and enabled students to copy the contents of their notes into their reports. Lab report writing was a common practice in the participating school’s science classes. As such all students were familiar with what a lab report should contain. PC+ dyads were given a report template, which served as a reminder of the structure of a lab report and offered issues and suggestions for content which students could use to reflectively evaluate their learning processes and products. It was predicted that the presence of the template would promote more structured and elaborate lab reports. PC− dyads were not given this template but were informed that they would be writing a lab report within the PC− introduction and in the task assignment.

Procedure

The experiment was conducted over five 50-min lessons that were run in the school’s computer lab. The first lesson involved a guided tour of Co-Lab and an introduction to modeling. During the guided tour students in both conditions were taught to plan, monitor, and evaluate their learning with the version of the PC tool they would receive. The modeling tutorial familiarized students with system dynamics modeling language and the operation of Co-Lab’s modeling tool. It contextualized the modeling process within a common situation: the inflow and outflow of money from a bank account. Students completed the modeling introduction individually within 20 min. In the next four lessons students worked on the inquiry task. They were seated in the computer lab with group members face-to-face in front of one computer. Students were directed to begin by reading the assignment, to use the PC tool for regulation and to refrain from talking to other groups. At the beginning of each lesson the experimenter reminded the students to use the PC tool. At the beginning of lesson 4, students were told to complete their modeling work, and at the beginning of lesson 5 they were told to stop their modeling work and complete their lab reports. Assistance was given on computer technical issues only.

Coding and scoring

Learning outcomes

Learning outcomes were assessed from the dyads’ final models and lab reports. As models convey students’ conceptual domain knowledge from variable and relationship specification (White et al. 1999), a model quality score was computed from the number of correctly specified variables and relations in the models. One point was awarded for each correctly named variable, with “correct” referring to a name identifying a factor which influences the outflow of the water tank (i.e., water volume, tank level, tank diameter, drain diameter, outflow rate). One additional point was given in case a variable was of the correct type (i.e., stock, auxiliary, constant). Concerning relations, one point was awarded for each correct link between two variables. Up to two additional points could be earned if the direction and type of the relation was correct. The maximum model quality score was 26. All models were scored by two raters; inter-rater reliability estimates (Cohen’s κ) for variables and relations were 0.95 and 0.91 respectively.
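The scoring rules above can be sketched as follows. This is an illustration only: the reference variables, their types, and the relation types below are hypothetical placeholders, since the reference model used by the raters is not reproduced in the text, and the totals it produces are not meant to match the stated maximum of 26.

```python
# Hypothetical reference model; names are taken from the text, but the
# type assignments and relations are illustrative assumptions.
REFERENCE_VARIABLES = {
    "water volume": "stock",
    "outflow rate": "auxiliary",
    "drain diameter": "constant",
}
# (source, target) -> relation type (type labels are assumed)
REFERENCE_RELATIONS = {
    ("outflow rate", "water volume"): "flow",
    ("drain diameter", "outflow rate"): "influence",
}


def score_variables(student_vars):
    """1 point per correctly named variable, +1 if its type is correct."""
    score = 0
    for name, var_type in student_vars.items():
        if name in REFERENCE_VARIABLES:
            score += 1
            if var_type == REFERENCE_VARIABLES[name]:
                score += 1
    return score


def score_relations(student_rels):
    """1 point per correct link, +1 for direction, +1 for relation type."""
    score = 0
    for (source, target), rel_type in student_rels.items():
        if (source, target) in REFERENCE_RELATIONS:
            expected, direction_ok = REFERENCE_RELATIONS[(source, target)], True
        elif (target, source) in REFERENCE_RELATIONS:
            expected, direction_ok = REFERENCE_RELATIONS[(target, source)], False
        else:
            continue  # no such link in the reference model
        score += 1                      # correct link
        score += int(direction_ok)      # correct direction
        score += int(rel_type == expected)  # correct type
    return score


def model_quality(student_vars, student_rels):
    return score_variables(student_vars) + score_relations(student_rels)
```

For example, a model with one correctly typed variable, one correctly named but wrongly typed variable, one reversed link of the right type, and one fully correct link would receive 3 + 5 = 8 points under these assumed rules.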

A rubric was developed to evaluate the structure and content of students’ lab reports. Both measures were scored for completed reports only. Report structure concerned the logical organization of the students’ writing, and was indicated by the presence of sections specified in the report template (i.e., introduction, method, results, conclusion, discussion). One point was awarded for each included section, leading to a maximum score of five points. A report content score represented the extent to which students’ reports addressed the topics of evaluation subsumed under each section in the report template. Examples include “state your general research question,” “list your hypotheses,” “present data for each hypothesis,” and “describe pros and cons of your working method”. The template contained 11 topics, and the report content score reflected the number of topics included in a report. All reports were scored by two raters; inter-rater agreement reached 85.14% for report structure and 86.49% for report content.
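For readers less familiar with the two reliability indices used in this section, the sketch below computes percentage agreement and Cohen's κ for two raters from first principles. The example ratings are invented for illustration and are not data from this study.

```python
from collections import Counter


def percent_agreement(rater1, rater2):
    """Percentage of items on which both raters gave the same code."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("ratings must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(rater1, rater2))
    return 100.0 * agreements / len(rater1)


def cohens_kappa(rater1, rater2):
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e)."""
    n = len(rater1)
    p_observed = percent_agreement(rater1, rater2) / 100.0
    counts1, counts2 = Counter(rater1), Counter(rater2)
    # Expected agreement if both raters coded independently
    p_expected = sum(
        counts1[c] * counts2[c] for c in counts1.keys() | counts2.keys()
    ) / n ** 2
    if p_expected == 1.0:
        return 1.0  # both raters used a single identical category
    return (p_observed - p_expected) / (1.0 - p_expected)


# Invented example: 1 = section present, 0 = section absent
rater_a = [1, 1, 0, 0]
rater_b = [1, 0, 0, 0]
print(percent_agreement(rater_a, rater_b))  # 75.0
print(cohens_kappa(rater_a, rater_b))       # 0.5
```

The example shows why κ is typically lower than raw agreement: part of the observed agreement is expected by chance alone.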

Regulative activities

Analyses of regulative activities focused on students’ use of the regulative scaffolds. All data were assessed from the logfiles. The scope of participants’ regulative activities was indicated by the duration and frequency of PC and report editor use. At a more detailed level, participants’ actions with these tools were classified as being either a planning, monitoring, or evaluation act. Given the differences in regulative support across conditions, the coding of these measures showed some cross-condition variation as well.

Planning was defined as the number of times participants added a goal (PC− condition only), and viewed a goal and its description (both conditions). PC actions associated with monitoring were note taking and note viewing. Note taking concerned the number of times participants created a new note or edited an existing note; note viewing was the number of times participants checked existing notes. Note taking instances of PC+ dyads were further classified as either spontaneous or cued. The number of instances in which participants viewed hints was also indicative of monitoring in the PC+ condition. Dyads in the PC− condition had no access to hints, making the helpfiles their only source of assistance. Instances of helpfile usage were counted for both conditions. Evaluation was assessed from the number of times students viewed the report template, and could be assessed in the PC+ condition only.
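The coding scheme above amounts to a mapping from logged tool actions to regulative categories. A minimal sketch of such a tally is given below; the action names are hypothetical, as the actual logfile format is not described here.

```python
from collections import Counter

# Hypothetical logfile action names mapped to the categories defined above
ACTION_CATEGORY = {
    "add_goal": "planning",               # PC- condition only
    "view_goal": "planning",
    "take_note": "monitoring",
    "edit_note": "monitoring",
    "view_note": "monitoring",
    "view_hint": "monitoring",            # PC+ condition only
    "view_report_template": "evaluation", # PC+ condition only
}


def count_regulative_acts(actions):
    """Tally planning, monitoring, and evaluation acts in a list of actions."""
    counts = Counter({"planning": 0, "monitoring": 0, "evaluation": 0})
    for action in actions:
        category = ACTION_CATEGORY.get(action)
        if category is not None:  # ignore non-regulative actions
            counts[category] += 1
    return dict(counts)
```

Helpfile views are deliberately left out of the mapping, since they were counted as a separate measure for both conditions.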

Data analysis

Data analysis focused on between-group differences in regulative activities and learning outcomes. Given the small sample size, Kolmogorov–Smirnov tests were performed to test the normality assumption. Levene’s tests were used to check the homogeneity of variances among cell groups for all dependent variables. In case of homogeneity, one-way univariate ANOVAs were used to examine the effect of the regulative scaffolds on that variable. Variables with unequal variances were analyzed by means of t tests with separate variance estimates. In case of significance, the standardized difference between groups (Cohen’s d) was computed to indicate the magnitude of effects. Correlational analyses were performed to examine the relationships between tool use for regulative activities and learning outcomes.
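As an illustration of the t test with separate variance estimates (commonly known as Welch's test) and of Cohen's d, the sketch below computes the test statistic, its fractional degrees of freedom, and the effect size. Two assumptions apply: d is computed with the pooled standard deviation, which the text does not specify, and the example scores are invented rather than taken from this study.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """t statistic and Welch-Satterthwaite df for unequal variances."""
    n_a, n_b = len(group_a), len(group_b)
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances
    se_sq = var_a / n_a + var_b / n_b
    t = (mean(group_a) - mean(group_b)) / sqrt(se_sq)
    df = se_sq ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled SD (an assumption)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Invented scores for two small groups
scores_a = [2, 4, 6, 8]
scores_b = [1, 2, 3, 4]
t, df = welch_t(scores_a, scores_b)
print(round(t, 3), round(df, 2), round(cohens_d(scores_a, scores_b), 3))
```

The fractional degrees of freedom produced by this procedure explain why some t tests in the Results section report non-integer df values.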

To ensure that observed differences could be attributed solely to the presence and use of regulative scaffolds, all of the above analyses were re-run using the dyads’ achievement level as an additional factor. Achievement was indicated by the teacher-assigned level of the highest achieving group member. As none of the analyses for regulative activities revealed a significant main effect of achievement level or a significant condition × achievement interaction, these results are not reported for the sake of readability. Achievement level did affect learning outcomes, however, so two-way univariate ANOVAs are reported for the analyses of the dyads’ final models and lab reports.

Results

The data summarized in Table 1 show that overall dyads in both conditions spent a comparable amount of time on the inquiry task (F(1,33) = 0.07, p = 0.80). However, participants in the two conditions organized their time differently, particularly with respect to balancing task performance and regulation. On average, PC+ dyads used the Process Coordinator about three times as long and twice as often as PC− dyads did. Both differences were statistically significant (time: t(24.01) = 3.78, p < 0.01, d = 1.21; frequency: t(22.81) = 2.08, p < 0.05, d = 0.66). PC+ dyads also activated the report editor more often than PC− dyads, but this difference did not reach significance (F(1,33) = 2.60, p = 0.12). Nor did the comparison between the conditions on report editor use time (F(1,26) = 0.01, p = 0.92).

Table 2 gives a more detailed account of the participants’ regulative tool use. As the PC− contained no regulative directions, dyads in this condition had to set their own goals during planning. Goal setting was observed in 12 of the 15 PC− dyads, with scores ranging from 1 to 8 goals (mode = 2). PC− dyads could view the goals they added to the PC throughout their inquiry, just like PC+ dyads could view the preset goals in their version of the tool. As shown in Table 2, PC+ dyads viewed goals nine times more often than PC− dyads (t(21.48) = 5.39, p < 0.01, d = 1.72). Table 2 also shows a high standard deviation in the goal viewing scores of the PC+ dyads. Closer examination of the frequencies indicated that PC+ dyads viewed goals 6 to 72 times (median = 21).

Concerning monitoring, dyads in the PC+ condition took notes more than twice as often as their PC− counterparts did. Yet this difference was not significant at the 0.05 level (t(21.03) = 1.82, p < 0.10, d = 0.35), which is probably due to a high variability of scores. On average, 9.82% of the PC+ dyads’ note taking occurred in response to a cue. Still, a mere 3.83% of all cues triggered note taking activity, while 5.98% of the cues resulted in a regulative action with the PC other than note taking. The scores in Table 2 further indicate that viewing the contents of existing notes occurred as often in both conditions (F(1,33) = 0.30, p = 0.59). PC+ dyads viewed the hints 0 to 24 times. The distribution in scores was skewed, with 75% of the dyads viewing 5 hints or less. As hints were not available in the PC−, dyads in this condition could only turn to the helpfiles for assistance. Results showed that they consulted these files more often than PC+ dyads did (F(1,33) = 10.92, p < 0.01, d = 1.13).

Central to evaluation activities was report writing. The template that assisted PC+ dyads herein was consulted by 16 of the 20 dyads. The majority of these dyads viewed the template once or twice during the writing process. The outcomes of participants’ evaluative efforts differed in favor of the PC+ dyads. As Table 1 shows, report structure scores were significantly higher in the PC+ condition (F(1,22) = 9.57, p < 0.01, d = 1.49), indicating that PC+ dyads produced better structured reports. Achievement level had no effect on this measure (F(1,22) = 0.29, p = 0.60); the condition × achievement interaction was not significant either (F(1,22) = 1.47, p = 0.24). PC+ dyads also gave a more complete account of their inquiry activities and outcomes, as evidenced by higher report content scores. This difference too was statistically significant (F(1,24) = 22.66, p < 0.01, d = 1.74). There was no significant main effect of achievement level (F(1,22) = 0.05, p = 0.83) and no significant interaction (F(1,22) = 0.01, p = 0.92).

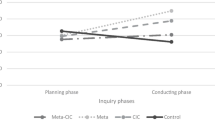

In addition to lab reports, learning outcomes were indicated by the quality of the groups’ final model solutions (see Table 1). The model quality scores of PC− dyads were significantly higher than those of the PC+ dyads (F(1,31) = 9.98, p < 0.01, d = 1.26). Achievement level had no effect on model quality (F(1,31) = 0.40, p = 0.53), but the condition × achievement interaction proved statistically significant (F(1,31) = 6.76, p < 0.05). Figure 2 illustrates how the effect of condition was moderated by achievement level. As these graphs indicate, lower-achieving PC− dyads on average obtained a 10-point higher model quality score than their PC+ counterparts. For higher-achieving dyads this difference was 1.1 points.

Correlational analyses further revealed that higher model quality scores were associated with lower instances of goal viewing and note taking (see Table 3). The correlation between model quality and helpfile viewing approached significance (p = 0.07), suggesting that dyads who consulted the helpfiles more often also built more accurate models. A reverse pattern was obtained for both lab report scores, with significant positive correlations for frequencies of regulative tool use, and negative correlations for instances of helpfile viewing.

Discussion

This study examined the effects of regulative tool support on students’ regulative activities and learning outcomes. Students who had access to a scaffold with regulative directions were expected to use the tool more often for regulation, and to construct better domain models and write better lab reports than students who received an “empty” version of this tool.

Results for regulative activities generally confirm the hypotheses on tool use and time measures. PC+ dyads used their Process Coordinator more frequently and for a longer amount of time. In terms of tool use, two activities in particular stand out as differing from the PC− condition: goal viewing and note taking. PC+ dyads viewed more goals than their PC− counterparts. While PC− dyads set very few goals (and thus had fewer goals to view), the process model and goal hierarchy were the most used features for PC+ dyads, suggesting that students might follow and utilize goal lists more often than they set or revisit their own goals. PC+ dyads also had higher note taking frequencies, but this difference did not reach conventional levels of statistical significance. In addition, almost 10% of the notes in the PC+ condition were taken in response to a cue, and about 6% of the cues triggered other PC actions such as goal viewing. In view of these findings it is probably fair to conclude that cues make a moderate but positive contribution to regulative tool use in general and note taking in particular.

The hypothesis with respect to learning outcomes is partially supported by the results. As expected, both low- and high-achieving PC+ dyads produced structurally better lab reports and gave a more complete account of their inquiry activities than PC− dyads. The fact that most PC+ groups used the report template during the writing process may have contributed to this effect. Positive correlations between lab report scores and regulative activities further indicate that PC+ students who viewed more goals and took more notes had a head start on their lab report work.

Contrary to expectations, PC− dyads created significantly better models than their more supported counterparts. The significant condition × achievement interaction further indicates that this conclusion applies to low-achieving dyads only. Two factors may have contributed to this unexpected outcome. First, given the substantial differences in time spent on regulative tool use, it seems plausible that using a fully specified PC simply takes too much time away from modeling work. This seems especially pertinent to low-achieving dyads who, due to their lower levels of domain proficiency, may require more time to grasp the regulative directions offered by the PC. Second, PC− dyads as a whole viewed helpfiles more often than PC+ dyads, and helpfile viewing was positively associated with model quality (significant at the 0.10 level). As the helpfiles contained domain and model-specific information, they may have been more useful for modeling than the process-oriented regulative support. PC− dyads who, in the absence of regulative support, focused on the helpfiles thus may have had an advantage in their modeling work. These findings also suggest that PC+ students relied on regulative support more than on domain support.

The implied need to choose different scaffolds for different learning outcomes may account for the discrepancy between this study’s findings and those mentioned in the introduction, where student learning gains benefited from regulative support. Although the PC+ did refer students to specific helpfiles within the hints, it apparently did not go far enough in stressing that domain support would enhance their modeling work. This points to a need for scaffolds designed for different purposes to work in tandem with each other. One way to make this possible is to link regulative support more closely to domain supports and activities. Future research might investigate whether such integrated support yields higher use and perceived usefulness than stand-alone regulative support.

Although the present study was not designed to capture students’ views on the usefulness of the PC, the high data variance across all measures indicates that its use was widely divergent. This could mean that students are not equally compliant in using regulative scaffolds, and perhaps that should not be the expectation. After all, students enter a learning environment with a variety of learning skills, prior knowledge, and learning styles. As the analysis related to dyad achievement shows, prior knowledge did affect students’ model quality. Although future research that gathers specific pre-test measures of ability might illuminate this factor more precisely, the high variance in scores could indicate that the use and usefulness of the PC depend on the synergy between students’ existing regulative abilities and knowledge. Higher-achieving dyads may use the tool less, and find it less useful, because the regulative directions conflict with their own regulative strategies; lower-achieving dyads may be overwhelmed by the comprehensive support the PC supplied. In the future, adaptive and non-intrusive support structures, although difficult to create, seem called for. Suggestions for how to achieve these two qualities are further elaborated below.

Non-intrusive support for regulation could imply that students perform regulative activities as a “natural” course of conducting their inquiry. The format of a support feature and its intended outcome might thus play a role in its perceived usefulness. Monitoring through note taking is a good example of how this may be achieved. Note taking in this study was situated away from the simulation and model editor. Provision of an annotation function for simulation output, or the ability to add comments to models, might be a more natural, less obtrusive way to monitor task performance and comprehension. The non-intrusiveness of note taking could be further enhanced by adapting its representation to the representation used in the inquiry activity. The system dynamics models students had to create rely almost exclusively on a graphical representation of information. The PC+ supports for modeling, however, were text-based. The negative correlation between note taking activity and model quality scores suggests that text-based note formats could be inconsistent with or unfruitful for graphical modeling work. Evidence supporting this notion can be gleaned from the work of Gijlers and De Jong (2005b), who found that supplying students with a concept map tool significantly enhanced their understanding of structures and interrelations in the domain. The work of Larkin and Simon (1987) and Van der Meij and De Jong (2006) points to the idea that different types of representations are useful for different activities pertaining to “…their representational and computational efficiency” (p. 200). The processing and use of different representations is also accompanied by an assumption of limited capacity, meaning that the amount of representational processing that can take place within information processing channels for visual or verbal information is extremely limited (Mayer 2003). Thus note taking that is closer to the graphical nature of modeling may be more beneficial than plain text-based note taking. Future research should look at adapting note representations to task characteristics, especially given the rather innovative learning outcomes often found in scientific inquiry learning environments.

The issue of intrusiveness certainly applies to the use of cues to stimulate students to take notes. In this study the timing of cues was based on the prior work of Manlove and Lazonder (2004), who found that natural monitoring points occur at virtual room changes within the environment (which mark the end of an activity), but also that students often spend long periods of time in one room. The 10-min cue interval was chosen on the consideration that, without frequent room changes or note taking (the cue timer was reset whenever students took a note), students would receive on average five cues per session. Despite these efforts to minimize intrusion, the relatively low cue responses in this study suggest that students may still have seen the cues as interruptive. Future research should investigate the appropriate timing and placement of cues and their effect on note taking to see when and where they help rather than hinder work from a student’s point of view.

However, the use of cues and regulative supports in general may have been influenced by the fact that students performed the inquiry task in dyads. Collaboration was used because it is a common form of learning during hands-on science activities in secondary schools. Although not an object of study in this research (students in both conditions worked collaboratively), collaboration may have had an effect on students’ regulatory or help-seeking behavior. A study by Manlove et al. (2006) revealed no differences in the amount of regulative talk between PC+ and PC− groups, but in a broader sense, the presence of a peer may have lowered the frequency of tool use in both conditions. For example, students may not always consult the PC or helpfiles if they can just ask their partner for help. The magnitude of this effect could be examined by comparing regulative tool use during collaborative and individual inquiry learning.

Overall, the results of this study have implications for practice. The educational benefits of regulative scaffolding depend on factors such as the amount of domain and process support and their perceived match to learning activities, outcomes, and context. Teachers who wish to use technology-enhanced inquiry learning environments should assist students in the appropriate selection of supports for task activities. They may, for instance, point out that helpfiles might be more beneficial for modeling activities but that responding to prompts within notes will assist them in report writing. Educational designers also need to be aware of this need to “regulate regulative tool use.” Designs that situate regulative support close to task activities and make apparent how their use benefits learning outcomes may be used more frequently and be seen as more beneficial by students. In this way regulation and its role within inquiry learning environments might strike a balance between being salient and being implicit when facilitating domain and process knowledge construction in science learning.

References

Azevedo, R., Guthrie, J. T., & Seibert, D. (2004). The role of self-regulated learning in fostering students’ conceptual understanding of complex systems with hypermedia. Journal of Educational Computing Research, 30(1/2), 87–111.

Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65, 245–281.

Chi, M. T. H., De Leeuw, N., Chiu, M. H., & LaVancher, C. (1994). Eliciting self-explanations improves understanding. Cognitive Science, 18, 439–477.

Chin, C., & Brown, D. E. (2000). Learning in science: A comparison of deep and surface approaches. Journal of Research in Science Teaching, 37, 109–138.

Chinn, C. A., & Brewer, W. F. (1993). The role of anomalous data in knowledge acquisition: A theoretical framework and implications for science instruction. Review of Educational Research, 63, 1–49.

Davis, E. A., & Linn, M. C. (2000). Scaffolding students’ knowledge integration: Prompts for reflection in KIE. International Journal of Science Education, 22, 819–837.

De Jong, T. (2006). Computer simulations: Technological advances in inquiry learning. Science, 312(5773), 532–533.

De Jong, T., & Van Joolingen, W. R. (1998). Scientific discovery learning with computer simulations of conceptual domains. Review of Educational Research, 68, 179–201.

Ertmer, P. A., & Newby, T. J. (1996). The expert learner: Strategic, self-regulated, and reflective. Instructional Science, 24, 1–24.

Gijlers, A. H., & De Jong, T. (2005a). The relation between prior knowledge and students’ collaborative discovery learning processes. Journal of Research in Science Teaching, 42, 264–282.

Gijlers, H., & De Jong, T. (2005b). Confronting ideas in collaborative scientific discovery learning. Paper presented at the AERA 2005, Montreal, CA.

Jackson, S. L., Stratford, S. J., Krajcik, J., & Soloway, E. (1996). Making dynamic modeling accessible to pre-college science students. Interactive Learning Environments, 4, 233–257.

Kapa, E. (2001). A metacognitive support during the process of problem solving in a computerized environment. Educational Studies in Mathematics, 47, 317–336.

Klahr, D., Dunbar, K., & Fay, A. L. (1990). Designing good experiments to test bad hypotheses. In J. Shrager, & P. Langley (Eds.) Computational models of discovery and theory formation (pp. 355–402). San Mateo, CA: Morgan Kaufmann.

Kuhn, D., Black, J., Keselman, A., & Kaplan, D. (2000). The development of cognitive skills to support inquiry learning. Cognition and Instruction, 18(4), 495–523.

Lan, W. (2005). Self-monitoring and its relationship with educational level and task importance. Educational Psychology, 25, 109–127.

Land, S. M. (2000). Cognitive requirements for learning with open-ended learning environments. Educational Technology Research and Development, 48, 61–78.

Larkin, J. H., & Simon, H. A. (1987). Why a diagram is (sometimes) worth ten thousand words. Cognitive Science, 11, 65–99.

Lavoie, D. R., & Good, R. (1988). The nature and use of prediction skills in a biological computer-simulation. Journal of Research in Science Teaching, 25, 335–360.

Lin, X. D., & Lehman, J. D. (1999). Supporting learning of variable control in a computer-based biology environment: Effects of prompting college students to reflect on their own thinking. Journal of Research in Science Teaching, 36, 837–858.

Manlove, S., & Lazonder, A. (2004). Self-regulation and collaboration in a discovery learning environment. Paper presented at the First Meeting of the EARLI-SIG on Metacognition, Amsterdam, The Netherlands.

Manlove, S., Lazonder, A. W., & De Jong, T. (2006). Regulative support for collaborative scientific inquiry learning. Journal of Computer Assisted Learning, 22, 87–98.

Mayer, R. E. (2003). The promise of multimedia learning: Using the same instructional design methods across different media. Learning and Instruction, 13, 125–139.

Njoo, M., & De Jong, T. (1993). Exploratory learning with a computer simulation for control theory: Learning processes and instructional support. Journal of Research in Science Teaching, 30, 821–844.

Pintrich, P. R. (2000). The role of goal orientation in self-regulated learning. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.) Handbook of self-regulation (pp. 452–502). San Diego, CA: Academic.

Quintana, C., Reiser, B. J., Davis, E. A., Krajcik, J., Fretz, E., Duncan, R. G., et al. (2004). A scaffolding design framework for software to support science inquiry. Journal of the Learning Sciences, 13, 337–386.

Schauble, L., Klopfer, L. E., & Raghavan, K. (1991). Students’ transitions from an engineering model to a science model of experimentation. Journal of Research in Science Teaching, 28, 859–882.

Schoenfeld, A. H. (1992). Learning to think mathematically: Problem solving, metacognition, and sense-making in mathematics. In D. A. Grouws (Ed.) Handbook of research on mathematics teaching and learning (pp. 334–370). New York: Macmillan.

Schraw, G. (1998). Promoting general metacognitive awareness. Instructional Science, 26, 113–125.

Swaak, J., Van Joolingen, W. R., & De Jong, T. (1998). Supporting simulation-based learning; the effects of model progression and assignments on definitional and intuitive knowledge. Learning and Instruction, 8, 235–253.

Van der Meij, J., & De Jong, T. (2006). Supporting students’ learning with multiple representation in a dynamic simulation-based learning environment. Learning and Instruction, 16, 199–212.

Van Joolingen, W. R., De Jong, T., Lazonder, A. W., Savelsbergh, E. R., & Manlove, S. (2005). Co-Lab: Research and development of an online learning environment for collaborative scientific discovery learning. Computers in Human Behavior, 21, 671–688.

Veenman, M. V. J., Elshout, J. J., & Busato, V. V. (1994). Metacognitive mediation in learning with computer-based simulations. Computers in Human Behavior, 10, 93–106.

Von Wright, J. (1992). Reflections on reflection. Learning and Instruction, 2, 59–68.

Webb, N. M. (1991). Task-related verbal interaction and mathematics learning in small groups. Journal for Research in Mathematics Education, 22, 366–389.

Webb, N. M., Nemer, K. M., & Zuniga, S. (2002). Short circuits or superconductors? Effects of group composition on high-achieving students’ science performance. American Educational Research Journal, 39, 943–989.

White, B. Y., & Frederiksen, J. R. (1998). Inquiry, modeling, and metacognition: Making science accessible to all students. Cognition and Instruction, 16, 3–118.

White, B. Y., Shimoda, T. A., & Frederiksen, J. R. (1999). Enabling students to construct theories of collaborative inquiry and reflective learning: Computer support for metacognitive development. International Journal of Artificial Intelligence in Education, 10, 151–182.

Williams, S. M., Bareiss, R., & Reiser, B. J. (1996). Ask Jasper: A multimedia publishing and performance supported environment for design. Paper presented at the American Educational Research Association, New York, USA.

Winne, P. H. (2001). Self-regulated learning viewed from models of information processing. In B. J. Zimmerman, & D. H. Schunk (Eds.) Self-regulated learning and academic achievement: Theoretical perspectives (pp. 153–189). Mahwah: Lawrence Erlbaum.

Zhang, J. W., Chen, Q., Sun, Y. Q., & Reid, D. J. (2004). Triple scheme of learning support design for scientific discovery learning based on computer simulation: Experimental research. Journal of Computer Assisted Learning, 20, 269–282.

Zimmerman, B. J. (2000). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.) Handbook of self-regulation (pp. 13–35). San Diego, CA: Academic.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Manlove, S., Lazonder, A.W. & de Jong, T. Software scaffolds to promote regulation during scientific inquiry learning. Metacognition Learning 2, 141–155 (2007). https://doi.org/10.1007/s11409-007-9012-y
