Abstract

The Ising model is one of the most widely analyzed graphical models in network psychometrics. However, popular approaches to parameter estimation and structure selection for the Ising model cannot naturally express uncertainty about the estimated parameters or selected structures. To address this issue, this paper offers an objective Bayesian approach to parameter estimation and structure selection for the Ising model. Our methods build on a continuous spike-and-slab approach. We show that our methods consistently select the correct structure and provide a new objective method to set the spike-and-slab hyperparameters. To circumvent the exploration of the complete structure space, which is too large in practical situations, we propose a novel approach that first screens for promising edges and then only explore the space instantiated by these edges. We apply our proposed methods to estimate the network of depression and alcohol use disorder symptoms from symptom scores of over 26,000 subjects.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Undirected graphical models, also known as Markov random fields (MRFs; Kindermann & Snell, 1980), have become an indispensable tool to describe the complex interplay of variables in many fields of science. The Ising model (Ising , 1925), or quadratic exponential model (Cox, 1972), is one MRF that attracted the interest of psychologists. It is defined by the following probability distribution over the configurations of a p-dimensional vector \(\mathbf {x}\), with \(\mathbf {x} \in \{0\text {, }1\}^p\),

which covers all main effects \(\mu _i\) and pairwise associations \(\sigma _{ij}\) of the p binary variables. The pairwise associations encode the conditional dependence and independence relations between variables in the model: If an association is equal to zero, the two variables are independent given the rest of the variables, and there is no direct relation between them. Otherwise, the two variables are directly related. These relations can be visualized as edges in a network, where the model’s variables populate the network’s nodes. This view of the Ising model in psychological applications inspired the field of network psychometrics (Epskamp, Maris, Waldorp, & Borsboom, 2018; Marsman et al., 2015), which now spans research in, among others, personality (Constantini et al., 2012; Cramer et al., 2019), psychopathology (Borsboom & Cramer, 2013; Cramer et al.,2016), attitudes (Dalege et al., 2019; Dalege, Borsboom, van Harreveld, & van der Maas, 2016), educational measurement (Marsman, Maris, Bechger, & Glas, 2015; Marsman, Tanis, Bechger, & Waldorp, 2019), and intelligence (Savi, Marsman, van der Maas, & Maris, 2019; van der Maas, Kan, Marsman, & Stevenson, 2017).

The primary objective in empirical applications of the Ising model is determining the network’s structure or topology. Three practical challenges complicate this objective. The first practical challenge is the normalizing constant \(\text {Z}(\varvec{\mu }\text {, }\varvec{\Sigma })\) in Eq. (1), which is a sum over all \(2^p\) possible configurations of the binary vector \(\mathbf {x}\). Even for small graphs, this normalizing constant can be expensive to compute. For example, for a network of 20 variables, the normalizing constant consists of more than one million terms. Given that the normalizing constant is repeatedly evaluated in numerical optimization or simulation approaches to estimate the model’s parameters, the direct computation of the likelihood is computationally intractable. The second practical challenge in determining the Ising model’s structure is the balance between model complexity and data. With p main effects and \({p\atopwithdelims ()2}\) pairwise interactions, the number of free parameters can quickly overwhelm the limited information in available data. The third practical challenge is the efficient selection of a structure with desirable statistical properties from the vast space of possible structures. For a network of 20 variables, the structure space comprises \(2^{190} = 1.57 \times 10^{57}\) potential structures, which is simply too large to enumerate in practice.

In psychology, eLasso (van Borkulo et al., 2014) is the structure selection solution for the Ising model and overcomes all three challenges. First, it adopts a pseudolikelihood approach to circumvent the normalizing constant. The pseudolikelihood replaces the joint distribution of the vector variable \(\mathbf {x}\)—i.e., the full Ising model in Eq. (1)—with its respective full-conditional distributions:

where \(\varvec{\sigma }_i^{(i)} = (\sigma _{i1}\text {, }\dots \text {, }\sigma _{i(i-1)}\text {, }\sigma _{i(i+1)}\text {, }\dots \sigma _{ip})^\mathsf{T}\). Observe that the pseudolikelihood is equivalent to Eq. (1) except that it replaces the intractable normalizing constant with a tractable one. Second, eLasso balances structure complexity with the information available from the data at hand using the Lasso (Tibshirani, 1996): An \(l_1\)-penalty is stipulated on the pseudolikelihood parameters (i.e., minimize \(-\ln p^*(x_i \mid \mu _i\text {, }\varvec{\sigma }_i^{(i)})\) subject to the constraint \(\sum _{j \ne i} |\sigma _{ij}| \le \rho \)) to effectively shrink negligible effects to precisely zero. Ravikumar, Wainwright, and Lafferty (2010) showed that the pseudolikelihood in combination with Lasso can consistently uncover the true topology (see also Meinshausen & Bühlmann, 2006). Third, eLasso selects the structure that optimizes the parameters subject to the \(l_1\) constraint, which is specified up to its tuning parameter \(\rho \). It performs the optimization for a range of values for the tuning parameter and then selects the value that minimizes an extended Bayesian information criterion (Barber & Drton, 2015; Chen & Chen, 2008). Thus, structure selection with eLasso is analogous to selecting the tuning parameter. This combination of methods allows eLasso to efficiently perform structure selection for the Ising model, which is why it has become widely popular in psychometric practice.

We, however, have two concerns with frequentist regularization methods for estimating the Ising model, such as those used by eLasso. Our first concern is that traditional, frequentist approaches cannot express the uncertainty associated with a selected structure, and thus do not inform us about other structures that might be plausible for the data at hand. A structure’s plausibility is disclosed in its posterior probability. To compute posterior probabilities, we have to entertain multiple structures and take their prior plausibility into account. But eLasso searches for a single optimal structure instead. Our second concern is that eLasso does not articulate the precision of the parameters it estimates. Standard expressions for parameter uncertainty are unavailable for Lasso estimation (Tibshirani, 1996), since the limiting distribution of the Lasso estimator is non-Gaussian with a point mass at zero (e.g., Knight & Fu, 2000; Pötscher & Leeb, 2009). Basic solutions such as the bootstrap, although frequently used (see, for instance, Epskamp, Borsboom, & Fried,2018; Tibshirani, 1996), can therefore not be used to obtain confidence intervals or standard errors (e.g., Bühlmann, Kalisch, and Meier, 2014, Section 3.1; Pötscher and Leeb, 2009, Williams, 2021). Bayesian formulations of the Lasso offer a more natural framework for uncertainty quantification (Kyung, Gill, Ghosh, & Casella, 2010; Park & Casella, 2008; van Erp, Oberski, & Mulder, 2019), but approximate confidence intervals/standard errors could also be obtained by desparsifying the Lasso (Bühlmann et al., 2014; van de Geer, Bülmann, Ritov, & Dezeure, 2014).

In light of these concerns, our goals are threefold. Our primary goal is to introduce a new Bayesian approach for learning the topology of Ising models. Bayesian approaches to model selection often introduce binary indicators \(\gamma \) for the selection of variables in the model (e.g., George & McCulloch, 1993; O‘Hara & Sillanpää, 2009). We will use these indicators here to model edge selection: If the indicator \(\gamma _{ij}\) equals one, the edge between variables i and j is included. Otherwise, the edge is excluded. A structure s is then a specific configuration of a vector of \({p\atopwithdelims ()2}\) indicator variables \(\varvec{\gamma }_s\), and the collection of network structures is equal to

We wish to estimate the posterior structure probabilities \(p(\varvec{\gamma } \mid \mathbf {x})\), since they convey all the information that is available on the structures \(\varvec{\gamma } \in \mathcal {S}\) and can be used to express the plausibility of a particular structure or the inclusion of a specific edge for the data at hand. To unlock these Bayesian benefits (see Marsman & Wagenmakers, 2017; Wagenmakers, Marsman, et al., 2018, for detailed examples), we have to connect the indicator variables to the selection problem at hand.

Our secondary goal is to formulate a continuous spike-and-slab approach, initially proposed by George and McCulloch (1993) for covariate selection in regression models, for edge selection in Ising networks. In this approach, the binary indicators are used to hierarchically model the prior distributions of focal parameters by assigning zero-centered diffuse priors to effects that should be included and priors that are sharply peaked about zero to negligible effects. These continuous spike-and-slab components are usually Gaussian (e.g., George & McCulloch, 1993; Ročková & George, 2014) or Laplace distributions (e.g., Ročková, 2018; Ročková & George, 2018). Even though the Laplace distribution generates a Bayesian Lasso (Park & Casella, 2008), its drawback is that its posterior distribution is difficult to approximate using computational tools other than simulation. We therefore adopt Gaussian spike-and-slab components in our edge selection approach.

Our tertiary goal is to analyze the full or joint pseudolikelihood in Eq. (2) instead of analyzing the full-conditionals in isolation. Analyzing the full-conditionals in isolation is common practice since it is fast. However, it leads to two potentially divergent parameter estimates for the associations and does not provide a coherent procedure for quantifying parameter uncertainty. By analyzing the joint pseudolikelihood, we can formulate a single prior distribution for the focal parameters to obtain a single posterior distribution that we can analyze in a meaningful way. The disadvantage of using the joint pseudolikelihood is its increased computational expense for some numerical procedures and the inability to analyze the full-conditionals in parallel. However, this increase in computational expense is negligible for the network sizes typically encountered in psychological applications.

The continuous spike-and-slab approach to select a network’s topology poses three critical challenges that we address in this paper. The first challenge that we address is the consistency of the structure selection procedure. In a recent analysis of covariate selection in linear regression, Narisetty and He (2014) showed that the continuous spike-and-slab approach is inconsistent if the hyperparameters are not correctly scaled. We extend this observation to the current structure selection problemFootnote 1 and prove that a correct scaling of the hyperparameters leads to a consistent structure selection approach in an embedding with p fixed, n increasing. The second challenge that we address is the specification of tuning parameters. The effectiveness of the continuous spike-and-slab setup crucially depends on their specification. Unfortunately, objective methods to specify these parameters are currently unavailable, and tuning them is difficult and context dependent (e.g., George & McCulloch, 1997; O’Hara & Sillanpää, 2009). To overcome this issue, we develop a new procedure to automatically set the tuning parameters in such a way that we achieve a high specificity. The final challenge that we address is the practical exploration of the structure space \(\mathcal {S}\). Even for relatively small networks, the structure space \(\mathcal {S}\) can be vast, and exploring it poses a significant challenge. Moreover, even the most plausible structures have relatively small posterior probabilities and many similar structures exist (George, 1999). As a result, valuable computational effort is wasted on relatively uninteresting structures and it is difficult to estimate their probabilities with reasonable precision. To overcome this issue, we propose a novel, two-step approach. We first employ a deterministic estimation approach (Ročková & George, 2014), utilizing an expectation-maximization (EM; Dempster, Laird, & Rubin, 1977) variant of the continuous spike-and-slab approach to screen for a subset of promising edges. We then use a stochastic estimation approach (George & McCulloch, 1993), utilizing a Gibbs sampling (Geman & Geman, 1984) variant to explore the structure space instantiated by these promising edges. In sum, we propose a coherent Bayesian methodology for structure selection for the Ising model. The freely available R package rbinnet implements the proposed methods.Footnote 2

The remainder of this paper is organized as follows. After this introduction, we first specify our Bayesian model, i.e., we discuss the pseudolikelihood and prior setup. Then, we analyze the consistency of our spike-and-slab approach for structure selection and show that it is consistent if suitably scaled. We wrap up the blueprint of our Bayesian model with the objective specification of hyperparameters for our spike-and-slab setup. We then present an EM and a Gibbs implementation of our Bayesian structure selection setup used for edge screening and structure selection, respectively. In our suite of Bayesian tools, edge screening most closely resembles eLasso, and we will compare the performance of these two methods in a series of simulations. Finally, we present a full analysis of data on alcohol abuse and major depressive disorders from the National Survey on Drug Use and Health. As far as we know, these two disorders have not been analyzed on a symptom level together in a network approach.

1 Bayesian Model Specification

The setup of any Bayesian model comprises two parts: The likelihood of the model’s parameters and their prior distributions. We start with the likelihood dictated by the Ising model, and the pseudolikelihood approach that we adopt to circumvent the computational intractability of the full Ising model. We follow-up with the specification of prior distributions for the Ising model’s parameters, tying George and McCulloch’s (1993) continuous spike-and-slab prior setup for edge selection.

1.1 The Ising Model Pseudolikelihood

In this paper, we will adopt the pseudolikelihood approach of Besag (1975), as presented in Eq. (2). We will furthermore assume that the observations are independent and identically distributed, such that the full pseudolikelihood becomes

where \(\mathbf {X} = (\mathbf {x}_1^\mathsf{T}\text {, }\dots \text {, }\mathbf {x}^\mathsf{T}_n)^\mathsf{T}\), and we have adopted v to index the n independent and identically distributed observations. Both maximum pseudolikelihood and Bayesian pseudoposterior estimates are consistent as n increases (e.g., Arnold & Strauss, 1991; Geys, Molenberghs, & Ryan, 2007; Miller, 2019) and can consistently uncover the unknown graph structure of the full Ising model (Barber & Drton, 2015; Csiszár & Talata, 2006; Meinshausen & Bühlmann, 2006; Ravikumar et al., 2010). As a result, the pseudolikelihood has become an indispensable tool in the structure selection of Ising models.

1.2 The Continuous Spike-and-Slab Prior Setup and Its Relation to Other Approaches

There are several ways to bring the indicator variables into our Bayesian model (e.g., Dellaportas, Forster, & Ntzoufras, 2002; George & McCulloch, 1993; Kuo & Mallick, 1998). O’Hara and Sillanpää 2009 and Consonni, Fouskakis, Liseo, and Ntzoufras Consonni et al. (2018) provide two recent overviews. One interesting approach was recently proposed by Pensar, Nyman, Niiranen, and Corander Pensar et al. (2017), which essentially uses the indicator variables to draw a Markov blanket in the full-conditional distributions of the Ising model and then, construct a marginal pseudolikelihood to select the network’s structure. A key aspect of their approach is that they formulated a Bayesian model on the individual pseudolikelihoods rather than the model’s parameters, and, using a few simplifying assumptions, they were able to derive analytic expressions for the marginal pseudolikelihoods. Unfortunately, this also required treating the pairwise associations as nuisance parameters. As a result, inference on the model’s parameters remains out of reach, and, in addition, it is unclear how the priors on the pseudolikelihoods translate to the model’s parameters. We will take a different route, but a numerical comparison between our approach and that of Pensar et al. Pensar et al. (2017)—implemented in the R package |BDgraph| (R. Mohammadi & Wit, 2019)—can be found in the online appendix.

In this paper, we adopt the continuous spike and slab approach, which comprises two parts. First, a mixture of two zero-centered normal distributions is imposed on the focal parameters. Here, the focal parameters are the pairwise associations \(\sigma _{ij}\). The indicator variables are then used to distinguish between the two mixture components, and thus, the prior distribution on the focal parameters becomes

where \(\mathcal {N}(0\text {, }\nu )\) denotes the normal distribution with a mean equal to zero and a variance equal to \(\nu \). A small but positive variance \(\nu _0 > 0\) is assigned to the component that is associated with \(\gamma _{ij} = 0\) to encourage the exclusion of negligible nonzero values, and a large variance \(\nu _1>> \nu _0\) is assigned to the component associated with \(\gamma _{ij}=1\) to accommodate all plausible values of the interaction. The continuous spike-and-slab approach is a computationally convenient alternative to the discontinuous spike-and-slab approach that is common in model selection.

In the discontinuous spike-and-slab approach, the continuous spike distribution is replaced with a Dirac delta measure at zero. In other words, the association is set to zero for structures in which the relation is absent. The discontinuous spike-and-slab setup is popular in structure selection for Gaussian graphical models (GGMs) (GGMs; e.g., Carvalho & Scott, 2009; A. Mohammadi & Wit, 2015) and generalizations such as the copula GGM for binary and categorical variables (e.g., Dobra & Lenkoski, 2011) and the multivariate probit model for binary variables (e.g., Talhouk, Doucet, & Murphy, 2012)—variants of which are also implemented in the R packages |BDgraph| (R. Mohammadi & Wit, 2019) and |BGGM| (Williams & Mulder, 2020b). For the GGM and its generalizations, the slab priors are assigned to the inverse-covariance or precision matrix (i.e., the matrix of partial correlations) and thus, often use Wishart-type priors rather than the normal distribution that we propose for the Ising model’s associations.

The upside of using discontinuous over continuous spike-and-slab priors is that one only needs to consider the slab prior specification and that structure selection consistency is more easily attained. The downside, however, is that for models such as the Ising model, we run into severe computational challenges. The EM and Gibbs solutions that we advocate in this paper would not work for the Ising model if we would use the discontinuous spike-and-slab setup. The primary reason for this is that one cannot analytically integrate out the focal parameters for updating the edge indicators. Pensar et al. (2017) were able to derive their analytic solutions by stipulating the Ising model’s pseudolikelihood as the focal parameter and assuming orthogonality between different full-conditions. The continuous spike-and-slab approach proposed in this paper does not require an analytic integration of effects from the likelihood and is thus opportune to use in combination with the Ising model. Wang (2015) also applied it to edge-selection for the GGM, which is implemented in the R package |ssgraph| (R. Mohammadi, 2020).

The second part of our spike-and-slab approach is the specification of a prior distribution on the selection variables. Here, the selection variables are a priori modeled as i.i.d. Bernoulli\((\theta )\) variables, which implies the following prior distribution on the structures \(\varvec{\gamma }_s\),

where \(\gamma _{s++} = \sum _{i=1}^{p-1}\sum _{j=i+1}^p\gamma _{sij}\), with \(\gamma _{ij} = \gamma _{ji}\). Once the hyperparameters \(\nu _0\), \(\nu _1\) and \(\theta \) are set, and the nuisance parameters are assigned a prior distribution, the posterior structure probabilities can then be estimated using, for example, a Gibbs sampler (Geman & Geman, 1984; George & McCulloch, 1993). We stipulate independent standard-normal prior distributions on the nuisance parameters \(\varvec{\mu }\) and make the objective specification of hyperparameters the topic of the ensuing sections.

2 Structure Selection Consistency

In this section, we analyze posterior selection consistency, the ability of our method to determine the correct network structure consistently. As alluded to in the introduction, selection consistency using George and McCulloch’s spike and slab approach crucially depends on the hyperparameters \(\nu _0\) and \(\nu _1\). Unfortunately, fixing these parameters does not guarantee that our structure selection procedure is consistent. Narisetty and He (2014) showed that the use of fixed constants may lead to an inconsistent selection procedure in the context of linear regression. Below, we will demonstrate that this is also the case in the context of structure selection for Ising models. However, we will also show that our selection approach is consistent if the spike variance \(\nu _0\) shrinks as a function of n.Footnote 3 Narisetty and He presented a similar result for linear regression.

We first work out the concepts relevant for selection consistency, such as the posterior structure probability, and derive an approximate Bayes factor that is useful for the large-sample analysis. Then, we analyze the case with fixed hyperparameters and show that the selection procedure is inconsistent for fixed p, increasing n. Finally, we analyze the situation where the spike variance shrinks with n and show that this shrinking hyperparameter setup leads to a consistent selection procedure for fixed p, increasing n.

2.1 Selection Consistency

We assume that the true structure t is in the set \(\mathcal {S}\).Footnote 4 We quantify our uncertainty in selecting a structure s, \(s\in \mathcal {S}\), using the posterior structure probability

where \(p^*(\mathbf {X} \mid \varvec{\gamma }_s)\) denotes the integrated pseudolikelihood for the structure s, \(\text {BF}^*_{st}\) the Bayes factor pitting structure s against the correct structure t, and \(\text {o}_{st}\) denotes the prior model odds of the two structures. Selection consistency requires us to show that the posterior structure probabilities \(p(\varvec{\gamma }_s \mid \mathbf {X})\) tend to zero for structures \(s \ne t\), and that \(p(\varvec{\gamma }_t \mid \mathbf {X})\) tends to one as the sample size grows. This is equivalent to showing that the Bayes factors \(\text {BF}_{st}\) tend to zero for structures \(s \ne t\). Unfortunately, analytic expressions for the Bayes factors are currently unavailable. To come to a workable expression for the Bayes factor, we first redefine it in terms of the expected prior odds under the correct posterior distribution

which is the posterior expectation of the ratio of the prior distributions of \(\varvec{\Sigma }\) for the two models, s and t, under the correct structure specification \(\varvec{\gamma }_t\). This is a convenient representation, as we only have to consider the pseudoposterior distribution under the correct network structure. Observe that this representation also holds when the full Ising likelihood is used, except that in the latter case the Bayes factor \(\text {BF}_{st}\) is expressed as the expected prior odds w.r.t the posterior distribution and not the pseudoposterior distribution. For a fixed network of p variables, the posterior distribution can be accurately approximated with a normal distribution as n becomes large (see, for instance, Miller, 2019, Theorem 6.2), and the same holds for the pseudoposterior distribution (see, for instance, Miller, 2019, Theorems 3.2 and 7.3). To come to a workable expression of the Bayes factor we approximate the pseudoposterior with a normal distribution (i.e., a Laplace approximation), which leads to the following first-order approximation of the Bayes factor (Tierney, Kass, & Kadane, 1989, Eq. 2.6),

where \(\widehat{\varvec{\Sigma }} = [\hat{\sigma }_{ij}]\) is the mode of \(p^*(\varvec{\Sigma }\text {, }\varvec{\mu }\mid \mathbf {X}\text {, }\varvec{\gamma }_t)\), or \(p(\varvec{\Sigma }\text {, }\varvec{\mu }\mid \mathbf {X}\text {, }\varvec{\gamma }_t)\) if the full Ising likelihood is used. Tierney et al. (1989) show that the error of the first-order approximation—the rest term \(\mathcal {O}(n^{-1})\)—is of order 1/n. Since the pseudoposterior is consistent (c.f., Miller, 2019, Theorem 7.3), the Bayes factor using the pseudolikelihood and the full likelihood will converge to the same number.

We will show next that the approximate Bayes factors \(\text {BF}^*_{st}\), for \(s \ne t\), do not shrink to zero with the three hyperparameters fixed, but do shrink to zero if \(\nu _0\) shrinks to zero at a rate \(n^{-1}\). The approximate Bayes factor comprises a product of the edge specific functions

which consists of two parts: The selection variables \(\gamma _{s\text {, }ij}\) and \(\gamma _{t\text {, }ij}\) that inform about the differences in edge composition of structures s and t, and the function \(\sqrt{\nu _0/\nu _1}\exp \left( \hat{\sigma }_{ij}(\nu _1-\nu _0)/2\nu _1\nu _0\right) \) that weighs in the contribution of the pseudoposterior. The edge specific function \(f_{ij}\) is equal to one if the edge is present in both structures, or is absent from both structures, since then \(\gamma _{t\text {, }ij} - \gamma _{s\text {, }ij}=0\). We therefore only have to consider what happens to the function \(f_{ij}\) for cases where \(\gamma _{s\text {, }ij} \ne \gamma _{t\text {, }ij}\).

2.1.1 The Fixed Hyperparameter Case

If \(\gamma _{t\text {, }ij}\) is equal to zero, and \(\gamma _{s\text {, }ij}\) is equal to one, the correct value for the interaction parameter \(\sigma _{ij}\) is zero, and we observe that

which, even though it is smaller than one and signals a preference for structure t, does not converge to zero as it should if the structure selection procedure would be consistent. If \(\gamma _{t\text {, }ij}\) is equal to one, and \(\gamma _{s\text {, }ij}\) is equal to zero, on the other hand, such that \(|\sigma _{ij}|>0\), we observe that

which does not converge to zero either. In fact, it may even signal a preference for the absence of the edge in structure s. These two observations indicate that the Bayes factors \(\text {BF}^*_{st}\) do not converge to zero, and thus, the posterior probability \(p(\varvec{\gamma }_t \mid \mathbf {X})\) does not converge to one. In sum, the proposed structure selection procedure is inconsistent in the case that the three hyperparameters are fixed.

2.1.2 The Shrinking Hyperparameter Case

We next consider the case where \(\nu _0\) shrinks at a rate \(n^{-1}\) and define \(\nu _0 = \tfrac{\nu _1 \xi }{n}\). Here, \(\xi \) is a fixed (positive) penalty parameter that allows us some flexibility to emphasize the distinction between the spike and slab components. If, in this case, \(\gamma _{t\text {, }ij}\) is equal to one and \(\gamma _{s\text {, }ij}\) is equal to zero, the function \(f_{ij}\) is equal to

where the first factor tends to infinity, and the second factor tends to zero. Because the second factor tends to zero faster than the first factor tends to infinity, their product, again, tends to zero, as it should. On the other hand, if \(\gamma _{t\text {, }ij}\) is equal to zero, the function \(f_{ij}\) becomes

where the first factor tends to zero, and the second factor tends to one because \(\sqrt{n}\hat{\sigma }_{ij}\) tends to zero (\(\hat{\sigma } =\mathcal {O}_p(1/\sqrt{n})\)). Therefore, \(f_{ij}\) tends to zero, as it should. In sum, the structure selection procedure is consistent if \(\nu _0\) shrinks at a rate of \(n^{-1}\).

3 Objective Prior Specification

We follow the results in the previous section, and set the spike variance to \(\nu _0 = \tfrac{\nu _1\xi }{n}\), which leaves the specification of the slab variance \(\nu _1\), the penalty parameter \(\xi \), and the prior inclusion probability to complete our Bayesian model blueprint. We first discuss a default setting for the spike and slab variances, i.e., the specification of \(\nu _1\) and \(\xi \). We then discuss two options for the prior inclusion probabilities that we adopt in this paper.

3.1 Specification of the Spike and Slab Variances

One approach to find default values for the slab variance is to set it equal to n times the inverse of the Fisher information matrix \(\mathcal {I}_{\Sigma }(\hat{\varvec{\Sigma }}\text {, }\hat{\varvec{\mu }})^{-1}\), which approximately gives the information about \(\sigma _{ij}\) in a single observation, hence the name unit information (Kass & Wasserman, 1995). Kass and Wasserman 1995 showed that the logarithm of the Bayes factor—pitting one network structure against another—is approximately equal to the difference in Bayesian information criteria (BIC; Schwarz, 1978) of the two structures when we use unit information priors (see also, Raftery, 1999; Wagenmakers, 2007, for details). This result, combined with the fact that unit information priors can be automatically selected, makes them a popular approach in Bayesian variable selection. We follow the approach of Ntzoufras (2009), who achieved good results by setting the off-diagonal elements of \(\mathcal {I}^{-1}\) to zero in the prior specification. This renders the spike-and-slab prior densities independent, and sets the slab variance to \(\nu _{1\text {, }ij} = n \text {Var}(\hat{\sigma }_{ij})\).Footnote 5 If we set the slab variance equal to the unit information, the spike variance is equal to \(\nu _{0\text {, }ij}= \xi \, \text {Var}(\hat{\sigma }_{ij})\). Our structure selection procedure will still consistently select the correct structure, since \(\nu _{0\text {, }ij}\) shrinks with rate n because \(\text {Var}(\hat{\sigma }_{ij})\) does (e.g., Miller, 2019, Section 5.2).

The spike-and-slab parameters are specified up to the constant \(\xi \), which acts as a penalty parameter on the inclusion and exclusion of effects in the spike-and-slab prior. Larger values for \(\xi \) increase the overlap between the spike-and-slab components and consequently, make it more likely that an effect is excluded, i.e., ends up in the spike component. It is the opposite case for smaller values. It is thus absolutely crucial to find a good value for this penalty. We wish to specify the tuning parameter \(\xi \) such that the performance of our edge selection approach is similar to that of eLasso. To that aim, we introduce an automated procedure to specify the tuning parameter such that the corresponding continuous spike-and-slab setup is geared towards achieving a high specificity, or low type-1 error, similar to eLasso. The idea that we pursue here is to set the intersection of the spike-and-slab components equal to an approximate credible interval about zero. The left panel in Fig. 1 illustrates the idea.

George and McCulloch (1993) show that the two densities intersect at

If we fill in our definitions for the spike and slab variances, the expression for \(|\delta |\) boils down to

Where George and McCulloch (1993) discuss the subjective specification of \(\delta \), we explore its automatic specification by matching it to the approximate credible interval. We first determine the range of parameter values \((-|\delta |\text {, }|\delta |)\) considered to be insignificant, and then select the value of \(\xi \) such that the spike and slab components intersect at \(\pm |\delta |\). When n is sufficiently large, the pseudoposterior distribution of an association parameter \({\sigma }_{ij}\) is approximately normal (Miller, 2019), and \(\text {Var}(\hat{\sigma }_{ij})\) is its approximate variance. Thus, \((\hat{\sigma }_{ij}\,\pm \,3\sqrt{\text {Var}(\hat{\sigma }_{ij})})\) offers an approximate \(99,7\%\) credible interval about the posterior mean \(\hat{\sigma }_{ij}\). To set the variance of the spike distribution for negligible effects, it is opportune to use the interval \((\pm \,3\sqrt{\text {Var}(\hat{\sigma }_{ij})})\), which offers an approximate credible interval about zero, i.e., the credible interval assuming that the edge i–j should, in fact, be excluded from the model. Equating the expression for \(|\delta |\) on the right side of Eq. (5) with \(3\sqrt{\text {Var}(\hat{\sigma }_{ij})}\) gives:

which we can solve numerically to obtain a value for \(\xi \). Observe that, by specifying \(\delta \) in this particular way, the penalty parameter \(\xi \) depends on the sample size but not the data or network’s size. We denote the value of the penalty parameter that matches the intersection \(\delta \) to the credible interval with \(\xi _\delta \). The relation between \(\xi _\delta \) and sample size is illustrated in the right panel of Fig. 1.

3.2 Specification of the Prior Inclusion Probability

Assuming that the correct structure is in \(\mathcal {S}\), i.e., the \(\mathcal {S}\)-closed view of structure selection, a default choice to express ignorance or indifference between the structures in \(\mathcal {S}\) is to stipulate a uniform prior distribution over the topologies in \(\mathcal {S}\):

where \(|\mathcal {S}|\) denotes the cardinality of the structure space. Here, the uniform prior is equal to

and we can impose this prior on the structure space by fixing the prior inclusion probability \(\theta \) in Eq. (3) to \(\tfrac{1}{2}\). However, the uniform prior on the structure space does not take into account structural features of the models under consideration, such as sparsity. Various priors have been proposed as an alternative to accommodate these features (see Consonni et al., 2018, Section 3.6, for a detailed discussion). One particular issue inherent in structure comparisons is multiplicity, and Scott and Berger (2010) argue that the prior distribution should account for this. Consonni et al. (2018) show that stipulating a hyperprior on the prior inclusion probability \(\theta \) accounts for multiplicity. In particular, they showed that the uniform hyperprior \(\text {Beta}(1\text {, }1)\) leads to the following prior on the structure space

where c denotes the complexity of structures, with \(c \in (0\text {, }1\text {, }\dots \text {, } {p\atopwithdelims ()2})\), i.e., the number of edges in the topology. Thus, instead of a uniform prior on the structure space, the hierarchical prior stipulates a uniform prior on the structure’s complexity. As a result, it favors models that have a relatively extreme level of complexity, e.g., are densely connected or are sparsely connected.

The left panel illustrates how the two prior distributions assign probabilities to structure complexity. The right panel illustrates how the two prior distributions assign probabilities to different structures with the same complexity. The prior probabilities in the right panel are shown on a log scale. For both panels, \(p = 10\).

Figure 2 illustrates the different probabilities that the two distributions assign to structure complexity, a priori, and the probabilities they assign to structures that have the same complexity (shown on a log scale). The left panel of Fig. 2 shows that whereas the hierarchical prior is uniform on the complexity, the uniform prior is not and favors structures that have approximately half of the available edges. However, the right panel of Fig. 2 illustrates that the hierarchical prior emphasizes structures at the extremes of complexity. We will adopt both the uniform prior distribution on the structure space and the uniform prior distribution on the structure’s complexity, and analyze them further in the section on numerical illustrations. Based on Fig. 2, however, we expect that for small samples, the hierarchical prior will place much emphasis on extremely sparse structures, since our penalty selection approach already gears toward sparse solutions.

4 Bayesian Edge Screening and Structure Selection for the Ising model

George and McCulloch (1993) proposed stochastic search variable selection (SSVS) as a principled approach to Bayesian variable selection. SSVS uses the spike-and-slab prior specification to emphasize the posterior probability of promising structures and Gibbs sampling to extract this information from the data at hand. The Gibbs sampler is a powerful tool for the exploration of the posterior distribution of potential network structures. However, since the structure space \(\mathcal {S}\) can be quite large in practical settings, it might take a while to sufficiently explore the posterior distribution and produce reliable estimates of the posterior structure probabilities. We, therefore, wish to prune the structure space by selecting the promising edges before running the Gibbs sampler. We explore an EM variable selection approach for this initial edge screening and then, follow-up with an SSVS approach for structure selection on the set of promising edges.

4.1 Edge Screening with EM Variable Selection

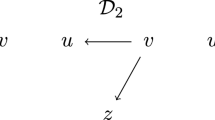

Ročková and George (2014) were the first to propose the use of EM for Bayesian variable selection, in combination with the spike-and-slab prior specification of George and McCulloch (1993), to covariate selection of linear models. The EM algorithm aims to find the posterior mode of the pseudoposterior distribution \(p^*(\varvec{\Sigma }\text {, }\varvec{\mu }\text {, }\theta \mid \mathbf {X})\) and does this by iteratively maximizing the “complete data” pseudoposterior distribution \(p^*(\varvec{\Sigma }\text {, }\varvec{\mu }\text {, }\theta \text {, }\varvec{\gamma }\mid \mathbf {X})\), treating the selection variables \(\varvec{\gamma }\) as missing or latent variables. The algorithm alternates between two steps. In the expectation or E-step, we compute the expected log-pseudoposterior distribution, or Q-function,

with respect to posterior distribution of the latent variables \(p(\varvec{\gamma } \mid \varvec{\Sigma }^k\text {, }\theta ^k)\), where \(\varvec{\Sigma }^k\), and \(\theta ^k\) denote the estimates in iteration k. The E-step is followed by a maximization or M-step in which we find the values \(\varvec{\Sigma }^{k+1}\), \(\varvec{\mu }^{k+1}\) and \(\theta ^{k+1}\) that maximize the Q-function. The two steps are repeated until convergence.

The E-step of the EM algorithm involves expectations of the latent or missing variables, i.e., the vector of selection variables \(\varvec{\gamma }\). Since the latent selection variables only operate in the spike-and-slab prior distributions, the derivation of the E-step will follow the derivation of Ročková and George (2014). For a complete treatment of EMVS, however, we include an analysis of both the E-step and the M-step in “Appendix A”. “Appendix A” also includes details about estimating the (asymptotic) posterior standard deviations from the EM output.

4.1.1 Edge Screening

The EM algorithm that we outlined in the previous section identifies a posterior mode \((\widehat{\varvec{\Sigma }}\text {, }\hat{\varvec{\mu }}\text {, }\hat{\theta })\), and we threshold the modal estimates to obtain a tightly matching network structure \(\hat{\varvec{\gamma }}\). The idea of Ročková and George (2014) that we pursue here is that "large" interaction effect estimates define a set of promising edges, and we can thus prune edges that link to "small" interaction effect estimates. We define the structure \(\hat{\varvec{\gamma }}\) that closely matches the modal estimates \((\widehat{\varvec{\Sigma }}\text {, }\hat{\varvec{\mu }}\text {, }\hat{\theta })\) to be the most probable structure \(\varvec{\gamma }\) given the parameter values \(({\varvec{\Sigma }}\text {, }{\varvec{\mu }}\text {, }{\theta }) = (\widehat{\varvec{\Sigma }}\text {, }\hat{\varvec{\mu }}\text {, }\hat{\theta })\), i.e.,

For our Bayesian model, the posterior inclusion probabilities for the different edges are conditionally independent, and the posterior inclusion probability for an edge i–j is given by

Thus, we obtain \(\hat{\varvec{\gamma }}\) from maximizing the inclusion and exclusion probabilities in Eq. (7), for each of the \({p\atopwithdelims ()2}\) edges, which means that

and we prune the edges for which \(p(\gamma _{ij} = 0 \mid \hat{\sigma }_{ij}\text {, }\hat{\theta }) \ge 0.5\). This edge selection and pruning approach leads to a structure \(\varvec{\gamma }\) that is a median probability model, as defined by Barbieri and Berger (2004) to be the structure comprising edges that have a posterior inclusion probability at or above a half.Footnote 6 Ročková and George (2014) show that instead of selecting the structure \(\hat{\varvec{\gamma }}\) based on the posterior inclusion probabilities, we may equivalently select it through thresholding the values of \(\hat{\sigma }_{ij}\). Specifically,

Such a connection between the magnitude of the modal estimates \(\hat{\sigma }_{ij}\) and promising edges i–j, we envisioned from the beginning. Observe that, since \(n\text {Var}(\hat{\sigma }_{ij})\) is the unit information, i.e., it is a constant, the right-most factor shrinks with n. Moreover, it shrinks much faster than that \(\log (\sqrt{n})\) tends to infinity, such that the threshold moves to smaller values as n increase, as it should.

4.2 Structure Selection with SSVS

The EMVS approach enables us to screen for a promising set of edges by locating a local posterior mode and pruning edges associated with small modal parameters. The structure \(\varvec{\gamma }^\prime \) that comes out of this pruned edge set is a local median probability structure. We now wish to directly explore \(p^*(\varvec{\gamma }\mid \mathbf {X})\), the pseudoposterior distribution of network structures, to find out if \(\varvec{\gamma }^\prime \) is also the global median probability model, and if there are other promising structures for the data at hand. We do this using the stochastic search and variable selection (SSVS) approach of George and McCulloch (1993), which essentially combines the spike-and-slab prior setup with Gibbs sampling to produce a sequence

which converges in distribution to samples from \(\varvec{\gamma } \sim p(\varvec{\gamma } \mid \mathbf {X})\). We then shift our focus to structures \(\varvec{\gamma }_s\) that occur frequently in the generated sequence, which are the structures that have a high posterior probability. We cut down the potentially large number of network structures that the Gibbs sampler needs to explore by applying SSVS only to the edges screened by EMVS.Footnote 7

The Gibbs sampler operates by iteratively simulating values from the conditional distributions of (a subset of) the model parameters given the (other parameters and the) observed data. Unfortunately for us, the full-conditional distributions of our Bayesian model are not available in closed form, as the normal prior distributions that we have specified are not conjugate to the pseudolikelihood. However, since the pseudolikelihood comprises a sequence of logistic regressions, we can use the data-augmentation strategy that was proposed by Polson, Scott, and Windle (2013a) to facilitate a simple Gibbs sampling approach, with full-conditionals that are easy to sample from. A similar approach to the Ising model’s pseudolikelihood was considered by Donner and Opper (2017). Here, we extend this idea to SSVS for the Ising model.

Polson et al. (2013a) proposed an ingenious data augmentation strategy based on the identity

where \(p(\omega )\) is a Pólya–Gamma distribution. A key aspect of this augmentation strategy is that it relates the logistic function of a parameter \(\psi \) on the left to something that is proportional to a normal distribution on the right. Since our prior distributions are all (conditionally) normal, and the normal distribution is its own conjugate, the data-augmented full-conditionals will all be normal. To wit, applied to the pseudolikelihood in Eq. (2), we find

and with normal prior distributions for the pseudolikelihoods parameters we readily find normal full-conditional distributions when we condition on the augmented variables \(\varvec{\omega }\). Another important aspect of the augmentation strategy is that the conditional distribution of the augmented variables \(\omega \) given the pseudolikelihood parameters and the observed data \(\mathbf {X}\) is again a Pólya-Gamma distribution. Polson et al. (2013a) and Windle, Polson, and Scott (2014) provide efficient rejection algorithms to simulate from this distribution.

With the Pólya-Gamma augmentation strategy in place, the Gibbs sampler iterates between five steps, which are detailed in “Appendix C”. The Gibbs output allows us to estimate a number of important quantities. For example, the posterior structure probabilities can be estimated as

where \(I(\cdot )\) is an indicator function that is equal to one if its conditions are satisfied and equal to zero otherwise, and the (global) posterior inclusion probabilities as,

were \(\varvec{\gamma }^{(r)}\), for \(r = 1, \text {, }\dots \text {, }R\), denotes R iterates of the Gibbs sampler. In a similar way, one can compute quantities related to the model-averaged posterior distribution of the model parameters, e.g.,

or any of its marginals. In sum, the Gibbs sampler grants us the full Bayesian experience.

5 Numerical Illustrations

In this section, we will focus on a comparison of our procedures with eLasso. A comparison between our edge screening and structure selection approaches and the approach of Pensar et al. (2017)—as implemented in |BDraph| (R. Mohammadi & Wit, 2019)—can be found in the online appendix. We have also included model selection for the multivariate probit model (e.g., Talhouk et al., 2012)—as implemented in |BGGM| (Williams & Mulder, 2020b)—in that comparison. There were some small variations, but overall the three different approaches performed very similar in terms of edge detection.

5.1 Edge Screening on Simulated Data with Sparse Topologies

The eLasso approach of van Borkulo et al. (2014) is the most popular method for analyzing Ising network models in psychology. We wish to find out how our EMVS approach stacks up against eLasso, and we, therefore, use the simulation setup of (van Borkulo et al. 2014) to compare both methods. Specifically, we focus on the simulations that lead to their Table 2, where an Erdős and Rényi (1960) model is used to generate underlying sparse topologies, and normal distributions are used to simulate the model parameters.Footnote 8 In these simulations, we vary \(\pi \), the probability of generating an edge between two variables, p, the number of variables, and n, the number of observations and generate 100 datasets for each combination of values for \(\pi \), p, and n.

We analyze the simulated datasets using eLasso, using the default settings implemented in the IsingFit program (van Borkulo, Epskamp, & Robitzsch, 2016), i.e., the AND-rule and an EBIC penalty equal to 0.25. We also analyze the simulated datasets using EMVS in combination with the \(\xi _\delta \) method and the uniform and hierarchical specifications of the prior structure probabilities. We follow van Borkulo et al. (2014) and express the quality of the estimated solution using its sensitivity and specificity. Sensitivity is the proportion of present edges that are recovered by the method,

i.e., the true positive rate. Specificity is equal to the proportion of absent edges that are correctly recovered,

i.e., the true negative rate. For eLasso, edge inclusion refers to a nonzero association estimate using the AND approach. For EMVS, it is taken to mean that the posterior inclusion probability exceeds 0.5.

Table 1 shows the result of these simulations for the eLasso method in the column labelled “eLasso” and are similar to the results reported in Table 2 in van Borkulo et al. (2014). The first thing to note about these results is that eLasso has a high true negative rate across all simulations. This was to be expected, as \(l_1\)-regularization gears towards edge exclusions, which is why it performs best in the sparse network settings considered here. Indeed, it’s specificity goes down as the networks become more densely connected (i.e., larger values of \(\pi \)). The true positive rate of eLasso is significantly worse than its specificity, especially for the smaller sample sizes. However, the sensitivity increases with sample size, which underscores earlier results that a larger sample size helps overcome the prior shrinkage effect of the lasso (e.g., Epskamp, Kruis, & Marsman, 2017).

Next, we consider the performance of EMVS. The results for EMVS when the penalty \(\xi \) is set to \(\xi _\delta \), the penalty value for which the intersection of the spike and the slab components aligns with the \(99,7\%\) approximate credible interval, c.f. Eq. (6), are shown in the columns labeled \(\xi _\delta \) in Table 1. We analyzed the data using the uniform prior on the model space—\(\xi _\delta \) (U)—and with the hierarchical model—\(\xi _\delta \) (H). The first striking result is that the performance of the \(\xi _\delta \) approach combined with a uniform prior on the structure space performs almost identical to eLasso, making it a valuable Bayesian alternative to the classical eLasso approach. Observe that the specificity equals the coverage probability of the credible interval for all but the smallest sample size. Thus, as one might expect, the coverage probability specified dictates the method’s type-1 error or specificity. The hierarchical prior on the structure space leads to an improvement to the already high specificity. For the smaller sample sizes, however, the method’s sensitivity is very low, suggesting that it is, perhaps, too conservative for settings with small sample sizes.

5.2 Parameter Estimation on Simulated Data with Dense Topology

We continue with an illustration of the estimation of parameters and inclusion probabilities. For this analysis, we simulate data for \(n = 20,000\) cases on a \(p = 15\) variable network. The main effects were simulated from a Uniform\((-1\text {, }1)\) distribution, and the matrix of associations \(\varvec{\Sigma }\) was set to \(\mathbf {u}\mathbf {u}^\mathsf{T}\), where \(\mathbf {u}\) is a p-dimensional vector of Uniform\((-\tfrac{1}{2}\text {, }\tfrac{1}{2})\) variables, such that the elements in \(\varvec{\Sigma }\) lie between \(-\tfrac{1}{4}\) and \(\tfrac{1}{4}\) and concentrate around zero. Observe that, in principle, this is a densely connected network as all edges have a nonzero value, although most effects will be very small and negligible. A second data set of \(n=2,000\) cases was used to compare the performance across different sample sizes.

Figure 3 shows the posterior mode estimates for the two sample sizes using a standard normal prior distribution in Panels (a) and (b) and using our spike-and-slab setup, i.e., edge screening, in Panels (c) and (d). Observe that the effects are relatively small, and thus, many observations are needed to retrieve reasonable estimates (Panels (a) and (b)). We, therefore, cull considerably more of the effects in the edge screening step for the smaller sample size than for the larger sample size (white dots indicate culled associations in Panels (c) and (d)). The horizontal gray lines in Panels (c) and (d) reveal the spike-and-slab intersections for the different associations (there are 210 different lines, which all lie very close to each other), the thresholds from Eq. (8). Effects that lie in between the two intersection points end up in the spike (not selected; white dots); otherwise, they end up in the slab (selected; gray dots). Note that the intersections points lie closer to each other for the larger sample size, as expected. Panels (e) and (f) show the maximum pseudolikelihood estimates for eLasso, subject to the \(l_1\) constraint, which selects considerably fewer effects for the larger sample size, and a substantial shrinkage effect on the associations.Footnote 9

The posterior modes of the association parameters using a standard normal prior are shown in the top two panels, and the posterior modes of the associations using our spike-and-slab prior setup, i.e., edge screening, are shown in the middle two panels. The horizontal gray lines in (c) and (d) reveal the thresholds from Eq. (8). The bottom two panels show the maximum pseudolikelihood estimates produced by eLasso. The dashed lines are the bisection lines.

Figure 4 illustrates the various shrinkage effects in edge screening using EM and structure selection using the Gibbs sampler. Panels (a) and (b), for example, show that the procedures produce point estimates that are close to each other. Still, there is also variation between the two methods, especially around the spike and slab intersection lines. Although we did not show it here, the posterior estimates from EM and the Gibbs sampler were identical when we used a standard normal prior distribution instead of our spike-and-slab setup. These observations suggest that the differences gleaned from Panels (a) and (b) come from the fact that the edge screening procedure optimizes the vector of inclusion variables with EM while the structure selection procedure averages over them in the Gibbs sampler. These differences become even more apparent when we compare the inclusion probabilities they estimate. Panels (c) and (d) show the inclusion probabilities against the posterior mode estimates for the edge screening approach, and Panels (e) and (f) show the inclusion probabilities against the posterior mean estimates for the structure selection procedure. Whereas the inclusion probabilities lie close to zero or one for the EM approach, they show a much smoother relation for the Gibbs sampling approach. The ability to estimate inclusion probabilities that are close to one or zero is called separation, and it is clear that the EM approach shows a better separation than the Gibbs approach. But the spike-and-slab Gibbs sampling approach, i.e., SSVS, already shows excellent separation compared to other methods (e.g., O’Hara & Sillanpää, 2009). Even though the edge screening approach shows better separation, it is also more liberal, as it includes more effects into the model than the structure selection procedure does. Panels (a) and (b) indicate these points in gray in Panels (a) and (b).

The top two panels show scatterplots of the posterior means and posterior modes of the association parameters that were obtained from our structure selection and edge screening procedures, respectively. The gray points are points of disagreement. The middle two panels show the edge screening inclusion probabilities and the bottom two panels the structure selection inclusion probabilities. The dashed lines are the bisection lines.

6 Network Analysis of Alcohol Use Disorder and Depression Data

For an empirical illustration of our Bayesian methods, we assess the relationship between symptoms of alcohol use disorder (AUD) and major depressive disorder (MDD) using data from the National Survey on Drug Use and Health (NSDUH; United States Department of Health and Human Services, 2016). The NSDUH is an American population study on tobacco, alcohol, and drug use, and mental health issues in the USA. The goal of the NSDUH is to provide accurate estimates on current patterns of substance abuse and its consequences for mental health. The survey is conducted in all 50 states, aiming at a sample of 70,000 individuals; participants have to be above the age of 12 and are randomly selected based on household addresses. We focus on the data on alcohol use and depression obtained in 2014.

The 2014 data comprises 55,271 participants. We exclude participants below the age of 18, that never drank alcohol, or that did not drink alcohol on more than six occasions in the past year. The final data analyzed here comprises 26,571 participants.

We included the seven items related to the DSM-V (American Psychiatric Association, 2013) criteria for AUD, and the nine symptoms in the NSDUH survey data comprising the DSM-V criteria for MDD in our analysis. The NSDUH derives the MDD symptoms from survey items formulated in a skip-structure. In this setup, participants are allowed to skip certain items based on the answers they provide. Therefore, some specifics of symptoms are not assessed for participants, which may cause more absence scores for symptoms or problems than is the case.

In our analyses below, we first screen the network for promising edges, and then select plausible structures from the structure space instantiated by the set of promising edges. We will also perform structure selection without this initial pruning to illustrate the necessity of the edge screening step.

6.1 Edge Screening

In total, there were \(p = 16\) variables, and \({16\atopwithdelims ()2} = 120\) associations or possible edges to consider. We ran the edge screening procedure using EMVS on the selected NSDUH data. The EMVS setup with a uniform prior on the structure space selected the same edges as the EMVS setup with a uniform prior on structure complexity. We continue here using the results from the former. The edge screening procedure identified 62 promising edges, pruning almost half of the available connections. Edge screening using a uniform prior distribution on structure complexity gave the same results. The eLasso method identified 61 edges, three of which were not identified by our edge screening procedure. There were four edges identified by our edge screening procedure, that were not identified by eLasso. Figure 5a shows the network generated by the screened edges, where blue edges constitute positive associations, and red edges constitute negative associations.

We glean several important observations from Fig. 5a. First, with 33 out of \({9\atopwithdelims ()2} = 36\) possible connections between its nine symptoms, MDD appears to be densely connected. This result may be due, in part, to the skip structure that underlies the NSDUH assessment of MDD symptoms. However, it is in line with other results about MDD symptoms in the general population (e.g., Caspi et al., 2014). Second, with 20 out \({7\atopwithdelims ()2} = 21\) possible connections between its seven symptoms, AUD also appears to be densely connected. The estimated associations are less strong than with MDD, which may be due to the skip structure that underlies the assessment of MDD symptoms. Third, there are relatively few estimated connections between the two disorders. Fourth, our edge screening procedure identified a negative association between depressed mood and withdrawal symptoms. Negative associations are scarce in cross-sectional analyses, such as the one reported here.

6.2 Structure Selection

We identified 62 promising edges with our screening procedure, which generates a local median probability structure (LMS, c.f. Fig. 5a). We now wish to find out what the plausible structures are for the data at hand and how the LMS in Fig. 5a relates to the global median probability structure (GMS), i.e., the structure with edges that have marginal posterior inclusion probabilities

Barbieri and Berger (2004) showed that this GMS has, in general, excellent predictive properties. We again use the uniform prior on the structure space, which is consistent with the edge screening results shown above.

We ran the Gibbs sampler for 100, 000 iterations, which visited 62 out of \(2^{66}\approx 7e^{19}\) possible structures. Pitting the visited structures against the most frequently visited structure using the Bayes factor,Footnote 10

where \(\varvec{\gamma }_1\) denotes the most frequently visited model, we identified three structures for which the most visited structure was less than ten times as plausible. A Bayes factor \(\text {BF}_{1s}\) of ten or greater is often interpreted to provide strong evidence in favor of \(\varvec{\gamma }_1\) (see, for instance Jeffreys, 1961; Lee & Wagenmakers, 2013; Wagenmakers, Love, et al., 2018). The structures for which \(\text {BF}_{1s}\) was less than ten, and their estimated posterior structure probabilities, are shown in panels (b), (c) and (d) in Fig. 5. The three structures only differed in the relations between the two disorders.

In Fig. 6a, we plot the posterior inclusion probabilities obtained from the edge screening analysis against those obtained from the structure selection analysis on the pruned structure space. We glean two things from this figure. First, the local inclusion probabilities are at the extremes, i.e., the values zero and one, whereas the global inclusion probabilities show a broader range of values. This difference in separation was also observed in the analysis of simulated data in Fig. 4. The bottom left corner comprises culled edges that have a zero probability of inclusion. Second, there is a great agreement about which edges are or are not in the median probability structure. The LMS and GMS differed in only one edge (indicated in white; points of agreement are in gray). In Fig. 8, we plot the GMS and a difference plot, which reveals the differences between the LMS and GMS (red edges indicating edges that are in the LMS, but not the GMS). Figure 5e shows that the negative association between nodes four and eight is not in the GMS (\(p(\gamma _{4,8}=1\mid \mathbf {X}) = .101\)). Thus, the LMS produced by our edge screening approach (c.f., Fig. 5a) is an excellent approximation to the GMS identified on the pruned space (c.f., Figs. 5e and 5f). Similar to our simulated example, the edge screening procedure proved to be more liberal than the structure selection approach, i.e., more edges were included in the LMS than in the GMS.

Edge screening and structure selection for NSDUH data. a indicates the network generated by the promising edges identified by edge screening; the local median probability structure. b–d indicate the three (most) plausible structures identified by structure selection on the pruned space. e indicates the global median probability structure, and f indicates the difference between the two median probability structures. The network plots are produced using the R package qgraph (Epskamp, Cramer, Waldorp, Schmittmann, & Borsboom, 2012).

6.2.1 Parameter Uncertainty

One of the main benefits of using a Bayesian approach to estimate the network is that it provides a natural framework for quantifying parameter uncertainty. We have two ways to express this uncertainty. The first is the asymptotic posterior distribution based on EM output, which is the posterior distribution associated with the modal structure \(\hat{\varvec{\gamma }} = \mathbb {E}(\varvec{\gamma } \mid \hat{\varvec{\Sigma }})\). This is thus a conditional posterior distribution. The second is the model-averaged posterior distribution

that can be estimated from the Gibbs sampler’s output.

The model-averaged posterior distribution of the network parameters incorporates both the uncertainty that is associated with selecting a structure from the collection of possible structures, and the uncertainty that is associated with the parameters of the individual structures. In this way, the model-averaged posterior distributions offer robust estimates of the network parameters and their uncertainty. Since the model-averaged posterior embraces both sources of uncertainty, the posterior variance of a model-averaged quantity tends to be larger than that of a conditional posterior (i.e., conditioning on a specific structure selected), on average.Footnote 11 For some parameters, this does not lead to striking differences, as Fig. 7a illustrates for one of the associations in the NSDUH data example. In some occasions, however, single-model inference leads us to put faith in a model that assumes parameter values that are not supported by other plausible models. Figure 7b is an illustration of this.

Estimated posterior distributions for two association parameters in the NSDUH example. We plot the asymptotic posterior distributions (i.e., the normal approximations) based on the EM edge screening output in gray, and the model-averaged posterior distribution based on Gibbs sampling in black. The density was estimated using the logspline R package (Kooperberg, 2019). The gray dot reflects the estimated posterior medians. The \(95\%\) highest posterior density intervals on top were estimated using the HDInterval R package (Meredith & Kruschke, 2020).

The illustrations above underscore the fact that model-averaging leads to more robust inference on the model parameters than single-model inference (e.g., the output of |rbinnet|’s Edge Screening procedure or the output from |IsingFit|). A benefit of using the Gibbs sampler to estimate the model-averaged posterior distributions is that we can use it’s output to construct model-averaged posterior distributions of other measures of interest. For example, Huth, Luigjes, Marsman, Goudriaan, and van Holst (in press) recently used the Gibbs output to estimate the model-averaged posterior distributions of node centrality measures.

6.3 Structure Selection Without Pruning

To analyze the benefit of our two-step procedure, with edge selection preceding structure selection to prune the structure space, we performed a structure selection analysis without pruning the structure space. We ran the Gibbs sampler for 100, 000 iterations, starting at the posterior mode, which visited 39, 885 out of \(2^{120}\approx 1e^{36}\) possible structures. This result immediately underscores the importance of pruning the structure space before structure selection. The posterior structure probabilities of such a large collection of models cannot be estimated with great precision in a reasonable amount of time. Pitting the visited structures against the most frequently visited structure using the Bayes factor identified 52 plausible models. Two questions arise. The first question is about identifying the GMS and how it fares against the LMS identified with edge screening. Second, we wish to determine how the three previously identified structures stack up against the 39, 885 visited structures in the structure selection on the full structure space.

In Fig. 6b, we plot the posterior inclusion probabilities obtained from the edge screening analysis against those obtained from the structure selection analysis on the full structure space. As before, the local inclusion probabilities are mostly located at the extreme ends of zero and one, whereas the global inclusion probabilities are more variable. This difference is emphasized in the bottom left corner of Fig. 6b, since the previously culled edges now received nonzero probabilities. However, Fig. 6b also reveals that there is great agreement about which edges are or are not in the median probability structure. The LMS and GMS on the full structure space differed in three edges.

In Fig. 8, we plot the GMS from the full space and a difference plot. Figure 8b shows that, as before with the pruned space, the negative association between nodes four and eight is not in the GMS (\(p(\gamma _{4,8}=1\mid \mathbf {X}) = .487\)). The edge between nodes four and twelve was also not in the second plausible structure observed before (c.f. Fig. 5c; \(p(\gamma _{4,12}=1\mid \mathbf {X}) = .394\)). The edge between nodes five and sixteen, however, was not screened before (\(p(\gamma _{5,16}=1\mid \mathbf {X}) = .491\)). In sum, the LMS produced by our edge screening approach (c.f., Fig. 5a) served as a good approximation to the GMS identified on the pruned space (c.f., Figs. 5e and 5f) and on the full space (c.f., Figs. 8a and 8b).

Application of Structure Selection to NSDUH data on the full structure space. a indicates the global median probability structure on the full structure space, and b indicates the difference between the local and global median probability structures. See text for details. The network plots are produced using the R package qgraph (Epskamp et al., 2012).

The Gibbs sampler on the full structure space visited 39, 885 structures. Of these 39, 885 structures, 75 were visited between 100 and 1, 450 times, indicating posterior probabilities between .0008 and .015. The remaining 39, 785 structures were visited less than 100 times, indicating a posterior probability of less than .0008. However, in total, the probabilities of these 39, 785 structures added up to .762. Thus, structure selection on the full structure space wastes valuable computational efforts on estimating insignificant structures. This is a prime example of dilution (George, 1999), and once more underscores the importance of pruning the structure space before performing structure selection. The posterior probabilities of the three structures identified earlier were .008, .015 and .008, and with that they were the 7th, 1st and 6th most visited models, respectively. Nevertheless, given the vast amount of visited structures and the tiny probabilities associated with it, their estimates are highly uncertain.

7 Discussion

In this paper, we have introduced a novel objective spike-and-slab approach for structure selection for the Ising model, and we have illustrated the full suite of Bayesian tools using simulated and empirical data. The empirical analysis allowed us to underscore the importance of trimming the structure space before its exploration, and that edge screening is capable of identifying relevant edges. The default specification of the spike-and-slab variances resulted in a selection method with consistently high specificity in our simulations, i.e., a low type-1 error rate in edge detection. Posterior estimates of the parameters are easy to obtain for both edge screening and structure selection procedures. Our structure selection procedure opened up the full spectrum of Bayesian tools, and, when paired with edge screening, it quickly zoomed in on plausible structures and promising effects. In sum, we have presented a complete Bayesian methodology for structure determination for the Ising model.

A caveat in our suite of Bayesian tools is the Bayes factor comparing two specific topologies. In principle, we can compute the Bayes factor from the posterior structure probabilities obtained from our structure selection procedure, but only if the Gibbs sampler visited the two structures under scrutiny. However, there is no guarantee that the Gibbs sampler visits the two structures, and even if the Gibbs sampler visits them, their estimated posterior probabilities can be uncertain. We need a more dedicated approach to estimate the Bayes factor if we wish to compare two particular structures of interest. Dedicated procedures have been developed for GGMs (e.g., Williams & Mulder, 2020a; Williams, Rast, Pericchi, & Mulder, 2020) and implemented in the R-package |BGGM| (Williams & Mulder, 2020b). However, these procedures have not yet been developed for the Ising model. We believe that the Laplace approximation that we have used in the paper will be a good starting point for computing the marginal likelihoods. Another option would be the Bridge sampler (Gronau et al., 2017; Meng & Wong, 1996), which fits seamlessly with our Gibbs sampling approach.

In practice, however, we often do not have specific structures that we wish to compare, while we do have hypotheses about entire collections of structures. As an example, consider the hypothesis \(\mathcal {H}_1\) that a particular edge should be included in the network. This hypothesis spans the collection of all structures that include the edge. The posterior plausibility for \(\mathcal {H}_1\) is therefore the collective plausibility of all structures in the hypothesised collection:

i.e., the edge-inclusion probability. The posterior plausibility for the complementary hypothesis \(\mathcal {H}_0\) of edge exclusion is \(p(\mathcal {H}_0 \mid \mathbf{X} ) = 1- p(\mathcal {H}_1 \mid \mathbf{X} )\). The ratios of the prior and posterior plausibility of these two competing hypotheses then determine the edge-inclusion Bayes factor.Footnote 12 Crucially, the edge-inclusion Bayes factor can quantify the evidence for \(\mathcal {H}_1\)—edge inclusion—and \(\mathcal {H}_0\)—edge-exclusion (Jeffreys, 1961; Wagenmakers, Marsman, et al., 2018). Moreover, the Bayes factor can tease apart the evidence of absence (i.e., edge-exclusion) from the absence of evidence. We therefore believe that the edge-inclusion Bayes factor is a valuable tool for analyzing psychological networks. The methods advocated in this paper—implemented in the R package |rbinnet|—can be used to estimate the edge-inclusion Bayes factors. Huth et al. (in press) recently used it to estimate the evidence for in- and exclusion of edges in networks of alcohol abuse disorder symptoms.

In a recent preprint, Bhattacharyya and Atchade (henceforth BA; 2019) also proposed a continuous spike-and-slab edge selection approach for the Ising model using the pseudolikelihood. The two methods were designed with a different focus, however. Whereas BA focused on networks with many variables, we focused on psychological networks that are relatively small in comparison. As a result, the two approaches differ in several key aspects that make our approach more appealing to analyze psychological networks. For example, BA did not trim the structure space before exploring it with a Gibbs sampler. Our empirical example illustrated why we believe that this is a bad idea. At the same time, we addressed some outstanding issues in this paper that BA left open. For example, BA analyzed the p full-conditionals in Eq. (2) in isolation, which provided them an opportunity for fast parallel processing. However, this also forced them to stipulate two independent prior distributions on each focal parameter, which means that they ended up with two posterior distributions for each association. Unfortunately, BA provided no principled solution for combining these estimates for either structure selection or parameter estimation. Another issue is that their spike-and-slab approach required the specification of tuning parameters, but they offered no guidance or automated procedure for their specification. In sum, our method (i) offers an objective specification of the prior distributions that lead to sensible answers, (ii) trims the structure space to circumvent issues related to dilution, and (iii) allows for a meaningful interpretation of the estimated posteriors. Despite these crucial differences, however, the approach of BA is broader than ours, as they also analyzed networks of polytomous variables, while we exclusively focus on the binary case in this paper.

Our specification of the hyperparameters stipulates a mixture of two unit information priors, one fixed and one shrinking, that a priori match an approximate credible interval. We chose this setup to mimic the eLasso approach of van Borkulo et al. (2014) and aimed for high specificity. However, researchers might have a different aim and wish to have methods available that have a higher sensitivity (e.g., see the considerations in Epskamp et al., 2017) or that aim for a low false discovery rate instead (e.g., Storey, 2003). In principle, penalty tuning procedures and prior structure probabilities could be tailored to achieve different goals. For example, we could adopt the eLasso approach and select the penalty \(\xi \) that minimizes the Bayesian information criterion (BIC; Schwarz, 1978) or the extended BIC (EBIC\(_\lambda \), where \(\lambda \) is a penalty on complexity; Chen & Chen, 2008) instead of matching the spike-and-slab intersections to credible intervals. These two criteria usually achieve higher sensitivity than Lasso and naturally tie in with the two prior distributions on the structure space that we have used here: A uniform prior distribution on the structure space is consistent with BIC, and a uniform prior distribution on structure complexity is compatible with EBIC\(_1\). Furthermore, several alternative prior distributions that account for multiple testing have been discussed in the variable selection literature (e.g., Castillo, Schmidt-Hieber, & van der Vaart, 2015; Womack, Fuentes, & Taylor-Rodriguez, 2015). In sum, there are plenty of options to tailor the spike-and-slab approach to the specific needs of empirical researchers.

The prior specification options that we discussed above are geared towards situations in which researchers have limited or only general ideas about the network they are analyzing. In principle, researchers could have substantive ideas or knowledge about the network under scrutiny, and it is opportune to use this information in its analysis. Prior information could be used to define \(\delta \) (e.g., George & McCulloch, 1997) or it could guide the specification of the prior inclusion probabilities of the network’s edges. A parameter’s sign is another common source of information since most relations are usually positive in psychological applications (see Williams & Mulder, 2020a, for an implementation of this idea for GGMs). Investigating how substantive knowledge can be best included in the Bayesian model—and what that implies for the spike-and-slab setup—is another fruitful area of future research.