Abstract

Hydropower represents the world’s largest renewable energy source. As a flexible technology, it enhances the reliability and security of the electricity system. However, climate change and market liberalization may hinder investment because they change water runoff and electricity prices. Both drivers alter expected revenue and bring uncertainty, which increases risk and deters investment. Our research assesses how climate change and market fluctuations affect annual revenue, but this paper focuses on the uncertainty rather than on forecasting. This transdisciplinary topic is investigated by means of a mixed method, i.e. both quantitative and qualitative. The quantitative approach uses established models from the natural sciences and economics. Uncertainty is accounted for by applying various scenarios and datasets coming from different models. Based on those results, uncertainty is discussed through an analysis that discerns three dimensions of uncertainty. Because uncertainty analysis requires the assessment of a large panel of models and datasets, it is rarely carried out. The originality of the paper also lies in the combination of established quantitative models with a qualitative analysis. The results surprisingly show that the greenhouse gas scenarios may in fact represent a low source of uncertainty, unlike electricity prices. As with forecasting, the main uncertainties are case-study related and depend on the investigated variables. It is also shown that the nature of uncertainty evolves. Runoff uncertainty goes from variability, i.e. inherent randomness, to epistemic, i.e. limitation of science. The reverse occurs with the electricity price. The implications for scientists and policy makers are discussed.

1 Introduction

Hydropower plays an important role in balancing the inherent intermittence of solar and wind energy (IEA 2005). It also represents a significant source of revenue for mountainous regions (SHARE project 2013). However, future developments have been blurred by the appearance of new risks and the changing environmental and economic dynamics (Gaudard and Romerio 2014). Whilst climate change affects hydropower potential (Finger et al. 2015; Francois et al. 2015; Hamududu and Killingtveit 2012; Madani and Lund 2010; Schaefli et al. 2007), energy policy often produces sudden shifts in energy supply (THINK Project 2013). Electricity companies must therefore deal with a higher financial risk in their investments and refurbishments. Due to the long payback periods on these technologies, a long-term perspective must be assessed and accounted for.

This paper mainly deals with uncertainties and their relationships. We investigate the impact of climate change and power market fluctuations on annual hydropower revenue. If those drivers are considered independently, the overall impact and the accumulated uncertainty cannot be evaluated. Both are important, as low expected revenue and high uncertainty tend to jeopardize investment. Without a clear assessment, decision-makers would not be in a position to define strategies that are able to cope with uncertainty and mitigate risks (Hill Clarvis et al. 2014).

Firstly, the impact of water runoff and wholesale electricity price evolution on the revenue of a hydropower plant is quantified. This assessment considers a wide range of parameters, scenarios, and models: 3 greenhouse gas (GHG) emission scenarios, 10 combinations of global and regional climate models (GCM-RCM), 2 downscaling methods, 2 initial ice volumes, and 3 electricity price models. The simulations therefore provide a comprehensive, but not exhaustive, overview. The computed results are compared, thus providing information on the uncertainty. As highlighted by Majone et al. (2015), very few comprehensive analyses of uncertainty have been carried out, due to the computational time required for such assessments. This paper aims to help fill this gap.

Based on the above results, a qualitative analysis of uncertainty is carried out following the approach of Walker et al. (2003). Mixed methods are a pragmatic way to integrate results from natural sciences and economics (Creswell and Plano Clark 2011). As highlighted by Schaefli (2015), no consensus has been reached on the method to assess uncertainty in hydrology. This statement is all the more true in a transdisciplinary study. We try to deal with this issue by considering qualitative rather than purely quantitative uncertainty analysis.

This paper draws several conclusions about uncertainty. First, the future level of GHG emissions does not necessarily affect the results significantly. We show that climate change influences the runoff, but it has only a limited impact on long-term uncertainty. In fact, the revenue variations amongst the different GHG emission scenarios turn out to be small. Second, the current epistemic uncertainty, i.e. limitation of science, is related to the adaptation of the GCM-RCM output to local features. A poor estimation of the initial ice distribution of the glacier and, even more so, an inaccurate downscaling method affect the results. The uncertainty owing to the GCM-RCM is contained by considering a panel of them. Third, electricity prices present the greatest area of uncertainty. This uncertainty can jeopardize future investments and can even threaten energy transition and security. In the long term, the socio-economic randomness overtakes the epistemic uncertainty. It therefore falls to decision makers to manage it through market design and risk-hedging tools.

2 Taxonomy of Uncertainty

Before presenting the quantitative simulations, we define ‘uncertainty’ since it represents the backbone of this paper. This notion is controversial, particularly when dealing with multiple disciplines in natural sciences and humanities. To overcome this obstacle, we base our analysis on the framework developed by Walker et al. (2003), who provide a general definition of uncertainty ‘as being any deviation from the unachievable ideal of completely deterministic knowledge of the relevant system’ (page 5).

Walker et al. (2003) consider the following three dimensions of uncertainty:

Location:

The position of the uncertainty within the model complex. Walker et al. (2003) identify five generic locations: context, model, inputs, parameters, and outcomes.

Level:

Degree of uncertainty, from complete deterministic knowledge to total ignorance; distinction should be made between statistical uncertainty, scenarios and ignorance.

Nature:

Two categories are defined: epistemic and variability. Epistemic uncertainty ‘is due to the imperfection of our knowledge, which may be reduced by more research and empirical efforts’ (Walker et al. 2003, p. 13). Variability is brought about by the inherent randomness of nature, human behavior, socio-economic and cultural dynamics, as well as unexpected technological innovations.

Walker et al. (2003) suggest completing an uncertainty matrix. Because our model merges various submodels, we opt instead to identify and discuss the level and nature of uncertainty occurring at each location. Nevertheless, we keep the framework with the three dimensions and their definitions.

Two goals drive the use of this three-dimensional approach. First, it synthesizes the information. The quantitative model computes the expected annual revenue and its standard deviation. It provides an aggregated quantity for the scenarios and models tested. It allows a sensitivity analysis to be carried out to determine the level of uncertainty, but it overlooks the nature and location. The distinction between epistemic and variability uncertainty is not always clear-cut, but it helps to identify the measures needed to tackle the uncertainty. We determine the nature by using our knowledge of the model and the quantitative results. Second, the study analyses long-term trends with socio-economic components. We cannot consider the values obtained as a forecast. The discussion must be uncoupled from the purely quantitative results in order to get a broader overview. The three-dimensional approach covers this step.

3 Method and Data

We attempt to simulate future hydropower revenue which is determined by runoff, wholesale electricity prices, and supply schedules (Gaudard et al. 2013a). Figure 1 highlights the logical structure of our modeling framework, which is detailed in the following sections. The grey areas represent input data we obtained.

3.1 Climate Data

We consider three GHG emission scenarios: A1B, A2 and RCP3PD.

- A1B is considered a ‘reasonable’ scenario, which generates a global warming of +1.7 °C to +4.4 °C between the years 1980–1999 and 2090–2099 (IPCC 2000).
- A2 is the second-worst scenario in the Special Report on Emissions Scenarios framework, with a temperature increase of +2 °C to +5.4 °C (IPCC 2000).
- RCP3PD, also named RCP2.6, leads to an expected global warming of +0.3 °C to +1.7 °C between 1986–2005 and 2081–2100 (IPCC 2013; Meinshausen et al. 2011).

Climate data is provided by the ENSEMBLES project (2013) for 10 combinations of global and regional climate models (GCM-RCM), which have a grid of 25 km × 25 km. This horizontal resolution is insufficient to capture local characteristics, especially in mountain areas where local variability is high. For example, the altitude of the nearest grid point may differ by several hundred metres from that of the actual location. This is why downscaling is necessary.

The GCM-RCM data has been adapted to local conditions with two methods, i.e. the Delta Change Method (DCM) (Bosshard et al. 2011) and the Empirical Distribution Delta Method (EDDM) (Gaudard et al. 2013a). For the whole panel of GHG emission scenarios (A1B, A2 and RCP3PD), we used the data provided by C2SM (CH2011, C2SM (2014)). This team applied the DCM (Bosshard et al. 2011), which consists of shifting the mean temperature and precipitation in the historical data to reflect the projected climate. C2SM simulates the meteorological variability by running a random function. It therefore obtains a set of 10 time series for each GCM-RCM, i.e. 100 per GHG emission scenario.
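The principle of the delta change idea can be illustrated with a short Python sketch. It assumes a single additive temperature change and a single multiplicative precipitation factor; the function name, the multiplicative form for precipitation and all numbers are illustrative assumptions, and the actual procedure of Bosshard et al. (2011) is more elaborate.

```python
import numpy as np

def delta_change(hist_temp, hist_precip, delta_t, precip_factor):
    """Shift historical series by climate change signals (illustrative only).

    delta_t: additive temperature change [deg C] (assumed constant here)
    precip_factor: multiplicative precipitation change [-] (assumed constant here)
    """
    future_temp = np.asarray(hist_temp, dtype=float) + delta_t
    future_precip = np.asarray(hist_precip, dtype=float) * precip_factor
    return future_temp, future_precip

# Hypothetical daily values
hist_temp = np.array([-5.0, -2.0, 1.0, 4.0])
hist_precip = np.array([0.0, 3.0, 6.0, 1.0])
fut_temp, fut_precip = delta_change(hist_temp, hist_precip,
                                    delta_t=2.0, precip_factor=1.1)
```

In this simplified form the mean temperature moves by the prescribed delta while the spread of the historical series is left unchanged, which is the property that distinguishes a pure mean shift from distribution-based methods.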

For the A1B scenario, we also applied the EDDM (Gaudard et al. 2013a). It differs from the DCM because it takes into account the change in the distribution, not only the change in the mean. The impact of climate change on interannual variability is therefore considered. In contrast to the DCM, the EDDM provides only one path per GCM-RCM, because the variability is not generated by a stochastic variable as it is in the DCM.
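To make the contrast with a pure mean shift concrete, the following Python sketch applies a quantile-wise delta: each historical value is shifted by the change that the climate model shows at the corresponding quantile. This is a generic illustration of a distribution-based delta and is not the EDDM of Gaudard et al. (2013a); the function name, the quantile grid and the rank formula are assumptions.

```python
import numpy as np

def quantile_delta(hist, model_ctrl, model_fut, n_q=99):
    """Shift each historical value by the model change at its own quantile."""
    hist = np.asarray(hist, dtype=float)
    q = np.linspace(0.01, 0.99, n_q)
    # Change signal per quantile between model control and future periods
    delta_q = np.quantile(model_fut, q) - np.quantile(model_ctrl, q)
    # Empirical non-exceedance probability of each historical value
    ranks = (np.argsort(np.argsort(hist)) + 0.5) / len(hist)
    return hist + np.interp(ranks, q, delta_q)

# Hypothetical series: the model future is wider than the control period
ctrl = np.linspace(-10.0, 10.0, 201)
hist = ctrl.copy()
widened = quantile_delta(hist, ctrl, 1.5 * ctrl)
shifted = quantile_delta(hist, ctrl, ctrl + 2.0)
```

When the model change is a uniform shift, the result coincides with a mean shift; when the model distribution widens, the spread of the transformed series grows as well.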

3.2 Glacio-Hydrological Model

High-mountain catchment areas generally have a long-lasting snow cover and a considerable degree of glacier coverage. This influences the runoff regime and needs to be taken into account in the runoff modeling. For this reason the combined glacio-hydrological model GERM (Farinotti et al. 2012; Huss et al. 2008) is employed to assess the impact of climate change on the evolution of runoff. The model considers the processes of accumulation and the melt of snow and ice masses as well as the glacier evolution. Ablation is basically a function of air temperature and potential solar radiation. Accumulation is modeled by using precipitation lapse rates and air temperature to distinguish between liquid and solid precipitation. Snow redistribution processes (wind, avalanches) are also included. In the last step, the model evaluates the local water balance given by liquid precipitation, melt water and evaporation and routes the water through a couple of linear reservoirs in order to mimic the retention of the water in the catchment area.
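The routing step can be sketched as follows. The Python example below sends a water-balance series through two linear reservoirs, each releasing a fixed fraction of its storage per time step. The parallel arrangement, the split between reservoirs and the rate constants are hypothetical choices made for illustration; they are not taken from GERM.

```python
def route_linear_reservoirs(inflow, k_fast=0.5, k_slow=0.05, split=0.6):
    """Route water through two parallel linear reservoirs (outflow = k * storage).

    inflow: water available per time step (liquid precipitation + melt - evaporation)
    split:  share of the inflow sent to the fast reservoir (assumed value)
    """
    s_fast = 0.0
    s_slow = 0.0
    outflow = []
    for q_in in inflow:
        # Fill both reservoirs
        s_fast += split * q_in
        s_slow += (1.0 - split) * q_in
        # Each reservoir releases a fixed fraction of its storage
        q_fast = k_fast * s_fast
        q_slow = k_slow * s_slow
        s_fast -= q_fast
        s_slow -= q_slow
        outflow.append(q_fast + q_slow)
    return outflow, s_fast + s_slow

# A single pulse of water is released gradually over the following steps
out, stored = route_linear_reservoirs([10.0, 0.0, 0.0, 0.0, 0.0])
```

The pulse is delayed and smoothed while the total volume is conserved, which is the retention effect the routing is meant to mimic.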

The model is forced by daily temperature and precipitation time series for the 1980–2100 period. Past meteorological data is taken from MeteoSwiss (2013) weather stations in the vicinity of the catchment area. For future time series, the downscaled climate scenarios are used (see Section 3.1). The empirical character of the glacier and runoff model requires calibration of various model parameters, which is done by means of past ice volume changes, direct mass balance measurements and runoff records. Gabbi et al. (2012) describe the application of the GERM model to our case study and provide further details about calibration and validation. Whereas that paper presents the results for the A1B GHG scenario, the A2 and RCP3PD results are unpublished so far.

For glacier projections, the distribution of ice mass in the modeling domain has to be known. Due to scarce or non-existent measurements of ice thickness, estimation approaches are commonly used (Farinotti et al. 2009). Our own previous estimations showed a total ice volume of 4.41 ± 1.02 km³. However, recently performed area-wide ice-thickness measurements revealed a smaller ice volume of 3.69 ± 0.31 km³ (−16 %). We test both initial ice volumes to quantify the sensitivity of our results to this parameter.

3.3 Wholesale Electricity Price Models

For the electricity price, we consider one reference, called Ref, which is the repetition of historical prices, and three price models, explained below. They are parameterized with EEX German electricity spot prices (EEX 2013). This index is more relevant for long-term analysis, since it reflects the leading exchange market in Europe. The calibration period of all our models is 01-Apr-2001 to 31-Mar-2012. In order to obtain real prices, we use the Harmonised Index of Consumer Prices (HICP) for Germany provided by Eurostat (2013). All our revenues are thus expressed in 2005 euros.
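The conversion to real prices amounts to a simple rescaling by the price index, as in the Python sketch below. The index values are hypothetical, with the base year 2005 set to 100; the function name is an assumption.

```python
def to_real_price(nominal_price, hicp, hicp_base=100.0):
    """Express a nominal price in base-year money using a consumer price index."""
    return nominal_price * hicp_base / hicp

# Hypothetical example: 50 EUR/MWh observed when the index stands at 110
real_price = to_real_price(nominal_price=50.0, hicp=110.0)
```

With these illustrative numbers, a nominal price of 50 € MWh⁻¹ corresponds to roughly 45.5 € MWh⁻¹ in base-year euros.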

We consider an explanatory scenario based on past data (EEA 2000; Kowalski et al. 2009). Our models do not aim at forecasting electricity prices, which is almost impossible in the long term. We instead adopt a statistical approach, consider various price models, and focus on comparing the uncertainty created by runoff with that created by electricity prices. Given this underlying aim, our method provides relevant outcomes.

3.3.1 Common Components to All Models

We provide a detailed explanation of the price models, since this component is new and is not described in our previous papers.

We consider the logarithm of the prices in order to keep the variance more stable (Tsay 2010; Wooldridge 2009). The model is therefore as follows:

where \(P_t\) is the hourly electricity spot price [€ MWh⁻¹], \({X^{s}_{t}}\) is the seasonal factor, and \(D_{i,t}^{hours}\) and \(D_{24,t}^{we}\) are dummy variables that control for hourly variation and for gaps between weekdays and weekends. Short-term volatility is denoted as \(\epsilon_t\).

The volatility is modeled with an ARMA(1,1)-GARCH(1,1) model (Bollerslev 1986; Box and Pierce 1970). Equation (2) represents the ARMA(1,1) process, while (3) and (4) are the GARCH(1,1) processes. This model is commonly used to forecast short-term electricity prices because it is well suited to markets with high volatility (Garcia et al. 2005). We choose an order (1,1,1,1), which means that the error and its variability are determined by the error at time \(t-1\).

where the set of models is \(mod_{tot} \in \{\text{MR}_y, \text{MR}_s, \text{MRJD}\}\) and \(\delta_t\) is the remaining volatility of the ARMA(1,1) model. At time \(t\), it is normally distributed and simulated with a standard normal random variable, \(z_t\), and a standard deviation, \(\sigma_t\). The latter varies and follows a GARCH(1,1) process.
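A short simulation may help to fix ideas. The Python sketch below uses the textbook ARMA(1,1)-GARCH(1,1) recursions, \(\epsilon_t = \phi\,\epsilon_{t-1} + \theta\,\delta_{t-1} + \delta_t\), \(\delta_t = \sigma_t z_t\) and \(\sigma_t^2 = \omega + \alpha\,\delta_{t-1}^2 + \beta\,\sigma_{t-1}^2\). The coefficient names and values are hypothetical, are not the calibrated parameters of this study, and the parameterization may differ from that of (2) to (4).

```python
import numpy as np

def simulate_arma_garch(n, phi, theta, omega, alpha, beta, seed=0):
    """Simulate an ARMA(1,1) series with GARCH(1,1) innovations.

    Assumes alpha + beta < 1 so that the unconditional variance exists.
    """
    rng = np.random.default_rng(seed)
    eps = np.zeros(n)        # short-term volatility term of the log-price
    delta = np.zeros(n)      # innovation of the ARMA process
    # Start the conditional variance at its unconditional level
    sigma2 = np.full(n, omega / (1.0 - alpha - beta))
    for t in range(1, n):
        sigma2[t] = omega + alpha * delta[t - 1] ** 2 + beta * sigma2[t - 1]
        delta[t] = np.sqrt(sigma2[t]) * rng.standard_normal()
        eps[t] = phi * eps[t - 1] + theta * delta[t - 1] + delta[t]
    return eps, sigma2

# Hypothetical coefficients for an hourly series of 1000 steps
eps, sigma2 = simulate_arma_garch(1000, phi=0.8, theta=0.1,
                                  omega=0.01, alpha=0.1, beta=0.8)
```

The conditional variance rises after large innovations and decays afterwards, which produces the volatility clustering typical of spot markets.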

3.3.2 The Three Electricity Price Models

The difference between the electricity price models lies in the way they treat the seasonal factor, \({X^{s}_{t}}\), in (1). By taking various price models into consideration, we can identify the uncertainty of future prices, in particular that linked to seasonality. We also assess whether interannual and intra-annual variability is important for future revenues. Below we briefly describe the models and provide some relevant references.

We consider two mean-reverting models, called \(\text{MR}_y\) and \(\text{MR}_s\) (Uhlenbeck and Ornstein 1930). These processes are commonly used in raw commodity investment analyses because, under certain hypotheses, prices are attracted by production costs. They are defined as follows:

where the set of models is \(mod \in \{\text{MR}_y, \text{MR}_s\}\), \(\kappa\) is the reversion speed and \(\mu\) the long-term mean. \(\eta\) scales the Brownian motion term, whose increment \(\Delta W_t\) follows the Wiener process represented in (6). As in (3), \(z_t\) is a standard normal random variable.

\(\text{MR}_y\) is relatively stable. Its mean yearly price may change, but the intra-annual profile does not change, as in:

where \(X^{\text {MR}_{y}}_{t}\) is an annual mean [€ MWh⁻¹] that follows a mean reversion process.

\(\text{MR}_s\) considers that the mean price of each season, \(X^{\text {MR}_{s}}_{t}\), follows a mean reversion process. In contrast to \(\text{MR}_y\), the season with the highest mean prices may change from the historical pattern. This price model is then formulated as:

where \(X^{\text {MR}_{s}}_{t}\) is a seasonal mean [€ MWh⁻¹], i.e. an average over three months, that follows a mean reversion process.

The mean-reverting jump diffusion model, called MRJD, also assumes that the price is attracted by production costs, like the two previous models (Kaminski 1997). However, some shocks may perturb the price. One may observe a jump over a certain period of time, which may be formalized as:

where \(X^{\text {MRJD}}_{t}\) is the daily mean price [€ MWh⁻¹] and \(q_t\) is a Poisson process that produces infrequent jumps of size \(J\).
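The behavior of these processes can be illustrated with an Euler discretization, \(X_{t+1} = X_t + \kappa(\mu - X_t)\Delta t + \eta\sqrt{\Delta t}\,z_t + J\,\Delta q_t\). The Python sketch below assumes a fixed jump size and a constant jump rate; all parameter names and values are hypothetical and do not correspond to the calibrated models. Setting the jump rate to zero yields a plain mean-reverting path of the kind used for \(\text{MR}_y\) and \(\text{MR}_s\).

```python
import numpy as np

def simulate_mrjd(x0, kappa, mu, eta, jump_size, jump_rate,
                  n_steps, dt=1.0, seed=0):
    """Euler simulation of a mean-reverting process with Poisson jumps."""
    rng = np.random.default_rng(seed)
    x = np.empty(n_steps + 1)
    x[0] = x0
    n_jumps = 0
    for t in range(n_steps):
        dw = np.sqrt(dt) * rng.standard_normal()   # Wiener increment
        dq = rng.poisson(jump_rate * dt)           # jumps in this step (mostly 0)
        n_jumps += int(dq)
        x[t + 1] = (x[t] + kappa * (mu - x[t]) * dt
                    + eta * dw + jump_size * dq)
    return x, n_jumps

# Hypothetical daily mean price around 40 EUR/MWh with occasional jumps of 25
path, n_jumps = simulate_mrjd(x0=40.0, kappa=0.3, mu=40.0, eta=2.0,
                              jump_size=25.0, jump_rate=0.05, n_steps=1000)
# Without noise and jumps, the path decays towards the long-term mean
calm, no_jumps = simulate_mrjd(x0=60.0, kappa=0.5, mu=40.0, eta=0.0,
                               jump_size=25.0, jump_rate=0.0, n_steps=50)
```

After each jump the reversion term pulls the price back towards \(\mu\), so shocks are temporary rather than permanent.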

3.4 Hydropower Plant Management

The operator of the hydropower installation aims to maximize profit. Because the variable costs are small for this technology, they can be ignored in this analysis. The objective function is given by Forsund (2007):

where \(g\) is the acceleration due to gravity [m s⁻²], \(\rho\) the water density [kg m⁻³], \(\eta\) the plant efficiency, \(f\) the water flow through the turbine [m³ s⁻¹], \(h_t\) the hydraulic head [m], and \(P_t\) the electricity spot price [€ Wh⁻¹]. The objective function is defined over the time horizon \(T\) and for a binary variable \(b_t\), which indicates whether the plant produces or not.
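As an illustration of how these quantities combine, the Python sketch below sums the hourly revenue \(g\,\rho\,\eta\,f\,h_t\,P_t\,b_t\) over a schedule. It assumes hourly time steps, so that power in W multiplied by one hour gives Wh; the function name and the numerical values are hypothetical and are not taken from the Mauvoisin plant.

```python
G = 9.81        # acceleration due to gravity [m s^-2]
RHO = 1000.0    # water density [kg m^-3]

def revenue(prices_eur_per_wh, heads_m, schedule, flow_m3s, efficiency,
            step_hours=1.0):
    """Sum revenue over time steps for a binary production schedule."""
    total = 0.0
    for price, head, on in zip(prices_eur_per_wh, heads_m, schedule):
        power_w = G * RHO * efficiency * flow_m3s * head    # [W]
        total += power_w * step_hours * price * on          # [EUR]
    return total

# Hypothetical plant: 10 m3/s through 1000 m of head at 90 % efficiency,
# producing in the first hour only, at 40 EUR/MWh (= 40e-6 EUR/Wh)
r = revenue(prices_eur_per_wh=[40e-6, 20e-6], heads_m=[1000.0, 1000.0],
            schedule=[1, 0], flow_m3s=10.0, efficiency=0.9)
```

With these numbers the plant delivers about 88.3 MW, so one hour of production at 40 € MWh⁻¹ earns roughly 3532 €.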

The optimization problem and its constraints are formalized as:

where \(V_t\) is the reservoir content at time \(t\) [m³], \(I_t\) the water intake [m³ s⁻¹], \(h_t\) is a function of \(V_t\), and \(V_{\min}\), \(V_{\max}\) are the capacity limits of the reservoir.

We optimize over a time horizon of two years and keep only the first year. We then shift the window by one year for the following optimization. To handle the residual water volume, \(R_T\), we fix the final volume after two years at the initial level.

The objective function is maximized with a local search method called Threshold Accepting (Dueck and Scheuer 1990; Moscato and Fontanari 1990). The algorithm starts with a random turbine schedule and evaluates the corresponding objective function value. It then considers a solution in the neighborhood by introducing a small random perturbation to the current schedule and evaluates the new objective function value. The new solution is accepted if it is not worse than the current one by more than a given threshold. This means that the algorithm also allows downward steps in order to escape local optima. The threshold decreases gradually to zero, which means that in the final steps only solutions that improve the objective function are accepted. A data-driven procedure determines the threshold sequence, as described by Winker and Fang (1997) and adapted by Gilli et al. (2006). This algorithm as a whole has proven effective in finding an acceptable optimum. We do, however, acknowledge that other algorithms exist, as reviewed by Ahmad et al. (2014). For instance, some papers consider a genetic algorithm (Hincal et al. 2011; Cheng et al. 2008) or simulated annealing (Teegavarapu and Simonovic 2002). Some papers also investigate multi-objective optimization rather than a single objective function (Liao et al. 2014; Kougias et al. 2012).
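The logic of Threshold Accepting can be sketched on a deliberately simplified problem: choosing a fixed number of production hours so as to maximize the sum of the prices earned. The Python example below ignores head variation and reservoir bounds and uses a linearly decreasing threshold sequence rather than the data-driven one cited above; function and parameter names are hypothetical. It assumes that the number of production hours is strictly between zero and the number of available hours.

```python
import random

def threshold_accepting(prices, n_on, n_rounds=20, steps_per_round=500, seed=0):
    """Maximize the summed price of n_on production hours (toy problem)."""
    rng = random.Random(seed)
    n = len(prices)
    # Random initial schedule with exactly n_on producing hours
    on = set(rng.sample(range(n), n_on))
    value = sum(prices[i] for i in on)
    best_on, best_value = set(on), value
    spread = max(prices) - min(prices)
    for r in range(n_rounds):
        # Threshold decreases linearly and reaches zero in the last round
        threshold = spread * (1.0 - (r + 1) / n_rounds)
        for _ in range(steps_per_round):
            # Neighbor: switch one producing hour off and one idle hour on
            i = rng.choice(sorted(on))
            j = rng.choice([h for h in range(n) if h not in on])
            new_value = value - prices[i] + prices[j]
            # Accept unless the deterioration exceeds the threshold
            if new_value >= value - threshold:
                on.remove(i)
                on.add(j)
                value = new_value
                if value > best_value:
                    best_on, best_value = set(on), value
    return sorted(best_on), best_value

# Hypothetical hourly prices; the three most valuable hours are 3, 5 and 7
prices = [10, 50, 20, 80, 30, 70, 40, 60]
hours, total = threshold_accepting(prices, n_on=3)
```

In the early rounds the search accepts worse schedules freely; once the threshold reaches zero it behaves like a pure improvement search and settles on the best hours.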

We assume a clear-sighted manager, i.e. one who knows the price and runoff in advance. Even if this hypothesis is optimistic (Tanaka et al. 2006), it is commonly used in papers for computational efficiency (Francois et al. 2015; Maran et al. 2014; Hendrickx and Sauquet 2013; Schaefli et al. 2007). Since we look at trends and do not aim to forecast exact future revenue, this hypothesis does not affect our conclusions.

We validated our model by using historical data. Once the optimization had been performed with past runoff and electricity prices, we compared the hourly, weekly and seasonal production with the actual production. We also verified that the lake level followed the same pattern in our simulation as in reality. Gaudard et al. (2013a) provide more details about the management model.

3.5 Field Site

The case study is based on the hydropower plant of Mauvoisin, situated in the Swiss Alps (7°35′E, 46°00′N). A reservoir gathers the runoff coming from nine glaciers (Petit Combin, Corbassière, Tsessette, Mont Durand, Fenêtre, Crête Sèche, Otemma, Brenay, Giètro), which covered 40 % of the catchment area in 2009 (Gabbi et al. 2012).

The reservoir volume is 192 × 10⁶ m³, which represents 624 GWh. The mean multi-year production is 1040 GWh (years 1999–2009), 53 % in winter and 47 % in summer (FMM 2009). The reservoirs in the Alps transfer energy from summer, when snow and glaciers melt, to winter, when electricity demand is high.
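The correspondence between stored volume and energy can be checked with a few lines of arithmetic. The Python sketch below derives the energy content per cubic metre from the two figures above and, using \(E = \rho\,g\,\eta\,h\,V\), the implied product of efficiency and head. The constants for water density and gravity are standard values; the derived quantities are implied by the stated figures rather than reported plant data.

```python
RHO = 1000.0   # water density [kg m^-3]
G = 9.81       # acceleration due to gravity [m s^-2]

volume_m3 = 192e6      # reservoir volume stated in the text
energy_wh = 624e9      # corresponding energy stated in the text (624 GWh)

# Energy content of one cubic metre of stored water [kWh m^-3]
energy_per_m3_kwh = energy_wh / volume_m3 / 1e3

# Implied product of efficiency and hydraulic head [m], from E = rho*g*eta*h*V
energy_j = energy_wh * 3600.0
effective_head_m = energy_j / (RHO * G * volume_m3)
```

Each cubic metre of stored water thus corresponds to 3.25 kWh, which implies an efficiency-weighted head of roughly 1190 m.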

Several studies have been carried out on the impact of climate change in the Bagnes Valley, where the Mauvoisin power plant is situated (Gaudard 2015; Gaudard et al. 2013a; Gabbi et al. 2012; Terrier et al. 2011; Schaefli et al. 2007). So far, the operators have taken advantage of glacier melt, which increases the annual volume of water. According to Gabbi et al. (2012), this trend will persist for the next 20 years. The annual runoff will subsequently decrease in the second half of the century. By 2100, glaciers are expected to disappear in this catchment area. Thanks to the reservoir, the power plant operator may, however, mitigate the effects resulting from the change in runoff seasonality (Gaudard et al. 2013a). A new pumped-storage plant could even be built from 2040 on the area released by the glacier and thereby increase energy production on the site (Gaudard 2015; Terrier et al. 2011).

4 Results

In this section, we show how the scenarios and models affect the key variables of our study, i.e. climate, runoff, prices and revenue. The uncertainty analysis follows.

4.1 Climate Data

The evolution of air temperature and precipitation depends on the GHG emission scenario. The warmest scenario, i.e. A2, projects a mean local air temperature of +2 °C for the period 2091–2100 (data corresponds to an altitude of 2921 m a.s.l.). This is 3.7 °C warmer than the historical records for the period 2000–2009. Scenario A1B provides an intermediate situation, with a mean local air temperature of +1.4 °C for 2091–2100. The coolest scenario, i.e. RCP3PD, stabilizes the mean yearly temperature at −0.7 °C, which is below the melting point. Although those three GHG emission scenarios cover a large range of future evolutions, none affects the mean precipitation over the century. It stays at 3.7 mm day⁻¹.

In this study, we consider 10 GCM-RCM with 10 paths for each. The gaps between the extreme GCM-RCM may be significant. In the case of the A1B scenario and considering the end of the century, the gap is about 2 °C for air temperature and 0.4 mm day⁻¹ for precipitation. Whilst the coolest GCM-RCM predicts a mean temperature close to 0 °C, the warmest reaches 2 °C. This shows that the variability between the GCM-RCM is not far from the variability between GHG emission scenarios. Research investigations can fortunately reduce this epistemic uncertainty by using data from a large set of GCM-RCM, as done in our study.

The downscaling method, i.e. EDDM or DCM, affects the outcome. The expected mean temperature does not differ significantly between the two methods, unlike the interannual temperature variability. The latter remains stable with DCM, while EDDM slightly influences it. Besides temperature, DCM affects neither the mean precipitation nor its interannual variability, while EDDM affects both: the mean precipitation increases by 0.1 mm day⁻¹ and the interannual variability rises. This downscaling step represents a source of epistemic uncertainty that is currently being tackled by climatologists.

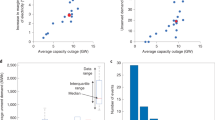

4.2 Glacier Evolution and Runoff

Figure 2 represents the evolution of inflows into the Mauvoisin reservoir for the three GHG emission scenarios. All emission scenarios have the same general pattern. The runoff increases slightly until 2040 compared to present-day conditions. Afterwards, inflows decrease by a similar value. While the mean recorded runoff was 292 × 10⁶ m³ for 1981–2010, it becomes 302, 300 and 297 × 10⁶ m³ for A1B, A2 and RCP3PD, respectively, for the period 2021–2050. For the period 2071–2100, the computed runoff is 243, 245 and 242 × 10⁶ m³. While the GHG emission scenario largely affects the warming, it unexpectedly represents a low source of uncertainty in terms of runoff.

The 10 GCM-RCM do not predict the same pattern. The mean runoff for 2071–2100 ranges from 224 × 10⁶ m³ to 260 × 10⁶ m³. The percentage error is about 8 %. This shows that studies based on few GCM-RCM suffer from high epistemic uncertainty.

The downscaling method (shown in the upper left-hand panel) has a higher impact by affecting the mean runoff. The EDDM forecasts a mean runoff of 262 × 10⁶ m³ for 2071–2100. This is 19 × 10⁶ m³ higher than the mean runoff obtained with the DCM. This is also beyond the extreme GCM-RCM outcomes.

The projected interannual variability is not affected by the DCM. It varies around the historical one, between 26 and 31 × 10⁶ m³, but it rises to 36 × 10⁶ m³ with EDDM. As argued by Quintana Segui et al. (2010), the downscaling method brings epistemic uncertainty and could be further improved.

The sensitivity of our results to the initial ice volume is relatively low. The bottom right graph of Fig. 2 shows the influence of overestimating this variable on the runoff, considering the A1B GHG emission scenario. The runoff increases by about 10 % in a first phase and then decreases to attain a mean annual runoff of 241 × 10⁶ m³ y⁻¹ in the last decades of the 21st century. It is surprisingly lower than with the correct ice volume, although within the error term. Moreover, Gabbi et al. (2012) have already highlighted that the shape of the glacier, not only the volume, has a major influence on the results.

4.3 Electricity Prices

Table 2, in the Annex, shows that the parameters of the price models are highly significant. The validation of each model is based on a comparison between the simulated prices and the historical ones. We have checked that the daily, weekly, and, if applicable, seasonal variations reflect the price behavior in the Continental Central-South area of Europe.

The volatility partially affects the annual mean price and its interannual variability. They are 41 (9.7), 46 (6.1), and 40 (2.0) € MWh⁻¹ in the case of \(\text{MR}_y\), \(\text{MR}_s\), and MRJD respectively (interannual variability in brackets). These values remain stable over the 120-year period, thanks to the mean reversion process. The mean prices therefore remain close to the historical mean price, which was about 39 (10.3) € MWh⁻¹, while the interannual variability is underestimated. This underlines the difficulty of finding models that are representative yet maintain a realistic level of variability.

Our models investigate the impact of seasonality only. We also tested a geometric Brownian motion model, which is not presented in this paper. The price was highly volatile since no reverting process was considered. The quantitative results led to one main, obvious conclusion: if the annual price can evolve freely, the variability of the price overtakes all other uncertainties. The interannual variability of the revenue became larger than the expected revenue.

4.4 Revenue

The annual revenue represents an aggregate outcome that enables comparison between the impacts of every input. Table 1 presents it in terms of a mean and a standard deviation. We show the results for each electricity price model for the A1B GHG emission scenario; otherwise, we only refer to the historical price, i.e. the Ref price model. This table also includes the impact of the downscaling method and the initial ice volume. Trends from the various scenarios or models must be compared, rather than the numbers being reviewed in isolation. As already stated, we are not aiming to forecast future revenue here; we are investigating uncertainty.

Our results show that climate change affects mean annual revenue, although the variation between various GHG emission scenarios is low (lines 1, 6 and 7). This is particularly striking for the period 2071–2100. During this period, the runoff resulting from the melting glacier will be low because of glacier exhaustion (A1B and A2) or air temperature stabilization (RCP3PD). The uncertainty is not necessarily shown to lie in the GHG emission scenarios.

It is interesting to note that annual revenue is not linearly related to the annual runoff. In the medium term, the A2 and RCP3PD GHG emission scenarios do not (negatively) affect revenue, as we notice a runoff rise. In contrast, the revenue computed for the A1B scenario is surprisingly high, whereas the annual runoff is close to that of the two other GHG emission scenarios. The seasonality can explain this result, even if the reservoir partially compensates for it.

Across the 10 GCM-RCM considered, the revenue ranges from 63 to 73.5 × 10⁶ € for the A1B GHG emission scenario and the period 2071–2100 (not given in the table). This gap is larger than the difference between GHG emission scenarios. Therefore, studies based on few climate models carry a high degree of epistemic uncertainty.

The uncertainty brought by the initial ice volume is not as high as the uncertainty resulting from the downscaling method (compare lines 1, 8 and 9). The mean revenue computed with the EDDM downscaling method is higher than that of the most extreme GCM-RCM computed with the DCM method. EDDM also tends to smooth the revenue evolution: it increases less in the medium term and decreases less in the second half of the century. Due to a lack of data, we were not able to assess more downscaling methods, although this would have been beneficial. Downscaling is an area of ongoing research in climatology (Fowler et al. 2007). The initial ice volume surprisingly has a relatively small impact.

The comparison of the electricity price models can barely demonstrate trends (lines 1 to 4). Our models are conservative as they do not consider drift. However, even under this hypothesis, the mean revenue differs widely from model to model. For the initial period 1981–2010, the mean revenue is not linearly correlated to the mean price. For instance, the Ref and MRJD models have a close mean price, but the revenues differ significantly. Beyond the mean, the seasonality and the volatility of the price matter too. The trouble is that, while the mean price has proven hard to predict, the volatility is even harder to predict.

This issue is particularly important as we can demonstrate that revenue is mainly correlated to price. For 2071–2100, the gap in annual revenue is larger across the price models than across the GHG emission scenarios. In fact, the correlation coefficient between mean runoff and revenue is 0.16, while it is 0.91 for mean annual price and revenue. Price is therefore the key factor for determining future revenue; however, it is also the least predictable factor.

4.5 The Three Dimensions of Uncertainty

We discuss the main uncertainties detailed in our research, in particular those uncertainties that are most relevant to policy makers. In this regard, we cover the various elements identified by Walker et al. (2003). Based on the above results, the level of uncertainty is classified as statistical, scenarios and ignorance. We also distinguish the nature, i.e. epistemic or variability.

Context:

Level: Climate and energy policy should factor in the high degree of uncertainty. Because it is impossible to predict GHG emissions and electricity prices in the long term, scenarios should be used. Concerning electricity prices, a level of ignorance should be recognized because market architecture and market structure are still evolving.

Nature: In the medium term, these uncertainties have an epistemic nature because they could be reduced by improving models. In the long term, they should be characterized as ‘variability uncertainty’ because the randomness of nature, human behavior, and socio-economic and technological dynamics prevails. Over such a period, no model appears more relevant than another, and our approach, i.e. comparing various models and scenarios, appears the most appropriate.

Model:

Level: The level of uncertainty linked to the model is for the most part statistical.

Nature: One should expect improvements in modeling as significant public and private funding is now available. Uncertainty is therefore of an epistemic nature, although it remains relatively low compared to the variability provoked by runoff, future prices, market design, etc. (see ‘context’ above and ‘inputs’ below).

Inputs:

Level: Uncertainty relating to runoff and electricity price scenarios turns out to be closely linked to ‘context’. Here, we discuss the scenarios as opposed to the outcomes themselves. Their nature and level therefore correspond to those of climate and energy policy. Concerning data, the electricity price time series show a statistical level of uncertainty.

Nature: Epistemic uncertainty affects the assessment of the initial ice volume and the downscaling methods (DCM and EDDM). We therefore provide a sensitivity analysis with respect to these inputs. However, most data show ‘variability uncertainty’.

Parameters:

Level: The parameters are determined by statistical methods (calibration techniques included).

Nature: They are subject to epistemic uncertainty insofar as the estimates can be refined. As longer time series become available, the estimators will improve.

Outcomes:

Level: The level of uncertainty of the intermediate and final outcomes, i.e. runoffs, electricity prices and revenue, is essentially ‘scenario’. The GHG emission and electricity price scenarios directly influence the outcomes. However, Mauvoisin’s long-term runoff uncertainty becomes mostly statistical due to the minimal gap between GHG scenarios.

Nature: The nature of the runoff uncertainty evolves with time. In our research, we consider a large panel of GCM-RCMs, thereby limiting the uncertainty attributable to them. The main uncertainty therefore stems from other sources. In the medium term, uncertainty is primarily brought by the three GHG emission scenarios, which depend on socio-economic variables. Those variables are barely predictable, which leads to a dominant variability uncertainty. In the long run, the situation switches. The downscaling method significantly affects the mean runoff for the period 2071–2100. Its effect exceeds the gap between the three GHG emission scenarios, which end up with a similar mean runoff. Climatologists are currently improving downscaling methods. We can therefore state that the long-run uncertainty is mostly epistemic.

The nature of electricity price uncertainty follows the reverse trend. In the medium term, the epistemic uncertainty is high. Scientists constantly build better models to forecast electricity prices. They also adapt their forecasts in light of new information. The uncertainty up to 2021–2050 will remain mostly epistemic. In the long run, the nature of the uncertainty becomes ‘variability’, because it is impossible to forecast precise prices over such a long timeframe regardless of the model’s quality. The unpredictable behavioral component becomes fundamental. As already mentioned, the use of different models and sensitivity analyses seems to be the most appropriate approach.

The uncertainty of revenue is directly induced by future runoff and electricity price uncertainty. Following the discussion in the two preceding paragraphs, the nature of revenue uncertainty is both epistemic and variability.

5 Discussion

We should point out that information about uncertainty is of great value to decision-makers. Improving the models reduces the epistemic uncertainty, whereas business and regulatory strategies can address variability. The dimension ‘nature’ shows who should deal with the uncertainty, i.e. scientists or decision-makers.

The ‘level’ may help in identifying the tools that should be considered to manage the uncertainty. In economics, statistical uncertainty is tackled by risk management methods, e.g. value at risk. The scenario level is close to the Knightian definition of uncertainty, i.e. probabilities cannot be estimated. Many rational decision-making methods address these kinds of issues, e.g. the minimum (minimax) regret criterion. Finally, ignorance cannot really be managed in any way. We cannot assume to know the unknown. However, the context of the study must be clearly defined in order to limit the impact of ignorance on the results as far as possible.
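As a hedged illustration of the two families of tools named above, the sketch below computes an empirical value at risk from a sample of simulated revenues (statistical level) and applies the minimax regret criterion to a small payoff table (scenario level). The function names, the revenue sample, the decision options and the payoffs are all hypothetical and serve only to show the mechanics.

```python
import math

def value_at_risk(revenues, expected, alpha=0.95):
    """Empirical VaR: revenue shortfall relative to `expected` that is
    not exceeded in a fraction `alpha` of the sampled outcomes."""
    losses = sorted(expected - r for r in revenues)
    index = min(len(losses) - 1, max(0, math.ceil(alpha * len(losses)) - 1))
    return losses[index]

def minimax_regret(payoffs):
    """payoffs[decision][scenario] -> (decision with smallest worst-case regret,
    worst-case regret of every decision). No scenario probabilities are needed."""
    scenarios = list(next(iter(payoffs.values())).keys())
    best = {s: max(p[s] for p in payoffs.values()) for s in scenarios}
    worst = {d: max(best[s] - p[s] for s in scenarios) for d, p in payoffs.items()}
    return min(worst, key=worst.get), worst

# Hypothetical simulated annual revenues (arbitrary monetary units).
sample = [62, 70, 55, 81, 48, 66, 73, 59, 77, 52]
expected_revenue = sum(sample) / len(sample)
var_90 = value_at_risk(sample, expected_revenue, alpha=0.9)

# Hypothetical payoffs of three investment options under three price scenarios.
payoffs = {
    "refurbish": {"low_price": -10, "mid_price": 20, "high_price": 75},
    "wait":      {"low_price": 0,   "mid_price": 5,  "high_price": 10},
    "expand":    {"low_price": -40, "mid_price": 25, "high_price": 100},
}
choice, regrets = minimax_regret(payoffs)
print("VaR (90 %):", var_90)
print("Minimax regret choice:", choice, regrets)
```

Value at risk requires a probability distribution, here approximated by the sample, whereas minimax regret only ranks decisions by their worst-case opportunity loss across scenarios, which is why it suits the scenario level.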

This paper discusses the epistemic uncertainty occurring at various levels of the model. However, the specific literature on the individual components is extensive. The uncertainty relating to the climate model is discussed at global and regional level by Deque et al. (2007) and Murphy et al. (2004). The various Intergovernmental Panel on Climate Change reports also provide valuable discussion on this issue (IPCC 2015). Monier et al. (2015) present a new framework to assess climate uncertainty which is close to ours. Their results show that the largest uncertainty in terms of temperature comes from climate policy. Gabbi et al. (2014) test five different glacier melt models and show that the choice of the melt approach is crucial. Finally, Teng et al. (2012) analyse a case study where uncertainty is mostly driven by the GCM rather than the rainfall model. These studies, together with our results, show that uncertainty, like forecasting, is case study and model related.

As pointed out throughout this paper, price uncertainty is far more critical than runoff uncertainty. Linderoth (2002) tests various energy consumption forecasts performed by the International Energy Agency. He shows that forecast errors are significant. Given that price is even less predictable than consumption, we can conclude that the problem is even wider. By arguing that scenario analysis and mixed methods are valuable in short-term management too, O’Mahony (2014) supports our approach and conclusion. Therefore, uncertainty related to energy price quickly shifts from epistemic uncertainty to variability.

Although decision makers must account for epistemic uncertainty, they must above all manage variability. First, they can act on the ‘level’. One way is to develop market design and risk-hedging tools. Futures markets already exist but should be enhanced. Most importantly, however, energy policy can reduce uncertainty at the ignorance level. Several energy utilities are at present facing forms of turmoil that were only partially predictable. The phase-out of nuclear power in some countries and the large subsidies for renewables are worth mentioning. The alternative way to deal with variability is to increase flexibility. However, the regulatory framework can represent an obstacle from this point of view. For instance, the water rights in some countries limit the plant operator’s flexibility. The legal framework often makes it difficult to adopt a real option approach. For instance, some hydropower operators must renegotiate their water rights if they want to update the design of their installation. This constraint makes it difficult to manage high uncertainty.

6 Conclusions

Decision-makers cannot avoid uncertainty. Several studies focus on projections, but an assessment of the uncertainties is seldom carried out. Our model integrates runoff and electricity prices in a common framework in order to evaluate revenue. Based on those quantitative results, we carry out an uncertainty analysis, which is a critical issue for the future of hydropower.

Specific tools must be developed in order to tackle uncertainty. Hydropower is a capital-intensive technology with a long lifetime. Forecasting revenue over the entire life of a project is impossible. The limits of forecasts should be acknowledged, and methods must be developed accordingly. Assessing uncertainty is only the first step in a strategy to ensure the best possible future for hydropower.

7 Annex

References

Ahmad A, El-Shafie A, Razali S, Mohamad Z (2014) Reservoir Optimization in Water Resources: a Review. Water Resour Manag 28:3391–3405. doi:10.1007/s11269-014-0700-5

Bollerslev T (1986) Generalized autoregressive conditional heteroskedasticity. J Econ 31:307–327. doi:10.1016/0304-4076(86)90063-1

Bosshard T, Kotlarski S, Ewen T, Schär C (2011) Spectral representation of the annual cycle in the climate change signal. Hydrol Earth Syst Sci 15:2777–2788

Box G, Pierce D (1970) Distribution of residual autocorrelations in autoregressive-integrated moving average time series models. J Am Stat Assoc 65:1509–1526

C2SM (2014) www.c2sm.ethz.ch (site accessed 06 February 2014). Center for Climate Systems Modeling, ETHZ

Cheng CT, Wang WC, Xu DM, Chau KW (2008) Optimizing hydropower reservoir operation using hybrid genetic algorithm and chaos. Water Resour Manag 22:895–909. doi:10.1007/s11269-007-9200-1

Creswell J, Plano Clark V (2011) Designing and conducting mixed methods research, 2nd edn. SAGE Publications

Deque M, Rowell DP, Luethi D, Giorgi F, Christensen JH, Rockel B, Jacob D, Kjellstrom E, De Castro M, Van den Hurk B (2007) An intercomparison of regional climate simulations for Europe: assessing uncertainties in model projections. Clim Chang 81:53–70. doi:10.1007/s10584-006-9228-x

Dueck G, Scheuer T (1990) Threshold accepting. A general purpose optimization algorithm superior to simulated annealing. J Comput Phys 90:161–175

EEA (2000) Cloudy crystal balls - an assessment of recent European and global scenario studies and models www.eea.europa.eu/publications/Environmental_issues_series_17 (site accessed 05 January 2015). European Environment Agency

EEX (2013) www.eex.com (site accessed 04 August 2013). European Energy Exchange

ENSEMBLES project (2013) www.ensembles-eu.org (site accessed 01 October 2013)

Eurostat (2013) ec.europa.eu/eurostat (site accessed 31 August 2013)

Farinotti D, Huss M, Bauder A, Funk M, Truffer M (2009) A method to estimate the ice volume and ice-thickness distribution of alpine glaciers. J Glaciol 55:422–430

Farinotti D, Usselmann S, Huss M, Bauder A, Funk M (2012) Runoff evolution in the Swiss Alps: Projections for selected high-Alpine catchments based on ENSEMBLES scenarios. Hydrol Process 26:1909–1924

Finger D, Vis M, Huss M, Seibert J (2015) The value of multiple data set calibration versus model complexity for improving the performance of hydrological models in mountain catchments. Water Resour Res 51:1939–1958. doi:10.1002/2014WR015712

FMM (2009) Management reports. Forces motrices de Mauvoisin

Forsund F (2007) Hydropower Economics. In: International Series in Operations Research and Management Science. Springer

Fowler HJ, Blenkinsop S, Tebaldi C (2007) Linking climate change modelling to impacts studies: recent advances in downscaling techniques for hydrological modelling. Int J Climatol 27:1547–1578. doi:10.1002/joc.1556

Francois B, Hingray B, Creutin JD, Hendrickx F (2015) Estimating water system performance under climate change: influence of the management strategy modeling. Water Resour Manag 29:4903–4918. doi:10.1007/s11269-015-1097-5

Gabbi J, Carenzo M, Pellicciotti F, Bauder A, Funk M (2014) A comparison of empirical and physically based glacier surface melt models for long-term simulations of glacier response. J Glaciol 60:1140–1154

Gabbi J, Farinotti D, Bauder A, Maurer H (2012) Ice volume distribution and implications on runoff projections in a glacierized catchment. Hydrol Earth Syst Sci 16:4543–4556

Garcia R, Contreras J, Van Akkeren M, Garcia J (2005) A GARCH forecasting model to predict day-ahead electricity prices. IEEE Trans Power Syst 20:867–874. doi:10.1109/TPWRS.2005.846044

Gaudard L (2015) Pumped-storage project: A short to long term investment analysis including climate change. Renew Sust Energy Rev 49:91–99. doi:10.1016/j.rser.2015.04.052

Gaudard L, Gilli M, Romerio F (2013a) Climate change impacts on hydropower management. Water Resour Manag 27:5143–5156

Gaudard L, Romerio F (2014) The future of hydropower in Europe: Interconnecting climate, markets and policies. Environ Sci Policy 37:172–181

Gilli M, Këllezi M, Hysi H (2006) A data-driven optimization heuristic for downside risk minimization. J Risk 8(3):1–18

Hamududu B, Killingtveit A (2012) Assessing climate change impacts on global hydropower. Energies 5:305–322

Hendrickx F, Sauquet E (2013) Impact of warming climate on water management for the Ariege River basin (France). Hydrol Sci J 58:976–993. doi:10.1080/02626667.2013.788790

Hill Clarvis M, Fatichi S, Allan A, Fuhrer J, Stoffel M, Romerio F, Gaudard L, Burlando P, Beniston M, Xoplaki E, Toreti A (2014) Governing and managing water resources under changing hydro-climatic contexts: The case of the Upper-Rhône basin. Environ Sci Policy 43:56–67. doi:10.1016/j.envsci.2013.11.005

Hincal O, Altan-Sakarya AB, Ger AM (2011) Optimization of multireservoir systems by genetic algorithm. Water Resour Manag 25:1465–1487. doi:10.1007/s11269-010-9755-0

Huss M, Farinotti D, Bauder A, Funk M (2008) Modelling runoff from highly glacierized Alpine drainage basins in a changing climate. Hydrol Process 22:3888–3902

IEA (2005) Variability of wind power and other renewables: Management options and strategies. International Energy Agency, Paris

IPCC (2000) Special report on emissions scenarios. www.ipcc.ch (site accessed 14 January 2014). Intergovernmental Panel on Climate Change, Geneva

IPCC (2013) Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. In: Stocker TF, Qin D, Plattner G-K, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, Midgley PM (eds). Intergovernmental Panel on Climate Change, Geneva. Cambridge University Press, NY, USA

IPCC (2015) www.ipcc.ch (site accessed 15 November 2015)

Kaminski V (1997) The challenge of pricing and risk managing electricity derivatives. In: The US Power Market. Risk Books, London, pp 149–171

Kougias I, Katsifarakis L, Theodossiou N (2012) Medley multiobjective harmony search algorithm: Application on a water resources management problem. Eur Water 39:41–52

Kowalski K, Stagl S, Madlener R, Omann I (2009) Sustainable energy futures: Methodological challenges in combining scenarios and participatory multi-criteria analysis. Eur J Oper Res 197:1063–1074. doi:10.1016/j.ejor.2007.12.049

Liao X, Zhou J, Ouyang S, Zhang R, Zhang Y (2014) Multi-objective artificial bee colony algorithm for long-term scheduling of hydropower system: A case study of China. Water Util J 7:13–23

Linderoth H (2002) Forecast errors in IEA-countries’ energy consumption. Energy Policy 30:53–61. doi:10.1016/S0301-4215(01)00059-3

Madani K, Lund JR (2010) Estimated impacts of climate warming on California’s high-elevation hydropower. Clim Chang 102:521–538. doi:10.1007/s10584-009-9750-8

Majone B, Villa F, Deidda R, Bellin A (2015) Impact of climate change and water use policies on hydropower potential in the south-eastern alpine region. Sci Total Environ (in press). doi:10.1016/j.scitotenv.2015.05.009

Maran S, Volonterio M, Gaudard L (2014) Climate change impacts on hydropower in an alpine catchment. Environ Sci Policy 43:15–25

Meinshausen M, Smith SJ, Calvin K, Daniel JS, Kainuma MLT, Lamarque JF, Matsumoto K, Montzka SA, Raper SCB, Riahi K, Thomson A, Velders GJM, Van Vuuren DP (2011) The RCP greenhouse gas concentrations and their extensions from 1765 to 2300. Clim Chang 109:213–241

MeteoSwiss (2013) www.meteosuisse.ch (site accessed 13 July 2013). Federal Office of Meteorology and Climatology

Monier E, Gao X, Scott JR, Sokolov AP, Schlosser CA (2015) A framework for modeling uncertainty in regional climate change. Clim Chang 131:51–66. doi:10.1007/s10584-014-1112-5

Moscato P, Fontanari J (1990) Stochastic versus deterministic update in simulated annealing. Phys Lett A 146:204–208

Murphy J, Sexton D, Barnett D, Jones G, Webb M, Stainforth D (2004) Quantification of modelling uncertainties in a large ensemble of climate change simulations. Nature 430:768–772. doi:10.1038/nature02771

O’Mahony T (2014) Integrated scenarios for energy: A methodology for the short term. Futures 55:41–57. doi:10.1016/j.futures.2013.11.002

Quintana Segui P, Ribes A, Martin E, Habets F, Boe J (2010) Comparison of three downscaling methods in simulating the impact of climate change on the hydrology of Mediterranean basins. J Hydrol 383:111–124. doi:10.1016/j.jhydrol.2009.09.050

Schaefli B (2015) Projecting hydropower production under future climates: a guide for decision-makers and modelers to interpret and design climate change impact assessments. WIREs Water 2:271–289

Schaefli B, Hingray B, Musy A (2007) Climate change and hydropower production in the Swiss Alps: Quantification of potential impacts and related modelling uncertainties. Hydrol Earth Syst Sci 11:1191–1205

SHARE project (2013) Handbook: A problem solving approach for sustainable management of hydropower and river ecosystems in the Alps. www.alpine-space.eu (site accessed 14 January 2014)

Tanaka SK, Zhu T, Lund JR, Howitt RE, Jenkins MW, Pulido MA, Tauber M, Ritzema RS, Ferreira IC (2006) Climate warming and water management adaptation for California. Clim Chang 76:361–387. doi:10.1007/s10584-006-9079-5

Teegavarapu R, Simonovic S (2002) Optimal operation of reservoir systems using simulated annealing. Water Resour Manag 16:401–428. doi:10.1023/A:1021993222371

Teng J, Vaze J, Chiew FHS, Wang B, Perraud JM (2012) Estimating the relative uncertainties sourced from GCMs and hydrological models in modeling climate change impact on runoff. J Hydrometeorol 13:122–139. doi:10.1175/JHM-D-11-058.1

Terrier S, Jordan F, Schleiss A, Haeberli W, Huggel C, Künzler M (2011) Optimized and adapted hydropower management considering glacier shrinkage scenarios in the Swiss Alps. In: Proceeding of International Symposium on Dams and Reservoirs under Changing Challenges. CRC Press, Taylor & Francis Group, London, pp 497–508

THINK Project (2013) Some thinking on European energy policy: Think tank advising the European Commission on mid- and long-term energy policy. think.eui.eu (site accessed 13 January 2014)

Tsay RS (2010) Analysis of Financial Time Series. Wiley

Uhlenbeck G, Ornstein L (1930) On the theory of Brownian motion. Phys Rev 36:823–841

Walker W, Harremoës P, Rotmans J, Van Der Sluijs J, Van Asselt M, Janssen P, Krayer Von Krauss M (2003) Defining uncertainty - a conceptual basis for uncertainty management in model-based decision support. Integr Assess 4:5–17

Winker P, Fang K (1997) Application of threshold-accepting to the evaluation of the discrepancy of a set of points. Siam J Numer Anal 34:2028–2042. doi:10.1137/S0036142995286076

Wooldridge JM (2009) Introductory Econometrics: A Modern Approach, 4th edn. CENGAGE Learning, Canada

Acknowledgments

This study was carried out within the framework of the Swiss research programme 61 (www.nfp61.ch). We are grateful to Prof. Martin Funk and Prof. Manfred Gilli for providing valuable scientific inputs and proofreading the paper. We also thank Forces Motrices de Mauvoisin for sharing their expertise.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Gaudard, L., Gabbi, J., Bauder, A. et al. Long-term Uncertainty of Hydropower Revenue Due to Climate Change and Electricity Prices. Water Resour Manage 30, 1325–1343 (2016). https://doi.org/10.1007/s11269-015-1216-3

DOI: https://doi.org/10.1007/s11269-015-1216-3