Abstract

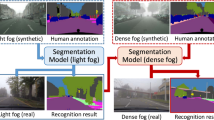

This work addresses the problem of semantic foggy scene understanding (SFSU). Although extensive research has been performed on image dehazing and on semantic scene understanding with clear-weather images, little attention has been paid to SFSU. Because foggy images are difficult to collect and annotate, we generate synthetic fog on real images that depict clear-weather outdoor scenes, and then leverage these partially synthetic data for SFSU by employing state-of-the-art convolutional neural networks (CNNs). In particular, we develop a complete pipeline that adds synthetic fog to real, clear-weather images using incomplete depth information. We apply our fog synthesis to the Cityscapes dataset and generate Foggy Cityscapes with 20,550 images. SFSU is tackled in two ways: (1) with typical supervised learning, and (2) with a novel type of semi-supervised learning, which combines (1) with an unsupervised supervision transfer from clear-weather images to their synthetic foggy counterparts. In addition, we carefully study the usefulness of image dehazing for SFSU. For evaluation, we present Foggy Driving, a dataset with 101 real-world images depicting foggy driving scenes, which come with ground truth annotations for semantic segmentation and object detection. Extensive experiments show that (1) supervised learning with our synthetic data significantly improves the performance of state-of-the-art CNNs for SFSU on Foggy Driving; (2) our semi-supervised learning strategy further improves performance; and (3) image dehazing marginally advances SFSU with our learning strategy. The datasets, models and code are made publicly available.
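To make the idea of depth-based fog synthesis concrete, the following is a minimal sketch, assuming the standard optical model for homogeneous fog (Koschmieder's law), in which a pixel at distance d keeps a fraction t = exp(-beta * d) of its clear-weather color and receives the rest from a constant atmospheric light. The abstract does not spell out the model or its parameters, so the function names, the attenuation coefficient `beta`, and the atmospheric light value below are illustrative assumptions. The sketch also assumes a complete depth map, whereas the pipeline described above has to work with incomplete depth.

```python
import math

def transmission(depth_m, beta):
    """Fraction of scene radiance that survives over depth_m metres of fog."""
    return math.exp(-beta * depth_m)

def fog_pixel(clear_rgb, depth_m, beta=0.01, atmospheric_light=(0.9, 0.9, 0.9)):
    """Blend one clear-weather pixel (values in [0, 1]) with the atmospheric light.

    beta and atmospheric_light are hypothetical values chosen for illustration.
    """
    t = transmission(depth_m, beta)
    return tuple(c * t + a * (1.0 - t) for c, a in zip(clear_rgb, atmospheric_light))

def add_fog(image, depth, beta=0.01, atmospheric_light=(0.9, 0.9, 0.9)):
    """Apply fog_pixel to every pixel of an image stored as nested lists.

    image: rows of (r, g, b) tuples; depth: rows of distances in metres.
    """
    return [
        [fog_pixel(px, d, beta, atmospheric_light) for px, d in zip(img_row, depth_row)]
        for img_row, depth_row in zip(image, depth)
    ]

# Usage: a 1x2 image whose second pixel is much farther from the camera.
image = [[(0.2, 0.4, 0.6), (0.2, 0.4, 0.6)]]
depth = [[10.0, 400.0]]
foggy = add_fog(image, depth)
```

In this sketch the distant pixel is pulled much closer to the atmospheric light than the near one, which is the depth-dependent loss of contrast that a fog simulator of this kind is meant to reproduce.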

Acknowledgements

The authors would like to thank Kevis Maninis for useful discussions. This work is funded by Toyota Motor Europe via the research project TRACE-Zürich.

Additional information

Communicated by Adrien Gaidon, Florent Perronnin and Antonio Lopez.

Cite this article

Sakaridis, C., Dai, D. & Van Gool, L. Semantic Foggy Scene Understanding with Synthetic Data. Int J Comput Vis 126, 973–992 (2018). https://doi.org/10.1007/s11263-018-1072-8

DOI: https://doi.org/10.1007/s11263-018-1072-8