Abstract

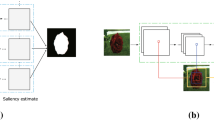

Existing computational models for salient object detection rely primarily on hand-crafted features, which capture only low-level contrast information. In this paper, we learn hierarchical contrast features by formulating salient object detection as a binary labeling problem solved with deep learning techniques. We propose a novel superpixelwise convolutional neural network approach, called SuperCNN, that learns internal representations of saliency efficiently. Unlike classical convolutional networks, SuperCNN has four main properties. First, it learns hierarchical contrast features because it is fed two meaningful superpixel sequences, an input that is much more effective for detecting salient regions than raw image pixels. Second, because SuperCNN recovers the contextual information among superpixels, it can efficiently take a large context into account. Third, owing to the superpixelwise mechanism, the number of predictions required for a densely labeled map is greatly reduced. Fourth, a multiscale network structure allows saliency to be detected independently of region size. Experiments show that SuperCNN robustly detects salient objects and outperforms state-of-the-art methods on three benchmark datasets.
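
The abstract names the ingredients of the superpixelwise idea without spelling them out. The following is a minimal, hypothetical sketch in Python, not the SuperCNN architecture itself. It assumes a precomputed superpixel label map (for instance from SLIC), builds for each superpixel a sequence of color distances to all other superpixels ordered by spatial distance (an assumed stand-in for the paper's two superpixel sequences), applies one fixed 1D convolution, and max-pools the result in place of learned layers. The helper names (`superpixel_stats`, `contrast_sequence`, `conv1d`, `saliency_map`) are illustrative only.

```python
import math
from typing import Dict, List, Tuple

Color = Tuple[float, float, float]
Point = Tuple[float, float]


def superpixel_stats(labels: List[List[int]], image: List[List[Color]]):
    """Return the mean color and the centroid (x, y) of every superpixel id."""
    acc: Dict[int, List[float]] = {}
    for y, row in enumerate(labels):
        for x, sp in enumerate(row):
            s = acc.setdefault(sp, [0.0] * 6)  # r, g, b, x, y, pixel count
            r, g, b = image[y][x]
            for i, v in enumerate((r, g, b, x, y, 1)):
                s[i] += v
    colors: Dict[int, Color] = {k: (s[0] / s[5], s[1] / s[5], s[2] / s[5]) for k, s in acc.items()}
    centers: Dict[int, Point] = {k: (s[3] / s[5], s[4] / s[5]) for k, s in acc.items()}
    return colors, centers


def contrast_sequence(target: int, colors, centers) -> List[float]:
    """Color distance from `target` to every other superpixel, spatially nearest first."""
    others = sorted((k for k in colors if k != target),
                    key=lambda k: math.dist(centers[target], centers[k]))
    return [math.dist(colors[target], colors[k]) for k in others]


def conv1d(seq: List[float], kernel: List[float]) -> List[float]:
    """'Valid' 1D cross-correlation, the operation a 1D convolutional layer applies."""
    n = len(kernel)
    return [sum(seq[i + j] * kernel[j] for j in range(n))
            for i in range(len(seq) - n + 1)]


def saliency_map(labels, image, kernel):
    """Score each superpixel once, then paint the score onto all of its pixels."""
    colors, centers = superpixel_stats(labels, image)
    scores = {}
    for sp in colors:
        features = conv1d(contrast_sequence(sp, colors, centers), kernel)
        # Max-pooling stands in for the learned layers and classifier (assumption).
        scores[sp] = max(features) if features else 0.0
    dense = [[scores[sp] for sp in row] for row in labels]
    return dense, len(scores)


# Toy 4x4 image: four 2x2 superpixels, only superpixel 3 is white.
labels = [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
image = [[(1.0, 1.0, 1.0) if sp == 3 else (0.0, 0.0, 0.0) for sp in row] for row in labels]
sal, n_predictions = saliency_map(labels, image, kernel=[0.5, 0.5])
print(n_predictions, "predictions for", len(labels) * len(labels[0]), "pixels")  # 4 predictions for 16 pixels
```

The sketch illustrates the prediction-count argument: the scoring step runs once per superpixel and its output is copied to every pixel in that superpixel, so a 400×300 image split into, say, 300 superpixels would need about 300 evaluations rather than 120,000.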

Acknowledgments

We would like to thank the anonymous reviewers for their insightful comments and constructive suggestions. The work described in this paper was partially supported by a GRF grant and an ECS grant from the RGC of Hong Kong (RGC Ref.: CityU 115112 and CityU 21201914).

Additional information

Communicated by Cordelia Schmid.

About this article

Cite this article

He, S., Lau, R.W.H., Liu, W. et al. SuperCNN: A Superpixelwise Convolutional Neural Network for Salient Object Detection. Int J Comput Vis 115, 330–344 (2015). https://doi.org/10.1007/s11263-015-0822-0

DOI: https://doi.org/10.1007/s11263-015-0822-0