Abstract

The level set approach has proven widely successful in the study of inverse problems for interfaces, since its systematic development in the 1990s. Recently it has been employed in the context of Bayesian inversion, allowing for the quantification of uncertainty within the reconstruction of interfaces. However, the Bayesian approach is very sensitive to the length and amplitude scales in the prior probabilistic model. This paper demonstrates how the scale-sensitivity can be circumvented by means of a hierarchical approach, using a single scalar parameter. Together with careful consideration of the development of algorithms which encode probability measure equivalences as the hierarchical parameter is varied, this leads to well-defined Gibbs-based MCMC methods found by alternating Metropolis–Hastings updates of the level set function and the hierarchical parameter. These methods demonstrably outperform non-hierarchical Bayesian level set methods.

Notes

i.e., the function has s weak (possibly fractional) derivatives in the Sobolev sense, and the \(\lfloor s \rfloor \)th classical derivative is Hölder with exponent \(s-\lfloor s \rfloor \).

For any subset \(A\subset \mathbb {R}^d\) we will denote by \(\overline{A}\) its closure in \(\mathbb {R}^d\).

The thresholding function F is defined pointwise, so can be considered to be defined on either \(\mathbb {R}^N\) or \(\mathbb {R}\), with \(F(u)_n = F(u_n)\).

KL modes are the eigenfunctions of the covariance operator, here ordered by decreasing eigenvalue.

In the EIT literature the conductivity field is often denoted \(\sigma \); however, we have already used this in denoting the marginal variance of random fields.

References

Adler, A., Lionheart, W.R.B.: Uses and abuses of EIDORS: an extensible software base for EIT. Physiol. Meas. 27(5), S25–S42 (2006)

Agapiou, S., Bardsley, J.M., Papaspiliopoulos, O., Stuart, A.M.: Analysis of the Gibbs sampler for hierarchical inverse problems. SIAM/ASA J. Uncertain. Quantif. 2(1), 511–544 (2014)

Alvarez, L., Morel, J.M.: Formalization and computational aspects of image analysis. Acta Numer. 3, 1–59 (1994)

Arbogast, T., Wheeler, M.F., Yotov, I.: Mixed finite elements for elliptic problems with tensor coefficients as cell-centered finite differences. SIAM J. Numer. Anal. 34, 828–852 (1997)

Bear, J.: Dynamics of Fluids in Porous Media. Dover Publications, New York (1972)

Beskos, A., Roberts, G.O., Stuart, A.M., Voss, J.: MCMC methods for diffusion bridges. Stoch. Dyn. 8, 319–350 (2008)

Bishop, C.M.: Pattern Recognition and Machine Learning. Springer, New York (2006)

Bolin, D., Lindgren, F.: Excursion and contour uncertainty regions for latent Gaussian models. J. R. Stat. Soc. Ser. B 77(1), 85–106 (2015)

Borcea, L.: Electrical impedance tomography. Inverse Probl. 18, R99–R136 (2002)

Burger, M.: A level set method for inverse problems. Inverse Probl. 17(5), 1327–1355 (2001)

Calvetti, D., Somersalo, E.: A Gaussian hypermodel to recover blocky objects. Inverse Probl. 23(2), 733–754 (2007)

Calvetti, D., Somersalo, E.: Hypermodels in the Bayesian imaging framework. Inverse Probl. 24(3), 34013 (2008)

Carrera, J., Neuman, S.P.: Estimation of aquifer parameters under transient and steady state conditions: 3. application to synthetic and field data. Water Resour. Res. 22(2), 228–242 (1986)

Chung, E.T., Chan, T.F., Tai, X.-C.: Electrical impedance tomography using level set representation and total variational regularization. J. Comput. Phys. 205(1), 357–372 (2005)

Cotter, S.L., Roberts, G.O., Stuart, A.M., White, D.: MCMC methods for functions: modifying old algorithms to make them faster. Stat. Sci. 28(3), 424–446 (2013)

Da Prato, G., Zabczyk, J.: Second Order Partial Differential Equations in Hilbert Spaces, vol. 293. Cambridge University Press, Cambridge (2002)

Dashti, M., Stuart, A.M.: The Bayesian approach to inverse problems. In: Ghanem, R., Higdon, D., Owhadi, H. (eds.) Handbook of Uncertainty Quantification. Springer, Heidelberg (2016)

Dorn, O., Lesselier, D.: Level set methods for inverse scattering. Inverse Probl. 22(4), R67–R131 (2006)

Dunlop, M.M., Stuart, A.M.: The Bayesian formulation of EIT: analysis and algorithms. arXiv:1508.04106 (2015)

Filippone, M., Girolami, M.: Pseudo-marginal Bayesian inference for Gaussian processes. IEEE Trans. Pattern Anal. Mach. Intell. 36(11), 2214–2226 (2014)

Franklin, J.N.: Well posed stochastic extensions of ill posed linear problems. J. Math. Anal. Appl. 31(3), 682–716 (1970)

Fuglstad, G-A., Simpson, D., Lindgren, F., Rue, H.: Interpretable priors for hyperparameters for Gaussian random fields. arXiv:1503.00256 (2015)

Geirsson, Ó.P., Hrafnkelsson, B., Simpson, D., Sigurðarson, H.: The MCMC split sampler: a block Gibbs sampling scheme for latent Gaussian models. arXiv:1506.06285 (2015)

Girolami, M., Calderhead, B.: Riemann manifold Langevin and Hamiltonian Monte Carlo methods. J. R. Stat. Soc. Ser. B 73(2), 123–214 (2011)

Hairer, M., Stuart, A.M., Vollmer, S.J.: Spectral gaps for Metropolis–Hastings algorithms in infinite dimensions. Ann. Appl. Prob. 24, 2455–2490 (2014)

Hanke, M.: A regularizing Levenberg–Marquardt scheme, with applications to inverse groundwater filtration problems. Inverse Probl. 13, 79–95 (1997)

Iglesias, M.A.: A regularizing iterative ensemble Kalman method for PDE-constrained inverse problems. Inverse Probl. 32(2), 025002 (2016)

Iglesias, M.A., Dawson, C.: The representer method for state and parameter estimation in single-phase Darcy flow. Comput. Methods Appl. Mech. Eng. 196(1), 4577–4596 (2007)

Iglesias, M.A., Law, K.J.H., Stuart, A.M.: The ensemble Kalman filter for inverse problems. Inverse Probl. 29(4), 045001 (2013)

Iglesias, M.A., Lu, Y., Stuart, A.M.: A Bayesian level set method for geometric inverse problems. Interfaces and Free Boundary Problems (2016) (to appear)

Kaipio, J.P., Somersalo, E.: Statistical and Computational Inverse Problems. Springer, New York (2005)

Lasanen, S.: Non-Gaussian statistical inverse problems. Part I: posterior distributions. Inverse Probl. Imag. 6(2), 215–266 (2012)

Lasanen, S.: Non-Gaussian statistical inverse problems. Part II: posterior convergence for approximated unknowns. Inverse Probl. Imag. 6(2), 267–287 (2012)

Lasanen, S., Huttunen, J.M.J., Roininen, L.: Whittle-Matérn priors for Bayesian statistical inversion with applications in electrical impedance tomography. Inverse Probl. Imag. 8(2), 561–586 (2014)

Lehtinen, M.S., Päivärinta, L., Somersalo, E.: Linear inverse problems for generalised random variables. Inverse Probl. 5(4), 599–612 (1989)

Lindgren, F., Rue, H.: Bayesian spatial modelling with R-INLA. J. Stat. Softw. 63(19), 63–76 (2015)

Lorentzen, R.J., Flornes, K.M., Nævdal, G.: History matching channelized reservoirs using the ensemble Kalman filter. Soc. Pet. Eng. J. 17(1), 122–136 (2012)

Lorentzen, R.J., Nævdal, G., Shafieirad, A.: Estimating facies fields by use of the ensemble Kalman filter and distance functions-applied to shallow-marine environments. Soc. Pet. Eng. J. 3, 146–158 (2012)

Mandelbaum, A.: Linear estimators and measurable linear transformations on a Hilbert space. Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 65(3), 385–397 (1984)

Matérn, B.: Spatial Variation, vol. 36. Springer Science & Business Media, Berlin (2013)

Marshall, R.J., Mardia, K.V.: Maximum likelihood estimation of models for residual covariance in spatial regression. Biometrika 71(1), 135–146 (1984)

Osher, S., Sethian, J.A.: Fronts propagating with curvature dependent speed: algorithms based on Hamilton–Jacobi formulations. J. Comput. Phys. 79, 12–49 (1988)

Ping, J., Zhang, D.: History matching of channelized reservoirs with vector-based level-set parameterization. Soc. Pet. Eng. J. 19, 514–529 (2014)

Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. The MIT Press, Cambridge (2006)

Robert, C., Casella, G.: Monte Carlo Statistical Methods. Springer Science & Business Media, Berlin (2013)

Santosa, F.: A level-set approach for inverse problems involving obstacles. ESAIM 1(1), 17–33 (1996)

Sapiro, G.: Geometric Partial Differential Equations and Image Analysis. Cambridge University Press, Cambridge (2006)

Srinivasa Varadhan, S.R.: Probability Theory. Courant Lecture Notes. Courant Institute of Mathematical Sciences, New York (2001)

Somersalo, E., Cheney, M., Isaacson, D.: Existence and uniqueness for electrode models for electric current computed tomography. SIAM J. Appl. Math. 52(4), 1023–1040 (1992)

Stein, M.L.: Interpolation of Spatial Data: Some Theory for Kriging. Springer Science & Business Media, Berlin (2012)

Stuart, A.M.: Inverse problems: a Bayesian perspective. Acta Numer. 19, 451–559 (2010)

Tai, X.-C., Chan, T.F.: A survey on multiple level set methods with applications for identifying piecewise constant functions. Int. J. Numer. Anal. Model. 1(1), 25–48 (2004)

Tierney, L.: A note on Metropolis–Hastings kernels for general state spaces. Ann. Appl. Prob. 8(1), 1–9 (1998)

van der Vaart, A.W., van Zanten, J.H.: Adaptive Bayesian estimation using a Gaussian random field with inverse gamma bandwidth. Ann. Stat. 37, 2655–2675 (2009)

Xie, J., Efendiev, Y., Datta-Gupta, A.: Uncertainty quantification in history matching of channelized reservoirs using Markov chain level set approaches. Soc. Pet. Eng. 1, 49–76 (2011)

Zhang, H.: Inconsistent estimation and asymptotically equal interpolations in model-based geostatistics. J. Am. Stat. Assoc. 99(465), 250–261 (2004)

Acknowledgments

AMS is grateful to DARPA, EPSRC, and ONR for the financial support. MMD was supported by the EPSRC-funded MASDOC graduate training program. The authors are grateful to Dan Simpson for helpful discussions. The authors are also grateful for discussions with Omiros Papaspiliopoulos about links with probit. The authors would also like to thank the two anonymous referees for their comments that have helped improve the quality of the paper. This research utilized Queen Mary’s MidPlus computational facilities, supported by QMUL Research-IT and funded by EPSRC grant EP/K000128/1.

Author information

Authors and Affiliations

Corresponding author

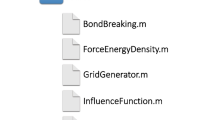

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

1.1 Proof of theorems

Proof

(Theorem 1)

(i) Note that it suffices to show that \(\mu _0^\tau \sim \mu _0^0\) for all \(\tau > 0\). (Here \(\sim \) denotes “equivalent as measures”.) It is known that the eigenvalues of \(-\Delta \) on \(\mathbb {T}^d\) grow like \(j^{2/d}\), and hence the eigenvalues \(\lambda _j(\tau )\) of \(\mathcal {C}_{\alpha ,\tau }\) decay like

$$\begin{aligned} \lambda _j(\tau ) \asymp (\tau ^2 + j^{2/d})^{-\alpha },\;\;\;j\ge 1. \end{aligned}$$

Using Proposition 3 below, we see that \(\mu _0^\tau \sim \mu _0^0\) if

$$\begin{aligned} \sum _{j=1}^\infty \left( \frac{\lambda _j(\tau )}{\lambda _j(0)} - 1\right) ^2 < \infty . \end{aligned}$$

Now we have

$$\begin{aligned} \left| \frac{\lambda _j(\tau )}{\lambda _j(0)} - 1\right|&\asymp \left| \left( 1 + \frac{\tau ^2}{j^{2/d}}\right) ^{-\alpha } - 1\right| \\&\le \left| \exp \left( \frac{\alpha \tau ^2}{j^{2/d}}\right) - 1\right| \\&\le C\frac{\alpha \tau ^2}{j^{2/d}}. \end{aligned}$$

Here we have used that \(|(1+x)^{-\alpha }-1| \le \exp (\alpha x)-1\) for all \(x \ge 0\) to move from the first to the second line, and that \(\exp (x)-1 \le Cx\) for all \(x \in [0,x_0]\) to move from the second to the third line. The summands in the series above are therefore bounded by a constant multiple of \(j^{-4/d}\), which is summable when \(d \le 3\), and so it follows that \(\mu _0^\tau \sim \mu _0^0\).

(ii) The case \(\tau = 0\) is Theorem 2.18 in Dashti and Stuart (2016); the general result follows from the equivalence above.

(iii) Let \(v \sim N(0,\mathcal {D}_{\sigma ,\nu ,\ell })\) where \(\mathcal {D}_{\sigma ,\nu ,\ell }\) is as given by (2). Then we have

$$\begin{aligned} \mathcal {D}_{\sigma ,\nu ,\ell }&= \beta \ell ^d(I - \ell ^2\Delta )^{-\nu -d/2}\\&= \beta \ell ^{d}\ell ^{-2\nu -d}(\ell ^{-2}I - \Delta )^{-\nu -d/2}\\&= \beta \tau ^{2\alpha - d}(\tau ^2I - \Delta )^{-\alpha }\\&= \beta \tau ^{2\alpha - d}\mathcal {C}_{\alpha ,\tau }, \end{aligned}$$

where the third line uses \(\tau = \ell ^{-1}\) and \(\alpha = \nu + d/2\). Hence, letting \(u \sim N(0,\mathcal {C}_{\alpha ,\tau })\), we see that

$$\begin{aligned} \mathbb {E}\Vert u\Vert ^2&= \mathrm {tr}(\mathcal {C}_{\alpha ,\tau })\\&= \frac{1}{\beta }\tau ^{d-2\alpha }\mathrm {tr}(\mathcal {D}_{\sigma ,\nu ,\ell })\\&= \frac{1}{\beta }\tau ^{d-2\alpha }\mathbb {E}\Vert v\Vert ^2. \end{aligned}$$

\(\square \)
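The summability condition in part (i) and the operator identity in part (iii) can both be checked numerically. The sketch below is illustrative only: it replaces the true spectrum by the model eigenvalues \((\tau ^2 + j^{2/d})^{-\alpha }\), and the function names and parameter values are assumptions made for this example.

```python
import numpy as np


def eigenvalues(j, tau, alpha, d):
    """Model eigenvalues (tau^2 + j^(2/d))^(-alpha), standing in for the true spectrum."""
    return (tau**2 + j ** (2.0 / d)) ** (-alpha)


def equivalence_partial_sum(n_terms, tau, alpha, d):
    """Partial sum over j = 1..n_terms of (lambda_j(tau)/lambda_j(0) - 1)^2."""
    j = np.arange(1, n_terms + 1, dtype=float)
    ratio = eigenvalues(j, tau, alpha, d) / eigenvalues(j, 0.0, alpha, d)
    return float(np.sum((ratio - 1.0) ** 2))


def matern_symbol(mu, beta, ell, nu, d):
    """Eigenvalue of beta*ell^d*(I - ell^2*Laplacian)^(-nu-d/2) on a mode where -Laplacian has eigenvalue mu."""
    return beta * ell**d * (1.0 + ell**2 * mu) ** (-nu - d / 2.0)


def rescaled_symbol(mu, beta, tau, alpha, d):
    """Eigenvalue of beta*tau^(2*alpha-d)*(tau^2*I - Laplacian)^(-alpha) on the same mode."""
    return beta * tau ** (2.0 * alpha - d) * (tau**2 + mu) ** (-alpha)


if __name__ == "__main__":
    # Part (i): the partial sums settle for d <= 3 and keep growing for d = 4.
    for d in (1, 2, 3, 4):
        s_short = equivalence_partial_sum(10**4, tau=1.0, alpha=1.0, d=d)
        s_long = equivalence_partial_sum(10**6, tau=1.0, alpha=1.0, d=d)
        print(f"d={d}: S(1e4)={s_short:.4f}  S(1e6)={s_long:.4f}")

    # Part (iii): the two parameterisations agree when tau = 1/ell, alpha = nu + d/2.
    mu = np.array([0.0, 1.0, 39.5, 1e3])
    print(np.allclose(matern_symbol(mu, 0.7, 0.25, 1.5, 2),
                      rescaled_symbol(mu, 0.7, 4.0, 2.5, 2)))
```

With \(\tau = \alpha = 1\) the summands behave like \(j^{-4/d}\), so the increase between the two truncation levels is small for \(d \le 3\) and of logarithmic size for \(d = 4\).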

Proof

(Theorem 2) Proposition 1, which follows, shows that \(\mu _0\) and \({\varPhi }\) satisfy Assumptions 2.1 in Iglesias et al. (2016), with \(U = X\times \mathbb {R}^+\). Theorem 2.2 in Iglesias et al. (2016) then tells us that the posterior exists and is Lipschitz with respect to the data. \(\square \)

Proposition 1

Let \(\mu _0\) be given by (3) and \({\varPhi }:X\times \mathbb {R}^+\rightarrow \mathbb {R}\) be given by (10). Let Assumptions 1 hold. Then

(i) for every \(r > 0\) there is a \(K = K(r)\) such that, for all \((u,\tau ) \in X\times \mathbb {R}^+\) and all \(y \in Y\) with \(|y|_{\varGamma }< r\),

$$\begin{aligned} 0 \le {\varPhi }(u,\tau ;y) \le K; \end{aligned}$$

(ii) for any fixed \(y \in Y\), \({\varPhi }(\cdot ,\cdot ;y):X\times \mathbb {R}^+\rightarrow \mathbb {R}\) is continuous \(\mu _0\)-almost surely on the complete probability space \((X\times \mathbb {R}^+,\mathcal {X}\otimes \mathcal {R},\mu _0)\);

(iii) for \(y_1,y_2 \in Y\) with \(\max \{|y_1|_{\varGamma },|y_2|_{\varGamma }\} < r\), there exists a \(C = C(r)\) such that for all \((u,\tau ) \in X\times \mathbb {R}^+\),

$$\begin{aligned} |{\varPhi }(u,\tau ;y_1) - {\varPhi }(u,\tau ;y_2)| \le C|y_1-y_2|_{\varGamma }. \end{aligned}$$

Proof

(i) Recall that the level set map F defined by (7) takes the finite constant value \(\kappa _i\) on each subset \(D_i\) of \(\overline{D}\). We may bound F uniformly:

$$\begin{aligned} |F(u,\tau )| \le \max \{|\kappa _1|,\ldots ,|\kappa _n|\} =: F_{\max }, \end{aligned}$$

for all \((u,\tau ) \in X\times \mathbb {R}^+\). Combining this with Assumption 1(ii), it follows that \(\mathcal {G}\) is uniformly bounded on \(X\times \mathbb {R}^+\). The result then follows from the continuity of \(y\mapsto \frac{1}{2}|y-\mathcal {G}(u,\tau )|_{\varGamma }^2\).

(ii) Let \((u,\tau ) \in X\times \mathbb {R}^+\) and let \(D_i(u,\tau )\) be as defined by (6), and define \(D_i^0(u,\tau )\) by

$$\begin{aligned} D_i^0(u,\tau )&= \overline{D}_i(u,\tau )\cap \overline{D}_{i+1}(u,\tau )\\&= \{x \in D\,|\,u(x) = c_i(\tau )\},\, i=1,\ldots ,n-1. \end{aligned}$$

We first show that \(\mathcal {G}\) is continuous at \((u,\tau )\) whenever \(|D_i^0(u,\tau )| = 0\) for \(i=1,\ldots ,n-1\). Choose an approximating sequence \(\{u_\varepsilon ,\tau _\varepsilon \}_{\varepsilon >0}\) of \((u,\tau )\) such that \(\Vert u_\varepsilon - u\Vert _\infty + |\tau _\varepsilon -\tau | < \varepsilon \) for all \(\varepsilon > 0\). We will first show that \(\Vert F(u_\varepsilon ,\tau _\varepsilon ) - F(u,\tau )\Vert _{L^p(D)}\rightarrow 0\) for any \(p \in [1,\infty )\). As in Iglesias et al. (2016) Proposition 2.4, we can write

$$\begin{aligned}&F(u_\varepsilon ,\tau _\varepsilon ) - F(u,\tau )\\&\quad = \sum _{i=1}^n\sum _{j=1}^n (\kappa _i - \kappa _j)\mathbbm {1}_{D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau )}\\&\quad = \sum _{\begin{array}{c} i,j=1\\ i\ne j \end{array}}^n (\kappa _i - \kappa _j)\mathbbm {1}_{D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau )}. \end{aligned}$$

From the definition of \((u_\varepsilon ,\tau _\varepsilon )\),

$$\begin{aligned} u(x) - \varepsilon< u_\varepsilon (x)< u(x) + \varepsilon ,\;\;\;\tau - \varepsilon< \tau _\varepsilon < \tau + \varepsilon \end{aligned}$$

for all \(x \in D\) and \(\varepsilon > 0\). We claim that for \(|i-j| > 1\) and \(\varepsilon \) sufficiently small, \(D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau ) = \varnothing \). First note that

$$\begin{aligned} D_i(u_\varepsilon ,\tau _\varepsilon )&= \big \{x \in D\;\big |\; \tau _\varepsilon ^{d/2-\alpha }c_{i-1} \le u_\varepsilon (x)< \tau _\varepsilon ^{d/2-\alpha }c_i\big \}\\&= \big \{x \in D\;\big |\; c_{i-1} \le \tau _\varepsilon ^{\alpha -d/2}u_\varepsilon (x) < c_i\big \}. \end{aligned}$$

Then we have that

$$\begin{aligned}&D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau ) \\&\quad =\{x \in D\;|\;c_{i-1} \le \tau _\varepsilon ^{\alpha -d/2}u_\varepsilon (x)< c_i,\\&\qquad c_{j-1} \le \tau ^{\alpha -d/2}u(x) < c_j\}. \end{aligned}$$

Now, since u is bounded,

$$\begin{aligned} \tau ^{\alpha -d/2}u(x) -\mathcal {O}(\varepsilon )&< \tau _\varepsilon ^{\alpha -d/2}u_\varepsilon (x)\\&< \tau ^{\alpha -d/2}u(x) + \mathcal {O}(\varepsilon ) \end{aligned}$$

and so

$$\begin{aligned}&D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau ) \subseteq \\&\quad \{x \in D\;|\;c_{i-1}-\mathcal {O}(\varepsilon ) \le \tau ^{\alpha -d/2}u(x)< c_i + \mathcal {O}(\varepsilon ),\\&\quad c_{j-1} \le \tau ^{\alpha -d/2}u(x) < c_j\}. \end{aligned}$$

From the strict ordering of the \(\{c_i\}_{i=1}^n\) we deduce that for \(|i-j| > 1\) and small enough \(\varepsilon \), the right-hand side is empty. We hence look at the cases \(|i-j| = 1\). With the same reasoning as above, we see that

$$\begin{aligned}&D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_{i+1}(u,\tau )\\&\quad \subseteq \big \{x\in D\;\big |\;c_i -\mathcal {O}(\varepsilon ) \le \tau ^{\alpha -d/2}u(x)< c_i + \mathcal {O}(\varepsilon ) \big \}\\&\quad \rightarrow \big \{x \in D\;\big |\; \tau ^{\alpha -d/2}u(x) = c_i\big \}\\&\quad = \big \{x \in D\;\big |\; u(x) = \tau ^{d/2-\alpha }c_i\big \}\\&\quad = D_i^0(u,\tau ) \end{aligned}$$

and also

$$\begin{aligned}&D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_{i-1}(u,\tau ) \\&\quad \subseteq \big \{x\in D\;\big |\; c_{i-1} - \mathcal {O}(\varepsilon )< \tau ^{\alpha -d/2}u(x) < c_{i-1}\big \}\\&\quad \rightarrow \varnothing . \end{aligned}$$

Assume that each \(|D_i^0(u,\tau )| = 0\). Then it follows that \(|D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau )|\rightarrow 0\) whenever \(i \ne j\). Therefore we have that

$$\begin{aligned} \Vert F(u_\varepsilon ,\tau _\varepsilon )&- F(u,\tau )\Vert _{L^p(D)}^p\\&= \sum _{\begin{array}{c} i,j=1\\ i\ne j \end{array}}^n \int _{D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau )} |\kappa _i - \kappa _j|^p\,\mathrm {d}x\\&\le (2F_{\max })^p \sum _{\begin{array}{c} i,j=1\\ i\ne j \end{array}}^n |D_i(u_\varepsilon ,\tau _\varepsilon )\cap D_j(u,\tau )|\\&\rightarrow 0. \end{aligned}$$

Thus F is continuous at \((u,\tau )\). By Assumption 1(i) it follows that \(\mathcal {G}\) is continuous at \((u,\tau )\). We now claim that \(|D_i^0(u,\tau )| = 0\) \(\mu _0\)-almost surely for each i. By Tonelli’s theorem, we have that

$$\begin{aligned}&\mathbb {E}|D_i^0(u,\tau )|\\&\quad = \int _{X\times \mathbb {R}^+}|D_i^0(u,\tau )|\,\mu _0(\mathrm {d}u,\mathrm {d}\tau )\\&\quad =\int _{X\times \mathbb {R}^+}\left( \int _{\mathbb {R}^d}\mathbbm {1}_{D_i^0(u,\tau )}(x)\,\mathrm {d}x\right) \mu _0(\mathrm {d}u,\mathrm {d}\tau )\\&\quad =\int _{\mathbb {R}^d}\left( \int _{X\times \mathbb {R}^+}\mathbbm {1}_{D_i^0(u,\tau )}(x)\,\mu _0(\mathrm {d}u,\mathrm {d}\tau )\right) \mathrm {d}x\\&\quad =\int _{\mathbb {R}^d}\left( \int _0^\infty \left( \int _X \mathbbm {1}_{D_i^0(u,\tau )}(x)\,\mu _0^\tau (\mathrm {d}u)\right) \,\pi _0(\mathrm {d}\tau )\right) \mathrm {d}x\\&\quad =\int _{\mathbb {R}^d}\left( \int _0^\infty \mu _0^\tau (\{u \in X\;|\;u(x) = c_i(\tau )\})\,\pi _0(\mathrm {d}\tau )\right) \mathrm {d}x. \end{aligned}$$

For each \(\tau \ge 0\) and \(x \in D\), u(x) is a real-valued Gaussian random variable under \(\mu _0^\tau \). Since a non-degenerate Gaussian distribution on \(\mathbb {R}\) has no atoms, it follows that \(\mu _0^\tau (\{u \in X\;|\;u(x) = c_i(\tau )\}) = 0\), and so \(\mathbb {E}|D_i^0(u,\tau )| = 0\). Since \(|D_i^0(u,\tau )| \ge 0\) we have that \(|D_i^0(u,\tau )| = 0\) \(\mu _0\)-almost surely. The result now follows.

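(iii) For fixed \((u,\tau ) \in X\times \mathbb {R}^+\), the map \(y\mapsto \frac{1}{2}|y-\mathcal {G}(u,\tau )|_{\varGamma }^2\) is smooth and hence locally Lipschitz; the constant can be chosen independently of \((u,\tau )\) because \(\mathcal {G}\) is uniformly bounded, as shown in part (i). \(\square \)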
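The continuity argument in part (ii) can be illustrated on a grid. The sketch below is a minimal example under stated assumptions: the thresholds `c`, the values `kappa`, the test function \(u(x) = \sin (2\pi x)\) on \([0,1]\) and the helper names are all hypothetical choices, and only the scaling \(\tau ^{d/2-\alpha }c_i\) of the thresholds is taken from the proof above.

```python
import numpy as np


def level_set_map(u, tau, c, kappa, alpha, d):
    """Piecewise-constant field built from u by thresholding at tau^(d/2-alpha)*c.

    c holds the finite interior thresholds in increasing order (the outermost
    ones are taken to be -inf and +inf) and kappa has len(c) + 1 values.
    The output is kappa[r], where r is the number of scaled thresholds <= u.
    """
    thresholds = tau ** (d / 2.0 - alpha) * np.asarray(c, dtype=float)
    # side="right" counts thresholds <= u, so lower bounds are inclusive.
    region = np.searchsorted(thresholds, u, side="right")
    return np.asarray(kappa, dtype=float)[region]


def l1_change(eps, n_grid=10001):
    """Grid estimate of the L^1(0,1) distance between F(u_eps, tau_eps) and F(u, tau)."""
    x = np.linspace(0.0, 1.0, n_grid)
    u = np.sin(2.0 * np.pi * x)
    u_eps = u + eps * np.cos(2.0 * np.pi * x)  # sup-norm perturbation of size eps
    c, kappa = [-0.5, 0.5], [1.0, 2.0, 3.0]
    f = level_set_map(u, 1.0, c, kappa, alpha=2.0, d=1)
    f_eps = level_set_map(u_eps, 1.0 + eps, c, kappa, alpha=2.0, d=1)
    return float(np.mean(np.abs(f_eps - f)))


if __name__ == "__main__":
    for eps in (1e-1, 1e-2, 1e-3):
        print(eps, l1_change(eps))
```

Because the level sets \(\{u = \pm 0.5\}\) of this test function have measure zero, the estimated distance shrinks with \(\varepsilon \), which is the behaviour the proof establishes in general.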

Proof

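(Theorem 4) Recall that the eigenvalues of \(\mathcal {C}_{\alpha ,\tau }\) satisfy \(\lambda _j(\tau ) \asymp (\tau ^2 + j^{2/d})^{-\alpha }\). Then we have that

$$\begin{aligned} \frac{\lambda _j(0)}{\lambda _j(\tau )} - 1 \asymp \left( 1 + \frac{\tau ^2}{j^{2/d}}\right) ^{\alpha } - 1 \asymp j^{-2/d}. \end{aligned}$$

It follows that

$$\begin{aligned} \sum _{j=1}^\infty \left( \frac{\lambda _j(0)}{\lambda _j(\tau )} - 1\right) ^p < \infty \;\;\;\text {if and only if}\;\;\;d < 2p. \end{aligned}\tag{15}$$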

(i) We first prove the ‘if’ part of the statement. We have \(u \sim N(0,\mathcal {C}_0)\), and so \(\mathbb {E}\langle u,\varphi _j\rangle ^2 = \lambda _j(0)\). Since the terms within the sum are non-negative, by Tonelli’s theorem we can bring the expectation inside the sum to see that

$$\begin{aligned} \mathbb {E}\sum _{j=1}^\infty \left( \frac{1}{\lambda _j(\tau )} - \frac{1}{\lambda _j(0)}\right) \langle u,\varphi _j\rangle ^2&= \sum _{j=1}^\infty \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) \end{aligned}$$

which is finite if and only if \(d < 2\), i.e., \(d=1\). When \(d = 1\) it follows that the sum is finite almost surely. For the converse, suppose that \(d \ge 2\) so that the series in (15) diverges when \(p=1\). Let \(\{\xi _j\}_{j\ge 1}\) be a sequence of i.i.d. N(0, 1) random variables so that \(\langle u,\varphi _j\rangle ^2\) has the same distribution as \(\lambda _j(0)\xi _j^2\). Define the sequence \(\{Z_n\}_{n\ge 1}\) by

$$\begin{aligned} Z_n&= \sum _{j=1}^n \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) \xi _j^2\\&= \sum _{j=1}^n \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) + \sum _{j=1}^n \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) (\xi _j^2-1)\\&=: X_n + Y_n. \end{aligned}$$

Then the result follows if \(Z_n\) diverges with positive probability. By assumption we have that \(X_n\) diverges. In order to show that \(Z_n\) diverges with positive probability it hence suffices to show that \(Y_n\) converges with positive probability. Define the sequence of random variables \(\{W_j\}_{j\ge 1}\) by

$$\begin{aligned} W_j = \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) (\xi _j^2-1). \end{aligned}$$

It can be checked that

$$\begin{aligned} \mathbb {E}(W_j) = 0,\;\;\;\text {Var}(W_j) = 2\left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) ^2. \end{aligned}$$

The series of variances converges if and only if \(d\le 3\), using (15) with \(p = 2\). We use Kolmogorov’s two series theorem, Theorem 3.11 in Srinivasa Varadhan (2001), to conclude that \(Y_n = \sum _{j=1}^n W_j\) converges almost surely and the result follows.

(ii) Now we have

$$\begin{aligned} \log \left( \frac{\lambda _j(\tau )}{\lambda _j(0)}\right)&= -\log \left( 1-\left( 1-\frac{\lambda _j(0)}{\lambda _j(\tau )}\right) \right) \\&= \left( 1-\frac{\lambda _j(0)}{\lambda _j(\tau )}\right) + \frac{1}{2}\left( 1-\frac{\lambda _j(0)}{\lambda _j(\tau )}\right) ^2 + \text {h.o.t.} \end{aligned}$$

Let \(\{\xi _j\}_{j\ge 1}\) be a sequence of i.i.d. N(0, 1) random variables, so that again we have that \(\langle u,\varphi _j\rangle ^2\) has the same distribution as \(\lambda _j(0)\xi _j^2\). Then it is sufficient to show that the series

$$\begin{aligned} I = \sum _{j=1}^\infty \left[ \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) \xi _j^2 + \log \left( \frac{\lambda _j(\tau )}{\lambda _j(0)}\right) \right] \end{aligned}$$

is finite almost surely. We use the above approximation for the logarithm to write

$$\begin{aligned} I&= \sum _{j=1}^\infty \left( \frac{\lambda _j(0)}{\lambda _j(\tau )}-1\right) (\xi _j^2-1)\\&\quad + \sum _{j=1}^\infty \left[ \frac{1}{2}\left( 1-\frac{\lambda _j(0)}{\lambda _j(\tau )}\right) ^2 + \text {h.o.t.}\right] . \end{aligned}$$

The second sum converges if and only if \(d<4\), i.e., \(d\le 3\). The almost-sure convergence of the first term is shown in the proof of part (i). \(\square \)
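The contrast between parts (i) and (ii) can be seen numerically: in dimension \(d = 2\) the deterministic sum \(X_n\) diverges, yet the combined series \(I\) settles. The sketch below assumes the model ratios \(\lambda _j(0)/\lambda _j(\tau ) = (1 + \tau ^2 j^{-2/d})^{\alpha }\) in place of the true spectrum; the function names are hypothetical.

```python
import numpy as np


def eigenvalue_ratio(j, tau, alpha, d):
    """a_j = lambda_j(0)/lambda_j(tau) for model eigenvalues (tau^2 + j^(2/d))^(-alpha)."""
    return (1.0 + tau**2 / j ** (2.0 / d)) ** alpha


def drift_sum(n, tau, alpha, d):
    """X_n = sum over j <= n of (a_j - 1), the deterministic part of Z_n."""
    j = np.arange(1, n + 1, dtype=float)
    return float(np.sum(eigenvalue_ratio(j, tau, alpha, d) - 1.0))


def expected_I(n, tau, alpha, d):
    """E[I_n] = sum over j <= n of [(a_j - 1) - log a_j], using E[xi_j^2] = 1."""
    j = np.arange(1, n + 1, dtype=float)
    a = eigenvalue_ratio(j, tau, alpha, d)
    return float(np.sum((a - 1.0) - np.log(a)))


def sample_I_path(n, tau, alpha, d, seed=0):
    """One realisation of the partial sums I_1, ..., I_n with i.i.d. N(0,1) draws xi_j."""
    rng = np.random.default_rng(seed)
    j = np.arange(1, n + 1, dtype=float)
    a = eigenvalue_ratio(j, tau, alpha, d)
    xi = rng.standard_normal(n)
    return np.cumsum((a - 1.0) * xi**2 - np.log(a))


if __name__ == "__main__":
    for n in (10**3, 10**4, 10**5, 10**6):
        print(n, drift_sum(n, 1.0, 1.0, 2), expected_I(n, 1.0, 1.0, 2))
    path = sample_I_path(10**5, 1.0, 1.0, 2)
    print(path[[999, 9999, 99999]])
```

With \(\tau = \alpha = 1\) and \(d = 2\) the ratio is \(1 + 1/j\), so \(X_n\) is the harmonic series, while \(\mathbb {E}[I_n] = \sum _{j\le n} (1/j - \log (1+1/j))\) tends to the Euler–Mascheroni constant, approximately 0.5772.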

Proposition 2

Let \(D\subseteq \mathbb {R}^d\). Define the construction map \(F:X\times \mathbb {R}^+\rightarrow \mathbb {R}^D\) by (7). Given \(x_0 \in D\) define \(\mathcal {G}:X\times \mathbb {R}^+\rightarrow \mathbb {R}\) by \(\mathcal {G}(u,\tau ) = F(u,\tau )|_{x_0}\). Then \(\mathcal {G}\) is continuous at any \((u,\tau ) \in X\times \mathbb {R}^+\) with \(u(x_0) \ne c_i(\tau )\) for each \(i=0,\ldots ,n\). In particular, \(\mathcal {G}\) is continuous \(\mu _0\)-almost surely when \(\mu _0\) is given by (3). Additionally, \(\mathcal {G}\) is uniformly bounded.

Proof

The uniform boundedness is clear. For the continuity, let \((u,\tau ) \in X\times \mathbb {R}^+\) with \(u(x_0) \ne c_i(\tau )\) for each \(i=0,\ldots ,n\). Then there exists a unique j such that

Given \(\delta > 0\), let \((u_\delta ,\tau _\delta ) \in X\times \mathbb {R}^+\) be any pair such that

Then it is sufficient to show that for all \(\delta \) sufficiently small, \(x_0 \in D_j(u_\delta ,\tau _\delta )\), i.e., that

From this it follows that \(\mathcal {G}(u_\delta ,\tau _\delta ) = \mathcal {G}(u,\tau )\).

Since the inequalities in (16) are strict, we can find \(\alpha > 0\) such that

Now \(c_j\) is continuous at \(\tau > 0\), and so there exists a \(\gamma > 0\) such that for any \(\lambda > 0\) with \(|\lambda -\tau | < \gamma \) we have

We have that \(\Vert u_\delta - u\Vert _\infty < \delta \), and so in particular,

We can combine (17)–(19) to see that, for \(\delta < \gamma \),

and so in particular, for \(\delta < \min \{\gamma ,\alpha /2\}\),

\(\square \)
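As a sketch of the chain of inequalities that the argument above relies on, one consistent choice is given below. The placement of the labels (16)–(19), the closeness condition on \((u_\delta ,\tau _\delta )\) and the constant \(\alpha /2\) in (18) are assumptions, inferred from the in-text references and from the final restriction \(\delta < \min \{\gamma ,\alpha /2\}\); they are not taken from the original displays.

$$\begin{aligned}&c_{j-1}(\tau )< u(x_0)< c_j(\tau ),&\text {(16)}\\&\Vert u_\delta - u\Vert _\infty + |\tau _\delta - \tau |< \delta ,\\&c_{j-1}(\tau ) + \alpha< u(x_0)< c_j(\tau ) - \alpha ,&\text {(17)}\\&|c_i(\lambda ) - c_i(\tau )|< \alpha /2,\;\;\;i \in \{j-1,j\},&\text {(18)}\\&u(x_0) - \delta< u_\delta (x_0)< u(x_0) + \delta ,&\text {(19)}\\&c_{j-1}(\tau _\delta ) + \alpha /2 - \delta< u_\delta (x_0)< c_j(\tau _\delta ) - \alpha /2 + \delta ,\\&c_{j-1}(\tau _\delta )< u_\delta (x_0) < c_j(\tau _\delta ). \end{aligned}$$

Under these assumptions the sixth line follows by applying (19), then (17), then (18) with \(\lambda = \tau _\delta \), which is admissible when \(\delta < \gamma \); the last line then follows once \(\delta < \alpha /2\).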

1.2 Radon–Nikodym derivatives in Hilbert spaces

The following proposition gives an explicit formula for the density of one Gaussian with respect to another and is used in defining the acceptance probability for the length-scale updates in our algorithm. Although we only use the proposition in the case where H is a function space and the mean m is zero, we provide a proof in the more general case where m is an arbitrary element of a separable Hilbert space H, as this setting may be of independent interest.

Proposition 3

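Let \((H,\langle \cdot ,\cdot \rangle ,\Vert \cdot \Vert )\) be a separable Hilbert space, and let A, B be positive trace-class operators on H. Assume that A and B share a common complete set of orthonormal eigenvectors \(\{\varphi _j\}_{j\ge 1}\), with the eigenvalues \(\{\lambda _j\}_{j\ge 1}\), \(\{\gamma _j\}_{j\ge 1}\) defined by

$$\begin{aligned} A\varphi _j = \lambda _j\varphi _j,\;\;\;B\varphi _j = \gamma _j\varphi _j \end{aligned}$$

for all \(j \ge 1\). Assume further that the eigenvalues satisfy

$$\begin{aligned} \sum _{j=1}^\infty \left( \frac{\lambda _j}{\gamma _j} - 1\right) ^2 < \infty . \end{aligned}$$

Let \(m \in H\) and define the measures \(\mu = N(m,A)\) and \(\nu = N(m,B)\). Then \(\mu \) and \(\nu \) are equivalent, and their Radon–Nikodym derivative is given by

$$\begin{aligned} \frac{\mathrm {d}\mu }{\mathrm {d}\nu }(u) = \prod _{j=1}^\infty \left( \frac{\gamma _j}{\lambda _j}\right) ^{1/2}\exp \left( \frac{1}{2}\left( \frac{1}{\gamma _j} - \frac{1}{\lambda _j}\right) \langle u-m,\varphi _j\rangle ^2\right) . \end{aligned}$$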

Proof

The assumption on summability of the eigenvalues means that the Feldman–Hájek theorem applies, and so we know that \(\mu \) and \(\nu \) are equivalent. We show that the Radon–Nikodym derivative is as given above.

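Define the product measures \({\hat{\mu }},{\hat{\nu }}\) on \(\mathbb {R}^\infty \) by

$$\begin{aligned} {\hat{\mu }} = \prod _{j=1}^\infty {\hat{\mu }}_j,\;\;\;{\hat{\nu }} = \prod _{j=1}^\infty {\hat{\nu }}_j, \end{aligned}$$

where \({\hat{\mu }}_j = N(0,\lambda _j)\), \({\hat{\nu }}_j = N(0,\gamma _j)\). As a consequence of a result of Kakutani, see Da Prato and Zabczyk (2002) Proposition 1.3.5, we have that \({\hat{\mu }}\sim {\hat{\nu }}\) with

$$\begin{aligned} \frac{\mathrm {d}{\hat{\mu }}}{\mathrm {d}{\hat{\nu }}}(x) = \prod _{j=1}^\infty \frac{\mathrm {d}{\hat{\mu }}_j}{\mathrm {d}{\hat{\nu }}_j}(x_j) = \prod _{j=1}^\infty \left( \frac{\gamma _j}{\lambda _j}\right) ^{1/2}\exp \left( \frac{1}{2}\left( \frac{1}{\gamma _j} - \frac{1}{\lambda _j}\right) x_j^2\right) . \end{aligned}$$

We associate H with \(\mathbb {R}^\infty \) via the map \(G:H\rightarrow \mathbb {R}^\infty \), given by

$$\begin{aligned} (Gu)_j = \langle u,\varphi _j\rangle ,\;\;\;j \ge 1. \end{aligned}$$

Note that the image of G is \(\ell ^2 \subseteq \mathbb {R}^\infty \), and \(G:H\rightarrow \ell ^2\) is an isomorphism. Since A and B are trace-class, samples from \({\hat{\mu }}\) and \({\hat{\nu }}\) almost surely take values in \(\ell ^2\). \(G^{-1}\) is hence almost surely defined on samples from \({\hat{\mu }}\) and \({\hat{\nu }}\). Define the translation map \(T_m:H\rightarrow H\) by \(T_m u = u + m\). Then by the Karhunen–Loève theorem, the measures \(\mu \) and \(\nu \) can be expressed as the push forwards

$$\begin{aligned} \mu = {\hat{\mu }}\circ G\circ T_m^{-1},\;\;\;\nu = {\hat{\nu }}\circ G\circ T_m^{-1}. \end{aligned}$$

Now let \(f:H\rightarrow \mathbb {R}\) be bounded measurable, then we have

$$\begin{aligned} \int _H f(u)\,\mu (\mathrm {d}u)&= \int _{\mathbb {R}^\infty } f(T_mG^{-1}x)\,{\hat{\mu }}(\mathrm {d}x)\\&= \int _{\mathbb {R}^\infty } f(T_mG^{-1}x)\frac{\mathrm {d}{\hat{\mu }}}{\mathrm {d}{\hat{\nu }}}(x)\,{\hat{\nu }}(\mathrm {d}x)\\&= \int _H f(u)\frac{\mathrm {d}{\hat{\mu }}}{\mathrm {d}{\hat{\nu }}}(GT_m^{-1}u)\,\nu (\mathrm {d}u). \end{aligned}$$

From this it follows that we have

$$\begin{aligned} \frac{\mathrm {d}\mu }{\mathrm {d}\nu }(u) = \frac{\mathrm {d}{\hat{\mu }}}{\mathrm {d}{\hat{\nu }}}(GT_m^{-1}u) = \prod _{j=1}^\infty \left( \frac{\gamma _j}{\lambda _j}\right) ^{1/2}\exp \left( \frac{1}{2}\left( \frac{1}{\gamma _j} - \frac{1}{\lambda _j}\right) \langle u-m,\varphi _j\rangle ^2\right) . \end{aligned}$$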

\(\square \)

Remark 5

The proposition above, in the case \(m=0\), is given as Theorem 1.3.7 in Da Prato and Zabczyk (2002) except that, there, the factor before the exponential is omitted. This is because it does not depend on u, and all measures involved are probability measures and hence normalized. We retain the factor as we are interested in the precise value of the derivative for the MCMC algorithm, in particular its dependence on the length-scale.
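In a truncated Karhunen–Loève basis the logarithm of such a density can be evaluated mode by mode. The sketch below is a minimal illustration, not the authors' implementation: it uses the standard ratio of two centred Gaussian densities in each coordinate, keeps the prefactor and the exponent together in every term, and all names and parameter values are assumptions.

```python
import numpy as np


def log_density_ratio(coeffs, lam, gam):
    """Truncated log dmu/dnu for mu = N(m, A) and nu = N(m, B).

    coeffs[j] = <u - m, phi_j>; lam and gam are the eigenvalues of A and B
    on the shared eigenvectors phi_j. Each term combines the prefactor
    0.5*log(gam/lam) with the quadratic part of the exponent.
    """
    coeffs, lam, gam = (np.asarray(v, dtype=float) for v in (coeffs, lam, gam))
    terms = 0.5 * np.log(gam / lam) + 0.5 * (1.0 / gam - 1.0 / lam) * coeffs**2
    return float(np.sum(terms))


def gaussian_logpdf(x, var):
    """Log density of independent N(0, var[j]) coordinates, summed over j."""
    x, var = np.asarray(x, dtype=float), np.asarray(var, dtype=float)
    return float(np.sum(-0.5 * np.log(2.0 * np.pi * var) - 0.5 * x**2 / var))


if __name__ == "__main__":
    # Hypothetical set-up: model eigenvalues (tau^2 + j^(2/d))^(-alpha) at two values of tau.
    j = np.arange(1, 201, dtype=float)
    alpha, d = 2.0, 2
    lam = (1.5**2 + j ** (2.0 / d)) ** (-alpha)  # eigenvalues at a proposed tau
    gam = (1.0**2 + j ** (2.0 / d)) ** (-alpha)  # eigenvalues at the current tau
    rng = np.random.default_rng(0)
    coeffs = np.sqrt(gam) * rng.standard_normal(j.size)  # a draw from nu in KL coordinates
    print(log_density_ratio(coeffs, lam, gam))
```

In finite dimensions the result agrees with the difference of the two Gaussian log-densities, which gives a simple consistency check; summing per-mode terms, rather than forming the product and the exponent separately, avoids relying on either piece converging on its own.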

Dunlop, M.M., Iglesias, M.A. & Stuart, A.M. Hierarchical Bayesian level set inversion. Stat Comput 27, 1555–1584 (2017). https://doi.org/10.1007/s11222-016-9704-8

DOI: https://doi.org/10.1007/s11222-016-9704-8