Abstract

We use archetypoid analysis as a new tool to categorize institutions and faculties of economics. The approach identifies typical characteristics of extreme (archetypal) values in a multivariate data set. Each entity under investigation is assigned relative shares of the identified archetypoid, which show the affiliation of the entity to the archetypoid. In contrast to its predecessor, the archetypal analysis, archetypoids always represent actual observed units in the data. The approach therefore allows to classify institutions in a rarely used way. While the method has been recognized in the literature, it is the first time that it is used in higher education research and as in our case for institutions and faculties of economics. Our dataset contains seven bibliometric indicators for 298 top-level institutions obtained from the RePEc database. We identify three archetypoids, which are characterized as the top-, the low- and the medium-performer. We discuss the assignment of shares of the identified archetypoids to the institutions in detail. As a sensitivity analysis we show how the classification changes when for four and five archetypoids are considered.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The performance measurement of research institutions and universities takes up an increasing role in current policy discussions. While the associated debate on rankings and efficiency evaluations has been taken up in the scientific literature (Bolli et al. 2016; Gnewuch and Wohlrabe 2018; Gralka 2018) the underlying classification of institutions seems to have been largely neglected. However, a classification—a creation of a framework for diverse institutions—is one of the most challenging tasks a researcher can be confronted with (Hazelkorn 2007). A well-known, recurrently discussed grouping is the Carnegie Classification, which has served as a framework for American colleges and universities for more than 30 years (Pike and Kuh 2005). Although the system has its advantages, the framework is context specific and quite complex. Most researchers, especially if interested in a country without an already existing classification structure, require a simpler method for the classification of institutions. Even more so if sub-groups, as faculties, are the evaluation unit of interest.

We argue that archetypal analysis offers a suitable and straight-forward way to classify institutions based on observed data. The method was originally formulated by Cutler and Breiman (1994) and imitates the human tendency of representing a group of objects by its extreme elements (Davis and Love 2010; Epifanio 2016). The aim of the method is to find pure types (the archetypes) in such a way that the other observations are a mixture of them. Hence, archetypes can be seen as data-driven extreme points. This makes the approach an interesting tool for researches, in particular if policy recommendations are striven for. Computationally, the approach is data-driven, and requires the factors to be probability vectors: these make archetypal analysis a computationally demanding tool, yet brings better interpretability. However, the classic archetypal analysis has an important drawback: archetypes are a combination of the sampled units, but they are not necessarily observed institutions. This situation can cause interpretation problems for analysts. In order to address this limitation, a new archetypal concept was introduced: the archetypoid, which is a real (observed) archetypal case (Vinué et al. 2015). Thus, archetypoids allow an intuitive understanding of the results even for non-experts (Thurau et al. 2012).

Archetypal analysis has aroused the interest of researchers working in various fields, such as remote sensing (Chan et al. 2003), climate (Steinschneider and Lall 2015), machine learning (Mørup and Hansen 2012; Seth and Eugster 2016), neuroscience (Hinrich et al. 2016), navigation (Feld et al. 2015) and sports (Seth and Eugster 2016). The same applies for the archetypoid analysis, which was used for the evaluation within fields such as astrophysics (Sun et al. 2017), sports (Vinué and Epifanio 2017) and the financial stock market (Moliner and Epifanio 2018). Building upon the study by Seiler and Wohlrabe (2013) who evaluate scientists, we look at economics faculties and institutions within the present study.Footnote 1 In contrast to the previous study, we employ the archetypoid instead of the archetypal analysis, given the more intuitive understanding of the former method. Parallel to the authors we employ RePEc (Research Papers in Economics) data for our study.

A short description of the method of analysis is provided in the next section, followed by an examination of the dataset. The presentation of the results is complemented by a sensitivity analysis that pays special attention to the number of archetypoids considered. A concluding section brings together the main findings and offers several suggestions for future research.

Methodology

In this section archetypal analysis, as the basis for identifying archetypoids, is outline first. Consider an N×m matrix, where X represents a multivariate data set with N observations (in our case faculties and institutions) and m characteristics (e.g. works, citations, downloads, etc.). For a given number of archetypes k, the algorithm finds the matrix Z by minimizing the residual sum of squares

where α denotes the coefficients of the archetypes with dimensions N×k and ‖∙‖2 the L2 norm. Equation (1) is minimized subject to the following constraints

for i =1,…N. The k archetypes are then convex combinations of the data, i.e.

where β is an N × k matrix. The constrains

are imposed for estimating Eq. (2). The last statement shows that the archetypes are convex combinations of the original data set X. Equations (1) and (2) form the basis for the estimation algorithm: it alternates between finding the best α for given archetypes Z and finding the best archetypes for Z given α. Cutler and Breiman (1994) popularized this approach as alternating least squares.

A consequence of the approach outlined in Eqs. (1) and (2) is that archetypes are not represented by real observed units in the data set. Vinué et al. (2015) proposed a slight modification of the original approach and introduced the term archetypoids. They proposed to modify the constrains for \(\beta\) in the following way

This condition ensures that Z must be a point in the dataset. Hence, archetypoids can be viewed as extreme points in the data.

A central question for the analysis concerns the optimal number of archetypoids for a given data set. In contrast to principal component analysis, archetypoid analysis allows to extract more archetypoids than dimensions of the data set. There is no formal rule for the determination. In praxis, usually the “elbow” criterion for the Residual Sum of Squares (RSS) curve, the so-called scree-plot, is applied. A “flattening” of the curve suggests a potential value of k. We are aware that this choice is arbitrary. We therefore suggest investigating several numbers of archetypoids and to take a look on the concrete interpretation of each. Our experience is the more archetypoids are considered the more similar some archetypoids become.

For a detailed description of archetypoid analysis including computational issues, we refer to Vinué et al. (2015). We extract the archetypoids using the R package Anthropometry version 1.13 by Vinué (2017). As our indicators (described in the next section) exhibit different scales we standardize them prior to determining the archetypoids. We do so by subtracting the mean and dividing by the standard deviation.

Data

We illustrate archetypoid analysis using a large data set of institutions and faculties of economics from the RePEc (Research Papers in Economics) website (http://www.repec.org). In socio-economic sciences RePEc has become an essential source for the spread of knowledge and ranking of individual authors and academic institutions.Footnote 2 RePEc is based on the active participation principle, that is authors, institutions and publishers register and provide information to the network. This approach has the main advantage that a clear assignment of works and citations to authors and articles is possible without any problems of name disambiguation. Each registered author sets a share by which he or she is affiliated with an institution. In case of no self-setting, RePEc calculates shares based on the other affiliated members of the institution. The scores are allocated to the corresponding institution accordingly. In the following, we call these accumulated author shares full-time equivalents (FTE), given that an institution with 10 authors, who all identify themselves with 80%, would have the same accumulated author share (or FTE) as an institution with 8 authors who all identify with 100%.

RePEc has become quite a success, as of December 2019 there were 2.8 million pieces of research from 3200 journals and 5000 working paper series. Additionally, more than 55,000 authors and 14,000 institutions from 101 countries are listed on the website.

RePEc currently offers 37 rankings for institutions and faculties based on bibliometric data which are shown in Table 1. There are five main categories: number of (published) works, citations, citing authors, journal pages, and RePEc access statistics. Each of these main categories can be combined with different weighting schemes: simple or recursive impact factors, number of authors and combinations of them. In the category “distinct number of works” different versions of a paper are counted only once. Published work is counted only if, first the publisher provides the meta data to RePEc and second, the author assigns the work to his/her account. Table 1 reveals that there is a focus on citations both directly and indirectly. In 14 rankings, citations are counted with quality and time adjustments. Moreover, citations matter indirectly through the different impact factors. The simple impact factor captures the quality level of a journal and is similarly defined as the impact factor published by Thomson Reuters Journal Citation Reports.

We downloaded the 37 publicly available rankings from the RePEc website in early December 2019, where the data refers to the November ranking. For these rankings only the top 5% of world-wide institutions are available. The bibliometric scores are highly correlated, as shown by Zimmermann (2013) and Seiler and Wohlrabe (2013) in case of authors and by Gnewuch and Wohlrabe (2018) for economic institutions and faculties. We therefore follow the idea of Seiler and Wohlrabe (2013) and use only a subset of the 37 rankings which can be captured in four groups. These are the following seven ones:

Published work, which includes working papers, books, software codes, and chapters

Number of distinct works, unweighted

Number of distinct works, weighted by simple impact factor

Citations, which represent the impact of an author

Number of citations, unweighted

Number of citations, weighted by the simple impact factor

Pages, which accounts for the published articles

Number of pages, unweighted

Number of pages, weighted by the simple impact factor

Number of downloads, which shows the access

For each of our m = 7 bibliometric indicators we collected the corresponding institutions and faculties where the scores were publicly available. We ended up with N = 298 which can be found in the “Appendix”, Table 5. The list comprises both faculties (as for example the Economics department at the MIT), institutions (ifo Institute), central banks (ECB) or scientific networks (NBER).

The descriptive statistics for the original and normalized indicators can be found in Table 2. On average 77 scientists are employed at a faculty or university. The corresponding full-time equivalent is with about 60 people slightly lower. The largest institute is the research network Institute of Labor Economics (IZA) and the smallest the economics faculty is located at the Johns Hopkins University, with respectively 902 and 19 affiliated economists. An average economics institution has published around 1700 articles, which contain around 16,500 pages in (refereed) journals and were downloaded around 8900 times. The typical institution has received around 26,900 citations. It is thereby important to bear in mind, that the numbers describe stock levels (up to November 2019), which explains the high values of the indicators. Even though a comparison to the previous literature is difficult, since we consider stocks (instead of flows) of faculties (instead of universities), the values seem to be in line with the literature. Using the number of publications in efficiency evaluations, Bolli et al. (2016) assume that European universities publish around 800 articles per year, while Gralka, Wohlrabe and Bornmann (2019) consider that German universities produces around 1000 articles per year. Evaluating German faculties of economics in particular Sternberg and Litzenberger (2005) show that the largest faculty, the faculty of economics at the University Mannheim, produces around 300 articles and receive around 500 citations over a time span of 10 years (1993–2002). The smaller, more average institutions, as the faculty of economics at the University Frankfurt, produces around 100 articles and receive around 100 citations in the same time period. It has to be noted, that the use of stocks instead of flows might bias the results or be even graded as unfair. RePEc unfortunately does not provide flow data. If one researcher changes its employer he or she takes his/her full publication history to the new institution. This favors the new institution and downgrades the previous one. However, economists or scientists in general often change institutions over the work life. It might be a realistic assumption that all institutions in our sample are affected in both ways: positively and negatively. Nevertheless, it is not possible to determine the net effect.

To account for the size of institutions, which influences their productivity (as shown by Worthington and Higgs 2011; Wolszczak-Derlacz and Parteka 2011 and Johnes and Johnes 2016), we normalize the indicators by the number of FTE. Without normalization the identified archetypoids would reflect mostly the size of the institutions. Large institutions produce more articles and potentially receive more citations and would therefore define the extreme values in the data. Hence, the second part of Table 2 shows the normalized indicators which are used in the analysis. Within an average institution of economics around 32 articles per capita are published, which contain around 322 pages in (refereed) journals and were downloaded around 172 times (again per capita). Per FTE the typical institution has received around 570 citations. It is thereby important to bear in mind again, that these numbers describe stock levels and that the per capita view concerns the number of current FTE.

Results

Given the seven indicators for each institution it is not obvious how many archetypoids are reasonable. The elbow criterion, is supposed to help to extract a clear cut-off point. In Fig. 1 we show the corresponding scree-plot of the RSS, which is used to determine the number of archetypoids to retain. Based on the scree-plot we perform an analysis with three potential archetypoids. However, since a potential flattening can also be detected for 4 and 5 archetypoids we discuss how the results change, if more archetypoids are considered, in the subsequent section five.

Figure 2 shows the bar-plots representing percentiles for three archetypoids. Bar-plots thereby serves as a different graphical representation of the convex hull. The height of each bar-plot denotes the share of the convex hull relative to the maximum in the category. Hence, the y-axis denotes the relevance of the indicator for the archetype. Table 3 provides additional context for the percentiles shown in Fig. 2. The table reports the archetypal value for each bibliometric indicator, when the reported percentiles are applied to the original (normalized) dataset. To give an example: while Archetypoid 1 published 14 works, Archetypoid 2 published 60 articles.

The three archetypoids can be interpreted as follows:

Archetypoid 1 represent the low performer with a relatively low number of working papers and articles (14), citations (101) and downloads (81).

Archetypoid 3 denotes the excellent performer among the top-level institutions, given the other archetypoids. The institution performs well in all indicators, even if the indicators are quality weighted. Table 3 show that the corresponding values for each indicator are at least five times larger than the those for Archetypoid 1. In the case of citations, it is even more than 26 times larger.

Archetypoid 2 denotes institutions between the previous two extremes, with a relatively high number of published work and pages. Nevertheless, both figures are smaller than for Archetypoid 3. In addition, compared to Archetypoid 3, the quality adjusted indicators are clearly lower as well as the citations and downloads. Still, they are larger than the figures of Archetypoid 1. The quantity dominates somewhat the quality of publications. Table 3 shows that the corresponding scores of Archetypoid 2 are substantially lower than those of Archetypoid 3 but are not as small as for Archetypoid 1.

Nevertheless, as we extracted the best economic institutions from RePEc, the terminus low performer for the first archetypoid must be interpreted cautiously. Compared to all institutions listed in RePEc every institution in our sample can be classified as top, nevertheless, compared among each other some perform worse than other ones.

Besides the aggregated analysis it is also possible to look at the relative share of each archetypoid for each institution. In practice, percentages of all three archetypoids are allocated to the institutions. This implies, that each institution is assigned three values, which add up to one. In Fig. 3 we show the box-plots for all percentage shares of the three identified archetypoids. It displays that most of the institutions in our data set are characterized by Archetype 2, the medium-performer, as it represents the largest relative shares. In comparison, the Archetype 1, the low performer, is less frequent. Of particular interest is the third archetypoid, the top-performer. As one would suspect, the majority of institutions in our sample have a low share of this archetypoid and only some—the true top-performer—are assigned a large share of this archetypoid.

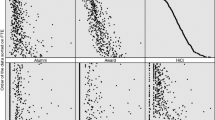

In addition to the more general view at the percentage shares of the three identified archetypoids, it also interesting to look at the association of single institutions to the three archetypoids. Figure 4 displays the allocation of each institutions to the identified types within a ternary plot. The edges of the triangle denote the shares and each circle denotes one institution. The diagram confirms that the Archetypoid 2 is the most frequent one. But is also shows that many institutions are a mixture of Archetypoid 1 and 2. The portion of Archetype 3 is often very small (see also Table 5).

In Table 4 we report the top five institutions for each archetypoid, i.e. institutions with the largest relative share for the respective archetypoid. The values for each institution of the sample can be found in the “Appendix” in Table 5. A value of 1.000 denotes that the respective institution represents the identified archetypoid perfectly. By assumption, this is the case for at least one institution for all three archetypoids. The faculty of economics at the Massachusetts Institute of Technology (MIT) represents the Archetypoid 3, the top-performer. In contrast, the Department of Agricultural and Resource Economics at the University of California-Davis represents the Archetypoid 1, the low-performer. More than one institution, to be precise 34 institutions, represent the medium-performer, which is plausible since the type is most common among the three archetypoids. Nevertheless, in most cases, institutions are a mixture of the three different archetypoids.

Sensitivity Analysis

Since the scree-plot of Fig. 1 showed that the number of archetypoids is arbitrary, we show what happens if we consider 4 or 5 archetypoids instead of 3. This addition not only shows the robustness of the results, but also provides some additional insights. Figure 5 displays that the already classified archetypoids 1 to 3 seem to remain, even if more classes are allowed. Moreover, we see, that the inclusion of a fourth archetypoid, allows to distinguish between two medium-performers: the ones which appear agreeable when the absolute numbers are assessed (Archetypoid 1) and the ones which hold their performance also when the output is weighted (Archetypoid 2). Similarly, the inclusion of fifth archetypoid allows to distinguish between the true low-performer (Archetypoid 3) and faculties that have a larger outreach, indicated in particular by the number of downloads (Archetypoid 5).

Conclusions

In this paper we introduced archetypoid analysis to the evaluation of institutions and faculties of economics. We argue that the method offers a suitable and straight-forward way to classify institutions based on observed data. The analysis allows to extract typical characteristics (archetypoids) within a multivariate data set. We evaluate 298 economic institutions obtained from the RePEc database. We have seven bibliometric scores for each institution, spanning over various measures of (quality-weighted) number of published work, citations and access statistics.

We identified three main archetypoids, which are characterized as top- and low-performer and the institutions between these two extremes. The results are robust for the allowance of additional archetypoids. We must mention two caveats for our analysis. Firstly, we employ stock levels for the classification. While this is a typical approach for the creating of rankings, it has obvious disadvantages. For instance, institutions profit from the whole publication record of their scientists, even though some of the work could have been done at a previous institution. Secondly, our set of economic institutions and faculties are quite heterogeneous. We focus on research related indicators and leave out aspects as teaching which might influence our analysis. Thus, future research should include further characteristics of science, as teaching and the acquisition of grants, among others.

References

Bolli, T., Olivares, M., Bonaccorsi, A., Daraio, C., Aracil, A. G., & Lepori, B. (2016). The differential effects of competitive funding on the production frontier and the efficiency of universities. Economics of Education Review,52, 91–104.

Bornmann, L., & Wohlrabe, K. (2019). Normalisation of citation impact in economics. Scientometrics,120(2), 841–884.

Chan, B. H., Mitchell, D. A., & Cram, L. E. (2003). Archetypal analysis of galaxy spectra. Monthly Notices of the Royal Astronomical Society,338(3), 790–795.

Cutler, A., & Breiman, L. (1994). Archetypal analysis. Technometrics,36(4), 338–347.

Davis, T., & Love, B. C. (2010). Memory for category information is idealized through contrast with competing options. Psychological Science,21(2), 234–242.

Epifanio, I. (2016). Functional archetype and archetypoid analysis. Computational Statistics & Data Analysis,104, 24–34.

Feld, S., Werner, M., Schönfeld, M., & Hasler, S. (2015). Archetypes of alternative routes in buildings. In 2015 International conference on indoor positioning and indoor navigation (IPIN) (pp. 1–10). IEEE.

Gnewuch, M., & Wohlrabe, K. (2018). Super-efficiency of education institutions: an application to economics departments. Education Economics,26(6), 610–623.

Gralka, S. (2018). Persistent inefficiency in the higher education sector: evidence from Germany. Education Economics,26(4), 373–392.

Gralka, S., Wohlrabe, K., & Bornmann, L. (2019). How to measure research efficiency in higher education? Research grants vs publication output. Journal of Higher Education Policy and Management,41(3), 322–341.

Hazelkorn, E. (2007). The impact of league tables and ranking systems on higher education decision making. Higher Education Management and Policy,19(2), 1–24.

Hinrich, J. L., Bardenfleth, S. E., Røge, R. E., Churchill, N. W., Madsen, K. H., & Mørup, M. (2016). Archetypal analysis for modeling multisubject fMRI data. IEEE Journal of Selected Topics in Signal Processing,10(7), 1160–1171.

Hsieh, C.-S., Konig, M. D., Liu, X., & Zimmermann, C. (2018). Superstar Economists: Coauthorship networks and research output. CEPR Discussion Paper, No. DP13239, 1–47.

Johnes, G., & Johnes, J. (2016). Costs, efficiency, and economies of scale and scope in the English higher education sector. Oxford Review of Economic Policy,32(4), 596–614.

Moliner, J., & Epifanio, I. (2018). Bivariate functional archetypoid analysis: an application to financial time series. In Mathematical and statistical methods for actuarial sciences and finance (pp. 473–476). Springer.

Mørup, M., & Hansen, L. K. (2012). Archetypal analysis for machine learning and data mining. Neurocomputing,80, 54–63.

Pike, G. R., & Kuh, G. D. (2005). A typology of student engagement for American colleges and universities. Research in Higher Education,46(2), 185–209.

Rath, K., & Wohlrabe, K. (2016). Recent trends in co-authorship in economics: evidence from RePEc. Applied Economics Letters,23(12), 897–902.

Seiler, C., & Wohlrabe, K. (2013). Archetypal scientists. Journal of Informetrics,7(2), 345–356.

Seth, S., & Eugster, M. J. (2016). Probabilistic archetypal analysis. Machine Learning,102(1), 85–113.

Steinschneider, S., & Lall, U. (2015). Daily precipitation and tropical moisture exports across the eastern United States: an application of archetypal analysis to identify spatiotemporal structure. Journal of Climate,28(21), 8585–8602.

Sternberg, R., & Litzenberger, T. (2005). The publication and citation output of German Faculties of Economics and Social Sciences-a comparison of faculties and disciplines based upon SSCI data. Scientometrics,65(1), 29–53.

Sun, W., Yang, G., Wu, K., Li, W., & Zhang, D. (2017). Pure endmember extraction using robust kernel archetypoid analysis for hyperspectral imagery. ISPRS Journal of Photogrammetry and Remote Sensing,131, 147–159.

Thurau, C., Kersting, K., Wahabzada, M., & Bauckhage, C. (2012). Descriptive matrix factorization for sustainability adopting the principle of opposites. Data Mining and Knowledge Discovery,24(2), 325–354.

Vinué, Guillermo. (2017). Anthropometry: An R package for analysis of anthropometric data. Journal of Statistical Software,77(6), 1–39.

Vinué, G., & Epifanio, I. (2017). Archetypoid analysis for sports analytics. Data Mining and Knowledge Discovery,31(6), 1643–1677.

Vinué, Guillermo, Epifanio, I., & Alemany, S. (2015). Archetypoids: A new approach to define representative archetypal data. Computational Statistics and Data Analysis,87, 102–115.

Wolszczak-Derlacz, J., & Parteka, A. (2011). Efficiency of European public higher education institutions: a two-stage multicountry approach. Scientometrics,89(3), 887–917.

Worthington, A. C., & Higgs, H. (2011). Economies of scale and scope in Australian higher education. Higher Education,61(4), 387–414.

Zimmermann, C. (2013). Academic rankings with RePEc. Econometrics,1(3), 249–280.

Acknowledgements

Open Access funding provided by Projekt DEAL.

Funding

This research received no external funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wohlrabe, K., Gralka, S. Using archetypoid analysis to classify institutions and faculties of economics. Scientometrics 123, 159–179 (2020). https://doi.org/10.1007/s11192-020-03366-z

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-020-03366-z