Abstract

The purpose of this study is to examine efficiency and its determinants in a set of higher education institutions (HEIs) from several European countries by means of non-parametric frontier techniques. Our analysis is based on a sample of 259 public HEIs from 7 European countries across the time period of 2001–2005. We conduct a two-stage DEA analysis (Simar and Wilson in J Economet 136:31–64, 2007), first evaluating DEA scores and then regressing them on potential covariates with the use of a bootstrapped truncated regression. Results indicate a considerable variability of efficiency scores within and between countries. Unit size (economies of scale), number and composition of faculties, sources of funding and gender staff composition are found to be among the crucial determinants of these units’ performance. Specifically, we found evidence that a higher share of funds from external sources and a higher number of women among academic staff improve the efficiency of the institution.



Introduction

The development of nonparametric methods such as Data Envelopment Analysis (DEA), Free Disposal Hull (FDH) and others (e.g. Malmquist indices) has resulted in a burgeoning literature on efficiency assessments of decision-making units (DMUs) across different industries. However, university/school efficiency has been the subject of only a limited number of studies. For example, a bibliographic database of DEA articles published in scientific journals in the years 1950–2007, maintained by Gattoufi et al. (2010), records only about 3.5% of studies dedicated to higher education issues.Footnote 1

Existing studies on the efficiency of tertiary education institutions have mainly relied on country-specific data and have covered only a small number of countries. Apart from a few exceptions (for example, HEIs in the UK or in Finland), micro data on HEIs are not easily obtainable or comparable across countries and time periods. For a review of the early empirical studies applying frontier efficiency measurement techniques to education, see Worthington (2001).

Interestingly, Australian universities have already been analyzed in depth, e.g., see Abbott and Doucouliagos 2003; Avkiran 2001; Carrington et al. 2005; Worthington and Lee 2008. Among European countries, the UK has a particularly long and rich tradition in formal analysis of the efficiency and productivity of the higher education sector (among others: Flegg et al. 2004; Glass et al. 1995; Izadi 2002; Johnes and Johnes 1995; Johnes 2006a, b). Other country-specific studies on tertiary education systems’ efficiency in Europe considered HEIs in Italy (Abramo et al. 2008; Agasisti and DalBianco 2006; Agasisti and Salerno 2007; Bonaccorsi et al. 2006; Ferrari and Laureti 2005; Tommaso and Bianco 2006), Austria (Leitner et al. 2007), Germany (Fandel 2007; Kempkes and Pohl 2010; Warning 2004), Poland (Wolszczak-Derlacz and Parteka 2010) and Finland (Räty 2002). Cross-country studies are difficult to perform due to problems with gathering comparable microdata on HEI performance.

Only a few studies have examined the efficiency of HEIs from several European countries. Bonaccorsi et al. (2007a) covered universities from Italy, Spain, Portugal, Norway, Switzerland and the UK; Bonaccorsi et al. (2007b) compared universities from Finland, Italy, Norway and Switzerland, this time by research field; and Agasisti and Johnes (2009) compared the technical efficiency of English and Italian universities over the period 2002/2003 to 2004/2005.

The aim of this research is not only to evaluate the relative technical efficiency of European higher education institutions in a comparative setting, but also to reveal external determinants of their performance.

To this end, the analysis is extended with a second step in which the DEA scores are regressed on a set of potential determinants of efficiency using the bootstrap procedure of Simar and Wilson (2007), in order to ensure valid statistical inference.

In the context of the determinants of school or university performance, two-stage procedures have already been used. For example, Ray (1991) used OLS in the second step to analyse the impact of socioeconomic characteristics on the efficiency scores of 122 Connecticut high schools, finding that parents’ education level had a positive impact on pupils’ performance, while belonging to a minority ethnic group and being raised in a single-parent family had a negative impact. Mancebón and Bandrés (1999) analysed Spanish secondary schools, trying to detect, through descriptive analysis and without a formal second-step regression, characteristic differences between the most and least efficient schools, and pointed to urban location as one such characteristic. In the second step, the tobit model is most often used, a choice motivated by the boundedness of DEA scores. For instance, Kirjavainen and Loikkanen (1998) employ the tobit model in the analysis of Finnish senior secondary schools, finding that inefficiency decreases with class size and the parents’ education level. Similarly, the tobit model was used by Kempkes and Pohl (2010), who regress the DEA efficiency scores of German universities on regional GDP per capita and dummies for the existence of engineering and/or medical departments. They conclude that HEIs located in more prosperous regions (the western German Länder) are more likely to benefit, in terms of efficiency, from their environment.

Oliviera and Santos (2005) and Alexander et al. (2010) appear to be the only studies (to the best of our knowledge) that have so far implemented the bootstrap procedure of Simar and Wilson (2007) to study educational institutions. Oliviera and Santos (2005) analysed the efficiency of 42 Portuguese public schools, finding that school efficiency is explained positively by the number of physicians per 1,000 people and negatively by the unemployment rate of the region where the school is located. In the second study, Alexander et al. (2010) analysed the secondary school sector in New Zealand and found that school efficiency is affected by the school type (integrated versus state, girls’ versus co-educational), by location (urban vs. rural areas) and by teacher quality.

Alternatively, Bonaccorsi et al. (2006) use the ratio of conditional to unconditional efficiency scores to investigate the effects of external variables on performance in a set of Italian universities. However, in their case, the conditional measures of efficiency allow the impact of external factors to be checked only one at a time, and not simultaneously as in our approach. They conclude that neither economies of scale (size of the unit) nor economies of scope (interdisciplinarity of the unit) are significant factors in explaining research and education productivity.

The main limitations of the existing literature are restricted country and time coverage and the use of inappropriate estimation methods, namely censored (tobit) regression.Footnote 2

We attempt to address these shortcomings by using an original and extensive dataset on the individual characteristics of HEIs from 7 countries for the period 2001–2005. Combined with a consistent estimation methodology, this study represents an important extension of the existing literature.

To the best of our knowledge, this is not only one of the first attempts to analyse the technical efficiency of European academic units from more than two countries, but also the first study that tries to identify the determinants of HEIs’ performance across several countries. Such a broad view of the efficiency of higher education units is necessary given the growing pressure to produce high-quality research publishable in international journals, the intense competition for external funding (European grants etc.) and the internationalisation of studies. The need for such a broad analysis has been expressed in previous studies (e.g., Agasisti and Johnes 2009).

The rest of the study is structured as follows. In section “Two-stage bootstrap DEA analysis”, we present a theoretical and methodological basis for the non-parametric analysis of efficiency performance. Section “Empirical setting” contains the description of our panel and data, along with key descriptive statistics on European HEIs from our sample. In section “Results of the empirical analysis on efficiency performance” we present the results of our empirical assessment of the efficiency of European HEIs: the first stage of our analysis is based on the computation of DEA scores, while the second stage is dedicated to the exploration of potential determinants of inefficiency.

Our principal results indicate a relatively low level of efficiency of HEIs in the sample of 7 European countries. Judging by the mean efficiency scores over the period of analysis, HEIs could increase their output by as much as 55% while keeping their inputs constant. The mean score hides considerable variability of efficiency within and between countries; consequently, no single country can be chosen as having “the best”, i.e. the most efficient, higher education system. Finally, the second-step analysis confirms that unit size (economies of scale), the number and composition of faculties, the source of funding and the gender composition of staff are among the crucial determinants of the units’ performance. The results indicate that the higher the share of funds from external sources and the higher the share of women among academic staff, the lower the inefficiency of the institution. These findings have clear policy implications and may be especially relevant for HEI managers.

Two-stage bootstrap DEA analysis

We focus on the assessment of the efficiency of European public higher education institutions, where efficiency is understood not in absolute terms but as performance relative to an efficient technology (represented by a frontier function). The frontier can be estimated through DEAFootnote 3 or by stochastic frontier methods.

In the context of higher education, DEA is a particularly useful tool, as it allows the researcher to capture multiple inputs and multiple outputs simultaneously, with a nonparametric treatment of the efficiency frontier. Measuring the productivity of education institutions differs from standard productivity measurement, not only because no profit is maximised, but also because HEIs are not standard firms with one output and a set of inputs. On the contrary, HEIs produce at least two outputs: teaching and research. The methodology of efficiency measurement has to take this specificity into account.

A nonparametric treatment of the efficiency frontier does not assume a particular functional form (as parametric methods do), but relies on general regularity properties such as monotonicity, convexity and homogeneity. DEA is based on a linear programming algorithm that constructs an efficiency frontier from data on individual decision-making units (DMUs), in our case universities (or, more generally, HEIs).

Turning to the formal presentation of the method, we present the concept of DEA, largely following the notation and exposition provided by Simar and Wilson (2000, 2007). In the context of HEIs, output-oriented models are most frequently used because the quantity and quality of inputs, such as student entrants or academic staff, are assumed to be fixed exogenously, and universities cannot influence these numbers or characteristics, at least not in the short run (Bonaccorsi et al. 2006). Consequently, we present here an output-oriented version of the model.

The production process is constrained by the production set:

\[ \Psi = \left\{ (x,y) \in R_{+}^{N+M} \mid x \text{ can produce } y \right\} \tag{1} \]

where x represents a vector of N inputs and y the vector of M outputs.

The production frontier is the boundary of Ψ. Technically inefficient units lie in the interior of Ψ, while technically efficient ones operate on its boundary, i.e. the technology frontier. Describing the production set Ψ by its sections, the output requirement set is defined for all \( x \in R_{+}^{N} \) as:

\[ Y(x) = \left\{ y \in R_{+}^{M} \mid (x,y) \in \Psi \right\} \tag{2} \]

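Then the (output-oriented) efficiency boundary \( \partial Y(x) \) is defined for a given \( x \in R_{+}^{N} \) as:

\[ \partial Y(x) = \left\{ y \mid y \in Y(x),\ \lambda y \notin Y(x)\ \forall \lambda > 1 \right\} \tag{3} \]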

and the output measure of efficiency for a production unit located at \( (x,y) \in R_{+}^{N+M} \) is:

\[ \lambda(x,y) = \sup \left\{ \lambda \mid (x, \lambda y) \in \Psi \right\} \tag{4} \]

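Because the production set Ψ is unobserved, in practice the efficiency scores λ(x, y) are obtained with DEA estimators. For example, for the output orientation with constant returns to scale (CRS), the score of unit i is the solution of the linear program:

\[ \hat{\lambda}(x_i, y_i) = \max \left\{ \lambda > 0 \;\middle|\; \lambda y_i \le \sum_{k=1}^{n} \gamma_k y_k,\ x_i \ge \sum_{k=1}^{n} \gamma_k x_k,\ \gamma_k \ge 0,\ k = 1, \ldots, n \right\} \tag{5} \]

where n is the number of units and \( \gamma_k \) are nonnegative intensity weights.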
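To make the output-oriented CRS linear program concrete, the sketch below computes DEA scores with SciPy’s `linprog` using the HiGHS solver. It is a minimal illustration under our own assumptions, not the FEAR routine used in this study; the function name `dea_output_crs` and the toy data are hypothetical. Each unit’s score is the largest factor λ by which its outputs could be expanded while a nonnegative combination of observed units uses no more of any input.

```python
import numpy as np
from scipy.optimize import linprog


def dea_output_crs(X, Y):
    """Output-oriented DEA scores under constant returns to scale (scores >= 1).

    X: (n, N) inputs, Y: (n, M) outputs; one row per decision-making unit.
    For each unit i we solve
        max lambda  s.t.  lambda * y_i <= sum_k gamma_k * y_k,
                          x_i >= sum_k gamma_k * x_k,  gamma_k >= 0.
    Assumes every unit has at least one positive output (otherwise lambda is unbounded).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n, n_in = X.shape
    n_out = Y.shape[1]
    scores = np.empty(n)
    for i in range(n):
        # Decision vector [lambda, gamma_1, ..., gamma_n]; linprog minimises, so minimise -lambda.
        c = np.zeros(n + 1)
        c[0] = -1.0
        # Output rows: lambda * y_i - sum_k gamma_k * y_k <= 0
        A_out = np.hstack([Y[i].reshape(-1, 1), -Y.T])
        # Input rows: sum_k gamma_k * x_k <= x_i (lambda does not enter these rows)
        A_in = np.hstack([np.zeros((n_in, 1)), X.T])
        res = linprog(
            c,
            A_ub=np.vstack([A_out, A_in]),
            b_ub=np.concatenate([np.zeros(n_out), X[i]]),
            bounds=[(0, None)] * (n + 1),
            method="highs",
        )
        if not res.success:
            raise RuntimeError(f"LP failed for unit {i}: {res.message}")
        scores[i] = -res.fun
    return scores
```

With a single input equal to 1 for all units and outputs (1, 0), (0, 1), (0.5, 0.5) and (0.25, 0.25), the first three units score 1 and the last scores 2, since the observed unit producing (0.5, 0.5) shows that doubling its outputs is feasible.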

In the second stage, we use the previously calculated DEA efficiency scores \( \hat{\lambda}_{i} \) as the dependent variable and regress them on potential exogenous (environmental) variables \( z_{i} \):

\[ \hat{\lambda}_{i} = a + z_{i} \beta + \varepsilon_{i} \tag{6} \]

where \( \varepsilon_{i} \) is statistical noise whose distribution is restricted by \( \varepsilon_{i} \ge 1 - a - z_{i} \beta \), since DEA efficiency scores are greater than or equal to one in the output orientation.

Several problems arise because the true efficiency scores are unobserved and are replaced by the estimates \( \hat{\lambda}_{i} \), which are serially correlated in an unknown way. Additionally, the error term \( \varepsilon_{i} \) is correlated with \( z_{i} \), since inputs and outputs can be correlated with the environmental variables. To obtain consistent estimates of β and valid confidence intervals, we follow the bootstrap procedure of Simar and Wilson (2007). It involves obtaining the estimates \( \hat{\lambda}_{i} \) in the first step and then regressing them on the potential covariates \( z_{i} \) with a bootstrapped truncated regression. As a robustness check, we also follow the so-called double bootstrap, in which DEA scores are bootstrapped in the first stage to obtain bias-corrected efficiency scores, and the second step is then performed, as before, on the basis of the bootstrapped truncated regression.
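A compact sketch of the bootstrapped truncated regression, as we read Algorithm 1 of Simar and Wilson (2007), is given below. It is an illustration under stated assumptions, not the Stata code used for the estimates. The helper names `truncreg_fit` and `simar_wilson_alg1` are hypothetical. The truncated normal likelihood is maximised with SciPy’s BFGS optimiser, and the covariate matrix is assumed to contain a column of ones, so the intercept a is the first element of β. The double-bootstrap variant with bias-corrected first-stage scores is not shown.

```python
import numpy as np
from scipy import optimize, stats


def truncreg_fit(y, Z, lower=1.0):
    """ML fit of y = Z @ beta + eps, eps ~ N(0, sigma^2), with y observed only if y > lower.

    Z is assumed to contain a column of ones, so beta[0] plays the role of the intercept a.
    Returns (beta_hat, sigma_hat).
    """
    beta0 = np.linalg.lstsq(Z, y, rcond=None)[0]  # OLS starting values
    log_s0 = np.log(np.std(y - Z @ beta0) + 1e-6)

    def negloglik(theta):
        beta, sigma = theta[:-1], np.exp(theta[-1])  # log-parametrise sigma to keep it > 0
        mu = Z @ beta
        # Truncated-normal log-likelihood: log f(y) - log P(y > lower)
        ll = stats.norm.logpdf(y, mu, sigma) - stats.norm.logsf(lower, mu, sigma)
        return -np.sum(ll)

    res = optimize.minimize(negloglik, np.append(beta0, log_s0), method="BFGS")
    return res.x[:-1], float(np.exp(res.x[-1]))


def simar_wilson_alg1(scores, Z, n_boot=1000, alpha=0.05, seed=0):
    """Bootstrapped truncated regression of DEA scores (>= 1) on covariates Z.

    Returns beta_hat and the lower/upper bounds of (1 - alpha) bootstrap intervals.
    """
    scores = np.asarray(scores, dtype=float)
    Z = np.asarray(Z, dtype=float)
    rng = np.random.default_rng(seed)
    keep = scores > 1.0  # units on the estimated frontier (score == 1) are excluded
    y, Zk = scores[keep], Z[keep]
    beta_hat, sigma_hat = truncreg_fit(y, Zk)
    mu = Zk @ beta_hat
    a_std = (1.0 - mu) / sigma_hat  # standardised truncation point: eps_i >= 1 - z_i beta
    draws = np.empty((n_boot, beta_hat.size))
    for b in range(n_boot):
        eps = stats.truncnorm.rvs(a_std, np.inf, loc=0.0, scale=sigma_hat, random_state=rng)
        beta_star, _ = truncreg_fit(mu + eps, Zk)  # refit on the pseudo-scores
        draws[b] = beta_star
    # Interval from the distribution of (beta_star - beta_hat): [2*beta_hat - q_hi, 2*beta_hat - q_lo]
    q_lo, q_hi = np.quantile(draws, [alpha / 2, 1 - alpha / 2], axis=0)
    return beta_hat, 2 * beta_hat - q_hi, 2 * beta_hat - q_lo
```

In an application, `scores` would be the first-stage DEA scores and `n_boot` would match the number of replicates used in the study (1000). Percentile intervals of the bootstrap draws are a common alternative to the interval construction shown here.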

In practice, we compute the DEA efficiency scores with Wilson’s (2008) FEAR 1.15 software, which is freely available online, and estimate the truncated regression models in Stata.Footnote 4

Empirical setting

The data and panel composition

The analysis is based on a university-level database containing information on the outputs and inputs of public higher education institutions from a set of EU countries (Austria, Finland, Germany, Italy, Poland and the United Kingdom) and one non-EU country (Switzerland) for which it was possible to gather comparable micro data.Footnote 5 Table 4 in the Appendix reports the number of HEIs from each country, while a detailed list of all universities covered by our study is presented in Table 5, also in the Appendix.

The collection of micro data (at the level of individual HEIs) is not trivial. Countries differ in the availability and coverage of university-level data. Table 6 in the Appendix lists the data sources. In our sample, the most comprehensive HEI databases exist in Finland, the UK and Italy, with freely available online platforms giving access to all non-confidential statistics.Footnote 6 For Swiss, Austrian and German HEIs, the data were kindly provided by the staff of the respective Central Statistical Offices. Part of the data (e.g., year of foundation or location) can be accessed through the HEIs’ web pages. In the case of Poland, unfortunately, micro data on HEIs practically do not exist for research purposes. There is no online platform containing the data; some statistics are available in paper form in various publications of the Ministry of Science and Higher Education or the Central Statistical Office. Consequently, the data on Polish HEIs that we managed to gather come from multiple sources, both officially published statistics and direct contact with the statistical offices holding the data (detailed information is available from the authors upon request).

Even though our data come from various sources and concern institutions from different countries, particular attention has been paid to ensuring maximum comparability of the crucial variables across countries, in accordance with the UNESCO-UIS/OECD/Eurostat (UOE) 2004 data collection manual and the Frascati manual (OECD 2002). Table 7 in the Appendix presents the definitions of the core variables used in the first or second step of the analysis. As for the input measures, our dataset contains information on the total number of students, academic staff and total revenues. Total revenues, originally reported in national currencies, have been converted into real terms (2005 = 100) and expressed in euro purchasing power standards (PPS).

Given the dual mission of higher education institutions (teaching and research),Footnote 7 we consider two outputs: teaching output, measured by the number of graduations, and research output, quantified by means of bibliometric indicators. Research output is measured by the number of publication records of individual HEIs indexed in Thomson Reuters’ ISI Web of Science database (part of the ISI Web of KnowledgeFootnote 8), which lists publications from quality journals in all scientific fields.Footnote 9 We count all publications (scientific articles, proceedings papers, meeting abstracts, reviews, letters, notes etc.) published in a given year with at least one author listing the HEI under consideration as an affiliation.Footnote 10 Units with the most missing observations on publication records, or with ambiguous affiliations hindering the identification of publication records,Footnote 11 were excluded.

Additionally, we have the following information on individual HEIs: year of foundation, faculty composition, the number of different faculties and a dummy variable indicating whether a medical/pharmacy faculty is present, location and statistics on the level of economic development of the region where the HEI is located, the gender structure of the academic staff and the source of funding. To construct the last variable, we divide total revenues into two streams: core budget and third-party funding. In general, third-party funding includes grants from national and international funding agencies for research activities, private income, student fees and others. Core funding, by contrast, comes mainly from the government (central, regional or local) in the form of teaching and/or operating grants. See Table 7 in the Appendix for the detailed breakdown of funding by country.

The crucial input and output variables needed to compute the DEA efficiency scores are available for HEIs from all countries over the whole period 2001–2005. The coverage of other variables used in the second-stage analysis is sometimes limited, but this only affects the number of observations used in the second-stage estimation. For example, in the case of Italy, the variable describing the funding source was not considered because of the problematic breakdown between core and third-party funding (Bonaccorsi et al. 2010).

Our initial sample includes 266 HEIs. Because the nonparametric methods we use are especially sensitive to outliers, we follow the procedure proposed by Wilson (1993) to detect atypical observations. After their removal, a final sample of 259 HEIs remains.Footnote 12

Key characteristics of European HEIs from our sample

The HEIs covered by our study form a very heterogeneous sample: they differ in size, structure, financial resources and scientific output. In Table 1 we show key figures describing HEIs by country from the point of view of output/input relations. Note that measures such as the number of publications per academic employee can be treated as partial indicators of efficiency (in this case, scientific efficiency).

Taking country averages, the lowest publication record is found for HEIs from Poland: on average, an academic staff member at a Polish HEI has a third as many publications per year listed in the ISI Web of Knowledge as the average Italian, Austrian or British academic. However, in terms of the number of graduates per academic staff member, Polish HEIs lead, together with the UK and Italy. Moreover, HEIs differ greatly in size. The biggest universities, in terms of the number of students, are in Poland and Italy; the smallest HEIs are in Switzerland and Finland. Unsurprisingly, funding is also very uneven, even after accounting for large cross-country differences in price levels through PPS. On average, Polish HEIs have the lowest level of funding; Austria, Finland and Germany have similar levels of funding (twice that of Poland), and Swiss universities are very well funded, with almost 7 times the revenue per student of Polish ones. At the same time, governmental core funding accounts for the larger share of funds in all countries except the UK, where on average it constitutes 44% of funding. Women represent the largest share of academic staff in the UK and Germany, while in Austria only one in five academics, on average, is female.

Results of the empirical analysis on efficiency performance

First step DEA results

For each year of the analysis, we run an output-oriented CRS efficiency model. Our basic specification considers two outputs and three inputs. As inputs, we consider the total number of academic staff, the number of students and total revenues. The outputs are the number of graduations (teaching outcome) and the number of scientific publications (research outcome), as described in the previous section. As suggested by Daraio et al. (2011), such a heterogeneous panel requires standardisation; consequently, all inputs and outputs are expressed as ratios with respect to the country mean (country average = 100).
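The standardisation with respect to the country mean can be illustrated with a short sketch. It assumes the ratio is taken for one variable across the HEIs of one country, presumably within each year since DEA is run year by year. The function name and the example figures are hypothetical.

```python
from collections import defaultdict


def standardise_by_country(values, countries):
    """Rescale one variable so that each country's mean equals 100.

    values: the variable for each HEI (e.g. academic staff in a given year);
    countries: the country code of each HEI, in the same order.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for v, c in zip(values, countries):
        totals[c] += v
        counts[c] += 1
    means = {c: totals[c] / counts[c] for c in totals}
    # Ratio to the country mean, multiplied by 100 (country average = 100)
    return [100.0 * v / means[c] for v, c in zip(values, countries)]
```

For example, `standardise_by_country([10, 30, 5, 15], ["PL", "PL", "CH", "CH"])` returns `[50.0, 150.0, 50.0, 150.0]`. DEA scores are invariant to multiplying a variable by the same positive constant for all units, so a common rescaling would change nothing. It is the country-specific divisor that alters the cross-country comparison, because each HEI is then assessed relative to its national average.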

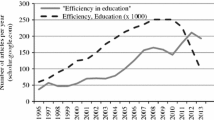

Because our aim is not to rank universities but to explore the determinants of their efficiency, and because of space constraints, we present here only country averages. The mean efficiency score for the whole sample is 1.55, the highest score (meaning the lowest relative efficiency) is 3.2, and only 5% of HEIs are fully efficient, with efficiency scores equal to one. Since we assume an output orientation, an inefficient university would have to increase its output by (DEA score − 1) × 100% to reach the frontier. Therefore, the mean score of 1.55 indicates that, across the universities in all seven countries analysed here, output could increase by as much as 55% with inputs held constant. Of course, this average is the result of different country patterns that also change over time. The kernel density of efficiency scores (pooling all years) is shown country by country in Fig. 1. All countries are characterised by a unimodal, skewed distribution, with the mass concentrated at low scores, i.e. towards the more efficient units; units are more efficient the closer their score is to one. In the case of Switzerland, the distribution is less dispersed, without long tails, which suggests that Swiss universities are similar in their efficiency. Additionally, bias-corrected efficiency scores obtained with the bootstrap method of Simar and Wilson (2000) are presented. Their distributions are shifted slightly to the right, indicating lower efficiency (higher efficiency scores) than the original ones.Footnote 13 In Table 2, we present the average DEA scores by country and their dynamics over time.
In 2001, Austria had the lowest average score (1.301), meaning that it had on average the most efficient HEIs relative to the other countries, followed by Switzerland (1.387) and Italy (1.389). In every subsequent year of the analysis, Swiss universities obtained the best average efficiency scores. The DEA scores rise (efficiency falls) from 2001 to 2004, after which the trend reverses.Footnote 14 Again, the averages mask large deviations within countries (see Fig. 1 and the last rows of Table 2).
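The arithmetic linking an output-oriented score to the implied output expansion can be written as two one-line helpers; the function names are hypothetical. The reciprocal of the score gives the share of frontier output a unit currently produces: for the sample mean of 1.55 this is about 0.65, while the worst score of 3.2 implies a 220% output expansion.

```python
def output_expansion_pct(score):
    """Percentage output increase needed to reach the frontier: (score - 1) * 100."""
    if score < 1.0:
        raise ValueError("output-oriented DEA scores are >= 1")
    return (score - 1.0) * 100.0


def share_of_potential_output(score):
    """Fraction of frontier output currently produced (the reciprocal of the score)."""
    if score < 1.0:
        raise ValueError("output-oriented DEA scores are >= 1")
    return 1.0 / score
```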

Interestingly, in all countries except Austria and Finland there are units situated on the efficiency frontier. However, only two universities have an efficiency score equal to one regardless of the year of the analysis and the DEA specification (3 inputs vs. 2 inputsFootnote 15): the University of York (UK) and Humboldt-Universität Berlin (Germany).

Given that the efficiency scores of HEIs vary greatly both across and within countries, it is of interest to identify the determinants of universities’ performance and, consequently, what can be done to improve the efficiency of individual university units. This task is performed in the second step of the analysis presented below.

Second step—determinants of efficiency scores in European HEIs

Empirical specification

At this stage, DEA scores are linked through a parametric model to additional variables describing the institutional setting, faculty composition, funding schemes, country- and region-specific characteristics, etc. The model to be estimated takes the following form:

where i refers to a single HEI, t denotes the time period and j the country where HEI i is located; \( \lambda_{i,j,t} \) is the DEA score calculated as in (5); \( GDP_{n,t} \) is the real GDP per capita in euro PPS of the region n (NUTS2) where the university is located; \( nofac_{i,t} \) is the number of different faculties; \( med_{i} \) is a dummy variable equal to 1 if the university has a medical or pharmacy faculty and 0 otherwise; \( yearfound_{i} \) is the year of foundation; \( Rev\_core_{i,t} \) is the share of core funding in total revenues; and \( Women_{i,t} \) is the share of women in the academic staff.

Summary statistics are presented in Table 8 in the Appendix. Additionally, we include a set of country and time dummies. Time dummies control for exogenous changes in technology and/or changes in the number of publications indexed in the ISI database. Country-specific effects control for cross-country differences, for example those due to the diversity of education systems.

The choice of independent (environmental) variables, together with our expectations concerning their impact on HEIs’ efficiency scores, is briefly discussed below.

A university’s location can be an important determinant of its performance, as rich and poor regions offer HEIs different business environments and local conditions. To check this proposition, we use the real GDP per capita in euro PPS of the NUTS2 region n in which the university is located (\( GDP_{n,t} \)). For example, Kempkes and Pohl (2010) found a positive impact of a wealthier location on university efficiency, while Bonaccorsi and Daraio (2005) and Oliviera and Santos (2005) did not confirm the agglomeration effect.

Furthermore, we introduce the number of different faculties (\( nofac_{i} \)), which can serve as a proxy for the degree of a unit’s interdisciplinarity. This relates to the concept of economies of scope and addresses the question of whether increasing the variety of faculties brings a growth in efficiency, or whether specialisation in fewer fields is more beneficial to the university. An extensive review of previous empirical studies on the potential existence of economies of scope in the education sector is presented in Bonaccorsi et al. (2006); the overall picture is mixed, without unambiguous conclusions. The variable \( nofac_{i} \) reflects not only the interdisciplinarity of a unit but is also related to the size of the university, as larger universities usually have more faculties. This is confirmed by the pairwise correlation between \( nofac_{i} \) and the total number of students \( Stud_{i,t} \) (see Table 9 in the Appendix, where partial correlation coefficients between all the variables are presented). Assuming that institutions operating on a large scale can achieve greater productivity due to positive economies of scale, we would expect a negative coefficient on this variable. However, there is no consensus on whether economies of scale exist in the higher education sector (see, for example, Cohn et al. (1989) versus Felderer and Obersteiner (1999); for an in-depth literature review and discussion of economies of scale in higher education, see Bonaccorsi et al. (2006)). Diseconomies of scale may also occur due to bureaucracy in big units and a possible waste of resources, in which case we would expect a positive coefficient on this variable.

Next, to take into account the specificity of faculty composition, we consider a dummy variable \( med_{i} \) equal to one if the HEI has a medical or pharmacy faculty. A similar approach was adopted by Kempkes and Pohl (2010).

Then, we proxy the tradition of a given HEI by its year of foundation (\( yearfound_{i} \)). HEIs with a longer tradition are often perceived to have a better reputation, but it could also be the case that younger HEIs have more flexible and modern structures, ensuring more efficient performance.

Additionally, we introduce into Eq. 7 the share of core funding in total revenues (\( Rev\_core_{i,t} \)), which allows us to investigate whether the source of funding (public versus private) matters for HEI performance. Moreover, the literature often underlines the importance of universities’ autonomy for their performance, which can be proxied by the share of non-governmental funds in total revenue (Bonaccorsi and Daraio, 2007; Aghion et al., 2009).

Subsequently, we test the relation between the gender composition of the academic staff and the DEA scores of university units. The gender structure of the academic staff is measured by the ratio of women to total academic staff (\( Women_{i,t} \)).

As for the estimation strategy, we use the procedure described in section “Two-stage bootstrap DEA analysis”, involving a truncated regression with 1000 bootstrap replicates (the number of replicates L in point 2 of the algorithm), which should ensure valid statistical inference. This is followed by numerous robustness checks.

Results

First, we estimate regression (7) with DEA scores obtained from the 3-input, 2-output model. The results are presented in Table 3, where we show three alternative models depending on the variables included.

Recalling the output-oriented DEA formulation in Eq. 4, a positive sign of an estimated regression parameter indicates that, ceteris paribus, an increase in the variable corresponds to higher inefficiency (lower efficiency), while a negative sign indicates lower inefficiency (greater efficiency).

In the first column, the bias-adjusted coefficients of the basic regression are presented. The next two columns show the lower and upper bounds of the 95% bootstrap confidence interval, which is used to assess the statistical significance of the estimated coefficients: a coefficient is statistically significant when zero does not fall within its confidence interval.
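The significance rule amounts to checking whether each interval excludes zero. A minimal NumPy sketch follows; the function name and the interval bounds in the example are hypothetical, and a bound lying exactly at zero is treated as not significant.

```python
import numpy as np


def significant(lower, upper):
    """True where the confidence interval [lower, upper] excludes zero."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    if np.any(lower > upper):
        raise ValueError("each lower bound must not exceed its upper bound")
    return (lower > 0.0) | (upper < 0.0)
```

For instance, intervals [−0.2, 0.3], [0.1, 0.4] and [−0.5, −0.1] give `[False, True, True]`: only the first straddles zero.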

The estimation results reveal that the coefficient on the GDP per capita of the region where the university is located is not statistically significant, so the level of regional development is not among the significant determinants of HEI efficiency. This is confirmed in all three specifications of the model. When a dummy variable for a medical faculty is included (column 1), its coefficient is negative and significant, which indicates higher efficiency for universities with a medical faculty. Similarly, we confirm the statistical significance of the number of different faculties. The negative coefficient on \( nofac_{i} \) shows that HEIs with more faculties have lower DEA scores (i.e. they are more efficient), which in turn may be a sign of economies of scope and/or economies of scale. Finally, younger universities are less efficient (a positive coefficient on \( yearfound_{i} \)).

Additionally, we ran an augmented regression (model 2) that includes the share of core funding in total revenues (Rev_core_it) and the share of women in total academic staff (Women_it). Neither variable is available for the whole sample of HEIs (e.g., data are lacking for Italy), so the number of observations drops. The signs and statistical significance of the variables already included in model (1) are unchanged from the basic specification. The coefficient on Rev_core_it is positive and statistically significant, so an increase in the share of the university budget represented by core funding is associated with lower technical efficiency of the analysed universities. However, it should be underlined that establishing a strict causal relationship is difficult. Efficient universities can attract more third-party funding, while universities with a higher share of external funding may benefit from more financial resources and thereby improve their efficiency. Finally, we find that a higher share of women among academic staff is positively correlated with efficiency (note the negative and statistically significant coefficient on Women_it).

Robustness checks

We assessed the robustness of the estimation results in several ways. First, we considered a restricted DEA model with 2 inputs and 2 outputs that excludes the number of students from the inputs. This follows Mancebón and Bandrés (1999), who underline that students are not normal inputs of university production. Overall, the DEA scores obtained from the 2-input, 2-output model are similar to those from the basic 3-input, 2-output specification: the Spearman rank correlation coefficient between the two rankings equals 0.72. We then repeat the second step with the DEA scores obtained from the 2-input, 2-output model. In this case we can also include in the regression a variable that directly measures the size of the institution, the total number of students (Stud_it), because this variable was not among the inputs in the first step. However, to avoid multicollinearity between covariates, we exclude nofac_i from the independent variables. The results of the truncated regression are presented in Table 10 in the Appendix.
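The rank-correlation check can be reproduced with a few lines of pure Python. This sketch handles ties with average ranks, since ties are common in DEA, where every efficient unit scores exactly 1. It computes Spearman's coefficient as the Pearson correlation of those ranks. The function names and the example scores are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation = Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)


# Hypothetical scores of the same five HEIs under two DEA specifications
scores_3in = [1.0, 1.2, 1.55, 2.1, 1.0]
scores_2in = [1.0, 1.4, 1.3, 2.5, 1.1]
print(spearman(scores_3in, scores_2in))
```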

In general, most of the previous findings are confirmed. The coefficient on the country’s or region’s GDP is still not statistically significant. The negative coefficients on med_i and Women_it and the positive coefficient on yearfound_i are confirmed, although the latter is statistically significant in only two of the three regressions. In addition, the size of the institution, measured by the number of students (Stud_it), appears to be an important determinant of efficiency. Its coefficient is negative, so the higher the number of students, the higher the institution’s efficiency, which may indicate economies of scale in large units. The only difference concerns the coefficient on Rev_core_it, which loses its statistical significance.

Similarly, changing the number of bootstrap replications in the second step (we also considered 500 and 2000 replications) did not have a considerable impact on the results.

Additionally, we used the so-called double bootstrap method (see footnote 16). In this approach, DEA scores are bootstrapped in the first stage, and the second step is then performed, as before, using the bootstrapped truncated regression. The results of the double bootstrap procedure are shown in Table 11 in the Appendix. The estimates are very similar to those obtained previously, but in the augmented model (3) the coefficient on gender structure is statistically significant at a lower level. Moreover, in most cases the coefficient estimates tend to be slightly larger.
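The bias-correction step behind the first stage of the double bootstrap can be illustrated with the standard bootstrap bias estimate used in the DEA bootstrap literature (Simar and Wilson 2000). The bias of a DEA score is estimated as the mean of its bootstrap replicates minus the original score, and this estimate is then subtracted from the score. The sketch assumes the replicates have already been generated, for example with a smoothed bootstrap, which is not shown. The function name and the numbers are illustrative. In the output orientation, the DEA estimate tends to understate inefficiency. The replicates therefore usually fall below the original score, and the correction pushes the score upward.

```python
def bias_corrected(score, boot_scores):
    """Bootstrap bias-corrected efficiency score: score - (mean(boot) - score)."""
    boot_mean = sum(boot_scores) / len(boot_scores)
    bias = boot_mean - score  # estimated bias of the DEA score
    return score - bias       # equivalently 2 * score - boot_mean


# Hypothetical example: an output-oriented score of 1.50 with two replicates
print(bias_corrected(1.50, [1.40, 1.44]))
```

Here the estimated bias is -0.08, so the corrected score is about 1.58. In practice, the mean is taken over hundreds or thousands of replicates rather than two.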

Finally, we change the truncation point in the second stage. Originally, only scores greater than one were included in the truncated regression. The efficient units were therefore excluded, and in this sense part of the information was lost (Monchuk et al. 2010). As an alternative, we used a truncation point slightly below one (0.99). The results obtained with truncation at 1.00 and at 0.99 are compared in Table 12 in the Appendix. Regardless of the truncation point, the signs, statistical significance and values of the coefficients are substantially the same.
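To see what the truncation point changes, the sketch below evaluates the log-likelihood of a left-truncated normal regression for given fitted values mu_i and scale sigma. Only observations with scores above c enter. Each contributes its normal log density minus the log probability of lying above c. Moving c from 1.00 to 0.99 brings the fully efficient units (score exactly 1) back into the sample. The code is pure Python, and the scores and fitted values are hypothetical.

```python
import math


def norm_logpdf(z):
    """Log density of the standard normal."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)


def norm_sf(z):
    """Upper-tail probability P(Z > z) of the standard normal."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def truncated_loglik(scores, mu, sigma, c=1.0):
    """Log-likelihood of scores ~ N(mu_i, sigma^2) left-truncated at c.
    Returns (log-likelihood, number of observations used)."""
    ll, n_used = 0.0, 0
    for y, m in zip(scores, mu):
        if y <= c:  # units at or below the truncation point are dropped
            continue
        ll += (norm_logpdf((y - m) / sigma) - math.log(sigma)
               - math.log(norm_sf((c - m) / sigma)))
        n_used += 1
    return ll, n_used


scores = [1.0, 1.2, 1.5, 1.0, 2.1]  # hypothetical DEA scores
mu = [1.1, 1.3, 1.4, 1.2, 1.8]      # hypothetical fitted values z_i * beta
for c in (1.00, 0.99):
    print(c, truncated_loglik(scores, mu, 0.3, c))
```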

Conclusions

The main aim of this research was to evaluate efficiency in a large sample of universities from as many European countries as possible, and to assess the importance of potential factors in improving their performance.

We have proposed a two-stage analysis that combines non-parametric and parametric methods. First, using non-parametric frontier techniques, we measured the technical efficiency of 259 HEIs from 7 European countries over the years 2001–2005. Universities differ from standard firms in their ‘production process’, owing to the presence of multiple inputs and multiple outputs, so we adopted an output-oriented formulation of DEA. We estimated two DEA specifications. The first has two outputs (publications and graduations) and three inputs (total academic staff, total number of students and total revenues). The second has the same two outputs and two inputs (total academic staff and total revenues).
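First-stage scores of this kind can be computed by linear programming. The following is a minimal sketch of an output-oriented DEA score for a single unit o, solved with SciPy's linprog using the HiGHS solver. The score is the Farrell output measure, so it is always at least 1. This section does not restate whether Eq. 4 imposes variable or constant returns to scale, so the vrs flag is an assumption. The function name and toy data are hypothetical. In the paper's setting, X would hold the inputs (academic staff, students, revenues) and Y the outputs (publications, graduations), one row per HEI.

```python
import numpy as np
from scipy.optimize import linprog


def dea_output(X, Y, o, vrs=True):
    """Output-oriented DEA score (phi >= 1) of unit o.

    X: (n, m) inputs, Y: (n, s) outputs, one row per unit.
    Solves  max phi  s.t.  sum_j lam_j x_ij <= x_io,
                           sum_j lam_j y_rj >= phi * y_ro,
                           lam_j >= 0  (and sum_j lam_j = 1 if vrs).
    """
    X, Y = np.asarray(X, dtype=float), np.asarray(Y, dtype=float)
    n, m = X.shape
    s = Y.shape[1]
    cost = np.zeros(n + 1)
    cost[0] = -1.0  # variables are [phi, lam_1..lam_n]; minimise -phi
    A_in = np.hstack([np.zeros((m, 1)), X.T])       # input constraints
    A_out = np.hstack([Y[o].reshape(-1, 1), -Y.T])  # phi*y_ro - sum lam*y_rj <= 0
    A_ub = np.vstack([A_in, A_out])
    b_ub = np.concatenate([X[o], np.zeros(s)])
    A_eq, b_eq = None, None
    if vrs:  # convexity constraint for variable returns to scale
        A_eq = np.concatenate([[0.0], np.ones(n)]).reshape(1, -1)
        b_eq = [1.0]
    res = linprog(cost, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=b_eq,
                  bounds=[(0, None)] * (n + 1), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[0]


# Hypothetical toy data: one input, one output, three units
X = [[1.0], [2.0], [4.0]]
Y = [[2.0], [3.0], [4.0]]
print([round(dea_output(X, Y, o, vrs=False), 3) for o in range(3)])
print([round(dea_output(X, Y, o, vrs=True), 3) for o in range(3)])
```

With constant returns to scale, the largest unit in the toy data is scored as inefficient. Under variable returns to scale, the convexity constraint places it on the frontier, which is why the choice between the two matters for size-related conclusions.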

On average, universities in the seven countries analysed exhibit rather poor efficiency in publications and graduations, with a mean DEA score of 1.55. In the output-oriented formulation, this means that outputs could on average be expanded by about 55% with the same inputs. However, because scores vary widely within each country, we cannot single out any country as having a markedly more efficient higher education system that could serve as a benchmark for the others.

At the second stage of our analysis, we linked the technical efficiency scores of individual HEIs with characteristics describing their location, faculty composition, year of foundation, funding sources, structure of employment and size. Unlike most previous studies, we used a bootstrapped truncated regression to obtain valid inference on the second-stage coefficients. In all specifications we include country- and year-specific controls to ensure that the estimated impact of the covariates is not driven by country or period characteristics. This allowed us to identify factors crucial in promoting efficiency gains in public higher education. Several conclusions can be drawn that may be important from a policy point of view.

In general, the size of the institution appears to be an important determinant of its efficiency: the higher the number of students or faculties, the higher the institution’s efficiency. The number of faculties can also be a crude proxy for university interdisciplinarity. Faculty composition also has to be taken into account when assessing efficiency, since universities with a medical/pharmacy faculty are characterised by higher efficiency. The gender structure of the academic staff can also matter for institutions’ performance, with the share of women being positively correlated with efficiency. Tradition (proxied by the year of foundation) is another statistically significant determinant of efficiency: younger universities seem to be less efficient.

As far as location is concerned, HEIs in our sample located in more prosperous regions (with higher GDP per capita) were not found to be more efficient. In fact, the coefficient on GDP per capita was not statistically significant in any of our specifications, and its sign was not stable.

Moreover, in the model where DEA was calculated on the basis of 3 inputs and 2 outputs, funding structure proved to be an important performance factor: an increase in the share of core funding in total revenues is associated with a drop in efficiency. This suggests that HEIs funded predominantly from public funds exhibit higher inefficiency. The finding may have policy implications and can serve as guidance, especially for those who manage individual HEIs, on ways to improve their performance.

We addressed the robustness of our findings in several ways: we changed the number of replications in the bootstrap procedure, employed alternative estimation procedures and changed the truncation point. None of these changes influences the results in a considerable way, and the main conclusions hold.

Our study shows that further effort is needed to extend the country coverage and time dimension of analyses of the higher education sector. We strongly call for a more transparent policy on microdata collection and dissemination at the European level. It would also be very interesting to compare patterns of efficiency in public and private academic units. Unfortunately, the unavailability of data for private universities (especially on funding) remains the main obstacle to doing so.

Notes

The search was performed on April 2, 2011 with the use of Version 0.70 of the DEA bibliographic database containing 3911 studies (deabib.org) and returned 65 hits for the “university” or “universities,” 44 for “schools” and 27 hits were obtained for the phrase “higher education.”

Simar and Wilson (2007) discuss in detail why the traditionally used censored (tobit) regression is not adequate here.

Stata codes are available from the authors upon request.

In contrast to aggregated data on higher education systems, there is no single integrated database providing comparable information on individual HEIs from different European countries. There have been some attempts to lay the foundations for regular data collection by national statistical institutes on individual higher education institutions in the EU-27 Member States. For more information about the Aquameth project, see Daraio et al. (2011). For its continuation under the EUMIDA project, see Bonaccorsi et al. (2010), and for the current state of the microdata collection, see the EUMIDA webpage: www.eumida.org.

In the case of the UK, data are not free of charge; see Table 6 in the Appendix.

Additionally, the so-called ‘third mission’ (links between HEIs and their industrial and business surroundings) could be considered. Because comparable cross-country data measuring the links between HEIs and the business sector are unavailable, we are not able to include the third mission in our study.

In 2009, the Web of Science covered over 10,000 of the highest impact journals worldwide, and over 110,000 conference proceedings. However, the coverage of the database is field sensitive (see the EUMIDA final report for a detailed discussion: www.eumida.org).

Note that studies co-authored by persons affiliated with the same institution are counted once.

For example, we excluded the University of London from our analysis because, as a confederal organisation, it is composed of several colleges. Its publication record could not be identified, because we cannot be sure whether its academic staff give the name of their college or “University of London” as their affiliation.

In the DEA approach, outliers are understood as the most efficient units with the largest impact on the frontier (Wilson 1993). Seven universities were detected as outliers and removed from the sample: Sapienza University of Rome, The University of Cambridge, The University of Oxford, The University of Bologna, The University of Vienna, University of Munich, and University of Naples Federico II.

Bias-corrected efficiency scores are used in the second stage as a robustness check in the so-called double bootstrap method; see section “Robustness checks”.

To analyse the dynamics of productivity, the so-called Malmquist index should be constructed; see, for example, Parteka and Wolszczak-Derlacz (2011).

As a robustness check, we also estimate a 2-input, 2-output model; see section “Robustness checks”.

Algorithm 2 from Simar and Wilson 2007.

References

Abbott, M., & Doucouliagos, C. (2003). The efficiency of Australian universities: a data envelopment analysis. Economics of Education Review, 22(1), 89–97.

Abramo, G., D’Angelo, C. A., & Pugini, F. (2008). The measurement of Italian universities’ research productivity by means of non parametric-bibliometric methodology. Scientometrics, 76(2), 225–244.

Agasisti, T., & Dal Bianco, A. (2006). Data envelopment analysis to the Italian university system: theoretical issues and political implications. International Journal of Business Performance Management, 8(4), 344–367.

Agasisti, T., & Johnes, G. (2009). Beyond frontiers: Comparing the efficiency of higher education decision-making units across countries. Education Economics, 17(1), 59–79.

Agasisti, T., & Salerno, C. (2007). Assessing the cost efficiency of Italian universities. Education Economics, 15(4), 455–471.

Aghion, P., Dewatripont, M., Hoxby, C., Mas-Colell, A., & Sapir, A. (2009). The governance and performance of research universities: Evidence from Europe and the US, NBER Working Paper No. 14851.

Alexander, W. R. J., Haug, A. A., & Jaforullah, M. (2010). A two-stage double-bootstrap data envelopment analysis of efficiency differences of New Zealand secondary schools. Journal of Productivity Analysis, 34(2), 99–110.

Avkiran, N. K. (2001). Investigating technical and scale efficiencies of Australian universities through data envelopment analysis. Socio-Economic Planning Sciences, 35(1), 57–80.

Bonaccorsi, A., & Daraio, C. (2005). Exploring size and agglomeration effects on public research productivity. Scientometrics, 63(1), 87–120.

Bonaccorsi, A., & Daraio, C. (2007). Universities and strategic knowledge creation: Specialization and performance in Europe. Cheltenham/Northampton, MA: Edward Elgar Publishing.

Bonaccorsi, A., Daraio, C., & Simar, L. (2006). Advanced indicators of productivity of universities: An application of robust nonparametric methods to Italian data. Scientometrics, 66(2), 389–410.

Bonaccorsi, A., Daraio, C., & Simar, L. (2007a). Efficiency and productivity in European universities: exploring trade-offs in the strategic profile, Chap. 5. In A. Bonaccorsi & C. Daraio (Eds.). Universities and strategic knowledge creation: Specialization and performance in Europe (pp. 144–206). Cheltenham/Northampton, MA: Edward Elgar Publishing.

Bonaccorsi, A., Daraio, C., Räty, T. & Simar, L. (2007b). Efficiency and university size: Discipline-wise evidence from European universities. MPRA Paper No. 10265, Munich Personal RePEc Archive.

Bonaccorsi, A., Brandt, T., De Filippo, D., Lepori, B., Molinari, F., Niederl, A., Schmoch, U., Schubert, T., & Slipersaeter, S. (2010). Final study report. Feasibility study for creating a European university data collection. The European Communities.

Carrington, R., Coelli, T., & Rao, P. D. S. (2005). The performance of Australian universities: Conceptual issues and preliminary results. Economic Papers—Economic Society of Australia, 24(2), 145–163.

Coelli, T. J., Rao, D. P., O’Donnell, C. J., & Battese, G. E. (2005). An introduction to efficiency and productivity analysis (2nd ed.). New York: Springer.

Cohn, E., Rhine, S. L. W., & Santos, M. C. (1989). Institutions of higher education as multi-product firms: economies of scale and scope. Review of Economics and Statistics, 71, 284–290.

Cooper, W. W., Seiford, L. M., & Tone, K. (2000). Data envelopment analysis. A comprehensive text with models, applications, references and DEA-Solver software. Boston/Dordrecht/London: Kluwer Academic Publishers.

Daraio, C., Bonaccorsi, A., Geuna, A., Lepori, B., Bach, L., Bogetoft, P., et al. (2011). The European university landscape: A micro characterization based on evidence from the aquameth project. Research Policy, 40(1), 148–164.

Fandel, G. (2007). On the performance of universities in North Rhine-Westphalia, Germany: Government’s redistribution of funds judged using DEA efficiency measures. European Journal of Operational Research, 176(1), 521–533.

Farrell, M. J. (1957). The measurement of productive efficiency. Journal of the Royal Statistical Society, Series A, 120(3), 253–290.

Felderer, B., & Obersteiner, M. (1999). Efficiency and economies of scale in academic knowledge production, Economics Series no. 63, Institute for Advanced Studies, Vienna.

Ferrari, G., & Laureti, T. (2005). Evaluating technical efficiency of human capital formation in the Italian university: Evidence from Florence. Statistical Methods and Applications, 14(2), 243–270.

Flegg, A. T., Allen, D. O., Field, K., & Thurlow, T. W. (2004). Measuring the efficiency of British universities: A multi-period data envelopment analysis. Education Economics, 12(3), 231–249.

Gattoufi, S., Becker, D., Chandel, J. K., & Sander, M. (2010). Deabib.org: A bibliographic database about data envelopment analysis. Version 0.70.

Glass, J. C., McKillop, D. G., & Hyndman, N. S. (1995). Efficiency in the provision of university teaching and research: an empirical analysis of UK universities. Journal of Applied Econometrics, 10, 61–72.

Izadi, H. (2002). Stochastic frontier estimation of a CES cost function: The case of higher education in Britain. Economics of Education Review, 21, 63–71.

Johnes, J. (2006a). Data envelopment analysis and its application to the measurement of efficiency in higher education. Economics of Education Review, 25(3), 273–288.

Johnes, J. (2006b). Measuring teaching efficiency in higher education: An application of data envelopment analysis to economics graduates from UK universities 1993. European Journal of Operational Research, 174(1), 443–456.

Johnes, J., & Johnes, G. (1995). Research funding and performance in UK university departments of economics: A frontier analysis. Economics of Education Review, 14(3), 301–314.

Kempkes, G., & Pohl, C. (2010). The efficiency of German universities: Some evidence from nonparametric and parametric methods. Applied Economics, 42, 2063–2079.

Kirjavainen, T., & Loikkanen, H. A. (1998). Efficiency differences of Finnish senior secondary schools: An application of DEA and tobit analysis. Economics of Education Review, 17, 377–394.

Leitner, K.-H., Prikoszovits, J., Schaffhauser-Linzatti, M., Stowasser, R., & Wagner, K. (2007). The impact of size and specialisation on universities’ department performance: A DEA analysis applied to Austrian universities. Higher Education, 53, 517–538.

Mancebón, M.-J., & Bandrés, E. (1999). Efficiency evaluation in secondary schools: The key role of model specification and of ex post analysis of results. Education Economics, 7(2), 131–152.

Monchuk, D., Chen, Z., & Bonaparte, Y. (2010). Explaining production inefficiency in China’s agriculture using data envelopment analysis and semi-parametric bootstrapping. China Economic Review, 21, 346–354.

OECD (2002). Frascati manual. Proposed standard practice for surveys on research and experimental development, OECD, Paris.

Oliveira, M. A., & Santos, C. (2005). Assessing school efficiency in Portugal using FDH and bootstrapping. Applied Economics, 37, 957–968.

Parteka, A. & Wolszczak-Derlacz, J. (2011). Dynamics of productivity in higher education. Cross-European evidence based on bootstrapped Malmquist indices, University of Milan Department of Economics Working Paper No. 2011-10.

Räty, T. (2002). Efficient facet based efficiency index: A variable returns to scale specification. Journal of Productivity Analysis, 17, 65–82.

Ray, S. C. (1991). Resource-use efficiency in public schools: A study of Connecticut data. Management Science, 37(12), 1620–1628.

Simar, L., & Wilson, P. W. (2000). A general methodology for bootstrapping in non-parametric frontier models. Journal of Applied Statistics, 27(6), 779–802.

Simar, L., & Wilson, P. W. (2007). Estimation and inference in two-stage, semi-parametric models of production processes. Journal of Econometrics, 136, 31–64.

Tommaso, A., & Bianco, A. D. (2006). Data envelopment analysis to the Italian university system: theoretical issues and policy implications. International Journal of Business Performance Management, 8(4), 344–367.

UNESCO-UIS/OECD/Eurostat data collection manual. (2004). Data collection on education systems. OECD, Paris.

Warning, S. (2004). Performance differences in German higher education: Empirical analysis of strategic groups. Review of Industrial Organization, 24, 393–408.

Wilson, P. W. (1993). Detecting outliers in deterministic nonparametric frontier models with multiple outputs. Journal of Business and Economic Statistics, 11(3), 319–323.

Wilson, P. W. (2008). FEAR: A software package for frontier efficiency analysis with R. Socio-Economic Planning Sciences, 42(4), 247–254.

Wolszczak-Derlacz, J., & Parteka, A. (2010). Scientific productivity of public higher education institutions in Poland: A comparative bibliometric analysis. Warsaw: Ernst & Young.

Worthington, A. C. (2001). An empirical survey of frontier efficiency measurement techniques in education. Education Economics, 9(3), 245–268.

Worthington, A. C., & Lee, B. L. (2008). Efficiency, technology and productivity change in Australian universities, 1998–2003. Economics of Education Review, 27(3), 285–298.

Acknowledgments

The research was performed under the Polish Ministry of Science and Higher Education’s project “Efficiency of Polish public universities” (budgetary funds for science 2010–2012). The authors gratefully acknowledge the support of Ernst and Young’s “Better Government Programme” research grant, which enabled part of the data collection. The paper has benefited from comments by participants of the Max Weber Fellows Lustrum Conference, European University Institute, June 2011, and the Warsaw International Economic Meeting, University of Warsaw, July 2011. It also benefited from comments by seminar participants at the Department of Economics, Università Politecnica delle Marche, Ancona, June 2011. Daniel Monchuk graciously provided the Stata codes used to program the second-step analysis. Finally, the authors acknowledge comments and suggestions from an anonymous referee.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


Appendix


See Tables 4, 5, 6, 7, 8, 9, 10, 11 and 12.


About this article

Cite this article

Wolszczak-Derlacz, J., Parteka, A. Efficiency of European public higher education institutions: a two-stage multicountry approach. Scientometrics 89, 887–917 (2011). https://doi.org/10.1007/s11192-011-0484-9
