Abstract

Purpose

The Quality of Life, Enjoyment, and Satisfaction Questionnaire-Short Form (Q-LES-Q-SF) is a recovery-oriented, self-report measure with an uncertain underlying factor structure, variously reported in the literature to consist of either one or two domains. We examined the possible factor structures of the English version in an enrolled mental health population who were not necessarily actively engaged in care.

Methods

As part of an implementation trial in the U.S. Department of Veterans Affairs mental health clinics, we administered the Q-LES-Q-SF and Veterans RAND 12-Item Health Survey (VR-12) over the phone to 576 patients across nine medical centers. We used a split-sample approach, conducting an exploratory factor analysis (EFA) on one half and a multi-trait analysis (MTA) on the other. Construct validity was assessed by comparison with the VR-12.

Results

Based on 568 surveys, and after excluding the work satisfaction item because of the low employment rate, the EFA indicated a unidimensional structure. The MTA supported one strong psychosocial factor comprising ten items (α = 0.87); only three items loaded on a weaker physical factor (α = 0.63). Item discriminant validity was strong at 92.3%. Correlations with the VR-12 were consistent with the existence of two factors.

Conclusions

The English version of the Q-LES-Q-SF is a valid, reliable self-report instrument for assessing quality of life. Its factor structure is best described as one strong psychosocial factor. Differences in underlying factor structure across studies may be due to limitations of EFA with Likert-scale data and to differences in language, culture, locus of participant recruitment, disease burden, and mode of administration.


Introduction

Clinical, research, and administrative interest in quality of life (QOL) has increased over the past three decades. QOL is generally defined as an individual’s subjective, holistic view of life circumstances across physical, psychological, and social domains [1,2,3,4]. It is a valuable predictor of patients’ overall health status and of their perceptions of health services, and it can inform how those services might be improved [3, 5, 6]. Accordingly, patient-reported outcome measures that emphasize patients’ subjective perspectives, such as QOL, are increasingly used in psychiatry [6], and they are especially relevant given the growing attention to self-defined recovery as the goal of healthcare [7]. Many QOL instruments have been developed and examined for reliability, validity, feasibility, and sensitivity to change [8].

One such self-report tool is the Quality of Life Enjoyment and Satisfaction Questionnaire (Q-LES-Q), designed to measure a patient’s satisfaction and enjoyment in different areas of daily functioning. The original scale consists of 93 questions, which were grouped into eight subscales on the basis of expert clinical opinion: physical health, subjective feelings, leisure time activities, social relationships, work, school/coursework, household duties, and general activities [9]. The abbreviated version (Q-LES-Q-SF) consists of 14 items derived from the long form’s general activities subscale, plus two questions about medication and overall life satisfaction. Both versions are among the most frequently used QOL measures in psychopharmacology and clinical trials [10], and have been translated into several languages.

A number of studies have assessed the reliability, validity, and factor structure of the Q-LES-Q and Q-LES-Q-SF to date (see Table 1). Notably, there is little consensus regarding the factor structure for the Q-LES-Q-SF [10], with research indicating one factor [2, 9], two factors [5], or an equal chance of one or two factors [11]. Psychometric studies have involved work with versions in Chinese, French, and other languages and cultures, which may explain the lack of consensus in the results [2, 3]. A variety of methods (exploratory factor analysis using Pearson’s or polychoric correlations, principal components analysis, confirmatory factor analysis, structural equation modeling) may have contributed to diverse findings [2, 5, 9, 11]. Moreover, investigations of the psychometric properties of the Q-LES-Q-SF in English have been sparse, and none have addressed its factor structure.

In addition, samples used for the majority of Q-LES-Q-SF factor analyses have been recruited during an episode of inpatient or outpatient care or when patients arrived at medical facilities for treatment. It is unclear whether the factor structure remains stable for populations who complete the survey removed from the point of care, that is, outside of a clinic and not necessarily actively seeking care. Testing the factor structure in patients outside the point of care would thus involve a more diverse sample of patients. Measuring QOL within this population is important for population-based healthcare systems, such as national health services and accountable care organizations (ACOs), which must proactively manage patient care, as well as for payers and organizations monitoring healthcare delivery [11, 12]. Furthermore, there are no psychometric data on telephone administration of this survey, a mode that allows interactive self-report outside the point of care. Given the variation in Q-LES-Q-SF results to date and the limitations in the populations studied, the purpose of the current study is to help explain prior findings of both uni- and bi-dimensional latent structures by exploring possible Q-LES-Q-SF factor structures in individuals enrolled in treatment at general mental health clinics across nine United States Department of Veterans Affairs medical centers (VAMCs) but surveyed outside the point of care. These analyses aim to contribute to understanding the dimensionality of the Q-LES-Q-SF in enrolled populations with varied psychiatric diagnoses and physical comorbidities, and to the sparse literature on the reliability of the English-language version.

Methods

This study was approved by the VA Central Institutional Review Board. We obtained a waiver of written informed consent and obtained verbal informed consent from all individual participants included in the study. Data were collected at baseline for a controlled implementation trial that focused on evidence-based team care and its downstream impact on healthcare outcomes and satisfaction [13].

Sample and data collection

The study population consisted of Veterans who had at least two behavioral health visits in the prior year (with at least one visit within the past three months) to a mental health clinic at one of the nine VAMCs, excluding those who received a diagnosis of dementia during this interval (n = 5596 in the sampling frame). From these, up to 500 individuals per VAMC were randomly selected for telephone interviews, with a target of up to 85 completed interviews per site and an overall goal of 765 participants at baseline (9 sites × 85), based on power calculations for the original trial. Women were oversampled for gender balance. Potential participants were mailed study information, together with instructions for opting out of calls, 2–6 weeks before calls began. Non-clinician phone interviewers received extensive training, including assigned readings, role-playing, supervised full-length practice interviews, frequent peer conferencing, and access to a study clinician, to standardize administration. Interviewers administered the survey battery to Veterans over the telephone in English. Potential participants who could not be reached after three calls were excluded, resulting in a total sample of 576 completed interviews.

Instruments

The Q-LES-Q-SF’s 16 self-report items evaluate overall enjoyment and satisfaction with physical health, mood, work, household and leisure activities, social and family relationships, daily functioning, sexual desire/interest/performance, economic status, living or housing situation, vision, ability to get around physically, overall well-being, medications, and contentment [13]. Items are rated on a five-point Likert scale (“very poor,” “poor,” “fair,” “good,” “very good”), with higher scores indicating greater enjoyment and satisfaction with life. The first 14 items are summed to yield a total score; the last two items, on medication and overall contentment, were added to the short form for clinical reasons and are scored separately [10]. The raw total ranges from 14 to 70 and is expressed as a percentage of the maximum possible score for the items completed (0–100). Scores of 70–100 represent the normal range observed in community samples [6, 9].
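To illustrate the scoring rule above, the sketch below converts responses to items 1–14 into a 0–100 score. It assumes the usual percent-of-maximum conversion, (raw − minimum possible) / (maximum possible − minimum possible) × 100, computed over the items actually answered. The helper name and the use of `None` for a skipped item are hypothetical choices for illustration, not part of the published scoring instructions.

```python
from typing import Optional, Sequence

N_SUMMED_ITEMS = 14  # items 1-14 are summed; items 15-16 are scored separately


def qlesq_sf_percent(responses: Sequence[Optional[int]]) -> float:
    """Hypothetical helper: percent-of-maximum score for Q-LES-Q-SF items 1-14.

    Each response is 1 ("very poor") to 5 ("very good"); None marks a skipped item.
    Assumes the conversion (raw - min) / (max - min) * 100 over answered items.
    """
    if len(responses) != N_SUMMED_ITEMS:
        raise ValueError("expected one entry for each of items 1-14")
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no items answered")
    if any(not 1 <= r <= 5 for r in answered):
        raise ValueError("responses must be on the 1-5 scale")
    raw = sum(answered)
    min_possible = 1 * len(answered)  # every answered item rated "very poor"
    max_possible = 5 * len(answered)  # every answered item rated "very good"
    return (raw - min_possible) / (max_possible - min_possible) * 100


# Example: a respondent answering "fair" (3) to every item except work (item 3).
example = [3, 3, None] + [3] * 11
print(qlesq_sf_percent(example))  # 50.0
```

Because the minimum and maximum are computed over answered items only, a skipped item (such as work) does not pull the percentage down.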

To assess the construct validity of the Q-LES-Q-SF, we compared it with a similar QOL assessment with a clearly established factor structure. The Veterans RAND 12-Item Health Survey (VR-12) was adapted for Veteran populations from the SF-12, an abbreviated version of the SF-36 [14,15,16]. This 12-item health status measure yields two summary scores: the Mental Component Score (MCS) and the Physical Component Score (PCS). The MCS and PCS assess overall mental and physical health status, respectively, over the past month. Each score ranges from 1 to 100, with higher scores indicating more favorable health status.

We also asked background questions regarding current employment status and race/ethnicity. As part of the interview protocol, we administered a harm risk screener consisting of the Patient Health Questionnaire-9 (PHQ-9) and, if appropriate, the P4 Screener, both used for suicide risk stratification [17]. Additional demographic, diagnostic, and service utilization data were obtained from the VA Corporate Data Warehouse (CDW).

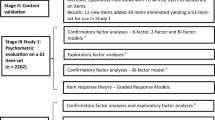

Psychometric analyses

We tested the reliability and validity of the Q-LES-Q-SF items using a random split-sample approach (n = 288 and n = 288), a common psychometric technique [18, 19]: an exploratory factor analysis (EFA) on one half, followed by a multi-trait scaling analysis (MTA) on the other half to validate the EFA, as we have done in prior work [20]. We began with EFA because of conflicting findings regarding the dimensional structure of the instrument (i.e., both single- and dual-factor solutions) [21, 22]. We used oblique promax rotation (i.e., allowing the factors to correlate). The EFA yields communalities, which represent the proportion of each item’s variance shared with the other items. Communalities are considered low if r < 0.4, moderate if between 0.4 and 0.79 inclusive, and high if r ≥ 0.8 [23].
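A minimal sketch of the random split and the communality bands described above, assuming respondents are identified by simple integer IDs. The seed and function names are hypothetical; this is not the study's SAS code. The text reports communalities as r, so the `h2` argument simply takes the value listed in the EFA output.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_half(ids: Sequence[T], seed: int = 12345) -> Tuple[List[T], List[T]]:
    """Hypothetical helper: randomly partition respondents into an EFA half and an MTA half."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)  # hypothetical seed, fixed so the split is reproducible
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def communality_band(h2: float) -> str:
    """Label a communality with the low / moderate / high cut-offs given in the text."""
    if h2 < 0.40:
        return "low"
    if h2 < 0.80:
        return "moderate"
    return "high"


efa_half, mta_half = split_half(range(576))
print(len(efa_half), len(mta_half))  # 288 288
print(communality_band(0.91))  # high
```

The two halves are disjoint by construction, so items can be reassigned during MTA without reusing the observations that generated the EFA solution.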

Two rules were applied to decide the number of factors to retain and rotate: the Kaiser–Guttman rule (i.e., retaining factors with eigenvalues > 1) and Cattell’s scree plot method [20]. Although these criteria used alone are not recognized as best practice for deciding the number of factors to retain [24,25,26], they have nonetheless been widely applied, and their use here allowed us to explore both single- and dual-factor solutions for comparison with the literature. To supplement these approaches, we also applied two additional methods recognized as best practice [24] to the full sample (n = 568): Velicer’s minimal average partial (MAP) test and Horn’s parallel analysis (PA) using simulation [27].
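The Kaiser–Guttman count and Horn’s parallel analysis can be sketched in pure Python as follows. This is an illustrative sketch, not the authors’ SAS procedure, and it rests on several assumptions: eigenvalues come from the unreduced Pearson correlation matrix (the component-based form of PA), random comparison data are standard normal, the retention threshold is the 95th percentile of simulated eigenvalues, and eigenvalues are computed with a small cyclic Jacobi routine. Velicer’s MAP test is not sketched here. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def correlation_matrix(data: Sequence[Sequence[float]]) -> Matrix:
    """Pearson correlation matrix of the item columns (rows = respondents)."""
    n_items = len(data[0])
    devs = []
    for j in range(n_items):
        col = [row[j] for row in data]
        mean = sum(col) / len(col)
        devs.append([x - mean for x in col])
    norms = [math.sqrt(sum(d * d for d in dev)) for dev in devs]
    corr = [[1.0] * n_items for _ in range(n_items)]
    for i in range(n_items):
        for j in range(i + 1, n_items):
            r = sum(a * b for a, b in zip(devs[i], devs[j])) / (norms[i] * norms[j])
            corr[i][j] = corr[j][i] = r
    return corr


def eigenvalues_symmetric(a: Matrix, tol: float = 1e-12, max_sweeps: int = 100) -> List[float]:
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations, largest first."""
    n = len(a)
    m = [row[:] for row in a]
    for _ in range(max_sweeps):
        off = sum(m[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(m[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (m[q][q] - m[p][p]) / (2.0 * m[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # rotate columns p and q
                    mkp, mkq = m[k][p], m[k][q]
                    m[k][p], m[k][q] = c * mkp - s * mkq, s * mkp + c * mkq
                for k in range(n):  # rotate rows p and q
                    mpk, mqk = m[p][k], m[q][k]
                    m[p][k], m[q][k] = c * mpk - s * mqk, s * mpk + c * mqk
    return sorted((m[i][i] for i in range(n)), reverse=True)


def kaiser_guttman(eigenvalues: Sequence[float]) -> int:
    """Kaiser-Guttman rule: number of eigenvalues greater than 1."""
    return sum(1 for ev in eigenvalues if ev > 1.0)


def parallel_analysis(data: Sequence[Sequence[float]], n_iter: int = 100,
                      percentile: float = 95.0, seed: int = 1) -> Tuple[int, List[float], List[float]]:
    """Horn's PA (assumed 95th-percentile variant): keep leading factors that beat random data."""
    rng = random.Random(seed)
    n_obs, n_items = len(data), len(data[0])
    observed = eigenvalues_symmetric(correlation_matrix(data))
    simulated: List[List[float]] = [[] for _ in range(n_items)]
    for _ in range(n_iter):
        noise = [[rng.gauss(0.0, 1.0) for _ in range(n_items)] for _ in range(n_obs)]
        for i, ev in enumerate(eigenvalues_symmetric(correlation_matrix(noise))):
            simulated[i].append(ev)
    idx = min(n_iter - 1, round(percentile / 100.0 * (n_iter - 1)))
    thresholds = [sorted(evs)[idx] for evs in simulated]
    retained = 0
    for obs, thr in zip(observed, thresholds):
        if obs <= thr:
            break  # stop at the first factor that does not beat random data
        retained += 1
    return retained, observed, thresholds
```

The contrast between the two rules is the point of the sketch: Kaiser–Guttman compares every eigenvalue with a fixed cut-off of 1, whereas PA compares each eigenvalue with what same-sized random data would produce, which tends to guard against retaining borderline factors.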

To explore the EFA-derived structure in view of existing two-factor solutions [2, 6], we ran an MTA on the other half of the sample [28, 29]. MTA, based on Campbell and Fiske’s [30] multi-trait, multi-method approach, assesses the pattern of correlations between all questionnaire items and the hypothesized scale scores computed from those items [31, 32]. Validity of the theorized scales is determined through the pattern of convergence and discrimination among the correlations. MTA begins with the assignment of each item to a hypothesized scale; items with EFA loadings ≥ 0.40 on two different factors were provisionally assigned to the factor with the higher loading. Item convergent validity was considered adequate if the correlation between the item and its hypothesized scale was at least 0.30. Item discriminant validity (i.e., scaling success) was judged to be supported if an item correlated more highly (probable success) or significantly more highly (definite success) with its hypothesized scale than with any other scale. Conversely, item discriminant validity was not supported (i.e., scaling failure) if an item correlated more highly (probable failure) or significantly more highly (definite failure) with another scale than with its hypothesized scale. Significance testing was two-tailed, with a 0.05 threshold for Type I error [33].
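The item-level scaling rules above can be sketched as below. The sketch assumes that scale scores are simple item sums, that each item’s correlation with its own scale is corrected for overlap (the item is left out of its own scale score), and that "significantly higher" is approximated by a difference of more than two standard errors, with the standard error taken as 1/√n. That approximation is an assumption standing in for the study’s significance test, and all function names and the simulated data are hypothetical.

```python
import math
import random
from typing import Dict, List, Optional, Sequence, Tuple

Data = Sequence[Sequence[float]]
Record = Tuple[int, str, str, float, float, bool, str]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def scale_scores(data: Data, items: Sequence[int], exclude: Optional[int] = None) -> List[float]:
    """Summed scale score per respondent, optionally leaving one item out."""
    return [sum(row[i] for i in items if i != exclude) for row in data]


def item_scaling(data: Data, scales: Dict[str, Sequence[int]]) -> List[Record]:
    """Hypothetical helper: classify every item-versus-other-scale comparison.

    Each scale needs >= 2 items. Returns
    (item, own scale, other scale, r_own, r_other, convergent_ok, verdict).
    """
    two_se = 2.0 / math.sqrt(len(data))  # assumed ~2-standard-error margin for "definite"
    results: List[Record] = []
    for own, own_items in scales.items():
        for item in own_items:
            x = [row[item] for row in data]
            # Corrected for overlap: the item is removed from its own scale score.
            r_own = pearson(x, scale_scores(data, own_items, exclude=item))
            for other, other_items in scales.items():
                if other == own:
                    continue
                r_other = pearson(x, scale_scores(data, other_items))
                diff = r_own - r_other
                if diff > two_se:
                    verdict = "definite success"
                elif diff > 0:
                    verdict = "probable success"
                elif diff >= -two_se:
                    verdict = "probable failure"
                else:
                    verdict = "definite failure"
                results.append((item, own, other, r_own, r_other, r_own >= 0.30, verdict))
    return results


# Simulated example: items 0-2 reflect one trait, items 3-5 another.
rng = random.Random(3)
sim = []
for _ in range(300):
    f1, f2 = rng.gauss(0, 1), rng.gauss(0, 1)
    sim.append([f1 + 0.5 * rng.gauss(0, 1) for _ in range(3)]
               + [f2 + 0.5 * rng.gauss(0, 1) for _ in range(3)])
for rec in item_scaling(sim, {"A": [0, 1, 2], "B": [3, 4, 5]}):
    print(rec[0], rec[1], "vs", rec[2], rec[-1])
```

Moving an item to the wrong scale in this simulation (for example, placing item 3 in scale A) turns its comparison into a definite failure. That is the kind of signal that prompts the reassignment cycle described next.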

Based on the MTA results for the initial hypothesized scale structure and on conceptual considerations, items were reassigned to other factors or dropped from the analysis to improve convergent validity (consistency within subscales) and discriminant validity (distinctions between factors). We then reran the MTA with the revised item-to-scale assignments and repeated the cycle until no further improvements in validity were achieved. Cronbach’s alpha coefficients were computed as part of MTA and monitored throughout item reassignment to ensure internal consistency reliability adequate for group comparisons (α ≥ 0.70) [20]. Floor and ceiling effects were considered acceptable if fewer than 15% of respondents selected the lowest or highest response option, respectively [34]. The internal consistency of the final overall Q-LES-Q-SF and the resultant subscales was assessed using Cronbach’s alpha and classified as satisfactory at 0.70–0.79, good at 0.80–0.89, and excellent at ≥ 0.90 [16].
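The reliability checks above can be sketched with the standard Cronbach’s alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of the summed scale). The sketch also includes alpha-if-item-deleted, the alpha bands, and the 15% floor/ceiling rule. The function names are hypothetical, and the data layout (rows = respondents, columns = items) is an assumption.

```python
from statistics import variance
from typing import Dict, Sequence, Tuple

Data = Sequence[Sequence[float]]


def cronbach_alpha(data: Data, items: Sequence[int]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of the summed scale)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[i] for row in data]) for i in items]
    total_var = variance([sum(row[i] for i in items) for row in data])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(data: Data, items: Sequence[int]) -> Dict[int, float]:
    """Hypothetical helper: alpha recomputed with each item left out in turn."""
    return {i: cronbach_alpha(data, [j for j in items if j != i]) for i in items}


def alpha_label(alpha: float) -> str:
    """Bands used in the text: satisfactory 0.70-0.79, good 0.80-0.89, excellent >= 0.90."""
    if alpha >= 0.90:
        return "excellent"
    if alpha >= 0.80:
        return "good"
    if alpha >= 0.70:
        return "satisfactory"
    return "below satisfactory"


def floor_ceiling(scores: Sequence[float], lowest: float, highest: float) -> Tuple[float, float, bool]:
    """Percent at the lowest and highest options; acceptable if both are under 15%."""
    n = len(scores)
    floor_pct = 100 * sum(1 for s in scores if s == lowest) / n
    ceiling_pct = 100 * sum(1 for s in scores if s == highest) / n
    return floor_pct, ceiling_pct, floor_pct < 15 and ceiling_pct < 15


print(alpha_label(0.87))  # good
```

Because sample variances appear in both the numerator and the denominator, using population variances instead would give the same alpha.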

To determine whether scores clustered at the medical center level beyond the slight variation expected from regional differences [17], we calculated intraclass correlation coefficients (ICCs). ICC(1) estimates the reliability of a single respondent’s score as an estimate of the relevant group mean; values between 0.05 and 0.30 are typical indicators of clustering [34].
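ICC(1) can be estimated from a one-way ANOVA of scores grouped by medical center. The sketch assumes the common estimator ICC(1) = (MSB − MSW) / (MSB + (k₀ − 1) × MSW), where MSB and MSW are the between- and within-center mean squares and k₀ is the adjusted average group size for unequal group sizes. The helper name and site labels are hypothetical.

```python
from typing import Dict, Sequence


def icc1(groups: Dict[str, Sequence[float]]) -> float:
    """Hypothetical helper: ICC(1) from a one-way ANOVA of scores grouped by site.

    ICC(1) = (MSB - MSW) / (MSB + (k0 - 1) * MSW), where k0 is the
    adjusted average group size for unequal group sizes.
    """
    sizes = [len(v) for v in groups.values()]
    n_groups, n_total = len(sizes), sum(sizes)
    if n_groups < 2 or n_total <= n_groups:
        raise ValueError("need at least two groups and more respondents than groups")
    means = [sum(v) / len(v) for v in groups.values()]
    grand = sum(sum(v) for v in groups.values()) / n_total
    ss_between = sum(n * (m - grand) ** 2 for n, m in zip(sizes, means))
    ss_within = sum(sum((x - m) ** 2 for x in v) for v, m in zip(groups.values(), means))
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    k0 = (n_total - sum(n * n for n in sizes) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (k0 - 1) * ms_within)


# Perfect clustering: all variation lies between sites.
print(icc1({"site_a": [1.0, 1.0], "site_b": [3.0, 3.0]}))  # 1.0
```

Values near zero, or slightly negative when site means are more similar than chance would predict, indicate that site membership explains little of the variance in scores.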

Construct validity of the overall instrument and its subscales was assessed with Pearson correlation coefficients between the Q-LES-Q-SF total and subscale scores and the VR-12 MCS and PCS. All statistical analyses were conducted using SAS software, Version 9.4 [35].

Results

Demographics

Eight of the 576 Veterans surveyed did not answer any Q-LES-Q-SF items and were removed. Of the remaining 568 Veterans, 20.4% were female, and the mean age was 54.2 ± 13.7 years (Table 2). Overall, 18.2% worked full-time, 4.6% worked part-time, 6.7% were unemployed, 35.1% were retired, and 30.0% were disabled. The most common mental health diagnoses were post-traumatic stress disorder (PTSD) (48.2%) and major depressive disorder (46.1%). The most common medical diagnoses were pain (49.5%) and hypertension (35.1%). The average score on the full Q-LES-Q-SF scale was 53.28 ± 19.4 (Table 3). Minimal floor and ceiling effects were observed (< 15%).

We excluded item 3 of the Q-LES-Q-SF (work) from analyses because of the very low employment rate (71.8% unemployed, disabled, or retired; Table 2). Respondents with more than 50% of items missing were removed from each half sample, leaving 234 respondents for the EFA and 283 for the MTA.
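The two exclusion rules above can be sketched as a single filtering step. The sketch assumes that each row holds responses to items 1–14 in order, with `None` for a skipped item, and that the 50% rule is applied after item 3 has been dropped. The text does not state the order of these steps, and the helper name is hypothetical.

```python
from typing import List, Optional, Sequence

WORK_ITEM_INDEX = 2  # item 3 (work) in 0-based indexing


def prepare_half_sample(rows: Sequence[Sequence[Optional[int]]],
                        max_missing: float = 0.5) -> List[List[Optional[int]]]:
    """Hypothetical helper: drop the work item, then drop sparse respondents.

    Assumption: the missing-data rule is applied to the 13 items left after
    removing item 3; respondents missing more than max_missing are excluded.
    """
    kept = []
    for row in rows:
        items = [x for i, x in enumerate(row) if i != WORK_ITEM_INDEX]
        n_missing = sum(1 for x in items if x is None)
        if n_missing / len(items) <= max_missing:  # "greater than 50% missing" is excluded
            kept.append(items)
    return kept
```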

Exploratory factor analysis

The EFA using oblique promax rotation supported either a one- or a two-factor solution (Table 3). In the dual-factor solution, which we pursued further for comparison with prior literature, the eigenvalue was strong for Factor 1 (10.56) and borderline for Factor 2 (1.07). The scree plot mirrored this finding. Ten items loaded on Factor 1 and two items loaded on Factor 2. Although item 9 (sex) loaded more strongly on Factor 2 than on Factor 1, it did not meet the 0.40 cut-off for either factor (Table 3). Communalities were low for items 6, 9, 10, 11, and 13 (r < 0.4) and markedly high for item 12 (ability to get around physically: r = 0.91). However, the minimal average partial test and Horn’s parallel analysis both suggested a single factor.

Multi-trait analysis

In view of the borderline EFA results and prior literature [2, 6, 16, 36], we used the MTA to explore the goodness of fit of the two-factor model. The internal consistency of the entire Q-LES-Q-SF was substantial and consistent with prior work (α = 0.85). Initially, on conceptual grounds and to retain as many of the available items as possible, we grouped item 9 (sexual desire) with items 12 (ability to get around physically) and 13 (vision) to form a “physical” subscale, despite item 9’s lack of a substantial loading in the EFA. Item 1 (physical health) was also moved to the “physical” subscale on the basis of face validity. The other subscale, labeled “psychosocial,” comprised the remaining items that loaded on Factor 1. Internal consistency of the psychosocial and physical subscales was strong and moderate, respectively (α = 0.85 and 0.68). Eliminating any single item did not substantially increase alpha for either scale. Despite these promising results, the initial MTA yielded low significant item discriminant validity (46%), largely because of item 9 in the proposed physical subscale. We therefore reassigned item 9 to the psychosocial factor.

Our final MTA (Table 4) placed item 9 (sex) in the psychosocial subscale and items 1 (physical health), 12 (ability to get around physically), and 13 (vision) in the physical subscale. This eliminated item-level discriminant validity failures for all items and raised significant item discriminant validity from 46% to 92.3%. Internal consistency was strong for the psychosocial subscale (α = 0.87) and just below the satisfactory threshold for the physical subscale (α = 0.63). Alpha-if-item-deleted statistics from the second MTA indicated that eliminating any item would not substantially improve internal consistency. The inter-scale correlation also improved, that is, it was considerably lower than in the initial MTA (r = 0.50 versus r = 0.60).

Across all nine sites, mean scores (± SD) were 51.96 ± 20.18 for the psychosocial factor and 58.45 ± 22.88 for the physical factor. Intraclass correlations, ICC(1), did not indicate clustering for the full scale (0.02), the two subscales (psychosocial = 0.01; physical = 0.03), or any individual item (range −0.003 to 0.047).

Construct validity

We evaluated construct validity by correlating VR-12 scores with Q-LES-Q-SF scores in the full sample of survey respondents (n = 568). The mean VR-12 PCS score was 35.33 ± 13.08 and the mean MCS score was 36.69 ± 14.72. The full Q-LES-Q-SF correlated moderately with the MCS (r = 0.65; p < 0.001) and more weakly with the PCS (r = 0.38; p < 0.001). Notably, the PCS correlated moderately with the proposed physical subscale (r = 0.54; p < 0.001) and more weakly with the psychosocial subscale (r = 0.28; p < 0.001). Conversely, the MCS correlated moderately to strongly with the psychosocial subscale (r = 0.68; p < 0.001) and more weakly with the physical subscale (r = 0.35; p < 0.001).

Discussion

We investigated the factor structure of the Q-LES-Q-SF based on data collected during telephone interviews with 568 Veterans enrolled in general mental health services but not at the point of care at the time of survey completion. Notably, our sample had substantial physical and mental health burden (Table 2). Using EFA and MTA in a split-half approach to consider both uni- and bi-dimensional solutions, we identified single- and dual-factor solutions, the latter with a strong psychosocial factor (k = 10) and a possible weaker physical health factor (k = 3).

Our bi-dimensional results are best interpreted in light of the two prior studies of Q-LES-Q-SF factor structure, one in Chinese [6] and the other in French [2, 16]. The former, which recruited from primary care, identified both a psychosocial and a physical factor, while the latter, which recruited from a substance abuse facility, identified a single overall factor and a possible second factor. Ethnic and cultural variability in the expression of general mental illnesses, particularly major depressive disorder, is well documented; for example, compared with depressed Western populations, Chinese patients often endorse physical rather than psychological symptoms [37]. Such tendencies may partly explain the difference in the Q-LES-Q-SF factor structure identified by the Chinese and French studies. However, the degree to which differences in language, culture, locus of sample recruitment, or a combination of these factors account for the differences between those previous studies and the present one cannot be determined with certainty.

Our results provide insight into this divergence of findings. Similar to both prior studies, we found a strong primary factor in our sample representing a psychosocial subscale. The physical health factor was much weaker and, in our estimation, equivocal. Recalling that the original Q-LES-Q-SF was constructed based on expert opinion without formal psychometrics, it is not surprising to observe some instability in factor structure across languages and populations. However, from our and prior [2, 6] analyses, it is clear that a strong psychosocial factor can be distinguished. In fact, relying solely on the EFA results, one could make a strong case for a unidimensional factor—Factor 1 in this study—which also includes item 1 (physical health). Indeed, the MAP and Horn’s Parallel Analysis tests support the existence of a single factor.

In contrast, the presence of a separate, distinctly physical health factor is uncertain. The Q-LES-Q was initially reported in the psychopharmacologic literature [9] and has been used extensively in medication treatment trials. The three specific physical health items included by experts (physical ability, vision, sex) correspond to side effects frequently encountered with the medications typically investigated at that time (tricyclic antidepressants and first-generation antipsychotics), which may explain their inclusion. Changes in medication use and side-effect profiles since the introduction of the Q-LES-Q, combined with differences in population characteristics across studies, may diminish the usefulness of these three items for distinguishing differences in patient experience.

Nonetheless, the pattern of convergent and discriminant validity of the proposed Q-LES-Q-SF factors with the VR-12 suggests the possibility of a two-factor structure. The proposed Q-LES-Q-SF psychosocial factor correlates more strongly with the MCS than with the PCS. Conversely, the proposed Q-LES-Q-SF physical factor correlates more strongly with the PCS than with the MCS.

The relative strength of the full Q-LES-Q-SF’s correlations with the MCS and PCS supports a unidimensional interpretation that emphasizes the psychosocial content of the measure. The Q-LES-Q-SF overall score correlated strongly with the VR-12 MCS and less so with the PCS. Consistent with our findings, Bourion-Bédès and colleagues [2] also found a strong correlation between the Q-LES-Q-SF total score and the MCS of the SF-12 (from which the VR-12 derives), and a weaker correlation with the SF-12 PCS. In Lee and colleagues’ study [6], the psychosocial factor correlated more strongly with the MCS and the physical factor with the PCS, as in the Western samples of Bourion-Bédès and colleagues and the present study. The entire Q-LES-Q-SF in Lee and colleagues’ study showed modest, nearly identical correlations with both the SF-12 PCS (r = 0.35) and MCS (r = 0.38).

All three studies, across three distinct populations, cultures, and languages, converge around a strong psychosocial factor. In the present study and that of Lee and colleagues [6], this is complemented by a weaker, separate physical factor, while Bourion-Bédès and colleagues’ study [2, 16] resulted in a single overall factor that is heavily psychosocially weighted.

Thus, researchers and program evaluators can confidently use the Q-LES-Q-SF as a single psychosocial factor. The two-factor solution warrants little confidence, given the equivocal support for a distinct physical factor in the measure as it currently exists.

Limitations

Our study has several limitations. We used Pearson’s rather than polychoric correlations to allow closer comparison with the bi-dimensional results of Lee and colleagues [6]. Future research could use alternative methods based on polychoric correlations, which are suited to ordinal scales. We also note that the Kaiser criterion and scree plot method, used here to mirror the procedures of Lee and colleagues [6] and many others, may lead to overdimensionalization (retaining too many factors), especially with Likert scales [24,25,26]. To at least partially mitigate this possibility, we also applied Velicer’s minimal average partial (MAP) test and Horn’s parallel analysis (PA), and we recommend that future research similarly use multiple procedures.

We also had a high rate of missing data for item 3 (work), likely because of high rates of retirement, disability, and unemployment in our sample. However, this pattern has been reported in other studies of mental health and substance use populations [2, 4, 10, 16, 38]. We followed the example of Bishop and colleagues [5], who excluded work-related questions because morbidity rates in their population were too high.

It is also possible that mode of administration affected results. Patients may be less willing to disclose sensitive information in real-time conversation than in mail-out surveys. However, both Lee and colleagues [6] and Bourion-Bédès and colleagues [2, 12] administered surveys within clinics, which may feel even less anonymous than phone interviews. Additionally, paper administration for clinical purposes includes instructions to circle the specific facets of an item that cause dissatisfaction, e.g., item 9: “sexual desire, interest, and/or performance” (Endicott, personal communication). When the survey is administered aloud, all three elements of sexual experience must be mentally combined in some fashion and judged together. Similar ambiguity in other items that list multiple options may explain the low communalities of items 11 (“living or housing situation?”) and 13 (“vision, in terms of work or hobbies?”) and the unusually high communality of item 12 (“able to get around without feeling dizzy or unsteady or falling?”). Although the facets circled on paper do not affect the total Q-LES-Q-SF score, the multiple options embedded in these items may make their responses more variable than those of other items.

The predominance of men in our sample may also have affected how item 9 (sex) loaded in factor analyses. Even with oversampling of women, 79.6% of participants were male (Table 2). For item 9 in particular, differential item functioning by sex has been observed [2].

Finally, our results may not generalize to populations treated in systems other than the VA, a large, publicly funded healthcare system. Patients receiving care within the VA comprise an aging, predominantly male population with high rates of physical comorbidities [39]. These comorbidities have been shown to contribute to perceptions of QOL and overall health outcomes [40]. However, this type of population will become increasingly relevant as a growing proportion of the worldwide population ages and as general mental health care becomes more integrated into primary and specialty services [41,42,43].

Conclusions

This study confirms that the Q-LES-Q-SF is a valid and reliable recovery-oriented self-report instrument in a general mental health population assessed outside the point of care. Its factor structure may be best described as one clear psychosocial factor and one possible weaker physical factor, a structure that may vary with the factor extraction method, the treatment of Likert scales as ordinal versus continuous, degree of disease burden, culture, language, and mode of administration. While the psychosocial factor is notably stable across three populations, cultures, and languages, future research may reduce the instability of the second, physical factor, perhaps by incorporating additional items. In addition, further assessment of the effect of administration mode (i.e., paper versus phone) on Q-LES-Q-SF responses and factor structure is needed.

Based on current evidence, researchers and program evaluators can confidently use the full Q-LES-Q-SF score or the single 10-item psychosocial factor. In evaluations of interventions or other studies focused specifically on physical quality of life, separate scoring of the physical subscale might be examined, but with considerable caution. Where assessment of physical quality of life is critical, the use of supplemental validated measures of that dimension is recommended.

References

Rapaport, M. H., Clary, C., Fayyad, R., & Endicott, J. (2005). Quality-of-life impairment in depressive and anxiety disorders. American Journal of Psychiatry, 162(6), 1171–1178.

Bourion-Bedes, S., Schwan, R., Laprevote, V., Bedes, A., Bonnet, J. L., & Baumann, C. (2015). Differential item functioning (DIF) of SF-12 and Q-LES-Q-SF items among French substance users. Health and Quality of Life Outcomes, 13, 172. https://doi.org/10.1186/s12955-015-0365-7.

Zubaran, C., Forest, K., Thorell, M. R., Franceschini, P. R., & Homero, W. (2009). Portuguese version of the quality of life enjoyment and satisfaction questionnaire: A validation study. Revista Panamericana de Salud Publica/Pan American Journal of Public Health, 25(5), 443–448.

Pitkänen, A., Välimäki, M., Endicott, J., Katajisto, J., Luukkaala, T., Koivunen, M., et al. (2012). Assessing quality of life in patients with schizophrenia in an acute psychiatric setting: Reliability, validity and feasibility of the EQ-5D and the Q-LES-Q. Nordic Journal of Psychiatry, 66(1), 19–25. https://doi.org/10.3109/08039488.2011.593099.

Bishop, S. L., Walling, D. P., Dott, S. G., Folkes, C. C., & Bucy, J. (1999). Refining quality of life: Validating a multidimensional factor measure in the severe mentally ill. Quality of Life Research, 8(1–2), 151–160.

Lee, Y. T., Liu, S. I., Huang, H. C., Sun, F. J., Huang, C. R., & Yeung, A. (2014). Validity and reliability of the Chinese version of the Short Form of Quality of Life Enjoyment and Satisfaction Questionnaire (Q-LES-Q-SF). Quality of Life Research, 23(3), 907–916. https://doi.org/10.1007/s11136-013-0528-0.

Ellison, M. L., Belanger, L. K., Niles, B. L., Evans, L. C., & Bauer, M. S. (2016). Explication and definition of mental health recovery: A systematic review. Administration and Policy in Mental Health and Mental Health Services Research. https://doi.org/10.1007/s10488-016-0767-9.

Prigent, A., Simon, S., Durand-Zaleski, I., Leboyer, M., & Chevreul, K. (2014). Quality of life instruments used in mental health research: Properties and utilization. Psychiatry Research, 215(1), 1–8. https://doi.org/10.1016/j.psychres.2013.10.023.

Endicott, J., Nee, J., Harrison, W., & Blumenthal, R. (1993). Quality of life enjoyment and satisfaction Questionnaire: A new measure. Psychopharmacology Bulletin, 29(2), 321–326.

Stevanovic, D. (2011). Quality of Life Enjoyment and Satisfaction Questionnaire-short form for quality of life assessments in clinical practice: A psychometric study. Journal of Psychiatric and Mental Health Nursing, 18(8), 744–750. https://doi.org/10.1111/j.1365-2850.2011.01735.x.

Fisher, E. S., McClellan, M. B., Bertko, J., Liberman, S. M., Lee, J. J., Lewis, J. L., et al. (2009). Fostering accountable health care: Moving forward in medicare. Health Affairs, 28, W219–W231.

Shortell, S. M., & Casalino, L. P. (2010). Implementing qualifications criteria and technical assistance for accountable care organizations. JAMA, 303(17), 1747–1748.

Bauer, M. S., Miller, C., Kim, B., Lew, R., Weaver, K., Coldwell, C., et al. (2016). Partnering with health system operations leadership to develop a controlled implementation trial. Implementation Science, 11, 22. https://doi.org/10.1186/s13012-016-0385-7.

Kazis, L. E., Selim, A. J., Rogers, W., Ren, X. S., Lee, A., & Miller, D. R. (2006). Dissemination of methods and results from the veterans health study: Final comments and implications for future monitoring strategies within and outside the veterans healthcare system. Journal of Ambulatory Care Management, 29(4), 310–319.

Selim, A. J., Rogers, W., Fleishman, J. A., Qian, S. X., Fincke, B. G., Rothendler, J. A., et al. (2009). Updated U.S. population standard for the Veterans RAND 12-item Health Survey (VR-12). Quality of Life Research, 18(1), 43–52. https://doi.org/10.1007/s11136-008-9418-2.

Bourion-Bedes, S., Schwan, R., Epstein, J., Laprevote, V., Bedes, A., Bonnet, J. L., et al. (2015). Combination of classical test theory (CTT) and item response theory (IRT) analysis to study the psychometric properties of the French version of the Quality of Life Enjoyment and Satisfaction Questionnaire-Short Form (Q-LES-Q-SF). Quality of Life Research, 24(2), 287–293. https://doi.org/10.1007/s11136-014-0772-y.

Dube, P., Kroenke, K., Bair, M. J., Theobald, D., & Williams, L. S. (2010). The P4 Screener: Evaluation of a brief measure for assessing potential suicide risk in 2 randomized effectiveness trials of primary care and oncology patients. Primary Care Companion to the Journal of Clinical Psychiatry. https://doi.org/10.4088/PCC.10m00978blu.

DeVellis, R. F. (1991). Scale development: Theory and applications. Newbury Park: Sage Publications, Inc.

Helfrich, C. D., Li, Y. F., Mohr, D. C., Meterko, M., & Sales, A. E. (2007). Assessing an organizational culture instrument based on the Competing Values Framework: Exploratory and confirmatory factor analyses. Implementation Science, 2, 13. https://doi.org/10.1186/1748-5908-2-13.

Restuccia, J. D., Mohr, D., Meterko, M., Stolzmann, K., & Kaboli, P. (2014). The association of hospital characteristics and quality improvement activities in inpatient medical services. Journal of General Internal Medicine, 29(5), 715–722. https://doi.org/10.1007/s11606-013-2759-8.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299.

Widaman, K. F. (2007). Common factors versus components: Principals and principles, errors and misconceptions. In R. Cudeck & R. C. MacCallum (Eds.), Factor analysis at 100: Historical developments and future directions (pp. 177–203). Mahwah: Lawrence Erlbaum.

Costello, A. B., & Osborne, J. W. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research & Evaluation, 10(7), 1–9.

Lorenzo-Seva, U., Timmerman, M. E., & Kiers, H. A. L. (2011). The Hull method for selecting the number of common factors. Multivariate Behavioral Research, 46(2), 340–364. https://doi.org/10.1080/00273171.2011.564527.

Timmerman, M. E., & Lorenzo-Seva, U. (2011). Dimensionality assessment of ordered polytomous items with parallel analysis. Psychological Methods, 16(2), 209–220. https://doi.org/10.1037/a0023353.

van der Eijk, C., & Rose, J. (2015). Risky business: Factor analysis of survey data—Assessing the probability of incorrect dimensionalisation. PLoS ONE, 10(3), e0118900. https://doi.org/10.1371/journal.pone.0118900.

Courtney, M. G. R. (2013). Determining the number of factors to retain in EFA: Using the SPSS R-Menu v2.0 to make more judicious estimations. Practical Assessment, Research & Evaluation, 18(8), 1–14.

Hays, R. D., & Hayashi, T. (1990). Beyond internal consistency reliability: Rationale and user's guide for Multitrait Analysis Program on the microcomputer. Behavior Research Methods, Instruments, & Computers, 22(2), 167–175.

Stewart, A. L., Hays, R. D., & Ware, J. E., Jr. (1992). Methods of constructing health measures. In A. L. Stewart & J. E. Ware, Jr. (Eds.), Measuring functioning and well-being: The medical outcomes study approach (pp. 67–85). Durham: Duke University Press.

Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56(2), 81–105.

J, H. (1997). Measuring Health: A review of quality of life measurement scales. Journal of Clinical Effectiveness, 2(3), 96–96.

Ware, J. E., Harris, W. J., Gandek, B., Rogers, B. W., & Reese, P. R. (1997). MAP-R for Windows: Multitrait/multi-item analysis program-revised user’s guide. Boston: Health Assessment Lab.

Sullivan, J. L., Meterko, M., Baker, E., Stolzmann, K., Adjognon, O., Ballah, K., et al. (2013). Reliability and validity of a person-centered care staff survey in veterans health administration community living centers. Gerontologist, 53(4), 596–607. https://doi.org/10.1093/geront/gns140.

Terwee, C. B., Bot, S. D., de Boer, M. R., van der Windt, D. A., Knol, D. L., Dekker, J., et al. (2007). Quality criteria were proposed for measurement properties of health status questionnaires. Journal of Clinical Epidemiology, 60(1), 34–42. https://doi.org/10.1016/j.jclinepi.2006.03.012.

SAS Institute Inc. (2003). SAS software (Version 9.4). Cary, NC: SAS Institute.

Stevanovic, D. (2014). Is the Quality of Life Enjoyment and Satisfaction Questionnaire-Short Form (Q-LES-Q-SF) a unidimensional or bidimensional instrument? Quality of Life Research, 23(4), 1299–1300. https://doi.org/10.1007/s11136-013-0566-7.

Kleinman, A. (2004). Culture and depression. New England Journal of Medicine, 351(10), 951–953.

Ritsner, M., Kurs, R., Gibel, A., Ratner, Y., & Endicott, J. (2005). Validity of an abbreviated quality of life enjoyment and satisfaction questionnaire (Q-LES-Q-18) for schizophrenia, schizoaffective, and mood disorder patients. Quality of Life Research, 14(7), 1693–1703.

Hoerster, K. D., Lehavot, K., Simpson, T., McFall, M., Reiber, G., & Nelson, K. M. (2012). Health and health behavior differences: U.S. Military, veteran, and civilian men. American Journal of Preventive Medicine, 43(5), 483–489. https://doi.org/10.1016/j.amepre.2012.07.029.

Fenn, H. H., Bauer, M. S., Altshuler, L., Evans, D. R., Williford, W. O., Kilbourne, A. M., et al. (2005). Medical comorbidity and health-related quality of life in bipolar disorder across the adult age span. Journal of Affective Disorders, 86(1), 47–60. https://doi.org/10.1016/j.jad.2004.12.006.

Shim, R., & Rust, G. (2013). Primary care, behavioral health, and public health: Partners in reducing mental health stigma. American Journal of Public Health, 103(5), 774–776. https://doi.org/10.2105/AJPH.2013.301214.

Sederer, L. I., Derman, M., Carruthers, J., & Wall, M. (2016). The New York State Collaborative Care Initiative: 2012–2014. Psychiatric Quarterly, 87(1), 1–23. https://doi.org/10.1007/s11126-015-9375-1.

Das, P., Naylor, C., & Majeed, A. (2016). Bringing together physical and mental health within primary care: A new frontier for integrated care. Journal of the Royal Society of Medicine, 109(10), 364–366. https://doi.org/10.1177/0141076816665270.

Acknowledgements

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the U.S. Department of Veterans Affairs or the United States government.

Funding

This study was funded by the U.S. Department of Veterans Affairs Health Services Research and Development Team-Based Behavioral Health Quality Enhancement Research Initiative (QUERI 15-289).

Ethics declarations

Conflict of interest

Author MS Bauer has received about $250 a year in royalties from two manuals published by Springer and New Harbinger that may conceivably increase sales due to the findings of the study. There are no other potential conflicts of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Riendeau, R.P., Sullivan, J.L., Meterko, M. et al. Factor structure of the Q-LES-Q short form in an enrolled mental health clinic population. Qual Life Res 27, 2953–2964 (2018). https://doi.org/10.1007/s11136-018-1963-8
