Abstract

This article presents findings from interviews that were conducted with agriculture and food system researchers to understand their views about what it means to conduct ‘responsible’ or ‘trustworthy’ artificial intelligence (AI) research. Findings are organized into four themes: (1) data access and related ethical problems; (2) regulations and their impact on AI food system technology research; (3) barriers to the development and adoption of AI-based food system technologies; and (4) bridges of trust that researchers feel are important in overcoming the barriers they identified. All four themes reveal gray areas and contradictions that make it challenging for academic researchers to earn the trust of farmers and food producers. At the same time, this trust is foundational to research that would contribute to the development of high-quality AI technologies. Factors such as increasing regulations and worsening environmental conditions are stressing agricultural systems and are opening windows of opportunity for technological solutions. However, the dysfunctional process of technology development and adoption revealed in these interviews threatens to close these windows prematurely. Insights from these interviews can support governments and institutions in developing policies that will keep the windows open by helping to bridge divides between interests and supporting the development of technologies that deserve to be called “responsible” or “trustworthy” AI.

Introduction

Agriculture and food system researchers engage with one of the most fundamental aspects of human experience—the food that people set on their tables and feed to their families. Aside from being essential to physical survival, food preferences are embedded deeply in human identities, emotions, and cultures. People establish identities, form relationships, and govern communities in part through how they produce, prepare, and consume or avoid various foods (Mintz & Du Bois, 2002). The physical, psychological, spiritual, social, and cultural significance people assign to food sets apart the ethics of Artificial Intelligence (AI) in the food system from issues that arise in AI technologies in manufacturing equipment, tools, or similar commodities.

Due to the unique nature of food in society, everyone is a stakeholder in the food system. Concerns regarding AI in the food system connect to many sensitive and deeply held beliefs, norms, needs, and expectations. People often worry about what is in their food and make purchasing decisions based on how their food is grown or produced (Hwang et al., 2005; Kaptan & Kayısoglu, 2015; Waldman & Kerr, 2018). People hold on to certain foods as a means of connecting with their cultural heritage and memory, while in other cases they set aside well-loved traditional foods due to new health concerns (Long, 2013). Consumers may say they are willing to pay more for products grown “organically” or locally, but often do not follow through on paying these higher prices when shopping (Saitone & Sexton, 2017). While changing expectations raise the cost of food, many people increasingly perceive access to affordable food as a basic human right that governments should protect and facilitate (Hearing before the U.S. House of Representatives, 2021; Jenkins & Hsu, 2008–2009). These beliefs and conflicting expectations make trust essential but fragile, elevate concerns about impacts on people (especially laborers, small farmers, and poor consumers), and often lead individuals to adopt dichotomous views on food markets and policy.

Calls have arisen for ethical and regulatory frameworks to increase trust in AI technologies. These calls have increased since the interviews reviewed here were conducted in the spring of 2021. Particularly in the early months of 2023, following the release of ChatGPT and increased awareness of AI technologies based on large language models, such calls have become more frequent and more urgent. In January 2023, the National Institute of Standards and Technology (NIST) released a voluntary or “soft law” framework as a first step toward establishing expectations for developing responsible AI technologies (Raimondo & Locascio, 2023, January). In February, the Federal Trade Commission (FTC) warned companies not to make false claims to consumers about AI in their products (Atleson, 2023). Many groups are also calling for strict AI regulation, with the EU AI Act poised to be the first legislation specifically targeted at managing AI risks (European Commission, 2021). These steps, while positive, indicate that the development of AI technologies has been moving rapidly forward in a vacuum of policy and consensus. They also reveal two distinct concerns: that AI technologies may sometimes not work as well as claimed, and that they may sometimes work too well, with the potential to displace human labor or cause other widescale harms. In either case, these technologies may be designed with differing and sometimes conflicting values in mind.

While the models and capabilities used in AI food system technologies may, in many cases, be of a different kind than the ones gaining the most exposure in media and public discourse, trust is all the more essential for agriculture and food system technologies given food’s pervasiveness and significance to human life (Tzachor et al., 2022). For ethical or regulatory frameworks to be effective in facilitating trust, an important first step is to ask who is responsible for identifying ethical concerns, developing ethical frameworks, and implementing them in practice. This article does not attempt to definitively answer this question, as though it can be answered once and for all. Rather, it reports upon initial efforts, viz., research interviews and a scholarly analysis of key issues identified in them, undertaken to better understand how AI researchers, policy makers, and institutions can support the public’s trust in AI food system technologies.

This approach is affirmed by Robert L. Zimdahl and Thomas O. Holtzer, who argue that “the truest test of the moral condition of any scientific or other discipline, indeed of one’s life, is a willingness to examine its moral condition,” asserting that “professors in U.S. colleges of agriculture had not engaged in this test” (Zimdahl & Holtzer, 2016). What is reported here is an attempt to “engage in this test” with AI agriculture and food system researchers. This effort is further bolstered by recent research on Responsible Research and Innovation (RRI). This approach argues that responsible research involves “a collective, ongoing commitment to evaluate potential positive and negative consequences of research and innovation in dialogue with a broad range of stakeholders and through a reflective process that delivers responsible outcomes” (da Silva et al., 2019). Other research also suggests that high levels of reflexivity are required for successful innovation (Cullen et al., 2014), and that co-innovation relies on “plan-do-reflect” cycles (Botha et al., 2017; Schut et al., 2016).

As described below, interviews with researchers in the Artificial Intelligence Institute for Food Systems (AIFS) posed several questions. However, one question in the interview guide has underpinned this trust-focused work to date: “How vigilant are AI developers and producers in attempting to identify new ethical concerns and who is responsible, or what steps are taken, to address issues that arise?” This question was derived from prior work on trustworthiness and the concept of “deserved trust” in the biomedical research arena, which involved leaders from a range of public and private sectors that all require the public’s trust. That research explored what industry leaders do to support that trust and to restore it when it is breached (Hudson, 2003; Yarborough et al., 2009; Yarborough, 2021). Its findings emphasized the importance of all individuals engaged in the development and deployment of a technology taking shared ownership for public safety in its use. This approach advocates ongoing reflection, vigilance, and active participation by all parties. Such involvement increases awareness of potential problems and challenges, and generates ideas for resolving them, helping to prevent system failures and breaches of public trust. Shared ownership, in essence, leverages the strengths of integrity-based management styles to make up for the shortcomings of compliance-based approaches (Maesschalck, 2004). From this perspective, researchers would seem obviously to be among those who should be included as responsible parties.

However, the interviews analyzed here do much more than simply identify and include responsible parties. In fact, the interviews suggest that developing ‘responsible AI’ or ‘trustworthy AI’ is easier said than done. These terms, along with ‘ethics’, can all be used to describe a desired state in which AI will achieve intended benefits while minimizing risk and harm. The goal of this study was not to define these terms precisely or draw distinctions between them (although a very precise definition of trust was derived from the interviews and contextualized within current literature on trust). Rather, the goal was to get behind these now popular expressions to understand what researchers think about conducting research in these emerging AI technologies that so many in the public will potentially trust and use. The interviews revealed no simple answers, but instead the complexities, challenges, and insights that come from moving from theoretical ideas about ‘responsible AI’ and ethical frameworks to practices that can be implemented in a researcher’s day-to-day work. In fact, if these interviews suggest any answer to the question of “Who is responsible for responsible AI?” it is that currently the answer is unclear, and even if researchers believe themselves to be partially responsible, or other responsible parties can be identified and included, current institutional and sectoral gaps would make any exercise of responsibility alarmingly difficult to achieve.

The findings discussed here reveal the uneven and shifting terrain in which AI researchers currently work, how they currently navigate this terrain, and possible pathways for implementation of ethical ideals. Interview questions allowed researchers considerable freedom to steer their responses toward areas of research, interest, or concern that were the most important or urgent to them. This approach intentionally allowed several themes to emerge. These themes interact dynamically. For instance, the findings suggest that the harder it becomes to collect data (due to lack of trust, once damaged), the more necessary it becomes to collect data in order to build more reliable technologies that can then restore trust. This article consequently evaluates these themes conjointly, contextualizing researchers’ insights alongside the broad range of conceptual issues relevant to food systems AI. This richly textured approach allows the depth and breadth of complexities to be fully appreciated in a way that would be less clear, or hidden altogether, if these themes were analyzed in isolation. In short, the whole is greater than the sum of the parts. The interviews, taken together, suggest that many gaps exist where ethical and regulatory frameworks, even if developed, may fail. But they also offer glimpses of how researchers are currently addressing issues of ethical disconnect and trust. These glimpses, presented in the findings, suggest components that could be incorporated into models for possible solutions, which merit further research and implementation at scale.

This article relies on these interviews to provide an on-the-ground view of how AI agriculture and food system technology research is conducted within, and impacted by, the current regulatory and logistical landscape. It reveals the challenges AIFS researchers experience in attempting to align their research on advancing technologies with rapidly evolving and misaligned ethical standards and regulations. While there is some research that examines the emergence of high-level principles and ethics frameworks (Jobin, 2019; Zhu et al., 2022), it became clear as synthesis work with the interviews was conducted that there are also two critical gaps where a failure to adhere to principles may occur: (1) the standards, ethical principles, and interests of researchers, funders, and food system partners may be not only misaligned but incompatible and in conflict with one another, and (2) in part because of the first issue, researchers’ decisions may or may not align with the values stated in ethics frameworks. In other words, it may not be possible to adhere to divergent principles and interpretations at the same time. Therefore, researchers are forced to make decisions about which values, interests, and standards to prioritize. Simply passing more regulation or developing more ethical frameworks and guidelines will not be a sufficient remedy for the challenges researchers face. Without efforts to address these challenges, ethics and regulatory programs may exacerbate them or, at the very least, fail to do the work they are meant to do.

It should be emphasized that this article presents the views of academic AI researchers. More research is needed to understand farmers’ and commercial developers’ perspectives on specific technologies in order to confirm or contextualize these researchers’ views. Such research should attend to the wide variance among commercial enterprises as well as farmers and growers, to avoid “false homogenization” (Cullen et al., 2014; Luttrell & Quiroz, 2009) so that more precise assessments of practices and perspectives could be made.

The insights from AI researchers provided in this article can guide policymakers and institutions in crafting meaningful, transformative policies that avoid what has been described by some AI scholars as “ethics-washing” or “ethics-shopping” (Wagner, 2018; Yeung, 2020). Instead, well-crafted policies can support researchers in creating a sense of shared ownership for the risks and ethical implications of AI-based technologies. Leveraging knowledge derived from researchers positioned at the early stages of research and development helps to anticipate ethical standards and protocols that may be needed to keep pace with AI technologies. These steps should be seen not as an alternative to regulation, but as a complementary and necessary component of comprehensive AI governance solutions that will build credibility, accountability, and trust with current and future users of products and technologies that utilize AI in the food system.

Key insights from the scholarly literature

There is a substantial body of scholarly work that is relevant to the themes that emerged from our interviews, including vigorous scholarly debate about how food should be grown and distributed. Many scholars increasingly recognize the importance of interdisciplinary and collaborative approaches to addressing food and resource security. These approaches should include demographic and climate data. They should also recognize human systems in decisions for using and sharing resources, and the importance of a stable, well-governed water supply for the food system (Bakker, 2012, 2013; Rosegrant, 2002; Vorosmarty, 2000). Much debate exists currently on what sustainable agriculture means and how to achieve it (Segerkvist, 2020; Velten, 2015). Likewise, some scholars argue that caution should be used in attributing economic development, food production, and resource insecurity solely to population growth (Lambin et al., 2001; Merchant & Alexander, 2022; Wynants et al., 2019) or in assuming that technological advances will be sufficient to alleviate food and resource insecurity in the future (Symposium, 2015). Just as population growth alone cannot explain scarcity, simply producing more food will not necessarily alleviate all food insecurity in the future any more than it does today (Bernard & Lux, 2017).

These underlying debates regarding food system practices correspond to similar tensions regarding the potential benefits and risks of precision agriculture and AI-based technologies, or whether they should be developed or used in certain sectors of society at all. Recent criticism in agriculture and AI ethics scholarship suggests that efforts to make AI (or any technology) more “ethical” often fail to address the reinforcement of power structures that currently influence who benefits from these technologies, and who loses (Barrett & Rose, 2022; Bronson & Knezevic, 2016; Basu & Chakraborty, 2011; Cullen et al., 2014; Duncan et al., 2021; Rose et al., 2021; Turner et al., 2020; Tzachor et al., 2022). Food system literature argues that transnational agribusiness corporations dominate global and national food markets (Downs & Fox, 2021; Gonzalez et al., 2011; Gronski & Glenna, 2008; Konefal, 2005). This view holds that if underlying political, cultural, and economic systems and existing technologies do not currently produce and deliver food sustainably, then adding AI-based technologies to make these systems more efficient would only exacerbate these problems. In other words, the food system would become more efficient at being unjust. AI technologies that recreate or accelerate the social and political construction of scarcity (Scoones et al., 2019) cannot be considered “ethical,” or “responsible,” or “trustworthy.” If AI technologies are deployed in service of “sustaining elite and capitalist power through justifying resource acquisitions and enclosures, large-scale policy reforms in the name of ‘austerity’ and intensification of extraction whilst politically side-stepping more thorny politics of (re)distribution, mis-appropriation, dispossession and social justice” (Mehta et al., 2019), they cannot be called ethical or trustworthy.
Some scholars propose that a genuinely ethical approach to AI development would take seriously “the possibility of not doing something” —not developing AI technologies—rather than considering AI technology development to be inevitable (Greene, 2019; Hagendorff, 2021; Miles, 2019).

This debate underscores the significance that concepts such as trust have begun to hold for AI-based food system technologies. Akullo et al. (2018) argue that in a conventional, rules-based understanding of institutions, trust is used to support informal behavior that deviates from preferred rules. However, as this article will show, AI technology research is so new, and rules and legal frameworks for governing AI are so absent or insufficient, that informality may currently be the only option. This does not mean, however, that AIFS can necessarily be best understood as an institution in the conventional sense. Rather, a performance-based definition seems to best describe the responses discussed in this article. As will be shown, many of the ways that AIFS researchers navigate and respond to research scenarios are highly influenced by “expected behavior” or “a pattern of performing operations exhibiting a common style” (Akullo et al., 2018). These interviews and supporting literature suggest that trust is becoming a key component of the “common style” expected in AI technology research.

This article will situate AIFS researchers’ ideas of trust within the definitions put forward by Gardezi and Stock (2021). These scholars have recently examined the social construction of ‘trust’ in precision agriculture—a form of agriculture that AI-based technologies support (Gardezi & Stock, 2021). Drawing on established literature, they situate trust in precision agriculture within two categories: (1) specific versus generalized trust, and (2) strategic versus moralistic trust. First, they argue that agritech firms tend to promote precision agriculture among farmers not by building “specific trust” —a form of trust based on personal relationships between individuals—but rather by promoting “generalized trust” —the belief that others are part of one’s own moral community. Whereas specific trust depends on an assessment of others’ trustworthiness, generalized trust relies on cultural transmission where no such assessment is necessary or possible.

Gardezi and Stock argue, further, that generalized trust forms the foundation for “moralistic trust” —the belief that most people share their own values. These scholars argue that agritech firms rely on the production of moralistic trust, persuading farmers and food producers to trust precision agriculture as a system. Gardezi and Stock argue that agritech firms tend to deploy a combination of moralistic and generalized trust as a “trojan horse” to persuade farmers to adopt methods that may be detrimental to farmers’ long-term interests (Gardezi & Stock, 2021; Ryan, 2021). In contrast to “moralistic trust,” strategic trust relies on a careful assessment of others’ past behaviors as indicators of their trustworthiness and competence. This assessment depends on the “accuracy of knowledge or tools shared” (Gardezi & Stock, 2021; Hardin, 2002). As the Discussion section will demonstrate, the type of trust AIFS researchers seek to cultivate is the opposite of that deployed by agritech firms. Rather than relying on generalized and moralistic trust, AIFS researchers attempt to build specific and strategic trust. They rely on long-term personal relationships with farmers, food producers, and other food system partners to carry out their research.

Efforts to build strategic trust with stakeholders through a track record of solid performance make the quality of academic research especially important. However, recent studies point to problems with reproducibility in the social sciences and machine learning (Kapoor & Narayanan, 2022; Schneider, 2021). On the one hand, such research presents disturbing evidence of the limitations of academic peer review and publishing; on the other, the fact that members of the academic community are examining these issues and calling for rigorous review and protocols for ensuring reproducibility in machine learning indicates that the academic community has a vested interest in maintaining boundaries of integrity in the research it produces. The interviews conducted in this study are an example of efforts to ensure early and rigorous interrogation of research methods and practices, and they identify the range of ethical problems that arise in the development of new technologies.

Methods

Interviews were conducted with thirty-nine federally funded agriculture and food system researchers whose research includes AI technologies. The researchers are affiliated with the AIFS, a new partnership sponsored by the National Institute of Food and Agriculture (NIFA), the U.S. Department of Agriculture (USDA), and the National Science Foundation (NSF). The partnership brings together more than fifty researchers at the University of California, Davis; the University of California, Berkeley; Cornell University; the University of Illinois Urbana-Champaign; and University of California Agriculture and Natural Resources. The institute focuses on improving AI technologies that can potentially reduce resource requirements within the food system, while increasing food safety and human well-being. The definition used for “AI technologies” in this study is broad and reflects the definition adopted by the National Science Foundation as stated in the Program Solicitation for the grant awarded to AIFS in 2020. Artificial intelligence, according to this document, “is inherently multidisciplinary, encompassing the research necessary to understand and develop systems that can perceive, learn, reason, communicate, and act in the world; exhibit flexibility, resourcefulness, creativity, real-time responsiveness, and long-term reflection; use a variety of representation or reasoning approaches; and demonstrate competence in complex environments and social contexts” (National Science Foundation, 2019; see also National Science and Technology Council, 2016).

Given this very broad definition, AIFS researchers come from many disciplines, including computer science, molecular biology, chemistry, epidemiology, mechanical engineering, food science, plant sciences, molecular breeding, biological engineering, civil engineering, plant pathology, economics, agricultural economics, agricultural engineering, nuclear engineering, electrical engineering, geography, law, philosophy, and history. These researchers are working on some of the most advanced theoretical and practical problems concerning AI applications in all phases of the food system from plant genomics to food safety and human nutrition and consumer choices. Their perspectives constitute a valuable corpus for understanding the values and practices prevalent in AI development.

Analyzing interviews from this broad cross-section of scholars serves three critical purposes. First, it minimizes disciplinary bias in the evaluation of AI research and development practices, and provides insight into issues, values, assumptions, and concerns prevalent in all aspects of the food system. Second, these interviews provide insights and perspectives on ethical standards, quality, impact, and unintended consequences of these rapidly changing technologies. These researchers’ views can serve as indicators of the types of rationales, considerations, assumptions, practices, challenges, or “institutional logics” that influence this research, and the institutional cultures and values that are being embedded—literally encoded—into AI models (Botha et al., 2017; Bronson, 2019; Stilgoe, 2013). Third, the interviews also serve as a baseline for future comparative and follow-up studies that will facilitate tracking the impact of soft law governance mechanisms and training on institutional culture and research practices and values. In other words, these interviews are but one step in what should become a practice of sustained “examination of the moral condition” of AI technology research and development.

The study adhered to best practices for interviews as outlined by Young et al. (2018). Several informal pilot interviews were conducted to identify areas of concern and refine a list of final interview questions, as listed in Table 1. Requests for interviews were then sent to all PIs, researchers, and staff that were involved in AIFS as of February 2021. In-depth, semi-structured interviews were then conducted between February and May of 2021. Strong precautions were taken to create a safe environment where interviewees felt comfortable speaking candidly. Participation in the interviews was voluntary and strictly confidential to encourage meaningful and honest reflection, rather than perfunctory compliance or skewed responses. Recognizing the vulnerability of the participants in disclosing information that might be seen as sensitive and unfavorable to specific institutions, funders, fields, or partners with which they are affiliated, and given the small sample size and that their affiliation with AIFS is made known in this article, the interviews were structured to protect participants’ and non-participants’ identities and to avoid any sense that participation might be coerced. Of those invited to be interviewed, 75% of researchers responded and participated in the interviews. Informed consent was obtained prior to each interview. Interviews were conducted virtually via Zoom, which was also used to record and transcribe them. The average length of the interviews was approximately 45 min. Videos, transcripts, and identities of all interviewees have been kept confidential. Details regarding the demographic data, roles, and research of those interviewed are not included so that the identities of the individuals cannot be inferred.

The study has several limitations. First, although it is positive that federal funding for this institute is contingent on a strong commitment to ethics, that same commitment is the basis for the funding that was received and reserved for the authors and the interviews conducted. The authors come from different fields than those of other AIFS researchers, which provides some distance between the authors and interviewees. But the study is still an internal one and therefore may be subject to bias in favor of the funding institute. It would have been impossible to keep the identity of the institute confidential, given the high profile of the grant awarded for the unique focus and combination of research that AIFS supports; the possibility of a completely anonymous study was therefore foreclosed from the outset. Second, the small size of the sample (though large relative to the size of the institute) and the decision to make the interviews voluntary, while possibly increasing meaningful participation, may mean that only the researchers most invested in ethics and responsible research chose to participate. However, such a bias should not be too swiftly inferred, as other explanations for non-participation may exist, including the loose structure of the institute with varying degrees of funding and participation in the institutional goals, COVID-19, and teaching and sabbatical schedules that affected researchers’ availability.

It is best to regard this study as a pilot or small proof-of-concept study that could be replicated on a larger scale with multiple institutes, partners, and stakeholders, in particular those who will eventually use these technologies (da Silva et al., 2019). In that case, demographic and disciplinary data could be collected and released in the aggregate with no risk to confidentiality. Any bias of researchers, developers, or particular stakeholders in favor of their own methods, positions, or perspectives, would be balanced against those of others to achieve more well-rounded results. Other limitations that also flow from the study’s strengths include the broad disciplinary and research focus, and the scope and open-endedness of the subject matter. Even in interviews that were an hour or more in length, there was not enough time for a researcher to speak exhaustively about their research and concerns.

Beginning with the list of questions as a starting point, interviewees were invited to think creatively and provide freeform responses that tailored the questions to their specific interests, concerns, and expertise. This approach served two research goals: (1) to facilitate the collection of insights, perspectives, and values from a team of researchers positioned on the cutting edge of AI research and technology, and (2) to provide the interviewees with an important opportunity to reflect honestly and candidly regarding questions pertaining to ethics, aspirations, and challenges of conducting AI research in their field of expertise. Interview transcripts and videos were analyzed inductively and key themes were identified. All quotes have been anonymized to protect the identities of the interviewees. An effort has been made, through inclusion and contextualization of quotes, to highlight the precise language used by the interviewees. These quotes express important problems and nuances in the AI research process that cannot be adequately captured through summary or analysis alone.

Results

Findings are broken down into four key themes as indicated in Table 2: (1) data access and related ethical problems; (2) regulations and their impact on AI food system technology research; (3) barriers to the development and adoption of AI-based food system technologies; and (4) bridges of trust that researchers feel are important in overcoming the barriers they identified. All four themes also discuss gray areas and contradictions that suggest the divide between academic and commercial research is not clear, and that both domains suffer from similar trends and pressures. These findings should be considered if AI-based technologies are to be developed in a way that ameliorates rather than exacerbates food system challenges.

As may be expected, interviewees expressed a strong sense of responsibility and ownership for the outcomes of their work. However, there was a general sense that although oversight of AI technology development is needed, it is not clear what group or entity should be responsible for ensuring that this happens. There was generally more confidence expressed in academic research outcomes and practices, but pervasive doubt regarding the safety and ethics of commercial technologies. Researchers emphasized that they are extremely careful to be clear about the capabilities and limitations of their models and tools to preclude any breach of trust in their research. Most researchers expressed confidence that the peer review process in their respective fields provided adequate accountability for the reliability of the research itself but expressed concern about their ability to predict or control the effects once research moved forward into development for commercial use. The potential issues surrounding researcher bias in favor of their own methods and peer review have been addressed in the Methods and Literature sections above.

However, as researchers responded to other interview questions on challenges and practices, it became clear that ensuring ethical research on these technologies, even within academia, is far more complicated than reliance on peer review alone can address. The social and political landscape in which researchers work is rapidly becoming more complex and untenable as new partnerships and ethical standards are introduced. The results presented here offer a picture of this complex landscape.

Key findings suggest that the conditions for research of AI technologies are facilitated more by informal negotiation and trust between researchers, partners, and consumers than by government oversight or regulation. Regulations and oversight are contradictory, ambiguous, implausible, or absent, leaving many gray areas to be negotiated between researchers and private or commercial partners. Conditions that are negotiated include (1) access to, use, and handling of data, (2) whether efforts will be made to work toward “ethical” AI development, (3) which values or interests will be prioritized, and (4) how these values and interests will shape decisions and protocols.

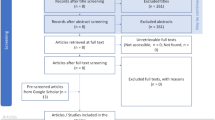

Researchers face several challenges in negotiating partnerships for research. First, they report that it is becoming increasingly difficult to secure data amid growing concerns regarding conflicting ethical standards such as data privacy on the one hand and transparency on the other. Second, regulations have been creating incentives for greater interest in AI-based technologies for several years, but commercial firms have been able to pivot more quickly than academic research to move into this market. This is consistent with research showing that commercial firms are the main driver of development in AI technologies (Birner et al., 2021), but according to interviewees, these technologies have at times failed to deliver on promises, causing some growers and food producers to write off AI-based technologies as ‘snake oil.’ Poor performance is excused as falling within a normal range of algorithmic error, leaving food producers unable to hold anyone accountable for the failure. These practices have created an uphill battle for researchers, and presumably also for reputable commercial companies, who attempt to provide research and technologies that are more reliable and credible. This dysfunctional pattern of technology adoption will be addressed in more detail in the Discussion, along with recent changes that may lead to a healthier system (Figs. 1 and 2).

Negotiations and agreements for food system partnerships do not take place in a vacuum. Rather, they are embedded in a nuanced and shifting environmental, social, economic, and regulatory context that has evolved over many decades. This context influences food system negotiations and agreements in three important ways. First, shifting natural environments exert pressures through conditions such as drought, flooding, temperature changes, and the current pandemic, which are in turn influenced by human decisions and systems (Dudek, 1988; Knox & Scheuring, 1991; Martin, 2020; Merrill et al., 2011; Nash, 2005; Niles, 2014; Štreimikienė et al., 2021). Second, and often in response to environmental factors, state and federal agencies influence food system negotiations through regulations that provide for oversight of academic research and that alter economic pressures in agricultural production. Governmental agencies incentivize data sharing and demand for AI-based technologies by influencing the costs, access, and limits pertaining to inputs such as water, fertilizers, or labor. Third, these negotiations and the outcomes they facilitate create external consequences. For instance, laborers in fields and food processing plants, consumers, and residents of surrounding areas or around the globe must contend with health and economic conditions influenced by food system decision-makers. Most AIFS researchers see themselves as offering AI-driven technological tools to alleviate these increasing pressures. This may be because calls for technological improvements feature prominently in models for climate change mitigation in California agriculture, where most of our interviewees conduct their fieldwork, as well as in responses to labor shortages during the COVID-19 crisis (Barrett, 2010; Benson & Craig, 2017; Hanak et al., 2019; Harris & Spiegel, 2019; Medellín-Azuara et al., 2011; Zurek et al., 2021).
Next, the findings will be discussed further under each of the four key themes.

Data: incentivizing data sharing, negotiating for data access, and the ethical problems of both

Environmental, economic, and regulatory pressures help build demand for AI technologies, and this demand in turn incentivizes data sharing. Data fuels all forms of AI development and is integral to, and cuts across, all phases of AIFS research and development (Diebold, 1979; Goodhue et al., 1988; Kerssens, 2018; Levitin & Redman, 1998; Mahanti, 2021). Historical and proprietary data, much like water, land, or oil (Diebold, 1979; Goodhue, 1988; Kerssens, 2018; Levitin & Redman, 1998; Mahanti, 2021; Nolin, 2019; Neri, 2020; Puschmann & Burgess, 2014), are often scarce or inaccessible for public or research use. Data must be “discovered,” “mined,” or otherwise acquired, which may be impossible if archival records are incomplete, missing, or unsuitable for machine reading. In many cases, depending on location and time period, records may not have been kept at all. Even if historical data exist and can be physically acquired, the rights to use them must also be secured (Ramirez-Villegas & Challinor, 2012; White et al., 2021).

Regulatory, environmental, and economic pressures work together to increase interest in new food system technologies, which in most cases depend on data for testing and implementation. In this way, regulatory pressures indirectly incentivize growers and other food system partners to share their data with AIFS researchers. But even where such incentives encourage willingness among potential partners to share data, data scarcity remains a major challenge in AI-based food system research that researchers continually work to overcome. Furthermore, AIFS researchers expressed concern that these incentives may make smaller operators more vulnerable because they cannot afford the legal support necessary to guard their interests.

AIFS researchers must contend with these problems of data scarcity and acquisition through negotiation, and these negotiations are embedded in the complex landscape described above. One AIFS researcher acknowledged that “[AI] is a very data-intensive approach to solving problems,” one that makes research heavily contingent, at times completely so, on securing large amounts of privately held granular data. The researcher explained, “From the growers’ side, we do need their yield data at block level, because historically, we only have USDA, county-wide statistics, we don’t have block level, field level yield…The only way we can get [field-level data] is if the growers are willing to share those data…If we don’t have those historical data…at the field level, it’s almost impossible [to build our models].” This response can be described in terms of the “power cube” (Cullen et al., 2014) as a one-sided dependency, in which one group is able to leverage a resource, in this case data, to increase its bargaining power (Turner et al., 2020). Some AIFS researchers are developing digital twin technologies as a way to decrease dependence on data or mitigate gaps in real-time data, but for many AIFS researchers, data remains an essential yet often scarce resource for research and development.

When researchers, growers, and food producers negotiate food system partnerships, both governmental and private power drive decision-making at the bargaining table. As will be discussed later in this article, AIFS researchers report that the growers and food producers they work with often consider themselves to be “targets” of over-regulation. Researchers state that, due to fines and rising costs, many growers and food producers have “no choice” but to adapt their practices in order to remain compliant with newer regulatory standards (McCullough & Hamilton, 2021; Sneeringer, 2011). However, formal power to adapt and collaborate with AIFS researchers currently remains in the hands of growers, producers, distributors, and consumers, making it challenging for AIFS researchers to secure the amounts and types of data they need to build reliable models. The fact that AIFS researchers face significant challenges in gaining access to data underscores the power that food system actors still hold. Food producers may choose to share data and implement AI-based tools, or they may choose to continue using current or alternative methods. They also choose with whom to partner and whose products or services they will use. In this changing and high-stakes landscape, the interviews reveal researchers’ beliefs that food system actors make decisions based on trust.

The power of data and markets is leveraged as the right to decide whether to release or withhold resources. Both types of power are rooted in laws that presume and safeguard the right to own and be responsible for property, including agency or rights of ownership of data pertaining to one’s property or person (Brenkert, 1998; Bryson, 2020; Halewood, 2008; Johnson & Post, 1998; Kumm, 2016; Lacey & Haakonssen, 1991; Marneffe, 2013; Nemitz, 2018). Business and legal scholars and practitioners categorize the right to share data or buy technologies, products, or food as subtypes of the right to voluntarily enter a real or virtual space of negotiated exchange and consent to transfer limited or total ownership or access rights, along with attendant moral and legal responsibilities, from another party onto oneself or vice versa (Kotler, 1972; Miller & Wertheimer, 2009; Radford & Hunt, 2008).

While generally unfamiliar with specific governmental regulations that directly constrain their research, or with the broad legal base underpinning ownership, consent, and transfer of rights and liability, all AIFS researchers indicate deep practical awareness that they must contend with the power and consequent leverage attached to data and markets. Government in the U.S. is limited in its ability to influence this power and leverage and thus plays an indirect, though not insignificant, role in incentivizing food system decisions and practices. AIFS researchers take great care to understand and meet the expectations of potential partners and users or consumers of the technologies they create, because if they do not, they will not be granted access to needed data or markets.

AIFS researchers must negotiate the terms of access in ways that align with government regulation and policy while also reassuring partners that their interests will be protected. However, because researchers require funding and must work within the terms set by the funding agency, this relationship very often forms another “one-sided dependency” (Cullen et al., 2014; Turner et al., 2020), with governments and funders holding most of the power in relationships with researchers. Because the interests of governments, funding agencies, and private partners are often misaligned, researchers must negotiate from a middle ground between them, with a one-sided dependency in at least two directions. This is not to say that researchers do not exercise any power. They can influence research questions, stakeholder selection, and many other decisions that occur as part of the innovation process (Akullo et al., 2018; Cullen et al., 2014; Turner et al., 2020). Still, researchers may be in a somewhat tenuous position. Negotiating from this middle ground requires time, skill, good judgment, and creativity. For their research projects to succeed, researchers must build trust and common ground with government agencies, policy makers, and funders on the one hand, and with partners on the other. Even when researchers are successful in building these partnerships, the dilemmas that arise from contradictory or counterproductive ethical guidelines can pose significant or even insurmountable challenges.

Private, public, and non-profit entities supporting AI development need data, and they adopt different strategies for negotiating access to data and the terms under which data are treated. For instance, one AIFS researcher asked farmers who they share their data with, why they choose to share or not share their data, and what their relationships are with private sector companies like John Deere, which “with the combine, extracts enormous amounts of data from the farmers….” This researcher stated, “They’ll say, ‘Because it’s a contract that you sign when you buy a John Deere harvester.’ And so you quote unquote ‘share the data.’ You have no choice, if you want to buy the harvester, you…‘share the data’ with John Deere.”

This researcher’s comment highlights a central ethical problem in the expanding use of big data: no one can provide what is known as “morally transformative” consent, even if that consent is legally valid, unless they fully understand the risks they are accepting and have the power and resources to decline. The ability to use equipment, tools, seeds, and other items essential to agriculture as bargaining chips gives private sector companies tremendous coercive power in negotiating access to field-level data, possibly rendering consent meaningless (Jones, 2018; Miller & Wertheimer, 2009; Pechlaner, 2010).

In contrast, public sector and non-profit groups attempt to secure access to data in ways that uphold principles such as fairness, equity, and transparency, but their approaches can backfire. For instance, a funder may set policies to increase transparency and diversity, and then leverage funding to ensure that researchers comply with these policies. But these two principles often work against one another, with researchers caught in the middle. One researcher reported a growing trend among funding agencies to require that data used in funded projects be made public when results are published, in order to facilitate transparency. Such policies often raise concerns for potential partners who wish their data to be kept private. Potential partners may then shy away from collaborating on projects and sharing their data when transparency is required, which can decrease the amount and diversity of data available to researchers. In this way, transparency mandates and similar regulations unwittingly work against calls among AI ethicists and critics for AI developers to collect more diverse data sets (Jobin, 2019).

Diverse data are often needed to help mitigate bias in order to build generalizable algorithms (Gebru, 2020; Christian, 2020; Leavy, 2021; Benjamin, 2019; Wachter & Mittelstadt, 2019). This is no less true in agriculture. One AIFS researcher stated that it is important that researchers expand the network of growers and producers with whom they are working, because the number of partners currently providing data is small. This researcher observed,

Getting data from some growers is still relatively challenging. Especially for this AI-based approach, we really need…a large amount of data. For example, for yield data so far, we only have maybe around 15 large growers who are willing to share and we only have maybe on the order of 150 orchards. It’s still…a small fraction of the data you can [potentially] get from the growers. Some see the value and they are willing to share, but some [are] still very reluctant to share those data, so I think that’s a big challenge.

Agricultural algorithms may not be transferable from one field to another because each field has unique attributes. For this reason, researchers need a broad array of partners and data to ensure greater accuracy and reliability. But misalignments between ethical standards regarding privacy, transparency, and consent make it difficult for researchers to find growers and food producers who are willing to share their data.

Regulations: problems in developing ‘ethical’ AI agriculture and food system technologies

Our interviews suggest that development of AI in the food system suffers from excessive or misaligned regulation in some cases, and too little regulation in others. Many researchers stated that government regulations are out of touch with actual experiences and concerns of researchers and partners. Yet, when asked in the interviews, “How familiar are you with government regulations that pertain to your AI-related work, and how effective do you think those regulations are?” most AIFS researchers responded that they interacted very little or only indirectly with government regulation, and that regulation had very little direct influence on their research other than through an Institutional Review Board (IRB).

According to the interviews, regulation intersects with AIFS research along three pathways. First, the FDA and HHS support university oversight of human subject research through an IRB. This pathway, while formally integrated into institutional research workflows and relatively strong in helping researchers implement protocols for informed consent and data privacy, lags behind or does not account for (1) the technical capacities of evolving AI-based tools, (2) issues beyond consent and privacy, or (3) the complex social, economic, and regulatory landscape in which researchers must negotiate their projects.

Second, some regulations aimed at raising ethical standards and improving research outcomes, such as those discussed below, can inhibit research altogether. These regulations, while crafted with good intent, are often disconnected from the practical realities of implementation, such as the legal costs a project must incur to comply with them. This disconnect between policy-setting and implementation ultimately undermines the purpose of ethical research by making it difficult, if not impossible, for researchers to proceed with their work.

The third pathway of regulation increases pressure on growers and food producers to reduce their impact on natural and environmental resources. This type of regulation initially gives potential partners incentives to collaborate with AIFS researchers to explore and develop alternative, more sustainable AI-based methods, and in doing so it opens a window of opportunity for the introduction of AI-based methods. However, private sector products that are not well grounded in validated research may derail the long-term adoption of AI-based tools that would facilitate a more sustainable food system (Fig. 1).

All three regulation pathways were reported as insufficient, disconnected from the realities of implementation, or lagging behind technical capabilities in AI technology research and new ethical issues arising from these tools. As one AIFS researcher stated, regarding the technical capabilities for invasion of privacy through high-resolution imagery, “The technology is really pushing…a lot faster than…a legal framework is keeping up with it.”

National and global regulations ‘impeding’ food system research

Our interviews suggest that this disconnect between governance and implementation has the potential to impede research in multiple contexts. One example of regulatory disconnect is Sect. 889 of the John S. McCain National Defense Authorization Act (NDAA) for Fiscal Year (FY) 2019 (Pub. L. 115–232), as implemented in the Federal Acquisition Regulation (FAR). The U.S. Department of Defense (DoD), U.S. General Services Administration (GSA), and the U.S. National Aeronautics and Space Administration (NASA) recently amended the FAR to implement this section, prohibiting contracting with any vendor that cannot certify that it has not purchased equipment that may have been manufactured or sold by certain companies in China. This regulation delayed some of the research in one AIFS research cluster because a main vendor did not yet have a system in place to certify that its equipment met these criteria. The cluster was able to resolve this issue by securing the necessary certifications from alternative vendors, but it is unclear how this issue may yet affect other food system research.

This same researcher discussed similar challenges with two other projects. One project on metagenomes, conducted in collaboration with European researchers, was stalled for months because contracts between various U.S. and European agencies were misaligned on informed consent requirements concerning which entities would manage participants’ data. Another project has been affected by a USDA regulation that bans the use of gift cards to compensate human participants in USDA-funded studies. This researcher acknowledged that the intent behind this regulation, preventing fraud, was good, but noted that cutting checks and submitting forms to the IRS to comply with it was more difficult for both research staff and participants. This researcher suggested that the regulation might be amended to allow gift cards up to a certain dollar amount. Such an adjustment might prevent fraud while also simplifying the process of recruiting and compensating study participants, but this researcher noted that there is no good mechanism to communicate such recommendations up the chain to policy makers. While some of these problems were temporary, the researcher observed that regulation, if not structured with an understanding of its effects on those implementing it, “can dramatically impede progress…and that can actually be more harmful [due to] not being able to deliver benefits.”

While this researcher regarded regulation on informed consent as especially strong and well-managed in their field of research, they observed that once AI-based tools migrate from research to the commercial domain, there would be little if any regulation regarding informed consent. The researcher stated, “In the research world, [consent] is very well controlled, but I have concerns about the non-research world, which seems very Wild West to me right now…when I say Wild West, I’m thinking lack of regulation.” This perception was echoed in other interviews, which likewise referred to the “Wild West” and the California Gold Rush of the 1850s to describe the lack of regulation of AI development. This researcher noted that consumers of these technologies may consent to allow their health data to be used without realizing how their identity and security could be tracked and compromised in this process, or “used against [them], whether it’s in…health insurance, or some form of discrimination.”

Another AIFS researcher described similar challenges with the Nagoya Protocol, a regulation that demonstrates a disconnect between the agencies making regulations and the individuals responsible for implementing and complying with them. The Nagoya Protocol is an international agreement on Access and Benefit Sharing (ABS), supplementary to the Convention on Biological Diversity, that entered into force in 2014 and has been ratified by many United Nations (U.N.) member states and by the European Union (E.U.). Although the United States has not signed or ratified this treaty, it has far-reaching implications for U.S. scientists, research, collections of genetic material, and technology transfer into commercial domains (McCluskey et al., 2017). As this researcher explained, “It’s a protocol which basically says that if I take germplasm resources from…another country, from indigenous people…and we make something and we benefit from it, then some of that benefit should go back to where that originally came from. No one has a problem with that. It’s beautiful.”

However, the researcher noted that despite their wholehearted support for the principles of this protocol, challenges in implementation threaten to bring some scientific research to a standstill. This researcher observed,

The problem is dealing with…nations which…don’t really have any way to implement that or to deploy a mechanism to gather that benefit. So, we may want to return the benefit, but we will look at the cost of doing that, because the legal costs might be $20 million…just to have our legal team in the [University of California] figure this out, who are they talking to, how can we do this, and so, then [the legal team] will say, ‘No, we just can’t do that, we can’t work with that nation…it’s not going to work out,’ so then scientific discovery is being held back by that sort of framework.

In these cases, AIFS researchers must navigate the complexities of U.S. federal regulation as it unfolds within the context of national security and foreign and global governance.

State regulations creating time-sensitive opportunities for innovation in agriculture

Similar conundrums arise with individual U.S. state regulations. State regulations in California are incentivizing growers and food producers to look to AI solutions to mitigate costs and maintain compliance for their operations. As will be discussed in the following section, these incentives often lead to lost opportunities. Nonetheless, this policy approach has been particularly common in California.

Food system regulation is strong in California. One AIFS member stated, “California has the highest level of regulations on the food and agriculture industry of any state in the United States. We’re pretty close to the level of Europe [which] has very high standards. The growers have told me, ‘It costs us a lot of time and money, but also…it means California farm products are of the highest quality in the world, because we’re under so much regulation.’”

Despite these benefits of regulation, this AIFS member also noted, “The growers feel incredibly squeezed by all of [these regulations]. I do think it’s an opportunity where technology innovation can help play a mitigating role. All the regulations add up, and what that translates to is cost that eats into profit.” This AIFS member stated that regulatory reporting on issues such as electricity consumption has become a major driver for different agricultural startups. This individual observed, “Many California farmers are in the business of four things: they’re in the business of agriculture, energy, land, and water…So [there are] many farmers adopting [AI] technologies to better get a handle on managing the regulatory burden workload.”

In addition, this AIFS member noted that the costs of inputs such as energy, water, and labor are rising, leaving many growers with little choice but to adapt their methods to reduce costs. The individual stated,

Water is more expensive, especially now that we’re in a drought year, it’s going to get really hard to come by and expensive. PG&E (energy) rates…always go up and up, to where…companies are looking at creating their own micro grids now, so, inputs…are always going up. Labor’s 30 dollars or more an hour in some places for high value crops, yet market prices aren’t increasing. In fact the retailers always push farmers to lower prices, because consumers want cheap food, basically. Unless there’s a differentiated consumer product….That’s where [there’s an] opportunity for AI to identify new novel food products that can command a higher value.

Another AIFS researcher offered a similar perspective, stating, “Lawyers and policymakers are making laws to protect the environment…but then farmers feel like they’re targets of…overregulation.” The researcher acknowledged the need to manage resources effectively, but noted the problems that arise from the gaps in knowledge and operational scale between policymakers and food producers:

We work with different stakeholders. Growers are on one side, then you have policymakers on the other side, and they also have different needs. And they work at different scales. Policymakers want to see things at a larger scale, scaled impacts…and growers [are] looking at their individual farm or field where data needs are different, models that are required are different, and so on….

Policies are mostly made on the watershed or ground water basin scale, but then the implementation to address problems is mostly done on a field scale or farm scale…laws or regulations now require growers to meet certain standards. For example, if they over-discharge nitrates, they can violate the irrigated lands program regulations, for example, and they can be fined for lack of compliance. Or if they are in a groundwater basin that is overdrafted, the state could come and say no more pumping…so that is on a regulatory scale…and that’s…affecting what kind of decisions growers have to make in their operations…

This researcher stated that one goal of their research with AIFS is to “provide data, models, and knowledge…to generate data that regulators could use [to make better policies].” According to this researcher, policymakers and AI developers need, but often lack, an awareness of cultural and practical conditions that impact agriculture at the field, farm, or other site scale. As this researcher stated, “Somebody [may have] knowledge, but they may not understand the cultural settings in which agriculture happens or in which policy is made or companies work….” Lodged in this middle ground between policymakers and partners, AIFS researchers strive to deliver data to regulators and solutions to growers, while building trust with both. But this is not an easy task.

Barriers: challenges impacting adoption of AI food system technologies

While a desire to increase profits may provide an incentive to explore technologies that reduce production costs, barriers to the adoption of AI technologies remain. As noted above, recent regulation aims to intensify the incentive to explore or shift to AI-based technologies in the food system (Hanak et al., 2019). However, the mismatch in timing between regulations that incentivize interest and reliable technologies that deliver results can give growers negative first experiences with AI and, paradoxically, reinforce reluctance rather than encourage adoption. As discussed in detail below, recent years of increased regulation have therefore unwittingly contributed to a dysfunctional cycle of AI technology adoption in the food system (Fig. 1).

As policy-makers raise costs and tighten constraints on inputs and effects, they drive growers and food producers to become more open to exploring AI-based solutions that reduce costs and inputs and help manage an ever-growing regulatory burden. As shown in Fig. 1, these economic incentives open time-sensitive windows of opportunity in which growers and food producers become more interested in and willing to use newer AI-based tools. This is not to say that farmers and food producers were not already interested, or that those who hold back are simply resistant to change. Many farmers have good reasons for not adopting AI-based technologies, and they resent the implication or assumption that if they do not adopt early, it is because they are “not modern,” “laggards,” or unwilling to adopt sustainable methods (Ryan, 2021). In fact, important material issues can form barriers to the adoption of technologies. For instance, AIFS researchers report that some AI-based technologies do not integrate well with other current technologies or regulatory reporting regimes (see also Higgins, 2017).

Traditional cultures, local knowledge, and ‘snake oil’ in current agricultural practices

Researchers state that growers often come from older, traditional cultures and communities and that these individuals usually trust their “gut feelings,” their experience, and historical data. As one researcher observed, “Agriculture is very unique. Almost each farm, even just in the Central Valley…is different. If you’re in the Sacramento region, even the attitudes of the growers [are] different from [those] in the San Joaquin. So you really have to understand the entire ecosystem.” Given the unique nature of each farm and region, long years of experience, hard-earned knowledge, and intuitive methods guide decision-making (Niles & Wagner, 2017). This type of deep familiarity is harder to maintain in large operations, however, where it is difficult to know precisely the input needs of many diverse crops. Some AIFS researchers reported that this uncertainty at times leads growers to apply excess inputs as a form of insurance to ensure that their crops survive, but this practice often results in excess nitrogen in soil and groundwater and in poor soil health over the long term.

Various AI technology companies step in with products that attempt to help growers see and manage their fields with greater precision. The same researcher continued, “There are companies coming up all the time and trying to sell things to growers that don’t work. There are many companies that are trying to get into the agricultural space and they have no clue about agriculture…sometimes it feels like somebody has a hammer and they’re looking for a nail to hit…farmers feel like they’re being sold snake oil.” As another researcher noted, “growers in general are reluctant to use new technological tools…it has to be extremely easy to use and also better than their intuition if you’re going to have any hope that they’re going to use it.”

The long-term impact of past experiences

AIFS researchers report that partner and consumer expectations are shaped by negative past experiences with for-profit vendors. One AIFS researcher stated that the commercial aerial sensing services offered by some companies are unreliable because of improper data calibration and interpretation. According to this researcher, such approaches ultimately yield poor results, but in the absence of a systematic validation process, it may take a few years before the grower realizes that the analytics do not match reality and stops using that company’s product. The grower may then return to conventional agricultural methods they regard as tried and true, and become more reluctant to try similar AI technologies in the future, even when these are developed by academic researchers (Fig. 1).

Researchers strive to demonstrate respect for growers’ views, practices, and dilemmas. They also note that many agricultural practices, though now deeply entrenched and considered “traditional,” are actually relatively new, having arisen since the mid-twentieth century. As one AIFS researcher observes, “there are things that we would consider traditional now because…that’s how they’ve been done for the last hundred years…[but they] are really not ideal. [For instance] a breeding method that’s been in process since industrial agriculture started in the 50s is not [necessarily] there because it’s good. It’s just there because that was all we could do at the time.” Another AIFS member states, “there’s this…nostalgic, rustic depiction of…the breeder out in the field noticing the most important things and…the importance of the breeder’s observational abilities.” Most AIFS researchers expressed the view that it is necessary to work within these cultural and knowledge frameworks rather than attempt to dislodge or alter them. However, the views expressed seem to sit somewhere between definitions of technology as an input or instrument and as an affordance (Akullo et al., 2018). On the one hand, AIFS researchers hold that AI tools must be used in highly specific ways in order to work; on the other hand, they are aware that the tools will be embedded within highly varied cultures and environments.

These technologies must fit within technical, regulatory, and social landscapes as well as natural ones, but they often do not. For instance, as noted above, AI-based technologies are increasingly used to manage the growing regulatory burden, but new tools do not always align with reporting requirements. This disconnect creates practical and financial disincentives for adoption. In addition, some newer technological methods have met with widespread popular dissent. According to one AIFS researcher, efforts to build public trust in methods such as genetically modified organism (GMO) breeding have backfired. This researcher noted, for example, that attempts by scientists to generate public acceptance of GMO technologies by demonstrating “the biological similarities between the effect of a mutation created through some sort of a transformation protocol and the effects of mutation that occur naturally” had the opposite effect. These statements “erode[d] trust [in traditional breeding] rather than gaining trust [in GMO breeding].”

While several AIFS researchers stated that it is imperative to develop plans and methods for communicating effectively with consumers about the technologies used in the food system, both to facilitate trust and to prevent misconceptions from forming, scholars note that communication in itself is not enough. Even supporters of these technologies acknowledge the problems that have arisen from the “virtual monopoly of GM traits in some parts of the world” through the extension of intellectual property rights via patent law. As Godfray et al. state, “Finding ways to incentivize wide access and sustainability, while encouraging a competitive and innovative private sector to make best use of developing technology, is a major governance challenge” (Godfray et al., 2010).

Bridges: building relationships of trust to facilitate AI food system technology research

In the absence of clear guidelines and adequate regulations that specify who is responsible for developing trustworthy or “responsible” AI technologies, or how they will be developed, AIFS researchers work within the current cultural and logistical landscape by personally building trust with stakeholders. Researchers report that they navigate this complex landscape by building and carefully maintaining relationships of trust that are long-term and mutual. They also state that building these relationships depends on producing high-quality, reliable research that is compatible with existing culture, practices, and infrastructure. As noted in the Literature section, the trust AIFS researchers aim to build is specific and strategic (Gardezi & Stock, 2021). AIFS researchers assume that their personal integrity and their delivery of reliable technologies form the basis for securing partners and data for their research.

When asked whether they believed that AI food system researchers deserved the trust they solicited from partners, all AIFS researchers answered yes, but most provided caveats. They believed academic researchers deserved trust provided they followed proper protocols and validated their data. As one researcher stated,

I try to earn [trust]. When I earn it, I am very careful not to lose it. For example, I tell my students and postdocs to treat relationships with our stakeholders—whether growers, commodity boards, or state agency officials—very delicately, because, these are relationships that can be easily lost, and they are hard to get.

When opportunities arise to collaborate with partners, AIFS researchers stated that they do all they can to meet partner expectations on four critical fronts: (1) presenting AI technologies as an aid, not just a displacement of human labor and decision-making; (2) protecting the privacy of the data with which they are entrusted; (3) ensuring the reliability and accuracy of the algorithms they use the data to produce; and (4) building long-term relationships of mutual trust.

AI technologies as an aid, not a displacement

While working within current cultural, environmental, and economic constraints, AIFS researchers consistently expressed their belief that conventional, human-based practices would be improved by AI tools that support growers in making decisions about their crops. Accordingly, they present AI technologies to potential partners as tools that will assist rather than displace human growers, breeders, laborers, and food producers. By emphasizing that AI technologies can, in many cases, assist human agents in the decision-making process rather than make decisions for them, one researcher hopes that these technologies may be more appealing to potential partners. This researcher compared the use of AI technologies to “bringing a new third base person” onto a baseball team: “the AI methods offered cannot substitute the whole team or the whole game, but AI methods can improve the team’s ability to perform a specific task.” This response reflects an understanding of technology as an “affordance” that becomes part of a repertoire growers may draw on to achieve a variety of goals (Akullo et al., 2018). Such careful framing has the added benefit of showing growers and food producers options that allow them to maintain control of their operations, so that the technology aligns with their interests. This approach also manages expectations, so that farmers and food producers understand the levels of risk and range of benefits associated with these technologies.

That said, much of what makes AI “AI” is that it learns and makes decisions in ways that humans cannot fully understand, control, or predict. In fact, AI’s ability to automate activities through learning and decision-making is both an inherent advantage and a weakness of AI-based technologies. It may therefore be disingenuous to suggest to farmers and food producers that they will be able to maintain the same level of decision-making power to which they are accustomed. Interviewees did not have time to discuss in detail how they frame these risks and tradeoffs for their partners, but such disclosures may be important to add to ethical and regulatory frameworks. Doing so could offset the temptation to mischaracterize AI risks and capabilities in order to “sell” AI to those who may not fully understand the risks. Such claims pose an ethical problem because they undermine meaningful and transformative consent, as discussed above (Jones, 2018; Pechlaner, 2010; Miller & Wertheimer, 2009).

Ensuring data privacy

AIFS researchers make strong efforts to ensure data security. These efforts often involve formal protection of proprietary information. For instance, one researcher stated that their team routinely includes data management plans in their agreements with partners such that there is “very little left to speculation in terms of how that data is stored, when it will be deleted, [and] in what form it will be communicated.” Another researcher stated that they must “carefully vet who has access” to confidential data, in some cases using different technologies to ensure security. Still another stated that “companies are very protective of their data” and that their team is required “to sign an agreement every time” regarding data security. Protection of data also frequently includes subtle, informal agreements, such as avoiding stated or implied uses of private or even public data that partners fear might be adverse to their own or their consumers’ or constituents’ profits and interests.

At times, partners’ desire for data privacy stems from concerns that they could be found out of compliance with regulations or become targets of social justice activism. As one researcher stated, “a big barrier in the past, is…trust….Growers are reluctant to share [their data] because they may run into other problems [such as] environmental justice…issues, so unless they trust you they won’t share those data with you.” Another researcher stated,

We obviously promise and assure confidentiality and privacy. It can be a little more tricky when we study, for example, antimicrobial use in livestock, because we are asking questions where answers could get the participants in trouble….There has to be a high level of trust. They trust us, they trust with whom we are connected, and how we are going to use the data and, depending on their level of trust, they will share.

Another researcher stated that partners fear damage to their brands, for example, if data potentially detrimental to owners were leaked to the public. Partners also fear losing competitive advantage if their data were released to competitors. As these interviews reveal, the centrality of data to AI development can require AIFS researchers to commit to not exposing privileged information as part of their effort to help growers and food producers achieve more cost-effective and sustainable outcomes.

It appears that for-profit companies are also becoming more concerned with data privacy and recently have begun tightening the restrictions they place on their users’ data. As one researcher stated, “user privacy or data privacy laws are rapidly changing…this is very recent, [within the last] year or so, the data privacy issue is getting more and more important. Previously, certain big companies would even sell their user data, but now you…can’t sell your data and you can’t use it for your own product design….I definitely see the companies’ policies getting stricter and stricter every year in terms of data privacy…there are very strict rules in certain [large] companies.”

Despite this tightening of restrictions, this researcher also reported that there are “gray areas” regarding how these restrictions are implemented and what sorts of data or data management might create privacy breaches. “In the medical field, you have HIPAA, but on the consumer side, you don’t really have that, so it’s really hard to define what is sensitive information and what is not sensitive information. So the rule is strictly you can’t use sensitive information, but [how this term is defined is] definitely a gray area.”

Ensuring accuracy and reliability