Abstract

Intelligent robots for fruit harvesting have been actively developed over the past decades to bridge the increasing gap between feeding a rapidly growing population and limited labour resources. Despite significant advancements in this field, widespread use of harvesting robots in orchards is yet to be seen. To identify the challenges and formulate future research and development directions, this work reviews the state-of-the-art of intelligent fruit harvesting robots by comparing their system architectures, visual perception approaches, fruit detachment methods and system performances. The potential reasons behind the inadequate performance of existing harvesting robots are analysed and a novel map of challenges and potential research directions is created, considering both environmental factors and user requirements.

Introduction

Fruit growers are facing an increasingly severe labour shortage due to the labour workforce’s diminishing interest in agriculture (Luo & Escalante, 2017). The problem is exacerbated by recent international travel restrictions in pandemic conditions, which have dramatically limited agricultural productivity due to the unavailability of migrant workers. As a result, tons of fresh produce were left unharvested and rotting on fields where farms had long relied on seasonal overseas workers (Zahniser et al., 2018; Associated Press 2020; Ieg, 2020; Chandler, 2020).

Harvesting robots can counter labour shortages and provide an economically viable solution to rising labour costs. The robots can also be a tool for applying precision agriculture. Specifically, harvesting robots make the harvesting process controllable, traceable, and customisable. They can also gather, process and analyse temporal, spatial, and individual data on the target fruits (ripening time, harvest time, fruit position and pose, fruit ripeness and defects, etc.) as well as environmental information (branch distribution, leaf occlusion, disease infection), and combine it with other information (plant habit, plant traits, etc.) to support decision-makers.

The technology of robotic harvesting has been actively developed over the past three decades (Bac et al., 2014b). In terms of technology status, the robotic systems analysed can be categorised into two groups: fully integrated systems, and subsystems used in harvesting, such as vision (Tang et al., 2020), gripper (Zhang et al., 2020a), and control (Zhao et al., 2016a). Since each of these subsystems is a broad research area in itself, this paper focuses on reviewing integrated systems.

One important aspect of harvesting robots that has not received much attention from the research community until recently is the role of humans in the overall process. Although robots are theoretically capable of performing harvesting tasks autonomously, widespread use of harvesting robots in orchards is yet to be seen. Apart from the technology bottlenecks to be addressed, one of the reasons preventing widespread adoption is that humans remain an indispensable element in the process. This requires researchers and robot designers to consider the human element from the beginning of the design process. This issue was discussed in some detail by Rose et al. (2021), who advocate for the responsible development of agricultural robots. The adoption of robots will inevitably also raise social, legal, and ethical issues, but suitable tools for assessing these issues remain an open question for the community.

Existing review papers on integrated systems have collected and compared harvesting robots from one or several specific perspectives. Li et al. (2011) examined mechanical harvesting systems as well as robotic harvesting applications before 2011 and discussed the machine vision approaches used in harvesting robots. Bac et al. (2014b) characterised the crop environment, summarised quantitative performance indicators for crop harvesting and conducted a thorough comparison of existing robots before 2014. In the last 6 years, Bachche (2015) emphasised analysing the design strategies behind various applications, Zhao et al. (2016a) focused on vision control techniques and their potential applications in fruit or vegetable harvesting robots, Shamshiri et al. (2018) and Fountas et al. (2020) both collected and presented around twenty recent robotic harvesting applications as one section of their review papers on agricultural robots, and Jia et al. (2020) and Zhang (2020) reviewed apple harvesting robots and harvest assist platforms.

There are two major gaps that this work attempts to fill regarding a state-of-the-art review of harvesting robots. Firstly, to the best of the authors’ knowledge, none of the existing reviews in the past six years has compared robot performance in recent applications against three key performance indicators that are critical for potential commercial viability: harvest success rate, cycle time, and damage rate. Only one review paper (Bac et al., 2014b) has covered performance indicators among 50 applications, but there was a lack of performance data to provide a conclusive comparison between harvesting robots at the time.

Secondly, there is a lack of systematic investigation on the underlying reasons for the inadequate performance of existing harvesting robots, relative to progress towards commercialisation. This paper will summarise all the challenges reported in recent literature and analyse the origin of these challenges from the point of view of both environment and requirements of fruit growers. With this investigation, a connected map of the challenges and corresponding research topics that link the environmental challenges of harvesting with customer requirements is identified for the first time.

The objective of this work is to present a systematic review of the status of the fruit harvesting technology and to propose a standardised framework to quantitatively compare the state of the art robots in fruit harvesting and to gauge their commercial potential. The framework of this research involves the following steps: (i) development of the review protocol regarding the inclusion and exclusion criteria; (ii) exploration and selection of related data; (iii) classification of selected data; (iv) analysis and interpretation of the selected data; and (v) reporting findings and results.

To investigate the similarities and differences of fruit harvesting robots among projects and fruit varieties, the data in the literature were selected and analysed against the following criteria:

(1) The included data and studies were collected from multidisciplinary databases (such as Scopus, IEEE Xplore, Wiley, ScienceDirect, and SpringerLink) and open online sources (such as open-access journals, commercial websites, conference proceedings, government documents, and doctoral theses).

(2) This review only included data and references related to fruit farming, specifically applications closely related to integrated fruit harvesting robotic systems that underwent field tests and operations within the last 20 years (up to and including 2020). Fruit harvesting applications published before the year 2000 are excluded. To make the robot performance data comparable, integrated prototypes that have not been tested in the field (including open field and greenhouse) are included in the discussion part but excluded from the system analysis section. Studies focusing only on subsystems (vision, control, manipulator, path planning, and end-effector) of the selected harvesting robots are also included to give the reader a systematic review of state-of-the-art robotic harvesting technology.

(3) The included harvesting robots were then reviewed in terms of fruit detection methods (vision sensors and algorithms), fruit detachment methods (end-effector design and flexibility) and obstacle handling approaches (manipulator and path planning). The performance of the included robots was then quantitatively compared on the three most used indicators: harvest success rate, cycle time/overall speed and damage rate.

(4) The challenges were extracted directly from the literature involved in this work to present the robot developers’ original records of the issues encountered, and the discussions on the potential research directions were based on the systematic analysis of the challenges.
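To make the three indicators concrete, the following minimal Python sketch computes them from per-attempt trial records. The record layout (`PickAttempt`) and the exact definitions are assumptions for illustration only; published studies define these indicators in differing ways, for example measuring damage against all attempts rather than against harvested fruit.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PickAttempt:
    """One harvesting attempt in a field trial (hypothetical record layout)."""
    success: bool         # fruit was detached and collected
    damaged: bool         # the harvested fruit showed damage
    cycle_time_s: float   # time spent on this attempt, in seconds


def harvest_success_rate(attempts: List[PickAttempt]) -> float:
    """Fraction of attempts that ended with a harvested fruit."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    return sum(a.success for a in attempts) / len(attempts)


def damage_rate(attempts: List[PickAttempt]) -> Optional[float]:
    """Fraction of harvested fruit that was damaged (assumed definition).

    Returns None when nothing was harvested, so that a missing value is
    not confused with a damage rate of zero.
    """
    harvested = [a for a in attempts if a.success]
    if not harvested:
        return None
    return sum(a.damaged for a in harvested) / len(harvested)


def mean_cycle_time_s(attempts: List[PickAttempt]) -> float:
    """Average time per attempt, counting failed attempts as well."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    return sum(a.cycle_time_s for a in attempts) / len(attempts)


# Made-up trial data, for illustration only.
trial = [
    PickAttempt(success=True, damaged=False, cycle_time_s=6.0),
    PickAttempt(success=True, damaged=True, cycle_time_s=7.0),
    PickAttempt(success=False, damaged=False, cycle_time_s=9.0),
    PickAttempt(success=True, damaged=False, cycle_time_s=6.0),
]
print(harvest_success_rate(trial), damage_rate(trial), mean_cycle_time_s(trial))
```

Returning `None` for an undefined damage rate mirrors the situation in the literature, where many systems simply do not report this indicator.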

This review addresses the following questions:

- Which fruit varieties have been included in the investigation of robotic harvesting?
- What are the quantitative indicators to assess the performance of harvesting robots?
- What are the potential issues of applying these indicators?
- Under what environments have the performance indicators been recorded?
- What is the overall performance of robots developed during the past 20 years?
- What are the challenges encountered in the robotic harvesting field?
- What measures have been taken to address these challenges?
- What are the reasons behind the fruit damage?
- What is the relation between the environmental inputs and the customer requirements in robotic fruit harvesting?
- What are the potential research directions?

This paper is organised as follows. The Background section defines harvesting categories and production environments. The Recent developments section analyses 47 existing robotic harvesting applications and compares them based on system architecture, fruit detection approaches, fruit detachment methods and obstacle handling approaches. The System Analysis section presents a systematic analysis of performance, system performance, and encountered challenges. The Potential Research Directions section clarifies and categorises future research directions based on the analysis conducted in the previous section. Lastly, the Conclusion section summarises the authors’ analysis.

Background

Harvesting methods

Two types of harvesting approaches have been implemented by agricultural practitioners to reduce orchard overheads on labour expenses: selective harvesting and bulk harvesting.

Selective harvesting relies on robotic manipulators fitted with end-effectors for grasping. These are typically mounted on a mobile platform and combined with machine vision to selectively pick individual ripe fruit (Bac et al., 2014b). Since robotic systems may potentially combine the efficiency of machines with the selectivity of humans on a long-term basis (Shewfelt et al., 2014), it is believed that the robotic harvesting approach has the potential to completely replace human pickers (Sanders, 2005). It has therefore attracted extensive attention from both academia and industry and has emerged as the preferred method of robotic harvesting amongst fruit growers. The ongoing rapid development of artificial intelligence (AI) and robotics technologies is paving the last mile of commercial robotic harvesting, hence the focus of this review is on robotic systems utilising selective harvesting.

The other method is bulk harvesting, which is based on the principle of applying vibration to the fruit tree to forcibly separate the fruit (Mehta et al., 2016). It has been adopted by growers of various fruits such as apples, oranges, and cherries (De Kleine & Karkee, 2015; Torregrosa et al., 2009; Zhou et al., 2016). Although bulk harvesting systems are highly efficient (Sola-Guirado et al., 2020), they have significant disadvantages. Growers have raised concerns over the excessive damage caused by the machines to both canopies and fruit (Moseley et al., 2012). Since fruit damage affects market acceptance, research on reducing bulk harvesting damage remains an active field (Pu et al., 2018; Wang et al., 2019). Another disadvantage of bulk harvesting is that the quality of picked fruit can vary considerably, as less mature fruits are inevitably harvested together with over-mature fruits (Sanders, 2005). Co-ordinating rates of maturity of fruit across an entire orchard is not a trivial task, and under a bulk harvesting scheme, the timing of harvest can depend on minimising losses due to harvesting immature and over-mature fruits.

Production environment and cultivar systems

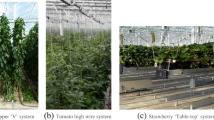

Fruit plants can be grouped into trees, vines, and bushes or shrubs based on their growth habit. Trees and bushes can stand up on their own, while vines require physical support to attach themselves. Fruits that grow on vines and bushes tend to have pliable herbaceous stems which can be bent without breaking, like berries, kiwi, tomatoes, and grapes, while fruits that grow on trees normally have hard woody stems that will break when bending, such as apples, pears, citrus, peaches, plums, and figs (FAO, 1976). It is important to note that some of the annual vegetables including tomatoes and peppers are very different from other annual vegetables and have been botanically regarded as fruits. In production environments, two major groups are widely practised: open fields (orchards), and greenhouses. Typically, fruit trees are grown in orchards, fruit vines are planted in open fields and greenhouses, and fruit bushes and shrubs can be found in both open fields and greenhouses. Fruits grown in greenhouses are more consistent in colour, shape, position, and size, as the climate conditions can be controlled precisely (Bac et al., 2014b).

Cultivation systems also play a significant role in affecting environmental complexity. Elements such as scaffolds and trellises introduce additional obstacles to harvesting tasks, but the elevation or support from these structures provides fruit with better lighting and ventilation (Van Dam et al., 2005) as well as consistent distribution. The accessibility and visibility of fruit can also be improved by practising proper cultivation systems (Bac et al., 2014b). In this work, production environments with elevated or supported cultivation systems are labelled modified environments.

Recent developments

Schertz and Brown (1968) proposed the idea of applying machines to perform citrus harvesting as early as the 1960s, and research and development on robotic harvesting followed gradually (D’Esnon, 1985; Kondo & Ting, 1998). However, it is only in the last two decades that fruit harvesting robotic applications have fully utilised the advances in machine vision, artificial intelligence, and robotics technology. This review covers 47 of the most recent or relevant applications on various fruit varieties that meet the criteria for analysis and comparison through the proposed standardised framework. The number of robotic harvesting systems by fruit variety is presented in Fig. 1. Note that sweet peppers were included in this comparison, as they can be regarded as fruit from a botanical perspective (Pennington & Fisher, 2009) and their harvesting process is closer to that of a fruit than that of a vegetable. Images of different applications are shown in Fig. 2, where different robot systems targeting the same fruits are arranged together and labelled, respectively.

Robotic harvesting applications. “sb”, “tm”, “ap”, “sp”, “cc”, “kw”, “ct”, “rb”, “lc”, “mg”, “pl” represent strawberry, tomato, apple, sweet pepper, cucumber, kiwifruit, citrus, raspberry, litchi, mango, and plum, respectively. sb-1 Hayashi, 2010, sb-2 Feng, 2012, sb-3 Shibuya Seiki, 2014, sb-4 Yamamoto, 2014, sb-5 DogTooth 2018, sb-6 Agrobot-2018, sb-7 Xiong 2019, sb-8 Traptic-2019, sb-9 Harvest CROO-2019, sb-10 Octinion-2019, sb-11 Advanced Farm-2019, sb-12 Tortuga-2020, tm-1 Kondo, 2010, tm-2 Yaguchi, 2016, tm-3 Zhao, 2016, tm-4 Wang, 2017, tm-5 Feng, 2018, tm-6 Panasonic-2018, tm-7 MetoMotion-2019, tm-8 Botian-2019, tm-9 ROOT AI-2019, ap-1 Baeten, 2008, ap-2 Zhao, 2011, ap-3 Nguyen, 2013, ap-4 Siwal, 2017, ap-5 Abundant Robotics-2019, ap-6 FFRobotics-2020, ap-7 Ripe Robotics-2020, ap-8 Kang, 2020, sp-1 Bac, 2017, sp-2 Lehnert, 2016, sp-3 SWEEPER-2018, kw-1 Scarfe, 2012, kw-2 Williams, 2019, kw-3 Mu, 2020, cc-1 Van Henten, 2002, cc-2 Ji, 2011, cc-3 IPK, 2018, ct-1 Muscato, 2005, ct-2 ENERGID, 2012, rb Fieldwork Robotics-2020, lc Xiong, 2018, pl Brown, 2020, mg Walsh, 2019

Finally, Table 1 (see Appendix) presents detailed information about each application. To form a clear view of the major issues and challenges encountered in each project, the potential limitation of each system is summarised in the Issues to be improved column, as extracted from the Discussion or Conclusion sections in the publications reviewed, or the accessible comments on commercial websites.

System architecture

A harvesting robot is usually an integrated multi-disciplinary system that incorporates advanced features and functionalities from multiple fields, including sensing, perception, environment mapping and navigation, motion planning, and robotic manipulation. Thus, existing fruit harvesting robots generally consist of multiple sub-systems:

- a mobile base to carry the robot around the target,
- a machine vision system to detect and perceive the environment,
- a control system to achieve overall control of the robot,
- a collector for storing the harvested fruits,
- one or several manipulators to approach the fruit while avoiding the obstacles, and
- one or several end-effectors to detach the target fruit from the plant.

Robots in Bac et al. (2014a, 2017), Lehnert et al. (2016, 2017, 2020), Arad et al. (2020) have vertical lifting devices to extend the robot’s workspace, while Traptic (2019) and FFRobotics (2019) have conveyors to prevent fruit damage in the process of collecting.

Fruit detection methods, visual sensors and algorithms

A functional robotic vision camera serves as the hardware interface for communication between the robotic harvesting system and the environment. The primary function of the vision system is to perform fruit detection and localisation to achieve autonomous harvesting.

Sensors

To detect and localise the fruit, the images of the environment which record the information of targets and backgrounds are recorded and analysed, utilising either two-dimensional (2D) or three-dimensional (3D) imaging sensors.

2D imaging sensors record the two-dimensional information of the scene, typically using red, green, and blue (RGB) cameras, infrared (IR) sensors, spectral sensors, or a combination of these (Li et al., 2014). RGB cameras detect the target fruits against the background by extracting and analysing fruit properties such as colour, geometric shape, and texture, either with traditional machine learning methods or with deep learning, which is known to be robust, accurate and efficient. However, RGB cameras are highly sensitive to illumination (Gongal et al., 2015), and detecting target fruits that have a similar colour to the background is also challenging. Spectral sensors, which provide spectral and spatial information, can be utilised to perform fruit detection based on differences in reflectance at different wavelengths. Spectral image processing can potentially address the issue caused by the less distinct colour signatures of target fruits and background, but the time-consuming data collection and image processing limit its practical application (Li et al., 2014). Thermal sensors record the temperature information of the objects and canopies, which can benefit the detection and segmentation of fruits from a less distinct background, because fruits absorb and emit more heat than the canopies. However, their limitations are apparent when detecting fruits in shadowed areas deep within the tree canopy. While 2D imaging sensors are low cost and easy to access, on their own they normally cannot provide the accurate 3D spatial location of the target fruits that robots using the selective harvesting method require.

Several types of 3D visual sensors exist on the market, such as stereo cameras, Light Detection and Ranging (LiDAR) sensors and RGB-D (RGB-depth) cameras (Chen et al., 2021; Jayakumari et al., 2021). The practical applications of these sensors are shown in Fig. 3, with stereo cameras used in the largest number of applications.

Stereo cameras take images using two or more RGB cameras separated by a fixed distance. The images are then fused, with depth calculated through triangulation (Gennery, 1979). While effective, this sensor is slow due to the image fusion process and requires frequent calibration, which limits its real-time practicality. LiDAR is a remote range sensor that captures spatial information using pulsed laser reflections to generate a two- or three-dimensional point cloud. These sensors are robust and generally perform well under strong natural light, but they cannot provide the colour information that is critical for vision-based fruit detection. Although LiDAR can be combined with RGB cameras, data fusion can be slow and the high price of millimetre-accurate LiDARs limits their wider application (Wolcott & Eustice, 2017). RGB-D cameras are functionally identical to 2D RGB cameras, but can also process depth images simultaneously, offering a real-time stream of RGB images fused with depth information. These cameras use either time-of-flight (ToF) or structured light methods to calculate depth. ToF sensors utilise an infrared light emitter, which emits pulses of light to measure the distance to the target (Gongal et al., 2015). In the structured light technique, infrared light is emitted in a pattern, and depth information can be calculated based on the level of distortion of this light pattern when it returns to the receiver (Gongal et al., 2015).
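The two depth principles mentioned above, stereo triangulation and time-of-flight, reduce to short formulas in the idealised case. The sketch below assumes a rectified pinhole stereo pair (depth equals focal length times baseline divided by disparity) and a single clean light pulse for ToF. The function names and numeric values are illustrative assumptions and do not come from any of the reviewed systems.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point from a rectified stereo pair: Z = f * B / d.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the point between both images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance from the round-trip time of a light pulse: d = c * t / 2."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Illustrative values: a 700 px focal length, a 10 cm baseline and a
# 35 px disparity give a depth of about 2 m.
print(stereo_depth_m(700.0, 0.10, 35.0))
# A 10 ns round trip corresponds to roughly 1.5 m.
print(tof_distance_m(10e-9))
```

Because depth is inversely proportional to disparity, a fixed disparity error of one pixel costs far more accuracy at long range than at short range, which is one reason calibration quality matters so much for stereo systems.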

Compared with stereo cameras, RGB-D cameras are far superior in the localisation accuracy, robustness, and computational efficiency, and are much cheaper than LiDAR sensors for the same accuracy, albeit with a smaller viewing range. These advantages of the RGB-D camera over the others make it more suitable for practical applications and it has been an essential component of vision systems in agriculture applications (Fu et al., 2020; Tu et al. 2020). However, the RGB-D camera will experience performance degradation at a close scanning distance or under extreme weather conditions like strong sunlight. This is because of the lower quality of depth images generated under the above-mentioned scenarios (Wang et al., 2022; Zheng et al., 2021).

All the visual sensors that were used in the reviewed projects have been listed in Fig. 4 with their publication year. As observed, different 3D detection sensors can be combined for better accuracy such as Stereo and ToF. Also, RGB-D cameras (ToF and Structured light) have become increasingly popular for practical applications.

Traditional machine learning methods

By working with the sensors mentioned in the previous section, traditional vision algorithms can extract and encode the features such as colour, geometric shape, and texture from the targets, then the machine-learning-based classifier is applied to classify and recognise objects.

In a single feature-based fruit recognition system, colour remains one of the most explored features due to its proven accuracy and robustness in controlled environments (Tang et al., 2020). Arefi et al. (2011) processed colour information from the RGB, Hue-Saturation-Intensity (HSI), and Luminance-In-phase-Quadrature (YIQ) spaces to detect ripe tomatoes in greenhouse environments and reported a tomato detection accuracy of 96.36%. However, most colour-based methods are suited to a specific fruit, with the colour space carefully selected to distinguish it from the background. Their performance is limited when processing fruits with colours similar to the leaves or under varying light conditions. Because they rely on RGB cameras, these methods are only feasible under regulated lighting conditions, where illumination has less influence on the observed colours. To circumvent this issue, other features in the image, such as texture, light intensity, and edges, can be used to complement the colour information. Kang et al. (2020a, 2020b) proposed an algorithm that applies hierarchical multi-scale feature extraction of colour and shape features, followed by K-means to classify the extracted regions of interest. Such approaches are called multiple feature-based detection systems, in which multiple features are fused into a single data structure for object detection. Multiple feature-based methods can improve detection accuracy and robustness when handling uneven illumination conditions, partially occluded targets, and fruits whose colour is less distinct from the background (Tang et al., 2020). Although multiple feature-based fruit recognition methods improve performance, there is still a gap in real-time detection performance, in terms of computational efficiency, accuracy, and robustness to natural illumination, that needs to be bridged to satisfy the requirements for autonomous fruit harvesting. Deep learning-based methods were developed as a proposed solution.
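As an illustration of single feature-based detection, the sketch below labels pixels as "ripe red" by thresholding in HSV space using Python's standard `colorsys` module. It is a toy example under stated assumptions: the hue, saturation and value thresholds are arbitrary illustrative choices, and the method is not the one used by Arefi et al. (2011) or any other cited work.

```python
import colorsys
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)

# Illustrative thresholds (assumptions, not taken from the literature).
MAX_HUE_DISTANCE_FROM_RED = 0.05   # hue is in [0, 1); red sits at 0 and wraps
MIN_SATURATION = 0.5
MIN_VALUE = 0.3


def is_ripe_red(pixel: Pixel) -> bool:
    """Return True if the pixel falls inside the assumed 'ripe red' range."""
    r, g, b = (channel / 255.0 for channel in pixel)
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    hue_distance = min(hue, 1.0 - hue)  # red wraps around the hue circle
    return (hue_distance <= MAX_HUE_DISTANCE_FROM_RED
            and saturation >= MIN_SATURATION
            and value >= MIN_VALUE)


def ripe_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Per-pixel boolean mask for an image stored as rows of RGB tuples."""
    return [[is_ripe_red(pixel) for pixel in row] for row in image]


# A tiny synthetic 2 x 2 "image": red fruit, green leaf, grey soil, dark red.
image = [
    [(200, 30, 30), (40, 160, 40)],
    [(120, 110, 100), (60, 5, 5)],
]
print(ripe_mask(image))
```

The dark red pixel in the example is rejected only because of the value threshold, which shows how a shadowed fruit can be missed by a purely colour-based rule and why such methods degrade under uneven illumination.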

Deep learning methods

Deep learning methods based on artificial neural networks are widely explored. With multi-layer perceptrons, deep learning methods can form more high-level attribute features (Tang et al., 2020). Both low-level and high-level features can be analysed and utilised for final target detection. Among the different types of deep learning, a convolutional neural network (CNN) is a supervised deep learning method that uses convolution and back-propagation to extract the features of the targets, which greatly improves the accuracy and generalisation of the recognition algorithm (Koirala et al., 2019).

Depth images acquired by an RGB-D camera can be used for fruit detection. Gene-Mola et al. (2019) utilised depth images taken with a Kinect v2 to detect apples using Faster R-CNN with Visual Geometry Group (VGG) 16, which achieved an average precision of only 0.613. This is because depth images are more sensitive to ambient conditions than RGB images. To overcome the degradation of the depth image, Zheng et al. (2021) proposed a key point-based method for stem retention and stem length control during fruit harvesting. Kang et al. (2020a, 2020b) applied a redesigned one-stage detection network for RGB images, based on YOLO-V3, to perform object detection in apple orchards, and achieved an F1-score of 0.83. Liu et al. (2019) used raw RGB images taken with a Kinect v2 as the input to a two-stage detection network, Faster R-CNN with VGG16, to detect kiwifruit, and achieved an F1-score of 0.884. Fruit detection with RGB images can be affected by variation in ambient lighting, level of maturity, and uncertain background features. As an alternative, researchers utilised infrared images taken with a Kinect v2 to detect kiwifruit with Faster R-CNN with VGG16 and achieved an average precision of 0.892. Researchers have also been working on multi-modality sensors and multiple image types to detect fruit in complex orchard environments. RGB and infrared images were combined to detect sweet pepper through Kinect v2 and achieved an improvement of 4.1% in F1-score compared with a single-image detection algorithm (Sa et al., 2016). RGB and depth images were combined to detect kiwifruit, and a 3% higher detection rate was recorded. Although deep learning methods can be applied to various types of raw data, such as depth images, RGB images, infrared images, or combinations of these, and can achieve high detection accuracy, training the algorithm takes a long time and requires labelling a large number of raw images.
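The F1-scores quoted above summarise how well predicted bounding boxes match labelled fruit. The following sketch shows one common way to obtain precision, recall and F1 for a single image: greedy matching of predictions to ground-truth boxes at an intersection-over-union (IoU) threshold. The 0.5 threshold and the greedy matching are assumptions, since the evaluation protocols of the cited studies are not described here. Average precision is a different metric, based on the whole precision-recall curve, and is not computed by this sketch.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_scores(predictions: List[Box], ground_truth: List[Box],
                     iou_threshold: float = 0.5) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) using greedy one-to-one matching."""
    unmatched = list(ground_truth)
    true_positives = 0
    for prediction in predictions:
        # Pick the still-unmatched label that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(prediction, gt), default=None)
        if best is not None and iou(prediction, best) >= iou_threshold:
            unmatched.remove(best)
            true_positives += 1
    false_positives = len(predictions) - true_positives
    false_negatives = len(unmatched)
    detected = true_positives + false_positives
    labelled = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / labelled if labelled else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Made-up boxes: one good detection, one false alarm, one missed fruit.
labels = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(detection_scores(detections, labels))
```

Since F1 depends on the chosen IoU threshold and confidence cut-off, scores from different papers are only comparable when those settings match.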

Fruit detachment methods and end-effector designs

Fruit detaching methods have a direct effect on fruit damage rate, harvest success rate, and cycle time. Various detaching approaches have been demonstrated in all applications analysed in this paper, but to get a clear view of the status of robotic fruit handling techniques in the harvesting process, each method of fruit detaching and pericarp handling should be analysed in detail.

Many fruit detaching methods rely on detaching the stem from the branch by exerting external force directly or indirectly on the stem, and can be categorised into five groups based on force application: stem cut, stem pull, fruit twist, fruit pull, and vacuum. Stem cut and stem pull methods act on the stem while holding it, fruit twist and fruit pull apply their respective actions to the fruit, and vacuum methods create a suction force to extract the fruit without direct contact with either the stem or the fruit. Figure 5a shows that stem cut and fruit twist are the two major detaching methods, with corresponding average harvest success rates presented in Fig. 5b, where applications that applied fruit twisting and stem cut recorded average harvest success rates of 75% and 65%, respectively. Among the four systems that adopted stem pull as the detachment method, only two systems reported a 90% average harvest success rate. However, because the data sample is small, it is premature to claim that any detachment method is superior to another, especially given the sparsity of the fruit damage data currently provided in the literature (only 13 of the 47 systems had their damage rate specified in their works).
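The caution about small samples can be made explicit when aggregating results by detachment method. The sketch below averages success rates per method while reporting how many systems actually contributed a value. The input records are made-up placeholders, not data from Table 1 or Fig. 5.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Optional, Tuple

# (detachment method, reported success rate or None if not reported)
Record = Tuple[str, Optional[float]]


def average_success_by_method(
    systems: Iterable[Record],
) -> Dict[str, Tuple[Optional[float], int, int]]:
    """Map each method to (mean success rate, systems reporting, systems total)."""
    reported = defaultdict(list)
    totals = defaultdict(int)
    for method, rate in systems:
        totals[method] += 1
        if rate is not None:
            reported[method].append(rate)
    return {
        method: (
            mean(reported[method]) if reported[method] else None,
            len(reported[method]),
            totals[method],
        )
        for method in totals
    }


# Placeholder records for illustration only.
example_systems = [
    ("stem pull", 0.90),
    ("stem pull", None),
    ("fruit twist", 0.70),
    ("fruit twist", 0.80),
]
for method, (avg, n_reported, n_total) in average_success_by_method(
        example_systems).items():
    print(f"{method}: mean={avg}, reported by {n_reported} of {n_total}")
```

Reporting the number of contributing systems next to each mean makes it immediately visible when an apparently strong average rests on only one or two data points.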

Fruit handling methods were also analysed, with 27 of the 47 applications applying various forms of external force on the fruit. These are shown in Fig. 5c, where external forces include clamping, grasping, suctioning or a combination of suction and grasping. As observed, grasping is the preferred method for pericarp contact in fruit handling. There are 20 systems that were published with no pericarp contact during the entire harvesting process. Contactless handling is advantageous as it minimises fruit damage rate and is mostly used in harvesting fruits with high fragility such as strawberries and tomatoes. Figure 6 shows a detailed breakdown of detachment methods per fruit and vice versa. Observation indicates that fruits with relatively fragile skin or pericarp are more likely to be detached via stem cutting, while fruit twisting can be applied on both fragile fruits like strawberries and relatively tough fruits like apples.

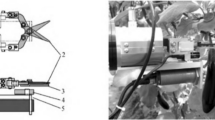

The above fruit detaching methods and handling methods were achieved by various end-effector designs with integrated actuators implementing different control strategies. Three actuator types are most used among fruit harvesting grippers: vacuum (Baeten et al., 2008, Abundant Robotics, 2019), pneumatic (Zhang et al., 2018; Brown et al. 2020), and electric (Xiong et al., 2019a, 2019b). There are also grippers applying multiple actuators (Chiu et al., 2013) to combine the advantages of different actuation methods (Zhang et al., 2020a).

In terms of sensors and control strategies applied to the grippers, all the grippers integrated with a robot system rely on one or more visual sensors to achieve position control. Beyond that, most of the 47 fruit harvesting end-effectors use a sensorless design: they rely on feedforward control via PWM signals to trigger planned motions of either motors or solenoid valves. Beyond the 47 selected robot systems, researchers have adopted proximity sensors to guide the gripper motion (Patel et al., 2011), and a growing number of designs use tactile sensors to implement interactive grasping (Canata et al. 2005; Becedas et al., 2011; Lambercy et al., 2015). These innovations, which are not yet integrated into a harvesting system, may demonstrate great potential if their performance can be validated on an integrated harvesting system via research collaboration.

In order to secure a good harvest success rate while minimising the potential fruit damage, the end effector has to be able to apply proper force to the fruit and adapt to various shapes and sizes (Zhang et al., 2020a, 2020b). Researchers have implemented compliant mechanisms (Silwal et al., 2017), soft materials (Brown et al. 2020) and sensor-based force control (Wang et al., 2018) to improve grasping flexibility. Among the selected 47 applications, 28 adopted compliant mechanisms, soft materials, or both.

Fruit occlusions and obstacles handling approaches

The occlusion of fruit by leaves, stems, and other obstacles presents a challenge to harvesting robots in grasping and path planning (Yamamoto et al., 2014). To simplify the problem, many published works require modification or simplification of the environment, such as applying proper supports to the plant for better lighting (Van Dam et al., 2005) as well as better accessibility and visibility for robotic systems. Only 8 of the applications claimed that their robot is applied in unmodified environments. For soft-obstacle occlusions such as leaves and stems, several approaches to temporarily move them aside have been demonstrated, such as using mechanical means to gather up leaves so that the target fruit can be fully exposed to the camera (Harvest CROO 2019), or utilising dual-arm cooperation to imitate human movements of pushing leaves to the side (Gesellschaft, 2018).

For non-compliant obstacles such as branches and trellis, or complex structures in general, a multiple degrees of freedom (DoF) manipulator with a properly designed path planning algorithm is usually implemented to avoid obstacles. The number of DoF implemented amongst researchers varies, including six (Kang et al., 2020b; Lehnert et al., 2017; Arad et al. 2020; Almendral et al. 2018), seven (Baeten et al., 2008; Mehta & Burks, 2014) and nine (Bac et al., 2017; Nguyen et al., 2013). However, it is observed that a high DoF manipulator tends to increase the system complexity and thus may lead to lower picking efficiency. For example, Kang’s 6 DoF manipulator reported a 6.5 s cycle time (Kang et al., 2020b), while both Abundant Robotics’ and FFRobotics’ low DoF straight-line approach manipulators achieved less than 2 s cycle time (Courtney & Mullinax, 2019). This straight-line approach relies on vision algorithms to filter out difficult-to-reach fruits, and those not filtered are assumed to be graspable using the straight-line path. Such methods have been applied to harvesting tomatoes (ROOT AI, 2020), apples (Wheat, 2019), and oranges (Energid, 2012; Harrell et al., 1990).
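The filtering step of the straight-line approach can be pictured geometrically. The following is a minimal sketch and not the method used by any of the cited systems: it assumes that obstacles are available as 3D points (for example from a depth camera) and treats a fruit as reachable only if every obstacle point stays at least a hypothetical `clearance` away from the straight segment between gripper and fruit. All names and values are illustrative assumptions.

```python
import math

def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (all 3D tuples)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len2 = sum(c * c for c in ab)
    if ab_len2 == 0.0:
        # Degenerate segment: gripper and fruit coincide.
        return math.dist(p, a)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len2
    t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)

def straight_line_reachable(gripper, fruit, obstacles, clearance):
    """True if no obstacle point lies closer than `clearance` to the path."""
    return all(
        point_segment_distance(o, gripper, fruit) >= clearance
        for o in obstacles
    )

# Hypothetical scene in metres: one branch point near the path, one far away.
gripper = (0.0, 0.0, 0.0)
fruit = (1.0, 0.0, 0.0)
blocked = straight_line_reachable(gripper, fruit, [(0.5, 0.02, 0.0)], 0.05)
free = straight_line_reachable(gripper, fruit, [(0.5, 0.20, 0.0)], 0.05)
print(blocked, free)
```

Fruits that fail such a test would simply be skipped, which trades some harvest success rate for a shorter cycle time.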

The final mode of occlusion, fruit clustering, is a significant challenge for harvesting robots for which no reliable solution has been found to date. Many researchers have tried various methods to separate clusters of ripe and unripe fruits, which are commonly seen in strawberry harvesting. Methods such as applying compressed air to blow adjacent fruits away (Yamamoto et al., 2014), or the use of active obstacle-separation algorithms to isolate fruits (Xiong et al., 2019a), presented only a 50–75% harvest success rate. This rate worsens to just 5% if the target fruit is completely occluded by unripe fruit.

Systems analysis

To evaluate the performance of the selected fruit harvesting robots in Table 1, three general performance indicators (Bac et al., 2014b) are adopted for the comparison below.

- Harvest success rate quantifies the number of successfully harvested ripe fruits per total number of ripe fruits in the canopy. It should be noted that successfully harvested fruits may also include damaged fruits.

- Cycle time measures the average time of a full harvest operation cycle, including recognition, localisation, path planning, grasping, collection, and movement between fruit. Time lost due to failed attempts, where reported, is included in the cycle time.

- Fruit damage rate indicates the number of damaged fruits, including those with peduncle damage, per total number of localised ripe fruits.

Note that these performance indicators are not crop-independent, due to the large variation of geometrical fruit distribution and leaves and branch density among different crops or different cultivars of one crop. For example, the crop distribution of different cultivars of sweet peppers can vary significantly (Bac et al., 2014b), which alters the cycle time for different cultivars for the same robot, making comparisons difficult to quantify. Similar concerns may also apply to some other performance indicators like harvest success rate and damage rate. A normalising factor can be introduced for each indicator to make the performance data comparable among different robots or crop cultivars, but this requires significant data collection and analysis to achieve a fair normalisation factor. Data of this magnitude is not available at this time, hence the performance indicators used in this comparison are not normalised. However, by focusing on the comparison of each robotic system through its fruit application, it can provide an overall picture of the current state of harvesting robots.
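The three indicators defined above can be written down as simple ratios. The sketch below is illustrative only: the `HarvestTrial` record, its field names and the example counts are assumptions, and cycle time is taken here as total operating time divided by the number of harvested fruits, which is one possible reading of the definition.

```python
from dataclasses import dataclass

@dataclass
class HarvestTrial:
    """Hypothetical record of one field trial (all fields are assumptions)."""
    ripe_in_canopy: int    # total ripe fruits present in the canopy
    localised_ripe: int    # ripe fruits the robot managed to localise
    harvested: int         # ripe fruits detached, damaged ones included
    damaged: int           # damaged fruits, peduncle damage included
    total_time_s: float    # operating time, failed attempts included

def harvest_success_rate(t: HarvestTrial) -> float:
    # Harvested ripe fruits per total ripe fruits in the canopy.
    return t.harvested / t.ripe_in_canopy

def cycle_time_s(t: HarvestTrial) -> float:
    # Average time per harvested fruit over the whole trial.
    return t.total_time_s / t.harvested

def damage_rate(t: HarvestTrial) -> float:
    # Damaged fruits per total localised ripe fruits.
    return t.damaged / t.localised_ripe

# Made-up numbers, not taken from any reviewed system.
trial = HarvestTrial(ripe_in_canopy=100, localised_ripe=90,
                     harvested=80, damaged=4, total_time_s=480.0)
print(harvest_success_rate(trial), cycle_time_s(trial), damage_rate(trial))
```

Because the denominators differ (fruits in the canopy versus localised fruits), the success rate and the damage rate of one trial are not directly comparable with each other.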

Harvest success rate

Harvest success rate is documented for 28 of the 47 applications. As shown in Fig. 7, although none of the existing robots has achieved a 100% harvest rate in apple, tomato and cucumber harvesting, significant progress has been made, with multiple prototypes and products recording harvest success rates higher than 80%. Reported success rates for strawberry and sweet pepper harvesting are relatively low; environment complexity is cited as a possible reason, as the harvest success rate increases significantly after environment simplification.

Cycle time and overall speed

Cycle time refers to the speed of harvesting for a single robotic arm, with a comparison of systems in different fruit harvesting applications shown in Fig. 8. For each fruit, the advised cycle time for commercial viability is indicated by the area within the dotted rectangular region on each graph (Van Henten et al., 2009; Nguyen et al., 2014; Panasonic, 2018; Hemming et al., 2014; Harrell, 1987; Muscato et al., 2005; Williams et al., 2019; Tang et al., 2020). Among the analysed systems with cycle time reported, most applications cannot compete with their human counterparts, except for three systems by the Octinion company (2019), HarvestCROO Robotics (2020), and Abundant Robotics (Courtney & Mullinax, 2019).

Although cycle time provides a good metric for commercial viability, it alone cannot determine a system’s commercial viability. Other factors like harvest success rate, fruit damage rate, robot costs and potential continuous operational time are equally important for commercial feasibility. In addition, different researchers may have different definitions of cycle time, achieving faster times when excluding fruit recognition time (Silwal et al., 2017) or fruit-to-fruit traversal time (Lehnert et al., 2017; Muscato et al., 2005), or slower times with the inclusion of mobile base traversal time (Williams et al., 2019). To indicate these alternate definitions, ++ or −− marks are included in the comparison charts to indicate a shorter or longer than standard cycle time definition for a particular application.

Researchers and developers have also implemented multiple arms in a single robotic system as well as robot fleet concepts to further enhance cycle time and productivity. Xiong reduced the cycle time of their robot from 6.1 to 4.6 s per fruit when switching from single to dual arms (Xiong et al., 2019a). Agrobot (2018) and Advanced Farm (2019) mounted many independent picking systems on a single mobile base, and Harvest CROO’s strawberry harvesting system, equipped with 16 robotic heads and 16 arm-camera-gripper sets, can pick 3 fruits every 10 s (Harvest CROO 2019). FFRobotics claimed that their 12-arm apple harvester can pick 10 times faster than human labourers (FFRobotics, 2019).
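To put these figures on a common scale, the reported per-fruit times can be converted into an hourly picking rate. This is a back-of-the-envelope sketch: the function name is an assumption, and the conversion assumes continuous operation with no downtime or travel between rows, which real systems will not achieve.

```python
def fruits_per_hour(seconds_per_fruit: float) -> float:
    """Convert an average per-fruit time into fruits picked per hour."""
    if seconds_per_fruit <= 0:
        raise ValueError("seconds_per_fruit must be positive")
    return 3600.0 / seconds_per_fruit

# Cycle times reported by Xiong et al. (2019a) for one and two arms.
single_arm = fruits_per_hour(6.1)    # about 590 fruits per hour
dual_arm = fruits_per_hour(4.6)      # about 783 fruits per hour
speedup = dual_arm / single_arm      # about 1.33, well short of 2

# Harvest CROO figure quoted above: 3 fruits every 10 s.
harvest_croo = fruits_per_hour(10.0 / 3)   # about 1080 fruits per hour

print(round(single_arm), round(dual_arm), round(speedup, 2), round(harvest_croo))
```

The dual-arm speed-up of roughly 1.33 rather than 2 illustrates that adding arms does not scale throughput linearly.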

Damage rate

Ideally, fruit harvesting robots are expected to preserve the quality of the fruit during the picking cycle. This is very difficult for robots, as there are many opportunities to damage fruit when implementing pinching, clamping, or grasping actions with pulling, bending, and twisting motions (Bu et al., 2020).

Only 13 of the 47 reviewed applications had their damage rate reported. As shown in Fig. 9, 8 of the 13 applications presented a damage rate higher than or equal to 10%, and 5 claimed a damage rate equal to or lower than 5%. Only one system has verified its 5% damage rate with extensive field test data with 240 samples (Mu et al., 2020), while the rest have not made their field test data public.

Of the systems that report fruit damage, most focus on skin or flesh damage, which has the highest influence on fruit marketability. However, other forms of damage such as broken or removed stems can also affect shelf life, as seen in apples, pears, cherries, and some types of plums (Paltrinieri, 2002). Baeten et al. (2008) reported no skin damage on harvested apples, but approximately 30% of them had their stems removed.

Another form of damage a harvesting robot can inflict is damage to the trees and canopies that the fruit are attached to. Spur damage occurs when the fruit is extracted along with the branch or green matter it is attached to. If this contains a spur or bud, then this part of the tree will no longer yield any fruit, affecting the next season’s harvest. Only one robot has recorded spur damage, recording 6.3% skin damage and 26% spur damage on harvested fruit (Silwal et al., 2017). Furthermore, tree damage can also come in the form of accidental contact with fruit during harvesting. This is particularly problematic if unripe fruits are damaged or knocked off the canopy, as it means the fruit cannot grow further and is permanently lost. Again, only one system has reported this kind of damage, where the reported 25% overall damage rate consisted of a 15.3% drop rate and 6.7% knock-off rate (Williams et al., 2019).

Relation between robot performance and commercial viability

The potential for commercialisation of a proposed harvesting robot is frequently mentioned in reported literature (Hemming et al., 2014; Muscato et al., 2005; Nguyen et al., 2013; Tibbets, 2018; Van Henten et al., 2009; Williams et al., 2019). Although different thresholds for commercial viability have been cited, neither a high harvest success rate nor an outstanding cycle time by itself could secure the commercial success of a harvesting robot if the damage rate is unacceptably high. Hence, it is important to understand the determining criteria for the commercial viability of harvesting robots.

Economic factors should be considered, primarily cost and profit. If a robotic system can achieve a harvest success rate and damage rate comparable to those of a human picker, then the threshold for commercial viability can be assessed by two factors: the robot’s annual cost and its efficiency. The overall annual cost of robots should be no higher than the yearly cost of human labour when picking the same amount of fruits, and the robot’s daily picking amount should be no lower than that of a human counterpart. Alternatively, if a robot cannot compete with human labourers on either harvest success rate or damage rate, the harvest task could be shared by the robot and human pickers. In this case, the annual profit of the farm after adopting the system should be equal to or higher than the original annual profit. With such criteria, the relationship between a harvesting robot’s performance and its commercial viability can be formulated. The effect of each performance indicator (harvest success rate, damage rate, cycle time) on the commercial viability can then be accurately assessed. At the current stage, the essential data (robot cost, robot lifespan, maintenance cost, etc.) for calculating the cost and profit of the robotic harvesting business is still far from ready.
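The two viability criteria described above can be expressed as simple checks. The sketch below is an illustration under stated assumptions: the `Picker` record, the function names and all numbers are hypothetical, and the annual cost is assumed to be already normalised to the same amount of picked fruit for robot and human.

```python
from dataclasses import dataclass

@dataclass
class Picker:
    """Hypothetical cost and output figures for a robot or a human crew."""
    annual_cost: float        # yearly cost for picking the same amount of fruit
    daily_pick_amount: float  # fruit picked per day, same unit for both

def viable_as_replacement(robot: Picker, human: Picker) -> bool:
    # Applies when the robot matches humans on success and damage rates:
    # it must cost no more per year and pick no less per day.
    return (robot.annual_cost <= human.annual_cost
            and robot.daily_pick_amount >= human.daily_pick_amount)

def viable_when_shared(profit_before: float, profit_after: float) -> bool:
    # Applies when robot and human pickers share the task: the farm's
    # annual profit after adoption must not fall below the original profit.
    return profit_after >= profit_before

# Made-up figures for illustration only.
robot = Picker(annual_cost=90_000.0, daily_pick_amount=1_200.0)
human = Picker(annual_cost=100_000.0, daily_pick_amount=1_000.0)
print(viable_as_replacement(robot, human))
print(viable_when_shared(profit_before=250_000.0, profit_after=240_000.0))
```

Filling in real values requires exactly the data the text notes is not yet available, such as robot cost, lifespan and maintenance cost.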

Synopsis of current research challenges in robotic harvesting

Significant efforts have been made to apply robots in fruit harvesting tasks in the past two decades, but very few of them have been proven reliable for commercial operation. In most cases, existing harvesting robots do not meet the requirements of low damage, high harvest rate and fast speed at the same time. Thus, most farms still rely on human labour to perform harvesting tasks (Zhao et al., 2016a).

To gain a direct impression of the challenges and issues encountered in robotic harvesting development, data has been extracted from the literature and compiled in the ‘issues to be improved’ column of Table 1, and represented in Fig. 10. The reported challenges and issues have been categorised into five groups: environment control, system challenges, path planning, end-effector, and sensing challenges. The number reported to the right of each bar indicates the number of times that the item has been cited as a challenge for a robot, and items marked with blue bars represent challenges and issues reported in fewer than 4 applications. From the chart, efficiency is the most reported issue that needs improving in further development of the fruit harvesting robot, while end-effector adaptability, obstacle detection and handling, vision detection under occlusion, stem detection and fruit cluster handling can also be regarded as common challenges in the field.

Since this summary and statistical analysis is based on the information reported by literature, it is possible that some research challenges are underestimated or missed. Therefore, to develop a better understanding of the challenges and potential research directions of robotic fruit harvesting, a systematic review is conducted based on environmental challenges in harvesting versus customer requirements. Figure 11 shows a map diagram that links observed environmental complexities in harvesting to the left, to meeting customer requirements on the right, through a series of research challenges and associated potential research directions which could address customer requirements by improving harvest success rate, limiting the damage rate, enhancing the picking efficiency or reducing the robot cost. Therefore, all issues and challenges discussed in the literature can be matched with their origin in the environment input and connected with potential research directions, which could eventually meet customers’ demands. More critically, new insights for potential research topics (items marked *) are formed in the process of completing this map diagram, which are topics that can drive harvesting robots further towards commercialisation.

Potential research directions

Based on the authors’ analysis of all robot applications of fruit harvesting which are suitable for the aforementioned framework, the following frontier topics that can be significant drivers for harvesting robot commercialisation are identified.

Occlusion mitigation

Occlusion is identified as one of the most significant issues of robotic harvesting, as it affects the recognition and localisation accuracy of harvesting robots (Tang et al., 2020). Decreased recognition accuracy will result in lower harvest success rates and higher damage rates. Therefore, measures should be taken to mitigate occlusion from different aspects.

Cultivar training

Fruit trees are grown in a variety of shapes, but natural field-grown plants tend to be more unstructured than trained plants in a greenhouse or orchard in terms of size, foliage volume, and limb pattern (Fernández 2018). Before the emergence of harvesting robots in recent years, fruit growers had already started manipulating the tree form or shape to encourage fruit production (Costes et al., 2010). For example, apple trees were pruned into a planar canopy to simplify manual or mechanised harvesting by providing better fruit accessibility (Nguyen et al., 2013). This practice also encouraged uniformity in fruit size, colour, and maturity (Hohimer et al., 2019). In 2019, apple growers in the US expressed their willingness to remove fruits adjacent to trellis wires and trunks to optimise fruit distribution for robot harvesting (Hohimer et al., 2019). There is also a recent push to build indoor farming systems: compared with outdoor farming, indoor farms require much less space whilst securing much higher yields. For example, indoor tomato farms can have a yield ten times greater than outdoor farms (Shieber, 2018). These significant advantages will increase the motivation of crop growers to make the change, which will benefit robotic harvesting, as indoor farms could be designed with trained cultivar systems that best suit harvesting robots (Bac et al., 2014b). Thus, environment complexity could be significantly reduced in the future.

In the state-of-the-art, nearly all the harvesting robot systems that claimed to be commercial are designed exclusively for customised cultivation systems. In this case, trees and plants have been specifically trained to be well structured, providing the robot with a workspace with reduced occlusion and fewer obstacles. Further improvements to the workspace can be achieved by pruning, reducing occlusion further (Van Henten et al., 2002) and reducing cycle times (Edan et al., 1990).

Fruit and leaf thinning

Occlusion mitigation can be implemented by fruit and leaf thinning. Such operations are currently conducted mostly by human labour but will be gradually assisted by robots (Priva 2020).

Fruit thinning of annual bearing cultivars is widely practised by growers to improve fruit size and quality (Dennis, 2000). For robotic harvesting, fruit thinning can be implemented to avoid fruit clusters, as overlapping fruit pose a challenge to the localisation of individual fruits (Jiao et al., 2020) while clustered fruit make the task of detachment significantly complicated (Bac et al., 2014b).

Leaf thinning or leaf removal through manual or mechanical means has been a common practice in modern viticulture to increase ventilation and sunlight exposure and thus improve fruit quality and production (Zhuang et al., 2014; Bogicevic 2015). Researchers have explored mechanical approaches to clear leaves temporarily for fruit extraction (Lee & Rosa, 2006). More recently, as soft robotic grippers have become increasingly popular for autonomous grasping applications (Crooks et al., 2016; Wang et al., 2020, 2021), Zhou in Kang et al. (2020a) developed an adaptive gripper that can reach through the canopy and push away the leaves around the target fruit without damaging the canopy structure.

Fruit damage prevention

Mechanical damage during harvesting tends to result in a substantial reduction in fruit quality (Li & Thomas, 2014). Mechanical abrasions and bruising on fruit can accelerate water loss, increasing the chance of rotting fungi and bacteria penetrating the fruit and causing rapid decay (Trimble, 2021). Therefore, harvesting robot researchers should explore all possible measures to minimise fruit damage. Most existing studies only focused on avoiding mechanical bruises of target fruit, but the damage could also occur to adjacent fruits due to direct robot contact or the unexpected detachment triggered by unintentional branch oscillation. Thus, the following points aim to reduce or prevent fruit damage.

Preserving target fruit

Target fruit can sometimes be surrounded by non-compliant obstacles such as thick branches, trellis wires and sprinkler lines. Existing harvesting robots have difficulty picking these fruits without causing unexpected damage due to limited obstacle-sensing capabilities, difficulty locating and segmenting objects in unstructured areas, and lack of dexterity of the robotic arm and end-effector. There are two potential methods of reducing fruit damage in these instances: handling or avoiding these scenarios effectively through sensors and software, or modifying the robot’s hardware to reduce or eliminate fruit damage.

In unstructured environments, machine vision is not effective due to the existence of leaves and other obstacles leading to partial or full occlusion. Additional sensing technologies such as tactile sensing can provide an alternative sense to assist the robot in planning. This can be effective in handling branch interference or collisions with trellis wires and other fruit.

The robot’s gripper can also be modified to minimise the chance of damaging the fruit. Applying soft material to the end effector surface minimises damage caused by direct contact between fruit and end-effector components (Komarnicki et al., 2017). However, the grasping of foreign objects such as branches can also damage fruit, regardless of the compliance of the gripper. To the knowledge of the authors, no solution to this problem has yet been found.

Preserving adjacent fruit

A frequently observed yet rarely mentioned form of fruit damage occurs during the grasping phase, where adjacent fruits are disturbed. This can potentially damage them in two ways: direct contact damage, or indirect damage in which the fruit detaches unintentionally. Precise environment modelling, robust path planning, and appropriate end-effector footprint optimisation can address these issues. Particular focus can be given to path optimisation in detaching fruit, as it is shown to be very effective in reducing fruit damage of this form (Nguyen, 2012).

Sensing enhancement

Detection of fruits with a similar colour to the background

Most existing fruit recognition methods distinguish fruit from leaves and branches by analysing colour differences. However, this method is challenging in low lighting or low contrast environments (Kelso, 2009), especially for fruits with less distinct colour from the background.
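A colour-difference rule of the kind described above can be sketched in a few lines. The red-minus-green index, the threshold of 40 and the pixel values are illustrative assumptions and are not taken from the cited works; the example also shows why the rule breaks down for fruit whose colour is close to that of the foliage.

```python
def red_fruit_mask(image, threshold=40):
    """Mark pixels whose red channel exceeds green by more than `threshold`.

    `image` is a list of rows, each row a list of (r, g, b) tuples in 0..255.
    """
    return [[(r - g) > threshold for (r, g, b) in row] for row in image]

# One-row toy image with hypothetical pixel values:
# a ripe red fruit, a leaf, and an unripe green fruit.
toy_image = [[(200, 40, 30), (60, 120, 50), (90, 140, 60)]]

mask = red_fruit_mask(toy_image)
print(mask)
```

Only the red fruit pixel is marked; the green fruit is indistinguishable from the leaf under this rule, which is the low-contrast case that motivates the lighting control, spectral imaging and shape or texture features discussed below.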

For fruits whose colour differs from that of the leaves, several measures can be taken to control the lighting conditions for imaging in the orchard when they are exposed to a low-lighting or low-contrast environment. Nighttime artificial lighting can be one effective solution as the illumination can be controlled from the lighting source. However, this will limit the operation time of the fruit picking robot. Gongal et al. (2015) reported the use of an over-the-row platform with a special tunnel structure to control the lighting conditions. Such a structure is one potential solution, as it controls the lighting conditions effectively and allows the robot to work day and night.

For the fruits with similar colour compared to the background, spectral imaging can be used to improve recognition rates (Bao et al., 2016; Fernández et al., 2018; Li et al., 2017; Mao et al., 2020) to above 80% in most cases (Van Henten et al., 2002). The development of faster spectral imaging processing algorithms will save time and increase the feasibility of spectral imaging methods.

Researchers have also utilised morphology or texture features to distinguish the fruit from a low-contrast background. Fruit recognition algorithms that utilise shape features are broadly applicable to spherical fruits such as apples, tomatoes, and citrus (Zhao et al., 2016a, 2016b). Images taken in a natural outdoor environment have texture differences that can be utilised to distinguish fruit from its background. By using texture features and checking the intensity level of illumination distributed on the surface of the citrus and background objects, immature citrus fruits can be recognised. However, this method is highly affected by intense light. To improve reliability under all lighting conditions, some researchers used multiple features such as colour, morphology, and texture with data fusion approaches. This was applied successfully in reliable apple recognition (Rakun et al., 2011). Overall, deep learning-based methods with multi-modal sensors have shown promising results and performance in fruit detection.

Exogenous disturbances

Exogenous disturbances of trees and canopies include lighting variation, wind, rain, and unintentional robot-plant contact. For lighting variation, in addition to controlling the lighting conditions of the environment, image quality can be enhanced by adjusting camera settings such as exposure and contrast, which improves detection performance. Some of these disturbances can change the original pose of the fruit, which can reduce the accuracy of grasping if the positions of the fruit are not updated accordingly. To handle these disturbances, a combination of resilient end-effector design, efficient visual servoing and robust control technology should be implemented. From the visual aspect, the detection process can be run at a high frequency to refresh the detection results and obtain the real-time fruit distribution.

Ripeness and defect detection

In traditional agricultural practice, determining the proper harvest maturity of fruit largely relies on farmers’ experience, as maturity dates of fruits grown on the same tree may vary at weekly intervals for a month or more (Paltrinieri, 2002). Therefore, it is necessary to detect fruit ripeness when harvesting to maximise yield. The challenge in monitoring the ripeness of fruit for consumers lies in finding solutions that can provide non-destructive testing at a minimal cost. Penetrometers and refractometers are two tools whose use is destructive to the fruit, either by breaking the skin (to measure sugar content) or causing bruising (to quantify firmness) (Torregrosa et al., 2009). Non-destructive methods such as imaging, spectroscopy, and hyperspectral imaging are preferred, as they do not require physical contact with the fruit. A spectrometer-based approach directs light onto a fruit and then measures the light that is emitted, absorbed, or scattered by the fruit for ripeness inference (Torregrosa et al., 2009). However, techniques based on both colour imaging and spectroscopy are substantially affected by occlusion and lighting conditions (Tan et al., 2018).

Tactile sensing can be an alternative solution for real-time fruit ripeness detection, which has recently been applied to mangoes with an 88% accuracy in the lab (Scimeca et al., 2019).

Stem retention and stem length control

Proper handling methods during harvesting and post-harvest processes are critical for extending the shelf life of fruit (Shewfelt et al., 2014). For fruit varieties like apples, mangoes, citrus, and avocados, a pulled-out stem may act as an ideal entrance for fungi and bacteria to break into the fruit and lead to a rapid decay (Paltrinieri, 2002). However, very few applications in the literature have reliably solved the stem retention problem, due to the unpredictability of fruit growth orientation (Silwal et al., 2017). In addition to stem retention, stem length for specific fruit varieties is also important for extending shelf life, such as for mangoes (1.5 cm) and avocados (1 cm) (Paltrinieri, 2002). For post-harvest handling of premium apple varieties with soft skins such as Honeycrisp and Fuji, stems must be clipped to avoid puncturing when stacking for storing (Hohimer et al., 2019). To address these challenges, further development of visual stem detection and tactile sensing is proposed (Gesellschaft, 2018).

Optimising fruit detaching methods

Different fruits have different optimal detachment methods that consume the least energy during harvesting and produce minimal damage to the fruit, according to a report released by the Food and Agriculture Organization of the United Nations. For example, a lift, twist and pull series of movements is believed to be the best picking method for tomatoes and passion fruit, as they grow a pedicel abscission zone (natural breakpoint) to shed ripe fruits naturally (Ito & Nakano, 2015). For fruits like apple, citrus, mango, and avocado, which have woody stalks, the best way to remove them from the tree is to clip them, such that a small section of stem remains attached to the fruit (Paltrinieri, 2002).

Although these detachment methods have been proven and practised by human workers all over the world, they are rarely implemented by robots on fruits like apples and oranges due to the difficulty of accurately detecting stems and stalks in a dense canopy (Silwal et al., 2017). Before a reliable stem detection technology is available, efforts to explore suboptimal detachment methods could also improve the efficiency of harvesting robots. Experimental investigation of apple picking patterns (Li et al. 2016) and tomato grasp types (Li et al., 2019) are good examples.

Other potential directions

Multiple end-effectors and swarms

Applying multiple end-effectors can significantly increase the harvest efficiency for individual robots, making it possible for robots to surpass human picking speed (Zhao et al., 2016a). FFRobotics has demonstrated that 12 arms can work simultaneously to pick apples with productivity ten times higher than human pickers (Courtney & Mullinax, 2019). Xiong has reduced the cycle time of strawberry harvesting from 6.1 to 4.6 s by integrating two Cartesian arms into their system (Xiong et al., 2019a). Swarms of harvesting robots can also work together to boost productivity by utilising rapidly developing deep learning algorithms to enable training of interactive behaviour, such that the performance of robot swarms can be further enhanced with time (Shamshiri 2018).

Human–robot collaborative approaches

Before harvesting robots have developed sufficient intelligence to be able to perform fully autonomous harvesting tasks without human intervention or supervision, human–robot collaborative approaches can be an alternative research direction to be explored to bypass the technology bottleneck. Shamshiri illustrated a scenario of human–robot collaboration before robots can achieve a 100% harvest rate, where any remaining fruit missed by robot vision could be picked with humans intervening via a touchscreen interface (Shamshiri 2018).

Alternative technologies for versatile cross crop harvesting

Alternative solutions such as fruit picking drones (Tevel Aerobotics, 2020; Saracco, 2020), climbing robots (Megalingam et al., 2020), and continuum robots (Gao et al., 2021) have been demonstrated. They highlight the ingenuity of researchers and show that harvesting robots need not be constrained to robotic arms on mobile bases. They also show how different types of robots and technologies can work for various crops.

Most crops share a similar harvesting process. Therefore, robots also tend to have similar capabilities, such as object detection, motion planning, target approaching and crop detaching with similar hardware. Only the task of crop detaching may require different end-effectors for different crops, and swapping an end-effector is a quick operation. One specific robot can therefore operate with multiple end-effectors and software packages to harvest multiple crops with similar growing environments. Cross crop harvesting has been attempted and demonstrated in tomatoes, strawberries, cucumber (ROOT AI, 2020), oranges, apples (Barber, 2020), melons, and peppers (Heater, 2020). Furthermore, by extending crop detection technology to detect other objects like branches, stems, flowers or even trellis support wires, together with applying specialised end-effectors, current harvesting robots can be adapted to other agricultural tasks such as pruning, thinning, pollination, and de-leafing (MetoMotion, 2020; Wheat, 2019).

Conclusion

A comprehensive review of the state of the art of fruit harvesting robots was conducted, including the analysis and comparison of the 47 applications over the last 20 years, where the overall performance of each system and observed research trends have been presented. The performance indicators chosen for comparison are harvest success rate, harvesting speed, and damage rate, which are critical performance indicators for a robot’s commercial viability.

The detailed analysis and comparison of the harvesting robots’ performance indicate that there is still a significant gap between current robotic harvesting technology and commercialisation. Not only has the overall performance of the robots not reached that of their human counterparts, but the essential data for commercialisation is also far from ready. It is also worth mentioning that, besides the 47 integrated robot systems thoroughly reviewed in this work, there is a large volume of work focused on developing the subsystems of a harvesting robot. However, subsystems developed by different research groups are not easily optimised and integrated. An open collaborative culture would enable the community to pool the talent and strengths of different research groups and speed up the development of solutions.

The origins of fruit damage in the robotic harvesting process have been closely scrutinised. Although direct damage to the harvested fruit is often the focus of the existing literature, indirect damage to adjacent fruits is often overlooked. This paper analyses the potential sources of indirect fruit damage and proposes potential prevention measures.

The reasons behind the inadequate performance of existing harvesting robots have been systematically examined. From this, a connected map of the challenges and corresponding research topics, linking the environmental challenges of harvesting with customer requirements, has been summarised for the first time in the literature. This map provides new insights into potential high-yield research directions, including vision systems to better identify obstacles and fruits with occlusions, fruit extraction optimisation to reduce stem and tree damage, and tactile sensing for stem and ripeness detection. These directions will help drive robotic harvesting systems closer to commercialisation and help solve the socio-economic problems that farmers face with seasonal fruit harvesting.

Data availability

As per the regulations of our institute, we can share the data acquired in this research upon individual request until December 2021.

Code availability

Not applicable.

References

Abundant Robotics. (2019). Retrieved January 16, 2021, from https://www.abundantrobotics.com/

Advanced Farm. (2019). Retrieved December 6, 2020, from https://www.advanced.farm/

Agrobot Company. (2018). E-series. Retrieved December 6, 2020, from https://www.agrobot.com/e-series

Almendral, K. A. M., Babaran, R. M. G., Carzon, B. J. C., Cu, K. P. K., Lalanto, J. M., & Abad, A. C. (2018). Autonomous fruit harvester with machine vision. Journal of Telecommunication, Electronic and Computer Engineering (JTEC), 10(1–6), 79–86.

Aloisio, C., Mishra, R. K., Chang, C. Y., & English, J. (2012). Next generation image guided citrus fruit picker. In 2012 IEEE international conference on technologies for practical robot applications (TePRA) (pp. 37–41).

Arad, B., Balendonck, J., Barth, R., Ben‐Shahar, O., Edan, Y., Hellström, T., Hemming, J., Kurtser, P., Ringdahl, O., Tielen, T., & van Tuijl, B. (2020). Development of a sweet pepper harvesting robot. Journal of Field Robotics, 37(6), 1027–1039.

Arefi, A., Motlagh, A. M., Mollazade, K., & Teimourlou, R. F. (2011). Recognition and localization of ripen tomato based on machine vision. Australian Journal of Crop Science, 5(10), 1144–1149.

Associated Press. (2020, April 8). Thousands of acres of Florida fruits, veggies left to rot amid coronavirus pandemic. WTSP. Retrieved October 21, 2020, from https://www.wtsp.com/article/news/health/coronavirus/florida-fruits-vegetables-rot-business-coroanvirus/67-1a6cbf8d-2438-4bbf-9ece-21fd6117809d

Bac, C. W., Hemming, J., & Van Henten, E. J. (2014a). Stem localization of sweet-pepper plants using the support wire as a visual cue. Computers and Electronics in Agriculture, 105, 111–120.

Bac, C. W., Hemming, J., Van Tuijl, B., Barth, R., Wais, E., & Van Henten, E. J. (2017). Performance evaluation of a harvesting robot for sweet pepper. Journal of Field Robotics, 34(6), 1123–1139.

Bac, C. W., Van Henten, E. J., Hemming, J., & Edan, Y. (2014b). Harvesting robots for high-value crops: State-of-the-art review and challenges ahead. Journal of Field Robotics, 31(6), 888–911.

Bachche, S. (2015). Deliberation on design strategies of automatic harvesting systems: A survey. Robotics, 4(2), 194–222.

Baeten, J., Donné, K., Boedrij, S., Beckers, W., & Claesen, E. (2008). Autonomous fruit picking machine: A robotic apple harvester. Springer Tracts in Advanced Robotics, 42, 531–539.

Bao, G., Cai, S., Qi, L., Xun, Y., Zhang, L., & Yang, Q. (2016). Multi-template matching algorithm for cucumber recognition in natural environment. Computers and Electronics in Agriculture, 127, 754–762.

Barber, A. (2020, November 18). Robotic harvesting to kick off in GV. Apple & Pear Australia Ltd (APAL). Retrieved December 22, 2020, from https://apal.org.au/robotic-harvesting-to-kick-off-in-gv/

Becedas, J., Payo, I., & Feliu, V. (2011). Two-flexible-fingers gripper force feedback control system for its application as end effector on a 6-DOF manipulator. IEEE Transactions on Robotics, 27, 599–615.

Bogicevic, M., Maras, V., Mugoša, M., Kodžulović, V., Raičević, J., Šućur, S., & Failla, O. (2015). The effects of early leaf removal and cluster thinning treatments on berry growth and grape composition in cultivars Vranac and Cabernet Sauvignon. Chemical and Biological Technologies in Agriculture, 2(1), 1–8.

Botian Company. (2019, February 25). Fruit and vegetable picking robot. Retrieved December 6, 2020, from http://www.szbotian.com.cn/en/Pr_d_gci_60_id_27_typeid_1.html

Brown, J., & Sukkarieh, S. (2021). Design and evaluation of a modular robotic plum harvesting system utilizing soft components. Journal of Field Robotics, 38(2), 289–306.

Bu, L., Hu, G., Chen, C., Sugirbay, A., & Chen, J. (2020). Experimental and simulation analysis of optimum picking patterns for robotic apple harvesting. Scientia Horticulturae, 261, 108937.

Bulanon, D. M., & Kataoka, T. (2010). Fruit detection system and an end effector for robotic harvesting of Fuji apples. Agricultural Engineering International: CIGR Journal, 12(1), 203.

Cannata, G., & Maggiali, M. (2005). An embedded tactile and force sensor for robotic manipulation and grasping. In Humanoid robots, 2005 5th IEEE-RAS international conference (pp. 80–85).

Chandler, A. (2020, July 23). Fruit and veg risk rotting in Australia on second Covid-19 wave. Bloomberg. Retrieved October 21, 2020, from https://www.bloomberg.com/news/articles/2020-07-22/fruit-and-veg-risk-rotting-in-australia-on-second-covid-19-wave

Charles, D. (2018, March 20). Robots are trying to pick strawberries. So far, they're not very good at it. NPR. Retrieved December 2, 2020, from https://www.npr.org/sections/thesalt/2018/03/20/592857197/robots-are-trying-to-pick-strawberries-so-far-theyre-not-very-good-at-it

Chen, Y., An, X., Gao, S., Li, S., & Kang, H. (2021). A deep learning-based vision system combining detection and tracking for fast on-line citrus sorting. Frontiers in Plant Science, 12, 171.

Chiu, Y.-C., Yang, P.-Y., & Chen, S. (2013). Development of the end-effector of a picking robot for greenhouse-grown tomatoes. Applied Engineering in Agriculture, 29, 1001–1009.

Costes, E., Lauri, P. E., & Regnard, J. L. (2010). Analyzing fruit tree architecture: Implications for tree management and fruit production. Horticultural Reviews, 32, 1–61.

Courtney, R., & Mullinax, T. J. (2019, December 2). Washington orchards host robotic arms race. GoodFruit. Retrieved December 6, 2020, from https://www.goodfruit.com/washington-orchards-host-robotic-arms-race/

Crooks, W., Vukasin, G., O’Sullivan, M., Messner, W., & Rogers, C. (2016). Fin ray® effect inspired soft robotic gripper: From the robosoft grand challenge toward optimization. Frontiers in Robotics and AI, 3, 70.

Davidson, J. R., Silwal, A., Hohimer, C. J., Karkee, M., Mo, C., & Zhang, Q. (2016). Proof-of-concept of a robotic apple harvester. In 2016 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 634–639). IEEE.

De Kleine, M. E., & Karkee, M. (2015). A semi-automated harvesting prototype for shaking fruit tree limbs. Transactions of the ASABE, 58(6), 1461–1470.

De Preter, A., Anthonis, J., & De Baerdemaeker, J. (2018). Development of a robot for harvesting strawberries. IFAC-PapersOnLine, 51(17), 14–19.

Dennis, F. J. (2000). The history of fruit thinning. Plant Growth Regulation, 31(1), 1–16.

D’Esnon, A. G. (1985). Robotic harvesting of apples. In Agri-Mation 1, Chicago, Ill. (USA), 25–28 Feb 1985, ASAE.

DogTooth Company. (2018). Robotic Harvesting. DogTooth. Retrieved October 21, 2020, from https://dogtooth.tech/

Edan, Y., Flash, T., Shmulevich, I., Sarig, Y., & Peiper, U. (1990). An algorithm defining the motions of a citrus picking robot. Journal of Agricultural Engineering Research, 46, 259–273.

Energid. (2012). Retrieved December 2, 2020, from https://www.energid.com/industries/agricultural-robotics

FAO. (1976). The plant: The stem, the buds, the leaves. Food and Agriculture Organization of the United Nations (pp. 1–30).

Feng, Q., Zou, W., Fan, P., Zhang, C., & Wang, X. (2018). Design and test of robotic harvesting system for cherry tomato. International Journal of Agricultural and Biological Engineering, 11(1), 96–100.

Fernández, R., Montes, H., Surdilovic, J., Surdilovic, D., Gonzalez-De-Santos, P., & Armada, M. (2018). Automatic detection of field-grown cucumbers for robotic harvesting. IEEE Access, 6, 35512–35527.

FFRobotics. (2019). Retrieved January 16, 2021, from https://www.ffrobotics.com/

Fountas, S., Mylonas, N., Malounas, I., Rodias, E., Santos, C. H., & Pekkeriet, E. (2020). Agricultural robotics for field operations. Sensors, 20(9), 2672.

Fu, L., Gao, F., Wu, J., Li, R., Karkee, M., & Zhang, Q. (2020). Application of consumer RGB-D cameras for fruit detection and localization in field: A critical review. Computers and Electronics in Agriculture, 177, 105687.

Gao, G., Liu, C., & Wang, H. (2021). Kinematic accuracy of picking robot constructed by wire-driven continuum structure. Proceedings of the Institution of Mechanical Engineers, Part E: Journal of Process Mechanical Engineering, 235(2), 299–311.

Gennery, D. B. (1979). Stereo-camera calibration. In Proceedings ARPA IUS Workshop (pp. 101–107).

Gesellschaft, F. (2018, February 1). Lightweight robots harvest cucumbers. PHYS.ORG. Retrieved December 2, 2020, from https://phys.org/news/2018-02-lightweight-robots-harvest-cucumbers.html

Gongal, A., Amatya, S., Karkee, M., Zhang, Q., & Lewis, K. (2015). Sensors and systems for fruit detection and localization: A review. Computers and Electronics in Agriculture, 116, 8–19.

Griffin, M. (2020). Strawberry picking robot that out performs human pickers heads to the fields. Retrieved December 19, 2020, from https://www.fanaticalfuturist.com/2020/12/strawberry-picking-robot-that-out-performs-human-pickers-heads-to-the-fields

Harrell, R. (1987). Economic analysis of robotic citrus harvesting in Florida. Transactions of the ASAE, 30(2), 298–304.

Harrell, R., Adsit, P. D., Munilla, R., & Slaughter, D. (1990). Robotic picking of citrus. Robotica, 8(4), 269–278.

Harvest CROO. (2019). Retrieved December 6, 2020, from https://harvestcroo.com/

Hayashi, S., Shigematsu, K., Yamamoto, S., Kobayashi, K., Kohno, Y., Kamata, J., & Kurita, M. (2010). Evaluation of a strawberry-harvesting robot in a field test. Biosystems Engineering, 105(2), 160–171.

Heater, B. (2020). Traptic uses 3d vision and robotic arms to harvest ripe strawberries. Retrieved December 16, 2020, from https://tcrn.ch/2JFQS4E

Hemming, J., Bac, C. W., van Tuijl, B. A., Barth, R., Bontsema, J., Pekkeriet, E. J., & Van Henten, E. (2014). A robot for harvesting sweet-pepper in greenhouses. In 2014 international conference of agriculture engineering C0114.

Herrick, C. (2017, May 8). Abundant Robotics Gets $10M Investment for an Apple Harvester. GrowingProduce. Retrieved November 26, 2020, from https://www.growingproduce.com/fruits/apples-pears/abundant-robotics-gets-10m-investment-for-an-apple-harvester/

Hodge, K. (2020). Coronavirus accelerates the rise of the robot harvester. Retrieved October 21, 2020, from https://www.ft.com/content/eaaf12e8-907a-11ea-bc44-dbf6756c871a

Hohimer, C. J., Wang, H., Bhusal, S., Miller, J., Mo, C., & Karkee, M. (2019). Design and field evaluation of a robotic apple harvesting system with a 3d-printed soft-robotic end-effector. Transactions of the ASABE, 62(2), 405–414.

Hornyak, T. (2020). $50,000 strawberry-picking robot to go on sale in Japan. Retrieved October 21, 2020, from https://www.cnet.com/news/50000-strawberry-picking-robot-to-go-on-sale-in-japan/

Ieg, V. (2020, April 9). Coronavirus triggers acute farm labour shortages in Europe. IHS Markit. Retrieved October 21, 2020, from https://ihsmarkit.com/research-analysis/article-coronavirus-triggers-acute-farm-labour-shortages-europe.html

Ito, Y., & Nakano, T. (2015). Development and regulation of pedicel abscission in tomato. Frontiers in Plant Science, 6, 442.

Jayakumari, R., Nidamanuri, R. R., & Ramiya, A. M. (2021). Object-level classification of vegetable crops in 3D LiDAR point cloud using deep learning convolutional neural networks. Precision Agriculture, 26, 1–7.

Ji, C., Feng, Q., Yuan, T., Tan, Y., & Li, W. (2011). Development and performance analysis on cucumber harvesting robot system in greenhouse. Jiqiren (robot), 33(6), 726–730.

Ji, W., Zhao, D., Cheng, F., Xu, B., Zhang, Y., & Wang, J. (2012). Automatic recognition vision system guided for apple harvesting robot. Computers and Electrical Engineering, 38(5), 1186–1195.

Jia, W., Zhang, Y., Lian, J., Zheng, Y., Zhao, D., & Li, C. (2020). Apple harvesting robot under information technology: A review. International Journal of Advanced Robotic Systems, 17(3), 1–16.

Jiao, Y., Luo, R., Li, Q., Deng, X., Yin, X., Ruan, C., & Jia, W. (2020). Detection and localization of overlapped fruits application in an apple harvesting robot. Electronics, 9(6), 1023.

Kang, H., & Chen, C. (2019). Fruit detection and segmentation for apple harvesting using visual sensor in orchards. Sensors, 19(20), 4599.

Kang, H., & Chen, C. (2020). Fast implementation of real-time fruit detection in apple orchards using deep learning. Computers and Electronics in Agriculture, 168, 105108.

Kang, H., Zhou, H., & Chen, C. (2020a). Visual perception and modeling for autonomous apple harvesting. IEEE Access, 8, 62151–62163.