Abstract

Gathering labeled data to train well-performing machine learning models is one of the critical challenges in many applications. Active learning aims at reducing the labeling costs by an efficient and effective allocation of costly labeling resources. In this article, we propose a decision-theoretic selection strategy that (1) directly optimizes the gain in misclassification error, and (2) uses a Bayesian approach by introducing a conjugate prior distribution to determine the class posterior to deal with uncertainties. By reformulating existing selection strategies within our proposed model, we can explain which aspects are not covered by the current state of the art and why this leads to the superior performance of our approach. Extensive experiments on a large variety of datasets and different kernels validate our claims.


1 Introduction

To train classifiers with machine learning algorithms in a supervised manner, we need labeled data. Whereas gathering unlabeled instances is easy, the annotation with class labels is often expensive, exhausting, or time-consuming and consequently needs to be optimized. Active learning (AL) algorithms aim to reduce annotation costs efficiently and effectively (Settles 2009). For that purpose, a selection strategy successively chooses the most useful labeling candidate from the pool of unlabeled instances and acquires the corresponding label from an oracle. Throughout this article, we focus on omniscient oracles that always provide labels according to the correct label distribution.

Many AL algorithms completely rely on the information provided by the classifier that is to be optimized (Settles 2009). This might lead to problems: Originally, classifiers are designed to get training data that is representative for the learning task. But this assumption is not valid for AL tasks, as the distribution of labeled instances is biased by the selection strategy (Dasgupta 2009). As a consequence, the estimates (e. g., the class probabilities) from a classifier are also biased, which may lead to a poor assessment of the usefulness of labeling candidates.

In this article, we propose to use a decision-theoretic approach to measure the usefulness of a labeling candidate in terms of (expected) performance gain. To determine the performance of a classifier, we evaluate its predictions on what we know about the data from a data-driven perspective. Although we can estimate the data distribution, we are uncertain about the true class posterior probabilities. Accordingly, we model these class posterior probabilities as a random variable based on our current observations in the dataset. For this model, we use a Bayesian approach by incorporating a conjugate prior to our observations. Thereby, we obtain more robust usefulness estimates for the candidates.

Our approach builds on three pillars that also explain the title of this contribution. Although the general ideas of these pillars have been mentioned in the literature before, this article should not be seen as a simple extension of these works because we changed the existing models substantially. (1) We approximate the usefulness of one candidate on a representative subset, as introduced in “toward optimal AL” by Roy and McCallum (2001). (2) We estimate the usefulness by determining the decision-theoretic gain in performance, as introduced in “probabilistic AL” by Kottke et al. (2016). (3) We use a Bayesian approach and introduce a conjugate prior distribution to calculate the predictive posterior distribution. Thereby, we consider the certainty of a classifier on its predictions (Murphy 2006).

The contributions of this article are as follows:

- We propose a new decision-theoretic selection strategy xPAL, which calculates the gain in performance using a Bayesian approach over a set of unlabeled evaluation instances.

- The equations of xPAL can serve as a unifying model for decision-theoretic AL methods. Hence, we can describe other AL methods with simple replacements in xPAL and argue why these modifications impair the candidates’ selection.

- Our experiments on 29 datasets from different domains confirm our approach’s superiority compared to several baselines across different kernels.

- We visualize how the selection strategies assess the usefulness of selection candidates differently on a toy dataset.

The remainder of this article is structured as follows: First, we discuss related work in Sect. 2. In Sect. 3, we define our problem and provide the foundations for our model. In Sect. 4, we propose our new method xPAL and show how it theoretically and empirically relates to state-of-the-art approaches in Sect. 5. We evaluate our results experimentally and discuss our key findings in Sect. 6. We close this article with a conclusion and an outlook on our future work in that field.

2 Related work

The central component of an AL algorithm is the selection strategy. The most naïve one is to choose the next candidate randomly (Settles 2009). A common heuristic is uncertainty sampling (Lewis and Gale 1994). The idea is to use, e. g., the estimated class posteriors of probabilistic classifiers or the distance to the decision boundary to build a usefulness score (Settles 2012). This exploits the current classification hypothesis by labeling instances close to the decision boundary. In contrast to density-based approaches (Nguyen and Smeulders 2004), it ignores the representativeness of selected instances for the entire training set, and fails to perform exploration (Bondu et al. 2010; Osugi et al. 2005). That is, it does not search the instance space for large regions with incorrect classifications. This might lead to even worse performance compared to random sampling (Settles 2012). Hence, there exist variants that add random sampling (Žliobaitė et al. 2014; Thrun and Möller 1992), use reinforcement learning (Osugi et al. 2005) or simulated annealing (Zoller and Buhmann 2000) to balance exploitation and exploration, or combine it with a density weight (Donmez et al. 2007) and a variety of further factors, including sample diversity (Weigl et al. 2015; Xu et al. 2007; Brinker 2003) and class priors (Calma et al. 2018).

Uncertainty sampling is a special case of adaptive submodular maximization (Cuong et al. 2014), and several works have established links between submodularity and AL (Cuong et al. 2014; Golovin and Krause 2010; Guillory and Bilmes 2010). An example of a recent approach built on these works is filtered active submodular selection (FASS) (Wei et al. 2015). FASS combines uncertainty sampling with a submodular data subset selection framework, capturing both sample informativeness and representativeness. For Gaussian Process classifiers, a Bayesian information-theoretic AL approach is Bayesian Active Learning by Disagreement (BALD) (Houlsby et al. 2011). BALD aims to select instances with the highest marginal uncertainty about the class label but simultaneously high confidence for the individual settings of the model’s parameters.

The query by committee (QBC) method (Seung et al. 1992) builds classifier ensembles and aims to reduce the disagreement between them. To improve balancing of exploration and exploitation in ensembles of active learners, Baram et al. (2004) proposed a formulation as a multi-armed bandit problem. Here, each active learner corresponds to one slot machine whose relative progress in performance is tracked over time, and on each trial one active learner is chosen for selecting an instance using the EXP4 algorithm. Furthermore, reinforcement learning approaches have been proposed that learn a policy for selecting active learners, for example by modelling active learning as a Markov decision process (Konyushkova et al. 2018).

Roy and McCallum (2001) proposed expected error reduction. As briefly mentioned in the introduction, they aim to estimate the expected generalization error if a candidate gets an additional label. Thus, they simulate each label for each labeling candidate and evaluate the mean error using the unlabeled instances. To estimate the probabilities, they use the class posteriors provided by probabilistic classifiers. Chapelle (2005) noticed that these estimates are highly unreliable (especially at the beginning of training) and therefore suggested the use of a beta prior.

Krempl et al. (2015) and Kottke et al. (2016) address the issue pointed out by Chapelle and name their approach probabilistic AL. They propose to use a distribution of the class posterior probability instead of using the classifier outputs directly. Calculating the expectation over this posterior leads to a mathematically sound, decision-theoretic approach.

3 Problem formulation and foundations

In “The Nature of Statistical Learning Theory,” Vapnik (1995) introduced a holistic concept on how to learn from examples. He defined three different components that take part in such a process, namely a generator, a supervisor, and a learning machine (see Footnote 1). The generator creates random vectors \({\varvec{x}}\in {\mathbb {R}}^D\) (D-dimensional feature space) independently drawn from a fixed but unknown probability distribution \(p({\varvec{x}})\). The supervisor provides class labels \(y \in {\mathcal {Y}} = \{1, \dots , C\}\) (C is the number of classes) for every instance \({\varvec{x}}\) according to a conditional distribution \(p(y|{\varvec{x}})\) which is also fixed but unknown. In our case, the learning machine is a classifier \(f_{\varvec{\theta }}({\varvec{x}})\) with some parameters \(\varvec{\theta }\). The goal is to choose the learning machine that best approximates the supervisor’s response.

We adopt the above definition for the active learning scenario by refining the role of the (omniscient) supervisor:

Definition 1

(Supervisor) A supervisor consists of:

1. A ground truth which is an unknown but fixed, deterministic function \(t :{\mathbb {R}}^D \rightarrow [0,1]^C\) that maps an instance \({\varvec{x}}\) to a probability vector \({{\varvec{p}}}= t({\varvec{x}})\) with \(\sum _{i = 1}^C {p_{i}} = 1\). Each element describes the true probability for the corresponding class given the instance \({\varvec{x}}\).

2. An oracle which provides a class label \({y \in {\mathcal {Y}}}\) for every instance \({\varvec{x}}\) according to the ground truth \({{{\varvec{p}}}= t({\varvec{x}})}\). Hence, the label is sampled from a categorical distribution \(y \sim \mathrm {Cat}(t({\varvec{x}}))\). Note that this implies an omniscient oracle.

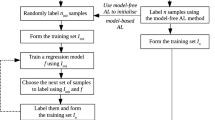

We visualize the learning process in Fig. 1. The generator provides instances \({\varvec{x}}\) for which the oracle provides the class label y based on the ground truth \(t({\varvec{x}}) = {{\varvec{p}}}= (p_1, \dots , p_C)\). Unfortunately, we solely have information about the instance-label-pair \(({\varvec{x}},y)\) but not on the generator, the ground truth, or the oracle.

In the technical community, the process of data generation is often described from a model-driven perspective: It is assumed that each class y has its own data generator \(p({\varvec{x}} |y)\). Hence, every instance \({\varvec{x}}\) has exactly one label, which is also called ground truth. Due to noise during data generation, different classes might appear in the same region, but still, the true label exists. Our view (as given in Definition 1 and Fig. 1) is purely data-driven: Looking at the data, we do not know why there are different labels in the same region. It could be due to noise in the data generation or due to the imperfection of the oracle. When learning a classifier, the reason does not matter: We only observe that the oracle provides different labels for similar instances according to some proportion \({{\varvec{p}}}\) which we call ground truth.

In the field of active learning, we assume to have an unlabeled dataset \({\mathcal {U}} = \{{\varvec{x}}_1, \dots , {\varvec{x}}_N\}\) (candidate pool) given by the generator. Labels are usually not available at the beginning but can be acquired from the oracle (Settles 2009), which chooses the label according to the ground truth.

A selection strategy selects an instance \({\varvec{x}}\in {\mathcal {U}}\), and we acquire the corresponding label \(y \in {\mathcal {Y}}\) from the oracle. We remove the newly labeled instance from the candidate pool \({\mathcal {U}} \leftarrow {\mathcal {U}} \setminus \{{\varvec{x}}\}\), add the instance-label-pair to the labeled set \({\mathcal {L}} \leftarrow {\mathcal {L}} \cup \{({\varvec{x}},y)\}\), and retrain the classifier on \({\mathcal {L}}\).
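The acquisition cycle described above can be sketched as a short loop. This is a minimal illustration, not the authors' implementation: the function names `active_learning_loop` and `random_select`, the callback signatures, and the `budget` parameter are assumptions introduced here.

```python
import random


def random_select(unlabeled, labeled, clf):
    """Baseline strategy: pick a random position in the candidate pool."""
    return random.randrange(len(unlabeled))


def active_learning_loop(pool, oracle, select, train, budget):
    """Pool-based active learning (hypothetical helper).

    pool   : list of instances (the candidate pool U)
    oracle : callable x -> label y
    select : callable (unlabeled, labeled, clf) -> index into unlabeled
    train  : callable labeled -> classifier
    budget : maximum number of labels to acquire
    """
    unlabeled = list(pool)
    labeled = []
    clf = train(labeled)
    for _ in range(budget):
        if not unlabeled:            # stop when the pool is exhausted
            break
        idx = select(unlabeled, labeled, clf)
        x = unlabeled.pop(idx)       # U <- U \ {x}
        y = oracle(x)                # acquire the label from the oracle
        labeled.append((x, y))       # L <- L u {(x, y)}
        clf = train(labeled)         # retrain on the enlarged labeled set
    return labeled, clf
```

Any selection strategy discussed in this article would plug in through the `select` callback; only the scoring of candidates differs between strategies.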

We use a kernel-based classifier with kernel K which describes the similarity of two instances \({\varvec{x}}\) and \({\varvec{x}}'\). In our experiments, we use three different kernels (see Sect. 6) but our method is not restricted to these kernels.

Definition 2

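(Kernel Frequency Estimate) The kernel frequency estimate \({{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}\) of an instance \({\varvec{x}}\) is determined using the set of labeled instances \({\mathcal {L}}\). The y-th element \(k_{{\varvec{x}},y}^{{\mathcal {L}}}\) of that C-dimensional vector describes the similarity-weighted number of labels of class y (see Footnote 2):

\[ k_{{\varvec{x}},y}^{{\mathcal {L}}} = \sum _{({\varvec{x}}', y') \in {\mathcal {L}}} \mathbb {1}_{y = y'} \, K({\varvec{x}}, {\varvec{x}}') \]

We denote \(f^{\mathcal {L}}\) as a classifier which uses the labeled data \({\mathcal {L}}\) for training (see Footnote 3). Similar to the Parzen Window Classifier (PWC) used in Chapelle (2005), the classifier \(f^{\mathcal {L}}\) predicts the most frequent class, i. e., the class with the largest element \(k_{{\varvec{x}},y}^{{\mathcal {L}}}\) of the kernel frequency estimate:

\[ f^{\mathcal {L}}({\varvec{x}}) = \mathop {\mathrm {arg\,max}}_{y \in {\mathcal {Y}}} \; k_{{\varvec{x}},y}^{{\mathcal {L}}} \]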

Our method requires estimating kernel frequencies, which is straightforward for the PWC but also possible for other classifiers. For example, Beyer et al. (2015) estimate kernel frequencies (called label statistics) for Naive Bayes, k-Nearest Neighbour, and Tree-Based classifiers.
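To make Definition 2 and the Parzen window prediction concrete, the following sketch computes kernel frequency estimates and the resulting class decision. It is an illustration under stated assumptions: the function names are hypothetical, classes are indexed 0 to C-1 (the text uses 1 to C), an RBF kernel with an arbitrary bandwidth stands in for K, and ties (including the case of an empty labeled set) are broken in favor of the lowest class index.

```python
import math


def rbf_kernel(x, x2, gamma=1.0):
    """Similarity of two instances; stand-in for the kernel K."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, x2)))


def kernel_frequencies(x, labeled, n_classes, kernel=rbf_kernel):
    """Similarity-weighted label counts per class around x."""
    k = [0.0] * n_classes
    for x_l, y_l in labeled:
        k[y_l] += kernel(x, x_l)   # each label counts with its similarity to x
    return k


def pwc_predict(x, labeled, n_classes, kernel=rbf_kernel):
    """Predict the class with the largest kernel frequency."""
    k = kernel_frequencies(x, labeled, n_classes, kernel)
    return max(range(n_classes), key=lambda y: k[y])


labeled = [((0.0,), 0), ((1.0,), 1)]
print(kernel_frequencies((0.0,), labeled, n_classes=2))
print(pwc_predict((0.9,), labeled, n_classes=2))
```

The frequency vector, rather than the predicted label alone, is what the later sections build on, since its magnitude reflects how much labeled evidence exists near an instance.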

4 Toward optimal probabilistic active learning using a Bayesian prior

The idea of our approach is to estimate the expected performance gain that a new instance would provide if we acquired its label from the oracle. Then, we select the most promising instance for actual labeling. Within the next subsections, we explain the necessary steps towards the final method.

4.1 Estimating the risk

In this article, we use the misclassification error as our performance measure (this can easily be changed). To optimize this performance, we minimize the estimated risk using the zero-one loss similarly to Vapnik (1995).

Definition 3

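(Risk, Zero-one Loss) The risk describes the expected value of the loss L with respect to the joint distribution \(p({\varvec{x}},y)\) given a classifier \(f^{\mathcal {L}}\):

\[ R(f^{\mathcal {L}}) = \mathop {\mathbb {E}}_{p({\varvec{x}},y)} \left[ L(y, f^{\mathcal {L}}({\varvec{x}})) \right] = \mathop {\mathbb {E}}_{p({\varvec{x}})} \left[ \mathop {\mathbb {E}}_{p(y |{\varvec{x}})} \left[ L(y, f^{\mathcal {L}}({\varvec{x}})) \right] \right] \]

The zero-one loss returns 0 if the prediction of the classifier \(f^{\mathcal {L}}({\varvec{x}})\) is equal to the true class y and 1 otherwise:

\[ L(y, f^{\mathcal {L}}({\varvec{x}})) = \begin{cases} 0 & \text {if } f^{\mathcal {L}}({\varvec{x}}) = y, \\ 1 & \text {otherwise.} \end{cases} \]

As the generator \(p({\varvec{x}})\) is not observable, we use a Monte-Carlo integration using a set of instances \({\mathcal {E}}\) which is able to represent the generator. For simplicity, we use the complete set of available instances, i. e. the labeled and the unlabeled data (\({\mathcal {E}}= \{{\varvec{x}}:({\varvec{x}},y) \in {\mathcal {L}}\} \cup {\mathcal {U}}\)). Following the notation of Japkowicz and Shah (2011), we calculate the empirical risk \(R_{{\mathcal {E}}}\) as follows:

\[ R_{{\mathcal {E}}}(f^{\mathcal {L}}) = \frac{1}{|{\mathcal {E}}|} \sum _{{\varvec{x}}\in {\mathcal {E}}} \sum _{y \in {\mathcal {Y}}} p(y |{\varvec{x}}) \, L(y, f^{\mathcal {L}}({\varvec{x}})) \tag{7} \]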

4.2 Introducing a conjugate prior

The conditional class probability \(p(y|{\varvec{x}})\) from Eq. (7) depends on the ground truth t which is unknown (see Fig. 1):

\[ p(y |{\varvec{x}}) = p(y |t({\varvec{x}})) = p(y |{{\varvec{p}}}) = \mathrm {Cat}(y |{{\varvec{p}}}) = p_y \tag{8} \]

As a consequence, the probability \(p(y |{\varvec{x}})\) is exactly the y-th element of the unknown ground truth vector \({{\varvec{p}}}\). We can use the nearby labels from \({\mathcal {L}}\) (represented in \({{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}}\), Definition 2) to estimate the ground truth \({{\varvec{p}}}\) as the oracle provides the labels according to \({{\varvec{p}}}\) (see Fig. 1). If we assume a smooth distribution \({{\varvec{p}}}\) (in the sense that small changes of \({\varvec{x}}\) do not change \({{\varvec{p}}}\) much), the estimate with an appropriate kernel is close to the ground truth with sufficiently many labels. Although the latter cannot be assumed in all cases, domain experts usually have lots of experience when describing a kernel function for their data. Moreover, the results of our experiments in Sect. 6 show that our method also works with a kernel using a simple heuristic. For estimation, we use a Bayesian approach by determining the posterior predictive distribution, i. e. calculating the expected value over all possible ground truth values \({{\varvec{p}}}\) (see Murphy (2006) for details on predictive distributions):

\[ p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) = \mathop {\mathbb {E}}_{p({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})} \left[ p_y \right] = \int p({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) \, p_y \, \mathrm {d}{{\varvec{p}}} \tag{9} \]

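To determine the posterior probability \(p({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})\) of the ground truth \({{\varvec{p}}}\) at instance \({\varvec{x}}\), we use Bayes’ theorem in Eq. (10). The likelihood \(p({{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}} |{{\varvec{p}}})\) is a multinomial distribution as each label y has been drawn from \(\mathrm {Cat}(y |{{\varvec{p}}})\) (see Fig. 1 and Footnote 4). We introduce a prior \(p({{\varvec{p}}})\) which we choose to be a Dirichlet distribution with parameter \({\varvec{\alpha }}\in {\mathbb {R}}^C\) as this is the conjugate prior of the multinomial distribution. We choose an indifferent prior and set each element to the same value (\(\alpha _{1} = \ldots = \alpha _{C} \in {\mathbb {R}}^{>0}\)) such that none of the classes is favoured. Using this prior can be seen as adding \(\alpha _{y}\) pseudo-instances to every class y (Bishop 2006, p. 77). This means that in case of high values of \({\varvec{\alpha }}\), we need many labeled instances (i. e., high frequency estimates \({{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}}\)) to get distinct posterior probabilities.

\[ p({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) = \frac{p({{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}} |{{\varvec{p}}}) \, p({{\varvec{p}}})}{p({{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})} \tag{10} \]

As we use the conjugate prior of the multinomial likelihood, there exists an analytic solution for the posterior which is a Dirichlet distribution (Murphy 2006):

\[ p({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) = \mathrm {Dir}({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}} + {\varvec{\alpha }}) \]

Now, we determine the conditional class probability \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}})\) from Eq. (9) by calculating the expected value of the Dirichlet distribution (Murphy 2006):

\[ p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) = \mathop {\mathbb {E}}_{\mathrm {Dir}({{\varvec{p}}} |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}} + {\varvec{\alpha }})} \left[ p_y \right] = \frac{k_{{\varvec{x}},y}^{{\mathcal {L}}} + \alpha _y}{|| {{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}} + {\varvec{\alpha }} ||_1} \tag{14} \]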

The last term describes the y-th element of the normalized vector \({{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}}+ {\varvec{\alpha }}\). For normalization, we use the sum of all elements denoted as the 1-norm \(|| \cdot ||_1\).
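The effect of the prior on the estimated class probabilities can be checked with a few lines of code. The sketch below assumes a symmetric prior given as a single scalar `alpha` (matching the indifferent prior described above); the function name `class_posterior` is hypothetical.

```python
def class_posterior(k, alpha):
    """Posterior predictive class probabilities: normalize k + alpha.

    k     : list of kernel frequencies, one entry per class
    alpha : scalar value used for every element of the Dirichlet prior
    """
    total = sum(k) + alpha * len(k)          # 1-norm of k + alpha
    return [(k_y + alpha) / total for k_y in k]


# No labels nearby: the estimate falls back to the uniform prior.
print(class_posterior([0.0, 0.0], alpha=1.0))    # [0.5, 0.5]

# Two similarity-weighted labels of class 0 with a strong prior ...
print(class_posterior([2.0, 0.0], alpha=1.0))    # [0.75, 0.25]

# ... and with a weak prior, which yields a far more confident estimate.
print(class_posterior([2.0, 0.0], alpha=1e-3))
```

With a large `alpha`, many labels are needed before the estimate moves away from uniform, which is the pseudo-instance interpretation given in the text.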

4.3 Risk difference using the conjugate prior

We insert Eq. (14) into the empirical risk (Eq. (7)). As we approximate \(p(y |{\varvec{x}})\) with \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}})\), this is an approximation of the empirical risk based on the labeled data \({\mathcal {L}}\). Hence, we add \({\mathcal {L}}\) as an argument of the estimated empirical risk:

\[ {\hat{R}}_{{\mathcal {E}}}(f^{\mathcal {L}}, {\mathcal {L}}) = \frac{1}{|{\mathcal {E}}|} \sum _{{\varvec{x}}\in {\mathcal {E}}} \sum _{y \in {\mathcal {Y}}} p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}}) \, L(y, f^{\mathcal {L}}({\varvec{x}})) \]

We now assume that we add a new labeled candidate \(({\varvec{x}}_c, y_c)\) to the labeled set \({\mathcal {L}}\) and denote the new set \({{\mathcal {L}}^+ = {\mathcal {L}}\cup \{({\varvec{x}}_c, y_c)\}}\). To determine how much this new instance-label-pair improved the performance of our classifier f, we estimate the gain in terms of risk difference using the probability \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+})\) to estimate the ground truth. We here use the same observations \({{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+}\) to estimate the risk of both the current and the new classifier (with simulated labels) whereas Roy and McCallum (2001) use \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})\) for estimating the performance of \(f^{{\mathcal {L}}}\) and \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+})\) for \(f^{{\mathcal {L}}^+}\). We have two reasons to believe that we are correct: (1) When using different ground truth estimates for calculating the risks, we would not find out if a difference comes from the change in the classifier or from the change in the ground truth function. (2) In this article, we assume that the oracle is omniscient and, therefore, always correct. Consequently, we can assume that more labels should provide more accurate estimates. Accordingly, \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+})\) should also be used for the current classifier \(f^{\mathcal {L}}\). Using this estimate for both classifiers, the risk difference reads:

\[ \Delta {\hat{R}}_{{\mathcal {E}}} = \frac{1}{|{\mathcal {E}}|} \sum _{{\varvec{x}}\in {\mathcal {E}}} \sum _{y \in {\mathcal {Y}}} p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+}) \left( L(y, f^{{\mathcal {L}}^+}({\varvec{x}})) - L(y, f^{{\mathcal {L}}}({\varvec{x}})) \right) \tag{17} \]

4.4 The expected probabilistic gain

If we reduce the error under the new model \({\mathcal {L}}^+\), the risk difference in Eq. (17) becomes negative. Therefore, we negate this term as we aim to maximize the gain in Definition 4.

Definition 4

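(Expected Probabilistic Gain) The probabilistic gain describes the expected change in classification risk R when acquiring the label \(y_c\) of candidate \({{\varvec{x}}_c}\in {\mathcal {U}}\). As the label \(y_c\) and the corresponding ground truth \(t({{\varvec{x}}_c})\) are unknown, we estimate \(p(y_c |{\varvec{x}}_c)\) with \(p(y_c |{{\varvec{k}}}_{{\varvec{x}}_c}^{\mathcal {L}})\) according to Eq. (14) using \(\mathrm {Dir}({\varvec{\beta }})\) as prior. We write \({\mathcal {L}}^+ = {\mathcal {L}}\cup \{({\varvec{x}}_c, y_c)\}\).

\[ \mathrm {xgain}({\varvec{x}}_c, {\mathcal {L}}, {\mathcal {E}}) = - \sum _{y_c \in {\mathcal {Y}}} \frac{k_{{\varvec{x}}_c, y_c}^{{\mathcal {L}}} + \beta _{y_c}}{|| {{\varvec{k}}}_{{\varvec{x}}_c}^{{\mathcal {L}}} + {\varvec{\beta }} ||_1} \cdot \frac{1}{|{\mathcal {E}}|} \sum _{{\varvec{x}}\in {\mathcal {E}}} \sum _{y \in {\mathcal {Y}}} \frac{k_{{\varvec{x}}, y}^{{\mathcal {L}}^+} + \alpha _{y}}{|| {{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+} + {\varvec{\alpha }} ||_1} \left( L(y, f^{{\mathcal {L}}^+}({\varvec{x}})) - L(y, f^{{\mathcal {L}}}({\varvec{x}})) \right) \tag{19} \]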

For simplicity, we set \({\varvec{\beta }}= {\varvec{\alpha }}\).

We define the selection strategy xPAL to choose the candidate that optimizes the \(\mathrm {xgain}\) score.

Definition 5

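(Selection Strategy: xPAL) The selection strategy xPAL (Expected Probabilistic Gain for AL) chooses the candidate \({\varvec{x}}_c^* \in {\mathcal {U}}\) with:

\[ {\varvec{x}}_c^* = \mathop {\mathrm {arg\,max}}_{{\varvec{x}}_c \in {\mathcal {U}}} \; \mathrm {xgain}({\varvec{x}}_c, {\mathcal {L}}, {\mathcal {E}}) \]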
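The selection rule of Definitions 4 and 5 can be sketched end to end for a Parzen window classifier. This is a naive illustration written from the description in the text, not the authors' implementation: all function names are hypothetical, classes are indexed from 0, the prior is a scalar with \({\varvec{\beta }}= {\varvec{\alpha }}\), and an RBF kernel with an arbitrary bandwidth stands in for K. For the zero-one loss, the inner sum over y reduces to a difference of two posterior entries, which the code uses directly.

```python
import math


def rbf(x, x2, gamma=1.0):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, x2)))


def freq(x, labeled, n_classes, kernel=rbf):
    """Kernel frequency estimate of x given the labeled set."""
    k = [0.0] * n_classes
    for x_l, y_l in labeled:
        k[y_l] += kernel(x, x_l)
    return k


def posterior(k, alpha):
    """Posterior predictive class probabilities (normalized k + alpha)."""
    total = sum(k) + alpha * len(k)
    return [(k_y + alpha) / total for k_y in k]


def predict(k):
    """Most frequent class; ties go to the lowest class index."""
    return max(range(len(k)), key=lambda y: k[y])


def xgain(x_c, labeled, eval_set, n_classes, alpha=1e-3, kernel=rbf):
    """Expected reduction in estimated zero-one risk when labeling x_c."""
    p_c = posterior(freq(x_c, labeled, n_classes, kernel), alpha)
    old = [freq(x, labeled, n_classes, kernel) for x in eval_set]
    gain = 0.0
    for y_c in range(n_classes):              # simulate every possible label
        delta = 0.0
        for x, k_old in zip(eval_set, old):
            k_new = list(k_old)
            k_new[y_c] += kernel(x, x_c)      # frequencies under L+
            y_old, y_new = predict(k_old), predict(k_new)
            if y_old != y_new:                # risk changes only if the decision changes
                p = posterior(k_new, alpha)   # same estimate for both classifiers
                delta += p[y_old] - p[y_new]  # risk(new) - risk(old) at x
        gain -= p_c[y_c] * delta / len(eval_set)
    return gain


def xpal_select(unlabeled, labeled, n_classes, alpha=1e-3, kernel=rbf):
    """Index of the candidate with the largest expected gain."""
    eval_set = [x for x, _ in labeled] + list(unlabeled)
    return max(range(len(unlabeled)),
               key=lambda i: xgain(unlabeled[i], labeled, eval_set,
                                   n_classes, alpha, kernel))
```

In a toy pool with one label at 0.0 and candidates at 0.1 and 3.0, the sketch prefers the distant candidate: next to the existing label the posterior at the candidate is already confident, so a differing label is considered unlikely and the expected gain stays small. The loop recomputes frequencies for every candidate and simulated label, so it is only suitable for small pools.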

5 Theoretical and qualitative comparison

To provide an understanding of how the xPAL selection strategy works, we compare our new method to the most similar selection strategies by reformulating their approaches within our mathematical framework wherever possible. We provide the proofs for all theorems in the supplemental material. In Table 1, we summarize the primary differences and show the computational complexity.

Fig. 2 Visualization of acquisition behavior for different selection strategies. The green color indicates how useful a selection strategy considers a region. The usefulness depends on the selection criterion of the strategy. The eight labeled instances have been selected by the corresponding selection strategy. Thereby, one can see where the selection strategy selected instances in the past and how the usefulness is spatially distributed to select the next instance for labeling.

In Fig. 2, we illustrate how the theoretical differences affect the actual choice of eight candidates on a toy dataset with two classes (blue diamonds and red rectangles). For classification, we use the same setup as in Sect. 6. The first eight labeled instances, chosen by the selection strategy, are marked with a gray circle. The background color shows how the respective selection strategy rates the usefulness of an area—darker areas are considered more useful than brighter areas.

5.1 Expected probabilistic gain for AL (xPAL)

As seen in Fig. 2, the currently labeled set \({\mathcal {L}}\) of xPAL is evenly spaced across the input space. That is, xPAL queried representative samples of the data set in the more explorative phase at the beginning, which leads to a rather good decision boundary with only eight labels. Focusing on the current usefulness scores indicated by green background color, we see that regions close to the decision boundary and regions with very few labels (green area at the bottom) are preferred. Moreover, we notice more usefulness at the right decision boundary compared to the left one as this area is seen as being more relevant (due to the higher density).

5.2 Expected error reduction (EER)

Theorem 1

The selection criterion of expected error reduction (EER) by Roy and McCallum (2001) can be written as follows. The extension of adding a beta-prior \(\varvec{\epsilon }\) proposed by Chapelle (2005) is given in blue color.

Comparing Eq. (21) to Eq. (19), we see that there are only a few differences highlighted in orange color. The main difference is the optimization objective as expected error reduction tries to query instances that minimize the expected error instead of the expected gain as in xPAL. Originally, Roy and McCallum (2001) also introduced the reduction in error as the optimization criterion. By using \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})\) to estimate the risk for the current classifier, the corresponding term is constant for all candidates and can be omitted (see also the discussion in Sect. 4.3). Second, EER neglects the labeled instances \({\mathcal {L}}\) as it only uses \({\mathcal {U}}\) for Monte-Carlo integration. They assume that the unlabeled instances approximate the generator \(p({\varvec{x}})\) sufficiently well. In the original version, Roy and McCallum (2001) point out that the posterior estimates need to be reliable. Later, Chapelle (2005) addresses this limitation by introducing a beta-prior \({\epsilon }\) (highlighted in blue), which serves a similar goal as our prior \({\varvec{\alpha }}\).

Although the theoretical differences of the two strategies seem to be small, we see a clear difference in the acquired instances and in the usefulness estimation in Fig. 2. Interestingly, the region close to the decision boundary is considered the least useful. Accordingly, EER neglects information there. Hence, this detail in the model has a huge impact on the selection and also the performance.

5.3 Probabilistic active learning (PAL)

Theorem 2

The selection criterion of (multi-class) probabilistic active learning (PAL) by Kottke et al. (2016) can be written as follows.

The probabilistic active learning approach by Kottke et al. (2016) does not consider a set \({\mathcal {E}}\) for risk estimation but estimates the risk locally only for the candidate \({\varvec{x}}_c\). Hence, we set \({\mathcal {E}}= \{{\varvec{x}}_c\}\). Instead, they include an estimated density weight \({\hat{p}}({\varvec{x}}_c)\) for their local gain. As a prior distribution, they use the indifferent prior \(\mathbf {1}\). The original method is non-myopic. As xPAL is myopic, we ignore this for the theoretical discussion. Note that the original PAL version also uses \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}})\) to estimate the performance of the current classifier similarly to Roy and McCallum (2001). Due to a difference in calculating the performance estimate, we can show that the original PAL equations can also be written as in Eq. (22). So in fact, they were using \(p(y |{{\varvec{k}}}_{{\varvec{x}}}^{{\mathcal {L}}^+})\) without being aware of it. The complete two-page proof is given in the appendix.

In general, we see a similar acquisition behavior of PAL and xPAL (see Fig. 2). We see areas of high usefulness near the decision boundary and in sparsely labeled regions. It seems that xPAL is more sensitive to the actual position of the instances as it considers the set \({\mathcal {E}}\), and PAL only approximates this by using the density \({\hat{p}}({\varvec{x}}_c)\). Hence, the influence of a new label on the complete classification task is only approximated in PAL.

5.4 Uncertainty sampling (US)

Theorem 3

The selection criterion of confidence-based uncertainty sampling (US) by Lewis and Gale (1994) can be written as follows.

Uncertainty sampling does not consider a set for risk estimation, but it solely estimates the error at the candidate \({\varvec{x}}_c\) based on the current observations without any prior. Hence, it completely relies on the class posterior estimates from the classifier. Therefore, it might overestimate its certainty.
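The overconfidence issue can be illustrated with a least-confidence score computed from kernel frequencies without any prior. The sketch is an assumption-laden illustration, not the formulation of Theorem 3: the function names are hypothetical, and the fallback to a uniform estimate when no frequencies are available is a choice made here.

```python
def least_confidence(k):
    """Uncertainty score 1 - max_y p(y|x) using unsmoothed frequencies."""
    total = sum(k)
    if total == 0.0:
        # Assumption: with no evidence at all, treat the posterior as uniform.
        return 1.0 - 1.0 / len(k)
    return 1.0 - max(k) / total


def us_select(frequencies):
    """Index of the candidate with the highest uncertainty score."""
    return max(range(len(frequencies)),
               key=lambda i: least_confidence(frequencies[i]))


# Candidate 0 lies far from any label (tiny evidence, all for class 0);
# candidate 1 lies near the current decision boundary.
frequencies = [[1e-6, 0.0], [0.6, 0.4]]
print(least_confidence(frequencies[0]))   # 0.0: maximal confidence on almost no evidence
print(us_select(frequencies))             # 1
```

Normalizing the frequencies discards their magnitude, so a region with almost no labeled evidence can look perfectly certain; adding a prior as in Sect. 4.2 would pull such estimates back toward uniform.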

We observe this problem in Fig. 2 as US only finds one decision boundary and sticks to exploiting it. As it is not aware that the class posteriors on the left are highly unreliable (no labeled data there), it will only consider this region once the labels of all other candidates have been acquired. We notice a lack of exploration.

5.5 Active learning with cost embedding (ALCE)

The approach proposed by Huang and Lin (2016) uses an embedding with some special distance measure in a hidden space with non-metric multidimensional scaling. As this follows an entirely different way of approaching the problem, it is not possible to transfer this algorithm to our framework. As shown in Fig. 2, this approach explores the data space quite uniformly and is more exploratory than exploitative.

5.6 Query by committee (QBC)

Query by committee (Seung et al. 1992) uses an ensemble of classifiers that are trained on bootstrapped replicates of the labeled set \({\mathcal {L}}\). With few labels, the strategy explores the dataset due to high randomness in the subsets (see Fig. 2). Later, it starts exploiting more.

6 Experimental evaluation

To evaluate the quantitative performance of xPAL, we conduct experiments on real-world datasets (see Footnote 5). We provide information on the used datasets, algorithms, and the experimental setup. We compare xPAL to state-of-the-art methods using scikit-activeml (Kottke et al. 2021; see Footnote 6) and show how the prior parameter affects the results.

6.1 Datasets and competitors

We selected 27 datasets from the openML library (Vanschoren et al. 2013) and two pre-processed text datasets from Hernández-González et al. (2018) with TF-IDF features. For the latter, we assigned the majority vote as the true class. In the supplemental material, we list all used datasets with their openML-identifier and show specific characteristics such as the number of instances, features, and instances per class.

Next to xPAL, we use multi-class probabilistic AL (PAL) by Kottke et al. (2016), confidence-based uncertainty sampling (US) by Lewis and Gale (1994), active learning with cost embedding (ALCE) by Huang and Lin (2016), query by committee (QBC) by Seung et al. (1992), expected error reduction (EER) by Chapelle (2005), and a random selector. We set all parameters according to the default values in the respective papers. For QBC, the disagreement within the randomly drawn sets, measured by the Kullback-Leibler divergence, describes the usefulness of a candidate. We use 25 classifiers as the committee and each of them is trained on a bootstrapped version of \({\mathcal {L}}\) with only a selection of features according to Shi et al. (2008).

Additionally, we implemented a baseline that has additional access to all labels of the unlabeled set \({\mathcal {U}}\). It successively (greedily) selects the candidate that minimizes the true empirical risk on \({\mathcal {U}}\) and \({\mathcal {L}}\), called GREEDY-ALL. It is equal to xPAL where the estimated class probability from Eq. (8) is set to one for the true class.

6.2 Experimental setup

To evaluate our experiments, we randomly split each dataset into a training set consisting of \(60 \%\) of the instances and a test set containing the remaining \(40 \%\) and repeat that 100 times. As we start without any labeled instances, \({\mathcal {U}}\) contains the whole training set at the beginning, and \({\mathcal {L}}\) is empty. We acquire 200 labels for every dataset or stop when \({\mathcal {U}}\) is empty.

For classification, we use the Parzen window classifier for all selection strategies. We applied three different kernels depending on the type of data. For numerical data, we z-standardize all features and use a radial basis function (RBF) kernel with bandwidth \(\gamma\) which is defined as follows:

\[ K({\varvec{x}}, {\varvec{x}}') = \exp \left( -\gamma \, || {\varvec{x}}- {\varvec{x}}' ||^2 \right) \]

We set the bandwidth of the kernel (\(\gamma = 1/(2s^2)\)) according to the mean criterion proposed by Chaudhuri et al. (2017) with \(\sigma _p = 1\):

For categorical data, we use the Hamming distance kernel proposed by Hutter et al. (2014):

where the hyperparameter \(\gamma\) is again determined through the mean bandwidth criterion.

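For the text datasets which contain TF-IDF features, we apply the cosine similarity kernel:

\[ K({\varvec{x}}, {\varvec{x}}') = \frac{{\varvec{x}}^{\mathrm {T}} {\varvec{x}}'}{|| {\varvec{x}}||_2 \, || {\varvec{x}}' ||_2} \]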
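Two of the kernels named above have standard textbook forms, which the following sketch implements with plain Python lists. The function names are hypothetical, the bandwidth is passed in by the caller rather than derived from the mean criterion, and returning 0 for an all-zero vector in the cosine kernel is a choice made here. The Hamming distance kernel is left out because its exact form is not given in this section.

```python
import math


def rbf_kernel(x, x2, gamma):
    """RBF kernel exp(-gamma * ||x - x2||^2) for numerical features."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, x2))
    return math.exp(-gamma * squared_distance)


def bandwidth_to_gamma(s):
    """Convert a bandwidth s into gamma = 1 / (2 s^2), as stated in the text."""
    return 1.0 / (2.0 * s ** 2)


def cosine_kernel(x, x2):
    """Cosine similarity, e.g. for TF-IDF vectors."""
    dot = sum(a * b for a, b in zip(x, x2))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in x2))
    if norm == 0.0:
        return 0.0   # assumption: an empty document is similar to nothing
    return dot / norm


gamma = bandwidth_to_gamma(1.0)
print(rbf_kernel((0.0, 0.0), (1.0, 0.0), gamma))
print(cosine_kernel((1.0, 1.0), (2.0, 2.0)))
```

Both functions return values in a range where 1 means identical instances, so they can be used interchangeably as similarity weights in the kernel frequency estimate.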

6.3 Comparison between xPAL and competitors

We visualize our results using learning curves in Fig. 3 and rank statistics in Figs. 4, 5, and 6. More results are given in the appendix. The learning curves show the misclassification error (averaged over the 100 repetitions) on the test set after each label acquisition for every combination of an algorithm and a dataset. The learning curve that reaches a low error fast is considered best. For each curve, we show the standard error \(\frac{\sigma }{\sqrt{N}}\) over all repetitions. Due to space limitations, we only show xPAL with \({\varvec{\alpha }}= \mathbf {10^{-3}}\) and \({\varvec{\alpha }}= \mathbf {1}\). In Sect. 6.4, we show that xPAL is superior for all tested priors and that the results do not change much.

Fig. 3 Learning curves for six selected datasets. Each plot shows the misclassification error of xPAL and the competing algorithms w. r. t. the number of acquired labels. The learning curve that reaches a low error fast is considered best. The bars denote the standard error (\(\frac{\sigma }{\sqrt{N}}\)). The plots of the remaining 16 datasets are given in the supplemental material.

Almost all learning curves show that the supervised baseline (GREEDY-ALL) performs perfectly in an early phase. This is not surprising as it knows all labels (even from the unlabeled set \({\mathcal {U}}\)) to optimize the error on the training set. As seen in steel-plates-fault, this baseline does not achieve the best performance in all cases because of the greedy selection (no look-ahead). In that example, an optimal baseline would need to create a strategy for more than just the upcoming candidate. The xPAL approach (green, bold line) with \({\varvec{\alpha }}= \mathbf {10^{-3}}\) also performs well. For comparison, we also plotted xPAL with \({\varvec{\alpha }}= \mathbf {1}\). The differences between both curves are rather small.

Fig. 4 The mean rank for all combinations of selection strategies and numerical datasets (RBF kernel) across 100 repetitions. The best strategy is printed in bold. Three stars (***) indicate significantly better results of xPAL with a p value of 0.001, two stars (**) a p value of 0.01, and one star (*) a p value of 0.05. Analogously, significantly better performance of a competitor is shown with \(\dag\).

As it remains difficult to quantitatively assess the performance due to the large number of datasets, we provide the mean rank plots in Figs. 4, 5, and 6. For this purpose, we calculated the rank of the area under the learning curve for each of the 100 repetitions and averaged this rank for every combination of a selection strategy and a dataset. As stated in Sect. 6.2, we calculated the performance for up to 200 labels. From this point, most learning curves do not change much anymore, as one can see in Fig. 3. Hence, the area under the learning curve provides a good overview of the whole learning process. To investigate the learning process at one specific point (e.g., at the beginning), the learning curves (Figs. 3, 10, 11 and 12) are more meaningful. We use color to visualize the performance: blue indicates a good rank, and red indicates a bad one. The rank of the best algorithm is printed in bold. Moreover, we performed a Wilcoxon signed-rank test to assess whether the pairwise differences between xPAL and its competitors are significant. Three stars (***) indicate significantly better results of xPAL with a p value of 0.001, two stars (**) a p value of 0.01, and one star (*) a p value of 0.05. Analogously, significantly better performance of a competitor is shown with \(\dag\). We obtain the mean column (right) by averaging the ranks over all datasets. The pattern (a/b/c) in the second row of each cell summarizes (a) the number of highly significant wins, (b) the number of datasets with neither, and (c) the number of highly significant losses.
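The ranking procedure can be sketched as below. The sketch assumes the area is computed with the trapezoidal rule at unit spacing and that ties share the average rank; neither detail is stated in the text, and the function names are hypothetical. The significance test is not included.

```python
def area_under_curve(errors):
    """Trapezoidal area under a learning curve with unit step width (assumed)."""
    return sum((a + b) / 2.0 for a, b in zip(errors, errors[1:]))


def average_ranks(values):
    """Rank 1 goes to the smallest value; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared_rank = (pos + end) / 2.0 + 1.0
        for j in range(pos, end + 1):
            ranks[order[j]] = shared_rank
        pos = end + 1
    return ranks


def mean_ranks(curves_per_strategy):
    """Average the per-repetition rank of each strategy on one dataset.

    curves_per_strategy: dict mapping a strategy name to a list of
    learning curves, one per repetition (same order for all strategies).
    """
    names = list(curves_per_strategy)
    n_repetitions = len(curves_per_strategy[names[0]])
    totals = {name: 0.0 for name in names}
    for r in range(n_repetitions):
        areas = [area_under_curve(curves_per_strategy[name][r]) for name in names]
        for name, rank in zip(names, average_ranks(areas)):
            totals[name] += rank
    return {name: totals[name] / n_repetitions for name in names}
```

Since the curves show the misclassification error, a smaller area is better and receives the lower rank.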

We separated the ranking plots w. r. t. the kernel function. Figure 4 shows results with the RBF kernel, Fig. 5 with the hamming-distance kernel, and Fig. 6 with the cosine similarity kernel. One can observe that xPAL has the lowest mean rank for all kernels and is always printed in a bluish color across the datasets. No other algorithm performs as robustly. The strongest competitor is PAL. But on the categorical data, we observe a clear performance difference between PAL and xPAL. One reason might be the difficulty of obtaining a reliable density estimation for categorical data.

6.4 Robustness of prior parameter

In Fig. 7, we show the mean ranking over all numerical datasets for different choices of priors \({\varvec{\alpha }}\). Compared to the other strategies (left image), there is only a small difference across all choices. Comparing xPAL with \({\varvec{\alpha }}=\mathbf {10^{-3}}\) to the other priors (right image), we see that there are datasets where the selected xPAL is significantly outperformed, but in general, the effect is negligible. Also, all mean ranks are between 3.27 and 3.63, which validates the robustness of our parameter. We propose to use \({\varvec{\alpha }}=\mathbf {10^{-3}}\) as default.

6.5 Computation time

In Table 1, we already showed the theoretical time complexity. In this section, we show the actual computation time, which also depends on the efficiency of the implementation. To this end, we artificially generated datasets with \(500, 1000, \dots , 2500\) instances and 2, 4, 6 classes. With every selection strategy, we acquired 200 labels and report the mean computation time on a personal computer in Fig. 8. For EER and xPAL, we clearly see the dependence on \(|{\mathcal {U}}|\) and \(|{\mathcal {E}}|\), respectively. As xPAL only needs to calculate the loss difference on instances where the decision actually changes, we can reduce the computation time by a significant amount. Because of the inefficient optimization in PAL, xPAL is even comparably fast to PAL for datasets with fewer than 1000 instances.

7 Conclusion

In this article, we moved toward optimal probabilistic AL by proposing xPAL. It is a decision-theoretic approach that determines the expected performance gain for labeling a candidate using a conjugate prior. We used this model to show the similarities and differences to the most related approaches and compared them by showing how each method selects its instances in a synthetic example. Moreover, we provide an exhaustive experimental evaluation indicating the superiority of xPAL and the robustness of its prior parameter.

In future work, we aim to apply this idea to other cost-sensitive loss functions and to error-prone annotators, as this is a current limitation of this article. Moreover, we research possibilities to use the concept of xPAL to define a stopping criterion and to apply it to other classifier types. The combination of xPAL with methods of deep learning is also promising. However, several challenges need to be addressed, such as unreliable estimates of the class probabilities and the estimation of the vector \({{\varvec{k}}}_{{\varvec{x}}_c}^{{\mathcal {L}}}\). The former might be solvable by using techniques that improve the returned probabilities (e. g., by using Bayesian neural networks). The latter could be addressed by transforming samples into a latent representation (e. g., by using variational autoencoders). The resulting features would allow for a kernel density estimation. To extend this idea to regression problems, it will be necessary to combine the normally distributed output with a conjugate prior distribution (e. g., Gaussian-Wishart). This would allow for an analytic solution of the posterior, which enables reliable estimation of the risk.

Notes

We adapt the terms and notation slightly. We use calligraphy for sets, bold font for vectors, and \(p( \cdot )\) is either the probability density function or the probability mass of a discrete probability space. Please note the difference between \({{\varvec{p}}}\) and \(p(\cdot )\) (the latter is always a function).

\(\mathbb {1}_{cond}\) denotes the indicator function which returns 1 if cond is true and 0 otherwise.

To simplify the notation, we do not mention the parameters \(\varvec{\theta }\).

Normally, the multinomial distribution only allows non-negative integers as observations. Hence, we use it as an analogy. As our probability is normalized, we can also calculate its density for real-valued observations \({{\varvec{k}}}_{{\varvec{x}}}^{\mathcal {L}}\).
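One common way to evaluate a multinomial for real-valued observations, assumed here for illustration, replaces the factorials by the Gamma function. The sketch below works in log space for numerical stability; the function name is hypothetical.

```python
import math


def generalized_multinomial_density(k, p):
    """Multinomial with factorials replaced by Gamma functions (assumed form).

    k: non-negative real-valued observations per class.
    p: class probabilities; p[i] must be positive wherever k[i] > 0.
    """
    log_coefficient = math.lgamma(sum(k) + 1.0) - sum(
        math.lgamma(k_i + 1.0) for k_i in k
    )
    log_power = sum(k_i * math.log(p_i) for k_i, p_i in zip(k, p) if k_i > 0)
    return math.exp(log_coefficient + log_power)
```

For integer observations this reduces to the usual multinomial probability mass, because \(\Gamma (n + 1) = n!\).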

References

Baram, Y., Yaniv, R. E., & Luz, K. (2004). Online choice of active learning algorithms. Journal of Machine Learning Research, 5, 255–291.

Beyer, C., Krempl, G., & Lemaire, V. (2015). How to select information that matters: A comparative study on active learning strategies for classification. In Proceedings of the 15th international conference on knowledge technologies and data-driven business, association for computing machinery, i-KNOW ’15, New York, NY, USA.

Bishop, C. M. (2006). Pattern recognition and machine learning. Springer.

Bondu, A., Lemaire, V., & Boullé, M. (2010). Exploration vs. exploitation in active learning: A Bayesian approach. In International joint conference on neural networks (IJCNN) (pp. 1–7). IEEE.

Brinker, K. (2003). Incorporating diversity in active learning with support vector machines. In Proceedings of the 20th international conference on machine learning (ICML) (pp. 59–66).

Calma, A., Reitmaier, T., & Sick, B. (2018). Semi-supervised active learning for support vector machines: A novel approach that exploits structure information in data. Information Sciences, 456, 13–33.

Chapelle, O. (2005). Active learning for parzen window classifier. In Proceedings of the 10th international workshop on artificial intelligence and statistics (AISTATS) (Vol. 5, pp. 49–56).

Chaudhuri, A., Kakde, D., Sadek, C., Gonzalez, L., & Kong, S. (2017). The mean and median criteria for kernel bandwidth selection for support vector data description. In International conference on data mining workshops (ICDMW) (pp. 842–849). IEEE.

Cuong, N. V., Lee, W. S., & Ye, N. (2014). Near-optimal adaptive pool-based active learning with general loss. In Proceedings of the 30th conference on uncertainty in artificial intelligence (UAI) (pp. 122–131).

Dasgupta, S. (2009). The two faces of active learning. In International conference on discovery science (pp. 35–35). Springer.

Donmez, P., Carbonell, J. G., & Bennett, P. N. (2007). Dual strategy active learning. In Proceedings of the European conference on machine learning (ECML) (pp. 116–127). Springer.

Golovin, D., & Krause, A. (2010). Adaptive submodularity: A new approach to active learning and stochastic optimization. In Proceedings of the 23rd conference on algorithmic learning theory (ALT) (pp. 333–345).

Guillory, A., & Bilmes, J. (2010). Interactive submodular set cover. In Proceedings of the 27th International conference on machine learning (ICML).

Hernández-González, J., Rodriguez, D., Inza, I., Harrison, R., & Lozano, J. A. (2018). Two datasets of defect reports labeled by a crowd of annotators of unknown reliability. Data in Brief, 18, 840–845.

Houlsby, N., Huszár, F., Ghahramani, Z., & Lengyel, M. (2011). Bayesian active learning for classification and preference learning. arXiv:1112.5745 [stat.ML].

Huang, K., & Lin, H. (2016). A novel uncertainty sampling algorithm for cost-sensitive multiclass active learning. In Proceedings of the 16th international conference on data mining (ICDM) (pp. 925–930). IEEE.

Hutter, F., Xu, L., Hoos, H. H., & Leyton-Brown, K. (2014). Algorithm runtime prediction: Methods & evaluation. Artificial Intelligence, 206, 79–111.

Japkowicz, N., & Shah, M. (2011). Evaluating learning algorithms: A classification perspective. Cambridge University Press.

Konyushkova, K., Sznitman, R., & Fua, P. (2018). Discovering general purpose active learning strategies. arXiv:1810.04114v2 [cs.LG].

Kottke, D., Krempl, G., Lang, D., Teschner, J., & Spiliopoulou, M. (2016). Multi-class probabilistic active learning. In Proceedings of the European conference on artificial intelligence (ECAI) (pp. 586–594). IOS Press.

Kottke, D., Herde, M., Minh, T. P., Benz, A., Mergard, P., Roghman, A., Sandrock, C., & Sick, B. (2021). scikit-activeml: A library and toolbox for active learning algorithms. Preprints, 2021030194.

Krempl, G., Kottke, D., & Lemaire, V. (2015). Optimised probabilistic active learning (OPAL). Machine Learning, 100(2–3), 449–476.

Lewis, D. D., & Gale, W. A. (1994). A sequential algorithm for training text classifiers. In Proceedings of the 17th annual international conference on research and development in information retrieval (SIGIR) (pp. 3–12). Springer.

Murphy, K. P. (2006). Binomial and multinomial distributions. Technical report, University of British Columbia.

Nguyen, H. T., & Smeulders, A. (2004). Active learning using pre-clustering. In Proceedings of the 21st international conference on machine learning (ICML) (pp. 79–86). ACM Press.

Osugi, T., Kim, D., & Scott, S. (2005). Balancing exploration and exploitation: A new algorithm for active machine learning. In Proceedings of the 5th international conference on data mining (ICDM) (pp. 330–337). IEEE.

Roy, N., & McCallum, A. (2001). Toward optimal active learning through Monte Carlo estimation of error reduction. In Proceedings of the 18th international conference on machine learning (ICML) (pp. 441–448).

Settles, B. (2009). Active learning literature survey. Technical report, University of Wisconsin-Madison Department of Computer Sciences.

Settles, B. (2012). Active learning. No. 18 in Synthesis lectures on artificial intelligence and machine learning. Morgan and Claypool Publishers.

Seung, H. S., Opper, M., & Sompolinsky, H. (1992). Query by committee. In Proceedings of the 5th annual workshop on computational learning theory (COLT) (pp. 287–294). ACM.

Shi, S., Liu, Y., Huang, Y., Zhu, S., & Liu, Y. (2008). Active learning for kNN based on bagging features. In Proceedings of the 4th international conference on natural computation (pp. 61–64), Jinan, China.

Thrun, S. B., & Möller, K. (1992). Active exploration in dynamic environments. In Advances in neural information processing systems (pp. 531–538).

Vanschoren, J., van Rijn, J. N., Bischl, B., & Torgo, L. (2013). OpenML: Networked science in machine learning. SIGKDD Explorations, 15(2), 49–60.

Vapnik, V. N. (1995). The nature of statistical learning theory. Springer.

Wei, K., Iyer, R., & Bilmes, J. (2015). Submodularity in data subset selection and active learning. In Proceedings of the 32nd international conference on machine learning (ICML) (pp. 1954–1963).

Weigl, E., Heidl, W., Lughofer, E., Radauer, T., & Eitzinger, C. (2015). On improving performance of surface inspection systems by online active learning and flexible classifier updates. Machine Vision and Applications, 27(1), 103–127.

Xu, Z., Akella, R., & Zhang, Y. (2007). Incorporating diversity and density in active learning for relevance feedback. In Proceedings of the European conference on information retrieval (ECIR) (pp. 246–257). Springer.

Zoller, T., & Buhmann, J. M. (2000). Active learning for hierarchical pairwise data clustering. In Proceedings 15th international conference on pattern recognition (ICPR) (pp. 186–189). IEEE.

Žliobaitė, I., Bifet, A., Pfahringer, B., & Holmes, G. (2014). Active learning with drifting streaming data. Transactions on Neural Networks and Learning Systems, 25(1), 27–39.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Editors: Annalisa Appice, Sergio Escalera, Jose A. Gamez, Heike Trautmann.

Appendix

1.1 Proof for Theorem 1

In Sect. 2, Roy and McCallum (2001) describe the algorithm: They estimate the expected loss from Eq. (4) using a Monte Carlo approach over \({\mathcal {P}}\) and propose using the unlabeled pool for that purpose. In our work, we call this the candidate set \({\mathcal {U}}\). Their algorithm consists of 4 steps. In short, they calculate the average expected loss for every instance \({\varvec{x}}_c \in {\mathcal {U}}\). To this end, they consider every possible label \(y_c \in {\mathcal {Y}}\) and add the pair \(({\varvec{x}}_c, y_c)\) to the training set \({\mathcal {D}}\) (here: \({\mathcal {L}}\)). They call the resulting set \({\mathcal {D}}^*\) (here: \({\mathcal {L}}^+\)). The resulting expected losses are averaged, weighted with the respective posterior probability \(p(y_c |{\varvec{x}}_c)\).
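The steps summarized above can be sketched as follows. This is an illustration under assumptions, not the implementation of Roy and McCallum (2001): the zero-one loss is used as risk estimate, instances are one-dimensional, and `toy_posterior` is a hypothetical stand-in for the classifier's class posterior.

```python
import math


def toy_posterior(x, labeled, n_classes=2, gamma=1.0):
    """Hypothetical posterior: kernel frequencies with add-one smoothing."""
    k = [1.0] * n_classes
    for x_i, y_i in labeled:
        k[y_i] += math.exp(-gamma * (x - x_i) ** 2)
    total = sum(k)
    return [value / total for value in k]


def expected_error(candidate, labeled, pool, classes, posterior):
    """Expected risk on the pool after hypothetically labeling the candidate."""
    p_candidate = posterior(candidate, labeled)
    expected = 0.0
    for y_c in classes:
        # Simulate the label y_c and retrain on the extended labeled set.
        extended = labeled + [(candidate, y_c)]
        # Assumed risk estimate: 1 - max posterior, averaged over the pool.
        risk = sum(1.0 - max(posterior(x, extended)) for x in pool) / len(pool)
        # Weight the risk by the current posterior of the simulated label.
        expected += p_candidate[y_c] * risk
    return expected


def select_candidate(labeled, pool, classes, posterior):
    """Pick the pool instance with the smallest expected error."""
    return min(pool, key=lambda x: expected_error(x, labeled, pool, classes, posterior))
```

For example, `select_candidate([(0.0, 0), (4.0, 1)], [0.1, 2.0, 3.9], [0, 1], toy_posterior)` returns the pool instance whose simulated labeling yields the lowest estimated risk.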

The posterior probabilities for our kernel-based classifier are determined using Eq. 28. Chapelle (2005) proposed to include a beta-prior and thereby extended the approach by Roy and McCallum (2001).

The resulting equation can be simplified as follows:

\(\square\)

1.2 Proof of Theorem 2

Multi-class probabilistic active learning (PAL) by Kottke et al. (2016) describes the expected gain in accuracy. Instead of evaluating this gain on a representative subset, they solely consider the gain locally. To prove Theorem 2, we need to set the m parameter of PAL to \(m=1\), which means that we only consider one possible label acquisition in each iteration. Kottke et al. (2016) model the hypothetical labels using a labeling vector \(\mathbf {l} \in {\mathbb {N}}^C\) which describes the number of potentially added labels for each class. As we only consider one label at a time (\(m=1\)), these vectors are unit vectors with a 1 at the element of the considered class \(y_c\) and 0 otherwise. Hence, \(\mathbf {l} \in \{\mathbf {e}_1, \dots , \mathbf {e}_C\}\).

For simplicity, we use \({{\varvec{k}}}\) instead of writing \({{\varvec{k}}}_{{{\varvec{x}}_c}}\) as PAL solely considers the candidate \({{\varvec{x}}_c}\) and no other instance. Moreover, we know that \({\varvec{k}}^{{\mathcal {L}}^+}= {{\varvec{k}}}+ \mathbf {e}_{y_c}\), as we increment the frequency estimate of the simulated class \(y_c\) by 1 (the similarity of \({{\varvec{x}}_c}\) to \({{\varvec{x}}_c}\) is always 1). In addition to \(\mathbf {l}\), Kottke et al. (2016) model the classifier’s decision using a vector \(\mathbf {d}\), which is 1 for the class of the future decision and 0 otherwise.

For simplicity, we do not write the iterators at sums and products if they iterate from \(i=1\) to C. Based on the old classifier \(f^{\mathcal {L}}\) and the new classifier \(f^{{\mathcal {L}}^+}\), we write \({\hat{y}} = f^{\mathcal {L}}({{\varvec{x}}_c})\) and \({\hat{y}}^+ = f^{{\mathcal {L}}^+}({{\varvec{x}}_c})\) for the old and the new prediction.

We now insert I, II, III back into Eq. 37.

We divide the sum into two parts: (A) the subset of all labels (\({\mathcal {Y}}_{\ne }\)) that change the decision, and (B) the labels (\({\mathcal {Y}}_=\)) that do not change the decision. Please remember that a new label \(y_c\) can change the decision \({\hat{y}}^+\), as the new classifier includes this label. The subsets are defined as follows:
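The split into the two label subsets can be illustrated with a short sketch. It assumes that the decision is the class with the largest frequency estimate, that ties are resolved toward the lowest class index, and that simulating a label adds 1 to the corresponding entry of the frequency vector; the function names are hypothetical.

```python
def argmax(values):
    """Index of the largest value; ties go to the lowest index (assumption)."""
    return max(range(len(values)), key=lambda i: values[i])


def split_labels(k):
    """Split candidate labels by whether adding them changes the decision.

    k: frequency estimates per class at the candidate.
    Returns (old_decision, labels_that_change, labels_that_do_not_change).
    """
    y_hat = argmax(k)
    changing, unchanged = [], []
    for y_c in range(len(k)):
        k_plus = list(k)
        k_plus[y_c] += 1.0  # simulated label: k + e_{y_c}
        y_hat_plus = argmax(k_plus)
        if y_hat_plus != y_hat:
            changing.append(y_c)
        else:
            unchanged.append(y_c)
    return y_hat, changing, unchanged
```

With `k = [2.0, 1.5, 0.2]`, only the label of class 1 changes the decision, and the new decision then equals that label.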

Now, we consider both cases independently.

A) Labels that change the decision: For all \(y_c \in {\mathcal {Y}}\) with \({\hat{y}} \ne {\hat{y}}^+\), we know that \({\hat{y}}^+ = y_c\).

It follows that \(L(y_c, {\hat{y}}^+) - L(y_c, {\hat{y}}) = -1\).

B) Labels that do not change the decision: Here, we can use the following implications to rewrite the cases from Eq. 45 into the sum:

- \(y_c = {\hat{y}} \Rightarrow {\hat{y}}^+ = y_c\)
- \(y_c \ne {\hat{y}} \Rightarrow {\hat{y}}^+ \ne y_c\)

In the last step, we use that \({\hat{y}} \ne {\hat{y}}^+ \Longrightarrow L({\hat{y}}, {\hat{y}}^+) - L({\hat{y}}, {\hat{y}}) = 1\). Additionally, we use that \(y_c \ne {\hat{y}}\) applies and thus \(k_{{\hat{y}}} = k_{{\hat{y}}}^{{\mathcal {L}}^+}\). Next, we combine both cases:

Because of \(L(y, {\hat{y}}^+) - L(y, {\hat{y}}) = 0 \text { for } y \notin \{y_c, {\hat{y}}\}\) and for \(y_c \in {\mathcal {Y}}_=\), we can change this equation to

\(\square\)

1.3 Proof of Theorem 3

According to Settles (2009), the usefulness score for “least confidence uncertainty sampling” is determined by the following equation and can easily be rewritten. We denote: \({\hat{y}} = f^{\mathcal {L}}({{\varvec{x}}_c})\).
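For orientation, the least confidence score as it is commonly stated following Settles (2009) can be written as below. The symbol \(\text {score}_{\text {LC}}\) is introduced here for illustration only, and the second equality assumes that the classifier predicts the most probable class.

\[\text {score}_{\text {LC}}({{\varvec{x}}_c}) = 1 - p({\hat{y}} \mid {{\varvec{x}}_c}) = 1 - \max _{y \in {\mathcal {Y}}} p(y \mid {{\varvec{x}}_c})\]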

\(\square\)

1.4 Description of datasets

A detailed description of the datasets is available in Table 2. We provide the OpenML identifier, the dataset’s name, the number of instances and features, and the distribution of classes (the list describes the fraction of class 1 in the first element, the fraction of class 2 in the second element, etc.).

1.5 More experimental results

In this section, we provide more plots from our experimental evaluation. Please refer to the original paper for the detailed explanation of the experimental setup and the discussion of the results.

1.6 Usefulness plots with randomly selected labels

See Fig. 9.

Fig. 9 Visualization of acquisition behavior for different selection strategies. The green color indicates how useful a selection strategy considers a region. The usefulness depends on the selection criterion of the strategy. The eight labels have been randomly selected and are similar for all strategies to emphasize how different selection strategies assess the usefulness of different regions.

1.7 Learning curves

1.8 Area under the learning curve

Table 3 describes the averaged area under the learning curve including standard deviations and significance testing with the Wilcoxon signed rank test. The notation is similar to the one from the paper.

1.9 Detailed ranking plots for different parameters

Figure 13 is the detailed version of Fig. 7 (right) in the original paper.

1.10 Execution times and computing infrastructure

Table 4 provides an overview of the execution times over the different selection strategies and datasets. The execution times are averaged over 100 repeated runs, each with a maximum number of 200 instance selections. A single execution time entry indicates the average time in seconds to select a single instance for a given dataset and selection strategy. The execution times are primarily dependent on the number of instances but also on aspects like the number of features and classes, as calculations might become more complex.

All experiments were run on a heterogeneous computer cluster, which might lead to irregular results as the speed varies between the cluster nodes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kottke, D., Herde, M., Sandrock, C. et al. Toward optimal probabilistic active learning using a Bayesian approach. Mach Learn 110, 1199–1231 (2021). https://doi.org/10.1007/s10994-021-05986-9

DOI: https://doi.org/10.1007/s10994-021-05986-9