Abstract

The current trend in science curricula is to adopt a context-based pedagogical approach to teaching. New study materials for this innovation are often designed by teachers working with university experts. In this article, it is proposed that teachers need to acquire corresponding teaching competences to create a context-based learning environment. These competences comprise an adequate emphasis, context establishment, concept transfer, support of student active learning, (re-)design of context-based materials, and assistance in implementation of the innovation. The implementation of context-based education would benefit from an instrument that maps these competences. This paper describes the construction and validation of such an instrument, which uses a mixed-methods approach to measure the context-based learning environment. The composite instrument was tested in a pilot study among 8 teachers and 162 students who use context-based materials in their classrooms. The instrument’s reliability was established and correlating data sources in the composite instrument were identified. Various aspects of validity were addressed and found to be supported by the data obtained. As expected, the instrument revealed that context-based teaching competence is more prominently visible in teachers with experience in designing context-based materials, confirming the instrument’s validity.



Introduction

Context-based education is the innovative approach to science education chosen in many countries to further the interest of students in science and to increase the coherence of the concepts studied in national curricula (Bennett and Lubben 2006; Gilbert 2006; Pilot and Bulte 2006b). A trend in this innovation is to encourage science teachers to collaborate with university experts in designing new study materials (Parchmann et al. 2006; Pilot and Bulte 2006a). The collaborative process of developing and improving materials is expected to lead to a change in the cognition of the teachers (Coenders 2010) and to permanent changes in their teaching practice (Mikelskis-Seifert et al. 2007). Changes in cognition and teaching practice touch on the core teacher competences mentioned in national governmental directives (cf European Commission Directorate-General for Education and Culture 2008). Such a change in teaching competence is essential for the innovation to succeed. The nature of the change in teaching competence required for context-based teaching is thus far undefined.

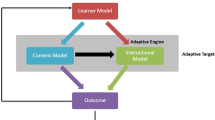

This study aimed to describe both the teaching competences needed for creating a context-based learning environment and a composite instrument to map the context-based learning environment. This composite instrument includes learning environment questionnaires (cf Taylor et al. 1993), classroom observations, and semi-structured interviews.

The context-based teaching competence can be derived from the learning environment that teachers are able to create in their classrooms. Reliability and various aspects of validity (Trochim and Donnelly 2006) of the composite instrument were addressed. Dutch teachers who are working with the context-based materials for Advanced Science, Mathematics and Technology (ASMaT), Biology, Chemistry and Physics were studied to consolidate the competences identified from the literature. Some teachers have worked in design teams creating the material; others have not. Both groups of teachers should show the competences required to teach the innovative material because they use the material in class. However, it is expected that teachers who have experience in designing the innovative materials are better able to create a context-based learning environment and thus show more of the competences than their colleagues who have not had this experience. Such a result would provide evidence for the instrument’s sensitivity. The proposed instrument is intended to map context-based learning environments, which is useful for research in the area of teacher professional development and studies into context-based teaching practices.

Context-based education

In this study, the definition of a context-based learning environment follows that of the context-based approach by Bennett et al. (2007) in their review of research into the subject: “Context-based approaches are approaches adopted in science teaching where contexts and applications of science are used as the starting point for the development of scientific ideas. This contrasts with more traditional approaches that cover scientific ideas first, before looking at applications” (p. 348). To describe the nature of the contexts used in context-based education, we follow Gilbert (2006) who considers that contexts should have:

…a setting within which mental encounters with focal events are situated; a behavioural environment of the encounters, the way that the task(s), related to the focal event, have been addressed, is used to frame the talk that then takes place; the use of specific language, as the talk associated with the focal event that takes place; a relationship to extra-situational background knowledge (Duranti and Goodwin 1992, pp. 6–8).

An important element of a context-based learning environment is active learning (Gilbert 2006; Parchmann et al. 2006): the students are required to have a sense of ownership of the subject and are responsible for their own learning. The combination of self-directed learning and the use of contexts is consistent with a constructivist view of learning (Gilbert 2006). As current research in science education points out, people construct their own meanings from their experiences, rather than acquiring knowledge from other sources (Bennett 2003).

In this study, one element is added to the traditional characteristics of context-based learning environments: the trend mentioned earlier for teachers to design (and teach) the innovative materials in close cooperation with pedagogical experts. Teachers designing and teaching innovative materials are expected to create support from the teaching field for the innovation and, more importantly, to make the materials more applicable to teaching practice (Duit et al. 2007; Parchmann et al. 2006). This specific addition was chosen because the study was conducted in the Netherlands, where a context-based innovation that involves teachers in designing curriculum materials is taking place.

The theory of context-based education is expected to pass through a number of interpretative steps before it reaches classroom practice (Goodlad 1979). Because it aimed at teacher competences, this study concerned the perceived, operational and experiential curriculum levels: the teacher perceives context-based education, interprets it and makes it operational in the classroom, where it is experienced by students.

The focus of this study was on the elements of a context-based learning environment from a teacher competence perspective, and it aimed to develop an instrument for mapping these competences that can be used broadly across the four different science subjects.

Teacher competences for context-based teaching

Teacher competence forms the starting point for creating and investigating context-based classes. In this study, the definition of a competent teacher as formulated by Shulman and Shulman (2004) was used: “a member of a professional community who is ready, willing and able to teach and to learn from his or her teaching experience” (p. 259). ‘Ready and willing’ imply abilities within the affective dimension and ‘able’ implies abilities within the cognitive and behavioural dimensions. This research was concerned with the affective, cognitive and behavioural aspects of teacher competence because it is known that, in teacher (and student) learning, many different psychological factors (e.g. emotional, cultural, motivational, cognitive) have to be taken into account (Shuell 1996). A number of competences for context-based education are distinguished. These are well founded in the literature and are recognised by experts (de Putter-Smits et al. 2009; Plomp et al. 2008).

Context handling

The nature of the context-based approach to teaching requires teachers to be able to familiarise themselves with the context expressed in the material used in class. This differs from a traditional educational approach in which the concepts are of foremost importance and are usually explained first before embarking on applications. The required teacher attitude towards contexts is phrased by Gilbert (2006) as: “[…], the teacher needs to bring together the socially accepted attributes of a context and the attributes of a context as far as these are recognised from the perspective of the students” (p. 965). The context should create a need for students to explore and learn concepts and to apply them to different situations. Teachers have to be able to establish scientific concepts through context-based education (Parchmann et al. 2006) and be aware of the need for concept transfer to other contexts (van Oers 1998). The teacher competence in handling contexts, establishing concepts and making the concepts transferable to other contexts is referred to in this article as context handling.

Regulation

In a constructivist view of learning, Labudde (2008) distinguishes the following four learning dimensions. The first dimension concerns the individual (i.e. knowledge is a construction of the individual learner). The second dimension concerns social interactions (i.e. the knowledge construction occurs in exchange with other people). The third dimension concerns the content. “If learning is an active process of constructing new knowledge based on existing knowledge […] then the contents to be learned must be within the horizon of the learner” (Labudde 2008, p. 141). The fourth dimension concerns the teaching methods (i.e. the role of the teacher). The latter two dimensions are particularly important for context-based education because they entail active learning of scientific concepts within a context. Labudde (2008) concludes that:

…the learning process of the individual and the co-construction of new knowledge can to some extent be supported by ex-cathedra teaching and classroom discussions. But for promoting learning as an active process and for stimulating the co-construction of knowledge other teaching methods seem to be more suitable, e.g. students’ experiments and hands-on activities, learning cycles, project learning, or case studies (p. 141).

These so-called other teaching methods can be characterised as student active learning or student self-regulated learning. According to Vermunt and Verloop (1999) and Bybee (2002), such learning calls for specific teaching activities. The use of these teaching activities in evaluating context-based education has been shown to be effective by Vos et al. (2010). Vermunt and Verloop (1999) identify activities under three levels of teacher control—strong, shared and loose. To achieve an intermediate or high degree of self-regulated learning a shared or loose control strategy by the teacher is called for (Bybee 2002). Teaching activities to support a shared control strategy include having students make connections with their own experiences, giving students personal responsibility for their learning, giving students freedom of choice in subject matter, objectives and activities, and having students tackle problems together (Vermunt and Verloop 1999). Teachers should be competent in handling these kinds of activity (i.e. to guide rather than control the learning process of the students). The teacher competence of regulating student learning is called the regulation competence in this study.

Emphasis

To facilitate the learning of concepts through contexts, a different teaching emphasis from that usual in traditional science education is necessary (Gilbert 2006). Emphasis has been defined thus by Roberts (1982):

A curriculum emphasis in science education is a coherent set of messages to the student about science […]. Such messages constitute objectives which go beyond learning the facts, principles, laws, and theories of the subject matter itself -objectives which provide answers to the student question: “Why am I learning this?” (p. 245).

Three emphases have been described for chemistry education by van Driel et al. (2005, 2008), based on the work of Roberts (1982) and van Berkel (2005) on the emphases of the different science curricula. A classification similar to that of van Driel et al. (2005), but intended for science education in general, has been proposed in a parallel study (de Putter-Smits et al. 2011). These emphases are also used here: a fundamental science (FS) emphasis in which theoretical notions are taught first because it is believed that such notions later on can provide a basis for understanding the natural world, and are needed for the students’ future education; a knowledge development in science (KDS) emphasis in which students should learn how knowledge in science is developed in socio-historical contexts, so that they learn to see science as a culturally determined system of knowledge, which is constantly developing; and a science, technology and society (STS) emphasis in which students should learn to communicate and make decisions about social issues involving scientific aspects.

From the literature, it is apparent that a KDS and/or STS emphasis is an important success factor for context-based innovation (Driessen and Meinema 2003; Gilbert 2006). The use of emphasis to typify teachers’ intentions in the classroom when teaching context-based materials has been shown to be effective by Vos et al. (2010). Teaching from a KDS or STS emphasis is a teacher competence referred to in this study as emphasis.

Design

Seen from a constructivist perspective, the available context-based materials are not automatically suited to every classroom or to the needs of all individual learners. Furthermore, the active role for the student embedded in the context-based curriculum material requires activities such as experiments and puts demands on timetables. Not all schools may be able to meet these requirements, making it necessary for teachers to adapt the material to their school’s facilities. Another consequence of active learning is that students’ learning needs are sometimes unpredictable, so teachers have to redesign their lesson plans on the spot to meet them. Teachers are therefore expected to (re-)‘design’ the context-based material to suit their needs. Hence, it might also be expected that the teacher competence of educationally designing curriculum materials, referred to in this study as design, is more demanding in context-based education than in traditional education.

School innovation

Finally, the context-based innovation cannot succeed if it is supported only by isolated teachers (Fullan 1994). The teachers are to be seen as representatives of this innovative approach in their schools: explaining the ins and outs of context-based education to colleagues, providing support on context-based issues, and collaborating with teachers from other science subjects to create coherence (Boersma et al. 2007; Steering Committee Advanced Science, Mathematics and Technology 2008). The teachers have to implement the context-based approach together with colleagues of their subject field and even possibly across science subjects to create coherence in the science curricula. In a study into the learning effects among teachers involved in developing ‘Chemie im Kontext’ (ChiK), an increased belief and wish to collaborate within schools were observed. These teachers are also likely to be involved in multifaceted collaborative relationships in their own schools (Gräsel et al. 2006). The teacher competence involving collaboration is referred to in this study as school innovation.

A list of five teacher competences for context-based education was thus deduced from the literature: context handling, regulation, emphasis, design, and school innovation. The teacher competences concerning context handling, emphasis and regulation have also been used in the ‘physics in context’ (piko) studies (Mikelskis-Seifert et al. 2007). In the study described here, an attempt was made to develop a learning environment instrument to measure these components of teachers’ context-based competences using three dimensions: affective, cognitive and behavioural. Reliability and validity (Trochim and Donnelly 2006) were evaluated with a small sample of teachers.

Dutch context-based innovation: teachers designing context-based education

The Dutch Ministry of Education, Culture and Science instituted committees for the high school subjects of biology, chemistry, physics, and ASMaT. The assignment was to develop new science curricula that would better suit modern science, relieve the current overloaded curricula and possibly interest more students in choosing science in further studies (Kuiper et al. 2009). The science curricula in this innovation were meant for years 10 and 11 of senior general secondary education and for years 10–12 of pre-university education. The subject ASMaT differs from other science subjects in that it is a new school subject for which, up to then, no curriculum or learning goals had been designed (Steering Committee Advanced Science, Mathematics and Technology 2008).

The innovation committees were committed not to present a ‘list of concepts’ to the Ministry of Education, Culture and Science, but to provide a tried and working curriculum from year 10 up to the national examinations (Driessen and Meinema 2003). To this end, they enlisted teachers to develop aspects of the curriculum (i.e. curriculum modules, textbooks, workbooks, worksheets for experiments and so on). Each of the novel science curricula has been tested in test-schools for 3 years. The nation-wide implementation is now pending the Minister’s approval.

All Dutch innovation committees decided to use a form of context-based education (Boersma et al. 2006). However, the committees differed in how they specified the elements of context-based education, in the extent to which this approach should be visible in the curriculum materials, and in how design teams for curriculum materials should be set up.

The work in design teams, as instigated by the Dutch innovation committees, is an example of circumstances that generally lead to successful professional development of teachers in small groups (Deketelaere and Kelchtermans 1996). For context-based education, changes in the cognitive and behavioural dimensions of the teaching practice of teachers designing their own curriculum materials were found (Mikelskis-Seifert et al. 2007). The context-based design experience of teachers who designed curriculum materials and used these materials in class is thus considered an opportunity for teacher professional development, leading to the assumption that teachers with design experience have more context-based teacher competences than teachers without this experience. No instruments have yet been constructed to measure context-based teaching competence. Such an instrument would enable research into context-based teaching competence and its development.

Research questions

The first research question was:

Can a composite instrument be developed to measure the identified context-based teacher competences of science teachers teaching context-based oriented science classes in a reliable and valid manner?

Assuming that the answer to the first research question would be positive, the second research question was:

Is there a positive correlation between context-based teacher competence and the use of context-based materials in class and/or experience in designing context-based teaching materials?

The research questions were answered by designing and evaluating a composite instrument in a pilot study into the context-based learning environment of teachers who use the innovative context-based material in their classes. These teachers varied in their years of teaching experience, in whether or not they had context-based design experience, and in whether or not they used their own context-based materials in class. This allowed an evaluation of the sensitivity of the instrument.

Method

Instrument design

Competence measurement can be described as: “Detailed research into the actions of a teacher in the classroom practice; an empirical analysis of professional work” (Roelofs et al. 2008, p. 323). Mapping the learning environment that a teacher is able to create therefore is also mapping a part of the competence of this teacher on the aspect concerned.

The mapping of a learning environment calls for a mixed-methods approach, to draw upon the strengths and minimise the weaknesses associated with different methods of research (Johnson and Onwuegbuzie 2004, p. 17). An instrument that consisted of quantitative and qualitative components was composed to map each of the five identified teaching competences on each of the three dimensions. An overview of the composite instrument with the competences and dimensions it covers, and the number of variables that map each competence, is provided in Table 1. An overview of the questions in the interviews and questionnaires can be requested from the corresponding author.

The instrument was a composite of two international standard questionnaires, two semi-structured interviews, classroom observations (and subsequent interview) and an analysis of curriculum materials. These six components within the instrument provided a total of 65 variables that are described below. Each variable was used as an indicator for a teacher competence in the 15-cell competence matrix as depicted in Table 1.

Qualitative data were scored independently by two researchers. Six researchers (including the first and second author) in the field of context-based education and teacher competence were involved in the research, both in establishing codebooks for scoring qualitative data and in scoring these data. Examples of such scoring of qualitative data are provided for each component in subsequent sections. The corresponding variables from each of the instrument components were combined to arrive at 15 competence scores. Each cell of the matrix contained at least three variables to enable triangulation (Johnson and Onwuegbuzie 2004) of the different scores. Inter-source correlations were checked to justify combining the data sources. If a low inter-source correlation was found, true differences between the sources with respect to that competence were investigated further and checked for relevance, rather than being considered measurement error. For instance, student and teacher perceptions of teacher-student interaction might differ and be meaningful (den Brok et al. 2006b). At least two data sources for each variable were kept.
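The bookkeeping behind this triangulation can be sketched in Python. This is a minimal, hypothetical sketch, not the authors’ procedure: the function names, the variable labels, the use of a Pearson coefficient and the 0.3 cut-off for a ‘low’ inter-source correlation are all assumptions, since the text specifies neither the coefficient nor a threshold. The sketch represents the 5-by-3 competence matrix as a dictionary keyed by (competence, dimension), lists cells that hold fewer than three variables, and flags pairs of data sources whose scores across teachers correlate weakly so that they can be examined further rather than discarded.

```python
from itertools import combinations
from math import sqrt

COMPETENCES = ("context handling", "regulation", "emphasis", "design", "school innovation")
DIMENSIONS = ("affective", "cognitive", "behavioural")


def empty_matrix():
    """Return the 15-cell competence matrix; each cell holds a list of variable names."""
    return {(c, d): [] for c in COMPETENCES for d in DIMENSIONS}


def under_triangulated(matrix, minimum=3):
    """Cells holding fewer than `minimum` variables, which cannot yet be triangulated."""
    return [cell for cell, variables in matrix.items() if len(variables) < minimum]


def pearson(xs, ys):
    """Pearson correlation of two equally long score lists (one score per teacher)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant score list")
    return sxy / sqrt(sxx * syy)


def low_agreement_pairs(sources, threshold=0.3):
    """Pairs of data sources whose scores correlate below `threshold` (hypothetical cut-off).

    `sources` maps a source name to per-teacher scores in a fixed teacher order.
    Flagged pairs are meant for follow-up investigation, not automatic removal.
    """
    flagged = []
    for (a, xs), (b, ys) in combinations(sources.items(), 2):
        r = pearson(xs, ys)
        if r < threshold:
            flagged.append((a, b, round(r, 2)))
    return flagged


matrix = empty_matrix()
# Hypothetical variable labels for one behavioural cell.
matrix[("regulation", "behavioural")] += ["WCQ student: Shared Control", "observation tally", "designed material"]
print(len(matrix), len(under_triangulated(matrix)))  # 15 14
print(low_agreement_pairs({
    "interview": [1, 2, 3, 4],
    "observation": [1, 2, 3, 4],
    "questionnaire": [4, 3, 2, 1],
}))
```

With only eight teachers, any such coefficient is unstable, which is one reason why a low value is better treated as a prompt for closer inspection than as measurement error.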

To support the described triangulation of data, a competence description was formulated for each participating teacher. All teachers were then asked to comment on this description of their teaching competence.

The design, reliability, and validity of the components in the composite instrument are detailed in the subsequent sections.

Teacher and class questionnaire: WCQ

The first component of the instrument was a questionnaire based on appropriate scales from the What Is Happening In this Class? (WIHIC), the Constructivist Learning Environment Survey (CLES) and the Questionnaire on Instructional Behaviour (QIB) questionnaires, abbreviated here as the WCQ questionnaire. These questionnaires measure more, and different, learning environment elements than those identified in this article as indicative of a context-based learning environment. Therefore, only the scales from these questionnaires linked to one of the five context-based competences were selected.

The WIHIC questionnaire originally developed by Fraser et al. (1996) measures school students’ perceptions of their classroom environment, including among others student involvement, cooperation, teacher support, and equity. The Investigation scale measures the extent to which there is emphasis on skills and inquiry and their use in problem solving and investigation in a classroom (den Brok et al. 2006a). Typical values of Cronbach’s alpha for this scale range from 0.85 to 0.88 (den Brok et al. 2006a, b; Dorman 2003). This scale relates to the KDS emphasis defined in the theory section of this paper and was used as a variable measuring the emphasis competence.

The CLES, constructed by Taylor et al. (1997), measures the development of constructivist approaches to teaching school science and mathematics. Scales include Personal Relevance, Uncertainty, Critical Voice, Shared Control and Student Negotiation. In this study, the scales and descriptions from Johnson and McClure (2004) were used. To measure the context handling competence, the Personal Relevance scale was used. For the emphasis competence, the Uncertainty scale was used. Shared Control and Student Negotiation were used to measure the regulation competence. Typical values of Cronbach’s alpha for these scales range from 0.76 to 0.91 (Johnson and McClure 2004; Lee and Fraser 2000; Taylor et al. 1997).

The Questionnaire on Instructional Behaviour (QIB) (Lamberigts and Bergen 2000) was originally developed to measure teachers’ instructional behaviour. Scales included Clarity, Classroom Management and Teacher Control. From the QIB, the scales for strong, shared and loose teacher control were selected, corresponding to the context-based teacher competence regulation. Typical values of Cronbach’s alpha for these scales lie between 0.64 and 0.86 when used in Dutch classrooms (den Brok et al. 2006a, b).

All scales selected from the different questionnaires use a five-point Likert scale ranging from I Completely Disagree to I Agree Completely. In Table 2, the selected scales, a sample item and the competence that it is expected to map are provided, as well as a summary of the resulting WCQ questionnaire.
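The reliability figures quoted for these scales are Cronbach’s alpha coefficients. To show what such a coefficient summarises, the following minimal Python sketch computes alpha from a respondents-by-items table of Likert scores with the standard formula alpha = k / (k - 1) x (1 - sum of item variances / variance of the summed scale score), where k is the number of items. The function name and the toy responses are illustrative assumptions, not data from this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one scale.

    responses: one list per respondent, each holding that respondent's k item scores
    (e.g. 1-5 on a five-point Likert scale). Sample variances are used throughout;
    the ratio is the same if population variances are used consistently instead.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Toy data: four hypothetical students answering a three-item scale.
students = [[4, 5, 4], [2, 2, 3], [5, 4, 5], [3, 3, 2]]
print(round(cronbach_alpha(students), 2))  # 0.89
```

Because alpha depends on the number of items as well as on how strongly they covary, coefficients for short scales are not directly comparable with those for long ones.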

The questionnaires were translated into Dutch. Two versions of the WCQ questionnaire were used, one to map teachers’ perceptions and one to map students’ perceptions. The teacher questionnaire mapped teachers’ self-perceptions of their class (the affective dimension). The student questionnaire mapped students’ perceptions of the way in which the class is taught (the behavioural dimension). The student questionnaires were completed by the class anonymously to obtain unbiased answers. Each scale corresponded to either an instrument variable for the affective dimension (teacher questionnaire) or the behavioural dimension (student questionnaire), providing 18 variables for the composite instrument.

Emphasis teacher questionnaire

The second component of the instrument was a rephrased version of a questionnaire used by van Driel et al. (2008) to measure the preferred curriculum emphasis of chemistry teachers in secondary science education. The questionnaire originally contained 45 statements to which answers were obtained using a five-point Likert scale. The 45 items were divided into three scales, each corresponding with a different teaching emphasis. The scores for each emphasis (FS, KDS and STS) were obtained by adding the scores of the corresponding items. Typical alpha coefficients for the original questionnaire ranged from 0.71 to 0.82. The questionnaire mapped the opinion of teachers (affective dimension). In a parallel study, the questions were rephrased to represent science education in general, and chemistry-specific questions were rephrased so that they also represented biology and physics (de Putter-Smits et al. 2011). For ASMaT teachers, the assumption was that the general science education questions represented the teachers’ view of ASMaT. The scales for ASMaT therefore consisted of fewer items. When the rephrased questionnaire was validated in a study among 213 science teachers, alpha coefficients ranged from 0.73 to 0.90 (de Putter-Smits et al. 2011).

In the present study, scores on the three emphasis scales were combined into one variable by adding the scores for STS and KDS emphasis and subtracting the score for FS emphasis, in accordance with the preferred emphasis competence outlined in the theory section. The mutual correlation between KDS and STS was 0.45 (N = 213; p < 0.00, de Putter-Smits et al. 2011), indicating that these concepts partly overlapped (convergent validity). Hence the combined variable represented the common core of context-based teaching emphasis.
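The combined emphasis variable can be written as E = S_KDS + S_STS - S_FS, where each S is the sum of the item scores on the corresponding scale. A minimal Python sketch under that reading follows; the function names and the example item scores are hypothetical, and the sketch uses raw sums exactly as described, so scales with different numbers of items (as for ASMaT) contribute on different ranges.

```python
def scale_score(item_scores):
    """Scale score as the sum of the item scores belonging to that scale."""
    return sum(item_scores)


def context_based_emphasis(fs_items, kds_items, sts_items):
    """Combined emphasis variable: KDS + STS - FS.

    Higher values indicate a stronger preference for the KDS and STS emphases
    that the theory section links to context-based teaching.
    """
    return scale_score(kds_items) + scale_score(sts_items) - scale_score(fs_items)


# Hypothetical teacher, three items per scale on a five-point Likert scale.
print(context_based_emphasis(fs_items=[4, 3, 4], kds_items=[3, 4, 3], sts_items=[5, 4, 4]))  # 12
```

If scale lengths differ between versions of the questionnaire, dividing each sum by its number of items before combining would be one way to put the scales on a common range; the text does not state whether this was done.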

Interview on context-based education

The teachers’ affective and cognitive skills and their classroom behaviour regarding context-based education were measured using a semi-structured interview. The questions were based on the five components of context-based teacher competence discussed in the literature section. The face validity of the interview model and the code book for categorising the answers (Ryan and Bernard 2000) was confirmed by a panel of researchers (first and second author and one independent researcher). The answers were analysed and entered as variable scores.

For instance, the questions “Could you explain what context-based education is, your personal opinion?” and “What do you feel is the difference between context-based science education and traditional education?” required the teacher to respond at the cognitive dimension, and possibly the behavioural dimension, on the teacher competences of context handling and regulation. The answers were compared to the context-based teacher competence definitions derived from the literature (see theory section). The teachers were then compared to each other using fractional ranking (Field 2005) for each variable and scored from one up to the total number of teachers in the study (eight in this study), the highest number representing the most proficient teacher on this variable. Teachers with no score on a variable were not included in the fractional ranking of that variable.

An example of such ranking is: Teacher A tells the researcher that “context-based education requires strict teacher instruction and a constant monitoring of progress, including good old-fashioned deductions of concepts, ’cause the context-based material otherwise do not result in students learning the concepts”. Teacher B does not see a difference in regulating student learning between standard science education and context-based education: “It is maybe just that the students seem to have different questions.” Teacher C tells the researcher that he is now “guiding the students towards learning the concepts, rather than just telling them. It is more work, ’cause I have to coach each group of students separately and differently, but it is worthwhile.” Ranking these teachers would result in Teacher A scoring 1, because she expresses views contrary to the intentions of context-based education, Teacher B scoring 2 because she sees only a small difference that she cannot position, and Teacher C scoring 3 because he is describing behaviour expected in context-based education.
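Fractional ranking gives the lowest-scoring teacher rank 1 and the highest rank n, and gives tied teachers the mean of the ranks they jointly occupy. A minimal Python sketch follows, assuming that each interview answer has first been coded on an ordinal scale by the researchers (as with the codes 1, 2 and 3 for Teachers A, B and C above); the function name and the dictionary input format are illustrative assumptions. Teachers without a score on a variable are left out of the ranking, as the text specifies.

```python
def fractional_ranks(scores):
    """Rank teachers 1..n on one variable; tied teachers share the mean of their ranks.

    scores: dict mapping teacher -> ordinal code, or None when the teacher has no
    score on this variable. Teachers without a score are excluded from the ranking.
    """
    scored = {t: s for t, s in scores.items() if s is not None}
    ordered = sorted(scored, key=scored.get)  # lowest code gets rank 1
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the run of teachers tied with ordered[i].
        while j + 1 < len(ordered) and scored[ordered[j + 1]] == scored[ordered[i]]:
            j += 1
        shared = (i + 1 + j + 1) / 2  # mean of the ordinal ranks i+1 .. j+1
        for teacher in ordered[i:j + 1]:
            ranks[teacher] = shared
        i = j + 1
    return ranks


print(fractional_ranks({"A": 1, "B": 2, "C": 3}))  # {'A': 1.0, 'B': 2.0, 'C': 3.0}
# Hypothetical tie: A and C share rank 1.5; D has no score and is excluded.
print(fractional_ranks({"A": 2, "B": 5, "C": 2, "D": None, "E": 4}))
```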

Classroom observation and interview

Classroom observations and subsequent interviews were used to establish context-based proficiencies on the three dimensions. For each teacher, up to three classes were video-taped and the teacher’s voice was recorded separately. The classes selected for observation were: the start of a new study module, in which the context (focal event) would be most visible; a class that would exemplify student-active learning; and a class in which concepts would be most prominent, e.g. the last lesson prior to a test. Each lesson was then analysed for the five teacher competences on each of the three dimensions using codebooks (Ryan and Bernard 2000). Face validity of the codebook was confirmed by the panel of researchers (first and second author and one independent researcher).

Typical context-based teaching behaviour was counted (tallied) for the behavioural dimension. For instance, if the teacher embarks on an explanation of the context and, during the class, recapitulates what he has said about it three times, this leads to a count of 4 on the behavioural dimension of the context-handling competence. When teachers expressed a train of thought while engaging in, for instance, a teaching activity, this was categorised and counted on the cognitive dimension. For instance, a teacher describes what activities are expected of the students and how they are responsible for the learning of their group, and then coaches two of the groups along these lines. This yields a count of 1 on the cognitive dimension and 2 on the behavioural dimension of the regulation competence. When teachers expressed an opinion about one of the competences, this was categorised and counted on the affective dimension.

After the observed class, the teachers were interviewed about how they thought or felt the context-based class had gone. All remarks about their context-based teaching were checked against the definitions of context-based education in the theory section of this article, and answers indicating context-based teaching competence were also counted.

The scores were then calculated by dividing the counted occurrences of the context-based behaviour and context-based after-class discussion remarks by the number of minutes of class observed. This part of the instrument also provided 15 variables per teacher.
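The normalisation by observation time can be sketched as follows. The tallies reuse the counts from the worked examples above (4 for context handling, behavioural; 1 and 2 for regulation, cognitive and behavioural); the 50-minute lesson length and the names `Cell` and `rates_per_minute` are hypothetical. After-class interview remarks would simply be added to the same tallies before dividing.

```python
from typing import Dict, Tuple

Cell = Tuple[str, str]  # (competence, dimension), e.g. ("regulation", "behavioural")


def rates_per_minute(tallies: Dict[Cell, int], minutes_observed: float) -> Dict[Cell, float]:
    """Turn one teacher's raw tallies into occurrences per observed minute."""
    if minutes_observed <= 0:
        raise ValueError("minutes_observed must be positive")
    return {cell: count / minutes_observed for cell, count in tallies.items()}


tallies = {
    ("context handling", "behavioural"): 4,
    ("regulation", "cognitive"): 1,
    ("regulation", "behavioural"): 2,
}
# 50 minutes is an assumed lesson length, not a value from the study.
rates = rates_per_minute(tallies, 50)
```

Dividing by observed minutes keeps teachers comparable when different amounts of class time were recorded.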

Analysis of designed material

Curriculum material that teachers designed themselves was analysed for evidence of the five context-based teacher competences identified from literature. The scores were added as five variables on the behavioural dimension because the teachers concretise their ideas and knowledge and put them into action in the curriculum material.

The material was analysed for the use of contexts to introduce concepts, concept transfer, the use of a KDS and/or STS emphasis, the stimulating of active learning and cooperation with other science domains. For this, a codebook was used. Face validity of the codebook was confirmed by the panel of researchers.

An example of context-based style material would be a remark encouraging students to look up unfamiliar scientific concepts that they encountered in the context before proceeding (student regulated learning). The material was scored on a three-point scale from ‘opposite from context-based education’ to ‘in accordance with context-based education’. The example given would thus receive the score 3 for the regulation competence. Teachers who had not made any material for their classes were not given a score on these variables.

Interview about design and context-based skills

The teachers with experience in designing materials for the current science innovation were interviewed in depth about their experience. Using an interview combined with a storyline technique (Taconis et al. 2004), changes in the teachers’ different context-based competence components were established. The semi-structured interview started with general questions about teaching experience and about what context-based education entails. Next, using the storyline technique (Beijaard et al. 1999), the teachers were asked whether their attitude, knowledge, skills and classroom behaviour towards context-based education had changed because of their design experience. To ensure that all context-based design-related learning was discussed, the teachers were asked to name subjects related to their design experience that were not covered in the interview questions.

The questions covered all competence cells, providing 15 variables for this part of the instrument. The face validity of the interview model and of the codebook for categorising the answers was confirmed by the panel of researchers. As in the interview about context-based education skills, the answers for each competence were checked against the definitions of context-based education derived from the literature. Teachers were ranked (fractionally) from one to the number of teachers, the highest number representing the most proficient teacher. For instance, Teacher A replied that designing context-based education differed only in that the applications of scientific concepts were now treated first, rather than the concepts; otherwise, for him, the structure of the lessons remained the same. Teacher B replied that designing context-based curriculum materials was very time-consuming because he had to come up with a suitable context that would cover all the concepts he intended to include in the curriculum module. Comparing these two teachers, Teacher A was ranked 1 and Teacher B was ranked 2.

Cases

Eight teachers using the innovative material in their classrooms in the south-east region of the Netherlands were recruited through an email invitation sent via the innovation network. The teachers who responded positively were three teachers of the new subject ASMaT, one biology teacher, three chemistry teachers and one physics teacher. Four of these teachers had experience in designing context-based material, and two of these four taught using material they had designed themselves. For seven of the eight teachers, it was their first year teaching the innovative material; for one teacher, it was his second year.

The unequal number of cases for each science subject and unequal distribution for each year level are both attributable to the limited response to the request. One class of students for each teacher (with year level relevant to the innovation) was selected for observation. The limited number of cases arose because of the limited number of teachers (around 60) who were using the innovative material in class at the time of this research. On the other hand, a sample of eight teachers is quite customary for mixed-methods approaches and would allow a first evaluation of reliability and validity.

Analysis

Two researchers independently analysed all data using the codebooks to obtain a score in the different cells. An inter-rater kappa (Cohen 1988) was calculated to ensure that the instrument was measuring reliably.
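Cohen’s kappa compares the observed agreement between two raters with the agreement expected by chance from each rater’s category proportions: κ = (p_o − p_e) / (1 − p_e). The sketch below computes unweighted kappa for nominal codes. The category labels and the ten coded segments are invented for illustration; they are not the study’s data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' marginal proportions, summed over categories.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical category for every item
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten observed segments (CH = context handling,
# RE = regulation, EM = emphasis).
researcher_1 = ["CH", "CH", "RE", "RE", "EM", "CH", "RE", "EM", "CH", "RE"]
researcher_2 = ["CH", "RE", "RE", "RE", "EM", "CH", "RE", "CH", "CH", "RE"]
kappa = cohens_kappa(researcher_1, researcher_2)
```

Here the raters agree on 8 of 10 segments (p_o = 0.80) while chance agreement is p_e = 0.38, so κ = 0.42 / 0.62 ≈ 0.68, noticeably lower than the raw 80% agreement.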

Before analysis, the scores on all variables were converted into standard scores (z scores), using each variable’s mean and standard deviation, to correct for the different scales of measurement. The (standardised) scores obtained on the variables for each component in each cell were correlated to establish the reliability and convergent validity (Trochim and Donnelly 2006) of the instrument. Correlated and non-correlated variables were thus identified, and non-correlated variables were excluded from future use of the instrument, unless the low correlation corresponded to a theoretically expected difference in perceptions of that issue that was considered relevant in the light of prior research.
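A minimal sketch of the standardisation step for one variable, assuming that missing scores are simply skipped and that the sample standard deviation is used (the text does not specify which). The teacher labels and raw scores are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, Optional


def standardise(raw: Dict[str, Optional[float]]) -> Dict[str, Optional[float]]:
    """Convert one variable's raw scores to z scores across teachers.

    Missing scores (None) stay missing and are ignored when computing the
    mean and standard deviation.
    """
    present = [v for v in raw.values() if v is not None]
    if len(present) < 2:
        raise ValueError("need at least two scores to standardise")
    m, s = mean(present), stdev(present)  # sample SD: an assumption
    if s == 0:
        raise ValueError("all scores are equal; z scores are undefined")
    return {t: None if v is None else (v - m) / s for t, v in raw.items()}


z = standardise({"A": 2.0, "B": 4.0, "C": 6.0, "D": None})
```

Because every variable is centred on its own mean, the group means of the resulting scores lie close to zero, which is the check reported later for Table 4.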

To be able to answer the second research question and to validate the instrument fully, competence scores for each competence cell were calculated for each teacher. After identifying and removing the non-correlated variables in Table 1, the remaining variables for each competence cell were averaged, first within each actor’s perception (student, teacher, researcher) and then across all perceptions. This was done to give equal weight to each party’s opinion. The accumulation of opinions was based on research that shows that consensus in opinions about someone increases with an increasing number of observers (Kenny 2004). Missing data were treated as empty cells.

As an example of how a teacher’s score was calculated, consider the cell for context handling within the affective dimension. The WCQ scale Personal Relevance gave an average that, after standardisation, was 0.34, meaning that the teacher scored (relatively) above average, i.e. towards context-based teaching. (A score of 1 was for being exactly in accordance with context-based education intentions.) Next, the z scores for the answers in the interview on context-based education were averaged with the z scores for the answers in the design and context-based skills interview, yielding −0.21, meaning that the teacher scored (relatively) below average and contrary to context-based education intentions. Finally, these two averages were themselves averaged to obtain the teacher’s final score of 0.065, meaning that the teacher showed (almost) no competence for context handling on the affective dimension.
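The two-level averaging (first within each perception, then across perceptions, with empty cells skipped) can be sketched as below. The perception labels mirror the text; the two individual interview z scores (−0.30 and −0.12) are hypothetical values chosen only so that their mean equals the reported −0.21.

```python
from statistics import mean
from typing import Dict, List, Optional


def cell_score(z_by_perception: Dict[str, List[Optional[float]]]) -> Optional[float]:
    """Average z scores within each perception, then average those means.

    Missing values (None) are skipped; a perception with no data is treated
    as an empty cell, so every perception that supplied data carries equal weight.
    """
    per_perception = []
    for values in z_by_perception.values():
        present = [v for v in values if v is not None]
        if present:
            per_perception.append(mean(present))
    return mean(per_perception) if per_perception else None


# Context handling, affective dimension, for one teacher.
example = cell_score({
    "student": [0.34],            # WCQ Personal Relevance, standardised
    "teacher": [-0.30, -0.12],    # two interview z scores (hypothetical split of -0.21)
    "researcher": [None],         # no researcher data in this cell
})
```

The result reproduces the arithmetic in the text: (0.34 + (−0.21)) / 2 = 0.065. Averaging per perception first prevents a party that contributes many variables from dominating the cell score.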

A context-based competence table of z scores was thus created for each teacher. The scores were then put into words; for example, an emphasis competence score of −0.75 within the affective dimension was described as “you prefer to teach the concepts first and explain what they are used for later”, and a score of 0.87 for the regulation competence within the behavioural dimension was described as: “You have a preference for a shared regulation teaching strategy in your classes, which is both observed in your classes and indicated by the student questionnaires.” All 15 scores were thus translated back into words and sent to the teachers to allow comparison of the teachers’ self-perceptions with this competence description. The teachers were asked to give feedback on each competence described and a percentage of agreement with their competence description. This feedback from the teachers provides an indication of the instrument’s reliability.

The competence z scores for each cell for each teacher were used to establish differences between teachers with and without relevant design experience by correlating the scores with ‘design experience’ using Spearman’s rho for ranked variables. As mentioned in a previous section, we expected teachers with design experience to show more context-based competence. The competence scores for each cell were also correlated with ‘using self-designed context-based materials’, because teachers who use material they designed themselves are more aware of its intentions than other teachers are. To check for a further possible influence, teaching experience was also correlated with the scores for each cell. If design experience, use of self-designed material and more teaching experience correlated with higher context-based competence scores, this would add to the instrument’s (concurrent) validity.
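Spearman’s rho is the Pearson correlation computed on ranks, with tied values given their average rank. This matters here because a variable such as design experience (yes/no) produces many ties. The following is a minimal, dependency-free sketch; the example vectors are hypothetical. As far as we know, `scipy.stats.spearmanr` follows the same average-rank definition.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical: design experience (0 = no, 1 = yes) versus a competence z score.
design_experience = [0, 0, 1, 1]
competence_z = [-1.2, -0.4, 0.3, 1.1]
rho = spearman_rho(design_experience, competence_z)
```

With only eight teachers, such coefficients are best read descriptively, in line with the caution expressed in the text.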

A quantitative data analysis was performed to test the instrument’s reliability and validity, even though the number of cases was small (n = 8). The results therefore have only indicative meaning for the ultimate goal of mapping teachers’ context-based learning environments and the corresponding teaching competences.

Results

The first main question was whether the identified context-based competence components can be measured in a reliable and valid manner.

Reliability

The composite instrument was found to give reliable results. The WCQ student questionnaire was returned by 88% of the students. Incomplete questionnaires were excluded from the analysis. The alpha coefficients for the scales used in the student WCQ questionnaire ranged from 0.74 to 0.87 (n = 162), which is comparable to previously published results for these scales (den Brok et al. 2006a, b; Dorman 2003; Taylor et al. 1997).
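For reference, Cronbach’s alpha for a scale of k items is α = k / (k − 1) · (1 − Σ item variances / variance of the total score). The sketch below implements that formula with sample variances (the ratio is unchanged if population variances are used consistently). The three items and four respondents are invented for illustration.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; each inner sequence holds one item's scores for the same respondents."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score for every respondent")
    totals = [sum(item[r] for item in items) for r in range(n)]  # scale score per respondent
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical 5-point answers of four students to three items of one scale.
alpha = cronbach_alpha([
    [2, 3, 4, 5],
    [1, 3, 3, 5],
    [2, 2, 4, 4],
])
```

The same computation applies to the composite alpha reported for the positively correlated variables, with each variable treated as an item.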

For each teacher, one to three classes were observed. The observed classes, recorded on video and audio, were interpreted simultaneously and independently by a second researcher; Cohen’s kappa was 0.65. Next, the general impression of each observed class was discussed and agreed upon, and the context-based competence scores were compared until full agreement was reached. A ‘design and context-based interview’ was conducted with four of the eight teachers. These interviews were analysed and scored independently by two researchers, reaching an inter-rater agreement of 0.72. Any curriculum materials that the teachers had designed for their classes were analysed and scored independently by two researchers, who reached complete agreement.

The resulting teacher competence descriptions were compiled independently by two researchers, and the two versions were in complete agreement. Seven teachers returned their context-based competence description with comments on the descriptions of the different competences. The overall agreement between the teachers’ self-perceptions and the researchers’ perceptions in the presented competence descriptions was 85%. All five context-based teacher competences were recognised by the participants. Four participants were of the opinion that active learning can occur even when the teacher has a strong control regulative approach to teaching.

Convergent validity

For each cell of the instrument matrix, the scores for the different variables were correlated. Variables correlating above 0.35, indicating a substantial overlap, were selected for further study. Because the number of cases was small, the statistical significance of the correlations cannot be given. Table 3 gives the range of Pearson correlation values and the number of correlated variables for each cell. In some cases, only one of the instrument components supplied data, so no correlations could be calculated.
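The screening step for one cell can be sketched as follows: compute the Pearson correlation for every pair of variables and keep the pairs above the 0.35 cut-off reported in the text. The variable names and scores are hypothetical, and the sketch assumes that every variable has a score for the same teachers in the same order (teachers missing on either variable would have to be dropped pairwise first). As in the text, no significance test is attempted.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple

THRESHOLD = 0.35  # cut-off for "substantial overlap" used in the text


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def overlapping_pairs(cell: Dict[str, Sequence[float]],
                      threshold: float = THRESHOLD) -> List[Tuple[str, str, float]]:
    """Return variable pairs in one competence cell whose r exceeds the threshold."""
    hits = []
    for a, b in combinations(sorted(cell), 2):
        r = pearson(cell[a], cell[b])
        if r > threshold:
            hits.append((a, b, round(r, 3)))
    return hits


# Hypothetical z scores of four teachers on three variables in one cell.
cell = {
    "wcq": [1, 2, 3, 4],
    "interview": [1, 2, 4, 3],
    "observation": [4, 3, 2, 1],
}
selected = overlapping_pairs(cell)
```

Here only the questionnaire and interview variables overlap (r = 0.8). The observation variable correlates negatively with both, so it would be flagged for the kind of scrutiny the text applies to the Strong Control scale.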

The WCQ scale Shared Control (CLES) correlated strongly (0.59–0.64) with the Strong Control scale from the QIB. The mean student score on Shared Control (CLES) was rather low compared with the mean on Shared Control (QIB) (2.72, SD = 0.28 vs. 3.22, SD = 0.31). The WCQ scale Strong Control (QIB) seemed to be linked to the other two QIB scales in that it did not measure the opposite construct (positive correlations). This effect was not apparent when the scores were correlated with other data such as interviews and classroom observations, for which a negative correlation was obtained. It can therefore be argued that these scales should be removed from the composite instrument.

The instrument appeared to be valid for the competences of context handling and regulation and partly for the competences of emphasis and design, as indicated by rather high correlations (convergent validity) between the variables.

For the remaining cells of the emphasis and design competences, and for the school innovation competence, no correlations could be calculated because of a lack of data (e.g. only input from the teachers was available, hardly any material was designed, and no cooperation with other teachers took place).

Using the positively correlated variables only (32 of the 65), a Cronbach’s alpha of 0.84 was obtained for the composite of the context-based competence instrument.

The means and standard deviations of the participants’ (standardised) scores are shown in Table 4. The group means approach zero, as expected when the z-score procedure is applied correctly. The standard deviations are large, however, indicating that the instrument was able to discriminate between teachers and thus that the individual competence scores are meaningful and indicative of context-based teaching competence.

Sensitivity evaluated through teacher characteristics

The second research question dealt with the differences in context-based competence between teachers with and without design experience, teachers with more years of teaching experience, and teachers using self-designed materials in class. The individual competence scores for each teacher for correlated variables (from Table 3) from the composite instrument were used to obtain nine scales of context-based competences (the non-italicised cells in Table 4). The nine scales included three for the context handling competence, two for the emphasis competence, three for the regulation competence and one for the design competence. Intercorrelations were between 0.37 and 0.99, indicating good convergent validity (Cohen 1992) of the nine scales.

The scores for competence for each teacher were correlated with design experience, years of teaching experience and use of self-designed material versus given material using Spearman’s rho for ranked variables. The correlations are shown in Table 5, which provides some strong indications of the instrument’s sensitivity (with the average r equalling 0.30).

First, the correlations indicate that, for the eight teachers studied, design experience was associated with higher context-based teacher competence for context handling and emphasis on the cognitive dimension (r = 0.87 and r = 0.77, respectively).

Second, more teaching experience correlated with higher context-based teacher competence for context handling on the affective dimension and design on the cognitive dimension (r = 0.88 and r = 0.95, respectively).

Third, using self-designed material correlated with higher context-based teacher competence for context handling on the cognitive dimension (r = 0.76). In terms of effect size, this correlation is considered large (Cohen 1992).

Finally, when correlating the total context-based competence score for the eight teachers with design experience, years of teaching experience and use of one’s own material instead of given material, years of teaching experience correlated significantly (r = 0.74) with the affective dimension and design experience with the cognitive dimension (r = 0.87).

Given the theoretically expected influences used in the correlations with the context-based teaching competences, it could be concluded that the instrument’s validity is supported for the constructs of context handling, emphasis and design, and that the instrument is thus valid for measuring context-based teacher competence.

Conclusions and implications

The composite instrument developed in this pilot study for mapping the context-based learning environment created by the teacher has been shown to be reliable. The inter-rater results, the Cronbach’s alpha coefficients and the agreement between the teachers’ self-perceptions and the researchers’ competence descriptions were high, indicating that the instrument measured reliably (convergent reliability). The various components of the WCQ questionnaire were of undisputed translational validity (Trochim and Donnelly 2006). The composite instrument was validated by identifying the correlated components. The high teacher agreement with their competence descriptions adds to the concurrent validity. Relational validity was addressed by looking at the expected influences of teaching and design experience on context-based teaching competence. Teachers using their self-designed context-based material showed a higher competence score on the cognitive dimension; hence, discriminant validity appeared to be supported. This was not so for the regulation competence, for which no conclusions about discriminant validity could be drawn. The significant correlations indicated that the criterion validity of the instrument was sound. The correlating components could be used in further research. Given the small sample size, further use of the instrument should include a re-examination of its reliability and validity to ensure that it is able to discriminate between different learning environments. Some improvements are also advisable, as suggested below.

The WCQ scale Shared Control (CLES) was ambiguous, judging from its high correlation with the QIB Strong Control scale and its difference in means from the QIB Shared Control scale. The QIB Strong Control scale correlated negatively with other data sources for strong control and has been described by others as measuring the whole concept of teacher control (den Brok et al. 2004). Because the composite instrument is extensive, these two scales could be omitted from the WCQ questionnaire in future.

The first and second authors found it difficult to discriminate between affective and cognitive competence elements. Discrimination between these two dimensions in teacher research is known to be a challenge (Fenstermacher 1994), and the two dimensions are often combined (Meirink 2007). To increase the practicality of the instrument in future research, the affective and cognitive dimensions could be collapsed, resulting in two dimensions on which the context-based competences are measured: one depicting teachers’ opinions and knowledge of context-based education and one depicting the behavioural/classroom performance of context-based education (from a competence perspective). A similar analysis structure has been used by Meirink (2007) in analysing individual teacher learning when working in groups.

Using the data from the research presented here, the reliability for context handling, regulation and emphasis within such a combined affective/cognitive dimension is sufficient (Cronbach’s alpha = 0.95, 0.71 and 0.64, respectively). The collapsing of the two dimensions also makes the analysis of the data shorter and less complex, because there would then only be 10 scores to calculate.

For the behavioural dimension, positive correlations were found for the variables of the context-handling competence. The reliability coefficients for the emphasis and regulation scales were 0.80 and 0.93, respectively.

Apart from the proposed changes, it could be concluded that the instrument was reliable and valid for measuring the context-based learning environment using both quantitative and qualitative data. The composite learning environment instrument appeared to be able to measure the five context-based teaching competences on the affective/cognitive dimension and three of the competences (context handling, regulation and emphasis) on the behavioural dimension. For design, only measurement on the affective/cognitive dimension was possible.

The design competence could be measured reliably on the cognitive dimension, but could not be measured on the affective or behavioural dimension, probably because of the limited number of participants. However, the proposed collapsing of the affective and cognitive dimensions resolves the measurement problem for the affective dimension. More research on measuring the design competence on the behavioural dimension is needed, because design skills are required when embarking on context-based education (see theory section).

The school innovation competence gave results for only one variable on the cognitive dimension (teacher’s self-perception), making it impossible to obtain correlations. Further data could be obtained not only by studying the teacher’s point of view for this competence, but also by asking the teacher’s direct colleagues about it. Also, more observations by the researcher in the teacher’s school would reveal more about this competence. Triangulation of the data would then be possible, and the practicality, reliability and validity of the data thus obtained could be studied.

The competences that teachers need to create a context-based science learning environment, as presented in the theory section of this article, have all been found in the context-based learning environments studied here, judging from the standard deviations of the teachers’ scores (Table 4). The competences of school innovation and design, however, were far less prominent than the other three (missing data in Tables 3 and 4). This may be explained by the fact that such teaching competences have only recently been called for (e.g. SBL: Stichting beroepskwaliteit leraren en ander onderwijspersoneel [Association for the Professional Qualities of Teachers] 2004).

Four teachers in this study were of the opinion that active learning can occur even when the teacher has a strong control regulative approach to teaching. This contrasts strongly with research by Vermunt and Verloop (1999) and Bybee (2002), who state that, for active learning to be successful, a shared or loose control strategy is necessary. This demonstrates the need for teacher professional development if the context-based innovation is to succeed.

The composite instrument resulting from this study was shown to be able to measure the context-based competence of science teachers. Although the sample size (n = 8) is small, positive indications were found for the instrument’s validity. In future research, the instrument could be evaluated further with a larger sample when studying teachers’ context-based competences in the Netherlands and other countries.

So far, context-based science education has been evaluated in terms of the learning outcomes of students and the motivation of students (Bennett et al. 2007), but not in terms of the actual change in teaching in the classroom. One use of this instrument is to map the learning of teachers who start teaching context-based education. Using the instrument, comparisons could be made between the context-based learning environments in different countries where context-based science education has been implemented (e.g. Germany, UK and the Netherlands). It also could be used to establish differences in context-based learning environments and teacher competences for different science subjects.

Notes

New Dutch science subject for years 10–12 that involves students in discovering social issues and the science involved. Concepts usually exceed the normal science curricula.

In this study, the focus was on the overlap between the various perceptions on each of the competences as long as this overlap was found to be large enough, rather than on the possible meaning of contrasting opinions about a particular competence.

References

Beijaard, D., van Driel, J., & Verloop, N. (1999). Evaluation of story-line methodology in research on teachers’ practical knowledge. Studies in Educational Evaluation, 25, 47–62.

Bennett, J. (2003). Teaching and learning science. London: Continuum Press.

Bennett, J., & Lubben, F. (2006). Context-based chemistry: The Salters’ approach. International Journal of Science Education, 28, 999–1015.

Bennett, J., Lubben, F., & Hogarth, S. (2007). Bringing science to life: A synthesis of the research evidence on the effects of context-based and STS approaches to science teaching. Science Education, 91, 347–370.

Boersma, K., Eijkelhof, H., van Koten, G., Siersma, D., & van Weert, C. (2006, June). De relatie tussen context en concept [The relationship between context and concept]. Betanova. http://www.betanova.nl/verbinding/contexten/.

Boersma, K., van Graft, M., Harteveld, A., de Hullu, E., de Knecht-van Eekelen, A., Mazereeuw, M., et al. (2007). Leerlijn biologie van 4 tot 18 jaar [A biology learning continuity pathway for K to 12]. Utrecht: CVBO. www.nibi.nl.

Bybee, R. W. (2002). Scientific inquiry, student learning, and the science curriculum. In R. W. Bybee (Ed.), Learning science and the science of learning (pp. 25–36). Arlington, VA: NSTA.

Coenders, F. (2010). Teachers’ professional growth during the development and class enactment of context-based chemistry student learning material. Doctoral dissertation, Twente University.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum.

Cohen, J. (1992). Quantitative methods in psychology: A power primer. Psychological Bulletin, 112, 155–159.

de Putter-Smits, L. G. A., Taconis, R., Jochems, W. M. G., & van Driel, J. H. (2009). Developing an instrument for measuring teachers’ proficiency in context-based science education: A case from the current Dutch “coco innovation”. In M. F. Tasar & G. Cakankci (Eds.), Proceedings of the 2009 ESERA conference, Istanbul, Turkey.

de Putter-Smits, L. G. A., Taconis, R., Jochems, W. M. G., & van Driel, J. (2011). De emphases van docenten biologie, natuurkunde en scheikunde en de gevolgen voor curriculum vernieuwingen [science teachers’ emphases and consequences for curriculum innovations]. Tijdschrift voor Didactiek der bèta-wetenschappen, 28, 32–48.

Deketelaere, A., & Kelchtermans, G. (1996). Collaborative curriculum development: An encounter of different professional knowledge systems. Teachers and Teaching, 2, 71–85.

den Brok, P., Bergen, T., & Brekelmans, M. (2006a). Convergence and divergence between students’ and teachers’ perceptions of instructional behaviour in Dutch secondary education. In D. L. Fisher & M. S. Khine (Eds.), Contemporary approaches to research on learning environments: Worldviews (pp. 125–160). Singapore: World Scientific.

den Brok, P., Bergen, T., Stahl, R., & Brekelmans, M. (2004). Students’ perceptions of teacher control behaviours. Learning and Instruction, 14, 425–443.

den Brok, P., Fisher, D., Rickards, T., & Bull, E. (2006b). Californian science students’ perceptions of their classroom learning environments. Educational Research and Evaluation, 12, 3–25.

Dorman, J. P. (2003). Cross-national validation of the What Is Happening In this Class? (WIHIC) questionnaire using confirmatory factor analysis. Learning Environments Research, 6, 231–245.

Driessen, H., & Meinema, H. (2003). Chemie tussen context en concept: Ontwerpen voor vernieuwing [Chemistry between context and concept: Designing for innovation]. Enschede: SLO Stichting Leerplanontwikkeling. www.nieuwescheikunde.nl.

Duit, R., Mikelskis-Seifert, S., & Wodzinski, C. (2007). Physics in context: A program for improving physics instruction in Germany. In R. Pinto & D. Couso (Eds.), Contributions from science education research (pp. 119–130). Dordrecht: Springer.

Duranti, A., & Goodwin, C. (Eds.). (1992). Rethinking context: Language as an interactive phenomenon. Cambridge: Cambridge University Press.

European Commission Directorate-General for Education and Culture. (2008). Common European principles for teacher competences and qualifications. http://ec.europa.eu/education/policies/2010/doc/principles-en.pdf.

Fenstermacher, G. (1994). The knower and the known: The nature of knowledge in research on teaching. Review of Research in Education, 20, 3.

Field, A. (2005). Discovering statistics using SPSS (2nd ed.). London: Sage.

Fraser, B. J., Fisher, D. L., & McRobbie, C. J. (1996, April). Development, validation and use of personal and class forms of a new classroom environment instrument. Paper presented at the annual meeting of the American Educational Research Association, New York.

Fullan, M. (1994). The new meaning of educational change (3rd ed.). London: Continuum Press.

Gilbert, J. (2006). On the nature of context in chemical education. International Journal of Science Education, 28, 957–976.

Goodlad, J. I. (1979). Curriculum inquiry: The study of curriculum practice. New York: McGraw-Hill.

Gräsel, C., Fussangel, K., & Parchmann, I. (2006). Professional development of teachers in learning communities: Teachers’ experiences and beliefs concerning collaboration. Zeitschrift für Erziehungswissenschaft, 9, 545–561.

Johnson, B., & McClure, R. (2004). Validity and reliability of a shortened, revised version of the Constructivist Learning Environment Survey (CLES). Learning Environments Research, 7, 65–80.

Johnson, R. B., & Onwuegbuzie, A. (2004). Mixed methods research: A research paradigm whose time has come. Educational Researcher, 33(7), 14–26.

Kenny, D. A. (2004). PERSON: A general model of interpersonal perception. Personality and Social Psychology Review, 8, 265–280.

Kuiper, W., Folmer, E., Ottevanger, W., & Bruning, L. (2009, January). Curriculumevaluatie bètaonderwijs tweede fase: Vernieuwings- en invoeringservaringen in 4 havo/vwo (2007–2008). [Curriculum evaluation science education second phase: Experiences with the innovation and implementation in 4 havo/vwo] (Interimrapportage nieuwe scheikunde). Enschede: Stichting Leerplanontwikkeling SLO.

Labudde, P. (2008). The role of constructivism in science education: Yesterday, today, and tomorrow. In S. Mikelskis-Seifert, U. Ringelband, & M. Brückmann (Eds.), Four decades in research of science education—From curriculum development to quality improvement (pp. 139–156). Münster: Waxmann Verlag.

Lamberigts, R., & Bergen, T. (2000, April). Teaching for active learning using a constructivist approach. Paper presented at the annual meeting of the American Educational Research Association, New Orleans.

Lee, S. S. U., & Fraser, B. J. (2000, June). The constructivist learning environment of science classrooms in Korea. Paper presented at the annual meeting of the Australasian Science Education Research Association, Fremantle, Western Australia.

Meirink, J. A. (2007). Individual teacher learning in a context of collaboration in teams. Doctoral dissertation, Leiden University.

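Mikelskis-Seifert, S., Bell, T., & Duit, R. (2007). Ergebnisse zur Lehrerprofessionalisierung im Programm Physik im Kontext [Results on teacher professionalisation in the Physik im Kontext programme]. In D. Höttecke (Ed.), Kompetenzen, Kompetenzmodelle, Kompetenzentwicklung [Competences, competence models, competence development] (Vol. 28, pp. 110–112). Berlin: Lit-Verlag.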

Parchmann, I., Gräsel, C., Baer, A., Nentwig, P., Demuth, R., Ralle, B., et al. (2006). “Chemie im Kontext”: A symbiotic implementation of a context-based teaching and learning approach. International Journal of Science Education, 28, 1041–1062.

Pilot, A., & Bulte, A. (2006a). The use of “contexts” as a challenge for chemistry curriculum: Its successes and the need for further development and understanding. International Journal of Science Education, 28, 1087–1112.

Pilot, A., & Bulte, A. (2006b). Why do you ‘need to know’? Context-based education. International Journal of Science Education, 28, 953–956.

Plomp, T., Bokhove, C., Dierdorp, A., de Putter-Smits, L., Schaap, S., & Visser, T. (2008). Het Dudoc-programma: Onderzoek ter ondersteuning van de vakvernieuwing in de exacte vakken in het vo [The Dudoc programme: Research supporting subject innovation in the sciences in secondary education]. In W. Jochems, P. den Brok, T. Bergen, & M. van Eijk (Eds.), Proceedings of the ORD 2008: Licht op leren [Light on learning] (pp. 396–407).

Roberts, D. A. (1982). Developing the concept of curriculum emphases in science education. Science Education, 66, 243–260.

Roelofs, E. C., Nijveldt, M., & Beijaard, D. (2008). Ontwikkeling van een zelfbeoordelingsinstrument voor docentcompetenties [Development of an instrument for self-evaluation of teacher competence]. Pedagogische Studiën, 85, 319–341.

Ryan, G. W., & Bernard, H. R. (2000). Data management and analysis methods. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (2nd ed., pp. 769–802). Thousand Oaks, CA: Sage.

SBL: Stichting beroepskwaliteit leraren en ander onderwijspersoneel [Association for the Professional Qualities of Teachers]. (2004). Good quality teachers for good quality education. http://www.bekwaamheidsdossier.nl/cms/bijlagen/SBLcompetenceprehig.pdf.

Shuell, T. J. (1996). Teaching and learning in a classroom context. In D. C. Berliner & R. C. Calfee (Eds.), Handbook of educational psychology (pp. 726–764). New York: Macmillan.

Shulman, L., & Shulman, J. (2004). How and what teachers learn: A shifting perspective. Journal of Curriculum Studies, 36, 257–271.

Steering Committee Advanced Science, Mathematics and Technology. (2008). Outline of a new subject in the sciences: A vision of an interdisciplinary subject: Advanced science, mathematics and technology. Enschede: SLO. (www.nieuwbetavak-nlt.nl).

Taconis, R., van der Plas, P., & van der Sanden, J. (2004). The development of professional competencies by educational assistants in school-based teacher education. European Journal of Teacher Education, 27, 215–240.

Taylor, P. C., Fraser, B. J., & Fisher, D. L. (1993, April). Monitoring the development of constructivist learning environments. Paper presented at the annual meeting of the American Educational Research Association, New Orleans, LA.

Taylor, P. C., Fraser, B. J., & Fisher, D. L. (1997). Monitoring constructivist classroom learning environments. International Journal of Educational Research, 27, 293–302.

Trochim, W. M., & Donnelly, J. P. (2006). The research methods knowledge base (3rd ed.). Cincinnati, OH: Atomic Dog.

van Berkel, B. (2005). The structure of current school chemistry. Doctoral dissertation, Utrecht University.

van Driel, J. H., Bulte, A. M., & Verloop, N. (2005). The conceptions of chemistry teachers about teaching and learning in the context of a curriculum innovation. International Journal of Science Education, 27, 303–322.

van Driel, J. H., Bulte, A. M., & Verloop, N. (2008). Using the curriculum emphasis concept to investigate teachers’ curricular beliefs in the context of educational reform. Journal of Curriculum Studies, 40, 107–122.

van Oers, B. (1998). From context to contextualizing. Learning and Instruction, 8, 473–488.

Vermunt, J. D., & Verloop, N. (1999). Congruence and friction between learning and teaching. Learning and Instruction, 9, 257–280.

Vos, M., Taconis, R., Jochems, W., & Pilot, A. (2010). Teachers implementing context-based teaching materials: A framework for case-analysis in chemistry. Chemistry Education Research and Practice, 11, 193–206.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

de Putter-Smits, L.G.A., Taconis, R. & Jochems, W.M.G. Mapping context-based learning environments: The construction of an instrument. Learning Environ Res 16, 437–462 (2013). https://doi.org/10.1007/s10984-013-9143-9
