Abstract

Aerosol samples collected on filter media were analyzed using HPGe detectors employing varying background-reduction techniques in order to experimentally evaluate the opportunity to apply ultra-low background measurement methods to samples collected, for instance, by the Comprehensive Test Ban Treaty International Monitoring System (IMS). In this way, realistic estimates were obtained of the impact of low-background methodology on the sensitivity of systems such as the IMS. The current detectability requirement of stations in the IMS is 30 μBq/m³ of air for ¹⁴⁰Ba, which would imply ~10⁶ fissions per daily sample. Importantly, this is for a fresh aerosol filter. One week of decay reduces the intrinsic background from radon daughters in the sample, allowing much more sensitive measurement of relevant isotopes, including ¹³¹I. An experiment was conducted in which decayed filter samples were measured at a variety of underground locations using Ultra-Low Background (ULB) gamma spectroscopy technology. The impacts of the decay and ULB are discussed.

Introduction

The Comprehensive Test Ban Treaty (CTBT), when in force, will prohibit nuclear explosions. The Verification Regime of this treaty exists to detect nuclear explosions. Seismic, hydroacoustic, and infrasound waveform technologies ‘listen’ for vibrations in the Earth’s rock, oceans, and atmosphere, respectively. These technologies rapidly obtain information about the size and location of a suspect event. Radionuclide technologies (aerosol and xenon monitoring) exist to capture some of the radioactive atoms emitted by the explosion, and by doing so confirm the nuclear nature of the event and possibly screen out unrelated civilian or natural phenomena, such as mining blasts and earthquakes. Aerosol samples of particular relevance to treaty compliance, i.e., samples that show evidence of two or more fission or activation products, are sent to specially certified IMS laboratories for confirmatory measurements at higher sensitivity levels. Together, these technologies form a network of 321 stations around the Earth, the International Monitoring System (IMS), which continuously monitors the environment and transmits data to a central data facility, the International Data Center (IDC), in Vienna, Austria.

The xenon IMS component is particularly valuable for detecting leakage from underground tests, as noble gases are the most likely to escape the containment of an underground test and be detectable via radioactive decay some distance downwind. Xenon leakage was observed from a recent underground test [1], showing the value of this method for verification, although xenon leakage from US tests at the Nevada Test Site (NTS) has been documented previously in DOE/NV-317 [2]. The leakage of radioisotopes from historic US underground testing is more instructive, however, as many more isotopes were observed to leak, and leakage fractions were documented from 10⁻³ to somewhat below 10⁻⁷. Iodine isotopes were the most commonly observed besides xenon. This is not unexpected, as iodine initially exists as a highly volatile gas. In the atmosphere, a significant fraction of it reacts rapidly to form aerosol and organic species in addition to gas.

The aerosol monitoring IMS component was originally designed to detect atmospheric tests, though the sensitivity of the aerosol equipment, 30 μBq/m³ for ¹⁴⁰Ba, is orders of magnitude below that observed for historical atmospheric tests. While the system performance of the aerosol network is computed to verify that the network meets a notional design criterion of 90% probability of detection of a 1 kt atmospheric test within 2 weeks using ¹⁴⁰Ba, the consideration of the capability of the aerosol network to detect underground tests is an important but so far unexplored area. The detection of the 2006 event in the DPRK by just one IMS xenon system suggests that improving the chances of a marginal detection in aerosol could greatly increase the confidence of detecting an underground explosion. This is especially important since the xenon network is less dense than the aerosol network, 40 stations compared to 80.

Source strength

To assess the capability of the aerosol network for underground tests, a source strength estimate is needed, together with the average atmospheric dilution that would occur between source and station, and the sensitivity of the stations to iodine isotopes. Leakage from US NTS tests can be estimated by using stated yield and leaked activity from DOE/NV-317, assuming 1.4 × 10²³ fissions/kt and a nominal decay time for the activity released. From this result, we take the leakage fraction to be 10⁻⁷, almost a worst case. That is, one in every 10 million fission atoms escapes containment. This is a very crude analysis, and does not consider many factors such as the impacts of differing rock type, yield level, and containment sophistication. For a hypothetical 1 kt explosion, this means that about 10¹⁶ fission atoms escape.
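The source-strength arithmetic in this section can be checked with a few lines of Python. This is only a sketch of the order-of-magnitude estimate stated in the text; the function and constant names are illustrative and not part of the original work.

```python
# Order-of-magnitude source term, using only values stated in the text.
FISSIONS_PER_KT = 1.4e23   # fissions per kiloton of yield (from the text)
LEAKAGE_FRACTION = 1e-7    # assumed near-worst-case leakage fraction (from the text)


def escaped_fission_atoms(yield_kt, leakage_fraction=LEAKAGE_FRACTION):
    """Return the number of fission atoms escaping containment."""
    return yield_kt * FISSIONS_PER_KT * leakage_fraction


escaped = escaped_fission_atoms(1.0)
print(f"Escaped fission atoms for 1 kt: {escaped:.1e}")
```

The result, 1.4 × 10¹⁶, is rounded to 10¹⁶ in the rest of the estimate, which is consistent with the stated crudeness of the analysis.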

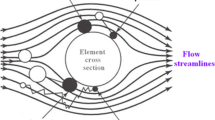

Atmospheric dilution scenario

The dilution expected from a surface release at a distance downwind similar to the spacing of the IMS aerosol stations would be about 10¹⁵. For the hypothetical case above, a release of 10¹⁶ atoms, there would be 10 atoms/m³ at the monitoring station. The IMS aerosol systems have a minimum sample size of 12,000 m³, such that, for a plume that covered the monitoring location for a full 24 h, 1.2 × 10⁵ fission debris atoms could be collected, leading to about 3600 ¹³¹I atoms, and 7200 atoms each of ¹⁴⁰Ba and ⁹⁹Mo. Two further factors are revisited later: only a part (perhaps as low as 1/3) of the iodine may be in particulate form, and some of the aerosol might be too large to remain suspended in the atmosphere.
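The dilution scenario reduces to three multiplications, sketched below. The per-isotope fractions (3% for ¹³¹I, 6% for ¹⁴⁰Ba and ⁹⁹Mo) are not stated in the text; they are back-calculated here from the quoted atom counts (3600 and 7200 out of 1.2 × 10⁵) and should be read as an assumption.

```python
# Dilution scenario, using the numbers quoted in the text.
RELEASED_ATOMS = 1e16        # escaped fission atoms for the 1 kt case
DILUTION_FACTOR = 1e15       # dilution between source and station (from the text)
SAMPLE_VOLUME_M3 = 12_000    # minimum IMS daily sample volume

# Assumption: fractions back-calculated from the atom counts in the text.
IMPLIED_FRACTION = {"131I": 0.03, "140Ba": 0.06, "99Mo": 0.06}

concentration = RELEASED_ATOMS / DILUTION_FACTOR   # atoms per m^3 at the station
collected = concentration * SAMPLE_VOLUME_M3       # atoms on a 24 h filter
per_isotope = {iso: collected * f for iso, f in IMPLIED_FRACTION.items()}

print(f"Concentration: {concentration:.0f} atoms/m^3")
print(f"Collected fission atoms: {collected:.1e}")
for iso, atoms in per_isotope.items():
    print(f"{iso}: about {atoms:.0f} atoms")
```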

System sensitivity

The sensitivity (in fission atoms) for an IMS aerosol system and for several alternative detection systems [3] has been estimated using a simple calculation based on the Currie formulation [4] of the critical limit (Lc) with a 95% confidence level. An IMS aerosol system would detect several isotopes clearly when 10⁶ fission atoms are collected, or about 100 atoms/m³. Table 1 compares estimated sensitivity values for a normal IMS station to a variety of detector types (p-type, well type, multi-crystal) in various low background configurations (a 30 m water equivalent (mwe) underground site and a site located deeper than 1000 mwe). The RNL multi-crystal system is described by another paper in this volume (Keillor et al.), and for the purposes of this table is operated as a single large detector, i.e., not in coincidence mode.
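The text does not give the exact expression used, so the following is a minimal sketch of the standard Currie critical level for a paired blank measurement, Lc = k·sqrt(2B), with k = 1.645 for a one-sided 95% confidence level. The background counts are hypothetical and only illustrate why lowering the background improves sensitivity.

```python
import math

Z_95_ONE_SIDED = 1.645  # one-sided 95% quantile of the standard normal


def critical_level(background_counts):
    """Currie critical level Lc (net counts) for a paired blank measurement."""
    return Z_95_ONE_SIDED * math.sqrt(2.0 * background_counts)


# Hypothetical background counts under a peak region (not from the paper).
lc_surface = critical_level(10_000)
lc_underground = critical_level(100)
print(f"Lc, higher background: {lc_surface:.1f} counts")
print(f"Lc, lower background:  {lc_underground:.1f} counts")

# Threshold quoted in the text, expressed as an air concentration.
threshold_concentration = 1e6 / 12_000  # atoms/m^3
print(f"10^6 atoms over 12,000 m^3: {threshold_concentration:.0f} atoms/m^3")
```

Because Lc scales with the square root of the background, a hundredfold background reduction lowers the critical level by a factor of ten in this simplified picture. Converting Lc from counts to atoms additionally requires detector efficiency, emission probability, and counting time, which are omitted here.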

Experimental approach

To test the estimates made previously, an experiment was designed to send IMS filters to a series of underground laboratories. A collection and measurement station in Vienna, Austria, compliant with IMS requirements was used; i.e., >12,000 m³ of air collected in 24 h. Each of these filters was handled and measured according to the normal IMS procedure, in which a large volume of air is drawn for 24 h, a subsequent 24 h decay period reduces Rn decay products, and then an HPGe assay is performed. Afterwards, each filter was compressed to a disk 5 mm thick by 50 mm in diameter. The result was a cylindrical geometry that could be placed directly on the endcap of the germanium detector, the closest possible geometry. This leads to summing effects for isotopes with multiple simultaneous gamma emissions, but is nevertheless the most sensitive geometry available for this type of sample and detector where additional reduction of the sample volume by ashing is disallowed.

The samples were rapidly shipped to several laboratories employing background reduction techniques of several kinds: careful shielding, special clean materials for shielding and detector construction, muon veto systems, and location below a burden of shielding material, ranging over three orders of magnitude. The participating laboratories are listed in Table 2.

The goal was for the compressed samples to be measured for about 1 week after 1 week of decay. Some samples were received and measured about a week late, but measurements done at one underground lab (IRMM) covering 3.5 weeks showed that a week of excess decay on the filter was insignificant for the purposes of this work, since the resultant spectrum did not change appreciably and the back-correction for decay could be artificially adjusted to eliminate the unwanted decay. This would not be possible in real operations, of course, as the desired Treaty isotopes would have undergone sensitivity-reducing decay, even though the backgrounds do not reduce appreciably after the first week.
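The cost of extra decay time for the Treaty-relevant isotopes can be illustrated with simple exponential decay. The half-lives below are standard nuclear data values (approximately 8.02 d for ¹³¹I, 12.75 d for ¹⁴⁰Ba, 2.75 d for ⁹⁹Mo) and are not taken from the text; the function names are illustrative.

```python
import math

# Approximate half-lives in days (standard nuclear data, not from the text).
HALF_LIFE_DAYS = {"131I": 8.02, "140Ba": 12.75, "99Mo": 2.75}


def fraction_remaining(isotope, decay_days):
    """Fraction of the initial atoms left after decay_days."""
    decay_constant = math.log(2) / HALF_LIFE_DAYS[isotope]
    return math.exp(-decay_constant * decay_days)


def back_correction_factor(isotope, decay_days):
    """Factor that scales a measured amount back to the start of decay."""
    return 1.0 / fraction_remaining(isotope, decay_days)


for iso in HALF_LIFE_DAYS:
    one_week = fraction_remaining(iso, 7)
    two_weeks = fraction_remaining(iso, 14)
    print(f"{iso}: {one_week:.2f} left after 7 d, {two_weeks:.2f} after 14 d")
```

Under these assumptions, roughly half of the ¹³¹I survives the first week and less than a third survives two weeks, which illustrates why a late measurement would reduce sensitivity in real operations even though the filter background changes little.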

Results and discussion

Given that the filters varied somewhat in the mix of Rn progeny and ⁷Be activity, no comparison of this type would fairly reflect the capability of the laboratories involved. The results are intended to show generally what might be accomplished on average, but with only one sample per laboratory, variations in the samples due to local weather in Vienna skew the results to some degree. For instance, one laboratory (IAEA-MEL) received a filter with 1/5 the ⁷Be activity of the average of the others.

One conclusion is that while low background systems could improve the detection sensitivity to ¹³¹I (364 keV, 82% probability), the Compton continuum below the 477 keV ⁷Be line creates a limiting factor for ultra-low background detectors for all isotopes detected in this energy range, as seen in spectra published previously [3]. Above 477 keV, the main limitations of the ultra-low background approach are the efficiency of the detector and the time available for measurement. In fact, the ¹³¹I sensitivity via the 637 keV line, emitted with 7.2% probability, is likely better than that via the 364 keV line in all underground detectors.

The preliminary results from the laboratory measurements are shown in Table 3. Because of the masking effect of ⁷Be Compton scatters, ⁹⁹Mo and ¹⁴⁰Ba-La are calculated to offer similar or greater nuclear explosion detection sensitivity than ¹³¹I. This comparison is misleading, because of the much higher probability of iodine leakage. Table 4 shows a summary of the frequency of various isotopes being detected in the leakage from US tests at the Nevada Test Site, as compiled from DOE/NV-317. Iodine, specifically ¹³¹I, was reported three times as often as ¹⁴⁰Ba, and yet ¹⁴⁰Ba may have only been observed in gross venting scenarios. Of the 356 individual isotope detections, 219 were iodine isotopes. Of the 80 identified leaking tests, 70 were listed as having measurable iodine. The preference for iodine leakage may be much stronger even than these statistics indicate, and the more complete the containment, the stronger the preference may be.

The comparison of Table 3 with Table 1 is interesting. It might be thought that the deepest lab would return the most sensitive results, but that may be impossible for a relatively short measurement (7 days) and where natural radioactivity (⁷Be, ⁴⁰K, etc.) remains after the decay period. In fact, the shallowest laboratory had excellent detection capability for every isotope, far better than the Table 1 estimates for the shallow p-type detector, even approaching the deep underground detector (TWIN) in sensitivity. Moreover, the ¹³¹I signal could endure a factor of ten reduction for fall-out losses near the vent location and a factor of three in speciation loss and still be easily detectable by the shallowest laboratory.
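The loss margin described above can be put in numbers by applying both reduction factors to the 3600 ¹³¹I atoms from the dilution scenario. This is a sketch of the arithmetic only; whether the remaining signal exceeds a given laboratory's detection limit depends on the Table 3 values, which are not reproduced here.

```python
# Loss factors and starting atom count as quoted in the text.
I131_ATOMS_COLLECTED = 3600    # 131I atoms on the filter in the dilution scenario
FALLOUT_LOSS_FACTOR = 10       # fall-out losses near the vent location
SPECIATION_LOSS_FACTOR = 3     # only about 1/3 of the iodine in particulate form

remaining = I131_ATOMS_COLLECTED / (FALLOUT_LOSS_FACTOR * SPECIATION_LOSS_FACTOR)
print(f"131I atoms remaining after both losses: {remaining:.0f}")
```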

This leads to the conclusion that, in the scenario discussed above, the use of ultra-low background technology could make ¹³¹I detectable by the IMS. Detector size, depth, peak-to-Compton ratio, cosmic veto systems, and Compton suppression systems may affect the relative performance of the participating laboratories. These and other factors must be considered to create a tailored, cost-effective approach that could be adapted to each of the 16 CTBT radionuclide laboratories. In addition, the laboratory results show that the original calculation approach was conservative. This may suggest that the computed station sensitivity enhancements could be similarly improved.

References

[1] Becker A, Wotawa G, Ringbom A, Saey P (2008) Backtracking of noble gas measurements taken in the aftermath of the announced October 2006 event in North Korea by means of the PTS methods in nuclear source estimation and reconstruction. Geophys Res Abstr, vol 10, SRef-ID: 1607-7962/gra/EGU2008-A-11835

[2] Schoengold CR, DeMarre ME, Kirkwood EM (1996) Radiological effluents released from U.S. continental tests 1961 through 1992, DOE/NV-317 (Rev. 1), UC-702. http://dx.doi.org/10.2172/414107

[3] Miley HS, Aalseth CE, Bowyer TW, Fast JE, Hayes JC, Hoppe EW, Hossbach TW, Keillor ME, Kephart JD, McIntyre JI, Seifert A (2009) Alternative treaty monitoring approaches using ultra-low background measurement. Appl Radiat Isot 67:746–749. doi:10.1016/j.apradiso.2009.01.069

[4] Currie LA (1968) Limits for qualitative detection and quantitative determination. Anal Chem 40(3):568–593

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Aalseth, C., Andreotti, E., Arnold, D. et al. Ultra-low background measurements of decayed aerosol filters. J Radioanal Nucl Chem 282, 731–735 (2009). https://doi.org/10.1007/s10967-009-0307-0
