Abstract

Nearly 40 years ago Congress laid the foundation for federal agencies to engage in technology transfer activities with a primary goal of making federal laboratory research outcomes widely available. Since then, agencies have generally relied on universal metrics such as licensing income and number of patents to measure the benefit of their technology transfer programs. However, such metrics do not address the requirements set by the current and previous administrations, which require agencies to better gauge the effectiveness and return on investment of their technology programs. Here we propose two metrics, filing ratio and transfer rate, and empirically evaluate them using data from the Department of the Navy’s most transactionally active laboratory, as well as recently released agency-reported data available from the FY 2015 annual technology transfer report (15 U.S.C. Section 3710). We additionally propose other federally-relevant metrics for which agency data are not currently available. Results presented here indicate that these modernized metrics may fulfill the requirements set by executive guidance. The study findings also point to other metrics that are relevant to practitioners, program managers, and policymakers in the evaluation of technology transfer programs for better measurement of effectiveness, efficiency, and return on investment.

1 Introduction

“Federal technology transfer” classically refers to transactional mechanisms and the means through which innovations stemming from federal laboratories are transferred to the private sector for development and commercialization. Research activities occurring at any specific federal laboratory are typically in support of a particular agency’s mission, such as improving and promoting public health or security. Prior to 1980, there was essentially no emphasis on commercialization or on seeing tangible benefits from technologies developed at federal laboratories (Economist 2002). In order to provide maximum benefit to the public from the growing federal expenditure on research activities, Congress laid the foundation for federal technology transfer by passing the Stevenson-Wydler Technology Innovation Act of 1980 (PL 96-480). The act required each federal laboratory to establish an Office of Research and Technology Applications (ORTA), staffed by personnel who are proficient in the technical field of the laboratory and capable of performing commercial assessments of the internally-developed technologies, and whose responsibilities include engaging industry partners for the purposes of seeking further development and commercialization of laboratory technologies, as well as general economic development (Spann et al. 1993). In the same year, another landmark law, the Bayh-Dole Act of 1980 (PL 96-517), motivated universities, not-for-profit organizations and small businesses to take control of commercially-relevant inventions resulting from federally-funded research, and transform them into commercial products (Gardner et al. 2010). As a result of these two laws, a significant increase in patenting and licensing activities was observed across all science and technology sectors (Rhoten and Powell 2007).

Since licensing income is an obvious outcome of commercialization activities and is required to be reported under the Technology Transfer Commercialization Act of 2000 (PL 106-404), licensing income and patents became common metrics for measuring technology transfer programs. This led to a belief that the most successful ORTAs and technology transfer offices (TTOs, the university equivalent to the federal ORTA) are those that are able to generate the most income through licensing (McDevitt et al. 2014). However, use of either patenting activities or licensing income as metrics is highly misleading, especially within the context of a federal laboratory, as the vast majority of patents are never licensed, and it is common that a single license disproportionately accounts for nearly all of the income received by a particular TTO (or ORTA), which may otherwise have low or moderate performance by other measures (Vinig and Lips 2015; Lemley and Feldman 2016). Over the years, a general perception has emerged that investments in federal laboratories have not resulted in adequate returns (Spann et al. 1995), and that technology transfer activities at federal laboratories broadly lag behind those in the private sector (Carr 1992a), but relative measures and empirical analyses are hard to come by.

Traditionally, metrics are developed to measure the desired expectations or outcomes, and are aligned with organizational mission. In deciding which technology transfer metrics to use, policymakers and practitioners must examine their underlying motivations for measuring the performance. The classical goal of an ORTA is to transfer commercially relevant technologies to the private sector and make those outcomes broadly available for the nation’s benefit (Spann et al. 1993). As federal laboratories are supported by appropriated funds and as the technologies themselves are nascent, this purpose generally does not prioritize acquiring income from industry partners to augment the cost of federal research (Chapman 1989). Revenue generation through research programs may not be the primary goal of universities (Woodell and Smith 2017; Abrams et al. 2009), even if universities still tend to be more sensitive to economic fluctuations and focus more on generating revenue for the university through the use of licensing and equity positions in spin-offs (Siegel et al. 2007). Given the wide variation in scope, mission and criteria for success across federal agencies and universities, measuring both tangible and intangible effects of technology transfer using a common metric is unrealistic and misleading (Franza and Srivastava 2009).

This lack of relevant metrics to evaluate the benefits of technology transfer activities, especially for federal laboratories, is well recognized (Premus and Jain 2005). A Presidential Memorandum (Obama 2011) attempted to address this by directing agencies to establish performance goals as per their agency’s mission, improve and expand appropriate metrics, and track the pace of technology transfer and commercialization activities. More recently, the President’s Management Agenda further recommended that agencies improve methods of evaluating the return on investment (ROI) in federal laboratories, and develop more effective transfer mechanisms (Trump 2018). Toward this end, federal agencies need to shift away from the use of patents or monetary metrics, and focus more on measurements that specifically gauge the efficiency and effectiveness of technology transfer processes and ROI (Copan 2018).

Accordingly, the purpose of this paper is to examine current technology transfer metrics and to explore and develop metrics that offer a holistic assessment of an agency’s program, in an effort to better gauge ongoing efficiency, effectiveness, and an agency’s prudent use of resources. Here, we present improved metrics, empirically evaluate them using agency data and an example federal laboratory, and compare the results against non-federal sector-wide norms. We then discuss our conclusions and implications for policy.

2 Metrics for federal agencies

Literature evaluating technology transfer in federal laboratories is extremely limited, apparently due to the inaccessibility of agency-specific data (Chen et al. 2018; Papadakis 1995; Jaffe et al. 1998). A few studies have developed various theoretical models to assess technology transfer based on expected outcomes (Carr 1992b; Franza and Grant 2006; Landree and Silberglitt 2018). These models focus on measuring outcomes such as number of licenses, number of agreements, royalty income earned, profits, and cost savings. Some agencies have also made efforts to establish guidelines for data collection and provide tools to measure and track technology transfer activity (Rood 1998). In 1987, pursuant to Executive Order 12,591, an Interagency Working Group on Technology Transfer (IAWGTT) of policymakers was established to coordinate technology transfer issues and exchange information (Chapman 1989). Following the Presidential Memorandum of 2011, that same group developed guidance for federal agencies regarding the use of appropriate metrics to track their technology transfer activities (IAWGTT 2013), with the data collected then compiled into an annual technology transfer report by the National Institute of Standards and Technology (NIST) under the authority of 15 USC 3710(g)(2). A number of measurements are already required to be reported under 15 USC 3710(f), such as the number of patent applications filed and patents received, the number of each type of active license (exclusive, partially exclusive or nonexclusive) producing income for the agency, the disposition of licensing income, and anecdotal examples of public benefit. Given that Cooperative Research and Development Agreements (CRADAs) are the chief way by which federal laboratories and industry collaborate, and that CRADAs (rather than license agreements) dominate the formal channels of federal technology transfer, the number of CRADAs is also routinely reported by agencies (Adams et al. 2003; Berman 1994). While those metrics are useful for reporting outcomes, it is notable that none address measures of efficiency related to ongoing practices of the agencies, including measures of “pace” or “effectiveness” explicitly mentioned in the 2011 Presidential Memorandum (Obama 2011) and 2018 President’s Management Agenda (Trump 2018), respectively. Additionally, the lack of standardization across the agencies for even the currently required metrics routinely leads to discrepancies between the data reported on agency websites and the data reported in the federal-wide annual reports (HHS 2017).

Given the similarity between ORTAs and university TTOs, metrics used by academic institutions were also evaluated. Most studies used surveys to propose numerous metrics that measure direct results of technology transfer activities such as number of patents (Jaffe and Lerner 2001; Hsu et al. 2015), patenting control ratio (Tseng et al. 2014), licensing income (Kim et al. 2008; Anderson et al. 2007) and number of start-ups (Fraser 2009; Ustundag et al. 2011). Souder et al. proposed interesting metrics such as the number of products resulting from technology transfer adopted by users (Souder et al. 1990). Studies have also proposed metrics such as economic benefits (Geisler 1994) and measurement of nonmonetary benefits (Sorensen and Chambers 2008) resulting from technology transfer activities. However, use of such indirect metrics typically involves significant assumptions (Bozeman et al. 2015) and requires a long-term study to assess the benefits resulting from such activities (Hertzfeld 2002). Overall, there are few, if any, measurements that are consistently applied; and although some articles do propose using the number of employees or the amount of research funding in dollars as denominators to create comparable ratios, this has not been widely adopted.

One article proposed using “Licensing Success Rate” (LSR) as a metric to assess technology transfer operations (Stevens and Kosuke 2013). LSR was calculated as the number of licenses granted divided by the total number of invention disclosures received by a TTO in a given year. The average LSR from one hundred and forty-two U.S. institutions over a time period of 20 years (1991–2010) was observed to be 25% (i.e., x licenses/y disclosures = 0.25). Naturally, when the LSR from individual institutions was compared for a given year, a wide distribution (standard deviations of 37.6 for 1993 and 45.2 for the year 2010) was seen. It was also observed that, in general, universities engaged in fundamental research have LSRs significantly lower than the 25% average, while those with more applied research programs had higher LSRs. The authors further proposed that beating the 25% LSR is a worthy aspirational goal and that the LSR serves as a good metric to determine the efficiency and effectiveness of a TTO (Stevens and Kosuke 2013).

As the LSR metric aligns well with both congressional and executive guidance, we sought to explore a variation of LSR as a metric to evaluate and analyze the activities of federal laboratories. Given the challenge in obtaining data from most federal laboratories (Link et al. 2011; Papadakis 1995; Jaffe et al. 1998), we decided to apply this and other metrics to analyze the activities of the Navy’s most transactionally active ORTA (the ORTA serving laboratories within the Navy Medicine Research and Development enterprise, NMR&D), and also to analyze more broadly the data from the FY 2015 annual technology transfer report for all agencies (NIST 2018).

3 Metrics to gauge performance

3.1 Filing ratio, a measure of the prudent use of resources

The Filing Ratio can be calculated from currently reported data and is the number of patent applications filed that represent a distinct and novel invention, divided by the total number of new invention disclosures in a fiscal year (FY). The numerator includes any new US provisional or non-provisional application filed during the fiscal year, with each invention counted only once to avoid double counting (i.e., the same invention filed repeatedly or in more than one jurisdiction still counts as a single filing). For example, a new provisional application filing would be counted, but the filing of the corresponding non-provisional application 1 year later would not. The Filing Ratio can be applied at the individual laboratory level, thereby providing information related to current practices of the specific laboratory, as well as at the agency level. In both cases it provides insight into how discriminating an agency is and whether invention disclosures are judiciously evaluated prior to filing a patent application, indicating how prudent an agency and laboratory are in their use of patenting resources. According to the analyses of Stevens et al., most universities file patent applications for about 60% of the invention disclosures they receive in a year (Stevens 2017). Therefore, using the 60% Filing Ratio as a benchmark industry norm for comparison purposes, a Filing Ratio greater than 60% would be consistent with relatively indiscriminate filing, and a Filing Ratio less than 60% would be consistent with a more judicious approach and prudent use of resources (Fig. 1).

Source: NIST report (2018)

Average filing ratio (± SE) of federal agencies from FY 2011 to 2015. 60% filing ratio is considered to be the university industry-wide norm, and is marked with a dashed line. Values above this would suggest a less discriminate approach towards evaluation of patents, while lower values would indicate a more discriminative approach.
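The counting rule behind the Filing Ratio (each invention counted once, in the fiscal year of its first filing) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Filing` record, its `invention_id` field, and the example data are hypothetical and are not drawn from the NIST report or from any agency tracking system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Filing:
    invention_id: str  # hypothetical key tying a filing to its invention disclosure
    kind: str          # e.g. "provisional", "non-provisional", "foreign"
    fiscal_year: int


def filing_ratio(filings, new_disclosures, fiscal_year):
    """Distinct inventions first filed in `fiscal_year`, divided by that year's new disclosures."""
    if new_disclosures <= 0:
        raise ValueError("new_disclosures must be positive")
    # Record the earliest fiscal year in which each invention was filed, so that
    # follow-on or foreign filings of the same invention are never counted again.
    first_filed = {}
    for f in filings:
        previous = first_filed.get(f.invention_id, f.fiscal_year)
        first_filed[f.invention_id] = min(previous, f.fiscal_year)
    distinct = sum(1 for fy in first_filed.values() if fy == fiscal_year)
    return distinct / new_disclosures


# Invented example records (not agency data).
example_filings = [
    Filing("A", "provisional", 2015),
    Filing("A", "non-provisional", 2016),  # follow-on to the FY 2015 provisional: not counted in FY 2016
    Filing("B", "provisional", 2016),
    Filing("B", "foreign", 2016),          # same invention, second jurisdiction: counted once
    Filing("C", "non-provisional", 2016),
]

ratio_fy2016 = filing_ratio(example_filings, new_disclosures=4, fiscal_year=2016)
print(f"FY 2016 Filing Ratio: {ratio_fy2016:.0%}")  # 2 distinct inventions / 4 disclosures
```

In this invented example the ratio falls below the 60% benchmark, which under the reading above would be consistent with a more selective filing practice. The sketch can only de-duplicate against filings present in the input list, so a real tracking tool would need the full filing history of each invention.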

3.2 Transfer rate, a broad measure of effectiveness

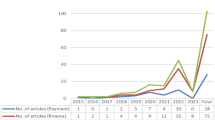

Transfer Rate is the ratio of the number of new patent licenses granted to the total number of patent applications filed, and can also be calculated from currently-reported data. Due to the significant resources invested in patenting, the metric is restricted to licenses involving patents and patent applications, and does not include licenses to non-patented technologies, datasets, or materials that lack patent support. The Transfer Rate differs from LSR (Stevens and Kosuke 2013) because the Transfer Rate provides specific information related to how many of those patented technologies were actually licensed by the agencies. Since the amount of licensing occurring in a given year results from inputs over multiple years (see Fig. 2), the Transfer Rate is a metric that measures laboratory and agency output resulting from activities performed over the current year, as well as recent past years. Collectively, the Transfer Rate and Filing Ratio provide information related to invention-related input and output activities of a laboratory and are easily scalable to the agency level, especially as agencies already track the component metrics (i.e., number of disclosures, number of patent applications filed, and number of licenses). Since the Transfer Rate differs from the LSR metric by tying the ratio to patent filings as opposed to disclosures, we calculated a benchmark value comparable to the 25% industry-wide LSR by using the 60% Filing Ratio noted by Stevens et al. as the TTO industry norm, resulting in the following formula: Transfer Rate = LSR × (1/Filing Ratio) = (number of new patent licenses/number of new disclosures) × (number of new disclosures/number of new patent applications filed) = 0.25/0.6 ≈ 0.4167. Therefore, the industry-wide Transfer Rate is approximately 42%, a number that can be used to gauge each agency’s (and individual laboratory’s) relative effectiveness.

Source: NIST report (2018)

Average transfer rate (± SE) of federal agencies from FY 2011 to 2015. A 42% transfer rate is the industry-wide norm and is marked with a dashed line. Values above the line would suggest more effective programs.
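The benchmark derivation for the Transfer Rate is simple arithmetic, and a short Python sketch makes the cancellation of the disclosure count explicit. The function names and the example laboratory figures are assumptions introduced for illustration; only the 25% LSR and 60% Filing Ratio come from the text.

```python
def transfer_rate(new_patent_licenses, new_applications_filed):
    """New patent licenses divided by new patent applications filed."""
    if new_applications_filed <= 0:
        raise ValueError("new_applications_filed must be positive")
    return new_patent_licenses / new_applications_filed


def benchmark_transfer_rate(lsr, filing_ratio):
    """Convert a disclosure-based LSR into a filing-based benchmark.

    (licenses / disclosures) * (disclosures / applications) = licenses / applications,
    which is the same as dividing the LSR by the Filing Ratio.
    """
    if filing_ratio <= 0:
        raise ValueError("filing_ratio must be positive")
    return lsr / filing_ratio


# Values quoted in the text: 25% LSR and 60% Filing Ratio.
BENCHMARK = benchmark_transfer_rate(lsr=0.25, filing_ratio=0.60)
print(round(BENCHMARK, 4))  # 0.4167

# Hypothetical laboratory: 6 new patent licenses against 20 new applications.
lab_rate = transfer_rate(new_patent_licenses=6, new_applications_filed=20)
print(f"{lab_rate:.0%} vs benchmark {BENCHMARK:.0%}")
```

Because the benchmark is a quotient of two sector-wide averages, it is best read as an approximate reference line rather than a precise target.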

3.3 Applying these measures to laboratories and agencies

We tested the functionality of these two metrics using numbers from the ORTA responsible for NMR&D. Briefly, the NMR&D laboratory enterprise is engaged in a broad spectrum of research and development activities, from basic and applied laboratory-based science to field studies at various sites in the world. The NMR&D ORTA hosts the largest CRADA portfolio in the Navy and routinely executes resource-leveraging partnerships as the primary mechanism to incent industry to invest resources in a technology before potentially transitioning it to the Navy for use (Hughes et al. 2011). As shown in Table 1, NMR&D patenting staff filed 115 US provisional applications for 138 invention disclosures that were received from FY 2006 to 2016, resulting in a Filing Ratio of 83.4%, which is among the more judicious numbers within the DoD, if still higher than the industry average. Eventually, 99 related subsequent patent applications were filed that claimed priority to those initial provisional applications (69 Non-provisional, 17 Divisional applications (DIVs), 6 Continuation applications (Conts.), 7 Continuation-in-part applications (CIPs)). Over the span of 11 years, 34 domestic patents were ultimately issued to NMR&D, a number that also includes granted DIVs, Conts., and CIPs. Out of those 115 filed provisional applications, the ORTA executed 18 licenses (Table 1), resulting in an average Transfer Rate of 15.6 ± 23.2% (mean ± SD), which is high within the context of DoD, albeit notably lower than the benchmark 42% TTO average. We also determined the Transfer Rate for each fiscal year (Table 1). Even though the ORTA had a relatively exceptional year in FY 2008 (Table 1), when 2 out of 8 filed applications were licensed non-exclusively to 5 different companies, other years were comparatively bare, which accounts for both the low average and its large standard deviation. It is likely that the lower average Transfer Rate is a result of a high Filing Ratio.
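As a rough cross-check, the pooled FY 2006 to 2016 totals quoted above can be run through the two ratios directly. This is a sketch using only the aggregate counts given in the text; the variable names are assumptions, and the per-year values in Table 1 are not reproduced here. The text reports the Transfer Rate as a mean ± SD across fiscal years, which need not equal the pooled quotient, so small differences from the reported figures are to be expected.

```python
# Aggregate NMR&D counts quoted in the text (FY 2006 to 2016).
disclosures = 138
provisional_filings = 115
licenses = 18
follow_on_filings = {
    "non-provisional": 69,
    "divisional": 17,
    "continuation": 6,
    "continuation-in-part": 7,
}

# Pooled ratios over the whole period (not per-year averages).
pooled_filing_ratio = provisional_filings / disclosures
pooled_transfer_rate = licenses / provisional_filings

print(f"Pooled Filing Ratio:  {pooled_filing_ratio:.1%}")
print(f"Pooled Transfer Rate: {pooled_transfer_rate:.1%}")
print(f"Follow-on filings:    {sum(follow_on_filings.values())}")  # 99, matching the text
```

The pooled quotients land within a fraction of a percentage point of the reported 83.4% and 15.6%, and well below the 42% Transfer Rate benchmark.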

Using data from the most recent public technology transfer report (NIST 2018) covering the years FY 2011–2015, we then evaluated the Filing Ratio and Transfer Rate for each agency (Figs. 1, 2). Since patenting activities are higher in some technical fields (e.g., health sciences) and lower in others (e.g., physics or computing) (Finne et al. 2009; Hicks et al. 2001), and there are large differences in agency resourcing, it is worth noting that only 3–4 agencies (DoD, DoE, HHS, and NASA) account for nearly all of the federal government’s patenting and licensing activities. Furthermore, just two agencies (DoD and DoE) together accounted for ~ 80% of the government’s total reported patent filings (Fig. S1). However, as described above, the number of patents is an exceedingly poor measure of technology transfer given the high amount of patenting activity for technologies that are not commercially relevant. If, instead of the absolute numbers of invention disclosures and new invention filings, one uses Filing Ratio as the metric, a better picture emerges of how judicious an agency or laboratory is in its decision to use federal resources to pursue patenting. As shown in Fig. 1, there are considerable differences in Filing Ratio across the agencies. Five agencies had a Filing Ratio higher than the industry norm (60%).

Although the university industry-wide Transfer Rate is approximately 42%, the government-wide average for the eleven agencies over the analyzed period was 25 ± 18%. It is notable that the Department of Veterans Affairs (VA) and National Aeronautics and Space Administration (NASA) had lower Filing Ratios, yet had higher Transfer Rates relative to several of their governmental peers (see Figs. 1, 2), indicating both a judicious approach towards evaluation of disclosures and generally more effective technology transfer programs overall. Interestingly, although the relevant statute (15 USC 3703) treats the DoD as four distinct agencies (i.e., Army, Navy, Air Force, and the collective remaining DoD agencies), the DoD combines all the metrics into a single annual report, making it more of a challenge to evaluate each specific agency. Only two agencies (DoI and HHS) achieved the benchmark Transfer Rate of 42% or more, indicating that most agencies appear not to have instituted extensive pre-filing technology evaluation processes. The DoD stands out as having the highest Filing Ratio (> 96%) and the lowest Transfer Rate (< 6%) (Fig. 2), while the DoE, which is also heavily involved in national security by being responsible for the nuclear arsenal, has a Filing Ratio of 55% and a Transfer Rate of 18%.

4 Modernized metrics

4.1 Partnership agreements

Many if not most of the technologies developed in the federal laboratories are early-stage technologies that are not commercially relevant and need further development before they are ready to be commercialized (Lipinski et al. 2013; Papadakis 1995; Ham and Mowery 1998). To better leverage government and private-sector resources for the purpose of developing technologies, Congress passed the Federal Technology Transfer Act of 1986 (PL 99-502), which enabled federal laboratories to enter into cooperative research and development agreements (CRADAs) with non-Federal entities to share facilities, resources, personnel, and expertise (Rogers et al. 1998). In addition to the CRADA authority, many federal agencies use other authorities to enter into research and development agreements (Varner 2012). For example, DoE utilizes “work-for-others” agreements and user facility agreements to permit non-Federal organizations to use its facilities, equipment and technical expertise (Senate 2002); and the National Institutes of Health executes a large number of material transfer and collaboration agreements with academic institutions (a number vastly exceeding their annual number of CRADAs) under the authority of the Public Health Service Act. Since there is widespread benefit to advancing science and developing technologies, a benefit relevant broadly to the federal government and private sector alike, the number of partnership agreements involving non-federal partners would naturally be a good metric for agencies (Franza and Srivastava 2009). Unfortunately, agencies do not consistently publicly report data related to these other types of partnership agreements, and so Fig. 3a focuses only on CRADAs and licenses, comparing the number of executed invention licenses and new CRADAs. Even with partnership agreements limited to just CRADAs, it is readily apparent that CRADAs far outnumber license agreements across all agencies for the fiscal years 2011–2015 (NIST 2018). This phenomenon is found at the individual laboratory level as well, as the NMR&D ORTA also established significantly higher numbers of CRADAs (Fig. 3b) than licenses in the past 4 years (2014–2017). The numbers indicate the widespread reliance on, and value of, CRADAs and similar resource-leveraging partnership agreements to the research and engineering communities, as well as the agencies. Few metrics surrounding these types of interactions have been proposed other than gross counts. If the gross count were filtered according to unique domestic partners, this would provide information regarding how interconnected the agency is, although such a metric would still not address an agency’s effectiveness, efficiency, or any outcome related to a particular transaction. To begin to address this, beyond simply reporting the number of partnership agreements, agencies could include the number of personnel, including support personnel, that were involved in the reported transactions. Proximal outcomes could similarly be monitored by noting the number of joint peer-reviewed publications (i.e., a publication coauthored by personnel from a federal laboratory together with personnel from a non-federal institution) or patents (Narin et al. 1997) resulting from such collaborations, outcomes that establish linkages between the partners.

4.2 Measures of process efficiency

Establishing CRADAs and license agreements depends on a number of factors (Kirchberger and Pohl 2016), such as type of collaborator (e.g., small business, non-profit, and industry partner), organizational practices (Siegel et al. 2003; Anderson et al. 2007), people skills (Greiner and Franza 2003) and agency policy affecting the length and complexity of terms within the boilerplate agreements (Bozeman and Crow 1991). These factors can significantly impact the time needed to establish a partnership or licensing agreement. To comply with recent executive guidance, it is important that all federal laboratories establish strategies and set realistic goals to improve the transactional efficiency of various agreements, as well as improve rates of technology licensing. First, though, transaction times need to be measured and consistently and transparently reported (e.g., in calendar days). Simple tracking tools would help monitor the efficiency of the individual laboratory (and the agency, by extension) by measuring the elapsed time from initiation of a partnership agreement negotiation to its final execution. Since partnerships are established in the form of various projects and stages, organizational practices and management of agreements by a highly skilled and empowered staff (Siegel et al. 2003; Swamidass and Vulasa 2009) will have significant impact on the transactional efficiency (Ham and Mowery 1998). The success of technology transfer activities would depend on low levels of bureaucratization (Bozeman and Coker 1992) and a diligent partner who provides substantive responses promptly. Toward this end, the DoE recently proposed to reduce the time to establish new traditional CRADAs to 60 days in their technology transfer and commercialization plan (Harrer and Cejka 2011). While agency-wide numbers are not available, NMR&D has averaged 146 ± 12.9 calendar days (mean ± S.E.; n = 187) between FY12-FY17, a number far exceeding the DoE’s aspirational time and warranting its own distinct analysis of the factors contributing to the prolonged time.
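A simple tracking tool of the kind described above needs little more than two dates per agreement. The sketch below computes elapsed calendar days and a mean ± standard error, and counts agreements exceeding a target such as the DoE's 60-day goal. The record layout, the function names, and the three example agreements are hypothetical and are not NMR&D data.

```python
from datetime import date
from math import sqrt
from statistics import mean, stdev


def elapsed_days(initiated, executed):
    """Calendar days from the start of negotiation to final execution."""
    return (executed - initiated).days


def mean_and_se(values):
    """Sample mean and standard error of the mean (needs at least two values)."""
    if len(values) < 2:
        raise ValueError("at least two transactions are required")
    return mean(values), stdev(values) / sqrt(len(values))


# Invented example agreements: (negotiation initiated, agreement executed).
agreements = [
    (date(2016, 1, 4), date(2016, 3, 4)),
    (date(2016, 2, 1), date(2016, 7, 1)),
    (date(2017, 1, 10), date(2017, 4, 10)),
]

days = [elapsed_days(start, end) for start, end in agreements]
avg, se = mean_and_se(days)
TARGET_DAYS = 60  # the DoE aspirational figure cited in the text
over_target = sum(1 for d in days if d > TARGET_DAYS)

print(f"{avg:.1f} ± {se:.1f} calendar days (mean ± S.E.; n = {len(days)})")
print(f"{over_target} of {len(days)} agreements exceeded {TARGET_DAYS} days")
```

Reporting the spread and the share of agreements over target alongside the mean would help distinguish a uniformly slow process from one dominated by a few protracted negotiations.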

One can also measure the time needed to find a licensee for any given invention, and use this information to make prudent decisions regarding maintenance fees and follow-on filings. As an example, while calculating Transfer Rate for this study, we also linked each licensed technology to its year of initial filing, providing information about the average time it took for the NMR&D ORTA to license each licensable technology from its initial filing. It has been reported that universities take an average of 51 months to license a technology to industry partners (Markman et al. 2005). Figure 4 shows that, on average, it took the NMR&D ORTA about 38 months to find a suitable licensee. In recent years (2014–2016), the ORTA managed to reduce the average time to license to 12 months from initial filing (Fig. 4). Here too, use of a simple tracking tool not only provides metrics related to the time between filing and licensing, but may also help inform patent resourcing decisions as pre- and post-allowance decision points arise.

5 Discussion

Federal agencies keep one-third of the ~ $150 billion U.S. Government R&D budget for internal activities, and recent executive guidance has directed agencies to improve and expand metrics to measure the efficiency, effectiveness and ROI of their technology transfer processes and programs (Obama 2011; Trump 2018). As reported, the current metrics provide little insight into agency technology transfer programs, leaving the requirements and guidance of the current and most recent administrations largely unanswered. Putting aside ROI for the moment, broad measures of agency technology transfer program prudence and effectiveness can nevertheless be calculated using currently reported data, and benchmarked against non-federal counterparts. Specifically, the Filing Ratio and Transfer Rate described here can both be rapidly calculated, and each provides information with which one can readily assess agency programs against their non-federal peers.

The analyses and results presented here stem from agency-reported data, as well as from data taken directly from the Navy’s most transactional ORTA with respect to CRADAs (NIST 2018), and these analyses support the idea that Filing Ratio and Transfer Rate are useful metrics for tracking the prudent use of resources and effectiveness of each agency (and laboratory). It should be noted, however, that the numbers reported in agency reports occasionally differ from those reported in the annual NIST report on federal technology transfer. For example, non-patent licenses (e.g., biological material licenses) were reported by one agency as ‘invention’ licenses in FY2012, FY2013 and FY2015 in the NIST report, and different numbers of filed patent applications have been reported on agency websites (HHS 2017) than reported in the NIST report (NIST 2018). Nevertheless, Filing Ratio and Transfer Rate provide for a meaningful comparison over time within and across agencies.

The higher Filing Ratio for some agencies observed in Fig. 1 indicates that those agencies generally apply a liberal approach towards disclosure evaluation. Although experts in the field are keenly aware that, by the numbers, most patents are not valuable (Allison et al. 2003; Moore 2005) or worth enforcing (Jaffe and Lerner 2006), due to the rather esoteric nature of patents, these facts may not be broadly appreciated by policy makers. Typically, less than 1% of invention disclosures have any major commercial success (Stevens and Burley 1997; Lemley and Feldman 2016); and there is generally little point to over-patenting (Siegel et al. 2004), as this only increases cost for agencies and, by extension, taxpayers. Although one might imagine that some strategic patenting might be occurring in the defense sector, the actual number of inventions under secrecy orders due to their subject being deemed ‘detrimental to national security’ is relatively small, numbering 85 for all of FY18 with just over half (43) of those having private sector inventors (Aftergood 2018), indicating that patenting for strictly strategic defense purposes is negligible. The higher costs and lower returns suggest that an arbitrary patenting strategy is applied at several agencies (Kordal and Guice 2008). The system would broadly benefit from a more discriminating approach towards evaluating invention disclosures (Ham and Mowery 1998), leading to improved success in getting the patented technologies licensed and commercialized.

In this study, we focused primarily on measuring Transfer Rate of technologies with patent support as a measure of program effectiveness. However, non-patented inventions can account for a significant share of licensing activity (Finne et al. 2009). A metric similar to Transfer Rate that includes licensing of non-patented technologies (e.g., the licensed transfer of know-how, materials, complementary assets, etc.) may provide additional information about commercially-relevant contributions of the federal laboratories (Rantanen 2012). The lower Transfer Rate observed for most agencies in Fig. 2 may also in part be the result of some laboratories focusing on fundamental research that is at such an early stage that it simply fails to interest industry partners. One metric that some agencies use is the number of publications cited in US patents. However, it is equally important to measure the number of times a patent is cited in scientific journals or in future patents, especially in private sector patent filings, as this provides a measurement of the laboratory’s or agency’s ‘relevance’ in a particular field. This retrospective approach (Lapray and Rebouillat 2014) would reflect on the value (Bessen 2008) and the impact of innovation on the downstream research efforts (Jung 2007), and would help in assessing the social value of the invention (Van Zeebroeck 2011) and laboratory.

The number of partnership agreements and their transaction times provide measures of agency and laboratory interconnectedness and competence. Measurements of process efficiency related to transactional activity (e.g., CRADAs and licenses) are critically important for implementing reforms aimed at programmatic improvement (Figs. 3, 4). Naturally, different types of transactions would be expected to have different transaction times: while simple agreements (e.g., material transfer agreements) could be completed within a few days, substantive CRADAs could be expected to take up to 60 days, and license agreements up to 120 calendar days. Overly lengthy transactions are among the most common criticisms from both external and internal stakeholders, and they encourage the non-memorialization of activities, leading to the government’s loss of rights to key datasets and potential inventions (Hernandez et al. 2017; Scherer et al. 2018). Unfortunately, although executive guidance explicitly directed agencies to improve the ‘pace’ and ‘streamlining’ of transactions, agencies do not generally report transaction times.
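As an illustration of how such transaction times could be tracked against the expectations stated above, the following is a minimal sketch in Python. The record layout, function names, and dates are hypothetical and are not drawn from any agency dataset; only the 60-day and 120-day upper bounds come from the text. Material transfer agreements are omitted because the text gives no specific number of days for them.

```python
from datetime import date

# Upper bounds in calendar days, taken from the expectations stated in the text.
# Material transfer agreements are left out because only "a few days" is given.
EXPECTED_MAX_DAYS = {"CRADA": 60, "license": 120}


def transaction_days(started: date, executed: date) -> int:
    """Calendar days between the start of a transaction and its execution."""
    return (executed - started).days


def exceeds_expectation(kind: str, started: date, executed: date) -> bool:
    """True when a transaction took longer than the stated upper bound."""
    return transaction_days(started, executed) > EXPECTED_MAX_DAYS[kind]


# Hypothetical records: (agreement type, start date, execution date).
records = [
    ("CRADA", date(2018, 1, 8), date(2018, 2, 23)),
    ("license", date(2018, 3, 1), date(2018, 8, 15)),
]

for kind, started, executed in records:
    days = transaction_days(started, executed)
    status = "over" if exceeds_expectation(kind, started, executed) else "within"
    print(f"{kind}: {days} days ({status} the expected maximum)")
```

Reporting the share of transactions that fall within these bounds, by agreement type, would be one simple way to express transactional efficiency alongside the raw counts.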

ROI is explicitly noted in the President’s Management Agenda. There are various ways to view ROI, ranging from a proximal perspective (e.g., agency outputs/agency inputs) to a more systemic perspective (e.g., [agency outputs + agency-enabled partner outputs]/agency inputs). Although more proximal datasets generally require fewer assumptions, are more reliable, and are more readily reportable, it has been argued that the systemic ROI may be many-fold greater than the initial investment. In either case, to accurately determine ROI as per the new focus (OMB 2018), agencies first need to provide information on the costs of their technology transfer programs, such as the combined fully-burdened salaries and expenses required to support the establishment of partnership transactions as well as patenting activities. However, it has previously been noted that overhead costs, such as those related to negotiating transactions and prosecuting patents, are not publicly reported (Anadon et al. 2016). In light of the Unleashing American Innovation initiative’s new focus on ROI (Copan 2018), agencies may choose (and may ultimately be required) to report this information. A simple proximal ROI metric that agencies could readily report is Net Costs/# Transactions, which is to say: (Program revenues − Program costs)/(Technology transfer agreements). Program revenues would naturally include license income as well as revenue received through various technology transfer agreements such as CRADAs. Program costs are all costs associated with the ORTA, including patenting and support staff. This calculation gives the proximal net cost per transaction of an agency’s program; a positive value indicates that the program brings in sufficient revenue to offset the cost of its own activities.
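The proximal metric described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name, field names, and dollar figures are hypothetical, since agencies do not currently report program costs, and the only element taken from the text is the formula (Program revenues − Program costs)/(Technology transfer agreements).

```python
from dataclasses import dataclass


@dataclass
class ProgramYear:
    """One fiscal year of hypothetical technology transfer program data."""
    license_income: float     # revenue from license agreements
    agreement_revenue: float  # revenue received through CRADAs and similar agreements
    orta_costs: float         # all ORTA costs, including patenting and support staff
    agreements: int           # number of technology transfer agreements


def proximal_roi_per_transaction(year: ProgramYear) -> float:
    """(Program revenues - Program costs) / (Technology transfer agreements)."""
    if year.agreements <= 0:
        raise ValueError("at least one agreement is needed for a per-transaction figure")
    revenues = year.license_income + year.agreement_revenue
    return (revenues - year.orta_costs) / year.agreements


# Made-up figures, for illustration only.
example = ProgramYear(
    license_income=1_200_000.0,
    agreement_revenue=800_000.0,
    orta_costs=1_500_000.0,
    agreements=50,
)
print(f"Net per transaction: ${proximal_roi_per_transaction(example):,.2f}")
```

With these illustrative inputs the result is positive, meaning revenues exceed program costs; a program whose costs exceed its revenues would yield a negative value.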

However, until agencies start to report their programmatic costs, analyses must make do with the currently reported data. In this report, we have used newly reported data to assess federal agencies relative to their non-federal counterparts. Metrics such as Filing Ratio and Transfer Rate, and expanded conventional metrics such as the number of partnership agreements, transactional efficiency, and even the proximal ROI described here, are all simple for technology transfer practitioners to apply, can be calculated using existing data, and would incentivize both laboratories and agencies to reflect on and implement processes for better outcomes from internal federal scientific research.

References

Abrams, I., Leung, G., & Stevens, A. J. (2009). How are US technology transfer offices tasked and motivated-is it all about the money. Research Management Review,17(1), 1–34.

Adams, J. D., Chiang, E. P., & Jensen, J. L. (2003). The influence of federal laboratory R&D on industrial research. Review of Economics and Statistics,85(4), 1003–1020.

Aftergood, S. (2018). Invention secrecy hits recent high. Secrecy News. Retrieved October 31, 2018 from https://fas.org/blogs/secrecy/2018/10/invention-secrecy-2018/.

Allison, J. R., Lemley, M. A., Moore, K. A., & Trunkey, R. D. (2003). Valuable patents. Georgetown Law Journal,92, 435.

Anadon, L. D., Chan, G., Bin-Nun, A. Y., & Narayanamurti, V. (2016). The pressing energy innovation challenge of the US National Laboratories. Nature Energy,1(10), 16117.

Anderson, T. R., Daim, T. U., & Lavoie, F. F. (2007). Measuring the efficiency of university technology transfer. Technovation,27(5), 306–318.

Berman, E. M. (1994). Technology transfer and the federal laboratories: A midterm assessment of cooperative research. Policy Studies Journal,22(2), 338–348.

Bessen, J. (2008). The value of US patents by owner and patent characteristics. Research Policy,37(5), 932–945.

Bozeman, B., & Coker, K. (1992). Assessing the effectiveness of technology transfer from US government R&D laboratories: The impact of market orientation. Technovation,12(4), 239–255.

Bozeman, B., & Crow, M. (1991). Technology transfer from US government and university R&D laboratories. Technovation,11(4), 231–246.

Bozeman, B., Rimes, H., & Youtie, J. (2015). The evolving state-of-the-art in technology transfer research: Revisiting the contingent effectiveness model. Research Policy,44(1), 34–49.

Carr, R. K. (1992a). Doing technology transfer in federal laboratories (Part 1). The Journal of Technology Transfer,17(2–3), 8–23.

Carr, R. K. (1992b). Menu of best practices in technology transfer (Part 2). The Journal of Technology Transfer,17(2–3), 24–33.

Chapman, R. L. (1989). The federal government and technology transfer implementing the 1986 act: Signs of progress. The Journal of Technology Transfer,14(1), 5–13.

Chen, C., Link, A. N., & Oliver, Z. T. (2018). US federal laboratories and their research partners: A quantitative case study. Scientometrics,115(1), 501–517.

Copan, W. G. (2018). Unleashing American innovation: return on investment. Retrieved November 28, 2018 from https://www.aip.org/sites/default/files/aipcorp/images/fyi/pdf/Copan%20Panel%20ROI%20Initiative%20Presentation%20041918.pdf.

Economist. (2002, Dec. 12). Innovation’s golden goose. The Economist.

Finne, H., Arundel, A., Balling, G., Brisson, P., & Erselius, J. (2009). Metrics for knowledge transfer from public research organisations in Europe. Report from the European Commission’s expert group on knowledge transfer metrics. Retrieved October 2, 2018 from http://ec.europa.eu/invest-in-research/pdf/download_en/knowledge_transfer_web.pdf.

Franza, R. M., & Grant, K. P. (2006). Improving federal to private sector technology transfer. Research-Technology Management,49(3), 36–40.

Franza, R. M., & Srivastava, R. (2009). Evaluating the return on investment for department of defense to private sector technology transfer. International Journal of Technology Transfer and Commercialisation,8(2–3), 286–298.

Fraser, J. (2009). Communicating the full value of academic technology transfer: Some lessons learned. Tomorrow’s Technology Transfer,1(1), 9–20.

Gardner, P. L., Fong, A. Y., & Huang, R. L. (2010). Measuring the impact of knowledge transfer from public research organisations: A comparison of metrics used around the world. International Journal of Learning and Intellectual Capital,7(3–4), 318–327.

Geisler, E. (1994). Key output indicators in performance evaluation of research and development organizations. Technological Forecasting and Social Change,47(2), 189–203.

Greiner, M. A., & Franza, R. M. (2003). Barriers and bridges for successful environmental technology transfer. The Journal of Technology Transfer,28(2), 167–177.

Ham, R. M., & Mowery, D. C. (1998). Improving the effectiveness of public–private R&D collaboration: Case studies at a US weapons laboratory. Research Policy,26(6), 661–675.

Harrer, B., & Cejka, C. (2011). Agreement execution process study: CRADAs and NF-WFO agreements and the speed of business. Richland, Washington: Pacific Northwest National Laboratory. Department of Energy. https://doi.org/10.2172/1008242.

Hernandez, S. H. A., Morgan, B. J., Hernandez, B. F., & Parshall, M. B. (2017). Building academic-military research collaborations to improve the health of service members. Nursing Outlook,65(6), 718–725.

Hertzfeld, H. R. (2002). Measuring the economic returns from successful NASA life sciences technology transfers. The Journal of Technology Transfer,27(4), 311–320.

HHS. (2017). Office of Technology Transfer activities (NIH, CDC, and FDA) FY 2011-2017. Retrieved September 6, 2018 from https://www.ott.nih.gov/reportsstats/ott-statistics.

Hicks, D., Breitzman, T., Olivastro, D., & Hamilton, K. (2001). The changing composition of innovative activity in the US—A portrait based on patent analysis. Research Policy,30(4), 681–703.

Hsu, D. W. L., Shen, Y.-C., Yuan, B. J. C., & Chou, C. J. (2015). Toward successful commercialization of university technology: Performance drivers of university technology transfer in Taiwan. Technological Forecasting and Social Change,92, 25–39.

Hughes, M. E., Howieson, S. V., Walejko, G., Gupta, N., Jonas, S., Brenner, A. T., et al. (2011). Technology transfer and commercialization landscape of the federal laboratories. Institute for Defense Analyses,27, 2015.

IAWGTT. (2013). Guidance for preparing annual agency technology transfer reports under the Technology Transfer Commercialization Act. http://www.nist.gov/tpo/publications/upload/Metrics-Guidance_Oct2013.pdf.

Jaffe, A. B., Fogarty, M. S., & Banks, B. A. (1998). Evidence from patents and patent citations on the impact of NASA and other federal labs on commercial innovation. The Journal of Industrial Economics,46(2), 183–205.

Jaffe, A. B., & Lerner, J. (2001). Reinventing public R&D: Patent policy and the commercialization of national laboratory technologies. Rand Journal of Economics,32(1), 167–198.

Jaffe, A. B., & Lerner, J. (2006). Innovation and its discontents. Innovation Policy and the Economy,6, 27–65.

Jung, T. (2007). Federal lab patents, licensing, and the value of patents: An explorative study about the licensed patents from NASA. WOPR, March 9.

Kim, J., Anderson, T., & Daim, T. (2008). Assessing university technology transfer: A measure of efficiency patterns. International Journal of Innovation and Technology Management,5(04), 495–526.

Kirchberger, M. A., & Pohl, L. (2016). Technology commercialization: A literature review of success factors and antecedents across different contexts. The Journal of Technology Transfer,41(5), 1077–1112.

Kordal, R., & Guice, L. (2008). Assessing technology transfer performance. Research Management Review,16(1), 1–13.

Landree, E., & Silberglitt, R. (2018). Application of logic models to facilitate DoD laboratory technology transfer. RAND Corporation. Retrieved October 2, 2018 from http://www.rand.org/t/RR2122.

Lapray, M., & Rebouillat, S. (2014). INNOVATION REVIEW: Closed, open, collaborative, disruptive, inclusive, nested… and soon reverse how about the metrics: Dream and reality. International Journal of Innovation and Applied Studies,9(1), 1–28.

Lemley, M. A., & Feldman, R. (2016). Patent licensing, technology transfer, and innovation. American Economic Review,106(5), 188–192.

Link, A. N., Siegel, D. S., & Van Fleet, D. D. (2011). Public science and public innovation: Assessing the relationship between patenting at U.S. National Laboratories and the Bayh-Dole Act. Research Policy,40(8), 1094–1099.

Lipinski, J., Lester, D. L., & Nicholls, J. (2013). Promoting social entrepreneurship: Harnessing experiential learning with technology transfer to create knowledge based opportunities. The Journal of Applied Business Research,29(2), 597–606.

Markman, G. D., Gianiodis, P. T., Phan, P. H., & Balkin, D. B. (2005). Innovation speed: Transferring university technology to market. Research Policy,34(7), 1058–1075.

McDevitt, V. L., Mendez-Hinds, J., Winwood, D., Nijhawan, V., Sherer, T., Ritter, J. F., et al. (2014). More than money: The exponential impact of academic technology transfer. Technology & Innovation,16(1), 75–84.

Moore, K. A. (2005). Worthless patents. Berkeley Technology Law Journal,20, 1521.

Narin, F., Hamilton, K. S., & Olivastro, D. (1997). The increasing linkage between US technology and public science. Research Policy,26(3), 317–330.

NIST. (2018). Federal laboratory technology transfer: Fiscal year 2015. Retrieved June 20, 2018 from https://www.nist.gov/tpo/federal-laboratory-interagency-technology-transfer-summary-reports.

Obama, B. H. (2011). Presidential memorandum: Accelerating technology transfer and commercialization of federal research in support of high growth businesses. Retrieved May 1, 2018 from http://www.whitehouse.gov/the-press-office/2011/10/28/presidential-memorandum-accelerating-technology-transfer-and-commerciali.

OMB. (2018). Analytical perspectives, budget of the United States Government. https://www.gpo.gov/fdsys/pkg/BUDGET-2019-PER/pdf/BUDGET-2019-PER.pdf.

Papadakis, M. (1995). The delicate task of linking industrial R&D to national competitiveness. Technovation,15(9), 569–583.

Premus, R., & Jain, R. (2005). Technology transfer and regional economic growth issues. International Journal of Technology Transfer and Commercialisation,4(3), 302–317.

Rantanen, J. (2012). Peripheral disclosure. University of Pittsburgh Law Review, 74, 1–45.

Rhoten, D., & Powell, W. W. (2007). The frontiers of intellectual property: Expanded protection versus new models of open science. The Annual Review of Law and Social Science,3, 345–373.

Rogers, E. M., Carayannis, E. G., Kurihara, K., & Allbritton, M. M. (1998). Cooperative research and development agreements (CRADAs) as technology transfer mechanisms. R&D Management,28(2), 79–88.

Rood, S. A. (1998). Government laboratory technology transfer: Process and impact assessment. Doctoral Dissertation, Virginia Polytechnic Institute and State University, http://hdl.handle.net/10919/30585.

Scherer, R. W., Sensinger, L. D., Sierra-Irizarry, B., & Formby, C. (2018). Lessons learned conducting a multi-center trial with a military population: The Tinnitus Retraining Therapy Trial. Clinical Trials,15(5), 429–435.

Senate, U. (2002). Technology transfer several factors have led to a decline in partnerships at DOE’s laboratories. Technology, 1–40.

Siegel, D. S., Veugelers, R., & Wright, M. (2007). Technology transfer offices and commercialization of university intellectual property: Performance and policy implications. Oxford Review of Economic Policy,23(4), 640–660.

Siegel, D. S., Waldman, D. A., Atwater, L. E., & Link, A. N. (2004). Toward a model of the effective transfer of scientific knowledge from academicians to practitioners: Qualitative evidence from the commercialization of university technologies. Journal of Engineering and Technology Management,21(1–2), 115–142.

Siegel, D. S., Waldman, D., & Link, A. (2003). Assessing the impact of organizational practices on the relative productivity of university technology transfer offices: An exploratory study. Research Policy,32(1), 27–48.

Sorensen, J. A. T., & Chambers, D. A. (2008). Evaluating academic technology transfer performance by how well access to knowledge is facilitated—Defining an access metric. The Journal of Technology Transfer,33(5), 534–547.

Souder, W. E., Nashar, A. S., & Padmanabhan, V. (1990). A guide to the best technology-transfer practices. The Journal of Technology Transfer,15(1), 5–16.

Spann, M. S., Adams, M., & Souder, W. E. (1993). Improving federal technology commercialization: Some recommendations from a field study. The Journal of Technology Transfer,18(3), 63–74.

Spann, M. S., Adams, M., & Souder, W. E. (1995). Measures of technology transfer effectiveness: Key dimensions and differences in their use by sponsors, developers and adopters. IEEE Transactions on Engineering Management,42(1), 19–29.

Stevens, A. J. (2017). An emerging model for life sciences commercialization. Nature Biotechnology,35(7), 608–613. https://doi.org/10.1038/nbt.3911.

Stevens, A. J., & Kosuke, K. (2013). Technology transfer’s twenty-five percent rule. Les Nouvelles, March, 44–51.

Stevens, G. A., & Burley, J. (1997). 3,000 raw ideas = 1 commercial success! Research-Technology Management,40(3), 16–27.

Swamidass, P. M., & Vulasa, V. (2009). Why university inventions rarely produce income? Bottlenecks in university technology transfer. The Journal of Technology Transfer,34(4), 343–363.

Trump, D. J. (2018). President’s management agenda: Modernizing Government for the 21st Century. Retrieved June 20, 2018 from https://www.whitehouse.gov/omb/management/pma/.

Tseng, A. A., Kominek, J., Chabičovský, M., & Raudensky, M. (2014). Performance assessments of technology transfer offices of thirty major US Research universities in 2012/2013. International Journal of Engineering and Technology Innovation,4(4), 195–212.

Ustundag, A., Kahraman, C., Uğurlu, S., & Serdar Kilinc, M. (2011). Evaluating the performance of technology transfer offices. Journal of Enterprise Information Management,24(4), 322–337.

Van Zeebroeck, N. (2011). The puzzle of patent value indicators. Economics of Innovation and New Technology,20(1), 33–62.

Varner, T. R. (2012). An economic perspective on patent licensing structure and provisions. Les Nouvelles, March, 28–36.

Vinig, T., & Lips, D. (2015). Measuring the performance of university technology transfer using meta data approach: The case of Dutch universities. The Journal of Technology Transfer,40(6), 1034–1049.

Woodell, J. K., & Smith, T. L. (2017). Technology transfer for all the right reasons. Technology & Innovation,18(4), 295–304.

Acknowledgements

We thank Ms. Kelly Svendsen for her assistance in gathering information related to the NMR&D patent portfolio.

Ethics declarations

Conflict of interest

The authors report no conflicts of interest. The views expressed in this article are those of the author and do not necessarily reflect the official policy or position of the Department of the Navy, Department of Defense, nor the U.S. Government. TAP is an employee of the U.S. Government. This work was prepared as part of his official duties. Title 17 U.S.C. § 105 provides that “Copyright protection under this title is not available for any work of the United States Government.” Title 17 U.S.C. § 101 defines a U.S. Government work as a work prepared by a military service member or employee of the U.S. Government as part of that person’s official duties.

Electronic supplementary material

Fig. S1:

Five-year average numbers (± 0.5%) for all federal agencies (FY 2011–2015). (PDF 79 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Choudhry, V., Ponzio, T.A. Modernizing federal technology transfer metrics. J Technol Transf 45, 544–559 (2020). https://doi.org/10.1007/s10961-018-09713-w

DOI: https://doi.org/10.1007/s10961-018-09713-w