Abstract

In this paper, we propose a new long-step interior point method for solving sufficient linear complementarity problems. The new algorithm combines two important approaches from the literature: the main ideas of the long-step interior point algorithm introduced by Ai and Zhang and the algebraic equivalent transformation technique proposed by Darvay. Similar to the method of Ai and Zhang, our algorithm works in a wide neighborhood of the central path and has the best known iteration complexity of short-step variants. However, due to the properties of the transforming function applied in Darvay’s technique, the wide neighborhood definition in the analysis depends on the value of the handicap. We implemented not only the theoretical algorithm but also a greedy variant of the new method (working in a neighborhood independent of the handicap) in MATLAB and tested its efficiency on both sufficient and non-sufficient problem instances. In addition to presenting our numerical results, we also make some interesting observations regarding the analysis of Ai–Zhang type methods.


1 Introduction

In this paper, we introduce a new long-step interior point method for solving linear complementarity problems (LCPs). LCPs have a wide range of applications in numerous fields, for example, solving the Arrow–Debreu market exchange model with Leontief utilities [42] and finding equilibrium points in bimatrix games [31]; several engineering applications can be found in the survey [22]. The LCP class also contains the linear programming problem and the quadratic programming problem as special cases. Many of the classical applications and results can be found in the monographs of Cottle et al. [8] and Kojima et al. [29].

In general, the LCP is an NP-complete problem [7], but many efficient algorithms have been introduced assuming that the coefficient matrix has a special property. In this paper, we suppose that the coefficient matrix is a \(\mathcal {P}_*(\kappa )\)-matrix. In this case, a nonnegative number \(\kappa \) can be assigned to the matrix, which is called its handicap. With this assumption, several authors could introduce interior point algorithms that are polynomial in the size of the problem and the handicap. However, de Klerk and E.-Nagy [17] proved that there are matrices for which the value of the handicap is exponential in the problem size.

Based on the step length used, interior point algorithms (IPAs) can be divided into two main groups: short-step and long-step methods. Even though long-step algorithms perform better in practice, a better theoretical complexity can generally be proved for short-step variants; that is, for many years, there was a gap between theory and practice. Several attempts have been made to resolve this issue based on self-regular functions, kernel functions, and other techniques, e.g., [6, 35, 37].

Ai and Zhang [1] introduced a long-step IPA for solving monotone LCPs. Their method works in a wide neighborhood of the central path and has the best known theoretical complexity of short-step variants. Based on their approach, several authors proposed long-step methods with the best known theoretical complexity, for different problem classes, e.g., for linear optimization [15, 33, 41], horizontal linear complementarity problems (HLCPs) [38], symmetric cone Cartesian \(\mathcal {P}_*(\kappa )\)-HLCPs [4, 5], and also for semidefinite optimization [21, 32, 36].

Darvay [9] introduced the algebraic equivalent transformation (AET) technique to determine new search directions for IPAs. His main idea was to apply a continuously differentiable, invertible function \(\varphi \) to the centering equation of the central path problem. Then, by applying Newton’s method to this transformed system, the new search directions can be determined. A new version of the AET method has been examined in the paper of Darvay and Takács for linear optimization [16], based on a different rearrangement of the centering equation. Using this new type of transformation, recently Darvay et al. introduced a predictor–corrector IPA for sufficient LCPs [13].

By changing the function \(\varphi \), different methods can be introduced. Most IPAs from the literature can be considered as a special case of the AET technique, with the function \(\varphi (t)=t\) (in this case, the central path problem is not transformed). In his first paper, Darvay applied the function \(\varphi (t)=\sqrt{t}\). This function has been used in the paper of Darvay and Rigó as well [15], where they introduced an Ai–Zhang type long-step IPA for linear optimization with the best known theoretical complexity, and using the same function, Illés et al. recently proposed a predictor–corrector IPA in [26]. This function has also been applied by Asadi and Mansouri to \(\mathcal {P}_*(\kappa )\)-HLCPs [3].

The function \(\varphi (t)=t-\sqrt{t}\) has been proposed also by Darvay et al. [14], and in the last few years, it has been applied in several different papers by Darvay and his coauthors. They introduced a corrector–predictor IPA for solving linear programming problems [10] and proposed another corrector–predictor IPA for sufficient LCPs [12], and they also presented a short-step IPA for sufficient LCPs [11].

Moreover, the function \(\varphi (t)=\frac{\sqrt{t}}{2(1+\sqrt{t})}\) has been introduced by Kheirfam and Haghighi [28] to solve \(\mathcal {P}_* (\kappa )\)-LCPs with a short-step IPA.

In this paper, we also apply the AET technique, with the function \(\varphi (t)=t-\sqrt{t}\) and introduce an Ai–Zhang type long-step interior point method for solving sufficient LCPs. This is the first such algorithm, to the best of our knowledge. We prove that our IPA has the best known iteration complexity of short-step variants. This result can be considered as the generalization of the IPA we introduced for linear optimization in [20]. In addition to generalizing the algorithm, we could also improve some of our estimations in [20], and for this reason, better parameter settings can be applied here.

Potra [38] proposed an Ai–Zhang type method for \(\mathcal {P}_*(\kappa )\)-HLCPs. (A \(\mathcal {P}_*(\kappa )\)-HLCP can be equivalently reformulated as a \(\mathcal {P}_*(\kappa )\)-LCP problem [2]). He did not apply the AET method, i.e., in his case \(\varphi \) can be considered as the identity function. In that case, the convergence and best known complexity of the method could be proved using the original, \(\kappa \)-independent neighborhood of Ai and Zhang. For our method using the function \(\varphi (t)=t-\sqrt{t}\), with the current estimations, the convergence cannot be proved, assuming that the neighborhood does not depend on the handicap. Still, the result is interesting because it shows that the AET technique can be applied for Ai–Zhang type methods, even in the case of \(\mathcal {P}_*(\kappa )\)-LCPs. Our main goal is to identify a class of transforming functions, where, similar to the result of Potra, a \(\kappa \)-independent neighborhood can be applied, and another wider class, where, similar to the case examined in this paper, the analysis can be carried out only in a \(\kappa \)-dependent neighborhood.

Throughout this paper, the following notations will be used. We denote scalars and indices by lowercase Latin letters and vectors by bold lowercase Latin letters. Matrices are denoted by uppercase Latin letters. We denote sets by capital calligraphic letters. \(\mathbb {R}^n_+\) denotes the set of n-dimensional vectors with strictly positive coordinates, and \(\mathbb {R}^n_{\oplus }\) is the set of n-dimensional nonnegative vectors. Let f(x) be a univariate function, and let \(\textbf{x} \in \mathbb {R}^n\) be a given vector. Then, \(f(\textbf{x})\) denotes the vector \(f(\textbf{x})=\left[ f(x_1), \ldots , f(x_n) \right] ^\top \). Let \(\textbf{u},\textbf{v} \in \mathbb {R}^n\) be two given vectors. Then, \(\textbf{u}\textbf{v}\) is the Hadamard product (namely, the componentwise product) of \(\textbf{u}\) and \(\textbf{v}\). If \(v_i \ne 0\) holds for all indices i, then the fraction of \(\textbf{u}\) and \(\textbf{v}\) is the vector \(\textbf{u}/\textbf{v}=[u_1/v_1,\ldots ,u_n/v_n]^\top \). If \(\alpha \in \mathbb {R}\), let \(\textbf{u}^{\alpha }=[u_1^{\alpha },\ldots , u_n^{\alpha }]^\top \).

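Let \(\mathcal {I}\) denote the index set \(\mathcal {I}=\{1,\ldots ,n\}\). We denote the positive and negative part of the vector \(\textbf{u}\) by \(\textbf{u}^+\) and \(\textbf{u}^-\), i.e.,

$$\begin{aligned} \textbf{u}^+=\max \{\textbf{u},\textbf{0}\} \quad \text {and} \quad \textbf{u}^-=\min \{\textbf{u},\textbf{0}\}, \end{aligned}$$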

where the maximum and minimum are taken componentwise. We use the standard notation \(\Vert \textbf{u} \Vert \) for the Euclidean norm of \(\textbf{u}\), \(\Vert \textbf{u} \Vert _1=\sum _{i=1}^n |u_i|\) denotes the Manhattan-norm of \(\textbf{u}\), and \(\Vert \textbf{u} \Vert _{\infty }=\max _{i=1}^n |u_i|\) is the infinity norm of \(\textbf{u}\). The matrix \(\text {diag} (\textbf{u})\) is the diagonal matrix with the elements of the vector \(\textbf{u}\) in its diagonal. Finally, \(\textbf{e}\) denotes the vector of all ones.
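The componentwise notation above can be illustrated with a short Python sketch. It assumes the convention \(\textbf{u}^-=\min \{\textbf{u},\textbf{0}\}\), so that \(\textbf{u}=\textbf{u}^+ + \textbf{u}^-\); the function names are chosen for this illustration only.

```python
import math

def pos_part(u):
    """Componentwise positive part u^+ = max{u, 0}."""
    return [max(ui, 0.0) for ui in u]

def neg_part(u):
    """Componentwise negative part u^- = min{u, 0} (assumed sign convention)."""
    return [min(ui, 0.0) for ui in u]

def hadamard(u, v):
    """Hadamard (componentwise) product uv."""
    return [ui * vi for ui, vi in zip(u, v)]

def norm_2(u):
    return math.sqrt(sum(ui * ui for ui in u))

def norm_1(u):
    return sum(abs(ui) for ui in u)

def norm_inf(u):
    return max(abs(ui) for ui in u)

u = [3.0, -4.0, 0.0]
u_plus, u_minus = pos_part(u), neg_part(u)
```

With this convention, \(\textbf{u}^+\) and \(\textbf{u}^-\) have disjoint supports, which is the orthogonality property used later in the analysis.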

This paper is organized as follows. Section 2 summarizes the most important properties of LCPs and the related matrix classes. In Sect. 3, we give an overview of the algebraic equivalent transformation technique and the method of Ai and Zhang. In Sect. 4, we introduce a new, Ai–Zhang type wide neighborhood and describe our new algorithm. In Sect. 5, we prove that the method is convergent and has the best known iteration complexity. Section 6 presents our numerical results. In Sect. 6.2, we make some interesting observations on the coordinates of the vector \(\textbf{v}\). Section 7 summarizes our results.

2 The Linear Complementarity Problem

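Let us consider the linear complementarity problem (LCP) in the following form:

$$\begin{aligned} -M \textbf{x}+\textbf{s}=\textbf{q}, \quad \textbf{x}, \textbf{s} \ge \textbf{0}, \quad \textbf{x}\textbf{s}=\textbf{0}, \end{aligned}$$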

where \(M \in \mathbb {R}^{n \times n}\) and \(\textbf{q} \in \mathbb {R}^n\) are given, and our goal is to find a vector pair \((\textbf{x}, \textbf{s}) \in \mathbb {R}^n \times \mathbb {R}^n\) that satisfies the system.

Let \(\mathcal {F}=\{(\textbf{x},\textbf{s}):\ -M \textbf{x}+\textbf{s} = \textbf{q},\ \textbf{x} \ge \textbf{0},\ \textbf{s} \ge \textbf{0} \} \) denote the set of feasible solutions, \(\mathcal {F}^+=\{(\textbf{x},\textbf{s}) \in \mathcal {F}: \ \textbf{x}> \textbf{0},\ \textbf{s} > \textbf{0} \}\) the set of strictly positive feasible solutions, and \(\mathcal {F}^*=\{(\textbf{x},\textbf{s}) \in \mathcal {F}: \textbf{x} \textbf{s} = \textbf{0} \}\) the set of solutions to the linear complementarity problem.
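As a small illustration of these three sets, the following Python sketch classifies a given pair \((\textbf{x},\textbf{s})\). The matrix, the vector \(\textbf{q}\), the tolerance `tol`, and the function names are assumptions made for this example.

```python
def lcp_residuals(M, q, x, s):
    """Return the linear residual -Mx + s - q and the Hadamard product xs."""
    n = len(q)
    Mx = [sum(M[i][j] * x[j] for j in range(n)) for i in range(n)]
    lin = [-Mx[i] + s[i] - q[i] for i in range(n)]
    comp = [x[i] * s[i] for i in range(n)]
    return lin, comp

def classify(M, q, x, s, tol=1e-12):
    """Return (feasible, strictly_positive_feasible, solution) flags."""
    lin, comp = lcp_residuals(M, q, x, s)
    lin_ok = all(abs(r) <= tol for r in lin)
    feasible = lin_ok and all(t >= 0 for t in x + s)
    interior = lin_ok and all(t > 0 for t in x + s)
    solution = feasible and all(abs(c) <= tol for c in comp)
    return feasible, interior, solution

# Hypothetical 2x2 instance with a positive definite matrix.
M = [[2.0, 1.0], [1.0, 2.0]]
q = [-2.0, 1.0]
flags_solution = classify(M, q, [1.0, 0.0], [0.0, 2.0])
flags_interior = classify(M, q, [1.0, 1.0], [1.0, 4.0])
```

Here the first pair is a solution of the LCP (it is feasible and complementary), while the second is strictly positive and feasible but not complementary.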

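The class of sufficient matrices has been introduced by Cottle et al. [8]. A matrix \(M \in \mathbb {R}^{n \times n}\) is column sufficient if the following implication holds for all \(\textbf{x} \in \mathbb {R}^n\):

$$\begin{aligned} x_i (M\textbf{x})_i \le 0 \ \text { for all } i \in \mathcal {I} \quad \Longrightarrow \quad x_i (M\textbf{x})_i = 0 \ \text { for all } i \in \mathcal {I}. \end{aligned}$$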

M is row sufficient if \(M^T\) is column sufficient, and a matrix M is sufficient if it is both row and column sufficient.

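Kojima et al. [29] introduced the class of \(\mathcal {P}_* (\kappa )\)-matrices. Let \(\kappa \) be a given nonnegative number. A matrix \(M \in \mathbb {R}^{n \times n}\) is a \(\mathcal {P}_*(\kappa )\)-matrix if, with \(\mathcal {I}_+(\textbf{x})=\{i \in \mathcal {I}: x_i (M\textbf{x})_i>0\}\) and \(\mathcal {I}_-(\textbf{x})=\{i \in \mathcal {I}: x_i (M\textbf{x})_i<0\}\), the inequality

$$\begin{aligned} (1+4\kappa ) \sum _{i \in \mathcal {I}_+(\textbf{x})} x_i (M\textbf{x})_i + \sum _{i \in \mathcal {I}_-(\textbf{x})} x_i (M\textbf{x})_i \ge 0 \end{aligned}$$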

holds for all \(\textbf{x} \in \mathbb {R}^n\). This class can be considered as the generalization of positive semidefinite matrices since \(\mathcal {P}_*(0)\) is the set of positive semidefinite matrices.
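The \(\mathcal {P}_*(\kappa )\) condition can be probed numerically for individual vectors. The sketch below assumes the standard form of the inequality, \((1+4\kappa )\sum _{x_i (M\textbf{x})_i>0} x_i (M\textbf{x})_i + \sum _{x_i (M\textbf{x})_i<0} x_i (M\textbf{x})_i \ge 0\), and computes, for one test vector, the smallest \(\kappa \) for which it holds. Such a value is only a lower bound on the handicap; the function names and the example matrix are chosen for illustration.

```python
def split_products(M, x):
    """Sum the positive and the negative terms x_i (Mx)_i separately."""
    n = len(x)
    Mx = [sum(M[i][j] * x[j] for j in range(n)) for i in range(n)]
    prods = [x[i] * Mx[i] for i in range(n)]
    pos = sum(t for t in prods if t > 0)
    neg = sum(t for t in prods if t < 0)
    return pos, neg

def p_star_lhs(M, x, kappa):
    """Left-hand side of the P_*(kappa) inequality at the vector x."""
    pos, neg = split_products(M, x)
    return (1.0 + 4.0 * kappa) * pos + neg

def kappa_needed(M, x):
    """Smallest kappa >= 0 satisfying the inequality at this single x."""
    pos, neg = split_products(M, x)
    if pos + neg >= 0:
        return 0.0
    if pos == 0:
        return float("inf")  # no finite kappa works for this x
    return (-neg / pos - 1.0) / 4.0

M = [[0.0, 1.0], [-2.0, 0.0]]
bound = kappa_needed(M, [1.0, 1.0])
```

For this matrix, the vector \((1,1)^\top \) already forces \(\kappa \ge 1/4\), whereas \((1,-1)^\top \) imposes no restriction.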

The smallest \(\kappa \) value for which M is a \(\mathcal {P}_* (\kappa )\)-matrix is called the handicap of M. The matrix class \(\mathcal {P}_*\) can be defined in the following way:

$$\begin{aligned} \mathcal {P}_*=\bigcup _{\kappa \ge 0} \mathcal {P}_*(\kappa ). \end{aligned}$$

Kojima et al. [29] proved that if a matrix belongs to the set \(\mathcal {P}_*\), then it is column sufficient. Later, Guu and Cottle [24] showed that a \(\mathcal {P}_*\)-matrix is also row sufficient, meaning that all \(\mathcal {P}_*\)-matrices are sufficient. Väliaho [39] proved the other inclusion; therefore, the class of sufficient matrices is equivalent to the class of \(\mathcal {P}_*\)-matrices.

2.1 The Central Path Problem

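The central path problem of LCP can be formulated as follows:

$$\begin{aligned} -M \textbf{x}+\textbf{s}=\textbf{q}, \quad \textbf{x}, \textbf{s} > \textbf{0}, \quad \textbf{x}\textbf{s}=\nu \textbf{e}, \end{aligned}$$ (1)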

where \(\nu >0\) is a given parameter.

The next theorem highlights the importance of the \(\mathcal {P}_*\) matrix class. Illés et al. [27] gave an elementary proof of these statements in an unpublished manuscript in 1997. The proof can be found in [34].

Theorem 2.1

[27, Corollary 4.1] Let us consider a linear complementarity problem with a \(\mathcal {P}_*(\kappa )\) coefficient matrix M. Then, the following three statements are equivalent:

1. \(\mathcal {F}^+ \ne \emptyset \).

2. \(\forall \ \textbf{w} \in \mathbb {R}^n_{+}\) \(\exists ! \ (\textbf{x},\textbf{s}) \in \mathcal {F}^+:\) \(\textbf{xs}=\textbf{w}\).

3. \(\forall \ \nu >0\) \(\exists !\) \((\textbf{x},\textbf{s}) \in \mathcal {F}^+:\) \(\textbf{xs}=\nu \textbf{e}\).

According to the last statement, for \(\mathcal {P}_*(\kappa )\) linear complementarity problems, when \(\mathcal {F}^+ \ne \emptyset \), the central path exists and it is unique. Moreover, as \(\nu \) tends to 0, the solutions of the central path problem (1) converge to a solution of the LCP.

From now on, we assume that the coefficient matrix M of the LCP is sufficient, more precisely \(\mathcal {P}_*(\kappa )\); furthermore, \(\mathcal {F}^+ \ne \emptyset \) and an initial point \((\textbf{x}_0, \textbf{s}_0) \in \mathcal {F}^+\) is given.

3 The Theoretical Background of the Algorithm

As already mentioned in the introduction, our method combines two important results from the literature: the algebraic equivalent transformation (AET) technique proposed by Darvay [9] and the main approach of the long-step IPA introduced by Ai and Zhang [1].

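According to the AET technique, we apply a continuously differentiable function \(\varphi : (\xi , \infty ) \rightarrow \mathbb {R}\) with \(\varphi ' (t)>0\) for all \(t \in (\xi , \infty )\), \(\xi \in [0,1)\) to the central path problem (1):

$$\begin{aligned} -M \textbf{x}+\textbf{s}=\textbf{q}, \quad \textbf{x}, \textbf{s} > \textbf{0}, \quad \varphi \left( \frac{\textbf{xs}}{\nu } \right) =\varphi (\textbf{e}). \end{aligned}$$ (2)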

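If we apply Newton’s method to system (2), we obtain

$$\begin{aligned} -M \varDelta \textbf{x}+\varDelta \textbf{s}=\textbf{0}, \quad \textbf{s} \varDelta \textbf{x}+\textbf{x} \varDelta \textbf{s}=\textbf{a}_{\varphi }, \quad \text {where} \quad \textbf{a}_{\varphi }=\nu \frac{\varphi (\textbf{e})-\varphi \left( \frac{\textbf{xs}}{\nu } \right) }{\varphi ' \left( \frac{\textbf{xs}}{\nu } \right) }. \end{aligned}$$ (3)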

As can be seen from the previous formulation, the right-hand side of the system (3) depends on the choice of the function \(\varphi \), and by modifying \(\varphi \), we can determine different search directions and introduce new interior point algorithms.
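To see how the choice of \(\varphi \) changes the search direction, the following Python sketch evaluates the right-hand side of the Newton system for two transforming functions. It assumes the usual AET form \(\textbf{a}_{\varphi }=\nu \left( \varphi (\textbf{e})-\varphi (\textbf{xs}/\nu ) \right) / \varphi '(\textbf{xs}/\nu )\); the function name `rhs_aet` and the sample data are chosen for illustration.

```python
import math

def rhs_aet(x, s, nu, phi, dphi):
    """Right-hand side a_phi, assuming a_phi = nu*(phi(1) - phi(t))/phi'(t), t = x_i s_i / nu."""
    out = []
    for xi, si in zip(x, s):
        t = xi * si / nu
        out.append(nu * (phi(1.0) - phi(t)) / dphi(t))
    return out

# Identity map: the classical direction with right-hand side nu*e - xs.
phi_id = lambda t: t
dphi_id = lambda t: 1.0

# phi(t) = t - sqrt(t), the function studied in this paper (requires t > 1/4).
phi_sq = lambda t: t - math.sqrt(t)
dphi_sq = lambda t: 1.0 - 1.0 / (2.0 * math.sqrt(t))

x, s, nu = [1.0, 4.0], [1.0, 1.0], 1.0
a_id = rhs_aet(x, s, nu, phi_id, dphi_id)
a_sq = rhs_aet(x, s, nu, phi_sq, dphi_sq)
```

For the second coordinate, the identity map gives \(-3\), while \(\varphi (t)=t-\sqrt{t}\) gives \(-8/3\); both vanish on coordinates that are already centered.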

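One of the important ideas of Ai and Zhang was to decompose the Newton directions into positive and negative parts and use different step lengths for the two components. Namely, we consider the following two systems:

$$\begin{aligned} -M \varDelta \textbf{x}_- +\varDelta \textbf{s}_- =\textbf{0}, \quad \textbf{s} \varDelta \textbf{x}_- +\textbf{x} \varDelta \textbf{s}_- =\textbf{a}_{\varphi }^-, \qquad -M \varDelta \textbf{x}_+ +\varDelta \textbf{s}_+ =\textbf{0}, \quad \textbf{s} \varDelta \textbf{x}_+ +\textbf{x} \varDelta \textbf{s}_+ =\textbf{a}_{\varphi }^+, \end{aligned}$$ (4)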

where \(\textbf{a}_{\varphi }^+\) and \(\textbf{a}_{\varphi }^-\) are the positive and negative parts of the vector \(\textbf{a}_{\varphi }\), respectively.

It is important to notice that the coordinates of \(\varDelta \textbf{x}_+\) are not necessarily nonnegative, since this is the solution of the system with the positive part of \(\textbf{a}_{\varphi }\) on the right-hand side (we have a subscript in the notation, instead of a superscript). The same holds for \(\varDelta \textbf{x}_-\), \(\varDelta \textbf{s}_+\) and \(\varDelta \textbf{s}_-\) as well.

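If \(\alpha _1\) and \(\alpha _2\) are given step lengths, after solving the systems (4), we can calculate the new iterates as

$$\begin{aligned} \textbf{x}(\alpha )=\textbf{x}+\alpha _1 \varDelta \textbf{x}_- + \alpha _2 \varDelta \textbf{x}_+ \quad \text {and} \quad \textbf{s}(\alpha )=\textbf{s}+\alpha _1 \varDelta \textbf{s}_- + \alpha _2 \varDelta \textbf{s}_+. \end{aligned}$$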

To simplify the analysis of interior point methods, we usually work with a scaled version of the Newton-system. To determine the scaled systems from (4), we introduce the following notations:

Let \(D=\text {diag}(\textbf{d})\) and \(\overline{M}=DMD\), then the scaled systems can be written as

where

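In this paper, we focus on the function \(\varphi (t)=t-\sqrt{t}\), which has been introduced by Darvay et al. for linear optimization [14]. In this case,

$$\begin{aligned} \textbf{p}_{\varphi }=\frac{2(\textbf{v}-\textbf{v}^2)}{2\textbf{v}-\textbf{e}}. \end{aligned}$$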

Since we fixed the function \(\varphi \), from now on we simply use the notation \(\textbf{p}\) instead of \( \textbf{p}_{\varphi }\).

Throughout the analysis, we need to ensure that \(\textbf{p}\) is well defined. Therefore, we assume that \(v_i > 1/2 \) is satisfied for all \(i \in \mathcal {I}\).

Because of the decomposition applied in Ai–Zhang type methods, we also introduce the notation for the index sets \(\mathcal {I}_+\) and \(\mathcal {I}_-\). Let \(\mathcal {I}_+=\{ i \in \mathcal {I}: x_i s_i \le \tau \mu \}=\{i \in \mathcal {I}: v_i \le 1\}\), and \(\mathcal {I}_-=\mathcal {I} \setminus \mathcal {I}_+\). Notice that under the assumption \(v_i > 1/2\) for all indices \(i\in \mathcal {I}\), the nonnegativity of a coordinate \(p_i\) is equivalent to \(i \in \mathcal {I}_+\).
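The quantities of this section can be computed directly from a strictly positive pair \((\textbf{x},\textbf{s})\). The sketch below assumes \(\textbf{v}=\sqrt{\textbf{xs}/(\tau \mu )}\) and \(p_i=2(v_i-v_i^2)/(2v_i-1)\), which is what the transformation \(\varphi (t)=t-\sqrt{t}\) yields; the function name and the sample data are illustrative, and indices are zero-based as usual in Python.

```python
import math

def scaled_quantities(x, s, tau):
    """Return v, p and the index sets I_plus, I_minus (zero-based indices)."""
    n = len(x)
    mu = sum(xi * si for xi, si in zip(x, s)) / n
    v = [math.sqrt(xi * si / (tau * mu)) for xi, si in zip(x, s)]
    if min(v) <= 0.5:
        raise ValueError("the technical condition v > e/2 is violated")
    # Assumed closed form of p for phi(t) = t - sqrt(t).
    p = [2.0 * (vi - vi * vi) / (2.0 * vi - 1.0) for vi in v]
    I_plus = [i for i in range(n) if v[i] <= 1.0]
    I_minus = [i for i in range(n) if v[i] > 1.0]
    return v, p, I_plus, I_minus

v, p, I_plus, I_minus = scaled_quantities([0.32, 1.68], [1.0, 1.0], tau=0.5)
```

In this example the first coordinate lies below the \(\tau \mu \) target, so it belongs to \(\mathcal {I}_+\) and its \(p_i\) is positive, while the second belongs to \(\mathcal {I}_-\) with a negative \(p_i\).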

The vector \(\textbf{p}\) has been defined as a componentwise transformation of the vector \(\textbf{v}\); therefore, let p denote the transforming function, namely for which \(p(v_i)=p_i\) holds for all \(v_i \in (1/2, \infty )\), i.e.,

$$\begin{aligned} p(t)=\frac{2(t-t^2)}{2t-1}. \end{aligned}$$

In the analysis, we use some estimations on the function p, namely for all \(t\in (1/2, \infty )\)

From now on, we fix the value of \(\nu \) as \(\tau \mu \), where \(\tau \in (0,1)\) is a given update parameter, and \(\mu =\frac{\textbf{x}^T \textbf{s}}{n}\), i.e., if in the current iteration we are in the point \((\textbf{x},\textbf{s}) \in \mathcal {F}^+\), then our goal is to take a step toward the \(\tau \mu \)-center, that is, toward the solution of the central path problem (1) for \(\nu =\tau \mu \).

4 The Algorithm

The neighborhood we use is based on the approach of Ai and Zhang [1]; however, we slightly modified their definition. To achieve the desired complexity, we limit only the norm of the positive part of the vector \(\textbf{p}\), while the paper of Ai and Zhang uses the norm of the vector \(\textbf{vp}^+\). Furthermore, our definition depends on the handicap of the matrix and \(0< \beta < 1/2\) is a given real number. Due to the properties of the function \(\varphi (t)=t-\sqrt{t}\), we also need to ensure that the technical condition \(\textbf{v}>1/2 \textbf{e}\) is satisfied throughout the iterations; therefore, it is also included in the definition of the neighborhood:

$$\begin{aligned} \mathcal {W}(\tau ,\beta ,\kappa )=\left\{ (\textbf{x},\textbf{s}) \in \mathcal {F}^+: \ \left\| \textbf{p}^+ \right\| \le \frac{\beta }{1+4\kappa }, \ \textbf{v}>\frac{1}{2} \textbf{e} \right\} . \end{aligned}$$

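The wide neighborhood \(\mathcal {N}_{\infty }^- (1-\tau )\) has been introduced by Kojima et al. [30]:

$$\begin{aligned} \mathcal {N}_{\infty }^- (1-\tau )=\left\{ (\textbf{x},\textbf{s}) \in \mathcal {F}^+: \ \textbf{xs} \ge \tau \mu \textbf{e} \right\} . \end{aligned}$$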

The following lemma shows that \(\mathcal {W}(\tau ,\beta ,\kappa )\) is indeed a wide neighborhood.

Lemma 4.1

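Let \(0< \tau <1\) and \(0< \beta < 1/2\) be given parameters, and let \(\gamma =\tau \left( 1-\frac{\beta }{2(1+4\kappa )} \right) ^2\). Then,

$$\begin{aligned} \mathcal {N}_{\infty }^- (1-\tau ) \subseteq \mathcal {W}(\tau ,\beta ,\kappa ) \subseteq \mathcal {N}_{\infty }^- (1-\gamma ) \end{aligned}$$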

holds.

Proof

If \((\textbf{x}, \textbf{s}) \in \mathcal {N}_{\infty }^-(1-\tau )\), then \((\tau \mu - x_i s_i )^+=0\) for all \(i \in \mathcal {I}\), therefore \(\Vert \textbf{p}^+ \Vert =0 < \beta /(1+4\kappa )\). The condition \(\textbf{v}> 1/2 \textbf{e}\) is also satisfied since \(v_i^2=(x_i s_i)/(\tau \mu ) \ge 1 >1/4\) for all \(i \in \mathcal {I}\).

For the other inclusion, let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\) and assume that there exists an index \(i \in \mathcal {I}\) for which \(x_i s_i < \gamma \mu \) holds. In this case, \(v_i^2=\frac{x_i s_i}{\tau \mu } < \frac{\gamma }{\tau }= \left( 1-\frac{\beta }{2(1+4\kappa )} \right) ^2\). Using (5), we get

which is a contradiction. \(\square \)

Remark 4.1

Let \(\tilde{\gamma }=\tau \left( 1-\beta /2 \right) ^2\). Since \(\gamma \ge \tilde{\gamma }\) (with equality for \(\kappa =0\)), \(\mathcal {W}(\tau , \beta , \kappa ) \subseteq \mathcal {N}_{\infty }^- (1-\tilde{\gamma })\) also holds.
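A membership test for the neighborhood can be sketched in Python as follows. It assumes that \(\mathcal {W}(\tau ,\beta ,\kappa )\) is characterized by \(\Vert \textbf{p}^+ \Vert \le \beta /(1+4\kappa )\) together with \(\textbf{v}>\frac{1}{2}\textbf{e}\), as used in the proof of Lemma 4.1, and that \(p_i=2(v_i-v_i^2)/(2v_i-1)\). Feasibility of \((\textbf{x},\textbf{s})\) is not checked here, and the function names are hypothetical.

```python
import math

def in_W(x, s, tau, beta, kappa):
    """Check ||p^+|| <= beta/(1+4*kappa) and v > 1/2 for a positive pair (x, s)."""
    n = len(x)
    mu = sum(xi * si for xi, si in zip(x, s)) / n
    v = [math.sqrt(xi * si / (tau * mu)) for xi, si in zip(x, s)]
    if min(v) <= 0.5:
        return False
    p_plus = [max(2.0 * (vi - vi * vi) / (2.0 * vi - 1.0), 0.0) for vi in v]
    norm = math.sqrt(sum(t * t for t in p_plus))
    return norm <= beta / (1.0 + 4.0 * kappa)

def gamma(tau, beta, kappa):
    """The constant gamma of Lemma 4.1."""
    return tau * (1.0 - beta / (2.0 * (1.0 + 4.0 * kappa))) ** 2

x, s = [0.45, 1.55], [1.0, 1.0]
inside_kappa0 = in_W(x, s, tau=0.5, beta=0.4, kappa=0.0)
inside_kappa1 = in_W(x, s, tau=0.5, beta=0.4, kappa=1.0)
```

The same point belongs to the neighborhood for \(\kappa =0\) but not for \(\kappa =1\), which illustrates how the neighborhood shrinks as the handicap grows. For \(\kappa =0\), the products \(x_i s_i\) also respect the lower bound \(\gamma \mu \) from Lemma 4.1.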

In the next corollary, we give lower and upper bounds on the coordinates of \(\textbf{v}\).

Corollary 4.1

If \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau ,\beta ,\kappa )\) then

1. \( 1-\frac{\beta }{2(1+4\kappa )}\le v_i \le 1\) for all \(i \in \mathcal {I}_+\),

2. \(1<v_i \le \sqrt{n/\tau }\) for all \(i \in \mathcal {I}_-\).

Proof

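The first statement follows from the second inclusion of Lemma 4.1. Furthermore, \(v_i \le \sqrt{n/\tau }\) is satisfied for all \(i \in \mathcal {I}\), since

$$\begin{aligned} v_i^2=\frac{x_i s_i}{\tau \mu } \le \frac{\textbf{x}^T \textbf{s}}{\tau \mu }=\frac{n}{\tau }. \end{aligned}$$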

\(\square \)

Since we have already defined all main elements of our method, we are ready to give its pseudocode:
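The following Python sketch shows how one iteration could be organized from the definitions of Section 3. It is an illustration under stated assumptions, not the authors' implementation: the right-hand sides are taken as \(\tau \mu \textbf{v}\textbf{p}^{\pm }\) with \(p_i=2(v_i-v_i^2)/(2v_i-1)\), the step lengths are \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\beta \tau /n}\) and \(\alpha _2=1\), the stopping rule \(\textbf{x}^T\textbf{s}\le \varepsilon \) and the small dense solver are choices made for this example.

```python
import math

def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    T = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(T[r][c]))
        T[c], T[piv] = T[piv], T[c]
        for r in range(c + 1, n):
            f = T[r][c] / T[c][c]
            for k in range(c, n + 1):
                T[r][k] -= f * T[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (T[r][n] - sum(T[r][k] * z[k] for k in range(r + 1, n))) / T[r][r]
    return z

def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def step(M, x, s, tau, beta, kappa):
    """One Ai-Zhang type step with separate positive and negative directions."""
    n = len(x)
    mu = sum(xi * si for xi, si in zip(x, s)) / n
    v = [math.sqrt(xi * si / (tau * mu)) for xi, si in zip(x, s)]
    p = [2.0 * (vi - vi * vi) / (2.0 * vi - 1.0) for vi in v]
    a_pos = [tau * mu * vi * max(pi, 0.0) for vi, pi in zip(v, p)]
    a_neg = [tau * mu * vi * min(pi, 0.0) for vi, pi in zip(v, p)]
    # Substituting ds = M dx into s*dx + x*ds = a gives (S + X M) dx = a.
    A = [[x[i] * M[i][j] + (s[i] if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    dx_pos, dx_neg = solve(A, a_pos), solve(A, a_neg)
    ds_pos, ds_neg = matvec(M, dx_pos), matvec(M, dx_neg)
    a1 = math.sqrt(beta * tau / n) / (1.0 + 4.0 * kappa)
    a2 = 1.0
    x_new = [x[i] + a1 * dx_neg[i] + a2 * dx_pos[i] for i in range(n)]
    s_new = [s[i] + a1 * ds_neg[i] + a2 * ds_pos[i] for i in range(n)]
    return x_new, s_new

def run(M, x, s, tau, beta, kappa, eps=1e-6, max_iter=10000):
    """Repeat steps until the duality gap x^T s drops below eps."""
    it = 0
    while sum(xi * si for xi, si in zip(x, s)) > eps and it < max_iter:
        x, s = step(M, x, s, tau, beta, kappa)
        it += 1
    return x, s, it

# Hypothetical monotone instance (kappa = 0): q = [-2, -2], so x0 = s0 = e is feasible.
M = [[2.0, 1.0], [1.0, 2.0]]
x, s, iters = run(M, [1.0, 1.0], [1.0, 1.0], tau=0.125, beta=0.5, kappa=0.0)
```

On this small instance the iterates approach the solution \(\textbf{x}=(2/3,2/3)^\top \), \(\textbf{s}=\textbf{0}\). The starting point is perfectly centered, so only the negative part of the direction is active here; a practical implementation would also verify that the starting point lies in \(\mathcal {W}(\tau ,\beta ,\kappa )\).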

5 Analysis of the Algorithm

The next lemma contains some well-known results from the theory of interior point algorithms for the Newton-system of sufficient LCPs. The proof of the first and third statements can be found in [29], and for the second statement, see, for example, [23, Lemma 2].

Lemma 5.1

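Let us consider the following system:

$$\begin{aligned} -M \varDelta \textbf{x}+\varDelta \textbf{s}=\textbf{0}, \quad \textbf{s} \varDelta \textbf{x}+\textbf{x} \varDelta \textbf{s}=\textbf{a}, \end{aligned}$$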

where M is a \(\mathcal {P}_*(\kappa )\)-matrix, \(\textbf{x}, \textbf{s} \in \mathbb {R}^n_+\) and \(\textbf{a} \in \mathbb {R}^n\) are given vectors.

1. The system has a unique solution \((\varDelta \textbf{x},\varDelta \textbf{s})\).

2. The next estimations hold for the solutions of the above system:

$$\begin{aligned} \left\| \varDelta \textbf{x} \varDelta \textbf{s} \right\| _r \le \frac{2^{1/r}+4\kappa }{4} \left\| \frac{\textbf{a}}{\sqrt{\textbf{xs}}}\right\| ^2\quad \text {for}\quad r=1,2,\infty , \end{aligned}$$

where \(1/\infty :=0\).

3. \(-\kappa \left\| \frac{\textbf{a}}{\sqrt{\textbf{xs}}}\right\| ^2 \le \varDelta \textbf{x}^T \varDelta \textbf{s} \le \frac{1}{4} \left\| \frac{\textbf{a}}{\sqrt{\textbf{xs}}}\right\| ^2 \).

We need to prove that after an iteration the decrease in the duality gap is suitable and that the new iterate will also be in the neighborhood. Therefore, we examine the new iterate after taking a Newton-step with step length \(\alpha =(\alpha _1,\alpha _2)\), where \(\alpha _1,\alpha _2 \in (0,1]\) are given.

Let us introduce the following notations:

Using these notations, \(\textbf{x}(\alpha ) \textbf{s} (\alpha )=(\textbf{x}+ \alpha _1 \varDelta \textbf{x}_- + \alpha _2 \varDelta \textbf{x}_+)(\textbf{s}+ \alpha _1 \varDelta \textbf{s}_- + \alpha _2 \varDelta \textbf{s}_+)\) can be written as

Corollary 5.1

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W} (\tau , \beta , \kappa )\) and \(\alpha _1, \alpha _2 \in (0,1]\) be given. Then,

where \(1/\infty :=0\), and

Proof

The vector \(\textbf{dx}(\alpha ) \textbf{ds}(\alpha )\) is the same as \(\tau \mu \varDelta \textbf{x}^{\alpha } \varDelta \textbf{s}^{\alpha }\), where \(\varDelta \textbf{x}^{\alpha }\) and \(\varDelta \textbf{s}^{\alpha }\) are the solutions of the system

since \(\mathcal {I}_{-} \cap \mathcal {I}_{+}=\emptyset \).

If we apply Lemma 5.1 to the above system, we get

since \(\frac{\tau \mu \textbf{v}}{\sqrt{\textbf{xs}}}=\sqrt{\tau \mu }\).

Furthermore, \(\left\| \alpha _1 \textbf{p}^-+ \alpha _2 \textbf{p}^+ \right\| ^2=\alpha _1^2 \left\| \textbf{p}^- \right\| ^2 + \alpha _2^2 \left\| \textbf{p}^+ \right\| ^2\) by the orthogonality of \(\textbf{p}^-\) and \(\textbf{p}^+\). According to the definition of the neighborhood \(\mathcal {W}(\tau ,\beta ,\kappa )\), \( \left\| \textbf{p}^+ \right\| ^2 \le \frac{\beta ^2}{(1+4\kappa )^2}\). Moreover,

In the first inequality we applied (6), and the second follows from (8).

From these estimations, the first statement of the corollary follows. The inequalities regarding the scalar product \( \textbf{dx}(\alpha )^T \textbf{ds}(\alpha )\) can be proved similarly. \(\square \)

We need to give a lower bound on the coordinates of \(\textbf{h}(\alpha )\). The proof of the following two statements remains the same as it was in the LP case [20]; therefore, we do not present them here. Later on, the analysis becomes more complicated than in [20], since the search directions here are not orthogonal.

Lemma 5.2

[20, Lemma 2] \(h_i (\alpha ) \ge \tau \mu \) holds for all \(i \in \mathcal {I}_-\).

In the following lemma, we give a strictly positive lower bound for all coordinates of \(\textbf{h} (\alpha )\).

Lemma 5.3

[20, Lemma 3] If \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), then \(\textbf{h}(\alpha ) \ge \gamma \mu \textbf{e}\), and consequently \(\textbf{h}(\alpha ) > \textbf{0}\).

From now on, we use the step lengths \(\alpha _1=\frac{1}{1+4 \kappa } \sqrt{\frac{\beta \tau }{n}}\) and \(\alpha _2=1\). From \(\alpha _2=1\), it follows that

holds for all indices, not just for the ones in \(\mathcal {I}_-\). Indeed, by the inequality (7), we get \(h_i(\alpha )=\tau \mu (v_i^2+\alpha _2 v_ip_i)\ge \tau \mu (1-(1-\alpha _2)v_ip_i)\) for all \(i\in \mathcal {I}_+\).

We have to prove that the new iterates are strictly positive, i.e., \(\textbf{x}(\alpha )>\textbf{0}\) and \(\textbf{s}(\alpha )>\textbf{0}\) hold.

Lemma 5.4

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), \(\alpha _1=\frac{1}{1+4 \kappa } \sqrt{\frac{\beta \tau }{n}}\) and \(\alpha _2=1\). Then,

Proof

By applying (9) and then Corollary 5.1, we get the following:

\(\square \)

To prove that the new iterates (\(\textbf{x}(\alpha )\) and \(\textbf{s}(\alpha )\)) are strictly positive, we can apply Proposition 3.2 by Ai and Zhang [1]. They analyze the case of monotone LCPs, but the properties of the coefficient matrix do not have any role in their proof, and therefore, it can be used in this more general setting as well.

Proposition 5.1

[1, Proposition 3.2] Let \((\textbf{x,s})\in \mathcal {F}^+\) and \(( \varDelta \textbf{x}, \varDelta \textbf{s})\) be the solution of the system

If \(\textbf{z}+\textbf{xs}>0\) and \((\textbf{x}+t_0 \varDelta \textbf{x})(\textbf{s}+t_0 \varDelta \textbf{s})>0\) holds for some \(t_0 \in (0,1]\), then \(\textbf{x}+t \varDelta \textbf{x}>0\) and \(\textbf{s}+t \varDelta \textbf{s}>0\) for all \(t \in (0,t_0]\).

To prove the positivity of the vectors \(\textbf{x}(\alpha )\) and \(\textbf{s}(\alpha )\), we apply Proposition 5.1 with \(\textbf{z}=\tau \mu (\alpha _1\textbf{v}\textbf{p}^-+\alpha _2\textbf{v}\textbf{p}^+)\). Since \(\textbf{z}+\textbf{x}\textbf{s}=\textbf{h}(\alpha )>0\) by Lemma 5.3, it is enough to prove that their Hadamard product is positive. This is satisfied for all \(\beta \in [0,1]\), because in this case

holds.

5.1 Estimation of the Change in the Duality Gap

The next two lemmas examine the change in the duality gap \(\mu (\alpha )=\frac{\textbf{x}(\alpha )^T \textbf{s}(\alpha )}{n}\) after the Newton-step.

Lemma 5.5

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), \(\alpha _1=\frac{1}{1+4 \kappa } \sqrt{\frac{\beta \tau }{n}}\) and \(\alpha _2=1\). Then,

Proof

By the definition of \(\mu (\alpha )\),

First, we give an upper bound on the expression \(\textbf{v}^T \textbf{p}^+\):

The first equality holds since the vector \(\textbf{v} \textbf{p}^+\) is nonnegative, and the first estimation can be shown using the Cauchy–Schwarz inequality. The second inequality can be verified using the property that \(v_i \le 1\) when \(i \in \mathcal {I}_+\), and for the last estimation, we used the definition of the neighborhood \(\mathcal {W}(\tau ,\beta , \kappa )\).

We can estimate the term \( \textbf{v}^T \textbf{p}^-\) using \(v_i>1\) for all \(i \in \mathcal {I}_-\):

Combining (11) and (12), and using Corollary 5.1, we obtain

Since \(\alpha _2=1=(1+4\kappa ) \sqrt{\frac{n}{\beta \tau }} \alpha _1\), we get

\(\square \)

This result shows that the step length \(\alpha _1\) is responsible for the decrease in the duality gap in our analysis, i.e., by choosing its value properly, we can prove the convergence and desired complexity of the method. According to our estimation (13), the terms multiplied by \(\alpha _2\) increase the duality gap, but this step length has an important role in ensuring that the new iterates remain in the neighborhood \(\mathcal {W}(\tau ,\beta ,\kappa )\), as it will be discussed later.

For the correctness of our algorithm, we need to ensure that the duality gap decreases after every iteration.

Corollary 5.2

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), \(\alpha _1=\frac{1}{1+4 \kappa } \sqrt{\frac{\beta \tau }{n}}\), \(\alpha _2=1\), \(\beta \in \left( 0,\frac{1}{2} \right] \), \(\tau \in \left( 0,\frac{1}{4} \right] \) and \(\beta \tau \le \frac{1}{16}\). Then,

holds.

Proof

The following expression is monotone decreasing both in \(\tau \) and \(\beta \); thus, considering the upper bounds \(\beta \le \frac{1}{2}\), \(\tau \le \frac{1}{4}\) and \(\beta \tau \le \frac{1}{16}\), we get a positive lower bound on it:

Therefore, the statement holds by Lemma 5.5. \(\square \)
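The parameter conditions and the step lengths used in the analysis are easy to encode. The following sketch uses hypothetical function names; it only restates the conditions \(\beta \in (0,1/2]\), \(\tau \in (0,1/4]\), \(\beta \tau \le 1/16\) and the formulas \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\beta \tau /n}\), \(\alpha _2=1\).

```python
import math

def parameters_ok(beta, tau):
    """Conditions on beta and tau required in Corollary 5.2."""
    return 0.0 < beta <= 0.5 and 0.0 < tau <= 0.25 and beta * tau <= 1.0 / 16.0

def step_lengths(beta, tau, kappa, n):
    """Return (alpha_1, alpha_2) for admissible parameters."""
    if not parameters_ok(beta, tau):
        raise ValueError("beta and tau violate the conditions of the analysis")
    alpha1 = math.sqrt(beta * tau / n) / (1.0 + 4.0 * kappa)
    return alpha1, 1.0

alphas_monotone = step_lengths(0.5, 0.125, kappa=0.0, n=4)
alphas_kappa1 = step_lengths(0.5, 0.125, kappa=1.0, n=4)
```

Note that the product condition is not redundant: \(\beta =1/2\) and \(\tau =1/4\) satisfy the individual bounds but give \(\beta \tau =1/8>1/16\). The example also shows how \(\alpha _1\) shrinks by the factor \(1+4\kappa \) as the handicap grows.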

To ensure that the iterates stay in the neighborhood \(\mathcal {W}(\tau , \beta , \kappa )\), we need a lower bound on the duality gap after a Newton-step.

Lemma 5.6

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), then

Proof

where we used the estimation

that follows from (6) and (8). \(\square \)

5.2 The New Iterates Stay in the Neighborhood \(\mathcal {W}(\tau , \beta , \kappa )\)

To guarantee that the new points after taking the Newton-step stay in the neighborhood \(\mathcal {W}(\tau , \beta , \kappa )\), we need to choose the values of the parameters \(\tau \) and \(\beta \) properly.

First, we need to ensure that all coordinates of the vector \(\textbf{v}(\alpha )=\sqrt{\frac{\textbf{x}(\alpha )\textbf{s}(\alpha )}{\tau \mu (\alpha )}}\) are greater than 1/2.

Lemma 5.7

Let \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta , \kappa )\), \(\alpha _1=\frac{1}{1+4 \kappa } \sqrt{\frac{\beta \tau }{n}}\) and \(\alpha _2=1\). Then,

Proof

Using Lemma 5.4 and Corollary 5.2, we obtain

To prove the statement, it is enough to show that

which is satisfied for all \(\beta \in [0,1]\). \(\square \)

Finally, we need to show that \(\left\| \textbf{p}(\alpha )^+ \right\| \le \frac{\beta }{1+4\kappa }\) holds. To be able to prove this, we need the following technical lemma:

Lemma 5.8

Let \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\frac{\beta \tau }{n}}\) and \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta ,\kappa )\). Then

Proof

By Lemma 5.2, we have \(\tau \mu (\alpha )- h_i(\alpha ) \le 0\) for all \(i \in \mathcal {I}_-\), therefore, we need to consider indices only from \(\mathcal {I}_+\).

From (7), it follows that

For all \(i \in \mathcal {I}_+\), \(h_i(\alpha )=\tau \mu (v_i^2 + \alpha _2 v_i p_i)\). Using this definition, Corollary 5.2 and (15), we obtain

This, together with the definition of \(\mathcal {W}(\tau ,\beta ,\kappa )\), yields

This result shows that if we fix the value of \(\alpha _2\) as 1, then \(\tau \mu (\alpha )- h_i(\alpha ) \le 0\) holds for the indices from \(\mathcal {I}_+\) as well, i.e., \(\Vert (\tau \mu (\alpha )\textbf{e}-\textbf{h}(\alpha ))^+ \Vert =0\) in this case. This also follows from (9).

Lemma 5.9

Let \(\beta \in \left( 0,\frac{1}{2} \right] \), \(\tau \in \left( 0,\frac{1}{4} \right] \) and \(\beta \tau \le \frac{1}{16}\). If \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\frac{\beta \tau }{n}}\), \(\alpha _2=1\) and \((\textbf{x}, \textbf{s}) \in \mathcal {W}(\tau , \beta ,\kappa )\), then the new point \((\textbf{x}(\alpha ), \textbf{s}(\alpha )) \in \mathcal {W}(\tau , \beta , \kappa )\).

Proof

We need to prove that

We can give an upper bound on the norm in the following way:

Let \(q: \left( \frac{1}{2}, \infty \right) \rightarrow \mathbb {R}\) and \(q(t)=\frac{2t}{2t^2+t-1}\). This function is strictly decreasing in its domain; therefore, using the discussed lower bound of \(\textbf{v}(\alpha )\) (14) and substituting the upper bound of \(\beta \), the first term in (16) can be estimated as

For the other term, we use Corollary 5.1 and Lemma 5.8:

From Lemma 5.6, we have

since

for all \(\kappa \) values. Using the previous estimation and using \(\alpha _2=1\), from (18) we obtain

This upper bound is a monotone increasing function of \(\beta \) and \(\tau \), so using their maximal values and the assumption that \(\beta \tau \le \frac{1}{16}\), we can get the following upper bound

therefore, the new point is in the neighborhood \(\mathcal {W}(\tau ,\beta ,\kappa )\). \(\square \)

5.3 The Complexity of the New Algorithm

Theorem 5.1

Let \(\beta \in \left( 0,\frac{1}{2}\right] \), \(\tau \in \left( 0,\frac{1}{4}\right] \) such that \(\beta \tau \le \frac{1}{16}\), furthermore \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\frac{\beta \tau }{n}}\), \(\alpha _2=1\), and suppose that a starting point \((\textbf{x}_0, \textbf{s}_0) \in \mathcal {W}(\tau ,\beta ,\kappa )\) is given. The algorithm then provides an \(\varepsilon \)-optimal solution in

iterations.

Proof

According to Lemma 5.5, the following holds for the duality gap in the kth iteration:

By rearranging, we get

Therefore, \(\textbf{x}_k^T \textbf{s}_k \le \varepsilon \) holds if

is satisfied.

Taking the natural logarithm of both sides yields

Using the inequality \(-\log (1-\vartheta ) \ge \vartheta \), it is enough to prove that

The last inequality is satisfied when

and this proves the statement. \(\square \)
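The chain of implications in this proof follows a standard pattern, which can be sketched as follows. Suppose, as an assumption made only for this illustration, that Lemma 5.5 yields a contraction of the form \(\textbf{x}_{k+1}^T \textbf{s}_{k+1} \le (1-\vartheta )\, \textbf{x}_k^T \textbf{s}_k\) with some \(\vartheta \in (0,1)\); here \(\vartheta \) is a placeholder for the actual reduction factor, which is not restated. Then \(\textbf{x}_k^T \textbf{s}_k \le (1-\vartheta )^k\, \textbf{x}_0^T \textbf{s}_0\), and

$$\begin{aligned} (1-\vartheta )^k\, \textbf{x}_0^T \textbf{s}_0 \le \varepsilon \;&\Longleftrightarrow \; -k \log (1-\vartheta ) \ge \log \frac{\textbf{x}_0^T \textbf{s}_0}{\varepsilon } \\ &\Longleftarrow \; k\, \vartheta \ge \log \frac{\textbf{x}_0^T \textbf{s}_0}{\varepsilon } \;\Longleftrightarrow \; k \ge \frac{1}{\vartheta } \log \frac{\textbf{x}_0^T \textbf{s}_0}{\varepsilon }. \end{aligned}$$

Under the additional assumption that \(\vartheta \) is a constant multiple of the step length \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\frac{\beta \tau }{n}}\), a bound of this type is of order \((1+4\kappa )\sqrt{n}\, \log \frac{\textbf{x}_0^T \textbf{s}_0}{\varepsilon }\).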

6 Numerical Results

We tested and analyzed our algorithm from four different aspects. Usually, the handicap of the coefficient matrix is not known in advance, and the only algorithm that has been introduced to determine it has exponential running time [40]. Thus, in most cases, the theoretical algorithms for solving sufficient linear complementarity problems cannot be implemented directly. Moreover, we cannot carry out computations with an arbitrarily large handicap, because the resulting step length would be so small that the computer would treat it as zero. Therefore, first, we show numerical results for a greedy version of the algorithm (for most IPAs, such variants are the ones implemented in practice). Second, we present results for the theoretical algorithm on test problems where we know the exact value of the handicap of the coefficient matrix. We make some interesting observations and raise questions whose answers could lead to a better analysis of the method. Third, we compare the greedy algorithm with \(\varphi (t)=t\) (its theoretical version was investigated by Potra [38]) and with \(\varphi (t)=t-\sqrt{t}\) on larger instances. We will see that the use of the latter function is also justified in practice. Finally, we compare the running times of the greedy algorithm for different settings of the parameters \(\beta \) and \(\tau \).

6.1 Greedy Algorithm

For the numerical tests, we implemented the following greedy variant of our algorithm:

As can be seen from the pseudocode, in this case, we ignore the value of the handicap and take the largest step so that the new iterates remain in the neighborhood \(\mathcal {W}(\tau , \beta ,0)\). For safety reasons, we also check whether the duality gap actually decreases after an iteration (it is known from the theory of sufficient LCPs that the duality gap is not monotonically decreasing in the value of \(\alpha _1\), and we take a larger step than the one that we proved the convergence for). During our numerical tests, this latter condition was never restrictive, i.e., the step length was always determined by the constraint on the neighborhood.
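As a rough illustration of the step-length rule described in this paragraph, the selection of \(\alpha _1\) could be organised as a simple backtracking search. The sketch below is not the authors' MATLAB implementation: the callables `new_point` and `in_neighborhood`, the shrink factor and the threshold `alpha_min` are hypothetical, and backtracking on a geometric grid only approximates the largest admissible step.

```python
import numpy as np


def greedy_step(x, s, new_point, in_neighborhood, shrink=0.9, alpha_min=1e-12):
    """Backtrack from alpha1 = 1 until the trial point is acceptable.

    new_point(alpha1) -> (x_new, s_new) and in_neighborhood(x, s) -> bool are
    hypothetical callables supplied by the caller (assumptions of this sketch).
    Returns the accepted alpha1, or None if no step above alpha_min is accepted.
    """
    gap = float(x @ s)
    alpha1 = 1.0
    while alpha1 >= alpha_min:
        x_new, s_new = new_point(alpha1)
        # Accept only if the trial point stays in the neighborhood
        # and the duality gap actually decreases.
        if in_neighborhood(x_new, s_new) and float(x_new @ s_new) < gap:
            return alpha1
        alpha1 *= shrink
    return None


# Toy usage: a damped step towards the origin, with positivity as a stand-in
# for the neighborhood test (both are illustrative, not the paper's definitions).
x = s = np.ones(3)
alpha = greedy_step(
    x,
    s,
    new_point=lambda a: ((1.0 - a) * x, (1.0 - a) * s),
    in_neighborhood=lambda xn, sn: bool(np.all(xn > 0) and np.all(sn > 0)),
)
print(alpha)
```

Returning `None` when the step falls below `alpha_min` mirrors, in a simplified way, the situation reported below in which the initial step length is too small to be handled numerically.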

We tested our method for both sufficient and non-sufficient LCPs, even though in the second case we have no theoretical proof that interior point methods work (in general it is not necessarily true that the central path exists and it is unique).

The sufficient matrices that we used are the following (square matrices of order n):

- ENM_SU: 82 matrices of order \(3\le n\le 10\), constructed by E.-Nagy [18].

- MGS_SU: 58 matrices of order \(10\le n\le 700\), generated by Morapitiye and Illés [25].

- EV_SU: 90 sufficient matrices with \(n= 1000,\;5000,\;10000\), constructed by E.-Nagy and Varga [18].

- Lower triangular \(\mathcal {P}\)-matrices (all of their principal minors are positive) introduced by Csizmadia:

$$\begin{aligned} C = \begin{pmatrix} 1 &{} 0 &{} 0 &{} \cdots &{} 0\\ -1 &{} 1 &{} 0 &{} \cdots &{} 0\\ -1 &{} -1 &{} 1 &{}\cdots &{}0\\ \vdots &{} \vdots &{} \vdots &{} \ddots &{} \vdots \\ -1&{}-1&{}-1&{}\cdots &{}1 \end{pmatrix}. \end{aligned}$$

It was shown by E.-Nagy that the handicap of this matrix is exponential in its size; more precisely, \(\kappa =2^{2n-8}-0.25\) [19].
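To illustrate why an exponential handicap makes the theoretical step length numerically unusable, the following sketch evaluates \(\alpha _1=\frac{1}{1+4\kappa }\sqrt{\frac{\beta \tau }{n}}\) from Theorem 5.1 for Csizmadia-matrices of a few sizes, using the handicap formula quoted above. The function names and the test `1.0 + alpha1 == 1.0` (used here as a simple indicator that the step is lost in double precision) are choices made for this illustration only.

```python
import math


def csizmadia_handicap(n: int) -> float:
    """Handicap of the n x n Csizmadia-matrix, using the formula quoted in the text."""
    return 2.0 ** (2 * n - 8) - 0.25


def theoretical_step(kappa: float, beta: float, tau: float, n: int) -> float:
    """Step length alpha_1 = sqrt(beta * tau / n) / (1 + 4 * kappa)."""
    return math.sqrt(beta * tau / n) / (1.0 + 4.0 * kappa)


beta = tau = 0.25  # parameter values used in the numerical tests
for n in (5, 10, 50, 200):
    kappa = csizmadia_handicap(n)
    alpha1 = theoretical_step(kappa, beta, tau, n)
    # Illustrative criterion: the step is lost if adding it to 1.0 changes nothing.
    lost = (1.0 + alpha1 == 1.0)
    print(f"n={n:4d}  kappa={kappa:.3e}  alpha1={alpha1:.3e}  lost={lost}")
```

For small orders the step is tiny but representable, while for larger orders it falls far below the relative precision of double-precision arithmetic.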

The non-sufficient matrices that we examined are also available on the webpage [18]:

- ENM_NSU: 80 instances of order \(3\le n\le 10\).

We calculated the right-hand sides as \(-M\textbf{e}+\textbf{e}=\textbf{q}\); therefore, \(\textbf{x}_0=\textbf{e}\) and \(\textbf{s}_0=\textbf{e}\) are feasible starting vectors and are in the neighborhood \(\mathcal {W}(\tau , \beta ,0)\).

For the numerical tests, we used the settings \(\beta =\tau =0.25\) and \(\varepsilon =10^{-5}\). Table 1 shows our numerical results for different sets of test problems. These are average values, except for Csizmadia-matrices.

The sufficient LCPs determined by using the ENM_SU and MGS_SU matrices could be solved easily; the running time was less than 1 second even for the largest, \(700 \times 700\) problem instance.

We could only solve problem instances with Csizmadia-matrices up to the size \(150 \times 150\), and the number of iterations is larger than the average calculated for the MGS_SU instances of similar size. In the case of the \(200 \times 200\) problem instance, the step length \(\alpha _1\) at the first iteration is too small and cannot be handled numerically.

To understand this behavior better, we solved the same problems again (\(\textbf{q}=-M\textbf{e}+\textbf{e}\)) using different starting points. Let \(\textbf{x}_0=\lambda \textbf{e}\) (\(\lambda \in (0,1]\)), and \(\textbf{s}_0=\textbf{q}+M\textbf{x}_0\), namely \((s_0)_i=1+(i-2)\cdot (1-\lambda )\) for all \(i\in \{1, \ldots ,n \}\). For \(\lambda =1\), we get back the case \(\textbf{x}_0=\textbf{s}_0=\textbf{e}\).
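The closed form of \(\textbf{s}_0\) can be verified directly from the structure of the Csizmadia-matrix \(C\) (taking \(M=C\)), whose ith row contains \(i-1\) entries equal to \(-1\) followed by a 1 on the diagonal:

$$\begin{aligned} (C\textbf{e})_i&= 1-(i-1) = 2-i, \\ q_i&= -(C\textbf{e})_i+1 = i-1, \\ (s_0)_i&= q_i+\lambda (C\textbf{e})_i = (i-1)+\lambda (2-i) = 1+(i-2)(1-\lambda ). \end{aligned}$$

In particular, \((s_0)_1=\lambda \) and \((s_0)_i \ge 1\) for \(i \ge 2\), so \(\textbf{s}_0>\textbf{0}\) for every \(\lambda \in (0,1]\).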

As can be observed from Table 2, for the smaller problem instances the required number of iterations decreases as we decrease the value of \(\lambda \). However, it is not possible to choose arbitrarily small values for \(\lambda \) if we want to have a special starting point in the neighborhood \(\mathcal {W}(\tau ,\beta ,0)\). Therefore, for larger problems, this approach is impractical but shows that the frequently applied starting point \(\textbf{x}_0=\textbf{s}_0=\textbf{e}\) may not be the best choice for the problem instances generated using the Csizmadia-matrices.

We also examined another set of LCPs using the Csizmadia-matrices, but in this case, we modified not just the starting points but the right-hand side vector \(\textbf{q}\) as well. Let \(\textbf{x}_0=\textbf{e}\) and \(\textbf{s}_0=\eta \textbf{e}\) (\(\eta \ge 1\)), and \(\textbf{q}=-M\textbf{e}+\eta \textbf{e}\). For \(\eta =1\), we get back our original case \(\textbf{x}_0=\textbf{s}_0=\textbf{e}\). Since \(\textbf{q} \ge \textbf{0}\) holds for the modified LCPs as well, their solution is still \(\textbf{x}=\textbf{0}\).

As can be seen from Table 3, by increasing \(\eta \) it is possible to solve significantly larger problem instances. In every failed case, the cause is an initial step length \(\alpha _1\) that is too small.

Even though our analysis only works for sufficient LCPs, we also tested the algorithm for non-sufficient problem instances. Surprisingly, we could solve almost all problems correctly and the behavior of the algorithm was quite similar to the sufficient case. There were only two problematic instances out of the 80 (ENM_NSU_10_07 and ENM_NSU_10_08); the LCPs generated using these matrices could not be solved by our method. (Here also, the step length \(\alpha _1\) at the first iteration was too small and could not be handled numerically.) The results are shown in the third part of Table 1.

6.2 Observations Regarding the Coordinates of the Vector \(\textbf{v}\)

To be able to further examine the behavior of our theoretical algorithm, we calculated the handicap for some of the smaller test matrices. This way we could run the algorithm exactly as it is described in our analysis and make some important observations that raise interesting questions regarding the theoretical analysis.

We used the parameter settings \(\beta =\tau =0.25\) and \(\varepsilon =10^{-5}\). First we used the starting points \(\textbf{x}_0=\textbf{e}\), \(\textbf{s}_0=\textbf{e}\) (and calculated \(\textbf{q}\) as \(\textbf{q}=-M\textbf{e}+\textbf{e}\)). The numerical results are summarized in Table 4 (for instances from dimension 5, 6 and 7), and Fig. 1 shows the change in the coordinates of the vector \(\textbf{v}\) during the iterations. As expected, the numbers of iterations are significantly larger than in the greedy case, and they depend on the value of the handicap (since the step length depends on the handicap as well).

In the case of the Csizmadia-matrix, Fig. 1 shows that one of the coordinates (the first one) converges remarkably more slowly than the others, due to the properties of the Newton directions. This is the main reason why we experienced numerical issues with these starting points.

With these starting points, at the beginning \(v_i=\sqrt{\frac{1}{\tau }}=2\) holds for all coordinates of the vector \(\textbf{v}\). It can be seen from Table 4 and Fig. 1 that the coordinates remain in a really narrow interval around 2. However, in our analysis we use the upper bound \(v_i \le \sqrt{\frac{n}{\tau }}\), according to Corollary 4.1. The value of this upper bound for \(n=7\) and \(\tau =0.25\) is \(\sqrt{28} \approx 5.2915\), which is significantly larger than what we experienced in practice, even in this small dimension.
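The two numbers in this comparison can be reproduced with a few lines of code. The sketch below assumes the scaling \(\textbf{v}=\sqrt{\textbf{x}\textbf{s}/(\tau \mu )}\) with \(\mu =\textbf{x}^T\textbf{s}/n\); this definition is not restated in this section, but it is consistent with \(v_i=1/\sqrt{\tau }\) at \(\textbf{x}_0=\textbf{s}_0=\textbf{e}\) and with the bound \(v_i \le \sqrt{n/\tau }\). The function name `scaled_v` is hypothetical.

```python
import numpy as np


def scaled_v(x: np.ndarray, s: np.ndarray, tau: float) -> np.ndarray:
    """Assumed definition: v = sqrt(x * s / (tau * mu)) with mu = x^T s / n."""
    mu = float(x @ s) / x.size
    return np.sqrt(x * s / (tau * mu))


n, tau = 7, 0.25
e = np.ones(n)
v0 = scaled_v(e, e, tau)        # well-centered start x0 = s0 = e
upper_bound = np.sqrt(n / tau)  # the bound sqrt(n / tau) quoted in the text

print(v0)           # every coordinate equals 1 / sqrt(tau) = 2
print(upper_bound)  # sqrt(28), approximately 5.2915
```

Under this definition the bound follows from \(x_i s_i \le \textbf{x}^T\textbf{s} = n\mu \), and it is attained only when a single coordinate carries the whole duality gap, which is far from the well-centered situation observed in the tests.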

Furthermore, all coordinates of \(\textbf{v}\) are greater than 1; therefore, all iterates remain in the narrower neighborhood \(\mathcal {N}_{\infty }^-(1-\tau )\) and never actually get to a point from the set \(\mathcal {W}(\tau ,\beta ,\kappa )\setminus \mathcal {N}_{\infty }^-(1-\tau )\), i.e., in practice the algorithm works in a \(\kappa \)-independent neighborhood when the starting points are well centered.

We observed the same phenomenon for linear programming problems while preparing the numerical tests for our recent paper [20], where we had test problems with several thousands of variables. There we applied the self-dual embedding technique; therefore, we could use the starting point \(\textbf{x}_0=\textbf{e}\), \(\textbf{s}_0=\textbf{e}\), similar to the LCP case examined in this paper, i.e., the starting points were well centered in both cases. Based on these numerical tests, the size of this interval around \(1/\sqrt{\tau }\) seems to be independent of the problem size.

This raises the question whether it would be possible to give constant lower and upper bounds on the coordinates of \(\textbf{v}\), assuming that the starting point is well centered, i.e., to show that the algorithm is convergent and has the desired complexity using the neighborhood \( \mathcal {N}_\textbf{v}( \underline{\nu }, \bar{\nu })=\{ (\textbf{x}, \textbf{s}) \in \mathcal {F}^+: \underline{\nu } \textbf{e} \le \textbf{v} \le \bar{\nu } \textbf{e} \}\), where \(1 \le \underline{\nu } \le 1/\sqrt{\tau } \le \bar{\nu }\) are given parameters.

The coordinates of \(\textbf{v}\) for the \(5 \times 5\) Csizmadia-problems with three different (not well-centered) starting points are shown in Fig. 2. We kept the right-hand side as \(\textbf{q}=-M\textbf{e}+\textbf{e}\). The starting points were \(\textbf{x}_0=0.9\textbf{e}\) and \(\textbf{s}_0=[0.9,1,1.1,1.2,1.3]^T\), \(\textbf{x}_0=[0.8,0.6,0.5,0.5,0.6731]^T\) and \(\textbf{s}_0=[0.8,0.8,1.1,1.6,2.2731]^T\), \(\textbf{x}_0=[1.7,1.7^2,1.7^3,1.7^4,1.7^5]^T\) and \(\textbf{s}_0=[1.700,2.1900,2.3230,1.8491,0.3435]^T\), respectively. Here the intervals around 2 become narrower as the algorithm proceeds, and the coordinates are concentrated around this value in the end.
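As a sanity check, the three starting points listed above can be verified to satisfy \(\textbf{s}_0=M\textbf{x}_0+\textbf{q}\) up to the four-decimal rounding used in the text. The sketch also reports the range of the coordinates of \(\textbf{v}\) at each starting point, again assuming the scaling \(\textbf{v}=\sqrt{\textbf{x}\textbf{s}/(\tau \mu )}\) with \(\mu =\textbf{x}^T\textbf{s}/n\); the helper name `csizmadia` is hypothetical.

```python
import numpy as np


def csizmadia(n: int) -> np.ndarray:
    """Lower triangular matrix with 1 on the diagonal and -1 below it."""
    return np.eye(n) - np.tril(np.ones((n, n)), k=-1)


n, tau = 5, 0.25
M = csizmadia(n)
e = np.ones(n)
q = -M @ e + e

# The three starting points listed in the text (s0 is rounded to four decimals there).
starts = [
    (0.9 * e, np.array([0.9, 1.0, 1.1, 1.2, 1.3])),
    (np.array([0.8, 0.6, 0.5, 0.5, 0.6731]), np.array([0.8, 0.8, 1.1, 1.6, 2.2731])),
    (1.7 ** np.arange(1, n + 1), np.array([1.7, 2.19, 2.323, 1.8491, 0.3435])),
]

residuals = []
v_ranges = []
for x0, s0 in starts:
    # Feasibility residual of s0 = M x0 + q.
    residuals.append(float(np.max(np.abs(M @ x0 + q - s0))))
    mu = float(x0 @ s0) / n
    v = np.sqrt(x0 * s0 / (tau * mu))  # assumed definition of v
    v_ranges.append((float(v.min()), float(v.max())))

for r, (lo, hi) in zip(residuals, v_ranges):
    print(f"residual={r:.1e}  v in [{lo:.3f}, {hi:.3f}]")
```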

6.3 Comparison with the Case \(\varphi (t)=t\)

To examine the practical role of applying the transforming function \(\varphi (t)=t-\sqrt{t}\), we compared our numerical results with the case \(\varphi (t)=t\), i.e., when the AET technique is not applied. As mentioned before, Potra [38] proposed an Ai–Zhang type method for \(\mathcal {P}_*(\kappa )\)-HLCPs without the AET technique, i.e., with \(\varphi (t)=t\).

Since the sizes and, therefore, the running times were very small for the known problem instances, we generated larger sufficient matrices to compare the performance of the two methods. The results are shown in Table 5. The shorter running time for each instance is highlighted in bold. The number of iterations was the same in almost all cases, and the average running times were also similar. However, in terms of running time, the function \(t-\sqrt{t}\) performed slightly better on the \(1000\times 1000\) and \(10{,}000 \times 10{,}000\) test problems.

Based on our current numerical results and on other results for different types of algorithms from the AET literature, it cannot be stated that, in general, one transforming function outperforms another. For different problem instances, different functions can give the best results. In the case of the instance EV_SU_10000_1, the running time for \(\varphi (t)=t\) was more than 66 s shorter than for \(\varphi (t)=t-\sqrt{t}\). However, in the case of EV_SU_10000_11, the running time was more than 67 s shorter for \(\varphi (t)=t-\sqrt{t}\). It is an important open question in connection with the AET technique to determine which transforming function would give the best results for a given problem instance.

6.4 The Neighborhood and Update Parameters

Usually, in the analysis of Ai–Zhang type methods, the values of the neighborhood and update parameters are chosen to be equal to make it easier to check certain conditions. This subsection aims to examine whether this is a good choice in practice.

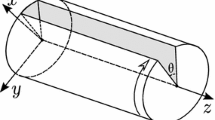

We can determine all parameter pairs \((\beta , \tau )\) that are suitable from the point of view of our analysis by using the upper bounds obtained in Lemmas 5.5 and 5.9. Based on these results, the blue area in Fig. 3 shows values of the parameters for which the duality gap decreases, and for the values from the green area, the new iterates remain in the neighborhood \(\mathcal {W}(\tau , \beta , \kappa )\). Therefore, the parameter values for which both conditions are satisfied (i.e., the duality gap decreases and the new iterates remain in \(\mathcal {W}\)) are in the intersection of the blue and green areas. Since we applied upper bounds in the calculations, these are subsets of the actual parameter settings that would be suitable for the algorithm.

We examined five different parameter settings for LCPs. Table 6 shows the average iteration numbers and running times for the LCPs constructed using the previously mentioned sufficient and non-sufficient matrices, and calculating the right-hand side of the system as \(\textbf{q}=-M\textbf{e}+\textbf{e}\). In this way, the starting point \(\textbf{x}_0=\textbf{e}\) and \(\textbf{s}_0=\textbf{e}\) is suitable in all cases. The value of the precision parameter was \(\varepsilon =10^{-5}\).

The minimum number of iterations and the smallest running time are highlighted in bold letters in each row. As can be seen from Table 6, the best average iteration numbers and running times were both achieved in the first case for most test problems, when the value of the neighborhood parameter was relatively large, and the value of the update parameter was small. The settings applied in the theoretical analysis are shown in the last two columns for reference.

For the Csizmadia problem instances, the number of iterations is the smallest for the first setting, similar to the previous problems. However, the running time is slightly smaller in the case of \(\beta =0.5\) and \(\tau =0.2\) in most cases. We performed similar tests for LP problems from the Netlib library, where we found that the best setting was \(\beta =0.5\) and \(\tau =0.2\) in almost all cases. This difference requires further examination.

The results were similar to those of the ENM_SU, ENM_NSU, and MGS_SU instances; in almost all cases, the first parameter setting gave the best results. We ran these numerical tests for some of the larger EV test instances mentioned in the previous subsection, and these experiments also gave similar results.

7 Conclusion

We introduced a new Ai–Zhang type long-step interior point method for \(\mathcal {P}_*(\kappa )\)-LCPs. The new IPA uses the AET technique with the function \(\varphi (t)=t-\sqrt{t}\). We proved that the method is convergent and has the best known iteration complexity.

An interesting question regarding the analysis is whether it would be possible to carry it out in a \(\kappa \)-independent neighborhood. Potra [38] could prove the convergence and best known complexity of an Ai–Zhang type IPA for LCPs in a \(\kappa \)-independent neighborhood, but he did not apply the AET technique, i.e., he considered the case of \(\varphi (t)=t\).

In the near future, we would like to investigate Ai–Zhang type methods with different transforming functions for \(\mathcal {P}_*(\kappa )\)-LCPs. These two existing results suggest that there are some functions where a \(\kappa \)-independent neighborhood can be applied, and for other functions, the convergence and desired complexity can only be proved if we assume the \(\kappa \)-dependency of the neighborhood.

We implemented both the theoretical and a greedy variant of the IPA in MATLAB and tested the greedy variant on both sufficient and non-sufficient problem instances. The greedy method was very effective on most test problems. Furthermore, we compared the Ai–Zhang type IPAs using \(\varphi (t)=t\) and \(\varphi (t)=t-\sqrt{t}\). The performance of the two methods was similar, but there were some instances where one outperformed the other. It is an important open question in connection with the AET technique to determine which transforming function would give the best results for a given problem instance.

We ran the theoretical variant of the algorithm for some smaller problem instances (where we calculated the handicap in advance) and investigated the change in the coordinates of the vector \(\textbf{v}\). We found that when the starting points are well centered, the coordinates remain in a really narrow interval around \(\sqrt{1/\tau }\). This phenomenon raises some interesting theoretical questions that we would like to investigate in the future.

We also examined the practical role of the neighborhood and the update parameters. We found that instead of choosing the two parameters to be equal (\(\beta =\tau \)), we should choose a relatively large value for the neighborhood parameter \(\beta \) and a small value for the update parameter \(\tau \). The results in the case of the Csizmadia-problems were different; this requires further examination.

References

Ai, W., Zhang, S.: An \(O(\sqrt{n}L)\) iteration primal-dual path-following method, based on wide neighborhoods and large updates, for monotone LCP. SIAM J. Optim. 16(2), 400–417 (2005). https://doi.org/10.1137/040604492

Anitescu, M., Lesaja, G., Potra, F.A.: Equivalence between different formulations of the linear complementarity problem. Optim. Methods Softw. 7(3–4), 265–290 (1997). https://doi.org/10.1080/10556789708805657

Asadi, S., Mansouri, H.: Polynomial interior-point algorithm for \({\cal{P}}_*(\kappa )\) horizontal linear complementarity problems. Numer. Algorithm 63(2), 385–398 (2013). https://doi.org/10.1007/s11075-012-9628-0

Asadi, S., Mansouri, H., Darvay, Zs., Zangiabadi, M., Mahdavi-Amiri, N.: Large-neighborhood infeasible predictor-corrector algorithm for horizontal linear complementarity problems over Cartesian product of symmetric cones. J. Optim. Theory Appl. 180(3), 811–829 (2019). https://doi.org/10.1007/s10957-018-1402-6

Asadi, S., Mansouri, H., Lesaja, G., Zangiabadi, M.: A long-step interior-point algorithm for symmetric cone Cartesian \({\cal{P}}_*(\kappa )\)-HLCP. Optimization 67(11), 2031–2060 (2018). https://doi.org/10.1080/02331934.2018.1512604

Bai, Y., Lesaja, G., Roos, C., Wang, G., El Ghami, M.: A class of large-update and small-update primal-dual interior-point algorithms for linear optimization. J. Optim. Theory Appl. 138(3), 341–359 (2008). https://doi.org/10.1007/s10957-008-9389-z

Chung, S.J.: NP-completeness of the linear complementarity problem. J. Optim. Theory Appl. 60(3), 393–399 (1989). https://doi.org/10.1007/BF00940344

Cottle, R.W., Pang, J.S., Venkateswaran, V.: Sufficient matrices and the linear complementarity problem. Lin. Algebra Appl. 114, 231–249 (1989). https://doi.org/10.1016/0024-3795(89)90463-1

Darvay, Zs.: New interior point algorithms in linear programming. Adv. Model. Optim. 5(1), 51–92 (2003)

Darvay, Zs., Illés, T., Kheirfam, B., Rigó, P.R.: A corrector–predictor interior-point method with new search direction for linear optimization. Cent. Eur. J. Oper. Res. 28(3), 1123–1140 (2020). https://doi.org/10.1007/s10100-019-00622-3

Darvay, Zs., Illés, T., Majoros, Cs.: Interior-point algorithm for sufficient LCPs based on the technique of algebraically equivalent transformation. Optim. Lett. 15(2), 357–376 (2021). https://doi.org/10.1007/s11590-020-01612-0

Darvay, Zs., Illés, T., Povh, J., Rigó, P.R.: Feasible corrector-predictor interior-point algorithm for \({\cal{P}}_*(\kappa )\)-linear complementarity problems based on a new search direction. SIAM J. Optim. 30(3), 2628–2658 (2020). https://doi.org/10.1137/19M1248972

Darvay, Zs., Illés, T., Rigó, P.R.: Predictor-corrector interior-point algorithm for \({\cal{P}}_*(\kappa )\)-linear complementarity problems based on a new type of algebraic equivalent transformation technique. Eur. J. Oper. Res. (2021). https://doi.org/10.1016/j.ejor.2021.08.039

Darvay, Zs., Papp, I.M., Takács, P.R.: Complexity analysis of a full-Newton step interior-point method for linear optimization. Period. Math. Hung. 73(1), 27–42 (2016). https://doi.org/10.1007/s10998-016-0119-2

Darvay, Zs., Takács, P.R.: Large-step interior-point algorithm for linear optimization based on a new wide neighbourhood. Cent. Eur. J. Oper. Res. 26(3), 551–563 (2018). https://doi.org/10.1007/s10100-018-0524-0

Darvay, Zs., Takács, P.R.: New method for determining search directions for interior-point algorithms in linear optimization. Optim. Lett. 12(5), 1099–1116 (2018). https://doi.org/10.1007/s11590-017-1171-4

de Klerk, E., E.-Nagy, M.: On the complexity of computing the handicap of a sufficient matrix. Math. Program. 129(2), 383–402 (2011). https://doi.org/10.1007/s10107-011-0465-z

E.-Nagy, M.: Sufficient matrices. https://sites.google.com/view/menagy/research/sufficient-matrices. Accessed: 2022–06–22

E.-Nagy, M., Illés, T., Povh, J., Varga, A., Žerovnik, J.: Testing sufficient matrices. (2021) (Submitted)

E.-Nagy, M., Varga, A.: A new long-step interior point algorithm for linear programming based on the algebraic equivalent transformation. Cent. Eur. J. Oper. Res. (2022). https://doi.org/10.1007/s10100-022-00812-6

Feng, Z., Fang, L.: A new \(O(nL)\)-iteration predictor-corrector algorithm with wide neighborhood for semidefinite programming. J. Comput. Appl. Math. 256, 65–76 (2014). https://doi.org/10.1016/j.cam.2013.07.011

Ferris, M.C., Pang, J.S.: Engineering and economic applications of complementarity problems. SIAM Rev. 39(4), 669–713 (1997). https://doi.org/10.1137/S0036144595285963

Gurtuna, F., Petra, C., Potra, F.A., Shevchenko, O., Vancea, A.: Corrector-predictor methods for sufficient linear complementarity problems. Comput. Optim. Appl. 48(3), 453–485 (2011). https://doi.org/10.1007/s10589-009-9263-4

Guu, S.M., Cottle, R.W.: On a subclass of \(\cal{P} (0)\). Linear Algebra Appl. 223, 325–335 (1995). https://doi.org/10.1016/0024-3795(93)00271-Z

Illés, T., Morapitiye, S.: Generating sufficient matrices. In: VOCAL 2018. 8th VOCAL Optimization Conference: Advanced Algorithms: Esztergom, Hungary, December 10–12, 2018. Short Papers, pp. 56–61 (2018)

Illés, T., Rigó, P.R., Török, R.: Predictor–corrector interior-point algorithm based on a new search direction working in a wide neighbourhood of the central path. Corvinus Econ. Work. Paper. (2) (2021)

Illés, T., Roos, C., Terlaky, T.: Simple approach for the interior point theory of linear complementarity problems with \(\cal{P}_{*}\)-matrices (1998). Unpublished manuscript

Kheirfam, B., Haghighi, M.: A full-Newton step feasible interior-point algorithm for \({\cal{P} } _* (\kappa )\)-LCP based on a new search direction. Croat. Oper. Res. Rev. 7(2), 277–290 (2016). https://doi.org/10.17535/crorr.2016.0019

Kojima, M., Megiddo, N., Noma, T., Yoshise, A.: A unified approach to interior point algorithms for linear complementarity problems. Lect. Notes Comput. Sci. (1991). https://doi.org/10.1007/3-540-54509-3

Kojima, M., Mizuno, S., Yoshise, A.: A primal-dual interior point algorithm for linear programming. In: Progress in Mathematical Programming, pp. 29–47. Springer (1989). https://doi.org/10.1007/978-1-4613-9617-8_2

Lemke, C.E., Howson, J.T., Jr.: Equilibrium points of bimatrix games. J. Soc. Ind. Appl. Math. 12(2), 413–423 (1964). https://doi.org/10.1137/0112033

Li, Y., Terlaky, T.: A new class of large neighborhood path-following interior point algorithms for semidefinite optimization with \(O \left(n \log \frac{Tr(X^0S^0)}{\varepsilon } \right)\) iteration complexity. SIAM J. Optim. 20(6), 2853–2875 (2010). https://doi.org/10.1137/080729311

Liu, C., Liu, H., Cong, W.: An \(O(\sqrt{n}L)\) iteration primal-dual second-order corrector algorithm for linear programming. Optim. Lett. 5(4), 729–743 (2011). https://doi.org/10.1007/s11590-010-0242-6

Nagy, M.: Interior point algorithms for general linear complementarity problems. Ph.D. Thesis, Eötvös Loránd University (2009)

Peng, J., Roos, C., Terlaky, T.: Self-regularity: A New Paradigm for Primal-dual Interior-point Algorithms. In: Princeton Series in Applied Mathematics. Princeton University Press (2002). https://doi.org/10.2307/j.ctt7sf0f

Pirhaji, M., Mansouri, H., Zangiabadi, M.: An \(O \left( \sqrt{n}L \right) \) wide neighborhood interior-point algorithm for semidefinite optimization. Comput. Appl. Math. 36(1), 145–157 (2017). https://doi.org/10.1007/s40314-015-0220-9

Potra, F.A.: A superlinearly convergent predictor–corrector method for degenerate LCP in a wide neighborhood of the central path with \(O(\sqrt{n}L)\) iteration complexity. Math. Program. 100(2), 317–337 (2004). https://doi.org/10.1007/s10107-003-0472-9

Potra, F.A.: Interior point methods for sufficient horizontal LCP in a wide neighborhood of the central path with best known iteration complexity. SIAM J. Optim. 24(1), 1–28 (2014). https://doi.org/10.1137/120884341

Väliaho, H.: \(\cal{P} _*\)-matrices are just sufficient. Linear Algebra Appl. 239, 103–108 (1996). https://doi.org/10.1016/S0024-3795(96)90005-1

Väliaho, H.: Determining the handicap of a sufficient matrix. Linear Algebra Appl. 253(1–3), 279–298 (1997). https://doi.org/10.1016/0024-3795(95)00703-2

Yang, X., Zhang, Y., Liu, H.: A wide neighborhood infeasible-interior-point method with arc-search for linear programming. J. Appl. Math. Comput. 51(1–2), 209–225 (2016). https://doi.org/10.1007/s12190-015-0900-z

Ye, Y.: Exchange market equilibria with Leontief’s utility: freedom of pricing leads to rationality. Theor. Comput. Sci. 378(2), 134–142 (2007). https://doi.org/10.1016/j.tcs.2007.02.016

Acknowledgements

This research has been supported by the NRDI Fund (TKP2020 NC, Grant No. BME-NC) based on the charter of bolster issued by the NRDI Office under the auspices of the Ministry for Innovation and Technology.

Funding

Open access funding provided by Corvinus University of Budapest.

Additional information

Communicated by Etienne de Klerk.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

E.-Nagy, M., Varga, A. A New Ai–Zhang Type Interior Point Algorithm for Sufficient Linear Complementarity Problems. J Optim Theory Appl (2022). https://doi.org/10.1007/s10957-022-02121-z

DOI: https://doi.org/10.1007/s10957-022-02121-z