Abstract

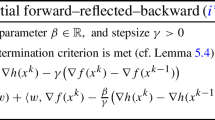

In a Hilbert space, we analyze the convergence properties of a general class of inertial forward–backward algorithms in the presence of perturbations, approximations, or errors. These splitting algorithms are designed to solve structured convex minimization problems rapidly. The function to be minimized is the sum of two parts: a continuously differentiable convex function whose gradient is Lipschitz continuous, and a proper lower semicontinuous convex function. The algorithms involve a general sequence of positive extrapolation coefficients, which reflect the inertial effect, and a sequence in the Hilbert space that accounts for the perturbations. We obtain convergence rates for the values and convergence of the iterates under conditions that involve the extrapolation and perturbation sequences jointly. This extends the recent work of Attouch and Cabot, which was devoted to the unperturbed case. Next, we introduce into the algorithms a Tikhonov regularization term with a vanishing coefficient. In this case, when the regularization coefficient does not tend to zero too rapidly, we obtain strong ergodic convergence of the iterates to the minimum norm solution. Because the extrapolation coefficients form a general sequence, the analysis covers a wide range of accelerated methods. It thus establishes the robustness of these algorithms in a unified way.
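The following minimal Python sketch illustrates the class of algorithms described above. It runs inertial forward–backward iterations with a perturbation term and an optional Tikhonov term on a small LASSO-type problem \(f(x)=\frac{1}{2}\Vert Ax-b\Vert ^2\), \(g(x)=\lambda \Vert x\Vert _1\). Everything in it is an illustrative assumption. The helper names `inertial_fb` and `soft_threshold` are hypothetical. The coefficients \(\alpha _k=k/(k+3)\) are one Nesterov-type choice among many. We also assume that the perturbation \(e_k\) and the Tikhonov gradient \(\varepsilon _k y_k\) both enter the forward (gradient) step; the paper's exact scheme and conditions may differ. The perturbation decays like \(1/k^3\), so it stays summable when weighted by a factor of order \(k\). This is our hedged reading of the kind of joint condition mentioned above.

```python
import math

def soft_threshold(v, tau):
    """Prox of tau*||.||_1: shrink each component toward zero by tau."""
    return [math.copysign(max(abs(vi) - tau, 0.0), vi) for vi in v]

def inertial_fb(A, b, lam, iters=5000, pert=None, eps=None):
    """Hypothetical sketch: inertial forward-backward for 0.5*||Ax-b||^2 + lam*||x||_1.

    pert(k): perturbation vector e_k added to the gradient (assumed placement).
    eps(k):  Tikhonov coefficient; adds eps(k)*y to the gradient (assumed placement).
    """
    m, n = len(A), len(A[0])
    # Step size s = 1/||A||_F^2 <= 1/L, because L = ||A||_2^2 <= ||A||_F^2.
    s = 1.0 / sum(a * a for row in A for a in row)
    x_prev = [0.0] * n
    x = [0.0] * n
    for k in range(iters):
        alpha = k / (k + 3.0)  # Nesterov-type extrapolation coefficient
        y = [xi + alpha * (xi - xp) for xi, xp in zip(x, x_prev)]
        # Forward step: gradient of f at y, i.e. A^T (A y - b).
        r = [sum(A[i][j] * y[j] for j in range(n)) - b[i] for i in range(m)]
        grad = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        if eps is not None:
            grad = [g + eps(k) * yj for g, yj in zip(grad, y)]
        if pert is not None:
            grad = [g + e for g, e in zip(grad, pert(k))]
        # Backward step: prox of s*g, i.e. soft-thresholding with threshold s*lam.
        x_prev, x = x, soft_threshold([yj - s * g for yj, g in zip(y, grad)], s * lam)
    return x

A = [[1.0, 0.0], [0.0, 2.0]]
b = [1.0, 1.0]
x = inertial_fb(A, b, lam=0.1,
                pert=lambda k: [1.0 / (k + 1) ** 3, -1.0 / (k + 1) ** 3])
print(x)  # approaches the exact minimizer (0.9, 0.475)
```

Here \(A\) is invertible, so the minimizer is unique and can be computed by hand, coordinate by coordinate: \(x_1=1-0.1=0.9\), and \(4x_2-2+0.1=0\) gives \(x_2=0.475\).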

References

[1] Attouch, H., Cabot, A.: Convergence rates of inertial forward–backward algorithms. SIAM J. Optim. 28(1), 849–874 (2018)

[2] Nesterov, Y.: A method of solving a convex programming problem with convergence rate \(O(1/k^2)\). Sov. Math. Dokl. 27, 372–376 (1983)

[3] Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Applied Optimization, vol. 87. Kluwer Academic Publishers, Boston (2004)

[4] Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

[5] Bauschke, H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics. Springer, Berlin (2011)

[6] Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 4, 1168–1200 (2005)

[7] Parikh, N., Boyd, S.: Proximal algorithms. Found. Trends Optim. 1, 123–231 (2013)

[8] Polyak, B.T.: Introduction to Optimization. Optimization Software, New York (1987)

[9] Su, W., Boyd, S., Candès, E.J.: A differential equation for modeling Nesterov’s accelerated gradient method: theory and insights. J. Mach. Learn. Res. 17, 1–43 (2016)

[10] Chambolle, A., Dossal, Ch.: On the convergence of the iterates of the Fast Iterative Shrinkage/Thresholding Algorithm. J. Optim. Theory Appl. 166, 968–982 (2015)

[11] Kim, D., Fessler, J.A.: Optimized first-order methods for smooth convex minimization. Math. Program. 159, 81–107 (2016)

[12] Liang, J., Fadili, M.J., Peyré, G.: Activity identification and local linear convergence of forward–backward-type methods. SIAM J. Optim. 27(1), 408–437 (2017)

[13] Lorenz, D.A., Pock, T.: An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51, 311–325 (2015)

[14] Villa, S., Salzo, S., Baldassarre, L., Verri, A.: Accelerated and inexact forward–backward. SIAM J. Optim. 23, 1607–1633 (2013)

[15] Schmidt, M., Le Roux, N., Bach, F.: Convergence rates of inexact proximal-gradient methods for convex optimization. In: NIPS’11, 25th Annual Conference on Neural Information Processing Systems, Dec 2011, Granada. HAL inria-00618152v3 (2011)

[16] Aujol, J.-F., Dossal, Ch.: Stability of over-relaxations for the Forward–Backward algorithm, application to FISTA. SIAM J. Optim. 25, 2408–2433 (2015)

[17] Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing damping. Math. Program. Ser. B 168, 123–175 (2018)

[18] Fiacco, A., McCormick, G.: Nonlinear Programming: Sequential Unconstrained Minimization Techniques. Wiley, Hoboken (1968)

[19] Cominetti, R.: Coupling the proximal point algorithm with approximation methods. J. Optim. Theory Appl. 95, 581–600 (1997)

[20] Attouch, H., Czarnecki, M.-O., Peypouquet, J.: Prox-penalization and splitting methods for constrained variational problems. SIAM J. Optim. 21, 149–173 (2011)

[21] Attouch, H., Czarnecki, M.-O., Peypouquet, J.: Coupling forward–backward with penalty schemes and parallel splitting for constrained variational inequalities. SIAM J. Optim. 21, 1251–1274 (2011)

[22] Bot, R.I., Csetnek, E.R.: Second order forward–backward dynamical systems for monotone inclusion problems. SIAM J. Control Optim. 54, 1423–1443 (2016)

[23] Cabot, A.: Proximal point algorithm controlled by a slowly vanishing term: applications to hierarchical minimization. SIAM J. Optim. 15, 555–572 (2005)

[24] Hirstoaga, S.A.: Approximation et résolution de problèmes d’équilibre, de point fixe et d’inclusion monotone. PhD thesis, Paris VI. HAL Id: tel-00137228. https://tel.archives-ouvertes.fr/tel-00137228 (2006)

[25] Attouch, H., Czarnecki, M.-O.: Asymptotic control and stabilization of nonlinear oscillators with non-isolated equilibria. J. Differ. Equ. 179, 278–310 (2002)

[26] Attouch, H., Chbani, Z., Riahi, H.: Combining fast inertial dynamics for convex optimization with Tikhonov regularization. J. Math. Anal. Appl. 457, 1065–1094 (2018)

[27] Combettes, P.L., Pesquet, J.C.: Proximal splitting methods in signal processing. In: Bauschke, H., et al. (eds.) Fixed-Point Algorithms for Inverse Problems in Science and Engineering. Springer Optimization and Its Applications, vol. 49, pp. 185–212. Springer, New York (2011)

[28] Lemaire, B.: The proximal algorithm. In: Penot, J.P. (ed.) New Methods in Optimization and Their Industrial Uses. Int. Ser. Numer. Math., vol. 87, pp. 73–89. Birkhäuser, Basel (1989)

[29] Peypouquet, J.: Convex Optimization in Normed Spaces: Theory, Methods and Examples. Springer, Berlin (2015)

[30] Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14, 877–898 (1976)

[31] Attouch, H., Peypouquet, J.: The rate of convergence of Nesterov’s accelerated forward–backward method is actually faster than \(\frac{1}{k^2}\). SIAM J. Optim. 26, 1824–1834 (2016)

[32] Apidopoulos, V., Aujol, J.-F., Dossal, Ch.: Convergence rate of inertial Forward–Backward algorithm beyond Nesterov’s rule. HAL Preprint 01551873 (2017)

[33] Attouch, H., Chbani, Z., Riahi, H.: Rate of convergence of the Nesterov accelerated gradient method in the subcritical case \(\alpha \le 3\). ESAIM: COCV (2017). https://doi.org/10.1051/cocv/2017083 (Forthcoming article)

[34] Alvarez, F.: On the minimizing property of a second-order dissipative system in Hilbert spaces. SIAM J. Control Optim. 38, 1102–1119 (2000)

[35] Alvarez, F., Attouch, H.: An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9, 3–11 (2001)

[36] Cabot, A., Engler, H., Gadat, S.: On the long time behavior of second order differential equations with asymptotically small dissipation. Trans. Am. Math. Soc. 361, 5983–6017 (2009)

[37] Cabot, A., Engler, H., Gadat, S.: Second order differential equations with asymptotically small dissipation and piecewise flat potentials. Electron. J. Differ. Equ. 17, 33–38 (2009)

[38] Attouch, H., Cabot, A.: Asymptotic stabilization of inertial gradient dynamics with time-dependent viscosity. J. Differ. Equ. 263, 5412–5458 (2017)

[39] Attouch, H.: Viscosity solutions of minimization problems. SIAM J. Optim. 6, 769–806 (1996)

[40] Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 73, 591–597 (1967)

Additional information

Communicated by Giuseppe Buttazzo.

A Appendix

In this section, we present some auxiliary lemmas that are used throughout the paper.

Lemma A.1

([17, Lemma 5.14]) Let \((a_k)\) be a sequence of nonnegative numbers such that \( a_k^2 \le c^2 + \sum _{j=1}^k \beta _j a_j \) for all \(k\in {\mathbb {N}}\), where \((\beta _j )\) is a summable sequence of nonnegative numbers, and \(c\ge 0\). Then, \(\displaystyle a_k \le c + \sum \nolimits _{j=1}^{+\infty } \beta _j\) for all \(k\in {\mathbb {N}}\).
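Lemma A.1 can be checked numerically on its extremal case, where the hypothesis holds with equality. In that case \(a_k\) is the nonnegative root of \(a_k^2-\beta _k a_k-S_{k-1}=0\), with \(S_{k-1}=c^2+\sum _{j<k}\beta _j a_j\). The sketch below builds this sequence and compares it with the bound; the choice \(\beta _j=1/j^2\), \(c=1\) and the helper name `extremal_sequence` are illustrative assumptions. We compare against the truncated sum \(c+\sum _{j\le K}\beta _j\). This is legitimate because setting \(\beta _j=0\) for \(j>K\) leaves \(a_1,\dots ,a_K\) unchanged while still satisfying the lemma's hypotheses.

```python
import math

def extremal_sequence(c, beta, K):
    """Hypothetical helper: a_k with a_k^2 = c^2 + sum_{j<=k} beta_j a_j (equality case)."""
    S = c * c  # running value of c^2 + sum_{j<k} beta_j a_j
    a = []
    for k in range(1, K + 1):
        bk = beta(k)
        ak = (bk + math.sqrt(bk * bk + 4.0 * S)) / 2.0  # nonnegative root of the quadratic
        a.append(ak)
        S += bk * ak  # now S = c^2 + sum_{j<=k} beta_j a_j = a_k^2
    return a

c = 1.0
beta = lambda j: 1.0 / j ** 2  # summable, nonnegative
K = 1000
a = extremal_sequence(c, beta, K)
bound = c + sum(beta(j) for j in range(1, K + 1))
print(max(a), bound)  # the largest a_k stays below the Lemma A.1 bound
```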

Lemma A.2

([40, Opial’s Lemma]) Let S be a nonempty subset of \({\mathcal {H}}\), and \((x_k)\) a sequence of elements of \({\mathcal {H}}\). Assume that

- (i) every sequential weak cluster point of \((x_k)\), as \(k\rightarrow +\infty \), belongs to S;

- (ii) for every \(z\in S\), \(\lim _{k\rightarrow +\infty }\Vert x_k-z\Vert \) exists.

Then \((x_k)\) converges weakly as \(k\rightarrow +\infty \) to a point in S.
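To see how the two hypotheses of Opial's lemma are typically verified, consider a toy problem in \({\mathbb {R}}^2\), where weak and strong convergence coincide. The sketch minimizes \(f(x)=\frac{1}{2}(x_1+x_2-1)^2\), whose set of minimizers \(S=\{x: x_1+x_2=1\}\) is a whole line. It uses plain gradient descent with step \(s=1/4<2/L\) (here \(L=2\)), not the inertial scheme of the paper; this substitution is an illustrative assumption. Each distance \(\Vert x_k-z\Vert \), \(z\in S\), is nonincreasing and hence converges, which is hypothesis (ii). Each cluster point is a minimizer, which is hypothesis (i). The iterates converge to \((2,-1)\), the projection of \(x_0=(3,0)\) onto \(S\).

```python
import math

a = (1.0, 1.0)  # f(x) = 0.5*(a.x - 1)^2, argmin S = {x : x1 + x2 = 1}
s = 0.25        # step size in (0, 2/L) with L = ||a||^2 = 2

def step(x):
    """One gradient step x - s*grad f(x); the residual a.x - 1 is halved each time."""
    r = a[0] * x[0] + a[1] * x[1] - 1.0
    return (x[0] - s * r * a[0], x[1] - s * r * a[1])

def dist(x, z):
    return math.hypot(x[0] - z[0], x[1] - z[1])

x = (3.0, 0.0)
targets = [(0.0, 1.0), (1.0, 0.0), (2.0, -1.0), (-5.0, 6.0)]  # sample points of S
history = {z: [dist(x, z)] for z in targets}
for _ in range(60):
    x = step(x)
    for z in targets:
        history[z].append(dist(x, z))
print(x)  # approximately (2.0, -1.0)
```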

The following result establishes the summability of a sequence \((a_k)\) that satisfies a linear recursive inequality.

Lemma A.3

([1, Lemma 23]) Let \((\alpha _k)\) be a nonnegative sequence satisfying \((K_0)\), and let \((t_k)\) be the sequence defined by \(t_k=1+\sum _{i=k}^{+\infty }\prod _{j=k}^i\alpha _j\). Let \((a_k)\) and \((\omega _k)\) be two sequences of nonnegative numbers such that \(a_{k+1}\le \alpha _ka_k+\omega _k\) for every \(k\ge 0\).

Then, if \(\sum _{k=0}^{+\infty }t_{k+1}\omega _k<+\infty \), we conclude that \(\sum _{k=0}^{+\infty }a_k<+\infty \).
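As a worked instance of Lemma A.3, take the Nesterov-type coefficients \(\alpha _k=k/(k+3)\). The product telescopes to \(\prod _{j=k}^{i}\alpha _j=\frac{k(k+1)(k+2)}{(i+1)(i+2)(i+3)}\), and summing over \(i\ge k\) gives the closed form \(t_k=1+k/2\). In particular the series are finite, which we assume is what \((K_0)\) guarantees (see [1] for its precise statement). With \(\omega _k=1/(k+1)^3\) we have \(\sum _k t_{k+1}\omega _k=\sum _k\frac{k+3}{2(k+1)^3}<+\infty \), so the lemma predicts \(\sum _k a_k<+\infty \). The sketch below checks both facts numerically in the equality case \(a_{k+1}=\alpha _ka_k+\omega _k\). The helper names are hypothetical.

```python
def t_trunc(alpha, k, N):
    """Truncated t_k = 1 + sum_{i=k}^{N} prod_{j=k}^{i} alpha_j."""
    total, prod = 1.0, 1.0
    for i in range(k, N + 1):
        prod *= alpha(i)  # running product alpha_k * ... * alpha_i
        total += prod
    return total

def alpha(j):
    return j / (j + 3.0)  # Nesterov-type coefficients; note alpha_0 = 0

def omega(k):
    return 1.0 / (k + 1) ** 3

def partial_sum(N):
    """a_0 + ... + a_N for a_{k+1} = alpha_k a_k + omega_k, a_0 = 1 (equality case)."""
    a, total = 1.0, 0.0
    for k in range(N + 1):
        total += a
        a = alpha(k) * a + omega(k)
    return total

for k in (1, 5, 10):
    print(k, t_trunc(alpha, k, 100_000), (k + 2) / 2)  # numerical vs closed form
print(partial_sum(10_000), partial_sum(100_000))      # partial sums stabilize
```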

The next lemma gives a convergence rate for a nonincreasing sequence that is summable with respect to a given sequence of weights.

Lemma A.4

([1, Lemma 22]) Let \((\tau _k)\) be a nonnegative sequence such that \(\sum _{k=1}^{+\infty } \tau _{k}=+\infty \). Assume that \((\varepsilon _k)\) is a nonnegative and nonincreasing sequence satisfying \(\sum _{k=1}^{+\infty } \tau _{k}\,\varepsilon _k<+\infty \).

Then, we have \(\varepsilon _k=o\left( \frac{1}{\sum _{i=1}^k \tau _i}\right) \quad \text{ as } k\rightarrow +\infty .\)
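A simple numerical instance of Lemma A.4 uses the illustrative choice \(\tau _k=k\) and \(\varepsilon _k=k^{-5/2}\). Here \(\sum \tau _k=+\infty \), \((\varepsilon _k)\) is nonincreasing, and \(\sum \tau _k\varepsilon _k=\sum k^{-3/2}<+\infty \). The lemma then asserts \(\varepsilon _K\sum _{i\le K}\tau _i\rightarrow 0\). Indeed, \(\varepsilon _K\sum _{i\le K}\tau _i=\frac{K(K+1)}{2K^{5/2}}\), which behaves like \(\frac{1}{2}K^{-1/2}\). The sketch evaluates this quantity at a few values of \(K\).

```python
def tau(k):
    return float(k)  # weights with divergent sum

def eps(k):
    return k ** -2.5  # nonincreasing; sum tau_k * eps_k = sum k^-1.5 < +inf

def scaled(K):
    """eps_K * (tau_1 + ... + tau_K); Lemma A.4 says this tends to 0."""
    return eps(K) * sum(tau(i) for i in range(1, K + 1))

values = [scaled(K) for K in (10, 1_000, 100_000)]
print(values)  # decreasing toward 0, roughly like 0.5 / sqrt(K)
```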

About this article

Cite this article

Attouch, H., Cabot, A., Chbani, Z. et al. Inertial Forward–Backward Algorithms with Perturbations: Application to Tikhonov Regularization. J Optim Theory Appl 179, 1–36 (2018). https://doi.org/10.1007/s10957-018-1369-3

DOI: https://doi.org/10.1007/s10957-018-1369-3

Keywords

- Structured convex optimization

- Inertial forward–backward algorithms

- Accelerated Nesterov method

- FISTA

- Perturbations

- Tikhonov regularization