Abstract

The scalar wave equation is solved using higher order immersed finite elements. We demonstrate that higher order convergence can be obtained. Small cuts with the background mesh are stabilized by adding penalty terms to the weak formulation. This ensures that the condition numbers of the mass and stiffness matrix are independent of how the boundary cuts the mesh. The penalties consist of jumps in higher order derivatives integrated over the interior faces of the elements cut by the boundary. The dependence on the polynomial degree of the condition number of the stabilized mass matrix is estimated. We conclude that the condition number grows extremely fast when increasing the polynomial degree of the finite element space. The time step restriction of the resulting system is investigated numerically and is concluded not to be worse than for a standard (non-immersed) finite element method.


1 Introduction

The cut finite element method [3] is an immersed method. For a domain immersed in a background mesh, one solves for the degrees of freedom of the smallest set of elements covering the domain. The inner products in the weak form are taken over the immersed domain. That is, on each element one integrates over the part of the element that is inside the domain. As a result of this, some elements will have a very small intersection with the immersed domain. This will make some eigenvalues of the discrete system very small and in turn, result in poorly conditioned matrices. A suggested way to remedy this is by adding stabilizing terms to the weak formulation. A jump-stabilization was suggested in [4] for the case of piecewise linear elements, where the jump in the normal derivative is integrated over the faces of the elements intersected by the boundary. This stabilization makes it possible to prove that the condition numbers of the involved matrices are bounded independently of how the boundary cuts the elements. This form of stabilization has been used with good results in several recent papers, see for example [3, 7, 15, 21], and has also been used for PDEs posed on surfaces in [5, 9].

Thus, a lot of attention has been directed to the use of lower order elements. Higher order cut elements have received less attention so far. These are interesting in wave propagation problems, because the amount of work needed to reach a given dispersion error typically grows more slowly for higher order methods in this type of problem. In [2] it was suggested to stabilize higher order elements by also integrating jumps in higher derivatives over the faces. This generalization of the jump-stabilization was further analyzed in [15].

In this paper, we consider solving the scalar wave equation using higher order cut elements. Both the mass and stiffness matrix are stabilized using the higher order jump-stabilization. We present numerical results showing that the method obtains a high order of accuracy. The time-step restriction of the resulting system is computed numerically and is concluded to be of the same size as for standard finite elements with aligned boundaries. Furthermore, we estimate how the condition number of the stabilized mass matrix depends on the polynomial degree of the basis functions. The estimate suggests that the condition number grows extremely fast with respect to the polynomial degree, which is supported by the numerical experiments. All numerical experiments are performed in two dimensions, but the generalization to three dimensions is immediate.

One reason why the considered stabilization is attractive is that it is quite easy to implement. Integrals over internal faces also occur in discontinuous Galerkin methods, so the implementation is similar to what is already supported in many existing libraries.

The suggested jump-stabilization is one but not the only possibility for stabilizing an immersed method. In [10] a higher order discontinuous Galerkin method was suggested and proved to give optimal order of convergence. Here the problem of ill-conditioning was solved by associating elements that had small intersections with neighboring elements. Similar approaches have been used with higher order elements in for example [12, 16], where elements with small intersection take their basis functions from an element inside the domain. One problem with these approaches is that it is not obvious how to choose which elements should merge with or associate to one another. A related alternative is the approach in [18], where individual basis functions are removed if they have a small support inside the domain. A different approach was used in [11], where streamline diffusion stabilization was added to the elements intersected by the boundary. This was proved to give up to fourth order convergence. However, this approach is restricted to interface problems. Another alternative is to use preconditioners to try to overcome problems with ill-conditioning, such as in [13]. However, preconditioning alone does not solve the problem of severe time-step restrictions when using explicit time-stepping, so it is not sufficient in the context of wave propagation.

This paper is organized in the following way. Notation and some basic problem setup are explained in Sect. 2.1, the stabilized weak formulation is described in Sect. 2.2, and the stability of the method is discussed in Sect. 2.3. Analysis of how fast the condition number increases when increasing the polynomial degree is presented in Sect. 2.4, and numerical experiments are presented in Sect. 3.

2 Problem Statement and Theoretical Considerations

2.1 Notation and Setting

Consider the wave equation

posed on a given domain \(\varOmega \), with a smooth boundary \(\partial \varOmega =\Gamma _D\cup \Gamma _N\). Let \(\varOmega \subset \mathbb {R}^d\) be immersed in a mesh, \(\mathcal {T}\), as in Fig. 1. We assume that each element \(T\in \mathcal {T}\) has some part which is inside \(\varOmega \), that is: \(T\cap \varOmega \ne \emptyset \). Furthermore, let \(\varOmega _\mathcal {T}\) be the domain that corresponds to \(\mathcal {T}\), that is

Let \(\mathcal {T}_\Gamma \) denote the set of elements intersected by \(\partial \varOmega \):

as in Fig. 2. Let \(\mathcal {F}_\Gamma \) denote the faces seen in Fig. 3. That is, the faces of the elements in \(\mathcal {T}_\Gamma \), excluding the faces that make up \(\partial \varOmega _\mathcal {T}\). To be precise, \(\mathcal {F}_\Gamma \) is defined as

We assume that our background mesh is sufficiently fine, so that the immersed geometry is well resolved by the mesh. Furthermore, we shall restrict ourselves to meshes as the one in Fig. 1, where we have a mesh consisting of hypercubes and our coordinate axes are aligned with the mesh faces. That is, the face normals have a nonzero component only in one of the coordinate directions. Denote the element side length by h.

Consider the situation in Fig. 4, where two neighboring elements, \(T_1\) and \(T_2\), are sharing a common face F. Denote by \(\partial _n^k v\) the kth directional derivative in the direction of the face normal. That is, fix \(j\in \{1,\ldots ,d\}\) and let the normal of the face, n, be such that

then define

In the following, we shall use the following inner products

where the subscript indicates over which region we integrate. Note that (11) is used when we integrate over a d-dimensional subset of \(\mathbb {R}^d\), while (12) is used when we integrate over a \(d-1\) dimensional region. The \(L_2\)-norm over some part of the domain, Z, we will denote as \(\Vert \cdot \Vert _Z\), or in some places as \(\Vert \cdot \Vert _{L_2(Z)}\) if we want to be particularly clear. Let \(\Vert \cdot \Vert _{H^s(Z)}\) and \(|\cdot |_{H^s(Z)}\) denote the \(H^s(Z)\)-norm and semi-norm. By [v] we shall denote a jump over a face, F:

We shall assume that our basis functions are tensor products of one-dimensional polynomials of order p. In particular, we shall use Lagrange elements with Gauss-Lobatto nodes, in the following referred to as \(Q_p\)-elements, \(p\in \{1,2,\ldots \}\). Let \(V_h^p\) denote a continuous finite element space, consisting of \(Q_p\)-elements on the mesh \(\mathcal {T}\):

Define also the following semi-norm

which is a norm on \(V_h^p\) in the case that \(\Gamma _D\ne \emptyset \).

2.2 The Stabilized Weak Formulation

Multiplying (1) by a test-function, integrating by parts, and applying boundary conditions by Nitsche’s method [17] leads to a weak formulation of the following form: find \(u_h\) such that for each fixed \(t\in (0,t_f ]\), \(u_h\in V_h^p\) and

where

What makes this different from standard finite elements is that the integration on each element needs to be adapted to the part of the element that is inside the domain. As illustrated in Fig. 5, some elements will have a very small intersection with the domain. Consider the mass-matrix from the method in (16):

Note that its smallest eigenvalue is smaller than each diagonal entry:

Depending on the size of the cut with the background mesh, some diagonal entries can become arbitrarily close to zero. Thus, both the mass and stiffness matrix can be arbitrarily ill-conditioned depending on how the cut occurs. Because of this, one cannot guarantee that the method is stable.
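The loss of conditioning can be reproduced in a small one-dimensional model. The sketch below is a hypothetical illustration, not the setup used in the paper: it assembles the mass matrix of linear elements on a uniform grid where only a fraction `delta` of the last element lies inside the domain. The function name `cut_mass_matrix` and all parameter values are assumptions made for this example.

```python
import numpy as np

def cut_mass_matrix(n_full, delta, h=1.0):
    """P1 mass matrix for n_full uncut elements plus one element cut to a fraction delta.

    Hypothetical 1D model: nodes x_i = i*h, domain [0, (n_full + delta)*h].
    """
    n_nodes = n_full + 2
    M = np.zeros((n_nodes, n_nodes))
    # Exact local mass matrix of an uncut linear element.
    full = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    for e in range(n_full):
        M[e:e + 2, e:e + 2] += full
    # Cut element: integrate the hat functions (1 - s) and s over s in [0, delta].
    m00 = (1.0 - (1.0 - delta) ** 3) / 3.0
    m01 = delta ** 2 / 2.0 - delta ** 3 / 3.0
    m11 = delta ** 3 / 3.0
    M[n_full:, n_full:] += h * np.array([[m00, m01], [m01, m11]])
    return M

for delta in (1.0, 1e-1, 1e-2, 1e-3):
    M = cut_mass_matrix(8, delta)
    lam = np.linalg.eigvalsh(M)  # eigenvalues in ascending order
    print(f"delta={delta:g}  lambda_min={lam[0]:.3e}  "
          f"min diag={M.diagonal().min():.3e}  cond={lam[-1] / lam[0]:.3e}")
```

In this model the diagonal entry of the outermost node scales like `delta**3`, so the smallest eigenvalue, which cannot exceed any diagonal entry, tends to zero as the cut shrinks.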

One way to remedy this is by adding stabilizing terms, j, to the two bilinear forms

where \(\gamma _M,\gamma _A>0\) are penalty parameters. This gives us the following weak formulation: find \(u_h\) such that for each fixed \(t\in (0,t_f ]\), \(u_h\in V_h^p\) and

In [2] the following stabilization term was suggested

This stabilization was analyzed and tested numerically for piecewise linear elements in [4]. The stabilization in (24) was further analyzed for higher order elements in [15]. The bilinear form (21) can be shown to define a scalar product which is norm equivalent to the \(L_2\)-norm on the whole background mesh:

and a corresponding equivalence holds for the gradient:

The constants in (25) and (26) depend on the polynomial degree of our basis functions, but not on how the boundary cuts through the mesh. Let \(\mathcal {M}\) denote the mass matrix with respect to the bilinear form M, and \(\mathcal {M}_\mathcal {T}\) with respect to the scalar product on the background mesh, that is:

Now, (25) implies that the condition number, \(\kappa (\mathcal {M})\), of \(\mathcal {M}\) is bounded by the condition number of \(\mathcal {M}_\mathcal {T}\):

The property (26) is necessary in order to show that \(A(\cdot ,\cdot )\) is coercive in \(V_h^p\) with respect to the \(| \cdot |_\star \)-semi-norm on the background mesh:

As we shall see in Sect. 2.3 this is needed in order to show that the method is stable with respect to time. The result in (30) follows by the same procedure as in [15], assuming that the following inverse inequality holds

For piecewise linear basis functions, this inequality follows in the same way as the proof of Lemma 4 in [6]. Related inverse inequalities were proved for planar cuts for higher order elements in [14]. The inequality (31) follows the same scaling with respect to h and p as the corresponding standard inverse inequality, which relates the norm over a face to the norm over the whole element. See for example [22].

The stabilization in (24) is the basic form of stabilization that we shall consider. However, each time we differentiate we will introduce some dependence on the polynomial degree. It therefore seems reasonable that each term in the sum should be scaled in some way. Because of this, we consider a stabilization of the following form:

where \(w_j\in \mathbb {R}^+\) are some weights, which we are free to choose as we wish. The choice of weights will determine how large our constants \(C_U\), \(C_L\) in (29) are, and in turn influence how well conditioned the mass matrix is. Given how the stabilization is derived from a Taylor expansion (see [15] or Sect. 2.4), it is perhaps most natural to use \(w_k=1\). However, several papers [2, 8, 15] discussing high order cut finite elements state the stabilization as in (24). This would be equivalent to choosing \(w_k=(k!)^2 (2k+1)\) in (32). We think it makes sense to introduce the weights in (32) to make it possible to analyze what effect different choices of weights have.
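The effect of a face-jump penalty on the conditioning can be illustrated in one dimension. The sketch below is a hypothetical model, not the paper's implementation: linear elements on a uniform grid, a last element of which only a fraction `delta` lies inside the domain, and a penalty on the jump of the first derivative at the interior node of the cut element, scaled by \(h^{3}\). The scaling, the unit weight, and the value `gamma = 0.25` are assumptions chosen for illustration.

```python
import numpy as np

def stabilized_cut_mass(n_full, delta, gamma, h=1.0):
    """1D P1 mass matrix with one cut element, plus gamma * (derivative-jump penalty)."""
    n_nodes = n_full + 2
    M = np.zeros((n_nodes, n_nodes))
    full = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    for e in range(n_full):
        M[e:e + 2, e:e + 2] += full
    # Cut element: hat functions (1 - s) and s integrated over s in [0, delta].
    m00 = (1.0 - (1.0 - delta) ** 3) / 3.0
    m01 = delta ** 2 / 2.0 - delta ** 3 / 3.0
    m11 = delta ** 3 / 3.0
    M[n_full:, n_full:] += h * np.array([[m00, m01], [m01, m11]])
    # Jump of the first derivative across the face between the last uncut
    # element and the cut element: (u_{n-1} - 2 u_n + u_{n+1}) / h.
    g = np.array([1.0, -2.0, 1.0]) / h
    J = np.zeros_like(M)
    J[n_full - 1:, n_full - 1:] += h ** 3 * np.outer(g, g)  # assumed h^(2k+1) scaling, k = 1
    return M + gamma * J

for delta in (1e-1, 1e-2, 1e-3):
    plain = np.linalg.cond(stabilized_cut_mass(8, delta, gamma=0.0))
    stab = np.linalg.cond(stabilized_cut_mass(8, delta, gamma=0.25))
    print(f"delta={delta:g}  cond without penalty={plain:.3e}  cond with penalty={stab:.3e}")
```

In this model the penalty ties the outermost degree of freedom to its neighbours, so the condition number of the penalized matrix stays bounded as `delta` decreases, while the unpenalized one grows without bound.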

If the solution and the boundary are sufficiently smooth, a standard non-immersed finite element method with the same type of elements is expected to yield errors which converge as follows

This is also what we expect for the considered immersed method.

2.3 Stability

The bilinear forms in (23) are symmetric. This is a quite important property, since this in the end will guarantee stability of the system. In order to show stability we want a bound over time on \(\Vert u \Vert _{\varOmega _\mathcal {T}}\). Define an energy, E, of the form

Since both bilinear forms are at least positive semi-definite, this energy has the property \(E \ge 0\). The symmetry now allows us to show that for a homogeneous system,

the energy is conserved:

so that

By the definition of the energy together with (25) and (30) this immediately implies that \(\Vert \dot{u}_h\Vert _{\varOmega _\mathcal {T}}\) and \(\Vert \nabla u_h \Vert _{\varOmega _\mathcal {T}}\) are both bounded. For the case \(\Gamma _D\ne \emptyset \) the semi-norm \(| \cdot |_\star \) is a norm for the space \(V_h^p\) and (30) implies that \(\Vert u_h \Vert _{{\varOmega _\mathcal {T}}} \) is also bounded. When \(\Gamma _D = \emptyset \) we can use that

which gives us

By integrating we obtain that \(\Vert u_h \Vert _{{\varOmega _\mathcal {T}}} \) is bounded since \(\Vert \dot{u}_h \Vert _{{\varOmega _\mathcal {T}}} \) is bounded:

Thus the system is stable.
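The key step of the energy argument can be written out as follows. This is a sketch under two assumptions that are not quoted from the equations above: that the energy has the usual form built from the two stabilized bilinear forms, and that the homogeneous system reads \(M(\ddot{u}_h,v)+A(u_h,v)=0\) for all \(v\in V_h^p\).

\[
E(t) = \tfrac{1}{2}\bigl( M(\dot{u}_h,\dot{u}_h) + A(u_h,u_h) \bigr),
\qquad
\frac{dE}{dt} = M(\ddot{u}_h,\dot{u}_h) + A(u_h,\dot{u}_h) = 0 .
\]

The first equality in the derivative uses the symmetry of \(M\) and \(A\), and the second follows by choosing the test function \(v=\dot{u}_h\).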

In total the method (23) discretizes to a system of the form

with \(\mathcal {M},\mathcal {A}\in \mathbb {R}^{N \times N}\), \(\xi \in \mathbb {R}^N\) and \(\mathcal {L}: \mathbb {R}\rightarrow \mathbb {R}^N\), and where

When solving this system in time we will have a restriction on the time-step, \(\tau \), of the form

where \(\alpha \) is a constant which depends on the time-stepping algorithm. For example, for the classical fourth-order explicit Runge–Kutta method, \(\alpha =2\sqrt{2}\). The CFL-number, \(\beta _{cfl} \), is given by

where \(\lambda _{\max }\) is the largest eigenvalue of the generalized eigenvalue problem: find \((x,\lambda )\) such that

One would expect that the added stabilization has some effect on the CFL-number. Because of this, we will investigate this constant experimentally in Sect. 3. It turns out that the CFL-number is not worse than for a standard non-immersed method.
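A computation of this kind can be sketched for a small non-immersed model problem. The example below assumes the common relations \(\beta _{cfl}=1/(h\sqrt{\lambda _{\max }})\) and \(\tau \le \alpha \beta _{cfl} h\); these are assumptions about the form of the omitted formulas, and the one-dimensional linear-element matrices are a stand-in, not the matrices used in the paper.

```python
import numpy as np

def p1_neumann_matrices(n_el, h):
    """Mass and stiffness matrices of 1D linear elements with natural boundary conditions."""
    n = n_el + 1
    M = np.zeros((n, n))
    A = np.zeros((n, n))
    m_loc = h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])
    a_loc = 1.0 / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(n_el):
        M[e:e + 2, e:e + 2] += m_loc
        A[e:e + 2, e:e + 2] += a_loc
    return M, A

def largest_generalized_eigenvalue(A, M):
    """Largest lambda with A x = lambda M x, for symmetric A and positive definite M."""
    # Reduce to a symmetric standard problem using the Cholesky factor M = L L^T.
    L = np.linalg.cholesky(M)
    L_inv = np.linalg.inv(L)
    return np.linalg.eigvalsh(L_inv @ A @ L_inv.T)[-1]

def cfl_number(A, M, h):
    # Assumed definition: beta_cfl = 1 / (h * sqrt(lambda_max)).
    return 1.0 / (h * np.sqrt(largest_generalized_eigenvalue(A, M)))

h = 0.05
M, A = p1_neumann_matrices(20, h)
beta = cfl_number(A, M, h)
alpha = 2.0 * np.sqrt(2.0)   # value quoted in the text for classical RK4
tau_max = alpha * beta * h   # assumed form of the time-step restriction
print(f"beta_cfl = {beta:.6f}, largest stable time step = {tau_max:.6f}")
```

Dense linear algebra is used here for brevity; for matrices of realistic size one would compute only the largest eigenvalue with a sparse iterative eigensolver.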

2.4 Analysis of the Condition Number of the Mass Matrix

We would like to choose the weights in (32) in order to minimize the condition number of the mass matrix. This is particularly important when it comes to wave-propagation problems. For this application one typically uses an explicit time-stepping method. When this is the case we need to solve a system involving the mass matrix in each time-step.

In order to choose the weights we need to know how the condition number depends on the weights and the polynomial degree. To determine this, we follow essentially the same path as in [15] and keep track of the weights and the polynomial dependence of the involved inequalities. In the following, we denote by C various constants which do not depend on h or p, unless explicitly stated otherwise. We shall also by w denote the vector \(w=(w_1,\ldots ,w_p)\), where \(w_j\) are the weights in the stabilization term (32). We can now derive the following inequality, which is a weighted version of Lemma 5.1 in [15].

Lemma 1

Given two neighboring elements, \(T_1\) and \(T_2\), sharing a face F (as in Fig. 4), and \(v\in V_h^p\), we have that:

where

Proof

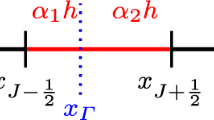

Denote by \(v_i\) the restriction of v to \(T_i\), extended by its polynomial expression to the whole of \(T_1 \cup T_2\). As in Fig. 4, let \(x\in T_1\) and denote by \(x_F(x)\) the projection of x onto the face. Let n be the normal pointing towards \(T_1\) and let j denote the only nonzero component, as in (9). We may now Taylor expand from the face:

Using that

gives us

by definition of \(\partial _n^k v\) from (10). Consequently we have that

Now introduce the following weighted \(l^2(\mathbb {R}^{p+1})\)-norm:

where \(\alpha _k>0\) and \(z\in \mathbb {R}^{p+1}\). If \(\Vert \cdot \Vert _1\) denotes the usual \(l^1(\mathbb {R}^{p+1})\)-norm we have that:

where

Taking the \(L_2(T_1)\)-norm of (54) and using (56) now results in:

Since \(v_2\) lies in a finite dimensional polynomial space on \(T_1 \cup T_2\) the norms on \(T_1\) and \(T_2\) are equivalent:

where \(C_1=C_1(p)\). Using this in (58) and choosing

gives us (49). \(\square \)

Remark 1

The constant \(C_1(p)\) will grow rapidly with the polynomial order. By only considering the highest order term in the polynomial a lower bound on the constant is achieved. We get an exponential dependence

This is however far from sharp. The constant can straightforwardly be computed numerically for a given p by considering how polynomials, which are orthonormal in one element, extend to a neighbouring element and are projected to the orthonormal polynomials of the neighbouring element. The constant equals the norm of the projection matrix. From such computations we see that \(C_1(p) \sim e^{1.75p}\).
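The procedure described in the remark can be sketched in one dimension. The code below is an illustration under assumptions: it takes the reference element \([-1,1]\) with neighbour \([1,3]\), uses scaled Legendre polynomials as the orthonormal basis, and returns the largest ratio of the unsquared \(L_2\)-norms. Whether this normalization and the restriction to one dimension match the definition of \(C_1(p)\) used in the paper is not confirmed by the text, and the name `neighbour_norm_constant` is hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def neighbour_norm_constant(p):
    """Largest ratio ||v||_{L2(1,3)} / ||v||_{L2(-1,1)} over polynomials of degree <= p."""
    # Gauss-Legendre rule on [-1, 1], shifted to the neighbouring interval [1, 3].
    # With p + 1 points it integrates the degree-2p integrands exactly.
    x, w = legendre.leggauss(p + 1)
    x_nb = x + 2.0
    # Columns: Legendre polynomials P_0..P_p, scaled to be orthonormal on [-1, 1],
    # evaluated by their polynomial expression on the neighbouring interval.
    scale = np.sqrt((2.0 * np.arange(p + 1) + 1.0) / 2.0)
    Phi = legendre.legvander(x_nb, p) * scale
    # Gram matrix of the extended basis on the neighbour; its largest eigenvalue
    # is the largest ratio of squared norms.
    G = Phi.T @ (w[:, None] * Phi)
    return np.sqrt(np.linalg.eigvalsh(G)[-1])

for p in range(1, 7):
    print(p, neighbour_norm_constant(p))
```

Fitting a line to the logarithm of the printed values against `p` gives an empirical growth rate that can be compared with the exponent quoted above.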

Lemma 1 will now allow us to give a lower bound on the bilinear form M, which was defined in (21).

Lemma 2

A lower bound for M(v, v) is:

where L(w) is given by (50), \(N_J\) is some sufficiently large integer and \(C_l\) is a constant independent of h and p.

Proof

Let \(T_0\in \mathcal {T}_\Gamma \) and let \(\{T_i\}_{i=1}^{N-1}\) (with \(T_i\in \mathcal {T}_\Gamma \)) be a sequence of elements that need to be crossed in order to get to an element \(T_N\in \mathcal {T}\setminus \mathcal {T}_\Gamma \), as in Fig. 6, and let \(F_i=T_{i-1}\cap T_i\). By using (49) we get

where we have used that \({L(w)}\ge 1\) (since at least \(C_1\ge 1)\). Let now \(N_J\ge 1\) denote some upper bound on the maximum number of jumps that needs to be made in the mesh. If our geometry is well resolved by our background mesh \(N_J\) is a small integer. This gives us

from which (63) follows. \(\square \)

We proceed by estimating how a bound on the jumps depends on the polynomial degree.

Lemma 3

For the jumps in the normal derivative we have that:

where \(T_F^+\) and \(T_F^-\) denote the two elements sharing the face F.

Proof

Note first that

We shall need the following inequalities:

which were discussed in [20] (see Note 1). Although (69) holds for a whole element we shall use the corresponding inequality applied to a face:

This is valid since a function v in the tensor product space over T will have a restriction \(\left. v\right| _{F}\) in the tensor product space over the face F. Note that the constants, C, in (69) and (70) are not necessarily the same. By combining (67), (68) and (70) we obtain (66). \(\square \)

Using Lemma 3 we can now bound the bilinear form \(M(\cdot ,\cdot )\) from above.

Lemma 4

An upper bound for M(v, v) is:

where

and \(C_g\) is a constant independent of h and p.

Proof

Using the definition of \(j(\cdot ,\cdot )\) and applying Lemma 3 on each order of derivatives in the sum individually we have

Let \(n_F\) denote the number of faces that an element has in \(\mathbb {R}^d\). We now have

so we finally obtain:

which gives us (71). \(\square \)

By using Lemmas 2 and 4 we now have the following bound on the condition number.

Lemma 5

An upper bound for the condition number of the mass matrix is

where

Proof

Denote eigenvalues by \(\lambda \). From Lemmas 2 and 4 we obtain

which gives us (76). \(\square \)

Here, we would like to choose the weights in order to minimize the constant \(C_M\). However, we have the following unsatisfying result, which shows that no matter how we choose the weights our bound on the condition number increases extremely fast with p.

Lemma 6

The constant \(C_M(w)\) in Lemma 5 fulfills \(C_M(w)\ge C_0 P(p)\), where \(C_0\) does not depend on p or w. Here P(p) is the function

which is independent of the choice of weights w.

Proof

First note that

Now we have

where we first used that the \(l^1(\mathbb {R}^{p})\)-norm is greater than the \(l^2(\mathbb {R}^{p})\)-norm and finally the Cauchy–Schwarz inequality. From this the result follows. \(\square \)

The function P(p) increases incredibly fast when increasing the polynomial degree. This result could reflect either:

1. The analysis leading to Lemma 5 is not sharp. The bound \(C_M\) is too generous, and a better bound exists.

2. The bound in Lemma 5 is not unnecessarily generous, so that the constant \(C_M\) is in some sense “tight”. This means that the condition number of the stabilized mass matrix (27) will grow faster than the function P(p), regardless of the choice of weights.

Alternative 2 is rather devastating from a time-stepping perspective, since in order to time-step (44) an inverse of the mass matrix needs to be available in each time-step. If this inversion is done with an iterative method the number of required iterations until convergence is going to be large.

A combination of these two alternatives is, of course, possible. The estimate in Lemma 5 could be too pessimistic, but even the optimal bound increases incredibly fast. Given the results in Sect. 3 this appears to be the most plausible alternative.

2.5 Choosing Weights in the Jump-Stabilization

In order to do a computation, we are forced to make some choice of the weights \(w_i\). The essence of Lemma 6 is that we can bound L(w)G(w) from below. So in order to choose weights let us assume that:

From Lemma 4 it is seen that choosing \(w_i\gg 1\) makes G(w) very large. In the same way, Lemma 2 tells us that choosing \(w_i \ll 1\) for some i makes L(w) very large. From this observation it seems reasonable to try to enforce both bounds to be of about the same magnitude. In this way, we minimize L(w)G(w) with respect to w and enforce \(G(w)=L(w)\). This leaves us with

where \(\nabla _w\) denotes the gradient with respect to w. This now gives us the following choice of weights

Given the analysis here, we find that this is the choice of weights that is easiest to motivate, although it is not necessarily optimal. Numerical tests (not reported here) indicate that the conditioning is not very sensitive to the choice of weights.

3 Numerical Experiments

In the following, we shall solve both an inner problem and an outer problem using finite element spaces of different orders. In the inner problem we consider a simple bounded domain, while in the outer problem, the domain is bounded but exterior to a simple domain, see for example Figs. 1 and 10, respectively.

The weights from (85) are used. In addition, the following parameters are used

The scaling of \(\gamma _D\) with respect to p follows from the inequality (31). When \({p=1}\) these parameters coincide with the parameters used in [21]. There the effect of \(\gamma _M\) on the condition number of the mass matrix was investigated numerically. For \(p=1\) this choice of \(\gamma _A\) and \(\gamma _D\) also coincides with the one in [4], where \(\gamma _A\) was investigated numerically.

The geometry of \(\varOmega \) is approximated as the zero level set of a function, \(\psi _h\). This level set function, \(\psi _h\), is an element in the space

where

and where \(\mathcal {T}_B\) is the larger background mesh from which \(\mathcal {T}\) is created. In the experiments \(\psi _h\) is the \(L_2\)-projection of an analytic level set function onto the space \(W_h^p\). Which elements of \(\mathcal {T}_B\) belong to \(\mathcal {T}\) is determined by checking the sign of \(\psi _h\) at the nodes on each element in \(\mathcal {T}_B\). In order to approximate integrals on elements intersected by the boundary, we have used the algorithm in [19]. On each element, T, this algorithm generates quadrature rules for integration over \(\varOmega \cap T\) and \(\partial \varOmega \cap T\). This is done using the level set function, \(\psi _h\). It is worth noting that the errors of the solution are also calculated with respect to this approximation of the geometry. That is, the \(L_2\)-norms are approximated as

Order of accuracy is estimated as

where \(e_i\) denotes an error corresponding to mesh size \(h_i\).
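As a small illustration, the estimate can be computed from a sequence of errors as below. The sketch assumes the usual two-grid formula \(\log (e_i/e_{i+1})/\log (h_i/h_{i+1})\), and the error values are synthetic, not results from the paper.

```python
import math

def estimated_orders(h, e):
    """Observed convergence orders between consecutive mesh sizes h[i] > h[i+1]."""
    return [
        math.log(e[i] / e[i + 1]) / math.log(h[i] / h[i + 1])
        for i in range(len(e) - 1)
    ]

h = [0.1, 0.05, 0.025]
e = [2.0 * hi ** 3 for hi in h]  # synthetic errors behaving like C * h^3
print(estimated_orders(h, e))
```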

Time-stepping is performed with a classical fourth order explicit Runge–Kutta, after rewriting the system (44) as a first order system in time. A time step, \(\tau \), of size

is used. During the time-stepping we need to solve a system involving the mass matrix. When using higher order elements the condition number of the mass matrix is large, so an iterative method would require many iterations. However, we can approximate the integrals in the mass matrix using Gauss-Lobatto quadrature. This choice of reduced integration has the benefit that the mass matrix becomes almost diagonal. All off-diagonal entries in the mass matrix are related to degrees of freedom close to the immersed boundary. Since these are relatively few, it is feasible to use a direct solver.
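The effect of the Gauss-Lobatto quadrature on an uncut element can be checked in one dimension. The sketch below is an illustration under assumptions (a single reference element \([-1,1]\), helper names chosen for this example): it builds the Lagrange basis on the Gauss-Lobatto nodes and compares the mass matrix integrated with the same nodes against the exactly integrated one.

```python
import numpy as np
from numpy.polynomial import legendre

def gauss_lobatto(p):
    """Nodes and weights of the (p + 1)-point Gauss-Lobatto rule on [-1, 1], p >= 1."""
    Pp = legendre.Legendre.basis(p)
    interior = Pp.deriv().roots()  # interior nodes are the roots of P_p'
    x = np.concatenate(([-1.0], np.sort(interior.real), [1.0]))
    w = 2.0 / (p * (p + 1) * Pp(x) ** 2)
    return x, w

def lagrange_basis(nodes, x):
    """Matrix B[q, i] = value of the i-th Lagrange polynomial at the point x[q]."""
    B = np.ones((len(x), len(nodes)))
    for i, xi in enumerate(nodes):
        for j, xj in enumerate(nodes):
            if i != j:
                B[:, i] *= (x - xj) / (xi - xj)
    return B

def element_mass(p, lumped):
    """Reference-element mass matrix, with Gauss-Lobatto (lumped) or exact quadrature."""
    nodes, w_gll = gauss_lobatto(p)
    if lumped:
        xq, wq = nodes, w_gll                 # quadrature points coincide with the nodes
    else:
        xq, wq = legendre.leggauss(p + 1)     # exact for the degree-2p integrands
    B = lagrange_basis(nodes, xq)
    return B.T @ (wq[:, None] * B)

p = 3
print(np.round(element_mass(p, lumped=True), 4))   # diagonal
print(np.round(element_mass(p, lumped=False), 4))  # full
```

Because each Lagrange basis function vanishes at all quadrature points except its own node, the reduced-integration matrix is diagonal on uncut elements; in the immersed method only elements near the boundary, where other quadrature rules and the penalty terms enter, contribute off-diagonal entries.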

The library deal.II [1] was used to implement the method.

3.1 Standard Reference Problem with Aligned Boundary

It is relevant to compare some of the properties of the mass and stiffness matrices with standard (non-immersed) finite elements. The unstabilized mass and stiffness matrices were computed on a rectangular grid with size \([-1.5,1.5] \times [-1.5,1.5]\), with Neumann boundary conditions. As for the immersed case, quadrilateral Lagrange elements with Gauss-Lobatto nodes were used. The computed CFL-number is shown in Table 1. The CFL-number was found by computing the largest eigenvalue of (48) and using this in (47). For a given p the value in Table 1 is the mean value when calculating the CFL-number over a number of grid sizes. The condition number of the mass matrix is shown in Fig. 7 and the minimal and maximal eigenvalues of the mass matrix are shown in Fig. 8. Since all eigenvalues should be proportional to \(h^2\), the eigenvalues have been scaled by \(h^{-2}\) for easier comparison.

3.2 An Immersed Inner Problem

Let \(\varOmega \) be a disk domain, centered at the origin, with radius \(R=1\), and enforce a homogeneous Dirichlet boundary condition along the boundary

Let \(J_0\) denote the 0th order Bessel-function and let \(\alpha _n\) denote its nth zero. By starting from initial conditions:

we can calculate the error in our numerical solution with respect to the analytic solution:

Let \(n = 3\). A few snapshots of the numerical solution are shown in Fig. 9. The problem was solved with the given method until an end-time, \(t_f \), corresponding to three periods:

At the end-time the errors were computed.

The calculated errors and estimated orders of accuracy for the different element orders are shown in Tables 2, 3 and 4. The order of accuracy for each error agrees quite well with what is expected from (33)–(35).

Computed CFL-numbers for different element orders are shown in Table 1. The values were computed according to (47). We see that the CFL-number is essentially the same as for the non-immersed case. In the same way as for the non-immersed case, the values in Table 1 are the mean values over a number of grid sizes. However, the CFL-number only varied slightly when varying the grid size. By inserting the values in Table 1 into (46) one can see that it would have been possible to use a larger time-step than the one in (94).

How the condition number of the mass matrix depends on the grid size is shown in Fig. 7, for the different orders of p. We see that the condition numbers are essentially constant when refining h, in agreement with (25). We also see that the condition numbers increase extremely rapidly when increasing the polynomial degree, as predicted by Lemma 6. It is also clear from Fig. 7 that the condition number increases much faster than in the non-immersed case. The dashed lines in Fig. 7 denote the function CP(p), where P is the function from (80). The constant C was chosen so that CP(1) agreed with the mean (with respect to h) of the condition numbers for \(V_h^1\). The estimate from Lemma 6 is fairly reasonable for the tested polynomial orders. It does, however, appear to be slightly too pessimistic.

The minimal and maximal eigenvalues for the different polynomial orders and refinements are seen in Fig. 8. As can be seen, the scaled eigenvalues are essentially constant with respect to h. Thus the dependence on h is in agreement with the theoretical considerations in Sect. 2.4. We see that the minimal eigenvalues decrease quite fast when increasing the polynomial degree, and that they are substantially smaller than in the non-immersed case. The maximal eigenvalues also decrease but much slower than in the non-immersed case.

3.3 An Immersed Outer Problem

Consider instead an outer problem with the geometry depicted in Fig. 10. The star shaped geometry is the zero contour of the following level set function

where \((r,\theta )\) are the polar coordinates, and \(R=0.5\), \(R_0=0.1\), \(n=5\). So our domain \(\varOmega \) is given by

Starting from zero initial conditions

we prescribe a homogeneous Neumann boundary condition on the internal boundary

and Dirichlet boundary conditions on the external boundaries

Here, \(g_D\) is the function

where we have chosen \(\sigma =0.25\), \(t_c=3\). A few snapshots of the numerical solution are seen in Fig. 11.

Here, we do not have an expression for the analytic solution, so when computing the errors we compare against a reference solution, \(u_{\text {ref}}\). The reference solution was computed on a grid twice as fine as the finest grid that we present errors for.

The computed errors after solving to the end time \(t_f =4\) are shown in Tables 5, 6 and 7. We see that the convergence is at least \(h^{p+1}\) for the \(L_2(\varOmega )\)-error and \(h^{p}\) for the \(H^1(\varOmega )\)-error. The last column shows the error in the Neumann boundary condition, which converges close to what is expected: \(h^p\).

4 Discussion

The results in Sects. 3.2 and 3.3 show that it is possible to solve the wave equation and obtain up to 4th order convergence. In particular, it is also promising that the CFL-condition is not stricter than for the non-immersed case. However, both the theoretical results in Lemma 6 and the results in Sect. 3.2 show that there are problems with the conditioning of the mass matrix. It should be emphasized that even if the added stabilization creates some new problems it is by far better than using no stabilization at all. With the added stabilization the method can be proved to be stable, which is essential.

It would, of course, be advantageous if one were able to create a stabilization which does not lead to conditioning problems. However, the prospects for creating a good preconditioner for the mass matrix are rather good, since the stabilization maintains the symmetry of the mass matrix and since one obtains bounds on its spectrum from the analysis.

The choice of the weights in (85) was based on hand-waving arguments and can, therefore, be criticized. We have tried other choices of weights but have not presented the results here. This is mainly because they give similar results and we have no reason to believe that there exists a choice which makes the condition number significantly better.

Notes

1. In particular, see (4.6.4) and (4.6.5) in Theorem 4.76, together with the reasoning leading to Corollary 3.94.

References

1. Arndt, D., Bangerth, W., Davydov, D., Heister, T., Heltai, L., Kronbichler, M., Maier, M., Pelteret, J.P., Turcksin, B., Wells, D.: The deal.II library, version 8.5. J. Numer. Math. (2017). https://doi.org/10.1515/jnma-2016-1045

2. Burman, E.: Ghost penalty. C. R. Math. 348(21–22), 1217–1220 (2010). https://doi.org/10.1016/j.crma.2010.10.006

3. Burman, E., Claus, S., Hansbo, P., Larson, M.G., Massing, A.: CutFEM: discretizing geometry and partial differential equations. Int. J. Numer. Methods Eng. 104(7), 472–501 (2015). https://doi.org/10.1002/nme.4823

4. Burman, E., Hansbo, P.: Fictitious domain finite element methods using cut elements: II. A stabilized Nitsche method. Appl. Numer. Math. 62(4), 328–341 (2012). https://doi.org/10.1016/j.apnum.2011.01.008

5. Burman, E., Hansbo, P., Larson, M.G., Zahedi, S.: Cut finite element methods for coupled bulk-surface problems. Numer. Math. 133(2), 203–231 (2016). https://doi.org/10.1007/s00211-015-0744-3

6. Hansbo, A., Hansbo, P.: An unfitted finite element method, based on Nitsche’s method, for elliptic interface problems. Comput. Methods Appl. Mech. Eng. 191(47), 5537–5552 (2002). https://doi.org/10.1016/S0045-7825(02)00524-8

7. Hansbo, P., Larson, M., Zahedi, S.: A cut finite element method for a Stokes interface problem. Appl. Numer. Math. 85, 90–114 (2014). https://doi.org/10.1016/j.apnum.2014.06.009

8. Hansbo, P., Larson, M.G., Larsson, K.: Cut finite element methods for linear elasticity problems. In: Bordas, S.P.A., Burman, E., Larson, M.G., Olshanskii, M.A. (eds.) Geometrically Unfitted Finite Element Methods and Applications, pp. 25–63. Springer, Cham (2017)

9. Hansbo, P., Larson, M.G., Zahedi, S.: Characteristic cut finite element methods for convection–diffusion problems on time dependent surfaces. Comput. Methods Appl. Mech. Eng. 293, 431–461 (2015). https://doi.org/10.1016/j.cma.2015.05.010

10. Johansson, A., Larson, M.G.: A high order discontinuous Galerkin Nitsche method for elliptic problems with fictitious boundary. Numer. Math. 123(4), 607–628 (2013). https://doi.org/10.1007/s00211-012-0497-1

11. Johansson, A., Larson, M.G., Logg, A.: High order cut finite element methods for the Stokes problem. Adv. Model. Simul. Eng. Sci. 2(1), 24 (2015). https://doi.org/10.1186/s40323-015-0043-7

Kummer, F.: Extended discontinuous Galerkin methods for two-phase flows: the spatial discretization. Int. J. Numer. Methods Eng. 109(2), 259–289 (2017). https://doi.org/10.1002/nme.5288

Lehrenfeld, C., Reusken, A.: Optimal preconditioners for Nitsche-XFEM discretizations of interface problems. Numer. Math. pp. 1–20 (2016). https://doi.org/10.1007/s00211-016-0801-6

Lehrenfeld, C., Reusken, A.: Analysis of a high-order unfitted finite element method for elliptic interface problems. IMA J. Numer. Anal. 38(3), 1351–1387 (2018). https://doi.org/10.1093/imanum/drx041

Massing, A., Larson, M.G., Logg, A., Rognes, M.E.: A stabilized Nitsche fictitious domain method for the Stokes problem. J. Sci. Comput. 61(3), 604–628 (2014). https://doi.org/10.1007/s10915-014-9838-9

Müller, B., Krämer-Eis, S., Kummer, F., Oberlack, M.: A high-order discontinuous Galerkin method for compressible flows with immersed boundaries. Int. J. Numer. Methods Eng. (2016). https://doi.org/10.1002/nme.5343

Nitsche, J.: Über ein Variationsprinzip zur Lösung von Dirichlet-Problemen bei Verwendung von Teilräumen, die keinen Randbedingungen unterworfen sind. Abh. Math. Sem. Univ. Hambg. 36, 9–15 (1971). https://doi.org/10.1007/BF02995904

Reusken, A.: Analysis of an extended pressure finite element space for two-phase incompressible flows. Comput. Vis. Sci. 11(4–6), 293–305 (2008). https://doi.org/10.1007/s00791-008-0099-8

Saye, R.I.: High-order quadrature methods for implicitly defined surfaces and volumes in hyperrectangles. SIAM J. Sci. Comput. 37(2), A993–A1019 (2015). https://doi.org/10.1137/140966290

Schwab, C.: p- and hp- Finite Element Methods. Oxford University Press, Oxford (1998)

Sticko, S., Kreiss, G.: A stabilized Nitsche cut element method for the wave equation. Comput. Methods Appl. Mech. Eng. 309, 364–387 (2016). https://doi.org/10.1016/j.cma.2016.06.001

Warburton, T., Hesthaven, J.: On the constants in hp-finite element trace inverse inequalities. Comput. Methods Appl. Mech. Eng. 192(25), 2765–2773 (2003). https://doi.org/10.1016/S0045-7825(03)00294-9

Acknowledgements

Open access funding provided by Uppsala University.

Additional information

This research was supported by the Swedish Research Council Grant No. 2014-6088.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sticko, S., Kreiss, G. Higher Order Cut Finite Elements for the Wave Equation. J Sci Comput 80, 1867–1887 (2019). https://doi.org/10.1007/s10915-019-01004-2

DOI: https://doi.org/10.1007/s10915-019-01004-2