Abstract

In this paper we consider a problem, called convex projection, of projecting a convex set onto a subspace. We will show that to a convex projection one can assign a particular multi-objective convex optimization problem such that the solution to that problem also solves the convex projection (and vice versa), which is analogous to the result in the polyhedral convex case considered in Löhne and Weißing (Math Methods Oper Res 84(2):411–426, 2016). In practice, however, one can only compute approximate solutions in the (bounded or self-bounded) convex case, which solve the problem up to a given error tolerance. We will show that for approximate solutions a similar connection can be proven, but the tolerance level needs to be adjusted. That is, an approximate solution of the convex projection solves the multi-objective problem only with an increased error. Similarly, an approximate solution of the multi-objective problem solves the convex projection with an increased error. In both cases the tolerance is increased proportionally to a multiplier. These multipliers are deduced and shown to be sharp. These results make it possible to compute approximate solutions to a convex projection problem by computing approximate solutions to the corresponding multi-objective convex optimization problem, for which algorithms exist in the bounded case. For completeness, we will also investigate the potential generalization of the following result to the convex case. In Löhne and Weißing (Math Methods Oper Res 84(2):411–426, 2016), it has been shown for the polyhedral case how to construct a polyhedral projection associated with any given vector linear program and how to relate their solutions. This in turn yields an equivalence between polyhedral projection, multi-objective linear programming and vector linear programming. We will show that only some parts of this result can be generalized to the convex case, and discuss the limitations.

1 Introduction

Let us start with a short motivation, describing a field of research where convex projection problems, the main object of this paper, arise. A dynamic programming principle, called a set-valued Bellman principle, for a particular vector optimization problem has been proposed in [4], and has also been applied in [3, 5] to other multivariate problems. Often, dynamic optimization problems depend on some parameter, in the above examples the initial capital. Then, the dynamic programming principle leads to a sequence of parametrized vector optimization problems that need to be solved recursively backwards in time. A parametrized vector optimization problem can equivalently be written as a projection problem. If the problem happens to be polyhedral, the theory of [8] can be applied, which allows one to rewrite the polyhedral projection as a particular multi-objective linear program, and thus, known solvers can be used to solve the original parametrized vector optimization problem for all values of the parameter. This method has been used e.g. in [5]. However, in general the problem is convex, and not necessarily polyhedral, and thus leads to a convex projection problem.

The aim of this paper is to develop the theory needed to treat these problems. That is, to define the problem of convex projection, find appropriate solution concepts and, in analogy to the polyhedral case, relate those to a multi-objective convex problem, for which, at least in the bounded case, solvers are already available that compute approximate solutions, see [1, 2, 7]. It is left for future research to develop algorithms that also solve unbounded multi-objective convex problems, which would, by the results of this paper, also make it possible to solve certain unbounded convex projection problems. This, in turn, would then make it possible to solve the above-mentioned problems arising from multivariate dynamic programming, as they are by construction unbounded. Thus, the present research can be seen as a first step in that direction, but might also be of independent interest.

Let us start with a short description of the known results in the polyhedral case. Polyhedral projection is a problem of projecting a polyhedral convex set, given by a finite collection of linear inequalities, onto a subspace. This problem is considered in [8], where an equivalence between polyhedral projection, multi-objective linear programming and vector linear programming is proven.

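The following problem is called a polyhedral projection:

$$\begin{aligned} \text {compute } Y = \{ y \in \mathbb {R}^m \; \vert \; \exists x \in \mathbb {R}^n: \; Gx + Hy \ge h \}, \end{aligned}$$

where the matrices \(G \in \mathbb {R}^{k \times n}, H \in \mathbb {R}^{k \times m}\) and a vector \(h \in \mathbb {R}^k\) define a polyhedral feasible set \(S = \{ (x,y) \in \mathbb {R}^n \times \mathbb {R}^m \; \vert \; Gx + Hy \ge h \}\) to be projected. To the above polyhedral projection corresponds a multi-objective linear program

$$\begin{aligned} \min \begin{pmatrix} y \\ -\mathbf {1}^{\mathsf {T}} y \end{pmatrix} \text { with respect to } \le _{\mathbb {R}^{m+1}_+} \text { subject to } Gx + Hy \ge h. \end{aligned}$$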

In [8] it is proven that every solution of the associated multi-objective linear program is also a solution of the polyhedral projection (and vice versa) and, moreover, a solution exists whenever the polyhedral projection is feasible. This makes it possible to solve polyhedral projection problems using the existing solvers for multi-objective (vector) linear programs such as [9]. Furthermore, [8] shows how to construct a polyhedral projection associated with any given vector linear program. A solution of the given vector linear program can then, under some assumptions, be recovered from a solution of the associated polyhedral projection. This yields the aforementioned equivalence between polyhedral projection, multi-objective linear programming and vector linear programming.

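In this paper we are interested in a projection problem, where the feasible set \(S \subseteq \mathbb {R}^n \times \mathbb {R}^m\) is convex, but not necessarily polyhedral. Our problem, which we call a convex projection, is of the form

$$\begin{aligned} \text {compute } Y = \{ y \in \mathbb {R}^m \; \vert \; \exists x \in \mathbb {R}^n: \; (x, y) \in S \}. \end{aligned} \tag{CP}$$

By computing the set Y we mean computing a collection of feasible points that (exactly or approximately) span the set Y. The question we want to answer is whether there exists a similar connection between convex projection, multi-objective convex optimization and convex vector optimization as there is in the linear (polyhedral) case. Inspired by [8], we consider the multi-objective convex problem

$$\begin{aligned} \min \begin{pmatrix} y \\ -\mathbf {1}^{\mathsf {T}} y \end{pmatrix} \text { with respect to } \le _{\mathbb {R}^{m+1}_+} \text { subject to } (x, y) \in S. \end{aligned} \tag{1}$$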

Just as in the polyhedral case, there is a close link between the problems (CP) and (1). Relating solutions of the two problems, however, becomes somewhat more involved. Namely, we need to make clear which solution concept we work with and also consider the boundedness of the problem. The notion of an exact solution does exist, but it is mostly a theoretical concept as it usually does not consist of finitely many points. In practice, instead, one obtains approximate solutions (i.e. finite \(\varepsilon \)-solutions), which solve the problem up to a given error tolerance. We consider exact solutions, as well as approximate solutions of bounded and self-bounded problems. Precise definitions will be given in the following sections. The results for exact solutions (which do not assume (self-)boundedness) are parallel to those for the linear case considered in [8]: we obtain an equivalence between exact solutions of the convex projection (CP) and the associated multi-objective problem (1). For approximate solutions (of bounded or self-bounded problems) a connection also exists, but the tolerance needs to be adjusted. That is, an approximate solution of the convex projection solves the multi-objective problem only with an increased error. Similarly, an approximate solution of the multi-objective problem solves the convex projection with an increased error. In both cases the tolerance is increased proportionally to a multiplier that is roughly equal to the dimension of the problem.

A problem similar to (CP) was considered in [12], where an outer approximation Benson algorithm was adapted to solve it under some compactness assumptions. Here, we take a more theoretical approach to relate the different problem classes. For this reason we also consider a general convex vector optimization problem. There does exist an associated convex projection, which spans (up to a closure) the upper image of the vector problem. However, instead of having a correspondence between solutions of the two problems, we can only connect (exact or approximate) solutions of the associated projection to (exact or approximate) infimizers of the vector optimization.

The paper is organized as follows: Sect. 2.1 contains preliminaries and introduces the notation, Sect. 2.2 summarizes the solution concepts and properties of a convex vector optimization problem. In Sect. 3.1, we define a convex projection and introduce the corresponding solution concepts. Section 3.2 contains the main results, the connection between the convex projection and an associated multi-objective problem is established. Section 4 formulates a convex projection corresponding to a given convex vector optimization problem. Finally, in Sect. 5 the derived results are considered under a slightly different solution concept based on the Hausdorff distance, which was recently proposed in [1].

2 Preliminaries

2.1 Notation

For a set \(A \subseteq \mathbb {R}^m\) we denote by \({\text {conv}}A, {\text {cone}}A, {\text {cl}}A\) and \({\text {int}}A\) the convex hull, the conical hull, the closure and the interior of A, respectively. The recession cone of the set \(A \subseteq \mathbb {R}^m\) is \(A_{\infty } = \{ y \in \mathbb {R}^m \; \vert \; \forall x \in A, \lambda \ge 0 : \; x + \lambda y \in A \}\). A cone \(C \subseteq \mathbb {R}^m\) is solid if it has a non-empty interior, it is pointed if \(C \cap -C = \{0\}\) and it is non-trivial if \(\{0\} \subsetneq C \subsetneq \mathbb {R}^m\). A non-trivial pointed convex cone \(C\subseteq \mathbb {R}^m\) defines a partial ordering on \(\mathbb {R}^m\) via \(q^{(1)} \le _C q^{(2)}\) if and only if \(q^{(2)} - q^{(1)} \in C\). Given a non-trivial pointed convex cone \(C\subseteq \mathbb {R}^m\) and a convex set \(\mathscr {X} \subseteq \mathbb {R}^n\), a function \(\varGamma : \mathscr {X} \rightarrow \mathbb {R}^m\) is C-convex, if for all \(x^{(1)}, x^{(2)} \in \mathscr {X}\) and \(\lambda \in [0,1]\) it holds \(\varGamma (\lambda x^{(1)} + (1-\lambda ) x^{(2)}) \le _C \lambda \varGamma (x^{(1)}) + (1-\lambda ) \varGamma (x^{(2)})\). The Minkowski sum of two sets \(A, B \subseteq \mathbb {R}^m\) is, as usual, denoted by \(A + B = \{ a + b \; \vert \; a \in A, b \in B\}\). As a short hand notation we will denote the Minkowski sum of a set \(A \subseteq \mathbb {R}^m\) and the negative of a cone \(C \subseteq \mathbb {R}^m\) by \(A - C = A + (-C)\). The positive dual cone of C is \(C^+ = \{ w \in \mathbb {R}^m \vert w^{\mathsf {T}} c \ge 0 \forall c \in C \}\).

Throughout the paper we fix \(p \in [1, \infty ]\) and denote by \(\Vert \cdot \Vert \) the p-norm and by \(B_{\varepsilon } = \{ z \in \mathbb {R}^m \; \vert \; \Vert z \Vert \le \varepsilon \}\) the closed \(\varepsilon \)-ball around the origin in this norm. The standard basis vectors are denoted by \(e^{(1)}, \dots , e^{(m)}\). In the following, we work with Euclidean spaces of various dimensions, mainly with \(\mathbb {R}^m\) and \(\mathbb {R}^{m+1}\). In order to keep the notation as simple as possible we do not explicitly denote the dimensions of most vectors (e.g. \(e^{(1)}\)) or sets (e.g. \(B_{\varepsilon }\)), as they should be clear from the context. One exception is the vector of ones, where we denote \(\mathbf {1} \in \mathbb {R}^{m}\) and \(\mathbb {1} \in \mathbb {R}^{m+1}\) to avoid any confusion.

We use three projection mappings, \({\text {proj}}_y: \mathbb {R}^{n} \times \mathbb {R}^m \rightarrow \mathbb {R}^{m}, {\text {proj}}_x: \mathbb {R}^{n} \times \mathbb {R}^m \rightarrow \mathbb {R}^{n}\) and \({\text {proj}}_{-1}: \mathbb {R}^{m+1} \rightarrow \mathbb {R}^{m}\). The mapping \({\text {proj}}_y\), given by the matrix \({\text {proj}}_y = \begin{pmatrix} 0&\quad I \end{pmatrix},\) projects a vector \((x, y) \in \mathbb {R}^{n} \times \mathbb {R}^m\) onto \(y \in \mathbb {R}^m\). The mapping \({\text {proj}}_x\), given by the matrix \({\text {proj}}_x = \begin{pmatrix} I&\quad 0 \end{pmatrix},\) projects a vector \((x, y) \in \mathbb {R}^{n} \times \mathbb {R}^m\) onto \(x \in \mathbb {R}^n\). The mapping \({\text {proj}}_{-1}\) drops the last element of a vector from \(\mathbb {R}^{m+1}\).

2.2 Convex vector optimization problem

In this section we recall the definition of a convex vector optimization problem, its properties and solution concepts from [7, 13], which are adopted within this work, and which are based on the lattice approach to vector optimization [6].

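A convex vector optimization problem is

$$\begin{aligned} \text {minimize } \varGamma (x) \text { with respect to } \le _C \text { subject to } x \in \mathscr {X}, \end{aligned} \tag{CVOP}$$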

where \(C \subseteq \mathbb {R}^m\) is a non-trivial, pointed, solid, convex ordering cone, \(\mathscr {X} \subseteq \mathbb {R}^n\) is a convex set and the objective function \(\varGamma : \mathscr {X} \rightarrow \mathbb {R}^m\) is C-convex. The convex feasible set \(\mathscr {X}\) is usually specified via a collection of convex inequalities. A convex vector optimization problem is called a multi-objective convex problem if the ordering cone is the natural ordering cone, i.e. if \(C=\mathbb {R}^m_+\). A particular multi-objective convex problem that helps in solving a convex projection problem will be considered in Sect. 3.2. The general convex vector optimization problem and its connection to convex projections will be treated in Sect. 4.

The image of the feasible set \(\mathscr {X}\) is defined as \(\varGamma [\mathscr {X}] = \{ \varGamma (x) \; \vert \; x \in \mathscr {X} \}\). The closed convex set

$$\begin{aligned} \mathscr {G} = {\text {cl}}( \varGamma [\mathscr {X}] + C ) \end{aligned}$$

is called the upper image of (CVOP). A feasible point \(\bar{x} \in \mathscr {X}\) is a minimizer of (CVOP) if it holds \((\{\varGamma (\bar{x})\} - C \backslash \{0\}) \cap \varGamma [\mathscr {X}] = \emptyset \). It is a weak minimizer if \((\{\varGamma (\bar{x})\} - {\text {int}}C) \cap \varGamma [\mathscr {X}] = \emptyset \).

Definition 1

(see [13]) The problem (CVOP) is bounded if for some \(q \in \mathbb {R}^m\) it holds \(\mathscr {G} \subseteq \{q\} + C\). If (CVOP) is not bounded, it is called unbounded. The problem (CVOP) is self-bounded if \(\mathscr {G} \ne \mathbb {R}^m\) and for some \(q \in \mathbb {R}^m\) it holds \(\mathscr {G} \subseteq \{q\} + \mathscr {G}_{\infty }\).

Self-boundedness is related to the tractability of the problem, i.e., to the existence of polyhedral inner and outer approximations to the Pareto frontier of (CVOP) such that the Hausdorff distance between the two is finite, see [13]. Self-boundedness allows one to turn an unbounded problem into a bounded one by replacing the ordering cone C by \(\mathscr {G}_{\infty }\). Note that a bounded problem is a special case of a self-bounded one. The following relationship holds.

Lemma 1

A self-bounded (CVOP) is bounded if and only if \(\mathscr {G}_{\infty } = {\text {cl}}C\).

Proof

Boundedness of (CVOP) implies \(\mathscr {G}_{\infty } \subseteq {\text {cl}}C\), together with \( {\text {cl}}C\subseteq \mathscr {G}_{\infty }\) one obtains \(\mathscr {G}_{\infty } = {\text {cl}}C\). For the reverse, note that self-boundedness and \(\mathscr {G}_{\infty } = {\text {cl}}C\) imply \(\mathscr {G} \subseteq \{q\} + {\text {cl}}C\). To prove the boundedness of (CVOP), one needs to show that also \(\mathscr {G} \subseteq \{\bar{q}\} + C\) for some \(\bar{q} \in \mathbb {R}^m\). This follows from \({\text {cl}}C \subseteq \{-\delta c\} + C\) for arbitrary \(\delta >0\) and \(c \in {\text {int}}C\), which holds as C is a solid convex cone. Thus one can set \(\bar{q} = q-\delta c\). \(\square \)
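The inclusion \({\text {cl}}C \subseteq \{-\delta c\} + C\) used in the last step can be unpacked as follows. This is a sketch of the standard argument, relying on the fact that for a solid convex cone the sum of a point of the closure and a point of the interior lies in the interior.

```latex
\begin{aligned}
x \in \operatorname{cl} C,\; c \in \operatorname{int} C,\; \delta > 0
  &\;\Longrightarrow\; x + \delta c \in \operatorname{cl} C + \operatorname{int} C \subseteq \operatorname{int} C \subseteq C \\
  &\;\Longrightarrow\; x \in \{-\delta c\} + C .
\end{aligned}
```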

Boundedness of the problem (CVOP) is defined as boundedness of the upper image \(\mathscr {G}\) with respect to the ordering cone C. According to the above proof, for a solid cone C boundedness of \(\mathscr {G}\) with respect to C, with respect to \({\text {cl}}C\) and with respect to \({\text {int}}C\) are equivalent.

(Self-)boundedness is important for the definition of approximate solutions. In the following, a normalized direction \(c \in {\text {int}}C\), i.e. \(\Vert c \Vert = 1\), and a tolerance \(\varepsilon > 0\) are fixed.

Definition 2

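(see [7, 13]) A set \(\bar{X} \subseteq \mathscr {X}\) is an infimizer of (CVOP) if it satisfies

$$\begin{aligned} {\text {cl}}{\text {conv}}( \varGamma [\bar{X}] + C ) = \mathscr {G}. \end{aligned}$$

An infimizer \(\bar{X}\) of (CVOP) is called a (weak) solution if it consists of (weak) minimizers only. A nonempty finite set \(\bar{X} \subseteq \mathscr {X}\) is a finite \(\varepsilon \)-infimizer of a bounded problem (CVOP) if

$$\begin{aligned} {\text {conv}}\varGamma [\bar{X}] + C - \varepsilon \{c\} \supseteq \mathscr {G}. \end{aligned}$$

A nonempty finite set \(\bar{X} \subseteq \mathscr {X}\) is a finite \(\varepsilon \)-infimizer of a self-bounded problem (CVOP) if

$$\begin{aligned} {\text {conv}}\varGamma [\bar{X}] + \mathscr {G}_{\infty } - \varepsilon \{c\} \supseteq \mathscr {G}. \end{aligned}$$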

A finite \(\varepsilon \)-infimizer \(\bar{X}\) of (CVOP) is called a finite (weak) \(\varepsilon \)-solution if it consists of (weak) minimizers only.

There are algorithms such as [1, 2, 7] for finding finite \(\varepsilon \)-solutions of a bounded (CVOP) (under some assumptions, e.g. compact feasible set, continuous objective). The definition of a finite \(\varepsilon \)-solution in the self-bounded case is more of a theoretical concept, as it assumes that the recession cone of the upper image is known. Nevertheless, we consider also this case, as it can be treated jointly with the bounded case. To the best of our knowledge, there is, so far, no algorithm for solving an unbounded (CVOP), which also means, there is no definition yet of an approximate solution in the not self-bounded case.

The definition of a finite \(\varepsilon \)-solution in [1] differs slightly from the one used here, which is based on [7]. However, the structure of the main results (Theorems 2, 3, 4 and 5) remains valid under the definition from [1] after some minor adjustments, which are given in Sect. 5.

In this paper we will often refer to a solution as an exact solution and to a finite \(\varepsilon \)-solution as an approximate solution.

3 Convex projections

3.1 Definitions

The object of interest is the convex counterpart of the polyhedral projection, that is, the problem of projecting a convex set onto a subspace. Thus, let a convex set \(S \subseteq \mathbb {R}^n \times \mathbb {R}^m\) be given. Just as the feasible set \(\mathscr {X}\) of (CVOP), it could be specified via a collection of convex inequalities. The aim is to project the feasible set S onto its y-component, that is to

$$\begin{aligned} \text {compute } Y = \{ y \in \mathbb {R}^m \; \vert \; \exists x \in \mathbb {R}^n: \; (x, y) \in S \}. \end{aligned} \tag{CP}$$

First, we introduce the properties of boundedness and self-boundedness for the convex projection problem.

Definition 3

The convex projection (CP) is called bounded if the set Y is bounded, i.e. \(Y \subseteq B_K\) for some \(K > 0\). The convex projection (CP) is called unbounded if it is not bounded. The convex projection (CP) is called self-bounded if \(Y \ne \mathbb {R}^m\) and there exist finitely many points \(y^{(1)}, \dots , y^{(k)} \in \mathbb {R}^m\) such that

$$\begin{aligned} Y \subseteq {\text {conv}}\{y^{(1)}, \dots , y^{(k)}\} + ({\text {cl}}Y)_{\infty }. \end{aligned} \tag{4}$$

The reader can justifiably question the differences between Definition 3 for the projection problem and Definition 1 for the vector optimization problem. As far as bounded problems are concerned, these differences are intuitively reasonable: Each of the two problems is represented by a set, (CVOP) by the upper image \(\mathscr {G}\) and (CP) by the set Y. The upper image \(\mathscr {G}\) is a so-called closed upper set with respect to the ordering cone C. Boundedness of the problem (CVOP), therefore, corresponds to boundedness of \(\mathscr {G}\) with respect to the ordering cone, in particular as an upper set cannot be topologically bounded. On the other hand, the set Y is not an upper set and it can be bounded in the usual topological sense. Furthermore, as no ordering cone was specified to define the convex projection, boundedness with respect to the ordering cone is not the appropriate concept here. The difference for the self-bounded problems is less intuitive. We discuss in detail in Sect. 3.2.1 why for convex projections finitely many points are necessary, while for convex vector optimization problems it is enough to consider one point in the definition of self-boundedness, which provides a motivation for the definition we proposed here. Finally, we will prove in Proposition 1 below that the definitions of boundedness and self-boundedness in Definition 3 for the projection problem and Definition 1 for a particular multi-objective convex optimization problem correspond one-to-one to each other.

Now, we give a definition of solutions, both exact and approximate, for the convex projection. Since we aim towards relating the projection to vector optimization, we only define approximate solutions for the bounded and the self-bounded case. Let a tolerance level \(\varepsilon > 0\) be fixed.

Definition 4

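A set \(\bar{S} \subseteq S\) is called a solution of (CP) if it satisfies

$$\begin{aligned} Y \subseteq {\text {cl}}{\text {conv}}{\text {proj}}_y [\bar{S}]. \end{aligned} \tag{5}$$

A non-empty finite set \(\bar{S} \subseteq S\) is called a finite \(\varepsilon \)-solution of a bounded problem (CP) if

$$\begin{aligned} {\text {conv}}{\text {proj}}_y [\bar{S}] + B_{\varepsilon } \supseteq Y. \end{aligned} \tag{6}$$

A non-empty finite set \(\bar{S} \subseteq S\) is called a finite \(\varepsilon \)-solution of a self-bounded problem (CP) if

$$\begin{aligned} {\text {conv}}{\text {proj}}_y [\bar{S}] + ({\text {cl}}Y)_{\infty } + B_{\varepsilon } \supseteq Y. \end{aligned} \tag{7}$$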
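To make the notion of a finite \(\varepsilon \)-solution in the bounded case concrete, the following Python sketch treats a hypothetical toy instance that is not taken from the paper: \(S = \{(x, y) \in \mathbb {R} \times \mathbb {R}^2 \; \vert \; x = 0, \Vert y \Vert _2 \le 1\}\) with \(p = 2\), so that Y is the unit disc. It assumes the bounded-case condition is read as \(Y \subseteq {\text {conv}}{\text {proj}}_y [\bar{S}] + B_{\varepsilon }\). For a regular k-gon inscribed in the unit circle the largest distance from a point of the disc to the polygon is \(1 - \cos (\pi /k)\), so the smallest admissible k can be found by a simple search. The function names are illustrative only.

```python
import math


def inscribed_polygon_error(k):
    """Largest Euclidean distance from the unit disc to an inscribed regular k-gon."""
    return 1.0 - math.cos(math.pi / k)


def smallest_polygon(eps):
    """Smallest number of vertices k >= 3 whose inscribed k-gon is within eps of the disc."""
    k = 3
    while inscribed_polygon_error(k) > eps:
        k += 1
    return k


def polygon_solution(k):
    """Finite candidate set S_bar: feasible points (x, y) with x = 0 and y on the unit circle."""
    return [
        (0.0, (math.cos(2 * math.pi * j / k), math.sin(2 * math.pi * j / k)))
        for j in range(k)
    ]


eps = 0.1
k = smallest_polygon(eps)      # for eps = 0.1 this gives k = 7
S_bar = polygon_solution(k)    # 7 feasible points whose y-parts span the heptagon
```

In this toy instance seven feasible points suffice for a tolerance of 0.1, while six do not, since \(1 - \cos (\pi /6) \approx 0.134\).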

3.2 An associated multi-objective convex problem

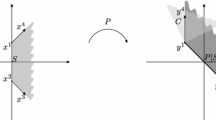

We are interested in examining the connection between convex projections and convex vector optimization. The linear counterpart suggests that multi-objective optimization might be helpful also for solving the convex projection problem. Inspired by the polyhedral case of [8] and the case of convex bodies in [12], we construct a multi-objective problem with the same feasible set S and one additional dimension of the objective space. That is, we consider the following problem

$$\begin{aligned} \min \begin{pmatrix} y \\ -\mathbf {1}^{\mathsf {T}} y \end{pmatrix} \text { with respect to } \le _{\mathbb {R}^{m+1}_+} \text { subject to } (x, y) \in S, \end{aligned} \tag{8}$$

which is a multi-objective convex optimization problem with a linear objective function and a convex feasible set S. To increase readability we add the following notations. The mappings \(P: \mathbb {R}^n \times \mathbb {R}^m \rightarrow \mathbb {R}^{m+1}\) and \(Q: \mathbb {R}^{m} \rightarrow \mathbb {R}^{m+1}\) are given by the matrices \(P = \begin{pmatrix}0 &{}\quad I \\ 0 &{}\quad - \mathbf {1}^{\mathsf {T}} \end{pmatrix}\) and \(Q = \begin{pmatrix}I \\ - \mathbf {1}^{\mathsf {T}} \end{pmatrix}\), respectively. The image of the feasible set of (8) is given by \(P[S] = \{ P (x,y) \; \vert \; (x,y) \in S \}\), its upper image is denoted by

$$\begin{aligned} \mathscr {P} = {\text {cl}}( P[S] + \mathbb {R}^{m+1}_+ ). \end{aligned}$$

The aim of this section is to establish a connection between the convex projection (CP) and the multi-objective problem (8), between their properties and their solutions. Then, a solution provided by a solver for problem (8) would lead the way to a solution to problem (CP). The following observations are immediate, most importantly the connection between the sets P[S] and Y.

Lemma 2

1. Every feasible point \((x, y) \in S\) is a minimizer of (8).

2. For the sets Y and P[S] it holds \(Y = {\text {proj}}_{-1} [P[S]]\) and \(P[S] = Q[Y].\)

Proof

The first claim follows from the form of the matrix P. Since \(Y = {\text {proj}}_y [S]\), the second claim follows from the matrix equalities \({\text {proj}}_y = {\text {proj}}_{-1} \cdot P\) and \(P = Q \cdot {\text {proj}}_y\). \(\square \)
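The matrix identities used in this proof can be checked mechanically. The following Python sketch builds \({\text {proj}}_y\), \({\text {proj}}_{-1}\), P and Q as lists of rows for illustrative dimensions (the values of n and m and all helper names are assumptions made for this example) and verifies \({\text {proj}}_y = {\text {proj}}_{-1} \cdot P\), \(P = Q \cdot {\text {proj}}_y\) and that Q is a right-inverse of \({\text {proj}}_{-1}\).

```python
def identity(k):
    """k x k identity matrix as a list of rows."""
    return [[1 if i == j else 0 for j in range(k)] for i in range(k)]


def matmul(A, B):
    """Plain matrix product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def build_maps(n, m):
    """Return proj_y, proj_{-1}, P and Q for x in R^n and y in R^m."""
    I_m = identity(m)
    proj_y = [[0] * n + row for row in I_m]   # (0 I): keeps the y-part of (x, y)
    proj_m1 = [row + [0] for row in I_m]      # drops the last entry of a vector in R^(m+1)
    Q = I_m + [[-1] * m]                      # (I; -1^T): y -> (y, -1^T y)
    P = [[0] * n + row for row in Q]          # (0 I; 0 -1^T): (x, y) -> (y, -1^T y)
    return proj_y, proj_m1, P, Q


n, m = 2, 3  # illustrative dimensions
proj_y, proj_m1, P, Q = build_maps(n, m)

assert matmul(proj_m1, P) == proj_y         # proj_y = proj_{-1} . P
assert matmul(Q, proj_y) == P               # P = Q . proj_y
assert matmul(proj_m1, Q) == identity(m)    # Q is a right-inverse of proj_{-1}
assert all(sum(col) == 0 for col in zip(*P))  # every column of P sums to zero
```

The last check shows that every image point \(P(x, y)\) has components summing to zero. One way to read the first claim is then that no image point can dominate another with respect to \(\mathbb {R}^{m+1}_+\), since a componentwise smaller vector with the same component sum must coincide with it.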

A similar observation was made in [12] about the problem studied there. Also here, convexity is not used within the proof, so the claim would hold also for non-convex projection problems.

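For convenience, we restate from Definition 2 the definition of exact and approximate solutions specifically for problem (8) with the direction \( \Vert \mathbb {1} \Vert ^{-1} \mathbb {1} \in {\text {int}}\mathbb {R}^{m+1}_+\), and applying Lemma 2 (1). A set \(\bar{S} \subseteq S\) is a solution of (8) if

$$\begin{aligned} {\text {cl}}{\text {conv}}( P[\bar{S}] + \mathbb {R}^{m+1}_+ ) = \mathscr {P}. \end{aligned} \tag{9}$$

A nonempty finite set \(\bar{S} \subseteq S\) is a finite \(\varepsilon \)-solution of a self-bounded (8) if

$$\begin{aligned} {\text {conv}}P[\bar{S}] + \mathscr {P}_{\infty } - \varepsilon \{ \Vert \mathbb {1} \Vert ^{-1} \mathbb {1} \} \supseteq \mathscr {P}. \end{aligned} \tag{10}$$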

This includes also the case of a bounded problem (8), where \(\mathscr {P}_{\infty } = \mathbb {R}^{m+1}_+\).

3.2.1 Relation of boundedness

The definition of an approximate solution and the availability of solvers (for (CVOP)) depend on whether the problem is (self-)bounded. So before we can relate approximate solutions of the two problems, we need to relate their properties with respect to boundedness. This also brings us back to the question raised in the previous section: why does our definition of a self-bounded problem differ between the projection and the vector optimization problem?

An analogy with the corresponding definition in convex vector optimization would suggest that the convex projection (CP) should be considered self-bounded if \(Y \ne \mathbb {R}^m\) and there exists a single point \(y \in \mathbb {R}^m\) such that

$$\begin{aligned} Y \subseteq \{y\} + Y_{\infty }. \end{aligned} \tag{11}$$

Instead, we proposed replacing the single point y with a convex polytope and using the recession cone of the closure of Y. This led to the following notion of self-boundedness in Definition 3: The convex projection problem (CP) is called self-bounded if \(Y \ne \mathbb {R}^m\) and there exist finitely many points \(y^{(1)}, \dots , y^{(k)} \in \mathbb {R}^m\) such that

$$\begin{aligned} Y \subseteq {\text {conv}}\{y^{(1)}, \dots , y^{(k)}\} + ({\text {cl}}Y)_{\infty }. \end{aligned}$$

Two considerations motivate the definition via (4): First, the recession cone \(Y_{\infty }\) might have an empty interior. Second, the set Y does not need to be closed. Both of these can lead to situations where the multi-objective problem (8) is self-bounded, but the projection (CP) does not satisfy (11). We illustrate this in Examples 1 and 2, where for simplicity the trivial projection, i.e. the identity, is used. In both of our examples these issues can be resolved by replacing condition (11) with condition (4). We will prove in Proposition 1 below, that this is also in general the case.

Example 1

Consider the set

and its recession cone \(Y_{\infty } = {\text {cone}}\, \{(0,1)\}\). The associated multi-objective problem (8) with the upper image

is self-bounded as \(\mathscr {P} \subseteq \{(-1,-1,-2)\} + \mathscr {P}_{\infty }\). However, the set Y does not satisfy (11) for any single point \(y \in \mathbb {R}^m\). Because of its empty interior, no shifting of the cone \(Y_{\infty }\) can cover the set Y with a non-empty interior. But already two points \(y^{(1)} = (-1, -1), \, y^{(2)} = (1, -1)\) suffice for (4).

Example 2

Consider the convex, but not closed, set

It is not bounded, but its recession cone is trivial, \(Y_{\infty } = \{0\}\). The associated multi-objective problem (8) with the upper image

is self-bounded as \(\mathscr {P} \subseteq \{(0,0,-1)\} + \mathscr {P}_{\infty }\). However, the set Y with its trivial recession cone \(Y_{\infty } = \{0\}\) clearly cannot satisfy (11). It also would not suffice to just replace the single point y in (11) by a convex polytope \({\text {conv}}\{y^{(1)}, \dots , y^{(k)}\}\), since the set Y is not bounded. But the recession cone \(({\text {cl}}Y)_{\infty } = {\text {cone}}\, \{(0,1)\}\) satisfies (4) with e.g. the pair of points \(y^{(1)} = (0,0), \, y^{(2)} = (1, 0)\).

The two considerations illustrated here were the motivation for defining self-boundedness via (4). The condition (4) can be considered as a generalization of the definition of self-boundedness found in the literature. For vector optimization problems, Definition 1 and a definition via (4) coincide because of two properties of upper images. An upper image is always a closed set and its recession cone is solid as it always contains the ordering cone.

We will now prove that, as long as one uses the more general notion of self-boundedness (Definition 3) for (CP), boundedness, self-boundedness, and unboundedness of the projection (CP) are equivalent to boundedness, self-boundedness, and unboundedness of the multi-objective problem (8), respectively.

Proposition 1

1. The convex projection (CP) is bounded if and only if the multi-objective problem (8) is bounded.

2. The convex projection (CP) is self-bounded if and only if the multi-objective problem (8) is self-bounded.

3. The convex projection (CP) is unbounded if and only if the multi-objective problem (8) is unbounded.

The proof of Proposition 1 will require the following two lemmas. The first one is a trivial observation and thus stated without proof, but comes in handy as it will also be used later. The second lemma provides a crucial connection between the recession cones of the two problems.

Lemma 3

Let \(A \subseteq \mathbb {R}^n\) be a set and \(C \subseteq \mathbb {R}^n\) be a convex cone. Then, \({\text {cl}}(A + C) = {\text {cl}}( {\text {cl}}A + C)\).
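Although the lemma is stated without proof, the argument fits in two lines. The sketch below is one possible way to see it, using only that \(a_k + c \rightarrow a + c\) whenever \(a_k \rightarrow a\).

```latex
\begin{aligned}
A + C \;\subseteq\; \operatorname{cl} A + C \;\subseteq\; \operatorname{cl}(A + C)
\quad\Longrightarrow\quad
\operatorname{cl}(A + C) \;\subseteq\; \operatorname{cl}(\operatorname{cl} A + C) \;\subseteq\; \operatorname{cl}(A + C).
\end{aligned}
```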

Lemma 4

For the recession cones of the sets Y and P[S] it holds

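For the recession cone of the upper image it holds

$$\begin{aligned} \mathscr {P}_{\infty } = Q[({\text {cl}}Y)_{\infty }] + \mathbb {R}^{m+1}_+. \end{aligned} \tag{12}$$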

Proof

The first four equalities follow from \(P[S] = Q[Y]\) (Lemma 2 (2)) and \({\text {cl}}P[S] = Q [{\text {cl}}Y]\), the matrix Q being the right-inverse of \({\text {proj}}_{-1}\) (Lemma 2 (2)) and the fact that \({\text {cl}}P[S] \subseteq Q [\mathbb {R}^m] = \{ x \in \mathbb {R}^{m+1} \vert \mathbb {1}^{\mathsf {T}} x =0\}\).

According to Lemma 3, for the upper image it holds \(\mathscr {P} = {\text {cl}}( Q [{\text {cl}}Y] + \mathbb {R}^{m+1}_+)\). The sets \(Q [{\text {cl}}Y]\) and \(\mathbb {R}^{m+1}_+\) are both closed and convex. One easily verifies that \(Q [({\text {cl}}Y)_{\infty }] \subseteq Q [\mathbb {R}^{m}] = \{ x \in \mathbb {R}^{m+1} \vert \mathbb {1}^{\mathsf {T}} x =0\}\), so \(Q [({\text {cl}}Y)_{\infty }] \cap -\mathbb {R}^{m+1}_+ = \{0\}\) and the sets \(Q [{\text {cl}}Y]\) and \(\mathbb {R}^{m+1}_+\) satisfy all assumptions of Corollary 9.1.2 of [11]. Thus, the set \(Q [{\text {cl}}Y] + \mathbb {R}^{m+1}_+\) is closed and \(( Q [{\text {cl}}Y] + \mathbb {R}^{m+1}_+)_{\infty } = Q [({\text {cl}}Y)_{\infty }] + \mathbb {R}^{m+1}_+\). \(\square \)

Proof of Proposition 1

Let us first prove the second claim.

\(\Rightarrow \): Let (CP) be self-bounded. Applying the mapping Q onto (4) and adding the standard ordering cone yields

$$\begin{aligned} P[S] + \mathbb {R}^{m+1}_+ \subseteq {\text {conv}}\{Qy^{(1)}, \dots , Qy^{(k)}\} + Q[({\text {cl}}Y)_{\infty }] + \mathbb {R}^{m+1}_+. \end{aligned}$$

For a vector \(q \in \mathbb {R}^{m+1}\), element-wise defined by \(q_{i} = \min \limits _{j=1, \dots , k} (Qy^{(j)})_{i}\) for \(i=1, \dots , m+1\), it holds \({\text {conv}}\{Qy^{(1)}, \dots , Qy^{(k)}\} \subseteq \{q\} + \mathbb {R}^{m+1}_+\), so

$$\begin{aligned} P[S] + \mathbb {R}^{m+1}_+ \subseteq \{q\} + Q[({\text {cl}}Y)_{\infty }] + \mathbb {R}^{m+1}_+ = \{q\} + \mathscr {P}_{\infty }, \end{aligned}$$

where the last equality is due to (12). Since the shifted cone \(\{q\} + \mathscr {P}_{\infty }\) is closed, self-boundedness of (8) follows.

\(\Leftarrow \): If the problem (8) is self-bounded, then there is a point \(q \in \mathbb {R}^{m+1}\) such that

$$\begin{aligned} \mathscr {P} \subseteq \{q\} + \mathscr {P}_{\infty }. \end{aligned} \tag{13}$$

Since \(Q[Y] + \mathbb {R}^{m+1}_+ \subseteq Q[\mathbb {R}^m] + \mathbb {R}^{m+1}_+ = \{ x \in \mathbb {R}^{m+1} \; \vert \; \mathbb {1}^{\mathsf {T}} x \ge 0\}\), for the upper image \(\mathscr {P} = {\text {cl}}( Q[Y] + \mathbb {R}^{m+1}_+ )\) and its recession cone \(\mathscr {P}_{\infty }\) it holds \(\mathscr {P}, \mathscr {P}_{\infty } \subseteq \{ x \in \mathbb {R}^{m+1} \; \vert \; \mathbb {1}^{\mathsf {T}} x \ge 0\}\). As the upper image \(\mathscr {P}\) contains elements of \(Q [\mathbb {R}^m]\), inclusion (13) is only possible if \(\mathbb {1}^{\mathsf {T}} q \le 0\). As \(Q[Y] \subseteq \mathscr {P} \cap Q [\mathbb {R}^m]\), from (13) and (12) it follows

$$\begin{aligned} Q[Y]&\subseteq ( \{q\} + \mathscr {P}_{\infty }) \cap Q [\mathbb {R}^m] = ( \{q\} + \mathbb {R}^{m+1}_+) \cap Q [\mathbb {R}^m] + Q[({\text {cl}}Y)_{\infty }] \\&= {\text {conv}}\{ q^{(1)}, \dots , q^{(m+1)} \} + Q[({\text {cl}}Y)_{\infty }], \end{aligned}$$

where \(q^{(i)} := q + \vert \mathbb {1}^{\mathsf {T}} q \vert \cdot e^{(i)}\) for \(i = 1, \dots , m+1\). By applying the projection \({\text {proj}}_{-1}\) we obtain

$$\begin{aligned} Y&\subseteq {\text {conv}}\{ {\text {proj}}_{-1} q^{(1)}, \dots , {\text {proj}}_{-1} q^{(m+1)} \} + ({\text {cl}}Y)_{\infty }. \end{aligned}$$

The first claim is a consequence of the second claim, relation (12) and the fact that for a bounded (CP) it holds \(({\text {cl}}Y)_{\infty } = \{0\}\) and for a bounded problem (8) it holds \(\mathscr {P}_{\infty } = \mathbb {R}^{m+1}_+\). The last claim is an equivalent reformulation of the first claim. \(\square \)
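The two constructions in the proof of the second claim, the lower corner q with \(q_i = \min _j (Qy^{(j)})_i\) and the simplex vertices \(q^{(i)} = q + \vert \mathbb {1}^{\mathsf {T}} q \vert \cdot e^{(i)}\), are easy to compute. The Python sketch below applies them to the two points \(y^{(1)} = (-1, -1)\) and \(y^{(2)} = (1, -1)\) named in Example 1. The helper names are illustrative, and chaining the two constructions on the same data is done here only for demonstration; in the proof they belong to opposite directions.

```python
def Q_map(y):
    """Apply Q = (I; -1^T): y -> (y, -sum(y))."""
    return list(y) + [-sum(y)]


def lower_corner(points):
    """Componentwise minimum q, so that every point lies in {q} + R^(m+1)_+."""
    return [min(coords) for coords in zip(*points)]


def simplex_vertices(q):
    """Vertices q + |1^T q| e^(i) of ({q} + R^(m+1)_+) intersected with {x : 1^T x = 0}."""
    s = sum(q)
    if s > 0:
        raise ValueError("the construction requires 1^T q <= 0")
    return [
        [qi + (abs(s) if i == j else 0) for i, qi in enumerate(q)]
        for j in range(len(q))
    ]


ys = [(-1, -1), (1, -1)]          # points from Example 1
Qys = [Q_map(y) for y in ys]      # [[-1, -1, 2], [1, -1, 0]]
q = lower_corner(Qys)             # [-1, -1, 0]
verts = simplex_vertices(q)       # [[1, -1, 0], [-1, 1, 0], [-1, -1, 2]]
projected = [v[:-1] for v in verts]  # proj_{-1}: [[1, -1], [-1, 1], [-1, -1]]
```

Each vertex has components summing to zero, so it lies in \(Q[\mathbb {R}^m]\), and dropping the last component gives the finitely many points that appear in the final inclusion of the proof.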

3.2.2 Relation of solutions

Note that under some compactness assumptions the convex projection problem (CP) can be approximately solved using the algorithm in [12]. Here, however, we are interested in the connection between problem (CP) and the multi-objective problem (8), and in whether one can be solved in place of the other. Lemma 2 already provides a close connection between the two problems, which suggests that interchanging them (in some manner) should be possible. We now examine whether, and in what sense, an (exact or approximate) solution of one problem solves the other. We start with exact solutions, for which we obtain an equivalence; this result does not need any type of boundedness assumption. Then we move on to approximate solutions for the bounded and the self-bounded case.

Theorem 1

A set \(\bar{S} \subseteq S\) is a solution of the convex projection (CP) if and only if it is a solution of the multi-objective problem (8).

Proof

- \(\Rightarrow \): Let \(\bar{S} \subseteq S\) be a solution of (CP). Applying the mapping Q to (5), we obtain

$$\begin{aligned} P[S] \subseteq {\text {cl}}{\text {conv}}P[\bar{S}], \end{aligned}$$

see Lemma 2 (2). Adding the ordering cone, taking the closure and applying Lemma 3 give

$$\begin{aligned} {\text {cl}}( P[S] + \mathbb {R}^{m+1}_+ ) \subseteq {\text {cl}}( {\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+ ), \end{aligned}$$

therefore, \(\bar{S}\) is an infimizer of (8), cf. Definition 2. According to Lemma 2, it is also a solution.

- \(\Leftarrow \): Let \(\bar{S} \subseteq S\) be a solution of (8). We prove that

$$\begin{aligned} P[S] \subseteq {\text {cl}}{\text {conv}}P [\bar{S}] \end{aligned}$$

via contradiction: Assume that there is \(q \in P[S]\) and \(\varepsilon > 0\) such that \((\{q\} + B_{\varepsilon }) \cap {\text {conv}}P[\bar{S}] = \emptyset \). According to (9), for all \(\delta > 0\) it holds \((\{q\} + B_{\delta }) \cap ({\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+) \ne \emptyset \). That is, there exist \(b \in B_{\delta }, \bar{q} \in {\text {conv}}P[\bar{S}]\) and \(r \in \mathbb {R}^{m+1}_+\) such that \(q + b = \bar{q} + r\). As \(b_i \le \delta \) one has \(\mathbb {1}^{\mathsf {T}}b \le (m+1) \delta \). Thus, \(\Vert b - r \Vert \le \Vert b \Vert + \Vert -r\Vert \le \Vert b\Vert + \mathbb {1}^{\mathsf {T}}r =\Vert b\Vert + \mathbb {1}^{\mathsf {T}}b \le \delta + (m+1)\delta \), where \(\mathbb {1}^{\mathsf {T}}r =\mathbb {1}^{\mathsf {T}}b\) follows from \(q + b = \bar{q} + r\) and \(\mathbb {1}^{\mathsf {T}} q = \mathbb {1}^{\mathsf {T}} \bar{q} = 0\). Hence, one obtains \(b-r \in B_{\delta \cdot (m+2)}\), so for the choice of \(\delta := \varepsilon / (m+2)\) we get a contradiction. Finally, applying \({\text {proj}}_{-1}\) gives \(Y \subseteq {\text {cl}}{\text {conv}}{\text {proj}}_y [\bar{S}]\) and \(\bar{S}\) is a solution of (CP).

\(\square \)

This result is analogous to Theorem 3 of [8] for the polyhedral case. Here it is unnecessary to prove the existence of a solution when the problem is feasible, as the full feasible set is a solution according to Definitions 2 and 4 (consider Lemma 2). Since (exact) solutions are usually unattainable in practice, it is of greater interest to see how approximate solutions relate. It turns out that approximate solutions are equivalent only up to an increased error: in order to use an approximate solution of one problem to solve the other problem, we need to increase the tolerance proportionally to a certain multiplier. These multipliers depend both on the dimension m of the problem and on the p-norm under consideration. Recall that the definition of a finite \(\varepsilon \)-solution of either of the two problems depends implicitly on the fixed \(p \in [1, \infty ]\). For this reason, we denote the p explicitly in this section.

We deal with the bounded and the self-bounded case jointly. As we mentioned before, a bounded (CP) has a trivial recession cone \(({\text {cl}}Y)_{\infty } = \{0\}\) and if (8) is bounded it holds \(\mathscr {P}_{\infty } = \mathbb {R}^{m+1}_+\). This verifies that (7) and (10) correctly define a finite \(\varepsilon \)-solution of a bounded (CP) and a bounded problem (8), respectively. Proposition 1 provided an equivalence between the self-boundedness of the two problems. The following two theorems contain the main results.

Theorem 2

Let the convex projection (CP) be self-bounded and fix \(p \in [1, \infty ]\). If \(\bar{S} \subseteq S\) is a finite \(\varepsilon \)-solution of (CP) (under the p-norm), then it is a finite \(\left( \underline{\kappa } \cdot \varepsilon \right) \)-solution of (8) (under the p-norm), where \(\underline{\kappa } = \underline{\kappa } (m, p) = m^{\frac{p-1}{p}} \cdot (m+1)^{\frac{1}{p}}\) for \(p \in [1, \infty )\) and \(\underline{\kappa } = \underline{\kappa } (m, \infty ) = m\) for \(p = \infty \).

Proof

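Multiplying (7) with Q and adding the ordering cone \(\mathbb {R}^{m+1}_+\) yields

$$\begin{aligned} P[S] + \mathbb {R}^{m+1}_+ \subseteq {\text {conv}}P[\bar{S}] + Q[({\text {cl}}Y)_{\infty }] + Q \left[ B_{\varepsilon }^{p} \right] + \mathbb {R}^{m+1}_+. \end{aligned} \tag{14}$$

Let \(p \in [1, \infty )\). For \(b \in B_{\varepsilon }^{p}\) it holds \(\vert b_i \vert \le \varepsilon \) for each \(i = 1, \dots , m\). By considering the problem \(\max \sum _{i=1}^m b_i \text { s.t. } \sum _{i=1}^m \vert b_i \vert ^p \le 1\), we also obtain \(\vert \sum _{i=1}^m b_i \vert \le m^{\frac{p-1}{p}} \cdot \varepsilon \). Thus, we have \(- \left( m^{\frac{p-1}{p}} \cdot \varepsilon \right) \mathbb {1} \le Qb\) for all \(b \in B_{\varepsilon }^{p}\), which leads to

$$\begin{aligned} Q \left[ B_{\varepsilon }^{p} \right] \subseteq - \left( m^{\frac{p-1}{p}} \cdot \varepsilon \right) \left\{ \mathbb {1} \right\} + \mathbb {R}^{m+1}_+. \end{aligned} \tag{15}$$

From the Eqs. (12), (14) and (15) and \(\Vert \mathbb {1} \Vert _p = (m+1)^{\frac{1}{p}}\) it follows

$$\begin{aligned} P[S] + \mathbb {R}^{m+1}_+ \subseteq {\text {conv}}P[\bar{S}] + \mathscr {P}_{\infty } - \left( \underline{\kappa } \cdot \varepsilon \right) \left\{ \Vert \mathbb {1} \Vert _p^{-1} \mathbb {1} \right\} . \end{aligned}$$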

Now consider \(p = \infty \). Similarly, it holds \(\vert b_i \vert \le \varepsilon \) for \(i = 1, \dots , m\), as well as \(\vert \sum _{i=1}^m b_i \vert \le m \cdot \varepsilon \) for \(b \in B_{\varepsilon }^{\infty }\). Therefore, the same steps yield \(Q \left[ B_{\varepsilon }^{\infty } \right] \subseteq - (m \cdot \varepsilon ) \left\{ \mathbb {1} \right\} + \mathbb {R}^{m+1}_+\) and \(P[S] + \mathbb {R}^{m+1}_+ \subseteq {\text {conv}}P[\bar{S}] + \mathscr {P}_{\infty } - \left( m \cdot \varepsilon \right) \left\{ \Vert \mathbb {1} \Vert _p^{-1} \mathbb {1} \right\} \).

Finally, the sets \({\text {conv}}P[\bar{S}]\) and \(\mathscr {P}_{\infty }\) satisfy the assumptions of Corollary 9.1.2 of [11]—both sets are closed convex and \({\text {conv}}P[\bar{S}]\) has a trivial recession cone as it is bounded—therefore, the set \({\text {conv}}P[\bar{S}] + \mathscr {P}_{\infty }\) is closed. Since the shifted set is also closed, we obtain \(\mathscr {P} \subseteq {\text {conv}}P[\bar{S}] + \mathscr {P}_{\infty } - \left( \underline{\kappa } \cdot \varepsilon \right) \left\{ \Vert \mathbb {1} \Vert _p^{-1} \mathbb {1} \right\} \) and \(\bar{S}\) is a finite \(\left( \underline{\kappa } \cdot \varepsilon \right) \)-solution of (8) according to Lemma 2. \(\square \)
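The key estimate in the proof above, namely that every component of \(Qb\) is bounded below by \(-m^{\frac{p-1}{p}} \cdot \varepsilon \) for all \(b \in B_{\varepsilon }^{p}\), can be checked numerically. The following is a minimal sketch, not part of the proof. It assumes that Q maps \(y \in \mathbb {R}^m\) to \((y, -\mathbb {1}^{\mathsf {T}} y)\), which is consistent with \(Q [\mathbb {R}^m] = \{ x \vert \mathbb {1}^{\mathsf {T}} x = 0\}\); the function names are hypothetical.

```python
import random

INF = float("inf")

def p_norm(v, p):
    """p-norm of a vector given as a list; p = INF is the maximum norm."""
    if p == INF:
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def Q(y):
    """Assumed form of Q: append -1^T y, so that the image sums to zero."""
    return list(y) + [-sum(y)]

def bound(m, p, eps):
    """The value m^((p-1)/p) * eps from the proof (m * eps for p = INF)."""
    return m * eps if p == INF else m ** ((p - 1) / p) * eps

def smallest_component(m, p, eps, trials=2000, seed=0):
    """Smallest component of Q b over random b on the boundary of the eps-ball."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        b = [rng.uniform(-1.0, 1.0) for _ in range(m)]
        norm = p_norm(b, p)
        if norm == 0.0:
            continue  # skip the (practically impossible) zero sample
        scaled = [eps * x / norm for x in b]
        worst = min(worst, min(Q(scaled)))
    return worst

def extremal_component(m, p, eps):
    """Smallest component of Q b for the constant vector b with ||b||_p = eps."""
    t = eps if p == INF else eps * m ** (-1.0 / p)
    return min(Q([t] * m))

for m in (2, 3, 5):
    for p in (1, 2, INF):
        print(m, p, smallest_component(m, p, 0.1), -bound(m, p, 0.1))
```

The constant vector used in `extremal_component` attains the bound, which matches the fact that the estimate is obtained from a maximization problem over the p-ball.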

Theorem 3

Let the multi-objective problem (8) be self-bounded and fix \(p \in [1, \infty ]\). If \(\bar{S} \subseteq S\) is a finite \(\varepsilon \)-solution of (8) (under the p-norm), then it is a finite \(\left( \overline{\kappa } \cdot \varepsilon \right) \)-solution of (CP) (under the p-norm), where \(\overline{\kappa } = \overline{\kappa } (m, p) = \left( \frac{m^p + m - 1}{m+1} \right) ^{\frac{1}{p}}\) for \(p \in [1, \infty )\) and \(\overline{\kappa } = \overline{\kappa } (m, \infty ) = m\) for \(p = \infty \).

Proof

We give the proof for \(p\in [1, \infty )\). For \(p = \infty \) the same steps work, only the expressions \((m^p + m - 1)^{\frac{1}{p}}\) and \(\left( \frac{m^p + m - 1}{m+1} \right) ^{\frac{1}{p}}\) need to be replaced by their value in the limit, m. For the set \(Q[Y] = P[S] \subseteq \mathscr {P} \cap Q [\mathbb {R}^m]\), according to (10) and (12), we obtain

A projection onto the first m coordinates yields

What remains is to show that the last set on the right-hand side is contained in an error-ball

which would finish the proof.

Now, to see that (16) holds true, note that by \(Q [\mathbb {R}^m] = \{ x \in \mathbb {R}^{m+1} \vert \mathbb {1}^{\mathsf {T}} x =0\}\) one has

A projection onto the first m coordinates yields

The optimization problem

maximizes a convex objective over a compact polyhedron. As such, the maximum is attained at one of the vertices of the polyhedron. Therefore, the optimal objective value of problem (17) is \(\Vert (m+1) \cdot e^{(1)} - \mathbb {1} \Vert _p = (m^p + m - 1)^{\frac{1}{p}}\), which shows

Multiplication with the constant \(\varepsilon \cdot \Vert \mathbb {1} \Vert _p^{-1}\) yields (16). \(\square \)

Both of the above theorems involve increasing the error tolerance proportionally to a certain multiplier. The multipliers \(\underline{\kappa }\) and \(\overline{\kappa }\) both depend on the dimension m and on the p-norm. Of those two the dimension plays a more important role. One can verify that for all \(p \in [1, \infty ]\) the value of \(\underline{\kappa } (m, p)\) lies between m and \(m+1\) and the value of \(\overline{\kappa } (m, p)\) is bounded from above by m. The multipliers in the maximum norm correspond to the limits, i.e. it holds \(\underline{\kappa } (m, \infty ) = \lim \limits _{p \rightarrow \infty } \underline{\kappa } (m, p)\) and \(\overline{\kappa } (m, \infty ) = \lim \limits _{p \rightarrow \infty } \overline{\kappa } (m, p)\). We list the values of the two multipliers for the three most popular norms, the Manhattan, the Euclidean and the maximum norm, in Table 1.
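The two multipliers are simple closed-form expressions, so their values and the stated bounds are easy to tabulate. The sketch below only evaluates the formulas from Theorems 2 and 3; the function names `kappa_lower` and `kappa_upper` are hypothetical labels for \(\underline{\kappa }\) and \(\overline{\kappa }\).

```python
import math

def kappa_lower(m, p):
    """Multiplier of Theorem 2: m^((p-1)/p) * (m+1)^(1/p), and m for p = inf."""
    if math.isinf(p):
        return float(m)
    return m ** ((p - 1) / p) * (m + 1) ** (1 / p)

def kappa_upper(m, p):
    """Multiplier of Theorem 3: ((m^p + m - 1) / (m+1))^(1/p), and m for p = inf."""
    if math.isinf(p):
        return float(m)
    return ((m ** p + m - 1) / (m + 1)) ** (1 / p)

# Values for the Manhattan, Euclidean and maximum norm in a few dimensions.
for m in (2, 3, 10):
    for p in (1, 2, math.inf):
        print(f"m={m:2d} p={p}: lower={kappa_lower(m, p):.4f} "
              f"upper={kappa_upper(m, p):.4f}")
```

For instance, for \(p = 1\) the formulas reduce to \(\underline{\kappa } = m + 1\) and \(\overline{\kappa } = \frac{2m - 1}{m+1}\).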

Theorem 2 (respectively Theorem 3) guarantees that increasing the tolerance \(\underline{\kappa }\)-fold (respectively \(\overline{\kappa }\)-fold) is sufficient. We could, however, ask if some smaller increase of the tolerance might not suffice instead. In Sect. 3.3 below, we provide examples where the multipliers \(\underline{\kappa }\) and \(\overline{\kappa }\) are attained. Therefore, the results of Theorems 2 and 3 cannot be improved.

A finite \(\varepsilon \)-solution of a (self-)bounded (CVOP) depends not only on the tolerance \(\varepsilon \) and the underlying p-norm, but also on the direction \(c \in {\text {int}}C\) used within the defining relation (2), respectively (3). As we stated at the beginning of this section, for the multi-objective problem (8) we use the direction \( \Vert \mathbb {1} \Vert ^{-1} \mathbb {1} \in {\text {int}}\mathbb {R}^{m+1}_+\). Since this direction appears within the proofs of Theorems 2 and 3, how much do our results depend on it? The structure of the results would remain unchanged regardless of the considered direction, only the multipliers \(\underline{\kappa }\) and \(\overline{\kappa }\) are direction-specific—variants of relations (15) and (16) hold for arbitrary fixed direction \(r \in {\text {int}}\mathbb {R}^{m+1}_+\) with appropriately adjusted multipliers.

The last question that remains open is whether approximate solutions exist. In the following lemma we prove their existence for self-bounded convex projections.

Lemma 5

There exists a finite \(\varepsilon \)-solution to a self-bounded convex projection (CP) for any \(\varepsilon > 0\).

Proof

This proof is based on Proposition 3.7 of [13]. We use it to prove the existence of a finite \(\frac{\varepsilon }{\underline{\kappa }}\)-solution of the associated problem (8) and then apply Theorem 3. Fix \(\varepsilon > 0\) and set \(\xi := \frac{\varepsilon }{2\underline{\kappa }}\) and \(\delta := \frac{\varepsilon }{2 \underline{\kappa } \Vert \mathbb {1} \Vert }\). Since problem (8) is self-bounded, the upper image \(\mathscr {P}\) and its recession cone \(\mathscr {P}_{\infty }\) satisfy the assumptions of Proposition 3.7 of [13]. Then, for a tolerance \(\xi > 0\) there exists a finite set of points \(\bar{A} \subseteq \mathscr {P}\) such that

Since \(\mathscr {P} = {\text {cl}}(P[S] + \mathbb {R}^{m+1}_+)\), for each \(\bar{a} \in \bar{A}\) there exists \((\bar{x}, \bar{y}) \in S\) such that \(\bar{a} \in P(\bar{x}, \bar{y}) + \mathbb {R}^{m+1}_+ + B_{\delta }\). Denote by \(\bar{S} \subseteq S\) the (finite) collection of such feasible points \((\bar{x}, \bar{y}) \in S\), one per each element of \(\bar{A}\). We obtain

Equations (18) and (19) jointly give

which shows that \(\bar{S}\) is a finite \(\frac{\varepsilon }{\underline{\kappa }}\)-solution of (8). According to Theorem 3, \(\bar{S}\) is a finite \(\varepsilon \)-solution of (CP). \(\square \)

Lemma 5 also proves the existence of a finite \(\varepsilon \)-solution of a self-bounded problem (8). Unlike Proposition 4.3 of [7], this result does not require a compact feasible set. In practice, however, we are restricted by the assumptions of the solvers.

3.3 Examples

We start with three theoretical examples, one for each theorem of the previous subsection, before providing two numerical examples. For simplicity, Examples 4 and 5 contain a trivial projection, i.e. the identity. One could modify the feasible sets to include additional dimensions, but since these examples are intended to illustrate theoretical properties, we chose to keep them as simple as possible.

First, we look at exact solutions for which equivalence was proven in Theorem 1. Example 3 illustrates that a solution of (8) solves (CP) only ’up to the closure’, i.e. the closure in (5) mirrors the closure in (9).

Example 3

Consider the feasible set \(S = \{ (x, y) \; \vert \; x^2 + y^2 \le 1 \}\), which projects onto \(Y = \{ y \; \vert \; x^2 + y^2 \le 1 \} = [-1, 1]\). Correspondingly, \(P[S] = \{y \cdot (1, -1) \; \vert \; y \in [-1, 1] \}\). The set

is a solution of the multi-objective problem. However, projected onto its y-element it gives \({\text {proj}}_y [\bar{S}] = (-1, 1]\). Therefore, \(\bar{S}\) is a solution of the projection but \(Y \ne {\text {conv}}{\text {proj}}_y [\bar{S}]\).

Second, we give an example where the multiplier \(\underline{\kappa }\) introduced in Theorem 2 is attained. Namely, we provide a convex projection and its finite \(\varepsilon \)-solution \(\bar{S}\). This \(\bar{S}\) is not a finite \(\bar{\varepsilon }\)-solution of the associated multi-objective problem for any \(\bar{\varepsilon } < \underline{\kappa } \varepsilon \). This shows that Theorem 2 would not hold with any smaller multiplier. The example holds for all dimensions m and for all p-norms, so \(p \in [1, \infty ]\) is explicitly denoted.

Example 4

Let us start with an example in dimension \(m=2\). Consider the approximate solution \(\bar{S} = \{ (0,0), (1, 0), (0, 1)\}\) of the projection

The smallest tolerance for which the set \(\bar{S}\) is a finite \(\varepsilon ^{(p)}\)-solution of this convex projection (under the p-norm) is \(\varepsilon ^{(p)} = \Vert (\frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}}) - (\frac{1}{2}, \frac{1}{2}) \Vert _p = \frac{\sqrt{2}-1}{2} \cdot 2^{\frac{1}{p}}\). Now consider the associated multi-objective problem. To cover the vector \(\left( \frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}}, -\sqrt{2} \right) \in P[S]\) by the set \({\text {conv}}P[\bar{S}] - x \{\mathbb {1}\} + \mathbb {R}^3_+\) we need \(x \ge \sqrt{2} - 1\). Considering normalization by \(\Vert \mathbb {1} \Vert _p = 3^{\frac{1}{p}}\), the set \(\bar{S}\) is a finite \(\bar{\varepsilon }^{(p)}\)-solution of the multi-objective problem (under the p-norm) only if \(\bar{\varepsilon }^{(p)} \ge (\sqrt{2}-1) \cdot 3^{\frac{1}{p}} = \underline{\kappa } \cdot \varepsilon ^{(p)}\), where \(\underline{\kappa } = 2^{\frac{p-1}{p}}\cdot 3^{\frac{1}{p}}\).

For a self-bounded example consider \(Y = S = \{ (y_1, y_2) : y_1^2 + y_2^2 \le 1, y_1 \ge 0, y_2 \ge 0 \} + {\text {cone}}\{(0, -1)\}\) with the same approximate solution.

The same structure yields an example also in the m-dimensional space for any \(m \ge 2\). Consider the approximate solution \(\bar{S} = \{ \mathbb {0}, e^{(1)}, \dots , e^{(m)}\}\) of the projection

The smallest tolerance for which \(\bar{S}\) is a finite \(\varepsilon ^{(p)}\)-solution of this convex projection (under the p-norm) is \(\varepsilon ^{(p)} = \Vert \frac{1}{\sqrt{m}} \mathbb {1} - \frac{1}{m} \mathbb {1} \Vert _p = \frac{\sqrt{m} - 1}{m} m^{\frac{1}{p}}.\) The upper image of the associated multi-objective problem contains the vector \(\left( \frac{1}{\sqrt{m}}, \dots , \frac{1}{\sqrt{m}}, -\sqrt{m} \right) \). Therefore, the set \(\bar{S}\) is a finite \(\bar{\varepsilon }^{(p)}\)-solution of the multi-objective problem (under the p-norm) only if \(\bar{\varepsilon }^{(p)} \ge (\sqrt{m}-1) \cdot (m+1)^{\frac{1}{p}}\), where \((\sqrt{m}-1) \cdot (m+1)^{\frac{1}{p}} = \underline{\kappa } \cdot \varepsilon ^{(p)}\).
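The identity \((\sqrt{m}-1) \cdot (m+1)^{\frac{1}{p}} = \underline{\kappa } \cdot \varepsilon ^{(p)}\) claimed in Example 4 can be verified with a few lines of arithmetic. The sketch below takes the two tolerances exactly as stated in the example (it does not recompute them from the feasible set); the helper names are hypothetical.

```python
import math

def p_norm(v, p):
    """p-norm of a list of floats; p = math.inf is the maximum norm."""
    if math.isinf(p):
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def kappa_lower(m, p):
    """Multiplier of Theorem 2."""
    if math.isinf(p):
        return float(m)
    return m ** ((p - 1) / p) * (m + 1) ** (1 / p)

def example4_tolerances(m, p):
    """Return (eps_cp, eps_mo) of Example 4 in dimension m under the p-norm."""
    gap = 1.0 / math.sqrt(m) - 1.0 / m           # componentwise distance
    eps_cp = p_norm([gap] * m, p)                # tolerance for the projection
    shift = math.sqrt(m) - 1.0                   # smallest shift along the vector 1
    eps_mo = shift * p_norm([1.0] * (m + 1), p)  # normalisation by ||1||_p
    return eps_cp, eps_mo

for m in (2, 3, 7):
    for p in (1, 2, math.inf):
        eps_cp, eps_mo = example4_tolerances(m, p)
        print(m, p, eps_mo, kappa_lower(m, p) * eps_cp)
```

In each printed row the last two numbers agree, which is the sense in which the multiplier \(\underline{\kappa }\) is attained.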

Third, we give an example where the multiplier \(\overline{\kappa }\) introduced in Theorem 3 is attained. Here a multi-objective problem (associated to a given projection) and its finite \(\varepsilon \)-solution \(\bar{S}\) are given. We show that this \(\bar{S}\) is a finite \(\bar{\varepsilon }\)-solution of the projection only for \(\bar{\varepsilon } \ge \overline{\kappa } \varepsilon \). This shows that Theorem 3 would not hold with a smaller multiplier. The p-norm is again explicitly denoted.

Example 5

Let us start with an example in dimension \(m=2\). Consider the projection \(Y = S = {\text {conv}}\{ (0,0), (2, -1) \}\) with the associated upper image \(\mathscr {P} = {\text {conv}}\{ (0,0,0), (2, -1, -1) \} + \mathbb {R}^3_+\). The smallest tolerance for which the set \(\bar{S} = \{ (0,0) \}\) is a finite \(\varepsilon ^{(p)}\)-solution of the multi-objective problem (under the p-norm) is \(\varepsilon ^{(p)} = \Vert \mathbb {1} \Vert _p = 3^{\frac{1}{p}}\). On the other hand, the set \(\bar{S}\) is a finite \(\bar{\varepsilon }^{(p)}\)-solution of the convex projection (under the p-norm) only for a tolerance \(\bar{\varepsilon }^{(p)} \ge \Vert (2, -1) \Vert _p = (2^p + 1)^{\frac{1}{p}} = \overline{\kappa } \cdot \varepsilon ^{(p)}\), where \(\overline{\kappa } = \left( \frac{2^p + 1}{3} \right) ^{\frac{1}{p}}\).

For a self-bounded example consider \(Y = S = {\text {conv}}\{ (0,0), (2, -1) \} + {\text {cone}}\{(-1, 0)\}\) with the same approximate solution.

This example can be extended to any dimension \(m \ge 2\). Consider the projection \(Y = S = {\text {conv}}\{ \mathbb {0}, (m, -1, \dots , -1) \} \subseteq \mathbb {R}^m\), the associated multi-objective problem with upper image \(\mathscr {P} = {\text {conv}}\{ \mathbb {0}, (m, -1, \dots , -1) \} + \mathbb {R}^{m+1}_+\), and the approximate solution \(\bar{S} = \{ \mathbb {0}\}\). The set \(\bar{S}\) is a finite \(\varepsilon ^{(p)}\)-solution of the multi-objective problem (under the p-norm) for \(\varepsilon ^{(p)} = \Vert \mathbb {1} \Vert _p = (m+1)^\frac{1}{p}\). However, it is a finite \(\bar{\varepsilon }^{(p)}\)-solution of the convex projection (under the p-norm) only for a tolerance \(\bar{\varepsilon }^{(p)} \ge \Vert (m, -1, \dots , -1) \Vert _p = (m^p + m -1)^\frac{1}{p}\), where \((m^p + m -1)^\frac{1}{p} = \overline{\kappa } \varepsilon ^{(p)}\).
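The same kind of arithmetic check applies to Example 5. The sketch below uses the tolerances stated in the example, \(\Vert \mathbb {1} \Vert _p\) for the multi-objective problem and \(\Vert (m, -1, \dots , -1) \Vert _p\) for the projection, and compares their ratio with \(\overline{\kappa }\); the helper names are hypothetical.

```python
import math

def p_norm(v, p):
    """p-norm of a list of floats; p = math.inf is the maximum norm."""
    if math.isinf(p):
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def kappa_upper(m, p):
    """Multiplier of Theorem 3."""
    if math.isinf(p):
        return float(m)
    return ((m ** p + m - 1) / (m + 1)) ** (1 / p)

def example5_tolerances(m, p):
    """Return (eps_mo, eps_cp) of Example 5 in dimension m under the p-norm."""
    eps_mo = p_norm([1.0] * (m + 1), p)                # ||1||_p in R^(m+1)
    eps_cp = p_norm([float(m)] + [-1.0] * (m - 1), p)  # ||(m, -1, ..., -1)||_p
    return eps_mo, eps_cp

for m in (2, 3, 7):
    for p in (1, 2, math.inf):
        eps_mo, eps_cp = example5_tolerances(m, p)
        print(m, p, eps_cp, kappa_upper(m, p) * eps_mo)
```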

Finally, we provide two numerical examples. As both have a compact feasible set, one could also apply the algorithm in [12] to approximately solve the convex projection problem directly. Since our motivation here is to illustrate the theory of the previous subsection, in both cases we instead use the algorithm of [7] for convex vector optimization problems and solve the convex projection problem by applying Theorem 3.

Example 6

Consider the feasible set

consisting of an intersection of two three-dimensional ellipses. We project this set onto the first two coordinates, so we are interested in the set \(Y = \{ (y_1, y_2) \; \vert \; (y_1, y_2, x) \in S \}\). We used the associated multi-objective problem to compute an approximation of Y. The algorithm of [7] was used for the numerical computations. In Figure 1 we depict the (inner) approximations obtained for various error tolerances of the algorithm.

Example 7

We add one more dimension. This means, we are computing a three-dimensional set \(Y = \left\{ (y_1, y_2, y_3) \; \vert \; (y_1, y_2, y_3, x) \in S \right\} \) obtained by projecting an intersection of two four-dimensional ellipses

onto the first three coordinates. Figure 2 contains an (inner) approximation of the set Y obtained by solving the associated multi-objective problem with an error tolerance \(\varepsilon = 0.01\). Once again the algorithm of [7] was used.

4 Convex projection corresponding to (CVOP)

In the polyhedral case it is also possible to construct a projection associated to any given vector linear program. In [8], it is shown that if a solution of the vector linear program exists, it can be obtained from a solution of the associated projection. This, together with the connection between polyhedral projection and its associated multi-objective problem, led to an equivalence between polyhedral projection, multi-objective linear programming and vector linear programming in [8]. This also allows one to construct a multi-objective linear program corresponding to any given vector linear program, where the dimension of the objective space is increased only by one.

We will now investigate this in the convex case. We start with a general convex vector optimization problem and construct an associated convex projection problem, once again taking inspiration from the polyhedral case in [8]. Analogously to the previous section, we investigate the connection between the two problems, their properties and their solutions. While the connection does exist, two issues arise, which show that the equivalence obtained in the polyhedral case cannot be generalized to the convex case to its full extent. Firstly, the associated convex projection is never a bounded problem. So even if a bounded (CVOP) is given, its associated convex projection (and thus, its associated multi-objective convex optimization problem) is just self-bounded. Recall that while solvers for a bounded (CVOP) are available, there is not yet a solver for self-bounded problems. Secondly, and even more severely, the convex projection provides only (exact or approximate) infimizers of the convex vector optimization problem, but not (exact or approximate) solutions. For exact solutions one can provide conditions to resolve this issue (see Lemma 8 below). This is, however, not possible for approximate solutions, which are more important in practice.

We now deduce these results in detail. Recall from Sect. 2.2 the convex vector optimization problem

with its feasible set \(\mathscr {X}\) and its upper image \(\mathscr {G} = {\text {cl}}(\varGamma [\mathscr {X}] + C).\) To obtain an associated projection, we define the set \(S_a = \{ (x, y) \; \vert \; x \in \mathscr {X}, y \in \{\varGamma (x)\} + C\}\), again motivated by [8]. The projection problem with this feasible set is

Clearly, (20) is feasible if and only if (CVOP) is feasible. Note that if the feasible set \(\mathscr {X}\) is given via a collection of inequalities, then the new feasible set \(S_a\) can be expressed similarly. The following lemma provides convexity and establishes the connection between (CVOP) and (20).

Lemma 6

The set \(S_a\) is convex and, therefore, the problem (20) is a convex projection. Additionally, \(Y_a = \varGamma [\mathscr {X}] + C\) and \(\mathscr {G} = {\text {cl}}Y_a\).

Proof

Convexity of \(S_a\) follows from the convexity of \(\mathscr {X}\), the C-convexity of \(\varGamma \) and the convexity of C. For the rest consider \(Y_a = \{ y \; \vert \; \exists x \in \mathscr {X}, y \in \{\varGamma (x)\} + C\} = \varGamma [\mathscr {X}] + C.\) \(\square \)

Given this close connection between the sets \(\mathscr {G}\) and \(Y_a\), we expect a similar connection to exist between the properties and the solution concepts of (CVOP) and (20). Since the set \(Y_a\) contains the (shifted) ordering cone C, the projection problem (20) cannot be bounded. But still, a bounded problem (CVOP) has (by Lemma 1) its counterpart in the properties of the (self-bounded) associated projection (20), namely \(({\text {cl}}Y_a)_{\infty } = {\text {cl}}C\). We investigate the connection between the two problems in Theorems 4 and 5. Recall from Definition 2 that for a convex vector optimization problem an (exact or approximate) solution is an (exact or approximate) infimizer consisting of minimizers. Recall also that the direction \(c \in {\text {int}}C\) appearing in Definition 2 is assumed to be normalized, i.e. \(\Vert c \Vert = 1\).

Theorem 4

1. If \(\bar{X}\subseteq \mathscr {X}\) is an infimizer of (CVOP), then \(\bar{S} := \{ (x,y) \; \vert \; x \in \bar{X}, y \in \{\varGamma (x)\} + C \}\) is a solution of the associated projection (20).

2. If the problem (CVOP) is self-bounded, then the associated projection (20) is also self-bounded. If, additionally, the problem (CVOP) is bounded, then \(({\text {cl}}Y_a)_{\infty } = {\text {cl}}C\).

3. Let (CVOP) be bounded or self-bounded and let \({\tilde{X}} \subseteq \mathscr {X}\) be a finite \(\varepsilon \)-infimizer of (CVOP). Then, \({\tilde{S}} := \{ (x, \varGamma (x)) \; \vert \; x \in {\tilde{X}} \}\) is a finite \(\varepsilon \)-solution of the associated projection (20).

Proof

1. The way the set \(\bar{S}\) is constructed implies feasibility as well as \({\text {proj}}_y [\bar{S}] = \varGamma [\bar{X}] + C\). Since \(\bar{X}\) is an infimizer of (CVOP), it follows that \(Y_a \subseteq \mathscr {G} \subseteq {\text {cl}}( {\text {conv}}\varGamma [\bar{X}] + C ) = {\text {cl}}{\text {conv}}{\text {proj}}_y [\bar{S}]\).

2. Self-boundedness of (20) follows directly from \(\mathscr {G} = {\text {cl}}Y_a\). Boundedness of (CVOP) implies \(({\text {cl}}Y_a)_{\infty } = \mathscr {G}_{\infty } = {\text {cl}}C\), see Lemma 1.

3. For a finite \(\varepsilon \)-infimizer \({\tilde{X}}\) of (CVOP) it holds \(\mathscr {G} \subseteq {\text {conv}}\varGamma [{\tilde{X}}] + \mathscr {G}_{\infty } -\varepsilon \{c\}\) (both in the bounded and in the self-bounded case as \(C \subseteq \mathscr {G}_{\infty }\)). Since \(\varepsilon c \in B_{\varepsilon }\) and \(\varGamma [{\tilde{X}}] = {\text {proj}}_y [{\tilde{S}}]\) it follows

$$\begin{aligned} Y_a \subseteq \mathscr {G} \subseteq {\text {conv}}\varGamma [{\tilde{X}}] + \mathscr {G}_{\infty } -\varepsilon \{c\} \subseteq {\text {conv}}{\text {proj}}_y [{\tilde{S}}] + ({\text {cl}}Y_a)_{\infty } + B_{\varepsilon }. \end{aligned}$$

\(\square \)

Before moving on, we illustrate that an infimizer (or even a solution) \(\bar{X}\) of (CVOP) can lead towards a solution \(\bar{S}\) that solves the associated projection (20) only ’up to the closure’. Compare this also to Example 3.

Example 8

Consider a set \(\varGamma [\mathscr {X}] = \mathscr {X} = \{ (x_1, x_2) \; : \; x_1^2 + x_2^2 \le 1 \}\) with the ordering cone \(C = \mathbb {R}^2_+\). The set \(\bar{X} = \{ (x_1, x_2) \; : \; x_1^2 + x_2^2 = 1, x_1, x_2 < 0 \}\) is a solution of (CVOP). The corresponding set \(\bar{S}\) projects onto \({\text {proj}}_y [\bar{S}] = \bar{X} + \mathbb {R}^2_+\). It is a solution of (20), however, the closure in (5) is essential as \(Y_a \ne {\text {conv}}{\text {proj}}_y [\bar{S}]\).

Theorem 4 shows how to construct (exact or approximate) solutions of the associated projection from the (exact or approximate) infimizers of the vector optimization problem. Now we look at the other direction. We will show that, given an (exact or approximate) solution \(\bar{S}\) of (20), the set \(\bar{X} = {\text {proj}}_x [\bar{S}]\) is an (exact or approximate) infimizer of (CVOP). This set \(\bar{X}\), however, does not need to consist of minimizers, see Example 9 below.

In the case of approximate solutions, the tolerance depends on the direction \(c \in {\text {int}}C\). For this purpose denote

The quantity \(\delta _c\) is strictly positive (c is an interior element), finite (the cone C is pointed) and depends on the underlying norm. For example, for the standard ordering cone \(C = \mathbb {R}^m_+\) and the direction \(\Vert \mathbb {1} \Vert ^{-1} \mathbb {1}\) we have \(\delta _{ \Vert \mathbb {1} \Vert ^{-1} \mathbb {1} } = \Vert \mathbb {1} \Vert ^{-1}\).

Theorem 5

1. If \(\bar{S} \subseteq S_a\) is a solution of (20), then \(\bar{X} := {\text {proj}}_x [\bar{S}]\) is an infimizer of (CVOP).

2. If the problem (20) is self-bounded, then also the problem (CVOP) is self-bounded. If, additionally, \(({\text {cl}}Y_a)_{\infty } = {\text {cl}}C\), then the problem (CVOP) is bounded.

3. Let the problem (20) be self-bounded and let \(\bar{S} \subseteq S_a\) be a finite \(\varepsilon \)-solution of (20). Then, \(\bar{X} := {\text {proj}}_x [\bar{S}]\) is a finite \(\tilde{\varepsilon }\)-infimizer of (CVOP) for any tolerance \(\tilde{\varepsilon } > \dfrac{\varepsilon }{\delta _c}\).

The following lemma will be used in the proof of Theorem 5.

Lemma 7

Let \(C \subseteq \mathbb {R}^n\) be a solid cone. For any finite collection of points \(\{q^{(1)}, \dots , q^{(k)}\} \subseteq \mathbb {R}^n\) there exists a point \(q \in \mathbb {R}^n\) such that \(q \le _C q^{(i)}\), i.e. \(q^{(i)} \in q + C\), for all \(i = 1, \dots , k\).

Proof

For a solid cone C there exists \(c \in {\text {int}}C\). Without loss of generality assume that c is scaled in such a way that \(\{c\} + B_1 \subseteq C\). The set \(\{c\} + B_1\) generates a convex solid cone contained within C.

Consider two points \(q^{(1)}, q^{(2)} \in \mathbb {R}^n\). Define \(q:= q^{(1)} - \Vert q^{(1)} - q^{(2)} \Vert \left( c + \frac{q^{(1)} - q^{(2)}}{\Vert q^{(1)} - q^{(2)} \Vert } \right) = q^{(2)} - \Vert q^{(1)} - q^{(2)} \Vert c\). Then \(q^{(1)}, q^{(2)} \in q + {\text {cone}}(\{c\} + B_1) \subseteq \{q\} + C\). For more than two points use an induction argument. \(\square \)
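The construction in the proof of Lemma 7 can be traced on a concrete cone. The following sketch makes two illustrative assumptions that are not part of the lemma: the cone is \(C = \mathbb {R}^n_+\) and the norm is Euclidean, so that \(c = \mathbb {1}\) satisfies \(\{c\} + B_1 \subseteq C\). For this particular cone a componentwise minimum would of course also work; the point here is only to follow the pairwise formula and the induction step. The function names are hypothetical.

```python
import math

def pairwise_lower_bound(q1, q2):
    """q = q2 - ||q1 - q2|| * c with c = (1, ..., 1), as in the proof of Lemma 7."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(q1, q2)))
    return [b - dist for b in q2]

def lower_bound(points):
    """Induction step: fold the pairwise construction over a finite list of points."""
    q = list(points[0])
    for pt in points[1:]:
        # The new q lies below the previous q (hence below all earlier points)
        # and below the current point pt.
        q = pairwise_lower_bound(q, pt)
    return q

points = [[0.0, 0.0, 0.0], [3.0, -4.0, 1.0], [-2.0, 5.0, -7.0]]
q = lower_bound(points)
print(q)
# Each difference pt - q should lie in the cone R^3_+ (up to rounding).
print([[pi - qi for pi, qi in zip(pt, q)] for pt in points])
```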

Proof of Theorem 5

-

1.

For the solution \(\bar{S}\) and the set \(\bar{X}\) it holds \( Y_a \subseteq {\text {cl}}\left( {\text {conv}}{\text {proj}}_y [\bar{S}] \right) \subseteq {\text {cl}}\left( {\text {conv}}\varGamma [\bar{X}] + C \right) . \) Since the right-hand side is closed and \(\mathscr {G} = {\text {cl}}Y_a\), the set \(\bar{X}\) is an infimizer of (CVOP).

-

2.

A self-bounded problem (20) satisfies \(Y_a \ne \mathbb {R}^m\) and there exist \(y^{(1)}, \dots , y^{(k)} \in \mathbb {R}^m\) such that

$$\begin{aligned} \varGamma [\mathscr {X}] + C = Y_a \subseteq {\text {conv}}\{y^{(1)}, \dots , y^{(k)} \} + ({\text {cl}}Y_a)_{\infty }. \end{aligned}$$Since the set \(Y_a\) is convex it follows that also \(\mathscr {G} = {\text {cl}}Y_a \ne \mathbb {R}^m\). Since the recession cone \(({\text {cl}}Y_a)_{\infty }\) contains the solid cone C, there exists a point \(q \in \mathbb {R}^m\) such that \({\text {conv}}\{y^{(1)}, \dots , y^{(k)} \} + ({\text {cl}}Y_a)_{\infty } \subseteq \{q\} + ({\text {cl}}Y_a)_{\infty }\), see Lemma 7. As \(({\text {cl}}Y_a)_{\infty } = \mathscr {G}_{\infty }\) and the shifted cone is closed, we obtain \(\mathscr {G} \subseteq \{q\} + \mathscr {G}_{\infty }.\) The second claim follows from Lemma 1.

-

3.

The finite \(\varepsilon \)-solution \(\bar{S}\) of (20) satisfies

$$\begin{aligned} \varGamma [\mathscr {X}] + C = Y_a \subseteq {\text {conv}}{\text {proj}}_{y} [\bar{S}] + ({\text {cl}}Y_a)_{\infty } + B_{\varepsilon }. \end{aligned}$$By (21) for all \(0< \delta < \delta _c\) it holds \(B_{\varepsilon } \subseteq -\frac{\varepsilon }{\delta } \{c\} + C\). For the set \({\text {conv}}{\text {proj}}_y [\bar{S}] \) it holds \({\text {conv}}{\text {proj}}_y [\bar{S}] \subseteq {\text {conv}}\varGamma [\bar{X}] + C\). Since \(({\text {cl}}Y_a)_{\infty } = \mathscr {G}_{\infty }\) is a convex cone containing C, we obtain

$$\begin{aligned} \varGamma [\mathscr {X}] + C \subseteq {\text {conv}}\varGamma [\bar{X}] + \mathscr {G}_{\infty } -\frac{\varepsilon }{\delta } \{c\}. \end{aligned}$$Since \(\bar{X}\) is finite, the set \({\text {conv}}\varGamma [\bar{X}]\) is closed convex with a trivial recession cone. The sets \({\text {conv}}\varGamma [\bar{X}]\) and \(\mathscr {G}_{\infty }\) then fulfill all assumptions of the Corollary 9.1.2 of [11] and, therefore, the set \({\text {conv}}\varGamma [\bar{X}] + \mathscr {G}_{\infty }\) is closed. This gives

$$\begin{aligned} \mathscr {G} \subseteq {\text {conv}}\varGamma [\bar{X}] + \mathscr {G}_{\infty } -\frac{\varepsilon }{\delta } \{c\} \end{aligned}$$

for all \(0< \delta < \delta _c\). In the self-bounded case this proves the claim. In the bounded case with \(\mathscr {G}_{\infty } = {\text {cl}}C\), the relation \({\text {cl}}C \subseteq -\tilde{\delta } \{c\} + C\) with an arbitrarily small \(\tilde{\delta } > 0\) gives the desired result.

\(\square \)

Next we provide an example of (both exact and approximate) solutions of (20), which yield infimizers, but not solutions of (CVOP).

Example 9

Consider the trivial example of \(\min x \text { s.t. } x \ge 0\) with \(\mathscr {G} = \varGamma [\mathscr {X}] = [0, \infty )\) and the associated feasible set \(S_a = \{ (x, y) \; \vert \; y \ge x \ge 0 \}\). The set \(\bar{S}_1 = \{ (x, y) \; \vert \; y \ge x > 0 \}\) is a solution of (20). The corresponding \(\bar{X}_1 = {\text {proj}}_x \bar{S}_1 = (0, \infty )\) is an infimizer, but not a solution of (CVOP).

The situation is similar for approximate solutions: Fix \(\varepsilon > 0\). The set \(\bar{S}_2 = \{ (\varepsilon , \varepsilon )\}\) is a finite \(\varepsilon \)-solution of the convex projection. The set \(\bar{X}_2 = {\text {proj}}_x \bar{S}_2 = \{\varepsilon \}\) is a finite \(\varepsilon \)-infimizer, but it does not consist of minimizers.
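The approximate case of Example 9 can be checked by hand. The computation below is a sketch that assumes the covering condition for a finite \(\varepsilon \)-solution in its self-bounded form, \(Y_a \subseteq {\text {conv}}{\text {proj}}_y [\bar{S}] + ({\text {cl}}Y_a)_{\infty } + B_{\varepsilon }\), and takes \(B_{\varepsilon } = [-\varepsilon , \varepsilon ]\) to be the closed ball in dimension one. With \(Y_a = [0, \infty )\) and \(({\text {cl}}Y_a)_{\infty } = [0, \infty )\) we get

$$\begin{aligned} {\text {conv}}{\text {proj}}_y [\bar{S}_2] + ({\text {cl}}Y_a)_{\infty } + B_{\varepsilon } = \{\varepsilon \} + [0, \infty ) + [-\varepsilon , \varepsilon ] = [0, \infty ) = Y_a. \end{aligned}$$

For any smaller tolerance \(\varepsilon ' < \varepsilon \) the left-hand side becomes \([\varepsilon - \varepsilon ', \infty )\), which misses the point 0, so under these assumptions the tolerance cannot be reduced for this particular \(\bar{S}_2\).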

In the polyhedral case [8] it is possible (under an assumption on the lineality space of \(\mathscr {G}\)) to obtain a solution of the vector optimization problem by removing non-minimal points from the solution of the associated projection. This is not possible for either the exact solution \(\bar{S}_1\) or the approximate solution \(\bar{S}_2\) in the above example, as both consist of non-minimal points only. However, for exact solutions, we can formulate conditions under which an (exact) solution of (CVOP) can be constructed from a solution of (20), see Lemma 8 below. This result is, however, of theoretical interest rather than of practical use.

Lemma 8

Assume that a solution of (CVOP) exists and let the solution \(\bar{S}\) of (20) satisfy \(Y_a = {\text {conv}}{\text {proj}}_y [\bar{S}]\). Then, \(\bar{X} := \{ x \; \vert \; (x,y) \in \bar{S}, y \not \in \varGamma [\mathscr {X}] + C \backslash \{0\} \}\) is a solution of (CVOP).

Proof

Denote \(\bar{S}_0 := \{ (x,y) \in \bar{S} \; \vert \; y \not \in \varGamma [\mathscr {X}] + C \backslash \{0\} \}\). Let \(\bar{X}_1 \subseteq \mathscr {X}\) be some solution of (CVOP). By Lemma 6 and by assumption it holds

We prove that \(\varGamma [\bar{X}_1] \subseteq {\text {conv}}{\text {proj}}_y [\bar{S}_0]\): Take arbitrary \(\bar{x}_1 \in \bar{X}_1\), according to (22) we have \(\varGamma [\bar{x}_1] = \sum \limits _{y_i \in {\text {proj}}_y [\bar{S}]} \lambda _i y_i\), where \(\sum \lambda _i = 1\) and all \(\lambda _i \ge 0\). Assuming that for some \(\lambda _i > 0\) we have \(y_i \in \varGamma [\mathscr {X}] + C \backslash \{0\}\) contradicts \(\bar{x}_1 \in \bar{X}_1\) being a minimizer. The relation \(\varGamma [\bar{X}_1] \subseteq {\text {conv}}{\text {proj}}_y [\bar{S}_0]\) proves the claim as \(\bar{X} = {\text {proj}}_x [\bar{S}_0]\). \(\square \)

Both assumptions in Lemma 8 are essential. The condition \(Y_a = {\text {conv}}{\text {proj}}_y [\bar{S}]\) in effect guarantees that the (exact) solution \(\bar{S}\) of (20) can be reduced to only minimal points. Compare this to Example 9. Unfortunately, one cannot expect a practical version of Lemma 8 for approximate solutions. Since approximate solutions contain an approximation error, the condition \(Y_a = {\text {conv}}{\text {proj}}_y [\bar{S}]\) cannot be satisfied there. We would need to assume that each element of the approximate solution is either minimal or redundant. Such a requirement is, however, almost tautological.

In the polyhedral case, the equivalence between polyhedral projection, multi-objective linear programming and vector linear programming makes it possible to construct a multi-objective problem corresponding to an initial vector linear program. One could do this also in the convex case: combine the associated projection (20) and the ideas of the previous section to construct the multi-objective problem

associated to (CVOP). As in the linear case, the dimension of the objective space of the multi-objective problem (23) is only one higher than that of the original problem (CVOP). The results of the last two sections can be combined to establish a connection between (CVOP) and (23). Unfortunately, doing so combines all drawbacks of these results. Most importantly, it involves only (exact or approximate) infimizers of (CVOP), but not solutions. For completeness we list these combined results in the following corollary, where the upper image of (23) is denoted by \(\mathscr {P}_a = {\text {cl}}(P[S_a] + \mathbb {R}^{m+1}_+)\).

Corollary 1

1. The upper images of (CVOP) and (23) are connected via \(\mathscr {P}_a = Q [\mathscr {G}] + \mathbb {R}^{m+1}_+\).

2. Problem (CVOP) is self-bounded if and only if (23) is self-bounded. Additionally, (CVOP) is bounded if and only if (23) is self-bounded with \((\mathscr {P}_a)_{\infty } = Q[{\text {cl}}C] + \mathbb {R}^{m+1}_+\).

3. If \(\bar{S} \subseteq S_a\) is a solution of (23), then \(\bar{X} := {\text {proj}}_x [\bar{S}]\) is an infimizer of (CVOP).

4. If \(\bar{X} \subseteq \mathscr {X}\) is an infimizer of (CVOP), then \(\bar{S} := \{ (x,y) \; \vert \; x \in \bar{X}, y \in \{\varGamma (x)\} + C \}\) is a solution of (23).

5. If the problem (CVOP) is bounded or self-bounded and \(\bar{X} \subseteq \mathscr {X}\) is a finite \(\varepsilon \)-infimizer of (CVOP), then \(\bar{S} := \{ (x, \varGamma (x)) \; \vert \; x \in \bar{X} \}\) is a finite \( ( \underline{\kappa } \cdot \varepsilon )\)-solution of (23).

6. If (23) is self-bounded and \(\bar{S} \subseteq S_a\) is a finite \(\varepsilon \)-solution of (23), then \(\bar{X} := {\text {proj}}_x [\bar{S}]\) is a finite \(\xi \)-infimizer of (CVOP) for any tolerance \(\xi > \dfrac{ \overline{\kappa } }{\delta _c}\cdot \varepsilon \).

5 Solution concept according to [1]

In the previous sections of this paper we worked with the definition of a finite \(\varepsilon \)-solution of a bounded (or self-bounded) convex vector optimization problem from [7, 13]. The idea there is to shift the (inner) approximation in a fixed direction to cover the full upper image (i.e., to obtain an outer approximation), see (2). In [1], a slightly different definition of a finite \(\varepsilon \)-solution of a bounded (CVOP) is proposed. Here the idea is to bound the Hausdorff distance between the inner approximation and the upper image by the given tolerance. To distinguish between the two definitions we will speak about [7]-finite \(\varepsilon \)-solutions and [1]-finite \(\varepsilon \)-solutions. In this section we revisit our main results, Theorems 2 and 3 from Sect. 3.2.2 and Theorems 4 and 5 from Sect. 4, under this alternative definition.

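Given a norm \(\Vert \cdot \Vert \), the Hausdorff distance between two sets \(A_1 \subseteq \mathbb {R}^n\) and \(A_2 \subseteq \mathbb {R}^n\) is defined as

$$\begin{aligned} d_H (A_1, A_2) = \max \left\{ \sup _{a_1 \in A_1} \inf _{a_2 \in A_2} \Vert a_1 - a_2 \Vert , \; \sup _{a_2 \in A_2} \inf _{a_1 \in A_1} \Vert a_1 - a_2 \Vert \right\} . \end{aligned}$$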

One easily verifies that for sets \(A_1 \subseteq A_2\) it holds \(d_H (A_1, A_2) = \sup _{a_2 \in A_2} \inf _{a_1 \in A_1} \Vert a_1 - a_2 \Vert \) and the condition \(d_H (A_1, A_2) \le \varepsilon \) is equivalent to the condition \(A_2 \subseteq A_1 + B_{\varepsilon }\). The following definition is proposed in [1] for a bounded (CVOP): A nonempty finite set \(\bar{X}\subseteq \mathscr {X}\) is a [1]-finite \(\varepsilon \)-infimizer of (CVOP) if

$$\begin{aligned} d_H \left( \mathscr {G}, {\text {conv}}\varGamma [\bar{X}] + C \right) \le \varepsilon . \end{aligned}$$

We now address the connection between these two definitions of finite \(\varepsilon \)-infimizers. This was done in [1, Proposition 3.5] under the Euclidean norm. Here, we adapt that proof to the p-norm.
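The Hausdorff distance and the nested-set simplification used above can be tried out on finite point sets. The following is a minimal sketch in plain Python; the helper names `p_norm`, `directed_dist` and `hausdorff` and the sample points are illustrative assumptions, and finite sets only stand in for the (generally infinite) sets in the text.

```python
INF = float("inf")

def p_norm(v, p):
    """p-norm of a vector v for p in [1, inf]."""
    if p == INF:
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)

def directed_dist(A, B, p=2):
    """sup over a in A of the distance from a to the finite set B."""
    return max(
        min(p_norm([s - t for s, t in zip(a, b)], p) for b in B)
        for a in A
    )

def hausdorff(A, B, p=2):
    """Hausdorff distance between two finite point sets."""
    return max(directed_dist(A, B, p), directed_dist(B, A, p))

# Nested sets: A1 is a subset of A2.
A1 = [(0.0, 0.0), (1.0, 0.0)]
A2 = A1 + [(0.5, 0.2)]

eps = hausdorff(A1, A2)
# For nested sets only the direction "from A2 to A1" contributes.
one_sided = directed_dist(A2, A1)
# d_H(A1, A2) <= eps means that every point of A2 lies in A1 + B_eps.
covered = all(
    min(p_norm([s - t for s, t in zip(a2, a1)], 2) for a1 in A1) <= eps
    for a2 in A2
)
print(eps, one_sided, covered)
```

Passing a different `p` to `hausdorff` switches the underlying norm, which is the only change needed when moving from the Euclidean norm to a general p-norm.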

Lemma 9

1. If \(\bar{X}\) is a [7]-finite \(\varepsilon \)-infimizer (see Definition 2) of a bounded problem (CVOP), then it is also a [1]-finite \(\varepsilon \)-infimizer of (CVOP).

2. If \(\bar{X}\) is a [1]-finite \(\varepsilon \)-infimizer of a bounded problem (CVOP), then it is also a [7]-finite \((k \cdot \varepsilon )\)-infimizer of (CVOP), where

$$\begin{aligned} k = \frac{ 1 }{ \min \left\{ w^{\mathsf {T}} c \; \vert \; w \in C^+, \Vert w \Vert _q = 1 \right\} }. \end{aligned}$$(25)

Here q is given by \(\frac{1}{p} + \frac{1}{q} = 1\) for \(p \in (1, \infty )\), respectively \(q = \infty \) for \(p = 1\) and \(q = 1\) for \(p = \infty \).
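To get a feeling for the constant k in (25), the sketch below evaluates it for one concrete choice that is an assumption of this example, not a requirement of the lemma: the cone \(C = \mathbb {R}^m_+\) (so that \(C^+ = \mathbb {R}^m_+\)) with the normalized direction \(c = \Vert \mathbb {1}\Vert _p^{-1} \mathbb {1}\). For \(w \ge 0\) one has \(\mathbb {1}^{\mathsf {T}} w = \Vert w \Vert _1 \ge \Vert w \Vert _q\), so the minimum is attained at a unit vector and \(k = \Vert \mathbb {1}\Vert _p\). The function names are hypothetical, and the random sampling is only a sanity check.

```python
import random

INF = float("inf")

def p_norm(v, p):
    """p-norm of a vector v for p in [1, inf]."""
    if p == INF:
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)

def dual_exponent(p):
    """Conjugate exponent q with 1/p + 1/q = 1 (q = inf for p = 1 and vice versa)."""
    if p == 1:
        return INF
    if p == INF:
        return 1.0
    return p / (p - 1.0)

def k_orthant(m, p):
    """k from (25), assuming C = R^m_+ and c = 1 / ||1||_p."""
    c = [1.0 / p_norm([1.0] * m, p)] * m
    e1 = [1.0] + [0.0] * (m - 1)  # a minimizer of w^T c on {w >= 0, ||w||_q = 1}
    return 1.0 / sum(w * ci for w, ci in zip(e1, c))

def sampled_min(m, p, trials=2000, seed=0):
    """Smallest value of w^T c over random feasible w (sanity check only)."""
    rng = random.Random(seed)
    q = dual_exponent(p)
    c = [1.0 / p_norm([1.0] * m, p)] * m
    best = INF
    for _ in range(trials):
        w = [rng.random() for _ in range(m)]
        scale = p_norm(w, q)
        w = [t / scale for t in w]  # now w >= 0 and ||w||_q = 1
        best = min(best, sum(wi * ci for wi, ci in zip(w, c)))
    return best

for p in (1, 2, INF):
    print(p, k_orthant(3, p), sampled_min(3, p))
```

Under these assumptions k grows like \(m^{1/p}\), so the gap between the two solution concepts is largest for \(p = 1\) and vanishes for \(p = \infty \).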

Proof

The first claim trivially follows from the fact that the element \(c \in {\text {int}}C\) is assumed to be normalized, so (2) implies \(\mathscr {G} \subseteq {\text {conv}}\varGamma [\bar{X}] + C + B_{\varepsilon }\).

We adapt the proof of [1, Proposition 3.5] to the case of a p-norm. We mainly highlight the changes required by the different norm and refer the reader to [1] for details. First, note that Hölder’s inequality implies \(\vert w^{\mathsf {T}} c \vert \le \Vert w\Vert _q \Vert c \Vert _p\), so \(k \ge 1\) since c is normalized. The closed convex set \({\text {conv}}\varGamma [\bar{X}] + C\) admits a representation

$$\begin{aligned} {\text {conv}}\varGamma [\bar{X}] + C = \bigcap _{i \in I} \left\{ y \in \mathbb {R}^m \; \vert \; w_i^{\mathsf {T}} y \ge \gamma _i \right\} \end{aligned}$$

for some index set I, scalars \(\gamma _i \in \mathbb {R}\) and vectors \(w_i \in C^+ \setminus \{0\}\) that without loss of generality satisfy \(\Vert w_i\Vert _q = 1\). Take arbitrary \(g \in \mathscr {G}\) and define \(k_g := \inf \left\{ t \ge 1 \; \vert \; g + \varepsilon t c \in {\text {conv}}\varGamma [\bar{X}] + C \right\} \). If \(k_g = 1\), then \(k_g \le k\) holds trivially. For \(k_g > 1\) there exists an index \(j \in I\) such that \(w^{\mathsf {T}}_j \left( g + \varepsilon k_g c \right) = \gamma _j\), which allows us to express \(k_g\) as \(k_g = \frac{\gamma _j - w^{\mathsf {T}}_j g}{\varepsilon w^{\mathsf {T}}_j c}\). Now we show that \(\gamma _j - w^{\mathsf {T}}_j g \le \varepsilon \): Since \(d_H \left( \mathscr {G}, {\text {conv}}\varGamma [\bar{X}] + C \right) \le \varepsilon \) holds, there exists \(u \in \mathbb {R}^m\) with \(\Vert u \Vert _p \le \varepsilon \) such that \(g + u \in {\text {conv}}\varGamma [\bar{X}] + C\). Assuming \(\gamma _j - w^{\mathsf {T}}_j g > \varepsilon \) would yield \(w^{\mathsf {T}}_j \left( g+u \right) \ge \gamma _j > w^{\mathsf {T}}_j g + \varepsilon \ge w^{\mathsf {T}}_j g + \Vert u\Vert _p \Vert w_j \Vert _q\), that is, \(w^{\mathsf {T}}_j u > \Vert u\Vert _p \Vert w_j \Vert _q\), which contradicts Hölder’s inequality. This yields

$$\begin{aligned} k_g = \frac{\gamma _j - w^{\mathsf {T}}_j g}{\varepsilon w^{\mathsf {T}}_j c} \le \frac{1}{w^{\mathsf {T}}_j c} \le k, \end{aligned}$$

which proves the claim. \(\square \)

Let us consider the convex projection (CP) and the associated multi-objective problem (8) in the bounded case. Note that for the multi-objective problem (8) with \(C = \mathbb {R}^{m+1}_+\) and \(c = \Vert \mathbb {1}\Vert ^{-1} \mathbb {1}\) (recall that \(\Vert \cdot \Vert \) denotes the p-norm) we have \( \min \{ \Vert \mathbb {1}\Vert ^{-1} w^{\mathsf {T}} \mathbb {1} \; \vert \; w \in \mathbb {R}^{m+1}_+, \Vert w \Vert _q = 1 \} = \Vert \mathbb {1}\Vert ^{-1}\), which follows by considering the problem \(\min \mathbb {1}^{\mathsf {T}} w \text { s.t. } \Vert w \Vert _q = 1, w \ge 0\). Note further, that condition (6) that defines a finite \(\varepsilon \)-solution of (CP) can be equivalently stated as \(d_H (Y, {\text {conv}}{\text {proj}}_y [\bar{S}]) \le \varepsilon .\) Recall that a nonempty finite set \(\bar{S} \subseteq S\) is a [7]-finite \(\varepsilon \)-solution of (8) if it holds

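and a nonempty finite set \(\bar{S} \subseteq S\) is a [1]-finite \(\varepsilon \)-solution of (8) if

$$\begin{aligned} d_H \left( \mathscr {P}, {\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+ \right) \le \varepsilon . \end{aligned}$$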

First, we revisit the question studied in Theorem 2 under the [1]-solution concept. We will show in Proposition 2 below that an approximate solution of the projection (CP) is also an approximate solution of (8) in the [1] sense, where the multiplier is given by the norm \(\Vert Q \Vert \). The value of \(\Vert Q \Vert \) is deduced in the following lemma.

Lemma 10

The operator norm \(\Vert Q \Vert \) of the matrix Q induced by the vector-p-norm is \(\Vert Q \Vert = \left( m^{p-1} + 1 \right) ^{\frac{1}{p}}\) for \(p \in [1, \infty )\) and \(\Vert Q \Vert = m\) for \(p=\infty \).

Proof

The operator norm is defined as \(\Vert Q \Vert = \max \limits _{\Vert x\Vert = 1} \Vert Q x \Vert \), which can be computed by considering the problem \(\max \mathbb {1}^{\mathsf {T}} x \text { s.t. } \Vert x \Vert = 1\). \(\square \)
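The formula of Lemma 10 can be sanity-checked numerically. The sketch below assumes that Q is the \((m+1) \times m\) matrix obtained by stacking the identity on top of the row \(-\mathbb {1}^{\mathsf {T}}\); Q is defined outside this section, so this form is an assumption (the sign of the last row does not affect the norm). The all-ones vector is used as a candidate maximizer, and random sampling checks that no sampled direction exceeds the claimed value. All function names are illustrative.

```python
import random

INF = float("inf")

def p_norm(v, p):
    """p-norm of a vector v for p in [1, inf]."""
    if p == INF:
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)

def apply_Q(x):
    """Q x for the assumed Q = [I; -1^T]: x with -sum(x) appended."""
    return list(x) + [-sum(x)]

def q_norm_formula(m, p):
    """Operator norm of Q as stated in Lemma 10."""
    if p == INF:
        return float(m)
    return (m ** (p - 1) + 1) ** (1.0 / p)

def ratio(x, p):
    """||Q x||_p / ||x||_p for a nonzero vector x."""
    return p_norm(apply_Q(x), p) / p_norm(x, p)

def sampled_max(m, p, trials=2000, seed=0):
    """Largest ratio over random directions (sanity check only)."""
    rng = random.Random(seed)
    return max(
        ratio([rng.uniform(-1.0, 1.0) for _ in range(m)], p)
        for _ in range(trials)
    )

m = 4
for p in (1, 1.5, 2, 3, INF):
    attained = ratio([1.0] * m, p)  # value at the all-ones vector
    print(p, q_norm_formula(m, p), attained, sampled_max(m, p))
```

The all-ones vector works as a maximizer here because it maximizes \(\mathbb {1}^{\mathsf {T}} x\) relative to \(\Vert x \Vert _p\), which matches the auxiliary problem mentioned in the proof.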

Proposition 2

Let the problem (CP) be bounded and let \(p \in [1, \infty ]\) be fixed. If the set \(\bar{S} \subseteq S\) is a finite \(\varepsilon \)-solution of (CP), then it is a [1]-finite \(\left( \Vert Q \Vert \cdot \varepsilon \right) \)-solution of (8).

Proof

First we show that \(d_H \left( \mathscr {P}, {\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+ \right) \le d_H \left( P[S], {\text {conv}}P[\bar{S}] \right) \). Since a closure does not influence the Hausdorff distance, the left-hand side is \(d_H ( P[S] + \mathbb {R}^{m+1}_+, {\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+ )\). Take arbitrary \(q \in P[S]\) and \(r \in \mathbb {R}^{m+1}_+\). Since the norm satisfies the triangle inequality we have

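As the points \(q \in P[S]\) and \(r \in \mathbb {R}^{m+1}_+\) are arbitrary, this gives the desired inequality. Now let \(\bar{S}\) be a finite \(\varepsilon \)-solution of (CP). Hence, it satisfies

$$\begin{aligned} d_H \left( Y, {\text {conv}}{\text {proj}}_y [\bar{S}] \right) \le \varepsilon . \end{aligned}$$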

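Keep in mind that the sets of interest satisfy \(P[S] = Q[Y]\) and \(P[\bar{S}] = Q [{\text {proj}}_y [\bar{S}]]\), see Lemma 2 (2) and its proof. The induced matrix norm \(\Vert \cdot \Vert : \mathbb {R}^{(m+1) \times m} \rightarrow \mathbb {R}\) is consistent, so this gives us the desired result,

$$\begin{aligned} d_H \left( \mathscr {P}, {\text {conv}}P[\bar{S}] + \mathbb {R}^{m+1}_+ \right) \le d_H \left( Q[Y], Q [{\text {conv}}{\text {proj}}_y [\bar{S}]] \right) \le \Vert Q \Vert \cdot d_H \left( Y, {\text {conv}}{\text {proj}}_y [\bar{S}] \right) \le \Vert Q \Vert \cdot \varepsilon . \end{aligned}$$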

\(\square \)
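The key step of this proof, that applying Q can enlarge a Hausdorff distance by at most the factor \(\Vert Q \Vert \), can be illustrated on random finite point sets. This is only a sketch: finite sets stand in for the convex sets of the proposition, Q is again assumed to be the identity stacked on the row \(-\mathbb {1}^{\mathsf {T}}\), and all helper names are hypothetical.

```python
import random

INF = float("inf")

def p_norm(v, p):
    """p-norm of a vector v for p in [1, inf]."""
    if p == INF:
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)

def apply_Q(y):
    """Q y for the assumed Q = [I; -1^T]: y with -sum(y) appended."""
    return list(y) + [-sum(y)]

def q_norm(m, p):
    """Operator norm of Q as stated in Lemma 10."""
    return float(m) if p == INF else (m ** (p - 1) + 1) ** (1.0 / p)

def hausdorff(A, B, p):
    """Hausdorff distance between two finite point sets in the p-norm."""
    def directed(U, V):
        return max(
            min(p_norm([s - t for s, t in zip(u, v)], p) for v in V)
            for u in U
        )
    return max(directed(A, B), directed(B, A))

def check(p, m=3, n_points=6, seed=1):
    """Return (d_H(Q[A], Q[B]), ||Q|| * d_H(A, B)) for random finite A, B."""
    rng = random.Random(seed)
    A = [[rng.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(n_points)]
    B = [[rng.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(n_points)]
    lhs = hausdorff([apply_Q(a) for a in A], [apply_Q(b) for b in B], p)
    rhs = q_norm(m, p) * hausdorff(A, B, p)
    return lhs, rhs

for p in (1, 2, INF):
    lhs, rhs = check(p)
    print(p, lhs, rhs, lhs <= rhs + 1e-12)
```

The inequality holds pointwise, since \(\Vert Q a - Q b \Vert \le \Vert Q \Vert \cdot \Vert a - b \Vert \) for every pair of points, which is exactly the consistency of the induced norm used in the proof.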