Abstract

Branch and Bound (B&B) algorithms in Global Optimization are used to perform an exhaustive search over the feasible area. One choice is to use simplicial partition sets. Obtaining sharp and cheap bounds of the objective function over a simplex is very important in the construction of efficient Global Optimization B&B algorithms. Although enclosing a simplex in a box implies an overestimation, boxes are more natural when dealing with individual coordinate bounds, and bounding ranges with Interval Arithmetic (IA) is computationally cheap. This paper introduces several linear relaxations using gradient information and Affine Arithmetic and experimentally studies their efficiency compared to traditional lower bounds obtained by natural and centered IA forms and their adaptation to simplices. A Global Optimization B&B algorithm with a monotonicity test over a simplex is used to compare their efficiency over a set of low dimensional test problems whose feasible set is either a box or a simplex. Numerical results show that it is possible to obtain tight lower bounds over simplicial subsets.


1 Introduction

A review of simplicial Branch and Bound (B&B) can be found in [15]. Recently, there has been renewed interest in generating tight bounds over simplicial partition sets. Karhbet and Kearfott [7] discuss range computation over simplices based on Interval Arithmetic. In [12], the focus is on using second derivative enclosures to generate bounds. These works do not take into account monotonicity considerations over the simplex, as discussed in [6]. Our research question is how information on the bounds of first derivatives can be used to derive tight bounds and to create new monotonicity tests in simplicial B&B. To investigate this question, we derive bounds based on derivative information and implement them in a B&B algorithm to compare the different techniques.

The rest of this paper is organized as follows. Section 2 introduces the notation. Section 3 presents several approaches to obtain lower bounds of a function over a simplex. Section 4 deals with monotonicity over a simplex. Section 5 describes the Global Optimization B&B algorithm to compare lower bounding methods over a simplex. Section 6 compares the results of the bounding techniques numerically on a large number of instances. Finally, Sect. 7 presents our findings.

2 Preliminaries

Consider a function \(f:\mathbb R^n \rightarrow \mathbb R\) which has to be minimized over a feasible set \(D\subset \mathbb R^n\), which is either a box or a simplex and on which f is differentiable:

\[\min _{x\in D} f(x).\]

The simplicial B&B algorithm to be investigated uses simplicial partition sets S and lower bounds of \(\min _{x\in S} f(x)\).

Notation 1

Let \(\mathcal {V}=\{v_0,\ldots ,v_n\}\subset \mathbb R^n\) denote a set of \(n+1\) affinely independent vertices. For the component i of vertex j, we use the notation \((v_j)_i\).

Notation 2

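An n-simplex S is determined by the convex hull of \(\mathcal {V}\), i.e.

\[S={\mathop \mathrm{conv}}(\mathcal {V})=\Big \{x\in \mathbb R^n: x=\sum _{j=0}^n\lambda _jv_j,\ \ \sum _{j=0}^n\lambda _j=1,\ \ \lambda _j\ge 0,\ j=0,\ldots ,n\Big \}. \tag{1}\]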

Notation 3

We denote intervals by boldface letters and their lower and upper bounds by ‘underline’ and ‘overline’, respectively. The radius of an interval \(\varvec{x}=[\underline{x},\overline{x}]\) is denoted by \({\mathop \mathrm{rad\,}}(\varvec{x})=\frac{\overline{x}-\underline{x}}{2}\) and its midpoint by \({\mathop \mathrm{mid\,}}(\varvec{x})= \frac{\overline{x}+\underline{x}}{2}\). For an interval vector (also called a box) these are taken component-wise. The width of a box \(\varvec{x}=(\varvec{x}_1,\ldots ,\varvec{x}_n)^T\) is defined as \({\mathop \mathrm{wid\,}}(\varvec{x})=2\max \limits _{i=1,\ldots ,n} {\mathop \mathrm{rad\,}}(\varvec{x}_i)\).

Notation 4

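The interval hull of a simplex S is denoted by \(\Box S=\Box {\mathop \mathrm{conv}}(\mathcal {V})\), that is, the smallest interval box enclosing the simplex S. Let \(\varvec{x}=\Box S\), where

\[\underline{x}_i=\min _{j=0,\ldots ,n}(v_j)_i,\qquad \overline{x}_i=\max _{j=0,\ldots ,n}(v_j)_i,\qquad i=1,\ldots ,n.\]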
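As a small illustration of Notations 3 and 4, the following Python sketch computes the interval hull of a simplex from its vertex list, together with mid, rad and wid. Representing intervals as plain (lower, upper) tuples is an assumption of this sketch; no outward rounding is performed, so it is not a rigorous interval implementation.

```python
# Sketch: interval hull of a simplex and the interval helpers of Notation 3.
# Intervals are plain (lower, upper) tuples; no outward rounding is done.
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]


def interval_hull(vertices: Sequence[Sequence[float]]) -> List[Interval]:
    """Smallest box containing conv(vertices): component-wise min and max."""
    n = len(vertices[0])
    return [(min(v[i] for v in vertices), max(v[i] for v in vertices))
            for i in range(n)]


def mid(x: Interval) -> float:
    return (x[0] + x[1]) / 2


def rad(x: Interval) -> float:
    return (x[1] - x[0]) / 2


def wid(box: Sequence[Interval]) -> float:
    """Width of a box: twice the largest component radius."""
    return 2 * max(rad(xi) for xi in box)


if __name__ == "__main__":
    S = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
    box = interval_hull(S)
    print(box, [mid(b) for b in box], wid(box))  # [(0.0, 1.0), (0.0, 2.0)] [0.5, 1.0] 2.0
```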

Remark 1

For cases where \(\varvec{x}=\Box S\subseteq D\), f is differentiable over \(\varvec{x}\). Notice that if D is a box and \(S\subset D\), automatically we have \(\Box S\subseteq D\). However, if D is a simplex, then f is not necessarily differentiable over \(\varvec{x}=\Box S\).

Notation 5

The boundary and interior of set S is denoted by \(\partial S\) and \({{\mathop \mathrm{int\,}}}S\), respectively, where \(S = \partial S \cup {{\mathop \mathrm{int\,}}}S\) and \(\partial S \cap {{\mathop \mathrm{int\,}}}S=\emptyset \).

3 Bounding techniques over a simplex

3.1 Extension of standard interval bounding techniques to simplices

Extensive investigation on Interval Arithmetic has led to many ways to derive rigorous bounds, see for instance [5, 8, 13, 17].

Notation 6

Let \(\varvec{f}\) denote the natural interval extension [13] of an expression f with

\[\{f(x): x\in \varvec{x}\}\subseteq \varvec{f}(\varvec{x}).\]

Remark 2

\(\forall x\in S\subset \varvec{x}=\Box S\), \(f(x) \in \varvec{f}(\varvec{x})\).

Notation 7

Let \({\varvec{\nabla \varvec{f}}(\varvec{x})}\) denote an enclosure of the gradient and \({\varvec{\nabla \varvec{f}}_i(\varvec{x})}=[\underline{\nabla \varvec{f}}_i(\varvec{x}), \overline{\nabla \varvec{f}}_i(\varvec{x})]\) the i-th component of the interval gradient. They can be computed using Interval Arithmetic and Automatic Differentiation [16].

Remark 3

\(\forall x\in \varvec{x}\), \(\frac{\partial f}{\partial x_i}(x) \in {\varvec{\nabla \varvec{f}}}_i(\varvec{x})\). Then, \(\forall x\in S\subset \varvec{x}= \Box S\), \(\frac{\partial f}{\partial x_i}(x) \in {\varvec{\nabla \varvec{f}}_i(\varvec{x})}\).

Notation 8

A centered form on a box \(\varvec{x}\) with center c is denoted by \(\varvec{f}_c(\varvec{x})\). It is the interval extension of the first-order Taylor expansion using \(\varvec{\nabla }\varvec{f}(\varvec{x})\):

\[\varvec{f}_c(\varvec{x})=\varvec{f}(c)+(\varvec{x}-c)^T\varvec{\nabla }\varvec{f}(\varvec{x}).\]

Usually c is the midpoint (or center) of box \(\varvec{x}\). In that case, we refer to \(\varvec{f}_{cb}(\varvec{x})\) where \(cb={\mathop \mathrm{mid\,}}(\varvec{x})\). The lower bound \(\underline{\varvec{f}_c}(\varvec{x})\) can also be written as \(\underline{\varvec{f}(c)+(\varvec{x}-c)^T \varvec{\nabla }\varvec{f}(\varvec{x})}=\underline{\varvec{f}}(c)+\underline{(\varvec{x}-c)^T \varvec{\nabla }\varvec{f}(\varvec{x})}\), where underline takes the lower bound of the formula computed by IA.
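To make the centered form concrete, here is a minimal Python sketch of its lower bound on a box. It assumes that enclosures of f(c) and of the gradient are already available as (lower, upper) tuples; the helper names are hypothetical and rounding errors are ignored, so the result is not rigorous.

```python
# Sketch: lower bound of the centered form f_c(x) = f(c) + (x - c)^T grad f(x)
# on a box. Plain floats, no outward rounding, so the bound is not rigorous.
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def imul(a: Interval, b: Interval) -> Interval:
    """Product of two intervals via the four endpoint products."""
    p = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(p), max(p))


def centered_form_lower(f_c: Interval, c: Sequence[float],
                        box: Sequence[Interval],
                        grad: Sequence[Interval]) -> float:
    """Lower end of f(c) + sum_i (x_i - c_i) * grad_i over the box."""
    total = f_c[0]
    for (lo, hi), ci, g in zip(box, c, grad):
        total += imul((lo - ci, hi - ci), g)[0]
    return total


if __name__ == "__main__":
    # f(x) = x^2 on [-1, 2]; gradient enclosure 2x -> [-2, 4]; c = mid = 0.5
    print(centered_form_lower((0.25, 0.25), [0.5], [(-1.0, 2.0)], [(-2.0, 4.0)]))  # -5.75
```

For \(f(x)=x^2\) on \([-1,2]\) with gradient enclosure \([-2,4]\) and c = 0.5, the bound is −5.75, while the true minimum is 0.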

Remark 4

\(\forall x\in S\subset \varvec{x}= \Box S\), \(f(x)\in \varvec{f}_{c}(\varvec{x})\). Thus, \(\varvec{f}_{c}(\varvec{x})\) provides lower and upper bounds of f over S, even if \(c\not \in S\).

Baumann [3] proposed another base-point instead of the center cb to improve the lower and upper bounds of the centered form.

Notation 9

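We denote the Baumann base-point for the optimal lower bound in the centered form on a box by \(bb^-\). Component i is given by

\[bb^-_i=\begin{cases}\underline{x}_i, & \text{if } \underline{\nabla \varvec{f}}_i(\varvec{x})\ge 0,\\ \overline{x}_i, & \text{if } \overline{\nabla \varvec{f}}_i(\varvec{x})\le 0,\\ \dfrac{\overline{\nabla \varvec{f}}_i(\varvec{x})\,\underline{x}_i-\underline{\nabla \varvec{f}}_i(\varvec{x})\,\overline{x}_i}{\overline{\nabla \varvec{f}}_i(\varvec{x})-\underline{\nabla \varvec{f}}_i(\varvec{x})}, & \text{otherwise.}\end{cases}\]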
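The case distinction for \(bb^-\) can be sketched component by component as follows; box and gradient enclosures are (lower, upper) tuples and the function name is hypothetical.

```python
# Sketch: Baumann base-point bb^- for the best lower bound of the centered
# form on a box, computed component by component. Hypothetical helper name.
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]


def baumann_lower_point(box: Sequence[Interval],
                        grad: Sequence[Interval]) -> List[float]:
    point = []
    for (lo, hi), (glo, ghi) in zip(box, grad):
        if glo >= 0:      # f non-decreasing in x_i: base at the lower end
            point.append(lo)
        elif ghi <= 0:    # f non-increasing in x_i: base at the upper end
            point.append(hi)
        else:             # 0 strictly inside the gradient enclosure
            point.append((ghi * lo - glo * hi) / (ghi - glo))
    return point


if __name__ == "__main__":
    # f(x) = x^2 on [-1, 2], gradient enclosure [-2, 4]
    print(baumann_lower_point([(-1.0, 2.0)], [(-2.0, 4.0)]))  # [0.0]
```

In this example the Baumann point is 0, and the centered-form lower bound becomes \(f(0)+\min ([-1,2]\cdot [-2,4])=-4\), tighter than the −5.75 obtained with the midpoint of the box.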

Any centered form (with a base-point \(y\in \varvec{x}\)) can be tightened based on the vertices of simplex S.

Proposition 1

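Let

\[\underline{\varvec{f}_y}(S)=\underline{\varvec{f}}(y)+\min _{v\in \mathcal V}\underline{(v-y)^T\varvec{\nabla }\varvec{f}(\varvec{x})},\qquad \varvec{x}=\Box S. \tag{3}\]

Then \(\underline{\varvec{f}_y}(S)\le \min _{x\in S}f(x)\).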

Proof

A first-order Taylor form provides a concave lower bounding function [3, 25]. A concave function attains its minimum over a polytope at one of its extreme points. Consequently, the lower bounding function over the simplex attains its minimum at a vertex of the simplex. Thus, instead of computing the interval enclosure over \(\varvec{x}=\Box S\), taking the minimum over the simplex vertices provides a valid lower bound.\(\square \)
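A minimal Python sketch of bound (3), next to the box version of the same centered form, shows where the gain comes from: only the n + 1 simplex vertices are visited instead of the whole box. The inputs (a lower bound of f(y), the base-point y, the vertices and the gradient enclosure on \(\Box S\) as (lower, upper) tuples) are assumed to be given; rounding is ignored.

```python
# Sketch of bound (3): lower f(y) + min over simplex vertices v of the lower end
# of (v - y)^T grad, with the gradient enclosure taken on the box []S.
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def simplex_centered_lower(f_y_lower: float, y: Sequence[float],
                           vertices: Sequence[Sequence[float]],
                           grad: Sequence[Interval]) -> float:
    best = float("inf")
    for v in vertices:
        s = 0.0
        for vi, yi, (glo, ghi) in zip(v, y, grad):
            d = vi - yi
            s += min(d * glo, d * ghi)  # lower end of the scalar d times [glo, ghi]
        best = min(best, s)
    return f_y_lower + best


def box_centered_lower(f_y_lower: float, y: Sequence[float],
                       box: Sequence[Interval], grad: Sequence[Interval]) -> float:
    """Same base-point, but bounding (x - y)^T grad over the whole box."""
    s = 0.0
    for (lo, hi), yi, (glo, ghi) in zip(box, y, grad):
        p = ((lo - yi) * glo, (lo - yi) * ghi, (hi - yi) * glo, (hi - yi) * ghi)
        s += min(p)
    return f_y_lower + s


if __name__ == "__main__":
    V = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    g = [(-1.0, 2.0), (-1.0, 2.0)]
    print(simplex_centered_lower(0.0, [0.0, 0.0], V, g),
          box_centered_lower(0.0, [0.0, 0.0], [(0.0, 1.0), (0.0, 1.0)], g))  # -1.0 -2.0
```

With gradient enclosure \([-1,2]\) in both components, base-point y = (0, 0) and the unit simplex, the simplicial bound is −1, whereas the box bound over \([0,1]^2\) is −2.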

Remark 5

We can use \(y=cb\) or \(y=bb^-\) in (3).

Now, it is interesting to see how the Baumann point \(bb^-\) can be generalized to a simplicial base-point. For \(bb^-\), the aim is to select the base-point of the Taylor form such that the lower bound is as high as possible. For a simplex, instead of using the bounds of the enclosing box \(\varvec{x}=\Box S\), we use the simplex vertices.

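The highest lower bound in (3) over a simplex is obtained at the base-point

\[\mathop {\hbox {argmax}}\limits _{y\in \varvec{x}}\Big \{\underline{\varvec{f}}(y)+\min _{v\in \mathcal V}\underline{(v-y)^T\varvec{\nabla }\varvec{f}(\varvec{x})}\Big \}. \tag{4}\]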

Optimizing (4) is a nonlinear problem, because the term \(\underline{\varvec{f}}(y)\) varies with y. Therefore, it is advisable to optimize only the second term.

Definition 1

Let us define \(bs^-=\mathop {\hbox {argmax}}\limits _{y\in \varvec{x}}\min _{v\in \mathcal {V}}\underline{(v-y)^T{\varvec{\nabla \varvec{f}}(\varvec{x})}}\) as the Baumann point over the simplex. This point can be found by solving the interval linear program

\[\max _{z\in \mathbb R,\ y\in \varvec{x}}\ z\quad \text{s.t.}\quad z\le \underline{(v-y)^T\varvec{\nabla }\varvec{f}(\varvec{x})},\qquad v\in \mathcal V. \tag{5}\]

Let \((z^*,y^*)\) be the optimum of (5). Then we take the base-point \(bs^-=y^*\) with the corresponding lower bound \(\underline{\varvec{f}_{bs^-}}(S)=\underline{\varvec{f}}(bs^-)+z^*\).

Notation 10

\(\nabla ^w \varvec{f}(\varvec{x}) \in \mathbb R^n\) denotes gradient bounds with components \(\nabla ^w \varvec{f}_i(\varvec{x})=\underline{\nabla \varvec{f}}_i({\varvec{x})}\) if \(w_i=\underline{x}_i\) and \(\nabla ^w \varvec{f}_i(\varvec{x})=\overline{\nabla \varvec{f}}_i({\varvec{x})}\) if \(w_i=\overline{x}_i\).

Remark 6

In Notation 10, all possible variations of lower and upper bounds of the gradients are taken into account when considering all vertices w of \(\varvec{x}\).

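Writing (5) as a linear program requires \(2^n\) constraints for each vertex \(v\in \mathcal V\). The constraint of (5) for vertex v reads

\[z\le \sum _{i=1}^n\min \{(v_i-y_i)\underline{\nabla \varvec{f}}_i(\varvec{x}),\ (v_i-y_i)\overline{\nabla \varvec{f}}_i(\varvec{x})\},\qquad v\in \mathcal V. \tag{6}\]

Using Notation 10, the constraints in (6) can be written as \(2^n\) linear inequalities per vertex v:

\[z\le (v-y)^T\nabla ^w\varvec{f}(\varvec{x}),\qquad \text{for all vertices } w \text{ of } \varvec{x},\ v\in \mathcal V. \tag{7}\]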

Note that we do not force \(bs^-\) to be in simplex S, because it may happen that a point outside S would give the best lower bound. In case we want to use \(\overline{\varvec{f}}(bs^-)\) to update the upper bound of the global minimum in a B&B algorithm, \(bs^-\) has to be in the initial search region. In our experiments we force \(bs^-\) to be in S by adding \(y \in S\) using simplex inclusion constraints (1) to (7) in a similar way as it is done in (10).

Notice that (5), (6) and (7) are equivalent descriptions of the same problem, thus providing the same optimum corresponding to the same bound.
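The equivalence rests on a simple identity, which the following Python sketch checks numerically: the component-wise lower end of \((v-y)^T\varvec{\nabla }\varvec{f}(\varvec{x})\) equals the minimum of \((v-y)^Tg\) over the \(2^n\) corner vectors g of the gradient box, i.e. over the vectors \(\nabla ^w\varvec{f}(\varvec{x})\) of Notation 10. Each corner yields one linear inequality in (z, y). Gradient enclosures given as (lower, upper) tuples are an assumption of the sketch.

```python
# Sketch: the lower end of (v - y)^T grad over an interval gradient equals the
# minimum over the 2^n corner vectors of the gradient box, so each constraint
# of (5) splits into 2^n linear inequalities z <= (v - y)^T g.
from itertools import product
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def lower_by_interval(v: Sequence[float], y: Sequence[float],
                      grad: Sequence[Interval]) -> float:
    """Component-wise interval evaluation of the lower end."""
    return sum(min((vi - yi) * glo, (vi - yi) * ghi)
               for vi, yi, (glo, ghi) in zip(v, y, grad))


def lower_by_corners(v: Sequence[float], y: Sequence[float],
                     grad: Sequence[Interval]) -> float:
    """Enumeration of the 2^n gradient corners (one linear inequality each)."""
    return min(sum((vi - yi) * gi for vi, yi, gi in zip(v, y, g))
               for g in product(*grad))


if __name__ == "__main__":
    v, y, grad = (1.0, -2.0), (0.5, 0.5), [(-1.0, 3.0), (2.0, 4.0)]
    print(lower_by_interval(v, y, grad), lower_by_corners(v, y, grad))  # -10.5 -10.5
```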

3.2 Linear relaxation based lower bounds

Following earlier results in interval based B&B [14, 20, 21], we can now define other lower bounds for simplicial subsets.

3.2.1 Standard linear relaxation of f over a box

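Let w be a vertex of \(\varvec{x}= \Box S\) and consider a first-order Taylor expansion around w. With the gradient bounds of Notation 10 it gives

\[f(x)\ge f(w)+(x-w)^T\nabla ^w\varvec{f}(\varvec{x}),\qquad \forall x\in \varvec{x}, \tag{8}\]

because \(x_i-w_i\ge 0\) when \(w_i=\underline{x}_i\) and \(x_i-w_i\le 0\) when \(w_i=\overline{x}_i\). Since \(\varvec{x}\) has \(2^n\) vertices, we obtain \(2^n\) inequalities from Eq. (8), see [10] for more details. Consider the linear program

\[\min _{x\in \varvec{x},\,z\in \mathbb R}\ z\quad \text{s.t.}\quad z\ge f(w)+(x-w)^T\nabla ^w\varvec{f}(\varvec{x})\ \ \text{for all vertices } w \text{ of } \varvec{x}. \tag{9}\]

Let \((x^*,z^*)\) be the optimal solution of (9), then

\[z^*\le \min _{x\in \varvec{x}}f(x),\]

such that \(z^*\) is a lower bound of f over \(\varvec{x}\). Since \(S\subset \varvec{x}=\Box S\), \(z^*\) is also a lower bound of f over S.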
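The sketch below assembles LP (9) in matrix form, one inequality row per box vertex w, using the gradient selection of Notation 10. The variable order [x, z] and the callback `f_at`, which should return a (lower bound of the) function value at a box vertex, are assumptions of this illustration; solving the LP is left to an external solver.

```python
# Sketch: assemble LP (9) as  min c^T [x, z]  s.t.  A_ub [x, z] <= b_ub,
# one row per box vertex w (2^n rows). Variable order [x_1..x_n, z] is an
# assumption of this sketch; f_at(w) should be a lower bound of f(w).
from itertools import product
from typing import Callable, List, Sequence, Tuple

Interval = Tuple[float, float]


def lr_box_lp(f_at: Callable[[Tuple[float, ...]], float],
              box: Sequence[Interval], grad: Sequence[Interval]):
    n = len(box)
    c = [0.0] * n + [1.0]                       # minimize z
    A_ub: List[List[float]] = []
    b_ub: List[float] = []
    for w in product(*box):                     # the 2^n vertices of the box
        # Notation 10: lower gradient bound where w_i is the lower end, upper otherwise
        g = [glo if wi == lo else ghi
             for wi, (lo, _), (glo, ghi) in zip(w, box, grad)]
        # z >= f(w) + g^T (x - w)   <=>   g^T x - z <= g^T w - f(w)
        A_ub.append(g + [-1.0])
        b_ub.append(sum(gi * wi for gi, wi in zip(g, w)) - f_at(w))
    bounds = list(box) + [(None, None)]         # x in the box, z free
    return c, A_ub, b_ub, bounds


if __name__ == "__main__":
    # f(x) = x^2 on [-1, 2] with gradient enclosure [-2, 4]
    c, A, b, bnds = lr_box_lp(lambda w: w[0] ** 2, [(-1.0, 2.0)], [(-2.0, 4.0)])
    print(A, b)  # [[-2.0, -1.0], [4.0, -1.0]] [1.0, 4.0]
```

In this example the two rows state \(z\ge -2x-1\) and \(z\ge 4x-4\), so the optimum is \(z^*=-2\) at x = 0.5. If SciPy is available, `scipy.optimize.linprog(c, A_ub=A, b_ub=b, bounds=bnds)` is one way to solve such an LP; the experiments in Sect. 6 use LPsolve through PNL instead.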

3.2.2 Linear relaxation of f over a simplex

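We now focus on the bounds of f over simplex \(S={\mathop \mathrm{conv}}(\mathcal {V})\). The earlier bound in (9) is valid for f over \(\varvec{x}=\Box S\), such that it is also a bound over the simplex S. However, it is worthwhile to force \(x\in \varvec{x}\) to lie inside S, as in (1). Introducing the corresponding linear equations into problem (9) provides the linear program

\[\begin{aligned}\min _{x,z,\lambda }\ & z\\ \text{s.t.}\ & z\ge f(w)+(x-w)^T\nabla ^w\varvec{f}(\varvec{x})\quad \text{for all vertices } w \text{ of } \varvec{x},\\ & x=\sum _{j=0}^n\lambda _jv_j,\quad \sum _{j=0}^n\lambda _j=1,\quad \lambda _j\ge 0,\ j=0,\ldots ,n.\end{aligned} \tag{10}\]

Let \((x^*,z^*, \lambda ^*)\) be the solution of (10). Then we have that

\[z^*\le \min _{x\in S}f(x),\]

and therefore, \(z^*\) is a lower bound of f over S.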
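Going from (9) to (10) only adds the simplex inclusion constraints (1). A minimal sketch of those equality rows follows; it assumes the variable order [x, z, λ], so the inequality rows of (9) would have to be padded with n + 1 zeros for the λ columns. The function name is hypothetical.

```python
# Sketch: the extra rows that turn LP (9) into LP (10), i.e. x = sum_j lam_j v_j,
# sum_j lam_j = 1, lam >= 0. Assumed variable order: [x_1..x_n, z, lam_0..lam_n].
from typing import List, Sequence


def simplex_equalities(vertices: Sequence[Sequence[float]]):
    n = len(vertices[0])
    m = len(vertices)                     # n + 1 vertices
    A_eq: List[List[float]] = []
    b_eq: List[float] = []
    for i in range(n):                    # x_i - sum_j lam_j (v_j)_i = 0
        row = [0.0] * (n + 1 + m)
        row[i] = 1.0
        for j, v in enumerate(vertices):
            row[n + 1 + j] = -float(v[i])
        A_eq.append(row)
        b_eq.append(0.0)
    A_eq.append([0.0] * (n + 1) + [1.0] * m)   # sum_j lam_j = 1
    b_eq.append(1.0)
    lam_bounds = [(0.0, None)] * m             # lam_j >= 0
    return A_eq, b_eq, lam_bounds
```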

A straightforward idea is to consider the vertices of the simplex instead of the vertices of the enclosing box. Unfortunately, such a formulation leads to a Mixed Integer Programming problem, as the piece-wise linear lower bounding function is neither convex nor concave anymore.

3.3 Bounding technique using Affine Arithmetic

This section describes the use of Affine Arithmetic (see [2, 4, 9, 11, 18, 22]) to generate a linear underestimation of function f over \(\varvec{x}\)=\(\Box S\). We add the constraint that the solution has to be inside the simplex \(S={\mathop \mathrm{conv}}(\mathcal {V})\), see (1). This provides a linear program.

First, we focus on the transformation of an interval vector into a vector of affine forms. Second, we describe how the computations are made using Affine Arithmetic to provide linear equations. Third, we sketch how the so-obtained linear equations are used to provide linear underestimations of f over \(\varvec{x}\) and then we provide the linear program to find a lower bound of f over the simplex S. Fourth, we show a simple way to solve the linear program.

3.3.1 Conversion into affine forms

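The interval vector \(\varvec{x}=\Box S\) can be converted into an affine form vector, denoted by \({\hat{x}}\), as follows

\[\hat{x}_i={\mathop \mathrm{mid\,}}(\varvec{x}_i)+{\mathop \mathrm{rad\,}}(\varvec{x}_i)\,\epsilon _i,\qquad i=1,\ldots ,n, \tag{11}\]

where \(\epsilon _i\in [-1,1]\) for all \(i\in \{1,\ldots ,n\}\). The affine form \(\hat{x}\) can be transformed back into an interval by replacing \(\epsilon _i\) with \([-1,1]\). Moreover, for all \(x \in \varvec{x}\), there is exactly one corresponding value of \(\epsilon \) in the affine description,

\[x=T(\varvec{x},\epsilon ),\qquad T(\varvec{x},\epsilon )_i={\mathop \mathrm{mid\,}}(\varvec{x}_i)+{\mathop \mathrm{rad\,}}(\varvec{x}_i)\,\epsilon _i,\]

where \(\epsilon _i=\frac{x_i-{\mathop \mathrm{mid\,}}(\varvec{x}_i)}{{\mathop \mathrm{rad\,}}(\varvec{x}_i)}, i=1,\ldots ,n\), provided that \({\mathop \mathrm{rad\,}}(\varvec{x}_i)\ne 0\).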
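The conversion (11) and its inverse are simple affine maps. The sketch below illustrates them with hypothetical helper names and (lower, upper) tuples; a component with zero radius is mapped to \(\epsilon _i=0\), an assumption of the sketch.

```python
# Sketch of the box <-> affine-form correspondence (11): x_i = mid_i + rad_i * eps_i.
# A component with zero radius is mapped to eps_i = 0 (its coefficient is 0 anyway).
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]


def to_affine(box: Sequence[Interval]) -> List[Tuple[float, float]]:
    """(center, coefficient of eps_i) for each component."""
    return [((lo + hi) / 2, (hi - lo) / 2) for lo, hi in box]


def eps_of(x: Sequence[float], box: Sequence[Interval]) -> List[float]:
    """The unique eps in [-1, 1]^n describing x (for nonzero radii)."""
    eps = []
    for xi, (lo, hi) in zip(x, box):
        r = (hi - lo) / 2
        eps.append((xi - (lo + hi) / 2) / r if r != 0 else 0.0)
    return eps


def point_of(eps: Sequence[float], box: Sequence[Interval]) -> List[float]:
    """T(box, eps): back from noise symbols to a point of the box."""
    return [(lo + hi) / 2 + (hi - lo) / 2 * e for e, (lo, hi) in zip(eps, box)]


if __name__ == "__main__":
    box = [(0.0, 1.0), (0.0, 2.0)]
    print(eps_of([1.0, 0.0], box), point_of([1.0, -1.0], box))  # [1.0, -1.0] [1.0, 0.0]
```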

3.3.2 Affine arithmetic

By replacing all the occurrences of the variable \(x_i\) by the corresponding affine form \({\hat{x}_i}\) in an expression of f, and by performing the computations using Affine Arithmetic, we obtain a resulting affine form, denoted by

\[\hat{f}=r_0+\sum _{i=1}^n r_i\epsilon _i+\sum _{k=n+1}^N r_k\epsilon _k, \tag{12}\]

where \(\epsilon _j\) is in \([-1,1]\) for all \(j\in \{1,\ldots ,N\}\). Note that the error terms \(r_k\epsilon _k\), \(k\in \{n+1,\ldots ,N\}\), are added by the non-affine operations in f.

3.3.3 Linear underestimation of f over \(\varvec{x}\)

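Using Affine Arithmetic, (12) underestimates f over \(\varvec{x}\):

\[f(x)\ge r_0+\sum _{i=1}^n r_i\epsilon _i-\sum _{k=n+1}^N|r_k|,\qquad x=T(\varvec{x},\epsilon )\in \varvec{x}, \tag{13}\]

because all error terms are taken into account with their worst-case value.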

Remark 7

Equation (13) is a linear underestimation of f over \(\varvec{x}\) using the new variables \(\epsilon _i\).
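To illustrate how (12) and (13) arise, here is a deliberately minimal Affine Arithmetic sketch supporting only addition and multiplication. It is not the Kv implementation used in Sect. 6: the product rule bounds the non-affine part by the product of the two radii (a standard conservative choice), creates one new error symbol per product, and ignores rounding errors. The class and function names are hypothetical.

```python
# Minimal Affine Arithmetic sketch (only + and *), not Kv's implementation.
# Input symbols are keyed ("x", i); error symbols created by products ("e", k).
import itertools


class Affine:
    _err_ids = itertools.count()          # ids of new error symbols

    def __init__(self, r0, terms):
        self.r0 = r0
        self.terms = dict(terms)          # symbol -> coefficient

    @staticmethod
    def variable(i, lo, hi):
        """Affine form (11) of the box component [lo, hi]."""
        return Affine((lo + hi) / 2, {("x", i): (hi - lo) / 2})

    def rad(self):
        return sum(abs(c) for c in self.terms.values())

    def __add__(self, other):
        if not isinstance(other, Affine):
            return Affine(self.r0 + other, self.terms)
        t = dict(self.terms)
        for k, c in other.terms.items():
            t[k] = t.get(k, 0.0) + c
        return Affine(self.r0 + other.r0, t)

    def __mul__(self, other):
        if not isinstance(other, Affine):
            return Affine(self.r0 * other, {k: c * other for k, c in self.terms.items()})
        t = {k: self.r0 * other.terms.get(k, 0.0) + other.r0 * self.terms.get(k, 0.0)
             for k in set(self.terms) | set(other.terms)}
        # non-affine part lies in [-rad*rad, rad*rad]: one new error symbol
        t[("e", next(Affine._err_ids))] = self.rad() * other.rad()
        return Affine(self.r0 * other.r0, t)

    __radd__ = __add__
    __rmul__ = __mul__


def underestimator(a, n):
    """Coefficients of (13): f >= c0 + sum_i r_i eps_i with c0 = r0 - sum_k |r_k|."""
    r = [a.terms.get(("x", i), 0.0) for i in range(n)]
    err = sum(abs(c) for k, c in a.terms.items() if k[0] == "e")
    return a.r0 - err, r


if __name__ == "__main__":
    x1, x2 = Affine.variable(0, 0.0, 1.0), Affine.variable(1, 0.0, 2.0)
    c0, r = underestimator(x1 * x2 + x1, 2)
    print(c0, r)  # 0.5 [1.0, 0.5]
```

For \(f(x)=x_1x_2+x_1\) on \([0,1]\times [0,2]\), the sketch yields the underestimator \(0.5+\epsilon _1+0.5\epsilon _2\), whose minimum over the box is −1, a valid lower bound for the true minimum 0.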

3.3.4 Linear program to provide lower bounds

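In order to compute a lower bound of f over the simplex S (and not only over \(\varvec{x}=\Box S\)), we constrain the point x to be inside S by adding (1). In this case, we describe x by its affine form \(T(\varvec{x},\epsilon )\) and thus obtain the following linear program

\[\min _{\epsilon ,\lambda }\ r_0+\sum _{i=1}^n r_i\epsilon _i-\sum _{k=n+1}^N|r_k|\quad \text{s.t.}\quad T(\varvec{x},\epsilon )=\sum _{j=0}^n\lambda _jv_j,\ \ \sum _{j=0}^n\lambda _j=1,\ \ \lambda _j\ge 0,\ \ \epsilon \in [-1,1]^n. \tag{14}\]

Denoting the exact solution of (14) by \((\epsilon ^*, \lambda ^*)\), we have that

\[r_0+\sum _{i=1}^n r_i\epsilon _i^*-\sum _{k=n+1}^N|r_k|\le \min _{x\in S}f(x), \tag{15}\]

and therefore, this is a lower bound of f over S.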

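Note that to solve the above linear program, we only need to evaluate the linear underestimation (13) at each vertex of S and take the minimum value, because a linear function attains its minimum over a simplex at one of its vertices. If \({\mathop \mathrm{rad\,}}(\varvec{x}_i)\ne 0\) (else \(r_i=0\)), \(\epsilon _i(v_j)\) denotes the transformation of component i of vertex \(v_j\) into variable \(\epsilon \):

\[\epsilon _i(v_j)=\frac{(v_j)_i-{\mathop \mathrm{mid\,}}(\varvec{x}_i)}{{\mathop \mathrm{rad\,}}(\varvec{x}_i)}.\]

Then, the lower bound of (15) becomes

\[\min _{j=0,\ldots ,n}\Big (r_0+\sum _{i=1}^n r_i\epsilon _i(v_j)\Big )-\sum _{k=n+1}^N|r_k|. \tag{16}\]

Therefore, instead of solving linear program (14), we can compute (16), which directly yields the lower bound of f over S and coincides with the optimal value of (14).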
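Bound (16) therefore reduces to n + 1 evaluations of a linear function. The following sketch assumes that \(c_0=r_0-\sum _{k>n}|r_k|\) and the coefficients \(r_i\) have already been obtained by Affine Arithmetic over \(\Box S\); the function name is hypothetical.

```python
# Sketch of bound (16): evaluate the linear underestimator c0 + sum_i r_i eps_i(v)
# at each vertex v of S and keep the minimum (a linear function attains its
# minimum over a simplex at a vertex). c0 = r0 - sum of |error coefficients|.
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def aa_simplex_lower(c0: float, r: Sequence[float], box: Sequence[Interval],
                     vertices: Sequence[Sequence[float]]) -> float:
    best = float("inf")
    for v in vertices:
        val = c0
        for ri, vi, (lo, hi) in zip(r, v, box):
            rad = (hi - lo) / 2
            if rad != 0:                      # otherwise r_i = 0 and the term vanishes
                val += ri * (vi - (lo + hi) / 2) / rad
        best = min(best, val)
    return best


if __name__ == "__main__":
    # Assumed AA underestimator of f = x1*x2 + x1 on [0,1]x[0,2]: c0 = 0.5, r = (1, 0.5)
    box = [(0.0, 1.0), (0.0, 2.0)]
    S = [(1.0, 0.0), (0.0, 2.0), (1.0, 2.0)]  # its interval hull is exactly `box`
    print(aa_simplex_lower(0.5, [1.0, 0.5], box, S))  # 0.0
```

For \(f(x)=x_1x_2+x_1\) on \([0,1]\times [0,2]\), the standard AA product rule gives \(c_0=0.5\) and \(r=(1,0.5)\). Over the simplex with vertices (1, 0), (0, 2), (1, 2), whose interval hull is that box, (16) gives 0, which here equals the minimum of f over the simplex; the same underestimator gives −1 over the whole box.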

4 Monotonicity test

In this paper, we use a concise monotonicity test which excludes an interior partition set S if it does not contain a stationary point. To be more precise:

Proposition 2

Let \(S\subset int(D)\) be a simplex in the interior of the search area D. If \(\exists i\in \{1,\ldots , n\}\) with \(0\notin {\varvec{\nabla }\varvec{f}}_i(\Box S)\) then S does not contain a global minimum point.

Proof

The condition implies that

\[\frac{\partial f}{\partial x_i}(x)\ne 0,\qquad \forall x\in S,\]

such that S cannot contain an interior minimum of D. Moreover, S does not touch the boundary, i.e. \(S\cap \partial D=\emptyset \), so it cannot contain a boundary minimum point either. \(\square \)

The test is not very strong, as initial partition sets typically touch the boundary. A slight relaxation is the following corollary, where a simplicial partition set has only vertices in common with the boundary.

Corollary 1

Let \(S\subset D\) be a partition set, where the number of boundary points is finite, i.e. \(S\cap \partial D\subset \mathcal V\), and \(0\notin {\varvec{\nabla }\varvec{f}}(\Box S)\). Then S can be eliminated from the search tree.

Proof

The same reasoning as in the proof of Proposition 2 applies with respect to the interior of S. Now a minimum point could be attained at a vertex \(v \in S\cap \partial D\). However, vertex v is also part of another simplicial partition set which covers part of the boundary of D, such that we do not have to store S anymore. \(\square \)

This corollary is not very strong, but it is relatively easy to check. For practical tests, Proposition 2 offers the conditions for removing an interior simplicial partition set S. According to Corollary 1, we can also remove S if only some of its vertices touch the boundary of D. Otherwise, we should store the facets of S that lie completely in a face of the search region as new simplicial partition sets with fewer than \(n+1\) vertices.
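The rejection rule of Proposition 2 and Corollary 1 reduces to a sign check on the gradient enclosure plus knowledge of how S meets the boundary of D. In the sketch below, the labels for the boundary contact are hypothetical; in Algorithm 1 this information comes from the border labels of the vertices.

```python
# Sketch of the rejection rule of Proposition 2 and Corollary 1. The
# `boundary_contact` labels are hypothetical: "none" (S in int D),
# "vertices" (S meets the boundary of D only in vertices) or "facet".
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def strictly_monotone(grad: Sequence[Interval]) -> bool:
    """True if 0 lies outside some component of the gradient enclosure on []S."""
    return any(glo > 0 or ghi < 0 for glo, ghi in grad)


def monotonicity_reject(grad: Sequence[Interval], boundary_contact: str) -> bool:
    return strictly_monotone(grad) and boundary_contact in ("none", "vertices")
```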

In our former investigation [6], we focused on bounds of the directional derivative in a direction d, denoted by \(\underline{d^T\varvec{\nabla }\varvec{f}(S)}\) and \(\overline{d^T\varvec{\nabla }\varvec{f}(S)}\). In this context, one can consider for instance the upper bound of the directional derivative

\[\overline{d^T\varvec{\nabla }\varvec{f}(S)}=\sum _{i=1}^n\max \{d_i\underline{\nabla \varvec{f}}_i(\Box S),\ d_i\overline{\nabla \varvec{f}}_i(\Box S)\}. \tag{17}\]

Notice that a necessary condition for \(\overline{d^T\varvec{\nabla }\varvec{f}(S)}< 0\) according to (17) is that f is monotone on \(\Box S\), i.e. \(0\notin {\nabla \varvec{f}}(\Box S)\).

Proposition 3

Let \(\mathcal V\) be a vertex set, \(S={\mathop \mathrm{conv}}(\mathcal V)\), \(w\in \mathcal V\), \(\hat{V}=\mathcal V\setminus \{w\}\), facet \(F={\mathop \mathrm{conv}}(\hat{V})\) and \(d=\frac{1}{n}\sum _{v\in \hat{V}}v-w\). If \(\overline{d^T\varvec{\nabla }\varvec{f}(S)}\le 0\), then F contains a minimum point of \(\min _{x\in S} f(x)\).

Proof

Consider the vertices of \(\mathcal V\) ordered such that \(w=v_0\). Let \(x=\sum _{j=1}^n \lambda _jv_j+\lambda _0w\) be a minimum point with \(x\notin F\), i.e. \(\lambda _0>0\). We construct a point z on F by walking from x in direction d: \(z=x+\lambda _0d=\sum _{j=1}^n (\lambda _j+\frac{\lambda _0}{n})v_j\). By the mean value theorem, \(f(z)\le f(x)+\lambda _0\overline{d^T\varvec{\nabla }\varvec{f}(S)}\le f(x)\). Hence z is also a minimum point of \(\min _{x\in S} f(x)\), and it is located on facet F. If no minimum point lies outside F, then F trivially contains one.\(\square \)

Corollary 2

Let \(\mathcal V\) be a vertex set, \(S={\mathop \mathrm{conv}}(\mathcal V)\), \(w\in \mathcal V\), \(\hat{V}=\mathcal V\setminus \{w\}\), facet \(F={\mathop \mathrm{conv}}(\hat{V})\) and \(d=\frac{1}{n}\sum _{v\in \hat{V}}v-w\). If \(\overline{d^T\varvec{\nabla }\varvec{f}(S)}< 0\), then F contains all minimum points of \(\min _{x\in S} f(x)\), i.e. \(\mathop \mathrm{argmin}_{x\in S} f(x)\subset F\).

The corresponding test allows us to perform a dimension reduction of S by removing the vertex w. In case the conditions are not true, one can check each border facet if it can contain a minimum point. If we show it cannot, we do not have to deal further with the facet. In case no border facet can contain the minimum, it follows that S can be disregarded.

Corollary 3

Let \(\mathcal V\) be a vertex set, \(S={\mathop \mathrm{conv}}(\mathcal V)\), \(w\in \mathcal V\), \(\hat{V}=\mathcal V\setminus \{w\}\), facet \(F={\mathop \mathrm{conv}}(\hat{V})\) and \(d=\frac{1}{n}\sum _{v\in \hat{V}}v-w\). If \(\underline{d^T\varvec{\nabla }\varvec{f}(S)}> 0\), then F cannot contain a minimum point of \(\min _{x\in S} f(x)\).
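The facet tests of Corollaries 2 and 3 only need the bounds (17) of the directional derivative for the n + 1 directions d, one per vertex w. A sketch follows; the returned labels are hypothetical names, and the gradient enclosure on \(\Box S\) is assumed to be given as (lower, upper) tuples.

```python
# Sketch of the facet tests of Corollaries 2 and 3, using interval bounds of
# the directional derivative d^T grad f with d = mean(V minus w) - w.
# Returned labels are hypothetical names for this illustration.
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]


def directional_bounds(d: Sequence[float], grad: Sequence[Interval]) -> Interval:
    lo = sum(min(di * a, di * b) for di, (a, b) in zip(d, grad))
    hi = sum(max(di * a, di * b) for di, (a, b) in zip(d, grad))
    return lo, hi


def facet_tests(vertices: Sequence[Sequence[float]],
                grad: Sequence[Interval]) -> List[str]:
    n = len(vertices) - 1
    labels = []
    for k, w in enumerate(vertices):
        others = [v for j, v in enumerate(vertices) if j != k]
        d = [sum(v[i] for v in others) / n - w[i] for i in range(len(w))]
        lo, hi = directional_bounds(d, grad)
        if hi < 0:
            labels.append("reduce")          # Cor. 2: all minimizers on F, drop w
        elif lo > 0:
            labels.append("discard_facet")   # Cor. 3: F contains no minimizer
        else:
            labels.append("undecided")
    return labels


if __name__ == "__main__":
    # f(x) = x1 + x2 on the unit simplex: the gradient is exactly (1, 1)
    V = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    print(facet_tests(V, [(1.0, 1.0), (1.0, 1.0)]))  # ['discard_facet', 'reduce', 'reduce']
```

For \(f(x)=x_1+x_2\) on the unit simplex, the facet opposite (0, 0) is discarded, and the tests for w = (1, 0) and w = (0, 1) both allow dropping that vertex, consistent with the minimizer (0, 0).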

5 Simplicial B&B algorithm (SBB)

Algorithm 1 uses an AVL tree [1] \(\Lambda \), a self-balancing binary search tree, to store partition sets. In such a structure, sorted insertion and extraction of an element have computational complexity \(\mathcal {O}(\log _2 |\Lambda |)\). Evaluated and not rejected simplices are sorted in \(\Lambda \) in non-decreasing order of the bounds on the objective, obtained with any of the methods from Sect. 3. This means \([\underline{x},\overline{x}] < [\underline{y},\overline{y}]\) when \(\underline{x}<\underline{y}\) or when \(\underline{x}=\underline{y}\) and \(\overline{x}<\overline{y}\). Simplicial partition sets having the same bounds are stored in the same node of the AVL tree using a linked list.
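The ordering of the working set can be sketched with Python's `heapq` module on (lower, upper) tuples. The binary heap is a stand-in chosen for brevity, not the AVL tree of Algorithm 1: it also provides logarithmic insertion and extraction of the minimum, but it does not keep all stored sets fully sorted as a search tree does. A counter breaks ties in insertion order, mimicking the linked list; the class name is hypothetical.

```python
# Sketch of the ordering used for the working set: bound pairs compare
# lexicographically as (lower, upper). A binary heap stands in for the AVL tree.
import heapq
import itertools


class SimplexQueue:
    def __init__(self):
        self._heap = []
        self._tie = itertools.count()   # FIFO among equal bounds, like the linked list

    def push(self, lower, upper, simplex):
        heapq.heappush(self._heap, (lower, upper, next(self._tie), simplex))

    def pop(self):
        lower, upper, _, simplex = heapq.heappop(self._heap)
        return lower, upper, simplex

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    q = SimplexQueue()
    q.push(1.0, 5.0, "A")
    q.push(1.0, 3.0, "B")
    q.push(0.0, 9.0, "C")
    print([q.pop()[2] for _ in range(len(q))])  # ['C', 'B', 'A']
```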

All vertices of a simplex are also stored in an AVL tree. Since vertices may be shared among several simplices, this avoids duplicate storage. Although Algorithm 1 describes vertices to be evaluated in order to update the incumbent \(\tilde{f}\) (see Algorithm 1, lines 5 and 13), their evaluation depends on the actual lower bounding method. The simplex S with the lowest value of \(\varvec{f}(S)\) is extracted from \(\Lambda \) (lines 8 and 19). The lower bound of \(\varvec{f}(S)\) is used in the stopping criterion of the algorithm (line 9).

Evaluation of a simplex S always includes computation of the natural inclusion \(\varvec{f}(S)=\varvec{f}(\Box S)\) of the objective function and inclusion of the gradient \(\nabla \varvec{f}(S)=\nabla \varvec{f}(\Box S)\) using Automatic Differentiation (see Algorithm 1, lines 4 and 16, and Algorithm 2 line 6). Other bounding methods can be applied afterwards in order to improve the calculated bounds in \(\varvec{f}\).

Simplices with a lower bound greater than the incumbent \(\tilde{f}\) are rejected. They are also rejected by Proposition 2 when they are in the relative interior of the search space D and \(0\notin \nabla \varvec{f}_i(\Box S)\) for some i (see Algorithm 1, lines 14 and 17, and Algorithm 2, line 7).

In case f is monotone on \(\Box S\) and \(S\cap \partial D\ne \emptyset \), S can be reduced to a number of facets by Corollary 3 (see calls to Algorithm 2 from Algorithm 1, lines 6 and 10). From a computational perspective, it is better to label each vertex as border or not-border. A vertex is labelled border when removing it from S leaves a facet on the boundary of D. If the search region is a simplex, P contains just the initial simplex, and all initial facets are on the boundary, such that all vertices are labelled border. In case the search region is a box, P contains the result of the combinatorial vertex triangulation of the box into n! simplices [24, 26].

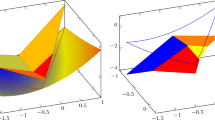

This technical detail has not been included in Algorithm 1 for the sake of simplicity. This triangulation is not appealing for large values of n, because the number n! of initial simplices grows very quickly. We use it here because box constrained problems are used to compare methods. Each of the n! initial simplices has two border facets. They are obtained by removing the smallest and the largest vertex (numbered in a binary system), see the grey nodes in Fig. 1. In the binary system, 0 denotes the lower bound and 1 the upper bound of the given component of the box.
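The n! initial simplices and their two border facets can be generated as follows. This is a sketch of the standard permutation-based triangulation of a box; we assume, without checking against [24, 26], that it coincides with the combinatorial vertex triangulation used here. Vertex k of the simplex for a permutation has the first k permuted coordinates at their upper bound, so in the binary labelling the first vertex is 0…0 and the last is 1…1. Function names are hypothetical.

```python
# Sketch: split a box into n! simplices, one per permutation of the coordinates;
# vertex k switches the first k permuted coordinates from lower to upper bound.
# Removing the first (all lower) or last (all upper) vertex gives a border facet.
from itertools import permutations
from typing import List, Sequence, Tuple

Vertex = Tuple[float, ...]


def box_triangulation(lo: Sequence[float], hi: Sequence[float]) -> List[List[Vertex]]:
    n = len(lo)
    simplices = []
    for perm in permutations(range(n)):
        upper = [False] * n
        verts = [tuple(lo)]
        for i in perm:
            upper[i] = True
            verts.append(tuple(hi[j] if upper[j] else lo[j] for j in range(n)))
        simplices.append(verts)
    return simplices


def border_facets(simplex: List[Vertex]) -> List[List[Vertex]]:
    """Facets obtained by removing the smallest and the largest vertex."""
    return [simplex[1:], simplex[:-1]]


if __name__ == "__main__":
    for s in box_triangulation([0.0, 0.0], [1.0, 1.0]):
        print(s, border_facets(s))
```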

A simplicial partition set, which was neither rejected nor reduced, is divided using Longest Edge Bisection (LEB), see Algorithm 1, line 11. When several longest edges exist, the longest edge with a vertex with the lowest value of \(\underline{\varvec{f}}\) and the other vertex having the highest value of \(\underline{\varvec{f}}\) is selected. In case vertices are not evaluated, the first longest edge is selected.
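A minimal sketch of Longest Edge Bisection with the first-longest-edge rule follows. The bound-based tie-breaking described above is omitted, and "first" here means first in lexicographic order of vertex index pairs, an assumption of the sketch; the function name is hypothetical.

```python
# Sketch of Longest Edge Bisection with the "first longest edge" rule; the
# bound-based tie-breaking is omitted for brevity.
import math
from typing import List, Sequence, Tuple

Vertex = Tuple[float, ...]


def longest_edge_bisection(vertices: Sequence[Vertex]) -> Tuple[List[Vertex], List[Vertex]]:
    m = len(vertices)
    best, best_len = (0, 1), -1.0
    for a in range(m):
        for b in range(a + 1, m):
            length = math.dist(vertices[a], vertices[b])
            if length > best_len:          # strict '>' keeps the first longest edge
                best, best_len = (a, b), length
    a, b = best
    mid = tuple((p + q) / 2 for p, q in zip(vertices[a], vertices[b]))
    left = [mid if k == b else v for k, v in enumerate(vertices)]   # keeps vertex a
    right = [mid if k == a else v for k, v in enumerate(vertices)]  # keeps vertex b
    return left, right


if __name__ == "__main__":
    print(longest_edge_bisection([(0.0, 0.0), (2.0, 0.0), (0.0, 1.0)]))
    # ([(0.0, 0.0), (2.0, 0.0), (1.0, 0.5)], [(0.0, 0.0), (1.0, 0.5), (0.0, 1.0)])
```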

Remark 8

The interior of a new facet generated by LEB is always in the relative interior of the bisected simplex. This contributes to reduce the number of vertices labelled as border in the new sub-simplices.

Descendants of a partition set having all its vertices labelled as not-border have all facets in the interior of D, so labelling is no longer necessary.

6 Numerical results

Algorithm 1 was run on an Asus UX301L notebook with an Intel(R) Core(TM) i7-4558U CPU and 8 GB of RAM running the Fedora 32 Linux distribution. The algorithm was coded in C++ with g++ (gcc version 10.1.1) and uses Kv-0.4.50 for Interval Arithmetic and Affine Arithmetic (AA). Kv uses the Boost libraries. Algorithms were compiled with the -O3 -DNDEBUG -DKV_FASTROUND options, and AA uses #define AFFINE_SIMPLE 2 and #define AFFINE_MULT 2 in Kv. For Linear Programming, we use PNL 1.10.4, built with -DCMAKE_BUILD_TYPE=Release -DWITH_MPI=OFF, as a C++ wrapper to LPsolve 5.5.2.0.

Notice that Affine Arithmetic in Kv is relatively slow, because the rounding direction is fixed to "upward" and downward rounding is performed by sign inversion. Moreover, Kv currently does not support division by affine variables containing zero. Additionally, the execution time of Interval Arithmetic can be reduced on processors supporting the AVX-512 SIMD extensions (see the last table on the kv-rounding web page), which is not the case for our processor. Finally, the PNL library supports MPI, which is not used here.

Table 1 describes the studied instances. Their detailed description can be found in [7] and the optimization web page.

The used termination accuracy is \(\alpha =10^{-6}\) and Interval Arithmetic is applied with Automatic Differentiation to obtain bounds of \(\varvec{f}\) and \(\nabla \varvec{f}\) on \(\Box S\). The following notation is used to describe the variants to calculate lower bounds:

- IA: Natural IA.
- +CFcb: IA + Centered form on a box (see Not. 8) using the center of \(\Box S\).
- +CFbb: IA + Centered form on a box (see Not. 8) using the Baumann point \(bb^-\) on \(\Box S\) (see Not. 9).
- +CFcs: IA + Centered form on a simplex (see Prop. 1) using the centroid as the base-point and the gradient on \(\Box S\).
- +CFbs: IA + Centered form on a simplex (see Prop. 1) using base-point \(y=bs^-\) (see Def. 1) and the gradient on \(\Box S\).
- +CFvs: IA + Centered form on a simplex (see Prop. 1) using base-point \(y=\mathop {\hbox {argmax}}\limits _{v\in \mathcal V}\{\overline{\varvec{f}}(v)\}\) and the gradient on \(\Box S\).
- +AA: IA + Affine Arithmetic lower bound (16) over \(\Box S\).
- +LR: IA + Linear Relaxation bound (9) on \(\Box S\).
- +LRS: IA + Linear Relaxation bound (10) on \(\Box S\), forcing the solution to lie in S.

Rejection tests, such as the monotonicity tests, are checked after the bound calculations. This is not efficient, but it allows us to compare the calculated bounds.

The best function value found, \(\tilde{f}\), is improved by point evaluation. Together with the IA bound calculation, we evaluate the simplex vertices. When other lower bounding methods are added to IA, the evaluation of simplex vertices can be disabled in order to save computation. However, this may lead to a different (worse) update of \(\tilde{f}\) and a different course of the algorithm, because Longest Edge Bisection (LEB) then bisects the first longest edge instead of the best one [19].

The following points are evaluated for each method. +CFc* methods (*=b or s) evaluate only the center and +CFb* evaluate only base-points \(bb^-\) or \(bs^-\). Such points are not stored. Notice that base point \(bb^-\) might be located outside the simplicial search region. +CFvs and +AA evaluate and store simplex vertices. +LR and +LRS evaluate and store box vertices when the search region is a box. Additionally, simplex vertices are evaluated when the search region is a simplex, because vertices of \(\Box S\) may be outside the search region and should not be used to improve \(\tilde{f}\).

The +CF*s (*=c,b or v) methods only update lower bounds. The other methods also update upper bounds, which may affect the partition set storage order.

Figures 2 and 3 show the normalized (to the range [0,1]) number of simplex evaluations (NS) and execution time (T), respectively. The number of simplex evaluations can be considered as the number of iterations, as one simplex is evaluated in each iteration. The problems are sorted by NS in both figures. The data for the figures are taken from Tables 2 to 21 in Appendix A. Reduction to border facets due to monotonicity does not occur in box constrained problems; it happens in the simplex constrained instances (see Corollary 3). The monotonicity test reduces the number of simplex evaluations significantly for all test problems. Without that test, the algorithm exceeds the time limit of 15 minutes for several problems. Therefore, we always apply the monotonicity test.

Going over the results of the test problems, problem G7 appears to be a special case, see Table 7. Apparently, adding methods to IA does not provide better lower bounds. For L8 only the +AA method improves the bound a few times and for RB2 only the LR* methods show tighter bounds, see Table 12.

A value of \(>15m\) in Figs. 2 and 3 means that i) the algorithm reached the 15 minute time limit, or ii) there was a problem with the Linear Programming solver in methods +LR and +LRS or iii) a division by zero occurred. The latter only happens for the +AA method for problem L8, because the Kv library does not implement division by zero in Affine Arithmetic.

Fig. 2 Normalized number of simplex evaluations in \(\log \) scale. A value of \(>15m\) means a time-out of 15 minutes or an execution error. The ranges of evaluated simplices per problem are as follows: RB2\(\in [44,\ 66]\), KE2-2\(\in [47,\ 60]\), DP2\(\in [16,\ 112]\), MCH2\(\in [128,\ 192]\), EX2-1\(\in [186,\ 510]\), MC2\(\in [434,\ 1,052]\), ST2\(\in [558,\ 1,382]\), SHCB\(\in [556,\ 1,646]\), THCB\(\in [626,\ 1,986]\), H3\(\in [2,286,\ 4,430]\), G7\(\in [5,040,\ 5,314]\), S4 \(\in [3,984,\ 5,288]\), SCH2\(\in [4,862,\ 6,834]\), L8\(\in [40,462,\ 40,662]\), DP5\(\in [97,060,\ 188,476]\), GP2\(\in [2,272,\ 167,800]\), H4\(\in [53,368,\ 170,622]\), MCH5\(\in [189,198,\ 210,356]\), H6\(\in [2,641,024,\ 4,944,040]\), and ST5\(\in [2,569,082,\ 6,358,328]\).

Focusing on the number of required simplex evaluations (NS), Fig. 2 shows that the +AA method requires the fewest evaluations for most of the test cases. The second-best methods regarding the NS metric are those using LP (+LR* and +CFbs). The +LRS lower bound requires fewer simplex evaluations than +LR for some cases, and +CFcb has the best NS values for only a few test cases.

Fig. 3 Normalized execution time in \(\log \) scale. A value of \(>15m\) means a time-out of 15 minutes or an execution error. The ranges of execution time (seconds) per problem are as follows: RB2\(\in [0.005,\ 0.014]\), EX2-2\(\in [0.005,\ 0.010]\), DP2\(\in [0.005,\ 0.019]\), MCH2\(\in [0.006,\ 0.024]\), EX2-1\(\in [0.005,\ 0.034]\), MC2\(\in [0.005,\ 0.019]\), ST2\(\in [0.006,\ 0.085]\), SHCB2\(\in [0.006,\ 0.101]\), THCB2\(\in [0.008,\ 0.1]\), H3\(\in [0.032,\ 0.415]\), G7\(\in [0.048,\ 6.69]\), S4 \(\in [0.056,\ 0.995]\), SCH2\(\in [0.104,\ 0.696]\), L8\(\in [0.566,\ 159.124]\), DP5\(\in [0.526,\ 61.352]\), GP2\(\in [0.051,\ 1.461]\), H4\(\in [1.196,\ 19.844]\), MCH5\(\in [3.611,\ 60.438]\), H6\(\in [51.057,\ 187.538]\), and ST5\(\in [58.476,\ 184.924]\).

For smooth functions, the algorithm eventually converges to a region around a minimum point where f behaves like a convex quadratic function. To study the limit convergence behaviour of the algorithm, we run all variants on the so-called Trid function from [23], which is a convex quadratic function. The results can be found in Tables 22–24 (Appendix B). One can observe for this limit situation that the Linear Relaxation variants provide relatively tight models and require fewer simplex evaluations than the other lower bounding methods. This suggests that the +LR variants have an advantage in the final stages of the algorithm. It is worth mentioning that, when the dimension increases, the required Linear Programming becomes more time consuming and the +AA variant starts to perform better.

The execution time is a difficult performance indicator, as it depends on the used external subroutines. Figure 3 provides normalized values. In the first 9 test cases (ordered according to NS), the execution time is similar for most of the methods apart from those using LP (+LR* and +CFbs). In fact, methods using LP are in general the most time consuming due to the called routines, followed by +AA which avoids solving an LP due to (16). According to the Kv library documentation, Affine Arithmetic is slow and its implementation could be improved.

The +CFvs method requires the least execution time for most of the instances. Compared with the other +CF* methods, the centered form used in +CFvs has one sum term fewer to evaluate, because its base-point is itself a simplex vertex (for which \(v-y=0\)), and this vertex may already have been evaluated and stored. On average, +CFbb has the lowest execution time, but only because it is the fastest method for the ST5 test problem, which is one of the most time consuming instances.

7 Conclusions

In simplicial branch and bound methods, the determination of the lower bound is of great importance. The Interval Arithmetic lower bound on a simplex interval hull can be tightened by additional calculations at a given cost. Several methods have been described and investigated. We have used the centered form with several base-points over a simplex and the interval hull of a simplex. The use of Affine Arithmetic and a Linear Relaxation over the interval hull and over the simplex has also been presented. Moreover, we introduced several theoretical results about monotonicity that can be applied to construct new rejection tests.

Results on a set of well known low dimensional test instances show that Affine Arithmetic is a promising method for obtaining lower bounds over a simplex: it requires the smallest number of simplex evaluations in many problems. However, its computational time is larger than that of several other methods. In general, methods using a Linear Programming solver suffer from the same drawback of requiring more time. We found that the monotonicity tests were essential for reducing the computing time.

The method requiring the least computing time in several test problems is the one based on the centered form on a simplex that uses the vertex with the highest function value as base-point. This vertex may already have been evaluated and stored, and the centered form requires one fewer term evaluation.

This means that, for low dimensional instances, it is preferable to evaluate cheap lower bounds that reuse previous information over more simplices rather than expensive lower bounds over fewer simplices.

Notes

AVL tree: named after its inventors Adelson-Velsky and Landis [1].

References

[1] Adelson-Velsky, G.M., Landis, E.M.: An algorithm for the organization of information. Proceed. USSR Acad. Sci. (in Russian) 146, 263–266 (1962)

[2] Andrade, A., Comba, J., Stolfi, J.: Affine arithmetic. International Conf. on Interval and Computer-Algebraic Methods in Science and Engineering (INTERVAL/94) (1994)

[3] Baumann, E.: Optimal centered forms. BIT Num. Math. 28(1), 80–87 (1988). https://doi.org/10.1007/BF01934696

[4] de Figueiredo, L., Stolfi, J.: Affine arithmetic: concepts and applications. Num. Alg. 37(1–4), 147–158 (2004). https://doi.org/10.1023/B:NUMA.0000049462.70970.b6

[5] Hansen, E., Walster, W.: Global Optimization Using Interval Analysis, 2nd edn. Marcel Dekker Inc., New York (2004)

[6] Hendrix, E.M.T., Salmerón, J.M.G., Casado, L.G.: On function monotonicity in simplicial branch and bound. AIP Conf. Proceed. (2019). https://doi.org/10.1063/1.5089974

[7] Karhbet, S.D., Kearfott, R.B.: Range bounds of functions over simplices, for branch and bound algorithms. Reliable Computing 25, 53–73 (2017). https://interval.louisiana.edu/reliable-computing-journal/volume-25/reliable-computing-25-pp-053-073.pdf

[8] Kearfott, R.B.: Rigorous Global Search: Continuous Problems. Kluwer Academic Publishers, New York (1996)

[9] Messine, F.: Extensions of affine arithmetic: application to unconstrained global optimization. J. Univ. Comput. Sci. 8(11), 992–1015 (2002). https://doi.org/10.3217/jucs-008-11-0992

[10] Messine, F., Lagouanelle, J.L.: Enclosure methods for multivariate differentiable functions and application to global optimization. J. Univ. Comput. Sci. 4(6), 589–603 (1998). https://doi.org/10.3217/jucs-004-06-0589

[11] Messine, F., Touhami, A.: A general reliable quadratic form: an extension of affine arithmetic. Reliab. Comput. 12(3), 171–192 (2006). https://doi.org/10.1007/s11155-006-7217-4

[12] Mohand, O.: Tighter bound functions for nonconvex functions over simplexes. RAIRO Oper. Res. 55, S2373–S2381 (2021). https://doi.org/10.1051/ro/2020088

[13] Moore, R.: Interval Analysis. Prentice-Hall Inc., Englewood Cliffs (1966)

[14] Ninin, J., Messine, F., Hansen, P.: A reliable affine relaxation method for global optimization. 4OR 13(3), 247–277 (2014). https://doi.org/10.1007/s10288-014-0269-0

[15] Paulavičius, R., Žilinskas, J.: Simplicial Global Optimization. Springer, New York (2014)

[16] Rall, L.B.: Automatic differentiation: Techniques and applications. Lecture Notes in Computer Science, vol. 120. Springer, New York (1981)

[17] Ratschek, H., Rokne, J.: Computer Methods for the Range of Functions. Ellis Horwood Ltd, Chichester (1984)

[18] Rump, S.M., Kashiwagi, M.: Implementation and improvements of affine arithmetic. Nonlin. Theory Appl. 6(3), 341–359 (2015). https://doi.org/10.1587/nolta.6.341

[19] Salmerón, J.M.G., Aparicio, G., Casado, L.G., García, I., Hendrix, E.M.T., Toth, B.G.: Generating a smallest binary tree by proper selection of the longest edges to bisect in a unit simplex refinement. J. Comb. Optim. (2015). https://doi.org/10.1007/s10878-015-9970-y

[20] Sherali, H., Adams, W.: A Reformulation-Linearization Technique for Solving Discrete and Continuous Nonconvex Problems. Kluwer Academic Publishers, Dordrecht (1999)

[21] Sherali, H., Liberti, L.: Reformulation-Linearization Technique for Global Optimization. In: Encyclopedia of Optimization, Springer, New York (2009)

[22] Stolfi, J., de Figueiredo, L.: Self-Validated Numerical Methods and Applications. Monograph for 21st Brazilian Mathematics Colloquium. IMPA/CNPq (1997)

[23] Surjanovic, S., Bingham, D.: Virtual library of simulation experiments: Test functions and datasets (2013). http://www.sfu.ca/~ssurjano

[24] Todd, M.J.: The Computation of Fixed Points and Applications. Springer, Heidelberg (1976)

[25] Tóth, B., Casado, L.G.: Multi-dimensional pruning from the Baumann point in an Interval Global Optimization Algorithm. J. Glob. Optim. 38(2), 215–236 (2007). https://doi.org/10.1007/s10898-006-9072-6

[26] Žilinskas, J.: Branch and bound with simplicial partitions for global optimization. Math. Modell. Anal. 13(1), 145–159 (2008). https://doi.org/10.3846/1392-6292.2008.13.145-159

Acknowledgements

This research is supported by the Spanish Ministry (RTI2018-095993-B-I00), in part financed by the European Regional Development Fund (ERDF).


Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information


This work has been funded by grant RTI2018-095993-B-I00 from the Spanish Ministry.

Appendices

A Extended numerical results

In Tables 2 to 21 the following notation is used.

- NS: number of simplex evaluations,
- NSV: number of simplex vertex evaluations,
- MTS: maximum number of simplices stored in the AVL tree,
- MTP: maximum number of points stored in the AVL tree,
- NNV: number of non-simplex-vertex evaluations. They can be cb, \(bb^-\), cs or \(bs^-\),
- NBV: number of \(\Box S\) vertex evaluations,
- NI: number of times the natural inclusion lower bound is improved by another lower bounding method,
- T: wall clock time. Differences smaller than 0.005 s are not significant.

B Methods on a convex quadratic function

The Trid problem is a convex quadratic function. The collection "Some Hard Global Optimization Test Problems" describes it as follows: "This is a simple discretized variational problem, convex, quadratic, with a unique local minimizer and a tridiagonal Hessian. The scaling behaviour for increasing n (search region is \([-n^2, n^2]\)) gives an idea on the efficiency of localizing minima once the region of attraction (which here is everything) is found; most local methods only need \(O(n^2)\) function evaluations, or only \(O(n)\) (function+gradient) evaluations. A global optimization code that has difficulties with solving this problem for \(n=100\), say, is of limited worth only. The strong coupling between the variables causes difficulties for genetic algorithms. The problem is typical for many problems from control theory, though the latter are usually nonquadratic and often nonconvex."
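The Trid function and its known optimum, as listed in [23], can be written down and checked in a few lines of Python. The closed-form minimiser \(x_i^* = i(n+1-i)\) and optimal value \(f^* = -n(n+4)(n-1)/6\) are taken from that library. The code below only verifies them with exact integer arithmetic and is not part of the paper's experimental setup; the function names are hypothetical.

```python
# Trid function [23]:
#   f(x) = sum_{i=1}^n (x_i - 1)^2 - sum_{i=2}^n x_i x_{i-1},  x in [-n^2, n^2]^n.
# f is convex (tridiagonal Hessian with 2 on the diagonal and -1 off it),
# so a stationary point is the global minimiser.


def trid(x):
    return sum((xi - 1) ** 2 for xi in x) - sum(x[i] * x[i - 1] for i in range(1, len(x)))


def trid_grad(x):
    n = len(x)
    return [2 * (x[i] - 1) - (x[i - 1] if i > 0 else 0) - (x[i + 1] if i < n - 1 else 0)
            for i in range(n)]


def trid_minimiser(n):
    return [i * (n + 1 - i) for i in range(1, n + 1)]


for n in (2, 6, 10):
    xs = trid_minimiser(n)
    # Integer arithmetic, so these checks are exact.
    stationary = all(g == 0 for g in trid_grad(xs))
    inside = all(abs(xi) <= n * n for xi in xs)  # within the search region
    print(n, stationary, inside, trid(xs), -n * (n + 4) * (n - 1) // 6)
```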

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

G.-Tóth, B., Casado, L.G., Hendrix, E.M.T. et al. On new methods to construct lower bounds in simplicial branch and bound based on interval arithmetic. J Glob Optim 80, 779–804 (2021). https://doi.org/10.1007/s10898-021-01053-8
