Abstract

We establish a geometric condition guaranteeing exact copositive relaxation for the nonconvex quadratic optimization problem under two quadratic and several linear constraints, and present sufficient conditions for global optimality in terms of generalized Karush–Kuhn–Tucker multipliers. The copositive relaxation is tighter than the usual Lagrangian relaxation. We illustrate this by providing a whole class of quadratic optimization problems that enjoys exactness of copositive relaxation while the usual Lagrangian duality gap is infinite. Finally, we also provide verifiable conditions under which both the usual Lagrangian relaxation and the copositive relaxation are exact for an extended CDT (two-ball trust-region) problem. Importantly, the sufficient conditions can be verified by solving linear optimization problems.

1 Introduction

Consider the following nonconvex quadratic optimization problem, which is referred to as the extended trust region problem:

$$\begin{aligned} \mathrm{(P)}\qquad \min _{{\mathsf {x}}\in {\mathbb {R}}^n}\ &{\mathsf {x}}^\top {\mathsf {Q}}_0{\mathsf {x}}+2{\mathsf {q}}_0^\top {\mathsf {x}}\\ \text{s.t.}\ &{\mathsf {x}}^\top {\mathsf {Q}}_1{\mathsf {x}}+2{\mathsf {q}}_1^\top {\mathsf {x}}\le 1,\quad { \Vert {\mathsf {A}}{\mathsf {x}}-{\mathsf {a}} \Vert }^2\le 1,\quad {\mathsf {B}}{\mathsf {x}}\le {\mathsf {b}}, \end{aligned}$$

where \({\mathsf {Q}}_0,{\mathsf {Q}}_1\) are \((n \times n)\) symmetric matrices, \({\mathsf {A}}\) is an \((\ell \times n)\) matrix, \({\mathsf {B}}\) is an \((m \times n)\) matrix, \({\mathsf {a}}\in \mathbb {R}^\ell \), \({\mathsf {b}}\in \mathbb {R}^m\) and \({\mathsf {q}}_0,{\mathsf {q}}_1 \in \mathbb {R}^n\). Model problems of this form arise from robust optimization problems under matrix norm or polyhedral data uncertainty [5, 20] and the application of the trust region method [15] for solving constrained optimization problems, such as nonlinear optimization problems with nonlinear and linear inequality constraints [9, 34]. It covers many important and challenging quadratic optimization (QP) problems such as those with box constraints; trust region problems with additional linear constraints; and the CDT (Celis–Dennis–Tapia or two-ball trust-region) problem [1, 9, 14, 28, 38]. In general, with no further structure on the additional linear constraints \({\mathsf {B}}{\mathsf {x}}\le {\mathsf {b}}\), the model problem (P) is NP-hard as it encompasses the quadratic optimization problem with box constraints.

In the special case where \({\mathsf {Q}}_1\) is the identity matrix, \({\mathsf {A}},{\mathsf {B}}\) are zero matrices and \({\mathsf {a}},{\mathsf {b}},{\mathsf {q}}_1\) are zero vectors, the model problem (P) reduces to the well-known trust-region model. It has been extensively studied from both theoretical and algorithmic points of view [19, 39]. The trust-region problem enjoys exact Lagrangian relaxations. Moreover, its solution can be found by solving a dual Lagrangian system or, equivalently, a semidefinite optimization problem (SDP). Unfortunately, these nice features do not continue to hold for the more general extended trust-region problem (P); see [20]. In fact, it has been shown that exactness of Lagrangian (or SDP) relaxation can fail for the CDT problem, or for the trust region problem with only one additional linear inequality constraint.

Recently, copositive optimization has emerged as one of the important tools for studying nonconvex quadratic optimization problems. Copositive optimization is a special case of convex conic optimization (namely, to minimize a linear function over a cone subject to linear constraints). By now, equivalent copositive reformulations for many important problems are known, among them (non-convex, mixed-binary, fractional) quadratic optimization problems under a mild assumption [2, 3, 13], and some special optimization problems under uncertainty [4, 18, 32, 37]. In particular, it has been shown in [7] that, for quadratic optimization problems with additional nonnegativity constraints, copositive relaxations (and their tractable approximations) provide a tighter bound than the usual Lagrangian relaxation. However, the techniques in [7] are not directly applicable here because our model problem does not require the variables to be nonnegative.

In light of the rapid evolution of this field, in this paper we introduce a new copositive relaxation for the extended trust region problem (P), and present two significant contributions to copositive optimization:

- We establish a geometric condition guaranteeing exact copositive relaxation for the nonconvex quadratic optimization problem (P). We also present sufficient conditions for global optimality in terms of generalized Karush–Kuhn–Tucker multipliers extending the global optimality conditions obtained for CDT problems [9]. Moreover, we provide a class of quadratic optimization problems that enjoys exactness of the copositive relaxation while the usual Lagrangian duals for these problems yield trivial lower bounds with infinite gaps.

- In the special case where (P) is an extended CDT (or two-ball trust region, TTR) problem, we also derive simple verifiable sufficient conditions, independent of the geometric condition, that ensure both exact copositive relaxation and exact Lagrangian relaxation. In particular, these sufficient conditions can be checked by solving a linear optimization problem.

The paper is organized as follows: In Sect. 2, we first recall notation and terminology, and present some basic facts on copositivity. In Sect. 3, we introduce the copositive relaxation for (P) and its semi-Lagrangian reformulation. We also provide a global optimality condition and prove an exactness result for this relaxation. In Sects. 4 and 5, we examine the extended CDT problem and provide simple conditions ensuring the tightness of both the copositive relaxation and the usual Lagrangian relaxation. In Sect. 6, we provide details on how copositive relaxation problems can be approximated by hierarchies of semidefinite and/or linear optimization problems.

2 Preliminaries

We abbreviate by \([m\! : \! n]{:}=\left\{ m, m+1, \ldots , n\right\} \) the integer range between two integers m, n with \(m\le n\). By bold-faced lower-case letters we denote vectors in n-dimensional Euclidean space \({\mathbb {R}}^n\), by bold-faced upper case letters matrices, and by \(^\top \) transposition. The positive orthant is denoted by \({\mathbb {R}}^n_+ {:}=\left\{ {\mathsf {x}}\in {\mathbb {R}}^n: x_i \ge 0 \text{ for } \text{ all } i{ \in \! [{1}\! : \! {n}]}\right\} \). \({\mathsf {I}}_n\) is the \(n\times n\) identity matrix. The letters \({\mathsf {o}}\) and \({\mathsf {O}}\) stand for zero vectors, and zero matrices, respectively, of appropriate orders. The set of all \(n\times n\) matrices is denoted by \({\mathbb {R}}^{n\times n}\), and the closure (resp. interior) of a set \(S\subset {\mathbb {R}}^n\) by \(\mathrm{cl}(S)\) (resp. \(\mathrm{int}~S\)).

For a given symmetric matrix \({\mathsf {H}}={\mathsf {H}}^\top \), we denote the fact that \({\mathsf {H}}\) is positive-semidefinite by \({\mathsf {H}}\succeq {\mathsf {O}}\). Sometimes we write instead “\({\mathsf {H}}\) is psd.” Denoting the smallest eigenvalue of any symmetric matrix \({\mathsf {M}}={\mathsf {M}}^\top \) by \(\lambda _\mathrm{min}({\mathsf {M}})\), we thus have \({\mathsf {H}}\succeq {\mathsf {O}}\) if and only if \(\lambda _\mathrm{min}({\mathsf {H}})\ge 0\). Linear forms in symmetric matrices \({\mathsf {X}}\) will play an important role in this paper; they are expressed by Frobenius duality \(\langle {{\mathsf {S}}} , {{\mathsf {X}}} \rangle = \text{ trace }({\mathsf {S}}{\mathsf {X}})\), where \({\mathsf {S}}={\mathsf {S}}^\top \) is another symmetric matrix of the same order as \({\mathsf {X}}\). By \({\mathsf {A}}\oplus {\mathsf {B}}\) we denote the direct sum of two square matrices:

$$ {\mathsf {A}}\oplus {\mathsf {B}} {:}= \begin{bmatrix} {\mathsf {A}} & {\mathsf {O}}\\ {\mathsf {O}}^\top & {\mathsf {B}}\end{bmatrix} ,\qquad \text{and in particular}\qquad {\mathsf {J}}_0 {:}= 1\oplus {\mathsf {O}}. \qquad (1) $$

For any optimization problem, say (Q), we denote by val(Q) its optimal objective value (attained or not). Consider a quadratic function \(q({\mathsf {x}}) = {\mathsf {x}}^\top {\mathsf {H}} {\mathsf {x}}- 2{\mathsf {d}}^\top {\mathsf {x}}+ \gamma \) defined on \({\mathbb {R}}^{n}\), with \(q({\mathsf {o}})=\gamma \), \(\nabla q({\mathsf {o}})=-2{\mathsf {d}}\) and \(D^2 q({\mathsf {o}})= 2{\mathsf {H}}\) (the factors 2 being here just for ease of later notation). For this q we define the Shor relaxation matrix [36] as

$$ {\mathsf {M}}(q) {:}= \begin{bmatrix} \gamma & -{\mathsf {d}}^\top \\ -{\mathsf {d}} & {\mathsf {H}}\end{bmatrix} . \qquad (2) $$

Then \(q({\mathsf {x}}) \ge 0\) for all \({\mathsf {x}}\in {\mathbb {R}}^{n}\) if and only if \({\mathsf {M}}(q) \succeq {\mathsf {O}}\).
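
The equivalence above can be checked numerically on a small instance. The following is a minimal sketch, assuming the layout \({\mathsf {M}}(q)=\begin{bmatrix} \gamma & -{\mathsf {d}}^\top \\ -{\mathsf {d}} & {\mathsf {H}}\end{bmatrix}\), so that \(q({\mathsf {x}})={\mathsf {z}}^\top {\mathsf {M}}(q){\mathsf {z}}\) for \({\mathsf {z}}^\top =[1,{\mathsf {x}}^\top ]\). The helper names `shor_matrix`, `q_value` and `is_psd` are illustrative and not taken from the paper.

```python
import numpy as np

def shor_matrix(H, d, gamma):
    """Assumed layout: M(q) = [[gamma, -d^T], [-d, H]] for q(x) = x^T H x - 2 d^T x + gamma."""
    H = np.asarray(H, dtype=float)
    d = np.asarray(d, dtype=float).reshape(-1)
    n = d.size
    M = np.empty((n + 1, n + 1))
    M[0, 0] = gamma
    M[0, 1:] = -d
    M[1:, 0] = -d
    M[1:, 1:] = H
    return M

def q_value(H, d, gamma, x):
    """Evaluate q(x) = x^T H x - 2 d^T x + gamma directly."""
    H = np.asarray(H, dtype=float)
    d = np.asarray(d, dtype=float).reshape(-1)
    x = np.asarray(x, dtype=float).reshape(-1)
    return float(x @ H @ x - 2.0 * (d @ x) + gamma)

def is_psd(M, tol=1e-10):
    """Positive semidefiniteness via the smallest eigenvalue of a symmetric matrix."""
    return bool(np.linalg.eigvalsh(M).min() >= -tol)

# q(x) = (x_1 - 1)^2 + x_2^2 is nonnegative on R^2: H = I, d = (1, 0), gamma = 1.
H = np.eye(2)
d = np.array([1.0, 0.0])
M_nonneg = shor_matrix(H, d, 1.0)
# Lowering gamma to 0.5 gives q(1, 0) = -0.5 < 0, so M(q) should no longer be psd.
M_neg = shor_matrix(H, d, 0.5)

x = np.array([0.3, -2.0])
z = np.concatenate(([1.0], x))          # homogenized point [1, x]
lifted_value = float(z @ M_nonneg @ z)  # should agree with q(x)
```

The identity \(q({\mathsf {x}})={\mathsf {z}}^\top {\mathsf {M}}(q){\mathsf {z}}\) is what makes \({\mathsf {M}}(q)\) the natural object to test for (semi)definiteness or copositivity in the sequel.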

Given any cone \({\mathcal {C}}\) of symmetric \(n\times n\) matrices,

$$ {\mathcal {C}}^\star {:}= \left\{ {\mathsf {S}}={\mathsf {S}}^\top \in {\mathbb {R}}^{n\times n} : \langle {{\mathsf {S}}} , {{\mathsf {X}}} \rangle \ge 0 \text{ for all } {\mathsf {X}}\in {\mathcal {C}}\right\} $$

denotes the dual cone of \({\mathcal {C}}\). For instance, if \({\mathcal {C}}= \left\{ {\mathsf {X}}={\mathsf {X}}^\top \in {\mathbb {R}}^{n\times n } : {\mathsf {X}}\succeq {\mathsf {O}}\right\} \), then \({\mathcal {C}}^\star = {\mathcal {C}}\) itself, an example of a self-dual cone. Trusting the sharp eyes of our readers, we chose a notation with subtle differences between the five-star denoting a dual cone, e.g., \({\mathcal {C}}^\star \), and the six-star, e.g. \(z^*\), denoting optimality.

The key notion used below is that of copositivity. Given a symmetric \(n\times n\) matrix \({\mathsf {Q}}\), and a closed, convex cone \(\Gamma \subseteq {\mathbb {R}}^n\), we say that

$$ \begin{aligned} &{\mathsf {Q}} \text{ is } \Gamma \text{-copositive if } {\mathsf {x}}^\top {\mathsf {Q}}{\mathsf {x}}\ge 0 \text{ for all } {\mathsf {x}}\in \Gamma , \text{ and that}\\ &{\mathsf {Q}} \text{ is strictly } \Gamma \text{-copositive if } {\mathsf {x}}^\top {\mathsf {Q}}{\mathsf {x}}> 0 \text{ for all } {\mathsf {x}}\in \Gamma \setminus \left\{ {\mathsf {o}}\right\} . \end{aligned} $$

Strict copositivity generalizes positive-definiteness (all eigenvalues strictly positive) and copositivity generalizes positive-semidefiniteness (no eigenvalue strictly negative) of a symmetric matrix. Checking copositivity is NP-hard for most cones \(\Gamma \) of interest, see [16, 31] for the classical case \(\Gamma ={\mathbb {R}}^n_+\) studied already by Motzkin [30] who coined the notion back in 1952. In the sequel, we will use “copositive” synonymously with “\({\mathbb {R}}^n_+\)-copositive” in Motzkin’s sense.
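
To make the gap between positive semidefiniteness and classical copositivity concrete, here is a small sketch for the \(2\times 2\) case only. It relies on the well-known closed-form criterion that a symmetric matrix with diagonal entries \(a, c\) and off-diagonal entry \(b\) is \({\mathbb {R}}^2_+\)-copositive if and only if \(a\ge 0\), \(c\ge 0\) and \(b+\sqrt{ac}\ge 0\). This criterion does not extend verbatim to higher orders, and the function names are illustrative.

```python
import math
import numpy as np

def is_copositive_2x2(Q, tol=1e-12):
    """Closed-form R^2_+-copositivity test, valid for symmetric 2x2 matrices only."""
    Q = np.asarray(Q, dtype=float)
    a, b, c = Q[0, 0], Q[0, 1], Q[1, 1]
    if a < -tol or c < -tol:
        return False
    return bool(b + math.sqrt(max(a, 0.0) * max(c, 0.0)) >= -tol)

def is_psd(Q, tol=1e-12):
    """Positive semidefiniteness via the smallest eigenvalue."""
    return bool(np.linalg.eigvalsh(np.asarray(Q, dtype=float)).min() >= -tol)

# Copositive but not psd: x^T Q x = 2 x_1 x_2 >= 0 on the orthant, eigenvalues are +1 and -1.
Q_cop = np.array([[0.0, 1.0], [1.0, 0.0]])
# Neither: at x = (1, 1) the form equals 1 - 4 + 1 = -2 < 0.
Q_bad = np.array([[1.0, -2.0], [-2.0, 1.0]])
# Indefinite (eigenvalues 3 and -1) but copositive, since all entries are positive.
Q_pos = np.array([[1.0, 2.0], [2.0, 1.0]])
```

Every psd matrix passes the copositivity test, but not conversely, which is the source of the tighter bounds discussed later.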

The set of all \(\Gamma \)-copositive matrices forms a closed, convex matrix cone, the copositive cone

$$ {\mathcal {C}}^\star _\Gamma {:}= \left\{ {\mathsf {Q}}={\mathsf {Q}}^\top \in {\mathbb {R}}^{n\times n} : {\mathsf {Q}} \text{ is } \Gamma \text{-copositive}\right\} $$

with non-empty interior \(\mathrm{int}~{\mathcal {C}}^\star _\Gamma \), which exactly consists of all strictly \(\Gamma \)-copositive matrices. However, the cone \({\mathcal {C}}^\star _\Gamma \) is not self-dual. Rather one can show that \({\mathcal {C}}^\star _\Gamma \) is the dual cone of

$$ {\mathcal {C}}_\Gamma {:}= \left\{ {\mathsf {X}}={\mathsf {F}}{\mathsf {F}}^\top : {\mathsf {F}} \text{ is an } n\times {n+1\atopwithdelims ()2} \text{ matrix with columns in } \Gamma \right\} , $$

the cone of \(\Gamma \)-completely positive (cp) matrices. Note that the factor matrix \({\mathsf {F}}\) has many more columns than rows. A perhaps more amenable representation is

$$ {\mathcal {C}}_\Gamma = {\text{ conv } }\left\{ {\mathsf {x}}{\mathsf {x}}^\top : {\mathsf {x}}\in \Gamma \right\} , $$

where \({\text{ conv } }S\) stands for the convex hull of a set \(S\subset {\mathbb {R}}^n\). Carathéodory’s theorem then elucidates the bound \({n+1\atopwithdelims ()2}\) on the number of columns in \({\mathsf {F}}\) above, which is not sharp in the classical case \(\Gamma ={\mathbb {R}}^n_+\) but asymptotically tight [10, 35].

Next, we specify a result on reducing \(\Upsilon \)-copositivity with \(\Upsilon ={\mathbb {R}}^p_+\times {\mathbb {R}}^{n}\) to a combination of psd and classical copositivity conditions. This result will be used later on.

Lemma 2.1

Let \(\Upsilon ={\mathbb {R}}^p_+\times {\mathbb {R}}^{n}\) and partition a \((p+n)\times (p+n)\) matrix \({\mathsf {M}}\) as follows:

$$ {\mathsf {M}}= \begin{bmatrix} {\mathsf {R}} & {\mathsf {S}}^\top \\ {\mathsf {S}} & {\mathsf {H}}\end{bmatrix} . $$

Then \({\mathsf {M}}\) is \(\Upsilon \)-copositive if and only if the following two conditions hold:

- (a) \({\mathsf {H}}\) is positive semidefinite and \({\mathsf {H}}{\mathsf {H}}^\dag {\mathsf {S}}={\mathsf {S}}\), i.e., \(\text{ ker } {\mathsf {H}}\subseteq \text{ ker } {\mathsf {S}}^\top \);

- (b) \({\mathsf {R}}-{\mathsf {S}}^\top {\mathsf {H}}^\dag {\mathsf {S}}\) is \(({\mathbb {R}}^p_+-)\)copositive.

Here \({\mathsf {H}}^\dag \) is the Moore-Penrose pseudoinverse of \({\mathsf {H}}\).

Proof

The argument is an easy extension of the arguments that led to [9, Thm.3.1]. \(\square \)
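
The reduction in Lemma 2.1 translates into a short numerical routine. The sketch below assumes the block layout \({\mathsf {M}}=\begin{bmatrix} {\mathsf {R}} & {\mathsf {S}}^\top \\ {\mathsf {S}} & {\mathsf {H}}\end{bmatrix}\) with \({\mathsf {R}}\) of order p, \({\mathsf {H}}\) of order n and \({\mathsf {S}}\) of size \(n\times p\), which is the layout suggested by the dimensions in conditions (a) and (b). It checks condition (a) and returns the generalized Schur complement of condition (b). Copositivity of that complement is then tested only by the crude sufficient criterion "all entries nonnegative", since an exact test is NP-hard in general. The function names are hypothetical.

```python
import numpy as np

def lemma_2_1_reduction(M, p, tol=1e-9):
    """Return (condition_a_holds, R - S^T H^+ S) for M = [[R, S^T], [S, H]] (assumed layout)."""
    M = np.asarray(M, dtype=float)
    R = M[:p, :p]
    S = M[p:, :p]                      # n x p block
    H = M[p:, p:]
    H_pinv = np.linalg.pinv(H)         # Moore-Penrose pseudoinverse
    h_is_psd = np.linalg.eigvalsh(H).min() >= -tol
    range_ok = np.allclose(H @ H_pinv @ S, S, atol=tol)   # H H^+ S = S
    schur = R - S.T @ H_pinv @ S
    return bool(h_is_psd and range_ok), schur

def entrywise_nonnegative(Q, tol=1e-9):
    """Sufficient (not necessary) test for R^p_+-copositivity."""
    return bool((np.asarray(Q) >= -tol).all())

# p = 2 sign-constrained coordinates, n = 1 free coordinate.
M_good = np.array([[1.0, 2.0, 1.0],
                   [2.0, 1.0, 1.0],
                   [1.0, 1.0, 1.0]])
cond_a_good, schur_good = lemma_2_1_reduction(M_good, p=2)

# Here H = 0 but S != 0, so condition (a) fails: the free coordinate can be
# pushed to -infinity while the first slack stays positive.
M_bad = np.array([[1.0, 0.0, 1.0],
                  [0.0, 1.0, 0.0],
                  [1.0, 0.0, 0.0]])
cond_a_bad, _ = lemma_2_1_reduction(M_bad, p=2)
```

In the first instance the Schur complement is the matrix with zero diagonal and unit off-diagonal entries, which is copositive but not psd, so \({\mathsf {M}}\) is \(\Upsilon \)-copositive without being positive semidefinite.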

3 Relaxations for extended trust region problems

3.1 Problem structure

The problem we study here is given by

Throughout this paper, we assume that the feasible set of problem (P) is non-empty. The model problem (P) can be reformulated as

where \(F{:}=\left\{ {\mathsf {x}}\in {\mathbb {R}}^n: f_i({\mathsf {x}}) \le 0, \, i=1,2\right\} \), \({\mathsf {b}}\in {\mathbb {R}}^p\) and \({\mathsf {B}}\) is a \(p\times n\) matrix.

For our approach, it will be convenient to introduce slack variables \(s_j{:}= b_j - ({\mathsf {B}}{\mathsf {x}})_j\) for all \(j{ \in \! [{1}\! : \! {p}]}\), arriving at new primal-feasible points \({\mathsf {y}}= ({\mathsf {s}}, {\mathsf {x}})\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\), in other words, to replace P with

with the \(p\times (p+n)\)-matrix \(\bar{{\mathsf {B}}} {:}= [{\mathsf {I}}_p \,| \, {\mathsf {B}}]\).

We now need to extend all original functions in the obvious way, namely \({\bar{f}}_i ({\mathsf {y}}) ={\bar{f}}_i({\mathsf {s}},{\mathsf {x}}) = f_i({\mathsf {x}})\) by writing \(\bar{{\mathsf {Q}}} _i ={\mathsf {O}}\oplus {\mathsf {Q}}_i\), i.e., adding p zero rows and p zero columns to \({\mathsf {Q}}_i\), arriving at symmetric matrices of order \(p+n\); likewise we define \({\bar{{\mathsf {q}}}}_i ^\top = [{\mathsf {o}}^\top ,{\mathsf {q}}_i^\top ]\). Finally, by introducing another quadratic constraint, defining \(\bar{{\mathsf {Q}}}_{3}= \bar{{\mathsf {B}}}^\top \bar{{\mathsf {B}}}\), \({\bar{{\mathsf {q}}}}_{3}= \bar{{\mathsf {B}}}^\top {\mathsf {b}}\) and \(c_{3}={\mathsf {b}}^\top {\mathsf {b}}\), we rephrase the p linear constraints \(\bar{{\mathsf {B}}}{\mathsf {y}}={\mathsf {b}}\) into one quadratic constraint \({\bar{f}}_{3}({\mathsf {y}})={ \Vert \bar{{\mathsf {B}}}{\mathsf {y}}- {\mathsf {b}} \Vert }^2 { \le 0}\).
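
The lifting to the slack space can be written out in a few lines. This is a minimal sketch with made-up data, using only the definitions stated above (\(\bar{{\mathsf {B}}}=[{\mathsf {I}}_p\,|\,{\mathsf {B}}]\), \(\bar{{\mathsf {Q}}}_i={\mathsf {O}}\oplus {\mathsf {Q}}_i\), \(\bar{{\mathsf {Q}}}_3=\bar{{\mathsf {B}}}^\top \bar{{\mathsf {B}}}\), \({\bar{{\mathsf {q}}}}_3=\bar{{\mathsf {B}}}^\top {\mathsf {b}}\), \(c_3={\mathsf {b}}^\top {\mathsf {b}}\)). The function name `lift_problem_data` is hypothetical.

```python
import numpy as np

def lift_problem_data(Q, q, B, b):
    """Embed (Q, q) into the (s, x)-space and build the data of f_3(y) = ||B_bar y - b||^2."""
    Q = np.asarray(Q, dtype=float)
    q = np.asarray(q, dtype=float)
    B = np.asarray(B, dtype=float)
    b = np.asarray(b, dtype=float)
    p, n = B.shape
    Q_bar = np.zeros((p + n, p + n))
    Q_bar[p:, p:] = Q                        # O (+) Q: p zero rows and columns first
    q_bar = np.concatenate((np.zeros(p), q))
    B_bar = np.hstack((np.eye(p), B))        # [I_p | B]
    Q3 = B_bar.T @ B_bar
    q3 = B_bar.T @ b
    c3 = float(b @ b)
    return Q_bar, q_bar, B_bar, Q3, q3, c3

# Illustrative data: n = 2 variables, p = 3 linear inequalities B x <= b.
Q = np.array([[1.0, -2.0], [-2.0, 0.5]])
q = np.array([0.5, -1.0])
B = np.array([[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]])
b = np.array([4.0, 1.0, 5.0])
Q_bar, q_bar, B_bar, Q3, q3, c3 = lift_problem_data(Q, q, B, b)

x = np.array([1.0, 0.5])
s = b - B @ x                                # slack of a feasible x, here (2, 1.5, 1.5)
y_feas = np.concatenate((s, x))
y_any = np.array([0.2, 1.0, 0.0, -1.0, 2.0]) # arbitrary point, B_bar y != b

def f3(y):
    """Quadratic form of the aggregated equality constraint."""
    return float(y @ Q3 @ y - 2.0 * (q3 @ y) + c3)
```

Since \({\bar{f}}_3\ge 0\) everywhere, the single constraint \({\bar{f}}_3({\mathsf {y}})\le 0\) holds exactly on the affine set \(\bar{{\mathsf {B}}}{\mathsf {y}}={\mathsf {b}}\).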

In this way, the original problem (3) is rephrased in a somewhat standardized form, namely

The optimal value \(z^*\) of (3) need not be attained, and it could also be equal to \(-\infty \) (in the unbounded case) or to \(+\infty \) (in the infeasible case). Considering \({\mathsf {Q}}_i={\mathsf {O}}\) would also allow for linear inequality constraints. But it is often advisable to discriminate the functional form of constraints, and we will adhere to this principle in what follows.

3.2 Copositive relaxation

Next, we introduce a copositive relaxation for (P). Let \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}}) \in \mathbb {R}^p \times \mathbb {R}^n\). Now consider multipliers \({\mathsf {u}}\in {\mathbb {R}}^{3}_+\) of the inequality constraints \({\bar{f}}_i({\mathsf {y}})= f_i({\mathsf {x}})\le 0\), \(i{ \in \! [{1}\! : \! {3}]}\), and \({\mathsf {v}}\in {\mathbb {R}}^p_+\) for the sign constraints \({\mathsf {s}}\in {\mathbb {R}}^p_+\). Then we define the full Lagrangian function for problem (4) as

Let \(\Upsilon = {\mathbb {R}}^{p+1}_+\times {\mathbb {R}}^n\). Recall that the matrix \({\mathsf {J}}_0\) and the Shor relaxation matrix \({\mathsf {M}}(q)\) for a quadratic function q are given as in (1) and (2) respectively. Then the matrix \({\mathsf {M}}(L (\cdot ;{\mathsf {u}},{\mathsf {o}}))-\mu {\mathsf {J}}_0\) can be written as below:

where \({\mathsf {H}}_u = {\mathsf {Q}}_0 + u_1 {\mathsf {Q}}_1+ u_2 {\mathsf {A}}^\top {\mathsf {A}}\) is the Hessian of the Lagrangian function. We now associate a copositive relaxation for (P) as follows:

It is worth noting that, unlike in [7], our model problem does not require the variables to be nonnegative, and so the techniques in constructing a copositive relaxation as in [7] cannot be applied directly. Here we achieve this task by introducing nonnegative slack variables.

An important observation is that the copositive relaxation can be equivalently reformulated as a semi-Lagrangian dual problem of the problem (P). Recall that the usual Lagrangian dual (or Lagrangian relaxation) of (P) is given by

where \(\Theta ({\mathsf {u}},{\mathsf {v}}) {:}=\inf \left\{ L ({\mathsf {y}};{\mathsf {u}},{\mathsf {v}}) : {\mathsf {y}}\in {\mathbb {R}}^{p+n}\right\} \). A form of partial Lagrangian relaxation called semi-Lagrangian of (P) (see [7, 17] and the references therein) is given by

where \(\Theta _{\mathrm{semi}}({\mathsf {u}}) {:}=\inf \left\{ L ({\mathsf {y}};{\mathsf {u}},{\mathsf {o}}) : {\mathsf {y}}\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\right\} \). The relation between copositive relaxation, full and semi-Lagrangian bounds can be summarized in the following chain of inequalities:

We note that the relation \(z_\mathrm{LD}^*\le z_{\mathrm{semi}}^* \le z^*\) follows by the construction, and the equality \(z_\mathrm{COP}^*=z_{\mathrm{semi}}^*\) follows by adapting the techniques in [7, Lemma 2.1] to the polyhedral cone \({\mathbb {R}}^p_+\times {\mathbb {R}}^n\) (see also (11) later for a detailed proof).

We now illustrate that, in general, a copositive relaxation can provide a much tighter bound for the model problem (P) than the usual Lagrangian dual. Indeed, in the following example, we see that the copositive relaxation is tight while the usual Lagrangian dual yields a trivial lower bound which has infinite gap. As we will see later (Proposition 4.3), one can indeed construct a whole class of quadratic optimization problems with exact copositive relaxation but infinite Lagrangian duality gap.

Example 3.1

(Copositive relaxation vs Lagrangian relaxation) Consider the following nonconvex quadratic optimization problem with simple linear inequality constraints

Clearly, the objective function is not convex and the optimal value of this problem is \(z^*=0\). We next observe that this problem can be converted to our standard form as

Then the copositive relaxation reads

Clearly, from the copositivity requirement, \(z_\mathrm{COP}^* \le 0\). Moreover, it can be verified from Lemma 2.1 that, for \(\mu =0\) and \(u=1\), the matrix

Thus, \(z_\mathrm{COP}^*=z^*=0\).
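
The copositivity claim for \(\mu =0\) and \(u=1\) can be reproduced numerically. The sketch below rests on two assumptions, because the displayed data of this example are not reproduced here: the objective is taken to be \(\frac{x_1^2}{2}+2x_1x_2+x_2^2\) with constraints \({\mathsf {x}}\ge {\mathsf {o}}\) (the expressions that reappear in Example 4.1), and the matrix is assembled in the variable order \((r,{\mathsf {s}},{\mathsf {x}})\) with \(L({\mathsf {y}};u,{\mathsf {o}})=f_0({\mathsf {x}})+u{ \Vert {\mathsf {x}}-{\mathsf {s}} \Vert }^2\). Copositivity of the Schur complement is verified by the sufficient test of entrywise nonnegativity.

```python
import numpy as np

# Assumed data of Example 3.1: f_0(x) = x^T Q0 x with x >= 0, i.e. B = -I_2, b = o.
Q0 = np.array([[0.5, 1.0], [1.0, 1.0]])
u, mu = 1.0, 0.0
I2 = np.eye(2)

# M(L(.; u, o)) - mu * J_0 in the coordinates (r, s_1, s_2, x_1, x_2).
M = np.zeros((5, 5))
M[0, 0] = -mu                # constant term of L minus mu
M[1:3, 1:3] = u * I2         # s-block of u * ||x - s||^2
M[1:3, 3:5] = -u * I2        # cross terms between s and x
M[3:5, 1:3] = -u * I2
M[3:5, 3:5] = Q0 + u * I2    # Hessian block H_u of the Lagrangian in x

# Lemma 2.1 with p + 1 = 3 sign-constrained coordinates (r, s) and n = 2 free ones.
R, S, H = M[:3, :3], M[3:, :3], M[3:, 3:]
H_pinv = np.linalg.pinv(H)
h_min_eig = float(np.linalg.eigvalsh(H).min())
range_ok = bool(np.allclose(H @ H_pinv @ S, S))
schur = R - S.T @ H_pinv @ S
schur_nonneg = bool((schur >= -1e-12).all())   # sufficient for copositivity
m_min_eig = float(np.linalg.eigvalsh(M).min())
```

Under these assumptions the Schur complement has only nonnegative entries, so the matrix is \(\Upsilon \)-copositive, while its smallest eigenvalue is negative. A purely semidefinite certificate with the same multipliers would therefore fail.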

Next we show that \(z_\mathrm{LD}^*=-\infty \). To see this, we only need to show that for each fixed \(u \ge 0\) and \({\mathsf {v}}=(v_1,v_2)^\top \in {\mathbb {R}}_+^2\), we have

Indeed, taking \({\mathsf {x}}={\mathsf {s}}=(-t,t)\) we see that, as \(t\rightarrow +\infty \),
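
The divergence along the ray \({\mathsf {x}}={\mathsf {s}}=(-t,t)\) can also be observed numerically. As before, this is only a sketch under assumptions: the objective \(\frac{x_1^2}{2}+2x_1x_2+x_2^2\) is taken from the expressions in Example 4.1, and the full Lagrangian is assumed to have the form \(L({\mathsf {y}};u,{\mathsf {v}})=f_0({\mathsf {x}})+u{ \Vert {\mathsf {x}}-{\mathsf {s}} \Vert }^2-{\mathsf {v}}^\top {\mathsf {s}}\). The sign convention of the last term does not affect the conclusion, since that term is only linear in t.

```python
import numpy as np

Q0 = np.array([[0.5, 1.0], [1.0, 1.0]])   # assumed objective matrix of Example 3.1

def full_lagrangian(s, x, u, v):
    """Assumed form L(y; u, v) = f_0(x) + u * ||x - s||^2 - v^T s, with y = (s, x)."""
    s = np.asarray(s, dtype=float)
    x = np.asarray(x, dtype=float)
    v = np.asarray(v, dtype=float)
    return float(x @ Q0 @ x + u * np.sum((x - s) ** 2) - v @ s)

def value_on_ray(t, u, v):
    """Evaluate L at x = s = (-t, t); s is unrestricted in sign in the full dual."""
    point = np.array([-t, t])
    return full_lagrangian(point, point, u, v)

u = 5.0
v = np.array([3.0, 1.0])
values = [value_on_ray(t, u, v) for t in (1.0, 10.0, 100.0)]
```

On this ray the penalty term vanishes and the objective equals \(-t^2/2\), so the values behave like \(-t^2/2+(v_1-v_2)t\), whatever fixed \(u\ge 0\) and \({\mathsf {v}}\) are chosen.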

4 Tightness of copositive relaxation

We consider, for \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}})\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\), the full Lagrangian function

As in [7], let us say that the pair \(( {\mathsf {x}};{\mathsf {u}},{\mathsf {v}})\in (F\cap P)\times {\mathbb {R}}^{3}_+\times {\mathbb {R}}^p\) is a generalized KKT pair for (3) if and only if, for \({\mathsf {s}}={\mathsf {b}}-{\mathsf {B}}{\mathsf {x}}\) and \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}})\), it satisfies both the first-order conditions \(\nabla _{\mathsf {y}}L({\mathsf {y}}; {\mathsf {u}}, {\mathsf {v}}) = {\mathsf {o}}\) and the complementarity conditions \(v_k s_k =0\) for all \(k{ \in \! [{1}\! : \! {p}]}\) and \(u_i {\bar{f}}_i({\mathsf {y}})=0\) for all \(i{ \in \! [{1}\! : \! {3}]}\), but without requiring \(v_k\ge 0\).

4.1 Geometric conditions for exact copositive relaxation

Next, we provide a geometric condition ensuring the exactness of the copositive relaxation which does not rely on information about KKT pairs. To do this, denote \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}})\) and let \(\bar{f}_0({\mathsf {y}})={\mathsf {x}}^\top {\mathsf {Q}}_0{\mathsf {x}}+2 {\mathsf {q}}_0^\top {\mathsf {x}}\), \(\bar{f}_1({\mathsf {y}}) = {\mathsf {x}}^\top {\mathsf {Q}}_1{\mathsf {x}}+2{\mathsf {q}}_1^\top {\mathsf {x}}-1\), \(\bar{f}_2({\mathsf {y}}) = { \Vert {\mathsf {A}}{\mathsf {x}}-{\mathsf {a}} \Vert }^2-1\) and \(\bar{f}_3({\mathsf {y}})=\Vert {\mathsf {B}}{\mathsf {x}}+{\mathsf {s}}-{\mathsf {b}}\Vert ^2\).

Theorem 4.1

For the extended trust region problem (P), let

Suppose that \(\Omega \) is closed and convex. Then we have \(z_\mathrm{COP}^*=z^*\).

Proof

Let \(z_{\mathrm{semi}}^*\) denote the optimal value of the semi-Lagrangian dual (8). We first observe that \(z_\mathrm{COP}^*=z_{\mathrm{semi}}^*\). To see this, for any \(\mu \in {\mathbb {R}}\) and any quadratic function q defined on \(\mathbb {R}^{p+n}\), it can be directly verified that the following two conditions are equivalent:

- (a) \(q({\mathsf {y}})\ge \mu \) for all \({\mathsf {y}}\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\);

- (b) the \((n+p+1)\times (n+p+1)\)-matrix \({\mathsf {M}}(q-\mu )={\mathsf {M}}(q)-\mu {\mathsf {J}}_0\) is \(\Upsilon \)-copositive.

This equivalence implies the identity

Note that the above equality also holds, by the usual convention that \(\sup \emptyset = -\infty \), if q is unbounded from below on \({\mathbb {R}}^p_+\times {\mathbb {R}}^n\). Applying (10) with \(q=L (\cdot ;{\mathsf {u}},{\mathsf {o}})\), we see that

Then it follows from the definitions of semi-Lagrangian dual and copositive relaxation that

As \(z^* \ge z_{\mathrm{semi}}^*\), we see that \(z^* \ge z_\mathrm{COP}^*\) always holds. So, we can assume without loss of generality that \(z^*>-\infty \). As the feasible set of (P) is nonempty, we have \(z^*<+\infty \), and hence \(z^* \in \mathbb {R}\). Let \(\epsilon >0\). Thus \([z^*-\epsilon ,0,0,0]^\top \notin \Omega \). By the strict separation theorem, there exists \((\mu _0,\mu _1,\mu _2,\mu _3) \ne (0,0,0,0)\) such that

As \(\Omega +\mathbb {R}^4_+ \subseteq \Omega \), we get \(\mu _i \ge 0\) for all \(i{ \in \! [{0}\! : \! {3}]}\). Moreover, by feasibility, we see that \(\mu _0>0\). Thus, dividing both sides by \(\mu _0\), we see that for all \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}}) \in \mathbb {R}^p_+ \times \mathbb {R}^n\)

where \(\lambda _i=\mu _i /\mu _0\), \(i=1,2,3\). This implies that

where the second inequality follows from the definition of semi-Lagrangian dual (8). By letting \(\epsilon \searrow 0\), we have \(z^* \le z_\mathrm{COP}^*\). As the reverse inequality always holds, the conclusion follows. \(\square \)

Before we provide simple sufficient conditions ensuring this geometrical condition, we will illustrate it using our previous example.

Example 4.1

Consider the same example as in Example 3.1. We observe that, in this case \({\mathsf {A}},{\mathsf {Q}}_1\) are zero matrices and \({\mathsf {q}}_1,{\mathsf {a}}\) are zero vectors, and so, the set \(\Omega \) becomes

Then

where

Now we provide an analytic expression for \(\Omega _1\). Note that, if \(x_1=0\), then \([\frac{x_1^2}{2}+2x_1x_2+ x_2^2,(x_1-s_1)^2+(x_2-s_2)^2 ]^\top \in \mathbb {R}_+^2\) and \(\mathbb {R}^2_+ \subseteq \Omega _1\) (take \(x_2=s_2\ge 0\) and \(s_1\ge 0=x_1\) to get an arbitrary point \((x_2^2,s_1^2)^\top \in {\mathbb {R}}^2_+\)). Thus we only need to consider the case where \(x_1 \ne 0\). Then

where the last equality follows by noting that \(\min _{s \ge 0}(x-s)^2=\min \{x,0\}^2\). Direct verification now shows that

which is closed and convex. Therefore, \(\Omega \) is also closed and convex.

Next, we provide some verifiable sufficient conditions guaranteeing convexity as well as closedness of \(\Omega \). To do this, recall that an \(n\times n\) matrix \({\mathsf {M}}\) is called a Z-matrix if its off-diagonal elements \(M_{ij}\) with \(1 \le i,j \le n\) and \(i \ne j\), are all non-positive. We also need the following joint-range convexity for Z-matrices.

Lemma 4.1

Let \({\mathsf {M}}_i\), \(i{ \in \! [{1}\! : \! {q}]}\), be symmetric Z-matrices of order n. Then

is a convex cone.

Proof

The proof is similar to [19, Theorem 5.1]. \(\square \)

Proposition 4.1

Suppose that \({\mathsf {Q}}_0,{\mathsf {Q}}_1\), \({\mathsf {A}}^\top {\mathsf {A}}\) are all Z-matrices, \({\mathsf {B}}=-{\mathsf {I}}_n\) and \({\mathsf {q}}_0,{\mathsf {q}}_1,{\mathsf {a}},{\mathsf {b}}\) are zero vectors. Then \(\Omega \) is convex.

Proof

Let \(\bar{h}_0({\mathsf {y}})={\mathsf {x}}^\top {\mathsf {Q}}_0{\mathsf {x}}\), \(\bar{h}_1({\mathsf {y}}) = {\mathsf {x}}^\top {\mathsf {Q}}_1{\mathsf {x}}\), \(\bar{h}_2({\mathsf {y}}) = { \Vert {\mathsf {A}}{\mathsf {x}} \Vert }^2\) and \(\bar{h}_3({\mathsf {y}})=\Vert {\mathsf {x}}-{\mathsf {s}}\Vert ^2\) with \({\mathsf {y}}=({\mathsf {s}},{\mathsf {x}})\), so \(p=n\) here. We first note that \( \Omega =(0,-1,-1,0)+\bar{\Omega }\) where

To see the convexity of \(\Omega \), it suffices to show that \(\bar{\Omega }\) is convex. To verify this, take \((u_{0},u_{1},u_{2},u_{3}) \in \bar{\Omega }\) and \((v_{0},v_{1},v_{2},v_{3}) \in \bar{\Omega }\), and let \(\lambda \in [0,1]\). Then there exist \((\hat{{\mathsf {s}}},\hat{{\mathsf {x}}}) \in \mathbb {R}^n_+ \times \mathbb {R}^n \) and \((\tilde{{\mathsf {s}}},\tilde{{\mathsf {x}}}) \in \mathbb {R}^n_+ \times \mathbb {R}^n \) such that

In particular, \(u_3\ge 0\) and \(v_3 \ge 0\). We now verify that

Note that \(\bar{h}_i({\mathsf {y}}) = {\mathsf {y}}^\top \left[ {\mathsf {O}}\oplus {\mathsf {Q}}_i \right] {\mathsf {y}}\) for \(i\in \left\{ 0,1\right\} \) and \(\bar{h}_2({\mathsf {y}}) = {\mathsf {y}}^\top \left[ {\mathsf {O}}\oplus {\mathsf {A}}^\top {\mathsf {A}}\right] {\mathsf {y}}\), cf. (1), while

so that the associated matrices

are all Z-matrices. We see that

is convex. So there exists \(({\mathsf {r}},{\mathsf {z}}) \in \mathbb {R}^p \times \mathbb {R}^n\) such that

Denote \({\mathsf {z}}=(z_1,\ldots ,z_n)\) and let \(|{\mathsf {z}}|=(|z_1|,\ldots ,|z_n|)\). The Z-matrix assumptions ensure

and

Therefore, \(\lambda (u_{0},u_{1},u_{2},u_{3})+(1-\lambda ) (v_{0},v_{1},v_{2},v_{3}) \in \bar{\Omega }\), and so the conclusion follows. \(\square \)

Proposition 4.2

Suppose that there exist \(\tau _i\ge 0\), \(i{ \in \! [{0}\! : \! {2}]}\), such that

Then \(\Omega \) is closed.

Proof

Let \({\mathsf {r}}^{(k)} \in \Omega \) such that \({\mathsf {r}}^{(k)}\rightarrow {\mathsf {r}}\in {\mathbb {R}}^4\). Then there exists \({\mathsf {y}}_k=({\mathsf {s}}_k,{\mathsf {x}}_k)\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\) such that

We first see that \(\{{\mathsf {x}}_k\}\) is bounded. To see this, note that

Since \(\nabla ^2 \big (\sum _{i=0}^2 \tau _i f_i\big )({\mathsf {x}}) \equiv \tau _0 {\mathsf {Q}}_0+ \tau _1 {\mathsf {Q}}_1+\tau _2 {\mathsf {A}}^\top {\mathsf {A}}\succ 0\), this implies that \(\{{\mathsf {x}}_k\}\) must be bounded. Taking into account that

it follows that also \(\{{\mathsf {s}}_k\}\) is a bounded sequence. By passing to subsequences, we may assume that \({\mathsf {y}}_k=({\mathsf {s}}_k,{\mathsf {x}}_k) \rightarrow ({\mathsf {s}},{\mathsf {x}})=:{\mathsf {y}}\in {\mathbb {R}}^p_+\times {\mathbb {R}}^n\). Passing to the limit, we see that \(\bar{f}_i({\mathsf {y}}) \le r_{i}, \ i{ \in \! [{0}\! : \! {3}]}\) and so \({\mathsf {r}}\in \Omega \). Thus \(\Omega \) is closed. \(\square \)

4.2 Sufficient global optimality conditions

Now, we obtain the following sufficient second-order global optimality condition, which also implies that the copositive relaxation is tight, generalizing a recent result [9, Section 6.3] for CDT problems:

Theorem 4.2

If at a generalized KKT pair \((\bar{{\mathsf {x}}}; \bar{{\mathsf {u}}},\bar{{\mathsf {v}}})\in (F\cap P)\times {\mathbb {R}}^3_+\times {\mathbb {R}}^p\) of problem (3), we have

then \(\bar{{\mathsf {x}}}\) is a globally optimal solution to (3) and \(z^*=z_\mathrm{COP}^*\).

Proof

We first note that the conic dual of problem (6) is

with \({\mathsf {M}}_i = {\mathsf {M}}({\bar{f}}_i)\) and \({\mathcal {C}}_\Upsilon = {\text{ conv } }\left\{ {\mathsf {x}}{\mathsf {x}}^\top : {\mathsf {x}}\in {\mathbb {R}}^{p+1}_+\times {\mathbb {R}}^n \right\} \). From standard conical lifting and weak duality arguments it follows

Let \(\bar{{\mathsf {s}}}= {\mathsf {b}}-{\mathsf {B}}\bar{{\mathsf {x}}}\) and \(\bar{{\mathsf {y}}}=(\bar{{\mathsf {s}}}, \bar{{\mathsf {x}}})\). The complementarity conditions imply \(\bar{{\mathsf {v}}}^\top \bar{{\mathsf {s}}} = 0\) and \(\sum \nolimits _{i=1}^{3}\bar{u}_i {\bar{f}}_i(\bar{{\mathsf {y}}})= 0\), so that both \(L (\bar{{\mathsf {y}}};\bar{{\mathsf {u}}},\bar{{\mathsf {v}}}) = f_0(\bar{{\mathsf {x}}})\) and \(L (\bar{{\mathsf {y}}};\bar{{\mathsf {u}}},{\mathsf {o}}) = f_0(\bar{{\mathsf {x}}})\) hold, which will be used now. Indeed, put \(\bar{{\mathsf {z}}}^\top =[1,\bar{{\mathsf {y}}}^\top ]\) and \(\bar{{\mathsf {X}}} = \bar{{\mathsf {z}}}\bar{{\mathsf {z}}}^\top \in {\mathcal {C}}_\Upsilon \). Then from the definition of \(\bar{{\mathsf {S}}}\) we get

so that \(( \bar{{\mathsf {X}}},\bar{{\mathsf {S}}})\) form an optimal primal-dual pair for the copositive problem (13) and (6) with zero duality gap. We conclude, by feasibility of \(\bar{{\mathsf {x}}}\) and definition of \(z^*\), and because of (6) with \(\mu =f_0(\bar{{\mathsf {x}}})\), cf. (12),

yielding tightness of the copositive relaxation, zero duality gap for the copositive-cp conic optimization problems, and optimality of \(\bar{{\mathsf {x}}}\). \(\square \)

While checking copositivity is NP-hard, the slack matrix \(\bar{{\mathsf {S}}}\) may lie in a slightly smaller but tractable approximation cone, and then global optimality is guaranteed even in cases where \(\bar{{\mathsf {S}}}\) is indefinite. The difference can also be expressed in properties of the Hessian \({\mathsf {H}}_{\bar{{\mathsf {u}}}}\) of the Lagrangian (recall that this is the same irrespective of our decision whether to relax also the linear constraints or not): indeed, a similar condition on the slack matrix yielding tightness of the classical Lagrangian bound (i.e. \(z_\mathrm{LD}^*=z^*\)) or the equivalent SDP relaxation [7, Section 5.1] implies that its lower right principal submatrix \({\mathsf {H}}_{\bar{{\mathsf {u}}}}\) has to be psd, and we know this is too strong in some cases [39].

By contrast, the condition \(\bar{{\mathsf {S}}}\in {\mathcal {C}}^\star _\Upsilon \) (giving tightness \(z_\mathrm{COP}^*=z^*\)), by the same argument using Lemma 2.1 and (5), only yields positive semidefiniteness of \({\mathsf {H}}_{\bar{{\mathsf {u}}}}+ \bar{{\mathsf {u}}}_{3}{\mathsf {B}}^\top {\mathsf {B}}\). Of course, this happens more frequently than positive semidefiniteness of the Hessian \({\mathsf {H}}_{\bar{{\mathsf {u}}}}\) itself, and the discrepancy is not negligible, see [7, Section 5] for an example.

We note that Theorem 4.2 can be used to construct a class of problems where copositive relaxation is always tight while the usual Lagrangian dual produces a trivial bound with infinite duality gaps. To see this, we shall need the following auxiliary result.

Lemma 4.2

Let \({\mathsf {M}}\) be strictly \({\mathbb {R}}^n_+\)-copositive; then there exists a constant \(\sigma >0\) such that

Proof

We first note that the conclusion trivially holds if \({\mathsf {M}}\) is further assumed to be positive semidefinite. So we may assume without loss of generality that \(\lambda _\mathrm{min} ({\mathsf {M}}) <0\). For any \({\mathsf {x}}\in {\mathbb {R}}^n\), denote by

and by \({\mathsf {x}}^-{:}= {\mathsf {x}}^+-{\mathsf {x}}\in {\mathbb {R}}^n_+\) so that \({\mathsf {x}}= {\mathsf {x}}^+-{\mathsf {x}}^-\). Furthermore, we have \( \Vert {\mathsf {x}}-{\mathsf {s}} \Vert \ge \Vert {\mathsf {x}}^- \Vert \) for all \({\mathsf {s}}\in {\mathbb {R}}^n_+\), as can be seen easily. Therefore we are done if we establish the (non-quadratic) inequality \({\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}+ \sigma \Vert {\mathsf {x}}^- \Vert ^2 >0\) whenever \({\mathsf {x}}\in {\mathbb {R}}^n\setminus \left\{ {\mathsf {o}}\right\} \). Now, given \({\mathsf {M}}\) is strictly copositive, we choose \(\rho {:}=\min \left\{ {\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}: {\mathsf {x}}\in {\mathbb {R}}^n_+, \Vert {\mathsf {x}} \Vert =1\right\} >0\). Note that \({\mathsf {x}}\in {\mathbb {R}}^n_+\) if and only if \( \Vert {\mathsf {x}}^- \Vert =0\). By continuity and compactness, we infer existence of an \(\varepsilon >0\) such that \({\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}\ge \frac{\rho }{2}\) whenever \( \Vert {\mathsf {x}}^- \Vert \le \varepsilon \) and \( \Vert {\mathsf {x}} \Vert = 1\). Now we distinguish two cases:

Case 1: \( \Vert {\mathsf {x}}^- \Vert \ge \varepsilon \Vert {\mathsf {x}} \Vert >0\). In this case, we have

where we set \(\sigma {:}= - 2\lambda _\mathrm{min} ({\mathsf {M}})/\varepsilon ^2 >0\);

Case 2: \( \Vert {\mathsf {x}}^- \Vert \le \varepsilon \Vert {\mathsf {x}} \Vert \). In this case, one has \({\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}\ge \frac{\rho }{2} { \Vert {\mathsf {x}} \Vert }^2 > -\sigma \Vert {\mathsf {x}}^- \Vert ^2\) (for any \(\sigma >0\)).

So in both cases, we obtain \({\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}+ \sigma { \Vert {\mathsf {x}}^- \Vert }^2 >0\) for all \({\mathsf {x}}\in {\mathbb {R}}^n\setminus \left\{ {\mathsf {o}}\right\} \), and the lemma is shown. \(\square \)
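
The inequality \({\mathsf {x}}^\top {\mathsf {M}} {\mathsf {x}}+ \sigma { \Vert {\mathsf {x}}^- \Vert }^2 >0\) can be illustrated on a small instance. The sketch below takes \({\mathsf {x}}^+\) to be the componentwise positive part of \({\mathsf {x}}\) (an assumption about the display preceding the definition of \({\mathsf {x}}^-\)), so that \({\mathsf {x}}^-=\max \{-{\mathsf {x}},{\mathsf {o}}\}\) componentwise. The matrix and the value \(\sigma =4\) are chosen by hand for this \(2\times 2\) example rather than computed from the constants \(\rho \) and \(\varepsilon \) in the proof. By homogeneity it suffices to sample the unit circle.

```python
import numpy as np

def penalized_form(M, x, sigma):
    """Evaluate x^T M x + sigma * ||x^-||^2 with x^- = max(-x, 0) componentwise."""
    x = np.asarray(x, dtype=float)
    x_minus = np.maximum(-x, 0.0)
    return float(x @ M @ x + sigma * (x_minus @ x_minus))

# Strictly copositive (all entries positive) but indefinite: eigenvalues 3 and -1.
M = np.array([[1.0, 2.0], [2.0, 1.0]])

# Deterministic grid on the unit circle.
angles = np.linspace(0.0, 2.0 * np.pi, 720, endpoint=False)
unit_vectors = np.column_stack((np.cos(angles), np.sin(angles)))

def min_on_circle(sigma):
    """Smallest sampled value of the penalized form over unit vectors."""
    return min(penalized_form(M, x, sigma) for x in unit_vectors)

min_large_sigma = min_on_circle(4.0)   # penalty strong enough for this instance
min_small_sigma = min_on_circle(1.0)   # penalty too weak: the form dips below zero
```

For this instance a direct calculation on the quadrant \(x_1<0<x_2\) shows that the penalized form is positive exactly when \(\sigma >3\), which matches the two sampled minima.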

Consider the following non-convex quadratic optimization problem

where \({\mathsf {Q}}_0\) is a strictly \(\mathbb {R}^n_+\)-copositive and indefinite matrix. This problem can be regarded as a special case of the model problem (P) with \({\mathsf {Q}}_1={\mathsf {I}}_n\), \({\mathsf {A}}={\mathsf {O}}\) and \({\mathsf {a}}={\mathsf {o}}\). We now show that this class of quadratic optimization problems admits a tight copositive relaxation although its Lagrangian duality gap is infinite.

Proposition 4.3

(Tight copositive relaxation and infinite Lagrangian duality gap for (EP)) For problem (EP), let \(z^*\), \(z_\mathrm{LD}^*\) and \(z_\mathrm{COP}^*\) denote the optimal values of (EP), of its Lagrangian relaxation and of its copositive relaxation, respectively. Then \(z_\mathrm{LD}^*=-\infty \) and \(z^*=z_\mathrm{COP}^*=0\).

Proof

Direct verification shows that \({\mathsf {o}}\in \mathbb {R}^n\) is a global solution with the optimal value \(z^*=0\). We first observe that, as \({\mathsf {Q}}_0\) is indefinite, the optimal value of the Lagrangian dual is \(z_\mathrm{LD}^*=-\infty \). Next, as \({\mathsf {Q}}_0\) is strictly \(\mathbb {R}^n_+\)-copositive, the preceding lemma implies that there exists \(\sigma >0\) such that

Let \(\bar{{\mathsf {y}}}=(\bar{{\mathsf {x}}},\bar{{\mathsf {s}}})\) with \(\bar{{\mathsf {x}}}=\bar{{\mathsf {s}}}={\mathsf {o}}\in \mathbb {R}^n\), \(\bar{{\mathsf {u}}}=(0,0,\sigma ) \in \mathbb {R}^3_+\) and \(\bar{{\mathsf {v}}}={\mathsf {o}}\in \mathbb {R}^n\). Then we see that \((\bar{{\mathsf {y}}};\bar{{\mathsf {u}}},\bar{{\mathsf {v}}})\) is a (generalized) KKT pair for (EP). Moreover, we have

For all \({\mathsf {d}}{:}=(r,{\mathsf {s}},{\mathsf {x}})\in \Upsilon = \mathbb {R}_+ \times {\mathbb {R}}^n_+\times {\mathbb {R}}^n\), the above implies

and hence \({\mathsf {M}}(L (\cdot ;\bar{{\mathsf {u}}},{\mathsf {o}}))-f_0(\bar{{\mathsf {x}}}) {\mathsf {J}}_0\) is \(\Upsilon \)-copositive. This shows that \(z_\mathrm{COP}^*=0\). \(\square \)

5 Relaxation tightness in extended CDT problems

In this section, we examine the so-called extended CDT problem:

This problem is a special case of our general model problem with \({\mathsf {Q}}_1={\mathsf {I}}_n\) and \({\mathsf {q}}_1={\mathsf {o}}\). If no linear inequalities are present, the problem \(\mathrm {(P_{CDT})}\) reduces to the so-called CDT problem (also referred to as the two-ball trust region problem, TTR). The CDT problem is, in general, much more challenging than the well-studied trust region problem and has received much attention lately, see for example [1, 5, 6, 9, 14]. The problem \(\mathrm {(P_{CDT})}\) arises from robust optimization [20] as well as from applying trust region techniques to nonlinear optimization problems with both nonlinear and linear constraints: see [34] for the case of trust region problems with additional linear inequalities and [6, 9] for the case of CDT problems. We will establish simple conditions ensuring exactness of the copositive relaxations and the usual Lagrangian relaxations of the extended CDT problem.

First of all, we note that the sufficient second-order global optimality condition in Theorem 4.2, specialized to the setting \(\mathrm {(P_{CDT})}\), yields the exact copositive relaxation for extended CDT problems.

Corollary 5.1

Let \((\bar{{\mathsf {x}}}; \bar{{\mathsf {u}}},\bar{{\mathsf {v}}})\in F_{\mathrm{CDT}} \times {\mathbb {R}}^3_+\times {\mathbb {R}}^p\) be a generalized KKT pair of problem \(\mathrm {(P_{CDT})}\) where \(F_{\mathrm{CDT}}\) is the feasible set of \(\mathrm {(P_{CDT})}\). Denote by \(\bar{\mu }{:}=\bar{{\mathsf {x}}}^\top {\mathsf {Q}}_0 \bar{{\mathsf {x}}}+2 {\mathsf {q}}_0^\top \bar{{\mathsf {x}}}\). Suppose that

is \((\mathbb {R}^{p+1}_+ \times \mathbb {R}^n)\)-copositive. Then \(\bar{{\mathsf {x}}}\) is a globally optimal solution to problem \(\mathrm {(P_{CDT})}\) and \(z^*=z_\mathrm{COP}^*\).

Proof

The conclusion follows by Theorem 4.2 with \({\mathsf {Q}}_1={\mathsf {I}}_n\) and \({\mathsf {q}}_1={\mathsf {o}}\). \(\square \)

Next we examine when the usual Lagrangian relaxation is exact for the extended CDT problems. To this end, we define an auxiliary convex optimization problem

where

We first see that if the auxiliary convex problem (AP) has a minimizer on the sphere \(\{{\mathsf {x}}\in {\mathbb {R}}^n :\Vert {\mathsf {x}}\Vert =1\}\), then an extended CDT problem has a tight semi-Lagrangian relaxation. We will provide a sufficient condition in terms of the original data guaranteeing this condition later (in Theorem 5.1).

Lemma 5.1

Suppose that the auxiliary convex problem (AP) has a minimizer on the sphere \(\{{\mathsf {x}}:\Vert {\mathsf {x}}\Vert =1\}\). Then \(z_\mathrm{LD}^*=z_\mathrm{COP}^*=z^*\).

Proof

Recall that \(z_\mathrm{LD}^* \le z_\mathrm{COP}^* \le z^*\). So it suffices to show that \(z_\mathrm{LD}^*=z^*\). Without loss of generality, we assume that \(\lambda _{\min }({\mathsf {Q}}_0)<0\) (otherwise \(\mathrm {(P_{CDT})}\) is a convex quadratic problem and so \(z_\mathrm{LD}^*=z^*\)). Let \({\mathsf {x}}^*\) be a solution of (AP) with \(\Vert {\mathsf {x}}^*\Vert =1\). As \(\lambda _{\min }({\mathsf {Q}}_0)<0\), it follows from \( \Vert {\mathsf {x}} \Vert \le 1\) for all \({\mathsf {x}}\in F_{\mathrm{CDT}}\) that

where the last inequality follows from feasibility of \({\mathsf {x}}^*\) for the extended CDT problem. This shows that \(z^*=\mathrm{val}\mathrm {(AP)}+\lambda _{\min }({\mathsf {Q}}_0)\). Rewriting (AP) as

we obtain the Lagrangian dual of this problem which can be stated as

where \(\tilde{{\mathsf {M}}}(\mu ,{\mathsf {u}},{\mathsf {v}})\) denotes the matrix

Note that the feasible set of (AP1) is bounded by \(\Vert {\mathsf {x}}\Vert \le 1\) and \({\mathsf {s}}={\mathsf {B}}{\mathsf {x}}-{\mathsf {b}}\) for all feasible \(({\mathsf {x}},{\mathsf {s}})\). Since any convex optimization problem with compact feasible set enjoys a zero duality gap (for example see [21]), it follows that

Finally, the conclusion results by noting that \(\mathrm{val}\mathrm {(LD1)}=z_\mathrm{LD}^*+\lambda _{\min }({\mathsf {Q}}_0)\). So we have \(z^*=z_\mathrm{LD}^*\) and furthermore \(z_\mathrm{LD}^*=z_\mathrm{COP}^*=z^*\). \(\square \)
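The shift by \(\lambda _{\min }({\mathsf {Q}}_0)\) used in this proof can be illustrated numerically. The sketch below rests on assumptions that are not spelled out in the surviving text: it takes \({\mathsf {Q}}_0^+ = {\mathsf {Q}}_0-\lambda _{\min }({\mathsf {Q}}_0){\mathsf {I}}_n\), as suggested by the example in Remark 5.2, and it uses the matrix \({\mathsf {Q}}_0=\mathrm{diag}(2,-2)\) from that remark. The helper names `quad` and `shift_gap` are hypothetical. Under these assumptions, \({\mathsf {x}}^\top {\mathsf {Q}}_0{\mathsf {x}}- \left( {\mathsf {x}}^\top {\mathsf {Q}}_0^+{\mathsf {x}}+\lambda _{\min }({\mathsf {Q}}_0)\right) = \lambda _{\min }({\mathsf {Q}}_0)(\Vert {\mathsf {x}}\Vert ^2-1)\), which is nonnegative on the unit ball and vanishes on the sphere.

```python
import math
import random


def quad(Q, x):
    """Evaluate the quadratic form x^T Q x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


# Data from Remark 5.2; LAM_MIN is the smallest eigenvalue of diag(2, -2).
Q0 = [[2.0, 0.0], [0.0, -2.0]]
LAM_MIN = -2.0

# Assumed definition: Q0_PLUS = Q0 - LAM_MIN * I, which is positive semidefinite.
Q0_PLUS = [[Q0[i][j] - (LAM_MIN if i == j else 0.0) for j in range(2)] for i in range(2)]


def shift_gap(x):
    """x^T Q0 x minus the shifted convex form x^T Q0_PLUS x + LAM_MIN."""
    return quad(Q0, x) - (quad(Q0_PLUS, x) + LAM_MIN)


random.seed(0)
inside = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(1000)]
inside = [x for x in inside if math.hypot(*x) <= 1.0]
on_sphere = [(math.cos(t), math.sin(t)) for t in (0.0, 0.7, 2.0, 4.5)]

print(min(shift_gap(x) for x in inside))            # nonnegative on the ball
print(max(abs(shift_gap(x)) for x in on_sphere))    # zero up to rounding
```

This is why a minimizer of the convex auxiliary problem lying on the sphere is enough: there the convex surrogate and the original objective differ only by the constant \(\lambda _{\min }({\mathsf {Q}}_0)\).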

Next we provide a simple sufficient condition, formulated in terms of the original data, that guarantees tightness of the relaxations. Importantly, this sufficient condition can be verified efficiently by solving a linear feasibility problem.

Theorem 5.1

Let \({\mathsf {M}}=[{\mathsf {Q}}_0^+ | {\mathsf {A}}^\top ]^\top \). Suppose that

Then \(z_{\mathrm{LD}}^*=z_{\mathrm{COP}}^*=z^*\).

Proof

By the preceding lemma, the conclusion follows if we show that the auxiliary convex problem (AP) has a minimizer on the sphere \(\{{\mathsf {x}}:\Vert {\mathsf {x}}\Vert =1\}\). Suppose that a minimizer \({\mathsf {x}}^*\) of (AP) satisfies \(\Vert {\mathsf {x}}^*\Vert <1\). Let \({\mathsf {v}}\in \mathrm{ker}({\mathsf {M}}) \cap \{{\mathsf {v}}\in \mathbb {R}^n: {\mathsf {B}}{\mathsf {v}}\le {\mathsf {o}}\} \cap \{{\mathsf {v}}: {\mathsf {q}}_0^\top {\mathsf {v}}\ge 0\}\) with \({\mathsf {v}}\ne {\mathsf {o}}\). Consider \({\mathsf {x}}(t)={\mathsf {x}}^*+t{\mathsf {v}}\), \(t \ge 0\). Then there exists \(t_0>0\) such that \(\Vert {\mathsf {x}}(t_0)\Vert =1\). Now observe

and

This shows that \({\mathsf {x}}(t_0)\) is a minimizer for (AP) and \(\Vert {\mathsf {x}}(t_0)\Vert =1\). The conclusion follows. \(\square \)

Remark 5.1

(LP reformulation of the sufficient condition (15)) Our sufficient condition (15) can be efficiently verified by determining a feasible solution to the following LP

Remark 5.2

(Links to the known dimension condition for exact relaxation) In the special case where \({\mathsf {A}}={\mathsf {O}}\) and \({\mathsf {a}}={\mathsf {o}}\in \mathbb {R}^n\), it was shown in [20] that under the dimension condition

where \({\mathsf {b}}_i^\top \) is the \(i\)th row of \({\mathsf {B}}\), the SDP (or Lagrangian) relaxation is exact. We observe that this dimension condition is strictly stronger than our sufficient condition in the preceding theorem.

Firstly, we see that the dimension condition implies our sufficient condition in the preceding theorem. To see this, suppose the above dimension condition holds. Then there exists \({\mathsf {v}}\ne {\mathsf {o}}\) such that \({\mathsf {v}}\in \mathrm{ker} {\mathsf {Q}}_0^+\) and \({\mathsf {b}}_i^\top {\mathsf {v}}=0\) for all \(i{ \in \! [{1}\! : \! {p}]}\) (and hence \({\mathsf {B}}{\mathsf {v}}={\mathsf {o}}\)). By replacing \({\mathsf {v}}\) by \(-{\mathsf {v}}\) if necessary, we can assume that \({\mathsf {q}}_0^\top {\mathsf {v}}\ge 0\). Thus, our sufficient condition in the preceding theorem holds.

To see the dimension condition is strictly stronger, let us consider \({\mathsf {Q}}_0=\left[ \begin{array}{cc} 2 &{} 0 \\ 0 &{} -2 \end{array}\right] \), \({\mathsf {A}}=\left[ \begin{array}{cc} 0 &{} 0 \\ 0 &{} 0 \end{array}\right] \), \({\mathsf {q}}_0={\mathsf {a}}= \left[ \begin{array}{c} 0\\ 0\end{array}\right] \) and \({\mathsf {B}}={\mathsf {b}}_1^\top =[-1,0]\). Clearly, \(\mathrm{dim}\, \mathrm{ker} {\mathsf {Q}}_0^+=\mathrm{dim}\, \mathrm{ker} \left[ \begin{array}{cc} 4 &{} 0 \\ 0 &{} 0 \end{array}\right] =1\) and \(\mathrm{dim} \, \mathrm{span}[{\mathsf {b}}_1]=1\), and so the dimension condition fails. On the other hand, our sufficient condition reads

which is obviously satisfied.
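For the data of this example, a certificate for the sufficient condition can be checked directly. The sketch below follows the description used in the proof of Theorem 5.1: a vector \({\mathsf {v}}\ne {\mathsf {o}}\) with \({\mathsf {Q}}_0^+{\mathsf {v}}={\mathsf {o}}\), \({\mathsf {A}}{\mathsf {v}}={\mathsf {o}}\), \({\mathsf {B}}{\mathsf {v}}\le {\mathsf {o}}\) and \({\mathsf {q}}_0^\top {\mathsf {v}}\ge 0\). The function names `matvec` and `is_certificate` and the tolerance `tol` are assumptions made for illustration; the code only verifies a given candidate and does not search for one.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def is_certificate(v, Q0_plus, A, B, q0, tol=1e-9):
    """Check v != o, Q0_plus v = o, A v = o, B v <= o and q0^T v >= 0."""
    nonzero = any(abs(vi) > tol for vi in v)
    in_kernel = all(abs(r) <= tol for r in matvec(Q0_plus, v) + matvec(A, v))
    in_cone = all(r <= tol for r in matvec(B, v))
    sign_ok = sum(q * vi for q, vi in zip(q0, v)) >= -tol
    return nonzero and in_kernel and in_cone and sign_ok


# Data of the example above, with Q0_plus = diag(4, 0).
Q0_plus = [[4.0, 0.0], [0.0, 0.0]]
A = [[0.0, 0.0], [0.0, 0.0]]
B = [[-1.0, 0.0]]
q0 = [0.0, 0.0]

print(is_certificate([0.0, 1.0], Q0_plus, A, B, q0))  # candidate in the kernel
print(is_certificate([1.0, 0.0], Q0_plus, A, B, q0))  # candidate outside the kernel
```

The vector \((0,1)^\top \) passes all four checks, whereas \((1,0)^\top \) fails because it does not lie in the kernel of \({\mathsf {Q}}_0^+\).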

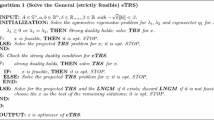

6 Approximation hierarchies for \({\mathcal {C}}_\Upsilon \)-copositivity

In general, checking copositivity of a matrix is an NP-hard problem, and hence solving a copositive optimization problem is also NP-hard. Therefore, to compute the semi-Lagrangian bound, we need to approximate the underlying cones by so-called hierarchies, i.e., a sequence of conic optimization problems involving tractable cones \({\mathcal {K}}_d^\star \) such that \({\mathcal {K}}_d^\star \subset {\mathcal {K}}_{d+1}^\star \subset {\mathcal {C}}_{\Upsilon }^\star \) where \(d\) is the level of the hierarchy, and \(\mathrm{cl}(\bigcup _{d=0}^\infty {\mathcal {K}}_d^\star ) = {\mathcal {C}}_{{\Upsilon }}^\star \). On the dual side, \({\mathcal {K}}_d\) are also tractable, \({\mathcal {K}}_{d+1} \subset {\mathcal {K}}_d\), and \(\bigcap _{d=0}^\infty {\mathcal {K}}_d= {\mathcal {C}}_{\Upsilon }\). For classical copositivity \({\mathcal {C}}_{{\mathbb {R}}^n_+}\), there are many options; for a concise survey, see [8]. Many of these hierarchies involve linear [11, 12] or psd constraints on matrices of order \(n^{d+2}\), e.g. the seminal ones proposed in [24, 33]. One possibility would be the reduction of \(\Upsilon \)-copositivity via Schur complements as in Lemma 2.1 above, reducing this question to a combination of psd and classical copositivity conditions, which can be treated by these classical approximation hierarchies. However, the difficulty with this approach is the nonlinear dependence of the Schur complement \({\mathsf {R}}- {\mathsf {S}}^\top {\mathsf {H}}^\dag {\mathsf {S}}\) on \((\mu , {\mathsf {u}})\). Therefore let us outline two alternative approaches for constructing tractable hierarchies in approximating the copositive relaxation, extending and adapting the classical approach.

6.1 SDP hierarchy

One approach for computing the copositive relaxation is to use a hierarchy of SDP relaxations, extending the sum-of-squares idea in Parrilo’s work [33], which we sketch below. Let \(I=[1\!:\!p+1]\) and, for a matrix \({\mathsf {M}}={\mathsf {M}}^\top \in \mathbb {R}^{(p+n+1)\times (p+n+1)}\), define a quartic polynomial

Note that \({\mathsf {M}}\) is \(\Upsilon \)-copositive if and only if \(p_{\mathsf {M}}({\mathsf {y}}) \ge 0\) for all \({\mathsf {y}}\in \mathbb {R}^{p+n+1}\). A sufficient condition for this is that the product \(p_{\mathsf {M}}({\mathsf {y}}){ \Vert {\mathsf {y}} \Vert }^{2d} = p_{\mathsf {M}}({\mathsf {y}})(\sum _k y_k^2)^d\) is a sum-of-squares (s.o.s.) polynomial, which automatically guarantees nonnegativity of \(p_{\mathsf {M}}\) over \({\mathbb {R}}^{p+n+1}\). Now it is natural to define

Then, following the logic of classical hierarchies, it is not difficult to see that the above properties hold, and that \({\mathcal {K}}_d^\star \) (and \({\mathcal {K}}_d\) itself) are tractable cones expressible by LMI conditions on matrices of order \(n^{d+2}\). Thus, the copositivity characterization of the semi-Lagrangian relaxation can be computed by using SDP hierarchies and polynomial optimization techniques [33]. Of course, LMIs on matrices of larger order pose a serious memory problem for algorithmic implementations even for moderate \(d\) if \(n\) is large.
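The squaring idea behind \(p_{\mathsf {M}}\) can be made concrete on a toy instance. The displayed definition of \(p_{\mathsf {M}}\) is not reproduced in the text available here, so the sketch below assumes the standard substitution: coordinates indexed by \(I\) (the sign-constrained ones) are replaced by their squares, while the remaining free coordinates are left unchanged. The \(3\times 3\) matrix and the function names `quad_form` and `p_M` are hypothetical and serve only to illustrate the equivalence between \(\Upsilon \)-copositivity and global nonnegativity of \(p_{\mathsf {M}}\).

```python
def quad_form(M, d):
    """Evaluate d^T M d."""
    n = len(d)
    return sum(M[i][j] * d[i] * d[j] for i in range(n) for j in range(n))


def p_M(M, y, k):
    """Assumed form of p_M: square the first k coordinates of y, keep the rest.

    The first k coordinates play the role of the index set I = [1 : p+1].
    """
    z = [yi * yi if i < k else yi for i, yi in enumerate(y)]
    return quad_form(M, z)


# Toy data with two sign-constrained coordinates (k = 2) and one free coordinate.
# M is not positive semidefinite, but d^T M d >= 0 whenever d[0], d[1] >= 0.
M = [[1.0, 2.0, 0.0],
     [2.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

y = [1.0, -1.0, 0.0]
print(quad_form(M, y))  # plain quadratic form at a point outside the cone
print(p_M(M, y, 2))     # quartic surrogate at the same point
```

At the point \((1,-1,0)\) the quadratic form itself is negative, since the point lies outside the cone, while the quartic surrogate is positive because the first two coordinates are squared before the form is evaluated.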

However, in recent years, various techniques have been proposed to address this issue: one approach is to exploit special structures of the problem such as sparsity and symmetry [22, 23] to treat large scale polynomial problems. Other techniques include refined SDP hierarchies such as the SDP approximation proposed in [26] and the recently established bounded s.o.s. hierarchy [27].

On the other hand, it is worth noting that sometimes even the zero-level approximation in the hierarchy (16) can provide a much better bound as compared to the Lagrangian relaxation, as shown in the next example.

Example 6.1

(Zero-level approximation of copositive relaxations can beat the Lagrangian relaxation) With the data from Example 3.1, recalling that the optimal value of this example is \(z^*=0\), we have

Then the zero-level approximation for the copositive relaxation problem reads

From the definition of \({\mathsf {M}}(u,\mu )\), we see that \(p_{{\mathsf {M}}(u,\mu )}\) is a s.o.s. polynomial if and only if \(\mu \le 0\) and

is a s.o.s. polynomial. This shows that

where

Using the “solvesos” command in the Matlab toolbox YALMIP [29], one can verify that \(\widehat{p}_u\) is a s.o.s. polynomial if \(u=1\). Thus, \(\mathrm{val}\mathrm {(RP)}=0\) which agrees with the true optimal value \(z^*=0\). On the other hand, as computed in Example 3.1, the Lagrangian relaxation yields a trivial lower bound \(-\infty \).

6.2 LP hierarchy

Another approach is to compute the copositive relaxation using linear optimization. While providing, in general, weaker bounds in comparison with SDP hierarchies, this approach is appealing because LP solvers suffer less from memory problems than state-of-the-art SDP solvers. To do this, consider a compact polyhedral base \(K\) of the cone \(\Upsilon \), i.e., \({\mathbb {R}}_+K =\Upsilon \), e.g. the polytope

described by \(m=p+2(n+1)\) affine-linear inequalities. By positive homogeneity, we observe that \({\mathsf {M}}\) is \(\Upsilon \)-copositive if and only if \(q_{\mathsf {M}}({\mathsf {y}}){:}={\mathsf {y}}^\top {\mathsf {M}}{\mathsf {y}}\ge 0\) for all \({\mathsf {y}}\in K\). Now Handelman’s theorem (for example see [25, Theorem 2.24]) ensures that any polynomial \(f\) positive over such a polytope \(K\) admits the representation \(f=\sum _{{\alpha } \in \mathbb {N}^{m}}c_{{\alpha }}\prod _{j=1}^{m} h_j^{\alpha _j}\) for some scalars \(c_{{\alpha }}\ge 0\), only finitely many of which are nonzero. Then one can construct a sequence of LP approximations by letting

It is well known (see for example [25, Theorem 5.11]) that the above \({\mathcal {K}}_d^\star \) can be expressed by linear inequality constraints.
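A Handelman-type representation is easy to inspect in one dimension. The following sketch is a hypothetical illustration and does not use the polytope \(K\) of this section: it takes the interval \([0,1]\), described by the affine functions \(h_1(y)=y\) and \(h_2(y)=1-y\), and the polynomial \(f(y)=1-y+y^2\), which is positive on \([0,1]\). The names `f`, `handelman` and `coeffs` are assumptions. With the nonnegative coefficients \(c_{(0,1)}=c_{(2,0)}=1\) one has \(f=h_2+h_1^2\), and the code checks this identity on a grid.

```python
def f(y):
    """Polynomial that is positive on the interval [0, 1]."""
    return 1.0 - y + y * y


def handelman(y, coeffs):
    """Evaluate sum_alpha c_alpha * h1^alpha1 * h2^alpha2 with h1 = y, h2 = 1 - y."""
    h1, h2 = y, 1.0 - y
    return sum(c * h1 ** a1 * h2 ** a2 for (a1, a2), c in coeffs.items())


# Nonnegative coefficients indexed by exponent pairs (alpha1, alpha2).
coeffs = {(0, 1): 1.0, (2, 0): 1.0}

grid = [k / 100.0 for k in range(101)]
print(max(abs(f(y) - handelman(y, coeffs)) for y in grid))
```

Because the coefficients enter linearly once the maximal degree of the products is fixed, matching coefficients of \(f\) against such a representation leads to linear constraints, which is what makes an LP hierarchy possible.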

References

Ai, W., Zhang, S.: Strong duality for the CDT subproblem: a necessary and sufficient condition. SIAM J. Optim. 19(4), 1735–1756 (2009)

Amaral, P.A., Bomze, I.M.: Copositivity-based approximations for mixed-integer fractional quadratic optimization. Pac. J. Optim. 11(2), 225–238 (2015)

Amaral, P.A., Bomze, I.M., Júdice, J.J.: Copositivity and constrained fractional quadratic problems. Math. Program. 146(1–2), 325–350 (2014)

Ardestani-Jaafari, A., Delage, E.: Linearized robust counterparts of two-stage robust optimization problems with applications in operations management. Preprint, HEC Montréal (2016). http://www.optimization-online.org/DB_HTML/2016/01/5388.html

Beck, A., Eldar, Y.: Strong duality in nonconvex quadratic optimization with two quadratic constraints. SIAM J. Optim. 17(3), 844–860 (2006)

Bienstock, D.: A note on polynomial solvability of the CDT problem. SIAM J. Optim. 26(1), 488–498 (2016)

Bomze, I.M.: Copositive relaxation beats Lagrangian dual bounds in quadratically and linearly constrained QPs. SIAM J. Optim. 25(3), 1249–1275 (2015)

Bomze, I.M., Dür, M., Teo, C.-P.: Copositive optimization. Optima MOS Newsl. 89, 2–10 (2012)

Bomze, I.M., Overton, M.L.: Narrowing the difficulty gap for the Celis–Dennis–Tapia problem. Math. Program. 151(2), 459–476 (2015)

Bomze, I.M., Schachinger, W., Ullrich, R.: New lower bounds and asymptotics for the cp-rank. SIAM J. Matrix Anal. Appl. 36(1), 20–37 (2015)

Bundfuss, S., Dür, M.: Algorithmic copositivity detection by simplicial partition. Linear Algebra Appl. 428(7), 1511–1523 (2008)

Bundfuss, S., Dür, M.: An adaptive linear approximation algorithm for copositive programs. SIAM J. Optim. 20(1), 30–53 (2009)

Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120(2), 479–495 (2009)

Burer, S., Anstreicher, K.: Second-order-cone constraints for extended trust-region subproblems. SIAM J. Optim. 23(1), 432–451 (2013)

Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust-Region Methods. MPS/SIAM Series on Optimization. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2000)

Dickinson, P.J.C., Gijben, L.: On the computational complexity of membership problems for the completely positive cone and its dual. Comput. Optim. Appl. 57(2), 403–415 (2014)

Faye, A., Roupin, F.: Partial Lagrangian relaxation for general quadratic programming. 4OR 5(1), 75–88 (2007)

Hanasusanto, G.A., Kuhn, D.: Conic programming reformulations of two-stage distributionally robust linear programs over Wasserstein balls. Oper. Res. (2017) (accepted)

Jeyakumar, V., Lee, G.M., Li, G.: Alternative theorems for quadratic inequality systems and global quadratic optimization. SIAM J. Optim. 20(2), 983–1001 (2009)

Jeyakumar, V., Li, G.: Trust-region problems with linear inequality constraints: exact SDP relaxation, global optimality and robust optimization. Math. Program. 147(1–2), 171–206 (2014)

Jeyakumar, V., Wolkowicz, H.: Zero duality gaps in infinite-dimensional programming. J. Optim. Theory Appl. 67(1), 87–108 (1990)

Jeyakumar, V., Kim, S., Lee, G.M., Li, G.: Solving global optimization problems with sparse polynomials and unbounded semialgebraic feasible sets. J. Glob. Optim. 65(2), 175–190 (2016)

Kim, S., Kojima, M.: Exploiting sparsity in SDP relaxation of polynomial optimization problems. In: Anjos, M., Lasserre, J.B. (eds.) Handbook on Semidefinite, Conic and Polynomial Optimization: Theory, Algorithm, Software and Applications, pp. 499–532. Springer, New York (2011)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11(3), 796–817 (2000/01)

Lasserre, J.B.: Moments, Positive Polynomials and Their Applications. Imperial College Press, World Scientific (2010)

Lasserre, J.B.: New approximations for the cone of copositive matrices and its dual. Math. Program. 144(1–2), 265–276 (2014)

Lasserre, J.B., Toh, K., Yang, S.: A bounded degree SOS hierarchy for polynomial optimization. EURO J. Comput. Optim. 5(1–2), 87–117 (2017)

Locatelli, M.: Exactness conditions for an SDP relaxation of the extended trust region problem. Optim. Lett. 10(6), 1141–1151 (2016)

Löfberg, J.: Pre- and post-processing sum-of-squares programs in practice. IEEE Trans. Autom. Control 54(5), 1007–1011 (2009)

Motzkin, T.S.: Copositive quadratic forms. Projects and Publications of the National Applied Mathematics Laboratories, Quarterly Report, April through June 1952, pp. 11–12, No. 1818, National Bureau of Standards (1952)

Murty, K.G., Kabadi, S.N.: Some NP-complete problems in quadratic and nonlinear programming. Math. Program. 39(2), 117–129 (1987)

Natarajan, K., Teo, C.P., Zheng, Z.: Mixed zero-one linear programs under objective uncertainty: a completely positive representation. Oper. Res. 59(3), 713–728 (2011)

Parrilo, P.A.: Structured Semidefinite Programs and Semi-algebraic Geometry Methods in Robustness and Optimization. PhD thesis, California Institute of Technology, Pasadena, CA, May (2000)

Powell, M.J.D.: On fast trust region methods for quadratic models with linear constraints. Math. Program. Comput. 7(3), 237–267 (2015)

Shaked-Monderer, N., Berman, A., Bomze, I.M., Jarre, F., Schachinger, W.: New results on the cp rank and related properties of co(mpletely )positive matrices. Linear Multilinear Algebra 63(2), 384–396 (2015)

Shor, N.Z.: Quadratic optimization problems. Izv. Akad. Nauk SSSR Tekhn. Kibernet. 222(1), 128–139 (1987)

Xu, G., Burer, S.: A copositive approach for two-stage adjustable robust optimization with uncertain right-hand sides. Comput. Optim. Appl. (2017). https://doi.org/10.1007/s10589-017-9974-x

Yang, B., Burer, S.: A two-variable analysis of the two-trust-region subproblem. SIAM J. Optim. 26(1), 661–680 (2016)

Yuan, Y.-X.: On a subproblem of trust region algorithms for constrained optimization. Math. Program. 47(1), 53–63 (1990)

Acknowledgements

Open access funding provided by University of Vienna. We are grateful to two anonymous referees for their suggestions which improved presentation of our results.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bomze, I.M., Jeyakumar, V. & Li, G. Extended trust-region problems with one or two balls: exact copositive and Lagrangian relaxations. J Glob Optim 71, 551–569 (2018). https://doi.org/10.1007/s10898-018-0607-4


DOI: https://doi.org/10.1007/s10898-018-0607-4