Abstract

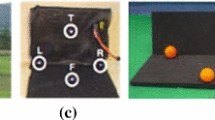

In this paper, we present an onboard monocular vision system for autonomous takeoff, hovering and landing of a Micro Aerial Vehicle (MAV). Since pose information with metric scale is critical for autonomous flight of a MAV, we present a novel solution to six-degrees-of-freedom (DOF) pose estimation. It is based on a single image of a typical landing pad, which consists of the letter “H” surrounded by a circle. A vision algorithm for robust, real-time landing pad recognition is implemented. The 5 DOF pose is then estimated from the elliptic projection of the circle using projective geometry. The remaining geometric ambiguity is resolved by incorporating the gravity vector estimated by the inertial measurement unit (IMU). The last degree of freedom, the yaw angle of the MAV, is estimated from an ellipse fitted to the letter “H”. The efficiency of the presented vision system is demonstrated comprehensively by comparing its pose estimates to ground truth data provided by a tracking system and by using them as control inputs for autonomous flights of a quadrotor.
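The gravity-based disambiguation step can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes that the circle pose step yields two candidate (plane normal, circle center) pairs in the camera frame, that the landing pad lies on horizontal ground so that its true normal is parallel to gravity, and that the IMU gravity estimate has already been rotated into the camera frame. The names `select_pose`, `candidates` and `gravity_cam`, as well as the numeric values, are hypothetical.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def select_pose(candidates, gravity_cam):
    """Pick the candidate whose plane normal is most parallel to gravity.

    candidates:  list of (normal, center) pairs in the camera frame
                 (hypothetical output of the circle pose step).
    gravity_cam: gravity vector in the camera frame, as estimated by the IMU.
    Assumption:  the landing pad is horizontal, so the correct normal is
                 (anti)parallel to gravity; the sign is ignored via abs().
    """
    g = normalize(gravity_cam)

    def alignment(candidate):
        n = normalize(candidate[0])
        return abs(sum(a * b for a, b in zip(n, g)))

    return max(candidates, key=alignment)


# Illustrative values only: two candidate poses and a gravity estimate.
candidates = [
    ((0.0, 0.1, -1.0), (0.05, 0.02, 1.5)),
    ((0.5, 0.1, -0.8), (0.07, 0.02, 1.4)),
]
gravity_cam = (0.0, 0.12, 0.99)

best_normal, best_center = select_pose(candidates, gravity_cam)
```

Comparing the absolute cosine rather than the signed one keeps the sketch independent of whether the normal is defined as pointing toward or away from the camera.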




Cite this article

Yang, S., Scherer, S.A. & Zell, A. An Onboard Monocular Vision System for Autonomous Takeoff, Hovering and Landing of a Micro Aerial Vehicle. J Intell Robot Syst 69, 499–515 (2013). https://doi.org/10.1007/s10846-012-9749-7


DOI: https://doi.org/10.1007/s10846-012-9749-7