Abstract

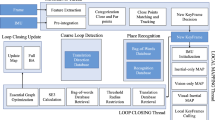

The fusion of inertial and visual data is widely used to improve an object’s pose estimation. However, this type of fusion is rarely used to estimate further unknowns in the visual framework. In this paper we present and compare two different approaches to estimating the unknown scale parameter in a monocular SLAM framework. Directly linked to the scale is the estimation of the object’s absolute velocity and position in 3D. The first approach is a spline fitting task adapted from Jung and Taylor, and the second is an extended Kalman filter. Both methods have been simulated offline on arbitrary camera paths to analyze their behavior and the quality of the resulting scale estimation. We then embedded an online multi-rate extended Kalman filter, together with an inertial sensor, in the Parallel Tracking and Mapping (PTAM) algorithm of Klein and Murray. In this inertial/monocular SLAM framework, we show real-time, robust, and fast-converging scale estimation. Our approach depends neither on known patterns in the vision part nor on complex temporal synchronization between the visual and inertial sensors.
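As a minimal sketch of the relation that scale estimation from inertial and monocular data rests on (it is neither the spline fit nor the EKF from the paper): if the up-to-scale SLAM position p_vis relates to the metric position by p = λ·p_vis, then the metric acceleration measured by the IMU satisfies a = λ·d²p_vis/dt², so λ can be recovered by least squares. The sketch assumes 1D motion, gravity already removed from the accelerometer signal, and vision and IMU samples on a common time grid; central second differences stand in for the spline derivative, and the names `second_differences` and `estimate_scale` are hypothetical.

```python
import math


def second_differences(positions, dt):
    """Central second differences: acceleration estimates at the interior samples."""
    return [
        (positions[i - 1] - 2.0 * positions[i] + positions[i + 1]) / (dt * dt)
        for i in range(1, len(positions) - 1)
    ]


def estimate_scale(vis_positions, imu_accels, dt):
    """Least-squares scale lambda minimising sum_k (a_imu[k] - lambda * a_vis[k])**2.

    Assumptions (illustrative only): 1D motion, gravity-free metric
    accelerations, and both signals sampled at the same timestamps every dt.
    vis_positions: up-to-scale positions from monocular SLAM
    imu_accels:    metric accelerations from the IMU
    """
    if len(vis_positions) != len(imu_accels) or len(vis_positions) < 3:
        raise ValueError("need at least 3 aligned samples")
    a_vis = second_differences(vis_positions, dt)
    a_imu = imu_accels[1:-1]  # drop endpoints to align with the interior samples
    num = sum(ai * av for ai, av in zip(a_imu, a_vis))
    den = sum(av * av for av in a_vis)
    if den <= 1e-12:
        # A stationary or constant-velocity camera carries no scale information.
        raise ValueError("scale unobservable: visual trajectory has no acceleration")
    return num / den


if __name__ == "__main__":
    true_scale, dt = 2.0, 0.05
    ts = [k * dt for k in range(200)]
    vis = [math.sin(t) / true_scale for t in ts]  # SLAM sees sin(t) shrunk by 1/lambda
    imu = [-math.sin(t) for t in ts]              # accelerometer: d^2/dt^2 of sin(t)
    print(f"estimated scale: {estimate_scale(vis, imu, dt):.3f}")  # prints "estimated scale: 2.000"
```

The closed form λ = Σ a_imu·a_vis / Σ a_vis² follows from setting the derivative of the squared residual to zero. Stacking the x, y and z axes into the same sums would extend it to 3D with one shared λ. A real inertial/visual setup would additionally need gravity compensation, rotation of the accelerometer readings into the vision frame, and handling of the different sensor rates; the sketch ignores all of these.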

References

Jung, S.-H., Taylor, C.: Camera trajectory estimation using inertial sensor measurements and structure from motion results. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2, pp. II–732–II–737 (2001)

Klein, G., Murray, D.: Parallel tracking and mapping for small AR workspaces. In: Proc. Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR’07), Nara, Japan (2007)

Nygårds, J., Skoglar, P., Ulvklo, M., Högström, T.: Navigation aided image processing in UAV surveillance: preliminary results and design of an airborne experimental system. J. Robot. Syst. 21(2), 63–72 (2004)

Labrosse, F.: The visual compass: performance and limitations of an appearance-based method. J. Field Robot. 23(10), 913–941 (2006)

Zufferey, J.-C., Floreano, D.: Fly-inspired visual steering of an ultralight indoor aircraft. IEEE Trans. Robot. 22(1), 137–146 (2006)

Huster, A., Frew, E., Rock, S.: Relative position estimation for AUVs by fusing bearing and inertial rate sensor measurements. In: Oceans ’02 MTS/IEEE, vol. 3, pp. 1863–1870 (2002)

Armesto, L., Chroust, S., Vincze, M., Tornero, J.: Multi-rate fusion with vision and inertial sensors. In: 2004 IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA ’04, vol. 1, pp. 193–199 (2004)

Kim, S.-B., Lee, S.-Y., Choi, J.-H., Choi, K.-H., Jang, B.-T.: A bimodal approach for GPS and IMU integration for land vehicle applications. In: 2003 IEEE 58th Vehicular Technology Conference. VTC 2003-Fall, vol. 4, pp. 2750–2753 (2003)

Chroust, S.G., Vincze, M.: Fusion of vision and inertial data for motion and structure estimation. J. Robot. Syst. 21(2), 73–83 (2004)

Helmick, D., Cheng, Y., Clouse, D., Matthies, L., Roumeliotis, S.: Path following using visual odometry for a mars rover in high-slip environments. In: 2004 IEEE Aerospace Conference, 2004. Proceedings, vol. 2, pp. 772–789 (2004)

Stratmann, I., Solda, E.: Omnidirectional vision and inertial clues for robot navigation. J. Robot. Syst. 21(1), 33–39 (2004)

Niwa, S., Masuda, T., Sezaki, Y.: Kalman filter with time-variable gain for a multisensor fusion system. In: 1999 IEEE/SICE/RSJ International Conference on Multisensor Fusion and Integration for Intelligent Systems. MFI ’99. Proceedings, pp. 56–61 (1999)

Waldmann, J.: Line-of-sight rate estimation and linearizing control of an imaging seeker in a tactical missile guided by proportional navigation. IEEE Trans. Control Syst. Technol. 10(4), 556–567 (2002)

Goldbeck, J., Huertgen, B., Ernst, S., Kelch, L.: Lane following combining vision and DGPS. Image Vis. Comput. 18(5), 425–433 (2000)

Eino, J., Araki, M., Takiguchi, J., Hashizume, T.: Development of a forward-hemispherical vision sensor for acquisition of a panoramic integration map. In: IEEE International Conference on Robotics and Biomimetics, 2004. ROBIO 2004, pp. 76–81 (2004)

Ribo, M., Brandner, M., Pinz, A.: A flexible software architecture for hybrid tracking. J. Robot. Syst. 21(2), 53–62 (2004)

Zaoui, M., Wormell, D., Altshuler, Y., Foxlin, E., McIntyre, J.: A 6 d.o.f. opto-inertial tracker for virtual reality experiments in microgravity. Acta Astronaut. 49, 451–462 (2001)

Helmick, D., Roumeliotis, S., McHenry, M., Matthies, L.: Multi-sensor, high speed autonomous stair climbing. In: IEEE/RSJ International Conference on Intelligent Robots and Systems, 2002, vol. 1, pp. 733–742 (2002)

Robert Clark, R., Lin, M.H., Taylor, C.J.: 3D environment capture from monocular video and inertial data. In: Proceedings of SPIE, The International Society for Optical Engineering (2006)

Kelly, J., Sukhatme, G.: Fast relative pose calibration for visual and inertial sensors. In: Khatib, O., Kumar, V., Pappas, G. (eds.) Experimental Robotics, vol. 54, pp. 515–524. Springer Berlin/Heidelberg (2009)

Lobo, J.: InerVis IMU Camera Calibration Toolbox for Matlab. http://www2.deec.uc.pt/~jlobo/InerVis_WebIndex/InerVis_Toolbox.html (2008)

Klein, G.: Source Code of PTAM (Parallel Tracking and Mapping). http://www.robots.ox.ac.uk/~gk/PTAM/

Additional information

The research leading to these results has received funding from the European Community’s Seventh Framework Programme (FP7/2007-2013) under grant agreement no. 231855 (sFly). Gabriel Nützi is currently a Master’s student at ETH Zurich. Stephan Weiss is currently a PhD student at ETH Zurich. Davide Scaramuzza is currently a senior researcher and team leader at ETH Zurich. Roland Siegwart is a full professor at ETH Zurich and head of the Autonomous Systems Lab.

About this article

Cite this article

Nützi, G., Weiss, S., Scaramuzza, D. et al. Fusion of IMU and Vision for Absolute Scale Estimation in Monocular SLAM. J Intell Robot Syst 61, 287–299 (2011). https://doi.org/10.1007/s10846-010-9490-z