Abstract

In the past two decades, individual explanations of scientific misconduct (‘bad apples’) have increasingly given way to systemic explanations (‘bad systems’). Where did this interest in systemic factors (publication pressure, competition for research funding) come from? Given that research ethicists often present their interventions as responses to scientific misconduct, this article tests the hypothesis that these systemic explanations were triggered by high-visibility cases of scientific norm violation. It does so by examining why Dutch scientists in 2011 explained Diederik Stapel’s grand-scale data fabrication largely in systemic terms, whereas only fifteen years earlier, in the René Diekstra affair (1996), such explanations had been close to absent. Drawing on a wealth of historical sources, the article suggests that cases like Stapel’s as such do not explain why early 21st-century commentators exchanged individual explanations for systemic ones. Only against the background of an existing discourse of criticism of the science system, developed in the 1990s and 2000s in response to rapidly increasing competition for research funding, could the Stapel affair achieve notoriety as an example of how systemic factors provoke bad conduct.

Introduction

When textbooks in research ethics try to situate their field in history, they typically attribute its emergence, situated in the aftermath of World War II, to a widely shared sense that horrors like human experimentation in the German concentration camps should be prevented from happening ever again. Accordingly, they present the Nuremberg code on human experimentation, drafted in 1947, as an immediate response to atrocities conducted by Nazi doctors in Auschwitz (Flynn and Goldsmith 2013; Boddington 2012; Fisher and Anushku 2008; Wassenaar 2006). Along similar lines, they tell their readers that the Office for Protection from Research Risks was founded in 1972 in response to the Tuskegee syphilis experiment (Emanuel et al. 2008), thereby suggesting that major cases of misconduct have served as foundational events in the history of research ethics. Among research ethicists, this is a broadly familiar argument, not only in stories about the origins and development of the field, but also in advocacy for better regulation, codification, or cooperation. It is not uncommon, for instance, to find authors bolstering their case for codification or implementation of research integrity standards with examples like Woo-Suk Hwang, the fraudulent South Korean stem cell researcher (Resnik 2009; Bouter 2020). Likewise, the Inter Academy Partnership cites the ‘emergence of high-profile cases’ like Hwang’s as clear evidence of a need for global codification of ethical standards (Inter Academies 2012). What these examples illustrate is that spectacular cases of misconduct play a significant role in the field: they are believed to serve as triggers for research ethical initiatives and are, consequently, invoked rhetorically as reasons for investing in integrity.

To what extent, then, can a new and important development like the emergence of systemic explanations of scientific misconduct also be attributed to high-visibility cases of norm violation? Sovacool (2008) distinguishes three types of explanations for scientific misconduct: ‘individual impurity’ (bad apples), ‘institutional failure’ (bad barrels), and ‘structural crisis’ (bad science systems). Whereas the first type focuses on individual scientists and their personalities, the two other ones are systemic: they point to supra-individual factors such as publication pressure and competitive research funding. In their study on the ‘organizational climate’ of science, Haven et al. (2019) argue that after an initial focus on individual explanations, ‘structural and institutional climates’ are now widely regarded as the most decisive explanatory factors. This shift of emphasis is confirmed by Aubert Bonn and Pinxten (2019), who show that between 2005 and 2015, institutional and systemic explanations (bad barrels, bad systems) increasingly began to dominate the literature on research integrity. In the recent past, therefore, a broad consensus seems to have emerged that scientific misconduct is caused primarily by factors at the level of what an influential Nature article called the ‘wider research environment, including institutional and systemic structures’ (Martinson et al. 2005).

Where does this preference for systemic explanations come from? What were the factors that contributed to its rise to dominance in the early twenty-first century? In this article, we will test the hypothesis that systemic explanations of scientific misconduct were triggered by highly publicized cases of norm violation. There are two reasons for zooming in on this hypothesis. One reason is the significance that research ethicists attribute to cases where things went wrong. In line with the examples evoked in our opening paragraph, it is quite conceivable that research ethicists and other commentators – including working scientists and representatives of scientific institutions – recognized the importance of ‘bad systems’ by studying fraud cases like the Woo-Suk Hwang scandal (2005). More importantly, in the second place, systemic explanations achieved prominence in the first two decades of the twenty-first century, when the scientific community was plagued by high-profile cases of misconduct, which besides the case of Hwang (2005) included those of Jan Hendrik Schön (2002), the German physicist who falsified data for his semiconductor research, and the American professor of anesthesiology, Scott Reuben, who fabricated data for his clinical trials on pain management and eventually was sentenced to prison in 2010. Were these phenomena related in the sense that cases of norm violation triggered systemic explanations of scientific misconduct?

We will answer this question on the basis of Dutch source material. For our purposes, the Netherlands is an interesting case because of a notable difference between two highly publicized cases of fraud in this country. When in 1996 the Dutch psychologist René Diekstra was found guilty of plagiarism, hardly any commentator from the scientific community drew attention to systemic factors. Fifteen years later, however, Diederik Stapel’s admission of large-scale fabrication and falsification of data provoked a debate to which struggles for funding, publication pressure, and the competitive science system in general were central. How can this shift in focus be explained? Did Stapel’s fraud make commentators aware of how competitive research environments can affect scientific practice in unintended ways? Or are there other, perhaps more important causal factors that have to be taken into account? Our conclusion is that cases of norm violation as such do not explain why early 21st-century commentators exchanged individual explanations of scientific misconduct for systemic ones. Only against the background of an existing discourse of criticism of the science system could the case of Stapel achieve notoriety as an example of how systemic factors provoke bad conduct.

Definitions and Methods

This is a study of how Dutch scientists from 1990 to 2018 perceived scientific misconduct and its possible causes. Given that there was, and is, no unanimity about what misconduct exactly entails – to what extent, for instance, does it include ‘questionable research practices’ or ‘sloppy science’ (Bouter et al. 2016; Martinson et al. 2005)? – this term must be handled with some care. Consistent with historians’ practice, this article tries to do justice to the historical record by treating scientific misconduct as an actors’ category: a term that, in the period under investigation, could mean different things to different people (even though most agreed that it covered the cardinal sins of ‘FFP’: fabrication, falsification, and plagiarism). Accordingly, in assessing the question of what impact scandals such as those caused by Diekstra and Stapel had on scientists’ perceptions of scientific misconduct, the article tries to avoid the complication of using an actors’ category for analytical purposes. It does so by grouping cases like Diekstra’s and Stapel’s under the more generic term ‘norm violation’.

As a study of how scientists perceived scientific misconduct, this article resembles studies like Anderson et al. (2007), which draw on focus group research to examine scientists’ perceptions of how and why science can go wrong. Unlike focus group studies, however, which by definition deal with present-day perceptions, this article is a historical study. In order to find out to what extent high-visibility cases of norm violation served as triggers for systemic explanations of scientific misconduct, it examines how Dutch scientists since the early 1990s have perceived misconduct and its causes. To that end, the article employs a broad array of historical sources.

The most important of these sources are articles published in three Dutch newspapers: NRC Handelsblad, De Volkskrant, and Trouw. These are quality papers that reported extensively on science in its various aspects, not only through news coverage, but also in the form of columns, interviews, and opinion pieces. The sources selected for our analysis are pieces in which voices from the scientific community made themselves heard – either because scientists were quoted or because the articles were written by scientists themselves. Relevant newspaper articles were identified through keyword searches in the LexisNexis database for the period 1990–2018 (footnote 1). We started with a general search for articles on scientific misconduct (e.g., ‘science + fraud’ [‘wetenschap + fraude’] and ‘sloppy science’ [used in English as well as Dutch: ‘slodderwetenschap’]), after which we narrowed down our results with the additional keywords ‘competition’ (‘competitie’), ‘publication pressure’ (‘publicatiedruk’), ‘NWO’ (the Netherlands Organization for Scientific Research), ‘Vernieuwingsimpuls’ (the name of a large competitive funding scheme introduced by NWO in the early 2000s), and, of course, ‘René Diekstra’ and ‘Diederik Stapel’. In total, this yielded a set of 131 newspaper articles. Close reading of this material revealed that 46 of the 131 articles offered explanations for scientific misconduct. In these 46 articles, 43 Dutch scientists shared their views on what Diekstra or Stapel had done or, more generally, on ethical risks inherent to doing research. Six labels, corresponding to six distinct types of explanations, were created for the purpose of analyzing the perceived causal factors of norm violation in some depth:

1. Personality traits (e.g., ambition, megalomania)

2. Publication pressure

3. Grant competition pressure

4. Third-party funding (e.g., funders’ pressure not to publish certain findings)

5. Research culture in the field (e.g., tolerance for sloppiness)

6. Other explanations (e.g., popular writing allowing for reuse of texts) (footnote 2)

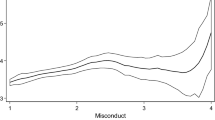

Whereas the first category includes personal explanations of scientific norm violation (i.e., explanations that attribute misconduct to personality traits), the labels 2, 3, 4, and 5 refer to structural explanations (i.e., explanations that identify structural features of the research culture or the science system as causes of norm violation). Table 1 shows how often these six types of explanations were invoked in the decades covered in this study, either in response to Diekstra or Stapel or in more general reflections on scientific research in the Netherlands.
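The coding scheme described above can be sketched as a small lookup table. This is only an illustration in Python, not the procedure the authors used: the names `Kind`, `LABELS`, `kind_of`, and `structural_share` are hypothetical, and treating label 6 as a separate "other" group is an assumption, since the text classifies only label 1 (personal) and labels 2 to 5 (structural).

```python
from enum import Enum


class Kind(Enum):
    PERSONAL = "personal"
    STRUCTURAL = "structural"
    OTHER = "other"  # assumption: the text does not classify label 6


# Label numbers and names follow the list in the text.
LABELS = {
    1: ("Personality traits", Kind.PERSONAL),
    2: ("Publication pressure", Kind.STRUCTURAL),
    3: ("Grant competition pressure", Kind.STRUCTURAL),
    4: ("Third-party funding", Kind.STRUCTURAL),
    5: ("Research culture in the field", Kind.STRUCTURAL),
    6: ("Other explanations", Kind.OTHER),
}


def kind_of(label: int) -> Kind:
    """Return the explanation type (personal, structural, other) of a label."""
    return LABELS[label][1]


def structural_share(coded: list[int]) -> float:
    """Fraction of coded explanations that carry a structural label (2-5)."""
    if not coded:
        raise ValueError("no coded explanations")
    structural = sum(1 for label in coded if kind_of(label) is Kind.STRUCTURAL)
    return structural / len(coded)


# Hypothetical coding of five explanations (NOT data from Table 1).
example = [1, 2, 3, 2, 6]
print(structural_share(example))  # three of the five labels are structural
```

Keeping label 6 outside both groups means that "other" explanations count toward the total but not toward the structural share. That is one possible reading of how the percentages in Table 1 were computed.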

In interpreting these findings, we have to take into account that scientists also employed other genres than newspaper articles to articulate their views on norm violation and its possible causes. To that end, we also consulted a broad range of other relevant source material, such as reports issued by investigation committees at several Dutch universities and memoirs such as Diekstra’s O Nederland, vernederland (1998) and Stapel’s Ontsporing (2012). If appropriate, we also refer to sources from outside the Netherlands, such as widely-cited Nature articles on structural features of the academic system that might contribute to scientific norm violation (e.g., Martinson et al. 2005; Giles 2007). In combining these various types of sources, we draw on the best available material for examining how Dutch scientists perceived scientific misconduct and its causes.

Our study design has some obvious limitations. First of all, its focus on the Netherlands does not allow its findings to be immediately extrapolated to other countries. Secondly, as historians, we work with existing source material. This prevents us from creating a sample that is representative in terms of gender, age, or disciplinary affiliation. Although the newspapers consulted for this article provided space to a wide variety of voices, it is likely that well-established scientists were more often approached by journalists than their junior colleagues. Consequently, senior figures are likely to be overrepresented in the source material. For the purposes of this article, however, this is not a major disadvantage. What matters most is that newspaper articles closely followed the news and therefore offer a unique glimpse of scientists’ immediate responses to headline cases like Diekstra’s and Stapel’s, to which we will now turn.

Plagiarism in the 1990s: René Diekstra

In August 1996, the Dutch weekly Vrij Nederland shocked the scientific community by exposing the Leiden professor René Diekstra as a plagiarist (Verhey and Danhof 1996a). At the time, the internationally renowned psychologist, specialized in depression and suicide, held a chair in clinical psychology. He was a well-known media personality, who regularly appeared on television and had published a number of popular, Dutch-language books. In the latest of these – a book accompanying a new television series – Diekstra turned out to have literally translated eight pages from How to Heal Depression (1995) by the American psychologists Harold Bloomfield and Peter McWilliams. In a first response, Diekstra declared that the lack of proper referencing in these passages had been the result of misunderstanding between him and his publisher. The Leiden University board responded by installing a committee charged with the task of writing a code for referencing in popular scientific work (Van Kolfschooten 2012; Verhey and Danhof 1996a).

However, the eight pages from Bloomfield and McWilliams were only the beginning. Week after week, new instances of plagiarism were discovered. No fewer than 54 pages from Diekstra’s Het onderste boven (1996) had been copied from Caring for the Mind (1995) by Dianne and Robert Hales. In addition, 26 pages from Als het leven pijn doet (1990) turned out to originate in Sol Gordon’s When Living Hurts (1985). In most cases, Diekstra had mentioned these books and their authors, but without properly referencing the long passages that he had literally translated. Notably, Diekstra appeared to have copied even the foreword from Caring for the Mind, thereby presenting the personal experiences that Dianne and Robert Hales described in these pages as his own (Van Kolfschooten 2012; Verhey and Danhof 1996b). In response to these new accusations, Leiden University changed its course and began to investigate Diekstra’s work in depth.

What followed was an affair that reached widely beyond academia. Diekstra became the subject of wide media coverage, even to the extent that his behavior became a standard example for authors writing about plagiarism, also in non-related fields like architecture (Gerrits 1996). In September 1996, an important new accusation was added: not only in his popularizing work, but also in a scientific article, Diekstra had relied extensively on the work of others. This led the investigating committee to conclude, in November 1996, that Diekstra could not maintain his professorial chair. In December, Diekstra resigned from his position (Van Kolfschooten 2012).

Right from the start, Dutch scientists responded in different ways. A significant number of psychologists condemned Diekstra’s publication habits. They called it ‘shameless plagiarism’, ‘very wrong and incredibly stupid’ (Verhey and Danhof 1996a). Diekstra also received support, though. Students organized themselves in a committee named CARE (‘Comité Achter René tot het Einde’, which translates as ‘Committee Supporting René Until the End’). Colleagues tried to dismiss the accusations as ‘slander’ or ‘jealousy’, or suggested that Diekstra’s behavior was nothing but ‘sloppiness’ caused by ‘cryptomnesia’ or ‘haste’ (N. N. 1996; Verhey and Danhof 1996a). Some of Diekstra’s defenders even questioned the ethical standards invoked by those who dissociated themselves from Diekstra, for instance by trying to instigate a debate on ‘what plagiarism is’ (Verhey and Danhof 1996a). Their most important line of defence was that plagiarism in popular publications should not count as such and that Diekstra’s plagiarism in an academic publication could not be proven (Dijkhuis et al. 1997).

Both critics and defenders faced the question of how to explain what Diekstra had done. Interestingly, most of the explanations offered in newspaper articles focused on the personal level, that is, on Diekstra’s own motives for misbehaving. Using language of sin and vice, some commentators argued that Diekstra had fallen prey to the vice of avarice (Ephimenco 1996) or had been unable to resist the temptation of ‘vanity’ – a temptation that had driven him right ‘into the arms of the monster called plagiarism’ (‘t Hart 1996). Indeed, as shown in Table 1, no less than 67% of the explanations offered of Diekstra’s norm violation invoked his personality. In response to this, several psychologists and psychiatrists protested that ‘psychologizing’ about Diekstra’s personality was not scientifically warranted (Bockma and Thomas 1996; Boevink 1996). In the words of the chair of the investigating committee at Leiden University, the Groningen psychology professor Willem Hofstee: ‘I cannot pass a good psychological judgement on Diekstra. (…) A psychologist has learned to be very careful with that’ (Kreulen 1996). Implicitly, however, this response confirmed that an explanation of Diekstra’s norm violation was most likely to be found at a personal level.

Diekstra himself seemed to agree with this diagnosis. In his 1998 retrospective on the affair, he psychologized at length about himself and his critics. Published as O, Nederland, vernederland – which translates as O, Holland, humiliation land – Diekstra’s book presented itself as a case study in the ‘psychology of fall and resurrection’. The author identified possible causes for his conduct in his childhood (having grown up in a large family without having received much attention from his parents) as well as in his psychological makeup (a desire to be creative and original) (Diekstra 1998).

Non-personal factors contributing to scientific norm violation were invoked only in reflections on the science system as a whole – not in direct relation to Diekstra (see Table 1). Most notably, in an essay solicited by NRC Handelsblad, Pieter Drenth, a psychology professor at the Free University in Amsterdam and former president of the Royal Netherlands Academy of Arts and Sciences (KNAW), identified the changing science system as a possible cause of increases in scientific norm violation. Growing publication and performance pressure, said Drenth (carefully avoiding any mention of Diekstra’s name), could ‘seduce’ scientists into irresponsible conduct. By way of remedy, Drenth proposed scientific integrity courses for junior researchers, standardized procedures for experiments and data analysis, and a reduction of publication pressure. Still, even Drenth agreed that ‘scientific conscience’ and a ‘sense of responsibility’ – the character of the researcher – were most important of all (Drenth 1996).

Drenth’s analysis was exceptional. As shown in Table 1, no other Dutch commentator in our sample of texts from the 1990s contemplated the possibility of competitive research funding contributing to scientific misconduct. Most commentators interpreted Diekstra’s behavior in terms of what Sovacool (2008) calls ‘individual impurity’. This, however, would change in the years to come.

Data Fabrication in the 2010s: Diederik Stapel

In September 2011, Tilburg University issued a press release stating that Diederik Stapel, a professor of social psychology and dean of the School of Social and Behavioral Sciences, had committed large-scale fabrication and falsification of research data. At the time, Stapel had reached the zenith of an impressive career. A recipient of several awards and prestigious research grants from NWO and KNAW, he had held positions in Amsterdam and Groningen before coming to Tilburg in 2006. At this university, however, three young researchers had become suspicious of Stapel’s research data, simply because they had been too good to be true. After they had exposed the misconduct and Stapel had confessed to it, three investigating committees were installed: one in Tilburg, one in Groningen, and one in Amsterdam. The Tilburg committee responsible for coordinating this multiple-site investigation was led by the psycholinguist and former KNAW president Pim Levelt. Its first results were published in October 2011, with a final report being issued in November 2012. Among the 143 articles examined, the committee identified 55 as indisputably fraudulent and another ten as containing evidence of fraud. This propelled Stapel into a worldwide top-ten of authors with the highest number of fraudulent publications (Abma 2013; Callaway 2011). Stapel was fired from Tilburg University, voluntarily returned his PhD degree to the University of Amsterdam, and in 2013 reached a settlement with the public prosecutor to do 120 hours of community service (Abma 2013; Kreulen 2013).

Unsurprisingly, in the two years following Tilburg University’s press release, the Stapel case received a lot of media coverage, which peaked around the publication of the committees’ first results and final report. Unlike Diekstra, Stapel hardly received any support: his behavior was condemned by colleagues, commentators, and the general public alike. However, scientists differed with regard to how Stapel’s misconduct had to be explained. Some saw Stapel as a ‘rotten apple’ more than as evidence of broader problems (Borst 2012). Microbiologist Rosanne Hertzberger, for instance, used her column in NRC Handelsblad to explain that in the messy realities of day-to-day work, scientific experiments are not always conducted according to textbook rules. Most scientists, Hertzberger (2012) assured her readers, ‘are looking for truth’. Others argued that the very fact that Stapel had been caught was evidence of a well-functioning science system, with peer review procedures being effective in exposing deviance from the rules (Abma 2013). Likewise, the chairman of NWO, physicist Jos Engelen, claimed that ‘the system works’ and that NWO only funded ‘excellent researchers, and excellent researchers are one hundred percent reliable’. This suggested that Stapel was an exception and that his exposure was proof of a properly functioning system (Berkhout and Rosenberg 2012a). Hofstee, who had chaired the investigating committee for Diekstra fifteen years earlier, responded rather similarly. Isolating the case of Stapel, he explicitly rejected the possibility that the science system provided incentives for irresponsible behavior. He said that he did not believe ‘one bit’ that a systemic factor like ‘publication pressure’ could be a cause of scientific misconduct. Likewise, Hofstee questioned calls for more extensive control mechanisms on the grounds that misconduct is possible in any system, often ‘even without colleagues noticing it’ (Kreulen 2011).

The investigation committee led by Pim Levelt, however, arrived at different conclusions. When it issued a series of recommendations for decreasing the risks of fraud, it did so because the committee perceived a research culture of ‘sloppy science’ in the field of social psychology as the main cause of Stapel’s fraudulent behavior (Commissie Levelt et al. 2012). In a newspaper interview, Levelt repeated this message, while adding a remark reminiscent of Hofstee’s words fifteen years earlier. Levelt said he did not want to explain why Stapel had committed his fraud, because it was ‘not our task to look into Mr. Stapel’s soul’ (Kreulen 2012). This comment suggested that causes for fraud could also be found in the fraudster’s personality. Along similar lines, other commentators also drew attention to Stapel’s personal motives, just as they had done in response to Diekstra. Among other things, they argued that ‘haughtiness’ and ‘vanity’ were character traits encouraging irresponsible conduct (Funnekotter and Berkhout 2011). Likewise, reiterating his analysis of fifteen years before, Pieter Drenth identified ‘vanity’ and ‘ambition’ as likely causes of breaches of integrity (Drenth 2011).

However, like the investigating committee, Drenth also contemplated a different type of explanation. Commenting on the ‘current climate’ of science, he noted that scientists increasingly experienced the dilemma of ‘publishing or perishing’ (Drenth 2011). Physicist Robert Dijkgraaf, serving president of the KNAW, likewise identified increasing competition as a relevant factor in the Stapel case (Berkhout 2011). Instead of treating Stapel as a bad apple, Drenth and Dijkgraaf thus regarded the science system itself as a factor that had to be taken into account. In an attempt to minimize his personal culpability, Stapel himself also preferred structural explanations over personal ones. In a statement issued in October 2011, shortly after the preliminary report of the investigating committee, he declared: ‘In modern science, the level of ambition is very high and the competition for scarce resources is immense. Over the past few years, that pressure has become too much for me’ (Abma 2013). A year later, Stapel published his autobiographical book Derailment (Ontsporing), in which competitiveness in academia also figured prominently (Stapel 2012).

If we try to quantify the relative importance of these various types of explanations, it turns out that systemic explanations (labels 2–5) were increasingly favored over personal ones. In articles responding specifically to the Stapel affair, 9 out of 14 explanations pointed to structural factors – which amounts to 64%, compared to zero in the case of Diekstra (see Table 1). If we include more general reflections on scientific norm violation published in the 2010s, this percentage even rises to 83% (34 out of 41 explanations). Notably, many articles identified more than one structural factor: especially publication pressure and competition for research grants were often mentioned in one breath. The Leiden bio-ethicist Ellen ter Gast, for example, argued that quantitative output measures played a key role in competition for ‘prizes, grants and professorial chairs’. In her perception, this greatly enhanced publication pressure, which in turn increased the ‘pressure to commit fraud’ (Ter Gast 2012). Similar arguments about the detrimental effects of a scientific ‘rat race’ (Huibers 2011) were put forward by colleagues with disciplinary backgrounds as varied as physics and history (De Regt 2011; Van der Heijden 2014; Von der Dunk 2011). More cautious commentators postulated a causal relation between competition and ‘questionable research practices’ (QRPs) instead of FFP. Typical was the econometrician Mirjam van Praag, who argued that Stapel’s misconduct, though exceptional as such, illustrated how easily publication pressure could lead to ‘salami-slicing’ or unwarranted attribution of authorship (Van Praag 2011). Two psychologists, likewise, described the Dutch science system as ‘possibly the most competitive culture of science in the world’, especially in terms of grant acceptance rates and the kind of publication culture that this helped create. Their worry that such a competitive climate might encourage haste and sloppiness (Borsboom and Van den Berg 2012) was shared by many others (Smulders and Tijdink 2013; Van der Heijden 2012; Venema 2011).
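The percentages quoted in this paragraph follow directly from the counts. A minimal Python sketch of the arithmetic is given here for readers who want to check it. The helper name `percent` is hypothetical, and rounding to whole percentages is an assumption about how the figures were reported.

```python
def percent(part: int, total: int) -> int:
    """Share of `part` in `total`, rounded to a whole percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * part / total)


# Counts as stated in the text: structural explanations out of all explanations.
stapel_only = percent(9, 14)     # articles responding specifically to Stapel
decade_2010s = percent(34, 41)   # including general reflections from the 2010s

print(stapel_only, decade_2010s)  # 64 83
```

Both results match the figures given in the text (64% and 83%).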

What this shows is that Dutch scientists responded differently to Stapel than they had done to Diekstra. Instead of emphasizing personal factors, they began to consider the possibility of what Sovacool (2008) calls a ‘structural crisis’ in the science system. This was confirmed by a survey conducted in the early 2010s by science journalist Frank van Kolfschooten. When trying to find out how many cases of norm violation had taken place in the Netherlands, Van Kolfschooten heard many scientists say that publication pressure and excessive competition for money and status were likely to foster unethical behavior (Van Kolfschooten 2012).

Six Explanatory Hypotheses

The question then is: How can this change in perceptions between the 1990s and the 2010s be explained? We will consider six potential answers. A first explanation of the different responses elicited by Diekstra and Stapel is that the two men represented different subfields. Although both were psychologists, Stapel worked in social psychology, whereas Diekstra was a clinical psychologist. The committee investigating Stapel identified this as a relevant difference by blaming specifically the research culture of Dutch social psychology (Keulemans 2012). However, cultural differences between psychological subfields do not sufficiently explain why Stapel’s critics were much more inclined to offer systemic explanations than Diekstra’s critics fifteen years earlier. Apart from the fact that fraud was not more prevalent in social psychology than in other branches of psychology (Stroebe and Hewstone 2013; see also Gibson 2012), the structural factors that commentators identified as relevant explanatory variables affected scientific work across the academic spectrum. If publication pressure and competition for research money were held responsible for inducing scientific norm violation, then scientists experiencing similar pressures in other fields would run an equal risk of violating standards of integrity.

Secondly, one might argue that Stapel’s norm violation was different from Diekstra’s. Although plagiarism, fabrication, and falsification of data were widely seen as the ‘big three’, some Dutch scientists regarded plagiarism as the lesser of these, because it did not create false knowledge (Lagendijk 1996; Van Kolfschooten 2012; Van Oostendorp 2014). This may help explain why colleagues sometimes downplayed the gravity of Diekstra’s behavior, but never did so in the case of Stapel. What this second explanation overlooks, however, is that quite a few commentators in the early 2010s also complained about publication pressure and grant competition pressure inducing questionable research practices such as sloppiness and selective reporting (Van Praag 2011; Borsboom and Van den Berg 2012; Smulders and Tijdink 2013; Van der Heijden 2012; Venema 2011). Even if these forms of behavior did not qualify as fraud, they were unambiguously perceived as violating scientific norms. The evidence therefore does not permit the conclusion that QRPs were more broadly tolerated than FFP.

Perhaps more to the point is a third explanatory hypothesis: Diekstra’s and Stapel’s different ‘funding histories’. Although both psychologists were prolific authors – both published hundreds of articles with dozens of co-authors – Stapel was more successful than Diekstra in terms of grant acquisition. As mentioned earlier, he received a series of major grants, including a prestigious ‘Pionier’ grant from NWO. The fact that Diekstra’s funding history looked less impressive cannot, however, be considered a relevant explanatory variable, given that funding opportunities up until the 1990s had been more limited than in Stapel’s time. Indeed, what Diekstra’s and Stapel’s funding histories reflect is not a different success rate, but a rather thorough transformation of the Dutch scientific landscape between 1996 and 2011. Although NWO had been a key player since the 1970s – serving as the Dutch government’s principal means for realizing its increasingly ambitious science policy, especially since the late 1980s (Koier et al. 2016) – competition for grant money had hardly existed prior to the 1990s. In the third quarter of the twentieth century, NWO grant schemes had had acceptance rates of close to 100%. However, between 1990 and 2017, NWO’s annual budget rose from circa 75 million to about 873 million euros, taking a significant part of the science budget at the expense of the share of the universities. In the same period, acceptance rates at NWO dropped from almost 100% to about 30%, or even much lower for individual grants of the kind that Stapel secured (Versleijen 2007; NWO 2018).

Important for our purposes is that these changes were accompanied by a chorus of critical voices. Already in the 1980s, commentators had predicted that implementation of competitive research schemes would cause scientists to favor ‘safe’ projects over more ‘adventurous’ ones (Ellemers 1982). This charge of ‘conservatism’ became quite common in the years between 1996 and 2011 (Kortlandt 2007; Nooteboom 2007; cf. also Van Calmthout 2016). In addition, competitive research funding was held responsible for the rise of a new scientific persona – ‘a new type of man: the networker or fundraiser’, as a Leiden law scholar put it in a critique of an increasingly market-oriented university system (Cliteur 2000). In a variation on this argument, scientists quipped grimly that Charles Darwin and Johan Huizinga, the famous Dutch historian, would never have been able to meet NWO’s requirements (Koelewijn and De Vrieze 2009; Wesseling 1996). Also, critics feared that competitive research funding would compromise scientists’ freedom. As a Dutch astronomer (Van Calmthout 1996) asked rhetorically: Had the government ever told painters to make sculptures instead of paintings? Last but not least, critics disliked the bureaucracy involved in evaluating proposals and the inefficiency of large numbers of scientists writing unfunded grant proposals (Koelewijn and De Vrieze 2009; Lakens 2013; Radder 2010). Although most of these criticisms were already familiar by 2007, the year in which the Dutch government increased NWO’s annual budget by 100 million euros at the expense of the universities’ basic funding, they intensified in response to this unpopular measure (Bouwens 2007; Kortlandt 2007; Nooteboom 2007; Walters 2007). Scientists who applauded this decision or called for even more competition were a small minority (Van der Ploeg 1996; Borst 2007).

All this suggests a fourth answer to the question why systemic factors were considered more important in the 2010s than had been the case in 1996: perceptions of the science system had already changed significantly in the years between the Diekstra and Stapel affairs. In particular, negative perceptions of competition for money had become much more dominant. Seen from this perspective, the argument that systemic factors were to blame for what Stapel had done wrong in Amsterdam, Groningen, and Tilburg was not exactly new. Even if direct causal connections between a competitive science system and norm violation at Stapel’s scale had not been suggested before – we have not been able to find them prior to 2011 – the idea that ‘over-competition’ can have effects detrimental to the honest pursuit of science was already familiar by the time that Stapel hit the headlines. This suggests that Stapel’s critics drew on, and gave new twists to, an existing repertoire of arguments against overly competitive science systems. For them, the case of Stapel confirmed what critics had been saying already for years, namely, that competition for money does not exactly encourage love of truth or care for accuracy.

Interestingly, this is confirmed by the Dutch scientific code of conduct that appeared in 2004 as well as by an influential working paper on scientific integrity that was issued in 2001 by the KNAW, NWO, and the Association of Universities in the Netherlands (VSNU). Initially, it might seem as if these documents themselves were a potentially relevant factor – a fifth item on our list of possible factors – in bringing about a shift in attention from personal to structural causes of scientific norm violation. Arguably, indeed, the very genre of codes of conduct, valid for all researchers in the country, presupposed that risks of norm violation were not limited to a handful of ‘bad apples’. Content-wise, however, the code focused almost exclusively on personal factors. It articulated its ethical vision in terms of qualities that were, in most cases, historically known as virtues: ‘scrupulousness’, ‘reliability’, ‘transparency’ (controleerbaarheid), ‘impartiality’, and ‘independency’. Even if the code did not explicitly argue that misconduct was a matter of character faults, readers were told that cultivating the right kind of personal qualities was the best possible remedy against scientific norm violation. Although the code of conduct also briefly pointed, without further explanation, to ‘pressure to perform,’ it presented this merely as a vice detrimental to the virtue of scrupulousness (VSNU 2004; see note 3). Likewise, the 2001 working paper on scientific integrity noted that ‘pressure to perform’, though not a bad thing in itself, might disturb the delicate balance between ‘competition’ and ‘cooperation’ when it became too large. However, the authors did not yet perceive this as a structural factor, judging by the fact that they held principal investigators (PIs) responsible for managing the level of competition in their group. Apparently, in their view, PIs were capable of negotiating such pressures, to the point of ensuring that researchers experienced competition only as a ‘positive incentive’ (KNAW, VSNU and NWO 2001). So despite the fact that these documents expressed worries about excessive competition – evidence that strengthens our previous point – they were not yet inclined to favor structural explanations of norm violation over personal ones. If an emerging awareness of the importance of structural factors manifested itself in the early 2000s, this happened in calls for regulation of third-party funding (De Gruyter 2001; Duursma 2004).

Could one add, by way of final hypothesis, that the emergence of systemic explanations in the 2010s reflected a broader, international trend? In the early 2000s, journals like Nature repeatedly drew attention to structural factors responsible for breaches of integrity (e.g., Martinson et al. 2005; Giles 2007). Although it is very likely that Dutch commentators were influenced by voices from abroad, it is remarkable to what degree Stapel’s norm violation was analyzed within national contexts. Although Drenth, who had served as chairman of a working group responsible for writing a European Code of Conduct for Research Integrity, briefly referred to ‘American estimates’ of the prevalence of misconduct (Drenth 2011; see also Drenth 1996), such references were exceptional. Most commentators on the Stapel affair did not even mention cases of misconduct elsewhere, let alone compare them – even though the Hwang Woo-suk scandal, among others, was covered in Dutch media, too (Giesen 2006). Indeed, insofar as Dutch commentators engaged in comparisons, they preferred to invoke Dutch analogies. This became especially clear in the years after the Stapel affair, when several other cases of misconduct at Dutch universities were revealed. Although Don Poldermans (2011), Dirk Smeesters (2012), Mart Bax (2013), and Peter Nijkamp (2014) were accused of very different types of norm violation, varying from self-plagiarism to describing villages that no anthropologist had ever seen, the four men were repeatedly compared to Stapel (Lubsen 2011; Berkhout and Rosenberg 2012b; Van Kolfschooten 2013; Dijstelbloem 2014). Also, in assessing the perceived wrong-doings of these four men, scientists routinely invoked systemic factors of the kind they had also held responsible for Stapel’s fraud (Oosting 2013; Van Tilburg 2014; Abma 2014; Dijstelbloem 2014; Keulemans 2017). So while granting that Dutch perceptions of norm violation were very likely to mirror those of scientists and science journalists elsewhere, the hypothesis that our evidence corroborates most robustly is the fourth one: Dutch scientists attributing Stapel’s misconduct to structural factors like publication and grant acquisition pressure drew and varied upon worries about ‘hyper-competition’ in science that had been prompted by structural changes in the scientific funding system.

Conclusion

What this article, in sum, has shown is that, in the case of the Netherlands, high-publicity cases of norm violation as such cannot be held responsible for the rise of systemic explanations of scientific misconduct. Although our findings show that Dutch commentators began to use this type of explanation only in 2011, in response to the Stapel affair, we have argued that causal connections between a competitive science system, on the one hand, and scientific norm violation, on the other, could be postulated plausibly only against the backdrop of an already broadly shared sense of dissatisfaction with ‘hyper-competition’ for grant money. Even if the Stapel case can be said to have served as a trigger for the argument that an overly competitive science system encourages norm violation, the more important underlying cause that contributed to the emergence of systemic explanations of scientific misconduct was the rise to dominance of grant schemes that scientists widely perceived as having detrimental effects on their work.

These findings raise a couple of important follow-up questions. Given that this study has focused on a country with a highly competitive science system, an obvious question for further research is whether, when, and to what extent systemic explanations of norm violation also emerged in countries where competition for research money was less fierce than in the Netherlands – for instance in Switzerland, where funding rates were substantially higher (up to one-third in 2018, judging by Swiss National Science Foundation 2018 and NWO 2019). Also, it would be worth tracing in more detail what kinds of criticism competitive research funding elicited in various national contexts, with special attention to the question whether causal connections between ‘hyper-competition’ and norm violation were postulated in contexts where spectacular cases of norm violation were not, or not yet, on the horizon. More generally, it would be worthwhile to compare the scientific realm to other domains of society. To what extent, for instance, was fraud in the public sector – in the police or in social housing projects – also attributed to systemic factors (cf. De Koning 2017)? And, if so, were these explanations field-specific or did they vary on broader perceptions of a ‘neoliberal’ restructuring of society? Finally, although these are historical questions, they touch on current policy issues, too. If overly competitive systems are perceived as provoking bad conduct, is there a sense in which reduction of competition might contribute to preventing norm violation, in science or elsewhere?

Notes

The LexisNexis newspaper database was replaced by NexisUni after the search for this article had been completed. NexisUni’s search engine works differently from that of LexisNexis. Although all the articles examined for this study can be found through it, the exact same searches can no longer be performed.

After labeling ten articles independently of each other, the two authors compared their results and settled upon the six labels. The first author subsequently used these in labeling the remaining 36 articles.

It is worth pointing out that the code was revised in the wake of the Stapel affair. In its revised version, the code explained more specifically what counted as scientific misconduct, how students should be trained in scientific integrity, and how complaints about integrity violations could be handled. However, even in its revised form, the code did not elaborate on the causes behind norm violation (Israel and Drenth 2016).

References

Abma, R. (2013). De publicatiefabriek: over de betekenis van de affaire-Stapel. Nijmegen: Vantilt.

Abma, R. (2014). De universiteit als publicatiefabriek. Trouw 28 January.

Anderson, M. S., Ronning, E. A., de Vries, R., & Martinson, B. C. (2007). The perverse effects of competition on scientists’ work and relationships. Science and Engineering Ethics, 13(4), 437–461.

Aubert Bonn, N., & Pinxten, W. (2019). A decade of empirical research on research integrity: what have we (not) looked at? Journal of Empirical Research on Human Research Ethics, 14(4), 338–352.

Berkhout, K. (2011). Niemand controleert de psycholoog. NRC Handelsblad 9 September.

Berkhout, K., & Rosenberg, E. (2012a). ‘Fraude is zaak van universiteiten’. NRC Handelsblad 4 February.

Berkhout, K., & Rosenberg, E. (2012b). Een tweede ‘Stapel’ ontdekt. NRC Handelsblad 25 June.

Bloomfield, H., & McWilliams, P. (1995). How to heal depression. Los Angeles, CA: Prelude Press.

Bockma, H., & Thomas, H. (1996). Diekstra? De Volkskrant 30 August.

Boddington, P. (2012). Ethical challenges in genomics research: a guide to understanding ethics in context. Heidelberg: Springer.

Boevink, W. (1996). ‘Hoogleraar Diekstra voelt zich een uitverkorene voor een edele zaak’. Trouw 4 September.

Borsboom, D., & Van den Berg, H. (2012). Voor grondige en eerlijke wetenschap is geen tijd. Trouw 8 December.

Borst, P. (2007). Wild geraas. NRC Handelsblad 22 September.

Borst, P. (2012). Fraudekul. NRC Handelsblad 8 December.

Bouter, L. (2020). What research institutions can do to foster research integrity. Science and Engineering Ethics, 26, 2363–2369.

Bouter, L. M., Tijdink, J., Axelsen, N., Martinson, B. C., & Ter Riet, G. (2016). Ranking major and minor research misbehaviors: results from a survey among participants of four World Conferences on Research Integrity. Research Integrity and Peer Review, 1, 17.

Bouwens, J. (2007). Besteed onderzoeksgeld nuttiger. Het Financieele Dagblad 31 August.

Callaway, E. (2011). Report finds massive fraud at Dutch universities. Nature, 479, 15.

Cliteur, P. (2000). Academicus moet net zo vrij zijn als rechter. NRC Handelsblad 21 September.

Commissie Levelt, Commissie Noort, Commissie Drenth. (2012). Falende wetenschap: de frauduleuze onderzoekspraktijken van sociaal-psycholoog Diederik Stapel. Tilburg. https://www.rug.nl/about-ug/latest-news/news/archief2012/nieuwsberichten/stapel-eindrapport-ned.pdf. Accessed 23 November 2020.

De Gruyter, R. (2001). ‘Er is veel wangedrag in de wetenschap’; J. de Haes over ‘haar’ gedragscode. NRC Handelsblad 28 July.

De Koning, B. (2017). Vriendjespolitiek: fraude en corruptie in Nederland. Amsterdam: Amsterdam University Press.

De Regt, H. (2011). Fraudeur Stapel kon niet anders. Trouw 17 November.

Diekstra, R. (1990). Als het leven pijn doet. Utrecht: A. W. Bruna.

Diekstra, R. (1996). Het onderste boven: zorg voor de psyche. Utrecht: A. W. Bruna.

Diekstra, R. (1998). O Nederland, vernederland! Psychologie van val en opstand. Utrecht: A. W. Bruna.

Dijkhuis, J., Heuves, W., Hofstede, M., Janssen, M., & Rörsch, A. (1997). Leiden in last: de zaak Diekstra nader bekeken. Rijswijk: Uitgeverij Elmar.

Dijstelbloem, H. (2014). Wetenschap mag niet alleen om publicatie gaan. NRC Handelsblad 9 January.

Drenth, P. (1996). Wetenschap tussen parafrase en bedrog. NRC Handelsblad 7 September.

Drenth, P. (2011). Wetenschap gebaat bij Europese code tegen fraude. Trouw 22 September.

Duursma, M. (2004). Bijklussen mag, als het maar bekend is; Rector UvA wil code voor wetenschappers. NRC Handelsblad 10 January.

Ellemers, J., et al. (1982). Bureaucratisering van het universitair onderzoek. Hollands Maandblad, 422–423, 20–21.

Emanuel, E. J., Grady, C. C., Crouch, R. A., Lie, R. K., Miller, F. G., & Wendler, D. D. (Eds.). (2008). The Oxford textbook of clinical research ethics. Oxford: Oxford University Press.

Ephimenco, S. (1996). De plagiator. Trouw 31 August.

Fisher, C. B., & Anushko, A. E. (2008). Research ethics in social science. In P. Alasuutari, L. Bickman, & J. Brannen (Eds.), The SAGE handbook of social research methods (pp. 95–109). London: SAGE.

Flynn, L. R., & Goldsmith, R. E. (2013). Case studies for ethics in academic research in the social sciences. Los Angeles, CA: SAGE.

Funnekotter, B., & Berkhout, K. (2011). Magna cum fraude. NRC.next 1 November.

Gerrits, R. (1996). Plegen architecten plagiaat? De Volkskrant 8 October.

Gibson, S. (2012). Don’t tar discipline with Stapel brush. Times Higher Education 20 December.

Giesen, P. (2006). Ook Science moet het geloven. De Volkskrant 7 January.

Giles, J. (2007). Breeding cheats. Nature, 445, 242–243.

Gordon, S. (1985). When living hurts. New York: Union of American Hebrew Congregations.

Hales, D., & Hales, R. (1995). Caring for the mind: The comprehensive guide to mental health. New York: Bantam Books.

Haven, T. L., Tijdink, J. K., Martinson, B. C., & Bouter, L. M. (2019). Perceptions of research integrity climate differ between academic ranks and disciplinary fields: results from a survey among academic researchers in Amsterdam. PLoS ONE, 14(1), e0210599.

Hertzberger, R. (2012). Notoire betweters, één doel: hoe het zit. NRC Handelsblad 8 December.

Huibers, M. (2011). Bijna alle wetenschappers hebben wel reputatiestress. De Volkskrant 10 September.

Inter Academies. (2012). Responsible conduct in the global research enterprise: a policy report. Amsterdam: Inter Academy Council.

Israel, M., & Drenth, P. (2016). Research integrity: perspectives from Australia and the Netherlands. In T. Bretag (Ed.), Handbook of academic integrity (pp. 789–808). Singapore: Springer.

Keulemans, M. (2012). ‘Stapels werkwijze was geraffineerd’. De Volkskrant 29 November.

Keulemans, M. (2017). Economen herkauwen eigen onderzoek. De Volkskrant 26 September.

KNAW, VSNU, & NWO. (2001). Notitie wetenschappelijke integriteit: over normen van wetenschappelijk onderzoek en een Landelijk Orgaan voor Wetenschappelijke Integriteit (LOWI). Amsterdam: Koninklijke Nederlandse Akademie van Wetenschappen.

Koelewijn, J., & De Vrieze, J. (2009). Knappe koppen zonder hoofdpijn. De Volkskrant 4 July.

Koier, E., Van der Meulen, B., Horlings, E., & Belder, R. (2016). Chinese borden: financiële stromen en prioriteringsbeleid in het Nederlandse universitaire onderzoek. The Hague: Rathenau Instituut.

Kortlandt, F. (2007). Geesteswetenschappen gebaat bij ‘eigen’ geld. NRC Handelsblad 28 August.

Kreulen, E. (1996). ‘Wat Diekstra heeft gedaan, daar staat je verstand bij stil’. Trouw 13 December.

Kreulen, E. (2011). Wetenschap moet tegenspraak organiseren. Trouw 29 October.

Kreulen, E. (2012). Niemand die zei: hier klopt iets niet. Trouw 29 November.

Kreulen, E. (2013). Stapel schikt met justitie. Trouw 29 June.

Lagendijk, A. (1996). Plagiaat in Leiden. De Volkskrant 24 August.

Lakens, D. (2013). Al die subsidieaanvragen remmen het onderzoek. NRC Handelsblad 25 July.

Lubsen, J. (2011). Moeten Poldermans’ publicaties worden ingetrokken? Alle 500? Medische fraude is veel erger dan die theorietjes van Diederik Stapel. NRC Handelsblad 26 November.

Martinson, B. C., Anderson, M. S., & de Vries, R. (2005). Scientists behaving badly. Nature, 435(7043), 737–738.

N. N. (1996). Leidse psycholoog Diekstra betrapt op plagiaat. De Volkskrant 15 August.

Nooteboom, B. (2007). Plasterk stuurt verkeerd: Geld voor onderzoek moet direct naar universiteiten blijven gaan. NRC Handelsblad 24 August.

NWO. (2018). NWO Jaarverslag 2017. The Hague: Nederlandse Organisatie voor Wetenschappelijk Onderzoek.

NWO. (2019). NWO Jaarverslag 2018. The Hague: Nederlandse Organisatie voor Wetenschappelijk Onderzoek.

Oosting, R. (2013). Wangedrag in wetenschap nog niet passé. Trouw 28 September.

Radder, H. (2010). Hoe de universiteit weer wat geld kan maken. NRC Handelsblad 19 April.

Resnik, D. B. (2009). International standards for research integrity: an idea whose time has come? Accountability in Research, 16(4), 218–228.

Smulders, Y., & Tijdink, J. (2013). ‘Mild bedrog’ groter probleem dan fraude. Trouw 11 October.

Sovacool, B. K. (2008). Exploring scientific misconduct: Isolated individuals, impure institutions, or an inevitable idiom of modern science? Bioethical Inquiry, 5, 271–282.

Stapel, D. (2012). Ontsporing. Amsterdam: Prometheus.

Stroebe, W., & Hewstone, M. (2013). Primed, but not suspect. Times Higher Education 28 February.

Swiss National Science Foundation. (2018). Statistics 2018. http://www.snf.ch/SiteCollectionDocuments/profil/2018/SNF-Profil-2018-2019-en-Statistiken-Vollversion.pdf. Accessed 23 November 2020.

‘t Hart, J. (1996). Van Diekstra zullen we nog meer horen. De Volkskrant 13 December.

Ter Gast, E. (2012). Onderzoek vervalsen: je moet nu bijna wel. NRC Handelsblad 28 April.

Van Calmthout, M. (1996). Wetenschappers in oorlogstijd. De Volkskrant 23 November.

Van Calmthout, M. (2016). ‘Er is geen ruimte meer voor de Isaac Newtons’. De Volkskrant 2 June.

Van Kolfschooten, F. (2012). Ontspoorde wetenschap: over fraude, plagiaat en academische mores. Amsterdam: De Kring.

Van Kolfschooten, F. (2013). Mooie verhalen, helaas verzonnen. NRC Handelsblad 23 September.

Van der Heijden, M. (2012). Ethiekles is een sluitpost. NRC Handelsblad 25 February.

Van der Heijden, M. (2014). Op wetenschap is een lachspiegelmodel van de vrije markt losgelaten. NRC Handelsblad 3 May.

Van der Ploeg, R. (1996). Promovendus is te afhankelijk van hoogleraar. De Volkskrant 15 January.

Van Oostendorp, M. (2014). Zo erg is zelfplagiaat ook weer niet. NRC Handelsblad 9 January.

Van Praag, M. (2011). Na rechter en bankier staat nu de hoogleraar onder druk. NRC Handelsblad 13 September.

Van Tilburg, R. (2014). Waar het om draait in de wetenschap. De Volkskrant 15 January.

Venema, S. (2011). De druk is enorm: publiceer of crepeer. De Volkskrant 9 September.

Verhey, E., & Danhof, E. (1996a). René Diekstra’s plagiaat. Vrij Nederland, 57(33), 10–11.

Verhey, E., & Danhof, E. (1996b). René Diekstra’s bestsellers zijn van anderen. Vrij Nederland, 57(35), 22–23.

Versleijen, A. (Ed.). (2007). Dertig jaar publieke onderzoeksfinanciering in Nederland 1975–2005: historische trends, actuele discussies. The Hague: Rathenau Instituut.

Von der Dunk, H. (2011). Op drift tot scoren volgt bedrog. De Volkskrant 22 September.

VSNU. (2004). De Nederlandse gedragscode wetenschapsbeoefening: principes van goed wetenschappelijk onderwijs en onderzoek. Amsterdam: VSNU.

Walters, D. (2007). Op onderzoek; universiteiten krijgen geld voortaan via onderzoekers. NRC Handelsblad 22 September.

Wassenaar, D. R. (2006). Ethical issues in social science research. In M. Terre Blanche, K. Durrheim, & D. Painter (Eds.), Research in practice: applied methods for the social sciences (2nd ed., pp. 60–79). Cape Town: University of Cape Town Press.

Wesseling, H. (1996). Huizinga als pionier. NRC Handelsblad 1 February.

Acknowledgments

Funding has generously been provided by the Netherlands Organization for Health Research and Development (ZonMw).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huistra, P., Paul, H. Systemic Explanations of Scientific Misconduct: Provoked by Spectacular Cases of Norm Violation?. J Acad Ethics 20, 51–65 (2022). https://doi.org/10.1007/s10805-020-09389-8

DOI: https://doi.org/10.1007/s10805-020-09389-8