Abstract

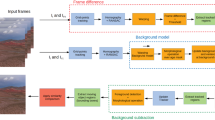

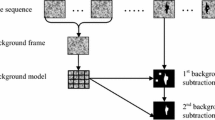

Video surveillance offers various methods for extracting the foreground, and background subtraction is one of the primary methods for automatic video analysis. Sensitivity to meaningful events of interest increases when the effect of background changes is dampened and false alarms are detected, which makes such a model attractive for industrial use. This paper restricts its focus to some of the most common causes of dynamic background changes: swaying tree branches and illumination changes. To overcome this issue in existing systems, we propose a method called twin background modeling. The method maintains two models, a long-term and a short-term background model, which use a statistical method to increase the foreground exposure rate and to reduce the false negative rate. It applies a dimensional transformation from 2D to 1D, which reduces the computation time of the system and increases batch processing. It uses the Manhattan distance to reduce execution time, increase the detection rate and reduce the error rate. The performance of the proposed approach is illustrated on the Change Detection 2014 dataset and compared with other conventional approaches.
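The abstract does not give the update or decision rules, so the following is only a minimal sketch of how the three ingredients named above (a long-term and a short-term model, a 2D-to-1D transformation, and the Manhattan distance) might fit together. The class name `TwinBackgroundModel`, the running-average update, the learning rates, the threshold, the OR-combination of the two models and the background-only update are all assumptions made for illustration, not the authors' formulation.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, ...]


def flatten(frame: Sequence[Sequence[Sequence[float]]]) -> List[Pixel]:
    """2D -> 1D: turn a rows x cols grid of pixels into one flat list."""
    return [tuple(float(c) for c in px) for row in frame for px in row]


def manhattan(a: Sequence[float], b: Sequence[float]) -> float:
    """Manhattan (L1) distance: sum of absolute channel differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


class TwinBackgroundModel:
    """Hypothetical twin model: two running averages with different rates."""

    def __init__(self, first_frame, long_rate: float = 0.01,
                 short_rate: float = 0.25, threshold: float = 60.0):
        # Both models start from the first frame (an assumption).
        self.long_bg = flatten(first_frame)
        self.short_bg = flatten(first_frame)
        self.long_rate = long_rate      # slow adaptation, long-term model
        self.short_rate = short_rate    # fast adaptation, short-term model
        self.threshold = threshold      # assumed fixed decision threshold

    @staticmethod
    def _blend(bg: Pixel, px: Pixel, rate: float) -> Pixel:
        # Exponential running average, applied per channel.
        return tuple((1.0 - rate) * b + rate * p for b, p in zip(bg, px))

    def apply(self, frame) -> List[int]:
        """Return a flat 0/1 foreground mask and update both models."""
        pixels = flatten(frame)
        if len(pixels) != len(self.long_bg):
            raise ValueError("frame size differs from the model size")
        mask = []
        for i, px in enumerate(pixels):
            # Assumption: a pixel is foreground if it deviates from
            # either model, which favours fewer false negatives.
            is_fg = (manhattan(px, self.long_bg[i]) > self.threshold
                     or manhattan(px, self.short_bg[i]) > self.threshold)
            mask.append(1 if is_fg else 0)
            if not is_fg:
                # Assumption: only background pixels update the models.
                self.long_bg[i] = self._blend(self.long_bg[i], px, self.long_rate)
                self.short_bg[i] = self._blend(self.short_bg[i], px, self.short_rate)
        return mask
```

Working on the flattened list means each frame is processed in a single pass over one index, and the Manhattan distance needs only additions and absolute values, with no squares or square roots. Both are consistent with the reduced execution time claimed in the abstract, although the speed-up itself is not measured here.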

Cite this article

Jeeva, S., Sivabalakrishnan, M. Twin background model for foreground detection in video sequence. Cluster Comput 22 (Suppl 5), 11659–11668 (2019). https://doi.org/10.1007/s10586-017-1446-7

DOI: https://doi.org/10.1007/s10586-017-1446-7