Abstract

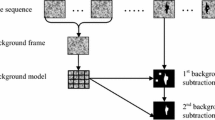

Detecting moving objects is essential for wide-area surveillance systems and security applications. In this paper, we present a moving object detection method based on background modeling and subtraction. Background modeling-based methods build a model from features such as color and texture to represent the background. Background subtraction is challenging because natural environments contain complex background types, and many methods produce numerous false detections in real applications. In this study, we build a background model from each pixel’s age, mean, and variance. Our main contribution is a tracking approach within background subtraction, together with the use of a simple frame difference to set weights during the background subtraction operation. The proposed tracking strategy exploits spatio-temporal features in the foreground mask decision and serves as a verification mechanism for candidate moving object regions. The tracking approach is also applied to the frame difference, and the resulting motion mask supports background model subtraction, especially for slow-moving objects, which cause our background model to fail. The main novelty of the paper is a practical solution to the false detections caused by homography error that does not add a heavy computational cost. We measure the performance of each module to show its impact on the proposed method. Experiments are conducted on two publicly available aerial image datasets, PESMOD and VIVID. The proposed method runs in real time and outperforms existing background modeling-based methods. It achieves a significant reduction in false positives and performs stably on different kinds of images.
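To make the idea of a per-pixel background model with age, mean, and variance more concrete, the following is a minimal single-pixel sketch. It is not the paper's implementation: the update rule (a learning rate of 1/(age + 1)), the age cap `max_age`, the threshold factor `k`, and the variance floor `min_var` are all assumptions chosen for illustration, and the names `PixelModel`, `update`, and `is_foreground` are hypothetical. The tracking stage, the frame-difference weighting, and the homography-based camera motion compensation described in the abstract are not covered here.

```python
from dataclasses import dataclass


@dataclass
class PixelModel:
    """Hypothetical per-pixel background state: running mean, variance, and age."""
    mean: float
    var: float
    age: float = 1.0


def update(model: PixelModel, value: float, max_age: float = 30.0) -> PixelModel:
    """Blend a new intensity into the model (assumed rule, not the paper's).

    The learning rate 1 / (age + 1) shrinks as the pixel gets older, so a
    long-observed background pixel changes slowly. Capping the age keeps
    the model able to adapt to gradual scene changes.
    """
    alpha = 1.0 / (model.age + 1.0)
    diff = value - model.mean
    mean = model.mean + alpha * diff
    var = (1.0 - alpha) * model.var + alpha * diff * diff
    age = min(model.age + 1.0, max_age)
    return PixelModel(mean, var, age)


def is_foreground(model: PixelModel, value: float,
                  k: float = 3.0, min_var: float = 4.0) -> bool:
    """Flag the pixel when it lies more than k standard deviations from the mean.

    min_var is an assumed floor that stops a very stable pixel from
    becoming oversensitive to small noise.
    """
    return (value - model.mean) ** 2 > k * k * max(model.var, min_var)


if __name__ == "__main__":
    # Observe a static background intensity, then test two new values.
    model = PixelModel(mean=100.0, var=20.0)
    for _ in range(20):
        model = update(model, 100.0)
    print(is_foreground(model, 200.0), is_foreground(model, 102.0))
```

In practice the same arithmetic would be applied to whole frames with an array library rather than one pixel at a time; the scalar form is used here only to keep the sketch dependency-free.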

Availability of data and materials

Not applicable.

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

The author confirms responsibility for data collection, analysis and interpretation of results, and manuscript preparation.

Ethics declarations

Conflict of interest

Not applicable.

Ethics approval and consent to participate

Not applicable.

Consent for publication

The author allows the article to be published in the journal.

About this article

Cite this article

Delibaşoğlu, İ. Moving object detection method with motion regions tracking in background subtraction. SIViP 17, 2415–2423 (2023). https://doi.org/10.1007/s11760-022-02458-y

DOI: https://doi.org/10.1007/s11760-022-02458-y