Abstract

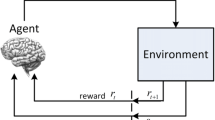

The design complexity of modern UAVs necessitates control laws that are robust and model-free, and that can also adapt on their own to evolving dynamic environments. In this research, an intelligent control architecture is presented that aims to maximize the glide range of an experimental UAV with an unconventional control configuration. To handle the control complexity while keeping the computational cost acceptable, a distinct reinforcement learning (RL) technique, Modified Model Free Dynamic Programming (MMDP), is proposed. The methodology is novel in that an RL-based dynamic programming algorithm has been specifically modified to operate in continuous state and control spaces without knowledge of the underlying UAV model dynamics. A major challenge during the research was the development of a suitable reward function that drives the learning toward the objective of maximizing glide performance. The results and performance characteristics demonstrate the ability of the presented algorithm to adapt dynamically to a changing environment, which makes it suitable for UAV applications. Nonlinear simulations performed under different environmental and initial conditions demonstrated the effectiveness of the proposed methodology over conventional classical approaches.
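The abstract stresses that the reward function was the main design challenge but does not state its form. The sketch below is purely illustrative and is not the authors' reward: it borrows the symbols from the abbreviations list (rew, \(T_{Rew}\), pty, nw, \(X_c\), \(Z_c\), α) and assumes a hypothetical shaping that rewards horizontal distance gained, charges for altitude lost, and applies a fixed penalty when the angle of attack exceeds an assumed limit. The weights, the 15° limit, the penalty value, and the convention that \(Z_c\) is positive upward are all assumptions.

```python
from typing import Sequence, Tuple

# Hypothetical state: (X_c [m], Z_c [m], alpha [deg]).
# ASSUMPTION: Z_c is treated as altitude (positive up), not NED "down".
State = Tuple[float, float, float]


def instantaneous_reward(prev: State, curr: State,
                         nw: Tuple[float, float] = (1.0, 0.5),
                         alpha_limit: float = 15.0,
                         pty: float = 100.0) -> float:
    """Hypothetical rew: reward range gained, charge altitude lost, penalise high alpha.

    All weights (nw), the alpha limit and the penalty (pty) are illustrative
    assumptions, not values from the paper.
    """
    dx = curr[0] - prev[0]          # horizontal distance gained this step
    dz = prev[1] - curr[1]          # altitude lost this step
    rew = nw[0] * dx - nw[1] * dz   # weighted trade-off between range and height
    if abs(curr[2]) > alpha_limit:  # assumed constraint, e.g. to avoid stall
        rew -= pty
    return rew


def total_reward(trajectory: Sequence[State], **kwargs) -> float:
    """Hypothetical T_Rew: sum of instantaneous rewards along one simulated glide."""
    return sum(instantaneous_reward(a, b, **kwargs)
               for a, b in zip(trajectory[:-1], trajectory[1:]))


if __name__ == "__main__":
    glide = [(0.0, 1000.0, 5.0), (100.0, 990.0, 5.0), (200.0, 980.0, 20.0)]
    print(total_reward(glide))  # prints 90.0 (95.0 + 95.0 - 100.0 penalty)
```

In this toy trajectory both steps gain 100 m of range for 10 m of height, but the second step exceeds the assumed α limit, so its penalty dominates. Any real reward for glide-range maximization would need its weights and constraints tuned against the vehicle's simulated dynamics.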


Abbreviations

- \(b\): Wing span (m)
- \(\tilde{c}\): Mean aerodynamic chord (m)
- CAD: Computer-aided design
- CFD: Computational fluid dynamics
- \(C_{M_{x}}\): Rolling moment coefficient
- \(C_{M_{y}}\): Pitching moment coefficient
- \(C_{M_{z}}\): Yawing moment coefficient
- \(C_{F_{x}}\): X-direction force coefficient
- \(C_{F_{y}}\): Y-direction force coefficient
- \(C_{F_{z}}\): Z-direction force coefficient
- DoF: Degree of freedom
- \(g\): Acceleration due to gravity (m/s²)
- \(h\): Altitude (m)
- LCD: Left control deflection
- MMDP: Modified Model Free Dynamic Programming
- ML: Machine learning
- MDP: Markov decision process
- \(m\): Vehicle mass (kg)
- \(P_E\): East-direction position (km)
- \(P_N\): North-direction position (km)
- \(P\): Roll rate (deg/s)
- \(Q\): Pitch rate (deg/s)
- Parm: Parameter
- \(R\): Yaw rate (deg/s)
- RL: Reinforcement learning
- RCD: Right control deflection
- \(S\): Wing area (m²)
- UAV: Unmanned aerial vehicle
- \(V_T\): Free-stream velocity (m/s)
- nw: Numerical weights
- \(X_c\): Current X-position (m)
- \(Z_c\): Current Z-position (m)
- rew: Instantaneous reward
- \(T_{Rew}\): Total reward
- pty: Penalty
- α: Angle of attack (deg)
- β: Sideslip angle (deg)
- γ: Flight path angle (deg)
- ψ: Yaw angle (deg)
- ϕ: Roll angle (deg)
- θ: Pitch angle (deg)
- \(\delta_L\): LCD deflection (deg)
- \(\delta_R\): RCD deflection (deg)
- ρ: Air density (kg/m³)



Cite this article

Din, A.F.U., Akhtar, S., Maqsood, A. et al. Modified model free dynamic programming: an augmented approach for unmanned aerial vehicle. Appl Intell 53, 3048–3068 (2023). https://doi.org/10.1007/s10489-022-03510-7
