Abstract

This paper deals with the estimation of kurtosis on large datasets. It aims at overcoming two frequent limitations in applications: first, Pearson's standardized fourth moment is computed as the only measure of kurtosis; second, the fact that data may be only samples is neglected, so that the opportunity of using suitable inferential tools, such as standard errors and confidence intervals, is discarded. In the paper, some recent indexes of kurtosis are reviewed as alternatives to Pearson’s standardized fourth moment. The asymptotic distribution of their natural estimators is derived and used as a tool to evaluate efficiency and to build confidence intervals. A simulation study is also conducted to provide practical indications about the choice of a suitable index. In conclusion, researchers are warned against the use of the classical Pearson index when the sample size is too small and/or the distribution is skewed and/or heavy-tailed. Specifically, heavy tails can deprive Pearson’s index of any meaning or produce unreliable confidence intervals. However, such limitations can be overcome by resorting to the reviewed alternative indexes, which rely only on low-order moments.



1 Introduction

Undoubtedly, Karl Pearson can be considered the “father” of kurtosis: indeed, he was the first statistician who firmly stated that several phenomena could be represented by means of frequency distributions which significantly differ from the Gaussian [see Pearson (1894)]. To represent such distributions, he introduced the well-known “Pearson’s system,” whose elements depart from normality according to the values taken by their moments. Specifically, the standardized third moment \(\beta _1\) reflects departure from symmetry, while the standardized fourth moment \(\beta _2\) reflects the degree of what Pearson called “flat-toppedness” of the distribution. It was only in Pearson (1905), however, that the term “kurtosis” was coined and that \(\beta _2\), as compared with the value 3 taken for the normal distribution, was first identified as a measure of kurtosis (the interested reader can refer to Fiori and Zenga (2009) for an exhaustive review of the genesis of \(\beta _2\) in Pearson’s thought).

Over time, the use of \(\beta _2\) as a measure of kurtosis was extended, beyond Pearson’s system, to the entire universe of frequency distributions. However, this extension raised some problems. First, outside Pearson’s system, \(\beta _2\) cannot be simply linked to the value taken by the density function at the mean [see Kaplansky (1945)]. Thus, to provide a general interpretation, Darlington [see Darlington (1970)] linked \(\beta _2\) to the tendency to bimodality, while Finucan [see Finucan (1964)] underlined its concurrent sensitivity to the “tailedness” of the distribution. Second, and more dangerously, \(\beta _2\) is undefined for distributions with infinite fourth moment.

Despite the criticisms above, \(\beta _2\) is nowadays often considered the main measure of kurtosis, and it is sometimes even identified with the phenomenon itself, which reflects something vaguely connected to the tails of the distribution. However, alternative measures have been proposed in the literature. These measures differ considerably from one another, because the definition of kurtosis rests on location, scale and skewness, which are vague concepts as well. For instance, Balanda and MacGillivray [see Balanda and MacGillivray (1988)] write that “a shape characteristic that we call kurtosis can be vaguely defined as the location and scale-free movement of probability mass from the shoulders of a distribution into its center and tails,” but, to apply such a logic, one needs both to provide a sharp definition of location, scale and skewness and, with more difficulty, to establish where the shoulders and the tails of a distribution are positioned. The entanglement of kurtosis with skewness is, in effect, the most cumbersome problem in this system of cross-referenced summary measures of a distribution: while it is easy, for instance, to find scale measures which are independent of location, kurtosis indexes may behave differently according to the underlying degree of skewness [see Balanda and MacGillivray (1990), Blest (2003), and Jones et al. (2011)].

Today, researchers can thus rely on many alternatives to \(\beta _2\) to measure kurtosis and, as a result, choosing the one to be computed on a given dataset is hard, both because they are all reasonable and because their efficacy may strongly depend on other unexplored characteristics of the same data. Operationally, some open questions about kurtosis remain. Does the performance of the best-known indexes differ significantly when they are computed on data? Specifically, if the data are samples, does the need for an extremely large sample size prevent the use of some particular indexes? How are such performances, and such indications about the sample size, affected by other shape deviations of the underlying population, such as skewness?

In this paper, we will try to answer some of the questions raised above. We will focus on the recent approach to kurtosis due to Zenga [see Zenga (1996) and Zenga (2006)], but we will use as benchmarks Pearson’s \(\beta _2\) and Geary’s \(\alpha _G\) [see Geary (1936)], another widespread classical index. Zenga (1996) introduced a kurtosis curve and two kurtosis indexes, but the subsequent literature explored their properties only from a descriptive point of view [see Zenga (2006) and the references therein]. After briefly reviewing that literature, we will thus derive the asymptotic distribution of the sample estimators of the two indexes and introduce two asymptotic confidence intervals for the related population parameters. In our opinion, this can be considered the first achievement of the paper. In addition, we will report some results of a simulation study aimed at comparing the finite-sample performance of the considered indexes, along with their sensitivity to the sample size and to the shape characteristics of the population.

The paper is organized as follows. In Sect. 2, Zenga’s approach to kurtosis is reviewed, along with a variant due to De Capitani and Polisicchio [see De Capitani and Polisicchio (2016)], and the kurtosis indexes \(K_2(\mu )\), \(K_2(\gamma )\), \(K_1(\mu )\), \(K_1(\gamma )\) are recalled. In Sect. 3, the asymptotic distributions of the estimators of such indexes are derived, along with those for Pearson’s \(\beta _2\) and Geary’s \(\alpha _G\). In Sect. 4, a simulation study is performed to evaluate the accuracy of the approximation provided by the asymptotic theory and the practical usefulness of the considered indexes on large datasets. Sect. 5 concludes.

2 Recalling Zenga’s approach to kurtosis

Zenga (1996) based the study of kurtosis on suitable transformations of a random variable X that produce new distributions, possibly characterized by different levels of kurtosis, while preserving the median and the mean absolute deviation around the median. Equivalently, after denoting by \(\gamma\) the median of X, the transformations above change the concentration of the random variables

\[S = (\gamma - X \mid X \le \gamma ), \qquad D = (X - \gamma \mid X > \gamma ) \tag{1}\]

and, in this sense, resemble those considered in the Pigou-Dalton “principle of transfers”. Thus, the appeal of Zenga’s approach lies in the opportunity to use all the well-established tools for the concentration of positive variables in the context of kurtosis. A good review of this approach is given in Zenga (2006), where the international reader can find details about the original references on the topic. Further useful references are Fiori (2008), Domma (2004) and Fiori (2007), where a careful comparison between the logic of the two indexes \(K_2,\) \(K_1\) (details below) and that of Pearson’s \(\beta _2\) is given. More recently, De Capitani and Polisicchio [see De Capitani and Polisicchio (2016)] revised Zenga’s approach by replacing the median \(\gamma\) with an arbitrary “cutting point” k in the definition of the two variables in (1). Such a substitution, especially if k is chosen as the mean \(\mu\), turns out to be a powerful alternative when kurtosis is studied in asymmetric models. In the following, we thus start with a generic k and set \(k=\gamma\) or \(k = \mu\) when needed.

Let X be a random variable with continuous distribution function F. Assume that X has finite expectation \(\mu =\mathrm{E}[X]\) and median \(\gamma = F^{-1}(0.5)\). Moreover, suppose that the dispersion of X is measured by its mean absolute deviation around k:

\[\delta _k = \int |x-k| \, \mathrm{d}F(x)\]

(note that, when unspecified, integrals range from \(-\infty\) to \(+\infty\)). Now, re-define the variables in (1) as

\[S_k = (k - X \mid X \le k), \qquad D_k = (X - k \mid X > k),\]

and let \(F_{Dk}\) and \(F_{Sk}\) denote their distribution functions, which can be easily determined from F. Finally, denote by \(\delta _{Dk}\) and \(\delta _{Sk}\) the expectations of \(D_k\) and \(S_k\) and notice that \(\delta _k = q_k \, \delta _{Sk} + (1-q_k) \, \delta _{Dk},\) where \(q_k=F(k).\) Obviously, \(D_k\) and \(S_k\) are, by construction, non-negative random variables. Their Lorenz curves are thus:

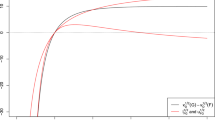
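The decomposition \(\delta _k = q_k \, \delta _{Sk} + (1-q_k) \, \delta _{Dk}\) also holds when F is replaced by the empirical distribution of a sample, which gives a quick sanity check. The following Python/NumPy sketch is only illustrative: the helper names `split_at` and `check_decomposition` are hypothetical, and a gamma-distributed sample is used so that the mean and the median differ.

```python
import numpy as np

def split_at(x, k):
    """Empirical counterparts of S_k = (k - X | X <= k) and D_k = (X - k | X > k)."""
    x = np.asarray(x, dtype=float)
    left = x <= k
    s_k = k - x[left]        # non-negative distances on the left of k
    d_k = x[~left] - k       # positive distances on the right of k
    q_k = left.mean()        # empirical F(k)
    return s_k, d_k, q_k

def check_decomposition(x, k):
    """Return delta_k and q_k * delta_Sk + (1 - q_k) * delta_Dk for the empirical distribution."""
    x = np.asarray(x, dtype=float)
    s_k, d_k, q_k = split_at(x, k)
    delta_k = np.abs(x - k).mean()
    return delta_k, q_k * s_k.mean() + (1.0 - q_k) * d_k.mean()

rng = np.random.default_rng(1)
x = rng.gamma(shape=2.0, size=1001)      # skewed sample: mean and median differ
for k in (np.median(x), x.mean()):
    delta_k, decomposed = check_decomposition(x, k)
    print(f"k = {k:.4f}: delta_k = {delta_k:.6f}, decomposition = {decomposed:.6f}")
```

For both cutting points the two printed values agree up to floating-point rounding.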

Zenga’s kurtosis diagram of the random variable X is defined as the graph of the following function \(G_k: [-1,1]\rightarrow [0,1]\),

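Consistently, a synthetic index of kurtosis (denoted here as \(K_2(k)\) to emphasize its dependency on k) is defined as the weighted average, with weights \(q_k\) and \(1-q_k\), of the Gini concentration indexes \(R_{S_k}\) and \(R_{D_k}\) of \(S_k\) and \(D_k\):

\[K_2(k) = q_k \, R_{S_k} + (1-q_k) \, R_{D_k}.\]

Notice that the two components of \(K_2(k)\) can be rewritten in terms of Gini’s mean difference: after setting

\[\varDelta _{S_k} = \int \int |x-y| \, \mathrm{d}F_{Sk}(x) \, \mathrm{d}F_{Sk}(y), \qquad \varDelta _{D_k} = \int \int |x-y| \, \mathrm{d}F_{Dk}(x) \, \mathrm{d}F_{Dk}(y),\]

one gets

\[K_2(k) = q_k \, \frac{\varDelta _{S_k}}{2 \, \delta _{Sk}} + (1-q_k) \, \frac{\varDelta _{D_k}}{2 \, \delta _{Dk}}.\]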

The two ratios above evaluate the kurtosis of X on the left and on the right of k, by looking at the relative variability of \(S_k\) and \(D_k\), respectively. By a similar logic, Zenga (1996) considered an alternative index based on the ratios between variances and second moments,

which give

When the cutting point k is chosen as \(\mu\) or \(\gamma ,\) some simplifications apply. For instance, it is easy to notice that \(\delta _{S_{\mu }} = (2 \, q_{\mu })^{-1} \, \delta _{\mu }\) and \(\delta _{D_{\mu }} = (2 \, (1-q_{\mu }))^{-1} \, \delta _{\mu },\) so that

\[K_2(\mu ) = \frac{\varDelta }{\delta _\mu } - 1 \tag{4}\]

[see De Capitani and Polisicchio (2016)], where \(\delta _\mu\) and \(\varDelta = \int \int |x-y| \, \, {\mathrm{d}}F \, \, {\mathrm{d}}F\) do not refer to any conditional distribution. As emphasized in the following, \(K_2(\mu )\) is, in effect, the only considered index that does not need to be separated into components to be evaluated. Other simplifications follow from \(q_{\gamma } = 1/2\), so that, for instance, \(\delta _{D_{\gamma }} = 2\, \delta _{\gamma } - \delta _{S_{\gamma }}.\)
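The reduction of \(K_2(\mu )\) to a function of \(\varDelta\) and \(\delta _\mu\) alone can be checked numerically on the empirical distribution of a sample, where it holds exactly. The sketch below assumes that the weights of the two Gini concentration indexes in \(K_2(k)\) are \(q_k\) and \(1-q_k\), that the Gini concentration index of a non-negative variable is its mean difference divided by twice its mean, and hence that the reduced form is \(\varDelta /\delta _\mu - 1\). The function names are illustrative, and the mean difference is computed in the V-statistic form \(\frac{1}{m^2}\sum _i\sum _j |v_i-v_j|\) from the ordered sample.

```python
import numpy as np

def gini_mean_difference(v):
    """(1/m^2) * sum_i sum_j |v_i - v_j|, computed in O(m log m) from the ordered sample."""
    v = np.sort(np.asarray(v, dtype=float))
    m = v.size
    ranks = np.arange(1, m + 1)
    return 2.0 * np.sum((2 * ranks - m - 1) * v) / m**2

def gini_concentration(v):
    """Gini concentration index of a non-negative sample: mean difference / (2 * mean)."""
    v = np.asarray(v, dtype=float)
    return gini_mean_difference(v) / (2.0 * v.mean())

def k2_weighted(x, k):
    """K_2(k) = q_k * R(S_k) + (1 - q_k) * R(D_k) on the empirical distribution (assumed weights)."""
    x = np.asarray(x, dtype=float)
    left = x <= k
    q_k = left.mean()
    return q_k * gini_concentration(k - x[left]) + (1.0 - q_k) * gini_concentration(x[~left] - k)

def k2_mu_direct(x):
    """Delta / delta_mu - 1: no conditional distribution is involved."""
    x = np.asarray(x, dtype=float)
    return gini_mean_difference(x) / np.abs(x - x.mean()).mean() - 1.0

rng = np.random.default_rng(2)
x = rng.lognormal(mean=0.0, sigma=0.8, size=2000)   # skewed sample
print(k2_weighted(x, x.mean()), k2_mu_direct(x))
```

The two printed values agree up to floating-point rounding, because the identity \(\varDelta = q_\mu ^2\varDelta _{S_\mu } + (1-q_\mu )^2\varDelta _{D_\mu } + \delta _\mu\) behind the simplification holds for the empirical distribution as well.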

Despite some possible simplifications, the occurrence of conditional distributions is the main problem in the determination of the asymptotic distributions of the sample estimators of the above-defined indexes. To ease such a process, in the following we will express our indexes in terms of some suitable non-conditional random variables. Consider the indicator function \(\mathbbm {1}(A)\) on the set A and define

Similarly, consider the functions

and notice that

After choosing \(k=\gamma\) or \(k=\mu ,\) the considered kurtosis indexes can be easily re-defined as functionals of the following non-conditional random variables:

More specifically, begin by considering the expectation as a first functional and set

It turns out that

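Now consider Gini’s mean difference as a second functional, that is, for every random variable X with distribution function \(F_X\),

\[\varDelta (X) = \int \int |x-y| \, \mathrm{d}F_X(x) \, \mathrm{d}F_X(y),\]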

and define

One can easily notice that

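so that \(\varDelta _{S_\gamma }=4(\varDelta ^--\delta ^-).\) Similarly, \(\varDelta _{D_\gamma }=4(\varDelta ^+-\delta ^+),\) which finally gives:

\[K_2(\gamma ) = \frac{\varDelta ^-}{2\,\delta ^-} + \frac{\varDelta ^+}{2\,\delta ^+} - 1. \tag{6}\]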

When the cutting point is \(k = \mu ,\) the two functionals above let one define

so that the needed summary measures of the conditional random variables in (3) can be computed as

and as

Consequently, the components of the index \(K_2(\mu )\) are written as

Now notice that \(d^-=d^+=\delta _\mu / {2}\) and \(D^-+D^+=\varDelta ,\) so that (4) is actually re-obtained: once again, the definition of \(K_2(\mu )\) does not need any conditional distributions. However, the decomposition provided by (7) is likely to be useful in those applications where the left and right kurtosis should be studied separately. For instance, in a statistical test aiming at comparing the two sides of kurtosis, one might need to know the joint sample distribution of the estimators of the two components.
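The nonconditional representation of the median-based index can be illustrated in the same way. In the sketch below (illustrative names), n is even and the cutting point is taken as \(x_{(n/2)}\), so that the empirical distribution function equals exactly 1/2 there, as \(q_\gamma\) does in the derivation. Under these assumptions the relations \(\varDelta _{S_\gamma }=4(\varDelta ^--\delta ^-)\) and \(\varDelta _{D_\gamma }=4(\varDelta ^+-\delta ^+)\) hold exactly for the empirical distribution, and the equal-weight average of the two Gini concentration indexes coincides with \(\varDelta ^-/(2\delta ^-)+\varDelta ^+/(2\delta ^+)-1\), the form consistent with the gradient vector \({\mathbf {s}}\) in Theorem 3.

```python
import numpy as np

def mean_difference(v):
    """Gini's mean difference (V-statistic form) by brute force; fine for small samples."""
    v = np.asarray(v, dtype=float)
    return np.abs(v[:, None] - v[None, :]).mean()

rng = np.random.default_rng(3)
x = rng.standard_t(df=6, size=400)      # n even
n = x.size
gamma = np.sort(x)[n // 2 - 1]          # x_(n/2): empirical F(gamma) = 1/2 exactly

# Conditional variables S_gamma and D_gamma
s = gamma - x[x <= gamma]
d = x[x > gamma] - gamma

# Nonconditional variables: equal to zero outside the relevant half of the support
x_minus = (gamma - x) * (x <= gamma)
x_plus = (x - gamma) * (x > gamma)
delta_m, delta_p = x_minus.mean(), x_plus.mean()
Delta_m, Delta_p = mean_difference(x_minus), mean_difference(x_plus)

# Mean differences of the conditional variables, recovered without conditioning
print(mean_difference(s), 4 * (Delta_m - delta_m))
print(mean_difference(d), 4 * (Delta_p - delta_p))

# K_2(gamma): equal-weight average of the Gini concentration indexes vs. nonconditional form
k2_conditional = 0.5 * mean_difference(s) / (2 * s.mean()) + 0.5 * mean_difference(d) / (2 * d.mean())
k2_nonconditional = Delta_m / (2 * delta_m) + Delta_p / (2 * delta_p) - 1.0
print(k2_conditional, k2_nonconditional)
```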

Let us now turn to the indexes \(K_1(\gamma )\) and \(K_1(\mu ).\) To re-define them in terms of non-conditional random variables, we need to consider the \(l\)-th moments as well \((l \ge 2).\) Define first

and consider, for now, \(\delta _2^-\) and \(\delta _2^+:\) by definition,

so that \(\mathrm{E}(S_{\gamma }^2)=2\delta _2^-\) and, similarly, \(\mathrm{E}(D_{\gamma }^2)=2\delta _2^+.\) It is easily seen that

and that

When the cutting point \(k=\mu\) is chosen and the \(l\)-th moments are considered,

one can easily obtain that

and

In passing, notice that when the distribution of X is symmetric, \(\gamma =\mu\) and \(d_2^-=d_2^+=\sigma ^2/2\), where \(\sigma ^2\) denotes the variance of X. In this case, \(K_1(\mu )=K_1(\gamma )=1-(\delta _{\mu }/\sigma )^2,\) which is closely related to Geary’s kurtosis measure \(\alpha _G=\delta _\mu / \sigma\): indeed, \(K_1(\mu )=K_1(\gamma )=1-\alpha _G^2.\)
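The remark on symmetric distributions can be checked on a sample that is exactly symmetric around zero, obtained by reflecting a normal sample. The sketch assumes, by analogy with \(K_2(k)\), that \(K_1(k)\) is the \(q_k\)-weighted average of \(\mathrm{Var}(S_k)/\mathrm{E}(S_k^2)\) and \(\mathrm{Var}(D_k)/\mathrm{E}(D_k^2)\). Under this assumption \(K_1(k)=1-(d^-)^2/d_2^- - (d^+)^2/d_2^+\), where \(d^{\pm }\) and \(d_2^{\pm }\) denote (here for a generic k) the first and second moments of \((k-X)\mathbbm {1}(X\le k)\) and \((X-k)\mathbbm {1}(X>k)\); for \(k=\mu\) this agrees with the gradient vector of Theorem 4. Function names are illustrative.

```python
import numpy as np

def k1_conditional(x, k):
    """q_k-weighted average of Var(V)/E(V^2) for V = S_k and V = D_k (assumed weighting)."""
    x = np.asarray(x, dtype=float)
    left = x <= k
    q = left.mean()
    s, d = k - x[left], x[~left] - k

    def ratio(v):
        # Var(V) / E(V^2) with population-style (ddof=0) moments
        return v.var() / np.mean(v**2)

    return q * ratio(s) + (1 - q) * ratio(d)

def k1_nonconditional(x, k):
    """Same index written with moments of (k - X)1(X <= k) and (X - k)1(X > k)."""
    x = np.asarray(x, dtype=float)
    lo, hi = np.clip(k - x, 0, None), np.clip(x - k, 0, None)
    return 1.0 - lo.mean()**2 / np.mean(lo**2) - hi.mean()**2 / np.mean(hi**2)

rng = np.random.default_rng(4)
z = rng.standard_normal(5000)
x = np.concatenate([z, -z])                 # symmetric around 0 = mean = median
mu = x.mean()
alpha_g = np.abs(x - mu).mean() / x.std()   # sample Geary index delta_mu / sigma
print(k1_conditional(x, mu), k1_nonconditional(x, mu), 1 - alpha_g**2)
```

The three printed values coincide, and for a normal population they estimate \(1-2/\pi\).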

3 Asymptotics

3.1 Asymptotic theory for \(K_2(\mu )\) and \(K_2(\gamma )\)

As outlined above, the first aim of this paper is to derive the asymptotic distribution of the natural estimators of the considered kurtosis indexes. Let us start from the “simplest” of them, \(K_2(\mu ).\) After simplifying notation by setting \(q = q_{\mu }\) and \(d=\delta _{\mu },\) recall that

\[K_2(\mu ) = \frac{\varDelta }{d} - 1.\]

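Now let \((X_1,\ldots ,X_n)\) be an iid random sample from F and consider

\[{\widehat{K}}_{2n}({\widehat{\mu }}_n)=\frac{{\widehat{\varDelta }}_n}{{\widehat{d}}_n}-1, \tag{10}\]

where \({\widehat{\varDelta }}_n=\frac{1}{n^2}\sum _{i=1}^{n}\sum _{j=1}^{n}|X_i-X_j|,\) \({\widehat{d}}_n=\frac{1}{n}\sum _{i=1}^{n}|X_i-{{\widehat{\mu }}}_n|\) and \({\widehat{\mu }}_n=\frac{1}{n}\sum _{i=1}^{n}X_i.\) Notice that, in the definition of the estimator \({{\widehat{K}}}_{2n}({{\widehat{\mu }}}_n),\) the cutting point \(\mu\) is estimated from data as well. When the integral representation of these estimators is recalled, it is not difficult to approximate them by sample averages of suitable functions of the observations. After denoting by \({\widehat{F}}_n\) the empirical distribution function, for instance, \({\widehat{\varDelta }}_n=\int \int |x-y| \, \mathrm{d}{\widehat{F}}_n(x) \, \mathrm{d}{\widehat{F}}_n(y)\) and

\[{\widehat{\varDelta }}_n-\varDelta =\frac{1}{n}\sum _{i=1}^{n}2\left[ \int |X_i-y| \, \mathrm{d}F(y)-\varDelta \right] +o_P(n^{-1/2}), \tag{11}\]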

which is a well-known result by Hoeffding (1948). We will use a similar logic for the remaining component \({\widehat{d}}_n\) in (10); the following lemma, whose proof is found in De Capitani and Pasquazzi (2015), is of use:

Lemma 1

Let F be a continuous distribution function and let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from F. Denote by \({\widehat{F}}_n\) the empirical distribution function, and let \({\widehat{z}}_n=T({\widehat{F}}_n)\) be an estimator of \(z=T(F)\) such that \({\widehat{z}}_n=z+O_P(n^{-1/2})\) for \(n\rightarrow \infty\). Now consider the estimators

of the functionals

Then, the following asymptotic representations hold:

Lemma 1 easily gives:

Of course, the linearization of \({{\widehat{\varDelta }}}_n\) and \({\widehat{d}}_n\) is a useful step to derive both their marginal asymptotic distributions (a known result in the literature) and their joint distribution, which is the key to the conclusion of Theorem 1. Before stating it, let us define two further functionals for a given random variable \(X \sim F,\)

and set, to ease notation,

Theorem 1

Let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from a continuous distribution function F such that \(\mathrm{E}(X_1)=\mu <\infty\) and \(\mathrm{E}[(X_1-\mathrm{E}(X_1))^2]=\sigma ^2<\infty\). Then, the vector \(\sqrt{n}\left[ {{\widehat{\varDelta }}}_n-\varDelta \, , \, {\widehat{d}}_n-{d} \right] ^{T}\) is asymptotically normally distributed with zero mean and variance matrix \({{\varvec{\varSigma }}},\) where

As a consequence, \(\sqrt{n}({\widehat{K}}_{2n}({{\widehat{\mu }}}_n)-K_2(\mu ))\) is asymptotically normal with zero mean and variance

Proof

Thanks to the linearizations above, the result is a simple application of the central limit theorem, the Cramér–Wold device and the delta method. The expressions for \(\varSigma _{11}\) and \(\varSigma _{22}\) can be found in Hoeffding (1948) and Gastwirth (1974), respectively. Concerning the asymptotic covariance \(\varSigma _{12}\), note first that

Hence,

\(\square\)
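In practice, Theorem 1 is used through a Wald interval with an estimated asymptotic variance. The sketch below does not reproduce the closed-form entries of \({{\varvec{\varSigma }}}\); instead, it estimates the asymptotic variance of \({\widehat{K}}_{2n}({{\widehat{\mu }}}_n)\) as the sample variance of plug-in linearization terms. We assume the standard Hoeffding projection \(2[\int |x-y|\,\mathrm{d}F(y)-\varDelta ]\) for \({\widehat{\varDelta }}_n\) and the representation \(|x-\mu |-d+(2q-1)(x-\mu )\) for \({\widehat{d}}_n\) (the mean deviation around the sample mean), combined by the delta method; the function names are hypothetical. For the standard normal, \(\varDelta =2/\sqrt{\pi }\) and \(d=\sqrt{2/\pi }\), so \(K_2(\mu )=\sqrt{2}-1\).

```python
import numpy as np

def abs_diff_profile(x):
    """h_i = (1/n) * sum_j |x_i - x_j| for each i, via order statistics and partial sums."""
    x = np.asarray(x, dtype=float)
    n = x.size
    order = np.argsort(x)
    xs = x[order]
    t = np.cumsum(xs)                      # partial sums t_j of the ordered sample
    j = np.arange(1, n + 1)
    sums = (j * xs - t) + ((t[-1] - t) - (n - j) * xs)
    h = np.empty(n)
    h[order] = sums / n                    # back to the original order
    return h

def k2_mu_wald(x, z=1.96):
    """Point estimate, plug-in standard error and Wald interval for K_2(mu) = Delta/d - 1."""
    x = np.asarray(x, dtype=float)
    n = x.size
    mu = x.mean()
    h = abs_diff_profile(x)
    delta_hat = h.mean()                   # (1/n^2) sum_i sum_j |X_i - X_j|
    d_hat = np.abs(x - mu).mean()          # mean absolute deviation around the sample mean
    q_hat = np.mean(x <= mu)               # plug-in estimate of q = F(mu)
    k2 = delta_hat / d_hat - 1.0
    # Assumed linearization terms of the two building blocks
    psi_delta = 2.0 * (h - delta_hat)
    psi_d = np.abs(x - mu) - d_hat + (2.0 * q_hat - 1.0) * (x - mu)
    # Delta method for Delta/d - 1
    psi_k2 = psi_delta / d_hat - delta_hat * psi_d / d_hat**2
    se = np.sqrt(np.mean(psi_k2**2) / n)
    return k2, se, (k2 - z * se, k2 + z * se)

rng = np.random.default_rng(5)
x = rng.standard_normal(20_000)
k2, se, ci = k2_mu_wald(x)
print(k2, se, ci)
```

Computing the mean difference through the ordered sample keeps the cost at \(O(n\log n)\), which matters on large datasets.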

In passing, notice that asymptotic linearizations similar to (11) and (13) can be provided for the \(m\)-th sample central moment \({{\widehat{\mu }}} _{mn} = \frac{1}{n} \sum _{i=1}^{n} (X_i - {\widehat{\mu }}_{n})^m,\) for every integer \(m \ge 2\) (see Serfling (1980), p. 72). Hence, the asymptotic distributions of the estimators of Geary’s \(\alpha _G\) and Pearson’s \(\beta _2\),

\[{\widehat{\alpha }}_{Gn}=\frac{{\widehat{d}}_n}{{\widehat{\sigma }}_n}, \qquad {\widehat{\beta }}_{2n}=\frac{{\widehat{\mu }}_{4n}}{{\widehat{\sigma }}^4_n},\]

where \({\widehat{\sigma }}^2_n={{\widehat{\mu }}} _{2n},\) are easily derived, as in the next theorem:

Theorem 2

Let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from a continuous distribution function F such that \(\mathrm{E}(X_1)=\mu <\infty\) and \(\mathrm{E}[(X_1-\mathrm{E}(X_1))^l]=\mu _l<\infty\) up to \(l=4.\) Then, \(\sqrt{n}({\widehat{\alpha }}_{Gn}-\alpha _G)\) is asymptotically normally distributed with zero mean and variance

where

Moreover, under the assumption that \(\mu _l<\infty\) up to \(l=8,\) \(\sqrt{n}({\widehat{\beta }}_{2n}-\beta _2)\) is asymptotically normal with zero mean and variance

where

Proof

The representation of \({\widehat{d}}_n\) in (13) can be used, along with

to prove that the two statistics are asymptotically jointly normal. Now consider that, after some computations, \(\mathrm{Cov}[g_3(X), g_2(X)]= \frac{1}{\sigma }\left[ \mu _3 \, q-d_3^-\right] -\frac{\sigma \, d}{2},\) so that the delta method easily gives the result for \({{\widehat{\alpha }}}_{Gn}.\) Concerning \({{\widehat{\beta }}}_{2n},\) one can use Theorem B in Serfling (1980), p. 72, to show that the second and the fourth sample central moments are jointly asymptotically normal; again, the result follows from a simple application of the delta method. \(\square\)
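The same plug-in strategy applies to Theorem 2. In the sketch below (hypothetical function name), the linearization of \({\widehat{\beta }}_{2n}\) includes the term \(-4\mu _3(x-\mu )\) that accounts for centring at the sample mean, and the terms are combined by the delta method. The resulting standard error for \({\widehat{\beta }}_{2n}\) is only meaningful when moments up to order 8 are finite, as Theorem 2 requires; the code does not check this. For the standard normal, \(\alpha _G=\sqrt{2/\pi }\) and \(\beta _2=3\).

```python
import numpy as np

def geary_beta2_wald(x, z=1.96):
    """Plug-in Wald intervals for Geary's alpha_G = d/sigma and Pearson's beta_2 = mu_4/sigma^4."""
    x = np.asarray(x, dtype=float)
    n = x.size
    c = x - x.mean()
    m2, m3, m4 = (np.mean(c**p) for p in (2, 3, 4))
    sigma = np.sqrt(m2)
    d = np.abs(c).mean()
    q = np.mean(c <= 0)
    alpha_g, beta2 = d / sigma, m4 / m2**2
    # Plug-in linearization terms
    psi_d = np.abs(c) - d + (2 * q - 1) * c
    psi_m2 = c**2 - m2
    psi_m4 = c**4 - m4 - 4 * m3 * c        # centring at the sample mean adds -4*mu_3*(x - mu)
    # Delta method for d/sigma and m4/m2^2
    psi_alpha = psi_d / sigma - d * psi_m2 / (2 * sigma**3)
    psi_beta = psi_m4 / m2**2 - 2 * beta2 * psi_m2 / m2
    out = {}
    for name, est, psi in (("alpha_G", alpha_g, psi_alpha), ("beta_2", beta2, psi_beta)):
        se = np.sqrt(np.mean(psi**2) / n)
        out[name] = (est, se, (est - z * se, est + z * se))
    return out

rng = np.random.default_rng(6)
res = geary_beta2_wald(rng.standard_normal(20_000))
for name, (est, se, ci) in res.items():
    print(name, round(est, 4), round(se, 4), ci)
```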

We now turn to the estimation of \(K_2(\gamma ).\) After recalling (6), a first step is to estimate \(\delta ^-\) and \(\delta ^+\) by

respectively. In the formula above, \({\widehat{\gamma }}_n=\inf \left\{ x: {\widehat{F}}_n(x)\ge {1}/{2}\right\}\) denotes the sample median, which, assuming that F has a density f strictly positive and continuous at \(\gamma\), is known to admit the following asymptotic representation (see Serfling (1980), p. 77):

\[{\widehat{\gamma }}_n-\gamma =\frac{1}{n}\sum _{i=1}^{n}\frac{1/2-\mathbbm {1}(X_i\le \gamma )}{f(\gamma )}+o_P(n^{-1/2}). \tag{15}\]

Moreover, Lemma 1 can be applied again to get

Similarly, Lemma 1 gives \({{\widehat{\delta }}}^+_n-\delta ^+ = \frac{1}{n}\sum _{i=1}^{n}l_4(X_i)+o_P(n^{-1/2}),\) where

However, to get a linearization for the estimators of \(\varDelta ^-\) and \(\varDelta ^+,\)

a different statement is needed:

Lemma 2

Under the assumptions of Lemma 1, consider the estimators

respectively, of the functionals

Then, the following asymptotic representations hold:

For the sake of readability, the proof of Lemma 2 is reported in the Appendix. Its application easily gives:

where the first summand can be regarded as the mean difference of the pseudo-sample \(\left\{ (\gamma -X_i)\, \mathbbm {1}(X_i\le \gamma )\right\} _{i=1}^{n}\). By the usual asymptotic representation, one thus obtains:

Analogously, \({{\widehat{\varDelta }}}_n^+-\varDelta ^+=\frac{1}{n}\sum _{i=1}^{n}l_2(X_i)+o_P(n^{-1/2})\) where

We are now ready to provide the asymptotic distribution of the natural estimator of \(K_2(\gamma ),\)

in the next theorem, whose statement requires setting

Theorem 3

Let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from an absolutely continuous distribution function F whose density f is strictly positive and continuous at \(\gamma\). Assume that \(\mathrm{E}(X_1)<\infty\) and \(\mathrm{E}[(X_1-\mathrm{E}(X_1))^2]<\infty\). Then, \(\sqrt{n}({\widehat{K}}_{2n}({{\widehat{\gamma }}}_n)-K_2(\gamma ))\) is asymptotically normally distributed with zero mean and variance \(\sigma ^2_{K_2(\gamma )} = {\mathbf {s}}^\intercal {{\varvec{\varSigma }}}{\mathbf {s}},\) where \({\mathbf {s}}^\intercal = \left[ \frac{1}{2\delta ^-} \, , \, \frac{1}{2\delta ^+} \, , \, -\frac{\varDelta ^-}{2(\delta ^-)^2} \, , \, -\frac{\varDelta ^+}{2(\delta ^+)^2} \right]\) and \({{\varvec{\varSigma }}} = \left( \varSigma _{ij} \right) _{i,j=1, \ldots , 4}\) is a symmetric matrix with elements:

From the proof of the theorem, which is given in the Appendix, one can also easily derive the asymptotic distributions of the two components of \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) or of their difference. Even if details are omitted here, notice that those results could be useful in many testing problems aimed at comparing the right and the left side of kurtosis. As anticipated above, although no decomposition is needed to obtain (10), when such testing problems arise a similar reasoning can be applied to the single components of \({{\widehat{K}}}_{2n}({{\widehat{\mu }}}_n)\) as well. The evaluation of Zenga’s indexes as tools to compare different sides of kurtosis will be the object of future research.
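The natural estimator of \(K_2(\gamma )\) only needs the sample median and the two pseudo-samples \(\{({\widehat{\gamma }}_n-X_i)\mathbbm {1}(X_i\le {\widehat{\gamma }}_n)\}\) and \(\{(X_i-{\widehat{\gamma }}_n)\mathbbm {1}(X_i>{\widehat{\gamma }}_n)\}\). The sketch below assumes the representation \(K_2(\gamma )=\varDelta ^-/(2\delta ^-)+\varDelta ^+/(2\delta ^+)-1\), consistent with the gradient vector in Theorem 3, and computes \({\widehat{\gamma }}_n=\inf \{x:{\widehat{F}}_n(x)\ge 1/2\}\) as the order statistic \(x_{(m)}\) with \(m=\lfloor 0.5(n+1)\rfloor\). For a symmetric population such as the standard normal, \(K_2(\gamma )=K_2(\mu )=\sqrt{2}-1\). Names are illustrative.

```python
import numpy as np

def mean_difference(v):
    """(1/m^2) * sum_i sum_j |v_i - v_j| via order statistics, O(m log m)."""
    v = np.sort(np.asarray(v, dtype=float))
    m = v.size
    j = np.arange(1, m + 1)
    return 2.0 * np.sum((2 * j - m - 1) * v) / m**2

def k2_gamma_hat(x):
    """Plug-in estimator of K_2(gamma) from the two nonconditional pseudo-samples."""
    xs = np.sort(np.asarray(x, dtype=float))
    n = xs.size
    gamma = xs[(n + 1) // 2 - 1]            # inf{x : F_n(x) >= 1/2} = x_(m), m = floor((n+1)/2)
    lo = np.clip(gamma - xs, 0.0, None)     # (gamma - X_i) 1(X_i <= gamma)
    hi = np.clip(xs - gamma, 0.0, None)     # (X_i - gamma) 1(X_i > gamma)
    return mean_difference(lo) / (2 * lo.mean()) + mean_difference(hi) / (2 * hi.mean()) - 1.0

rng = np.random.default_rng(7)
print(k2_gamma_hat(rng.standard_normal(20_000)))
```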

3.2 Asymptotic theory for \(K_1(\mu )\) and \(K_1(\gamma )\)

After recalling (9), a natural estimator for \(K_1(\mu )\) is easily obtained from

and

Again, a linear approximation for \({\widehat{d}}^-_n\) is provided by Lemma 1. For \({\widehat{d}}_{2n}^-\) and \({\widehat{d}}_{2n}^+\) a new statement is needed (proof in the Appendix):

Lemma 3

Under the assumptions of Lemma 1, consider the estimators

of the functionals

Then, the following asymptotic representations hold:

where \(I_1\) and \(I_2\) are as in (12).

Now,

and a similar result is obtained for \({{\widehat{d}}}_{2n}^+.\) The next theorem (stated without proof) follows:

Theorem 4

Let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from a continuous distribution function F such that \(\mathrm{E}(X_1)<\infty\) and \(\mathrm{E}[(X_1-\mathrm{E}(X_1))^4]<\infty\). After considering

the random variable \(\sqrt{n}({\widehat{K}}_{1n}({{\widehat{\mu }}}_n)-K_1(\mu ))\) is asymptotically normally distributed with zero mean and variance \(\sigma ^2_{K_1(\mu )} = {\mathbf {s}}^\intercal {{\varvec{\varSigma }}}{\mathbf {s}},\) where \({\mathbf {s}}^\intercal =\left[ -\frac{2d^-}{d_2^-}-\frac{2d^-}{d_2^+} \, \, , \, \left( \frac{d^-}{d_2^-}\right) ^2 , \, \left( \frac{d^-}{d_2^+}\right) ^2 \right]\) and \({{\varvec{\varSigma }}} = \left( \varSigma _{ij} \right) _{i,j=1, 2, 3}\) is a symmetric matrix with elements:

Finally, to estimate \(K_1(\gamma )\) as defined in (8), the tools in (14) can be used jointly with

While asymptotic representations for \({{\widehat{\delta }}}_{n}^-\) and \({{\widehat{\delta }}}_{n}^+\) have already been provided in (16) and (17), respectively, for \({{\widehat{\delta }}}_{2n}^-\) and \({{\widehat{\delta }}}_{2n}^+\) another application of Lemma 3, jointly with (15), is needed: \({\widehat{\delta }}_{2n}^--\delta _2^-=\frac{1}{n}\sum _{i=1}^{n}r(X_i)+o_P(n^{-1/2})\) with

(and analogously for \({\widehat{\delta }}_{2n}^+\)). The next theorem, whose proof is omitted as well, follows easily:

Theorem 5

Let \((X_1,X_2,\ldots ,X_n)\) be an iid random sample from an absolutely continuous distribution function F whose density f is strictly positive and continuous at \(\gamma =F^{-1}(1/2)\). Assume that \(\mathrm{E}(X_1)<\infty\) and \(\mathrm{E}[(X_1-\mathrm{E}(X_1))^4]<\infty\). Then, the random variable \(\sqrt{n}({{\widehat{K}}}_{1n}({{\widehat{\gamma }}}_n)-K_1(\gamma )),\) with

is asymptotically normal with zero mean and variance \(\sigma ^2_{K_1(\gamma )} = {\mathbf {s}}^\intercal {{\varvec{\varSigma }}}{\mathbf {s}},\) where \({\mathbf {s}}^\intercal =\left[ -\frac{2\delta ^-}{\delta _2^-}\, \, , \, \, -\frac{2\delta ^+}{\delta _2^+} \, \, , \, \, \left( \frac{\delta ^-}{\delta _2^-}\right) ^2 \, \, , \, \, \left( \frac{\delta ^+}{\delta _2^+}\right) ^2 \right]\) and \({{\varvec{\varSigma }}} = \left( \varSigma _{ij} \right) _{i,j=1, \ldots , 4}\) is a symmetric matrix whose elements are:
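Theorems 4 and 5 express the asymptotic variances in the form \({\mathbf {s}}^\intercal {{\varvec{\varSigma }}}{\mathbf {s}}\). A generic way to use them is to estimate \({{\varvec{\varSigma }}}\) by the sample covariance matrix of plug-in linearization terms. The sketch below does this for \(K_1(\mu )\) with the gradient vector of Theorem 4. The linearization terms, obtained by differentiating each functional with respect to the cutting point (estimated by the sample mean), are our own assumption rather than a transcription of Lemma 3, and the function name is hypothetical. For the standard normal, \(K_1(\mu )=1-2/\pi\).

```python
import numpy as np

def k1_mu_wald(x, z=1.96):
    """Point estimate, plug-in s' Sigma s standard error and Wald interval for K_1(mu)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    c = x - x.mean()
    lo, hi = np.clip(-c, 0.0, None), np.clip(c, 0.0, None)   # (mu-X)1(X<=mu), (X-mu)1(X>mu)
    q = np.mean(c <= 0)
    d_m = lo.mean()                          # equals hi.mean() at the sample mean
    d2_m, d2_p = np.mean(lo**2), np.mean(hi**2)
    k1 = 1.0 - d_m**2 / d2_m - d_m**2 / d2_p
    # Assumed linearization terms for (d^-, d_2^-, d_2^+) with the cutting point estimated
    psi = np.vstack([
        lo - d_m + q * c,
        lo**2 - d2_m + 2 * d_m * c,
        hi**2 - d2_p - 2 * d_m * c,
    ])
    sigma = np.cov(psi, bias=True)           # plug-in estimate of Sigma (3 x 3)
    s = np.array([-2 * d_m / d2_m - 2 * d_m / d2_p, (d_m / d2_m)**2, (d_m / d2_p)**2])
    se = np.sqrt(s @ sigma @ s / n)
    return k1, se, (k1 - z * se, k1 + z * se)

rng = np.random.default_rng(8)
k1, se, ci = k1_mu_wald(rng.standard_normal(20_000))
print(k1, se, ci)
```

The same pattern (stack the linearization terms, take their covariance, sandwich it with \({\mathbf {s}}\)) carries over to Theorem 5 with four components.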

4 Simulation study

The asymptotic theory for the considered kurtosis indexes provides tools that go beyond point estimation, such as standard errors and confidence intervals. Although all the asymptotic distributions above share the same rate of convergence, the quality of their approximation may differ when the indexes are computed on samples of fixed, though large, size. This is likely to depend both on the chosen index and on the characteristics of the sampled population. In this respect, notice that, even if one could try to improve results by suitable modifications in the definition of a given index (or sometimes in the computation of its standard error), such changes often depend on the choice of a specific model for the population. In many applications, however, the user just wonders which index to choose for kurtosis, without being able to make any preliminary assumption, because it is not yet clear whether the underlying population is affected by skewness or heavy tails.

To gain further insight into the practical usage of the considered indexes on (large) samples, this section reports some results of a simulation study aimed at comparing the coverage rates of the related confidence intervals and, more generally, at evaluating their performance as estimators. To assess coverage, data were randomly drawn from distributions for which the exact value of each kurtosis index can be computed as a function of the model parameters. The latter, in addition, were varied to explore a wide set of situations of kurtosis, skewness and heavy-tailedness. The usual Wald 0.95-confidence interval \({{\hat{\theta }}}_n {\mp } 1.96 \, (A{\widehat{S}}V/n)^{1/2}\) was applied where, for each sample index \({{\hat{\theta }}}_n,\) \(A{\widehat{S}}V\) denotes the estimated asymptotic variance according to the formulas provided in Theorems 1 to 5. In passing, notice that such formulas require the simulated data to be used to estimate several moments and functionals of the underlying population and of the variables in (5). To this end, the ordered dataset \(x_{(1)}, \ldots , x_{(n)}\) proves useful both where the median is the cutting point (for instance, \(\delta _2^-\) can be estimated as \(\frac{1}{n} \sum _{i=1}^{m-1} \left( x_{(m)}-x_{(i)}\right) ^2,\) where \(m =\lfloor 0.5(n+1) \rfloor\)) and to ease the computation of some sample functionals. Indeed, following Zenga et al. (2007), the estimate of \({{{\mathcal {F}}}}\) can be computed as

where \({{\bar{x}}}\) and \(s^2\) are the sample mean and variance and \(t_j=\sum _{i=1}^{j} x_{(i)}\) \((j=1, \ldots , n).\) Analogously, we obtain that a computationally easy formula for the estimate of \({{{\mathcal {D}}}}\) is

Consider first a set of simulations from the normal model. Despite the regularity of the distribution, the estimation process may still be strongly affected by the quality of the sampled data, especially if it rests on the evaluation of some “deep” characteristics of the population, such as high-order moments. This fact is well known for the estimation of Pearson’s \(\beta _2\): to evaluate asymptotic standard errors, for instance, one needs to estimate central moments up to the eighth order. In addition, though the estimator \({\widehat{\beta }}_{2n}\) is asymptotically unbiased, a non-negligible bias can be observed even in large samples. Table 1 provides evidence of both drawbacks: it reports the results obtained by computing \({\widehat{\beta }}_{2n}\) on 10,000 simulated samples from the standard normal distribution and comparing it with its true value 3. First of all, notice that a significant bias (0.1136) is shown when the sample size is fixed to \(n=50\) and that such a bias is not completely negligible even for \(n=2000\) (still 0.0040), especially if compared with that of the other indexes (commented below). Of course, some corrections to get unbiased estimates from \({{\widehat{\beta }}}_{2n}\) exist [see Joanes and Gill (1998)], but they rest on the assumption of a normal population, which holds here but not in general and, as commented above, is therefore unsafe for many applications. As a second issue, Table 1 underlines the problems arising in the evaluation of the standard error of \({{\widehat{\beta }}}_{2n}:\) the standard deviation of the 10,000 simulated values of \({{\widehat{\beta }}}_{2n}\) is compared with the mean of \((A {\widehat{S}} V/n)^{1/2}\) over the same samples. When \(n=50,\) the standard error is clearly underestimated (0.4502 against 0.5933) and, again, this divergence is not completely negligible when n is raised to 2000 (0.1057 against 0.1093). 
More importantly for applications, the problems in the bias and standard error of \({\widehat{\beta }}_{2n}\) are reflected in poor coverage: Table 1 shows that, for 0.95-confidence intervals based on samples of size \(n=50,\) the actual coverage (0.8065) is significantly far from the nominal value. One may check that varying either the model parameters or the confidence level induces no relevant changes (results not shown) and that the coverage problem is not negligible even when \(n=2000\) (see Table 1).
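The kind of experiment summarized in Table 1 for \({\widehat{\beta }}_{2n}\) can be sketched as follows: draw many normal samples of size \(n=50\), compute the estimate and a plug-in delta-method standard error, and record bias, simulated standard deviation, mean standard error and the coverage of the Wald interval. This is an illustrative re-implementation, not the authors’ code: it uses 2,000 replications instead of 10,000, and its variance estimator may differ in detail from the one behind Table 1, so the printed figures should not be expected to match the table.

```python
import numpy as np

def beta2_wald_coverage(n=50, reps=2000, z=1.96, seed=0):
    """Monte Carlo bias, spread, mean SE and Wald coverage for beta_2 under N(0, 1) (true value 3)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((reps, n))
    c = x - x.mean(axis=1, keepdims=True)
    m2, m3, m4 = (np.mean(c**p, axis=1) for p in (2, 3, 4))
    beta2 = m4 / m2**2
    # Plug-in delta-method linearization of beta_2_hat, one row per replication
    psi = ((c**4 - m4[:, None] - 4 * m3[:, None] * c) / m2[:, None]**2
           - 2 * beta2[:, None] * (c**2 - m2[:, None]) / m2[:, None])
    se = np.sqrt(np.mean(psi**2, axis=1) / n)
    covered = np.abs(beta2 - 3.0) <= z * se
    return beta2.mean() - 3.0, beta2.std(), se.mean(), covered.mean()

bias, sd_sim, se_mean, coverage = beta2_wald_coverage()
print(f"bias={bias:.4f}  simulated sd={sd_sim:.4f}  mean SE={se_mean:.4f}  coverage={coverage:.4f}")
```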

Turning to the other considered kurtosis indexes, the problems above seem to have a minor incidence, with some distinctions. Table 1 reports the true value of each kurtosis index for the normal model and the corresponding simulated bias on the 10,000 samples, which is considerably lower than that of \({{\widehat{\beta }}}_{2n}.\) Geary’s \({{\widehat{\alpha }}}_{Gn}\) is almost as unbiased as Zenga’s indexes based on the median (\({\widehat{K}}_{1n}({{\widehat{\gamma }}}_n)\) and \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\)), but its bias has the opposite sign, which is consistent with a general tendency to underestimate the real extent of kurtosis. Zenga’s indexes using the mean as a cutting point (\({\widehat{K}}_{1n}({{\widehat{\mu }}}_n)\) and \({{\widehat{K}}}_{2n}({{\widehat{\mu }}}_n)\)) suffer from a slightly higher bias than the latter ones, perhaps because of the lack of robustness of the sample mean. Nonetheless, the effect clearly vanishes as the sample size increases, which is sensible if one considers that, in the normal model, the mean and the median coincide. For all indexes other than \({{\widehat{\beta }}}_{2n},\) the mean estimated asymptotic standard error almost coincides with the simulated value. In this respect (and in contrast with the bias results), \({{\widehat{K}}}_{1n}({{\widehat{\mu }}}_n)\) and \({\widehat{K}}_{2n}({{\widehat{\mu }}}_n)\) behave better than \({\widehat{K}}_{1n}({{\widehat{\gamma }}}_n)\) and \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) but, again, the difference becomes negligible as the sample size increases. Finally, the most relevant strength of the competitors of \({{\widehat{\beta }}}_{2n}\) in Table 1 is their ability to reach coverage rates very close to the nominal value. 
This seems to be particularly true for \({{\widehat{K}}}_{1n}({{\widehat{\gamma }}}_n)\) (and partly for the related \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\)), even if the values in the table should be judged with some caution, due to the simulation error, which can be roughly assessed as \({\mp } 0.0065\) for 10,000 replications. In any case, practical differences from the nominal 0.95 are observed only for \(n=50\), and they are of minor relevance compared with those discussed above for \({{\widehat{\beta }}}_{2n}\).
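The order of magnitude of the simulation error can be related to the binomial standard error of an estimated coverage rate, \(\sqrt{p(1-p)/R}\) for R independent replications. With \(p=0.95\) and \(R=10{,}000\) this is about 0.0022; the \({\mp }\,0.0065\) quoted above is close to three such standard errors, while a 1.96 multiplier would give about \({\mp }\,0.0043\). Reading the quoted figure as a three-standard-error band is our assumption; the helper name below is hypothetical.

```python
import math

def coverage_mc_halfwidth(p=0.95, reps=10_000, k=1.96):
    """Half-width k * sqrt(p * (1 - p) / reps) of the Monte Carlo band around a coverage rate p."""
    return k * math.sqrt(p * (1.0 - p) / reps)

print(round(coverage_mc_halfwidth(k=1.96), 4))   # 0.0043
print(round(coverage_mc_halfwidth(k=3.0), 4))    # 0.0065
```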

A second set of simulations was conducted using Student’s t distribution, to gain insight into the sensitivity of the considered indexes to heavy-tailedness. A new problem arises because, to guarantee the existence of the \(r\)-th moment, the distribution needs at least \(r+1\) degrees of freedom (df). Thus, too few df may prevent the computation of the true value of a given kurtosis index as a population parameter, even though its estimate can still be obtained from the simulated data. Obviously, in this case (denoted as “scenario A”), the performance of the estimator cannot even be evaluated. More interestingly, another scenario (B) arises when the df are enough to define a given population parameter, but too few to guarantee the existence of the asymptotic variance of its estimator, which is likely to depend on high-order moments. Since an unaware user may still compute standard errors and confidence intervals from the dataset, simulations are quite useful here to evaluate the error incurred when using tools that are not properly defined. Naturally, each index can also be evaluated under a third scenario C, where there are sufficient df both to guarantee its existence as a parameter and for the applicability of the related asymptotic theory.
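The classification into scenarios A, B and C can be made mechanical. For Student’s t with \(\nu\) df, the \(r\)-th moment is finite if and only if \(\nu >r\). The sketch below records, for each index, the moment order needed to define it and the order needed for its asymptotic variance, as read from the definitions and from the assumptions of Theorems 1 to 5; the dictionary and function names are hypothetical.

```python
# (order needed to define the index, order needed for its asymptotic variance)
REQUIRED_ORDERS = {
    "beta_2": (4, 8),
    "alpha_G": (2, 4),
    "K_1(mu)": (2, 4),
    "K_1(gamma)": (2, 4),
    "K_2(mu)": (1, 2),
    "K_2(gamma)": (1, 2),
}

def scenario(index, df):
    """Return 'A', 'B' or 'C' for a Student t population with df degrees of freedom."""
    param_order, var_order = REQUIRED_ORDERS[index]
    if df <= param_order:
        return "A"   # population index undefined
    if df <= var_order:
        return "B"   # index defined, asymptotic variance undefined
    return "C"       # both defined

for df in (3, 5, 9):
    print(df, {name: scenario(name, df) for name in REQUIRED_ORDERS})
```

With 3, 5 and 9 df this reproduces the scenario assignments discussed for Table 2.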

Clearly, the index that depends most on the existence of moments is \(\beta _2.\) Table 2 reports a first set of simulations with \(n=50\) and 3 df, so that \(\beta _2\) falls under scenario A and its estimator cannot be evaluated. There are consequences also for \(\alpha _G\), \(K_1(\mu )\), and \(K_1(\gamma ),\) which all fall under scenario B: while the mean asymptotic standard error (which is theoretically undefined) departs only partially from the simulated value, the actual coverage is always remarkably far from the nominal 0.95. This problem seems more severe for the \(K_1\)-indexes than for \(\alpha _G,\) which also shows a lower bias. By contrast, the \(K_2\)-indexes, which fall under scenario C, perform quite well in this critical situation, in terms of both bias and coverage. If the df are raised to 5 (see Table 2, again with \(n=50\)), Pearson’s \(\beta _2\) falls under scenario B: the bias of its estimator, which can now be evaluated, turns out to be huge. As expected, this is accompanied by a very poor coverage rate of the related confidence interval. The remaining indexes are now under scenario C; in spite of that, the estimators based on second moments (\({{\widehat{\alpha }}}_{Gn}\), \({{\widehat{K}}}_{1n}({{\widehat{\mu }}}_n)\), and \({\widehat{K}}_{1n}({{\widehat{\gamma }}}_n)\)) behave worse than those based only on first moments (\({{\widehat{K}}}_{2n}({{\widehat{\mu }}}_n)\) and \({\widehat{K}}_{2n}({{\widehat{\gamma }}}_n)\)), which show practically no bias and almost exact coverage. From 9 df onward, all the considered indexes are in scenario C, yet the performance of \({{\widehat{\beta }}}_{2n}\) is still far from optimal, although this can no longer be explained by the nonexistence of moments. Even with 15 df, the coverage rate for \({{\widehat{\beta }}}_{2n}\) reaches only 0.6626 when \(n=50\) (see details in Table 2).
By contrast, in this case the coverage of all other indexes is quite close to the nominal value.
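The coverage figures above come from Monte Carlo experiments. The sketch below shows one way such an experiment could be set up for \({{\widehat{\beta }}}_{2n}\) under the T-distribution. It assumes a 95% Wald interval whose standard error is a plug-in estimate based on the empirical influence function of the sample standardized fourth moment; this may differ from the paper's own variance estimator. It uses the known value \(\beta_2 = 3 + 6/(\nu-4)\) for \(\nu > 4\) df; the function names are hypothetical.

```python
import numpy as np


def b2_and_se(x: np.ndarray) -> tuple[float, float]:
    """Sample standardized fourth moment and a plug-in asymptotic standard error."""
    n = x.size
    y = x - x.mean()
    m2, m3, m4 = (np.mean(y**k) for k in (2, 3, 4))
    b2 = m4 / m2**2
    # Empirical influence function of m4 / m2^2; centring at the mean adds the -4*m3*y term.
    infl = (y**4 - m4 - 4 * m3 * y) / m2**2 - 2 * m4 * (y**2 - m2) / m2**3
    se = np.sqrt(np.mean(infl**2) / n)
    return b2, se


def coverage_t(df: float, n: int, reps: int, seed: int = 0) -> float:
    """Share of 95% Wald intervals that cover beta2 = 3 + 6 / (df - 4)."""
    if df <= 4:
        raise ValueError("beta2 is undefined for df <= 4 (scenario A)")
    true_b2 = 3.0 + 6.0 / (df - 4.0)
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(reps):
        b2, se = b2_and_se(rng.standard_t(df, size=n))
        hits += abs(b2 - true_b2) <= 1.959964 * se
    return hits / reps


print(coverage_t(df=15, n=50, reps=2_000))
```

For \(4 < \nu \le 8\) the code still returns numbers, although the eighth moment needed by the standard error does not exist. This is exactly the scenario-B situation described above.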

Overall, Table 2 shows that, when heavy-tailedness prevents the existence of moments, \({{\widehat{K}}}_{2n}({{\widehat{\mu }}}_n)\) and \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) appear to be the most reliable choice, both because the related indexes are always defined (so that their estimates are meaningful) and because they provide good inference in a wide range of situations. Interestingly, unlike in the normal model, what distinguishes Zenga’s indexes here is not the cutting point (mean or median) but the order of the moments on which each index is defined. To appreciate possible effects of the sample size, since Table 2 only considers \(n=50,\) Table 3 focuses on two relevant situations (5 df and 15 df) with increasing values of n (250, 1000 and 2000). Large sample sizes obviously tend to even out differences among the indexes; however, the conclusions drawn from Table 2 are substantially confirmed. Notice that, even with large samples, \({{\widehat{\beta }}}_{2n}\) does not recover from its disadvantage. Moreover, \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) shows some tendency toward over-coverage.

Tables 2 and 3 show the effects of heavy tails combined with those of the nonexistence of moments. To gain further insight, a new set of simulations was run under the Generalized Error distribution [see Subbotin (1923)]. This model has a shape parameter \(\nu\) that yields the normal distribution when \(\nu =2\) and heavier or lighter tails when \(\nu\) is, respectively, smaller or greater than 2; unlike the T-distribution, it possesses moments of all orders. Table 4 shows some results obtained by combining three sample sizes (\(n=50; 250; 1000\)) with two values of the shape parameter (\(\nu =0.5; 4\)), while the location and scale parameters of the model were always set to give zero mean and unit variance. The first part of the table, the one with heavy tails, resembles what was observed for the T-distribution with few df: all indexes except \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) and \({\widehat{K}}_{2n}({{\widehat{\mu }}}_n)\) suffer from the irregularity of the distribution and, while \({{\widehat{\alpha }}}_{Gn}\) and the \(K_1\)-indexes recover quickly as the sample size grows, \({{\widehat{\beta }}}_{2n}\) is still quite inaccurate even for \(n=1000.\) By contrast, in the second part of Table 4 (\(\nu =4\) and light tails), the situation is similar to that of Table 1 (normal model): no index performs critically, with some limitations only for \({{\widehat{\beta }}}_{2n}.\) Heavy-tailedness is thus confirmed as a major obstacle for many kurtosis indexes, over and above the problem of the existence of moments.
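For the Generalized Error distribution, the population values of some indexes have closed forms in terms of the gamma function. The sketch below computes \(\beta_2\) and, assuming that \(\alpha_G\) is Geary's ratio of the mean absolute deviation to the standard deviation, its value too. It also draws standardized samples, assuming that SciPy's `gennorm` (density proportional to \(\exp(-|x|^{\nu})\)) matches the parameterization used here. The function names are hypothetical.

```python
import numpy as np
from scipy.special import gamma
from scipy.stats import gennorm


def ged_beta2(nu: float) -> float:
    """Pearson's beta2 of the generalized error distribution with shape nu."""
    return gamma(5 / nu) * gamma(1 / nu) / gamma(3 / nu) ** 2


def ged_geary(nu: float) -> float:
    """Geary's ratio E|X - mu| / sigma for the generalized error distribution."""
    return gamma(2 / nu) / np.sqrt(gamma(1 / nu) * gamma(3 / nu))


def ged_sample(nu: float, n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw n values from a GED rescaled to zero mean and unit variance."""
    # Var of gennorm(nu, scale=s) is s^2 * Gamma(3/nu) / Gamma(1/nu); choose s so it equals 1.
    scale = np.sqrt(gamma(1 / nu) / gamma(3 / nu))
    return gennorm.rvs(nu, scale=scale, size=n, random_state=rng)


for nu in (0.5, 2.0, 4.0):
    print(nu, round(float(ged_beta2(nu)), 3), round(float(ged_geary(nu)), 4))
```

With \(\nu = 2\) these give the normal reference values \(\beta_2 = 3\) and \(\sqrt{2/\pi}\). With \(\nu = 0.5\), \(\beta_2 = 25.2\), which shows how heavy the tails are in the first part of Table 4.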

All the simulations considered above were based on symmetric models. To check cases where the user faces skewed data, Table 5 reports some results obtained with the standard skew-normal model [for a recent review, see Azzalini and Capitanio (2014)]. In this family, the standard normal distribution is obtained by setting the shape parameter (denoted here as \(\xi\)) to zero. Positive values of \(\xi\) make the distribution skewed to the right, increasingly so as \(\xi\) grows, while negative values make it skewed to the left. For our purposes, the direction of skewness is not relevant; thus, only two positive values (1 and 4) are considered, combined with three sample sizes (50, 250 and 1000). Table 5 shows that \({{\widehat{\beta }}}_{2n}\) is also strongly affected by skewness, unlike the other indexes considered. However, when the degree of skewness increases, \({{\widehat{\alpha }}}_{Gn}\) reveals some problems as well, performing markedly worse than for symmetric distributions with light tails. By contrast, Zenga’s indexes prove quite robust to skewness, with those using the median as a cutting point performing best (albeit with a slight tendency to over-coverage).
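The true value of \(\beta_2\) under the standard skew-normal has a closed form. The sketch below uses the standard expression for its excess kurtosis, \(2(\pi-3)\,b^4/(1-b^2)^2\) with \(b=\delta\sqrt{2/\pi}\) and \(\delta=\xi/\sqrt{1+\xi^2}\). As a cross-check it uses SciPy's `skewnorm`, assuming that its shape argument `a` coincides with \(\xi\) (density \(2\phi(x)\Phi(\xi x)\)). The function name is hypothetical.

```python
import numpy as np
from scipy.stats import skewnorm


def skewnormal_beta2(xi: float) -> float:
    """Pearson's beta2 of the standard skew-normal distribution with shape xi."""
    delta = xi / np.sqrt(1.0 + xi**2)
    b = delta * np.sqrt(2.0 / np.pi)  # mean of the standard skew-normal
    excess = 2.0 * (np.pi - 3.0) * b**4 / (1.0 - b**2) ** 2
    return 3.0 + excess


for xi in (0.0, 1.0, 4.0):
    # SciPy reports Fisher (excess) kurtosis, so add 3 for comparison.
    print(xi, skewnormal_beta2(xi), 3.0 + float(skewnorm.stats(xi, moments="k")))
```

The departure of \(\beta_2\) from 3 grows with \(\xi\) but remains moderate. The poor behaviour of \({{\widehat{\beta }}}_{2n}\) in Table 5 therefore reflects estimation difficulties rather than an extreme population value.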

Of course, skewness and heavy tails may jointly affect the data; to understand their combined effect, a final set of simulations was run with the skew-T model [see Azzalini and Capitanio (2014)]. Not surprisingly, the index that best withstands this “double” departure from normality is \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n).\) Indeed, as observed from Table 2 onward, heavy tails tend to favor indexes based on first-order moments. In addition, skewness and a general preference for robustness favor the median as a cutting point (see Tables 1 and 5, for instance). These conclusions are clearly depicted in Table 6, which considers, for the sake of brevity, only the case \(n=50\) and combines two values of the shape parameter (\(\xi = 1;4\)) with two levels of df (5 and 15). As for the T-distribution, the existence of some moments depends on the latter parameter: in our settings, however, the only index under scenario B is \(\beta _2\) with 5 df, while all other indexes are under scenario C in all situations considered in Table 6. Even after discarding cases where the application of the asymptotic theory is shaky, the performance of \({{\widehat{\beta }}}_{2n}\) turns out to be strongly affected by both skewness and heavy-tailedness. The same holds partially for \({\widehat{\alpha }}_{Gn}\) and, to a milder extent, for the \(K_1\)-indexes. Notice also that a distinguishing feature of \({{\widehat{K}}}_{2n}({{\widehat{\gamma }}}_n)\) seems to be its definite tendency to over-coverage: this can be an advantage, because it leaves room to seek shorter, thus more informative, confidence intervals. As a final comment, Table 6 depicts some very realistic situations, resulting from the combination of several departures from regularity.
Therefore, one should at least be aware that, in many circumstances, the use of some classical indexes of kurtosis (notably \(\beta _{2}\)), or of only a limited set of them, may lead to inaccurate results.

5 Conclusions

This paper has provided guidance on the use of several kurtosis measures on large datasets. The need to estimate kurtosis arises in many fields of application, basically whenever departures from normality may make classical tools unreliable and, more generally, whenever the shape of the distribution is of interest. Empirical finance is certainly one of these fields, as shown by the ubiquitous fat-tailedness of daily stock returns, the widespread use of GARCH models and the attempts made in the literature to improve the Black–Scholes formula [see Corrado and Su (1996) and Carnero et al. (2004)]. At the same time, these examples show that kurtosis is too often simply identified with the standardized fourth centered moment, whereas this paper shows that more informative measures can be considered. Admittedly, some practitioners may be reluctant to compute indexes other than the well-established \(\beta _2,\) mainly because they are unsure how to interpret the results. However, the alternative indexes are interpreted much like \(\beta _2\): it suffices to compare an observed value with its theoretical counterpart under the normal distribution (which, for the indexes considered in this paper, is given in the first row of Table 1). We also stress that computing a different kurtosis index, or a range of alternative measures, offers several advantages.

First of all, researchers should consider that, however large, a dataset is often a sample from a given population. Thus, a point estimate is of little use unless accompanied by the corresponding standard error. Our simulations reveal that, while bias is rarely a problem, all considered kurtosis measures are often characterized by high standard errors, so that the real extent of kurtosis is likely to differ from the estimated value. To ease interpretation, the user should then resort to confidence intervals, which, however, must also be reliable. In this sense, the choice of a kurtosis index should ensure the desired coverage of confidence intervals, given the available sample size and possible irregularities of the sampled distribution.

An often neglected point is that, even when the sampled population is quite regular, small sample sizes can still lead to unreliable results with some indexes. As expected, such problems arise when the indexes are based on non-robust summaries of the sample. Specifically, when n is as low as 50, our simulations show that \(\beta _2\) cannot be properly estimated even for samples from the normal distribution: problems arise both in bias and in the coverage of confidence intervals. Being based on the fourth power of deviations from the mean, \(\beta _2\) is strongly affected by sampling variability, which, first of all, results in high standard errors. The real problem, however, is that even those standard errors cannot be easily estimated because, in turn, they depend on the eighth centered moment. As a consequence, confidence intervals for \(\beta _2\) are wide, hence uninformative, and unreliable. The same problem affects other indexes, but to a lesser extent: among the considered indexes, \(\alpha _G,\) \(K_1(\mu )\) and \(K_1(\gamma )\) are defined upon moments up to the second order, so that the related standard errors need moments up to the fourth. A further improvement is offered by Zenga’s \(K_2\)-indexes, whose definition rests on first moments, while their standard errors depend only on second-order moments. In our opinion, then, when the sample size is small, researchers should always include \(K_2(\mu )\) and (especially) \(K_2(\gamma )\) in the set of computed kurtosis measures and check whether they provide evidence contrasting with that of the classical indexes.
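The dependence of the standard error of \({{\widehat{\beta }}}_{2n}\) on the eighth moment can be seen from the standard delta-method (influence-function) expansion of the sample standardized fourth moment. This textbook result is given here only as an illustration, in notation that may differ from the paper's: let \(y = x-\mu\) and \(\mu_k = E[(X-\mu)^k]\). Then

\[
\sqrt{n}\,\big({{\widehat{\beta }}}_{2n}-\beta_2\big)\xrightarrow{d} N(0,\tau^2),\qquad
\tau^2 = E\big[\mathrm{IF}(X)^2\big],\qquad
\mathrm{IF}(x)=\frac{y^4-\mu_4-4\mu_3\,y}{\mu_2^2}-\frac{2\mu_4\,(y^2-\mu_2)}{\mu_2^3},
\]

\[
\tau^2=\frac{\mu_8-\mu_4^2-8\mu_3\mu_5+16\mu_2\mu_3^2}{\mu_2^4}
-\frac{4\mu_4\,(\mu_6-\mu_2\mu_4-4\mu_3^2)}{\mu_2^5}
+\frac{4\mu_4^2\,(\mu_4-\mu_2^2)}{\mu_2^6}.
\]

Under normality (\(\mu_3=\mu_5=0\), \(\mu_4=3\mu_2^2\), \(\mu_6=15\mu_2^3\), \(\mu_8=105\mu_2^4\)) the three terms equal \(96\), \(144\) and \(72\), so that \(\tau^2 = 96-144+72 = 24\). This gives the familiar approximation \(\mathrm{Var}({{\widehat{\beta }}}_{2n})\approx 24/n\). The leading term contains \(\mu_8\), so both \(\tau^2\) and its plug-in estimate become unstable, or undefined, as soon as the tails are heavy.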

Large sample sizes, of course, make the choice of a specific index much less critical. Our simulations show that, when n is around 200, all considered indexes provide similarly reliable information. However, this holds only for situations (like the one depicted in Table 1) where the underlying distribution is quite regular, while heavy-tailed and/or skewed populations are not uncommon. The former kind of irregularity is, in our opinion, the one that deserves most attention when choosing a kurtosis index. First, heavy-tailed distributions often lack some moments, which can make the point estimate meaningless, because the related parameter does not even exist. Sometimes this problem affects only the estimation of standard errors, but it is almost as severe, because confidence intervals are then not properly defined. One possible remedy is preliminary testing for the existence of the needed moments in the underlying distribution. The literature provides both graphical and parametric methods based on tail-index estimation [Hill (1975)] and, more recently, nonparametric approaches based on the bootstrap [see Ng and Yau (2018)]. However, the process of reaching a final assessment of kurtosis could become too cumbersome, compared with starting directly from indexes that are intrinsically robust to the nonexistence of moments, like \(K_2(\mu )\) and \(K_2(\gamma ).\) In addition, our simulations (see Table 4) show that heavy-tailedness itself may undermine the performance of some indexes, beyond the problem of the existence of moments, so that only robust indexes are truly a safe choice. The researcher must be aware, however, that even these indexes cannot always cope with very small sample sizes, as evidenced in Tables 2 and 4.
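As an example of the preliminary checks mentioned above, here is a minimal sketch of Hill's (1975) tail-index estimator applied to absolute values. The number \(k\) of upper order statistics is a user choice, and the estimate is known to be sensitive to it. The rule "moments of order \(r\) are finite when \(r\) is below the tail index" holds for Pareto-type tails. This is not the bootstrap test of Ng and Yau (2018), and the function name is hypothetical.

```python
import numpy as np


def hill_tail_index(x: np.ndarray, k: int) -> float:
    """Hill estimate of the tail index alpha, using the k largest absolute values."""
    z = np.sort(np.abs(np.asarray(x, dtype=float)))[::-1]  # descending order
    if not 0 < k < z.size:
        raise ValueError("k must satisfy 0 < k < len(x)")
    # Mean log-excess of the k largest values over the (k+1)-th largest.
    h = np.mean(np.log(z[:k])) - np.log(z[k])
    return 1.0 / h


rng = np.random.default_rng(42)
x = rng.standard_t(df=3, size=100_000)
alpha_hat = hill_tail_index(x, k=1_000)
print(f"estimated tail index: {alpha_hat:.2f}")
for r in (1, 2, 4, 8):
    print(r, "finite (estimated)" if r < alpha_hat else "possibly infinite")
```

Such a check only suggests which moments exist, and it adds one more tuning choice (\(k\)). This is the kind of extra burden that indexes such as \(K_2(\mu)\) and \(K_2(\gamma)\) help avoid.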

Overall, \(K_2(\mu )\) and \(K_2(\gamma )\) react quite similarly to heavy-tailedness. A distinction between them emerges in the case of skewness, where the use of the median as a cutting point gives \(K_2(\gamma )\) an edge over \(K_2(\mu )\) (see Table 5). Nonetheless, under mild skewness, what really matters is a large sample size: excluding \(\beta _2,\) no relevant differences emerge beyond 200 observations, as in the case of a fully regular distribution. Things change, however, when skewness is combined with heavy tails: our simulations show that, in those cases, the only reliable choice is \(K_2(\gamma ),\) even if n is as high as 200.

As a final remark, we underline that the conclusions above may also be useful, beyond the estimation of kurtosis itself, when researchers need to compare the levels of kurtosis of two populations or of the two sides of a distribution. Indeed, suitable testing procedures can be built upon the asymptotic distributions derived in this paper, so it is important to know how sensitive they are to the sample size and to the characteristics of the sampled population. This will be the subject of future research.

Among other research directions, the widespread need to estimate kurtosis noted above leaves, in our opinion, a relevant problem unexplored: the dependence of data across time. This feature is frequently encountered, for instance, in financial applications based on the analysis of returns. Beyond any specific framework, however, we think that the search for reliable inferential tools for kurtosis under serial dependence is an urgent problem deserving attention in the near future. That issue could also be the starting point for further generalizations of the presented methodology. The literature, for instance, shows a need to combine the study of kurtosis with the measurement of dependence among variables, leading to the so-called co-kurtosis [see Martellini and Ziemann (2010)]. Again, we think that, in the multivariate framework as well, efforts could be made to go well beyond the usual identification of kurtosis with the fourth centered moment, so that it can be measured through other approaches.

Change history

23 July 2022

Missing Open Access funding information has been added in the Funding Note.

References

Azzalini, A., Capitanio, A.: The Skew-normal and related families. Cambridge University Press, Cambridge (2014)

Balanda, K.P., MacGillivray, H.L.: Kurtosis: a critical review. Am Stat 42, 111–119 (1988)

Balanda, K.P., MacGillivray, H.L.: Kurtosis and spread. Can J Stat 18, 17–30 (1990)

Blest, D.C.: A new measure of kurtosis adjusted for skewness. Aust N Z J Stat 45, 175–179 (2003)

Carnero, M.A., Peña, D., Ruiz, E.: Persistence and kurtosis in GARCH and stochastic volatility models. J Financ Econom 2, 319–342 (2004)

Corrado, C., Su, T.: Skewness and kurtosis in S&P 500 index returns implied by option prices. J Financ Res 19, 175–192 (1996)

Darlington, R.B.: Is kurtosis really “peakedness?” Am Stat 24, 19–22 (1970)

De Capitani, L., Pasquazzi, L.: Inference for performance measures for financial assets. Metron 73, 73–98 (2015)

De Capitani, L., Polisicchio, M.: Some remarks on Zenga’s approach to Kurtosis. Statistica Applicazioni 14, 159–195 (2016)

Domma, F.: Kurtosis diagram for the Log-Dagum distribution. Statistica Applicazioni 2, 3–23 (2004)

Finucan, H.M.: A note on kurtosis. J R Stat Soc 26, 111–112 (1964)

Fiori, A.M.: Kurtosis measures: tradition, contradictions, alternatives. Statistica Applicazioni 5, 185–203 (2007)

Fiori, A.M.: Measuring kurtosis by right and left inequality orders. Commun Stat 37, 2665–2680 (2008)

Fiori, A.M., Zenga, M.M.: Karl Pearson and the origin of kurtosis. Int Stat Rev 77, 40–50 (2009)

Gastwirth, J.: Large sample theory of some measures of income inequality. Econometrica 42, 191–196 (1974)

Geary, R.C.: Moments of the ratio of the mean deviation to the standard deviation for normal samples. Biometrika 28, 295–307 (1936)

Hill, B.M.: A simple general approach to inference about the tail of a distribution. Ann Stat 3, 1163–1174 (1975)

Hoeffding, W.: A class of statistics with asymptotically normal distribution. Ann Math Stat 19, 293–325 (1948)

Joanes, D.N., Gill, C.A.: Comparing measures of sample skewness and kurtosis. J R Stat Soc 47, 183–189 (1998)

Jones, M.C., Rosco, J.F., Pewsey, A.: Skewness-Invariant measures of kurtosis. Am Stat 65, 89–95 (2011)

Kaplansky, I.: A common error concerning kurtosis. J Am Stat Assoc 40, 259 (1945)

Martellini, L., Ziemann, V.: Improved estimates of higher-order comoments and implications for portfolio selection. Rev Financ Stud 23, 1467–1502 (2010)

Ng, W.L., Yau, C.Y.: Test for the existence of finite moments via bootstrap. J Nonparametr Stat 30, 28–48 (2018)

Pearson, K.: Contributions to the mathematical theory of evolution. Philos Trans R Soc Lond A 185, 71–114 (1894)

Pearson, K.: Skew variation, a rejoinder. Biometrika 4, 169–212 (1905)

Serfling, R.J.: Approximation theorems of mathematical statistics. Wiley, New York (1980)

Subbotin, M.T.: On the law of frequency of error. Mat Sb 31, 296–301 (1923)

Zenga, M.: La curtosi. Statistica 56, 87–102 (1996)

Zenga, M.: Kurtosis. In: Kotz, S., Read, C.B., Balakrishnan, N., Vidakovic, B. (eds.) Encyclopedia of statistical sciences (second edition). Wiley, New York (2006)

Zenga, M., Polisicchio, M., Greselin, F.: The variance of the Gini’s mean difference and its estimators. Statistica 64, 455–475 (2007)

Funding

Open access funding provided by Università degli Studi di Milano - Bicocca within the CRUI-CARE Agreement.


Appendix


1.1 Proof of Lemma 2

First, recall that, under the assumptions of the lemma, \({\widehat{F}}_n({{\widehat{z}}}_n)-{{\widehat{F}}}_n(z)=o_P(1)\) and, consequently, \({{\widehat{F}}}_n({{\widehat{z}}}_n)=F(z)+o_P(1)\) [see De Capitani and Pasquazzi (2015)]. Now, simplify notation in \({\widehat{I}}_{3n}\) and decompose it as:

Assuming that \({\widehat{z}}_n\le z\), it is easily seen that

Moreover, note that

Finally, since

one has

Now, expressions (18) to (21) allow us to write, at least when \({\widehat{z}}_n\le z\),

Similar steps can be followed under the assumption \({\widehat{z}}_n > z,\) which shows that the statement above holds in general. The proof for \({\widehat{I}}_{4n}\) is obtained analogously.

1.2 Proof of Lemma 3

As in the proof of Lemma 2, notice first that

with \(l=1; 2\). Now decompose \({\widehat{I}}_{5n}\) as

The asymptotic representation for \({\widehat{I}}_{6n}\) can be obtained similarly.

1.3 Proof of Theorem 3

Clearly, \({{\varvec{\varSigma }}}\) is the asymptotic variance matrix of the vector

whose asymptotic normality follows from the linearizations provided in Section 3.1, the central limit theorem and the Cramér–Wold device. Then, the result about \({\widehat{K}}_{2n}({{\widehat{\gamma }}}_n)\) is an application of the delta method. To detail the derivation of the elements of \({{\varvec{\varSigma }}},\) consider first the following expectations:

Similarly, \(\int \int H^+(x,y,\gamma )\, \mathbbm {1}(x>\gamma )\, {\mathrm{d}}F(y)\, {\mathrm{d}}F(x)=\varDelta ^+-\frac{\delta ^+}{2}\). In addition,

and \(\int \int H^+(x,y,\gamma )\, \mathbbm {1}(x\le \gamma )\, {\mathrm{d}}F(y)\, {\mathrm{d}}F(x)=\frac{\delta ^+}{2}.\) Moreover,

and analogously \(\int \int H^+(x,y,\gamma ) \, (\gamma -x)\, \mathbbm {1}(x\le \gamma )\, {\mathrm{d}}F(y)\, {\mathrm{d}}F(x)= \delta ^+\delta ^-.\) Now notice that

and that, equivalently,

Thus, a last relevant expectation can be computed as:

The elements of \({{\varvec{\varSigma }}}\) can now be obtained:

(and similarly for \(\varSigma _{22}\)).

(and similarly for \(\varSigma _{44}\)).

(and similarly for \(\varSigma _{24}\)).

(and similarly for \(\varSigma _{23}\)). Finally,

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Borroni, C.G., De Capitani, L. Some measures of kurtosis and their inference on large datasets. AStA Adv Stat Anal 106, 573–607 (2022). https://doi.org/10.1007/s10182-022-00442-y
