Abstract

Smart healthcare monitoring systems are proliferating thanks to portable medical devices enabled by the Internet of Things (IoT). Together, IoT and deep learning help prevent disease by moving healthcare from face-to-face consultation toward telemedicine. Real-time monitoring of physiological indicators is critical for protecting athletes from life-threatening conditions and injuries during training and competition. In this research work, we present a deep learning-based, IoT-enabled real-time health monitoring system. The proposed system uses wearable medical devices to measure vital signs and applies various deep learning algorithms to extract valuable information. We take Sanda athletes as our case study. The deep learning algorithms help physicians analyze these athletes’ conditions and prescribe appropriate medication, even when the physicians are away. The performance of the proposed system is evaluated using cross-validation and several statistical performance metrics, namely precision, recall, AUC, and F1. The proposed system is considered an effective tool for diagnosing serious diseases among athletes, such as brain tumors, heart disease, and cancer.

1 Introduction

Health is one of the essential elements of human life. It is commonly understood as the absence of illness together with good physical and mental condition. In every society, health and healthcare systems are receiving more attention and adopting technology. Recently, COVID-19 has severely affected the economy of every country, which has accelerated the adoption of smart healthcare systems. In smart healthcare systems, persons are monitored remotely to stop the spread of diseases and to provide quick and cost-effective treatment. The integration of IoT-enabled healthcare systems and machine learning is considered an ideal solution in this context [1]. IoT and machine learning-based solutions are efficient because of advances in sensing, processing, spectrum utilization, and machine intelligence. These solutions are possible because advances in microelectronics have provided tiny and cheap medical sensing devices, which have revolutionized medical services [2]. As a result, healthcare systems classify these solutions as symptomatic treatment and preventive treatment. Nowadays, people pay significant attention to the prevention and early detection of diseases and to the best medication for various chronic diseases. Therefore, the development of national healthcare monitoring systems has become an inevitable trend.

Recently, the use of IoT devices and machine learning algorithms to monitor persons remotely for proper diagnosis has gained significant attention in telemedicine. Energy-efficient, cost-effective, and scalable real-time healthcare systems are in high demand for managing persons’ vital signs [3]. However, the use of traditional wireless communication technology for healthcare systems suffers from radiation and higher cost. On the other hand, real-time health monitoring systems do not suffer from radiation, have flexible communication modes, and are suitable for monitoring in various environments. For example, at home, data communication and transmission can be realized by connecting to the local router. However, when persons are outdoors, the portable healthcare devices are connected through a wireless medium to realize data communication and transmission. In this context, machine learning algorithms are used to make appropriate decisions whether the persons are indoors or outdoors.

Machine learning and deep learning algorithms are now commonly used in IoT-enabled healthcare systems [4]. These algorithms are also widely employed in sports to track the health of athletes through medical image analysis and interpretation, injury prediction, and diagnosis. In sports, connected footwear and clothing, i.e., shirts and similar items integrated with sensors, intelligently track an athlete’s pace and footwork and measure respiration rate, heart rate, and muscle usage [5]. These embedded sensors benefit athletes’ health by helping ensure that they follow a balanced exercise regime. However, these IoT devices produce extensive unstructured telemedicine data that contain strong correlations and outliers. Therefore, machine learning and deep learning techniques must be used to identify valuable features in the raw telemedicine data and to reduce redundancy in athlete data. Storing and processing duplicated, lossy, and noisy data in remote cloud data centers wastes resources and can result in catastrophic health-related events [6]. Machine learning and deep learning algorithms are therefore important because they eliminate outliers and redundancies. They help ensure that only highly processed telemedicine information reaches the management information system used for making vital health decisions for athletes, and that valuable features are retrieved from vast amounts of raw data to support delay-sensitive and time-critical applications in sports informatics [7]. Unlike conventional machine learning, deep learning employs several hidden layers, each with many nodes, to provide extensive coverage and high-level feature extraction from raw telemedicine data. The heterogeneous data generated in sports informatics are massive and need standard techniques to evaluate them and extract valuable information. The deep neural network can automatically generate relevant features from a given unstructured dataset using standard learning processes, without human feature engineering or intervention.

This paper proposes an IoT-enabled real-time smart healthcare system for athletes that uses deep learning algorithms. The proposed system uses portable medical devices to capture different signals from the human body and applies various deep learning algorithms to extract valuable information. We take Sanda athletes as our case study, and our results are based on the health status of these athletes. The proposed system captures various vital signs of Sanda athletes and transmits them remotely for further analysis. Physicians use the extracted information to diagnose diseases, analyze the athletes’ conditions, and suggest proper medication. The performance of the proposed system is evaluated, and the system is believed to be an effective tool for healthcare monitoring. The major contributions of this research are as follows:

- We present a real-time health monitoring system that uses portable medical equipment attached to the subject’s body (a Sanda athlete in this study) to capture data.
- Embedded wearable devices provide real-time monitoring through a wireless network that can communicate directly with the server.
- Deep learning models are executed at the local servers for real-time optimization using standard learning processes. They perform accurate prediction during real-time transmission, storage, monitoring, and analysis of athletes’ data.

The remainder of this paper is structured as follows. Section 2 describes the system design of the proposed model, followed by the design of deep neural network architecture in Sect. 3. Section 4 presents experimental results and discussion. Finally, Sect. 5 puts forward the concluding remarks and further research.

2 System design

The proposed real-time health monitoring system aims to monitor the health of Sanda athletes using IoT-enabled wearable devices. The IoT-enabled sensing devices are attached to the athlete’s body, where they regularly collect data and transmit it to the server through a relay network. In this way, the athletes are continuously monitored and analyzed. This arrangement is shown in Fig. 1, where the proposed system is divided into three parts: wearable devices and monitoring equipment, the wireless communication relay network, and the health assessment and monitoring server. In the following subsections, we discuss these parts in detail.

Fig. 1 The architecture of the real-time health monitoring system [3]

2.1 Wearable devices and monitoring equipment

The IoT-enabled sensing devices sense and collect different types of data from the athlete’s body, such as blood pressure, pulse, blood glucose, body temperature, heartbeat, and dynamic fall monitoring. At present, sensing devices and deep learning algorithms are used to measure various physical signs. The sensed data are used to monitor the health status of athletes for chronic diseases and are regularly transmitted to the health assessment and monitoring server. Physicians can trace the data in real time and generate early warnings for abnormal conditions, enabling early detection and early prevention [4]. Considering the features of the physical signals and the required measurement accuracy, we use wearable devices to transmit vital-sign data in a timely manner. There are two types of sensor modules: the chest band sensor module and the wrist band sensor module. Both are developed as plug-ins, which allows different sensors to be read simultaneously. These sensors include ECG sensors, triple-axis accelerometers, and oxygen saturation sensors. The universal wireless sensor frame module communicates with operators or wireless communication networks and transmits the data to them, as shown in Fig. 2.

Fig. 2 Block diagram of the wireless general sensor system, adapted from [8]

The chest strap sensor module and the wrist strap sensor module communicate with the universal wireless frame module and send measurement data regularly. The device uses a standard interface for the sensor modules, so different sensor modules can be integrated according to each person’s needs to detect different physical data. These devices support multiple sensing modules on an ad-hoc basis and form a Body Area Network (BAN) with the universal wireless frame module [8, 9].

2.2 Wireless communication relay network

Because radiation can affect the human body, relay-enabled communication is considered suitable: it uses short-range links that do not require high transmission power. An efficient IoT-enabled wireless sensor transmission design is therefore essential. In this paper, we design a low-power wireless relay network and optimize the sensor operating system using TinyOS [9]. In TinyOS, data fragmentation and retransmission are used to make data transmission effective and to keep performance high. All wearable sensing and monitoring devices actively transmit data to the relay network, which effectively reduces each device’s power consumption. Furthermore, the transmission protocol uses a quickly initialized time-window technique, which significantly improves transmission efficiency and reduces equipment power consumption. The processing flow of the optimized sensor device operating system is shown in Fig. 3.

Fig. 3 TinyOS component diagram, adapted from [9]

2.3 Real-time health monitoring system

The real-time medical health monitoring system can continuously monitor multiple persons. It can automatically generate daily, weekly, monthly, quarterly, and annual health status reports from persons’ records. It combines the knowledge of multiple professionals and medical experts to monitor persons and to provide them with early diagnoses and warnings of health conditions. The system includes health status online monitoring, health status trend analysis, early warning of health status, health status reports, health maintenance advice, personalized health monitoring, emergency monitoring, and other functional modules.

3 Design of deep neural network architecture

In this section, we introduce the prototype and design of the proposed model. The architecture of the proposed model is shown in Fig. 4. The figure contains several components, which are discussed in detail as follows:

3.1 DNN model

A deep neural network (DNN) is a class of machine learning model in artificial intelligence inspired by the working mechanism and activities of the human brain [1, 3]. The network topology of a DNN model consists of an input layer, an output layer, and multiple hidden layers, as shown in Fig. 5. The hidden layers are essential elements of the DNN model and are actively engaged in learning [5, 8, 9]. Using more hidden layers in model training may increase model efficiency; however, it may also increase computational cost, model complexity, and the risk of overfitting [11, 12]. The DNN model can be expressed mathematically as in Eq. (1).

Here \(y_{a}\) represents the output at layer \(a\), \(B\) represents the bias value, and \(w_{b}^{a}\) represents the weight used at layer \(a\) by neuron \(b\). \(x_{b}^{a}\) represents the input feature, and \(f\) represents the nonlinear Tanh activation function, which is calculated using Eq. (2).
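For reference, the two relations described above take the following standard form when written with the symbols already defined (\(y_{a}\), \(B\), \(w_{b}^{a}\), \(x_{b}^{a}\), \(f\)). This is a sketch based on the standard definitions of a fully connected layer and the hyperbolic tangent; the summation limit \(n\) (the number of inputs feeding the neuron) is an assumed symbol that does not appear in the text.

```latex
\begin{align}
y_{a} &= f\left( B + \sum_{b=1}^{n} w_{b}^{a}\, x_{b}^{a} \right) \tag{1} \\
f(x) &= \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \tag{2}
\end{align}
```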

We choose the DNN model over conventional machine learning algorithms for several reasons. Firstly, conventional learning algorithms use a single processing layer, which is insufficient for complex datasets with high nonlinearity. Secondly, conventional machine learning algorithms require human expertise or feature engineering to extract optimal features for successful prediction.
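The layer computation of Sect. 3.1 (a weighted sum plus a bias, passed through Tanh, repeated over stacked layers) can be illustrated with a minimal forward pass. This is only a sketch: the function names, the toy layer sizes, the weights, and the input values are all hypothetical and are not taken from the proposed system.

```python
import math


def dense_tanh(inputs, weights, bias):
    """One layer: each neuron outputs tanh(bias + sum of weight * input)."""
    return [
        math.tanh(b + sum(w * x for w, x in zip(row, inputs)))
        for row, b in zip(weights, bias)
    ]


def forward(inputs, layers):
    """Pass a feature vector through a list of (weights, bias) layers."""
    activations = inputs
    for weights, bias in layers:
        activations = dense_tanh(activations, weights, bias)
    return activations


# Hypothetical toy network: 3 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
vitals = [0.2, -0.4, 0.9]  # made-up, normalized sensor readings
output = forward(vitals, layers)
print(output)
```

Because every layer ends in Tanh, each output lies strictly between -1 and 1; a real classifier would typically replace the last activation with a sigmoid or softmax.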

3.2 Data noise reduction algorithm

Principal Component Analysis (PCA) [13] is one of the most widely used methods for data dimensionality reduction and data de-noising. PCA can be regarded as a mapping between two spaces, from the original space in which the data are located to a new space. The mapping is required to preserve the core information of the original data in the new space while removing redundant components. Its core task is to find a set of orthogonal transformation bases in the original space and to construct a new coordinate system in the new space, using the variance of the original data as a reference [14, 15]. Data dimensionality reduction is achieved by mapping N-dimensional features to k-dimensional features. The discarded dimensions in the new space can be regarded as noise space.

Let the data set be \(X = \{ X^{1} ,X^{2} ,...,X^{N} \}\), where each sample has N-dimensional features \(X_{i} = \{ x_{i}^{1} ,x_{i}^{2} ,...,x_{i}^{N} \}\).

First step: Calculate the mean value of the ith feature and subtract it from that feature in every sample to obtain a new, mean-centered sample \(\overline{x}_{i}\).

Second step: Compute the covariances of the centered features \(\overline{x}_{i}\) to obtain the covariance matrix C, where the entry \(\mathrm{cov}(x_{1}, x_{2})\) is the covariance of features \(x_{1}\) and \(x_{2}\).

Third step: Find the eigenvalues λ and the eigenvectors \(u = (u_{1}, u_{2}, \ldots, u_{N})\) of the matrix C. Then take the first k eigenvectors as the basis and project the original features onto them to obtain the dimensionality-reduced data Y.

Final step: The k eigenvectors retained are those with the largest eigenvalues.
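The steps above can be sketched in a few lines of dependency-free code. Two choices here are assumptions rather than details from the text: the covariance uses the 1/(n - 1) estimator, and the leading eigenvectors are found by power iteration with deflation instead of a full eigendecomposition, which keeps the sketch free of external libraries. All function names and the sample data are hypothetical.

```python
import math
import random


def mean_center(samples):
    """First step: subtract each feature's mean from every sample."""
    n, dims = len(samples), len(samples[0])
    means = [sum(row[j] for row in samples) / n for j in range(dims)]
    return [[row[j] - means[j] for j in range(dims)] for row in samples]


def covariance_matrix(centered):
    """Second step: C[i][j] = cov(x_i, x_j), using the 1/(n - 1) estimator."""
    n, dims = len(centered), len(centered[0])
    return [
        [sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(dims)]
        for i in range(dims)
    ]


def leading_eigenpairs(matrix, k, iterations=500, seed=0):
    """Third step: top-k (eigenvalue, eigenvector) pairs by power iteration."""
    rng = random.Random(seed)
    dims = len(matrix)
    work = [row[:] for row in matrix]
    pairs = []
    for _ in range(k):
        vec = [rng.gauss(0.0, 1.0) for _ in range(dims)]
        norm = math.sqrt(sum(v * v for v in vec))
        vec = [v / norm for v in vec]
        for _ in range(iterations):
            nxt = [sum(work[i][j] * vec[j] for j in range(dims)) for i in range(dims)]
            norm = math.sqrt(sum(v * v for v in nxt))
            if norm < 1e-15:  # nothing left to extract
                break
            vec = [v / norm for v in nxt]
        value = sum(
            vec[i] * work[i][j] * vec[j] for i in range(dims) for j in range(dims)
        )
        pairs.append((value, vec))
        # Deflation: remove the component just found before the next pass.
        for i in range(dims):
            for j in range(dims):
                work[i][j] -= value * vec[i] * vec[j]
    return pairs


def pca(samples, k):
    """Project mean-centered samples onto the k leading eigenvectors."""
    centered = mean_center(samples)
    pairs = leading_eigenpairs(covariance_matrix(centered), k)
    basis = [vec for _, vec in pairs]
    projected = [
        [sum(r * b for r, b in zip(row, vec)) for vec in basis] for row in centered
    ]
    return projected, [value for value, _ in pairs]


# Made-up 2-D readings that lie almost on a straight line.
samples = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
projected, eigenvalues = pca(samples, 1)
print(projected, eigenvalues)
```

For data like these, almost all of the variance falls on the first component, so the discarded second dimension plays the role of the noise space described in the text.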

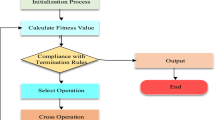

3.3 Model optimization algorithm

The Gradient Descent (GD) algorithm [16] is widely applied in linear and logistic regression. The GD algorithm searches for the minimum value using step-by-step iteration. Each iteration moves in the direction of the negative gradient, that is, the direction of steepest descent, and so approaches the minimum value quickly. The gradient descent algorithm can be mathematically expressed as \(\theta = \theta - \eta \Delta J(\theta )\),

where \(\theta\) and \(\eta\) represent the model parameters and the learning rate, respectively. The functions \(J(\theta )\) and \(\Delta J(\theta )\) represent the loss function and the gradient of the loss function, respectively. In the stochastic gradient descent (SGD) method, the parameters are updated each time one sample pair is used, which greatly accelerates the update rate of the model. The SGD update has the same form as the gradient descent update, except that the gradient is evaluated on a single sample pair rather than on the whole training set.

Moreover, in the gradient descent method, the setting of the learning rate is particularly critical. If the learning rate is too large, the model oscillates repeatedly and does not reach the optimal solution. If the learning rate is too low, the model converges slowly and needs more iterations. Ideally, a parameter with a larger gradient is paired with a lower learning rate [17, 18]. Adagrad is a gradient-based optimization algorithm built on this idea: it adapts the learning rate dynamically, changing it according to the sum of squares of the previous gradients. The learning rate and solution formula are shown in Eqs. (9) and (10).

Here \(g_{i,t}\) is the sum of squared gradients of the ith parameter over the previous t iterations, \(\Delta J(\theta_{i,t} )\) is the gradient of the ith parameter in the tth iteration, and \(\varepsilon\) is a small constant that prevents the denominator from being zero. The learning rate therefore changes with the gradient. However, the factor \(\frac{\eta }{{\sqrt {g_{i,t} + \varepsilon } }}\) decreases continuously as the denominator grows, so the learning rate approaches zero in the later stages of the iteration. To counter this late-stage fatigue of Adagrad [19, 20], AdaDelta adds an attenuation coefficient w that weakens the influence of gradients from the distant past on the current training step. AdaDelta replaces the running sum in the \(g_{i,t}\) formula with a decaying average of the squared gradients, weighted by w.
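Written out with the symbols used in this section, the Adagrad quantities take the following standard form. This is a sketch based on the standard definition of Adagrad rather than a copy of the typeset equations; the index \(\tau\) and the assignment of the numbers (9) and (10) to these two lines are assumptions. The unnumbered last line is the decaying accumulator that the attenuation coefficient w implies.

```latex
\begin{align}
\theta_{i,t+1} &= \theta_{i,t} - \frac{\eta}{\sqrt{g_{i,t} + \varepsilon}} \, \Delta J(\theta_{i,t}) \tag{9} \\
g_{i,t} &= \sum_{\tau = 1}^{t} \left( \Delta J(\theta_{i,\tau}) \right)^{2} \tag{10} \\
g_{i,t} &= w \, g_{i,t-1} + (1 - w) \left( \Delta J(\theta_{i,t}) \right)^{2} \notag
\end{align}
```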

\(V(t)\) is the parameter momentum of the tth iteration, and \(\rho_{1}\) and \(\rho_{2}\) are constant parameters. In such a training process, parameter updates are more independent, which improves the training speed while also enhancing training stability. Adam and NADAM are also widely used because they combine the advantages of the above algorithms. The proposed model employs the Adam and NADAM updaters; however, this negatively affects the overall model efficiency and performance.
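The effect of the learning rate and of the adaptive rules discussed in this section can be seen on a one-dimensional toy loss. The sketch below is illustrative only: the loss \(J(\theta) = (\theta - 3)^2\), the function names, and the hyperparameter values are assumptions, and `adagrad_with_decay` implements just the decaying accumulator with coefficient w, not the full AdaDelta rule.

```python
import math


def grad(theta):
    """Gradient of the toy loss J(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)


def gradient_descent(theta, lr, steps):
    """Plain update: theta = theta - lr * gradient."""
    for _ in range(steps):
        theta -= lr * grad(theta)
    return theta


def adagrad(theta, lr, steps, eps=1e-8):
    """Scale the step by the root of the running sum of squared gradients."""
    g_sum = 0.0
    for _ in range(steps):
        g = grad(theta)
        g_sum += g * g
        theta -= lr / math.sqrt(g_sum + eps) * g
    return theta


def adagrad_with_decay(theta, lr, steps, w=0.9, eps=1e-8):
    """Replace the running sum with a decaying average (coefficient w)."""
    g_avg = 0.0
    for _ in range(steps):
        g = grad(theta)
        g_avg = w * g_avg + (1.0 - w) * g * g
        theta -= lr / math.sqrt(g_avg + eps) * g
    return theta


print(gradient_descent(0.0, 0.1, 100))  # small rate: settles near 3
print(gradient_descent(0.0, 1.0, 11))   # rate too large: bounces between 0 and 6
print(adagrad(0.0, 1.0, 200))
print(adagrad_with_decay(0.0, 0.01, 2000))
```

With a learning rate of 1.0, plain gradient descent on this loss never converges and simply alternates between two points, which is the oscillation described above; Adagrad started with the same rate shrinks its own step and settles at the minimum.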

4 Experimental results

The experiment involves crowd sampling and real-time monitoring with wearable sensor monitoring devices. The equipment is shown in Fig. 6, with various vital signs that can be measured and monitored simultaneously in the experiment.

4.1 Data measurement

The blood oxygen saturation data depend on how strongly the two light sources of the wristband sensor are absorbed. The saturation is calculated from the ratio of red light to infrared light at the hardware surface and is measured through the wristband sensing device. The photoplethysmographic pulse wave signal (PPG), the blood oxygen saturation value (SpO2), and the heart rate data are collected with a data packet size of 5 bytes per second.

4.2 Measurement data transmission

First, the wearable sensor device node finds the nearest wireless relay sensor node and sends it the collected measurement data. The relay sensor node, which works in a specific area, then identifies the shortest path and relays the measurement data to the base station. The wireless relay sensor device node consists of a microcontroller, an RF transceiver chip, and a battery. It transmits the measured ECG, acceleration, and blood oxygen saturation data to the connection base station of the wireless server of the real-time medical health monitoring system. Figure 7 shows the optimized component structure after TinyOS adopts the component programming model, including components such as “blood oxygen saturation” and “ECGC”. The components in the red circle are specially modified to optimize wireless relay sensor network transmission and improve transmission efficiency. This routing method provides high-efficiency transmission and addresses energy consumption and configuration stability across the entire network.

4.3 Explanation of test results

Based on preliminary performance test results using chest strap and wrist strap sensing devices, the system can effectively monitor a person’s condition through a wireless relay communication network. All measurement data from wearable sensing devices can be effectively transmitted to the real-time medical health monitoring system by deploying low-powered wireless relay nodes. Compared with a single network topology or a pipelined routing network, wireless relay nodes can automatically identify the shortest path, thereby providing better communication performance. Figure 8 shows the packet structure transmitted by the wearable sensor device. This data packet includes all measurement values: ECG, acceleration, and blood oxygen saturation.
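The text does not say which algorithm the relay nodes use to identify the shortest path. As one possible illustration, the sketch below applies Dijkstra's algorithm to a small relay graph; the choice of algorithm, the node names, and the link costs are all assumptions made for the example.

```python
import heapq


def shortest_path(links, source, target):
    """Dijkstra's algorithm over a dict {node: {neighbor: link_cost}}."""
    best = {source: 0.0}
    previous = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, weight in links.get(node, {}).items():
            candidate = cost + weight
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    if target not in best:
        return None, float("inf")
    # Walk back from the target to rebuild the route.
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], best[target]


# Hypothetical topology: one wearable node, two relays, one base station.
links = {
    "wrist_band": {"relay_a": 2.0, "relay_b": 5.0},
    "relay_a": {"relay_b": 1.0, "base_station": 6.0},
    "relay_b": {"base_station": 2.0},
}
path, cost = shortest_path(links, "wrist_band", "base_station")
print(path, cost)
```

In this toy topology the cheapest route passes through both relays (total cost 5.0) rather than taking either direct hop, which illustrates why a relay network can outperform a fixed pipelined route.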

The real-time medical health monitoring system records various events and data, allowing persons to go back and review them frequently at home. The optimized database storage structure lets each person manage and observe the measured values recorded by the sensors. Measurement values such as ECG, acceleration, and blood oxygen saturation can be automatically saved and archived on the real-time medical monitoring system server. Figure 9 shows the trend of physical signs in the real-time medical health monitoring system. Even if the person is unconscious, the system can perform accurate identification and recording. In addition, wearable devices monitor the person’s physical sign data in daily life so that the data can be systematically provided to the doctor for diagnosis.

4.4 Simulation test results of deep learning

In this section, we present our results by examining the characteristics of the data and the disorders. Health monitoring systems now use real-time analytics to gain valuable insights and shorten reaction times with faster and more accurate results. However, unreliable network access and an unpredictable supply of data from remote sensors can slow these systems down. To address this, we first collect large volumes of data from appropriate sensors. Secondly, we store the data in a suitable format and frame structure. Thirdly, we apply the deep learning algorithm to classify and identify the diseases from which a person suffers. Finally, we compare the results of the DNN architectures and selection mechanisms.

4.4.1 Characteristics of the data

In all, the text data used in our study comprise 3,214 samples. Each sample is the discharge report of one hospital admission. Table 1 summarizes the data. Specifically, we study persons with nine different diseases and the samples available for each. The characteristics also indicate the number of samples labeled with multiple phenotypes, which arise because a person may suffer from more than one disease. Wearable devices are employed to monitor athletes’ actual health data every second in real time during training and competitions to improve their performance. Figures 10 and 11 show athletes’ blood pressure and heart rate per second. These figures show that the proposed system can monitor athletes’ health from data gathered in real time.

4.4.2 Mean and deviation

For word-level input, we can calculate the mean and standard deviation over the persons’ samples. After preparing the word-level and sentence-level data, the total sample length has a mean of 8.73 and a standard deviation of 35.95. The average number of characters per sample is 3.80, and the average number of words per sample is 3.40, as shown in Table 2. The shortest sample, i.e., the one with the fewest characters, has a mean of 0.50 and a standard deviation of 0.30.

4.4.3 Balancing classes for training

We used class-weight balancing to counter class imbalance during DNN training. That is, we pass class weights to the loss function so that errors on under-represented classes become more expensive during learning. To avoid overfitting during model training, we employed regularization methods, namely L1 and L2 regularization and dropout. In particular, the cross-entropy loss function [21, 22] is scaled by a weight vector with one component per class. This helps the network handle the minority class better and reduces false predictions. We found significant differences in the results when applying this methodology. The results for two phenotypes, A and B, are the most affected by this balancing. Therefore, we used weight balancing when training the models for our analyses. The effect of some parameters, i.e., learning rate and activation functions, on accuracy is summarized in Table 3 (the performance of different phenotypes). We have optimized the parameter set of all the learning algorithms for the accuracy-based analysis.
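A class-weighted cross-entropy of the kind described above can be sketched as follows. The inverse-frequency weighting rule (total samples divided by the number of classes times the class count) is an assumption, since the text does not state how the weight vector is computed; the function names and the toy labels are likewise hypothetical.

```python
import math


def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return {c: len(labels) / (len(counts) * n) for c, n in counts.items()}


def weighted_cross_entropy(probabilities, labels, weights):
    """Batch mean of -weight[y] * log p(y), with p clipped away from zero."""
    total = 0.0
    for probs, label in zip(probabilities, labels):
        total += -weights[label] * math.log(max(probs[label], 1e-12))
    return total / len(labels)


# Toy imbalanced label set: three samples of class 0, one of class 1.
labels = [0, 0, 0, 1]
weights = class_weights(labels)

# The same confident mistake costs more on the minority class.
minority_error = weighted_cross_entropy([[0.9, 0.1]], [1], weights)
majority_error = weighted_cross_entropy([[0.1, 0.9]], [0], weights)
print(weights, minority_error, majority_error)
```

With three majority samples for every minority sample, the minority class receives three times the weight, so an identical misclassification is penalized three times as heavily.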

We evaluated the deep neural network model in terms of AUC (area under the ROC curve). The AUC is generally used to assess binary classification performance and takes values between 0 and 1. A classifier with a greater AUC value performs better than one with a smaller value [22]. Figure 12 shows the output of the deep model for both pulse and blood sugar (A & B) using various sequence formulation techniques. From these statistics, we conclude that the deep model performs better with these features than the other formulation models.
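For a binary classifier, the AUC equals the fraction of (positive, negative) sample pairs in which the positive sample receives the higher score, with ties counted as one half. The sketch below computes it directly from that definition; the function name and the example scores are hypothetical and are not results from this study.

```python
def roc_auc(labels, scores):
    """AUC as the share of positive/negative pairs that are ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Made-up labels and classifier scores.
example_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(example_auc)
```

In this example three of the four positive/negative pairs are ordered correctly, so the AUC is 0.75; a value of 0.5 corresponds to random ranking and 1.0 to perfect separation.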

5 Conclusion

In this paper, a relay-enabled wireless sensor network carries the measurements of wearable sensor device nodes. The design of the wearable sensor device nodes considers the convenience of wearing them and walking with them in daily life. The wearable sensor device node includes a universal wireless sensor frame. The chest strap sensor integrates an ECG sensor and an acceleration sensor, and the wrist strap sensor measures blood oxygen saturation. Based on these wearable sensor device nodes, we consider ECG, acceleration, and blood oxygen saturation to be important vital signs. The vital signs data from the wearable sensor devices are automatically saved to the real-time medical health monitoring system after verification. In addition, the well-designed relay-enabled wireless sensor network runs under low-power operating conditions, which minimizes the adverse effects of radiation. The DNN model exhibited promising results, but several limitations are associated with it. Firstly, the model is sensitive to the number of neurons in the hidden layers: too few neurons may cause under-fitting, whereas an overabundance of neurons may lead to overfitting. In general, a network with more hidden layers might achieve improved accuracy. However, this raises critical issues, including computation cost, complexity, and vanishing gradients. These concerns will be addressed in future work.

References

Liu X, He P, Chen W, Gao J (2019) Multi-task deep neural networks for natural language understanding. arXiv. https://doi.org/10.18653/v1/p19-1441

Sun J, Khan F, Li J, Alshehri MD, Alturki R, Wedyan M (2021) Mutual authentication scheme for ensuring a secure device-to-server communication in the internet of medical things. IEEE Internet Things J. https://doi.org/10.1109/JIOT.2021.3078702

Zhang Q, Yang LT, Chen Z, Li P (2017) An improved deep computation model based on canonical polyadic decomposition. IEEE Trans Syst Man Cybern Syst 48(10):1657–1666

Jan MA, Khan F, Khan R, Watters P, Alazab M, Rehman AU (2021) A lightweight mutual authentication approach for intelligent wearable devices in health-CPS. IEEE Trans Ind Inf 17(8):5829–5839

https://roboticsbiz.com/wearables-in-sports-smart-clothing-e-textile-technologies/ Accessed on May 22, 2021

Jan SR, Khan R, Khan F, Jan MA (2021) Marginal and average weight-enabled data aggregation mechanism for the resource-constrained networks. Comput Commun J 174:101–108

Jan MA, Khan F, Mastorakis S, Adil M, Akbar A, Stergiou N (2021) LightIoT: lightweight and secure communication for energy-efficient IoT in health informatics. IEEE Trans Green Commun Netw. https://doi.org/10.1109/TGCN.2021.3077318

Wu Y, Tan H, Qin L, Ran B, Jiang Z (2018) A hybrid deep learning-based traffic flow prediction method and its understanding. Transp Res Part C Emerg Technol 90:166–180. https://doi.org/10.1016/j.trc.2018.03.001

Xu Yu, Zhan D, Liu L, Lv H, Lingwei Xu, Junwei Du (2021) A privacy-preserving cross-domain healthcare wearables recommendation algorithm based on domain-dependent and domain-independent feature fusion. IEEE J Biomed Health Inform. https://doi.org/10.1109/JBHI.2021.3069629

Feng S, Zhou H, Dong H (2019) Using deep neural network with a small dataset to predict material defects. Mater Des 162:300–310. https://doi.org/10.1016/j.matdes.2018.11.060

Miao JH, Miao KH (2018) Cardiotocographic diagnosis of fetal health based on multiclass morphologic pattern predictions using deep learning classification. Int J Adv Comput Sci Appl 9:1–11. https://doi.org/10.14569/IJACSA.2018.090501

Ravi D, Wong C, Deligianni F, Berthelot M, Andreu-Perez J, Lo B et al (2017) Deep learning for health informatics. IEEE J Biomed Heal Inf 21:4–21. https://doi.org/10.1109/JBHI.2016.2636665

Smith L (1988) A tutorial on principal components analysis. Commun Stat - Theory Methods 17:3157–3175

Sindi H, Nour M, Rawa M, Öztürk Ş, Polat K (2021) A novel hybrid deep learning approach including combination of 1D power signals and 2D signal images for power quality disturbance classification. Expert Syst Appl 174:114785

Shahamat H, Abadeh MS (2020) Brain MRI analysis using a deep learning based evolutionary approach. Neural Netw 126:218–234

Fang C, Moriwaki Y, Li C, Shimizu K (2019) Prediction of antifungal peptides by deep learning with character embedding. IPSJ Trans Bioinf 12:21–29

Chou K-C (2010) Graphic rule for drug metabolism systems. Curr Drug Metab 11:369–378. https://doi.org/10.2174/138920010791514261

Wong TT, Yang NY (2017) Dependency analysis of accuracy estimates in k-fold cross-validation. IEEE Trans Knowl Data Eng 29:2417–2427. https://doi.org/10.1109/TKDE.2017.2740926

Liu G, Liu J, Cui X, Cai L (2012) Sequence-dependent prediction of recombination hotspots in Saccharomyces cerevisiae. J Theor Biol 293:49–54. https://doi.org/10.1016/j.jtbi.2011.10.004

Wong TT, Yeh PY (2020) Reliable accuracy estimates from k-fold cross-validation. IEEE Trans Knowl Data Eng 32:1586–1594. https://doi.org/10.1109/TKDE.2019.2912815

Miao Yu, Quan T, Qinglong Peng XY, Liu L (2021) A model-based collaborate filtering algorithm based on stacked autoencoder. Neural Comput Appl. https://doi.org/10.1007/s00521-021-05933-8

Zhou G-P, Chen D, Liao S, Huang R-B (2015) Recent progress in studying helix-helix interactions in proteins by incorporating the wenxiang diagram into the NMR spectroscopy. Curr Top Med Chem 16:581–590

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Cite this article

Wu, X., Liu, C., Wang, L. et al. Internet of things-enabled real-time health monitoring system using deep learning. Neural Comput & Applic 35, 14565–14576 (2023). https://doi.org/10.1007/s00521-021-06440-6
