Abstract

Computational homogenization is the gold standard for concurrent multi-scale simulations (e.g., FE\(^2\)) in scale-bridging applications. Often the simulations are based on experimental and synthetic material microstructures represented by high-resolution 3D image data. The computational complexity of simulations operating on such voxel data is substantial. The inability of voxelized 3D geometries to capture smooth material interfaces accurately, along with the necessity for complexity reduction, has motivated a special local coarse-graining technique called composite voxels (Kabel et al. Comput Methods Appl Mech Eng 294: 168–188, 2015). They condense multiple fine-scale voxels into a single voxel, whose constitutive model is derived from laminate theory. Our contribution generalizes composite voxels towards composite boxels (ComBo) that are non-equiaxed, a feature that can pay off for materials with a preferred direction such as pseudo-uni-directional fiber composites. A novel image-based normal detection algorithm is devised which (i) allows for boxels in the first place and (ii) reduces the error in the phase-averaged stresses by around 30% compared with the orientation of Kabel et al. (Comput Methods Appl Mech Eng 294: 168–188, 2015) even for equiaxed voxels. Further, the use of ComBo for finite strain simulations is studied in detail. An efficient and robust implementation is proposed, featuring an essential selective back-projection algorithm preventing physically inadmissible states. Various examples show the efficiency of ComBo against the original proposal by Kabel et al. (Comput Methods Appl Mech Eng 294: 168–188, 2015) and the proposed algorithmic enhancements for nonlinear mechanical problems. The general usability is emphasized by examining various Fast Fourier Transform (FFT) based solvers, including a detailed description of the Doubly-Fine Material Grid (DFMG) for finite strains. All of the studied schemes benefit from the ComBo discretization.


1 Introduction

1.1 Homogenization in engineering

In the last decade, the quality of micro X-ray computed tomography (CT) images has steadily improved. Nowadays, standard CT devices have a spatial resolution below one \(\upmu \)m. They produce 3D images of up to \(4096^3\) voxels. This permits a detailed view of the microstructure’s geometry of composite materials all the way down to the limits of continuum mechanical theories and the necessity for discrete particle methods. The geometrical information itself is sufficient to detect defects in components by measuring, e.g., the pore space and the shape of the pores or by detecting the presence of micro-cracks. In order to understand the effect of such microscopic features, one has to solve PDEs on the high-resolution 3D image data. These simulations assist in the characterization of effective mechanical behavior.

Due to the sheer size of today’s CT images, the resulting computational homogenization algorithms face severe challenges related to the high computational demands. For instance, to compute effective linear elastic properties on a \(4096^3\) CT image, the number of nodal displacement degrees of freedom amounts to approximately 206 billion. The solution to problems of this size using conventional simulation methods such as the finite element (FE) method requires huge compute clusters [1, 2]. These difficulties are commonly overcome by working with conventional FEM on a variety of smaller subsamples of moderate size [3]. The resulting effective properties of the individual subsamples are averaged in post-processing, cf., for instance, [4, 5]. This method, however, does not exploit the available information gathered from the specimen.

1.2 FFT-based solvers

In contrast to the conventional FEM approach, the numerical homogenization method of Moulinec–Suquet [6, 7] operates on the voxels of a CT image directly: the set of unknowns is formed by the strains, i.e., one tensor for each voxel in the CT image. The solution algorithm works in place (i.e., matrix-free) such that, in practice, the size of the CT images to be treated is only restricted by the size of the memory and the affordable compute time. FFT-based schemes can handle arbitrary heterogeneity, phase contrast [e.g., [8]], and degree of compressibility of the materials. Generally, the number of iterations is independent of the grid size, depending only on the material’s contrast, i.e., the maximum of the quotient of the largest and the smallest eigenvalue of the elastic tensor field, and on the geometric complexity. The solution of the linear algebraic system relies upon a Lippmann-Schwinger fixed point formulation, which enables a fast matrix-free implementation by use of the fast Fourier transform (FFT). By changing the discretization to finite elements [9] and finite differences [10], problems with infinite material contrast also became solvable. Recent displacement-based implementations [11] additionally allow the use of higher-order integration schemes without increasing the memory demands and with improved computational efficiency over strain-based algorithms that also build on finite element technology [11, 12]. A relation with Galerkin-based methods was outlined in [13], where the use of the efficient conjugate gradient (CG) method was mentioned. Interestingly, the CG method (as well as MINRES and other Krylov solvers) arises naturally in FANS and FFT-\(Q_1\) Hex [11, 12] and is straightforward to implement.

1.3 Composite voxel technique

Despite the many advances in theory and algorithmic realization of FFT-based methods, the resolution of state-of-the-art computed tomography poses challenges for numerics. A single, double-precision scalar field on a \(4096^3\) voxel image as delivered by modern \(\upmu \)CT scanners already occupies 512 GB of memory. Thus, even performing linear elastic computations on such images requires either exceptionally equipped workstations or big compute clusters. This applies even more so to inelastic computations, where additional history variables need to be stored, increasing the memory demand significantly.
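The figures quoted above follow from simple arithmetic. The following minimal sketch reproduces them; the function names are illustrative only, and the count of three displacement degrees of freedom per voxel assumes a periodic grid with as many nodes as voxels.

```python
def displacement_dofs(n, dim=3):
    """Nodal displacement DOFs of a periodic n^3 grid (assumes nodes == voxels)."""
    return dim * n ** 3


def field_memory_gib(n, components=1, bytes_per_value=8):
    """Memory of a field with `components` double-precision values per voxel, in GiB."""
    return components * bytes_per_value * n ** 3 / 2 ** 30


n = 4096
print(f"displacement DOFs      : {displacement_dofs(n):.3e}")
print(f"scalar field           : {field_memory_gib(n):.0f} GiB")
# One general (non-symmetric) 2-tensor per voxel, e.g. a deformation gradient
print(f"9-component tensor field: {field_memory_gib(n, components=9):.0f} GiB")
```

The quoted 512 GB corresponds to binary units (GiB); every additional tensor-valued field or history variable multiplies this figure accordingly.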

To enable computations on conventional desktop computers or workstations that can still take into account relevant microstructural details of the fully resolved image, the composite voxel technique was developed for linear elastic, hyperelastic and inelastic problems [14,15,16,17]. A coarse-graining procedure serves as the initial idea, i.e., a number of smaller, typically \(4^3=64\), voxels are merged into bigger voxels. Each of these so-called composite voxels gets assigned an appropriately chosen material law which is based on a bi-phasic laminate. This laminate takes into account the exact phase volume fractions and the interface normal vector N. Thereby, it can reflect the most relevant microstructural features, avoid staircase phenomena intrinsic to regular voxel discretizations and allow for a boost in accuracy in computational homogenization.
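The coarse-graining step can be illustrated by a minimal sketch that merges blocks of fine-scale voxels and records the inclusion volume fraction of each coarse cell. The function name `coarse_grain` and the convention that the binary image holds 1 in the inclusion phase are assumptions made for this illustration; blocks with different edge counts per axis are allowed.

```python
import numpy as np


def coarse_grain(chi, block=(4, 4, 4)):
    """Merge `block` fine voxels per axis into one coarse cell.

    chi   : binary 3D image (1 = inclusion, 0 = matrix); assumed convention
    block : number of fine voxels merged along each axis
    Returns the inclusion volume fraction per coarse cell and a mask of
    cells containing both phases (the composite cells).
    """
    chi = np.asarray(chi, dtype=float)
    bx, by, bz = block
    nx, ny, nz = chi.shape
    if nx % bx or ny % by or nz % bz:
        raise ValueError("image size must be a multiple of the block size")
    blocks = chi.reshape(nx // bx, bx, ny // by, by, nz // bz, bz)
    c_plus = blocks.mean(axis=(1, 3, 5))        # exact phase volume fraction
    is_composite = (c_plus > 0.0) & (c_plus < 1.0)
    return c_plus, is_composite
```

Only the cells flagged as composite need a laminate material law; all other cells keep the constitutive law of their single phase.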

In many scenarios, the edge lengths of the voxels are different. This can be due to the employed imaging technique, e.g., in serial sectioning by a focused ion beam and using scanning electron microscopy (FIB-SEM, [18]). Another reason for the wish to use anisotropic voxel shapes is the presence of a preferred direction, e.g., in composites with a preferred orientation such as long fiber reinforced materials studied by [19]. The consideration of anisotropic composite voxels—called boxels in the present study—leads to low-quality normal orientations when using the original approach suggested by [15]. Likewise, low volume fractions can deteriorate the quality of the normal orientation.

Another issue arises depending on the material contrast and the local volume fraction in the composite voxels: If the soft phase of the two-phase laminate has a small volume fraction, the deformation within this phase is severely exaggerated. This typically leads to convergence issues of the Newton–Raphson method that evaluates the effective response of the composite voxel. The problem is normally circumvented by limiting the volume fractions of either constituent to the range of 5–95% and by resorting to simple Voigt averaging otherwise, which forfeits the aforementioned advantages of the composite voxels.

1.4 Outline

In the current study, we target composite voxels for finite strain homogenization problems. In Sect. 2 the homogenization problem is recalled. The foundations of FFT-based schemes are summarized in Sect. 3 including the Lippmann–Schwinger equation and an extension of the staggered grid approach of [10] towards improved local fields. The composite voxel technique is considered in detail in Sect. 4 including algorithmic improvements leading to increased robustness for finite strain problems.

1.5 Notation

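The spatial average of a quantity over a domain \({{{\mathcal {A}}}}\) with measure \(A = |{{{\mathcal {A}}}}|\) is defined as

$$\begin{aligned} \left\langle \cdot \right\rangle _{{{\mathcal {A}}}} = \frac{1}{A} \int _{{{\mathcal {A}}}} (\cdot ) \, \mathrm{d}A \, . \end{aligned}$$(1)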

In the sequel boldface lowercase letters denote vectors (exceptions: material coordinate \(\varvec{ X}\), normal vector \(\varvec{N}\), displacement \(\varvec{ U}\) and traction \(\varvec{ T}\)), boldface upper case letters denote 2-tensors and blackboard bold uppercase letters (e.g., \({\mathbb {C}}\)) denote 4-tensors. The inner product contracts all indices of two k-tensors while the usual linear mapping contracts the last k indices of the first tensor with the following k-tensor. The double contraction operator \({\mathbb { A}}:\varvec{ B}\) contracts the trailing two indices of \({\mathbb { A}}\) with the leading two indices of \(\varvec{ B}\). The outer tensor product is denoted by \(\otimes \), and its symmetric version is given by \(\otimes ^\mathrm{s}\).

General vectors and matrices are denoted by single and double underlines, respectively. Note that in the sequel, we focus on an orthonormal basis for the tensor fields. A vector representation for general (\(\varvec{F}\leftrightarrow \underline{F}\)) and symmetric 2-tensors (\(\varvec{ S}\leftrightarrow \underline{S}\)) is given by

Note the different ordering and normalization factors of the Mandel-type notation versus the commonly used Voigt notation, which is avoided due to the ambiguity of stress versus strain-like quantities in the vector representation. The notation (2) preserves the inner product for general 2-tensors (e.g., \(\varvec{F}, \varvec{P}\)) and symmetric 2-tensors (e.g., \(\varvec{ S}, \varvec{ E}\)), i.e.,

Likewise, 4-tensors are represented by \(9\times 9\) and \(6 \times 6\) (in the case of left and right sub-symmetry) matrices, respectively.
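The inner-product property can be checked numerically. The sketch below uses one common Mandel-type ordering (11, 22, 33, 23, 13, 12) with \(\sqrt{2}\) factors on the shear entries; this ordering is an assumption for illustration and need not coincide with the ordering of the notation (2).

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def sym_to_mandel(S):
    """Symmetric 2-tensor -> 6-vector (assumed ordering 11, 22, 33, 23, 13, 12)."""
    return np.array([S[0, 0], S[1, 1], S[2, 2],
                     SQRT2 * S[1, 2], SQRT2 * S[0, 2], SQRT2 * S[0, 1]])


def general_to_vector(F):
    """General 2-tensor -> 9-vector (row-major ordering, also an assumption)."""
    return np.asarray(F, dtype=float).reshape(9)


rng = np.random.default_rng(0)
A, B = rng.normal(size=(2, 3, 3))
S, E = A + A.T, B + B.T                      # two symmetric tensors

# The tensor inner product S:E equals the dot product of the vector forms
print(np.tensordot(S, E), sym_to_mandel(S) @ sym_to_mandel(E))
print(np.tensordot(A, B), general_to_vector(A) @ general_to_vector(B))
```

Without the \(\sqrt{2}\) scaling, the shear contributions would be counted only once, which is the source of the stress versus strain ambiguity of the Voigt notation.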

In view of spatial derivatives, the gradient (\(\mathrm{Grad}\left( \cdot \right) \)) and the divergence (\(\mathrm{Div }\left( \cdot \right) \)) with respect to the reference configuration are used. In the context of an internal surface \({\mathscr {S}}\), the jump operator is given as

where \(\varvec{N}\) is the normal of \({\mathscr {S}}\) pointing from the second phase (referred to as the inclusion phase) into the first phase (referred to as the matrix material).

2 Homogenization problem

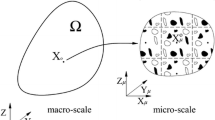

We focus our attention on the finite strain mechanics of hyperelastic solids. Consider a material point \(\varvec{ X}\) of a body \({\mathcal {B}}_0\) in the reference configuration at time \(t = 0\). The corresponding current position at time \(t \in [0, T]\) is denoted by \(\varvec{x}\) in the deformed domain \({{{\mathcal {B}}}}(t)\). The motion of the body is given by

where \(d \in \{ 2, 3\}\) is the spatial dimension. In the sequel, the dependence on time t is implicitly assumed but not reflected in the arguments for brevity. The displacement field is given by \(\varvec{ U}(\varvec{ X}) = \varvec{x}- \varvec{ X}\). The deformation gradient \(\varvec{F}\) is defined as

The volume change at a material point is given by the material Jacobian of the deformation map,

with \(\mathrm{d}v\) the differential volume in the current configuration and \(\mathrm{d}V\) denoting its counterpart in the reference configuration. The right Cauchy-Green tensor is given by \(\varvec{ C}= \varvec{F}^{\textsf {T}} \varvec{F}\). At a particular material point \(\varvec{ X}\) with an infinitesimal area \(\text {d}A\) with a unit normal vector \(\varvec{N}\), we define the resulting traction vector \(\varvec{ T}\) in terms of the first Piola-Kirchhoff (PK1) stress tensor \(\varvec{P}\),

In homogenization, the constitutive response on the structural (or macroscopic) scale of a body depends strongly on the underlying microstructure. In the following, we consider a periodic microstructure

Separation of length scales is assumed, i.e., established homogenization principles apply without the need for advanced modeling techniques, e.g., based on filtering [20] or higher-order continuum theories [21].

The objective of homogenization is the identification of the effective, macroscopic constitutive response

of micro-heterogeneous materials for given \(\overline{\varvec{F}}=\langle \varvec{F}\rangle _\Omega \). Further, phase-averaged stresses, stress statistics, and the algorithmic tangent operator can be of relevance. The effective quantities must satisfy the Hill-Mandel macro-homogeneity condition

Periodic fluctuation boundary conditions of the form

satisfy the Hill-Mandel condition (see, e.g., [22]) and have proven versatile and efficient. The tractions \(\varvec{ T}(\varvec{ X}^\pm )\) are then anti-periodic for point-pairs \(\varvec{ X}^\pm \in \partial \varOmega ^\pm \) on opposing faces of the RVE \(\Omega \).

The related function space for \(\widetilde{\varvec{ U}}\) is referred to as \({{{\mathcal {V}}}}_\#\) which is a subset of the Sobolev space of weakly differentiable functions \(H^1(\varOmega )\):

where \(\mathrm{mod}\) is the spatial modulo operator. The homogenization problem (HOM) on \(\Omega \) for prescribed deformation \(\overline{\varvec{F}}\) reads:

3 FFT-based homogenization

The solution of (HOM) (14)-(15) can be obtained either analytically (in rare cases) or by using discrete numerical techniques. The most widely used methods for computational homogenization in solid mechanics are certainly the finite element method (FEM) [e.g., FE\(^2\), [23]] and FFT-based homogenization.

The latter was proposed by Moulinec and Suquet [6] in the early 1990s. It is based on the Lippmann-Schwinger equation in elasticity [24, 25] and avoids both time-consuming meshing needed by conforming finite element discretizations as well as the assembly of the related linear system. Therefore, the memory needed for solving the problem is significantly reduced compared with other methods. By virtue of the seminal Fast Fourier Transform algorithm [FFT, [26]], the compute time scales with \({{{\mathcal {O}}}}(n \, \log (n) )\) where n is the number of unknowns, i.e., it is just slightly superlinear.
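To illustrate the structure of such a matrix-free fixed-point iteration, the following minimal sketch applies the basic scheme to a deliberately simplified problem: scalar, linear conductivity in 2D instead of (finite strain) elasticity. The reference conductivity `k0`, the function name, and the laminate test case are choices made for this illustration and are not taken from the implementations discussed here.

```python
import numpy as np


def basic_scheme(k, G, n_iter=300):
    """Fixed-point iteration g <- G - Gamma0 * ((k - k0) g) on a periodic n x n grid.

    k : (n, n) array of voxel-wise conductivities
    G : prescribed mean gradient, shape (2,)
    Returns the gradient field g (2, n, n) and the mean flux (2,).
    """
    k = np.asarray(k, dtype=float)
    G = np.asarray(G, dtype=float)
    n = k.shape[0]
    k0 = 0.5 * (k.min() + k.max())                 # reference medium (assumed choice)
    freq = np.fft.fftfreq(n, d=1.0 / n)            # integer frequencies
    xi = np.stack(np.meshgrid(freq, freq, indexing="ij"))
    xi_sq = (xi ** 2).sum(axis=0)
    xi_sq[0, 0] = 1.0                              # avoid 0/0; xi vanishes there anyway
    g = np.tile(G[:, None, None], (1, n, n))
    for _ in range(n_iter):
        tau_hat = np.fft.fft2((k - k0) * g, axes=(1, 2))   # polarization field
        # Green operator in Fourier space: xi (xi . tau_hat) / (k0 |xi|^2)
        g_hat = -xi * (xi * tau_hat).sum(axis=0) / (k0 * xi_sq)
        g = G[:, None, None] + np.fft.ifft2(g_hat, axes=(1, 2)).real
    return g, (k * g).mean(axis=(1, 2))


# Two-phase laminate with layers normal to the x-axis
k = np.where(np.arange(16)[:, None] < 8, 10.0, 1.0) * np.ones((16, 16))
_, q_normal = basic_scheme(k, (1.0, 0.0))     # loading across the layers
_, q_parallel = basic_scheme(k, (0.0, 1.0))   # loading along the layers
print(q_normal, q_parallel)
```

For this laminate the mean flux across the layers is governed by the harmonic mean of the conductivities and the flux along the layers by the arithmetic mean, which provides a simple sanity check. Each iteration costs two FFTs per field component, which is where the \({{{\mathcal {O}}}}(n \, \log (n) )\) scaling originates.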

For finite strain problems, the Lippmann-Schwinger equation reads

with the Green’s operator

which relies on the solution operator \({\varvec{G}^0}\) of a linear reference problem. Lahellec, Moulinec, and Suquet [27] proposed to solve (16) by the Newton-Raphson method and the linear Moulinec-Suquet fixed point solver. In contrast, Eisenlohr et al. [28] suggested using the Moulinec-Suquet fixed point iteration on the nonlinear Lippmann-Schwinger equation for finite strains directly. Kabel et al. [29] carried over the idea of Vinogradov and Milton [30], and of Gélébart and Mondon-Cancel [31] to combine the Newton-Raphson procedure with fast linear solvers to the geometrically nonlinear case.

The present work will also make use of the nonlinear conjugate gradient (CG) method introduced by Schneider [32] for small strains. Further, the FFT-accelerated solution of regular tri-linear hexahedral elements [FFT-\(Q_1\) Hex, see [12]] and the closely related Fourier-Accelerated Nodal Solver (FANS) [11] will be used in the sequel, for which the nonlinear CG method has a perfectly natural interpretation with the fundamental solution acting as preconditioner. An overview of alternative solution methods can be found in [33].

Regarding the discretization, we will compare the original discretization by Fourier polynomials of Moulinec-Suquet [34] with the HEX8R discretization of Willot [9], and the fully integrated HEX8 elements [12, 35]. In order to consistently evaluate material laws for the staggered grid discretization [10, 36], we apply the idea of the doubly-fine material grid (DFMG) to large strains. In contrast to the regime for small strains, which can be efficiently implemented for isotropic linear elastic materials by a suitable averaging of the shear moduli, the DFMG for large deformations requires multiple evaluations of the material routines, regardless of the complexity of the geometrically nonlinear material law. The technical details of the implementation can be found in Appendix A.

4 Composite voxels

One of the main advantages, and at the same time a major disadvantage, of FFT-based techniques is the constraint to regular Cartesian grids: While they enable direct use of 3D image data, they are unable to capture the material interface as accurately as interface-conforming discretizations. This is primarily due to the binarized nature of the microstructure, which leads to the so-called staircase approximation of the interface. In order to capture the microscale effects sufficiently, a high grid resolution is hence needed, which in turn entails high computational cost. In order to limit or even eliminate the staircase phenomenon without the need for a (distinct) grid refinement, so-called composite voxels were previously suggested in [15,16,17, 37]. They enhance the existing binary discretization using special voxels with effective material properties that depend on the phase volume fractions and the normal orientation \(\varvec{N}\) of the laminate.

Consider such a composite voxel \(\Omega ^e\) comprised of two phases denoted by \(\Omega ^e_\pm \subsetneq \Omega ^e\), where \(+\) and \(-\) stand for inclusion and matrix phase, respectively. The fields corresponding to the two phases are represented as \((\cdot )_\pm \) for brevity, and either phase is assumed to be equipped with a hyperelastic strain energy density \(W_\pm \). Within \(\Omega ^e\), the material interface is approximated by a plane \({\mathscr {S}}^e\) leading to a rank-1 laminate defined by the interface normal vector \(\varvec{N}\in {\mathbb { R}}^d\) in material configuration. The individual phase volume fractions are given by \(c_+\) and \(c_-\) where \(c_++ c_-= 1\).

In previous works, different rules of mixture have been suggested, namely the Voigt (\(\bullet _{\mathrm{V}}\)) and Reuss (\(\bullet _{\mathrm{R}}\)) estimates (see Footnote 1), which correspond to the upper and lower bounds of the mechanical response.
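For linear elasticity, the two estimates amount to the arithmetic mean of the stiffness and the inverse of the arithmetic mean of the compliance. The sketch below illustrates this for isotropic phases in a \(6\times 6\) Mandel-type matrix representation; the helper `iso_stiffness` and the chosen moduli are assumptions made for the illustration.

```python
import numpy as np


def iso_stiffness(K, G):
    """Isotropic stiffness 3K P_vol + 2G P_dev as a 6x6 Mandel-type matrix."""
    m = np.array([1.0, 1.0, 1.0, 0.0, 0.0, 0.0])
    P_vol = np.outer(m, m) / 3.0          # volumetric projector
    P_dev = np.eye(6) - P_vol             # deviatoric projector
    return 3.0 * K * P_vol + 2.0 * G * P_dev


def voigt_reuss(C_plus, C_minus, c_plus):
    """Voigt (upper) and Reuss (lower) estimates for a two-phase mixture."""
    c_minus = 1.0 - c_plus
    C_voigt = c_plus * C_plus + c_minus * C_minus
    S_mean = c_plus * np.linalg.inv(C_plus) + c_minus * np.linalg.inv(C_minus)
    return C_voigt, np.linalg.inv(S_mean)


C_V, C_R = voigt_reuss(iso_stiffness(100.0, 40.0), iso_stiffness(5.0, 1.0), 0.3)
# The difference C_V - C_R is positive semi-definite (bound property)
print(np.linalg.eigvalsh(C_V - C_R).min() >= -1e-10)
```

Neither estimate uses the interface normal \(\varvec{N}\), which is the information the laminate mixing rule adds.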

Inspired by laminate theory, [38] suggests the definition of the effective elasticity tensor of the laminate \({\mathbb {C}}_{\square }^{\Vert }\) implicitly through

Here, the fourth order tensor \({\mathbb { P}}\) depends on the normal \(\varvec{N}\) via

for \(i,j,k,l \in \{1, \dots , d\}\). Note that in laminate theory, the states in the ± phase are piece-wise constant. Hence, the averaging translates into

The laminate mixing rule considers the interface orientation and introduces a parameter \(\lambda > 0\) that must be larger than the leading eigenvalue of the individual stiffness tensors \({\mathbb {C}}_\pm \). Solving (18) for linear elastic materials involves 6 inversions of \(6\times 6\) matrices (see Footnote 2) for each composite voxel individually, i.e., at every interface-related voxel or cubature point within an FFT-based simulation, which can lead to non-negligible overhead. Hence, a more numerically efficient and physics-motivated approach devoid of additional parameters is sketched in the following that can also handle geometric and material nonlinearities, if needed.

4.1 Hadamard jump conditions for finite strain kinematics

Following the established laminate theory, the following assumptions hold:

- The deformation gradients in \(\Omega ^e_+\) and \(\Omega ^e_-\) are related by a rank-1 jump along the normal orientation, i.e., for \(\widetilde{\varvec{a}} \in {\mathbb { R}}^d\),

  $$\begin{aligned} \llbracket \varvec{F}\rrbracket _{{\mathscr {S}}^e} = \varvec{F}_+- \varvec{F}_-= \widetilde{\varvec{a}} \otimes \varvec{N}\, . \end{aligned}$$(21)

- The traction vector is continuous across the interface \({\mathscr {S}}^e\), i.e.,

  $$\begin{aligned} \llbracket \varvec{ T}\rrbracket _{{\mathscr {S}}^e}= \llbracket \varvec{P}\rrbracket _{{\mathscr {S}}^e} \varvec{N}&= \varvec{ 0},&\varvec{ T}_+&= \varvec{ T}_-\,. \end{aligned}$$(22)

The kinematic compatibility of the interface is characterized by having a continuous deformation gradient tangential to the interface. This ensures that material point pairs remain identical. Condition (22) ensures that the interface is in static equilibrium.

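In order to enforce (21), we choose the following parameterization (see Footnote 3) of the deformation gradient tensors of the two material phases

$$\begin{aligned} \varvec{F}_+= \varvec{F}_{\square } + \frac{1}{c_+} \, \varvec{a}\otimes \varvec{N}, \qquad \varvec{F}_-= \varvec{F}_{\square } - \frac{1}{c_-} \, \varvec{a}\otimes \varvec{N}, \end{aligned}$$(23)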

where \(\varvec{a}\) is related to \(\widetilde{\varvec{a}}\) in (21) by a scaling constant and \(\varvec{F}_{\square }\) is the prescribed deformation gradient on the composite voxel \(\Omega ^e\). The parameterization (23) preserves the volume average \(\varvec{F}_{\square }\) of the deformation gradient \(\varvec{F}\) over the composite voxel \(\Omega ^e\) according to

$$\begin{aligned} \left\langle \varvec{F}\right\rangle _{\Omega ^e} = c_+\varvec{F}_++ c_-\varvec{F}_-= \varvec{F}_{\square } \, . \end{aligned}$$(24)

In the finite strain context, not only the average of \(\varvec{F}\) must be preserved, but also the total volume of the deformed material must remain constant under the rank-one perturbation:

Lemma 1

The kinematic rank-1 perturbation on the material interface \({\mathscr {S}}^e\) is volume preserving by construction.

Proof

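Suppose \(\varvec{ A}\) is an invertible \(n\times n\) matrix and \(\varvec{ u}\), \(\varvec{ v}\) are column vectors of size n. Then the matrix determinant lemma states that

$$\begin{aligned} \det \left( \varvec{ A}+ \varvec{ u}\varvec{ v}^{\textsf {T}} \right) = \left( 1 + \varvec{ v}^{\textsf {T}} \varvec{ A}^{-1} \varvec{ u}\right) \det (\varvec{ A}) \, . \end{aligned}$$(25)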

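The deformation gradient \(\varvec{F}_{\square }\) is regular by definition since material inversion is not allowed. Thus, by setting \(\varvec{ A}\leftarrow \varvec{F}_{\square }\) and \(\varvec{ u}\leftarrow \pm \varvec{a}/c_\pm , \, \varvec{ v}\leftarrow \varvec{N}\) the material Jacobian \(J_\pm \) in the two phases can be computed using the matrix determinant lemma

$$\begin{aligned} J_\pm = \det \left( \varvec{F}_{\square } \pm \frac{1}{c_\pm } \, \varvec{a}\otimes \varvec{N}\right) = \det (\varvec{F}_{\square }) \left( 1 \pm \frac{1}{c_\pm } \, \varvec{N}\cdot \varvec{F}_{\square }^{-1} \varvec{a}\right) . \end{aligned}$$(26)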

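Averaging J over the composite voxel \(\Omega ^e\), we obtain

$$\begin{aligned} \left\langle J \right\rangle _{\Omega ^e} = c_+J_++ c_-J_-= \det (\varvec{F}_{\square }) \left( c_++ c_-+ \varvec{N}\cdot \varvec{F}_{\square }^{-1} \varvec{a}- \varvec{N}\cdot \varvec{F}_{\square }^{-1} \varvec{a}\right) = \det (\varvec{F}_{\square }) \, . \end{aligned}$$(27)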

\(\square \)
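The kinematic statements above can be verified numerically. The minimal sketch below uses the parameterization \(\varvec{F}_\pm = \varvec{F}_{\square } \pm \varvec{a}\otimes \varvec{N}/c_\pm \) implied by the substitution in the proof of Lemma 1; the function name and the numerical values are chosen for illustration only.

```python
import numpy as np


def phase_gradients(F_box, a, N, c_plus):
    """Deformation gradients of the two laminate phases for a given jump vector a."""
    c_minus = 1.0 - c_plus
    jump = np.outer(a, N)                       # rank-1 perturbation a (x) N
    return F_box + jump / c_plus, F_box - jump / c_minus


F_box = np.eye(3) + 0.1 * np.array([[1.0, 2.0, 0.0],
                                    [0.0, -1.0, 1.0],
                                    [1.0, 0.0, 0.5]])
N = np.array([1.0, 2.0, 2.0]) / 3.0             # unit normal
a = np.array([0.02, -0.01, 0.03])
c_plus, c_minus = 0.25, 0.75

F_p, F_m = phase_gradients(F_box, a, N, c_plus)
t = np.cross(N, [1.0, 0.0, 0.0])                # a vector tangential to the interface

print(np.allclose(c_plus * F_p + c_minus * F_m, F_box))        # average of F preserved
print(np.isclose(c_plus * np.linalg.det(F_p)
                 + c_minus * np.linalg.det(F_m),
                 np.linalg.det(F_box)))                        # Lemma 1
print(np.allclose((F_p - F_m) @ t, 0.0))                       # tangential continuity
```

Note that the average of the Jacobian is preserved for any \(\varvec{a}\), whereas the sign of the individual \(J_\pm \) is not controlled by the parameterization.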

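To obtain the gradient jump vector \(\varvec{a}\), the equilibrium of the tractions on the interface (22) must be satisfied; see also [17]:

$$\begin{aligned} \varvec{ f}(\varvec{a}) = \left( \varvec{P}_+(\varvec{F}_+) - \varvec{P}_-(\varvec{F}_-) \right) \varvec{N}= \varvec{ 0}\, . \end{aligned}$$(28)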

Examining the relative volume in \(\Omega ^e_\pm \), additional constraints on \(J_\pm \) emerge:

$$\begin{aligned} J_+> 0, \qquad J_-> 0 \, . \end{aligned}$$(29)

These constraints imposed on (28) are essential to gain robustness, see Sect. 4.2.

4.1.1 Algorithmic implementation

In the case of large strain kinematics, the system (28) is always nonlinear with no explicit solution available, in general. Hence, it must be solved iteratively, e.g., by using a Newton–Raphson scheme with the Hessian

The Hessian in index notation can be written in terms of the 2-tensors \(\varvec{P}_\pm \) and \(\varvec{F}_\pm \) as

where, \({\mathbb { A}}_\pm = \displaystyle \frac{\displaystyle \partial \varvec{P}_\pm }{\displaystyle \partial \varvec{F}_\pm } = \dfrac{\partial ^2 W_\pm }{\partial \varvec{F}_\pm \otimes \partial \varvec{F}_\pm }\). The constitutive tangent operator \({\mathbb { A}}_\pm \) can directly be obtained from the hyperelastic potentials \(W_\pm \).

Using a vector notation for \(\varvec{F}, \varvec{P}\) and the related matrix representation \(\underline{\underline{A}}_\pm \) of \({\mathbb { A}}_\pm \), the Hessian gets

with the matrix \(\underline{\underline{D}}\) defined via

Note the straightforward symmetric structure of the matrix \(\underline{\underline{\Delta }}_f\) (32). Within iteration [k], the Newton-Raphson update reads

The full algorithm for the naive Newton–Raphson (NR) update is given in Algorithm 1. Note that each iteration requires a single \(d\times d\) matrix inversion. Once convergence is achieved, i.e., once the tractions on the interface are in balance, the first Piola-Kirchhoff stress is

The unconditionally symmetric effective stiffness matrix can be computed from
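A naive Newton–Raphson iteration for the jump vector can be sketched as follows. This is not Algorithm 1 itself: the compressible Neo-Hookean energy \(W = \tfrac{\mu }{2}(\mathrm{tr}\,\varvec{ C}- 3) - \mu \ln J + \tfrac{\lambda }{2} (\ln J)^2\), the parameterization \(\varvec{F}_\pm = \varvec{F}_{\square } \pm \varvec{a}\otimes \varvec{N}/c_\pm \), the volume-weighted effective stress, and all function names are assumptions made for this illustration.

```python
import numpy as np


def pk1(F, mu, lam):
    """PK1 stress of the assumed compressible Neo-Hookean model."""
    F_inv_T = np.linalg.inv(F).T
    return mu * (F - F_inv_T) + lam * np.log(np.linalg.det(F)) * F_inv_T


def acoustic_tensor(F, N, mu, lam):
    """Q_ik = A_iJkL N_J N_L for the same model, with b = F^{-T} N."""
    b = np.linalg.solve(F.T, N)
    J = np.linalg.det(F)
    return mu * (N @ N) * np.eye(3) + (mu + lam * (1.0 - np.log(J))) * np.outer(b, b)


def solve_laminate(F_box, N, c_plus, mat_plus, mat_minus, tol=1e-10, max_iter=50):
    """Naive NR iteration enforcing traction continuity (no admissibility check)."""
    c_minus = 1.0 - c_plus
    a = np.zeros(3)
    for _ in range(max_iter):
        F_p = F_box + np.outer(a, N) / c_plus
        F_m = F_box - np.outer(a, N) / c_minus
        P_p, P_m = pk1(F_p, *mat_plus), pk1(F_m, *mat_minus)
        f = (P_p - P_m) @ N                       # traction jump (residual)
        if np.linalg.norm(f) < tol:
            break
        # d x d Hessian: derivative of the residual with respect to a
        H = (acoustic_tensor(F_p, N, *mat_plus) / c_plus
             + acoustic_tensor(F_m, N, *mat_minus) / c_minus)
        a = a - np.linalg.solve(H, f)
    else:
        raise RuntimeError("Newton-Raphson iteration did not converge")
    return a, c_plus * P_p + c_minus * P_m        # volume-weighted average stress


F_box = np.array([[1.10, 0.05, 0.00],
                  [0.00, 0.95, 0.02],
                  [0.03, 0.00, 1.00]])
N = np.array([1.0, 1.0, 0.0]) / np.sqrt(2.0)
a, P_box = solve_laminate(F_box, N, 0.3, (10.0, 15.0), (1.0, 1.5))
print(a)
```

The sketch deliberately omits any safeguard: an iterate with \(J_\pm \le 0\) makes the logarithm undefined, which is precisely the failure mode that motivates a robust update.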

4.2 Robust algorithm via selective back-projection

Unfortunately, a naive update of the gradient jump vector \(\varvec{a}\leftarrow \varvec{a}- {{\varvec{\Delta }}}_f^{-1} \varvec{ f}\) sometimes leads to physically unacceptable iterates: the local volume within \(\Omega ^e_\pm \) can become negative, which is inadmissible since it contradicts the constraints (29).

Robustness can be enhanced by restricting the material Jacobian \(J_\pm \) in both phases to be strictly positive according to (29). Previously, this problem was addressed in [16] by limiting the overall step width of the NR iterations if the constraint (29) is not met.

We choose a different approach that can improve the convergence behavior by building on the matrix determinant lemma (25). It allows for rewriting \(J_\pm \) as

We define

and, with help of \(\varvec{ m}_\beta \) the orthogonal projectors

The matrix determinant lemma implies that every NR iterate \(\varvec{a}\) must satisfy

i.e., a condition that is trivial to check. Most importantly, \(\varvec{ m}_\beta \) is independent of the current iteration, i.e., it can be precomputed.

If, starting from a previously admissible point \(\varvec{a}_0=\varvec{a}^{[k-1]}\), an inadmissible iterate \(\varvec{a}_1 \leftarrow \varvec{a}_0 + \delta \varvec{a}\) is detected, we compute \(\beta _i = \varvec{a}_i \cdot \varvec{ m}_\beta \) (\(i\in \{0, 1\}\)). Obviously \(\beta _0\) is admissible while \(\beta _1\) is not. We suggest computing a selectively back-projected admissible coordinate \(\beta _*\) as the midpoint between the admissible \(\beta _0\) and the critical value \(\beta _{\mathrm{c}} \in \{ \beta _+, \beta _-\}\) (the value which is exceeded by \(\beta _1\) is taken, see Algorithm 2):

Unlike the approach suggested in [16], our selective back-projection yields a different (admissible) iterate by adjusting only the part of \(\varvec{a}\) contributing to \(J_\pm \):

It leaves the part of \(\varvec{a}\) not interacting with the volume constraint – that is, the part of \(\varvec{a}_1\) that is orthogonal to \(\varvec{ m}_\beta \) – unaltered. Only the part of \(\varvec{a}_1\) that is co-linear with \(\varvec{ m}_\beta \) is modified such that a valid iterate is obtained. Using the projectors (40), this is equivalent to:

This implies that a large part of the NR update is preserved. No additional hyperparameters, line searches, or constitutive evaluations are required.

The increased robustness due to the selective back-projection is of utmost relevance for actual finite strain simulations, particularly in FE\(^2\)-like settings. This holds a fortiori if composite voxels with rather small phase volume fractions go along with pronounced phase contrast and high load increments, see Sect. 9.

As mentioned earlier, our algorithm shares similarities with the backtracking algorithm proposed in [16]. Both approaches use the matrix determinant lemma to check for inadmissible iterates. The backtracking of [16] is employed as a line search strategy to compute an optimal step size in the admissible range, leading to scalar backtracking of \(\varvec{a}\) and a damped NR method. This induces additional line search parameters as well as additional function evaluations. Contrary to that, our selective back-projection algorithm requires no extra constitutive evaluations and ensures an admissible update unconditionally in the absence of additional hyperparameters.
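The selective back-projection can be sketched in a few lines. The definitions used below are one possible reading of the text and are assumptions of this illustration: \(\varvec{ m}= \varvec{F}_{\square }^{-\textsf {T}} \varvec{N}\) (not normalized), \(\beta = \varvec{a}\cdot \varvec{ m}\), and, with the matrix determinant lemma (25) and \(\varvec{F}_\pm = \varvec{F}_{\square } \pm \varvec{a}\otimes \varvec{N}/c_\pm \), the admissible range \(-c_+< \beta < c_-\).

```python
import numpy as np


def back_project(a0, a1, F_box, N, c_plus):
    """Return a1 if admissible, otherwise a selectively back-projected iterate.

    a0 : previous admissible jump vector, a1 : trial jump vector.
    Only the component of a1 along m = F_box^{-T} N is modified.
    """
    c_minus = 1.0 - c_plus
    m = np.linalg.solve(F_box.T, N)          # independent of the iteration
    beta_lo, beta_hi = -c_plus, c_minus      # J_+ = 0 and J_- = 0, respectively
    beta0, beta1 = a0 @ m, a1 @ m
    if beta_lo < beta1 < beta_hi:
        return a1                            # trial iterate is admissible
    beta_c = beta_lo if beta1 <= beta_lo else beta_hi
    beta_star = 0.5 * (beta0 + beta_c)       # midpoint rule
    return a1 + (beta_star - beta1) * m / (m @ m)


F_box = np.array([[1.1, 0.1, 0.0],
                  [0.0, 0.9, 0.0],
                  [0.0, 0.05, 1.0]])
N = np.array([0.0, 0.0, 1.0])
m = np.linalg.solve(F_box.T, N)
t = np.cross(m, [1.0, 0.0, 0.0])             # direction orthogonal to m
a0 = np.zeros(3)
a1 = -0.5 * m / (m @ m) + 0.2 * t            # violates J_+ > 0 for c_+ = 0.1
a_star = back_project(a0, a1, F_box, N, 0.1)
print(a_star @ m, np.linalg.det(F_box + np.outer(a_star, N) / 0.1))
```

The check and the correction only involve dot products with the precomputed vector `m`, so neither additional constitutive evaluations nor tunable parameters enter.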

5 Identification of the normal vector for composite voxels and boxels

5.1 Existing procedures and complications

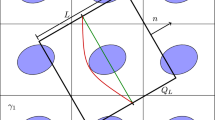

Composite voxels and boxels (non-equiaxed voxels) require the normal vector \(\varvec{N}\) alongside the phase volume fractions \(c_\pm \). While \(c_+\) and \(c_-\) are easy to compute from averaging over the composite voxel or boxel, identifying the normal vector \(\varvec{N}\) is not without issues. It is also evident that the quality of the normal information is critical to the performance of the composite boxels. The original proposal of Kabel and colleagues [15] uses the direction of the line connecting the barycenter of the dominant of the two phases within the composite voxel/boxel against the center of the voxel as an approximation of the normal vector, see Fig. 1. A trivial computation shows that this is equivalent to having the normal defined via the connecting line of the barycenters:

In the present work, the normal is assumed to point out of the inclusion phase (indexed by \(+\)).
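A minimal sketch of the barycenter-based normal for a single composite boxel is given below. The function name, the convention that `True` marks the inclusion phase, and the use of voxel centers as coordinates are assumptions of this illustration.

```python
import numpy as np


def barycenter_normal(chi, h=(1.0, 1.0, 1.0)):
    """Unit vector from the inclusion barycenter towards the matrix barycenter.

    chi : boolean 3D array of one boxel (True = inclusion phase)
    h   : fine-scale voxel edge lengths along the three axes
    """
    chi = np.asarray(chi, dtype=bool)
    if chi.all() or not chi.any():
        raise ValueError("boxel must contain both phases")
    # Voxel center coordinates, flattened consistently with chi.ravel()
    x = (np.indices(chi.shape).reshape(3, -1).T + 0.5) * np.asarray(h, dtype=float)
    inside = chi.ravel()
    d = x[~inside].mean(axis=0) - x[inside].mean(axis=0)
    return d / np.linalg.norm(d)


chi = np.zeros((4, 4, 4), dtype=bool)
chi[:2] = True                       # inclusion fills the lower half along x
print(barycenter_normal(chi))
```

For this axis-aligned half-filled boxel the result is exact; the shortcomings only show up for low volume fractions or pronounced aspect ratios.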

The approach (45) has several advantages to it: The implementation is rather trivial, the computation is rapid, and it is easy to guarantee that the normal \(\varvec{N}\) points from phase 1 (denoted inclusion phase in the sequel) into phase 0 (denoted matrix phase in the sequel). However, the use of the barycenters \(\varvec{c}_\pm \) for the normal detection is not without issues, as can be seen from the examples shown in Fig. 1. At low volume fractions, the normal direction will depend only on the position of the phase rather than on the actual interface (compare (c) and (d) in Fig. 1), and the method does not work for boxels that differ from equiaxed composite voxels by having (sometimes pronounced) aspect ratios, see Fig. 2. This occurs if the number of voxels inside the composite boxel varies along the different edges (with fine-scale voxels being equiaxed, e.g., Fig. 2, top). For instance, this can be useful in order to reduce the resolution in pseudo-unidirectional fiber reinforced materials; see Sect. 6.5 for examples.

In order to allow for an improved normal computation over (45), the authors suggest a novel strategy that can be broken down into two steps:

- N.1: compute an indicator for the interface between the phases using a discrete Laplacian resulting in discrete weights on the fine grid;

- N.2: within composite boxels that contain a material interface, define the normal vector via a minimization problem.

The individual steps are described below. A free Python implementation is available as open-access software on GitHub [39], including the option to process HDF5 files easily [40] (see also the documentation and tutorial given in the repository’s Jupyter notebook).

5.2 Interface indication via a discrete Laplacian

In the following, the Laplace filter commonly used in image processing is introduced. It can be employed for edge detection. For simplicity, the Laplace stencil is defined by building on a first-order finite difference gradient operator along each coordinate axis. Therefore, the 3D stencil illustrated in Fig. 3 is utilized.

In 1D for grid spacing \(h>0\), the derivative at position \(x_i\) and the second derivative (gathered from the subsequent application of the first derivative) read

Setting \(f_j=f(x_j)\) to value 1, if the inclusion phase is found at \(x_j\) and to 0 otherwise, the Laplacian will be 0, if and only if all pixel values within the stencil are identical. Therefore, only point triples hosting more than one phase will lead to a nonzero Laplacian. Further, pixels with positive and negative values will be found, which represent inside and outside voxels related to the interface, respectively. For 2D and 3D boxels, the stencil is composed of uniaxial Laplace stencils along the coordinate axes, using the respective h value corresponding to the fine-scale boxel dimension along the respective direction \((h_x, h_y, h_z)\), see also Fig. 3. The Laplace stencil will be weighted by the boxel volume. Thereby, the scalar factor will get a neat physical interpretation:

The ratio \(r_i\) expresses the ratio of the area of the boxel face with normal direction \(\varvec{e}_i\) divided by the boxel dimension along direction \(\varvec{e}_i\). After application of the stencil \({{{\mathcal {S}}}}\) to the image, the absolute value is taken to gain the discrete weights

The convolution is carried out in the Fourier domain, which automatically enforces periodic boundary conditions for the normal detection of inclusions crossing the edges of the computational domain. A direct computation would also be feasible at a comparable computational cost. The weights are now processed for each composite boxel attributed with multiple material phases, i.e., with volume fraction \(0< c_-< 1\). On each of these boxels, a weighted least squares problem is set up in order to identify the normal orientation. To this end, on the boxel \(\Omega _{\mathrm{B}}\) the second moment tensor

is computed, where \(\varvec{x}_{ijk}\) denotes the coordinates of the voxel with discrete coordinates (i, j, k) (see Footnote 4). Next, the eigenvector matching the smallest eigenvalue of \(\underline{\underline{M}}\) is used as the initial normal \(\varvec{N}\). In a second step, the direction of the normal \(\varvec{N}\) is identified. Therefore, the vector connecting the barycenters of the material phase within the boxels \(\widetilde{\varvec{N}}\) given by (45) of the original approach [15] is considered to identify the proper sign of the proposed \(\varvec{N}\):

Thereby, the normal is guaranteed to point out of the \(+\) phase.
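The steps of this section can be illustrated by the following minimal NumPy sketch. It is not the implementation from [39]; the function names are hypothetical, and several details are assumptions of the sketch: the Laplacian is evaluated directly with periodic shifts instead of in the Fourier domain, the coordinates are centered at the weighted barycenter of the interface voxels, and \(\widetilde{\varvec{N}}\) is taken as the vector from the barycenter of the \(+\) phase to that of the \(-\) phase.

```python
import numpy as np

def laplace_weights(phase, h):
    """Absolute value of the volume-weighted Laplace stencil (periodic).

    phase: binary 3D image (1 = '+' phase), h: fine-scale spacings (hx, hy, hz).
    Along axis i the 1D stencil [1, -2, 1] is scaled by r_i = V / h_i**2,
    i.e. face area with normal e_i divided by the boxel dimension h_i.
    """
    phase = np.asarray(phase, dtype=float)
    vol = float(np.prod(h))
    lap = np.zeros_like(phase)
    for axis, h_i in enumerate(h):
        r_i = vol / h_i**2
        lap += r_i * (np.roll(phase, 1, axis=axis)
                      + np.roll(phase, -1, axis=axis) - 2.0 * phase)
    return np.abs(lap)

def boxel_normal(phase, weights, h):
    """Estimate the unit normal in one composite boxel (assumed procedure)."""
    phase = np.asarray(phase)
    axes = [(np.arange(n) + 0.5) * h_i for n, h_i in zip(phase.shape, h)]
    grids = np.meshgrid(*axes, indexing="ij")
    x = np.stack([g.ravel() for g in grids], axis=1)  # voxel centers, (n, 3)
    w = np.asarray(weights, dtype=float).ravel()
    if w.sum() <= 0.0:
        raise ValueError("no interface voxels found in this boxel")
    # Assumption: center at the weighted barycenter of the interface voxels.
    c_s = (w[:, None] * x).sum(axis=0) / w.sum()
    dx = x - c_s
    M = np.einsum("v,vi,vj->ij", w, dx, dx)  # weighted second moment tensor
    _, vecs = np.linalg.eigh(M)              # eigenvalues in ascending order
    n = vecs[:, 0]                           # direction of least spread
    # Assumption: sign chosen so that the normal points out of the '+' phase.
    plus = phase.ravel() == 1
    n_tilde = x[~plus].mean(axis=0) - x[plus].mean(axis=0)
    return n if n @ n_tilde >= 0.0 else -n
```

For non-equiaxed boxels, only the tuple `h` and the block shape change. The eigenvector of the smallest eigenvalue is used because the weighted interface voxels spread least along the normal of a planar interface.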

The example shown earlier in Fig. 2 has been used with our approach, see Fig. 4. The interface voxels are shown in red with darker tones denoting increased weights. Note the good correlation of the analytically defined normal \(\varvec{N}_0\) used to generate the discrete image and the normal reconstructed using the proposed procedure. The collinearity of the two vectors is \(\varvec{N}_0 \cdot \varvec{N}= 0.99998\), where the interface detection was effected using matching padding for the analytical normal. This compares against \(\varvec{N}_0 \cdot \widetilde{\varvec{N}} = 0.7761\) for the normal shown in Fig. 2 obtained from (45).

Fig. 4 Normal vector for the same example as in Fig. 2 but using the proposed algorithm; interface voxels are red; \(\varvec{c}_S\) is the barycenter of the interface

Another comparison is shown in Fig. 5. Here an input image consisting of \(256^3\) voxels containing a centered sphere of radius \(r=0.4\,L\) (with L denoting the edge length of the cube) is coarsened using composite voxels of size \(32^3\), i.e., the information is compressed by a factor of \(32^3 = 32\,768\), yielding a coarse-scale resolution of just \(8^3\). For each composite voxel, the normal is computed using either the approach from (45) [15] (Fig. 5a) or the approach presented in this Section (Fig. 5b). In this visualization, the actual facets are reconstructed from the normal and the volume fraction \(c_+\). Visually, the normals of the old approach are less regular. Consequently, the facets reconstructed from them do not approximate the curved surface of the sphere accurately (e.g., gaps/overlaps). Our procedure leads to visually more accurate normals. This is confirmed by the planar facet reconstruction that is almost entirely devoid of gaps and overlaps.

Fig. 5 (Top view) Comparison of the established normal computation [15] (a, c) and the proposed approach (b, d) with composite boxels of size \(32\times 32\times 32\) and \(32\times 16\times 8\), respectively. Input image of size \(256^3\)

Next, we have repeated the previous comparison by scaling down from \(256^3\) to \(8\times 16\times 32\), i.e., introducing non-equiaxed composite boxels of size \(32\times 16\times 8\). The top views of the competing approaches for the normal computation are compared in Fig. 5. The difference when using boxels is massive, with large gaps showing in Fig. 5c for the old method, while basically no gaps and minimal overlap are found in Fig. 5d for the improved normal identification. The results from Figs. 4 and 5 are consistent. They emphasize the relevance of using a dedicated scheme for normal identification. This is all the more true in the presence of small volume fractions and/or non-equiaxed boxels.

6 Numerical examples

6.1 Material models and loading conditions

In this section, we will focus on the key aspects and novelties of the proposed composite boxel approach for mechanical applications at finite strains. We assume that the materials obey a compressible Neo-Hookean material model

The Young’s modulus \(E_\pm \) and the Poisson ratios \(\nu _\pm \) for the inclusion and the matrix phase are denoted by the respective subscripts. We have deliberately chosen a rather distinct contrast by setting the synthetic parameters

Note that Young’s modulus has a distinct contrast of 10, exceeding that of literally all practical metal matrix composites, while the difference in the Poisson ratio is also pronounced (see Footnote 5). Elevated contrast in the Poisson ratio is usually detrimental to the convergence of FFT-based schemes as it alters the collinearity of the stiffness of the contained phases, see [11] for a convergence study.
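The contrasts in the bulk and shear moduli quoted in Footnote 5 follow from the standard isotropic relations \(K = E/(3(1-2\nu ))\) and \(G = E/(2(1+\nu ))\). The short check below uses hypothetical parameter values: only the ratio of 10 in Young's modulus is stated in the text, and the Poisson ratios 0.3 (stiff phase) and 0 (soft phase) are an assumption chosen because they reproduce the contrasts of 25 and 7.69 given in the footnote.

```python
def bulk_modulus(E, nu):
    """Isotropic bulk modulus K = E / (3 (1 - 2 nu))."""
    return E / (3.0 * (1.0 - 2.0 * nu))

def shear_modulus(E, nu):
    """Isotropic shear modulus G = E / (2 (1 + nu))."""
    return E / (2.0 * (1.0 + nu))

# Hypothetical values: only E_stiff / E_soft = 10 is stated in the text;
# the Poisson ratios are assumed so that the footnote contrasts are matched.
E_stiff, nu_stiff = 10.0, 0.3
E_soft, nu_soft = 1.0, 0.0

contrast_K = bulk_modulus(E_stiff, nu_stiff) / bulk_modulus(E_soft, nu_soft)
contrast_G = shear_modulus(E_stiff, nu_stiff) / shear_modulus(E_soft, nu_soft)
print(f"K contrast: {contrast_K:.2f}, G contrast: {contrast_G:.2f}")
# prints "K contrast: 25.00, G contrast: 7.69"
```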

The macroscopic deformation gradient \({\overline{\varvec{F}}}\) imposed on the RVE in the homogenization problem (HOM) is chosen to be 50% pure shear in component \(\overline{F}_{xy}\)

The authors also emphasize the arbitrary selection of the material models (trying to trigger elevated material contrasts) and of the imposed kinematic loading. In view of reproducibility, we have opted for a simple unambiguous model and a substantial loading considering multiscale applications.
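To make the setup concrete, the following sketch evaluates the first Piola-Kirchhoff stress of one common compressible Neo-Hookean variant under a simple shear loading. Both choices are assumptions of this sketch: the energy \(W = \tfrac{\mu }{2}(\mathrm{tr}\,\varvec{C} - 3) - \mu \ln J + \tfrac{\lambda }{2}(\ln J)^2\) is only one of several \(\log \)-based forms and need not coincide with the model used here, and the loading is interpreted as \({\overline{\varvec{F}}} = \varvec{I} + 0.5\,\varvec{e}_x \otimes \varvec{e}_y\). The function names and the parameter values are hypothetical.

```python
import numpy as np

def lame_parameters(E, nu):
    """Convert Young's modulus and Poisson ratio to Lame parameters."""
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E / (2.0 * (1.0 + nu))
    return lam, mu

def neo_hooke_pk1(F, lam, mu):
    """P = mu (F - F^{-T}) + lam ln(J) F^{-T} for the assumed energy."""
    J = np.linalg.det(F)
    if J <= 0.0:
        # The log-based energy is undefined for inadmissible states.
        raise ValueError("inadmissible deformation gradient: det(F) <= 0")
    F_inv_T = np.linalg.inv(F).T
    return mu * (F - F_inv_T) + lam * np.log(J) * F_inv_T

# Assumed interpretation of the loading: 50% shear in the xy component.
F_bar = np.eye(3)
F_bar[0, 1] = 0.5

lam, mu = lame_parameters(E=1.0, nu=0.3)  # hypothetical parameters
P = neo_hooke_pk1(F_bar, lam, mu)
print(P)
```

The explicit check of \(J > 0\) reflects why admissibility matters for such energies: the stress grows without bound as \(J\) tends to 0, and the energy is undefined for \(J \le 0\).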

6.2 Spherical inclusion

Note that all of the following examples are truly 3D, although some slice views could lead to the misinterpretation of a 2D problem.

First, we look at a benchmark problem with a spherical inclusion of radius \(R = 0.4\) embedded in a 3D matrix. We aim to identify the impact of a more accurate normal identification (Sect. 5) for both equiaxed and non-equiaxed composite boxels. The influence on the phase averages is also studied. We start from a fine-scale microstructure of \(256^3\) voxels which is down-scaled to a resolution of \(32^3\) voxels (downscale factor 512). The coarsened discretization comprises 2624 (\(\sim \) 8%) composite voxels. In each composite voxel, the normal orientation \(\varvec{N}\) and the local phase volume fractions \(c_\pm \) are computed both via Sect. 5 and via the established method of [15]. The homogenization problem is then solved via Fourier-Accelerated Nodal Solvers (FANS, [11]) using 8-noded hexahedral finite elements. The convergence criterion is a relative tolerance of \(10^{-10}\) with respect to the \(l^\infty \)-norm of the nodal force residual vector.
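The coarse-graining step itself, i.e., computing the volume fraction per boxel and flagging composite boxels, can be sketched as a block average. The example below is an illustrative sketch with a hypothetical function name; it uses a smaller \(64^3\) image instead of \(256^3\) to keep it lightweight, so the number of composite boxels differs from the value reported above.

```python
import numpy as np

def coarse_grain(phase, boxel_shape):
    """Volume fraction c_+ per boxel via block-averaging a binary image."""
    phase = np.asarray(phase, dtype=float)
    bx, by, bz = boxel_shape
    nx, ny, nz = (s // b for s, b in zip(phase.shape, boxel_shape))
    if (nx * bx, ny * by, nz * bz) != phase.shape:
        raise ValueError("image size must be a multiple of the boxel size")
    blocks = phase.reshape(nx, bx, ny, by, nz, bz)
    return blocks.mean(axis=(1, 3, 5))

# Centered sphere of radius 0.4 L on a 64^3 grid (voxel-center sampling).
n = 64
x = (np.arange(n) + 0.5) / n - 0.5
X, Y, Z = np.meshgrid(x, x, x, indexing="ij")
sphere = (X**2 + Y**2 + Z**2 <= 0.4**2).astype(int)

c_plus = coarse_grain(sphere, (8, 8, 8))           # equiaxed: 8^3 boxels
is_composite = (c_plus > 0.0) & (c_plus < 1.0)     # boxels hosting both phases
downscale = sphere.size // c_plus.size             # 8 * 8 * 8 = 512
print(downscale, int(is_composite.sum()), c_plus.size)

c_plus_boxel = coarse_grain(sphere, (8, 4, 2))     # non-equiaxed boxels
print(c_plus_boxel.shape)
```

Only boxels with \(0< c_+ < 1\) require the laminate treatment and a normal; all others keep the constitutive law of the single phase they contain.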

In Fig. 6, the converged solution is shown at the center slice \(Z = 0\). We employ a post-processing procedure that gathers individual field data for each phase and visualizes it using the planar interface with orientation \(\varvec{N}\).

It is obvious that this leads to a much smoother solution close to the interface, even within the individual phases. To our knowledge, a comparable visualization has not been used in previous studies. Further, we have visualized the tractions in the deformed configuration (Fig. 6c), which are unavailable in both non-conforming discretizations as well as in usual conforming FE discretizations.

Regarding the accuracy of the effective stress tensor \(\overline{\varvec{P}}\) and the phase averages \(\overline{\varvec{P}}_\pm \), a study was performed that compares the \(256^3\) reference solution, computed using the procedure suggested in [9], against five different ComBo discretizations solved using reduced integration CG-FANS [11] (FANS HEX8R), once with the normals from (45) matching previous studies and once with the improved normals from Sect. 5. The results are summarized in Table 1. Note that the average stress only reflects part of the actual accuracy of the solver as it neglects local field fluctuations, which are examined in the following examples, see Sect. 6.3. It can be noted that the errors in \(\overline{\varvec{P}}\) of all composite discretizations are below 1.15% for the normals of [15] and 0.78% for the normals suggested in Sect. 5, respectively, i.e., the improved normals reduce the error in all averaged stresses by approximately 30% for equiaxed composite voxels and up to 1 000% for the non-equiaxed composite boxels. Remarkably, these improvements come at no additional computational expense; they are due solely to the more accurate orientation information.

6.3 Composite boxels and local solution field quality

The straightforward implementation of composite boxels into various FFT-based schemes makes them truly versatile. Here, we present some of the most popular methods used in tandem with composite boxels. Most importantly, the composite boxel method can be used to replace any call to a constitutive model, independent of the discretization method.

In Fig. 7, we consider a random polyhedral inclusion surrounded by the matrix material. The fine-scale microstructure is generated at a resolution of \(256^3\). The ComBo discretization is \(32^3\) with normals gained from Sect. 5, yielding a 512 times smaller problem. We further go on to compare the full field solutions for the \(E_{XY}\) component of the Green-Lagrange strain at the center slice (\(Z=0\)) for various popular FFT-based solution strategies. A reference solution is computed on the original fine-scale problem (without any composite boxels).

The solution using FANS with full integration (8 Gauss points per element) finite elements (HEX8) is shown next to the reference. It exhibits no staircasing and no artifacts even close to the interface. The solution quantitatively captures the behavior well in the interior of the phases and near the phase boundaries compared to the reference solution, even for this coarse discretization. Stereotypical of the fully integrated HEX8 elements, the response is a tad stiffer, which is reflected in the phase-wise averages, Table 2.

The solution obtained by the staggered grid approach, despite its advantages in an algorithmic sense, lacks symmetry in the physical location of the strain components in each voxel. Hence, only an interpolated measure can be obtained at the boxel center for visualization. This ambiguity, unfortunately, leads to poor results close to the material interface despite the use of the ComBo discretization, which is also reflected in the homogenized quantities. This issue can partially be overcome by the proposed doubly-fine material grid (DFMG, see Appendix A) approach at the cost of extra material law evaluations compared to the original staggered grid approach. The DFMG approach also interpolates the field quantities to the boxel center, which causes blurring/smoothing everywhere, including the material boundaries: local solution field accuracy is sacrificed, although DFMG outperforms the staggered grid approach, and it preserves existing symmetries.

FANS HEX8R, i.e., with reduced integration (1 Gauss point per element), is numerically similar to the HEX8R discretization by Willot [9], as shown by [10]. The FANS HEX8R solution suffers from hourglassing, but the amplitude of the hourglass modes is greatly diminished in the presence of composite boxels compared to when no composite boxels are in use. The convergence behavior of FANS HEX8R is very much on par with FANS with fully integrated HEX8 elements.

Finally, we also employ the original Moulinec-Suquet scheme, which has been extensively studied in the literature. It suffers from spurious oscillations, although it predicts the homogenized quantities very well.

6.4 Selective back-projection in practice

The selective back-projection introduced in Sect. 4.2 is investigated for the polyhedral microstructure of the previous Sect. 6.3. First, we demonstrate the influence it has on the convergence of the NR scheme inside of a single critical voxel, see Fig. 8.

The traction balance residual vs. material Jacobian of phase \(-\) is plotted in a particular case when back-projection is employed. In the case discussed, the volume fraction of the inclusion phase \(+\) is 99.625%. The Newton-Raphson scheme (Algorithm 1) starts with an admissible initial guess (at \(k=0\)), but the naive NR-update would push the iterate \(\varvec{a}^{[1]}\) to the inadmissible domain, which is highlighted in red in Fig. 8. The solid blue line tracks the continuous intermediate residual between the current iterate \(\varvec{a}^{[k]}\) and the subsequent iterate \(\varvec{a}^{[k+1]}\) along a line search parameter. Note that if either one of \(J_\pm \) tends to 0, the residual tends to \(\infty \), which is characteristic of \(\log \)-based hyperelastic strain energies [41]. It is also noteworthy that, although the solution lies in between \(\varvec{a}^{[0]}\) and \(\varvec{a}^{[1]}\) in this plot, the continuous residual does not go to zero anywhere. This is due to the projection onto the \(J_-\) axis (corresponding to the \(\beta \) axis modulo scaling) which cannot account for incorrect components \(\varvec{M}^\perp _\beta \varvec{a}^{[k]}\), i.e., a mere line search cannot suffice, in general. Using the selective back-projection (Algorithm 2) yields a valid iterate \(\varvec{a}^{[1]}\). From there on, the NR scheme (Algorithm 1) converges to a physically feasible solution within six iterations up to machine precision, and in practice, one could stop after just four iterations. Each iteration of our NR algorithm equates to a single evaluation of the constitutive law for either phase, independent of whether selective back-projection is needed.

Despite striking similarities with the algorithm proposed in [16], we developed our scheme independently since we observed that it was needed during the simulations. While the authors of [16] state that “The projection and backtracking steps occur only rarely”, we would like to emphasize that a single inadmissible iterate can break the entire simulation (e.g. leading to negative \(J_\pm \) used in \(\log \)-energies). Further, we have investigated the percentage of the composite boxels that require selective back-projection as a function of the loading for the polyhedral inclusion, see Fig. 9.

Fig. 9 Percentage of boxels (problem cf. Sect. 6.3) with failed naive NR updates for mixed displacement gradient loading conditions

It turns out that our algorithm is needed in a majority of the composite voxels under certain loading conditions. This becomes critical in actual two-scale simulations, where spuriously high loadings are frequently observed in isolated points, especially in the compression regime.

On a side note, we would like to emphasize that in our tests, the old normal direction cf. [15] further increased the number of failed iterates. Hence, using the normal detection from Sect. 5 alongside Algorithm 2 improves on the robustness in both regards.

6.5 Short fiber reinforced microstructures

In this Section, we investigate fiber-reinforced composites with global fiber directional affinity to show the effectiveness of non-equiaxed composite boxels in certain use cases. We consider the two microstructures shown in Fig. 10a and b, referred to as [FRP-1] and [FRP-2], respectively. Microstructure [FRP-1] is a fibrous microstructure with almost aligned fibers with a fiber volume fraction of \(\sim 4 \%\) primarily oriented along the X-axis. This problem is down-scaled to varied equiaxed and non-equiaxed resolutions, tabulated in Table 3, starting from a fine-scale image comprising \(504^3\) voxels. Similarly, the microstructure [FRP-2] hosts 150 fibers almost aligned along the X-axis with a fiber volume fraction of \(\sim 15\%\). The fibers have a length of \(120\,\mu \mathrm{m}\) and a diameter of \(12\,\mu \mathrm{m}\), and the minimum fiber distance is about \(2\,\mu \mathrm{m}\). This problem is down-scaled to varied equiaxed and non-equiaxed resolutions, tabulated in Table 4, starting from a fine-scale image comprising \(240^3\) voxels.

The overall homogenized first Piola-Kirchhoff stress and its phase-wise counterparts are compared against a reference solution computed without the use of composite boxels using the FFT solver proposed in [9], while the coarse-grained models are solved using FANS HEX8R.

Figure 10c and d show that the ComBo discretization leads to reasonable local stress fields despite the use of massively anisotropic grids. Thereby, the resolution of the simulation can adapt to the aspect ratio of the fibers, allowing for distinct computational gains while, at the same time, the lateral resolution can remain sufficiently fine (a) to gain accurate orientation data (Sect. 5) and (b) to separate individual fibers. Both can be achieved without growing the number of degrees of freedom. For instance, [FRP-1] is resolved 3.5 times coarser along the fiber axis, yielding a speed-up and memory savings of the same order against an equiaxed grid. For [FRP-1], several ComBo discretizations are compared against a reference solution regarding \(\overline{\varvec{P}}, \overline{\varvec{P}}_\pm \) in Table 3. The overall accuracy was better than 1% with improvements independent of the boxel aspect ratio as the number of boxels grows. The accuracy within the inclusion phase is impressive, given that the fibers make up only \(\sim 4 \%\) of the material. Similar trends are observed in Table 4 for the [FRP-2] problem, where the use of non-equiaxed boxels becomes vital in order to resolve very small fiber distances. Much coarser non-equiaxed resolutions still perform better than corresponding equiaxed resolutions, underlining the need to resolve the lateral plane of the fibers properly. It can also be observed that modest improvements are seen when the resolution along the fibers is increased, while much more substantial improvements are observed when the fiber lateral plane is refined.

We also extract overall and phase-wise histograms of the von Mises Cauchy stress for the [FRP-2] problem, as shown in Fig. 11, in order to judge the quality of the full field solution. The stress distributions for the equiaxed \(48^3\) (downscale factor 125) and the non-equiaxed \(30\times 60\times 60\) (downscale factor 128) resolutions are compared against a reference solution at \(240^3\). Although a visual comparison already suggests a better agreement of the non-equiaxed resolution with the reference solution, the corresponding cumulative squared deviations of the distributions from the reference stress histogram are also plotted. They show unequivocally that the non-equiaxed resolution has a lower error across the board and thus captures the overall field solution better.
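A comparison of this kind can be sketched as follows. The exact binning and normalization behind Fig. 11 are not specified in the text, so the histogram settings and the definition of the cumulative squared deviation below (running sum of squared differences of density-normalized histograms on common bins) are assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np

def von_mises(sigma):
    """Von Mises equivalent stress for Cauchy stresses of shape (..., 3, 3)."""
    sigma = np.asarray(sigma, dtype=float)
    trace = np.trace(sigma, axis1=-2, axis2=-1)
    dev = sigma - trace[..., None, None] * np.eye(3) / 3.0  # deviatoric part
    return np.sqrt(1.5 * np.sum(dev * dev, axis=(-2, -1)))

def cumulative_squared_deviation(values, reference, bins):
    """Running sum of squared histogram differences on common bins (assumed metric)."""
    h, _ = np.histogram(values, bins=bins, density=True)
    h_ref, _ = np.histogram(reference, bins=bins, density=True)
    return np.cumsum((h - h_ref) ** 2)

# Synthetic stand-in data; real input would be the per-voxel stresses.
rng = np.random.default_rng(0)
reference = rng.normal(1.0, 0.2, 5000)
coarse = reference + rng.normal(0.0, 0.05, 5000)
bins = np.linspace(0.0, 2.0, 41)
print(cumulative_squared_deviation(coarse, reference, bins)[-1])
```

Using identical bins for all resolutions is essential here, since the histograms are compared bin by bin; the final entry of the cumulative sum then serves as a single scalar error measure.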

7 Résumé

7.1 Summary

We present an extension of the composite voxel/boxel (anisotropic voxel) approach of [15] towards finite strain hyperelasticity for FFT-based homogenization schemes similar to [16]. The foundations of FFT-based homogenization are recalled in Sect. 3, and a detailed description of the doubly-fine material grid which can rule out some issues of the staggered grid approach [10] regarding the local field accuracy, is outlined in Appendix A.

The detailed algorithmic treatment of the composite voxels/boxels in Sect. 4 yields low-, i.e., d-dimensional nonlinear equations to be solved with explicit Hessians being provided for infinitesimal and finite strain problems; see also the cheat sheet in Appendix 5. The algorithmic tangent operator of the composite voxels is provided too, and it has a sleek representation with a simplistic implementation. A crucial ingredient in composite boxel finite strain simulations is the back-projection scheme described in Algorithm 2. It ensures the admissibility of the deformation in either of the laminate phases at negligible computational overhead but much-increased robustness.

When examining composite voxels and boxels, some issues with the normal detection [15] were found. In Sect. 5, an improved algorithm for normal identification is suggested, which leads to considerable improvements in the laminate orientation within the composite voxels. The proposed algorithm can also process composite boxels (ComBo) characterized by non-equiaxed coarsening, which was impossible using the approach by [15]. The procedure was shown to yield accurate normals for different microstructures. An open-source Python implementation with examples can be accessed through the GitHub repository [39]. It also features 3D tools for visualization and a tutorial demonstrating the usage.

In Sect. 6, a variety of different microstructures are simulated using different FFT-based solvers, different normal detection procedures, and different coarse-grained resolutions. The results demonstrate that the local fields using the ComBo discretization closely match full resolution solutions. FANS HEX8 and HEX8R [11, 12] were found to yield the smoothest representation of the local fields. Despite the tendency of HEX8 to overestimate the stresses, this discretization has the smoothest stress fields. Moreover, the suggested normal detection was shown to provide notably improved accuracy on the overall stress response as well as for the phase-wise averaged stresses (see Tables 1, 2, 3 and 4). The improvement for actual boxels was even more notable. Further, the stress statistics for equiaxed and non-equiaxed resolutions with similar downscale factors hint at the improved quality of the local stresses for the same downscale factor. Additionally, the normals were shown to deliver convergence of the effective stresses that depends mainly on the number of DOF, i.e., the overall amount of coarse-graining, see Tables 3 and 4. Computational savings by a factor of 2 000 and beyond at errors around 1% in the phase-averaged stresses are observed.

7.2 Discussion

First up, the authors are thoroughly convinced that composite boxels have proven to be a valuable addition to many established FFT-based homogenization schemes. They allow for impressive computational savings in CPU time and memory (factor 2 000 and beyond) at a modest—if any—sacrifice in accuracy. The accuracy was further improved by using the novel strategy for the normal detection from Sect. 5. It leads to a reduction of approximately 30% in the relative errors of the effective stress \(\overline{\varvec{P}}\) and its phase-wise counterparts \(\overline{\varvec{P}}_\pm \) even for relatively smooth and simple microstructures. In the case of anisotropic boxels, more distinct improvements in the error were found. Surprisingly, in the presence of composite boxels, the number of DOF of the system seems to be the primary influence factor regarding the accuracy of the simulation even when pronounced boxel anisotropy is considered. A key advantage of non-equiaxed boxels is that pseudo-unidirectional fiber separation can be granted without growing the number of degrees of freedom of the problem while retaining accuracy.

We think that this can leverage the simulations in certain fields, e.g., for discontinuous short fiber composites with pronounced aspect ratios (e.g., 20 and beyond) and pseudo unidirectional fiber orientation. We are also convinced that making the source code for the normals freely available [39] could help in rendering composite boxels an attractive choice in academia and industry. In the future, we are confident that more refined comparisons of the actual solution fields of ComBo and high-resolution simulations will lead to further insights regarding the accuracy and overall efficiency.

By the introduction of the doubly-fine material grid for the staggered grid discretization [10], considerable improvements with respect to the quality of the local fields were observed. However, these come at the expense of a distinct rise in the number of constitutive evaluations and little gain regarding the overall homogenized response. Therefore, this method is probably best suited when local solution fields are sought-after. In this regard, FANS [11], or the equivalent FFT-\(Q_1\) Hex [12], show the most confidence-inspiring results: local fields are smooth and match the reference solution closely; hourglassing is less pronounced (HEX8R) or absent (HEX8) compared to the almost identical discretization of [9]. It is important to state that the use of the ComBo discretization comes without issues.

The application of composite voxels/boxels in finite strain homogenization problems revealed that particular care should be taken in view of considering physical constraints: The selective back-projection algorithm (Algorithm 2) demonstrates that robustness can be gained and (sometimes unrecognized) physically questionable iterates might occur in practice. We are confident that the presented algorithm is a leap forward. Despite this improvement, the realizable loading can still be limited, particularly when the phase volume fractions within the composite boxels tend towards 0 or 1 and the contrast in stiffness is pronounced. This can imply that the deformation gradient can approach critical states not just in the laminate but on the overall ComBo voxel (denoted \(\varvec{F}_{\square }\)). Finding further refinements to the simulation scheme could further boost robustness, giving rise to future research topics.

An important message is also given by demonstrating the usefulness of ComBo discretizations for a rich set of different discretizations: FANS HEX8(R)/FFT-\(Q_1\) Hex [11, 12], DFMG, staggered grid [10], the rotated grid scheme of Willot [9], and the classical Moulinec–Suquet scheme [6, 7] were all used with success and building on the same implementation. The authors would like to emphasize that the approach is, however, not limited to FFT-based schemes: regular Finite Element and Finite Difference schemes could use them too, yielding potential benefits without the intrusiveness of, e.g., the extended finite element method (X-FEM, e.g., [42]).

Related to the recent progress reported by [43], the extension of our framework for interface mechanics in the small and finite strain setting is a promising route. Major benefits due to the improved normal orientations from Sect. 5 are expected: Both the local solution fields and the (thereby influenced) interfacial tractions are assumed to gain in accuracy.

Last, an equivalent to composite boxels for materials with more than two phases is urgently needed, e.g., in order to deal with polycrystals.

8 Supplementary information

The normal detection algorithm is available from [39].

Notes

1. In the nonlinear kinematic regime, these correspond to the Taylor and Sachs approximation, respectively.

2. Symmetry is exploited.

3. (slightly different to that used, e.g., in [17])

4. It is assumed that \(\varvec{x}_{ijk}\) is relative to the barycenter of \(\Omega _{\square }\).

5. This is equivalent to a contrast of 25 in the bulk modulus K and a contrast of 7.69 in the shear modulus G.

References

Arbenz P, van Lenthe GH, Mennel U, Müller R, Sala M (2008) A scalable multi-level preconditioner for matrix-free \(\mu \)-finite element analysis of human bone structures. Int J Numer Methods Eng 73(7):927–947. https://doi.org/10.1002/nme.2101

Arbenz P, Flaig C, Kellenberger D (2014) Bone structure analysis on multiple GPGPUs. J Parallel Distrib Comput 74:2941–2950. https://doi.org/10.1016/j.jpdc.2014.06.014

Kanit T, Forest S, Galliet I, Mounoury V, Jeulin D (2003) Determination of the size of the representative volume element for random composites: statistical and numerical approach. Int J Solids Struct 40(13–14):3647–3679. https://doi.org/10.1016/S0020-7683(03)00143-4

Andrä H, Combaret N, Dvorkin J, Glatt E, Han J, Kabel M, Keehm Y, Krzikalla F, Lee M, Madonna C, Marsh M, Mukerji T, Saenger EH, Sain R, Saxena N, Ricker S, Wiegmann A, Zhan X (2013) Digital rock physics benchmarks - Part I: imaging and segmentation. Comput Geosci 50:25–32. https://doi.org/10.1016/j.cageo.2012.09.005

Andrä H, Combaret N, Dvorkin J, Glatt E, Han J, Kabel M, Keehm Y, Krzikalla F, Lee M, Madonna C, Marsh M, Mukerji T, Saenger EH, Sain R, Saxena N, Ricker S, Wiegmann A, Zhan X (2013) Digital rock physics benchmarks - Part II: computing effective properties. Comput Geosci 50:33–43. https://doi.org/10.1016/j.cageo.2012.09.008

Moulinec H, Suquet P (1994) A fast numerical method for computing the linear and nonlinear mechanical properties of composites. Comptes rendus de l’Académie des sciences. Série II, Mécanique, physique, Chimie, astronomie 318(11), 1417–1423

Moulinec H, Suquet P (1998) A numerical method for computing the overall response of nonlinear composites with complex microstructure. Comput Methods Appl Mech Eng 157(1–2):69–94. https://doi.org/10.1016/s0045-7825(97)00218-1

Michel JC, Moulinec H, Suquet P (2001) A computational scheme for linear and non-linear composites with arbitrary phase contrast. Int J Numer Meth Eng 52(1–2):139–160. https://doi.org/10.1002/nme.275

Willot F (2015) Fourier-based schemes for computing the mechanical response of composites with accurate local fields. Comptes Rendus Mécanique 343(3):232–245. https://doi.org/10.1016/j.crme.2014.12.005

Schneider M, Ospald F, Kabel M (2016) Computational homogenization of elasticity on a staggered grid. Int J Numer Meth Eng 105(9):693–720. https://doi.org/10.1002/nme.5008

Leuschner M, Fritzen F (2018) Fourier-accelerated nodal solvers (FANS) for homogenization problems. Comput Mech 62(3):359–392. https://doi.org/10.1007/s00466-017-1501-5

Schneider M, Merkert D, Kabel M (2017) FFT-based homogenization for microstructures discretized by linear hexahedral elements. Int J Numer Meth Eng 109(10):1461–1489. https://doi.org/10.1002/nme.5336

Vondřejc J, Zeman J, Marek I (2014) An FFT-based Galerkin method for homogenization of periodic media. Comput Math Appl 68:156–173. https://doi.org/10.1016/j.camwa.2014.05.014

Gélébart L, Ouaki F (2015) Filtering material properties to improve FFT-based methods for numerical homogenization. J Comput Phys 294:90–95. https://doi.org/10.1016/j.jcp.2015.03.048

Kabel M, Merkert D, Schneider M (2015) Use of composite voxels in FFT-based homogenization. Comput Methods Appl Mech Eng 294:168–188. https://doi.org/10.1016/j.cma.2015.06.003

Kabel M, Ospald F, Schneider M (2016) A model order reduction method for computational homogenization at finite strains on regular grids using hyperelastic laminates to approximate interfaces. Comput Methods Appl Mech Eng 309:476–496. https://doi.org/10.1016/j.cma.2016.06.021

Kabel M, Fink A, Schneider M (2017) The composite voxel technique for inelastic problems. Comput Methods Appl Mech Eng 322:396–418. https://doi.org/10.1016/j.cma.2017.04.025

Uchic MD, Groeber MA, Dimiduk DM, Simmons JP (2006) 3D microstructural characterization of nickel superalloys via serial-sectioning using a dual beam FIB-SEM. Scripta Mater 55(1):23–28. https://doi.org/10.1016/j.scriptamat.2006.02.039

Fliegener S, Luke M, Gumbsch P (2014) 3D microstructure modeling of long fiber reinforced thermoplastics. Compos Sci Technol 104:136–145. https://doi.org/10.1016/j.compscitech.2014.09.009

Yvonnet J, Bonnet G (2014) A consistent nonlocal scheme based on filters for the homogenization of heterogeneous linear materials with non-separated scales. Int J Solids Struct 51(1):196–209. https://doi.org/10.1016/j.ijsolstr.2013.09.023

Jänicke R, Diebels S, Sehlhorst H-G, Düster A (2009) Two-scale modelling of micromorphic continua. Continuum Mech Thermodyn 21(4):297–315. https://doi.org/10.1007/s00161-009-0114-4

Suquet P (1985) Local and global aspects in the mathematical theory of plasticity. Plasticity today, 279–309

Feyel F (1999) Multiscale FE2 elastoviscoplastic analysis of composite structures. Comput Mater Sci 16(1–4):344–354. https://doi.org/10.1016/S0927-0256(99)00077-4

Kröner E (1977) Bounds for effective elastic moduli of disordered materials. J Mech Phys Solids 25(2):137–155. https://doi.org/10.1016/0022-5096(77)90009-6

Zeller R, Dederichs PH (1973) Elastic constants of polycrystals. Physica Status Solidi (B) 55(2):831–842. https://doi.org/10.1002/pssb.2220550241

Cooley JW, Tukey JW (1965) An algorithm for the machine calculation of complex Fourier series. AMS Math Comput 19(90):297–301

Lahellec N, Michel JC, Moulinec H, Suquet P (2003) Analysis of inhomogeneous materials at large strains using fast Fourier transforms 108:247–258. https://doi.org/10.1007/978-94-017-0297-3_22

Eisenlohr P, Diehl M, Lebensohn RA, Roters F (2013) A spectral method solution to crystal elasto-viscoplasticity at finite strains. Int J Plast 46:37–53. https://doi.org/10.1016/j.ijplas.2012.09.012

Kabel M, Böhlke T, Schneider M (2014) Efficient fixed point and Newton-Krylov solvers for FFT-based homogenization of elasticity at large deformations. Comput Mech 54(6):1497–1514. https://doi.org/10.1007/s00466-014-1071-8

Vinogradov V, Milton GW (2008) An accelerated FFT algorithm for thermoelastic and non-linear composites. Int J Numer Meth Eng 76(11):1678–1695. https://doi.org/10.1002/nme.2375

Gélébart L, Mondon-Cancel R (2013) Non-linear extension of FFT-based methods accelerated by conjugate gradients to evaluate the mechanical behavior of composite materials. Comput Mater Sci 77:430–439. https://doi.org/10.1016/j.commatsci.2013.04.046

Schneider M (2020) A dynamical view of nonlinear conjugate gradient methods with applications to FFT-based computational micromechanics. Comput Mech 66(1):239–257. https://doi.org/10.1007/s00466-020-01849-7

Schneider M (2021) A review of nonlinear FFT-based computational homogenization methods. Acta Mech 232(6):2051–2100. https://doi.org/10.1007/s00707-021-02962-1

Michel JC, Moulinec H, Suquet P (1999) Effective properties of composite materials with periodic microstructure: a computational approach. Comput Methods Appl Mech Eng 172(1–4):109–143. https://doi.org/10.1016/S0045-7825(98)00227-8

Leuschner M, Fritzen F (2018) Fourier-accelerated nodal solvers (FANS) for homogenization problems. Comput Mech 62(3):359–392. https://doi.org/10.1007/s00466-017-1501-5

Ospald F, Schneider M, Kabel M (2015) Computational homogenization of elasticity at large deformations on a staggered grid. In: Conference proceedings of the YIC GACM 2015, pp. 178–191. https://publications.rwth-aachen.de/record/480970

Merkert D, Andrä H, Kabel M, Schneider M, Simeon B (2015) An efficient algorithm to include sub-voxel data in FFT-based homogenization for heat conductivity 105:267–279. https://doi.org/10.1007/978-3-319-22997-3_16

Milton GW (2002) The theory of composites

Fritzen F. https://github.com/DataAnalyticsEngineering/ComBoNormal

The HDF Group (2022). https://www.hdfgroup.org/

Doll S, Schweizerhof K (2000) On the development of volumetric strain energy functions. J Appl Mech 67(1):17–21. https://doi.org/10.1115/1.321146

Loehnert S, Mueller-Hoeppe DS, Wriggers P (2011) 3D corrected XFEM approach and extension to finite deformation theory. Int J Numer Meth Eng 86(4–5):431–452. https://doi.org/10.1002/nme.3045

Chen Y, Gélébart L, Marano A, Marrow J (2021) FFT phase-field model combined with cohesive composite voxels for fracture of composite materials with interfaces. Comput Mech 68:433–457. https://doi.org/10.1007/s00466-021-02041-1

Acknowledgements

Funded by Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy - EXC 2075 - 390740016. Contributions by Felix Fritzen are funded by Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) within the Heisenberg program - DFG-FR2702/8 - 406068690. We acknowledge the support by the Stuttgart Center for Simulation Science (SimTech).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare no potential conflict of interest.

Appendix A A finite difference discretization on a staggered grid

In the sequel, we describe the consistent formulation of the staggered grid discretization [10] for the geometrically nonlinear case [36]. Similar to the small strain case, the diagonal and off-diagonal components of the deformation gradient are located at different positions; compare Fig. 12. It is shown that ignoring this leads to unsatisfactory results, which can be significantly improved by using a doubly-fine material grid (DFMG). In contrast to the linear elastic small strain regime, however, the DFMG needs an increased number of evaluations of the nonlinear material law.

Fix positive integers \(n_1,n_2,n_3\) and consider a regular periodic grid consisting of \(n = n_1 n_2 n_3\) cells, each a translate of \([0,\, h_1]\times [0,\, h_2]\times [0,\, h_3]\) with \(h_j=\frac{l_j}{n_j}\) (\(j=1,2,3\)). Let \(i^+,j^+\) and \(k^+\) denote the staggered grid coordinates, i.e., \(a^+=a+\tfrac{1}{2}\) for \(a=i,j,k\). For the \((i,\;j,\;k)\)-cell the components of the displacement vector \((U_1,\;U_2,\;U_3)\) are located at the cell face centers, i.e., \(U_1[i,\;j,\;k]\) is located at the coordinate \((ih_1,\;j^+ h_2,\;k^+ h_3)\), \(U_2[i,\;j,\;k]\) lives at \((i^+ h_1,\;jh_2,\;k^+ h_3)\) and \(U_3[i,\;j,\;k]\) is associated to \((i^+ h_1,\;j^+ h_2,\;kh_3)\). The situation is displayed in Fig. 12a, where we have restricted ourselves to the 2D case for clarity.

The diagonal components \(F_{aA}[i,\;j,\;k]\) (\(a = A \in \{1,2,3\}\)) of the deformation gradient \(\varvec{F}\) are positioned at the cell centers \((i^+ h_1,\;j^+ h_2,\;k^+ h_3)\), whereas the off-diagonal components \(F_{23},F_{32}[i,\;j,\;k]\), \(F_{13},F_{31}[i,\;j,\;k]\) and \(F_{12},F_{21}[i,\;j,\;k]\) are located at the corresponding edge midpoints \((i^+ h_1,\;jh_2,\;kh_3)\), \((ih_1,\;j^+ h_2,\;kh_3)\) and \((ih_1,\;jh_2,\;k^+ h_3)\), respectively. For visualization, we again refer to Fig. 12a.
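The variable placement described above can be written down compactly. The following is a minimal sketch, not part of the original implementation; the function names `displacement_position` and `gradient_position` are hypothetical. Positions are returned in units of the cell sizes \(h_1, h_2, h_3\), so a value of \(\tfrac{1}{2}\) corresponds to a half-cell shift.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def displacement_position(a, cell):
    """Position of U_a[i, j, k] in units of (h_1, h_2, h_3), a in {1, 2, 3}.

    U_a sits on the center of the cell face normal to direction a:
    integer coordinate in direction a, half-shifted in the other two.
    """
    return tuple(Fraction(c) if d == a else c + HALF
                 for d, c in enumerate(cell, start=1))


def gradient_position(a, A, cell):
    """Position of F_aA[i, j, k] in units of (h_1, h_2, h_3).

    Diagonal components sit at the cell center. An off-diagonal component
    sits on the midpoint of the edge parallel to the remaining third
    direction, i.e. only that coordinate is half-shifted.
    """
    if a == A:
        return tuple(c + HALF for c in cell)
    third = 6 - a - A  # the direction that is neither a nor A
    return tuple(c + HALF if d == third else Fraction(c)
                 for d, c in enumerate(cell, start=1))
```

Multiplying each entry by the corresponding \(h_j\) gives the physical coordinate. Exact fractions are used only so that half-cell shifts can be compared without rounding.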

Displacements and gradients are connected by central difference formulae, where periodicity is understood implicitly. More precisely, introduce forward and backward difference operators on a scalar discrete field \(\phi : V_n\rightarrow {\mathbb { R}}\) by the formulae

for \(I\in V_n=\left\{ 0,1,\ldots ,n_1-1\right\} \times \left\{ 0,1,\ldots ,n_2-1\right\} \times \left\{ 0,1,\ldots ,n_3-1\right\} \). Then we introduce the gradient operator

giving rise to the deformation gradient \(\varvec{F}=I+\mathrm{Grad}\left( \varvec{ U} \right) \) associated to a periodic displacement field

Similarly, there is a divergence operator, turning \(\varvec{P}:V_n\rightarrow {\mathbb { R}}^{3\times 3}\) into

The stress variable \(P_{aB}\) is located on the same position as the corresponding \(F_{aB}\).
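To make the operators concrete, the sketch below implements periodic forward and backward differences, \((\phi[I+e_j]-\phi[I])/h_j\) and \((\phi[I]-\phi[I-e_j])/h_j\), and builds a gradient and a divergence from them. The pairing of forward and backward differences is inferred from the variable placement described above and should be read as an assumption: diagonal entries of the gradient use forward differences (face center to cell center), off-diagonal entries use backward differences (face center to edge midpoint), and the divergence uses the opposite difference in each entry. All function names are hypothetical.

```python
import numpy as np


def d_plus(phi, axis, h):
    """Forward difference (phi[I + e_axis] - phi[I]) / h on a periodic grid."""
    return (np.roll(phi, -1, axis=axis) - phi) / h


def d_minus(phi, axis, h):
    """Backward difference (phi[I] - phi[I - e_axis]) / h on a periodic grid."""
    return (phi - np.roll(phi, 1, axis=axis)) / h


def grad(U, h):
    """Staggered gradient of U, shape (3, n1, n2, n3) -> (3, 3, n1, n2, n3)."""
    G = np.empty((3, 3) + U.shape[1:])
    for a in range(3):
        for A in range(3):
            diff = d_plus if a == A else d_minus
            G[a, A] = diff(U[a], A, h[A])
    return G


def deformation_gradient(U, h):
    """F = I + Grad(U) for a periodic displacement fluctuation U."""
    F = grad(U, h)
    for a in range(3):
        F[a, a] += 1.0
    return F


def div(P, h):
    """Staggered divergence of P, shape (3, 3, n1, n2, n3) -> (3, n1, n2, n3).

    Each entry uses the difference opposite to the one used in grad, so the
    result lands on the position of the corresponding displacement component.
    """
    out = np.zeros(P.shape[1:])
    for a in range(3):
        for A in range(3):
            diff = d_minus if a == A else d_plus
            out[a] += diff(P[a, A], A, h[A])
    return out
```

With this pairing the two operators satisfy a discrete integration-by-parts identity: the sum of \(\varvec{P} : \mathrm{Grad}(\varvec{U})\) over all cells equals minus the sum of \(\mathrm{Div}(\varvec{P}) \cdot \varvec{U}\), which is a convenient consistency check for an implementation.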

The constitutive law \(\varvec{P}(\varvec{F})\) is defined piecewise on the regular voxel grid. For a typical material cell, shaded in gray in Fig. 12b, it is not clear how to apply the material law for off-diagonal strains, as the function \(\varvec{P}(\varvec{F})\) may be defined differently along the corresponding edge.

We circumvent these problems by utilizing a doubly-fine grid, i.e., a grid with half the spacing of the original grid. Figure 12b illustrates this concept: a typical doubly-fine cell is shaded in orange. We interpret the deformation gradients and stresses as living on this doubly-fine grid. For every deformation gradient component \(F_{iJ}\) and every doubly-fine cell there is precisely one \(F_{iJ}\)-value, as specified in Fig. 12a, located on the boundary of the cell. We associate this value to the doubly-fine cell. Thus, a particular value \(F_{iJ}\) is distributed to the 4 (in 2D) or 8 (in 3D) adjacent doubly-fine cells; compare Fig. 13 for an illustration. The stress components are distributed in the same way as the deformation gradients, i.e., the staggering of Fig. 13 is also present for the stresses.

With this assignment, all 4 (in 2D) or 9 (in 3D) deformation gradient components are associated with every doubly-fine grid cell. The discretization just outlined carries over directly to three space dimensions. The variable placement in this case is shown in Fig. 14.
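The assignment of values to doubly-fine cells can be illustrated with a small index computation. This is a sketch under stated assumptions, not the authors' implementation: positions are counted in half cells, so a staggered coordinate \(i^+\) becomes the odd integer \(2i+1\) and a non-staggered coordinate \(i\) becomes the even integer \(2i\), and the doubly-fine cell with index \(p\) spans \([p,\,p+1]\) in each direction. The names `staggered_directions`, `source_index` and `count_references` are hypothetical.

```python
import itertools
from collections import Counter


def staggered_directions(a, A):
    """0-based directions in which F_aA is shifted by half a cell (a, A in {1, 2, 3})."""
    if a == A:
        return {0, 1, 2}        # cell center: shifted in all directions
    return {6 - a - A - 1}      # edge midpoint: shifted in the third direction only


def source_index(a, A, fine_cell, n):
    """Coarse index [i, j, k] of the F_aA value associated with a doubly-fine cell.

    Of the two corner coordinates p and p + 1 of the doubly-fine cell, exactly
    one has the parity required by the staggering, so the value is unique.
    """
    shifted = staggered_directions(a, A)
    index = []
    for d, p in enumerate(fine_cell):
        parity = 1 if d in shifted else 0
        corner = p if p % 2 == parity else p + 1
        index.append((corner // 2) % n[d])  # periodic wrap-around
    return tuple(index)


def count_references(a, A, n):
    """Count how many doubly-fine cells each F_aA[i, j, k] value is assigned to."""
    fine_cells = itertools.product(*(range(2 * n_d) for n_d in n))
    return Counter(source_index(a, A, cell, n) for cell in fine_cells)
```

For any component, `count_references` returns one entry per coarse index, each with a count of 8, which reflects the statement that every value is distributed to the 8 adjacent doubly-fine cells in 3D and that every doubly-fine cell receives precisely one value per component.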

We suppose that the constitutive material law is given on the original grid, i.e., each cell is associated with one material. We will elaborate on the implementation of the material law. By averaging over the combination of adjacent doubly-fine voxels, the material law can be written as

with

and

for

as well as

where

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Keshav, S., Fritzen, F. & Kabel, M. FFT-based homogenization at finite strains using composite boxels (ComBo). Comput Mech 71, 191–212 (2023). https://doi.org/10.1007/s00466-022-02232-4

DOI: https://doi.org/10.1007/s00466-022-02232-4