Abstract

The objective of this article is to analyse the statistical behaviour of a large number of weakly interacting diffusion processes evolving under the influence of a periodic interaction potential. We focus our attention on the combined mean field and diffusive (homogenisation) limits. In particular, we show that these two limits do not commute if the mean field system constrained to the torus undergoes a phase transition, that is to say, if it admits more than one steady state. A typical example of such a system on the torus is given by the noisy Kuramoto model of mean field plane rotators. As a by-product of our main results, we also analyse the energetic consequences of the central limit theorem for fluctuations around the mean field limit and derive optimal rates of convergence in relative entropy of the Gibbs measure to the (unique) limit of the mean field energy below the critical temperature.

1 Introduction

1.1 Overview

The study of large systems of interacting particles in the presence of noise has attracted a large amount of interest in recent years. This is largely due to the fact that they pose challenging mathematical questions and that they appear in several applications, ranging from the theory of random matrices [43] and the construction of Kähler–Einstein metrics [5] to the design of algorithms for global optimisation [28, 40], biological models of chemotaxis [20], and models of opinion formation [22].

We place ourselves in the setting of a system of weakly interacting diffusion processes as in [36]. It is well-known that, under appropriate assumptions on the interaction and confining potentials, one can pass to the mean field limit as \(N \rightarrow \infty \) to obtain the so-called McKean–Vlasov equation (1.14) for the limit of the N-particle empirical measure. More precisely, given chaotic initial data, the empirical measure associated to the system of particles converges weakly to the weak solution of the McKean–Vlasov equation. Formally, one can say that the law of the N-particle system decouples and converges to N copies of the solution of the mean field McKean–Vlasov equation. This corresponds to a strong law of large numbers (LLN) for the empirical measure. A natural question to ask then is whether one can obtain a second order characterisation of this convergence, i.e. a central limit theorem (CLT).

Partial results in this direction do exist: Fernandez and Méléard [19] obtained a finite-time horizon version of the CLT. They showed that the fluctuations around the mean field limit are described in the large N-limit by a Gaussian random field which itself is the solution of a linear stochastic PDE. Additionally, Dawson [11] proved an equilibrium CLT for the empirical measure of a system of particles in a bistable confining potential and Curie–Weiss interaction. The interesting feature of Dawson’s system is that it exhibits a phase transition, i.e. for a certain value of the interaction strength the system transitions from having one invariant measure to having multiple. Dawson showed that below the phase transition point equilibrium fluctuations are described by a Gaussian random field, similar to the result in [19]. However, at the critical temperature the fluctuations become non-Gaussian and are given by the invariant measure of a nonlinear SDE. These non-Gaussian fluctuations are persistent and are characterised by a longer time scale, exhibiting the well known phenomenon of critical slowing down (cf. [44] for a less rigorous derivation of similar results). We are not aware of any results on the limiting behaviour of the fluctuations that have been obtained ahead of the phase transition.

Fluctuations around the McKean–Vlasov mean field limit for a system of weakly interacting diffusions with an internal degree of freedom were also studied recently in [3]. Under the assumption of scale separation between the macroscopic and microscopic dynamics, a large deviations principle (LDP) was established for the slow dynamics, valid in the combined limit of infinite scale separation (\(\varepsilon \rightarrow 0\), where \(\varepsilon \) measures the ratio between the small and the large scale in the problem) and of the number of particles going to infinity (\(N \rightarrow \infty \)). This LDP was then used to deduce information about the fluctuations around the mean field limit and to also offer partial justification for the so-called Dean equation, a stochastic partial differential equation used in dynamical density functional theory which combines, formally, the mean field limit and central limit theorem results for the system of weakly interacting diffusions. Furthermore, the connection between the LDP framework and the Chapman–Enskog approach to the study of the hydrodynamic limit was discussed in detail. The crucial assumption made by the authors was that the microscopic dynamics has a unique stationary state, i.e. that no phase transitions occur.

The prototype of the systems we consider is the following system of N interacting SDEs on \({\mathbb {R}}\):

Here the \(B_t^i\) are independent \({\mathbb {R}}\)-valued Wiener processes. The interesting feature of the above system is that the interaction potential is 1-periodic. As a consequence of this, the behaviour of (1.1) is influenced heavily by the corresponding quotiented process on \({\mathbb {T}}\) (the one dimensional unit torus). The quotiented system on the torus is in fact the noisy Kuramoto model for mean field plane rotators [6, 9] (Footnote 1). Indeed (cf. Proposition 1.22), one can show that the corresponding mean field limit on the torus exhibits a phase transition. A more complete picture of the local bifurcations and phase transitions for the McKean–Vlasov equation on the torus can be found in [9] (Footnote 2).
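To make the prototype concrete, the following is a minimal Euler–Maruyama sketch of N weakly interacting particles on \({\mathbb {R}}\) with a 1-periodic interaction. Several choices here are assumptions made for illustration and are not taken from the text: the interaction potential is taken to be \(W(x) = -\cos (2\pi x)\), the drift of particle i is taken to be \(-N^{-1}\sum _j W'(X^i - X^j)\), the noise strength is \(\sqrt{2\beta ^{-1}}\), and the names `simulate_particles` and `order_parameter` are hypothetical.

```python
import numpy as np


def simulate_particles(n_particles=50, beta=1.0, dt=1e-3, n_steps=200, seed=0):
    """Euler-Maruyama sketch for N interacting particles on the real line.

    Assumptions (illustrative, not from the paper): W(x) = -cos(2*pi*x),
    no confining potential, drift_i = -(1/N) * sum_j W'(x_i - x_j).
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=n_particles)
    for _ in range(n_steps):
        diff = x[:, None] - x[None, :]
        # W'(x) = 2*pi*sin(2*pi*x); the mean over j realises the 1/N scaling.
        drift = -(2.0 * np.pi) * np.sin(2.0 * np.pi * diff).mean(axis=1)
        noise = np.sqrt(2.0 * dt / beta) * rng.standard_normal(n_particles)
        x = x + drift * dt + noise
    return x


def order_parameter(x):
    """Modulus of the first Fourier mode of the empirical measure."""
    return float(np.abs(np.exp(2j * np.pi * x).mean()))
```

Because the drift is 1-periodic in every particle position, `order_parameter` takes the same value on the positions in \({\mathbb {R}}\) and on their images in \({\mathbb {T}}\); this is the sense in which the interaction only sees the quotiented process.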

In the spirit of Dawson, our main objective is to study fluctuations in the presence of phase transitions. However, instead of the phase transitions of the system on \({\mathbb {R}}\), we will be concerned with the phase transitions of the quotiented system on \({\mathbb {T}}\). Furthermore, we study the diffusive limit which can be thought of as the first step in understanding fluctuations of the N-particle system. Although we do discuss the implications of a full CLT (cf. Sect. 1.10), we concern ourselves in this paper mainly with the combined diffusive-mean field limits.

The problem that we study in this paper is closely related to, and simpler than, the one studied in [3]: scale separation arises naturally in our case due to the disparity between the period of the interaction potential, which is the characteristic length scale of the microscopic dynamics, and the long, diffusive length/time scale. The “hydrodynamics” in our problem is described by the (homogenised) heat equation, with the effective covariance matrix given by the standard homogenisation formula: compare Equation (1.19) below with formulas (3.14) and (3.15) in [3]. However, in contrast to [3] our main focus is on the effect of the presence of phase transitions at the microscopic scale on the effective/macroscopic dynamics. We are, in particular, interested in the effect of phase transitions on the (lack of) commutativity between the homogenisation and mean field limits.

Before we discuss what we mean by the combined limit, we remind the reader of what we mean by the diffusive limit. For a fixed number of particles \(N>0\) for the system in (1.1), a natural question to ask is how the law of the system behaves under the diffusive rescaling, i.e., if

then what is the limit as \(\varepsilon \rightarrow 0\) of \(\rho ^{\varepsilon ,N}\)? The answer to this question can be obtained by using classical arguments from periodic homogenisation [38, Chapter 20] [4]. It turns out that \(\rho ^{\varepsilon ,N}\) converges to \(\rho ^{N,*}\), the solution of the heat equation with a positive definite effective covariance matrix \(A^{\mathrm {eff},N}\) (cf. Sect. 1.8 and Equation (1.18)), which can be obtained by solving a Poisson equation for the generator of the process on \({\mathbb {T}}^N\) (cf. Equation (C)). Another way of reinterpreting this result is by saying that the system of particles (1.1) converges in law to an N-dimensional Brownian motion with covariance \(A^{\mathrm {eff},N}\). A natural next question to ask is: how does the covariance matrix \(A^{\mathrm {eff},N}\), and by extension the heat equation, behave in the limit as \(N \rightarrow \infty \)?

One could also ask the question the other way around. As discussed previously, for a fixed \(\varepsilon >0\), we can pass to the mean field limit as \(N \rightarrow \infty \) in \(\rho ^{\varepsilon ,N}\) to obtain N copies of the solution of the nonlinear McKean–Vlasov equation, \(\rho ^{\varepsilon , \otimes N}\). The natural question to ask now is whether we can understand the behaviour of \(\rho ^{\varepsilon ,\otimes N}\) as \(\varepsilon \rightarrow 0\). This dichotomy is illustrated in Figure 1. Starting from the rescaled law \(\rho ^{\varepsilon ,N}\), we can take the limit \(\varepsilon \rightarrow 0\) first followed by \(N \rightarrow \infty \) if we move in the clockwise direction or the other way around in the anti-clockwise direction. Whether these two limits commute depends heavily on the ergodic properties of the quotiented process on \({\mathbb {T}}^N\) and its behaviour in the mean field limit. Our main result asserts that the two limits commute at high temperatures (small \(\beta \)) and thus the combined limit is well-defined in this regime. However, at low temperatures (large \(\beta \)) and in particular, in the presence of a phase transition (cf. Definition 1.18), we can construct initial data such that the two limits do not commute.

The problem of non-commutativity between the mean field and homogenisation limits was also studied in [24]. In that paper, a system of weakly interacting diffusions in a two-scale, locally periodic confining potential subject to a quadratic, Curie–Weiss, interaction potential was considered. It was shown that, although the combined homogenisation-mean field limit leads to coarse-grained McKean–Vlasov dynamics that have the same functional form, the effective diffusion (mobility) tensor and the coarse-grained (Fixman) potential are different, depending on the order in which we consider these two limits (for non-separable two-scale potentials). In particular, the phase diagrams for the effective dynamics can be different, depending on the order in which we take the limits. A more striking manifestation of the non-commutativity between the two limits can be observed at small but finite values of \(\varepsilon \), the parameter measuring scale separation: it is easy to construct examples where the mean field PDE, for small, finite \(\varepsilon \), can have arbitrarily many stationary states, whereas the homogenised McKean–Vlasov equation (corresponding to the choice of sending first \(\varepsilon \rightarrow 0\) and then \(N \rightarrow \infty \)) is characterised by a convex free energy functional and, thus, a unique steady state.

1.2 Set Up and Preliminaries

We denote by \({\mathbb {T}}^d\) the d dimensional unit torus (which we identify with \([0,1)^d\)) and use the standard notation of \({L}^p({\mathbb {T}}^d)\) and \( {H}^s({\mathbb {T}}^d)\) (\(s \in {\mathbb {R}}\)) for the Lebesgue and \({L}^2\)-Sobolev spaces, respectively. We will use \({H}^s_0({\mathbb {T}}^d)\) (\(s \in {\mathbb {R}}\)) to denote the closed subspace of all \({H}^s({\mathbb {T}}^d)\) functions with mean zero. These spaces are equipped with the following norms:

We denote by \(C^k({\mathbb {T}}^d)\) and \(C^\infty ({\mathbb {T}}^d)\) the spaces of k-times (\(k \in {\mathbb {N}}\)) continuously differentiable and smooth functions, respectively.

We denote by \({\mathcal {P}}(\Omega )\) the space of all Borel probability measures on \(\Omega \) having finite second moment, with \(\Omega \) some Polish metric space. We will use \(d_1\) and \(d_2\) to denote the 1 and 2-Wasserstein distances, respectively, on \({\mathcal {P}}({\mathbb {R}}^d)\) and \({\mathcal {P}}({\mathbb {T}}^d)\). Similarly we will use \({\mathfrak {D}}_1\) and \({\mathfrak {D}}_2\) for the 1 and 2-Wasserstein distances, respectively, on \({\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) and \({\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\). We will often identify a given \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) (resp. \({\mathcal {P}}({\mathbb {T}}^d)\)) with its density with respect to the Lebesgue measure, \(\rho \in {L}^1({\mathbb {R}}^d)\) (resp. \({L}^1({\mathbb {T}}^d)\)). In the sequel, any limit of a sequence of measures, unless otherwise specified, should be understood as a limit in the weak-\(*\) topology relative to \(C_b(\Omega )\), i.e. tested against bounded, continuous functions. We will often use the same notation for a measure and its density if the density is well-defined.

We consider a large number \(N\in {\mathbb {N}}\) of indistinguishable interacting particles \(\{X_t^i\}_{i=1}^N\) in \({\mathbb {R}}^d\), where both the interaction and confining potentials are periodic and highly oscillatory. In particular, we consider the system

where \(W:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) and \(V:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) are smooth 1-periodic interaction and confining potentials, respectively, \(\varepsilon \ll 1\) is the period size, \(\beta >0\) is the inverse temperature, \(\rho ^{\varepsilon ,N}_0\in {\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\) is the initial distribution of the particles which might depend on the period size, and \(\{B^i_t\}_{i=1}^N\) are independent Wiener processes. Furthermore, we assume that W is even. We are interested in understanding the joint limit when the period of oscillations goes to 0 (\(\varepsilon \rightarrow 0\)) and the number of particles tends to infinity (\(N\rightarrow \infty \)).

We consider the joint law of the particle positions which is given by

where \({\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) is as defined in (1.5). The law evolves through the linear forward Kolmogorov or Fokker–Planck equation

where \(H^N_\varepsilon :({\mathbb {R}}^d)^N\rightarrow {\mathbb {R}}\) is given by

We note that under the conditions imposed on V, W, and \(\rho _0^{\varepsilon ,N}\), there exists a unique solution to (1.3). Indeed, we have the following result:

Theorem A

Assume \(\rho _0^{\varepsilon ,N} \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) (with second moment bounded) and that \(V,\;W:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) are smooth and 1-periodic. Then, there exists a unique weak solution to (1.3) \(\rho ^{\varepsilon ,N} \in C([0,\infty );{\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)) \) which is the gradient flow of (1.6) in the 2-Wasserstein metric. Furthermore, for all \(t>0\),

and \(\rho ^{\varepsilon ,N}\) satisfies (1.3) in the classical sense.

Proof

The existence and uniqueness of gradient flow weak solutions follow from [1, Theorem 11.2.8] and the fact that \(H^N_\varepsilon \) is \(\lambda \)-convex. The required regularity then follows from classical parabolic regularity theory (cf., for example, [32, Theorem 5.6]) and the fact that V and W are both smooth. \(\square \)

We remind the reader that in the sequel we will always use the above notion of solution when we refer to (1.3).

The main objective of this paper is to study

and understand under which regimes they coincide or differ. For the rest of this section we introduce the relevant notions that will play an important role in understanding these limits and present our main results. The result concerning the limit \(N \rightarrow \infty \) followed by \(\varepsilon \rightarrow 0\) can be found in Theorem 1.10, while the result concerning the limit \(\varepsilon \rightarrow 0\) followed by \(N \rightarrow \infty \) can be found in Theorem 1.16. We discuss the effect of the presence of a phase transition in Sect. 1.9. Finally, in Sect. 1.10 we discuss the implications of a CLT on the rate of convergence of the Gibbs measure before the phase transition. The proofs of the two main results, Theorems 1.10 and 1.16, can be found in Sects. 2 and 3, respectively. The proofs of other useful results related to the phenomenon of phase transitions are relegated to Sect. 4. Appendix A contains some coupling arguments which are useful for the proof of Theorem 1.10.

1.3 The Space \({\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) as the Limit of \({\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\)

The set up we consider is similar to that in [8]. We remark that due to the indistinguishability assumption on the particles their joint law is invariant under relabelling of the particles. In probability this is known as exchangeability, while in analysis this is referred to as symmetry and we denote the set of symmetric probability measures by \({\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\), i.e.

where A is any Borel set and \(\Pi \) is the set of permutations of the particle positions. Central to our work will be the classical result attributed to de Finetti [12] and Hewitt–Savage [27], that characterises the limit \(N\rightarrow \infty \) of \({\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\). Adapted to the set up of this paper, their result can be reformulated as follows:

Definition 1.1

Given a family \(\{\rho ^{N}\}_{N\in {\mathbb {N}}}\) such that \(\rho ^{N}\in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) we say that

if for every \(n\in {\mathbb {N}}\) we have

where \(X^n \in {\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^n\big )\) is defined by duality as follows

for all \(\varphi \in C_b(({\mathbb {R}}^d)^n)\) and

We will often suppress the \(N \rightarrow \infty \) and just write \(\rho ^N \rightarrow X\).

In particular, we can relate this definition with the usual chaoticity assumption. We will say that \(\{\rho ^N\}_{N\in {\mathbb {N}}}\) is chaotic with limit \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) if

in the sense of Definition 1.1. Additionally, the notion of convergence introduced in Definition 1.1 can also be interpreted in the following manner:

Definition 1.2

(Empirical measure) Given some \(\rho ^{N}\in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) we define its empirical measure \({\hat{\rho }}^{N} \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) as follows:

Here \(T^N: ({\mathbb {R}}^d)^N \rightarrow {\mathcal {P}}({\mathbb {R}}^d)\) is the measurable mapping \((x_1, \dots ,x_N)\mapsto N^{-1}\sum _{i=1}^N\delta _{x_i}\). Furthermore, given a family \(\{\rho ^{N}\}_{N\in {\mathbb {N}}}\), we have that \(\rho ^N \rightarrow X \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) if and only if \({\hat{\rho }}^N \rightharpoonup ^* X\).
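The mapping \(T^N\) can be illustrated numerically. The sketch below represents \(N^{-1}\sum _{i=1}^N\delta _{x_i}\) by its atoms and weights and integrates a test function against it; the function names `empirical_measure` and `integrate` are hypothetical and chosen only for this illustration, and the example is restricted to scalar positions for brevity.

```python
import numpy as np


def empirical_measure(points):
    """Atoms and weights of N^{-1} * sum_i delta_{x_i} for scalar positions."""
    atoms = np.asarray(points, dtype=float)
    weights = np.full(len(atoms), 1.0 / len(atoms))
    return atoms, weights


def integrate(phi, atoms, weights):
    """Integral of the test function phi against the atomic measure."""
    return float(np.sum(weights * phi(atoms)))
```

Since the weights are all equal, the result does not depend on the order in which the positions are listed, which reflects the invariance under relabelling of the particles.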

We conclude this subsection with the following compactness result:

Lemma 1.3

(de Finetti–Hewitt–Savage) Given a sequence \(\{\rho ^N\}_{N\in {\mathbb {N}}},\) with \(\rho ^N\in {\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\) for every N, assume that the sequence of the first marginals \(\{\rho _1^N\}_{N\in {\mathbb {N}}}\subset {\mathcal {P}}({\mathbb {R}}^d)\) is tight. Then, up to a subsequence, not relabelled, there exists \(X\in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) such that \(\rho ^N\rightarrow X\) in the sense of Definition 1.1.

For a proof and more details, see [8, 26, 41]. In the sequel, any limit of a sequence of symmetric measures with \(\rho ^N \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) should be understood in the sense of Definition 1.1.

Remark 1.4

The above notion of convergence, i.e. Definitions 1.1 and 1.2, can be naturally extended to \({\mathcal {P}}_{\mathrm {sym}}(({\mathbb {T}}^d)^N)\).

1.4 Gradient Flow Formulation and the Mean Field Limit

In [8], the mean field limit (the limit \(N\rightarrow \infty \)) of the interacting particle system (1.3) is achieved by passing to the limit in the 2-Wasserstein gradient flow structure. The results of this article will build on this perspective which we briefly recall here:

The evolution of the joint law \(\rho ^{\varepsilon ,N}\) given by (1.3) is the gradient flow (in the sense of [1, Definition 11.1.1]) of the energy \(E^N: {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N) \rightarrow (-\infty ,+\infty ]\)

under the rescaled 2-Wasserstein distance \(\frac{1}{\sqrt{N}}d_2\) on \({\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\). Moreover, we have the following classical result of Messer and Spohn [35]:

Lemma 1.5

The N-particle free energy \(E^N\) \(\Gamma \)-converges to \(E^\infty :{\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\rightarrow (-\infty ,+\infty ]\), where

with \(E_{MF}:{\mathcal {P}}({\mathbb {R}}^d)\rightarrow (-\infty ,+\infty ]\) given by

That is to say, for every \(X \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) there exists a sequence \(\{\rho ^N\}_{N\in {\mathbb {N}}}\), \(\rho ^N \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) with \(\rho ^N \rightarrow X\) such that

Additionally, for every \(X \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) and every sequence \(\{\rho ^N\}_{N\in {\mathbb {N}}}\), \(\rho ^N \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) with \(\rho ^N \rightarrow X\) it holds that

On the other hand we have a similar convergence for the metrics: \(\frac{1}{\sqrt{N}}d_2\) the rescaled 2-Wasserstein distance on \({\mathcal {P}}_{\mathrm {sym}}\big (({\mathbb {R}}^d)^N\big )\) converges to \({\mathfrak {D}}_2\) the 2-Wasserstein distance on \({\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\). Specifically, given two sequences \(\{\mu ^N\}_{N\in {\mathbb {N}}}\) and \(\{\nu ^N\}_{N\in {\mathbb {N}}}\) of symmetric probability measures such that \(\mu ^N\rightarrow X_1\) and \(\nu ^N\rightarrow X_2\) and

then

We can now state our result concerning the mean field limit, i.e. the limit \(N \rightarrow \infty \).

Theorem B

(Mean field limit [8]) Under the regularity assumptions on W and V, fix any \(\varepsilon >0\) and assume that \(\lim _{N\rightarrow \infty }\rho ^{\varepsilon ,N}_0=X^\varepsilon _0\in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) and

Then, for every \(t>0\),

where \(X^\varepsilon : [0,\infty ) \rightarrow {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) is the unique gradient flow of \(E^\infty \) under the 2-Wasserstein metric \({\mathfrak {D}}_2\) with initial condition \(X_0^\varepsilon \).

Moreover,

where \(X^\varepsilon _0=\lim _{N\rightarrow \infty }\rho ^{\varepsilon ,N}_0\) and \(S_t^\varepsilon :{\mathcal {P}}({\mathbb {R}}^d)\rightarrow {\mathcal {P}}({\mathbb {R}}^d)\) is the solution semigroup associated to the nonlinear McKean–Vlasov evolution equation

with \(W_\varepsilon (x)=W(\varepsilon ^{-1}x)\) and \(V_\varepsilon (x)=V(\varepsilon ^{-1}x)\).

Remark 1.6

As W and V are assumed to be smooth and periodic, using similar arguments as in the proof of Theorem A, one can show that for any \({\mathcal {P}}({\mathbb {R}}^d)\)-valued initial condition there exists a unique gradient flow solution to (1.8) which becomes smooth for any positive time. Similar arguments can be used for the notions of solution to the PDEs discussed in the next subsection.

1.5 Scaling and the Quotiented Process

We notice that the Fokker–Planck equation (1.3) behaves well under the parabolic scaling, i.e. given a solution \(\rho ^{\varepsilon ,N}\) of (1.3) we have that

is the solution to the Fokker–Planck equation at scale \(\varepsilon =1\), i.e.

The above equation naturally describes the evolution of the law of the N-particle system (1.2) at scale \(\varepsilon =1\):

Since W and V are periodic, in order to understand the behaviour of \(\rho ^{\varepsilon ,N}\) in the limit as \(\varepsilon \rightarrow 0\), we must first understand the behaviour of the quotiented process of (1.11), which lives on \(({\mathbb {T}}^{d})^N\) [31, Section 9.1] [4, Section 3.3.2]. Before we introduce the quotiented process, we define the following notion which will play an important role in the rest of the paper:

Definition 1.7

Given a measure \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) we define its periodic rearrangement at scale \(\varepsilon >0\) to be the measure \({\tilde{\rho }} \in {\mathcal {P}}({\mathbb {T}}^d)\), such that for any measurable \(A\subset {\mathbb {T}}^d\) it holds that

We will often just use the words periodic rearrangement when \(\varepsilon =1\).
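At the level of samples, the periodic rearrangement can be sketched as a wrapping operation. The snippet below assumes that the periodic rearrangement at scale \(\varepsilon \) is the push-forward of \(\rho \) under the map \(x \mapsto \varepsilon ^{-1}x \bmod 1\); this reading of Definition 1.7 is an assumption, since the defining formula is not reproduced here, and the function name `periodic_rearrangement_samples` is hypothetical.

```python
import numpy as np


def periodic_rearrangement_samples(samples, eps=1.0):
    """Wrap samples of rho onto the unit torus [0, 1) at scale eps.

    Assumed reading of the definition: push-forward under x -> (x / eps) mod 1.
    """
    scaled = np.asarray(samples, dtype=float) / eps
    # np.mod with a positive divisor maps negative inputs into [0, 1) as well.
    return np.mod(scaled, 1.0)
```

For example, with \(\varepsilon =1\) the points 2.25 and -0.25 are sent to 0.25 and 0.75, respectively, so that mass sitting in different periodic cells of \({\mathbb {R}}\) is superposed on a single copy of the torus.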

Analogously, the quotient of the process (1.11) is the unique Markov process associated to the generator \(L: {H}^2(({\mathbb {T}}^d)^N) \rightarrow {L}^2(({\mathbb {T}}^d)^N)\)

with initial law given by \({\tilde{\nu }}^N_0\), the periodic rearrangement of \(\nu ^N_0\) in the sense of Definition 1.7. One can check that this process is a reversible ergodic diffusion process with its unique invariant or Gibbs measure \(M_N \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {T}}^d)^N)\) given by

As expected, the law \({\tilde{\nu }}^N(t)\) of the quotiented system (1.12) can be obtained by considering the periodic rearrangement of \(\nu ^N(t)\), the solution of (1.10) and it evolves according to the following PDE:

In analogy to the discussion in Sect. 1.4, the above PDE is the gradient flow of the N-particle periodic free energy

under the rescaled 2-Wasserstein distance \(\frac{1}{\sqrt{N}}d_2\) on \({\mathcal {P}}_{\mathrm {sym}}(({\mathbb {T}}^d)^N)\). Furthermore, the Gibbs measure \(M_N\) of the process (1.12) is the unique minimiser of \({\tilde{E}}^N\).

Similarly, we also notice that the nonlinear McKean–Vlasov equation (1.8) behaves well under the parabolic scaling. Specifically, given \(\rho ^\varepsilon \) a solution to (1.8), then

is a solution to the McKean–Vlasov equation at scale \(\varepsilon =1\),

It is well known that this describes the law of the corresponding mean field McKean SDE which is given by

Again, we notice that all the coefficients in (1.14) are 1-periodic. Therefore, the nonlinearity \(\nabla W*\nu \) only depends on the law of the quotiented process. We can thus understand the behaviour of the nonlinearity \(\nabla W *\nu \) by considering the evolution of the periodic rearrangement \({\tilde{\nu }}(t)\) of \(\nu (t)\), which solves the periodic nonlinear McKean–Vlasov equation:

An important role is thus played by the limiting behaviour of solutions \({\tilde{\nu }}(t)\) of the above equation and its steady states. As in Sect. 1.4, the equation (1.15) is the gradient flow of the periodic mean field free energy

with respect to the 2-Wasserstein metric on \({\mathcal {P}}({\mathbb {T}}^d)\), and the energies \({\tilde{E}}^N\) and \({\tilde{E}}_{MF}\) are related in the same way as the energies \(E^N\) and \(E_{MF}\), i.e. through the result of Messer and Spohn [35].

Lemma 1.8

The N-particle periodic free energy \({\tilde{E}}^N\) \(\Gamma \)-converges (in the sense of Lemma 1.5) to \({\tilde{E}}^\infty :{\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\rightarrow (-\infty ,+\infty ]\), where

As a consequence, if \(\{M_N\}_{N\in {\mathbb {N}}}\) is the sequence of minimisers of \({\tilde{E}}^N\), then any accumulation point \(X\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\) of this sequence is a minimiser of \({\tilde{E}}^\infty \).

We can use the gradient flow structure to provide a useful characterisation of the steady states of the periodic McKean–Vlasov system (1.15).

Proposition 1.9

Let \({\tilde{\nu }} \in {\mathcal {P}}({\mathbb {T}}^d)\). Then, the following statements are equivalent:

-

(1)

\({\tilde{\nu }}\) is a steady state of (1.15).

-

(2)

\({\tilde{\nu }}\) is a critical point of the mean field free energy, \({\tilde{E}}_{MF}\), i.e. the metric slope (cf. [1, Definition 1.2.4])

.

. -

(3)

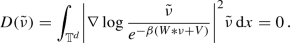

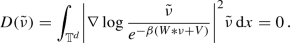

\({\tilde{\nu }}\) is a zero of the dissipation functional \(D: {\mathcal {P}}({\mathbb {T}}^d) \rightarrow (-\infty ,+\infty ]\), i.e.

-

(4)

\({\tilde{\nu }}\) satisfies the self-consistency equation

$$\begin{aligned} {\tilde{\nu }} =\frac{e^{-\beta (V + W*{\tilde{\nu }})}}{Z}, \end{aligned}$$(1.17)with the partition function given by

$$\begin{aligned} Z=\int _{{\mathbb {T}}^d}e^{-\beta (V + W*{\tilde{\nu }}(y))}{{\mathrm{d}}} y. \end{aligned}$$

A proof of this result can be found, for example, in [9, Proposition 2.4] or in [45]. It is evident from this characterisation that the behaviour of the system (1.15) on the torus will affect the distinguished limits (either \(N \rightarrow \infty \) or \(\varepsilon \rightarrow 0\)) of the system (1.8) on \({\mathbb {R}}^d\). In particular, if (1.15) has multiple steady states then the distinguished limits will be influenced by steady states attained in the long-time dynamics. We refer to the phenomenon of nonuniqueness of steady states as a phase transition and discuss its effect on the limits in Sect. 1.9.
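The self-consistency equation (1.17) lends itself to a simple numerical experiment. The following sketch iterates the map \({\tilde{\nu }} \mapsto e^{-\beta (V + W*{\tilde{\nu }})}/Z\) on a uniform grid of the one dimensional torus. It is an illustration under stated assumptions, not the method of the paper: we take \(d=1\), \(V=0\) and \(W(x)=-\cos (2\pi x)\), approximate integrals by grid averages, and use the hypothetical names `gibbs_map` and `self_consistent_state`. Plain fixed-point iteration is also only one of several possible solvers and need not find every solution.

```python
import numpy as np


def gibbs_map(nu, beta, x):
    """One application of nu -> exp(-beta * W*nu) / Z on a uniform grid.

    Assumptions (illustrative): d = 1, V = 0, W(x) = -cos(2*pi*x).
    """
    n_grid = len(x)
    w_matrix = -np.cos(2.0 * np.pi * (x[:, None] - x[None, :]))
    conv = w_matrix @ nu / n_grid          # grid approximation of (W * nu)(x)
    unnormalised = np.exp(-beta * conv)
    return unnormalised / unnormalised.mean()  # grid average plays the role of Z


def self_consistent_state(beta, n_grid=128, n_iter=500, perturbation=0.1):
    """Iterate the map starting from a small perturbation of the uniform state."""
    x = np.arange(n_grid) / n_grid
    nu = 1.0 + perturbation * np.cos(2.0 * np.pi * x)
    for _ in range(n_iter):
        nu = gibbs_map(nu, beta, x)
    return x, nu
```

With these choices the uniform density is always a fixed point, and linearising the map around it gives the multiplier \(\beta /2\) on the first Fourier mode. One therefore expects the iteration to return to the uniform state for \(\beta <2\) and to settle on a non-uniform state for larger \(\beta \). Since \(V=0\) and \(W\) is translation invariant, every translate of such a non-uniform state also solves (1.17), which gives a concrete instance of nonuniqueness of steady states.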

To conclude this subsection, for the reader’s convenience, we include Figure 2 which provides a useful schematic of the notation that will be used for the rest of this paper. Starting with \(\rho ^{\varepsilon ,N}\) the solution of (1.3), one can obtain \(\nu ^N\), the solution of (1.10), by using the scaling in (1.9). One can then pass to the limit \(N \rightarrow \infty \) in \(\rho ^{\varepsilon ,N}\) and \(\nu ^N\), to obtain the McKean–Vlasov equation at scale \(\varepsilon \) (1.8) or scale 1 (1.14), respectively. Alternatively one can consider the periodic rearrangement \({\tilde{\nu }}^N\) of \(\nu ^N\) which solves (1.13) and pass to the limit \(N \rightarrow \infty \) to obtain a solution of the periodic McKean–Vlasov equation (1.15). The rest of the figure follows in a similar fashion.

Fig. 2 A schematic of the notation. P.R. denotes periodic rearrangement in the sense of Definition 1.7

1.6 The Diffusive Limit

We have already discussed the limit \(N \rightarrow \infty \) in Sect. 1.4. Here, we discuss the diffusive limit, i.e. \(\varepsilon \rightarrow 0\). For a fixed number of particles N, we can use techniques from the theory of periodic homogenisation to pass to the limit \(\varepsilon \rightarrow 0\) in (1.3), see for instance [38, Chapter 20] [4, 13, 30]. In particular, we have the following result:

Theorem C

(The diffusive limit) Consider \(\rho ^{\varepsilon ,N}\) the solution to (1.3) with initial data \(\rho _0^{\varepsilon ,N} \in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\). Then, for all \(t>0\) the limit

exists. Furthermore, the curve of measures \(\rho ^{N,*}:[0,\infty ) \rightarrow {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {R}}^d)^N)\) satisfies the heat equation

with initial data \(\rho ^{N,*}(0)=\lim _{\varepsilon \rightarrow 0} \rho _0^{\varepsilon ,N}\) and where the covariance matrix is given by the formula

with

the Gibbs measure of the quotiented N-particle system (1.12) and \(\Psi ^N:\big ({\mathbb {T}}^d\big )^N\rightarrow \big ({\mathbb {R}}^d\big )^N\) the unique mean zero solution to the associated corrector problem

Here, \(H^N_1\) is the Hamiltonian of the associated particle system and is as defined in (1.4).

1.7 The Limit \(N \rightarrow \infty \) Followed by \(\varepsilon \rightarrow 0\)

We have discussed the mean field limit \(N \rightarrow \infty \) in Sect. 1.4. Now, we are ready to state our first result that characterises the limit \(\lim _{\varepsilon \rightarrow 0}\lim _{N\rightarrow \infty }\rho ^{\varepsilon ,N}\):

Theorem 1.10

Consider the set of initial data given by \(\{\rho _0^\varepsilon \}_{\varepsilon >0}\subset {\mathcal {P}}({\mathbb {R}}^d)\), and consider the periodic rearrangement at scale \(\varepsilon >0\), i.e.

Assume that there exists \(C>0\), \(p>1\) and a steady state \({\tilde{\nu }}^*\in {\mathcal {P}}({\mathbb {T}}^d)\) such that \({\tilde{\nu }}^\varepsilon (t)\), the solution to the \(\varepsilon =1\) periodic nonlinear evolution (1.15) with initial data \({\tilde{\nu }}_0^\varepsilon (x)\), satisfies

Then,

where \(S^\varepsilon _t\) is the solution semigroup associated to (1.8), \(\rho ^*_0\in {\mathcal {P}}({\mathbb {R}}^d)\) is the weak-\(*\) limit of \(\rho _0^\varepsilon \), and \(S_t^*\) is the solution semigroup of the heat equation

where the covariance matrix

with \(\Psi ^*:{\mathbb {T}}^d\rightarrow {\mathbb {R}}^d\), \(\Psi ^*_i \in {H}^1({\mathbb {T}}^d)\) for \(i=1, \dots ,d\), is the unique mean zero solution to the associated corrector problem

Furthermore, assume that \(X(t)^\varepsilon \) is as defined in (1.7) and that \(\lim _{N \rightarrow \infty }\rho _0^{\varepsilon ,N}= X_0^\varepsilon =\delta _{\rho _0^\varepsilon }\). Then it holds that

where \(X_0=\delta _{\rho _0^*}\).

In particular, we can apply this theorem to obtain the following result:

Corollary 1.11

Assume that the periodic mean field energy (1.16) admits a unique minimiser (and hence critical point) \({\tilde{\nu }}^{\min }\) and that it is an exponential attractor for arbitrary initial data of the evolution of (1.15), i.e. \(d_2({\tilde{\nu }}(t),{\tilde{\nu }}^{\min }) \le d_2({\tilde{\nu }}_0^\varepsilon ,{\tilde{\nu }}^{\min })e^{-Ct}\) for some fixed constant \(C>0\). Then, the conclusions of Theorem 1.10 are valid for arbitrary initial data.

Proof

The proof of this follows from the fact that \(d_2({\tilde{\nu }}(t),{\tilde{\nu }}^{\min }) \le d_2({\tilde{\nu }}_0^\varepsilon ,{\tilde{\nu }}^{\min })e^{-Ct}\) implies that assumption (A1) holds. \(\square \)

Remark 1.12

(Non-chaotic initial data) Although Theorem 1.10 requires that the initial data be chaotic, we can deal with non-chaotic initial data by tweaking assumption (A1) to read as

for \(p>1\) and \(C>0\), with \({\tilde{\nu }}_\rho (t)\) being the solution of (1.15) starting with initial data \({\tilde{\nu }}_0\), which is the periodic rearrangement of \(\rho \).

Remark 1.13

We cannot expect convergence of \(S_t^\varepsilon \rho _0^\varepsilon \) to \(S_t^*\rho _0^*\) in a strong sense. By performing a formal multiscale expansion, we expect that

In particular, whenever \({\tilde{\nu }}^*\) is not trivial, the leading term \(S^*_t\rho ^*_0(x) {\tilde{\nu }}^*(x/\varepsilon )\rightharpoonup S^*_t\rho ^*_0 \) converges only weakly to its limit.

Remark 1.14

The effective covariance matrix \(A^{\mathrm {eff}}_*\) is strictly positive definite and we have the following bound on the ellipticity of the effective covariance matrix

where

see [38, Theorem 13.12].

Remark 1.15

If we consider rapidly varying initial data, that is to say, if there exists \(\rho _{in}\in {\mathcal {P}}({\mathbb {R}}^d)\) such that

then the hypothesis of Theorem 1.10 reduces to checking the speed of convergence to \({\tilde{\nu }}^*\) of the solution to (1.15) with the periodic rearrangement of \(\rho _{in}\) as initial data, and \(\rho ^*_0=\delta _0\).

Here we can easily see how the phase transition matters for the limiting behaviour. If the evolution (1.15) admits more than one steady state, say \({\tilde{\nu }}^{*}_1\) and \({\tilde{\nu }}^{*}_2\), then the diffusive limit will differ depending on whether we consider \(\rho _{in}={\tilde{\nu }}^{*}_1\) or \(\rho _{in}={\tilde{\nu }}^{*}_2\); see Corollary 1.27 for an explicit example.

1.8 The Limit \(\varepsilon \rightarrow 0\) Followed by \(N \rightarrow \infty \)

Now that we have discussed the diffusive limit in Sect. 1.6, we characterise the limit \(N\rightarrow \infty \) of \(\rho ^{N,*}(t)\).

Theorem 1.16

Assume that the periodic mean field energy \({\tilde{E}}_{MF}\) (1.16) admits a unique minimiser \({\tilde{\nu }}^{\min }\). Then \(\rho ^{N,*}\), the solution of (1.18), satisfies, for any fixed \(t>0\),

where \(X_0 \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\) is the limit of \(\rho ^{N,*}(0)\) in the sense of Definition 1.1, and \(S_t^{\min }:{\mathcal {P}}({\mathbb {R}}^d)\rightarrow {\mathcal {P}}({\mathbb {R}}^d)\) is the solution semigroup of the heat equation

where the covariance matrix

with \(\Psi ^{\min }:{\mathbb {T}}^d\rightarrow {\mathbb {R}}^d\), \(\Psi ^{\min }_i \in {H}^1({\mathbb {T}}^d)\) for \(i=1, \dots , d\), the unique mean zero solution to the associated corrector problem

It follows then, that for any fixed \(t>0\), the solution \(\rho ^{\varepsilon ,N}(t)\) of (1.3) satisfies

Remark 1.17

By \(\Gamma \)-convergence, the assumption that the periodic mean field energy \({\tilde{E}}_{MF}\) defined in (1.16) admits a unique minimiser implies chaoticity of the Gibbs measure, that is to say \(M_N\rightarrow \delta _{{\tilde{\nu }}^{\min }}\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\), see Lemma 1.8. We note that the assumption that \({\tilde{E}}_{MF}\) admits a unique minimiser can be replaced by the weaker chaoticity assumption on \(M_N\), i.e. \(M_N\rightarrow \delta _{{\tilde{\nu }}^{\min }_0}\) for some specific minimiser \({\tilde{\nu }}^{\min }_0\).

1.9 The Effect of Phase Transitions

As mentioned in Sect. 1.5, we expect the presence of a phase transition to affect the commutativity of the limits, especially since the results of Theorems 1.10 and 1.16 depend on the steady states of (1.15) and the minimisers of the periodic mean field energy \({\tilde{E}}_{MF}\). Before proceeding any further, we define what we mean by a phase transition.

Definition 1.18

(Phase transition) The periodic mean field system (1.15) is said to undergo a phase transition at some \(0<\beta _c<\infty \), if

-

(1)

For all \(\beta <\beta _c\), there exists a unique steady state of (1.15).

-

(2)

For \(\beta >\beta _c\), there exist at least two steady states of (1.15).

The temperature \(\beta _c\) is referred to as the point of phase transition or the critical temperature.

The above definition would not make sense without the following result:

Proposition 1.19

(Uniqueness at high temperature) For all \(0<\beta <\infty \), the periodic mean field system (1.15) has at least one steady state, which is a minimiser of the periodic mean field energy \({\tilde{E}}_{MF}\). Furthermore, for \(\beta \) small enough, there exists a unique steady state \({\tilde{\nu }}^{\min }\) of (1.15), which corresponds to the unique minimiser of \({\tilde{E}}_{MF}\).

The proof of this result follows from standard fixed point and compactness arguments and can be found in [9, Theorem 2.3 and Proposition 2.8] or [35, Theorem 3].

Remark 1.20

The reader may have noticed that in Definition 1.18 we do not discuss what happens at \(\beta =\beta _c\). This is due to the fact that this depends on the nature of the phase transition, i.e. whether it is continuous or discontinuous. A detailed discussion of these phenomena and the conditions under which they arise can be found in [9, 10].

In the absence of a confining potential, i.e. for \(V=0\), the existence and properties of phase transitions were studied in detail in [9, 10]. It turns out that a key role in understanding this phenomenon is played by the notion of H-stability. We refer to an interaction potential W as H-stable, denoted by \(W \in {\mathbf {H}}_s\), if its Fourier coefficients are nonnegative, i.e.

This notion of H-stability is closely related to a similar concept used in the statistical mechanics of lattice spin systems (cf. [42]). Indeed, it provides us with a sharp criterion for the existence of a phase transition in the absence of the term V.

Proposition 1.21

(Existence of phase transitions, [9, 10]) Assume \(V=0\). Then the periodic mean field system (1.15) undergoes a phase transition in the sense of Definition 1.18 if and only if \(W \notin {\mathbf {H}}_s\).
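As a rough numerical illustration of the criterion in Proposition 1.21, the following Python sketch tests the sign of the first few cosine Fourier coefficients of an even one-dimensional potential W. The function names, the truncation `k_max`, the restriction to modes \(k \ge 1\), the tolerance, and the midpoint quadrature are all assumptions made for this illustration; a different positive normalisation of the Fourier basis would rescale the coefficients without changing their signs.

```python
import math

def cosine_coefficient(W, k, n=2048):
    """Approximate the integral over [0, 1] of W(x) * cos(2*pi*k*x) (midpoint rule)."""
    h = 1.0 / n
    return h * sum(
        W((j + 0.5) * h) * math.cos(2.0 * math.pi * k * (j + 0.5) * h)
        for j in range(n)
    )

def looks_h_stable(W, k_max=16, tol=1e-10):
    """True if the cosine coefficients for k = 1..k_max are all >= -tol.

    Hypothetical helper: it only inspects finitely many modes of an even,
    one-dimensional W, so it is a sanity check rather than a proof.
    """
    return all(cosine_coefficient(W, k) >= -tol for k in range(1, k_max + 1))

# Interaction potential of the noisy Kuramoto model and its sign-flipped counterpart.
kuramoto = lambda x: -math.cos(2.0 * math.pi * x)
sign_flipped = lambda x: math.cos(2.0 * math.pi * x)
```

Under these assumptions, `looks_h_stable(kuramoto)` is false because the \(k=1\) coefficient is negative, which is consistent with the noisy Kuramoto model exhibiting a phase transition.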

As discussed in the introduction, a prototypical example of a system that exhibits a phase transition is given by the potentials \(V=0,\; W=-\cos (2 \pi x)\). The corresponding particle system is referred to as the noisy Kuramoto model. The structure of phase transitions for this system is remarkably simple and is discussed in the following proposition:

Proposition 1.22

Consider the quotiented periodic mean field system (1.15) with \(d=1\), \(W=-\cos (2 \pi x)\), and \(V= 0\). Then for \(\beta \le 2\), \({\tilde{\nu }}_\infty \equiv 1\) is the unique minimiser and steady state of (1.15). For \(\beta >2\), the steady states of (1.15) are given by \({\tilde{\nu }}_\infty \equiv 1\) and the family of translates of the measure \({\tilde{\nu }}^{\min }_\beta \) which is given by the expression

with \(a=a(\beta )\) the solution of the nonlinear equation \(a= \beta I_1(a)/I_0(a)\), where \(I_1\) and \(I_0\) are the modified Bessel functions of the first kind of order one and zero, respectively. Moreover, for \(\beta >2\), \({\tilde{\nu }}^{\min }_\beta \) (and its translates) are the only minimisers of the periodic mean field energy \({\tilde{E}}_{MF}\). Thus, \(\beta _c=2\) is the critical temperature of (1.15).

A proof of the above result can be found in [9, Proposition 6.1]. A depiction of the bifurcation diagram of the noisy Kuramoto system can be found in Figure 3.
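The nonlinear equation \(a= \beta I_1(a)/I_0(a)\) from Proposition 1.22 is easy to explore numerically. The sketch below is one possible way to do so, not the method of [9]: it evaluates the Bessel functions by their power series and brackets the positive root by bisection. The helper names `bessel_i` and `kuramoto_amplitude`, the series truncation, and the bracketing interval are assumptions of this illustration.

```python
import math

def bessel_i(order, a, terms=80):
    """Modified Bessel function of the first kind I_order(a) via its power series."""
    half = a / 2.0
    term = half ** order / math.factorial(order)
    total = term
    for k in range(1, terms):
        term *= half * half / (k * (k + order))
        total += term
    return total

def kuramoto_amplitude(beta):
    """Positive root a of a = beta * I_1(a) / I_0(a), or 0.0 if none is found.

    For beta <= 2 the proposition states that the uniform state is the only
    steady state, so we return 0.0 without searching.
    """
    if beta <= 2.0:
        return 0.0
    f = lambda a: a - beta * bessel_i(1, a) / bessel_i(0, a)
    lo, hi = 1e-6, beta  # f(hi) > 0 because I_1/I_0 < 1
    if f(lo) >= 0.0:     # too close to the critical value to resolve
        return 0.0
    for _ in range(200):  # plain bisection on the sign change
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Calling `kuramoto_amplitude(beta)` for a range of values of `beta` traces out the nontrivial branch that bifurcates from the uniform state at \(\beta _c=2\).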

(a) The bifurcation diagram for the noisy Kuramoto system: the solid blue line denotes the stable branch of solutions while the dotted red line denotes the unstable branch of solutions. (b) An example of a clustered steady state \({\tilde{\nu }}_\beta ^{\min }\) representing phase synchronisation of the oscillators

We can now start stating our results concerning the effect of the presence of a phase transition on the combined diffusive-mean field limit. In general, we have that for the large temperature regime the limits commute; that is, we have

Corollary 1.23

Assume that  for some \(\rho _0^\varepsilon \in {\mathcal {P}}({\mathbb {R}}^d)\) and that

where \(\rho _0^* \in {\mathcal {P}}({\mathbb {R}}^d)\) is the weak-\(*\) limit of \(\rho _0^\varepsilon \). Then, there exists an explicit \(\beta _0\in (0,\beta _c]\) depending on  and  such that, for \(\beta <\beta _0\), the limits commute:

Moreover, for rapidly varying initial data and \(V=0\), we can show that the limits commute all the way up to the phase transition. We have the following result:

Corollary 1.24

Assume \(V=0\) and \(\beta <\beta _c\), the critical temperature. Assume further that

for some fixed \(\rho _0 \in {\mathcal {P}}({\mathbb {R}}^d)\). Then the limits commute, i.e.

where \(X_0= \delta _{\delta _0} \in {\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\). If W is H-stable, this result holds for all \(0<\beta <\infty \) and arbitrary chaotic initial data.

The proof of Corollaries 1.23 and 1.24 can be found in Sect. 4.

Remark 1.25

The results of the preceding corollaries apply to the noisy Kuramoto model.

We are now ready to present our results above the critical temperature. As we are interested in illustrating our results in a clear way, we consider a simple system that undergoes a phase transition and show that the limits do not commute ahead of the phase transition. We do not consider the noisy Kuramoto model because, as demonstrated in Proposition 1.22, the minimisers of \({\tilde{E}}_{MF}\) are not unique ahead of the phase transition; every translate of \({\tilde{\nu }}_{\beta }^{\min }\) is a minimiser. Thus we cannot apply the results of Theorem 1.16 directly. Indeed, applying Lemma 1.8, one can show that the N-particle Gibbs measure \(M_N\) converges, in the sense of Definitions 1.1 and 1.2, to \(X \in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}))\), where X is supported uniformly on the set of translates of \({\tilde{\nu }}_{\beta }^{\min }\), see [35, Proposition 3].

The alternative is to work in a quotient space as in [34, 39] or to add a small confinement to break the translation invariance of the problem. We choose to do the latter. However, we do expect our results to hold true even in the translation-invariant setting but we do not deal with what we feel is essentially a technical issue in this paper.

In particular, we consider in one space dimension the dynamics generated by the potentials \(V=- \eta \cos (2\pi x)\) and \(W=-\cos (2\pi x)\) with \(0<\eta <1\). In this case, we have the following characterisation of phase transitions:

Lemma 1.26

Consider the periodic mean field system (1.15) with \(d=1\), \(W=-\cos (2 \pi x)\), and \(V= -\eta \cos (2 \pi x)\) for a fixed \(\eta \in (0,1)\). Then there exists a value of the parameter \(\beta =\beta _c\) such that

-

For \(\beta <\beta _c\), there exists a unique steady state of the quotiented periodic system (1.15) given by

$$\begin{aligned} {\tilde{\nu }}^{\min }(x)= Z_{\min }^{-1}e^{a^{\min } \cos (2 \pi x)} \, , \qquad Z_{\min }= \int _{{\mathbb {T}}} e^{a^{\min } \cos (2 \pi x)} {{\mathrm{d}}} x\, , \end{aligned}$$(1.23)

for some \(a^{\min }=a^{\min }(\beta )>0\); this steady state is the unique minimiser of the periodic mean field energy \({\tilde{E}}_{MF}\) (1.16).

-

For \(\beta >\beta _c\), there exist at least 2 steady states of the quotiented periodic system (1.15) given by

$$\begin{aligned} {\tilde{\nu }}^{\min }(x)&= Z_{\min }^{-1}e^{a^{\min } \cos (2 \pi x)}\, , \qquad Z_{\min }= \int _{{\mathbb {T}}} e^{a^{\min } \cos (2 \pi x)} {{\mathrm{d}}} x \, , \nonumber \\ {\tilde{\nu }}^{*}(x)&= Z_*^{-1}e^{ a^{*} \cos (2 \pi x)} \, , \qquad Z_*= \int _{{\mathbb {T}}} e^{a^{*} \cos (2 \pi x)} {{\mathrm{d}}} x \, , \end{aligned}$$

where \(a^{*}< 0< a^{\min }\) and both constants depend on \(\beta \). Here \({\tilde{\nu }}^{\min }\) is the unique minimiser and \({\tilde{\nu }}^*\) is a non-minimising critical point of the periodic mean field energy \({\tilde{E}}_{MF}\) (1.16). Moreover, \(a^*\ne - a^{\min }\).

The proof of Lemma 1.26 can be found in Sect. 4.
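For steady states of the form \(Z^{-1}e^{a \cos (2 \pi x)}\), as in Lemma 1.26, substituting into the self-consistency equation (1.17) with \(V=-\eta \cos (2\pi x)\) and \(W=-\cos (2\pi x)\) suggests the scalar equation \(a=\beta (\eta + I_1(a)/I_0(a))\). This reduction is a back-of-the-envelope computation included for illustration and is not quoted from the text. The Python sketch below scans for its roots; the helper names, the scan step, and the sample parameter values are all hypothetical.

```python
import math

def bessel_i(order, a, terms=80):
    """Modified Bessel function of the first kind I_order(a) via its power series."""
    half = a / 2.0
    term = half ** order / math.factorial(order)
    total = term
    for k in range(1, terms):
        term *= half * half / (k * (k + order))
        total += term
    return total

def steady_state_amplitudes(beta, eta, step=1e-2):
    """Roots of g(a) = a - beta * (eta + I_1(a) / I_0(a)), found by scan plus bisection."""
    g = lambda a: a - beta * (eta + bessel_i(1, a) / bessel_i(0, a))
    bound = beta * (1.0 + eta)  # |I_1/I_0| < 1, so every root satisfies |a| < bound
    n = int(2.0 * bound / step) + 1
    roots = []
    left, g_left = -bound, g(-bound)
    for i in range(1, n + 1):
        right = min(-bound + i * step, bound)
        g_right = g(right)
        if g_left * g_right < 0.0:  # sign change: refine by bisection
            lo, hi, g_lo = left, right, g_left
            for _ in range(100):
                mid = 0.5 * (lo + hi)
                if g_lo * g(mid) <= 0.0:
                    hi = mid
                else:
                    lo, g_lo = mid, g(mid)
            roots.append(0.5 * (lo + hi))
        left, g_left = right, g_right
    return roots
```

Comparing, say, `steady_state_amplitudes(1.0, 0.5)` with `steady_state_amplitudes(10.0, 0.5)` shows how negative roots, candidates for \(a^*\), can appear alongside the positive root once \(\beta \) is large. Because the term \(\beta \eta \) breaks the symmetry \(a \mapsto -a\), no root is the mirror image of another, in line with \(a^*\ne - a^{\min }\).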

Now, we are ready to state our results in this specific case, i.e. above the phase transition we can choose specific initial data for which the limits do not commute.

Corollary 1.27

Assume that \(V=-\eta \cos (2\pi x)\), \(W=-\cos (2\pi x)\) for a fixed \(\eta \in (0,1)\), and that we are above the phase transition \(\beta >\beta _c\). As in Lemma 1.26, we denote by \({\tilde{\nu }}^{\min }\) and \({\tilde{\nu }}^*\) the minimiser and the nonminimising critical point of \({\tilde{E}}_{MF}\). We choose the initial data

where \(\rho _0^* \in {\mathcal {P}}({\mathbb {R}})\) satisfies

for any measurable A, i.e. its periodic rearrangement is \({\tilde{\nu }}^*\). Then, for every \(t>0\), \(\rho ^{\varepsilon ,N}(t)\), the solution to (1.3) satisfies

where

On the other hand, we have that

where

Finally, by Lemma 1.26, \(a^*\ne -a^{\min }\), and therefore by the strict monotonicity of the modified Bessel function \(I_0\) on \([0,\infty )\) we obtain that

Proof

We first note that

For the limit \(\varepsilon \rightarrow 0\) followed by \(N \rightarrow \infty \), we use that, by Lemma 1.26, \({\tilde{\nu }}^{\min }\) is the unique minimiser of \({\tilde{E}}_{MF}\); hence we can apply Theorem 1.16 to obtain that

with \(\rho ^{\min }\) satisfying

where

To obtain this formula, we have used that in 1-D we can solve the corrector problem (1.22) explicitly, see for instance [38, Equation (13.6.13)]. The explicit expression for \(\rho ^{\min }(t)\) now follows.
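To make the one-dimensional computation concrete, recall the standard closed form for the effective diffusion coefficient of a reversible diffusion whose invariant density on the unit torus is proportional to \(e^{a \cos (2 \pi x)}\): in units where the bare diffusion coefficient equals 1, it is the reciprocal of the product of \(\int e^{a \cos (2 \pi x)}{\mathrm{d}}x\) and \(\int e^{-a \cos (2 \pi x)}{\mathrm{d}}x\), that is \(1/I_0(a)^2\). The Python sketch below checks this numerically. The normalisation (no prefactor such as \(\beta ^{-1}\)) and all function names are assumptions of this illustration and need not match the constants in the formulas above.

```python
import math

def bessel_i0(a, terms=80):
    """Modified Bessel function I_0(a) via its power series."""
    half_sq = (a / 2.0) ** 2
    term, total = 1.0, 1.0
    for k in range(1, terms):
        term *= half_sq / (k * k)
        total += term
    return total

def effective_diffusion_quadrature(a, n=4096):
    """1 / (int e^{a cos(2 pi x)} dx * int e^{-a cos(2 pi x)} dx) by the midpoint rule."""
    h = 1.0 / n
    xs = [(j + 0.5) * h for j in range(n)]
    z_plus = h * sum(math.exp(a * math.cos(2.0 * math.pi * x)) for x in xs)
    z_minus = h * sum(math.exp(-a * math.cos(2.0 * math.pi * x)) for x in xs)
    return 1.0 / (z_plus * z_minus)

def effective_diffusion_bessel(a):
    """Closed form 1 / I_0(a)^2 of the same quantity."""
    return 1.0 / bessel_i0(a) ** 2
```

Since \(I_0\) is even and strictly increasing on \([0,\infty )\), the value depends only on \(|a|\) and decreases as \(|a|\) grows, so two steady states with different \(|a|\) give different effective diffusion coefficients.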

Now we turn to the other limit. As discussed in Sect. 1.4, passing to the limit \(N \rightarrow \infty \), we obtain that for a fixed \(t>0\)

with \(S_t^\varepsilon \) the solution semigroup of (1.8) and \(X_0^\varepsilon = \delta _{\varepsilon ^{-1}\rho _0^*(\varepsilon ^{-1}x)}\). Using (1.24), we have that the initial data for the \(\varepsilon =1\) periodic mean field equation (1.15) is given by

We know from Lemma 1.26 that \({\tilde{\nu }}^*\) is a steady state of (1.15); thus the hypothesis (A1) is trivially satisfied. Therefore, we can pass to the limit as \(\varepsilon \rightarrow 0\) using Theorem 1.10 and obtain for a fixed \(t>0\)

with \(\rho ^{*}\) satisfying

where

thus proving (1.25) and completing the proof of the result. \(\square \)

Remark 1.28

The result of Corollary 1.27 can be generalised to other rapidly varying initial data that are exponentially attracted to \({\tilde{\nu }}^{*}\).

Remark 1.29

A simple choice of initial data which satisfies (1.24) is \(\rho _0^*= \chi _{[0,1]}{\tilde{\nu }}^*\), with \(\chi _A\) the indicator function of the set A.

1.10 Application of the Fluctuation Theorem

In this subsection we will assume without proof that we have a characterisation of the fluctuations around the mean field limit, in the spirit of [11, 19], as the solution to a linear SPDE and use this together with the energy minimisation property of the Gibbs measure to obtain a rate of convergence in relative entropy of the Gibbs measure to the minimiser of the periodic mean field energy (1.16). We also characterise the asymptotic behaviour of the partition function. At the end of the subsection we present a provisional result in which we show that this rate of convergence does hold at high temperatures without using the central limit theorem (the characterisation of fluctuations) but instead conditional on a certain rate of convergence in a weaker topology (cf. (1.35)).

We start by restating the classical result by Messer–Spohn [35] (cf. Lemma 1.8). We consider the unique minimiser of \({\tilde{E}}^N:{\mathcal {P}}_{\mathrm {sym}}(({\mathbb {T}}^d)^N)\rightarrow (-\infty ,+\infty ]\)

which is given by the Gibbs measure

with the partition function

Then any accumulation point \(X^\infty \in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\) of the sequence of minimisers \(M_N\) is a minimiser of

with \({\tilde{E}}_{MF}:{\mathcal {P}}({\mathbb {T}}^d)\rightarrow (-\infty ,+\infty ]\) given by

which implies that

In particular, if we are below the phase transition \(\beta <\beta _c\), we have, by Definition 1.18 and Proposition 1.9, that \({\tilde{E}}_{MF}\) admits a unique minimiser, which we denote by \({\tilde{\nu }}^{\min }\in {\mathcal {P}}({\mathbb {T}}^d)\) and thus \(X^\infty =\delta _{{\tilde{\nu }}^{\min }}\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\). In the subsequent calculations, we will use \((M_N)_n\) to refer to the \(n^{\mathrm{th}}\) marginal of the N-particle Gibbs measure \(M_N\).

A natural next step is to consider the next order of convergence:

The idea is to massage the previous expression to obtain something we can control with the fluctuations. To do this we first need to use the empirical measure \({\hat{M}}_N\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\) associated to \(M_N\in {\mathcal {P}}_{\mathrm {sym}}(({\mathbb {T}}^d)^N)\), as defined in Definition 1.2. We can compare the second marginal \((M_N)_2\) of \(M_N\) with the products of the empirical measure. We notice that for any test function \(\varphi \in C^\infty ({\mathbb {T}}^{2d})\), we have

where the expectation is taken with respect to the measure \(M_N\) on the probability space \((({\mathbb {T}}^d)^N,M_N)\) (for more details on identities of this type for higher order marginals see [14]). We know from Proposition 1.9 that the minimiser of the mean field energy must satisfy the following condition:

Putting (1.27), (1.28), and (1.29) together, adding and subtracting

and completing the square, we obtain

where \({\mathcal {H}}(\cdot |\cdot )\) denotes the relative entropy or Kullback–Leibler divergence and

is a Radon measure-valued random variable defined on the probability space \((({\mathbb {T}}^d)^N,M_N)\). We refer to \({\mathcal {G}}^N\) as the fluctuations around the mean field limit. Using the fact that

we obtain the bound

In a similar way, we can also obtain a bound on the quotient between the N-particle partition function and the limiting partition function \(Z_{\min }\) for \({\tilde{\nu }}^{\min }\):

where we have used the positivity of the relative entropy. Therefore, to obtain useful information from (1.30) and (1.31), we need to show that

To simplify the discussion and obtain sharp bounds all the way up to the phase transition, we consider the specific example of \(d=1\), \(V=0\) and \(W=-\cos (2\pi x)\), which undergoes a phase transition at \(\beta _c=2\) (cf. Proposition 1.22). We now make our main assumption that we have an equilibrium version of the central limit theorem before the phase transition, i.e. \({\mathcal {G}}^N\) converges in law to \({\mathcal {G}}^\infty \) whose law is the unique invariant measure of the following linear stochastic PDE

where we have used that \({\tilde{\nu }}^{\min }=d{\mathcal {L}}\) and that \(W=-\cos (2\pi x)\) has zero average to simplify the linearisation of the nonlinear PDE (1.8) and \(\xi \) is the space and time derivative of the cylindrical Wiener process. More specifically, if we consider \(\{e_k\}_{k\in {\mathbb {Z}}}\) the standard orthonormal Fourier basis of \(L^2({\mathbb {T}})\) given by

then we can express

where \(\{{\dot{B}}_k\}_{k\in {\mathbb {Z}}}\) is a countable family of independent \({\mathbb {T}}\)-valued Wiener processes. In particular, we can decompose (1.32) by projecting it onto each mode to obtain a family of uncoupled SDEs given by

where we have used the trigonometric identity

In particular, we can find the invariant measure explicitly for each mode

where \({\mathcal {N}}\) is the normal distribution. From (1.34) we can clearly identify the phase transition \(\beta _c=2\), at which the SPDE (1.32) no longer supports an invariant measure.

Taking limits in (1.30) and using that \(W(0)=-1\) we obtain that for this specific system we have the bound

where we have used the trigonometric identity (1.33) and the law (1.34) of the projections of \({\mathcal {G}}^\infty \). Decomposing \(M_N\) into its marginals, we can use the subadditivity of the relative entropy to conclude that

where \(\left\lfloor N/n\right\rfloor \) is the largest integer not exceeding N/n. We note that this estimate holds all the way up to the phase transition for this system \(\beta _c=2\). Similarly, using (1.31) we obtain that for every \(\delta >0\), we have the estimate

for N large enough. To conclude this subsection, we rewrite these bounds into a general provisional theorem (cf. Remark 1.31).

Theorem 1.30

Consider \({\tilde{\nu }}^{\min }\), the unique minimiser of the periodic mean field energy \({\tilde{E}}_{MF}\) (1.26) and \(Z_{\min }\), its associated partition function. Assume that W is smooth and there exists \(l\in {\mathbb {R}}\) such that

Then, there exists \(C>0\) such that the following estimates hold:

and

Remark 1.31

Note that if the formal central limit theorem discussed at the start of the subsection (in the spirit of [19]) could be proved rigorously then (1.35) would hold for some exponent l which depends on dimension. For the case \(\beta =\beta _c\), we cannot expect (1.35) to hold (cf. [11]).

Proof

By (1.30), we need to show that there exists a C depending on \(\beta \), V, and W such that

for some \(C>0\). By using the smoothness of W, we obtain the following estimate:

The result now follows by applying hypothesis (1.35). \(\square \)

2 Proof of Theorem 1.10

We start the proof of Theorem 1.10 with some basic elliptic estimates on a time-dependent corrector problem.

Lemma 2.1

Consider the elliptic equations

where

where \({\tilde{\nu }}^\varepsilon (t,x)\) is a solution to the evolution (1.15) with initial data \({\tilde{\nu }}_0^\varepsilon \). Then, there exists a unique (up to an additive constant) smooth solution \(\chi : [0,\infty ) \times {\mathbb {T}}^d \rightarrow {\mathbb {R}}^d\), \(\chi _i \in {H}^1({\mathbb {T}}^d)\) to (2.1). Additionally, this satisfies the estimates

for all \(i = 1, \dots , d\) and \(t>0\), where \(C^{-3}({\mathbb {T}}^d)\) is the dual of \(C^3({\mathbb {T}}^d)\), and the constants \(C_1, c_k>0\) depend only on m, d,  , and  .

Proof

Existence and uniqueness We consider the equation component-wise for any \(i= 1 \dots ,d\):

Note that \({\tilde{\mu }}^\varepsilon \) is smooth and is bounded above and below uniformly in time:

Thus, by standard elliptic theory, for each \(t \ge 0\) and \(i = 1, \dots , d\), there exists a unique smooth solution \(\chi _i \in {H}^1_0({\mathbb {T}}^d)\) to (2.4). We can check that \(\chi _i\) is continuously differentiable in time, as \(\xi _i=\partial _t \chi _i\) satisfies

Similar arguments imply that there exists a unique smooth solution of the above equation \(\xi _i \in {H}^1_0({\mathbb {T}}^d)\).

Regularity We note that it is sufficient to prove the bounds (2.2) and (2.3) in the weighted space \({H}^m({\mathbb {T}}^d,{\tilde{\mu }}^\varepsilon )\) since by (2.5) these norms are equivalent to the flat space up to a time-independent multiplicative constant. We deal first with the regularity of (2.4). Testing against \(\chi _i\), we obtain

It follows then that

Now let \(\alpha \in {\mathbb {N}}^d\) be any multi-index of order \(m-1\) for some \(m \ge 1\). Testing (2.4) against \(\partial _{2 \alpha } \chi _i\), we obtain, for the left hand side,

where the coefficients are given by

Similarly, for the right hand side of (2.4), we obtain

Putting the previous two equations together and multiplying by \((-1)^{-m}\), we have

Using the exponential form of \({\tilde{\mu }}^\varepsilon \), we note that for any multi-index \(\alpha \in {\mathbb {N}}^d\) we have \(\partial _\alpha {\tilde{\mu }}^\varepsilon = f^\alpha {\tilde{\mu }}^\varepsilon \), where \(f^\alpha \) is a smooth function which is a linear combination of \(\partial _\gamma W *{\tilde{\nu }}^\varepsilon +\partial _\gamma V\) for \(\gamma \le \alpha \). This implies that we can obtain the bound

where \(C_\alpha \) depends only on  . Applying Hölder’s inequality and bounding in (2.8), we obtain

Simplifying, we obtain

We can sum over all such \(\alpha \) and recursively apply this bound along with (2.7) to obtain (2.2). Note that the \({L}^2({\mathbb {T}}^d)\) norm can be controlled by the Poincaré inequality since \(\chi _i\) is mean zero.

Before we turn to the regularity of (2.6), we derive the estimates

where we denote by \(C^{-3}({\mathbb {T}}^d)\) the dual of \(C^3({\mathbb {T}}^d)\) and equip it with the norm  . Similarly, for \(\alpha \in {\mathbb {N}}^d\), the following estimate holds:

Next, we test (2.6) against \(\xi _i\) to obtain

where we have simply used (2.9) and applied the Cauchy–Schwarz inequality. It follows that

where the constant C is independent of t and depends on  , V, and W. We omit the details but an essentially similar argument to the one used for (2.4) will give us an estimate of the form

where  , and the constants \(C',\;C_l\) are independent of t and depend on the norms of \(\chi _i\), W, V, and their derivatives. Recursively applying these bounds one obtains (2.3). \(\square \)

Next, we bound \(\Vert \partial _t {\tilde{\nu }}^\varepsilon \Vert _{C^{-3}({\mathbb {T}}^d)}\) by \(d_2({\tilde{\nu }}^\varepsilon ,{\tilde{\nu }}^*)\).

Lemma 2.2

Assuming that \({\tilde{\nu }}^\varepsilon \) and \({\tilde{\nu }}^*\) are a solution and a steady state of (1.15), respectively, then

where the constant C depends on dimension, \(\beta \),  , and  .

Proof

Using (1.15) and that \({\tilde{\nu }}^*\) is a steady state, we obtain that, for any test function \(\varphi \),

and

Therefore,

Using the dual formulation of the 1-Wasserstein distance we can obtain the following bound:

Finally, bounding the 1-Wasserstein distance by the 2-Wasserstein distance, we obtain

Thus we have the desired estimate. \(\square \)

We now study the behaviour of the underlying SDE associated to (1.14).

Lemma 2.3

Consider the mean field SDE

where \(\nu (t)\) is a solution of (1.14) with initial data \(\nu _0^\varepsilon \) and \(B_t\) is a standard d-dimensional Wiener process. Then for fixed \(\varepsilon >0\), the random variables \(t^{-1/2}Y_t^\varepsilon \) converge in law (specifically in \(d_2\)) as \(t \rightarrow \infty \) to a mean zero Gaussian random variable Y with covariance matrix \(2 A^{\mathrm {eff}}_* \in {\mathbb {R}}^{d \times d}\).

Proof

Consider \(\nu ^\varepsilon (t)\), the solution to the mean field PDE (1.14) with initial data given by \(\nu ^\varepsilon _0\) (we add the \(\varepsilon \) superscript to \(\nu (t)\) to emphasise the dependence of the initial data on \(\varepsilon \)). As V and W are smooth 1-periodic functions, it follows that \((W*\nu (t))(x)\) is also 1-periodic and is equal to \((W*{\tilde{\nu }}^\varepsilon (t))(x)\), where \({\tilde{\nu }}^\varepsilon \) is the periodic rearrangement of \(\nu ^\varepsilon (t)\). Thus the SDE in (2.10) can be rewritten as

where \({\dot{Y}}_t^\varepsilon \) is the quotient process, i.e. \(({\dot{Y}}_t^\varepsilon )_j=(Y_t^\varepsilon )_j\; (\text{ mod } \; 1)\) for all \(j= 1,\dots ,d\). Furthermore, \({\dot{Y}}_t^\varepsilon \) satisfies the SDE

where \({\dot{B}}_t\) is a \({\mathbb {T}}^d\)-valued Wiener process. Now consider the unique solution \(\chi (t,\cdot ) \in {H}^1({\mathbb {T}}^d,{\tilde{\mu }}^\varepsilon )\) of the time-dependent corrector problem in (2.1) given by Lemma 2.1. Applying Itô’s lemma to \(\chi (t,Y_t^\varepsilon )\), we obtain

where we have used the fact that \(f(Y_t^\varepsilon )=f({\dot{Y}}_t^\varepsilon )\) for any 1-periodic function f and the equation for \({\tilde{\mu }}^\varepsilon \). Using the fact that \(\chi \) satisfies (2.1), the above expression simplifies to

Integrating (2.11) from 0 to t and adding the above expression, we obtain

Multiplying by \(t^{-1/2}\), we obtain

To analyse the limit of \(t^{-1/2}Y_t^\varepsilon \) we start by showing that the first three terms on the RHS of the above expression go to zero in \({L}^\infty ({\mathbb {P}})\) as \(t \rightarrow \infty \). Picking \(m>d/2\) and applying the results of Lemma 2.1 along with Morrey’s inequality, we have

where in the last step we have used Lemma 2.2 and applied assumption (A1). For the fourth term we simply use the fact that \(Y_0^\varepsilon \) has finite second moment to argue that it goes to zero in \({L}^2({\mathbb {P}})\). Thus studying the behaviour of \(t^{-1/2} Y_t^\varepsilon \), in law, as \(t \rightarrow \infty \) is equivalent to studying the asymptotic behaviour of the martingale term \(Z_t\), where

We will proceed in steps: In Step 1, we will argue that the \(\chi \) in the above expression can be replaced by \(\Psi ^*\), where \(\Psi ^*\) solves (1.21). In Step 2, we will compute the limiting covariance matrix of \(Z_t\) as \(t \rightarrow \infty \) and show that it is precisely \(2 A^{\mathrm {eff}}_*\). Finally, in Step 3, we will argue that the limiting random variable is a mean zero Gaussian.

Step 1. First note that \(\mu ^\varepsilon (t) \rightarrow {\tilde{\nu }}^*\) in \(L^\infty \) as \(t \rightarrow \infty \). Indeed, we have that

where we have used (A1). We now argue that \(\nabla \chi \) converges to \(\nabla \Psi ^*\) in \({L}^2({\mathbb {T}}^d; {\mathbb {R}}^d)\). We perform the proof component-wise using the weak formulations of (2.1) and (1.21):

Choosing \(\phi =\chi _i- \Psi ^*_i\) and using the uniform lower bound from (2.5), we obtain that

using (2.13). Thus we can now simply apply Itô’s isometry as follows:

Picking some \(m >d/2\) and applying Morrey’s inequality, we obtain

where we have applied the Gagliardo–Nirenberg–Sobolev inequality and \(\alpha =m/(m+1)\). We bound the \({H}^{m+1}\)-norm in the above expression by a uniform constant using Lemma 2.1 and the fact that \(\Psi ^*\) is the solution of a uniformly elliptic PDE with smooth coefficients. Hence, using (2.14), we obtain

Step 2. In this step, we compute the limiting covariance as \(t \rightarrow \infty \) of the following term:

Applying Itô’s isometry again, we have

We can bound the first term as

where we have used (A1) and the fact that \(\nabla \Psi ^*\) is Lipschitz. Then, from (2.17), it follows that

where in the penultimate step we have used the fact that \(\Psi ^*\) satisfies (1.21).
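The limiting covariance computed in Step 2 can be made explicit in one dimension. The following is a minimal sketch under assumptions that are ours and not taken from the text above: we take \(d=1\), we write \(U\) for a hypothetical smooth 1-periodic effective potential standing in for the combination of V and \(W*{\tilde{\nu }}^*\), we assume the invariant measure has density proportional to \(e^{-\beta U}\), and we assume the standard homogenisation form \(A^{\mathrm {eff}} = \beta ^{-1}\int (1+\Psi ')^2 \,\mathrm {d}{\tilde{\nu }}^*\), with the sign convention for \(\Psi \) chosen accordingly. In that case the corrector equation integrates to \(1+\Psi ' = e^{\beta U}/{\hat{Z}}\), and the quadratic form reduces to \(1/(\beta Z {\hat{Z}})\), where Z and \({\hat{Z}}\) are the integrals of \(e^{-\beta U}\) and \(e^{\beta U}\) over one period. The function names below are illustrative.

```python
import math


def bessel_i0(x, terms=60):
    """Modified Bessel function I_0(x), summed from its power series."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (x / 2.0) ** 2 / ((k + 1) ** 2)
    return total


def periodic_mean(f, n=4000):
    """Integral over one period of a smooth 1-periodic function (trapezoid rule)."""
    return sum(f(j / n) for j in range(n)) / n


def effective_diffusion_1d(potential, beta, n=4000):
    """Return (quadratic-form value, closed-form value) of the assumed A_eff.

    Assumption: 1 + Psi' = exp(beta * U) / Zhat solves the 1D corrector problem
    and the invariant density is exp(-beta * U) / Z.
    """
    z = periodic_mean(lambda x: math.exp(-beta * potential(x)), n)
    z_hat = periodic_mean(lambda x: math.exp(beta * potential(x)), n)
    # Integrate (1 + Psi')^2 against the invariant density.
    quad = periodic_mean(
        lambda x: (math.exp(beta * potential(x)) / z_hat) ** 2
        * math.exp(-beta * potential(x)) / z,
        n,
    )
    return quad / beta, 1.0 / (beta * z * z_hat)


# Hypothetical example: U(x) = cos(2 pi x), for which Z = Zhat = I_0(beta).
beta = 2.0
direct, closed = effective_diffusion_1d(lambda x: math.cos(2.0 * math.pi * x), beta)
limiting_variance = 2.0 * closed  # variance of the Gaussian limit in this toy case
```

For a non-constant potential the value is strictly smaller than the bare diffusivity \(\beta ^{-1}\), and for the cosine potential it agrees with \(1/(\beta I_0(\beta )^2)\).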

Step 3. In the final step, we will show that the limit in law of \(G_t^\varepsilon \) as \(t \rightarrow \infty \) is a Gaussian random variable. The key step involves replacing \({\dot{Y}}_t^\varepsilon \) in the expression for \(G_t^\varepsilon \) in (2.16) by \({\dot{X}}_t\), where \({\dot{X}}_t\) solves

Here \({\dot{X}}_t\) is a stationary, ergodic process with invariant measure \({\tilde{\nu }}^*\) and is precisely the process \({\dot{Y}}_t\) started from the invariant measure \({\tilde{\nu }}^*\). We assert now (cf. Lemma A.1) that (A1) implies that there exists a coupling of \(({\dot{X}}_t,{\dot{Y}}_t)\) (indeed a reflection coupling) such that  as \(t \rightarrow \infty \). Using this, we obtain,

where we simply use Itô’s isometry and the fact that \(\Psi ^*\) has a Lipschitz gradient. Thus we are left to analyse the following term:

Here \({\dot{X}}_s\) is a stationary ergodic process. Additionally, we know the limiting covariance of the above term, i.e. \(2 A^{\mathrm {eff}}_*\). We now apply the Birkhoff ergodic theorem followed by the martingale central limit theorem (cf. [31, Theorem 2.1] or [46, Theorem 2.1]) to complete the proof. The fact that the convergence is also in \(d_2\) follows from the fact that the covariance matrices also converge. \(\square \)

The above result holds for a fixed \(\varepsilon >0\); however, we can improve it by using the fact that the convergence in (A1) is uniform in \(\varepsilon >0\). A consequence of the analysis in the previous result is the following corollary:

Corollary 2.4

Consider the process

where \(\chi \) is the solution of the time-dependent corrector problem (2.1) and \(Y_t^\varepsilon \) solves (2.10). Then

where \({\dot{X}}_t\) solves (2.19) and is coupled to \({\dot{Y}}_t^\varepsilon \) as in the proof of Lemma 2.3.

Proof

The proof of this result follows from the fact that the convergences in (2.12), (2.13), (2.15), (2.18), and (2.20) are all controlled by (A1), which is uniform in \(\varepsilon >0\). \(\square \)

We can finally put all the pieces together and complete the proof of Theorem 1.10.

Proof of Theorem 1.10

We would like to understand the behaviour of the trajectory \(S_t^\varepsilon \rho _0^\varepsilon \), where \(S_t^\varepsilon \) is the solution semigroup associated to (1.8). However, we know that \(S_t^\varepsilon \rho _0^\varepsilon = \mathrm {Law}(\varepsilon Y^\varepsilon _{t/\varepsilon ^2})\), where \(Y_t^\varepsilon \) is the solution of (2.10) with initial law \(\varepsilon ^d \rho ^\varepsilon _0(\varepsilon x)\). Fix \(t=1\) and set \(\varepsilon ^{-2}=s\). Thus we have that

Applying Corollary 2.4, we can pass to the limit \(s \rightarrow \infty \) for the second term on the right hand side of the above expression. Since the convergence is in \({L}^2({\mathbb {P}})\), we can replace the second term in the limit as \(s \rightarrow \infty \) as follows:

Here \({\dot{X}}_u\) solves (2.19). The two random variables on the right hand side of the above expression are independent. Thus we can rewrite the above expression as

where \(F_s\) is the law of  . Since both laws converge in \(d_2\), their convolution converges to the convolution of the individual limits in \(d_2\) as \(s \rightarrow \infty \). The limit of \(F_s\) can be obtained by the martingale central limit theorem as in the proof of Lemma 2.3 while the limit of \(\rho _0^{s^{-1/2}}\) is \(\rho _0^*\). Thus we have that

Rewriting the same in terms of the laws, we have

The choice of time \(t=1\) was arbitrary. The same arguments can be repeated for arbitrary \(t \ge 0\) to complete the proof of (1.20). Assume now that the initial data of (1.3), \(\rho _0^{\varepsilon ,N}\) is such that \(\lim _{N \rightarrow \infty }\rho _0^{\varepsilon ,N} =X_0^\varepsilon =\delta _{\rho _0^\varepsilon }\). We can then apply Theorem B to first assert that, for a fixed \(t>0\),

Let \(X_0=\delta _{\rho _0^*}\) and consider some \(\Phi \in \mathrm{Lip}({\mathcal {P}}({\mathbb {R}}^d))\). Then we have

where in the last step we have simply applied (1.20), thus completing the proof of the theorem. \(\square \)
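The proof above passes to the limit in a convolution of two laws, each converging in \(d_2\). A minimal sketch of why this is harmless, in a toy Gaussian setting of our own choosing: on the real line the \(d_2\) distance between \(N(m_1,\sigma _1^2)\) and \(N(m_2,\sigma _2^2)\) is \(\sqrt{(m_1-m_2)^2+(\sigma _1-\sigma _2)^2}\), and the convolution of independent Gaussians is again Gaussian, so the general bound \(d_2(\mu _1*\nu _1,\mu _2*\nu _2)\le d_2(\mu _1,\mu _2)+d_2(\nu _1,\nu _2)\) can be checked in closed form. The particular families standing in for \(F_s\) and the rescaled initial law, and the numerical value 0.3 used for the effective diffusivity, are hypothetical and serve only as an illustration.

```python
import math


def w2_gauss(m1, s1, m2, s2):
    """d_2 distance between N(m1, s1^2) and N(m2, s2^2) on the real line."""
    return math.hypot(m1 - m2, s1 - s2)


def convolve_gauss(m1, s1, m2, s2):
    """Law of the sum of two independent Gaussians, returned as (mean, std)."""
    return m1 + m2, math.hypot(s1, s2)


def convolution_gap(s):
    """Compare d_2 of the convolutions with the sum of the individual d_2 gaps."""
    a_eff = 0.3  # hypothetical effective diffusivity
    f_s = (0.0, math.sqrt(2.0 * a_eff) * (1.0 + 1.0 / s))  # toy stand-in for F_s
    rho_s = (1.0 / math.sqrt(s), 1.0 + 1.0 / s)  # toy stand-in for the initial law
    f_inf = (0.0, math.sqrt(2.0 * a_eff))
    rho_inf = (0.0, 1.0)
    lhs = w2_gauss(*convolve_gauss(*f_s, *rho_s), *convolve_gauss(*f_inf, *rho_inf))
    rhs = w2_gauss(*f_s, *f_inf) + w2_gauss(*rho_s, *rho_inf)
    return lhs, rhs


# The left-hand side is dominated by the right-hand side, and both vanish as s grows.
gaps = [convolution_gap(s) for s in (1.0, 10.0, 1e2, 1e4, 1e6)]
```

The inequality itself holds for arbitrary laws with finite second moments, by summing independent copies of optimal couplings; the Gaussian case is used here only because every quantity is explicit.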

3 Proof of Theorem 1.16

Strategy of proof

We first need to pass to the limit in the covariance matrix

To do this, we first pass to the limit in the Poisson equation for \(\Psi ^N:\big ({\mathbb {T}}^d\big )^N\rightarrow \big ({\mathbb {R}}^d\big )^N\)

with

Once we have obtained the limit of the covariance matrix, we then need to pass to the limit in the equation

We do this by testing against cylindrical test functions, that is to say functions that depend on a finite number of variables, which is enough to determine the limit in \({\mathcal {P}}({\mathcal {P}}({\mathbb {R}}^d))\).

Step 1. We start by showing a few a priori estimates. First, we show that for every \(1\le i\le Nd\) and \(k\in \{1,\ldots ,N\}\) such that i does not belong to the particle k (i.e. \(i\notin [(k-1)d+1,kd]\)) the solution of the corrector problem (3.2) satisfies

where

for any smooth function \(\phi : ({\mathbb {T}}^d)^N \rightarrow {\mathbb {R}}\). Testing the i-th equation of (3.2) against \(\Psi _i^N\), applying the Cauchy–Schwarz inequality, and using the fact that \(M_N\) has mass one, we obtain

which implies the first inequality in (3.4). The second inequality follows due to the exchangeability of the particles. In fact, for any \(k_1,\; k_2\in \{1,\ldots ,N\}\) and \(i\notin [(k_1-1)d+1, k_1d]\cup [(k_2-1)d+1, k_2d]\) we have that up to exchanging the \(k_1\) and \(k_2\) particles (i.e. changing variables)

Combining this with the first inequality of (3.4) we obtain the second inequality of (3.4).

Next, we show that there exists \(C>0\) such that

where \(M_{N-1}\) is the Gibbs measure associated to the quotiented \((N-1)\)-particle system trivially extended to \(\big ({\mathbb {T}}^d\big )^N\) and \((M_N)_1\) is the first marginal of \(M_N\). We start by rewriting

Differentiating the previous expression with respect to \(x_1\), we obtain

By (3.6) and (3.7) and the fact that V and W are sufficiently regular, the desired estimate follows if we can show that \(Z_{N-1}/Z_{N}\) is bounded above and below. This follows from the estimate

and its analogue for the reverse bound.

Step 2. Next we show that we can in a suitable sense decompose \(M_N\) by the product \((M_N)_1 M_{N-1}\). To be precise, we show that, for every \(x_1\in {\mathbb {T}}^d\),

We notice that

and we rewrite

where the function \(u^N:{\mathbb {T}}^d\times \big ({\mathbb {T}}^d\big )^{N-1}\rightarrow {\mathbb {R}}\) is given by

Therefore, by (3.5), we can show (3.8) by showing that

This will follow from the chaoticity assumption on \(M_N\) and a version of the Arzela–Ascoli theorem for the limit of symmetric functions, where we employ an idea that was proposed by Lions [33] in the context of mean field games (cf. [7, 23]).

We show that the sequence of functions \(\{u^N\}_{N\in {\mathbb {N}}}\) induces a compact sequence \(\{U^N\}_{N\in {\mathbb {N}}}\subset C({\mathbb {T}}^d\times {\mathcal {P}}({\mathbb {T}}^d))\) and that (3.9) can be written in terms of the limit of \(U^N\). We start by noticing that \(u^N\) is continuous and symmetric in the variables \(x_2\) through \(x_N\), and that there exists a constant \(C(\beta ,W,V)\) such that

Using the symmetry of \(u^N\) and the previous bound, we can estimate

with \(\sigma \) an arbitrary permutation of the indices \(\{2,3,\ldots ,N\}\). Taking the infimum over \(\sigma \), we obtain that

where \(d_1\) denotes the 1-Wasserstein distance on \({\mathcal {P}}({\mathbb {T}}^d)\). For any \((x_1,\mu )\in {\mathbb {T}}^d\times {\mathcal {P}}({\mathbb {T}}^d)\), we define

It follows directly from (3.10) that

Using (3.11), we can rewrite (3.9) as

where \({\hat{M}}_{N-1}\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\) is the empirical measure associated with \(M_{N-1}\) as defined in Definition 1.2.
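The passage from a bound valid for every permutation \(\sigma \) to a bound in terms of \(d_1\) rests on the fact that, for two empirical measures with the same number of atoms, an optimal coupling can be taken to be a permutation. A minimal sketch on the one-dimensional torus, with a symmetric test function of our own choosing (the mean of \(\cos (2\pi x_i)\), which is Lipschitz with constant \(2\pi \)) standing in for \(u^N\): the brute-force minimum over permutations is only feasible for a handful of atoms and is meant purely as an illustration.

```python
import itertools
import math


def torus_dist(a, b):
    """Geodesic distance on the one-dimensional torus R/Z."""
    r = abs(a - b) % 1.0
    return min(r, 1.0 - r)


def d1_empirical(xs, ys):
    """1-Wasserstein distance between two empirical measures with equally many atoms.

    Computed as the minimum over permutations of the average matched distance.
    """
    n = len(xs)
    return min(
        sum(torus_dist(x, ys[j]) for x, j in zip(xs, perm)) / n
        for perm in itertools.permutations(range(n))
    )


def u(xs):
    """Hypothetical symmetric function: the mean of cos(2 pi x_i)."""
    return sum(math.cos(2.0 * math.pi * x) for x in xs) / len(xs)


xs = [0.05, 0.40, 0.90, 0.62]
ys = [0.15, 0.33, 0.80, 0.70]
# Symmetry plus the Lipschitz bound for each matching give a bound in d_1.
gap = abs(u(xs) - u(ys))
bound = 2.0 * math.pi * d1_empirical(xs, ys)
```

Since the function is symmetric, relabelling the atoms of either configuration changes neither side of the inequality, which is why the estimate only sees the empirical measures.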

Next, we show that \(U^N\) is Lipschitz with respect to the 1-Wasserstein distance, i.e.

By the definition of \(U^N(y_1,\nu )\), for every \(\delta >0\) there exists \((z_2,\ldots ,z_N)\) such that

By the definition of \(U^N(x_1,\mu )\), the Lipschitz property of \(u^N\) (3.10), and the triangle inequality for \(d_1\), we have

Since \(\delta >0\), \((x_1,\mu )\) and \((y_1,\nu )\) are arbitrary, (3.13) follows. Due to the compactness of \({\mathbb {T}}^d\), the space \({\mathbb {T}}^d\times {\mathcal {P}}({\mathbb {T}}^d)\) equipped with the metric \(d_{{\mathbb {T}}^d}+d_1\) is also compact. Therefore, by the Arzela–Ascoli theorem and the uniform Lipschitz bound in (3.13), we have that, up to a subsequence, there exists \(U\in C^0({\mathbb {T}}^d\times {\mathcal {P}}({\mathbb {T}}^d))\) such that

Finally, we use the assumption that \({\hat{M}}_{N-1}\rightarrow \delta _{{\tilde{\nu }}^{\min }}\in {\mathcal {P}}({\mathcal {P}}({\mathbb {T}}^d))\) (see Remark 1.17), to obtain that, up to a subsequence,

As the limit is independent of the subsequence we have chosen, we obtain (3.12), which implies (3.8).
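The extension of \(u^N\) to \(U^N\) used in Step 2 is defined through an infimum, and the argument for (3.13) is the usual one for a Lipschitz extension. The following is a minimal sketch of that mechanism under an assumption of ours: we take the extension to be of McShane type, \(F(x)=\min _a \{f(a)+L\,d(x,a)\}\), which may differ in detail from the definition of \(U^N\). The setting is also simplified: points of the one-dimensional torus replace pairs \((x_1,\mu )\), and a sampled sine function replaces \(u^N\).

```python
import math


def torus_dist(a, b):
    """Geodesic distance on the one-dimensional torus R/Z."""
    r = abs(a - b) % 1.0
    return min(r, 1.0 - r)


def mcshane_extension(samples, lip):
    """Extend a lip-Lipschitz function given on sample points to the whole torus.

    samples maps each sample point a to its value f(a); the extension is
    F(x) = min over a of f(a) + lip * dist(x, a).
    """
    def extended(x):
        return min(value + lip * torus_dist(x, a) for a, value in samples.items())

    return extended


# Hypothetical data: sin(2 pi x) sampled on a coarse grid, Lipschitz constant 2 pi.
grid = [k / 8 for k in range(8)]
samples = {a: math.sin(2.0 * math.pi * a) for a in grid}
lip = 2.0 * math.pi
F = mcshane_extension(samples, lip)
value_off_grid = F(0.3)  # defined even though 0.3 is not a sample point
```

The extension agrees with the data on the sample points and keeps the same Lipschitz constant, since it is a minimum of functions that are each Lipschitz with that constant. These are the two properties that the compactness argument requires.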

Step 3. Now we are ready to pass to the limit in the Poisson equation (3.2). As the dimension of the space on which the problem is posed grows with N, we consider test functions that depend on a finite number of variables. We take some \(\varphi \in [C^1({\mathbb {T}}^d)]^d\) and consider its trivial extension by 1 to \(\big ({\mathbb {T}}^d\big )^N\) to test the first d equations in (3.2):

Here \(\nabla \Psi ^N(x): \nabla _{x_1}\varphi \) denotes the inner product between matrices and we notice that \(\nabla _{x_1} \varphi \) has non-trivial entries only for \(1\le i, j\le d\). Integrating the variables \(x_2\) to \(x_N\) in the right hand side of (3.14) we obtain

where \((M_N)_1\) is the first marginal of \(M_N\).

For the left hand side of (3.14), we notice that, by (3.4) from Step 1 and (3.8) from Step 2, we can exchange \(M_N\) in the integrand with the product \(M_{N-1}(M_N)_1\):

where in the last equality we have used (3.8) and that

independently of N to be able to apply Lebesgue dominated convergence to pass to the limit in the outer integral.

Hence, putting together (3.14), (3.15) and (3.16) we obtain

To pass to the limit in (3.17), we use the a priori estimates (3.4) and (3.5) proved in Step 1, which say that there exists \(C>0\) such that for every \(N\in {\mathbb {N}}\) and \(i\le d\) we have