Abstract

The DIA method for the detection, identification and adaptation of model misspecifications combines estimation with testing. The aim of the present contribution is to introduce a unifying framework for the rigorous capture of this combination. By using a canonical model formulation and a partitioning of misclosure space, we show that the whole estimation–testing scheme can be captured in one single DIA estimator. We study the characteristics of this estimator and discuss some of its distributional properties. With the distribution of the DIA estimator provided, one can then study all the characteristics of the combined estimation and testing scheme, as well as analyse how they propagate into final outcomes. Examples are given, as well as a discussion on how the distributional properties compare with their usage in practice.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The DIA method for the detection, identification and adaptation of model misspecifications, together with its associated internal and external reliability measures, finds its origin in the pioneering work of Baarda (1967, 1968a), see also e.g. Alberda (1976), Kok (1984), Teunissen (1985). For its generalization to recursive quality control in dynamic systems, see Teunissen and Salzmann (1989), Teunissen (1990). The DIA method has found its use in a wide range of applications, for example, for the quality control of geodetic networks (DGCC 1982), for geophysical and structural deformation analyses (Van Mierlo 1980; Kok 1982), for different GPS applications (Van der Marel and Kosters 1990; Teunissen 1998b; Tiberius 1998; Hewitson et al. 2004; Perfetti 2006; Drevelle and Bonnifait 2011; Fan et al. 2011) and for various configurations of integrated navigation systems (Teunissen 1989; Salzmann 1993; Gillissen and Elema 1996).

The DIA method combines estimation with testing. Parameter estimation is conducted to find estimates of the parameters one is interested in and testing is conducted to validate these results with the aim to remove any biases that may be present. The consequence of this practice is that the method is not one of estimation only, nor one of testing only, but actually one where estimation and testing are combined.

The aim of the present contribution is to introduce a unifying framework that captures the combined estimation and testing scheme of the DIA method. This implies that one has to take the intricacies of this combination into consideration when evaluating the contributions of the various decisions and estimators involved. By using a canonical model formulation and a partitioning of misclosure space, we show that the whole estimation–testing scheme can be captured in one single estimator \(\bar{x}\). We study the characteristics of this estimator and discuss some of its distributional properties. With the distribution of \(\bar{x}\) provided, one can then study all the characteristics of the combined estimation and testing scheme, as well as analyse how they propagate into the final outcome \(\bar{x}\).

This contribution is organized as follows. After a description of the null and alternative hypotheses considered, we derive the DIA estimator \(\bar{x}\) in Sect. 2. We discuss its structure and identify the contributions from testing and estimation, respectively. We also discuss some of its variants, namely when adaptation is combined with a remeasurement of rejected data or when adaptation is only carried out for a subset of misclosure space. In Sect. 3, we derive the distribution of the estimator \(\bar{x}\). As one of its characteristics, we prove that the estimator is unbiased under \(\mathcal {H}_{0}\), but not under any of the alternative hypotheses. We not only prove this for estimation, but also for methods of prediction, such as collocation, Kriging and the BLUP. Thus although testing has the intention of removing biases from the solution, we prove that this is not strictly achieved. We show how the bias of \(\bar{x}\) can be evaluated and on what contributing factors it depends.

In Sect. 4, we decompose the distribution conditionally over the events of missed detection, correct identification and wrong identification, thus providing insight into the conditional biases as well. We also discuss in this context the well-known concept of the minimal detectable bias (MDB). In order to avoid a potential pitfall, we highlight here that the MDB is about detection and not about identification. By using the same probability of correct detection for all alternative hypotheses \(\mathcal {H}_{a}\), the MDBs can be compared and provide information on the sensitivity of rejecting the null hypothesis for \(\mathcal {H}_{a}\)-biases the size of their MDBs. The MDBs are therefore about correct detection and not about correct identification. This would only be true in the binary case, when next to the null hypothesis only a single alternative hypothesis is considered. Because of this difference between the univariate and multivariate case, we also discuss the probability of correct identification and associated minimal bias sizes.

In Sect. 5, we discuss ways of evaluating the DIA estimator. We make a distinction between unconditional and conditional evaluations and show how they relate to the procedures followed in practice. We point out, although the procedures followed in practice are usually a conditional one, that the conditional distribution itself is not strictly used in practice. In practice, any follow-on processing for which the outcome of \(\bar{x}\) is used as input, the distribution of the estimator under the identified hypothesis is used without regards to the conditioning process that led to the identified hypothesis. We discuss this difference and show how it can be evaluated. Finally, a summary with conclusions is provided in Sect. 6. We emphasize that our development will be nonBayesian throughout. Hence, the only random vectors considered are the vector of observables y and functions thereof, while the unknown to-be-estimated parameter vector x and unknown bias vectors \(b_{i}\) are assumed to be deterministic.

2 DIA method and principles

2.1 Null and alternative hypotheses

Before any start can be made with statistical model validation, one needs to have a clear idea of the null and alternative hypotheses \(\mathcal {H}_{0}\) and \(\mathcal {H}_{i}\), respectively. The null hypothesis, also referred to as working hypothesis, consists of the model that one believes to be valid under normal working conditions. We assume the null hypothesis to be of the form

with \(\mathsf {E}(.)\) the expectation operator, \(y \in \mathbb {R}^{m}\) the normally distributed random vector of observables, \(A \in \mathbb {R}^{m \times n}\) the given design matrix of rank n, \(x \in \mathbb {R}^{n}\) the to-be-estimated unknown parameter vector, \(\mathsf {D}(.)\) the dispersion operator and \(Q_{yy} \in \mathbb {R}^{m \times m}\) the given positive-definite variance matrix of y. The redundancy of \(\mathcal {H}_{0}\) is \(r=m-\mathrm{rank}(A)=m-n\).

Under \(\mathcal {H}_{0}\), the best linear unbiased estimator (BLUE) of x is given as

with \(A^{+}=(A^{T}Q_{yy}^{-1}A)^{-1}A^{T}Q_{yy}^{-1}\) being the BLUE-inverse of A. As the quality of \(\hat{x}_{0}\) depends on the validity of the null hypothesis, it is important that one has sufficient confidence in \(\mathcal {H}_{0}\). Although every part of the null hypothesis can be wrong of course, we assume here that if a misspecification occurred it is confined to an underparametrization of the mean of y, in which case the alternative hypothesis is of the form

for some vector \(C_{i}b_{i} \in \mathbb {R}^{m}/\{0\}\), with \([{A}, {C_{i}}]\) a known matrix of full rank. Experience has shown that these types of misspecifications are by large the most common errors that occur when formulating the model. Through \(C_{i}b_{i}\), one may model, for instance, the presence of one or more blunders (outliers) in the data, cycle slips in GNSS phase data, satellite failures, antenna-height errors, erroneous neglectance of atmospheric delays, or any other systematic effect that one failed to take into account under \(\mathcal {H}_{0}\). As \(\hat{x}_{0}\) (cf. 2) looses its BLUE-property under \(\mathcal {H}_{i}\), the BLUE of x under \(\mathcal {H}_{i}\) becomes

with \(\bar{A}^{+}=(\bar{A}^{T}Q_{yy}^{-1}\bar{A})^{-1}\bar{A}^{T}Q_{yy}^{-1}\) the BLUE-inverse of \(\bar{A} = P_{C_{i}}^{\perp }A\) and \(P_{C_{i}}^{\perp }=I_{m}-C_{i}(C_{i}^{T}Q_{yy}^{-1}C_{i})^{-1}C_{i}^{T}Q_{yy}^{-1}\) being the orthogonal projector that projects onto the orthogonal complement of the range space of \(C_{i}\). Note that orthogonality is here with respect to the metric induced by \(Q_{yy}\).

As it is usually not only one single mismodelling error \(C_{i}b_{i}\) one is potentially concerned about, but quite often many more than one, a testing procedure needs to be devised for handling the various, say k, alternative hypotheses \(\mathcal {H}_{i}\), \(i=1, \ldots , k\). Such a procedure then usually consists of the following three steps of detection, identification and adaptation (DIA), (Baarda 1968a; Teunissen 1990; Imparato 2016):

Detection: An overall model test on \(\mathcal {H}_{0}\) is performed to diagnose whether an unspecified model error has occurred. It provides information on whether one can have sufficient confidence in the assumed null hypothesis, without the explicit need to specify and test any particular alternative hypothesis. Once confidence in \(\mathcal {H}_{0}\) has been declared, \(\hat{x}_{0}\) is provided as the estimate of x.

Identification: In case confidence in the null hypothesis is lacking, identification of the potential source of model error is carried out. It implies the execution of a search among the specified alternatives \(\mathcal {H}_{i}\), \(i=1, \ldots , k\), for the most likely model misspecification.

Adaptation: After identification of the suspected model error, a corrective action is undertaken on the \(\mathcal {H}_{0}\)-based inferences. With the null hypothesis rejected, the identified hypothesis, \(\mathcal {H}_{i}\) say, becomes the new null hypothesis and \(\hat{x}_{i}\) is provided as the estimate of x.

As the above steps illustrate, the outcome of testing determines how the parameter vector x will be estimated. Thus although estimation and testing are often treated separately and independently, in actual practice when testing is involved the two are intimately connected. This implies that one has to take the intricacies of this combination into consideration when evaluating the properties of the estimators involved. In order to help facilitate such rigorous propagation of the uncertainties, we first formulate our two working principles.

2.2 DIA principles

In the development of the distributional theory, we make use of the following two principles:

-

1.

Canonical form: as validating inferences should remain invariant for one-to-one model transformations, use will be made of a canonical version of \(\mathcal {H}_{0}\), thereby simplifying some of the derivations.

-

2.

Partitioning: to have an unambiguous testing procedure, the \(k+1\) hypotheses \(\mathcal {H}_{i}\) are assumed to induce an unambiguous partitioning of the observation space.

2.2.1 Canonical model

We start by bringing (1) in canonical form. This is achieved by means of the Tienstra-transformation (Tienstra 1956)

in which B is an \(m \times r\) basis matrix of the null space of \(A^{T}\), i.e. \(B^{T}A=0\) and \(\mathrm{rank}(B)=r\). The Tienstra transformation is a one-to-one transformation, having the inverse \(\mathcal {T}^{-1}=[A, B^{+T}]\), with \(B^{+}=(B^{T}Q_{yy}B)^{-1}B^{T}Q_{yy}\). We have used the \(\mathcal {T}\)-transformation to canonical form also for LS-VCE (Teunissen and Amiri-Simkooei 2008) and for the recursive BLUE-BLUP (Teunissen and Khodabandeh 2013). Application of \(\mathcal {T}\) to y gives under the null hypothesis (1),

in which \(\hat{x}_{0} \in \mathbb {R}^{n}\) is the BLUE of x under \(\mathcal {H}_{0}\) and \(t \in \mathbb {R}^{r}\) is the vector of misclosures (the usage of the letter t for misclosure follows from the Dutch word ’tegenspraak’). Their variance matrices are given as

As the vector of misclosures t is zero mean and stochastically independent of \(\hat{x}_{0}\), it contains all the available information useful for testing the validity of \(\mathcal {H}_{0}\). Note, since a basis matrix is not uniquely defined, that also the vector of misclosures is not uniquely defined. This is, however, of no consequence as the testing will only make use of the intrinsic information that is contained in the misclosures and hence will be invariant for any one-to-one transformation of t.

Under the alternative hypothesis (3), \(\mathcal {T}y\) becomes distributed as

Thus, \(\hat{x}_{0}\) and t are still independent, but now have different means than under \(\mathcal {H}_{0}\). Note that \(C_{i}b_{i}\) gets propagated differently into the means of \(\hat{x}_{0}\) and t. We call

These biases are related to the observation space \(\mathbb {R}^{m}\) as

with the orthogonal projectors \(P_{A}=AA^{+}\) and \(P_{A}^{\perp }=I_{m}-P_{A}\).

As the misclosure vector t has zero expectation under \(\mathcal {H}_{0}\) (cf. 6), it is the component \(b_{t}\) of \({b}_{y}\) that is testable. The component \({b}_{\hat{x}_{0}}\) on the other hand cannot be tested. As it will be directly absorbed by the parameter vector, it is this component of the observation bias \({b}_{{y}}\) that directly influences the parameter solution \(\hat{x}_{0}\). The bias-to-noise ratios (BNRs) of (9),

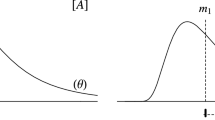

are related through the Pythagorian decomposition \(\lambda _{y}^{2}=\lambda _{\hat{x}_{0}}^{2}+\lambda _{t}^{2}\) (see Fig. 1; note: here and in the following we use the notation \(||.||_{M}^{2}=(.)^{T}M^{-1}(.)\) for a weighted squared norm). As the angle \(\phi \) between \(b_{y}\) and \(P_{A}b_{y}\) determines how much of the observation bias is testable and influential, respectively, it determines the ratio of the testable BNR to the influential BNR: \(\lambda _{t}=\lambda _{\hat{x}_{0}} \tan (\phi )\). The smaller the angle \(\phi \), the more \({b}_{y}\) and \(\mathcal {R}({A})\) are aligned and the more influential the bias will be. The angle itself is determined by the type of bias (i.e. matrix \(C_{i}\)) and the strength of the underlying model (i.e. matrices A and \({Q}_{yy}\)). The BNRs (11) were introduced by Baarda in his reliability theory to determine measures of internal and external reliability, respectively (Baarda 1967, 1968b, 1976; Teunissen 2000). We discuss this further in Sect. 5.2.

The Pythagorian BNR decomposition \(\lambda _{y}^{2}=\lambda _{\hat{x}_{0}}^{2}+\lambda _{t}^{2}\), with \(\lambda _{y}=||b_{y}||_{Q_{yy}}\), \(\lambda _{\hat{x}_{0}}= ||b_{\hat{x}_{0}}||_{Q_{\hat{x}_{0}\hat{x}_{0}}}=||Ab_{\hat{x}_{0}}||_{Q_{yy}}^{2}=||P_{A}b_{y}||_{Q_{yy}}=||b_{\hat{y}}||_{Q_{yy}}^{2}\) and \(\lambda _{t} = ||b_{t}||_{Q_{tt}}=||P_{A}^{\perp }b_{y}||_{Q_{yy}}\) (Teunissen 2000)

Due to the canonical structure of (8), it now becomes rather straightforward to infer the BLUEs of x and \(b_{i}\) under \(\mathcal {H}_{i}\). The estimator \(\hat{x}_{0}\) will not contribute to the determination of the BLUE of \(b_{i}\) as \(\hat{x}_{0}\) and t are independent and the mean of \(\hat{x}_{0}\) now depends on more parameters than only those of x. Thus, it is t that is solely reserved for the determination of the BLUE of \(b_{i}\), which then on its turn can be used in the determination of the BLUE of x under \(\mathcal {H}_{i}\). The BLUEs of x and \(b_{i}\) under \(\mathcal {H}_{i}\) are therefore given as

in which \((B^{T}C_{i})^{+}=(C_{i}^{T}BQ_{tt}^{-1}B^{T}C_{i})^{-1}C_{i}^{T}BQ_{tt}^{-1}\) denotes the BLUE-inverse of \(B^{T}C_{i}\). The result (12) shows how \(\hat{x}_{0}\) is to be adapted when switching from the BLUE of \(\mathcal {H}_{0}\) to that of \(\mathcal {H}_{i}\). With it, we can now establish the following useful transformation between the BLUEs of x under \(\mathcal {H}_{i}\) and \(\mathcal {H}_{0}\),

in which

Note that transformation (13) is in block-triangular form and that its inverse can be obtained by simply replacing \(-L_{i}\) by \(+L_{i}\).

The distribution of (13) under \(\mathcal {H}_{a}\) is given as

with the projector

This projector projects along \(\mathcal {R}(C_{i})\) and onto \(\mathcal {R}(A, Q_{yy}B(B^{T}C_{i})^{\perp })\), with \((B^{T}C_{i})^{\perp }\) a basis matrix of the null space of \(C_{i}^{T}B\). Note that \(\hat{x}_{i \ne a}\) is biased under \(\mathcal {H}_{a}\) with bias \(\mathsf {E}(\hat{x}_{i \ne a}-x | \mathcal {H}_{a}) = A^{+}R_{i \ne a}C_{a}b_{a}\). Hence, any part of \(C_{a}b_{a}\) in \(\mathcal {R}(A)\) gets directly passed on to the parameters and any part in \(\mathcal {R}(C_{i \ne a})\) or in \(\mathcal {R}(Q_{yy}B(B^{T}C_{i})^{\perp })\) gets nullified.

2.2.2 Partitioning of misclosure space

The one-to-one transformation (13) clearly shows how the vector of misclosures t plays its role in linking the BLUEs of the different hypotheses. This relation does, however, not yet incorporate the outcome of testing. To do so, we now apply our partitioning principle to the space of misclosures \(\mathbb {R}^{r}\) and unambiguously assign outcomes of t to the estimators \(\hat{x}_{i}\). Therefore, let \(\mathcal {P}_{i} \subset \mathbb {R}^{r}\), \(i=0, 1, \ldots , k\), be a partitioning of the r-dimensional misclosure space, i.e. \(\cup _{i=0}^{k} \mathcal {P}_{i} = \mathbb {R}^{r}\) and \(\mathcal {P}_{i} \cap \mathcal {P}_{j} = \{0\}\) for \(i \ne j\). Then, the unambiguous relation between t and \(\hat{x}_{i}\) is established by defining the testing procedure such that \(\mathcal {H}_{i}\) is selected if and only if \(t \in \mathcal {P}_{i}\). An alternative way of seeing this is as follows. Let the unambiguous testing procedure be captured by the mapping \(H: \mathbb {R}^{r} \mapsto \{0, 1, \ldots , k\}\), then the regions \(\mathcal {P}_{i}= \{ t \in \mathbb {R}^{r} |\; i=H(t) \}\), \(i=0, \ldots , k\), form a partition of misclosure space.

As the testing procedure is defined by the partitioning \(\mathcal {P}_{i} \subset \mathbb {R}^{r}\), \(i=0, \ldots , k\), any change in the partitioning will change the outcome of testing and thus the quality of the testing procedure. The choice of partitioning depends on different aspects, such as the null and alternative hypotheses considered and the required detection and identification probabilities. In the next sections, we develop our distributional results such that it holds for any chosen partitioning of the misclosure space. However, to better illustrate the various concepts involved, we first discuss a few partitioning examples.

Example 1

(Partitioning: detection only) Let \(\mathcal {H}_{0}\) be accepted if \(t \in \mathcal {P}_{0}\), with

If testing is restricted to detection only, then the complement of \(\mathcal {P}_{0}\), \(\mathcal {P}_{1}=\mathbb {R}^{r}/\mathcal {P}_{0}\) becomes the region for which no parameter solution is provided. Thus, \(\mathcal {P}_{0}\) and \(\mathcal {P}_{1}\) partition misclosure space with the outcomes: \(\hat{x}_{0}\) if \(t \in \mathcal {P}_{0}\) and ‘solution unavailable’ if \(t \in \mathcal {P}_{1}\). The probabilities of these outcomes, under \(\mathcal {H}_{0}\) resp. \(\mathcal {H}_{a}\), can be computed using the Chi-square distribution \(||t||_{Q_{tt}}^{2} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \chi ^{2}(r, \lambda _{t}^{2})\), with \(\lambda _{t}^{2}= ||P_{A}^{\perp }C_{a}b_{a}||_{Q_{yy}}^{2}\) (Arnold 1981; Koch 1999; Teunissen 2000). For \(\mathcal {H}_{0}\), \(b_{a}\) is set to zero.

\(\square \)

Example 2

(Partitioning: one-dimensional identification only) Let the design matrices \([A, C_{i}]\) of the k hypotheses \(\mathcal {H}_{i}\) (cf. 3) be of order \(m \times (n+1)\), denote \(C_{i}=c_{i}\) and \(B^{T}c_{i}=c_{t_{i}}\), and write Baarda’s test statistic (Baarda 1967, 1968b; Teunissen 2000) as

in which \(P_{c_{t_{i}}}=c_{t_{i}}(c_{t_{i}}^{T}Q_{tt}^{-1}c_{t_{i}})^{-1}c_{t_{i}}^{T}Q_{tt}^{-1}\) is the orthogonal projector that projects onto \(c_{t_{i}}\). If testing is restricted to identification only, then \(\mathcal {H}_{i \ne 0}\) is identified if \(t \in \mathcal {P}_{i \ne 0}\), with

Also this set forms a partitioning of misclosure space, provided not two or more of the vectors \(c_{t_{i}}\) are the same. When projected onto the unit sphere, this partitioning can be shown to become a Voronoi partitioning of \(\mathbb {S}^{r-1} \subset \mathbb {R}^{r}\). The Voronoi partitioning of a set of unit vectors \(\bar{c}_{j}\), \(j=1, \ldots , k\), on the unit sphere \(\mathbb {S}^{r-1}\) is defined as

in which the metric \(d(u,v)=\cos ^{-1}(u^{T}v)\) is the geodesic distance (great arc circle) between the unit vectors u and v (unicity is here defined in the standard Euclidean metric). If we now define the unit vectors

we have \(|w_{i}|=||t||_{Q_{tt}} \bar{c}_{i}^{T}\bar{t}\) and therefore \( \max _{i} |w_{i}| \Leftrightarrow \min _{i} d(\bar{t}, \bar{c}_{i}) \), thus showing that

For the distributions of t and \(\bar{t}\) under \(\mathcal {H}_{a}\), we have

with \(\mu _{t}= B^{T}C_{a}b_{a}\) and \(\mu _{\bar{t}}=Q_{tt}^{-1/2}B^{T}C_{a}b_{a}\). Thus, \(\bar{t}\) has a projected normal distribution, which is unimodal and rotationally symmetric with respect to its mean direction \(\mu _{\bar{t}}/||\mu _{\bar{t}}||_{I_{r}}\). The scalar \(||\mu _{\bar{t}}||_{I_{r}}=\lambda _{t}\) is a measure of the peakedness of the PDF. Thus, the larger the testable BNR \(\lambda _{t}\) is, the more peaked the PDF of \(\bar{t}\) becomes. The density of \(\bar{t}\) is given in, e.g. Watson (1983). Under \(\mathcal {H}_{0}\), when \(\mu _{\bar{t}}=0\), the PDF of \(\bar{t}\) reduces to

which is one over the surface area of the unit sphere \(\mathbb {S}^{r-1}\). Hence, under the null hypothesis, the PDF of \(\bar{t}\) is the uniform distribution on the unit sphere. The selection probabilities under \(\mathcal {H}_{0}\) are therefore given as \(\mathsf {P}( t \in \mathcal {P}_{i}| \mathcal {H}_{0}) = |V_{i}|\frac{\Gamma (\frac{r}{2})}{2 \pi ^{\frac{r}{2}}}\), in which \(|V_{i}|\) denotes the surface area covered by \(V_{i}\). \(\square \)

An important practical application of one-dimensional identification is datasnooping, i.e. the procedure in which the individual observations are screened for possible outliers (Baarda 1968a; DGCC 1982; Kok 1984). If we restrict ourselves to the case of one outlier at a time, then the \(c_{i}\)-vector of the alternative hypothesis takes the form of a canonical unit vector having 1 as its ith entry and zeros elsewhere. This would then lead to \(k=m\) regions \(\mathcal {P}_{i}\) provided not two or more of the vectors \(c_{t_{i}}=B^{T}c_{i}\) are the same. If the latter happens only one of them should be retained, as no difference between such hypotheses can then be made. This would, for instance, be the case when datasnooping is applied to levelled height differences of a single levelling loop.

Example 3

(Partitioning: datasnooping) To illustrate the datasnooping partitioning on the unit sphere, we consider the GPS single-point positioning pseudorange model,

in which the \(u_{i}\), \(i=1, \ldots , m\), are the receiver-satellite unit direction vectors of the m satellites. First we assume that the receiver position is known, thus reducing the design matrix to \(A=[1, \ldots , 1]^{T}\). Because of the symmetry in this model, one can expect the unit vectors \(\bar{c}_{i}\) (cf. 21), \(i=1, \ldots , m\), to have a symmetric distribution over the unit sphere and thus the datasnooping partitioning to be symmetric as well. Indeed, for the correlation between the \(w_{i}\)-statistics and therefore for the angle between the \(\bar{c}_{i}\) vectors, we have

Thus for \(m=3\), we have \(\cos ^{-1}(\frac{1}{2})= 60^{\circ }\) and for \(m=4\), we have \(\cos ^{-1}(\frac{1}{3})= 70.53^{\circ }\). The partitioning of this latter case is shown in Fig. 2 (top). In case the receiver position is unknown, the receiver-satellite geometry comes into play through the variations in the unit direction vectors \(u_{i}\) of (25). In that case, we expect a varying partitioning on the unit sphere. This is illustrated in Fig. 2 (bottom) for the case \(m=7\), \(n=4\). \(\square \)

Example 4

(Partitioning: detection and identification) Again let the design matrices \([A, C_{i}]\) of the k hypotheses \(\mathcal {H}_{i}\) (cf. 3) be of order \(m \times (n+1)\) and now consider detection and identification. Then

and

form a partitioning of the misclosure space, provided not two or more of the vectors \(c_{t_{i}}\) are the same. An example of such partitioning is given in Fig. 3 for \(r=2\), \(k=3\), and \(\tau ^{2}= \chi ^{2}_{\alpha }(r=2, 0)\). The inference procedure induced by this partitioning is thus that the null hypothesis gets accepted (not rejected) if \(||t||_{Q_{tt}} \le \tau \), while in case of rejection, the largest value of the statistics \(|w_{j}|\), \(j=1, \ldots , k\), is used for identifying the alternative hypothesis. In the first case, \(\hat{x}_{0}\) is provided as the estimate of x, while in the second case, \(\hat{x}_{i}=\hat{x}_{0}-L_{i}t\) (cf. 13) is provided for the identified alternative. The false- alarm selection probabilities under \(\mathcal {H}_{0}\) are now given as \(\mathsf {P}( t \in \mathcal {P}_{i \ne 0}| \mathcal {H}_{0}) = \alpha |V_{i}| \frac{\Gamma (\frac{r}{2})}{2 \pi ^{\frac{r}{2}}}\), in which \(\alpha \) is the overall level of significance. Note, the more correlated two w-test statistics \(w_{i}\) and \(w_{j}\) are, the smaller the angle \(\angle (c_{t_{i}}, c_{t_{j}})\) (see Fig. 3) and the more difficult it will be to discern between the two hypotheses \(\mathcal {H}_{i}\) and \(\mathcal {H}_{j}\), especially for small biases, see e.g. Foerstner (1983), Tiberius (1998), Yang et al. (2013b). \(\square \)

Example of a partitioning of misclosure space \(\mathbb {R}^{r=2}\) for \(k=3\). The circular region centred at the origin is \(\mathcal {P}_{0}\), while the remaining three sectors, having their borders at the bisector axes of adjacent \(c_{t_{i}}\)’s, represent the regions \(\mathcal {P}_{i}\) for \(i=1, 2, 3\). Note, since the figure has been drawn in the metric of \(Q_{tt}\), that the detection region \(\mathcal {P}_{0}\) is shown as a circle instead of an ellipse

In the above examples, the partitioning is formulated in terms of the misclosure vector t. It can, however, also be formulated by means of the least-squares residual vector \(\hat{e}_{0}=y-A\hat{x}_{0}\), thus providing a perhaps more recognizable form of testing. As \(t \in \mathbb {R}^{r}\) and \(\hat{e}_{0} \in \mathbb {R}^{m}\) are related as \(t=B^{T}\hat{e}_{0}\), we have (Teunissen 2000)

and

Thus, the actual verification in which of the regions \(\mathcal {P}_{i}\) the misclosure vector t lies can be done using the least-squares residual vector \(\hat{e}_{0}\) obtained under \(\mathcal {H}_{0}\), without the explicit need of having to compute t.

Note that in case of uncorrelated observations, i.e. \(Q_{yy}=\mathrm{diag}(\sigma _{1}^{2}, \ldots , \sigma _{m}^{2})\), the adapted design matrix \(\bar{A}_{(i)}=P_{c_{i}}^{\perp }A\) for computing the BLUE under \(\mathcal {H}_{i}\) is the original design matrix with its ith row replaced by zeros. Hence, in case of datasnooping, the BLUE \(\hat{x}_{i} = \bar{A}_{(i)}^{+}y=\hat{x}_{0}-A^{+}c_{i}\hat{b}_{i}\) is then the estimator with the ith observable excluded. Such is, for instance, done in exclusion-based RAIM (Kelly 1998; Yang et al. 2013a).

As a final note of this subsection, we remark that also other than the above partitioning examples can be given. For instance, in case of \(\mathcal {H}_{a}\)’s with one-dimensional biases, one can also find examples in which the ellipsoidal detection region (27) has been replaced by the intersection of half spaces, \(\{ t \in \mathbb {R}^{r} | |w_{i}| \le c, i=1, \ldots , k \}\), see e.g. Joerger and Pervan (2014), Imparato (2016), Teunissen et al. (2017). In fact, it is important to recognize that in the multivariate case of multihypotheses testing, no clear optimality results are available. Baarda’s test statistic \(w_{i}\) and the detector \(||t||_{Q_{tt}}\) are known to provide for uniformly most powerful invariant (UMPI) testing when \(\mathcal {H}_{0}\) is tested against a single alternative (Arnold 1981; Teunissen 2000; Kargoll 2007), but not necessarily when multiple alternatives are in play. To be able to infer the quality of the various possible partitionings, one should therefore be able to diagnose their impact on the actual output of the DIA method.

2.3 The DIA estimator

With the DIA method, the outcome of testing determines how the parameter vector x gets estimated. As the outcome of such estimation is influenced by the testing procedure, one cannot simply assign the properties of \(\hat{x}_{0}\) or \(\hat{x}_{i}\) to the actual DIA estimator computed. That is, the actual estimator that is produced is not \(\hat{x}_{0}\) nor \(\hat{x}_{i}\), but in fact (see Fig. 4)

By making use of the indicator functions \(p_{i}(t)\) of the regions \(\mathcal {P}_{i}\) (i.e. \(p_{i}(t)=1\) for \(t \in \mathcal {P}_{i}\) and \(p_{i}(t)=0\) elsewhere), the DIA estimator \(\bar{x}\) can be written in the compact form

This expression shows how the \(\hat{x}_{i}\)s and t contribute to the estimator \(\bar{x}\). If we now make use of the transformation (13), we can obtain its counterpart for \(\bar{x}\) as

with \(\bar{L}(t)=\sum _{i=1}^{k}L_{i}p_{i}(t)\). This important result shows how \([\bar{x}^{T}, t^{T}]^{T}\) stands in a one-to-one relation to \([\hat{x}_{0}^{T}, t^{T}]^{T}=\mathcal {T}y\) and thus to the original vector of observables y. Although the structure of (33) resembles that of the linear transformation (13), note that (33) is a nonlinear transformation due to the presence of t in \(\bar{L}(t)\). Hence, this implies that the DIA estimator \(\bar{x}\) will not have a normal distribution, even if all the individual estimators \(\hat{x}_{i}\), \(i=0, \ldots , k\), are normally distributed. In the next section, we derive the probability density function (PDF) of \(\bar{x}\). But before we continue, we first briefly describe two variations on the above-defined DIA procedure.

Remeasurement included In case of datasnooping, with \(Q_{yy}=\mathrm{diag}(\sigma _{1}^{2}, \ldots , \sigma _{m}^{2})\), one may take the standpoint in certain applications that rejected observations should be remeasured. After all, one may argue, the measurement setup was designed assuming the rejected observation included. If one remeasures and replaces the rejected observation, say \(y_{i}\), with the remeasured one, say \(\bar{y}_{i}\), one is actually not producing \(\hat{x}_{i}\) as output, but instead the solution for x based on the following extension of the model under \(\mathcal {H}_{i}\),

with \(a_{i}^{T}\) the ith row of A and \(\sigma _{i}^{2}\) the variance of the rejected and remeasured observations. The BLUE of x under this model is

Hence, in case the remeasurement step is included, the computed DIA estimator is the one obtained by replacing \(\hat{x}_{i}\) by \(\hat{\hat{x}}_{i}\) in (32).

Undecided included Above, each hypothesis \(\mathcal {H}_{i}\) was given its own region \(\mathcal {P}_{i}\), such that together these regions cover the whole misclosure space, \(\cup _{i=0}^{k} \mathcal {P}_{i}=\mathbb {R}^{r}\). This implies, whatever the outcome of t, that one always will be producing one of the estimates \(\hat{x}_{i}\), \(i=0, \ldots , k\), even, for instance, if it would be hard to discriminate between some of the hypotheses or when selection is unconvincing. To accommodate such situations, one can generalize the procedure and introduce an undecided region \(\Omega \subset \mathbb {R}^{r}\) for which no estimator of x is produced at all when \(t \in \Omega \). Thus when that happens, the decision is made that a solution for x is unavailable,

This is similar in spirit to the undecided regions of the theory of integer aperture estimation (Teunissen 2003a). As a consequence of (36), the regions \(\mathcal {P}_{i}\) of each of the hypotheses have become smaller and now only partition a part of the misclosure space,

As an example, consider the following generalization of (28),

Now it is also tested whether the maximum w-test statistic achieves a sufficient reduction in \(||t||_{Q_{tt}}^{2}\), i.e. whether \(||P_{c_{t_{i}}}^{\perp }t||_{Q_{tt}}^{2}=||t||_{Q_{tt}}^{2}-w_{i}^{2}\) is small enough. If this is not the case, then the undecided decision is made, see Fig. 5.

Example of undecided region \(\Omega \) (yellow) for t far away from \(\mathcal {H}_{0}\) and all \(\mathcal {H}_{i}\)s. Compare with Fig. 3

The choice made for the undecided region \(\Omega \) may of course affect the regions \(\mathcal {P}_{i \ne 0}\) of some hypotheses more than others. For instance, if one or more of the alternative hypotheses turn out to be too poorly identifiable, one may choose to have their regions \(\mathcal {P}_{i}\) completely assigned to the undecided region \(\Omega \). In that case, one would only proceed with identification for a subset of the k alternative hypotheses. In the limiting special case when all alternative hypotheses are considered too poorly identifiable, the undecided strategy would become one for which \(\Omega = \mathbb {R}^{r}/\mathcal {P}_{0}\). In this case, one thus computes \(\hat{x}_{0}\) if \(\mathcal {H}_{0}\) gets accepted, but states that the solution is unavailable otherwise. As a result, the testing procedure is confined to the detection step. This was, for instance, the case with earlier versions of RAIM which had detection but no exclusion functionality, see e.g. Parkinson and Axelrad (1988), Sturza (1988).

To conclude this section, we pause a moment to further highlight some of the intricacies of the estimator (32). As the construction of \(\bar{x}\) has been based on a few principles only, it is important to understand that the estimator describes the outcome of any DIA method. In it, we recognize the separate contributions of \(p_{i}(t)\) and \(\hat{x}_{i}\). Both contribute to the uncertainty or randomness of \(\bar{x}\). The uncertainty of testing, i.e. of detection and identification, is channelled through \(p_{i}(t)\), while the uncertainty of estimation is channelled through \(\hat{x}_{i}\). Their combined outcome provides \(\bar{x}\) as an estimator of x. It is hereby important to realize, however, that there are for now no a priori ’optimality’ properties that one can assign to \(\bar{x}\), despite the fact that its constituents do have some of such properties. The estimator \(\hat{x}_{0}\), for instance, is optimal under \(\mathcal {H}_{0}\) as it is then the BLUE of x. And in case of a single alternative hypothesis \((k=1)\), also the testing can be done in an optimal way, namely by using uniformly most powerful invariant tests. These properties, however, are individual properties that do not necessarily carry over to \(\bar{x}\). One may ask oneself, for instance, why use \(\hat{x}_{i}\) when \(\mathcal {H}_{i}\) is selected. Why not use, instead of \(\hat{x}_{i}\), an estimator that takes the knowledge of \(t \in \mathcal {P}_{i}\) into account. Also note, as the testing itself gives discrete outcomes, that the DIA estimator is a binary weighted average of all of the \(k+1\) \(\hat{x}_{i}\)s. But one may wonder whether this binary weighting is the best one can do if the ultimate goal is the construction of a good estimator of x. For instance, although the weights \(p_{i}(t)\) are binary in case of the DIA estimator, smoother weighting functions of the misclosures will provide for a larger class of estimators that may contain estimators with better performances for certain defined criteria. This in analogy with integer (I) and integer-equivariant (IE) estimation, for which the latter provides a larger class containing the optimal BIE estimator (Teunissen 2003b). And just like the nonBayesian BIE estimator was shown to have a Bayesian counterpart (Teunissen 2003b; Betti et al. 1993), the nonBayesian DIA estimator with smoother weights may find its counterpart in methods of Bayesian and information-theoretic multimodel inference (Burnham and Anderson 2002).

Answering these and similar questions on ‘optimizing’ the estimator \(\bar{x}\) is complex and not the goal of the present contribution. The aim of the present contribution is to provide a general framework that captures the testing and estimation characteristics in a combined way through the single estimator \(\bar{x}\). For that purpose, we present distributional properties of the DIA estimator \(\bar{x}\) in the next and following sections, thus making a rigorous quality evaluation of any estimator of the form of (32) possible.

3 The distribution of the DIA estimator

3.1 The joint, conditional and marginal PDFs

In order to be able to study the properties of the DIA estimator, we need its probability distribution. As its performance is driven for a large part by the misclosure vector t, we determine the joint PDF \(f_{\bar{x},t}(x,t)\) and the conditional PDF \(f_{\bar{x}|t}(x|t)\), next to the marginal PDF \(f_{\bar{x}}(x)\). We express their PDFs in the PDFs \(f_{\hat{x}_{0}}(x)\) and \(f_{t}(t)\) of \(\hat{x}_{0}\) and t, respectively. We have the following result.

Theorem 1

(PDFs of \(\bar{x}\) and t) Let \(\bar{x}\) be given as (32), with the \(\hat{x}_{i}\)s related to \(\hat{x}_{0}\) and t as in (13). Then, the joint, conditional and marginal PDFs of the DIA estimator \(\bar{x}\) and misclosure vector t can be expressed in the PDFs \(f_{\hat{x}_{0}}(x)\) and \(f_{t}(t)\) as

Proof

See Appendix. \(\square \)

This result shows that the PDF of \(\bar{x}\) is constructed from a sum with shifted versions \(f_{\hat{x}_{0}}(x+L_{i}t)\) of the PDF of \(\hat{x}_{0}\). In fact, it is a weighted average of these shifted functions,

thus showing how it is driven by the distribution of the misclosure vector t. It is thus indeed a nonnormal distribution, which only will approach one when the PDF of the misclosures is sufficiently peaked. For instance, when \(f_{t}(t)=\delta (t-\tau )\) and \(\tau \in \mathcal {P}_{j}\), then \(f_{\bar{x}}(x)=f_{\hat{x}_{0}}(x+L_{j}\tau )\).

The PDF \(f_{\bar{x}}(x|\mathcal {H}_{0})\) of the DIA estimator \(\bar{x}\) under \(\mathcal {H}_{0}\) (Example 5) for two values of \(\alpha \)

Example 5

(PDF for \(k=n=r=1\)) It follows from (39) that in case of only one alternative hypothesis \((k=1)\), the PDF of \(\bar{x}\) under \(\mathcal {H}_{0}\) can be written as

Let \(\hat{x}_{0} \mathop {\sim }\limits ^{\mathcal {H}_{0}} \mathcal {N}(0, \sigma _{\hat{x}_{0}}^{2}=0.5)\), \(t \mathop {\sim }\limits ^{\mathcal {H}_{0}} \mathcal {N}(0, \sigma _{t}^{2}=2)\) (thus \(n=r=1\)), and \(L_{1}=\frac{1}{2}\), with acceptance interval \(\mathcal {P}_{0} = \{ \tau \in \mathbb {R} | (\tau /\sigma _{t})^{2} \le \chi ^{2}_{\alpha }(1,0) \}\). Figure 6 shows this PDF for \(\alpha =0.1\) and \(\alpha =0.001\). Note that \(f_{\bar{x}}(x|\mathcal {H}_{0})\), like \(f_{\hat{x}_{0}}(x|\mathcal {H}_{0})\), is symmetric about 0, but that its tails become heavier the larger \(\alpha \) gets.\(\square \)

Quite often in practice one is not interested in the complete parameter vector \(x \in \mathbb {R}^{n}\), but rather only in certain functions of it, say \(\theta = F^{T}x \in \mathbb {R}^{p}\). As its DIA estimator is then computed as \(\bar{\theta }=F^{T}\bar{x}\), we need its distribution to evaluate its performance.

Corollary 1

Let \(\bar{\theta }=F^{T}\bar{x}\) and \(\hat{\theta }_{0}=F^{T}\hat{x}_{0}\). Then, the PDF of \(\bar{\theta }\) is given as

Although we will be working with \(\bar{x}\), instead of \(\bar{\theta }\), in the remaining of this contribution, it should be understood that the results provided can similarly be given for \(\bar{\theta }=F^{T}\bar{x}\) as well.

We also remark that here and in the remaining of this contribution, a \(k+1\) partitioning covering the whole misclosure space, \(\mathbb {R}^{r} = \cup _{i=0}^{k} \mathcal {P}_{i}\) is used. Thus, no use is made of an undecided region. The results that we present are, however, easily adapted to include such event as well. For instance, in case the \(\mathcal {P}_{i}\) do not cover the whole misclosure space and \(\Omega = \mathbb {R}^{r}{/}\cup _{i=0}^{k} \mathcal {P}_{i}\) becomes the undecided region, then the computed estimator is conditioned on \(t \in \Omega ^{c}=\cup _{i=0}^{k} \mathcal {P}_{i}\) and its PDF reads as,

The approach for obtaining such undecided-based results is thus to consider the undecided region \(\Omega \subset \mathbb {R}^{r}\) as the \((k+2)\)th region that completes the partitioning of \(\mathbb {R}^{r}\), followed by the analysis of the DIA estimator \(\bar{x}\) conditioned on \(\Omega ^{c}\), the complement of \(\Omega \).

3.2 The mean of \(\bar{x}\) under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\)

The estimators \(\hat{x}_{i}\), \(i=0, 1, \ldots , k\), (cf. 2, 4) are BLUEs and therefore unbiased under their respective hypotheses, e.g. \(\mathsf {E}(\hat{x}_{0}| \mathcal {H}_{0})=x\) and \(\mathsf {E}(\hat{x}_{a}| \mathcal {H}_{a})=x\). However, as shown earlier, these are not the estimators that are actually computed when testing is involved. In that case, it is the DIA estimator \(\bar{x}\) that is produced. As unbiasedness is generally a valued property of an estimator, it is important to know the mean of \(\bar{x}\). It is given in the following theorem.

Theorem 2

(Mean of DIA estimator) The mean of \(\bar{x}\) under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\) is given as

with

where

Proof

See Appendix. \(\square \)

The theorem generalizes the results of Teunissen et al. (2017). It shows that \(\bar{x}\), like \(\hat{x}_{0}\), is unbiased under \(\mathcal {H}_{0}\), but that, contrary to \(\hat{x}_{a}\), \(\bar{x}\) is always biased under the alternative,

It is thus important to realize that the result of adaptation will always produce an estimator that is biased under the alternative. Moreover, this is true for any form the adaptation may take. It holds true for, for instance, exclusion-based RAIM (Brown 1996; Kelly 1998; Hewitson and Wang 2006; Yang et al. 2013a), but also when adaptation is combined with remeasurement (cf. 35).

To evaluate the bias, one should note the difference between \(\mathsf {E}(\bar{x}|\mathcal {H}_{a})\) and \(\mathsf {E}(\hat{x}_{0}|\mathcal {H}_{a})=x+A^{+}C_{a}b_{a}\),

This expression shows the positive impact of testing. Without testing, one would produce \(\hat{x}_{0}\), even when \(\mathcal {H}_{a}\) would be true, thus giving the influential bias \(A^{+}C_{a}b_{a}\) (cf. 9). However, with testing included, the second term in (48) is present as a correction for the bias of \(\hat{x}_{0}\) under \(\mathcal {H}_{a}\). The extent to which the bias will be corrected for depends very much on the distribution of the misclosure vector t. In the limit, when \(f_{t}(t|\mathcal {H}_{a})\) is sufficiently peaked at the testable bias \(B^{T}C_{a}b_{a}\) (cf. Theorem 2), the second term in (48) reduces to \(A^{+}C_{a}b_{a}\), thereby removing all the bias from \(\hat{x}_{0}\) under \(\mathcal {H}_{a}\). A summary of the means of \(\hat{x}_{0}\), \(\bar{x}\) and \(\hat{x}_{a}\) is given in Table 1.

Example 6

(Bias in \(\bar{x}\)) Let \(\mathcal {H}_{0}\) have a single redundancy (\(r=1\)) and let there be only a single alternative \(\mathcal {H}_{a}\) (\(k=1\)). Then, (45) simplifies to

For the partitioning, we have: \(\mathcal {P}_{0}\) being an origin-centred interval and \(\mathcal {P}_{a}=\mathbb {R}/\mathcal {P}_{0}\). The size of \(\mathcal {P}_{0}\) is determined through the level of significance \(\alpha \) as \(\mathsf {P}(t \in \mathcal {P}_{0}|\mathcal {H}_{0})=1-\alpha \). In the absence of testing (i.e. \(\mathcal {P}_{0}=\mathbb {R}\)), the bias would be \(\bar{b}_{a}=b_{a}\). In the presence of testing however, we have \(\bar{b}_{a} \le b_{a}\) for every value of \(b_{a}>0\), thus showing the benefit of testing: the bias that remains after testing is always smaller than the original bias. This benefit, i.e. the difference between \(\bar{b}_{a}\) and \(b_{a}\), will kick in after the bias \(b_{a}\) has become large enough. The difference is small, when \(b_{a}\) is small, and it gets larger for larger \(b_{a}\), with \(\bar{b}_{a}\) approaching zero again in the limit. For smaller levels of significance \(\alpha \), the difference between \(\bar{b}_{a}\) and \(b_{a}\) will stay small for a larger range of \(b_{a}\) values. This is understandable as a smaller \(\alpha \) corresponds with a larger acceptance interval \(\mathcal {P}_{0}\), as a consequence of which one would have for a larger range of \(b_{a}\) values an outcome of testing that does not differ from the no-testing scenario.\(\square \)

One can also compare the weighted mean squared errors of the three estimators \(\hat{x}_{0}\), \(\bar{x}\) and \(\hat{x}_{a}\) under \(\mathcal {H}_{a}\). By making use of the fact that \(\mathsf {E}(||u||_{Q}^{2})=\mathrm{trace}(Q^{-1}Q_{uu})+\mu ^{T}Q^{-1}\mu \) if \(\mathsf {E}(u)=\mu \) and \(\mathsf {D}(u)=Q_{uu}\) (Koch 1999), we have the following result.

Corollary 2

(Mean squared error) Let \(b_{\hat{x}_{0}}=A^{+}b_{y}\) and \(b_{\bar{x}}=A^{+}\bar{b}_{y}\) be the bias in \(\hat{x}_{0}\) and \(\bar{x}\), respectively, and \(Q_{\hat{x}_{a}\hat{x}_{a}}= Q_{\hat{x}_{0}\hat{x}_{0}}+L_{a}Q_{tt}L_{a}^{T}\) the variance matrix of \(\hat{x}_{a}\). Then

with \(||.||_{F}^{2}=\mathrm{trace}(.)^{T}(.)\) denoting the squared Frobenius norm.

Note that for \(C_{a}=c_{a}\),

3.3 Bias in BLUP, Kriging and collocation

We now make a brief side step and show that also methods of prediction, like collocation, Kriging and best linear unbiased prediction (BLUP), are affected by the bias \(\bar{b}_{y_{a}}\) of Theorem 2. Let our prediction–aim be to predict the random vector \(z=A_{z}x+e_{z}\), in which \(e_{z}\) is zero mean and matrices \(A_{z}\), \(Q_{zy}=\mathsf {Cov}(z,y)\) and \(Q_{zz}=\mathsf {Cov}(z,z)=\mathsf {D}(z)\) are known. Then, we may extend our \(\mathcal {H}_{i}\)-model (3) as

With the \(\mathcal {H}_{i}\)-BLUEs \(\hat{x}_{i}\) and \(\hat{b}_{i}\), the BLUP of z under \(\mathcal {H}_{i}\) is then given as

But with the DIA method in place to validate the different hypotheses, the actual output of the combined estimation–testing–prediction process is then given as

As the aim is to predict \(z=A_{z}x+e_{z}\), it is of interest to know the difference \(\mathsf {E}(\bar{z}-A_{z}x|\mathcal {H}_{a})\). The following result shows how the bias in \(\bar{z}\) can be expressed in the vector \(\bar{b}_{y_{a}}\) of (45).

Corollary 3

(Bias in DIA predictor) The bias in the DIA predictor \(\bar{z}=\sum _{i=0}^{k}\hat{z}_{i}p_{i}(t)\) is given as

with \(P_{A}^{\perp }=I_{m}-AA^{+}\).

4 Decomposition of probabilities

4.1 Correct and incorrect decisions

The decisions in the testing procedure are driven by the outcome of the misclosure vector t, i.e. choose \(\mathcal {H}_{i}\) if \(t \in \mathcal {P}_{i}\). Such decision is correct if \(\mathcal {H}_{i}\) is true, and it is incorrect otherwise. The probabilities of such occurrences can be put into a probability matrix:

As we are working with a partitioning of misclosure space, each of the columns of this matrix sums up to one, \(\sum _{i=0}^{k} P_{ij} = 1\), \(\forall j\). Ideally one would like this matrix to be as diagonally dominant as possible, i.e. \(P_{ij}\) large for \(i=j\) (correct decision) and small for \(i \ne j\) (wrong decision). By making use of the translational property of the PDF of t under \(\mathcal {H}_{j}\) and \(\mathcal {H}_{0}\) (cf. 8), the entries of the probability matrix can be computed as

Instead of addressing all entries of this probability matrix, we concentrate attention to those of the first column, i.e. of the null hypothesis \(\mathcal {H}_{0}\), and of an arbitrary second column \(a \in \{1, \ldots ,k\}\), i.e. of alternative hypothesis \(\mathcal {H}_{a}\). We then discriminate between two sets of events, namely under \(\mathcal {H}_{0}\) and under \(\mathcal {H}_{a}\). Under \(\mathcal {H}_{0}\) we consider,

Their probabilities are given as

These probabilities are usually user defined, for instance by setting the probability of false alarm (i.e. level of significance) to a certain required small value \(\mathsf {P}_\mathrm{FA}=\alpha \). In Example 1, this would, for instance, mean choosing \(\tau ^{2}\) as the \((1-\alpha )\)-percentile point of the central Chi-square distribution with r degrees of freedom, \(\tau ^{2} = \chi ^{2}_{\alpha }(r,0)\).

For the events under \(\mathcal {H}_{a}\), we make a distinction between detection and identification. For detection, we have

with their probabilities given as

Although we refrained, for notational convenience, to give these probabilities an additional identifying index a, it should be understood that they differ from alternative to alternative. For each such \(\mathcal {H}_{a}\), the probability of missed detection can be evaluated as

Note, as \(b_{a}\) is unknown, that this requires assuming the likely range of values \(b_{a}\) can take.

With identification following detection, we have

with their probabilities given as

Note, as the three probabilities of missed detection, wrong identification and correct identification sum up to unity,

that a small missed detection probability does not necessarily imply a large probability of correct identification. This would only be the case if there would be a single alternative hypothesis \((k=1)\). In that case, the probability of wrong identification is identically zero, \(\mathsf {P}_\mathrm{WI}=0\), and we have \(\mathsf {P}_\mathrm{CI}=1-\mathsf {P}_\mathrm{MD}\). In the general case of multiple hypotheses \((k>1)\), the available probability of correct detection is spread out over all alternative hypotheses, whether correct or wrong, thus diminishing the probability of correct identification. It is up to the designer of the testing system to ensure that \(\mathsf {P}_\mathrm{CI}\) remains large enough for the application at hand. We have more to say about \(\mathsf {P}_\mathrm{CI} \ne 1-\mathsf {P}_\mathrm{MD}\) when discussing the concept of minimal detectable biases in Sect. 4.4.

4.2 The bias decomposition of \(\bar{x}\)

We will now use the events of missed detection (MD), correct detection (CD), wrong identification (WI) and correct identification (CI), to further study the mean of \(\bar{x}\). We have already shown that the DIA estimator is biased under \(\mathcal {H}_{a}\) (cf. 44). To study how this bias gets distributed over the different events of testing, we define

By application of the total probability rule for expectation, \(\mathsf {E}(\bar{x}) = \sum _{j=0}^{k} \mathsf {E}(\bar{x}|t \in \mathcal {P}_{j})P(t \in \mathcal {P}_{j})\), we may write the unconditional bias \(b_{\bar{x}}\) as the ‘weighted average’,

This expression shows how the unconditional bias is formed from its conditional counterparts. These conditional biases of the DIA estimator are given in the following theorem.

Theorem 3

(Conditional biases) The conditional counterparts of the unconditional bias \(b_{\bar{x}}=A^{+}[C_{a}b_{a}-\sum _{i=1}^{k}C_{i}\beta _{i}(b_{a})]\) are given as

Proof

See Appendix. \(\square \)

The above result shows that the DIA estimator \(\bar{x}\) is not only unconditionally biased under \(\mathcal {H}_{a}\), \(b_{\bar{x}}\ne 0\), but even also when it is conditioned on correct identification, \(b_{\bar{x}|\mathrm CI} \ne 0\). Thus if one would repeat the measurement–estimation–testing experiment of the DIA estimator a sufficient number of times under \(\mathcal {H}_{a}\) being true and one would then be only collecting the correctly adapted outcomes of \(\bar{x}\), then still their expectation would not coincide with x. This fundamental result puts the often argued importance of unbiasedness (e.g. in BLUEs) in a somewhat different light.

Note that in case of datasnooping, when the vectors \(C_{i}=c_{i}\) take the form of canonical unit vectors, the conditional bias under correct identification is given as

while the corresponding unconditional bias is given as

Comparison of these two expressions shows that where in the CI case the bias contribution to \(b_{\bar{x}|\mathrm{CI}}\) stays confined to the correctly identified ath observation, this is not true anymore for the bias contribution in the unconditional case. In the unconditional case, the bias in \(\bar{x}\) also receives contributions from the entries of \(\bar{b}_{y_{a}}\) other than its ath, i.e. instead of \(\bar{b}_{y_{a}|\mathrm{CI}}\), which only has its ath entry being nonzero, the vector \(\bar{b}_{y_{a}}\) has next to its ath entry also its other entries being filled up with nonzero values.

4.3 The PDF decomposition of \(\bar{x}\) under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\)

Similar to the above bias decomposition, we can decompose the unconditional PDF of \(\bar{x}\) into its conditional constituents under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\). We have the following result.

Theorem 4

(PDF decomposition) The PDF of \(\bar{x}\) can be decomposed under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\) as,

with \(f_{\bar{x}|\mathrm CA}(x|\mathrm{CA}) \!=\! f_{\hat{x}_{0}}(x|\mathcal {H}_{0})\), \(f_{\bar{x}|\mathrm MD}(x|\mathrm{MD}) \!=\! f_{\hat{x}_{0}}(x|\mathcal {H}_{a})\), and where

with

Proof

See Appendix. \(\square \)

The above decomposition can be used to evaluate the performance of the DIA estimator for the various different occurrences of the testing outcomes. Note that the PDF of \(\bar{x}\), when conditioned on correct acceptance (CA) or missed detection (MD), is simply that of \(\hat{x}_{0}\) under \(\mathcal {H}_{0}\) and \(\mathcal {H}_{a}\), respectively. Such simplification does, however, not occur for the PDF when conditioned on correct identification (CI cf. 73). This is due to the fact that \(\hat{x}_{a}\), as opposed to \(\hat{x}_{0}\), is not independent from the misclosure vector t. Thus

Also note, since \(\mathcal {H}_{a}\) depends on the unknown bias \(b_{a}\), that one needs to make assumptions on its range of values when evaluating \(f_{\bar{x}}(x|\mathcal {H}_{a})\). One such approach is based on the well-known concept of minimal detectable biases (Baarda 1968a; Teunissen 1998a; Salzmann 1991).

4.4 On the minimal detectable bias

The minimal detectable bias (MDB) of an alternative hypothesis \(\mathcal {H}_{a}\) is defined as the (in absolute value) smallest bias that leads to rejection of \(\mathcal {H}_{0}\) for a given CD probability. It can be computed by ‘inverting’

for a certain CD probability, say \(\mathsf {P}_\mathrm{CD}=\gamma _\mathrm{CD}\). For the \(\mathcal {P}_{0}\) of example 1 (cf. 27), with \(\tau ^{2}=\chi _{\alpha }^{2}(r,0)\), it can be computed from ‘inverting’

using the fact that under \(\mathcal {H}_{a}\), \(||t||_{Q_{tt}}^{2} \sim \chi ^{2}(r, \lambda _{t}^{2})\), with \(\lambda _{t}^{2}= ||P_{A}^{\perp }C_{a}b_{a}||_{Q_{yy}}^{2}\) (Arnold 1981; Koch 1999). For the case \(C_{a}=c_{a}\), inversion leads then to the well-known MDB expression (Baarda 1968a; Teunissen 2000)

in which \(\lambda _{t}(\alpha , \gamma _\mathrm{CD}, r)\) denotes the function that captures the dependency on the chosen false-alarm probability, \(\mathsf {P}_\mathrm{FA}=\alpha \), the chosen correct detection probability, \(\mathsf {P}_\mathrm{CD}=\gamma _\mathrm{CD}\), and the model redundancy r. For the higher-dimensional case when \(b_{a}\) is a vector instead of a scalar, a similar expression can be obtained, see Teunissen (2000).

The importance of the MDB concept is that it expresses the sensitivity of the detection step of the DIA method in terms of minimal bias sizes of the respective hypotheses. By using the same \(\mathsf {P}_\mathrm{CD}=1-\mathsf {P}_\mathrm{MD}=\gamma _\mathrm{CD}\) for all \(\mathcal {H}_{a}\), the MDBs can be compared and provide information on the sensitivity of rejecting the null hypothesis for \(\mathcal {H}_{a}\)-biases the size of their MDBs. For instance, in case of datasnooping of a surveyor’s trilateration network, the MDBs and their mutual comparison would reveal for which observed distance in the trilateration network the rejection of \(\mathcal {H}_{0}\) would be least sensitive (namely distance with largest MDB), as well as how large its distance bias needs to be to have rejection occur with probability \(\mathsf {P}_\mathrm{CD}=\gamma _\mathrm{CD}\).

It is important to understand, however, that the MDB is about correct detection and not about correct identification. This would only be true in the binary case, when next to the null hypothesis only a single alternative hypothesis is considered. For identification in the multiple hypotheses case \((k>1)\), one can, however, pose a somewhat similar question as the one that led to the MDB: what is the smallest bias of an alternative hypothesis \(\mathcal {H}_{a}\) that leads to its identification for a given CI probability. Thus similar to (75), such minimal identifiable bias is found from ’inverting’

for a given CI probability. Compare this with (75) and note the difference.

Example 7

(Minimal Identifiable Bias) Let \(t \mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(c_{t_{a}}b_{a}, Q_{tt})\) and define \([\hat{b}_{a}^{T}, \bar{t}_{a}^{T}]^{T}=\mathcal {T}_{a}t\), using the Tienstra transformation \(\mathcal {T}_{a}=[c_{t_{a}}^{+ T}, d_{t_{a}}]^{T}\), in which \(c_{t_{a}}^{+ }\) is the BLUE-inverse of \(c_{t_{a}}\) and \(d_{t_{a}}\) is a basis matrix of the null space of \(c_{t_{a}}^{T}\). Then \(\bar{t}_{a}\) and \(w_{a}\) are independent and have the distributions \(\bar{t}_{a} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(0, Q_{\bar{t}_{a}\bar{t}_{a}})\) and \( w_{a} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(b_{a}/\sigma _{\hat{b}_{a}}, 1)\), with the decomposition \(||t-c_{t_{a}}b_{a}||_{Q_{tt}}^{2}=||\bar{t}_{a}||_{Q_{\bar{t}_{a}\bar{t}_{a}}}^{2}+(w_{a}-\frac{b_{a}}{\sigma _{\hat{b}_{a}}})^{2}\). As the identification regions, we take the ones of (38). For a given \(b_{a}\), the probability of correct identification can then be computed as

with the \(\mathcal {P}_{a}\)-defining constraints given as \(\tau ^{2}-w_{a}^{2} < ||\bar{t}_{a}||_{Q_{\bar{t}_{a}\bar{t}_{a}}}^{2} \le \bar{\tau }^{2}\) and \(|w_{a}| = \max _{j} |w_{j}|\). Reversely, one can compute, for a given CI probability, the minimal identifiable bias by solving \(b_{a}\) from \(P_\mathrm{CI}=\mathsf {P}(t \in \mathcal {P}_{a} | \mathcal {H}_{a})\). As a useful approximation of the CI probability from above, one may use

The first inequality follows from discarding the constraint \(|w_{a}| = \max _{j} |w_{j}|\), the second from discarding the curvature of \(\mathcal {P}_{0}\) and the fact that \(\bar{t}_{a}\) and \(w_{a}\) are independent. A too small value of the easy-to-compute upper bound (80) indicates then that identifiability of \(\mathcal {H}_{a}\) is problematic.\(\square \)

Since \(\mathsf {P}_\mathrm{CD} \ge \mathsf {P}_\mathrm{CI}\), one can expect the minimal identifiable bias to be larger than the MDB when \(\mathsf {P}_\mathrm{CI}=\gamma _\mathrm{CD}\). Correct identification is thus more difficult than correct detection. Their difference depends on the probability of wrongful identification. The smaller \(\mathsf {P}_\mathrm{WI}\) is, the closer \(\mathsf {P}_\mathrm{CI}\) gets to \(\mathsf {P}_\mathrm{CD}\).

We note that in the simulation studies of Koch (2016, 2017) discrepancies were reported between the MDB and simulated values. Such discrepancies could perhaps have been the consequence of not taking the difference between the MDB and the minimal identifiable bias into account.

As the ‘inversion’ of (78) is more difficult than that of (75), one may also consider the ’forward’ computation. For instance, if all alternative hypotheses are of the same type (e.g. distance outliers in a trilateration network), then (78) could be used to compare the \(\mathsf {P}_\mathrm{CI}\)’s of the different hypotheses for the same size of bias. Alternatively, if one wants to infer the \(\mathsf {P}_\mathrm{CI}\) for hypotheses that all have the same correct detection probability \(\mathsf {P}_\mathrm{CD}=\gamma _\mathrm{CD}\), the easy-to-compute MDB can be used in the ’forward’ computation as

This is thus the probability of identifying a bias the size of the MDB. As the computation of (81) is based on the same \(\mathsf {P}_\mathrm{CD}\) for each \(\mathcal {H}_{a}\), it again makes comparison between the hypotheses possible. It allows for a ranking of the alternative hypotheses in terms of their identifiability under the same correct detection probability. Would the probability \(\mathsf {P}_\mathrm{CI,\mathrm{MDB}}\) then turn out to be too small for certain hypotheses (given the requirements of the application at hand), a designer of the measurement–estimation–testing system could then either try to improve the strength of the model (by improving the design matrix A and/or the variance matrix \(Q_{yy}\)) or decide to have the regions \(\mathcal {P}_{i \ne 0}\) of the poorly identifiable hypotheses added to the undecided region. In the latter case, one would allow such hypotheses to contribute to the rejection of \(\mathcal {H}_{0}\), but not to its adaptation.

4.5 On the computation of \(\mathsf {P}_\mathrm{CI}\)

Many of the relevant probabilities in this contribution are multivariate integrals over complex regions. They therefore need to be computed by means of numerical simulation, the principles of which are as follows: as a probability can always be written as an expectation and an expectation can be approximated by taking the average of a sufficient number of samples from the distribution, the random generation of such samples is used for the required approximation. Thus, the approximation of the probability of correct identification,

for \(b_{a}=b_{a, \mathrm MDB}\), is then given by

(cf. 81), with N the total number of samples and \(\tau _{i}\), \(i=1, \ldots , N\), being the samples drawn from \(f_{t}(\tau |\mathcal {H}_{a})=f_{t}(\tau -B^{T}C_{a}b_{a, \mathrm MDB}| \mathcal {H}_{0})\) and thus from the multivariate normal distribution \(\mathcal {N}(B^{T}C_{a}b_{a, \mathrm MDB}, Q_{tt})\). Whether or not the drawn sample \(\tau =\tau _{i}\) contributes to the average is regulated by the indicator function \(p_{a}(\tau )\) and thus the actual test. The approximation (83) constitutes the simplest of simulation-based numerical integration. For more advanced methods, see Robert and Casella (2013).

5 How to evaluate and use the DIA estimator?

5.1 Unconditional evaluation

The unconditional PDF \(f_{\bar{x}}(x)\) (cf. 39) contains all the necessary information about \(\bar{x}\). As the DIA estimator \(\bar{x}\) is an estimator of x, it is likely to be considered a good estimator if the probability of \(\bar{x}\) being close to x is sufficiently large. Although the quantification of such terms as ’close’ and ’sufficiently large’ is application dependent, we assume that a (convex) shape and size of an x-centred region \(\mathcal {B}_{x} \subset \mathbb {R}^{n}\), as well as the required probability \(1-\epsilon \) of the estimator residing in it, is given. Thus if \(\mathcal {H}\) would be the true hypothesis, the estimator \(\bar{x}\) would be considered an acceptable estimator of x if the inequality \(\mathsf {P}(\bar{x} \in \mathcal {B}_{x} | \mathcal {H}) \ge 1-\epsilon \), or

is satisfied for (very) small \(\epsilon \). With reference to safety-critical situations, for which occurrences of \(\bar{x}\) falling outside \(\mathcal {B}_{x}\) are considered hazardous, the probability (84) will be referred to as the hazardous probability. Using (33) and (39) of Theorem 1, we have the following result.

Corollary 4

(Hazardous probability) The hazardous probability can be computed as

with the PDF of \(\bar{x}\) given as

for which, when \(\mathcal {H}=\mathcal {H}_{a}\),

with \(\mu (\tau )= A^{+}(\mathsf {E}(y|\mathcal {H}_{0})+C_{a}b_{a}-C_{i}(B^{T}C_{i})^{+}\tau )\). To get the PDF under \(\mathcal {H}=\mathcal {H}_{0}\), set \(b_{a}=0\) in the above.

PDF \(f_{\bar{x}}(x|\mathcal {H}_{a})\) of estimator \(\bar{x}\) under \(\mathcal {H}_{a}\) (Example 8, \(\sigma _{\hat{x}_{0}}^{2}=0.5\), \(\sigma _{t}^{2}=2\)). Left \(b_{a}=1\) (\(b_{\hat{x}_{0}}=0.5)\), middle \(b_{a}=3\) (\(b_{\hat{x}_{0}}=1.5)\), right \(b_{a}=5\) (\(b_{\hat{x}_{0}}=2.5)\)

PDF \(f_{\bar{x}}(x|\mathcal {H}_{a})\) of DIA estimator \(\bar{x}\) under \(\mathcal {H}_{a}\) (Example 8, \(\sigma _{\hat{x}_{0}}^{2}=0.25\), \(\sigma _{t}^{2}=1\)). Left \(b_{a}=1\) (\(b_{\hat{x}_{0}}=0.5)\), middle \(b_{a}=3\) (\(b_{\hat{x}_{0}}=1.5)\), right \(b_{a}=5\) (\(b_{\hat{x}_{0}}=2.5)\)

By computing (85) for all \(\mathcal {H}_{i}\)s, one gets a complete picture of how the DIA estimator performs under each of the hypotheses. Such can then be used for various designing purposes, such as of finding a measurement–design (i.e. choice of A and \(Q_{yy}\)) and/or a testing–design (i.e. choice of \(\mathcal {P}_{i}\)’s and their partitioning) that realizes sufficiently small hazardous probabilities. One may then also take advantage of the difference between influential and testable biases, by testing less stringent for biases that are less influential. Under certain circumstances, one may even try to optimize the DIA estimator, by minimizing an (ordinary or weighted) average hazardous probability

as function of certain estimator defining properties, such as, for instance, the chosen partitioning of the misclosure space. In the event that one has information about the frequency of occurrence of the hypotheses, the weights \(\omega _{i}\) would then be given by these a priori probabilities.

Example 8

(Continuation of Example 5) It follows from (39) that in case of only one alternative hypothesis \((k=1)\), the PDF of \(\bar{x}\) under \(\mathcal {H}_{a}\) can be written as

Let \(\hat{x}_{0} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(b_{\hat{x}_{0}}=\frac{1}{2}b_{a}, \sigma _{\hat{x}_{0}}^{2}=0.5)\), \(t \mathop {\sim }\limits ^{\mathcal {H}_{0}} \mathcal {N}(b_{t}=b_{a}, \sigma _{t}^{2}=2)\) (thus \(n=r=1\)), and \(L_{1}=\frac{1}{2}\), with acceptance interval \(\mathcal {P}_{0} = \{ \tau \in \mathbb {R} | (\tau /\sigma _{t})^{2} \le \chi ^{2}_{\alpha }(1,0) \}\). Figure 7 shows this PDF for the three cases \(b_{a}=1, 3, 5\) using \(\alpha =0.1\) and \(\alpha =0.001\), while Fig. 8 shows these same three cases but now with twice improved variance. Note the positive effects that increasing \(\alpha \) (cf. Fig. 7) and improving precision (cf. Fig. 8) have on the estimator \(\bar{x}\). The larger \(\alpha \) gets, the more probability mass of the PDF of \(\bar{x}\) becomes centred again around the correct value 0, this of course at the expense of heavier tails in \(f_{\bar{x}}(x|\mathcal {H}_{0})\), see Fig. 6. For small values of \(\alpha \), thus when the acceptance interval of \(\mathcal {H}_{0}\) is large, the estimator \(\bar{x}\) is more biased as its PDF \(f_{\bar{x}}(x|\mathcal {H}_{a})\) becomes more centred around \(b_{\hat{x}_{0}}\). Also the effect of precision improvement is clearly visible, with ultimately, for \(b_{a}=5\), a PDF of \(\bar{x}\) that has most of its mass centred around 0 again.\(\square \)

To provide further insight in the factors that contribute to (85), we make use of the decomposition

The first term of the sum is relevant for detection, the second when identification is included as well. We first consider the detection-only case.

5.2 Detection only: precision and reliability

In the detection-only case, the solution \(\bar{x}\) is declared unavailable when the null hypothesis is rejected, i.e. when \(t \notin \mathcal {P}_{0}\). The probability of such outcome is under \(\mathcal {H}_{0}\), the false alarm \(\mathsf {P}(t \notin \mathcal {P}_{0}|\mathcal {H}_{0})=\mathsf {P}_\mathrm{FA}\), and under \(\mathcal {H}_{a}\), the probability of correct detection \(\mathsf {P}(t \notin \mathcal {P}_{0}|\mathcal {H}_{a})=\mathsf {P}_\mathrm{CD}\). The false alarm is often user controlled by setting the appropriate size of \(\mathcal {P}_{0}\). The probability of correct detection, however, depends on which \(\mathcal {H}_{a}\) is considered and on the size of its bias \(b_{a}\). We have \(\mathsf {P}_\mathrm{CD}=\mathsf {P}_\mathrm{FA}\) for \(b_{a}=0\), but \(\mathsf {P}_\mathrm{CD} > \mathsf {P}_\mathrm{FA}\) otherwise.

The estimator \(\bar{x}\) is computed as \(\hat{x}_{0}\) when the null hypothesis is accepted, i.e. when \(t \in \mathcal {P}_{0}\). Its hazardous probability is then given for \(\mathcal {H}=\mathcal {H}_{0}, \mathcal {H}_{a}\) as

where use has been made of the independence of \(\hat{x}_{0}\) and t. As \(\mathsf {P}_\mathrm{CA}\) is user fixed and \(\hat{x}_{0} \mathop {\sim }\limits ^{\mathcal {H}_{0}} \mathcal {N}(x, Q_{\hat{x}_{0}\hat{x}_{0}})\), the hazardous probability under \(\mathcal {H}=\mathcal {H}_{0}\) is completely driven by the variance matrix \(Q_{\hat{x}_{0}\hat{x}_{0}}\) and thus by the precision of \(\hat{x}_{0}\). This is not the case under \(\mathcal {H}_{a}\), however, since the hazardous probability, also referred to as hazardous missed detection (HMD) probability, becomes then dependent on \(b_{a}\) as well,

Note, since \(\hat{x}_{0} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(x+b_{\hat{x}_{0}}, Q_{\hat{x}_{0}\hat{x}_{0}})\) and \(t\mathop {\sim }\limits ^{\mathcal {H}_{a}} \mathcal {N}(b_{t}, Q_{tt})\) (cf. 8), that the two probabilities in the product (92) are affected differently by \(b_{a}\). The probability \(\mathsf {P}(\hat{x}_{0} \notin \mathcal {B}_{x} | \mathcal {H}_{a})\) is driven by the influential bias \(b_{\hat{x}_{0}}=A^{+}C_{a}b_{a}\), while the missed detection probability is driven by the testable bias \(b_{t}=B^{T}C_{a}b_{a}\) (cf. 9).

To compute and evaluate the probability of hazardous missed detection \(\mathsf {P}_\mathrm{HMD}\), one can follow different routes. The first approach, due to Baarda (1967, 1968a), goes as follows. For each \(\mathcal {H}_{a}\), the MDB is computed from inverting (75). Then, each MDB is taken as reference value for \(b_{a}\) and propagated to obtain the corresponding \(b_{\hat{x}_{0}}\), thus allowing for the computation of \(\mathsf {P}_\mathrm{HMD}\) for each \(\mathcal {H}_{a}\). The set of MDBs is said to describe the internal reliability, while their propagation into the parameters is said to describe the external reliability (Baarda 1968a; Teunissen 2000). The computations simplify, if one is only interested in \(\mathsf {P}_\mathrm{HMD}\), while \(\mathcal {B}_{x}\) and \(\mathcal {P}_{0}\) are defined as \(\mathcal {B}_{x} = \{ u \in \mathbb {R}^{n} |\; ||u-x||_{Q_{\hat{x}_{0}\hat{x}_{0}}}^{2} \le d^{2} \}\) and \(\mathcal {P}_{0} = \{ t \in \mathbb {R}^{r} |\; ||t||_{Q_{tt}}^{2} \le \chi _{\alpha }^{2}(r,0)\}\). Then, since \(||\hat{x}_{0}-x||_{Q_{\hat{x}_{0}\hat{x}_{0}}}^{2} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \chi ^{2}(n, \lambda _{\hat{x}_{0}}^{2})\) and \(||t||_{Q_{tt}}^{2} \mathop {\sim }\limits ^{\mathcal {H}_{a}} \chi ^{2}(r, \lambda _{t}^{2})\), one can make a direct use of the Pythagorian relation (see Fig. 1) and use

to compute the hazardous missed detection probability as \(\mathsf {P}_\mathrm{HMD}=\mathsf {P}(\chi ^{2}(n, \lambda _{\hat{x}_{0}}^{2}) > d^{2})\times (1- \gamma _\mathrm{CD})\) for each \(\mathcal {H}_{a}\). By comparing the \(\mathsf {P}_\mathrm{HMD}\)s between hypotheses, one would know which of the \(\mathcal {H}_{a}\)s would be the most hazardous to miss detection of and what its hazardous probability would be for a given \(\mathsf {P}_\mathrm{MD}\).

An alternative, more conservative approach would be to directly evaluate (92) as function of the bias \(b_{a}\). As \(\mathsf {P}_\mathrm{MD}\) gets smaller, but \(\mathsf {P}(\hat{x}_{0} \notin \mathcal {B}_{x} | \mathcal {H}_{a})\) larger, for larger biases, the probability (92) will have a maximum for a certain bias. With this approach, one can thus evaluate whether the ’worst-case’ scenario \(\max _{b_{a}} \mathsf {P}_\mathrm{HMD}(b_{a})\) for each of the hypotheses still satisfies ones criterion (Ober 2003; Teunissen 2017).

As the above computations can be done without the need of having the actual measurements available, they are very useful for design verification purposes. Starting from a certain assumed design or measurement setup as described by A and \(Q_{yy}\), one can then infer how well the design can be expected to protect against biases in the event that one of the alternative hypotheses is true.

5.3 Detection and identification

The above analysis is not enough when next to detection, identification is involved as well. With identification included one eliminates (or reduces) the unavailability, but this goes at the expense of an increased hazardous probability. That is, now also the second term in the sum of (90) needs to be accounted for. This extra contribution to the hazardous probability is given for \(\mathcal {H}=\mathcal {H}_{0}, \mathcal {H}_{a}\) as

Thus in order to limit the increase in hazardous probability, the design–aim should be to keep the hazardous probability under correct identification, \(\mathsf {P}(\hat{x}_{a} \notin \mathcal {B}_{x} | \mathrm{CI})\), small, as well as the probability of correct identification, \(\mathsf {P}_\mathrm{CI}\), close to that of correct detection, \(\mathsf {P}_\mathrm{CD}\), thus giving a small probability of wrong identification, \(\mathsf {P}_\mathrm{WI}\).

Note that the computation of (94) is more complex than that of (91), since it involves all \(\hat{x}_{i \ne 0}\)’s as well. Under \(\mathcal {H}_{0}\), one can get rid of this dependency, when one is willing to work with the, usually small, upper bound \(\mathsf {P}_\mathrm{FA}\). In that case, by adding (94) to (91), the unconditional hazardous probability under \(\mathcal {H}_{0}\) can be bounded from above as

With this upper bound, the computational complexity is the same as that of the detection-only case. The situation becomes a bit more complicated under \(\mathcal {H}_{a}\) however. One can then still get rid of a large part of the complexity if one is willing to use the upper bound \(\mathsf {P}(\bar{x} \notin \mathcal {B}_{x} | \mathrm{WI})\mathsf {P}_\mathrm{WI} \le \mathsf {P}_\mathrm{WI} = 1-\mathsf {P}_\mathrm{CI}-\mathsf {P}_\mathrm{MD}\). In that case the unconditional hazardous probability can be bounded from above as

But to assure identification, the computation remains of course still more complex than that of the detection-only case. As the upper bound depends on \(b_{a}\), its computation can be done (using e.g. the MDB) in a similar way as discussed for (92).

Note that the upper bound of (96) can be sharpened by using

in which \(\mathcal {I}\) denotes the set of hypothesis identifiers for which the probabilities \(P_{ia}=\mathsf {P}(t \in \mathcal {P}_{i}|\mathcal {H}_{a})\) are considered significant.

5.4 Conditional evaluation

In practice, the outcome produced by an estimation–testing scheme is often not the end product but just an intermediate step of a whole processing chain. Such follow-on processing, for which the outcome of the DIA estimator \(\bar{x}\) is used as input, then also requires the associated quality description. This is not difficult in principle and only requires the proper forward propagation of the unconditional PDF of \(\bar{x}\). This is, however, not how it is done in practice. In practice, often two approximations are made when using the DIA estimator. The first approximation is that \(\bar{x}\) is not evaluated unconditionally, but rather conditionally on the outcome of testing. Thus instead of working with the PDF of \(\bar{x}\), one works with the PDF of \((\bar{x}|t \in \mathcal {P}_{i})\), i.e. the one conditioned on the outcome of testing, \(t \in \mathcal {P}_{i}\). The second approximation is that one then neglects this conditioning and uses the unconditional PDF of \(\hat{x}_{i}\) instead for the evaluation. But the fact that the random vector \((\bar{x}|t \in \mathcal {P}_{i})\) has the outcome \(\hat{x}_{i}\) does not mean that the two random vectors \((\bar{x}|t \in \mathcal {P}_{i})\) and \(\hat{x}_{i}\) have the same distribution. This would only be the case if \(\hat{x}_{i}\) and t are independent, which is true for \(\hat{x}_{0}\) and t, but not for \(\hat{x}_{i \ne 0}\) and t.

From a practical point of view, of course, it would be easiest if indeed it would suffice to work with the relatively simple normal PDFs of \(\hat{x}_{i}\). In all subsequent processing, one could then work with the PDF of \(\hat{x}_{0}\), \(\mathcal {N}(x, Q_{\hat{x}_{0}, \hat{x}_{0}})\), if the null hypothesis gets accepted, and with the PDF of \(\hat{x}_{a}\), \(\mathcal {N}(x, Q_{\hat{x}_{a}, \hat{x}_{a}})\), if the corresponding alternative hypothesis gets identified. To show what approximations are involved when doing so, we start with the case the null hypothesis gets accepted.

We then have, as \(\hat{x}_{0}\) and t are independent,

Thus in this case, it is correct to use the PDF \(f_{\hat{x}_{0}}(x)\) to describe the quality of the conditional random vector \((\bar{x}|t \in \mathcal {P}_{0})\). This PDF may thus then indeed be used in the computation of

However, since in contrast to (98),

it is not correct to use the PDF \(f_{\hat{x}_{a}}(x)\) for describing the uncertainty when \(t \in \mathcal {P}_{a}\). It depends then on the difference between the two PDFs, \(f_{\hat{x}_{a}}(x)\) and \(f_{\hat{x}_{a}|t \in \mathcal {P}_{a}}(x|t \in \mathcal {P}_{a})\), whether the approximation made is acceptable. The relation between the two PDFs is given in the following.

Corollary 5

The PDF of \(\hat{x}_{a}\) can be expressed in that of \(\hat{x}_{a}|t \in \mathcal {P}_{a}\) as

in which

and \(R(x)=\sum _{i=0, \ne a}^{k}f_{\hat{x}_{a}|t \in \mathcal {P}_{i}}(x|t \in \mathcal {P}_{i})\mathsf {P}(t \in \mathcal {P}_{i})\).

Proof

Follows from an application of the PDF total probability rule and (39). \(\square \)