Abstract

This paper investigates a dynamic model of strategic communication between a principal and an expert with conflicting preferences. In each period, the uninformed principal selects an experiment which privately reveals information about an unknown state to the expert. The expert then sends a cheap talk message to the principal. We show that the principal can elicit perfect information from the expert about the state and achieve the first-best outcome in only two periods if the expert’s preference bias is not too large. If the state space is unbounded, full information revelation is possible for an arbitrarily large bias. Moreover, full revelation of information is feasible in more general frameworks than those considered in the literature, including frameworks with non-quasiconcave and non-supermodular payoff functions and those in which the expert’s bias is her private information.


Notes

A single-stage protocol of learning information cannot implement the first-best outcome (Ivanov 2010a).

All or some of these assumptions are used in various communication models, e.g., communication with multiple experts (Krishna and Morgan 2001a, b; Battaglini 2002), delegation (Dessein 2002; Alonso and Matouschek 2008; Kovác and Mylovanov 2009), mediated communication (Goltsman et al. 2009), dynamic communication (Krishna and Morgan 2004; Golosov et al. 2014), contracting for information (Krishna and Morgan 2008), and communication via a noisy channel (Blume et al. 2007). Also, Morgan and Stocken (2003) and Li and Madaràsz (2008) show that an outcome of communication can change drastically if the expert’s bias is her private information.

Ambrus and Lu (2013) apply discontinuous punishment of the expert, via the principal’s actions, for local distortions of information in the model of communication with multiple experts. As a result, the principal can elicit almost full information from the experts.

Roberts (2004) provides a clear example of manipulating such information by auto mechanics. In his words, “Sears Roebuck in 1992 sought to motivate the mechanics in its auto repair business by setting targets for the amount of work they did. The mechanics responded by telling customers that they needed steering and suspension repairs that were in fact unnecessary. Customers could not easily verify the need for the repairs themselves, and many paid for the unnecessary work. When the fraud was uncovered, Sears not only paid large fines, it lost much of the precious trust it had once enjoyed among its customers.”

If a single testing procedure must be applied to multiple independent variables, then obtaining information about all variables is costly for the principal if, e.g., the number of tests that can be performed is capacity-constrained. For instance, evaluating problems with cars covered by the manufacturer’s warranty is delegated to car dealers, who then submit reports and bills to the car producer. In this case, designing the standards on testing procedures for estimating car conditions and auditing the dealers is less costly for the manufacturer than testing cars directly.

Klein and Mylovanov (2011) investigate the conflict between the expert’s reputational concerns about appearing competent and the incentives to report information truthfully in the dynamic environment in which the expert can accumulate information about her competence over time.

Thus, compared to the CS setup, our model does not require: (1) the concavity of \(U\left( a,\theta ,b\right) \) in \(a\) for each \(\theta \); (2) the supermodularity of \(U\left( a,\theta ,b\right) \) in \(\left( a,\theta \right) \ \)or, equivalently, the monotonicity of \(a^{*}\left( \theta ,b\right) \) in \(\theta \); and (3) the single-sided bias \(y\left( \theta ,b\right) \ne a\left( \theta \right) \) for all \(b\ \)and \(\theta \).

For simplicity, we restrict attention to pure strategies of the principal as functions of messages \(\left\{ m_{1},m_{2}\right\} \).

All messages \(m_{t} \notin \mathcal {M},t=1,2\) are interpreted by the principal as some \(m_{t} ^{0}\in \mathcal {M}\).

The uniform-quadratic setup is known for its tractability in various modifications of the basic CS model. See, for example, Blume et al. (2007), Goltsman et al. (2009), Krishna and Morgan (2001a, b, 2004, 2008), Melumad and Shibano (1991), Ottaviani and Squintani (2006), and Esö and Szalay (2010).

In this light, it is important to note that the efficiency of the interaction relies on the expert being unable to obtain outside information. Otherwise, the expert could run the first test to find out the two possible states and then privately eliminate one of them with an outside investigation. Having discovered the true state, she could then mislead the principal.

The simplest non-trivial learning protocol is the protocol with the smallest number of \(Q_{k}\) and \(\Theta _{j}^{k}\) in which the expert acquires private information in the first period (that is, it contains at least two families \(Q_{k}\)) and can update her information in the second period (i.e., each \(Q_{k}\) contains at least two subsets).

To the best of our knowledge, these schemes are restricted delegation with monetary transfers for reported information (Krishna and Morgan 2008) and restricted delegation to an imperfectly informed expert (Ivanov 2010a). They give the principal ex-ante payoffs of approximately \(-\frac{1}{38}\) and \(-\frac{1}{98}\), respectively.

The principal’s ex-ante payoff in the most informative equilibrium is equal to the average residual variance across sub-intervals \(\Theta _{j}^{k}\). Suppose that the total number of sub-intervals in the learning protocol is \(N\) and all sub-intervals are of the equal length \(\Delta =\frac{1}{N}\). Since the density function can be approximated by the piecewise constant density on each sub-interval for large \(N\), this gives \(EU\simeq -\frac{\Delta ^{2}}{12} =-\frac{1}{12N^{2}}\). Setting \(EU=-\varepsilon \), where \(\varepsilon >0\) is sufficiently small, determines the necessary number of sub-intervals.
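The approximation \(EU\simeq -\frac{1}{12N^{2}}\) and the resulting choice of \(N\) can be checked numerically. The sketch below assumes a uniform prior on \([0,1]\), for which the piecewise-constant approximation is exact, and quadratic loss; the function names and the tolerance \(\varepsilon =10^{-4}\) are illustrative assumptions, not part of the model.

```python
import math
import random


def residual_loss(n_intervals: int) -> float:
    """Ex-ante payoff -E[(theta - E[theta | sub-interval])^2] for a uniform theta on [0, 1]
    split into n_intervals equal pieces: each piece has variance Delta^2 / 12."""
    delta = 1.0 / n_intervals
    return -delta ** 2 / 12.0


def required_intervals(eps: float) -> int:
    """Smallest N with 1 / (12 N^2) <= eps, i.e. N >= 1 / sqrt(12 eps)."""
    return math.ceil(1.0 / math.sqrt(12.0 * eps))


def monte_carlo_loss(n_intervals: int, draws: int = 200_000, seed: int = 0) -> float:
    """Simulate the same payoff: draw theta and take the midpoint of its sub-interval,
    which is the principal's optimal action under quadratic loss and a uniform prior."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        theta = rng.random()
        k = min(int(theta * n_intervals), n_intervals - 1)  # index of the sub-interval
        midpoint = (k + 0.5) / n_intervals
        total += (theta - midpoint) ** 2
    return -total / draws


eps = 1e-4
n = required_intervals(eps)
print(n, residual_loss(n), monte_carlo_loss(n))
```

With \(\varepsilon =10^{-4}\) this gives \(N=29\), since \(\frac{1}{12\cdot 28^{2}}>10^{-4}\ge \frac{1}{12\cdot 29^{2}}\).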

The derivation of the formulas for \(p_{s}\) and \(p_{s}^{c}\) is in the Appendix.

The effect of the binary first-period information structure on the expert’s uncertainty is clearly seen in the case of the risk-averse expert, i.e., if \(V\left( a,\theta ,b\right) \) is concave in \(a\) for all \(\left( \theta ,b\right) \). For a fixed interval \(\left[ \theta _{1},\theta _{2}\right] \), consider all distributions of a random variable \(X\) with the support \(L\subset \left[ \theta _{1},\theta _{2}\right] \) and a given mean value \(E\left[ X\right] \in \left[ \theta _{1},\theta _{2}\right] \). In this class, the binary distribution on \(\left\{ \theta _{1},\theta _{2}\right\} \) with the probabilities \(p_{\theta _{1}}=\Pr \left\{ X=\theta _{1}\right\} =\frac{\theta _{2}-E\left[ X\right] }{\theta _{2}-\theta _{1}}\) and \(p_{\theta _{2}}=1-p_{\theta _{1}}\), respectively, dominates any other distribution by the convex order. The proof follows from (3.A.8) in Shaked and Shanthikumar (2007). In our model, this means that the first-period information structure with binary distributions of posterior values of \(\theta \) maximizes the expert’s uncertainty. This increases her benefits from learning \(\theta \) in the second period and motivates her to reveal her first-period signal.
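The convex-order claim can be spot-checked numerically. The sketch below fixes \(\left[ \theta _{1},\theta _{2}\right] =\left[ 0,1\right] \) and \(E\left[ X\right] =0.3\) (illustrative assumptions), draws random two-point laws with the same mean and support in the interval, and confirms that none of them gives a concave function a lower expectation than the endpoint law does. This is a numerical check under stated assumptions, not a proof; all function names are hypothetical.

```python
import math
import random


def binary_endpoint_dist(t1, t2, mean):
    """Two-point law on {t1, t2} with the given mean: Pr{X = t1} = (t2 - mean) / (t2 - t1)."""
    p1 = (t2 - mean) / (t2 - t1)
    return [(t1, p1), (t2, 1.0 - p1)]


def two_point_dist(x, y, mean):
    """Two-point law on {x, y}, with x <= mean <= y and x < y, having the given mean."""
    p = (y - mean) / (y - x)
    return [(x, p), (y, 1.0 - p)]


def expect(g, dist):
    return sum(prob * g(value) for value, prob in dist)


def check_convex_order(g, t1=0.0, t2=1.0, mean=0.3, trials=10_000, seed=1):
    """True if no sampled two-point law with the same mean and support in [t1, t2]
    yields a lower E[g(X)] than the endpoint law (expected for concave g)."""
    rng = random.Random(seed)
    endpoint_value = expect(g, binary_endpoint_dist(t1, t2, mean))
    for _ in range(trials):
        x = rng.uniform(t1, mean)
        y = rng.uniform(mean, t2)
        if y - x < 1e-12:
            continue  # degenerate draw: both points coincide with the mean
        if expect(g, two_point_dist(x, y, mean)) < endpoint_value - 1e-12:
            return False
    return True


concave_examples = {
    "quadratic loss, action 0.45, bias 0.1": lambda t: -(0.45 - t - 0.1) ** 2,
    "square root": math.sqrt,
    "power loss, rho = 1.5": lambda t: -abs(t - 0.2) ** 1.5,
}
for name, g in concave_examples.items():
    print(name, check_convex_order(g))
```

For a convex function such as \(g\left( t\right) =t^{2}\), the inequality reverses and the check returns `False`, which is the other side of the same convex-order statement.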

The choice of \(m_{1}^{0}=0\) is not important. Equivalently, it can be replaced by any \(m_{1}^{\prime }\in [0,\bar{s})\).

The messages \(\left\{ m_{1},m_{2}\right\} \) that induce \(y=y_{1}\left( s,b\right) \) can be constructed as follows. Denote \(\mathcal {A}_{1}=\left\{ a_{p}\left( \theta \right) |\theta \in [0,\bar{s})\right\} \) and \(\mathcal {A}_{2}=\left\{ a_{p}\left( \theta \right) |\theta \in [\bar{s},1)\right\} \). Then, define \(m_{1}=m_{2}\in [0,\bar{s})\cap a_{p}^{-1}\left( y\right) \) if \(y\in \mathcal {A}_{1}, m_{1}=\varphi ^{-1}\left( m_{2}\right) ,m_{2} \in [\bar{s},1)\cap a_{p}^{-1}\left( y\right) \) if \(y\in \mathcal {A} _{2}\), and \(m_{1}=m_{2}=1\) if \(y=a_{p}\left( 1\right) \).

In the uniform-quadratic example above, the distance \(\left| s-s^{\prime }\right| \le \frac{1}{2}\) for \(s^{\prime }=\frac{1}{2}\). Hence, if \(b>\frac{1}{4}\), then the second-period incentive-compatibility constraints (1) are violated for some \(s\). In addition, \(\underset{s,\varphi \left( s\right) :\underset{s}{\cup }\left\{ s,\varphi \left( s\right) \right\} =\Theta }{\sup }E\left[ V\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] \ge -\frac{1}{16}>E\left[ V\left( a_{p}\left( \theta \right) ,\theta ,b\right) |s\right] =-b^{2}\), which means that the expert’s learning incentive constraints (9) are also violated.
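The threshold \(b=\frac{1}{4}\) can be reproduced directly. The sketch assumes, for illustration, that after the first period the expert faces the two states \(s\) and \(s+\frac{1}{2}\) with equal probability (as with a uniform prior and the pairing \(\varphi \left( s\right) =s+\frac{1}{2}\)), that \(V\left( a,\theta ,b\right) =-\left( a-\theta -b\right) ^{2}\), and that \(a_{p}\left( \theta \right) =\theta \). Function names are hypothetical.

```python
def quadratic_payoff(a, theta, b):
    """Uniform-quadratic expert payoff V(a, theta, b) = -(a - theta - b)^2."""
    return -(a - theta - b) ** 2


def second_period_ic(s, b, gap=0.5):
    """After learning theta = s, the expert weakly prefers inducing a_p(s) = s
    over inducing a_p(s + gap) = s + gap."""
    return quadratic_payoff(s, s, b) >= quadratic_payoff(s + gap, s, b)


def truthful_payoff(b):
    """Truthtelling in both periods induces a = theta, so the payoff is -b^2."""
    return -b ** 2


def deviation_payoff(s, b, gap=0.5, p=0.5):
    """Best interim action against the binary posterior {s, s + gap}: under quadratic
    loss it is E[theta | s] + b, and the payoff equals minus the posterior variance."""
    mean = p * s + (1 - p) * (s + gap)
    y1 = mean + b
    return p * quadratic_payoff(y1, s, b) + (1 - p) * quadratic_payoff(y1, s + gap, b)


for b in (0.2, 0.3):
    print(b, second_period_ic(0.1, b), truthful_payoff(b), deviation_payoff(0.1, b))
```

The deviation payoff equals \(-\frac{1}{4}\cdot \left( \frac{1}{2}\right) ^{2}=-\frac{1}{16}\) for every \(b\), so truthtelling (\(-b^{2}\)) is preferred exactly when \(b<\frac{1}{4}\), the same cutoff as in the second-period constraint.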

That is, there are no private benefits to the expert for simply being more biased.

Besides the quadratic payoff function with a constant bias, this class includes the generalized quadratic functions \(V\left( a,\theta ,b\right) =-\left( a-y\left( \theta ,b\right) \right) ^{2}\) used by Alonso and Matouschek (2008) and Kovác and Mylovanov (2009), and the symmetric functions \(V\left( a,\theta ,b\right) =V\big ( \left| a-(\theta +b)\right| \big ) \) exploited by Dessein (2002). Krishna and Morgan (2004) use a special case of the symmetric functions, \(V\left( a,\theta ,b\right) =-\left| a-(\theta +b)\right| ^{\rho }\).

Ambrus and Lu (2013) use this condition in the context of communication with multiple experts.

Consider the symmetric payoff function \(V\left( a,\theta ,b\right) =V\left( \left| a-\theta -\beta \left( b,\theta \right) \right| \right) \), where \(V^{\prime }\left( x\right) \le 0,V^{\prime \prime }\left( x\right) <0,x\ge 0\). Then, \(a_{p}\left( \theta \right) =\theta ,y\left( \theta ,b\right) =\theta +\beta \left( b,\theta \right) \), and \(y_{1}^{*}\left( \theta ,\theta +d,b\right) =\theta +\frac{d}{2}+\frac{\beta \left( b,\theta \right) +\beta \left( b,\theta +d\right) }{2}\). For a fixed \(b\), (A2) requires \(\beta \left( b,\theta \right) \le \delta \left( b\right) ,\forall \theta \). This implies \(V\left( y\left( \theta ,b\right) \mp \delta ,\theta ,b\right) =V\left( \delta \right) \ge V\left( \bar{\delta }\right) =V\left( y\left( \theta ,b\right) \pm \bar{\delta },\theta ,b\right) \) if \(\bar{\delta }\ge \delta \), so (A3) holds. Finally, \(y\left( \theta ,b\right) +\delta <y_{1}^{*}\left( \theta ,\theta +d,b\right) <y\left( \theta +d,b\right) -\delta \) if \(d>\delta \left( b\right) +2\delta \), so (A4) holds.
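The formula for \(y_{1}^{*}\left( \theta ,\theta +d,b\right) \) with equally likely states can be confirmed numerically. The sketch assumes the power specification \(V\left( x\right) =-x^{\rho }\) with \(\rho >1\) (the Krishna and Morgan case, strictly concave so the maximizer is unique) and hypothetical values for \(\theta \), \(d\), \(\beta \left( b,\theta \right) \), and \(\beta \left( b,\theta +d\right) \); it maximizes the interim objective by ternary search.

```python
def symmetric_payoff(a, theta, bias, rho=1.5):
    """V(a, theta, b) = -|a - theta - beta|^rho, symmetric around the ideal point theta + beta."""
    return -abs(a - theta - bias) ** rho


def interim_objective(a, theta, d, beta1, beta2, rho=1.5):
    """Expected payoff when the states theta and theta + d are equally likely;
    beta1 and beta2 stand for beta(b, theta) and beta(b, theta + d)."""
    return (0.5 * symmetric_payoff(a, theta, beta1, rho)
            + 0.5 * symmetric_payoff(a, theta + d, beta2, rho))


def ternary_argmax(f, lo, hi, iters=200):
    """Maximize a strictly concave function on [lo, hi] by ternary search."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1  # the maximizer lies to the right of m1
        else:
            hi = m2  # the maximizer lies to the left of m2
    return 0.5 * (lo + hi)


theta, d, beta1, beta2 = 0.2, 0.6, 0.05, 0.12  # hypothetical values
closed_form = theta + d / 2 + (beta1 + beta2) / 2
for rho in (1.5, 4.0):
    numeric = ternary_argmax(lambda a: interim_objective(a, theta, d, beta1, beta2, rho), -1.0, 2.0)
    print(rho, numeric, closed_form)
```

By symmetry of \(V\), the objective is symmetric around the midpoint of the two ideal points \(\theta +\beta \left( b,\theta \right) \) and \(\theta +d+\beta \left( b,\theta +d\right) \), which is why the optimum does not depend on \(\rho \).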

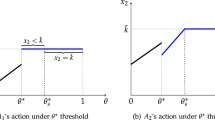

Denote by \(\Theta _{h_{t}}^{t}=\operatorname{supp}F_{t}\left( \theta |h_{t}\right) ,t=1,2,\) the support of the posterior distribution \(F_{t}\left( \theta |h_{t}\right) \) in period \(t\) given a learning protocol \(I_{1},I_{2}\left( m_{1},I_{1}\right) \) and the expert’s private histories \(h_{t}\), where \(h_{1}=\left\{ I_{1},s_{1}\right\} \) and \(h_{2}=\left\{ h_{1},I_{2},s_{2},m_{1}\right\} \). In the described class of learning protocols, the first-period information structure \(I_{1}\) is partitional, i.e., \(\Theta _{I_{1},s_{1}}^{1}\cap \Theta _{I_{1},s_{1}^{\prime }}^{1}=\varnothing \) for \(s_{1}\ne s_{1}^{\prime }\) and \(\underset{s_{1}\in \mathcal {S}}{\cup }\Theta _{I_{1},s_{1}}^{1}=\Theta \). Also, the second-period information structure \(I_{2}\left( m_{1},I_{1}\right) \) separates states only in the posterior distribution associated with the reported signal, i.e., \(\Theta _{h_{2}}^{2}=\left\{ s_{2}\right\} \), where \(s_{2}\in \Theta _{h_{1}}^{1}\), if \(m_{1}=s_{1}\), and \(\Theta _{h_{2}}^{2}=\Theta _{h_{1}}^{1}\) if \(m_{1}\ne s_{1}\).
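The structure of this class of protocols can be illustrated on a finite grid. The sketch below is a hypothetical discrete stand-in: eight equally spaced states, a first-period partition that pairs each state in the lower half with one in the upper half (playing the role of \(\varphi \)), and a second-period experiment that reveals \(\theta \) only when \(\theta \) lies in the cell of the reported signal \(m_{1}\). All names and the grid are assumptions made for illustration.

```python
from typing import Dict, FrozenSet, List, Optional


def first_period_partition(states: List[float]) -> Dict[float, FrozenSet[float]]:
    """Pair state k with state k + n/2; the signal s1 is the lower element of each cell."""
    if len(states) % 2 != 0:
        raise ValueError("need an even number of grid states")
    half = len(states) // 2
    return {states[k]: frozenset({states[k], states[k + half]}) for k in range(half)}


def first_signal(theta: float, partition: Dict[float, FrozenSet[float]]) -> float:
    """First-period signal: the label of the cell that contains theta."""
    for s1, cell in partition.items():
        if theta in cell:
            return s1
    raise ValueError("theta is not on the grid")


def second_period_signal(theta: float, m1: float,
                         partition: Dict[float, FrozenSet[float]]) -> Optional[float]:
    """I_2(m1, I_1): reveals theta exactly if theta lies in the cell of the reported
    signal m1, and is uninformative (None) otherwise."""
    return theta if theta in partition[m1] else None


def second_period_support(theta: float, s1: float, m1: float,
                          partition: Dict[float, FrozenSet[float]]) -> FrozenSet[float]:
    """Support of the expert's second-period posterior: {theta} after a truthful
    report, the unchanged first-period cell after a misreport."""
    s2 = second_period_signal(theta, m1, partition)
    return frozenset({s2}) if s2 is not None else partition[s1]


states = [k / 8 for k in range(8)]
P = first_period_partition(states)
theta = 0.625
s1 = first_signal(theta, P)
print(s1, second_period_support(theta, s1, s1, P), second_period_support(theta, s1, 0.25, P))
```

A truthful report \(m_{1}=s_{1}\) shrinks the support to a singleton, while a misreport leaves the expert with the two-point cell she already had, which is the learning incentive the protocol relies on.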

For simplicity, this definition implies that the expert does not randomize across messages. None of our results changes if we allow for mixed expert’s strategies.

The argument behind \(b_{s}>0\) and \(b_{\varphi }>0\) is as follows. Consider a function \(H:S\times \mathbb {R}_{+}\rightarrow \mathbb {R}\), which is continuous in \(\left( s,b\right) \) and satisfies \(H\left( s,0\right) >0\) for all \(s\in S\), where \(S\) is compact. Define \(\beta \left( s\right) =\inf \left\{ b\in \mathbb {R}_{+}|H\left( s,b\right) =0\right\} \) and \(b_{H}=\inf _{s\in S}\beta \left( s\right) \). We claim that \(b_{H}>0\). By contradiction, suppose \(b_{H}=0\). Then, there is a sequence \(\left\{ s_{i}\right\} _{i=1}^{\infty }\) such that \(\beta \left( s_{i}\right) \rightarrow 0\). Because \(S\) is compact, there exists a convergent subsequence \(\left\{ \tilde{s}_{i}\right\} _{i=1}^{\infty }\rightarrow \tilde{s}\), and \(\beta \left( \tilde{s}_{i}\right) \rightarrow 0\). The continuity of \(H\left( s,b\right) \) and \(H\left( \tilde{s}_{i},\beta \left( \tilde{s}_{i}\right) \right) =0\) imply \(H\left( \tilde{s},0\right) =0\), which contradicts \(H\left( \tilde{s},0\right) >0\).

References

Alonso R, Matouschek N (2008) Optimal delegation. Rev Econ Stud 75:259–293

Ambrus A, Lu S (2013) Robust almost fully revealing equilibria in multi-sender cheap talk. Working paper

Argenziano R, Severinov S, Squintani F (2013) Strategic information acquisition and transmission. Working paper

Arkhangel’skii A, Pontrjagin L (2011) General topology I: basic concepts and constructions dimension theory. Springer, Berlin

Austen-Smith D (1994) Strategic transmission of costly information. Econometrica 62:955–963

Battaglini M (2002) Multiple referrals and multidimensional cheap talk. Econometrica 70:1379–1401

Bergemann D, Pesendorfer M (2007) Information structures in optimal auctions. J Econ Theory 137:580–609

Blume A, Board O, Kawamura K (2007) Noisy talk. Theor Econ 2:395–440

Board S (2009) Revealing information in auctions: the allocation effect. Econ Theory 38:125–135

Crawford V, Sobel J (1982) Strategic information transmission. Econometrica 50:1431–1451

Damiano E, Li H (2007) Information provision and price competition. Working paper

Dessein W (2002) Authority and communication in organizations. Rev Econ Stud 69:811–838

Esö P, Fong Y (2008) Wait and see: a theory of communication over time. Working paper

Esö P, Szalay D (2010) Incomplete language as an incentive device. Working paper

Fischer P, Stocken P (2001) Imperfect information and credible communication. J Account Res 39:119–134

Golosov M, Skreta V, Tsyvinski A, Wilson A (2014) Dynamic strategic information transmission. J Econ Theory 151:304–341

Goltsman M, Hörner J, Pavlov G, Squintani F (2009) Mediation, arbitration and negotiation. J Econ Theory 144:1397–1420

Green J, Stokey N (2007) A two-person game of information transmission. J Econ Theory 135:90–104

Ivanov M (2010a) Informational control and organizational design. J Econ Theory 145:721–751

Ivanov M (2010b) Communication via a strategic mediator. J Econ Theory 145:869–884

Ivanov M (2013) Information revelation in competitive markets. Econ Theory 52:337–365

Ivanov M (2015) Dynamic information revelation in cheap talk. BE J Theor Econ (forthcoming)

Johnson J, Myatt D (2006) On the simple economics of advertising, marketing, and product design. Am Econ Rev 96:756–784

Kartik N, Ottaviani M, Squintani F (2007) Credulity, lies, and costly talk. J Econ Theory 134:93–116

Klein N, Mylovanov T (2011) Will truth out? An advisor’s quest to appear competent. Working paper

Kovác E, Mylovanov T (2009) Stochastic mechanisms in settings without monetary transfers: the regular case. J Econ Theory 144:1373–1395

Krishna V, Morgan J (2001a) A model of expertise. Q J Econ 116:747–775

Krishna V, Morgan J (2001b) Asymmetric information and legislative rules: some amendments. Am Polit Sci Rev 95:435–452

Krishna V, Morgan J (2004) The art of conversation: eliciting information from experts through multi-stage communication. J Econ Theory 117:147–179

Krishna V, Morgan J (2008) Contracting for information under imperfect commitment. RAND J Econ 39:905–925

Lewis T, Sappington D (1994) Supplying information to facilitate price discrimination. Int Econ Rev 35:309–327

Li M, Madaràsz K (2008) When mandatory disclosure hurts: expert advice and conflicting interests. J Econ Theory 139:47–74

Melumad N, Shibano T (1991) Communication in settings with no transfers. RAND J Econ 22:173–198

Morgan J, Stocken P (2003) An analysis of stock recommendations. RAND J Econ 34:183–203

Myerson R (1991) Game theory: analysis of conflict. Harvard University Press, Cambridge, MA

Ottaviani M, Squintani F (2006) Naive audience and communication bias. Int J Game Theory 35:129–150

Radner R (1993) The organization of decentralized information processing. Econometrica 61:1109–1146

Roberts J (2004) The modern firm: organizational design for performance and growth. Oxford University Press, New York

Shaked M, Shanthikumar JG (2007) Stochastic orders. Springer, New York

Sydsæter K, Strøm A, Berck P (2005) Economists’ mathematical manual. Springer, Berlin

Acknowledgments

I am grateful to Ricardo Alonso, Dirk Bergemann, Archishman Chakraborty, Kalyan Chatterjee, Ettore Damiano, Péter Esö, Maria Goltsman, Seungjin Han, Johannes Hörner, Sergei Izmalkov, Andrei Karavaev, Vijay Krishna, Wei Li, Tymofiy Mylovanov, Gregory Pavlov, Vasiliki Skreta, Joel Sobel, Dezsö Szalay, and participants of CETC 2011, CEA 2011, 22nd Game Theory Conference in Stony Brook, WZB conference on Cheap Talk and Signaling in Berlin, and a seminar at the New Economic School for valuable suggestions and discussions on different versions of the paper. I am also indebted to the associate editor and two anonymous referees for helpful and constructive comments. Misty Ann Stone provided invaluable help with copy editing the manuscript.


Additional information

The paper has been previously circulated under the title “Dynamic Informational Control”.

Appendix


A perfect Bayesian equilibrium includes the learning protocol \(\left\{ I_{1}^{*},I_{2}^{*}\left( m_{1},I_{1}\right) \right\} \), the decision rule \(a^{*}\left( .\right) \), the belief \(\mu ^{*}\left( .\right) \), and the expert’s strategy \(\left\{ \sigma _{1}^{*}\left( .\right) ,\sigma _{2}^{*}\left( .\right) \right\} \), such that \(\mu ^{*}\left( .\right) \) is consistent with the players’ strategies and the strategies satisfy the following conditions.

(1) Given any \(\left\{ I_{1},I_{2},m_{1},m_{2}\right\} \) and \(\mu _{2}^{*}\left( .\right) \), \(a^{*}\) maximizes the principal’s payoff:

$$\begin{aligned} a^{*}\in \underset{y\in \mathbb {R}}{\arg \max }\, E_{\theta }\left[ U\left( y,\theta \right) |\mu _{2}^{*}\left( m_{1},m_{2},I_{1},I_{2}\right) \right] . \end{aligned}$$

(2) Given \(a^{*}\left( .\right) \) and any \(\left\{ I_{1},I_{2},s_{1},s_{2},m_{1}\right\} \), \(\sigma _{2}^{*}\) maximizes the expert’s second-period payoff (Footnote 25):

$$\begin{aligned} m_{2}\in \underset{x\in \mathcal {M}}{\arg \max }\, E_{\theta }\left[ V\left( a^{*}\left( m_{1},x,I_{1},I_{2}\right) ,\theta ,b\right) |I_{1},I_{2},s_{1},s_{2}\right] . \end{aligned}$$

(3) Given \(\left\{ \sigma _{1}^{*}\left( .\right) ,\sigma _{2}^{*}\left( .\right) ,a^{*}\left( .\right) \right\} \), \(\mu _{1}^{*}\left( .\right) \), and any \(\left\{ m_{1},I_{1}\right\} \), the information structure \(I_{2}^{*}\left( m_{1},I_{1}\right) \) maximizes the principal’s interim payoff:

$$\begin{aligned} I_{2}^{*}\in \underset{I_{2}\in \mathcal {I}}{\arg \max }\, E_{\theta ,s_{1},s_{2}}\left[ U\left( a^{*}\left( m_{1},\sigma _{2}^{*}\left( I_{1},I_{2},s_{1},s_{2},m_{1}\right) ,I_{1},I_{2}\right) ,\theta \right) |I_{1},I_{2},m_{1}\right] . \end{aligned}$$

(4) Given \(\left\{ a^{*}\left( .\right) ,\sigma _{2}^{*}\left( .\right) ,I_{2}^{*}\left( .\right) \right\} \) and any \(\left\{ I_{1},s_{1}\right\} \), \(\sigma _{1}^{*}\) maximizes the expert’s interim payoff:

$$\begin{aligned}&m_{1}\in \underset{x\in \mathcal {M}}{\arg \max }\, E_{\theta ,s_{2}}\left[ V\left( a^{*}\left( x,\sigma _{2}^{*}\left( I_{1},I_{2}\left( x,I_{1}\right) ,s_{1},s_{2},x\right) ,I_{1},I_{2}\left( x,I_{1}\right) \right) , \right. \right. \\&\quad \left. \left. \theta ,b\right) |I_{1},I_{2}\left( x,I_{1}\right) ,s_{1}\right] . \end{aligned}$$

(5) Given \(\left\{ \sigma _{1}^{*}\left( .\right) ,\sigma _{2}^{*}\left( .\right) ,a^{*}\left( .\right) ,I_{2}^{*}\left( .\right) \right\} \), the information structure \(I_{1}^{*}\in \mathcal {I}\) maximizes the principal’s ex-ante payoff.

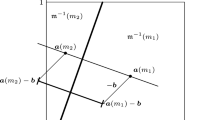

Now, we derive the expressions for \(p_{s}\) and \(p_{s}^{c}\). Split \(\Theta \) into two subintervals, \(\left[ 0,\bar{s}\right] \) and \(\left[ \bar{s},1\right] \). Then, split the subinterval \(\left[ 0,\bar{s}\right] \) into \(N\) subintervals of equal length \(\Delta =\frac{\bar{s}}{N}\), and consider the two-interval sets \(S_{s,\Delta }=S_{s,\Delta }^{1}\cup S_{s,\Delta }^{2}=\left[ s,s+\Delta \right] \cup \left[ \varphi \left( s\right) ,\varphi \left( s+\Delta \right) \right] \), where \(s=0,\frac{\bar{s}}{N},...,\bar{s}-\frac{\bar{s}}{N}\), \(S_{s,\Delta }^{1}=\left[ s,s+\Delta \right] \), and \(S_{s,\Delta }^{2}=\left[ \varphi \left( s\right) ,\varphi \left( s+\Delta \right) \right] \). Then, we have

$$p_{s}\left( \Delta \right) =\Pr \left\{ \theta \in S_{s,\Delta }^{1}|\theta \in S_{s,\Delta }\right\} =\frac{\int _{s}^{s+\Delta }f\left( \theta \right) d\theta }{\int _{s}^{s+\Delta }f\left( \theta \right) d\theta +\int _{\varphi \left( s\right) }^{\varphi \left( s+\Delta \right) }f\left( \theta \right) d\theta },$$

which gives \(p_{s}=\underset{\Delta \rightarrow 0}{\lim }p_{s}\left( \Delta \right) =\frac{f\left( s\right) }{f\left( s\right) +f\left( \varphi \left( s\right) \right) \varphi ^{\prime }\left( s\right) }\) and \(p_{s}^{c}=\underset{\Delta \rightarrow 0}{\lim }\left( 1-p_{s}\left( \Delta \right) \right) =1-p_{s}\). Since \(f\left( s\right) >0,f\left( \varphi \left( s\right) \right) >0\), and \(\varphi ^{\prime }\left( s\right) >0\) for all \(s\in \left[ 0,\bar{s}\right] \), it follows that \(p_{s}>0\) and \(p_{s}^{c}>0\).
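The limit can be checked numerically by computing \(p_{s}\left( \Delta \right) \), the conditional probability that \(\theta \) lies in \(\left[ s,s+\Delta \right] \) given that it lies in \(\left[ s,s+\Delta \right] \cup \left[ \varphi \left( s\right) ,\varphi \left( s+\Delta \right) \right] \), for a small \(\Delta \). The sketch assumes an illustrative density \(f\left( \theta \right) =\frac{2\left( 1+\theta \right) }{3}\) on \(\left[ 0,1\right] \), \(\bar{s}=\frac{1}{2}\), and the increasing map \(\varphi \left( s\right) =\frac{1}{2}+\frac{s\left( 1+2s\right) }{2}\) from \(\left[ 0,\frac{1}{2}\right] \) onto \(\left[ \frac{1}{2},1\right] \); none of these choices comes from the paper.

```python
def f(theta):
    """Illustrative prior density on [0, 1] (an assumption): 2(1 + theta)/3 > 0."""
    return 2.0 * (1.0 + theta) / 3.0


def F(theta):
    """CDF of f: (2 theta + theta^2) / 3."""
    return (2.0 * theta + theta ** 2) / 3.0


def phi(s):
    """Illustrative increasing map of [0, 1/2] onto [1/2, 1] (an assumption)."""
    return 0.5 + s * (1.0 + 2.0 * s) / 2.0


def phi_prime(s):
    return (1.0 + 4.0 * s) / 2.0


def p_s_delta(s, delta):
    """Conditional probability of the lower piece [s, s + delta] within the pair of pieces."""
    low = F(s + delta) - F(s)
    high = F(phi(s + delta)) - F(phi(s))
    return low / (low + high)


def p_s_limit(s):
    """Closed-form limit f(s) / (f(s) + f(phi(s)) phi'(s))."""
    return f(s) / (f(s) + f(phi(s)) * phi_prime(s))


for s in (0.0, 0.2, 0.4):
    print(s, p_s_delta(s, 1e-5), p_s_limit(s))
```

The factor \(\varphi ^{\prime }\left( s\right) \) appears because the upper piece \(\left[ \varphi \left( s\right) ,\varphi \left( s+\Delta \right) \right] \) has length approximately \(\varphi ^{\prime }\left( s\right) \Delta \).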

Proof of Theorem 1

Consider the principal’s decision rule defined by (4). Upon observing \(s=1\), the expert prefers to reveal it truthfully in both periods, since \(V\left( a_{p}\left( 1\right) ,1,b\right) \ge V\left( a,1,b\right) ,a\in \mathcal {A}\). After observing a signal \(s\in [0,\bar{s})\) in the first period, the expert’s optimal strategy is either to learn \(\theta \) in the second period by reporting \(s\) truthfully and inducing an action \(a\in \left\{ a_{p}\left( s\right) ,a_{p}\left( \varphi \left( s\right) \right) \right\} \) or to induce the optimal interim decision \(y_{1}\left( s,b\right) \in Y_{1}\left( s,b\right) \).

Consider the expert’s second-period incentive-compatibility constraints (7)–(8) given truthful reporting in \(t=1\). If \(b=0\), then \(a_{p}\left( s\right) =y\left( s,0\right) ,\forall s\). Since \(a_{p}\left( s\right) \ne a_{p}\left( \varphi \left( s\right) \right) ,s\in \left[ 0,\bar{s}\right] \), then

By the continuity of \(V\left( a,s,b\right) ,a_{p}\left( s\right) \), and \(\varphi \left( s\right) \) in \(\left( a,s,b\right) \) and the compactness of \(\left[ 0,\bar{s}\right] \), there are \(b_{s}>0\) and \(b_{\varphi }>0\) such that (Footnote 26)

The expert’s interim payoffs \(E\left[ V\left( a_{p}\left( \theta \right) ,\theta ,b\right) |s\right] \) and \(E\left[ V\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] \) from truthtelling in both periods and inducing \(y_{1}\left( s,b\right) \), respectively, are

Suppose \(b=0\). Then, \(y\left( \varphi \left( s\right) ,0\right) =a_{p}\left( \varphi \left( s\right) \right) \ne a_{p}\left( s\right) =y\left( s,0\right) \) implies \(y_{1}\left( s,0\right) \ne y\left( s,0\right) \) and/or \(y_{1}\left( s,0\right) \ne y\left( \varphi \left( s\right) ,0\right) \). Because \(0<p_{s}<1\), we have

Because \(\mathcal {A}\) is an image of a continuous function \(a_{p}\left( \theta \right) \) on the compact \(\Theta \), it is compact (Arkhangel’skii and Pontrjagin 2011). By the continuity of \(\varphi \left( s\right) ,p_{s},V\left( a,s,b\right) \), and \(a_{p}\left( s\right) \) in \(\left( a,s,b\right) \), it follows that \(E\left[ V\left( a_{p}\left( \theta \right) ,\theta ,b\right) |s\right] \) and \(E\left[ V\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] \) are continuous in \(\left( s,b\right) \) (Sydsæter et al. 2005). Hence, there is \(b_{y}>0\) such that

Thus, there is the fully informative equilibrium if \(b\le \bar{b}\), where \(\bar{b}=\min \left\{ b_{s},b_{\varphi },b_{y}\right\} >0\). \(\square \)

Proof of Lemma 1

Suppose that a fully informative equilibrium exists for \(\bar{b}>0\), that is,

and

for all \(s\in [0,1/2)\). We prove that a fully informative equilibrium exists for any \(b<\bar{b}\).

Because \(a_{p}\left( \varphi \left( s\right) \right) >a_{p}\left( s\right) \) and \(V_{ab}^{\prime \prime }>0\), then \(V_{b}^{\prime }\left( a_{p}\left( s\right) ,s,b\right) -V_{b}^{\prime }\left( a_{p}\left( \varphi \left( s\right) \right) ,s,b\right) <0,s\in [0,1/2)\). This implies that (11) holds for \(b<\bar{b}\):

Also, \(a_{p}\left( \varphi \left( s\right) \right) >y\left( s,b\right) ,s\in [0,1/2),b<\bar{b}\). \(\big ({\text {Otherwise, if}} \; a_{p}\left( \varphi \left( s\right) \right) \le y\left( s,b\right) ,\) then \(a_{p}\left( s\right) <a_{p}\left( \varphi \left( s\right) \right) \le y\left( s,b\right) \; \mathrm{{and}} \; V_{a}^{\prime }\left( a,\theta ,b\right) >0,a<y\left( \theta ,b\right) \) result in \(V\left( a_{p}\left( s\right) , s, b\right) <V\left( a_{p}\left( \varphi \left( s\right) \right) ,s,b\right) \big )\).

Since \(V_{ab}^{\prime \prime }>0\), then \(V_{b}^{\prime }\left( a,s,b\right) \) is increasing in \(a\). Because \(a_{p}\left( s\right) <y\left( s,b\right) ,\forall s,b>0\), we have:

Because \(y_{1}\left( s,b\right) >a_{p}\left( 0\right) \), we need to consider two cases: \(y_{1}\left( s,b\right) <a_{p}\left( 1\right) \) and \(y_{1}\left( s,b\right) =a_{p}\left( 1\right) \). First, let \(y_{1}\left( s,b\right) <a_{p}\left( 1\right) \). In this case, \(y_{1}\left( s,b\right) =y_{1}^{*}\left( s,b\right) \) and \(\Delta V\left( y_{1}\left( s,b\right) ,s,b\right) =\Delta V\left( y_{1}^{*}\left( s,b\right) ,s,b\right) \). This implies \(\Delta V_{b}^{\prime }\left( y_{1}\left( s,b\right) ,s,b\right) =\Delta V_{b}^{\prime }\left( y_{1}^{*}\left( s,b\right) ,s,b\right) \le 0\). Second, let \(y_{1}\left( s,b\right) =a_{p}\left( 1\right) \), which means \(y_{1}\left( s,b\right) >a_{p}\left( \varphi \left( s\right) \right) >a_{p}\left( s\right) \). Since \(V_{ab}^{\prime \prime }>0\), then \(V_{b}^{\prime }\left( a_{p}\left( s\right) ,s,b\right) <V_{b}^{\prime }\left( a_{p}\left( 1\right) ,s,b\right) \) and \(V_{b}^{\prime }\left( a_{p}\left( \varphi \left( s\right) \right) ,\varphi \left( s\right) ,b\right) <V_{b}^{\prime }\left( a_{p}\left( 1\right) ,\varphi \left( s\right) ,b\right) \). By the Envelope theorem, \(\frac{d}{db}E\left[ V\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] =E\left[ V_{b}^{\prime }\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] \) results in:

Thus, \(\Delta V_{b}^{\prime }\left( y_{1}\left( s,b\right) ,s,b\right) \le 0\) and \(\Delta V\left( y_{1}\left( s,b\right) ,s,b\right) \ge \Delta V\left( y_{1}\left( s,\bar{b}\right) ,s,\bar{b}\right) \ge 0,b<\bar{b}\). \(\square \)

Proof of Corollary 1

The decision \(y_{1}^{*}\left( s,b\right) \) is given by the first-order condition:

where \(V_{a}^{\prime }\left( y_{1}^{*}\left( s,b\right) -\beta \left( b,s\right) ,s\right) <0<V_{a}^{\prime }\left( y_{1}^{*}\left( s,b\right) -\beta \left( b,\varphi \left( s\right) \right) ,\varphi \left( s\right) \right) \) due to \(V_{a\theta }^{\prime \prime }>0\). Because \(V_{b}^{\prime }\left( a-\beta \left( b,\theta \right) ,\theta \right) =-V_{a}^{\prime }\left( a-\beta \left( b,\theta \right) ,\theta \right) \beta _{b}^{\prime }\left( b,\theta \right) \) and \(\beta _{b}^{\prime }\left( b,s\right) \ge 0\), this implies \(-V_{a}^{\prime }\left( y_{1}^{*}\left( s,b\right) -\beta \left( b,s\right) ,s\right) \beta _{b}^{\prime }\left( b,s\right) \ge 0\), and

where the inequality follows from \(\beta _{b}^{\prime }\left( b,s\right) \ge \beta _{b}^{\prime }\left( b,\varphi \left( s\right) \right) \) due to \(\beta _{b\theta }^{\prime \prime }\left( b,\theta \right) \le 0\). Thus, \(E\left[ V_{b}^{\prime }\left( a_{p}\left( \theta \right) ,\theta ,b\right) |s\right] <0\) and \(E\left[ V_{b}^{\prime }\left( y_{1}\left( s,b\right) ,\theta ,b\right) |s\right] \ge 0\), which gives

\(\square \)

Proof of Theorem 2

According to (A2), there exists \(\delta \left( b\right) >0\) such that \(\left| y\left( s,b\right) -a_{p}\right. \left. \left( s\right) \right| \le \delta \left( b\right) ,\forall s\). By (A3), there exists \(\bar{\delta }=\bar{\delta }\left( b,\delta \left( b\right) \right) >0\) such that \(V\left( y\left( s,b\right) -\delta \left( b\right) ,s,b\right) \ge V\left( y\left( s,b\right) +\bar{\delta },s,b\right) \) and \(V\left( y\left( s,b\right) +\delta \left( b\right) ,s,b\right) \ge V\left( y\left( s,b\right) -\bar{\delta },s,b\right) ,\forall s\). Then, \(\bar{\delta }\ge \delta \left( b\right) \). (Otherwise, if \(\bar{\delta }<\delta \left( b\right) \), then \(V\left( y\left( s,b\right) -\delta \left( b\right) ,s,b\right) \ge V\left( y\left( s,b\right) +\bar{\delta },s,b\right) >V\left( y\left( s,b\right) +\delta \left( b\right) ,s,b\right) \ge V\left( y\left( s,b\right) -\bar{\delta },s,b\right) \), where the first and the last inequalities follow from (A3), and the second one follows from \(y\left( s,b\right) +\bar{\delta }<y\left( s,b\right) +\delta \left( b\right) \) and \(V_{a}^{\prime }\left( a,s,b\right) <0,a>y\left( s,b\right) \) due to the strict pseudo-concavity of \(V\left( a,s,b\right) \). By the same property, we have the contradiction \(y\left( s,b\right) -\delta \left( b\right) >y\left( s,b\right) -\bar{\delta }\), or \(\delta \left( b\right) <\bar{\delta }\).)

Put \(\varphi \left( \theta \right) =\theta +\frac{d}{2}\). By (A4), there exists \(d=d\left( b,\bar{\delta }\right) >0\), such that

\(\square \)

Construct the learning protocol as follows:

Consider the expert’s second-period incentive-compatibility constraints given truthful reporting of \(s\) in the first period and observing \(\theta =s<\varphi \left( s\right) \) in the second period. First, we have

where the first inequality follows from (A2) and the third inequality follows from (13). This gives

where the first inequality follows from (A3) if \(a_{p}\left( s\right) \le y\left( s,b\right) \) and from \(V_{a}^{\prime }\left( a,s,b\right) <0,a>y\left( s,b\right) \) if \(a_{p}\left( s\right) >y\left( s,b\right) \). This property also implies the second inequality. Similarly, if the expert observes \(\theta =\varphi \left( s\right) \) in the second period, then

where the first inequality follows from (A2) and the third inequality follows from (13). This gives

where the first inequality follows from (A3) if \(a_{p}\left( \varphi \left( s\right) \right) \ge y\left( \varphi \left( s\right) ,b\right) \) and from \(V_{a}^{\prime }\left( a,\varphi \left( s\right) ,b\right) >0,a<y\left( \varphi \left( s\right) ,b\right) \) if \(a_{p}\left( \varphi \left( s\right) \right) <y\left( \varphi \left( s\right) ,b\right) \). This property also implies the second inequality.

Consider now the expert’s interim payoff in the first period. First, note that

where the first inequality follows from (14), and the second inequality follows from (A4) and \(V_{a}^{\prime }\left( a,s,b\right) <0,a>y\left( s,b\right) \). Similarly, we obtain

where the first inequality follows from (15), and the second inequality follows from (A4) and \(V_{a}^{\prime }\left( a,\varphi \left( s\right) ,b\right) >0,a<y\left( \varphi \left( s\right) ,b\right) \). As a result,

Note that (14)–(15) do not depend on \(p_{s}\). Also, the optimal decision in the case of deviation from truthtelling in the first period,

is continuous in \(p_{s}>0\) and \(y_{1}\left( s,\varphi \left( s\right) ,b\right) =y_{1}^{*}\left( s,\varphi \left( s\right) ,b\right) \) if \(p_{s}=\frac{1}{2}\). Because (16) holds strictly, there exists an \(\varepsilon \)–neighborhood of \(1\) for each \(\left( s,b\right) \), such that if it contains \(\frac{1-p_{s}}{p_{s}}=\frac{f\left( \varphi \left( s\right) \right) }{f\left( s\right) }\), then

Proof of Corollary 2

We construct the learning protocol as that in the proof of Theorem 2 with the distance \(\frac{d}{2}\) between the posterior states, which is determined below. Given a signal \(s\in [kd,kd+\frac{d}{2}),k\in \mathbb {N}_{0}\) in the first period, truthtelling in both periods provides the payoff to the expert:

Inducing the optimal interim decision \(y_{1}\left( s,s+\frac{d}{2},b\right) =p_{s}\left( s+\beta \left( b,s\right) \right) +p_{s}^{c}\left( s+\frac{d}{2}+\beta \left( b,s+\frac{d}{2}\right) \right) \) provides the payoff:

Because \(a_{p}\left( s+\frac{d}{2}\right) -a_{p}\left( s\right) =s+\frac{d}{2}-s=\frac{d}{2}\) and \(V\left( a,s,b\right) \) is symmetric in \(a\) around \(y\left( s,b\right) =s+\beta \left( b,s\right) \), the second-period incentive-compatibility constraints are given by:

Since \(\beta \left( b,s\right) \le \delta \), (17) are satisfied if \(d\ge 4\delta \). Also, (17) implies \(\frac{d}{2}-\beta \left( b,s\right) \ge \beta \left( b,s\right) >0\) and

Suppose \(f\left( s\right) \ge f\left( s+\frac{d}{2}\right) \). (The case of \(f\left( s\right) <f\left( s+\frac{d}{2}\right) \) is symmetric.) If \(f\left( \theta \right) \) satisfies condition (A5) for some \(\alpha >0\) and \(d>0\), then

where \(\phi \left( d,\alpha \right) \) attains the minimum \(\phi \left( d^{*}\left( \alpha \right) ,\alpha \right) =-\frac{e^{-2-\alpha \delta } }{\alpha ^{2}}\) at \(d^{*}\left( \alpha \right) =\frac{4}{\alpha }+2\delta \) for any \(\alpha >0\). Equivalently, each \(d>2\delta \) is the minimizer of \(\phi \left( x,\alpha \right) \) over \(x\) for \(\alpha ^{*}\left( d\right) =\frac{4}{d-2\delta }>0\).

Because (A5) holds for \(\alpha =\frac{z^{*}}{\delta }\) and \(d=d^{*}\left( \alpha \right) =2\delta \left( \frac{2}{z^{*}}+1\right) \), where \(z^{*}\) is a unique solution to the equation \(z=e^{-1-\frac{z}{2} }\), we have \(\alpha \delta =z^{*}=e^{-1-\frac{z^{*}}{2}}=e^{-1-\frac{\alpha \delta }{2}}\) and \(e^{-2-\alpha \delta }=\alpha ^{2}\delta ^{2}\). This results in

Finally, (17) holds because \(z^{*}\simeq 0.314<1\) implies \(d=2\delta \left( \frac{2}{z^{*}}+1\right) >4\delta \). \(\square \)
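The constants used in this proof can be reproduced numerically. The sketch solves \(z=e^{-1-\frac{z}{2}}\) by bisection (the map \(z-e^{-1-\frac{z}{2}}\) is strictly increasing, negative at \(0\), and positive at \(1\)) and then checks the identities \(e^{-2-\alpha \delta }=\alpha ^{2}\delta ^{2}\), \(d^{*}\left( \alpha \right) =2\delta \left( \frac{2}{z^{*}}+1\right) \), \(\alpha ^{*}\left( d\right) =\frac{4}{d-2\delta }\), and \(d>4\delta \). The value \(\delta =0.05\) is a hypothetical choice; the identities hold for any \(\delta >0\).

```python
import math


def solve_z_star(tol=1e-14):
    """Unique root of z = exp(-1 - z/2) on (0, 1), found by bisection on the
    strictly increasing function g(z) = z - exp(-1 - z/2)."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mid - math.exp(-1.0 - mid / 2.0) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


z = solve_z_star()
delta = 0.05                    # hypothetical bound on beta(b, theta)
alpha = z / delta               # alpha = z* / delta
d = 4.0 / alpha + 2.0 * delta   # d*(alpha) = 4 / alpha + 2 delta
print(z, d / delta)
print(math.exp(-2.0 - alpha * delta), (alpha * delta) ** 2)  # the two sides of the identity
print(d > 4.0 * delta, 4.0 / (d - 2.0 * delta), alpha)
```

The ratio \(d/\delta =2\left( \frac{2}{z^{*}}+1\right) \approx 14.7\) does not depend on \(\delta \), so the required distance between paired states scales linearly with the bound on the bias.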


About this article

Cite this article

Ivanov, M. Dynamic learning and strategic communication. Int J Game Theory 45, 627–653 (2016). https://doi.org/10.1007/s00182-015-0474-x
