Abstract

A purely Bayesian vector autoregression (VAR) framework is proposed to formulate and compare tri-variate models for the logs of the economy-wide aggregates of output and inputs (physical capital and labour). The framework is derived based on the theory of the aggregate production function, but at the same time, accounts for the dynamic properties of macroeconomic data, which makes it particularly appealing for modelling GDP. Next, using the proposed framework we confront a-theoretical time-series models with those that are based on aggregate production function-type relations. The common knowledge about capital and labour elasticities of output as well as on their sum is used in order to formulate prior distribution for each tri-variate model, favouring the linearly homogenous Cobb–Douglas production function-type relation. In spite of this, production function-based co-integration models fail empirical comparisons with simple VAR structures, which describe the three aggregates by three stochastic trends.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Aggregate production functions, which link aggregated product of an economy with its aggregated physical capital and labour—treated as the factors of production—have been widely used in macroeconomics, economic growth literature in particular. It has been so, despite serious objections formulated by many economists against the very concept of aggregate production functions; this critique is summarized in Felipe and McCombie (2013). While microeconomic production functions are reasonable models that describe technology of individual producers, meaningful aggregation of physical capital, labour and output is hardly possible, and any simple relation between aggregates looks very suspicious—see, for example, Fisher (1969). Even in modern attempts to derive aggregate production functions from micro-foundations, the underlying assumptions are extremely specific—see, for example, Jones (2005) and Growiec (2008, 2013). On the other hand, it is often argued that even though the link between the aggregate production function (APF hereafter) and its microeconomic foundations is not clear it still presents a reasonable, well-defined mathematical relationship.

In both theoretical and empirical models of economic growth the Cobb–Douglas functional form is prevailing due to historical reasons, its simplicity, a relatively good data fit as well as some formal justifications—e.g. it is a first-order local approximation of any smooth production function expressed in terms of logs of inputs and output (provided such a relation exists on the level of some aggregates of output and inputs). A more general and quite popular form is translog, which is a second-order local approximation. Nowadays, APF is used, for example, as the world technology frontier in international growth comparisons and in decomposition of economic growth into its main sources, as proposed in Koop et al. (1999, 2000), where Bayesian analysis of stochastic production frontiers is applied; see Makieła (2014) for a more recent study. Analyses of the production function-type relations between aggregates are mainly performed by econometricians and empirical macroeconomists. Their focus is more on statistical issues of modelling and inference than on theoretical economic foundations of such empirical models.

Stochastic production frontier models extend traditional production functions by adding non-negative unobservable random terms that represent technical inefficiency. Such models either are completely static, or use time trends (or time-varying parameters) to capture technology changes. On the other hand, however, GDP and GNP data (mainly for the USA and some other developed economies) were used long ago to demonstrate important models and techniques of modern econometrics; see, for example, Koop et al. (1997) for a Bayesian approach to joint modelling and testing of long memory and a unit root. Describing dynamic properties of economic time series is a crucial issue, and co-integration analysis constitutes a standard framework to establish stable, long-run relations among macroeconomic variables—variables that can usually be described by integrated stochastic processes. Co-integration techniques are useful tools to avoid spurious regressions. So far, the stochastic frontier—or, more generally, a comparative—analysis of economic growth has ignored these issues when analysing panel data, even those covering longer periods, like 30 years. Although our initial motivation was rooted in stochastic frontier production functions for panel data, we focus here on a simpler, purely time-series analysis with no panel context.

Since production function specifications use data on three variables, we have to build appropriate tri-variate models for Bayesian comparisons. Thus, all our competing models consist of three equations. We start from basic VAR(p) specifications—their first two equations (for the logarithms of inputs aggregates) are also used in production function-type models; only the form of the third equation (for the log of GDP) is specific. Empirical comparisons are based on individual economies (not on a panel of countries), namely the USA and Poland, but also on the UK and Hungary—in order to check whether the results are similar for different countries, developed ones as well as those undergoing economic transformation. Since we use annual data from relatively short periods, p = 3 is taken as the maximum lag.

The Bayesian VAR framework enables us to calculate posterior probabilities of many nested and non-nested models, in particular specifications that assume co-integrating relations (for Bayesian co-integration analysis, see, for example, Koop et al. 2004, 2010; Wróblewska 2009). In order to analytically obtain basic results, we use as much as possible the conjugate prior distributions. We also resort to the concept of Bayesian sequential cut (see, for example, Florens and Mouchart 1985; Osiewalski and Steel 1996), which allows us to separate the analysis of aggregate inputs dynamics from the main issue of analysing aggregate output given current aggregate inputs levels. This main issue is closely related to the formal econometric status of any APF-type relation. Thus, we would like to look from an a-theoretical, purely time-series co-integration perspective at the issue of existence and empirical adequacy of APF-type relations.

The aim of this study is to provide an unbiased, scientific evidence in favour or against APF, and thus to shed more light on the topic that has been on the research agenda for decades. Our contribution in this regard is threefold. Firstly, there are two main approaches used by practitioners to calculate potential output or output gap in macroeconomics: (i) a purely dynamic, time-series approach and (ii) a theory-driven approach based on APF; see, for example, Turner et al. (2016) or Alichi et al. (2017) for a survey of methods used to model macroeconomic output. We present a modelling framework that, on the one hand, addresses the dynamic properties of macroeconomic data and, on the other hand, encompasses models deeply rooted in economic theory of aggregate production and productivity. Secondly, we present a formal Bayesian model comparison, which brings together these two pieces of the literature that describe aggregate production. Thirdly, we examine the role of APF-type relations, especially Cobb–Douglas and translog, within a dynamic econometric framework and analyse if APF can be viewed as an empirically valid co-integration relation.

We are fully aware that our study requires an explicit assumption that APF is a testable, falsifiable construct. This, however, may not be feasible in some fields of macroeconomics, which greatly rely on the very existence of APF. We do not wish to dispute such studies since, given the Bayesian perspective, they merely imply a strong prior belief as regards APF—a belief that, via prior odds, simply outweighs any information that may come from (sometimes messy and distorted) macroeconomic data. We are also aware that our purely statistical approach to the relation between economy-wide aggregates of output and inputs can be criticized—even if we find a co-integration equation that can be interpreted as the APF. One should be aware of the argument given in Shaikh (1974), also discussed in Felipe and McCombie (2013), which shows that a well-fitted empirical Cobb–Douglas-type relation can be nothing more but a straightforward consequence of a simple identity between constant-price value added and the sum of wages and profits, provided that the observed shares of wages and profits are constant over time. That is, even if we find a co-integration relation with properties attributed to traditional production functions, the latter can still be considered spurious in a fundamental, economic sense. Such relation would only show that the conditions formulated in Shaikh (1974) are approximately met. However, if such co-integration relation is unlikely in view of the data, we obtain an empirical evidence against conditions that would make the APF-type equation a useful approximation of reality.

The structure of the paper is as follows: Sects. 2 and 3 present the technical details of the VAR modelling framework, Bayesian model comparison and the role of the Cobb–Douglas relation. Readers more interested in the empirical results may move forward to Sect. 4 where competing model classes are presented. Section 5 focuses on the data and empirical results of model comparison. Section 6 is devoted to the best specifications and posterior results on crucial parameters. Final remarks are summarized in Sect. 7.

2 Bayesian VAR modelling framework

We describe annual data on GDP (i.e. aggregate product Qt) as well as aggregates of capital (Kt) and labour (Lt) using a tri-variate VAR(p) model for the natural logarithms of both inputs (\( k_{t} = \ln K_{t} \), \( l_{t} = \ln L_{t} \)) and output (\( q_{t} = \ln Q_{t} \)). The basic form of a model for \( x_{t} = \left[{k_{t} l_{t} q_{t}} \right] \) can be written as

where \( \Phi_{i} \) are (3 × 3) matrices of parameters accompanying lags \( x_{t - i} = \left[{k_{t - i} l_{t - i} q_{t - i}} \right] \left({i = 1, \ldots,p} \right) \), Γo is a (m × 3) matrix of coefficients that post-multiplies \( d_{t} \), the row vector of m deterministic components (e.g. m = 2 and \( d_{t} \) = [1 t]), and {\( \varepsilon_{t} \)} is a tri-variate Gaussian white noise process with contemporaneous covariance matrix Σ, a (3 × 3) symmetric positive definite matrix.

Denoting \( z_{t} = \left[{x_{t - 1} \ldots x_{t - p } d_{t}} \right] \) and \( {\varPhi} = \left[{{\varPhi}_{1}^{\prime} \ldots {\varPhi}_{p}^{\prime} { \varGamma}_{o}^{\prime}} \right]^{\prime} \) we can rewrite (1a) as

where Φ is (3p + m) × 3 and can be represented by its 3 columns as \( {\varPhi} = \left[{\varphi^{\left(1 \right)} \varphi^{\left(2 \right)} \varphi^{\left(3 \right)}} \right] \), where \( \varphi^{\left(j \right)} \) groups the 3p + m unknown coefficients appearing in equation j (j = 1, 2, 3).

For the purposes of co-integration analysis, the particularly important representation of (1a) is the one in terms of first differences:

where \( {\varPi} = - I_{3} + \mathop \sum \limits_{i = 1}^{p} {\varPhi}_{i} \) and, for p > 1, \( {\varGamma}_{j} = - \mathop \sum \limits_{h = j + 1}^{p} {\varPhi}_{h} \). Denoting \( \tilde{z}_{t} = \left[{x_{t - 1} { \Delta}x_{t - 1} \ldots { \Delta}x_{{t - \left({p - 1} \right)}} { }d_{t}} \right] \) and \( {{\tilde{\varPhi}}} = \left[{{\varPi}^{\prime} { \varGamma}_{1}^{\prime} \ldots {\varGamma}_{p - 1}^{\prime} { \varGamma}_{o}^{\prime}} \right]^{\prime} \) we can rewrite (2a) as

where, again, \( {{\tilde{\varPhi}}} = \left[{\tilde{\varphi}^{\left(1 \right)} \tilde{\varphi}^{\left(2 \right)} \tilde{\varphi}^{\left(3 \right)}} \right] \) is (3p + m) × 3 with \( \tilde{\varphi}^{\left(j \right)} \) grouping the 3p + m unknown coefficients of equation j in (2a) (j = 1, 2, 3). Note that \( {{\tilde{\varPhi}}} = R{\varPhi} - F \), where

For the parameter matrices Φ and Σ in (1a,b) we assume the conjugate joint prior distribution, i.e. Σ has an inverted Wishart marginal prior distribution and Φ has a matrix-variate normal conditional distribution (given Σ), that is

The hyper-parameters A (a 3 × 3 symmetric, positive definite scale matrix) and s (degrees of freedom, s > 2) will be elicited in the next section. In the conditional prior of \( {\varPhi} = \left[{\varphi^{\left(1 \right)} \varphi^{\left(2 \right)} \varphi^{\left(3 \right)}} \right] \) we reflect the idea that xt is, most likely, just the tri-variate random walk, i.e. \( x_{t} = x_{t - 1} + \varepsilon_{t}. \) Thus, we take \( F = \left[{I_{3}\,\,\, 0} \right]^{\prime} \) as the prior mean. The precision of our prior assumptions on Φ is reflected by the choice of W, as \( \varSigma \otimes W \) is the covariance matrix of vec(Φ) given Σ. We assume that W, a square matrix of order 3p + m, is block-diagonal with \( I_{3}, \frac{1}{2}I_{3}, \ldots, \frac{1}{p}I_{3}, W_{0} \) as diagonal blocks. Our prior resembles the so-called Minnesota (Litterman) prior (see, for example, Doan et al. 1984; Litterman 1986), except that we use a purely Bayesian approach and our prior is exactly the marginal distribution of the parameters; thus, it does not use hyper-parameters based on the data being modelled.

The induced conditional prior for the matrix \( \tilde{\varPhi} = R\varPhi - F \), which groups the conditional mean parameters in (2b), is matrix-variate normal: \( {\text{vec}}\left({\tilde{\varPhi}} \right) | \varSigma \sim N\left({0, \varSigma \otimes \tilde{W}} \right) \), where

The simple prior distribution described here enables us to use purely analytical approach in order to obtain basic posterior results and the marginal data density (MDD hereafter). Of course, our conjugate prior is defined for completely free, unconstrained parameters of (1a, b) or, equivalently, (2a, b). In particular, we do not impose stability conditions that are imposed in, for example, Wróblewska (2009).

There are many ways of generating competing models with the use of this general framework. Obvious special cases are obtained by assuming different orders p of VAR(p) or imposing Π = 0 for each p and considering VAR(p − 1) for \( {\Delta}x_{t} \). But our main task is to reconsider the production function-type relation within dynamic framework; hence, the crucial transformation of VAR(p) is the one in terms of the marginal sampling model for \( x_{t}^{\left(1 \right)} = \left[{k_{t}\, l_{t}} \right] \), given the past of all three variables in xt, and the conditional sampling model for qt given \( x_{t}^{\left(1 \right)} \) and the past of xt. This enables us to directly consider exogeneity issues and to propose many other competing models.

Starting from (1a), we obtain the following forms of the sampling distribution of \( x_{t}^{\left(1 \right)} \) given the past of xt (denoted as \( \psi_{t - 1} \)) and the sampling distribution of qt given \( x_{t}^{\left(1 \right)} \) and \( \psi_{t - 1} \):

where \( {\varPhi}^{\left(1 \right)} = \left[{\varphi^{\left(1 \right)} \varphi^{\left(2 \right)}} \right] \) groups the coefficients of the first two equations of VAR(p), Σ11 is the (2 × 2) left upper block of

and \( \beta = {\varSigma}_{11}^{- 1} {\varSigma}_{12},{ }\omega = \sigma_{qq} - {\varSigma}_{21} \beta > 0, \delta = \varphi^{\left(3 \right)} - {\varPhi}^{\left(1 \right)} \beta \).

Due to the well-known properties of inverted Wishart distributions, Σ11 and (β, ω) are stochastically independent, \( \varSigma_{11} \sim IW\left({A_{11}, s - 1} \right) \), \( \beta |\omega \sim N\left({A_{11}^{- 1} A_{12}, \omega A_{11}^{- 1}} \right) \), and \( \omega \sim IW\left({a_{qq.1}, s} \right) \) or, equivalently, \( \tau = \omega^{- 1} \sim {Gamma}\left({\frac{s}{2} , \frac{{a_{qq.1}}}{2}} \right) \), where we use the obvious partitioning of the hyper-parameter matrix

and \( a_{qq.1} = a_{qq} - A_{21} A_{11}^{- 1} A_{12} > 0 \). Thus, the decomposition of (1a) into (3) and (4) has led to a one-to-one re-parameterization of the conditional (contemporaneous) covariance matrix Σ into two groups of parameters, Σ11 and (β, ω), which are a priori independent; Σ11 appears only in the marginal model for inputs (given the past of xt) and (β, ω) together with δ parameterizes the conditional model of output (given current inputs and \( \psi_{t - 1} \)). The one-to-one re-parameterization of Φ, which appears in (3) and (4), leaves \( \varPhi^{\left(1 \right)} = \left[{\varphi^{\left(1 \right)} \varphi^{\left(2 \right)}} \right] \) in the marginal model for inputs and uses \( \delta = \varphi^{\left(3 \right)} - {\varPhi}^{\left(1 \right)} \beta \) in the conditional model for output. Note that the joint conditional prior of \( {\text{vec}} \left[{\varPhi^{\left(1 \right)} \delta} \right] = Q_{\beta} {\text{vec}}\left(\varPhi \right) \), given Σ, is normal with mean \( Q_{\beta} {\text{vec}}\left(F \right) \) and covariance matrix \( Q_{\beta} \left({\varSigma \otimes W} \right)Q_{\beta}^{\prime} \), where

and \( Q_{\beta} \left({\varSigma \otimes W} \right)Q_{\beta}^{\prime} \) is block-diagonal with matrices \( {\varSigma}_{11} \otimes W \) and \( \omega W \) as diagonal blocks.

All this means that \( \theta^{\left(1 \right)} = ({\varPhi}^{\left(1 \right)}, {\varSigma}_{11} \)) is a sufficient parameterization of (3), \( \theta^{\left(2 \right)} = \left({\delta, \beta, \omega} \right) \) is a sufficient parameterization of (4), and both groups of parameters are independent a priori, which guarantees the Bayesian sequential cut (see, for example, Florens and Mouchart 1985; Osiewalski and Steel 1996), leading not only to posterior independence between \( \theta^{\left(1 \right)} \) and \( \theta^{\left(2 \right)} \) (i.e. their conditional independence given observations), but also to a useful factorization of MDD given initial observations:

where

\( p\left({\theta^{\left(1 \right)}} \right) \) and \( p\left({\theta^{\left(2 \right)}} \right) \) are prior densities and Τ is the number of tri-variate observations. Thus, our prior assumptions lead to complete separation of the Bayesian analysis in the marginal and conditional models (3) and (4).

The parameterization in terms of \( \theta^{\left(1 \right)} \) and \( \theta^{\left(2 \right)} \) is obviously not the only one that leads to the sequential cut. Instead of (1a, b), we can start from (2a, b) and obtain the following forms of the marginal and conditional sampling models:

where \( \tilde{\delta} = \tilde{\varphi}^{\left(3 \right)} - \left[{\tilde{\varphi}^{\left(1 \right)} \tilde{\varphi}^{\left(2 \right)}} \right]\beta \) and (6) can be equivalently written as

Now \( \tilde{\theta}^{\left(1 \right)} = \left({\tilde{\varphi}^{\left(1 \right)},\tilde{\varphi}^{\left(2 \right)}, \varSigma_{11}} \right) \) and \( \tilde{\theta}^{\left(2 \right)} = \left({\tilde{\delta}, \beta, \omega} \right) \) are sufficient parameterizations of the marginal and conditional models, respectively. Since \( {\text{vec}}\left({\tilde{\varPhi}} \right) | \varSigma \sim N\left({0, \varSigma \otimes \tilde{W}} \right) \) and \( {\text{vec}}\left[{\tilde{\varphi}^{\left(1 \right)} \tilde{\varphi}^{\left(2 \right)} \tilde{\delta}} \right] = Q_{\beta} {\text{vec}}\left({\tilde{\varPhi}} \right) \),

The conditional prior of \( {\text{vec}}\left[{\tilde{\varphi}^{\left(1 \right)} \tilde{\varphi}^{\left(2 \right)}} \right] \) given Σ11 is \( N\left({0, \varSigma_{11} \otimes \tilde{W}} \right) \), the conditional prior of \( \tilde{\delta} \) given \( \left({\beta, \omega} \right) \) is \( N\left({0, \omega \tilde{W}} \right) \), which does not depend on \( \beta \), and \( \tilde{\theta}^{\left(1 \right)} \) and \( \tilde{\theta}^{\left(2 \right)} \) are a priori independent. Thus, under our prior structure, the complete separation of posterior inference and MDD calculations is preserved not only for (3) and (4), but also for (5) and (6). This is useful for the model formulation strategy. As long as we do not impose restrictions that link parameters of the marginal and conditional models, we can separately consider their competing variants. Consequently, if we consider n1 variants of the marginal model and n2 variants of the conditional model, n1·n2 models for xt can be formulated and compared. Note that it is formally irrelevant which particular parameterization we choose—(3) or (5) for the marginal model, (4) or (6) for the conditional model. For instance, it may be easier to formulate separate restrictions on \( \theta^{\left(1 \right)} \) and \( \tilde{\theta}^{\left(2 \right)} \) (than on \( \theta^{\left(1 \right)} \) and \( \theta^{\left(2 \right)} \)) in order to create an interesting (interpretable) model for xt. Incidentally, remind that \( \theta^{\left(h \right)} \) and \( \tilde{\theta}^{\left(j \right)} \) are a priori independent \( \left({h, j = 1,2; h \ne j} \right) \). Since \( \theta^{\left(1 \right)} \bot \theta^{\left(2 \right)} \) and \( \left[{\tilde{\varphi}^{\left(1 \right)} \tilde{\varphi}^{\left(2 \right)}} \right] \) is a (one-to-one) transformation of \( \left[{\varphi^{\left(1 \right)} \varphi^{\left(2 \right)}} \right] \), it is clear that \( \tilde{\theta}^{\left(1 \right)} \bot \theta^{\left(2 \right)} \); starting from \( \tilde{\theta}^{\left(1 \right)} \bot \tilde{\theta}^{\left(2 \right)} \), by the same argument we get \( \theta^{\left(1 \right)} \bot \tilde{\theta}^{\left(2 \right)} \).

3 The role of the Cobb–Douglas-type relation in the modelling strategy

In this section we focus on the conditional sampling model for output and present the use of the relation between current levels of all three variables in both creating specific models and eliciting prior distribution of the conditional covariance matrix Σ.

In both equivalent formulations of the conditional sampling model for output given inputs, (4) and (6), the logarithm of output is linearly dependent on the logarithms of current input levels (plus some linear function of past values of kt, lt, qt). In view of the traditional concept of the APF, the presence of the relation between output and current inputs in the conditional model could be called the contemporaneous or immediate APF. Since we work within the VAR model framework for logs of Qt, Kt and Lt, this relation takes the Cobb–Douglas form. It is nothing to be empirically discovered. It is obtained by construction, as the nonzero vector \( \beta = {\varSigma}_{11}^{- 1} { \varSigma}_{12} \) is a consequence of nonzero conditional correlation between output and current inputs, i.e. nonzero \( {\varSigma}_{12} \) in the contemporaneous covariance matrix Σ (conditional with respect to the past of xt). As we consider standard VAR models, the conditional covariance matrix is constant over time, so are the immediate capital and labour elasticities of output. Making this covariance matrix vary over time would lead to time-varying elasticities in the spirit of Koop et al. (2000). That is, the immediate Cobb–Douglas APF with either constant or variable parameters is an obvious part of the standard VAR or a more general (TVP VAR) model.

Although the static Cobb–Douglas form is just a matter of re-parameterization of the basic VAR framework, there are some deeper, important questions, which can be answered through empirical investigations only. First, we are interested whether the immediate APF-type relation is the only important part of the conditional sampling model. If so, the very old idea of a purely static Cobb–Douglas APF would seem still relevant. If not, we may enquire about empirical validity of its different extensions, like generalizing its functional form, keeping deterministic trend in the equation, adding one-sided inefficiency terms, replacing β by a time-varying vector of parameters. From the viewpoint of dynamic econometrics, the most interesting issue amounts to testing if the immediate Cobb–Douglas APF-type relation is also a co-integration relation linking all three aggregate variables, if they are treated as integrated stochastic processes. If the issues mentioned above can be formalized as restrictions on—or extensions of—the conditional sampling model only, such modelling strategy preserves the Bayesian sequential cut. In this case weak exogeneity of inputs (for inference on any function of the conditional model parameters) is assumed. Of course, weak exogeneity can be tested within the full, three-equation framework—as well as the presence of any co-integration relation among the three (obviously non-stationary) variables. These issues require Bayesian analysis of the joint model for inputs and output—without any separation of MDD calculations—and thus they are computationally more demanding.

Before we present the competing models and their Bayesian comparison using observations from four countries, let us use the immediate Cobb–Douglas APF idea to elicit the hyper-parameters (A and s > 2) of the inverted Wishart prior of Σ. Since, given ω, β is normal with mean \( A_{11}^{- 1} A_{12} \) and covariance matrix \( \omega A_{11}^{- 1} \), and \( \tau = \omega^{- 1} \) has the gamma distribution with mean \( s a_{qq.1}^{- 1} \) and variance \( 2s a_{qq.1}^{- 2} \), the marginal prior distribution of β is Student’s t with s > 2 degrees of freedom, mean \( A_{11}^{- 1} A_{12} \) and covariance matrix \( \frac{{a_{qq.1}}}{s - 2}A_{11}^{- 1} \). Although, following Fisher (1969), Shaikh (1974) and Felipe and McCombie (2013), we are quite sceptical about the concept of the APF, we do favour the traditional Cobb–Douglas case (with constant returns to scale) through prior elicitation. Thus, this disputable concept, so popular in many economic models, stands in the centre of our prior elicitation and β is treated as the vector of capital and labour elasticities of output that, most likely, are equal and sum up to unity, with prior standard deviations 0.2 for each elasticity and 0.1 for their sum. This leads to five equations with seven unknowns; the sixth equation is obtained by assuming that the diagonal elements of A are equal. Finally, we get \( s = \frac{26}{3} \) and

since \( a_{qq.1} = \frac{a}{15} \), the corresponding marginal prior for \( \tau = \omega^{- 1} \) is gamma \( \left({\frac{13}{3}, \frac{a}{ 30}} \right) \). We assume a = 1, which results in the prior mean 130 and prior standard deviation \( 10\sqrt {39} \) for the precision parameter \( \tau \).

Finally, let us focus on the prior distribution of the parameters of just one equation in (1b). Without loss of generality, we consider only the first equation, where \( \varphi^{\left(1 \right)} \) given \( \sigma_{kk} \) is normal (with mean \( \left[{1\, 0^{\prime}} \right]^{\prime} \) and covariance matrix \( \sigma_{kk} W \)) and \( \sigma_{kk}^{- 1} \) is gamma (with parameters \( \frac{s - 2}{2} \) and \( \frac{{a_{kk}}}{2} \), i.e. with mean \( \frac{s - 2}{{a_{kk}}} \) and standard deviation \( a_{kk}^{- 1} \sqrt {2\left({s - 2} \right)} \)). Hence, the marginal prior distribution of \( \varphi^{\left(1 \right)} \) is the Student’s t distribution with s − 2 degrees of freedom, location vector \( \left[{1\, 0^{\prime}} \right]^{\prime} \) and precision matrix \( \frac{s - 2}{{a_{kk}}}W^{- 1} \); our choice of the hyper-parameters values leads to the prior covariance matrix \( V\left({\varphi^{\left(1 \right)}} \right) = \frac{{a_{kk}}}{s - 4}W = \frac{8}{35} {\text{Diag}}\left({I_{3}, \frac{1}{2}I_{3}, \ldots, \frac{1}{p}I_{3}, W_{0}} \right) \). In the case of m = 2 and \( d_{t} \) = [1 t] (unrestricted constant and trend) we take \( W_{0} = 100 I_{2} \).

4 Competing models

4.1 Type A models: standard VAR and VEC specifications

Since we work with annual data, we only consider VAR(p) models with \( p \in \left\{{1, 2, 3} \right\} \) and use the parameterization (2a, b), which enables us to impose rank restrictions on the (3 × 3) long-run multiplier matrix Π. Assuming that r = rank (Π) is a particular number from the set \( \left\{{0, 1, 2, 3} \right\} \), we generate four (very different) cases of VAR(p).

In the case of r = 0 (VAR(p– 1) for \( {\Delta}x_{t} \), Π = 0) or r = 3 (VAR(p) for xt, unrestricted non-singular Π) the sequential Bayesian cut is preserved when we work with appropriate sufficient parameterizations of the marginal model for inputs and the conditional model for output given current inputs, like in (5) and (6). Basic results can be obtained analytically, and the marginal data density factorizes as MDD = C1 C2.

The cases of r = 1 and r = 2 amount to one or two co-integration relations with no restrictions other than the basic identifying ones. Such specifications assume that the same parameters (e.g. co-integrating vectors) appear in all three equations and thus Bayesian cut is precluded. There is no MDD factorization, and numerical tools have to be used (see “Appendix” for details of Bayesian co-integration analysis adopted in this study).

All type A models belong to the VAR family; they differ in terms of p, r and the presence and character of the deterministic variables dt in (2a).

4.2 Type B models: based on production function

Type B models preserve (by their construction) the Bayesian sequential cut and thus MDD factorization. In fact, in all type B models, as the marginal model for current inputs, we take the first two equations (for \( x_{t}^{\left(1 \right)} \)) of this type A model (with r = 0 or r = 3) that leads to the highest value of C1, the first factor of MDD. Type B specifications differ in the form of the third equation, i.e. the conditional model for output given current inputs. The basic (and the simplest) one corresponds to zero restrictions on the whole vector δ in (4) except for the intercept; that is, the third equation consists of the static Cobb–Douglas APF and nothing else. In other type B models the conditional part (for output given current inputs) is richer; its more general form can be written as

where \( v_{t} \sim iiN\left({0, \omega} \right) \) and \( z_{t} \equiv 0 \) or \( z_{t} \sim iiExp\left(\lambda \right) \); if they appear, the exponential terms zt (with mean and standard deviation \( \lambda \)) are independent of symmetric errors \( v_{t} \) and represent period t technical inefficiency. The parameters \( \beta = \left[{\beta_{1} \beta_{2}} \right]^{\prime} \), \( \delta_{0} \), \( \delta_{1} \) and \( \omega \) appear also in (4), the conditional part of type A models with r = 0 or r = 3, so we keep for (\( \beta_{1} \), \( \beta_{2} \), \( \delta_{0} \), \( \delta_{1} \), \( \omega \)) the same conjugate prior distribution as in (4).

The translog parameters (\( \gamma_{1} \), \( \gamma_{2} \), \( \gamma_{3} \)) are a priori normally distributed (given \( \omega \) and the remaining parameters) with zero mean and covariance matrix \( 60^{- 1} \omega I_{3} \); higher variances have also been tried in the empirical part, always leading to worse Bayesian models in terms of MDD values. If \( z_{t} = 0 \) for all t, (7) can be treated as the classical normal linear regression model with a conjugate prior; C2 and basic posterior results can be obtained analytically.

In cases where exponential inefficiency terms are introduced, we assume that \( \lambda \) and the vector of other parameters are independent and \( \lambda^{- 1} \) is a priori exponential with mean \( (- \ln r^{*})^{- 1} \), where \( r^{*} \in \left({0, 1} \right) \) is the prior median of technical efficiency (see van den Broeck et al. 1994). Posterior inference is based on Gibbs sampling, as described in Osiewalski and Steel (1998), where nT inefficiency terms are assumed conditionally independent not only over n units, but also over T periods; this is directly useful now, as in the present work we have n = 1 (each economy is treated separately.) Evaluating C2 in models with inefficiency is a non-trivial numerical task; we use a very quick and efficient method, the modified arithmetic mean estimator proposed in Pajor (2017).

Although the conditional part of any type B model is static (except for a possible deterministic trend), the marginal part is always dynamic. Thus, the first two equations (taken from some VAR(p) model) describe the dynamics of input aggregates (conditionally not only on their own past, but also on the past of the aggregated output) and the third equation postulates a strong (almost deterministic) relation between the aggregates of output and current inputs.

Note that sampling models that can be obtained either by certain restrictions in class A or, equivalently, by appropriate restrictions in class B are particularly important for fair comparison of these two classes. Keeping, for the parameters of such a common specification, the same marginal distribution in B as in A makes prior elicitation in class B easier and enables making Bayesian model comparison as coherent as possible. The full-efficiency (\( z_{t} \equiv 0 \)) Cobb–Douglas production function-type relations with and without linear trend are two obvious special cases that can be obtained from either (4) or (7); thus, they belong to both classes A and B.

5 The data and results of initial model comparison

We use annual data prepared for international comparisons and published in Penn World Tables, version 8.0; \( K_{t} \) is capital stock (with PWT 8.0 code rkna), \( L_{t} \) denotes total hours worked by engaged persons (given as emp*avh), and \( Q_{t} \) is real GDP (rgdpna). Four countries are considered. For the USA and UK, the observations since 1979 till 2011 are used, but the first three data points serve as initial conditions; thus, we model T = 30 tri-variate observations from the period 1982–2011. In the case of Poland, we model only T = 20 observations, covering the period 1992–2011, in order to avoid modelling pre-transformation data; the data from 1989 to 1991 are used as initial conditions. In the case of Hungary, which was a more developed economy under the communist regime, we use the data from the period 1980–2011 and focus on modelling T = 29 observations from 1983 to 2011. Main interest is in the results for the USA, the world leader, and Poland, an example of successful economic transformation. The results for the UK and Hungary serve as a sensitivity check—whether similar findings can be obtained for different economies.

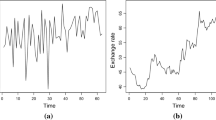

The data are presented in Fig. 1. Due to scale differences between capital and product variables (\( k_{t} = \ln K_{t} \), \( q_{t} = \ln Q_{t} \)), relatively smooth upward trends constitute a dominating picture, with fluctuations and short-term deviations being much less visible. Labour (\( l_{t} = \ln L_{t} \)) has its own scale on the right-hand side in each chart, so its fluctuations are more visible. However, the stochastic and dynamic character of all three aggregates is obvious when we look at the results of model comparison.

The natural Bayesian characteristic of how well a model fits the data is \( p\left({x_{1}, \ldots,x_{T} {|}\psi_{0}} \right) \), the marginal data density (MDD) value for the observations at hand. MDD values constitute the main ingredient to the formal Bayesian procedure of model comparison; see, for example, Osiewalski and Steel (1993). Using MDD values, each reader can use his or her own prior distribution on the model space and then easily calculate the resulting posterior model probabilities. In order to avoid selecting prior model probabilities, we are not going to report posterior probabilities of competing specifications. Tables 1 and 2 contain the decimal logarithms of MDD values for type A and B models, respectively. Of course, these values are based on particular prior distributions on model parameters that we have proposed.

The MDD values, presented in Table 1 for different VAR(p) models (p = 1,2,3), indicate that simple specifications with non-stationarity, namely VAR(0), VAR(1) and VAR(2) without constants for the tri-variate differenced series \( {\Delta}x_{t} \), are the best ones. The second best are VAR(1), VAR(2) and VAR(3) models for levels, with one co-integration relation and a constant restricted to this relation (or even without any constant). Thus, Bayesian co-integration analysis leads to the conclusion that the annual data on aggregate inputs and output are best described by three stochastic trends (in particular, just by a tri-variate random walk), with some possibility of only two stochastic trends and a long-run relation linking all three variables. However, three stochastic trends—precluding any long-run relation—are much more likely. Under equal prior probabilities for all models, specifications based on first differences are more than 10 times more probable a posteriori than co-integrated VAR models for levels. Also, these co-integration relations do not show any features of a regular production function. All other specifications appearing in Table 1 can be omitted in further considerations as they are unlikely in view of the data. The results presented above are strikingly similar for all four economies, and, in view of the relatively short time span of the data, they are strong even for the Polish economy, represented by 20 data points only. Table 1 is based on a very diffuse prior for the linear trend parameters, as we use \( W_{0} = 100 I_{2} \) in the prior covariance matrix for the coefficients corresponding to \( d_{t} \) = [1 t]. It is not surprising that the models with time variable t are less likely—in particular, models with an unrestricted trend become worse as the rank of \( {\varPi} \) increases; the worst models appear at the bottom of Table 1. Using much more precise prior for the deterministic components, with 1 and 0.01 as the diagonal elements of \( W_{0} \), leads to the same general (qualitative) conclusions, but makes models with deterministic terms slightly more likely a posteriori. In our final results in the next section we take advantage of this increased role of the constant term under the more precise prior.

The MDD values for the APF (or type B) models, presented in Table 2, are based on our initial assumption that the joint tri-variate type B model always uses the first two equations from one of the best VAR(p) models. So, we have taken these equations of the VAR(1) specification for \( {\Delta}x_{t} \) (without constants) as the marginal sampling model for inputs, and we have assumed different specifications of the general form (7) as conditional models for output given current inputs. All our type B models preserve a Bayesian sequential cut by construction, so MDD = C1C2—where, for example, log(C1) = 22.64 for the USA. However, the values of log(C2) are so small for most type B models that we cannot treat them as competitors to the best type A models. The basic, static, full-efficiency Cobb–Douglas APF model is completely unlikely in view of the data. Extending it to the translog specification also does not seem to provide any substantial difference (with some exception to Hungary) and adding exponential inefficiency terms even worsens the initial situation, since such extension is clearly not supported by the data. The only model extension that is worth noting amounts to adding linear trend to the basic APF (again, with the exception of Hungary), but such primitive dynamics cannot compete with the best co-integrated VAR models. We have also considered—among many other type B specifications, not mentioned in Table 2—the Cobb–Douglas APF with time-varying parameters, proposed in Koop et al. (2000). However, describing Cobb–Douglas elasticities by a certain bivariate AR(1) process has appeared to be a worse modelling strategy than just adding a linear trend.

6 A synthesis and final results

Our empirical results presented in the previous section suggest that a chance to obtain a good type B specification, hopefully competing with VAR (i.e. type A) models, is in richer dynamics in the APF equation. In order to achieve this goal, we extend a simple Cobb–Douglas model (with or without trend) in an old-fashioned manner, by adding an autocorrelation term. Hence, let us consider the following specification:

It can be treated as a member of class B, if we appropriately extend (7) to cover autocorrelation. On the other hand, (8) results from (4) if (taking \( \delta_{1} = \rho \)) we impose the restrictions: \( \delta_{2} = - \delta_{1} \beta_{1} \), \( \delta_{3} = - \delta_{1} \beta_{1} \) and \( \delta_{i} = 0 \) for \( i = 4, \ldots, 3p \). In fact, (8) can be obtained as the conditional part in the co-integrated VAR(1) model with one co-integrating relation, \( \left[{- \beta_{1} - \beta_{2} 1} \right]^{\prime} \) as the normalized co-integrating vector and weakly exogenous inputs:

The co-integrated VAR(1) model in (9) has a very interesting interpretation. In (9) it is assumed that

- (1)

All three variables are conditionally correlated (given their past), as it is in any type A model where matrix Σ has no zeros off the diagonal;

- (2)

The inputs formation is described by two stochastic trends;

- (3)

Both inputs are weakly exogenous for inference on the parameters in (8);

- (4)

The level of output, given current levels of inputs and the past of all variables, is determined by the Cobb–Douglas-type relation, both through its “immediate” impact and through deviations from it, which occurred in the previous period;

- (5)

The “immediate” Cobb–Douglas-type relation is a co-integrating relation as well.

Thus, the joint model (9), with its conditional part (8), reconciles the traditional Cobb–Douglas APF (“immediate” and static) with the concept of co-integration, fundamental in modern econometrics. Note that this model significantly differs from standard type A models with r = 1 (see Sect. 4). Firstly, it imposes weak exogeneity of inputs. Secondly, the normalized co-integrating vector \( \left[{- a_{1} - a_{2} 1} \right]^{\prime} \) reflects the conditional (contemporaneous) correlation structure between output and inputs, as \( a_{1} = \beta_{1} \), \( a_{2} = \beta_{2} \) and \( \beta = {\varSigma}_{11}^{- 1} {\varSigma}_{12} \) (or the other way round: conditional correlation reflects the co-integration relation).

The joint specification (9) is of particular interest, but it may occur too parsimonious to win the competition with richer structures. However, it can be easily generalized, keeping all its crucial properties. As in the case of any type B model, the first two equations of (9)—i.e. the marginal model for inputs—can be replaced by two equations with the highest value of C1 in class A. As the VAR(2) model with Π = 0 (assuming three stochastic trends) is one of the three best specifications of type A, it is crucial to consider its close VAR(2) alternative with only two stochastic trends and the “immediate” Cobb–Douglas-type relation as the co-integration relation:

The first two equations in (10) give the assumed value of C1, the first component of MDD, if both their intercepts are zero. The conditional model for output given current inputs can be written in the following form, with the Bayesian sequential cut being preserved:

vt is a Gaussian white noise (with variance ω); given ω, the parameters \( \beta \), \( \tilde{\delta}^{*} \) and \( \tilde{\delta}_{0} \) are a priori independent, with conditional normal prior distributions presented in Sect. 2, just below formula (6). In particular, \( \tilde{\delta}^{*} \sim N\left({0, \frac{1}{2} \omega I_{3}} \right) \) and \( \tilde{\delta}_{0} \sim N\left({0, \omega w_{00}} \right) \). Note that the zero restriction on \( \tilde{\delta}^{*} \) leads to the simpler specification (8). In Table 3 we present the decimal logarithms of MDD values based on the assumed C1 and on C2 corresponding to different values of \( \rho \) in the conditional model in (11). Since no dynamic stability constraints have been imposed in type A models, we consequently do not restrict \( \rho \) to the unit interval and the highest MDD values can be obtained even for \( \rho \) slightly above 1. As they do not lead to interesting results, we do not focus on specifications with \( \rho \) free, but on models with fixed values of this parameter, namely \( \rho \) = 1 (i.e. VAR with three stochastic trends) and \( \rho \) just below 1 (i.e. VAR with two stochastic trends, “immediate” Cobb–Douglas relation as the co-integration relationship and a slow adjustment). Models with and without constant are compared; if \( \tilde{\delta}_{0} \) is present, then \( w_{00} = 1 \) is assumed in the prior distribution, because \( w_{00} = 100 \) led to lower value of C2. Our task is now to find close competitors to VAR specifications (without constants) for first differences of logs of the original data; \( w_{00} = 100 \) would preclude finding them, as it is clear from Table 1.

Under equal prior probabilities, three stochastic trends are more likely a posteriori than the co-integrating relation of the Cobb–Douglas form, but by less than one order of magnitude for the USA and the UK (and even less for Hungary and Poland). If an economist has strong prior beliefs that a stable, long-run APF is an adequate and useful concept for macroeconomic analyses, and the case of three stochastic trends has only small prior probability (say, less than 0.1), then—looking at our results of model comparison—such economist still chooses (11) with \( \rho \) just below 1 as a better model than the specification in terms of first differences alone. Now the situation is different than in the case of comparison between pure type A models (see Table 1), where we did not impose any extra restrictions on the specifications with one co-integration relation and they were more than one order of magnitude worse than the models assuming three stochastic trends. As we can see, the additional assumptions (weak exogeneity of inputs and equivalence between the co-integration relation and the “immediate” Cobb–Douglas relation, together with fixing \( \rho \) just below 1 and introducing the constant term of high prior precision), imposed jointly, are helpful to make the co-integration VAR model a competitor to the VAR model for first differences.

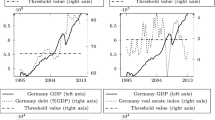

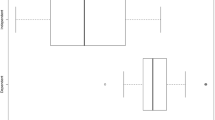

Finally, in Figs. 2 and 3 we present plots of the marginal prior and posterior densities of \( \beta_{1}, \beta_{2} \) and their sum; the posterior densities correspond to three values of \( \rho \): namely \( \rho \) = 1, \( \rho \) = 0.95 and \( \rho \) = 0.5. The value \( \rho \) = 0.99 (that yields a particularly good model) leads to almost the same posterior densities as \( \rho \) = 1, so there is no point in plotting them separately. Figure 2 corresponds to our original prior specification for the parameters of (11), and Fig. 3 is based on 100 times smaller prior precision for \( \beta_{1}, \beta_{2} \) and their sum.

Marginal posterior densities of \( \beta_{1}, \beta_{2} \) in (11) and their sum—informative priors; prior densities are plotted using dashed line; posterior densities are plotted using: dash-dot line for \( \rho = 1 \); solid line for \( \rho = 0.95 \); dotted line for \( \rho = 0.5 \); \( \beta_{1}, \beta_{2} \) and \( \beta_{1} + \beta_{2} \) are capital, labour and scale elasticities, respectively; model with \( \rho = 1 \) has the highest marginal data density (i.e. under equal prior odds it is the most likely of the three presented given the data); model with \( \rho = 0.5 \) has the lowest marginal data density

Marginal posterior densities of \( \beta_{1}, \beta_{2} \) in (11) and their sum—diffuse priors; prior densities are plotted using dashed line; posterior densities are plotted using: dash-dot line for \( \rho = 1 \); solid line for \( \rho = 0.95 \); dotted line for \( \rho = 0.5 \); \( \beta_{1}, \beta_{2} \) and \( \beta_{1} + \beta_{2} \) are capital, labour and scale elasticities, respectively; model with \( \rho = 1 \) has the highest MDD (i.e. under equal prior odds it is the most likely of the three presented, given the data); model with \( \rho = 0.5 \) has the lowest marginal data density

As our model specification gets better (i.e. the closer we are to the case \( \rho \) = 1, which amounts to three stochastic trends and lack of any co-integration relation), the marginal posterior distributions of \( \beta_{1}, \beta_{2} \) and their sum become very similar to the marginal prior distributions. This empirical result, the same for all four different data sets, indicates that our inference—on the parameters that have clear interpretation within the APF context—is very fragile and based mainly on prior assumptions, if we restrict to relevant dynamic models. For other specifications, which we can treat as completely inadequate, our posterior inference seems to be driven by the data. However, any conclusions based on a highly misspecified model can be misleading. It seems that, for our data covering the decades around the year 2000, the APF cannot be confirmed even as a purely empirical relation explained in Shaikh (1974). The dynamics of the output and inputs aggregates is best described by a tri-variate random walk.

7 Concluding remarks

The concept of modelling an economy-wide output via aggregate production function, APF, although fundamentally questionable and criticized, has been very important for both the theory of economic growth and empirical growth studies (e.g. growth accounting, development accounting). However, the studies have not focused on the empirical justification of APF within the modern dynamic econometrics. For this reason, we have used annual data on total production and two input aggregates in order to formally compare basic time-series and more traditional models. The crucial issue in modelling this tri-variate, non-stationary time series is to adequately capture its dynamics. We start within the VAR framework and stress that the “instantaneous” or “short-run” Cobb–Douglas-type relation appears through an obvious re-parameterization and representation of VAR in terms of the conditional and marginal models—for the output and inputs, respectively. The important empirical question is whether such APF-looking relation is also a co-integration relation (linking aggregate output with aggregate inputs) based on modern econometric techniques. If so, it could be treated as a valid tool to describe such links and interpreted at least as in Shaikh (1974).

For the purpose of formulation and comparison of our competing models we have devised a fully Bayesian modelling and inference framework. The framework is rooted in both a purely statistical, time-series analysis of the data and the macroeconomic theory of APF. This makes it particularly appealing to practitioners and statistical agencies who seek a well-founded framework for modelling the aggregate output. Furthermore, since Bayesian model comparison is sensitive to prior distributions, we have conducted our research carefully, keeping the same Bayesian Cobb–Douglas model in the two general classes (A and B); this model appears as a special case in class A, and it is the starting point for extensions in class B. In order to make the comparison fully operational, reduce the computational burden and avoid the possibility of critiques from the purely numerical perspective, we have restricted to the simplest prior distribution classes, like the natural conjugate one in the case of basic VAR models. Of course, other Bayesian models considered (co-integrated VAR models, stochastic frontier specifications) required much more effort, but they were built around the basic structures.

In fact, we have favoured a priori the regular, linearly homogenous Cobb–Douglas relation by using it to partially elicit the prior for the VAR covariance matrix. Despite the central role of the Cobb–Douglas case in our Bayesian modelling strategy, posterior results have not confirmed the empirical relevance of the APF-type relation in modelling yearly input and output data from individual economies. It is worth noting that we have also explored possible extensions to traditional Cobb–Douglas, translog in particular. However, an APF based on the translog specification has turned out to be less probable a posteriori.

Among all specifications under consideration, the best ones are VAR(0), VAR(1) and VAR(2) models for the logarithmic growth rates of output and inputs aggregates. Thus, our tri-variate series is (most likely) best described by three stochastic trends with only “instantaneous” Cobb–Douglas-type relation, reflecting possible conditional correlation between current output and inputs. The second best specifications are the specially constructed models with the error correction term in the output equation only. Therefore, less likely than the three stochastic trends, but still probable, are two stochastic trends, which are responsible for the dynamic formation of exogenous inputs, along with the co-integrating Cobb–Douglas-type relation, the same as the “instantaneous” one.

This main result of model comparison is not totally conclusive (in favour of three stochastic trends), which is rather obvious given the relatively short observation periods. However, the empirical role of APF-type relation is further diminished by poor identification of its crucial economic characteristics within the best specifications with co-integration; i.e. the better the model is, the less informative our posterior inference. Our results are strikingly similar for all four data sets—for the leading developed economies (the USA and the UK), as well as for the two transforming economies (Poland and Hungary)—which makes the findings more interesting.

An extension of this study could, perhaps, consider panel data or longer time frames. In particular, panel data with their cross section component can bring a new dimension of aggregate product’s variation which could be relevant in examining the APF. Nonetheless, the results presented in this study suggest that APF may be difficult to support empirically, at least if we look at it from dynamic econometrics perspective. This can be an additional argument in the debate summarized in Felipe and McCombie (2013).

References

Alichi A, Bizimana A, Laxton D et al (2017) Multivariate filter estimation of potential output for the United States. IMF Working Paper WP/17/106

Chikuse Y (1990) The Matrix Angular Central Gaussian distribution. J Multivar Anal 33:265–274

Doan T, Litterman RB, Sims CA (1984) Forecasting and conditional projection using realistic prior distributions. Econom Rev 3:1–100

Felipe J, McCombie JSL (2013) The aggregate production function and the measurement of technical change. Edward Elgar, Cheltenham

Fisher FM (1969) The existence of aggregate production functions. Econometrica 37:553–577

Florens J-P, Mouchart M (1985) Conditioning in dynamic models. J Time Ser Anal 6:15–34

Growiec J (2008) A new class of production functions and an argument against purely labor-augmenting technical change. Int J Econ Theory 4:483–502

Growiec J (2013) A microfoundation for normalized CES production functions with factor-augmenting technical change. J Econ Dyn Control 37:2336–2350

Jones CI (2005) The shape of production functions and the direction of technical change. Q J Econ 120:517–549

Koop G, Ley E, Osiewalski J, Steel MFJ (1997) Bayesian analysis of long memory and persistence using ARFIMA models. J Econom 76:149–169

Koop G, Osiewalski J, Steel MFJ (1999) The components of output growth: a stochastic frontier analysis. Oxf Bull Econ Stat 61:455–487

Koop G, Osiewalski J, Steel MFJ (2000) Modelling the sources of output growth in a panel of countries. J Bus Econ Stat 18:284–299

Koop G, Strachan R, Dijk H, Villani M (2004) Bayesian approaches to cointegration. In: Mills TC, Patterson K (eds) The Palgrave handbook of econometrics, vol 1. Econometric theory. Palgrave-Macmillan, Basingstoke, pp 871–898

Koop G, León-González R, Strachan R (2010) Efficient posterior simulation for cointegrated models with priors on the cointegration space. Econom Rev 29:224–242

Litterman RB (1986) Forecasting with Bayesian vector autoregressions—five years of experience. J Bus Econ Stat 4:25–38

Makieła K (2014) Bayesian stochastic frontier analysis of economic growth and productivity change in the EU, USA, Japan and Switzerland. Cent Eur J Econ Model Econom 6:193–216

Osiewalski J, Steel MFJ (1993) Una perspectiva bayesiana en selección de modelos. Cuadernos Economicos 55/3:327–351 (original English version available at: http://www.cyfronet.krakow.pl/~eeosiewa/pubo.htm)

Osiewalski J, Steel MFJ (1996) A Bayesian analysis of exogeneity in models pooling time series and cross sectional data. J Stat Plan Infer 50:187–206

Osiewalski J, Steel MFJ (1998) Numerical tools for the Bayesian analysis of stochastic frontier models. J Prod Anal 10:103–117

Pajor A (2017) Estimating the marginal likelihood using the arithmetic mean identity. Bayesian Anal 12:261–287

Shaikh A (1974) Laws of production and laws of algebra: the humbug production function. Rev Econ Stat 56:115–120

Turner D, Cavalleri M, Guillemette Y et al (2016) An investigation into improving the real-time reliability of OECD output gap estimates. OECD Working Paper ECO/WKP(2016)18

van den Broeck J, Koop G, Osiewalski J, Steel MFJ (1994) Stochastic frontier models: a Bayesian perspective. J Econom 61:273–303

Wróblewska J (2009) Bayesian model selection in the analysis of cointegration. Cent Eur J Econ Model Econom 1:57–69

Acknowledgements

This work was supported by research funds granted to the Faculty of Management at Cracow University of Economics, within the framework of the subsidy for the maintenance of research potential.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Data and computer code availability

The data used in the study can be downloaded from Penn World Tables. Information about exact variables and database version used is provided in Sect. 5. Computer code has been written in MATLAB and GAUSS and can be made available upon request.

Appendix: Bayesian co-integration analysis

Appendix: Bayesian co-integration analysis

In order to introduce prior distributions employed in the analysis of VEC models, we rewrite representation (2b) \( (x_{t} = x_{t - 1} + \tilde{z}_{t} \tilde{\varPhi} + \varepsilon_{t}) \) as

where \( \varGamma = \left[{\varGamma_{1}^{\prime}, \varGamma_{2}^{\prime}, \ldots \varGamma_{p - 1}^{\prime}, \varGamma_{0}^{\prime}} \right]^{\prime} \) and \( \tilde{z}_{t}^{2} = \left[{{\Delta}x_{t - 1},{\Delta}x_{t - 2}, \ldots {\Delta}x_{{t - \left({p - 1} \right) }}, d_{t}} \right] \). In the case of co-integration, the matrix Π is of reduced rank (r(Π) = r, r = 1, 2) and can be decomposed as the product of two full column rank matrices. Following the idea of Koop et al. (2010) to perform the analysis, we consider two equivalent parameterizations of Π:

where β has orthonormal columns, whereas \( {\rm B}_{\varPi} \in {\mathbb{R}}^{{\left({3 + l} \right)r}} \) (l = 1 when a constant or a linear trend is added to the co-integration relation, l = 0 when there are not any deterministic components in the co-integration relations). As β (BΠ) and α (AΠ) may have different dimensions we start (as suggested by Koop, León-González and Strachan, 2010) with the ΑΠ, ΒΠ parameterization.

We have decided to assume that ΒΠ and ΓΑ are a priori independent and impose the following matrix normal distributions for the considered parameters:

where \( \tilde{W}_{A} = M\tilde{W}M^{\prime}, \) with \( M = \left[{\begin{array}{*{20}c} {M_{11}} & 0 \\ 0 & I \\ \end{array}} \right]\;M_{11} = \left[{\begin{array}{*{20}c} {I_{r}} & {0_{{r \times \left({3 - r} \right)}}} \\ \end{array}} \right]. \)

From \( vec\left({{\text{\rm B}}_{\varPi}} \right)\sim N\left({0, \frac{1}{3 + l}I_{{\left({3 + l} \right)r}}} \right) \) follows that the orientation of \( {\text{\rm B}}_{\varPi} \) (i.e. \( {\text{\rm B}}_{\varPi} \left({{\text{\rm B}}_{\varPi}^{\prime}{\text{\rm B}}_{\varPi}} \right)^{{- \frac{1}{2}}} \)) is uniformly distributed over the Stiefel manifold (see, for example, Chikuse 1990) and so is the space spanned by this matrix (which is the element of the Grassmann manifold). In other words, by such formulated prior distribution we impose uniform prior over the set of all possible co-integration spaces, we do not favour any direction. Keeping that in mind and knowing that the uniform distribution over the Stiefel manifold is right orthogonal invariant, we can, without loss of generality, assume that \( {\beta} = {\text{\rm B}}_{\varPi} \left({{\text{\rm B}}_{\varPi}^{\prime}{\text{\rm B}}_{\varPi}} \right)^{{- \frac{1}{2}}} {\text{\rm O}}_{r} \) and \( {\alpha} = {\text{\rm A}}_{\varPi} \left({{\text{\rm B}}_{\varPi}^{\prime}{\text{\rm B}}_{\varPi}} \right)^{{\frac{1}{2}}} {\text{\rm O}}_{r} \), where the \( r \times r \) matrix \( {\text{\rm O}}_{r} = diag\left({\pm 1} \right) \) is constructed in such a way that it controls the element of the first row of β to be positive, so it helps us to deal with the many-to-one relationship between the Stiefel and the Grassmann manifolds.

Summing up our prior assumptions we obtain the following joint prior density function:

where |C| denotes the determinant of C, tr(C)—the trace of C, c1, c2—the normalizing constants of the matrix-variate normal distribution, c3—the normalizing constant of the inverted Wishart distribution, and

Combining the joint prior with the likelihood density function leads us to the joint posterior:

where \( \tilde{c} = c\left({2\pi} \right)^{{- \frac{3T}{2}}} \) and \( E = \left({\begin{array}{*{20}c} {\varepsilon_{1}^{\prime}} & {\varepsilon_{2}^{\prime}} & {\begin{array}{*{20}c} \ldots & {\varepsilon_{T}^{\prime}} \\ \end{array}} \\ \end{array}} \right)^{\prime}. \) To obtain MDD we have to integrate out \( {\text{\rm B}}_{\varPi}, \)\( {\varGamma}_{\text{A}} \) and Σ from the likelihood according to the imposed priors. The parameters ΓΑ and Σ can be integrated out analytically, which leads to the following conditional (on ΒΠ) data density:

where \( Z_{{\text{\rm B\,}}} = \left({\begin{array}{*{20}c} {Z_{1} {\text{\rm B}}}{Z_{2}} \\ \end{array}} \right) \), \( R_{\rm A} = \left({Z_{0} - Z_{\rm B} \hat{\varGamma}_{\rm A}} \right)^{\prime} \left({Z_{0} - Z_{\rm B} \hat{\varGamma}_{\rm A}} \right), \)\( \hat{\varGamma}_{\rm A} = \left({Z_{\rm B}^{\prime} Z_{\rm B}} \right)^{- 1} Z_{\rm B}^{\prime} Z_{0} \), \( D_{\rm A} = \tilde{\varGamma}_{\rm A}^{\prime} \tilde{W}_{\rm A}^{- 1} \left({\tilde{W}_{\rm A}^{- 1} + Z_{\rm B}^{\prime} Z_{\rm B}} \right)^{- 1} Z_{\rm B}^{\prime} Z_{\rm B} \hat{\varGamma}_{\rm A}, \)\( Z_{0} = \left({\begin{array}{*{20}c} {{\Delta}x_{1}^{\prime}} & {{\Delta}x_{2}^{\prime}} & {\begin{array}{*{20}c} \ldots & {{\Delta}x_{T}^{\prime}} \\ \end{array}} \\ \end{array}} \right)^{\prime} \), \( Z_{1} = \left({\begin{array}{*{20}c} {x_{0}^{\prime}} & {x_{1}^{\prime}} & {\begin{array}{*{20}c} \ldots & {x_{T - 1}^{\prime}} \\ \end{array}} \\ \end{array}} \right)^{\prime} \), \( Z_{2} = \left({\begin{array}{*{20}c} {\tilde{z}_{1}^{{2{\prime}}}} & {\tilde{z}_{2}^{{2{\prime}}}} & {\begin{array}{*{20}c} \ldots & {\tilde{z}_{T}^{{2{\prime}}}} \\ \end{array}} \\ \end{array}} \right)^{\prime} \). To integrate out \( {\text{\rm B}}_{\varPi} \) we have to use Monte Carlo methods, mainly the arithmetic mean estimator.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Osiewalski, J., Wróblewska, J. & Makieła, K. Bayesian comparison of production function-based and time-series GDP models. Empir Econ 58, 1355–1380 (2020). https://doi.org/10.1007/s00181-018-1575-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00181-018-1575-8

Keywords

- Bayesian inference

- VAR models

- Economic growth models

- Co-integration analysis

- Aggregate production function

- Potential output