Abstract

In this paper two procedures are developed for the identification of the parameters contained in an orthotropic elastic-plastic-hardening model for free standing foils, particularly of paper and paperboard. The experimental data considered are provided by cruciform tests and digital image correlation. A simplified version of the constitutive model proposed by Xia et al. (Int J Solids Struct 39:4053–4071, 2002) is adopted. The inverse analysis is comparatively performed by the following alternative computational methodologies: (a) mathematical programming by a trust-region algorithm; (b) proper orthogonal decomposition and artificial neural network. The second procedure rests on preparatory once-for-all computations and turns out to be applicable economically and routinely in industrial environments.


1 Introduction

The industrial production of foils for various purposes (e.g., paper, cardboard, metal sheets, membranes) usually gives rise to anisotropy in mechanical properties. In many engineering situations such properties are substantially affected by the manufacturing process and are significant in practical applications; therefore, their realistic and accurate description by constitutive models for the structural analysis of final products is a recurrent practical problem.

Realism and accuracy of material models obviously require two interconnected but distinct stages in material and computational mechanics: selection of a suitable constitutive relationship; quantitative assessment of the parameters included in such relationship, namely “model calibration”. Both stages are based on experimental data but the latter at present often involves computer simulation of the tests and inverse analysis. Inverse analysis frequently turns out to represent a challenge in engineering practice since it may exhibit mathematical complexity (such as ill-posedness, non-convex minimization) and it can require a heavy computational burden particularly because many repeated test simulations are implied.

The purpose of this study is to contribute to overcoming the above difficulties by recourse to “ad hoc” methods employable in a specific industrial context. Reference is made to a material model, now fairly popular, devised for the orthotropic elastic-plastic behavior of paper and paperboard and endowed with a particularly large number of parameters to identify.

The main features of the experimental test primarily considered here for foil material characterization are briefly described in Section 2, namely: (A) cruciform specimens of paper free-foils with a central hole intended to increase the, here desirable, non-uniformity of the stress and strain fields generated by the loadings imposed at the arm ends; (B) “full field” measurements of in-plane displacements by Digital Image Correlation (DIC).

Both the above experimental techniques and the relevant instrumentation are frequently dealt with in the recent literature (see e.g. Chen et al. 2008 on cruciform tests and Hild and Roux 2006 on DIC). Test simulations are performed here by conventional Finite Element (FE) structural analyses, with quantitative features specified in Section 2, using the commercial code Abaqus.

Section 3 first summarizes the constitutive model for paper developed by Xia et al. (2002), at present employed in various industrial environments and implemented here in the Abaqus code by means of a user subroutine. The difficulty of calibrating such a model, which contains 27 parameters, has suggested considering a modified version, presented in Section 3, characterized by a simpler non-linear hardening law. The proposed inverse analysis procedure turns out to successfully identify the parameters which are “active” when the simplified model is adopted for the FE simulation of a perforated cruciform specimen under biaxial tension.

The investigations presented and discussed in the subsequent Sections concern “pseudo-experimental” inverse analyses, namely: reasonable values are attributed to the parameters, which represent the “targets” to be identified; the measurable quantities are computed by FE simulation of the test and employed as input of the inverse analysis; the resulting estimates are compared to the pre-assumed parameters as validation check of the identification procedure.

The parameter identification approach adopted herein is deterministic and non-sequential: i.e. central is the minimization with respect to the unknown parameters of a “discrepancy function”, which quantifies the difference between (pseudo-) experimental data and their counterparts computed, through the simulation, as functions of the sought parameters.

In Section 4, on the basis of full-field pseudo-experimental data achievable by DIC, the parameter identification is carried out by a “Trust Region Algorithm” (TRA), namely by minimizing the discrepancy function through a popular mathematical programming procedure of first-order (i.e. requiring first derivatives of the objective functions), see e.g. Conn et al. (2000).

The minimization of the discrepancy function by TRA turns out to imply quite remarkable computational efforts. In order to avoid or mitigate such circumstance, an alternative inverse analysis procedure is developed and validated in Section 5, according to the methodology called Proper Orthogonal Decomposition (POD). Such methodology rests on a suitable approximation (“truncation”) related to the reasonably expected correlation of the specimen responses (“snapshots”) to numerical tests carried out with different sets of constitutive parameter values; each set represents a point of a suitably pre-established “feasible domain” (or “search domain”) in the space of the sought parameters. The POD methodology has remote origins in applied mathematics; sources employed for the present applications have been primarily the references Chatterjee (2000), Liang et al. (2002).

In Section 5 a preliminary computational effort consisting of POD is employed to generate suitable input to a suitably optimized Artificial Neural Network (ANN), which is “trained” and tested in order to make it a tool (implemented in software for a small computer) apt for economical and fast identification of the sought parameters, see e.g. Fedele et al. (2005), Maier et al. (2010), Aguir et al. (2011). The numerical exercises carried out show the potential of this procedure which, in industrial environments, can make parameter estimation for the simplified anisotropic model formulated in Section 3 relatively inexpensive and routine.

Section 6 is devoted to closing remarks and to prospects of future research.

2 Cruciform tests, full-field DIC measurements and computer simulations

2.1 Preliminary remarks

Inverse analysis plays a central role in the present study and is consistent with the following options in experimental methodology: cruciform test and digital image correlation. Traditionally, cruciform tests on thin foils or laminates have been employed with the aim of generating a uniform stress (strain) field in the central part of the specimen, where strains are often measured by needle extensometers; to meet such a requirement, special provisions like longitudinal slits in the specimen arms have been adopted, see e.g. Chen et al. (2008). The recent developments in digital image correlation techniques for measuring “full fields” of displacements and strains, combined with inverse analysis methodologies, make it possible and fruitful to calibrate material models of free-foils on the basis of experiments which generate inhomogeneous response fields, see e.g. Cooreman et al. (2008).

An inhomogeneous field of displacements and strains in the response of specimen to test, if measurable with generation of a broad set of experimental data, turns out to be representative of the effects related to diverse parameters. Therefore cruciform tests (CT) which induce inhomogeneous fields are at present frequently adopted for free-foils mechanical characterization studies, see e.g. Lecompte et al. (2007). In order to increase the inhomogeneities of the test response field, a circular hole is considered here in the cruciform specimen.

Digital Image Correlation (DIC), based on comparison of digitalized photos (taken before and after the test considered), can measure accurately surface displacements of many preselected “nodes” of a grid on the specimen surface (“full-field” measurement), see e.g. Sutton et al. (2009). The possible ill-posedness of inverse problems is generally mitigated or eliminated by the growth in number of diverse experimental data.

For the simulations of the tests traditional plane-stress finite element (FE) modeling is employed herein by means of a commercial computer code. Later in this paper focus will be on novel procedures intended to reduce computing time and costs of multiple repeated FE simulations generally implied by inverse analyses.

Some features of the above outlined operative issues are specified here below with reference to paper and to its properties related to materials employed by a large industry (specifically TetraPak Company).

2.2 On the experimental equipment and procedure

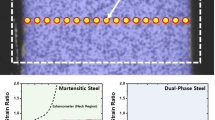

Figure 1 shows the shape of a cruciform specimen with a central hole and the area where the in-plane displacement field is monitored by DIC. A typical machine for biaxial tests appears in Fig. 2.

The use of full-field measurement methods for the characterization of anisotropic materials is a topic which has been, and still is, intensively studied, see e.g. Lecompte et al. (2007), Perie et al. (2009). Numerous DIC systems are at present available on the market and have been used in various technological domains. The remarks which follow briefly outline the main features of the DIC procedure selected to the present purposes. Methodological details are described e.g. in Hild and Roux (2006), Sutton et al. (2009).

Photographs, taken with a CCD camera, concern the reference state and different deformed states of the observed specimen surface over an area visualized in Fig. 1 and called Region of Interest (ROI) in the pertinent jargon. Two images of the specimen at different states of deformation are compared by means of a “correlation window”, i.e. over an area called Zone of Interest (ZOI). The resulting displacement estimate, to be associated with the center point of each ZOI, is an average of the displacements of the pixels inside the ZOI. In the present study the ROI size (shown in Fig. 1) is assumed to amount to 100 × 100 mm.

The specimen surface must exhibit a random speckle pattern, so that the gray-value distribution over each ZOI in the images allows that ZOI to be recognized after deformation. Speckle patterns can be generated by lightly spraying paint.

One of the advantages of the DIC method is that the selection of the measurement points is flexible, since it can be carried out after the experiment. The accuracy of displacement measurement depends primarily on the resolution of the camera, on the quality of the speckle pattern, and on surface conditions during deformation. It can be assumed equal to 1/100,000 of the ROI typical length (“field of view”), i.e. 1 μm for the 100 mm ROI considered here, according to specifications for DIC systems now available on the market (e.g. http://www.dantecdynamics.com). Since measurements are herein not truly experimental but results of FE simulations, for the numerical validation (Sections 4 and 5) the “pseudo-experimental” computed displacements will be first “noised” by random perturbation addends according to a constant probability density distribution over the interval between −0.5 μm and +0.5 μm; then they will be rounded off to the nearest integer value in μm, according to a reasonably expected accuracy of the foreseen DIC instrument.
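The noising and rounding step just described can be sketched in a few lines. This is a minimal illustration, assuming displacements are stored in micrometres; the function name `add_dic_noise` and the sample values are hypothetical and not part of the original study.

```python
import random

def add_dic_noise(displacements_um, half_width_um=0.5, rng=None):
    """Perturb displacements (in micrometres) with uniform noise, then round to 1 um."""
    rng = rng or random.Random()
    noisy = []
    for u in displacements_um:
        # Uniform (constant probability density) perturbation in [-0.5, +0.5] um
        perturbed = u + rng.uniform(-half_width_um, half_width_um)
        # Round to the nearest integer micrometre (assumed instrument resolution)
        noisy.append(float(round(perturbed)))
    return noisy

rng = random.Random(0)                 # fixed seed, only for repeatability
clean = [12.3, -4.7, 250.0, 0.2]       # hypothetical computed displacements, in um
noisy = add_dic_noise(clean, rng=rng)
```

With this construction each pseudo-experimental value differs from the computed one by at most 1 μm (0.5 μm from the noise plus 0.5 μm from the rounding).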

The cruciform specimen considered herein is shown in Fig. 3 together with the chosen grid and its 241 nodes for DIC measurements of displacements. Each arm exhibits 60 mm width and length, not including the clamping zones. The hole perforated in the center has a 30 mm diameter. The principal material axes, “machine direction” MD and “cross direction” CD, are aligned with the arms of the cruciform specimen. In the numerical model the double symmetry of the system is exploited so that only one quarter of the specimen is analyzed. In a truly experimental procedure the measurements obtained by DIC in the four quadrants of the ROI would give rise to differences among displacements of symmetrically located points which could be employed to assess the uniformity of properties in the foil and the accuracy of the measurements. “Pseudo-experimental” reference displacements and their counterparts computed as functions of the sought parameters, are compared at nodes of the “a priori” selected grid over the monitored central area shown in Fig. 3.

2.3 On test simulations

Figure 4 shows the finite element (FE) mesh adopted for the simulation of cruciform tests by exploiting the double symmetry: it involves 5226 degrees-of-freedom (dofs) in the specimen plane. The commercial computer code employed for all the numerical exercises in this study is Abaqus, Release 6.9 (Dassault Systèmes, 2009). The “small deformation” hypothesis is adopted for the present developments.

Of course, the adequacy and accuracy of FE analysis depend on various factors, including modeling assumptions, mesh discretization, time integration, etc. Therefore an error assessment of the FE analysis procedure is advisable in view of routinely repeated applications of the present method in industry. Such a study could include, but should not be limited to, a mesh refinement study. In order to guide the FE model selection for the present purposes, the mesh sensitivity of the solution has been preliminarily assessed. The relevant computational exercises have concerned an elastic isotropic cruciform specimen with the geometry of Fig. 3 and with material parameters E = 8000 MPa, ν = 0.30, under imposed clamp displacements applied in both directions and equal to 1.8 mm.

The present validation exercises employ plane-stress quadrilateral and triangular elements implemented in the Abaqus code. The simulations performed with this FE code and adaptive mesh refinement led to the indicative values, gathered in Table 1, of the “element energy density error indicator” according to the criteria presented in Zienkiewicz and Zhu (1987). The error indicator and the adaptive remeshing function can support automatic mesh refinement.

The results in Table 1 quantify the mesh sensitivity in terms of “error indicators” with four models (coarse, medium, fine and very fine mesh). For the purposes pursued here, two different FE models are chosen: (i) a model with a fine mesh (5226 degrees of freedom, see Fig. 4) used only to generate the “pseudo-experimental” data; (ii) a model with a medium mesh (fewer dofs in order to reduce the computational time) employed for the “snapshots” generation in the POD procedure described in Section 5.2. The use of different FE meshes in the forward and inverse problems avoids the so-called “inverse crime”, see e.g. Kim et al. (2004), and more generally represents a basic check of the procedure robustness.

3 Orthotropic elastic-plastic constitutive model for paper

3.1 Preliminary remarks

The mechanical behavior of paper and paperboard is largely dependent on fiber properties and shapes, fiber density, properties of the inter-fiber bonds and on the production process as well. The fiber orientation, particularly dependent on that process, and the longitudinal properties of the fibers are important features contributing to the in-plane behavior of paper and to its anisotropy.

A simplifying but accurate treatment of the anisotropy of paper rests on the assumption of orthotropy. The three principal orthotropy directions attributed to machine-made paper coincide with the machine direction (MD), the cross direction (CD) and the thickness direction (ZD) as shown in Fig. 5a.

Fig. 5 (a) Principal directions in paper and (b) typical results of uniaxial tests (from Harrysson and Ristinmaa 2008)

Figure 5b visualizes typical behaviors of paperboard subjected to uniaxial tensile tests along three in-plane directions, namely in MD, in CD and in an intermediate (45°) direction. The figure evidences significant direction dependency of the response, nonlinearity of the stress-strain relations and smooth transition between elastic and elastic-plastic deformation stage. Unloading from the nonlinear part of the stress-strain curve would result in a permanent deformation without meaningful “damage” (i.e. the stiffness under unloading practically coincides with the original one governed by Young’s modulus). Interesting experimental results of biaxial tests on paper sheets together with their elastic-plastic interpretation are reported in Castro and Ostoja-Starzewski (2003).

In what follows the “small” strain (\(\varepsilon_{ij}\)) hypothesis is assumed and plane-stress states only are considered in the foil plane with reference axes \(x_1\) and \(x_2\) in directions MD and CD, respectively (Fig. 5a); namely, the out-of-plane stress components \(\left(\sigma_{33},\sigma_{13},\sigma_{23}\right)\) are assumed to vanish, consistently with the “free-foils” concept. Homogeneity is assumed at the macroscale, so that stresses and strains are constant along the foil thickness. Therefore, the elastic behavior is governed by the classical linear relationship:
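For reference, the plane-stress orthotropic compliance relation is commonly written as follows. The notational convention used here (\(\nu_{12}\) as the contraction in direction 2 under uniaxial stress in direction 1, and the engineering shear strain \(2\varepsilon_{12}\)) is an assumption, not taken from the source:

\[
\begin{Bmatrix}\varepsilon_{11}\\ \varepsilon_{22}\\ 2\varepsilon_{12}\end{Bmatrix}
=
\begin{bmatrix}
1/E_1 & -\nu_{21}/E_2 & 0\\
-\nu_{12}/E_1 & 1/E_2 & 0\\
0 & 0 & 1/G_{12}
\end{bmatrix}
\begin{Bmatrix}\sigma_{11}\\ \sigma_{22}\\ \sigma_{12}\end{Bmatrix},
\qquad
\frac{\nu_{12}}{E_1}=\frac{\nu_{21}}{E_2}.
\]

The second relation expresses the symmetry of the compliance matrix, which leaves four independent elastic constants.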

The independent material parameters are the two Young moduli \(E_1\) and \(E_2\), the shear modulus \(G_{12}\) and the Poisson ratio \(\nu_{12}\) (\(\nu_{21}\) being a consequence of the matrix symmetry), subject to the following constraints:

These inequalities are due to the prerequisite of positive definiteness of strain energy density and, hence, of the compliance matrix in (1), see Kaliszky (1989), Ting and Chen (2005). In the present parameter identification procedure (Sections 4 and 5), which will concern the three elastic moduli (with the Poisson ratio a priori assumed) the above constraints are “a priori” complied with by the pre-selection of the search domain.
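A check of this kind on a box-shaped search domain can be sketched as below. The inequalities coded here are the standard positive-definiteness conditions for a plane-stress orthotropic compliance matrix (positive moduli and \(\nu_{12}^2 < E_1/E_2\), under the convention that \(\nu_{12}\) is the contraction in CD due to stress in MD); they are assumed, not copied from the source, and the function names and numerical bounds are hypothetical.

```python
def elastic_parameters_admissible(E1, E2, G12, nu12):
    """Return True if the plane-stress compliance matrix is positive definite."""
    if E1 <= 0.0 or E2 <= 0.0 or G12 <= 0.0:
        return False
    # Determinant condition of the 2x2 normal-stress block of the compliance matrix
    return nu12 ** 2 < E1 / E2

def search_domain_admissible(E1_bounds, E2_bounds, G12_bounds, nu12):
    """Check the least favourable corner of a box search domain (nu12 fixed a priori)."""
    # E1/E2 is smallest for the lowest E1 and the highest E2
    return elastic_parameters_admissible(E1_bounds[0], E2_bounds[1], G12_bounds[0], nu12)

# Hypothetical bounds in MPa, for illustration only
domain_ok = search_domain_admissible((4000.0, 12000.0), (2000.0, 6000.0),
                                     (1000.0, 3000.0), nu12=0.3)
```

Checking only the least favourable corner suffices here because, with the Poisson ratio fixed, the determinant condition depends monotonically on each modulus.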

Inelastic strains are here assumed to be additional to the elastic ones (in view of the “small deformation” hypothesis) and time-independent (non-viscous), namely plastic only (\(\varepsilon_{ij}^{p}\)). Their (nonholonomic, history-dependent, irreversible) development along any stress history can be described by adopting one of the elasto-plastic models specifically conceived for paper materials available in the literature, see e.g. Xia et al. (2002), Makela and Ostlund (2003), Harrysson and Ristinmaa (2008).

3.2 Xia et al. model

The constitutive model considered in this study is the one proposed by Xia, Boyce and Parks in Xia et al. (2002) and will be referred to here by the acronym XBP. Stresses are assumed to develop within the plane \(x_1\), \(x_2\) (namely MD-CD, see Fig. 5). The yield surface in the three-dimensional space of \(\boldsymbol {\sigma}=\left[ \sigma_{11}, \sigma_{22}, \sigma_{12}\right]^T\) is constructed by a combination of six plane “sub-surfaces”, \(\boldsymbol {\rm N}_{\alpha}=\left[N_{11}, N_{22}, N_{12}\right]^T_{\alpha}\), \(\alpha = 1,\ldots,6\), being the unit vector orthogonal to the α-th subsurface, with double indices 11, 22 and 12 referring to the three axes in the stress space, for stress components \(\sigma_{11}\), \(\sigma_{22}\), \(\sigma_{12}\), respectively (see Fig. 6).

Specifically, the yield criterion is formulated (in matrix notation) as follows:

In (3) the variable \(\sigma_{\alpha}\), called the α-th “equivalent strength”, defines the distance of the α-th “subsurface” from the origin of the stress coordinate system; the functions \(\sigma_{\alpha}\left(\tilde{\varepsilon}^{p}\right)\) govern the material hardening; the scalar quantity \(\tilde{\varepsilon}^{p}\) means “equivalent plastic strain” defined as:

Parameter k is intended to smooth out the corners of the yield surface (Fig. 6) and is usually assumed “a priori” as an integer number between 1 and 3, larger than 2 in the original proposal of the model. Finally \(\chi_{\alpha}\) is a “switching control” coefficient, such that:

The plane “subsurfaces” for \(\alpha = 1,2,4,5\) are shown in Fig. 6; the other two subsurfaces (\(\alpha = 3,6\)) are parallel to the MD-CD plane, with \(\boldsymbol {\rm N}_3=\left[0,0,1\right]^T\) and \(\boldsymbol {\rm N}_6=\left[0,0,-1\right]^T\), and are equidistant from the origin. Moreover, in the model it is assumed that \(\boldsymbol {\rm N}_4=-\boldsymbol {\rm N}_1\) and \(\boldsymbol {\rm N}_5=-\boldsymbol {\rm N}_2\). The unit vectors \(\boldsymbol {\rm N}_1=\left[\cos\theta_1,\sin\theta_1,0\right]^T\) and \(\boldsymbol {\rm N}_2=\left[\cos\theta_2,\sin\theta_2,0\right]^T\), normal to the axis of shear stress \(\sigma_{12}\), are defined, in view of the associativity assumption, by the ratio between transversal and longitudinal incremental plastic strains under uniaxial loading in MD and CD, respectively. These ratios, equal to \(\tan\theta_1\) and \(\tan\theta_2\), will henceforth be denoted for simplicity by \(T_1\) and \(T_2\), respectively.
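How the six subsurfaces and the switching coefficients combine can be illustrated with a short sketch. The functional form coded below, \(f=\sum_{\alpha}\chi_{\alpha}\left(\boldsymbol{\sigma}^T\boldsymbol{\rm N}_{\alpha}/\sigma_{\alpha}\right)^{2k}-1\) with \(\chi_{\alpha}=1\) when \(\boldsymbol{\sigma}^T\boldsymbol{\rm N}_{\alpha}>0\) and 0 otherwise, is an assumption inferred from the description above and from the general structure of the Xia et al. (2002) model; the angles and strengths are hypothetical values chosen only for the example.

```python
import math

def build_normals(theta1, theta2):
    """Return the six unit normals N_1..N_6 in (sigma_11, sigma_22, sigma_12) space."""
    n1 = [math.cos(theta1), math.sin(theta1), 0.0]
    n2 = [math.cos(theta2), math.sin(theta2), 0.0]
    n3 = [0.0, 0.0, 1.0]
    negate = lambda v: [-c for c in v]
    # N_4 = -N_1, N_5 = -N_2, N_6 = -N_3
    return [n1, n2, n3, negate(n1), negate(n2), negate(n3)]

def yield_function(stress, normals, strengths, k=2):
    """Assumed form: sum of chi * (sigma.N / sigma_alpha)^(2k) over subsurfaces, minus 1."""
    total = 0.0
    for normal, strength in zip(normals, strengths):
        projection = sum(s * n for s, n in zip(stress, normal))
        chi = 1.0 if projection > 0.0 else 0.0   # switching control coefficient
        total += chi * (projection / strength) ** (2 * k)
    return total - 1.0

normals = build_normals(theta1=-0.2, theta2=1.7)    # hypothetical angles, in radians
strengths = [40.0, 25.0, 20.0, 35.0, 30.0, 20.0]    # hypothetical strengths, in MPa
f_origin = yield_function([0.0, 0.0, 0.0], normals, strengths)   # stress-free state
f_shear = yield_function([0.0, 0.0, 20.0], normals, strengths)   # pure shear at sigma_3
```

In the pure-shear case only subsurface 3 is switched on, so the stress state lies exactly on the yield surface when the shear stress equals the third equivalent strength.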

The evolution of the equivalent strengths \(\sigma_{\alpha}\) which control the hardening behavior is governed by the following functions:

for \(\alpha = 1,\ldots,5\) and with the assumption that the equivalent strengths \(\sigma_3\) and \(\sigma_6\) evolve in the same way during hardening (\(\sigma_3 = \sigma_6\)).

Associativity in the elasto-plastic models (see e.g. Kaliszky 1989, Lubliner 1990) is suggested by experiments on paper and paperboard; therefore the plastic flow rule reads:

where λ represents the plastic multiplier.

The gradient of the yield function defined by (3) can be given the expression:

It is worth noting that an essential role is played by the switching control coefficients \(\chi_{\alpha}\) (\(\alpha = 1,\ldots,6\)) through (3), (5) and (8).

As a conclusion of the preceding synthesis of the constitutive model proposed in Xia et al. (2002), the 27 parameters contained in it are gathered in Table 2. The unusually high number of parameters may give rise to an expected burden and to some difficulty related to their identification in industrial environments.

3.3 Simplified XBP model

A reduction of the number of parameters in the XBP model may be desirable in view of its calibration for practical industrial purposes. The simplification proposed herein concerns merely the material hardening description. The hardening functions contain 4 parameters for each “subsurface”, namely the equivalent strength \(\sigma_{\alpha}^{0}\) and three hardening parameters, \(A_{\alpha}\), \(B_{\alpha}\), \(C_{\alpha}\) (20 parameters in total for \(\alpha = 1,\ldots,5\)). The uniaxial behavior in the principal material directions of most paper products under increasing load is well described by the classical Ramberg–Osgood relation, see e.g. Makela and Ostlund (2003). Therefore power-law hardening turns out to be realistic; its adoption can reduce the total number of parameters to 17 (13 of them inelastic), with 2 hardening parameters for each “subsurface”: factor \(q_{\alpha}\) and exponent \(n_{\alpha}\), for \(\alpha = 1,\ldots,5\); namely:

where \(\varepsilon_0\) is a constant to be chosen once-for-all, here \(\varepsilon_0=10^{-6}\). Numerical exercises like those visualized in Fig. 7 show that the simplified model can still capture the main features of paper and paperboard.
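The reduction from four to two hardening parameters per subsurface can be illustrated by placing the two kinds of law side by side. Both functional forms below are assumptions: the four-parameter law \(\sigma_{\alpha}^{0}+A_{\alpha}\tanh(B_{\alpha}\tilde{\varepsilon}^{p})+C_{\alpha}\tilde{\varepsilon}^{p}\) is recalled from the general form used by Xia et al. (2002), and the power law \(q_{\alpha}(\varepsilon_0+\tilde{\varepsilon}^{p})^{1/n_{\alpha}}\) is only one plausible reading of “factor \(q_{\alpha}\) and exponent \(n_{\alpha}\)”. All numerical values are hypothetical.

```python
import math

def xbp_strength(eps_p, sigma0, A, B, C):
    """Four-parameter hardening law (assumed form of the original model)."""
    return sigma0 + A * math.tanh(B * eps_p) + C * eps_p

def power_law_strength(eps_p, q, n, eps0=1e-6):
    """Two-parameter power-law hardening (assumed form of the simplified model)."""
    return q * (eps0 + eps_p) ** (1.0 / n)

# Hypothetical parameter values, for illustration only
plastic_strains = [0.0, 0.001, 0.005, 0.01, 0.02]
original = [xbp_strength(e, sigma0=10.0, A=20.0, B=200.0, C=50.0) for e in plastic_strains]
simplified = [power_law_strength(e, q=100.0, n=3.0) for e in plastic_strains]
```

In the assumed power law the small constant \(\varepsilon_0\) keeps the initial equivalent strength strictly positive, so that no separate initial-strength parameter is needed.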

The full list of the parameters contained in the simplified model is shown in Table 3.

3.4 Sensitivity assessments

The design of the experiments to be combined with parameter identification procedures may be oriented and enhanced by preliminary sensitivity analyses. Such analyses are intended to quantify the influence of each sought parameter on measurable quantities and, hence, to corroborate the expectation of its identifiability by appropriate selection of measurements (see e.g. Kleiber et al. 1997). Usual sensitivity investigations are based on derivatives of measurable quantities with respect to the model parameters: higher normalized derivatives indicate more meaningful measurements.

In the present case of full-field displacement measurements by DIC, instead of each one of the measurable displacement components (say \(u_1\), \(u_2\), in the reference axes of Fig. 5a) at each grid node, the Euclidean norm of the vector which comprises all such displacement components is considered and its derivative is assessed with respect to each sought parameter, \(x_i\), \(i = 1,\ldots,P\). Such a norm-based approach is an indicative, not rigorous, sensitivity assessment, adopted here for comparisons, in view of the high number of measurable quantities. Let K be the number of stages at which measurements are performed and recorded along a single test. The norm of the experimental displacements at the k-th stage reads:

where N is the number of the grid nodes over the ROI for DIC measurements.

By adding the K norms of all measurable displacements, (10) for \(k = 1,\ldots,K\), for each parameter \(x_i\), with \(i = 1,\ldots,P\), the sensitivity \(\bar{u}_i^*\) with a sort of “global sense” is here assessed according to the following equations (which describe also normalization and approximation of derivatives by forward finite differences):

In (11) vector \(\hat{\mathbf{x}}\) denotes the point in the parameter space from which increment \(\Delta x_i\) of parameter \(x_i\) is considered; \(\mathbf{e}_i\) is the corresponding unit vector; \(\bar{u}_{k}\left( \hat{\mathbf{x}} \right)\) represents the norm, (10), computed by test simulation at load level k on the basis of parameters \( \hat{\mathbf{x}}\).
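The norm-based sensitivity just described can be sketched as follows. The normalization used (the relative change of the norm divided by the relative change of the parameter, summed over the K stages) is an assumption, since several equivalent scalings are possible; `simulate` stands for the FE test simulation and is replaced here by a hypothetical toy function.

```python
import math

def displacement_norm(u):
    """Euclidean norm of the vector collecting all displacement components."""
    return math.sqrt(sum(v * v for v in u))

def global_sensitivity(simulate, x_hat, i, rel_step=0.01):
    """Forward-difference sensitivity of the displacement norms to parameter i.

    simulate(x) must return a list of K displacement vectors, one per load stage.
    """
    dx = rel_step * x_hat[i]
    x_pert = list(x_hat)
    x_pert[i] += dx                      # x_hat + dx * e_i
    base = simulate(x_hat)
    pert = simulate(x_pert)
    total = 0.0
    for u0, u1 in zip(base, pert):
        n0, n1 = displacement_norm(u0), displacement_norm(u1)
        # Normalized forward difference (assumed normalization)
        total += abs(n1 - n0) / dx * x_hat[i] / n0
    return total

def toy_simulation(x, stages=3):
    """Hypothetical stand-in for the FE model: response depends on x[0] only."""
    return [[k * 100.0 / x[0], k * 50.0 / x[0]] for k in range(1, stages + 1)]

s0 = global_sensitivity(toy_simulation, [8000.0, 0.3], i=0)
s1 = global_sensitivity(toy_simulation, [8000.0, 0.3], i=1)
```

A parameter that does not influence the simulated response, like the second one in the toy model, yields zero sensitivity and could not be identified from these measurements.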

The norm \(\bar f_k\) of the vector listing the two reaction forces \(f_{(MD)}\) and \(f_{(CD)}\) at the clamps, as measured response to the displacements imposed at the k-th stage, is also considered and its sensitivity \(\bar{f}^{*}_i\) with respect to the i-th sought parameter is computed, again in a global sense (i.e. summing contributions relevant to all the K loading levels), by the following formula similar to (11):

In the numerical exercises carried out in this study, K = 10 loading stages are used for measurements, at equal increments of clamp displacements; N = 241 is the number of grid nodes (and FE mesh nodes) where displacement components are measured by DIC; additional experimental data concern the two reactive clamp forces (in the MD and CD directions) at the ends of the specimen arms. The material parameters amount to 17 (4 elastic and 13 inelastic) in the simplified XBP model and to 27 (4 elastic and 23 inelastic) in the original formulation of such model (see Tables 3 and 2, respectively). An increment of 1% in the argument has been adopted for derivative approximations in (11) and (12), namely it is assumed \(\Delta x_{i}=0.01\,\hat{x}_i\), (\(i = 1,\ldots,P\)).

Figure 8 shows the resulting sensitivity values, obtained by summing over the 10 loading stages. The plots in Figs. 9 and 10 visualize at each one of the 10 loading steps the sensitivities given by (11)–(12), this time with no sum over k. Such comparative numerical results further corroborate the simplification in the hardening description proposed herein.

Fig. 8 Sensitivity of measurable quantities norms with respect to the parameters according to formulas (11) and (12): (a) sensitivity of full-field displacements in biaxial tests simulated with original XBP model; (b) sensitivity of reactive forces in biaxial test simulated with original XBP model; (c) same as in (a) but with the present simplified XBP model; (d) same as in (b) but with the present simplified XBP model

4 Parameter identification by mathematical programming

A simple deterministic non-sequential (batch) least-squares method is adopted herein for the identification of material parameters, solved by a popular Trust Region Algorithm (TRA). Such a procedure for the minimization of the discrepancy function can be very accurate, even when noisy data are employed, but requires repetitive use of non-linear finite element analysis and therefore turns out to be computationally expensive. Of course, with reference to both the original and modified XBP model, the material parameters which describe compressive behavior cannot be identified by making recourse to cruciform tests in tension, as evidenced by the preceding sensitivity study.

The iterative first-order TR algorithm can be efficiently employed for large scale problems with “box-constraints” defined on the minimization variables. Each iteration step is formulated as a two-dimensional quadratic programming problem in the plane defined by the gradient of the objective function and by its Gauss–Newton direction. A quadratic approximation of the objective function is generated in each step by the Hessian matrix which is in turn approximated by means of the Jacobian, so that only first order derivatives are required of the functions relating measurable quantities to the unknown parameters. Details are available in an abundant literature, e.g. Conn et al. (2000).
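The first-order ingredients of such a step can be sketched for a small least-squares problem. Assuming an objective of the form \(\tfrac{1}{2}\mathbf{r}^T\mathbf{r}\) with residual vector \(\mathbf{r}\) and Jacobian \(\mathbf{J}\), the gradient is \(\mathbf{J}^T\mathbf{r}\) and the Hessian is approximated by \(\mathbf{J}^T\mathbf{J}\). The sketch below only computes the gradient and the Gauss–Newton direction, which span the two-dimensional subspace; the trust-region and box constraints themselves are not implemented, and the two-parameter linear residual is hypothetical.

```python
def gauss_newton_quantities(J, r):
    """Return gradient g = J^T r and Hessian approximation H = J^T J."""
    m, n = len(J), len(J[0])
    g = [sum(J[a][i] * r[a] for a in range(m)) for i in range(n)]
    H = [[sum(J[a][i] * J[a][j] for a in range(m)) for j in range(n)]
         for i in range(n)]
    return g, H

def solve_2x2(H, rhs):
    """Solve a 2x2 linear system by Cramer's rule (two-parameter illustration only)."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    return [(rhs[0] * H[1][1] - H[0][1] * rhs[1]) / det,
            (H[0][0] * rhs[1] - H[1][0] * rhs[0]) / det]

# Hypothetical linear residuals r(x) = J x - b, evaluated at the start point x = (0, 0)
J = [[1.0, 1.0], [1.0, -1.0], [2.0, 0.0]]
b = [3.0, 1.0, 4.0]
x0 = [0.0, 0.0]
r0 = [sum(Ja[i] * x0[i] for i in range(2)) - ba for Ja, ba in zip(J, b)]

g, H = gauss_newton_quantities(J, r0)
gn_direction = solve_2x2(H, [-gi for gi in g])   # solves H p = -g
steepest_descent = [-gi for gi in g]             # second vector spanning the subspace
```

For residuals that are linear in the parameters, as in this toy case, the full Gauss–Newton step reaches the minimizer in one iteration; in the nonlinear FE setting each Jacobian evaluation requires additional test simulations, which is the source of the computational cost.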

In the biaxial tensile tests considered in the present pseudo-experimental investigations, the cruciform specimen with a perforated hole in the center is loaded in both directions by imposing the same clamp displacements in directions MD and CD, in a sequence of K equal steps, run by index \(k = 1,\ldots,K\): here K = 10. At each k-th loading level, the in-plane displacements now denoted by \(u_{hk}^{m}\) (where \(h = 1,\ldots,2N\)) at N selected nodes of the DIC grid on the membrane surface are supposed to be measured (hence superscript m although their values are computed, i.e. “pseudo-experimental”). At the same time also the reaction forces, \(f_{k(MD)}^m\) and \(f_{k(CD)}^m\), in both loading directions are measured and recorded. The discrepancy between the measured displacements and reaction forces and the corresponding computed quantities, marked by superscript c, is quantified by the following “discrepancy function”, namely by the Euclidean norm of the “discrepancy vectors”:

where at each measurement stage k: \(u_{hk}^{c}\) , \(f_{k\left(MD\right)}^{c}\) and \(f_{k\left(CD\right)}^{c}\) are the values of calculated displacements and calculated reaction forces in MD and CD, respectively; vector \(\boldsymbol {\rm x}\) collects the unknown parameters to be identified through the discrepancy minimization process. The dependence of the computed quantities on the parameter vector x is implicitly defined by the constitutive relationships adopted in the FE simulation of the test; thus the objective function ω is non-explicitly defined in terms of x and is a possibly non-convex function of \(\boldsymbol {\rm x}\).
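A discrepancy function of this kind can be sketched as a sum of squared differences over all stages. The relative weighting of displacement and force terms is an assumption (the two kinds of data have different units, so some scaling is normally needed); the optional weights `w_u` and `w_f` and all sample values are hypothetical.

```python
def discrepancy(u_meas, u_comp, f_meas, f_comp, w_u=1.0, w_f=1.0):
    """Sum of squared differences between measured and computed quantities.

    u_*[k][h]: displacement component h (h = 1..2N) at stage k
    f_*[k]:    pair (f_MD, f_CD) of reaction forces at stage k
    """
    omega = 0.0
    for k in range(len(u_meas)):
        omega += w_u * sum((um - uc) ** 2 for um, uc in zip(u_meas[k], u_comp[k]))
        omega += w_f * sum((fm - fc) ** 2 for fm, fc in zip(f_meas[k], f_comp[k]))
    return omega

# Hypothetical data for a single stage with one node (two displacement components)
u_m, u_c = [[1.0, 2.0]], [[1.0, 1.0]]
f_m, f_c = [(10.0, 5.0)], [(9.0, 5.0)]
omega_value = discrepancy(u_m, u_c, f_m, f_c)
omega_zero = discrepancy(u_m, u_m, f_m, f_m)
```

In an actual identification run the computed quantities would come from an FE simulation for each trial parameter vector, so every evaluation of the function costs one nonlinear analysis.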

In practical applications, the solution procedure starts from suitably chosen initial estimates of the sought parameters, either previously assessed on bulk material or expected on the basis of handbooks, previous experience or expert’s judgment. The “exact” values of the sought parameters are “a priori” assumed for the validation of the proposed method and for the preliminary assessment of its potentialities and limitations. In the present numerical tests the inverse analyses are initialized by attributing to each parameter a value randomly chosen in the range ±80% (namely between 20% and 180%) of its “exact” value, i.e. possibly far away from it, in order to test the robustness of the algorithms.
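The random initialization described above can be sketched as follows, assuming a uniform distribution over the ±80% range (the text specifies the range but not the distribution); the function name and the target values are hypothetical.

```python
import random

def random_initial_guess(x_exact, spread=0.8, rng=None):
    """Draw each parameter uniformly between (1 - spread) and (1 + spread) times its target."""
    rng = rng or random.Random()
    return [x * (1.0 + rng.uniform(-spread, spread)) for x in x_exact]

rng = random.Random(42)                       # fixed seed, only for repeatability
targets = [8000.0, 4000.0, 2000.0, 50.0]      # hypothetical "exact" parameter values
initial = random_initial_guess(targets, rng=rng)
```

Repeating the inverse analysis from several such starting points gives a simple empirical check of whether the minimization depends on the initialization.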

To take into account the effect of uncertainties in DIC measurements, the pseudo-experimental data are corrupted by randomly generated noise and truncated to a suitable accuracy as specified in Section 2.2. Uncertainties affect both the measurements and the system modeling. In what follows, the effects of noisy input data on the estimates will be investigated only to the purpose of evaluating the robustness of the parameter calibration procedure. However, systematic modeling errors are ruled out for the present preliminary validation purposes.

The first exercise according to the above criteria and method concerns the identification of all “active” parameters (both the elastic and inelastic ones) in the two models, using noisy data generated in the fashion described in Section 2.2, i.e. with a random perturbation ranging over the interval ±0.5 μm.

Figure 11a shows the convergence of the identification procedure which takes place when the modified XBP model is used.

Fig. 11 Inverse analyses by a trust region algorithm (TRA) on the basis of a cruciform test: (a) identification of elastic and inelastic parameters in the simplified XBP model by DIC measurements with additional measurements on the reactive forces at the clamps; (b) failed identification of the elastic and inelastic parameters involved in the original XBP model

Figure 11b shows the lack of convergence which arises when the original XBP model is employed; similar results were obtained with different initializations (“noised” in the same way). Convergence took place only when the initial values of the sought parameters were chosen very close to the target values (e.g. in the range \(\boldsymbol {\rm x}\pm0.1\boldsymbol {\rm x}\), \(\boldsymbol {\rm x}\) being the target values). Otherwise the algorithm gets trapped in one of the local minima of the discrepancy function and produces poor estimates of the sought parameters.

In a second exercise the elastic parameters were considered as known and the identification procedure was carried out for the inelastic parameters only, using the same level of noise as in the previous exercise. The results are shown in Fig. 12.

Fig. 12 Inverse analysis by TRA, on the basis of a cruciform test, for the identification of inelastic parameters using both DIC measurements and measurements of reactive forces at the clamps: (a) successful identification of the 9 parameters involved in the simplified XBP model; (b) failed identification of the 15 parameters involved in the original XBP model

A third identification exercise was carried out concerning the whole set of (elastic and inelastic) parameters involved in the modified XBP model, using a much higher level of noise in order to assess the robustness of the procedure. Specifically, the computed measurable quantities were perturbed by noise ranging over the interval ±5 μm, again with a uniform probability distribution. Figure 13a shows that convergence still takes place.

Fig. 13 Inverse analyses by a trust region algorithm (TRA) on the basis of a cruciform test: (a) identification of elastic and inelastic parameters in the simplified XBP model by DIC measurements with additional measurements on the reactive forces at the clamps; (b) same as in (a) but without additional measurements on the reactive forces at the clamps

However, if the last identification exercise is carried out on the basis of DIC measurements only (i.e. values of reactive forces are not exploited as data) the function to be minimized, (13), reduces to the first summation and the identification procedure in terms of the sought parameters converges to values (see Fig. 13b) which are not as accurate as in the previous case.

The exercises just illustrated show that the simplified XBP model is better suited to parameter calibration than the original XBP model, and that the combined exploitation of DIC displacement measurements and reactive force measurements is beneficial in the proposed identification procedure.

Finally, since only normalized values of the estimated parameters are shown in Figs. 11–13, some absolute values of such parameters at convergence are listed in Tables 4 and 5 together with the corresponding target values.

5 Inverse analysis by proper orthogonal decomposition and artificial neural networks

5.1 On proper orthogonal decomposition to the present purposes

The identification procedure proposed in this Section is an alternative to the one presented in Section 4. Its main feature is to condense most of the computational burden into a preliminary phase which involves computations to be carried out once-for-all; the further calculations needed for parameter estimation can be performed by exploiting the “tool” generated in the preliminary phase.

In the present engineering context it is particularly desirable that the assessment of material parameters be carried out repeatedly and routinely, by using equipment (including small computers) apt to provide all the sought estimates in a fast manner. In most practical situations, including the real-life problems considered herein, the following circumstances characterize parameter identification: (a) in the space of the sought parameters, a finite region (“search domain”) can be specified “a priori” (sometimes by an “expert” in the field), at least through lower and upper bounds on each parameter, as feasible domain of search; (b) let the term “response vectors” denote different vectors of measurable quantities obtained as output of the same computational model by only varying the embedded material parameters: if such variations are within the above defined “search domain”, the corresponding “response vectors” turn out to be correlated, i.e. “almost parallel” in their space.

The correlation (b) among response vectors can be fruitfully exploited by making recourse to Proper Orthogonal Decomposition (POD). This procedure, of growing interest in mechanics, can be summarized as follows (for details see e.g. Chatterjee 2000, Ostrowski et al. 2005, 2008). Starting from, say, S points (“nodes”) in the pre-selected P-dimensional “search domain” in the space of the sought parameters, let experiment simulations lead to the S corresponding vectors \(\boldsymbol {\rm u}\) (“snapshots” in the POD jargon), each one collecting all the M measurable response quantities. As suggested by the correlation of the snapshots gathered in the M×S matrix \(\boldsymbol {\rm U}\), new Cartesian reference axes are determined such that, sequentially, a norm of the snapshot projections on each of them is made maximal. Thereafter a “truncation” is carried out, namely only the K axes with non-negligible components are preserved. Computationally, this procedure requires the calculation of eigenvalues and eigenvectors of the (symmetric, positive semidefinite) matrix \(\boldsymbol {\rm U}^{T}\boldsymbol {\rm U}=\boldsymbol {\rm D}\) of order S. After this preliminary computing effort, the “truncation”, based on a comparative assessment of the above eigenvalues, leads to an M×K matrix \(\bar{\boldsymbol {\Phi}}\) with K ≪ M, which represents a new “truncated” Cartesian reference. The snapshot “amplitudes” in the new reference are then easily computed and gathered in the K×S matrix \(\bar{\boldsymbol {\rm A}}=\left[\boldsymbol {\rm a}_1 \ldots \boldsymbol {\rm a}_S \right]\). After the above developments every snapshot \(\boldsymbol {\rm u}\) can be approximated as follows:

\[\boldsymbol {\rm u}_i \approx \bar{\boldsymbol {\Phi}}\,\boldsymbol {\rm a}_i \qquad (i = 1,\ldots,S) \qquad (14)\]

The above outlined POD approximation (in other terms “compression”) of the information contents of the snapshot matrix \(\boldsymbol {\rm U}\), generated once-for-all at the initial phase, is accomplished, again once-for-all, by “truncation” of the eigenvalues which contribute less than a threshold (say 1%) to the cumulative sum of all eigenvalues. Any new “snapshot” \(\boldsymbol {\rm u}\), i.e. a vector of experimental data provided in the future by DIC and possibly by other instruments (such as those measuring arm loads), can now be “compressed” to its “amplitude” \(\bar{\boldsymbol {\rm a}}\) in the truncated reference system generated by the POD procedure, namely:

\[\bar{\boldsymbol {\rm a}} = \bar{\boldsymbol {\Phi}}^{T}\boldsymbol {\rm u} \qquad (15)\]

When the number P of parameters to estimate increases (and it is relatively high in the present context), the computational burden of “snapshots” generation (as first stage of the above outlined POD method) grows exponentially with the dimensionality of the domain over which the grid of sampling points (nodes) has to be selected.
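The POD steps outlined in this Subsection (correlation matrix, eigen-decomposition, truncation, amplitude computation) can be sketched as follows. This is only an illustration on synthetic data: the function name `pod_basis`, the scaling of the basis vectors to unit length and the reading of the 1% threshold as a share of the eigenvalue sum are assumptions made here, and the snapshots are random stand-ins for FE results.

```python
import numpy as np

def pod_basis(U, threshold=0.01):
    """Truncated POD of an M x S snapshot matrix U (method of snapshots).

    Returns the M x K basis Phi_bar (orthonormal columns), the K x S
    amplitude matrix A_bar and the eigenvalues of D in descending order.
    """
    D = U.T @ U                              # S x S, symmetric, PSD
    lam, V = np.linalg.eigh(D)               # eigenvalues in ascending order
    lam, V = lam[::-1], V[:, ::-1]           # reorder to descending
    lam = np.clip(lam, 0.0, None)            # remove tiny negative round-off
    # Keep the modes whose eigenvalue is at least `threshold` of the total.
    K = max(int(np.sum(lam / lam.sum() >= threshold)), 1)
    Phi_bar = U @ V[:, :K] / np.sqrt(lam[:K])  # scale columns to unit norm
    A_bar = Phi_bar.T @ U                      # amplitudes of the snapshots
    return Phi_bar, A_bar, lam

# Synthetic, strongly correlated snapshots: three hidden modes plus tiny noise.
rng = np.random.default_rng(0)
M, S = 200, 50
modes = rng.standard_normal((M, 3))
U = modes @ rng.standard_normal((3, S)) + 1e-6 * rng.standard_normal((M, S))

Phi_bar, A_bar, lam = pod_basis(U)

# Compression of a "new" snapshot and its approximate reconstruction.
u_new = modes @ rng.standard_normal(3)
a_new = Phi_bar.T @ u_new
u_rec = Phi_bar @ a_new
```

Working with the S×S matrix rather than an M×M one is convenient when the number of snapshots is smaller than the number of measured quantities; in the opposite case a singular value decomposition of the snapshot matrix would be an equally valid route.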

The following procedures are considered in the literature, e.g. McKay et al. (1979), Iman and Conover (1980), for the generation of such node grid: (a) each parameter interval corresponding to the search domain is subdivided into (usually equal) intervals giving rise to a “rectangular grid”; (b) the number of nodes is a priori chosen and the nodes are distributed randomly over the domain (with danger of poor density in some subdomain); (c) the search interval of each one of the P parameter variables is subdivided into S equally spaced “levels”, but only one node is allowed to occupy each level (“Latin Hypercube Sampling”). Procedure (c) has been adopted to the present purposes.
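Procedure (c) can be sketched in a few lines: every parameter receives the same S equally spaced levels, and an independent random permutation per parameter decides which node occupies which level. The function name `latin_hypercube` and the bounds in the example are illustrative assumptions; no optimization of the space-filling quality is attempted here.

```python
import numpy as np

def latin_hypercube(lower, upper, n_nodes, rng=None):
    """Return a P x S matrix of nodes: one row per parameter, one column per node.

    Each row contains every one of the S equally spaced levels exactly once.
    """
    rng = np.random.default_rng() if rng is None else rng
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    levels = np.linspace(0.0, 1.0, n_nodes)          # levels on the unit interval
    unit = np.vstack([rng.permutation(levels) for _ in range(lower.size)])
    return lower[:, None] + unit * (upper - lower)[:, None]

# Hypothetical bounds for two parameters and five nodes.
nodes = latin_hypercube([0.0, 10.0], [1.0, 20.0], n_nodes=5,
                        rng=np.random.default_rng(0))
```

Unlike a rectangular grid, the number of nodes here is chosen freely and does not grow with the number of parameters.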

5.2 On the neural networks adopted herein

Artificial Neural Networks (ANNs) can basically be interpreted as a mathematical construct consisting of a sequence of elementary operations apt to approximate a generally complex (say nonlinear and/or non-analytical) relationship between two variable vectors, \(\boldsymbol {\rm x}\) and \(\boldsymbol {\rm y}\). Fundamentals of (“feed-forward”) ANN methodology are available in a wide literature, e.g. Waszczyszyn (1999), Haykin (1998).

In the present context, for the set of S parameter vectors \(\boldsymbol {\rm x}^{j}\) (j = 1,...,S) corresponding to the nodes of the pre-selected grid in the “search domain”, let the “direct problem” solutions by FE test simulations be represented as follows:

The corresponding “pseudo-experimental” data are generated by corrupting the test simulation output for given \(\boldsymbol {\rm x}^{j}\) through an additive random perturbation \(\boldsymbol {\rm e}^{j}\), namely (j = 1,...,S, S being the above number of simulations):

The hypotheses underlying (17) are as follows: absence of a deterministic systematic error; additivity of measurement noise as random perturbation; null mean values of the perturbation. Let the inverse analysis problems concerning data \(\boldsymbol {y}_{\rm EXP}^{j}\) as input be concisely represented as:

In the present context an artificial neural network (ANN) can play the role of a perturbed operator \(\mathcal{H}_{E}^{-1}\) which leads to the output \(\boldsymbol {\rm x}^{j}\) corresponding to an assigned input vector \(\boldsymbol {\rm y}_{\rm EXP}^{j}\). In other words, ANNs are intended to reconstruct a continuous locus in the \(\boldsymbol {\rm x}\)-space on the basis of an assigned set of points \(\boldsymbol {\rm x}^{j}\) which correspond through (18) to points \(\boldsymbol {\rm y}_{\rm EXP}^{j}\) in the space of measurable quantities.
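In compact form, and writing \(\mathcal{H}\) for the direct (FE simulation) operator, a symbol assumed here by analogy with the \(\mathcal{H}_{E}^{-1}\) used above, the direct problem, the noisy data and the inverse problem described in this Subsection can be sketched as:

\[\boldsymbol {\rm y}^{j}=\mathcal{H}\left(\boldsymbol {\rm x}^{j}\right),\qquad \boldsymbol {\rm y}_{\rm EXP}^{j}=\boldsymbol {\rm y}^{j}+\boldsymbol {\rm e}^{j},\qquad \boldsymbol {\rm x}^{j}=\mathcal{H}_{E}^{-1}\left(\boldsymbol {\rm y}_{\rm EXP}^{j}\right),\qquad j=1,\ldots,S\]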

Pairs of vectors \(\left(\boldsymbol {\rm y}_{\rm EXP}^{j}, \, \boldsymbol {\rm x}^{j}\right)\), j = 1...S, related to each other through (17) and (18), are “patterns” employed for “training”, “testing” and validation of ANNs. The use of patterns corrupted by random noise makes the ANN more robust and apt to deal with truly “noisy” input data.

Generally, for the design and the computational behavior of ANN a balance is desirable between the dimensionalities of vectors \(\boldsymbol {\rm x}\) and \(\boldsymbol {\rm y}\). In the present context the number of experimental data, i.e. the dimension of vector \(\boldsymbol {\rm u}\) containing full-field measurements by DIC turns out to be by orders of magnitude larger than the dimension of the parameter vector \(\boldsymbol {\rm x}\). Therefore the role of vector \(\boldsymbol {\rm y}\) in (11) is attributed here to amplitude vector \(\boldsymbol {\rm a}\) which approximates the information contained in snapshot \(\boldsymbol {\rm u}\) by compressing it through the POD procedure outlined in the preceding Subsection. The role of vectors \(\boldsymbol {\rm y}=\boldsymbol {\rm a}\) is twofold: the preliminary generation of the ANN by means of the “patterns” (\(\boldsymbol {\rm x}_i\), \(\boldsymbol {\rm a}_i\), i = 1,...,S); the input of the ANN for the estimation of the parameters (\(\boldsymbol {\rm x}\)) on the basis of a test on cruciform specimen with DIC measurements.

The above remarks highlight the following potential advantages of POD-ANN-based identification procedures over the traditional discrepancy minimization techniques applied in Section 4. The snapshot generation (matrix \(\boldsymbol {\rm U}\)) and its “compression” (computation of matrix \(\boldsymbol {\rm A}\)) are performed once-for-all; the subsequent training phase of the ANN, on the basis of the available patterns computed by test simulations through the direct mathematical model, is also carried out once-for-all. Later, the applications of a trained ANN demand limited processing capacity, computer storage and CPU time and, therefore, may be done routinely by simple operations.

The kind of ANN (details e.g. Waszczyszyn 1999, Haykin 1998) adopted in this study and employed to validate the proposed model calibration, is usually called Multi-Layer Perceptron (MLP). It is characterized by the following main features: the neurons in hidden layers and output layer perform linear combinations and sigmoidal transformations; training consists of a “back-propagation” procedure based on classical Levenberg–Marquardt algorithm; the simple “early stopping” criterion is here adopted in order to prevent overfitting. Training, testing and validation here will employ 70, 15 and 15%, respectively, of the POD pre-computed patterns.
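The 70/15/15 subdivision of the patterns can be sketched as below. The random assignment of patterns to the three subsets and the function name `split_patterns` are assumptions made for illustration; how the subsets were actually drawn is not stated here.

```python
import numpy as np

def split_patterns(n_patterns, fractions=(0.70, 0.15, 0.15), rng=None):
    """Randomly split pattern indices into training, testing and validation sets."""
    rng = np.random.default_rng() if rng is None else rng
    order = rng.permutation(n_patterns)
    n_train = int(round(fractions[0] * n_patterns))
    n_test = int(round(fractions[1] * n_patterns))
    train = order[:n_train]
    test = order[n_train:n_train + n_test]
    valid = order[n_train + n_test:]        # the remainder goes to validation
    return train, test, valid

train_idx, test_idx, valid_idx = split_patterns(10_000,
                                                rng=np.random.default_rng(0))
```

In an “early stopping” scheme the error on a held-out subset is monitored during training, and the iterations are interrupted when that error stops decreasing.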

To control and test an ANN training process, a criterion is needed to assess the “error”, namely the difference between the network output and the output from the training samples. The criterion here adopted rests on the “mean error”:

where: S′ is the number of pattern pairs, \(\boldsymbol {\rm t}^{j}\) is the target of the j-th pattern-target pair, and \(\boldsymbol {\rm x}^{j}\) is the network output corresponding to the pattern input \(\boldsymbol {\rm a}^{j}=\boldsymbol {\rm y}^{j}\) (j = 1,...,S′).

5.3 Numerical validation

The POD-ANN procedure proposed and outlined in the preceding Sections 5.1 and 5.2 has been validated by numerical exercises, some of which are summarized in what follows.

With reference to the modified XBP model, Table 6 lists the 12 parameters involved in the identification procedure by cruciform tension tests with K = 10 stages of full-field measurements (DIC displacements and reactive loads included). Poisson ratio \(\nu_{12}\) has been assumed as “a priori” given in order to limit the number of parameters to identify. The parameters governing the compression subsurfaces (\(q_{\alpha}\), \(n_{\alpha}\) with α = 4, 5) have also been assumed as given “a priori”, since the biaxial tension test considered in the present identification procedure does not lend itself to the calibration of such compression parameters, as clearly indicated by the very low values of the corresponding sensitivities highlighted in Fig. 8. The search domain adopted is specified in Table 6 by lower and upper bounds on each parameter to identify. Over this 12-dimensional domain \(S=\text{10,000}\) nodes are here generated according to approach (c) out of the three options mentioned in Section 5.1. At each stage the number of experimental data is 484 (forces in directions MD and CD; two displacement components in the foil plane at each one of 241 selected nodes of the FE mesh of Fig. 4, i.e. at each one of the grid nodes over the ROI employed for DIC, Fig. 3). Therefore the total number of pseudo-experimental data in each “snapshot” of the present computational checks amounts to \(M=\text{4,840}\).
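The data count quoted above follows from a short tally (two force values plus two displacement components at each of the 241 nodes, repeated over the 10 measurement stages):

\[2 + 2\times 241 = 484, \qquad M = 10 \times 484 = \text{4,840}\]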

To simulate measurement errors (“noise”), a random perturbation generated with a uniform probability density over the ±1 μm interval has been added to the DIC data. The snapshot matrix \(\boldsymbol {\rm U}\) on which the POD procedure is based turns out to have the dimensions \(\text{4,840} \times \text{10,000}\); its once-for-all generation through a sequence of 10,000 direct analyses (by the Abaqus FE code) required 30–90 s for each analysis on an Intel(R) Core(TM)2 CPU 6600 with 4 GB of RAM.

The POD “truncation” has been carried out at the 36th eigenvalue of matrix \(\boldsymbol {\rm D}\) (of order 10,000). The eigenvalue assessment required a computational effort of a few minutes on the above specified computer; the subsequent numerical solution of the linear algebraic problem to generate the amplitude matrix \(\boldsymbol {\rm A}\) (of size \(36\times\text{10,000}\)) required a comparatively negligible additional computing time, as expected.

In the MLP-type ANN mentioned in the preceding Subsection, input and output layers consist of 36 and 12 neurons, respectively. Its architecture has been designed with a single “hidden layer” containing 72 neurons, each performing a linear combination and a sigmoidal transformation as usual. The choice of the optimal network is oriented to a compromise between the conflicting requirements of architecture simplicity and estimation accuracy. Networks with different numbers of neurons in the input layer (due to different levels of POD “truncation”) and in the hidden layer were trained and tested in order to find the best network architecture, see Fig. 14. For the identification of material parameters in the simplified XBP model it turns out that the best ANN architecture consists of 36–72–12 neurons in input, hidden and output layer, respectively.

Fig. 14 Mean error according to (19) of training and testing results for the design of an ANN apt to identify the parameters in the simplified XBP model: (a) as function of the neuron number in the input layer with 72 neurons in the hidden layer; (b) as function of neuron number in the hidden layer with 36 input neurons

The above mentioned operative sequence “FE simulation + POD + compression” has produced 10,000 “patterns”, i.e. pairs consisting of a parameter vector and the corresponding snapshot “amplitude” vector. The ANN training consists in computing, here by the Levenberg-Marquardt back-propagation algorithm, the transformation coefficients (36×72 “weights” and 72 “biases” in the hidden layer, and 72×12 “weights” and 12 “biases” in the output layer) in all (72 + 12 = 84) active neurons.
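The size of the training problem can be made concrete with a minimal sketch of a 36–72–12 network and its forward pass. The logistic form of the sigmoid, the random initial weights and the assumption that the outputs are parameters normalized to the interval (0, 1) are illustrative choices made here; the Levenberg–Marquardt training itself is not reproduced.

```python
import numpy as np

def init_mlp(n_in=36, n_hidden=72, n_out=12, rng=None):
    """Create weights and biases of a single-hidden-layer perceptron."""
    rng = np.random.default_rng() if rng is None else rng
    return {
        "W1": 0.1 * rng.standard_normal((n_hidden, n_in)),   # 72 x 36 weights
        "b1": np.zeros(n_hidden),                            # 72 biases
        "W2": 0.1 * rng.standard_normal((n_out, n_hidden)),  # 12 x 72 weights
        "b2": np.zeros(n_out),                               # 12 biases
    }

def sigmoid(z):
    """Logistic function, assumed here as the sigmoidal transformation."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, amplitude):
    """Map a POD amplitude vector to (normalized) parameter estimates."""
    hidden = sigmoid(params["W1"] @ amplitude + params["b1"])
    return sigmoid(params["W2"] @ hidden + params["b2"])

params = init_mlp(rng=np.random.default_rng(0))
n_coefficients = sum(v.size for v in params.values())  # 2592 + 72 + 864 + 12
x_scaled = forward(params, np.zeros(36))
```

The count gives 3,540 coefficients to be determined by training, which helps to explain why a large number of patterns is desirable.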

As an example of details in the present numerical exercises, Table 7 collects different mean values of errors in estimates obtained by adopting different number of “patterns” in the ANN training: error here means “distance” from the parameter vector \(\boldsymbol {\rm x}_i\) originally used for the training as part of the i-th pattern and now a “target” which is compared to the value generated by the trained ANN with input given by the corresponding amplitude vector.

Testing of the above generated ANN has been performed by employing 100 patterns randomly selected in the set of the 10,000 patterns preliminarily generated, but different from those used for training. The inputs to the ANN are again perturbed as above for training by ±0.5 μm noise (see Section 2.2). Figure 15 visualizes the results of such testing procedure. The distributions of percentage errors in the above specified sense (differences between target and ANN output, in percentage of the former) are synthesized by the mean errors (i.e. their norms) indicated over each diagram. The identification accuracy for some plastic parameters in the simplified XBP model considered turns out to be rather low. Remedies may be achieved in practice by optimizing the choice of the ANN kind (e.g. by selecting an RBF-ANN) and its design; alternatively, a subsequent estimation phase might be useful, to be performed by assuming as known the ANN estimates affected by minor errors and by using all estimates for the initialization of a TRA procedure with only the uncertain parameters as variables.

The mean values of errors plotted in Fig. 15 are computed for each k-th material parameter separately by the usual formula:

where: N = 100 is the number of testing pairs, \(y_{k}^{n}\) is the k-th output of the n-th “pattern-target” pair, and \(t_{k}^{n}\) the network output corresponding to the k-th parameter of the pattern input \(\boldsymbol {\rm x}^{n}\).
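A per-parameter mean percentage error of this kind can be sketched as follows. The specific form used here (mean over the testing pairs of the absolute deviation divided by the target) is an assumption about the “usual formula”, and the function name and sample numbers are hypothetical.

```python
import numpy as np

def mean_percentage_error(targets, outputs):
    """Mean absolute percentage error for each parameter (column).

    targets, outputs: arrays of shape (N, P), one row per testing pair.
    """
    targets = np.asarray(targets, dtype=float)
    outputs = np.asarray(outputs, dtype=float)
    return 100.0 * np.mean(np.abs(outputs - targets) / np.abs(targets), axis=0)

# Two hypothetical testing pairs with two parameters each.
targets = np.array([[100.0, 2.0], [200.0, 4.0]])
outputs = np.array([[110.0, 2.0], [180.0, 5.0]])
errors = mean_percentage_error(targets, outputs)   # one value per parameter
```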

An obvious difficulty intrinsic to the POD-ANN identification method arises from the relatively high number of parameters, despite the transition here proposed from the original to a simplified XBP model. The consequent high dimensionality of the parameter space implies an exponentially high number of nodes in a regular grid over the search domain (e.g. with 12 parameters, two or four values for each parameter lead to \(2^{12}=\text{4,096}\) or \(4^{12}=\text{16,777,216}\) snapshots, respectively). Therefore, with a reasonable snapshot number S in the preliminary POD computations (like \(S=\text{10,000}\) in the present exercises), the density of nodes over the domain is low (about 2.15 values per parameter on average, since \(\text{10,000}^{1/12}\approx 2.154\)) and, hence, the accuracy of the estimates provided by the trained ANN through the approximate interpolation which is its purpose is also low. In any case, an increase of the snapshot number S concerns only the preparatory computations to be done once-for-all.
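The growth of a full rectangular grid with the number of parameters, and the equivalent number of values per parameter afforded by a given snapshot budget, can be checked with a few lines of arithmetic. The helper names below are illustrative.

```python
def full_grid_size(n_parameters, values_per_parameter):
    """Number of nodes in a rectangular grid with equal subdivision per axis."""
    return values_per_parameter ** n_parameters

def mean_values_per_parameter(n_nodes, n_parameters):
    """Equivalent number of values per axis for a given total number of nodes."""
    return n_nodes ** (1.0 / n_parameters)

sizes = {v: full_grid_size(12, v) for v in (2, 3, 4)}
density = mean_values_per_parameter(10_000, 12)

print(sizes)    # {2: 4096, 3: 531441, 4: 16777216}
print(density)  # roughly 2.154
```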

6 Conclusions

The research project which includes the study presented herein is motivated by the following circumstances:

(i) technologies leading to products based on foils (primarily to food containers but also to geo-membranes for dams and membranes for architectural tension structures) require at present mechanical characterization of free-foils as for anisotropic elastic and inelastic properties; these properties depend on the production process and substantially influence the quality of the final products;

(ii) material parameters, which govern these properties, should be quantified (for later computer simulations of processes in fabrication and product employments) accurately, economically and fast, routinely in an industrial environment.

As a contribution to such practical purposes, the following features of the free-foils mechanical characterization have been investigated herein with some novelties with respect to the state-of-the-art practice: (a) biaxial tests on cruciform specimens with substantially non-uniform stress field (which is made so by the specimen geometry with a central hole) and full-field displacement measurements, carried out at different load levels in a single test, by digital image correlation; (b) adoption of a modern, sophisticated and versatile material model (originally proposed for the paper industry), with a simplification which reduces the parameter number without accuracy reduction in practical applications; (c) parameter identification by inverse analyses which can be carried out by a portable computer fed by a large number of digitalized experimental data; these are “compressed” by a “proper orthogonal decomposition” procedure based on preparatory computations (“snapshots” production and “truncation”) and are input into a previously trained and tested artificial neural network accommodated as software tool in a small computer ready to provide the sought parameters by means of its repeated routine use.

Further developments, in progress within this research project, concern more general material models (including viscosity, damage and ultimate strength) and alternative, hopefully more effective and versatile, inverse analysis procedures, in particular a procedure based on the sequence “proper orthogonal decomposition”, radial basis functions, mathematical programming algorithm for discrepancy minimization; finally, stochastic approaches (such as Kalman filters) to the parametric identification problems tackled here are desirable in order to assess errors of the estimates due to “noise” in experimental data.

References

Aguir H, BelHadjSalah H, Hambli R (2011) Parameter identification of an elasto-plastic behaviour using artificial neural networks-genetic algorithm method. Mater Des 32:48–53

Buljak V, Maier G (2011) Proper orthogonal decomposition and radial basis functions in material characterization based on instrumented indentation. Eng Struct 33:492–501

Castro J, Ostoja-Starzewski MO (2003) Elasto–plasticity of paper. Int J Plast 19:2083–2098

Chatterjee A (2000) An introduction to the proper orthogonal decomposition. Curr Sci 78(7):808–817

Chen S, Ding X, Fangueiro R, Yi H, Ni J (2008) Tensile behavior of PVC-coated woven membrane materials under uni- and bi-axial loads. J Appl Polym Sci 107:2038–2044

Conn AR, Gould NIM, Toint PL (2000) Trust-region methods. SIAM

Cooreman S, Lecompte D, Sol H, Vantomme J, Debruyne D (2008) Identification of mechanical material behavior through inverse modeling and DIC. Exp Mech 48:421–433

Fedele R, Maier G, Miller B (2005) Identification of elastic stiffness and local stresses in concrete dams by in situ tests and neural networks. Struct & Infrast Eng 1(3):165–180

Harrysson A, Ristinmaa M (2008) Large strain elasto–plastic model of paper and corrugated board. Int J Solids Struct 45:3334–3352

Haykin S (1998) Neural networks: a comprehensive foundation. Prentice Hall

Hild F, Roux S (2006) Digital image correlation: from displacement measurement to identification of elastic properties - a review. Strain 42:69–80

Iman RL, Conover WJ (1980) Small sample sensitivity analysis techniques for computer models, with an application to risk assessment. Commun Stat, Theory Methods 9:1749–1842

Jin R, Chen W, Sudjianto A (2005) An efficient algorithm for constructing optimal design of computer experiments. J Stat Plan Inference 134(1):268–287

Kaliszky S (1989) Plasticity - theory and engineering applications. Elsevier

Kim MC, Kim KY, Kim S, Lee KJ (2004) Reconstruction algorithm of electrical impedance tomography for particle concentration distribution in suspension. Korean J Chem Eng 21:352–357

Kleiber M, Antunez H, Hien TD, Kowalczyk P (1997) Parameter sensitivity in nonlinear mechanics. Theory and finite element computations. John Wiley and Sons, New York

Koehler J, Owen A (1996) Design and analysis of experiments, chapter computer experiments, pp 261–308. North-Holland

Lecompte D, Smits A, Sol H, Vantomme J, Van Hemelrijck D (2007) Mixed numerical–experimental technique for orthotropic parameter identification using biaxial tensile tests on cruciform specimens. Int J Solids Struct 44:1643–1656

Liang YC, Lee HP, Lim SP, Lin WZ, Lee KH, Wu CG (2002) Proper orthogonal decomposition and its applications – Part I: theory. J Sound Vib 252(3):527–544

Lubliner J (1990) Plasticity theory. Macmillan

Maier G, Bolzon G, Buljak V, Garbowski T, Miller B (2010) Synergic combination of computational methods and experiments for structural diagnoses, chapter Computer Methods in Mechanics - lectures of the CMM 2009, pp 453–473. Springer

Makela P, Ostlund S (2003) Orthotropic elastic–plastic material model for paper materials. Int J Solids Struct 40:5599–5620

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21:239–245

Ostrowski Z, Bialecki RA, Kassab AJ (2005) Estimation of constant thermal conductivity by use of proper orthogonal decomposition. Comput Mech 37:52–59

Ostrowski Z, Bialecki RA, Kassab AJ (2008) Solving inverse heat conduction problems using trained POD-RBF network. Inverse Probl Sci Eng 16(1):705–714

Perie JN, Leclerc H, Roux S, Hild F (2009) Digital image correlation and biaxial test on composite material for anisotropic damage law identification. Int J Solids Struct 46:2388–2396

Sutton MA, Orteu JJ, Schreier HW (2009) Image correlation for shape, motion and deformation measurements - basic concepts, theory and applications. Springer

Ting CT, Chen T (2005) Poisson’s ratios for anisotropic elastic material can have no bounds. The Quarterly Journal of Mechanics and Applied Mathematics 58(1):73–82

Waszczyszyn Z (1999) Neural networks in the analysis and design of structure. Springer Wien, New York

Xia QS, Boyce MC, Parks DM (2002) A constitutive model for the anisotropic elastic–plastic deformation of paper and paperboard. Int J Solids Struct 39:4053–4071

Zienkiewicz OC, Zhu JZ (1987) A simple error estimator and adaptive procedure for practical engineering analysis. Int J Numer Methods Eng 24:337–357

Acknowledgements

The results presented in this paper have been achieved in a research project supported by a contract between Politecnico (Technical University) of Milan and Tetra Pak Company. Thanks are expressed by the authors particularly to Dr. Roberto Borsari for fruitful interactions.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Appendix

The computational procedure called Proper Orthogonal Decomposition (POD), adopted herein in order to make the inverse analyses faster and more economical, has been outlined in Section 5.1. This Appendix is intended to provide supplementary information to clarify the proposed method, which involves details available in the cited references.

(a) The P parameters to identify are gathered in vector \(\boldsymbol {\rm p}\) and play the role of the unknown variables. In their P-dimensional space the “search domain” (SD) is specified by the “expert” by means of lower and upper bounds which define for each parameter the interval expected to contain the sought value of that parameter. Within the SD a selection of S points (“grid nodes”) has to be performed in order to provide parameter vectors \(\boldsymbol {\rm p}_1,\dots,\boldsymbol {\rm p}_S\) as input to S test simulations (“direct” FE analyses) apt to generate S vectors \(\boldsymbol {\rm u}_1,\dots,\boldsymbol {\rm u}_S\) (“snapshots”) of measurable quantities as pseudo-experimental data in view of the POD leading to “fast” inverse analyses. For such node selection the procedure called “Optimal Latin Hypercube Sampling” was adopted in the present study and is briefly outlined below, while details can be found e.g. in McKay et al. (1979), Koehler and Owen (1996):

(i) In a first step a Latin Hypercube Sampling (LHS) is randomly generated as a P×S matrix, where each row is related to a model parameter and each column defines a point in the parameter space, i.e. one of S nodes;

(ii) A randomly generated LHS usually has a poor statistical quality and is therefore optimized in order to improve the distribution of the sampling points in the parameter space (i.e. its “space filling properties”). Here the Enhanced Stochastic Evolutionary Algorithm (ESEA) is adopted for LHS optimization. The ESEA is based on simple element-exchange techniques and on a “Maximin Distance” optimality criterion (details available in Jin et al. 2005). A simple 2D example of a randomly generated LHS and of the subsequent optimized LHS is shown in Fig. 16.

(b) The outline of the POD procedure applied to the present purposes in Section 5.1 can be clarified by the following additional remarks and related flowchart (Fig. 17). The transition from the snapshot matrix \(\boldsymbol {\rm U}\) consisting of the S vectors \(\boldsymbol {\rm u}_i\) of measurable quantities (computed by FE simulations) to matrix \(\boldsymbol {\rm A}\) of their “amplitudes” through the “basis matrix” \(\boldsymbol {\Phi}\) requires the following computational effort: accurate assessment of eigenvalues and eigenvectors of the square positive-definite (or semidefinite) matrix \(\boldsymbol {\rm D}\), which is generally large (here \(S=\text{10,000}\)) since its order equals the number of nodes over the multidimensional space of the sought parameters. It is worth underlining here two circumstances: such effort is done once-for-all, like the effort for the S simulations leading to the snapshots \(\boldsymbol {\rm u}_i\); the relevant mathematical proofs (particularly, the proof that the generation of matrix \(\boldsymbol {\Phi}\) consists of a sequence of optimizations) can be found in references Chatterjee (2000), Liang et al. (2002). After the “truncation” leading to the “compressed” basis \(\bar{\boldsymbol {\Phi}}\), the approximate linear relationship \(\boldsymbol {\rm u}\approx\bar{\boldsymbol {\Phi}}\boldsymbol {\rm a}\) between amplitude and corresponding snapshot provides the following practical benefits in two quite different tools of numerical mathematics for parameter identification.

(i) When an ANN is adopted, “patterns” consisting of pairs of corresponding vectors \(\{\boldsymbol {\rm a}_i, \boldsymbol {\rm p}_i\}\) (parameter vectors \(\boldsymbol {\rm p}_i\) as “targets”) are employed for ANN training, testing and validation: thus the neuron number in the input layer is strongly reduced with respect to the snapshot dimension (instead of snapshot \(\boldsymbol {\rm u}\) its approximate amplitude \(\boldsymbol {\rm a}\)); such reduction generates a balance between the neuron numbers in input and output layers, as required to achieve robustness in the ANN computational performance.

(ii) When an iterative algorithm is employed for the discrepancy function minimization (like the TRA, here used in Section 4 only in order to check the identifiability of the sought parameters) a sequence of many test simulations is required with diverse inputs of parameters. Then the recourse to interpolations by Radial Basis Functions among pre-assessed amplitudes reduces by orders of magnitude the computing times with respect to those needed for FE simulations. Some details on the above approach, not adopted herein, can be found in Liang et al. (2002), Ostrowski et al. (2005), Buljak and Maier (2011).
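Returning to the node selection of item (a), the idea of improving a random LHS by element exchanges under a maximin-distance criterion can be illustrated by the sketch below. It is a plain greedy random-exchange search written for illustration, not the ESEA of Jin et al. (2005); the function names and the small 2D example are assumptions.

```python
import numpy as np

def min_pairwise_distance(X):
    """Smallest Euclidean distance between two nodes (columns) of the P x S matrix X."""
    diff = X[:, :, None] - X[:, None, :]
    dist = np.sqrt((diff ** 2).sum(axis=0))
    dist[np.diag_indices_from(dist)] = np.inf   # ignore zero self-distances
    return dist.min()

def improve_lhs(X, n_trials=500, rng=None):
    """Swap two entries within a randomly chosen row; keep the swap if the
    minimum inter-node distance increases. Row-wise swaps preserve the
    Latin Hypercube property (each level used once per parameter)."""
    rng = np.random.default_rng() if rng is None else rng
    best = X.copy()
    best_score = min_pairwise_distance(best)
    n_params, n_nodes = best.shape
    for _ in range(n_trials):
        p = rng.integers(n_params)
        i, j = rng.choice(n_nodes, size=2, replace=False)
        trial = best.copy()
        trial[p, [i, j]] = trial[p, [j, i]]
        score = min_pairwise_distance(trial)
        if score > best_score:
            best, best_score = trial, score
    return best

# Random 2D LHS with 10 nodes on the unit square, then its improved version.
rng = np.random.default_rng(0)
levels = np.linspace(0.0, 1.0, 10)
X0 = np.vstack([rng.permutation(levels) for _ in range(2)])
X1 = improve_lhs(X0, n_trials=500, rng=rng)
```

A greedy search of this kind can stall in a local optimum; stochastic evolutionary schemes accept some worsening moves precisely to escape such situations.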

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Garbowski, T., Maier, G. & Novati, G. On calibration of orthotropic elastic-plastic constitutive models for paper foils by biaxial tests and inverse analyses. Struct Multidisc Optim 46, 111–128 (2012). https://doi.org/10.1007/s00158-011-0747-3

DOI: https://doi.org/10.1007/s00158-011-0747-3