Abstract

In a recent work (Dappiaggi, Commun Contemp Math 24:2150075, 2022), a novel framework inspired by the algebraic approach to quantum field theory has been developed to study, at a perturbative level, a large class of nonlinear, scalar, real, stochastic PDEs. Its main advantage is the possibility of computing the expectation value and the correlation functions of the underlying solutions while accounting for renormalization intrinsically, without resorting to any specific regularization scheme. In this work, we prove that it is possible to extend the range of applicability of this framework to cover also the stochastic nonlinear Schrödinger equation, in which randomness is codified by an additive, Gaussian, complex white noise.


1 Introduction

Stochastic partial differential equations (SPDEs) play a prominent rôle in modern analysis, probability theory and mathematical physics due to their effectiveness in modeling different phenomena, ranging from turbulence to interface dynamics. At a structural level, significant progress in the study of nonlinear problems has been made in the past few years, thanks to Hairer’s work on the theory of regularity structures [16, 17] and to the theory of paracontrolled distributions [15], based on Bony’s paradifferential calculus [3].

Without entering into the details of these approaches, we stress that a common hurdle in all of them is the necessity of applying a renormalization scheme to cope with ill-defined products of distributions. In all these instances, the common approach consists of introducing a suitable \(\epsilon \)-regularization scheme which makes the pathological divergences manifest in the limit \(\epsilon \rightarrow 0^+\), see, e.g., [16]. This strategy is very much inspired by the standard approach to a similar class of problems arising in theoretical physics, more precisely in quantum field theory on Minkowski spacetime, studied in momentum space.

Despite relying on a specific renormalization scheme, all these approaches have been tremendously effective in developing the solution theory of nonlinear stochastic partial differential equations in the presence of an additive white noise. At the same time, little attention has been devoted to computing explicitly the expectation value and the correlations of the underlying solutions, quantities which are of paramount relevance in applications, especially those inspired by physics.

In view of these comments, in a recent paper [11], a novel framework has been developed to analyze scalar, nonlinear SPDEs in the presence of an additive white noise. The inspiration as well as the starting point for such work comes from the algebraic approach to quantum field theory, see [6, 23] for reviews. In a few words, this is a specific setup which separates, on one side, observables, collecting them in a suitable unital \(*\)-algebra encoding specific structural properties ranging from dynamics to causality and the canonical commutation relations. On the other side, one finds states, that is, normalized, positive linear functionals on the underlying algebra, which allow one to recover via the GNS theorem the standard probabilistic interpretation of quantum theories.

Without entering into further details, which lie beyond the scope of this work, we stress that this approach, developed to be effective both in coordinate and in momentum space, has the key advantage of allowing an analysis of interacting theories within the realm of perturbation theory [5]. Most notably, renormalization plays a ubiquitous rôle and, following an approach à la Epstein-Glaser, it is codified intrinsically within this framework without resorting to any specific ad hoc regularization scheme [7].

From a technical viewpoint, the main ingredients of this successful approach are the algebraic structures at the heart of the perturbative series, combined with the microlocal properties both of the propagators ruling the linear part of the underlying equations of motion and of the two-point correlation function of the chosen state.

In [11], it has been observed that the algebraic approach can also be adapted to analyze stochastic, scalar, semi-linear SPDEs such as

Here, \(\widehat{\Phi }\) must be interpreted as a random distribution on the underlying manifold M, while \(\xi \) denotes the standard real Gaussian white noise centered at 0, whose covariance is \({\mathbb {E}}[\xi (x)\xi (y)]=\delta (x-y)\). Furthermore, \(F:{\mathbb {R}}\rightarrow {\mathbb {R}}\) is a nonlinear potential which, for simplicity, can be taken to be of polynomial type, while E is a linear operator either of elliptic or of parabolic type; see [11] for more details and comments.

Following [5] and inspired by the so-called functional formalism [8, 10, 14], we consider a specific class of distributions with values in polynomial functionals over \({C}^{\infty }(M)\). We refer the reader interested in more details to [11] and here we only sketch the key aspects of this approach. The main ingredients are two distinguished elements

where \(\mu \) is a strictly positive density over M, \(\varphi \in C^\infty (M)\) and \(f\in C^\infty _0(M)\). These two functionals are employed as generators of a commutative algebra \({\mathcal {A}}\) whose composition is the pointwise product. The main rationale at the heart of [11], inspired by the algebraic approach [5], is the following: the stochastic behavior codified by the white noise \(\xi \) can be encoded in \({\mathcal {A}}\) by deforming its product, setting, for all \(\tau _1,\tau _2\in {\mathcal {A}}\),

where \(\tau _i^{(k)}\), \(i=1,2\), denote the k-th functional derivatives, while \(Q=G\circ G^*\). Here G (resp. \(G^*\)) is a fundamental solution associated to E (resp. \(E^*\), the formal adjoint of E), while \(\circ \) indicates the composition of distributions. By a careful analysis of the singular structure of G and, in turn, of Q, one can infer that the distributions \(t_k\) are well-defined on \(M^{2k+2}\) away from the total diagonal \(\textrm{Diag}_{2k+2}\) of \(M^{2k+2}\), that is, \(t_k(\cdot ;\varphi )\in {\mathcal {D}}'(M^{2k+2}\setminus \textrm{Diag}_{2k+2})\).

Nevertheless, adapting the results of [7] to the case at hand, in [11] it has been proven that it is possible to extend \(t_k\) to \({\hat{t}}_k\in {\mathcal {D}}^\prime (M^{2k+2})\). This renormalization procedure, when it exists, may not be unique, but the ambiguities have been classified, ultimately giving a mathematically precise meaning to Equation (1.2). For further applications of these techniques to the analysis of a priori ill-defined products of distributions, see [12].

As a consequence, one constructs a deformed algebra \({\mathcal {A}}_{\cdot Q}\) whose elements encompass at the algebraic level the information brought by the white noise. Leaving most details to [11], we limit ourselves to focusing our attention on a specific, yet instructive example. More precisely, we highlight that, if one evaluates at the configuration \(\varphi =0\) the product of two generators \(\Phi \) of \({\mathcal {A}}_{\cdot Q}\), one obtains

where \(f\in C^\infty _0(M)\) and where the kernel appearing above stands for \(\widehat{Q\delta _{\textrm{Diag}_2}}\), a renormalized version of the otherwise ill-defined composition between the operator Q and \(\delta _{\textrm{Diag}_2}\). The latter is the standard bi-distribution lying in \({\mathcal {D}}^\prime (M\times M)\) such that \(\delta _{\textrm{Diag}_2}(h)=\int _M\mu (x)\,h(x,x)\) for every \(h\in C^\infty _0(M\times M)\).

A direct inspection shows that we have obtained the expectation value \({\mathbb {E}}(\widehat{\varphi }^2(x))\) of the random field \(\widehat{\varphi }^2(x)\), where \(\widehat{\varphi }{:=}G*\xi \) is the so-called stochastic convolution, which is a solution of Eq. (1.1) when \(F=0\) and for vanishing initial conditions. With a similar procedure one can realize that \({\mathcal {A}}_{\cdot {Q}}\) encompasses the renormalized expectation values of all finite products of the underlying random field. Without entering into further unnecessary details, we stress that an additional extension of the deformation procedure outlined above also allows one to identify another algebra whose elements encompass at the algebraic level the information on the correlations between the underlying random fields. All these data have been applied in [11] to the \(\Phi ^{3}_{d}\) model, considered as a prototypical case of a nonlinear SPDE and analyzed at a perturbative level, constructing both the solutions and their two-point correlations. Most notably, renormalization and its associated freedoms have been intrinsically encoded, yielding, order by order in perturbation theory, renormalized equations which automatically take them into account.

It is worth stressing that, although an analysis of the convergence of the perturbative series is still not within our grasp, a notable advantage of the approach introduced in [11] lies in its applicability to a vast class of interactions, including all polynomial ones. On the contrary, the existence and uniqueness results for an SPDE based either on the theory of regularity structures or on that of paracontrolled distributions require that the underlying model lies in the so-called subcritical regime. Without entering into the technical details, this entails that only finitely many distributions need to be renormalized. This requirement does not apply to the approach used in this paper, hence allowing the investigation of specific models which cannot otherwise be discussed.
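The mechanism by which the deformed product turns pointwise products into expectation values, as in the computation of \({\mathbb {E}}(\widehat{\varphi }^2(x))\) above, can be illustrated in a finite-dimensional toy model. The Python sketch below is our own illustration, not the construction of [11]: functionals are replaced by polynomials in a single real variable, Q by a positive number playing the rôle of a variance, and the deformed product is assumed to take the Wick-type form \(p\cdot _Q q=\sum _k \frac{Q^k}{k!}p^{(k)}q^{(k)}\). No renormalization is needed here, since nothing is singular in one dimension. All function names are hypothetical. Evaluated at zero, the product reproduces Gaussian expectation values of Wick-ordered monomials, e.g., \({\mathbb {E}}[X^2]=Q\) and \({\mathbb {E}}[(X^2-Q)^2]=2Q^2\) for \(X\sim N(0,Q)\).

```python
from fractions import Fraction
from math import factorial

# Toy model (our own illustration): a "functional" is a polynomial in one real
# variable phi, stored as a coefficient list [a0, a1, a2, ...].

def derivative(p):
    """Coefficient list of dp/dphi."""
    return [k * c for k, c in enumerate(p)][1:]

def multiply(p, q):
    """Pointwise (undeformed) product of two polynomials."""
    if not p or not q:
        return []
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def add(p, q):
    """Sum of two coefficient lists of possibly different length."""
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]

def star(p, q, Q):
    """Assumed Wick-type deformed product: sum_k Q^k / k! * p^(k) * q^(k)."""
    result, dp, dq, k = [], list(p), list(q), 0
    while dp and dq:  # stops once either factor has been differentiated to zero
        coeff = Fraction(Q) ** k / factorial(k)
        result = add(result, [coeff * c for c in multiply(dp, dq)])
        dp, dq, k = derivative(dp), derivative(dq), k + 1
    return result

def at_zero(p):
    """Evaluate a polynomial at the configuration phi = 0."""
    return p[0] if p else 0

Q = 3  # plays the role of a finite variance
print(at_zero(star([0, 1], [0, 1], Q)))        # Phi . Phi     -> E[X^2] = Q, prints 3
print(at_zero(star([0, 0, 1], [0, 0, 1], Q)))  # Phi^2 . Phi^2 -> E[(X^2-Q)^2] = 2Q^2, prints 18
```

In this toy setting the pointwise square \(\varphi ^2\) plays the rôle of the Wick-ordered square, which is why the second product yields \(2Q^2\) rather than the raw fourth moment \(3Q^2\).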

In view of the novelty of [11], several questions are left open and the goal of this paper is to address and solve a specific one. Most notably, the class of SPDEs considered in the above reference contains only scalar distributions and, consistently, a real Gaussian white noise. Yet, there exist several models not falling within this class, and a notable one, at the heart of this paper, goes under the name of stochastic nonlinear Schrödinger equation.

The reasons to consider this model are manifold. From a physical viewpoint, this equation is used in the analysis of several relevant physical phenomena, ranging from Bose–Einstein condensates to type II superconductivity when coupled to an external magnetic field [9, 25, 26]. From a mathematical perspective, this class of equations is particularly relevant for its distinguished structural properties and it has been studied by several authors, see, e.g., [4, 20, 21] for a list of those references which have been of inspiration to this work.

It is worth stressing that in many instances attention has been given to the existence and uniqueness of the solutions rather than to their explicit construction or to the characterization of their mean and correlation functions. It is therefore natural to wonder whether the algebraic approach introduced in [11] can be adapted also to this scenario. A closer look at the system at hand, see Sect. 1.1 for the relevant definitions, reveals that this transition is not straightforward. The main reason can be ascribed to the presence of an additive complex Gaussian white noise, see Eqs. (1.4) and (1.5), which entails that the only non-vanishing correlations are those between the white noise and its complex conjugate. This property has severe consequences, most notably the necessity of significantly modifying the algebraic structure at the heart of [11].

A further remarkable difference with respect to the cases considered in [11] has to be ascribed to the singular structure of the fundamental solution of the Schrödinger operator. Without entering here into the technical details, we observe that this feature affects above all the Epstein-Glaser renormalization procedure, since the latter calls for a thorough study of the scaling degree of all relevant integral kernels with respect to the submanifold \(\Lambda ^{2k+2}_t=\{({\widehat{t}}_{2k+2},{\widehat{x}}_{2k+2})\,\vert \,t_1=\ldots =t_{2k+2}\}\subset M^{2k+2}\) rather than with respect to the total diagonal \(\textrm{Diag}_{2k+2}\) of \(M^{2k+2}\), as in [11].

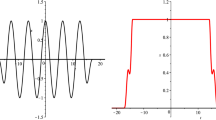

As we shall discuss in Sect. 5.2, the distinguished rôle of this richer singular structure emerges in the analysis of the subcritical regime, which occurs only if the space dimension is \(d=1\), contrary to what happens in many parabolic models, as highlighted in [11].

The goal of this work is to reformulate the algebraic approach to SPDEs for the case of a nonlinear Schrödinger equation and, for simplicity of exposition, we shall focus our attention only on the case of \({\mathbb {R}}^{d+1}\) as the underlying manifold. Although the extension to more general curved backgrounds [11, 24] is possible with a few minor modifications, we feel that this might lead us astray from the main goal of showing the versatility of the algebraic approach and thus we consider only the scenario which is of greatest interest in concrete applications.

In the next subsection, we discuss in detail the specific model that we study in this paper but, prior to that, we conclude the introduction with a short synopsis of this work. In Sect. 2, we introduce the main algebraic and analytic ingredients necessary in this paper. In particular, we discuss the notion of functional-valued distributions, adapting it to the case of an underlying complex valued partial differential equation. In addition, we show how to construct a commutative algebra of functionals using the pointwise product and we discuss the microlocal properties of its elements. We refer to [18, 19] for the basic notions concerning the wavefront set and the related operations between distributions, while we rely on [11, App. B] for a concise summary of the scaling degree of a distribution and of its main properties. A reader interested in the interplay between microlocal techniques and Besov spaces, which appear naturally in the context of SPDEs, should refer instead to [13]. In Sect. 3, we prove the existence of \({\mathcal {A}}^{{\mathbb {C}}}_{\cdot Q}\), a deformation of the algebra identified in the previous analysis, and we highlight how it can codify the information of a complex white noise. Renormalization is a necessary tool in this construction and it plays a distinguished rôle in Theorem 3.4, which is one of the main results of this work. In Sect. 4, we extend the analysis, first identifying a non-local algebra constructed out of \({\mathcal {A}}^{{\mathbb {C}}}_{\cdot Q}\) and then deforming its product so as to be able to compute multi-local correlation functions of the underlying random field. At last, in Sect. 5 we focus our attention on the stochastic nonlinear Schrödinger equation. First, we show how to construct the solutions at a perturbative level using the functional formalism. In particular, we show that, at each order in perturbation theory, the expectation value of the solution vanishes and we compute the two-point correlation function up to first order. As a last step, we employ a diagrammatic argument to determine under which constraint on the dimension of the underlying spacetime the perturbative analysis to all orders has to cope only with a finite number of divergences, to be tamed by means of renormalization. In this respect, we significantly improve a similar procedure proposed in [11, Sec. 6.3].

1.1 The Stochastic Nonlinear Schrödinger Equation

In this short subsection, we introduce the main object of our investigation, namely the stochastic nonlinear Schrödinger equation on \({\mathbb {R}}^{d+1}\), \(d\ge 1\). In addition, throughout this paper, we assume that the reader is familiar with the basic concepts of microlocal analysis, see, e.g., [19, Ch. 8], as well as with the notion of scaling degree, see in particular [7] and [11, App. B].

More precisely, with a slight abuse of notation, by \({\mathcal {D}}^\prime ({\mathbb {R}}^{d+1})\) we denote the collection of all random distributions on \({\mathbb {R}}^{d+1}\) and, inspired by [21], we are interested in \(\psi \in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1})\) such that

where \(\lambda \in {\mathbb {R}}\), \(\Delta \) is the Laplace operator on the Euclidean space \({\mathbb {R}}^d\), while t plays the rôle of the time coordinate along \({\mathbb {R}}\). The parameter \(\kappa \in {\mathbb {N}}\) controls the nonlinear behavior of the equation and it is left arbitrary here since, in our approach, it is not necessary to fix a specific value, although the reader should bear in mind that, in almost all concrete models, e.g., Bose–Einstein condensates or the Ginzburg–Landau theory of type II superconductors, \(\kappa =1\). At the same time, we remark that the framework that we develop allows one to consider a more general class of potentials in Eq. (1.3), but we refrain from moving in this direction so as to keep closer contact with models concretely used in physical applications. For this reason, unless stated otherwise, henceforth we set \(\kappa =1\) in Eq. (1.3). The stochastic character of this equation is codified in \(\xi \), which is a complex, additive, Gaussian random distribution, fully characterized by its mean and covariance:

where \(f,h\in {\mathcal {D}}({\mathbb {R}}^{d+1})\), while \((\cdot ,\cdot )_{L^2}\) represents the standard inner product in \(L^2({\mathbb {R}}^{d+1})\). The symbol \({\mathbb {E}}\) stands for the expectation value.
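To see concretely what distinguishes the complex noise from the real one considered in [11], one can simulate a discretized version of it. The following Monte Carlo sketch is an illustration under stated assumptions, not part of the construction: a one-dimensional grid replaces \({\mathbb {R}}^{d+1}\), the test functions are real (so that no convention for the inner product matters), and the defining properties of \(\xi \) are assumed to amount to \({\mathbb {E}}[\xi (f)]=0\), \({\mathbb {E}}[\xi (f)\xi (h)]=0\) and \({\mathbb {E}}[\xi (f)\overline{\xi (h)}]=(f,h)_{L^2}\), consistently with the remark in the Introduction that only the correlations between \(\xi \) and its complex conjugate survive. The helper name `complex_white_noise` is hypothetical.

```python
import numpy as np

# Hypothetical discretization: a 1D grid with spacing h stands in for R^{d+1}.
rng = np.random.default_rng(0)
x = np.linspace(-5.0, 5.0, 101)
h = x[1] - x[0]
f = np.exp(-x**2)              # real test functions, so no conjugation-convention issue
g = np.exp(-(x - 1.0) ** 2)

def complex_white_noise(n_samples, n_cells, h, rng):
    """Cell values (a + i b) / sqrt(2h): E[xi_j xi_k] = 0, E[xi_j conj(xi_k)] = delta_jk / h."""
    a = rng.standard_normal((n_samples, n_cells))
    b = rng.standard_normal((n_samples, n_cells))
    return (a + 1j * b) / np.sqrt(2.0 * h)

xi = complex_white_noise(10000, x.size, h, rng)
xi_f = xi @ f * h              # samples of xi(f) = int xi f dx
xi_g = xi @ g * h              # samples of xi(g)

exact = np.sum(f * g) * h                       # (f, g)_{L^2} on the grid
mean_f = xi_f.mean()                            # expected ~ 0
corr_xi_xi = np.mean(xi_f * xi_g)               # E[xi(f) xi(g)], expected ~ 0
corr_xi_xibar = np.mean(xi_f * np.conj(xi_g))   # E[xi(f) conj(xi(g))], expected ~ exact
```

The vanishing of `corr_xi_xi` is precisely the feature which prevents a slavish application of the real-noise framework and motivates treating the field and its complex conjugate as independent building blocks.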

Remark 1.1

We stress that we have chosen to work with a Gaussian white noise centered at 0 only for convenience and without loss of generality. If necessary, mutatis mutandis, we can consider a shifted white noise, i.e., \(\xi \) such that the covariance is left unchanged from Eq. (1.4), while

where \(\varphi \in {\mathcal {E}}({\mathbb {R}}^{d+1})\), while \(\varphi (f)\doteq \int \limits _{{\mathbb {R}}^{d+1}}dx\,\varphi (x)f(x)\).

To conclude the section, we observe that the linear part of Eq. (1.3) is ruled by the Schrödinger operator \(L\doteq i\partial _t+\Delta \), which is a formally self-adjoint operator. For later convenience, we introduce the fundamental solution \(G\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) whose integral kernel reads

where \(x=(t,\underline{x})\), \(y=(t^\prime ,\underline{y})\) while \(\Theta \) is the Heaviside function.
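As a quick consistency check on the oscillatory kernel underlying G, recall the standard fact that the free Schrödinger kernel \(K(t,\underline{x})=(4\pi i t)^{-d/2}e^{i|\underline{x}|^2/(4t)}\), \(t>0\), satisfies \((i\partial _t+\Delta )K=0\) and that, with this normalization, \(-i\Theta (t)K(t,\underline{x})\) solves \(LG=\delta \). Eq. (1.6) may be written with different but equivalent conventions, so the sketch below, restricted to \(d=1\) and based on central finite differences, is only an illustration and not a transcription of Eq. (1.6). The function names are hypothetical.

```python
import numpy as np

def free_kernel(t, x):
    """Free Schroedinger kernel in one space dimension, t > 0, principal square root."""
    return (4j * np.pi * t) ** -0.5 * np.exp(1j * x**2 / (4.0 * t))

def residual(t, x, step=1e-3):
    """Central finite-difference approximation of (i d/dt + d^2/dx^2) K at (t, x)."""
    dK_dt = (free_kernel(t + step, x) - free_kernel(t - step, x)) / (2.0 * step)
    d2K_dx2 = (free_kernel(t, x + step) - 2.0 * free_kernel(t, x)
               + free_kernel(t, x - step)) / step**2
    return 1j * dK_dt + d2K_dx2

print(abs(residual(1.0, 0.7)))  # small: limited only by the finite-difference error
```

Replacing the phase \(e^{i|\underline{x}|^2/(4t)}\) with its complex conjugate makes the residual of order one, which reflects the fact that \({\overline{G}}\) is associated with the operator \(-i\partial _t+\Delta \) rather than with L.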

Remark 1.2

Observe that, in contrast with the cases considered in [11], L is not a microhypoelliptic operator. We can nonetheless estimate the singular structure of G using standard microlocal techniques, see [19]. Since G is a fundamental solution of the Schrödinger operator \(L=i\partial _t+\Delta \), it holds that

where

and where \(\textrm{Char}\) denotes the characteristic set of the operator. If we combine this information with the smoothness of the kernel in Eq. (1.6) for \(t\ne t^\prime \), we can conclude that

Observe that the first set in Eq. (1.8) is the wavefront set of \(\delta _{\textrm{Diag}_2}\), while the second one accounts for the contribution of the characteristic set of L. It is noteworthy that, since G(x, y) in Eq. (1.6) is manifestly singular for \(x\ne y\) with \(t=t^\prime \), this second contribution to Equation (1.8) cannot be empty.

Since the wavefront set is a conic subset, this entails that there exist only three possible outcomes: 1) \(\omega >0\), 2) \(\omega <0\) or 3) \( \omega \in {\mathbb {R}}\setminus \{0\}\). Alas, to the best of our knowledge, an exact evaluation of the wavefront set of G is not available in the literature and it eludes a direct calculation. From our viewpoint, the reason lies in the interplay between the Heaviside function and the oscillatory kernel

As a matter of fact, since \({\widehat{K}}(\omega ,k)=\delta (\omega -|k|^2)\) and since \((L\otimes {\mathbb {I}})K=({\mathbb {I}}\otimes L)K=0\), using [22, Thm. IX.44], it follows that

Here, we have used the convention that, for all \(\phi \in L^1({\mathbb {R}}^d)\), \(\widehat{\phi }(k)=\int _{{\mathbb {R}}^d}dx\,e^{ik\cdot x}\phi (x)\). Hence one cannot use Hörmander’s criterion for the multiplication of distributions to define G as a product between the Heaviside distribution and K. This is the main reason why the evaluation of the wavefront set of G is rather elusive.

In view of the estimate in Eq. (1.8), we will consider the worst-case scenario, namely the third option above, where \(\omega \in {\mathbb {R}}\setminus \{0\}\). For the sake of the analytical constructions required in this paper, this assumption is not restrictive because we are interested in working with products of distributions of the form \(G\cdot G^*\), where \(G^*\) is the fundamental solution of the formal adjoint of L, namely \(i\partial _t+\Delta \). The definition of such a product requires renormalization, and this distinguished feature is independent of the form of the second component of the right-hand side of Equation (1.8).
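The characteristic set entering Eq. (1.8) can be made explicit by a standard computation. With the convention \(\partial _t\mapsto i\omega \), \(\partial _{x_j}\mapsto ik_j\), the full symbol of \(L=i\partial _t+\Delta \) is \(-\omega -|k|^2\), whose principal (second-order) part depends only on the spatial covector k:

```latex
\sigma_2(L)(t,\underline{x};\omega,k) = -|k|^2,
\qquad
\textrm{Char}(L)
 = \{(t,\underline{x};\omega,k)\in T^*{\mathbb R}^{d+1}\setminus\{0\}\,\vert\,k=0\}
 = \{(t,\underline{x};\omega,0)\,\vert\,\omega\neq 0\}.
```

Only covectors with vanishing spatial component and \(\omega \ne 0\) are characteristic, which is why the discussion above is phrased in terms of the sign of \(\omega \).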

Remark 1.3

(Notation). In our analysis, we shall be forced to introduce a cut-off to avoid infrared divergences; namely, we consider a real valued function \(\chi \in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and we define

We stress that this modification does not change the microlocal structure of the underlying distribution, i.e., \(\textrm{WF}(G_\chi )=\textrm{WF}(G)\). Therefore, since the cut-off is ubiquitous in our work and in order to avoid the continuous use of the subscript \(\chi \), with a slight abuse of notation we will employ only the symbol G, leaving \(\chi \) understood.

2 Analytic and Algebraic Preliminaries

In this section, we introduce the key analytic and algebraic tools which are used in this work, also fixing notation and conventions. As mentioned in the Introduction, our main goal is to extend and adapt the algebraic and microlocal approach to a perturbative analysis of stochastic partial differential equations (SPDEs), so as to be able to discuss specific models involving complex random distributions. In particular, our main target is the stochastic nonlinear Schrödinger equation as in Eq. (1.3) and, for this reason, we shall adapt all the following definitions and structures to this case, commenting when necessary on the extension to more general scenarios.

The whole algebraic and microlocal program is based on the concept of functional-valued distribution, which is spelt out here. We recall that with \({\mathcal {E}}({\mathbb {R}}^d)\) (resp. \({\mathcal {D}}({\mathbb {R}}^d)\)) we indicate the space of smooth (resp. smooth and compactly supported) complex valued functions on \({\mathbb {R}}^d\), endowed with their standard locally convex topologies.

Remark 2.1

As mentioned in the Introduction, due to the presence of a complex white noise in Eq. (1.3), we cannot slavishly apply the framework devised in [11], since it cannot account for the defining properties listed in Eq. (1.4). Heuristically, one way to bypass this hurdle consists of adopting a different viewpoint, inspired by the analysis of complex valued quantum fields such as Dirac spinors, see, e.g., [1]. More precisely, using the notation and nomenclature of Sect. 1.1, we shall consider \(\psi \) and \({\bar{\psi }}\) as two a priori independent fields, imposing only at the end the constraint that they are related by complex conjugation. As will become clear in the following, this shift of perspective has the net advantage of allowing a simpler construction of the algebra deformations, see in particular Theorem 3.4, and it avoids any potential ordering problem between the field and its complex conjugate, which might arise if we did not keep them independent.

Definition 2.2

Let \(d,m\in {\mathbb {N}}\). We call functional-valued distribution \(u\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Fun}_{{\mathbb {C}}})\) a map

which is linear in the first entry and continuous in the locally convex topology of \({\mathcal {D}}({\mathbb {R}}^{d+1})\times {\mathcal {E}}({\mathbb {R}}^{d+1}) \times {\mathcal {E}}({\mathbb {R}}^{d+1})\). In addition, we denote by \(u^{(k,k^\prime )}\) the \((k,k^\prime )\)-th order functional derivative, namely the distribution \(u^{(k,k^\prime )}\in {\mathcal {D}}^\prime (\underbrace{{\mathbb {R}}^{d+1} \times \dots \times {\mathbb {R}}^{d+1}}_{k+k^\prime +1};\textsf{Fun}_{\mathbb {C}})\) such that

We say that a functional-valued distribution is polynomial if there exists \(({\bar{k}},{\bar{k}}^\prime )\in {\mathbb {N}}_0\times {\mathbb {N}}_0\), with \({\mathbb {N}}_0={\mathbb {N}}\cup \{0\}\), such that \(u^{(k,k^\prime )}=0\) whenever \(k\ge {\bar{k}}\) or \(k^\prime \ge {\bar{k}}^\prime \). The collection of all these functionals is denoted by \({\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\).

Observe that the subscript \({\mathbb {C}}\) in \({\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Fun}_{{\mathbb {C}}})\) and in \({\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) is introduced here, in contrast to the notation of [11], to highlight that, since we consider an SPDE with an additive complex white noise, we need to work with a different notion of functional-valued distribution. More precisely, Eq. (2.1) codifies that, in addition to the test-function f, we need two independent configurations, \(\eta \) and \(\eta ^\prime \), in order to build a functional-valued distribution. The goal is to encode in this framework the information that the random distributions \(\psi \) and \({\bar{\psi }}\) in Eq. (1.3) are to be considered a priori as independent. Their mutual relation via complex conjugation is codified only at the end of our analysis.

An immediate structural consequence of Definition 2.2 is encoded in the following corollary, whose proof is straightforward and is therefore omitted.

Corollary 2.3

The collection of polynomial functional-valued distributions can be endowed with the structure of a commutative \({\mathbb {C}}\)-algebra such that for all \(u,v\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\), and for all \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\),

A close inspection of Eq. (2.2) suggests the possibility of introducing a related notion of directional derivative of a functional \(u\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Fun}_{{\mathbb {C}}})\) by taking an arbitrary but fixed \(\zeta \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and setting, for all \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\),

In order to make Definition 2.2 more concrete, we list a few basic examples of polynomial functional-valued distributions, which shall play a key rôle in our investigation, particularly in the construction of the algebraic structures at the heart of our approach.

Example 2.4

For any \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\), we call

where dx is the standard Lebesgue measure on \({\mathbb {R}}^{d+1}\). Notice that \(\Phi \) and \({\overline{\Phi }}\) are two independently defined functionals and a priori they are not related by complex conjugation, as the symbols might suggest. This choice originates from our desire to follow an approach similar to the one often used in quantum field theory when analyzing the quantization of spinors, see, e.g., [1]. In that case, it is convenient to consider a field and its complex conjugate as a priori independent building blocks, since this makes the implementation of the canonical anticommutation relations easier. Here we wish to follow a similar rationale and we keep the symbols \(\Phi \) and \({\overline{\Phi }}\) as a reminder that, at the end of the analysis, one has to restore their mutual relation codified by complex conjugation.

In addition, we can construct composite functionals, namely for any \(p,q\in {\mathbb {N}}\), \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\), we call

Observe that, for convenience, we adopt the notation \(|\Phi |^{2k}\equiv \Phi ^k{\overline{\Phi }}^k={\overline{\Phi }}^k\Phi ^k\).

One can readily infer that, for all \(p,q\in {\mathbb {N}}\), \(\textbf{1},\Phi ,{\overline{\Phi }},{\overline{\Phi }}^p\Phi ^q\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\). On the one hand, all functional derivatives of \(\textbf{1}\) vanish, while, on the other hand

whereas \(\Phi ^{(k,k^\prime )}=0\) whenever \(k^\prime \ne 0\) or \(k\ge 2\). A similar conclusion can be drawn for \({\overline{\Phi }}\) and for \({\overline{\Phi }}^p\Phi ^q\).
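The directional derivative introduced above can be checked on the composite functionals of Example 2.4 in a discretized setting. The sketch below rests on assumptions which are ours and not quotations of the displayed formulas: a one-dimensional grid replaces \({\mathbb {R}}^{d+1}\), \(\Phi \) pairs f with the configuration \(\eta \), \({\overline{\Phi }}\) pairs f with the independent configuration \(\eta ^\prime \), and the pointwise product gives \(({\overline{\Phi }}^p\Phi ^q)(f;\eta ,\eta ^\prime )=\int dx\,f\,\eta ^{\prime p}\eta ^q\). It compares the closed-form derivative in the \(\eta \)-slot along \(\zeta \) with a central finite difference; all function names are hypothetical.

```python
import numpy as np

# Hypothetical one-dimensional grid standing in for R^{d+1}.
x = np.linspace(-3.0, 3.0, 301)
h = x[1] - x[0]

def composite(p, q, f, eta, eta_p):
    """Discretized (Phibar^p Phi^q)(f; eta, eta'), assumed equal to int f eta'^p eta^q dx."""
    return np.sum(f * eta_p**p * eta**q) * h

def d_eta(p, q, f, eta, eta_p, zeta):
    """Closed-form first directional derivative in the eta slot along zeta."""
    if q == 0:
        return 0.0
    return np.sum(f * q * eta**(q - 1) * eta_p**p * zeta) * h

def d_eta_fd(p, q, f, eta, eta_p, zeta, s=1e-5):
    """Central finite difference of s -> composite(..., eta + s*zeta, ...) at s = 0."""
    plus = composite(p, q, f, eta + s * zeta, eta_p)
    minus = composite(p, q, f, eta - s * zeta, eta_p)
    return (plus - minus) / (2.0 * s)

f = np.exp(-x**2)            # test function
eta = np.cos(x)              # configuration paired with Phi
eta_p = np.sin(x) + 2.0      # independent configuration paired with Phibar
zeta = x * np.exp(-x**2)     # direction of differentiation

print(d_eta_fd(2, 3, f, eta, eta_p, zeta), d_eta(2, 3, f, eta, eta_p, zeta))
```

For \(\Phi \) alone (\(p=0\), \(q=1\)) the first derivative in the \(\eta \)-slot no longer depends on \(\eta \), so all higher derivatives vanish, while a functional built only out of \({\overline{\Phi }}\) (\(q=0\)) is insensitive to variations of \(\eta \).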

In the spirit of a perturbative analysis of Eq. (1.3), the next step consists of encoding in the polynomial functionals the information that the linear part of the dynamics is ruled by the Schrödinger operator \(L=i\partial _t+\Delta \). To this end, let \(u\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Fun}_{{\mathbb {C}}})\) and let G be the fundamental solution of L whose integral kernel is as per Eq. (1.6). Then, for all \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and for all \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\),

where, in view of Remark 1.2 and of [19, Thm. 8.2.12], \(G\circledast f\in C^\infty ({\mathbb {R}}^{d+1})\) is such that, for all \(h\in {\mathcal {D}}({\mathbb {R}}^{d+1})\), \((G\circledast f)(h)\doteq G(f\otimes h)\).
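The convention \((G\circledast f)(h)\doteq G(f\otimes h)\), namely that \(G\circledast \) integrates over the first variable of the kernel, can be checked in a discretized setting. The sketch below is only an illustration under stated assumptions: a random complex matrix stands in for the kernel of G (no attempt is made to reproduce Eq. (1.6)), and the action on \(\Phi \) is assumed to be \((G\circledast \Phi )(f;\eta ,\eta ^\prime )=\Phi (G\circledast f;\eta ,\eta ^\prime )\), which is our reading of Eq. (2.6) rather than a quotation of it. Function names are hypothetical.

```python
import numpy as np

# Hypothetical discretization: n grid points of spacing h stand in for R^{d+1}.
rng = np.random.default_rng(1)
n, h = 50, 0.1
G = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))  # stand-in kernel G(x, y)
f = rng.standard_normal(n)                 # test function f
g = rng.standard_normal(n)                 # second test function (called h in the text)
eta = np.cos(np.linspace(0.0, 5.0, n))     # configuration eta

def pair(K, a, b):
    """Discrete K(a ⊗ b) = sum_{x, y} K(x, y) a(x) b(y) h^2."""
    return a @ K @ b * h**2

def circledast(K, a):
    """Discrete (K ⊛ a)(y) = sum_x K(x, y) a(x) h: integrate the first variable."""
    return a @ K * h

def Phi(test, eta):
    """Discrete Phi(test; eta, eta') = sum_x test(x) eta(x) h (independent of eta')."""
    return np.sum(test * eta) * h

lhs = np.sum(circledast(G, f) * g) * h    # (G ⊛ f)(g)
rhs = pair(G, f, g)                       # G(f ⊗ g)
G_Phi = Phi(circledast(G, f), eta)        # assumed form of (G ⊛ Phi)(f; eta, eta')
```

Under this assumption, \((G\circledast \Phi )(f;\eta ,\eta ^\prime )\) coincides with \(G(f\otimes \eta )\), so the fundamental solution acts on the test-function slot while leaving the configuration untouched.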

We can now combine all the ingredients introduced so far to build a distinguished commutative algebra. We proceed in steps, adapting the procedure outlined in [11] to the case at hand. As a starting point, we introduce

that is, the polynomial ring over \({\mathcal {E}}({\mathbb {R}}^{d+1})\) whose generators are the functionals \(\textbf{1},\Phi ,{\overline{\Phi }}\) defined in Example 2.4. The algebra product is the pointwise one introduced in Corollary 2.3. Subsequently, we encode the action of the fundamental solution of the Schrödinger operator as

where the action of G is defined in Eq. (2.6). Here, \({\overline{G}}\) stands for the complex conjugate of the fundamental solution G as in Eq. (1.6). Its action on a function is defined in complete analogy with Eq. (2.6). In order to account for the possibility of applying G, as well as \({\overline{G}}\), to our functionals more than once, we proceed inductively, defining, for every \(j\ge 1\),

Since \({\mathcal {A}}^{{\mathbb {C}}}_{j-1}\subset {\mathcal {A}}^{{\mathbb {C}}}_{j}\) for all \(j\ge 1\), the following definition is natural.

Definition 2.5

Let \({\mathcal {A}}^{{\mathbb {C}}}_j\), \(j\ge 0\), be the rings as per Eqs. (2.7) and (2.9). Then, we call \({\mathcal {A}}^{{\mathbb {C}}}\) the unital, commutative \({\mathbb {C}}\)-algebra obtained as the direct limit

Remark 2.6

For later convenience, it is important to realize that \({\mathcal {A}}^{{\mathbb {C}}}\) (resp. \({\mathcal {A}}^{{\mathbb {C}}}_j\), \(j\ge 0\)) can be regarded as a graded algebra over \({\mathcal {E}}({\mathbb {R}}^{d+1})\), i.e.,

Here, \({\mathcal {M}}_{m,m',l,l'}\) is the \({\mathcal {E}}({\mathbb {R}}^{d+1})\)-module generated by those elements in which the fundamental solutions G and \({\overline{G}}\) act l and \(l'\) times, respectively, while the overall polynomial degree in \(\Phi \) is m and the one in \({\overline{\Phi }}\) is \(m'\). The components of the decomposition of \({\mathcal {A}}^{{\mathbb {C}}}_{j}\) are defined as \({\mathcal {M}}_{m,m',l,l'}^j={\mathcal {M}}_{m,m',l,l'}\cap {\mathcal {A}}^{{\mathbb {C}}}_j\). In the following, a relevant rôle is played by the polynomial degree of an element lying in \({\mathcal {A}}^{{\mathbb {C}}}\), ignoring the occurrence of G and \({\overline{G}}\). Therefore, we introduce

Since it holds that \({\mathcal {M}}_k\subseteq {\mathcal {M}}_{k+1}\) for all \(k\ge 0\), the direct limit is well defined and it holds:

To conclude the section, we establish an estimate on the wavefront set of the derivatives of the functionals lying in \({\mathcal {A}}^{{\mathbb {C}}}\), see Definition 2.5. To this end, we fix the necessary preliminary notation, namely, for any \(k\in {\mathbb {N}}\) we set

where \(x_1,\cdots ,x_k\in {\mathbb {R}}^{d+1}\).

Definition 2.7

For any fixed \(m\in {\mathbb {N}}\), let us consider an arbitrary partition of the set \(\{1,\ldots ,m\}\) into the disjoint union of p non-empty subsets \(I_1\uplus \ldots \uplus I_p\), p being arbitrary. Employing the notations \({\widehat{x}}_m=(x_1,\ldots ,x_m)\in {\mathbb {R}}^{(d+1)m}\) and \({\widehat{t}}_{m}=(t_1,\ldots ,t_m)\in {\mathbb {R}}^m\), as well as their counterpart at the level of covectors, we set

where \(\vert I_j\vert \) denotes the cardinality of the set \(I_j\). Accordingly, we call

Remark 2.8

We observe that the elements of the space \({\mathcal {D}}'_C({\mathbb {R}}^{(d+1)};\textsf{Pol}_{{\mathbb {C}}})\) are distributions generated by smooth functions, as one can deduce from Eqs. (2.14) and (2.15) setting \(k=k^\prime =0\).

Remark 2.9

The space \({\mathcal {D}}'_C({\mathbb {R}}^{(d+1)};\textsf{Pol}_{{\mathbb {C}}})\) is stable with respect to the action of the fundamental solution G and of its complex conjugate, more precisely

The proof of this property follows the same lines as [11, Lemma 2.14], and thus, we omit it. We underline that the only difference concerns the form of \(\textrm{WF}(G)\), which is nonetheless accounted for by the definition of the sets in Eq. (2.14).

Another important result pertains to the estimate of the scaling degree of \(G\circledast \tau \). Before delving into its calculation, we recall the definition of weighted scaling degree at a point: given \(u\in {\mathcal {D}}'({\mathbb {R}}^{d+1})\) and \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\), consider the scaled function \(f_\lambda (t,x)=\lambda ^{-(d+2)}f(\lambda ^{-2}t,\lambda ^{-1}x)\) and \(u_\lambda (f)=u(f_\lambda )\).
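As an illustration of this definition, assume the usual convention \(\textrm{wsd}(u)=\inf \{\omega \in {\mathbb {R}}\,\vert \,\lim _{\lambda \rightarrow 0^+}\lambda ^{\omega }u_\lambda =0\}\) and take for G the translation-invariant kernel \(G(t,x)=\Theta (t)(4\pi i t)^{-d/2}e^{i\vert x\vert ^2/(4t)}\). The latter is an assumption on the normalization of Eq. (1.6); a constant prefactor plays no rôle for scaling. Since \(G(\lambda ^2 s,\lambda y)=\lambda ^{-d}G(s,y)\) for every \(\lambda >0\), the change of variables \(t=\lambda ^2 s\), \(x=\lambda y\) gives, formally and at the origin,

$$\begin{aligned} G_\lambda (f)=\int _{{\mathbb {R}}^{d+1}}G(t,x)\,\lambda ^{-(d+2)}f(\lambda ^{-2}t,\lambda ^{-1}x)\,dt\,dx=\int _{{\mathbb {R}}^{d+1}}G(\lambda ^{2}s,\lambda y)\,f(s,y)\,ds\,dy=\lambda ^{-d}\,G(f)\,, \end{aligned}$$

so that \(\lambda ^{\omega }G_\lambda \rightarrow 0\) if and only if \(\omega >d\). This sketch only covers the scaling of the convolution kernel at a point; the statement with respect to \(\Lambda _t\) relies on [11, App. B].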

In this work, we are interested in the scaling degree with respect to the hypersurface \(\Lambda _t\), see [7]. Under the aforementioned parabolic scaling, the fundamental solution G behaves homogeneously and a direct computation shows that \(\textrm{wsd}_{\Lambda _t}(G)=\textrm{wsd}_{\Lambda _t}({\overline{G}})=d\). Bearing in mind the preceding properties and referring to [11, App. B] and [11, Lemma 2.14] for the technical details, it holds

whenever \(\textrm{wsd}_{\Lambda ^{1+k_1+k_2}_t}(\tau )^{(k_1,k_2)}<\infty \). Here, \(\textrm{wsd}_{\Lambda ^{1+k_1+k_2}_t}\) denotes the scaling degree with respect to the subset

Remark 2.10

We underline that the choice of a parabolic scaling, which weights the time variable twice as much as the spatial ones, is natural, since this is the scaling transformation under which the linear part of the Schrödinger equation as well as its fundamental solution are scaling invariant.
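The parabolic homogeneity of the fundamental solution can also be checked numerically. The following Python sketch assumes the kernel \(G(t,x)=\Theta (t)(4\pi i t)^{-d/2}e^{i\vert x\vert ^2/(4t)}\), a hypothetical normalization of Eq. (1.6), and verifies that under \((t,x)\mapsto (\lambda ^2t,\lambda x)\) the kernel G picks up a factor \(\lambda ^{-d}\), while \(G\cdot {\overline{G}}=\vert G\vert ^2\) picks up \(\lambda ^{-2d}\). This is consistent with \(\textrm{wsd}_{\Lambda _t}(G)=d\) and with the value 2d quoted for \(G\cdot {\overline{G}}\) in the proof of Theorem 3.4. The function names are illustrative only.

```python
import cmath
import math


def schroedinger_kernel(t: float, x: list[float]) -> complex:
    """Assumed kernel Theta(t) (4*pi*i*t)^(-d/2) exp(i|x|^2/(4t)), with d = len(x).

    Hypothetical normalization of Eq. (1.6); constant prefactors do not
    change the degree of homogeneity.
    """
    if t <= 0:
        return 0j
    d = len(x)
    r2 = sum(xi * xi for xi in x)
    return (4 * math.pi * 1j * t) ** (-d / 2) * cmath.exp(1j * r2 / (4 * t))


def parabolic_ratio(t: float, x: list[float], lam: float, squared: bool = False) -> complex:
    """Return K(lam^2 t, lam x) / K(t, x) for K = G, or for K = |G|^2 if squared."""
    def kernel(s: float, y: list[float]) -> complex:
        g = schroedinger_kernel(s, y)
        return complex(abs(g) ** 2) if squared else g

    return kernel(lam**2 * t, [lam * xi for xi in x]) / kernel(t, x)


if __name__ == "__main__":
    t, x, lam = 0.7, [0.3, -1.2, 0.5], 0.37  # here d = 3
    print(parabolic_ratio(t, x, lam), lam ** -3)                # G scales like lam^(-d)
    print(parabolic_ratio(t, x, lam, squared=True), lam ** -6)  # |G|^2 like lam^(-2d)
```

The phase \(e^{i\vert x\vert ^2/(4t)}\) is exactly invariant under the parabolic scaling, so only the prefactor contributes to the ratio.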

The following proposition is the main result of this section.

Proposition 2.11

Let \({\mathcal {A}}^{{\mathbb {C}}}\) be the algebra introduced in Definition 2.5. In view of Definition 2.7, it holds that

Proof

In view of the characterization of \({\mathcal {A}}^{{\mathbb {C}}}\) as an inductive limit, see Definition 2.5, it suffices to prove the statement for each \({\mathcal {A}}^{{\mathbb {C}}}_j\) as in Eqs. (2.7) and (2.9). To this end, we proceed inductively on the index j.

Step 1 – \(j=0\): In view of Eq. (2.7), it suffices to focus on the collection of generators \(u_{p,q}\equiv {\overline{\Phi }}^p\Phi ^q\), \(p,q\ge 0\), see Example 2.4. If \(p=q=0\), there is nothing to prove since we are considering the identity functional \(\textbf{1}\), whose derivatives all vanish. In all other cases, a direct calculation shows that the derivatives yield either smooth functionals or suitable products between smooth functions and Dirac delta distributions. By comparison with Definition 2.7, we can conclude

Step 2 – \(j\ge 1\): As a first step, we observe that if \(u\in {\mathcal {A}}^{{\mathbb {C}}}_j\cap {\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1}; \textsf{Pol}_{{\mathbb {C}}})\), then \(G\circledast u,{\overline{G}}\circledast u\in {\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\). As a matter of fact, Eq. (2.6) entails that, for every \(k,k^\prime \ge 0\), \((G\circledast u)^{(k,k^\prime )}=u^{(k,k^\prime )}\cdot (G\otimes 1_{k+k^\prime })\), the dot standing for the pointwise product of distributions, while 1 stands here for the identity operator. Since \(\textrm{WF}(u^{(k,k^\prime )})\subseteq C_{k+k^\prime +1}\) by hypothesis, it suffices to apply [11, Lemma 2.14], see Remark 2.9, in combination with Remark 1.2 to conclude that \(\textrm{WF}((G\circledast u)^{(k,k^\prime )})\subseteq C_{k+k^\prime +1}\). The same line of reasoning entails that an identical conclusion can be drawn for \({\overline{G}}\).

We can now focus on the inductive step. Therefore, let us assume that the statement of the proposition holds true for \({\mathcal {A}}^{{\mathbb {C}}}_j\). In view of Eq. (2.9), \({\mathcal {A}}^{{\mathbb {C}}}_{j+1}\) is generated by \({\mathcal {A}}^{{\mathbb {C}}}_j\), \(G\circledast {\mathcal {A}}^{{\mathbb {C}}}_j\) and \({\overline{G}}\circledast {\mathcal {A}}^{{\mathbb {C}}}_j\). On account of the inductive hypothesis and of the previous observation, it suffices to focus on the pointwise product of two functionals, say u and v, each lying in one of the generating algebras.

For definiteness, we focus on \(u\in {\mathcal {A}}^{{\mathbb {C}}}_j\) and \(v\in {\mathcal {A}}^{{\mathbb {C}}}_j\), the other cases following suit. Using the Leibniz rule, for every \(k,k^\prime \ge 0\), it turns out that \((uv)^{(k,k^\prime )}\) is a linear combination with smooth coefficients of products of distributions of the form \(u^{(p,p^\prime )}\otimes v^{(q,q^\prime )}\) with \(0\le p,q\le k\), \(0\le p^\prime ,q^\prime \le k^\prime \), \(p+q=k\) and \(p^\prime +q^\prime =k^\prime \). The inductive hypothesis entails

A direct inspection of Eq. (2.14) entails that if \((t,x,\omega _1,\kappa _1,s_{p+p'},y_{p+p^\prime },\omega _{s}, \kappa _y)\in C_{p+p^\prime +1}\) while \((t,x,\omega _2,\kappa _2,r_{q+q'},z_{q+q^\prime },\omega _{r}, \kappa _z)\in C_{q+q^\prime +1}\) then

from which it descends that \(\textrm{WF}(u^{(p,p^\prime )}\otimes v^{(q,q^\prime )})\subseteq C_{k+k^\prime +1}\). \(\square \)
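The inductive structure exploited in Definition 2.5 and in the proof above, where \({\mathcal {A}}^{{\mathbb {C}}}_{j+1}\) is generated by \({\mathcal {A}}^{{\mathbb {C}}}_j\), \(G\circledast {\mathcal {A}}^{{\mathbb {C}}}_j\) and \({\overline{G}}\circledast {\mathcal {A}}^{{\mathbb {C}}}_j\), together with the grading of Remark 2.6, can be mirrored by a small expression tree. The following Python sketch is purely combinatorial and hypothetical: it ignores the smooth coefficients in \({\mathcal {E}}({\mathbb {R}}^{d+1})\) and all analytic content, and for a formal monomial it only tracks the degrees \((m,m')\) in \(\Phi ,{\overline{\Phi }}\), the numbers \((l,l')\) of applications of G and \({\overline{G}}\), and the nesting depth j of the tree.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Expr:
    """Formal monomial built from 1, Phi, conj(Phi), products and G, conj(G)."""
    kind: str                      # "one", "phi", "phibar", "mul", "G" or "Gbar"
    args: tuple[Expr, ...] = ()


ONE, PHI, PHIBAR = Expr("one"), Expr("phi"), Expr("phibar")


def mul(a: Expr, b: Expr) -> Expr:
    return Expr("mul", (a, b))


def G(a: Expr) -> Expr:
    return Expr("G", (a,))


def Gbar(a: Expr) -> Expr:
    return Expr("Gbar", (a,))


def grade(e: Expr) -> tuple[int, int, int, int]:
    """Return (m, m', l, l'): degrees in Phi and conj(Phi), numbers of G and conj(G)."""
    if e.kind == "one":
        return (0, 0, 0, 0)
    if e.kind == "phi":
        return (1, 0, 0, 0)
    if e.kind == "phibar":
        return (0, 1, 0, 0)
    if e.kind == "mul":
        g1, g2 = grade(e.args[0]), grade(e.args[1])
        return (g1[0] + g2[0], g1[1] + g2[1], g1[2] + g2[2], g1[3] + g2[3])
    m, mp, l, lp = grade(e.args[0])
    if e.kind == "G":
        return (m, mp, l + 1, lp)
    if e.kind == "Gbar":
        return (m, mp, l, lp + 1)
    raise ValueError(f"unknown node {e.kind!r}")


def level(e: Expr) -> int:
    """Nesting depth j: products stay in A_j, applying G or conj(G) moves to A_{j+1}."""
    if e.kind in ("one", "phi", "phibar"):
        return 0
    if e.kind == "mul":
        return max(level(a) for a in e.args)
    return level(e.args[0]) + 1


if __name__ == "__main__":
    tau = mul(G(mul(PHI, PHIBAR)), Gbar(G(PHI)))
    print(grade(tau), level(tau))  # (2, 1, 2, 1) 2
```

Reading the tree formally, \(\texttt{level}\) returns the smallest j with \(\tau \in {\mathcal {A}}^{{\mathbb {C}}}_j\), which is the index along which the induction in the proof of Proposition 2.11 runs.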

3 The Algebra \({\mathcal {A}}^{{\mathbb {C}}}_{\cdot _Q}\)

The algebra \({\mathcal {A}}^{{\mathbb {C}}}\) introduced in Definition 2.5 does not codify the information associated with the stochastic nature of the underlying white noise.

In order to encode such datum in the functional-valued distributions and in the same spirit as [11], we introduce a new algebra which is obtained as a deformation of the pointwise product of \({\mathcal {A}}^{{\mathbb {C}}}\). As will become manifest in the following discussion, this construction is a priori purely formal unless a suitable renormalization procedure is implemented.

Remark 3.1

As a premise, we introduce the following bi-distribution, constructed out of the fundamental solution \(G\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) as per Eq. (1.6):

where \(\circ \) denotes the composition of bi-distributions, namely, for any \(f_1,f_2\in {\mathcal {D}}({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\),

Here, with a slight abuse of notation, \(1_{d+1}\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1})\) stands for the distribution generated by the constant smooth function 1 on \({\mathbb {R}}^{d+1}\) while \(\cdot \) indicates the pointwise product of distributions, see [19, Thm. 8.2.10]. Further properties of the composition \(\circ \) are discussed in [11, App. A].

From the perspective of the stochastic process at the heart of Eq. (1.3), we observe that the bi-distribution Q codifies the covariance of the complex Gaussian random field \(\widehat{\varphi }=G*\xi \). More explicitly, it holds

Observe, in addition, that \(\widehat{\varphi }\) can also be read as a solution of the stochastic linear Schrödinger equation, namely Eq. (1.3) with \(\lambda =0\).
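A finite-dimensional analogue makes the relation between Q and the covariance of \(\widehat{\varphi }=G*\xi \) concrete. In the Python sketch below, which is an illustration under stated assumptions rather than part of the construction, the fundamental solution is replaced by a hypothetical complex matrix \(\texttt{g}\), the complex white noise by a vector of i.i.d. standard complex Gaussians with \(E[\xi _i\overline{\xi _j}]=\delta _{ij}\) and \(E[\xi _i\xi _j]=0\), and the composition \(\circ \) by matrix multiplication. Then \(E[\widehat{\varphi }_i\overline{\widehat{\varphi }_j}]=(GG^*)_{ij}\), with \(G^*\) the conjugate transpose, mirroring \(G^*(x,x')=\overline{G(x',x)}\), whereas \(E[\widehat{\varphi }_i\widehat{\varphi }_j]=0\).

```python
import math
import random

Matrix = list[list[complex]]


def conj_transpose(a: Matrix) -> Matrix:
    return [[a[j][i].conjugate() for j in range(len(a))] for i in range(len(a[0]))]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def complex_white_noise(n: int, rng: random.Random) -> list[complex]:
    """n i.i.d. standard complex Gaussians: E[xi conj(xi)] = 1, E[xi xi] = 0."""
    s = 1 / math.sqrt(2)  # real and imaginary parts each have variance 1/2
    return [complex(rng.gauss(0, s), rng.gauss(0, s)) for _ in range(n)]


def empirical_covariances(g: Matrix, n_samples: int, seed: int = 0) -> tuple[Matrix, Matrix]:
    """Monte Carlo estimates of E[phi_i conj(phi_j)] and E[phi_i phi_j] for phi = g xi."""
    rng = random.Random(seed)
    n = len(g)
    cov = [[0j] * n for _ in range(n)]
    pseudo = [[0j] * n for _ in range(n)]
    for _ in range(n_samples):
        xi = complex_white_noise(len(g[0]), rng)
        phi = [sum(gik * xk for gik, xk in zip(row, xi)) for row in g]
        for i in range(n):
            for j in range(n):
                cov[i][j] += phi[i] * phi[j].conjugate()
                pseudo[i][j] += phi[i] * phi[j]
    scale = 1 / n_samples
    return ([[c * scale for c in row] for row in cov],
            [[c * scale for c in row] for row in pseudo])


if __name__ == "__main__":
    g = [[1.0 + 0j, 0j], [0.5j, 1.0 + 0j]]  # lower triangular: a toy "retarded" kernel
    q = matmul(g, conj_transpose(g))        # discrete analogue of Q
    cov, pseudo = empirical_covariances(g, 20_000)
    print(q)
    print(cov)
    print(pseudo)
```

The vanishing of the pseudo-covariance \(E[\widehat{\varphi }_i\widehat{\varphi }_j]\) in this toy model reflects why only mixed contractions between \(\Phi \) and \({\overline{\Phi }}\) are expected to contribute.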

To conclude our excursus on the bi-distribution Q, we highlight two notable properties of its singular structure:

1. in view of [19, Thm. 8.2.14],

$$\begin{aligned} \textrm{WF}(Q)\subseteq \textrm{WF}(G)\,; \end{aligned}$$

2. as a consequence of [11, Lemma B.12],

$$\begin{aligned} \textrm{wsd}_{{\Lambda ^2_t}}(Q)<\infty , \end{aligned}$$where \(\textrm{wsd}_{{\Lambda ^2_t}}(Q)\) denotes the weighted scaling degree of the distribution \(Q\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) with respect to \(\Lambda _t^2{:}{=}\{(t_1,x_1,t_2,x_2)\in {\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1}\,\vert \,t_1=t_2\}\).

We are now in a position to introduce the sought deformation which encodes the stochastic properties due to the complex white noise present in Eq. (1.3). Inspired by [11], as a tentative starting point we set, for any \(\tau _1,\tau _2\in {\mathcal {A}}^{\mathbb {C}}\), \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) and \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\),

Here \(\tilde{\otimes }\) denotes the tensor product composed with a permutation of its arguments, which, at the level of integral kernels, reads

Remark 3.2

We observe that only a finite number of terms contributes to the sum on the right hand side of Eq. (3.3) on account of the polynomial nature of the functional-valued distributions \(\tau _1\) and \(\tau _2\). Nonetheless, at this stage, the right hand side of Eq. (3.3) is only a formal expression, since it can include a priori ill-defined structures such as the coinciding point limit of Q and \({\overline{Q}}\). In the following theorem, we shall bypass this hurdle by means of a renormalization procedure so as to give meaning to Eq. (3.3) for any \(\tau _1,\tau _2\in {\mathcal {A}}^{\mathbb {C}}\).

The main motivation for the introduction of a deformation of the algebraic structure is to build an explicit algorithm for computing expectation values and correlation functions of polynomial expressions in the random fields \(\widehat{\varphi }=G*\xi \) and \(\overline{\widehat{\varphi }}={\overline{G}}*{\overline{\xi }}\). For this reason, let us illustrate the stochastic interpretation of the deformed product \(\cdot _Q\) and its link to the expectation values via the following example.

Example 3.3

Formally, at the level of integral kernels and referring to the defining properties of the complex white noise in Eq. (1.4), it holds that

for every \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\). Now let us consider the expectation value of \(\vert \widehat{\varphi }\vert ^2=\widehat{\varphi }\overline{\widehat{\varphi }}\):

where we used Eq. (1.5) together with the relation between the fundamental solution of L and its formal adjoint \(G^*\), \(G^*(x,x')=\overline{G(x',x)}\), cf. Remark 1.2. On account of Eqs. (3.3) and (3.9), we can compute

Observe that, fixing \(\eta '={\overline{\eta }}\), the two configurations are no longer independent, allowing us to make contact with the stochastic nature of the equation. Evaluating these expressions for \(\eta =0\), we obtain

As stated in Remark 3.2, the expressions above are a priori ill-defined on account of the singular contribution \(Q\delta _{Diag_2}\). This problem is tackled in Theorem 3.4.

This example can be readily extended to arbitrary polynomial expressions of \(\widehat{\varphi }\) and \(\overline{\widehat{\varphi }}\), highlighting how our guess for the deformed product \(\cdot _Q\) properly encodes the expectation values.
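For higher monomials at a single point, a toy computation shows what such an extension looks like. Replace the a priori ill-defined coinciding-point value \(Q(x,x)\) by a finite number \(q>0\), as one would after the renormalization discussed in Theorem 3.4, and consider a single centered complex Gaussian variable z with \(E[z\overline{z}]=q\) and \(E[z^2]=0\). Since only pairings of a z with a \(\overline{z}\) survive, one has the classical formula \(E[z^m\overline{z}^n]=\delta _{mn}\,m!\,q^m\), which the following Python sketch compares with a Monte Carlo estimate. Function names and parameters are illustrative assumptions.

```python
import math
import random


def exact_moment(m: int, n: int, q: float) -> float:
    """E[z^m conj(z)^n] for a centered complex Gaussian with E|z|^2 = q and E[z^2] = 0.

    Only pairings of a z with a conj(z) contribute; there are m! of them when m == n.
    """
    return math.factorial(m) * q**m if m == n else 0.0


def monte_carlo_moment(m: int, n: int, q: float, n_samples: int = 100_000, seed: int = 1) -> complex:
    """Sample mean of z^m conj(z)^n over n_samples draws."""
    rng = random.Random(seed)
    s = math.sqrt(q / 2)  # real and imaginary parts each have variance q/2
    acc = 0j
    for _ in range(n_samples):
        z = complex(rng.gauss(0, s), rng.gauss(0, s))
        acc += z**m * z.conjugate() ** n
    return acc / n_samples


if __name__ == "__main__":
    q = 0.8
    for m, n in [(1, 1), (2, 2), (2, 1)]:
        print(m, n, exact_moment(m, n, q), monte_carlo_moment(m, n, q))
```

The case \((m,n)=(1,1)\) reproduces, in this toy setting, the expectation value of \(\vert \widehat{\varphi }\vert ^2\) computed in Example 3.3.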

Theorem 3.4

Let \({\mathcal {A}}^{\mathbb {C}}\) be the algebra introduced in Definition 2.5 and let \({\mathcal {M}}_{m,m^\prime }\) be the modules as per Remark 2.6. There exists a linear map \(\Gamma _{\cdot _Q}^{\mathbb {C}}:{\mathcal {A}}^{\mathbb {C}}\rightarrow {\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1};\textrm{Pol}_{\mathbb {C}})\) such that:

1. for any \(\tau \in {\mathcal {M}}_{1,0}\cup {\mathcal {M}}_{0,1}\), it holds

$$\begin{aligned} \Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )=\tau \,; \end{aligned}$$(3.5)

2. for any \(\tau \in {\mathcal {A}}^{\mathbb {C}}\), it holds

$$\begin{aligned}&\Gamma _{\cdot _Q}^{\mathbb {C}}(G\circledast \tau )=G\circledast \Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )\,,\nonumber \\&\Gamma _{\cdot _Q}^{\mathbb {C}}({\overline{G}}\circledast \tau )={\overline{G}}\circledast \Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )\,; \end{aligned}$$(3.6)

3. for any \(\tau \in {\mathcal {A}}^{\mathbb {C}}\) and \(\psi \in {\mathcal {E}}({\mathbb {R}}^{d+1})\), it holds

$$\begin{aligned}&\Gamma _{\cdot _Q}^{\mathbb {C}}\circ \delta _\psi =\delta _{\psi }\circ \Gamma _{\cdot _Q}^{\mathbb {C}}\,,\qquad \Gamma _{\cdot _Q}^{\mathbb {C}}\circ \delta _{{\overline{\psi }}}=\delta _{{\overline{\psi }}}\circ \Gamma _{\cdot _Q}^{\mathbb {C}}\,,\nonumber \\&\Gamma _{\cdot _Q}^{\mathbb {C}}(\psi \tau )=\psi \Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )\,,\qquad \Gamma _{\cdot _Q}^{\mathbb {C}}({\overline{\psi }}\tau )={\overline{\psi }}\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )\,, \end{aligned}$$(3.7)

where \(\delta _\psi \) denotes the directional functional derivative along \(\psi \) as per Eq. (2.4);

4. denoting by \(\sigma _{(p,q)}(\tau )=\textrm{wsd}_{\textrm{Diag}_{p+q+1}}(\tau ^{(p,q)})\) the weighted scaling degree of \(\tau ^{(p,q)}\) with respect to the total diagonal of \(({\mathbb {R}}^{d+1})^{p+q+1}\), see [11, Rmk. B.9], it holds

$$\begin{aligned} \sigma _{(p,q)}(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau ))<\infty \,,\qquad \forall \,\tau \in {\mathcal {A}}^{\mathbb {C}}\,. \end{aligned}$$(3.8)

Proof

Strategy. The construction of a map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) satisfying the conditions in the statement of the theorem goes by induction with respect to the indices m and \(m^\prime \) in the decomposition \({\mathcal {A}}^{\mathbb {C}}=\bigoplus _{m,m^\prime \in {\mathbb {N}}}{\mathcal {M}}_{m,m^\prime }\), discussed in Remark 2.6. Since the proof shares many similarities with the counterpart in [11] for the case of a stochastic, scalar, partial differential equation, we shall focus mainly on the different aspects. We start from Eq. (3.5) and, for \(\tau _1,\ldots ,\tau _n\in {\mathcal {A}}^{\mathbb {C}}\), we set

where the product \(\cdot _Q\) is given by Eq. (3.3). As we anticipated above, the right hand side of Eq. (3.9) might be only formal since the product \(\cdot _Q\) is not a priori well defined on the whole \({\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1};\textrm{Pol}_{\mathbb {C}})\).

The Case \(\mathbf {d=1}\): In this scenario, the divergence of \(Q\delta _{\text {Diag}_2}\) needs no taming. Working at the level of integral kernels, see Eq. (1.6), the composition \(Q=G\circ G\) yields a contribution proportional to \(\int _{{\mathbb {R}}}dt/t\sim \log (t)\), which is locally integrable. As a consequence, in this scenario no renormalization is needed and the proof follows straightforwardly from the conditions in the statement of the theorem and from Eq. (3.9).

The Case \(\mathbf {d\ge 2}\): Step 1. We start by showing that, whenever \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) is well defined for \(\tau \in {\mathcal {A}}^{\mathbb {C}}\) in such a way that, in particular, Eqs. (3.7) and (3.8) hold true, then the same applies to \(G\circledast \tau \) and \({\overline{G}}\circledast \tau \). We shall only discuss the case of \(G\circledast \tau \), the other following suit.

First of all we notice that \(\Gamma _{\cdot _Q}^{\mathbb {C}}(G\circledast \tau )\) is completely defined by Eq. (3.6). In addition, Equation (3.8) for \(\Gamma _{\cdot _Q}^{\mathbb {C}}(G\circledast \tau )\) is a direct consequence of Remark 2.9. Finally, Eq. (3.7) descends from an iteration of the following argument, namely, for any \(\eta ,\eta ^\prime ,\zeta \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\),

With these data at hand, we can start the inductive procedure.

Step 2: \(\mathbf {(m,m^\prime )=(1,1)}\). As a starting point, we observe that Eq. (3.5) completely determines the map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) restricted to the modules \({\mathcal {M}}_{(1,0)}\) and \({\mathcal {M}}_{(0,1)}\). In addition, all other required properties hold true automatically. Next, we focus our attention on \({\mathcal {M}}_{(1,1)}\). In order to consistently construct \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) on \({\mathcal {M}}_{(1,1)}\), we rely on an inductive argument with respect to the index j subordinated to the decomposition, see Remark 2.6:

Setting \(j=0\), we observe that

On account of the previous discussion, we are left with the task of defining the action of \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) on \(\Phi {\overline{\Phi }}\). This suffices since \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) can be consequently extended to the whole \({\mathcal {M}}_{(1,1)}^0\) by linearity. Recalling that \(\Phi {\overline{\Phi }}\) is defined as in Eq. (2.5), we set, formally, for any \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\),

where in the last equality we exploited Eq. (3.3) and where \(G\cdot {\overline{G}}\) denotes the pointwise product between G and \({\overline{G}}\).

We observe that the above formula is purely formal due to the presence of the product \(G\cdot {\overline{G}}\), which is a priori ill-defined. As a matter of fact, due to the microlocal behavior of G codified in Remark 1.2 and using Hörmander's criterion for the multiplication of distributions [19, Thm. 8.2.10], we can only conclude that \(G\cdot {\overline{G}}\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1}\setminus \Lambda ^2_t)\). Since \(\textrm{wsd}_{\Lambda ^2_t}(G\cdot {\overline{G}})=2d\), [11, Thm. B.8] and [11, Rmk. B.9] entail the existence of an extension \(\widehat{G\cdot {\overline{G}}}\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) of \(G\cdot {\overline{G}}\) which preserves both the scaling degree and the wave-front set. Having chosen once and for all any such extension, we set for all \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\),

To conclude, we observe that Eq. (3.10) implies Eqs. (3.7) and (3.8) by direct inspection, as well as that \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\Phi {\overline{\Phi }})\in {\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1};\textrm{Pol}_{\mathbb {C}})\) on account of Remark 1.2.

Having consistently defined \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) on \({\mathcal {M}}_{(1,1)}^0\), we assume that the map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) has been coherently assigned on \({\mathcal {M}}_{(1,1)}^j\) and we prove the inductive step by constructing it on \({\mathcal {M}}_{(1,1)}^{j+1}\). We remark that, given \(\tau \in {\mathcal {M}}_{(1,1)}^{j+1}\), either \(\tau =G\circledast \tau ^\prime \) with \(\tau ^\prime \in {\mathcal {M}}_{(1,1)}^j\) (the case with the complex conjugate \({\overline{G}}\) is analogous), or \(\tau =\tau _1\tau _2\) with \(\tau _1\in {\mathcal {M}}_{(1,0)}^j\cup G\circledast {\mathcal {M}}_{(1,0)}^j\cup {\overline{G}}\circledast {\mathcal {M}}_{(1,0)}^j\) while \(\tau _2\in {\mathcal {M}}_{(0,1)}^j\cup G\circledast {\mathcal {M}}_{(0,1)}^j\cup {\overline{G}}\circledast {\mathcal {M}}_{(0,1)}^j\).

In the first case, \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau )\) is defined as per Step 1 of this proof, using in addition the inductive hypothesis. In the second one, we start from the formal expression which descends from Eq. (3.9). It yields, for all \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\) and \(f\in {\mathcal {D}}({\mathbb {R}}^{d+1})\),

where

and where \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau _1)\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau _2)\) denotes the pointwise product between the functional-valued distributions, which is well defined on account of Remark 2.8. Yet, Eq. (3.11) involves the product of singular distributions and thus calls for a renormalization procedure. First of all, we observe that, due to the microlocal behavior of Q (cf. Remark 3.1) and to the inductive hypothesis entailing

it follows that

where \(\Lambda _t^{4,\textrm{Big}}{:}{=}\{({\widehat{t}},{\widehat{x}})\in ({\mathbb {R}}^{d+1})^4\,\vert \,\exists a,b\in \{1,2,3,4\}, a\ne b, t_a=t_b\}\).

This estimate can be improved observing that, whenever \(({\widehat{t}},{\widehat{x}})\in \Lambda _t^{4,\textrm{Big}}\setminus \Lambda _t^4\), one of the factors among \((\delta _{\textrm{Diag}_2}\otimes Q)\), \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau _1)^{(1,0)}\) and \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau _2)^{(0,1)}\) is smooth and the product of the remaining two is well-defined.

As a consequence, \(U\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^4\setminus \Lambda _t^4)\). Furthermore, [11, Rmk. B.7] here applied to the weighted scaling degree guarantees that

Once more, [11, Thm. B.8] and [11, Rmk. B.9] entail the existence of an extension \({\widehat{U}}\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^4)\) of \(U\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^4\setminus \Lambda _t^4)\) which preserves both the scaling degree and the wave-front set. Finally, we set

By direct inspection, one can realize that Eq. (3.12) satisfies the conditions codified in Eqs. (3.7) and (3.8). This concludes the proof for the case \((m,m^\prime )=(1,1)\).

Step 3: Generic \(\mathbf {(m,m^\prime )}\). We can now prove the inductive step with respect to the indices \((m,m^\prime )\). In particular, we assume that \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) has been consistently assigned on \({\mathcal {M}}_{(m,m^\prime )}\) and we prove that the same holds true for \({\mathcal {M}}_{(m+1,m^\prime )}\). We stress that one should prove the same statement also for \({\mathcal {M}}_{(m,m^\prime +1)}\), but since the analysis is mutatis mutandis the same as for \({\mathcal {M}}_{(m+1,m^\prime )}\), we omit it.

Once more we employ an inductive procedure exploiting that \({\mathcal {M}}_{(m+1,m^\prime )}=\bigoplus _{j\in {\mathbb {N}}}{\mathcal {M}}_{(m+1,m^\prime )}^j\), with \({\mathcal {M}}_{(m+1,m^\prime )}^j{:}{=}{\mathcal {M}}_{(m+1,m^\prime )}\cap {\mathcal {A}}_j^{\mathbb {C}}\). First of all, we focus on the case \(j=0\), observing that

On account of the inductive hypothesis, the map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) has already been assigned on all the generators of \({\mathcal {M}}_{(m+1,m^\prime )}^0\) except \(\Phi ^{m+1}{\overline{\Phi }}^{m^\prime }\). Hence, to determine \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) on the whole \({\mathcal {M}}_{(m+1,m^\prime )}^0\), it suffices to establish its action on \(\Phi ^{m+1}{\overline{\Phi }}^{m^\prime }\) and to extend it by linearity. To this end, we exploit again Eq. (3.9) which, on account of the explicit form of Eq. (3.3), yields

where \((m+1)\wedge m^\prime =\min \{m+1,m^\prime \}\) and where the symbol \(Q_{2k}\) is a shortcut notation for

Although the distribution \(Q_{2k}\) is a priori ill-defined, we recall from Step 2 of this proof that \(G{\overline{G}}\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^2\setminus \Lambda _t^2)\) admits an extension \(\widehat{G{\overline{G}}}\). In turn, this yields a renormalized version of \(Q_{2k}\), i.e.,

Bearing in mind these premises, we set

which is well defined since it involves only products of distributions generated by smooth functions. All required properties of \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) are satisfied by direct inspection.

Finally, we discuss the last inductive step, namely we assume that \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) has been assigned on \({\mathcal {M}}_{(m+1,m^\prime )}^j\) and we construct it consistently on \({\mathcal {M}}_{(m+1,m^\prime )}^{j+1}\). To this end, let us consider \(\tau \in {\mathcal {M}}_{(m+1,m^\prime )}^{j+1}\). Similarly to the inductive procedure in Step 2, on account of the definition of \({\mathcal {M}}_{(m+1,m^\prime )}^{j+1}\) and of the linearity of \(\Gamma _{\cdot _Q}^{\mathbb {C}}\), it suffices to consider elements \(\tau \) either of the form \(\tau =G\circledast \tau ^\prime \) with \(\tau ^\prime \in {\mathcal {M}}_{(m+1,m^\prime )}^{j}\) or of the form

where for any \(i\in \{1,\ldots ,\ell \}\) it holds \(m_i,m_i^\prime \in {\mathbb {N}}\setminus \{0\}\) and \(\sum _{i=1}^\ell m_i=m+1\) and \(\sum _{i=1}^\ell m^\prime _i=m^\prime \).

We observe that in the first case the sought result descends as a direct consequence of Step 1 and of the inductive hypothesis. Hence, we focus only on the second one. In view of the inductive hypothesis, \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) has already been assigned on \(\tau _i\) for all \(i\in \{1,\ldots ,\ell \}\). As before, Eq. (3.9) yields a formal expression, namely

where we have introduced the notation \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\tau _i)=U_i\) and where the symbols \({\mathcal {F}}(N_1,\ldots ,N_\ell ;M_1,\ldots ,M_\ell )\) are \({\mathbb {C}}\)-numbers stemming from the underlying combinatorics. Their explicit form plays no rôle, and hence, we omit them. First of all, since we consider only polynomial functionals, only a finite number of terms in the above sum is non-vanishing. Nonetheless, the expression in Eq. (3.13) is still purely formal and renormalization needs to be accounted for. To be more precise, let us introduce

On the one hand, by [19, Thm. 8.2.9],

while, on the other hand, by the inductive hypothesis, \(\textrm{WF}(U_{i}^{(N_i,M_i)})\subseteq C_{N_i+M_i+1}\) for any \(i\in \{1,\ldots ,\ell \}\). These two facts, together with [19, Thm. 8.2.10], imply \(U_{N,M}\in {\mathcal {D}}'(({\mathbb {R}}^{d+1})^{\ell +2N+2M}\setminus \Lambda _t^{\ell +2N+2M,\textrm{Big}})\), where

In addition, again by [19, Thm. 8.2.10], it holds

This estimate can be improved through the following argument, which is a generalization of the one used in Step 2. Let \(\{A,B\}\) be a partition of the index set \(\{1,\ldots ,\ell +2N+2M\}\) into two disjoint sets such that, if \(\{t_1,x_1,\ldots ,t_{\ell +2N+2M},x_{\ell +2N+2M}\}=\{{\widehat{t}}_A,{\widehat{x}}_A,{\widehat{t}}_B,{\widehat{x}}_B\}\), then \(t_a\ne t_b\) for any \(t_a\in {\widehat{t}}_A\) and \(t_b\in {\widehat{t}}_B\). In this scenario, the integral kernel of \(U_{N,M}\) can be decomposed as

where the kernel \(S_{N,M}({\widehat{t}}_A,{\widehat{x}}_A,{\widehat{t}}_B,{\widehat{x}}_B)\) is smooth in the region singled out by such a partition and where \(K^A_{N,M}({\widehat{t}}_A,{\widehat{x}}_A)\) and \(K^B_{N,M}({\widehat{t}}_B,{\widehat{x}}_B)\) are kernels of distributions appearing in the definition of the map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) on \({\mathcal {M}}_{(k,k^\prime )}^p\) with \(k<m+1\), \(k^\prime <m^\prime \) and \(p<j+1\). This, together with the inductive hypothesis, implies that the product \((K^A_{N,M}\otimes K^B_{N,M})\cdot S_{N,M}\) is well defined. Since this argument is independent of the partition \(\{A,B\}\), it follows that \(U_{N,M}\in {\mathcal {D}}'(({\mathbb {R}}^{d+1})^{\ell +2N+2M}\setminus \Lambda _t^{\ell +2N+2M})\). To conclude, observing that

once more, [11, Thm. B.8] and [11, Rmk. B.9] grant the existence of an extension \({\widehat{U}}_{N,M}\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^{\ell +2N+2M})\) of \(U_{N,M}\in {\mathcal {D}}^\prime (({\mathbb {R}}^{d+1})^{\ell +2N+2M}\setminus \Lambda _t^{\ell +2N+2M})\) which preserves the scaling degree and the wave-front set. This allows us to set

Following the same strategy as in the proof of [11, Thm. 3.1], mutatis mutandis, one can show that Eq. (3.14) satisfies the conditions required in the statement of the theorem. \(\square \)
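The partition argument used in Steps 2 and 3 rests on an elementary observation. A configuration lies in \(\Lambda _t^{n,\textrm{Big}}\) as soon as two time coordinates coincide, whereas, reading \(\Lambda _t^{n}\) as the total time diagonal where all n times coincide (our interpretation, consistent with \(\Lambda _t^2\) in Remark 3.1), every point off \(\Lambda _t^{n}\) admits a partition \(\{A,B\}\) with \(t_a\ne t_b\) for all \(a\in A\), \(b\in B\). The following Python sketch, with hypothetical helper names and 0-based indices, constructs such a partition by collecting the indices whose time equals the first one.

```python
from typing import Optional


def separating_partition(times: list[float]) -> Optional[tuple[list[int], list[int]]]:
    """Return (A, B) with times[a] != times[b] for all a in A, b in B.

    Returns None exactly when all times coincide, i.e. on the total time diagonal.
    """
    if not times:
        return None
    t0 = times[0]
    a = [i for i, t in enumerate(times) if t == t0]
    b = [i for i, t in enumerate(times) if t != t0]
    return (a, b) if b else None


def on_big_time_diagonal(times: list[float]) -> bool:
    """True if at least two time coordinates coincide."""
    return len(set(times)) < len(times)


if __name__ == "__main__":
    pt = [0.0, 0.0, 1.0, 2.0]  # on the big diagonal, but off the total one
    print(on_big_time_diagonal(pt), separating_partition(pt))  # True ([0, 1], [2, 3])
```

On such a point the kernel factorizes as in the decomposition used in Step 3, so that only the total time diagonal requires an extension.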

A map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) satisfying the properties listed in Theorem 3.4 is the main ingredient for introducing a deformation of the pointwise product allowing the class of functional-valued distributions to encode the stochastic behavior induced by the white noise. This is codified in the algebra introduced in the following theorem, whose proof we omit since, being completely algebraic, it is analogous to that of [11, Cor. 3.3].

Remark 3.5

We observe that the deformation map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) introduced above is similar to the inverse of the one used to define Wick ordering, see, e.g., [2, Sec.2].
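Remark 3.5 can be made concrete in a toy model that we introduce only for illustration. For a single complex Gaussian variable z with \(E[z\overline{z}]=q\) and \(E[z^2]=0\), Wick ordering acts on polynomials in \(z,\overline{z}\) as \(e^{-q\,\partial _z\partial _{\overline{z}}}\) and its inverse as \(e^{q\,\partial _z\partial _{\overline{z}}}\), so that \(z^m\overline{z}^n=\sum _{k=0}^{m\wedge n}k!\binom{m}{k}\binom{n}{k}q^k\,{:}z^{m-k}\overline{z}^{n-k}{:}\). The finite sum up to \(m\wedge n\) is reminiscent of the expansion of \(\Gamma _{\cdot _Q}^{\mathbb {C}}(\Phi ^{m+1}{\overline{\Phi }}^{m^\prime })\) in Step 3 of the proof of Theorem 3.4, with the number q in place of the renormalized \(Q_{2k}\); this analogy is ours, and the sketch does not reproduce the combinatorial coefficients of Eq. (3.3). The Python code below represents polynomials as dictionaries \(\{(a,b):\text{coefficient}\}\) and checks, with exact rational arithmetic, that the two maps are mutually inverse.

```python
from fractions import Fraction
from math import comb, factorial

Poly = dict[tuple[int, int], Fraction]  # {(a, b): coefficient of z^a conj(z)^b}


def exp_laplacian(m: int, n: int, s: Fraction) -> Poly:
    """exp(s * d/dz d/dconj(z)) applied to the monomial z^m conj(z)^n."""
    return {(m - k, n - k): factorial(k) * comb(m, k) * comb(n, k) * s**k
            for k in range(min(m, n) + 1)}


def wick(m: int, n: int, q: Fraction) -> Poly:
    """:z^m conj(z)^n: written in ordinary monomials."""
    return exp_laplacian(m, n, -q)


def unwick(m: int, n: int, q: Fraction) -> Poly:
    """z^m conj(z)^n written in Wick monomials (the inverse map)."""
    return exp_laplacian(m, n, q)


def substitute(p: Poly, q: Fraction) -> Poly:
    """Read the keys of p as Wick monomials and expand them into ordinary ones."""
    out: Poly = {}
    for (a, b), c in p.items():
        for mono, d in wick(a, b, q).items():
            out[mono] = out.get(mono, Fraction(0)) + c * d
    return {mono: c for mono, c in out.items() if c != 0}


if __name__ == "__main__":
    q = Fraction(3, 4)
    print(wick(2, 2, q))                   # coefficients of z^2 zb^2 - 4q z zb + 2q^2
    print(substitute(unwick(2, 2, q), q))  # only the monomial (2, 2) survives
    # Only the constant Wick term has non-zero expectation: E[z^2 conj(z)^2] = 2 q^2.
    print(unwick(2, 2, q)[(0, 0)])
```

In this toy model the expectation value of a monomial is the constant term of its expansion in Wick monomials, which is the mechanism Example 3.3 exploits.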

Theorem 3.6

Let \(\Gamma _{\cdot _Q}^{\mathbb {C}}:{\mathcal {A}}^{\mathbb {C}}\rightarrow {\mathcal {D}}^\prime _C ({\mathbb {R}}^{d+1};\textrm{Pol}_{\mathbb {C}})\) be the linear map introduced via Theorem 3.4. Moreover, let us set \({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}:=\Gamma _{\cdot _Q}^{\mathbb {C}}({\mathcal {A}}^{\mathbb {C}})\). In addition, let

Then, \(({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q},\cdot _{\Gamma _{\cdot _Q}^{\mathbb {C}}})\) is a unital, commutative and associative \({\mathbb {C}}\)-algebra.

Uniqueness. The main result of the previous section consists of the existence of a map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) satisfying the conditions of Theorem 3.4 and which, through a renormalization procedure, codifies at an algebraic level the stochastic properties of \(\Psi \) induced by the complex white noise \(\xi \). In the following we shall argue that the map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) is not unique and, to this end, it is convenient to focus on Eq. (3.10) and in particular on \(\widehat{G\cdot {\overline{G}}}\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\). As discussed above, this is an extension of \(G\cdot {\overline{G}}\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1}\setminus \Lambda _t^2)\). Due to [11, Thm. B.8] and [11, Rmk. B.9], such an extension need not be unique, depending on the dimension \(d\in {\mathbb {N}}\) of the underlying space.

In spite of this, we can draw some conclusions on the relation between different prescriptions for the deformation map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) and in turn for the associated algebras \({\mathcal {A}}_{\cdot _Q}^{\mathbb {C}}\). Results in this direction are analogous to those stated in [11, Thm. 5.2]. Hence mutatis mutandis we adapt them to the complex scenario. Once more we do not give detailed proofs, since these can be readily obtained from the counterparts in [11]. The following two results characterize completely the arbitrariness in choosing the linear map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\), as well as the ensuing link between the deformed algebras.

Theorem 3.7

Let \(\Gamma _{\cdot _Q}^{\mathbb {C}},\,{\Gamma _{\cdot _Q}^{\mathbb {C}}}':{\mathcal {A}}^{\mathbb {C}}\rightarrow {\mathcal {D}}^\prime _C ({\mathbb {R}}^{d+1};\textrm{Pol}_{\mathbb {C}})\) be two linear maps satisfying the requirements listed in Theorem 3.4. There exists a family \(\{C_{\ell ,\ell ^\prime }\}_{\ell ,\ell ^\prime \in {\mathbb {N}}_0}\) of linear maps \(C_{\ell ,\ell '}:{\mathcal {A}}^{\mathbb {C}}\rightarrow {\mathcal {M}}_{\ell ,\ell '}\) satisfying the following properties:

- for all \(\ell ,\ell '\in {\mathbb {N}}_0\) and for all \(m, m'\in {\mathbb {N}}\) such that either \(m\le \ell +1\) or \(m'\le \ell '+1\), it holds

$$\begin{aligned} C_{\ell ,\ell '}[{\mathcal {M}}_{m,m'}]=0\,; \end{aligned}$$

- for all \(\ell ,\ell '\in {\mathbb {N}}_0\) and for all \(\tau \in {\mathcal {A}}^{\mathbb {C}}\), it holds

$$\begin{aligned} C_{\ell ,\ell '}[G\circledast \tau ]=G\circledast C_{\ell ,\ell '}[\tau ]\,,\qquad C_{\ell ,\ell '}[{\overline{G}}\circledast \tau ]={\overline{G}}\circledast C_{\ell ,\ell '}[\tau ]\,; \end{aligned}$$

- for all \(\ell ,\ell '\in {\mathbb {N}}\) and for all \(\zeta \in {\mathcal {E}}({\mathbb {R}}^{d+1};{\mathbb {C}})\), it holds

$$\begin{aligned} \delta _\zeta \circ C_{\ell ,\ell '}=C_{\ell -1,\ell '}\circ \delta _\zeta \,,\qquad \delta _{{\overline{\zeta }}}\circ C_{\ell ,\ell '}=C_{\ell ,\ell '-1}\circ \delta _{{\overline{\zeta }}}\,; \end{aligned}$$

- for all \(\tau \in {\mathcal {M}}_{m,m'}\), it holds

$$\begin{aligned} {\Gamma _{\cdot _Q}^{\mathbb {C}}}'(\tau )=\Gamma _{\cdot _Q}^{\mathbb {C}}\bigl (\tau +C_{m-1,m'-1}(\tau )\bigr ). \end{aligned}$$

Corollary 3.8

Under the hypotheses of Theorem 3.7, the algebras \({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}=\Gamma _{\cdot _Q}^{\mathbb {C}}({\mathcal {A}}^{\mathbb {C}})\) and \({{\mathcal {A}}^{\mathbb {C}}}'_{\cdot _Q}={\Gamma _{\cdot _Q}^{\mathbb {C}}}'({\mathcal {A}}^{\mathbb {C}})\), defined as per Theorem 3.6, are isomorphic.

4 The Algebra \({\mathcal {A}}^{{\mathbb {C}}}_{\bullet _Q}\)

The construction of Sect. 3 allows one to compute the expectation values of the stochastic distributions appearing in the perturbative expansion of the stochastic nonlinear Schrödinger equation, as we shall discuss in Sect. 5.

Alas, this falls short of characterizing, again at a perturbative level, the stochastic behavior of the perturbative solution of Eq. (1.3), since it does not allow the computation of multi-local correlation functions, which are nonetheless of great relevance in applications.

As with expectation values, correlation functions can also be obtained through a deformation procedure, applied to a suitable non-local algebra constructed out of \({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\). Before moving to the main result of this section, we introduce the necessary notation.

As a starting point, we need a multi-local counterpart for the space of polynomial functional-valued distributions, namely

We are also interested in

As anticipated, we encode the information on correlation functions through a deformed algebra structure induced over \( {\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\). The main ingredient is the bi-distribution \(Q\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) we introduced in Sect. 3, which is the 2-point correlation function of the stochastic convolution \(G\circledast \xi \). Starting from Q we construct the following product \(\bullet _Q\): for any \(\tau _1\in {\mathcal {D}}^\prime _C(({\mathbb {R}}^{d+1})^{\ell _1};\textsf{Pol}_{{\mathbb {C}}})\) and \(\tau _2\in {\mathcal {D}}^\prime _C(({\mathbb {R}}^{d+1})^{\ell _2};\textsf{Pol}_{{\mathbb {C}}})\), for any \(f_1\in {\mathcal {D}}(({\mathbb {R}}^{d+1})^{\ell _1})\), \(f_2\in {\mathcal {D}}(({\mathbb {R}}^{d+1})^{\ell _2})\) and for all \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1})\)

Here, \(\tilde{\otimes }\) codifies a particular permutation of the arguments of the tensor product as in Eq. (3.4).
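The identification of \(Q\) with the two-point function of \(G\circledast \xi \) can be illustrated in a finite-dimensional toy model, which is an assumption of this sketch and not part of the construction above: spacetime is replaced by two grid points, the kernel \(G\) by a small complex matrix (the hypothetical `G_TOY`), and \(\xi \) by independent circularly symmetric complex Gaussians with \({\mathbb {E}}[\xi _k{\overline{\xi }}_l]=\delta _{kl}\) and \({\mathbb {E}}[\xi _k\xi _l]=0\). In this discrete setting \(Q=GG^\dagger \), the analogue of the formal expression \(Q(x,y)=\int G(x,z)\overline{G(y,z)}\,dz\) for a white noise with this normalization. The Monte Carlo estimate below checks that \({\mathbb {E}}[\varphi _a{\overline{\varphi }}_b]\approx Q_{ab}\), while \({\mathbb {E}}[\varphi _a\varphi _b]\approx 0\).

```python
import random

# Hypothetical 2x2 "causal" kernel standing in for G (toy assumption only).
G_TOY = [[1.0, 0.0],
         [0.5j, 1.0]]


def complex_white_noise(n, rng):
    """Circularly symmetric complex Gaussians: E[xi conj(xi)] = 1, E[xi xi] = 0."""
    s = 0.5 ** 0.5  # real and imaginary parts each have variance 1/2
    return [complex(rng.gauss(0.0, s), rng.gauss(0.0, s)) for _ in range(n)]


def convolve(G, xi):
    """Discrete analogue of the stochastic convolution G ⊛ xi."""
    return [sum(g * x for g, x in zip(row, xi)) for row in G]


def covariance_Q(G):
    """Q_ab = sum_k G_ak conj(G_bk), i.e. Q = G G^dagger."""
    return [[sum(ga * gb.conjugate() for ga, gb in zip(row_a, row_b))
             for row_b in G] for row_a in G]


def empirical_moments(G, samples, seed=0):
    """Monte Carlo estimates of E[phi_a conj(phi_b)] and E[phi_a phi_b]."""
    rng = random.Random(seed)
    n, k = len(G), len(G[0])
    mixed = [[0j] * n for _ in range(n)]  # accumulates phi_a * conj(phi_b)
    plain = [[0j] * n for _ in range(n)]  # accumulates phi_a * phi_b
    for _ in range(samples):
        phi = convolve(G, complex_white_noise(k, rng))
        for a in range(n):
            for b in range(n):
                mixed[a][b] += phi[a] * phi[b].conjugate()
                plain[a][b] += phi[a] * phi[b]
    scale = 1.0 / samples
    return ([[v * scale for v in row] for row in mixed],
            [[v * scale for v in row] for row in plain])
```

Calling `empirical_moments(G_TOY, samples=50_000, seed=1)` should reproduce `covariance_Q(G_TOY)` for the mixed moments up to a Monte Carlo error shrinking roughly like \(N^{-1/2}\), with the unconjugated moments close to zero. Plain Python loops are used for self-containedness rather than speed.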

Theorem 4.1

Let \({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}=\Gamma _{\cdot _Q}^{\mathbb {C}}({\mathcal {A}}^{\mathbb {C}})\) be the deformed algebra defined in Theorem 3.6 and let us consider the space \( {\mathcal {T}}_{C}^{'\scriptscriptstyle {\mathbb {C}}}({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) introduced in Eq. (4.1), as well as the universal tensor module \({\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\) defined via Eq. (4.2). Then, there exists a linear map \(\Gamma _{\bullet _Q}^{\mathbb {C}}:{\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}} ({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\rightarrow {\mathcal {T}}_{C}^{'\scriptscriptstyle {\mathbb {C}}}({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) satisfying the following properties:

1. For all \(\tau _1,\ldots ,\tau _n\in {\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\) with \(\tau _1\in \Gamma _{\cdot _Q}^{\mathbb {C}}({\mathcal {M}}_{1,0})\) or \(\tau _1\in \Gamma _{\cdot _Q}^{\mathbb {C}}({\mathcal {M}}_{0,1})\), see Remark 2.6, it holds

$$\begin{aligned} \Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes \tau _n):=\tau _1\bullet _Q\Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _2\otimes \ldots \otimes \tau _n), \end{aligned}$$(4.4)

where \(\bullet _Q\) is the product defined via Eq. (4.3).

2. For any \(\tau _1,\ldots ,\tau _n\in {\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\), \(\eta ,\eta ^\prime \in {\mathcal {E}}({\mathbb {R}}^{d+1};{\mathbb {C}})\) and \(f_1,\ldots ,f_n\in {\mathcal {D}}({\mathbb {R}}^{d+1};{\mathbb {C}})\) such that there exists \(I \subsetneq \{1,\ldots ,n\}\) for which

$$\begin{aligned} \bigcup _{i\in I}\text {supp}(f_i)\cap \bigcup _{j\notin I}\text {supp}(f_j)=\emptyset , \end{aligned}$$

it holds

$$\begin{aligned}{} & {} \Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes \tau _n)(f_1\otimes \ldots \otimes f_n;\eta ,\eta ^\prime )\\{} & {} \quad =\left[ \Gamma _{\bullet _Q}^{\mathbb {C}} \left( \bigotimes _{i\in I}\tau _{i}\right) \bullet _Q \Gamma _{\bullet _Q}^{\mathbb {C}} \left( \bigotimes _{j\notin I}\tau _{j}\right) \right] (f_1\otimes \ldots \otimes f_n;\eta ,\eta ^\prime ). \end{aligned}$$

3. Denoting by \(\delta _\zeta ,\delta _{{\overline{\zeta }}}\) the functional derivatives in the direction of \(\zeta ,{\overline{\zeta }}\in {\mathcal {E}}({\mathbb {R}}^{d+1};{\mathbb {C}})\), \(\Gamma _{\bullet _Q}^{\mathbb {C}}\) satisfies the following identities:

$$\begin{aligned} \Gamma _{\bullet _Q}^{\mathbb {C}}(\tau )&=\tau \,,\quad \forall \tau \in {\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}, \end{aligned}$$(4.5)

$$\begin{aligned} \Gamma _{\bullet _Q}^{\mathbb {C}}\circ \delta _{\zeta }&=\delta _\zeta \circ \Gamma _{\bullet _Q}^{\mathbb {C}}\,,\quad \Gamma _{\bullet _Q}^{\mathbb {C}}\circ \delta _{{\overline{\zeta }}}=\delta _{{\overline{\zeta }}}\circ \Gamma _{\bullet _Q}^{\mathbb {C}}\,, \quad \forall \zeta \in {\mathcal {E}}({\mathbb {R}}^{d+1};{\mathbb {C}})\,, \end{aligned}$$(4.6)

$$\begin{aligned} \begin{aligned}&\Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes G\circledast \tau _i\otimes \ldots \otimes \tau _n)\\&\quad =(\delta _{\text {Diag}_2}^{\otimes (i-1)}\otimes G\otimes \delta _{\text {Diag}_2}^{\otimes (n-i)})\circledast \Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes \tau _i\otimes \ldots \otimes \tau _n), \end{aligned} \end{aligned}$$(4.7)

$$\begin{aligned} \begin{aligned}&\Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes {\overline{G}}\circledast \tau _i\otimes \ldots \otimes \tau _n)\\&\quad =(\delta _{\text {Diag}_2}^{\otimes (i-1)}\otimes {\overline{G}}\otimes \delta _{\text {Diag}_2}^{\otimes (n-i)})\circledast \Gamma _{\bullet _Q}^{\mathbb {C}}(\tau _1\otimes \ldots \otimes \tau _i\otimes \ldots \otimes \tau _n), \end{aligned} \end{aligned}$$(4.8)

for all \(\tau _1,\ldots ,\tau _n\in {\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\), \(n\in {\mathbb {N}}_0\).

As in Sect. 3, a map \(\Gamma _{\bullet _Q}^{\mathbb {C}}\) with these properties can be used to induce an algebra structure.

Theorem 4.2

Let \(\Gamma _{\bullet _Q}^{\mathbb {C}}:{\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\rightarrow {\mathcal {T}}_C^{'\scriptscriptstyle {\mathbb {C}}}({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) be a linear map satisfying the constraints of Theorem 4.1 and let us define

Furthermore, let us consider the bilinear map \(\bullet _{\Gamma _{\bullet _Q}^{\mathbb {C}}}:{\mathcal {A}}^{\mathbb {C}}_{\bullet _Q} \times {\mathcal {A}}^{\mathbb {C}}_{\bullet _Q}\rightarrow {\mathcal {A}}^{\mathbb {C}}_{\bullet _Q}\)

Then, \(({\mathcal {A}}^{\mathbb {C}}_{\bullet _Q},\bullet _{\Gamma _{\bullet _Q}^{\mathbb {C}}})\) identifies a unital, commutative and associative algebra.

Remark 4.3

For the sake of brevity, we omit the proofs of Theorems 4.1 and 4.2 since, barring minor modifications, they follow the same lines as those outlined in [11, Sec. 4].

Example 4.4

To better grasp the rôle played by this deformation of the algebraic structure, in complete analogy with Example 3.3, we compare the two-point correlation function of the random field \(\varphi \), defined via Eq. (3.2), with its functional counterpart

which, evaluated at the configurations \(\eta =\eta ^\prime =0\), yields \((\Phi \bullet _Q{\overline{\Phi }})(f_1\otimes f_2;0,0)=Q(f_1\otimes f_2)\equiv \omega _2(f_1\otimes f_2)\).

Note that we could have also computed the correlation function of the random field \(\varphi \) with itself, obtaining zero. More generally, the two-point correlation function of a polynomial expression of \(\varphi \) and \({\overline{\varphi }}\) with itself turns out to be trivial, while the one of the expression and its complex conjugate contains relevant stochastic information, as shown in Sect. 5.
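The contrast between the correlation of \(\varphi \) with itself and with \({\overline{\varphi }}\) reflects the pairing structure of circularly symmetric complex Gaussian variables. As a hedged finite-dimensional illustration, assume a centred Gaussian vector with \({\mathbb {E}}[\varphi _a{\overline{\varphi }}_b]=Q_{ab}\) and \({\mathbb {E}}[\varphi _a\varphi _b]=0\), a toy stand-in for the smeared field and not the functional calculus of Theorem 4.2. The complex Wick (Isserlis) theorem then expresses every moment as a sum over pairings of each \(\varphi \) with a \({\overline{\varphi }}\); the hypothetical helper `complex_gaussian_moment` below implements it as a permanent. In particular, the product of a monomial \(\varphi ^a{\overline{\varphi }}^b\) with itself contains \(2a\) factors \(\varphi \) and \(2b\) factors \({\overline{\varphi }}\), so its expectation vanishes whenever \(a\ne b\), whereas pairing it with its complex conjugate always balances the two counts.

```python
from itertools import permutations
from math import prod


def complex_gaussian_moment(Q, holo, anti):
    """E[prod_{i in holo} phi_i * prod_{j in anti} conj(phi_j)] for a centred,
    circularly symmetric complex Gaussian vector (toy model) with
    E[phi_a conj(phi_b)] = Q[a][b] and E[phi_a phi_b] = 0.

    holo, anti: index sequences of the phi and conj(phi) factors.
    """
    if len(holo) != len(anti):
        # Each phi must be paired with a conj(phi): unbalanced moments vanish.
        return 0
    # Complex Wick theorem: sum over bijections holo -> anti (a permanent).
    return sum(prod(Q[i][anti[s]] for i, s in zip(holo, sigma))
               for sigma in permutations(range(len(anti))))


# Hypothetical toy covariance, e.g. Q = G G^dagger for a 2x2 kernel G.
Q_TOY = [[1.0, -0.5j],
         [0.5j, 1.25]]

# Two-point functions: <phi_0 conj(phi_1)> = Q_01, while <phi_0 phi_1> = 0.
two_point_mixed = complex_gaussian_moment(Q_TOY, (0,), (1,))
two_point_plain = complex_gaussian_moment(Q_TOY, (0, 1), ())

# Monomial p = phi_0^2 conj(phi_0):
# p * p = phi_0^4 conj(phi_0)^2 is unbalanced, p * conj(p) = |phi_0|^6.
p_with_p = complex_gaussian_moment(Q_TOY, (0, 0, 0, 0), (0, 0))
p_with_conj_p = complex_gaussian_moment(Q_TOY, (0, 0, 0), (0, 0, 0))
```

The permanent is evaluated by brute force over all \(k!\) bijections, which is adequate only for the handful of factors used here.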

As for the deformation map \(\Gamma _{\cdot _Q}^{\mathbb {C}}\) and the algebra \({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\), the renormalization procedure exploited in the construction of \(\Gamma _{\bullet _Q}^{\mathbb {C}}\) raises the question of uniqueness for \(\Gamma _{\bullet _Q}^{\mathbb {C}}\) and for the algebra \({\mathcal {A}}^{\mathbb {C}}_{\bullet _Q}\). The following theorem addresses this issue. Once more, we omit a detailed proof, since it can be readily obtained from its counterpart in [11].

Theorem 4.5

Let \(\Gamma _{\bullet _Q}^{{\mathbb {C}}},\,{\Gamma _{\bullet _Q}^{{\mathbb {C}}}}': {\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\rightarrow {\mathcal {T}}^{'\scriptscriptstyle {\mathbb {C}}}_C({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) be two linear maps satisfying the requirements listed in Theorem 4.1. Then, there exists a family \(\{C_{\underline{m},\underline{m}'}\}_{\underline{m},\underline{m}'\in {\mathbb {N}}_0^{{\mathbb {N}}_0}}\) of linear maps \(C_{\underline{m},\underline{m}'}:{\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\rightarrow {\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\), the space \({\mathcal {T}}^{\scriptscriptstyle {\mathbb {C}}}({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})\) being defined via Eq. (4.2), satisfying the following properties:

1. For all \(j\in {\mathbb {N}}_0\), it holds

$$\begin{aligned} C_{\underline{m},\,\underline{m}'}[({\mathcal {A}}^{\mathbb {C}}_{\cdot _Q})^{\otimes j}]\subseteq {\mathcal {M}}_{m_1,m_1'}\otimes \ldots \otimes {\mathcal {M}}_{m_j,m_j'}, \end{aligned}$$

while, if either \(m_i\le l_i-1\) or \(m'_i\le l'_i-1\) for some \(i\in \{1,\ldots , j\}\), then

$$\begin{aligned} C_{\underline{l},\,\underline{l}'}[{\mathcal {M}}_{m_1,m_1'}\otimes \ldots \otimes {\mathcal {M}}_{m_j,m'_j}]=0. \end{aligned}$$

2. For all \(j\in {\mathbb {N}}_0\) and \(u_1,\ldots , u_j \in {\mathcal {A}}^{\mathbb {C}}_{\cdot _Q}\), the following identities hold true:

$$\begin{aligned} \begin{aligned} C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots&\otimes G\circledast u_k\otimes \ldots \otimes u_j]\\&=(\delta _{\text {Diag}_2}^{\otimes (k-1)}\otimes G\otimes \delta _{\text {Diag}_2}^{\otimes (j-k)})\circledast C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots \otimes u_j], \end{aligned} \\ \begin{aligned} C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots&\otimes {\overline{G}}\circledast u_k\otimes \ldots \otimes u_j]\\&=(\delta _{\text {Diag}_2}^{\otimes (k-1)}\otimes {\overline{G}}\otimes \delta _{\text {Diag}_2}^{\otimes (j-k)})\circledast C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots \otimes u_j], \end{aligned} \\ \delta _\psi C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots \otimes u_j]=\sum _{a=1}^jC_{\underline{m}(a),\,\underline{m}'}[u_1\otimes \ldots \otimes \delta _\psi u_a\otimes \ldots \otimes u_j], \\ \delta _{{\overline{\psi }}} C_{\underline{m},\,\underline{m}'}[u_1\otimes \ldots \otimes u_j]=\sum _{a=1}^jC_{\underline{m},\,\underline{m}'(a)}[u_1\otimes \ldots \otimes \delta _{{\overline{\psi }}} u_a\otimes \ldots \otimes u_j], \end{aligned}$$

where \(\underline{m}(a)_i=m_i\) if \(i\ne a\) and \(\underline{m}(a)_a=m_a-1\) (analogously for \(\underline{m}'(a)\)), while \(\delta _\psi \), \(\delta _{{\overline{\psi }}}\) are the directional derivatives with respect to \(\psi ,{\overline{\psi }}\in {\mathcal {E}}({\mathbb {R}}^{d+1};{\mathbb {C}})\), see Definition 2.2. Here \(G\in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) is the fundamental solution of the Schrödinger operator, see Eq. (1.6).

3. Let us consider two linear maps \(\Gamma _{\cdot _Q}^{\mathbb {C}},\, {\Gamma _{\cdot _Q}^{\mathbb {C}}}':{\mathcal {A}}^{\mathbb {C}}\rightarrow {\mathcal {D}}'_C ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) compatible with the constraints of Theorem 3.4. For all \(u_{m_1,m'_1},\ldots , u_{m_j,m'_j}\in {\mathcal {A}}^{\mathbb {C}}\) with \(u_{m_i,m'_i}\in {\mathcal {M}}_{m_i,m'_i}\) for all \(i\in \{1,\ldots ,j\}\) and for \(f_1,\ldots ,f_j\in {\mathcal {D}}({\mathbb {R}}^{d+1})\) it holds

$$\begin{aligned} \begin{aligned}&{\Gamma _{\bullet _Q}^{\mathbb {C}}}'({\Gamma _{\cdot _Q}^{\mathbb {C}}}^{'\,\otimes j}(u_{m_1,m'_1}\otimes \ldots \otimes u_{m_j,m'_j}))(f_1\otimes \ldots \otimes f_j)\\&\quad =\Gamma _{\bullet _Q}^{\mathbb {C}}({\Gamma _{\cdot _Q}^{\mathbb {C}}}^{\otimes j}(u_{m_1,m_1'}\otimes \ldots \otimes u_{m_j,m'_j}))(f_1\otimes \ldots \otimes f_j)\\&\qquad +\sum _{{\mathcal {G}}\in {\mathcal {P}}(1,\ldots ,j)}\Gamma _{\bullet _Q}^{\mathbb {C}} \left[ {\Gamma _{\cdot _Q}^{\mathbb {C}}}^{\otimes \vert {\mathcal {G}}\vert }C_{\underline{m}_{{\mathcal {G}}}, \,\underline{m}'_{{\mathcal {G}}}}\left( \bigotimes _{I\in {\mathcal {G}}}\prod _{i\in I}u_{m_i,\,m'_i}\right) \right] \left( \bigotimes _{I\in {\mathcal {G}}}\prod _{i\in I}f_i\right) . \end{aligned} \end{aligned}$$

Here, \({\mathcal {P}}(1,\ldots ,j)\) denotes the set of all possible partitions of \(\{1,\ldots ,j\}\) into non-empty disjoint subsets, while \(\underline{m}_{{\mathcal {G}}}=(m_I)_{I\in {\mathcal {G}}}\), where \(m_I{:}{=}\sum _{i\in I}m_i\), and analogously \(\underline{m}'_{{\mathcal {G}}}=(m'_I)_{I\in {\mathcal {G}}}\) with \(m'_I{:}{=}\sum _{i\in I}m'_i\).
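The counterterm sum in item 3 runs over the set \({\mathcal {P}}(1,\ldots ,j)\) of partitions of \(\{1,\ldots ,j\}\), each block \(I\) carrying the degree \(m_I=\sum _{i\in I}m_i\) and, by analogy, \(m'_I=\sum _{i\in I}m'_i\). The sketch below, with hypothetical helper names and toy degrees, only enumerates this bookkeeping; it does not construct the maps \(C_{\underline{m}_{{\mathcal {G}}},\underline{m}'_{{\mathcal {G}}}}\) themselves. The number of partitions is given by the Bell numbers 1, 2, 5, 15, 52, … for \(j=1,\ldots ,5\), which shows how quickly the number of terms grows with \(j\).

```python
def set_partitions(elements):
    """Yield every partition of the list `elements` into non-empty disjoint blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # Either add `first` to one of the existing blocks ...
        for k in range(len(partition)):
            yield partition[:k] + [[first] + partition[k]] + partition[k + 1:]
        # ... or let it form a new singleton block.
        yield [[first]] + partition


def block_degrees(partition, m, m_prime):
    """Return (m_G, m'_G), where m_I is the sum of m_i over the block I (same for m')."""
    m_G = tuple(sum(m[i] for i in block) for block in partition)
    m_prime_G = tuple(sum(m_prime[i] for i in block) for block in partition)
    return m_G, m_prime_G


# Hypothetical degrees (m_i, m'_i) of the u_{m_i, m'_i}, for j = 3.
m = {1: 1, 2: 0, 3: 2}
m_prime = {1: 0, 2: 1, 3: 1}
terms = [(p, block_degrees(p, m, m_prime)) for p in set_partitions([1, 2, 3])]
```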

5 Perturbative Analysis of the Nonlinear Schrödinger Dynamics

In the following, we apply the framework devised in Sects. 3 and 4 to the study of the stochastic nonlinear Schrödinger equation, namely Eq. (1.3), where for simplicity we set \(\kappa =1\).

The first step consists of translating the equation of interest into a functional formalism, replacing the unknown random distribution \(\psi \) with a polynomial functional-valued distribution \(\Psi \in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) as per Definition 2.2. Recalling that \(G\in {\mathcal {D}}'({\mathbb {R}}^{d+1}\times {\mathbb {R}}^{d+1})\) denotes the fundamental solution of the Schrödinger operator, see Eq. (1.6), the integral form of Eq. (1.3) reads

where \(\Phi \in {\mathcal {D}}^\prime ({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\) is the functional defined in Example 2.4.

Since Eq. (5.1) cannot be solved exactly, we rely on a perturbative analysis: we expand the solution as a formal power series in the coupling constant \(\lambda \in {\mathbb {R}}_{+}\) with coefficients lying in \({\mathcal {A}}^{{\mathbb {C}}}\subset {\mathcal {D}}^\prime _C({\mathbb {R}}^{d+1};\textsf{Pol}_{{\mathbb {C}}})\), see Definition 2.5: