Abstract

Computer science programs have seen surges in enrollment, with an approximately 130% increase in new bachelor’s students between 2009 and 2016 according to the Computing Research Association’s Taulbee Survey. Most programs have not been able to hire tenure/tenure-track faculty at the same rate as enrollment has grown. This study evaluates the Flip-Flop methodology, an instructional approach that can improve student learning outcomes. Stelovska, Stelovsky, and Wu introduced this strategy, which builds on the flipped classroom model: they added the Flop after the Flip to increase student engagement with course content through participation in developing assessment material. Students create quiz questions for the video lectures they watch outside of class. They can also augment the activity by writing feedback for questions and adding media to the video to better illustrate concepts. The pilot study produced promising results, including a statistically significant increase in task performance and greater engagement with passive homework assignments, such as watching videos to prepare for in-class sessions.

Keywords

- Computer science education

- Flip-Flop

- Flipped classroom

- Constructive learning

- Constructivist learning

- Instructional videos

1 Introduction

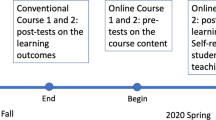

Enrollment in higher education computer science programs has been increasing rapidly since 2009 [1,2,3]. According to the Computing Research Association’s Taulbee Survey [4], the number of new computer science (CS) bachelor’s students increased from 11,685 in 2009 to 26,919 in 2016. This surge creates the challenge of educating a large number of students with minimal increases in funding. Many departments have had to “tighten their belts” in response, because the number of tenure-track faculty is increasing at one-tenth the rate of the number of majors [1] (Fig. 1).
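The approximately 130% increase quoted in the abstract follows directly from these two Taulbee counts. The short Python sketch below simply reproduces that arithmetic as a relative change, (new - old) / old; the helper name `percent_increase` is hypothetical and introduced only for illustration.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from `old` to `new`, expressed as a percentage."""
    return (new - old) / old * 100.0


# New CS bachelor's students reported by the CRA Taulbee Survey [4].
students_2009 = 11_685
students_2016 = 26_919

increase = percent_increase(students_2009, students_2016)
print(f"{increase:.1f}% increase from 2009 to 2016")  # prints: 130.4% increase from 2009 to 2016
```

Rounded to the nearest whole number, this is the "approximately 130%" figure used in the abstract.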

1.1 Responses to Enrollment Surges

Universities typically responded to enrollment growth by increasing class sizes and the number of sections [3]. Since many computer science programs were not able to increase their tenure-track faculty at the same rate as their student population, they hired adjunct and visiting faculty to cover the additional classes. Some also shifted to a teaching assistant (TA) model that relied on advanced undergraduate students rather than graduate students to decrease costs. These responses raised concerns among tenure/tenure-track faculty about issues such as sufficient teaching assistant support and equitable workload.

Increased enrollment also brought additional challenges: lower student diversity and attrition [2]. Cerf and Johnson discuss the following approaches to combat decreased diversity and attrition:

1. Consider student interests when designing assignments

2. Provide consistent feedback

3. Ensure teaching assistants are aware of faculty pedagogy

4. Promote intellectual capacity building (studying to increase CS capacity)

5. Inform students about conferences and groups, including targeted support groups

6. Give positive reinforcement to students, because many have perceptions of themselves that do not match their performance

7. Be accessible

8. Promote the formation of student groups

The suggested strategies are powerful methods for improving retention rates. Aligning closed-ended assignments with a wide range of student interests can be challenging. For example, requiring students to develop a video game can be motivating for those interested in this area, but not for others. Open-ended assignments, on the other hand, create additional challenges for students, such as selecting an area and ensuring that they incorporate the learning objectives into their programs, and they increase the assessment time per student. Providing consistent feedback helps students learn from their mistakes but can be difficult in large courses with hundreds of students. For classes like these, TAs are vital to the feedback process. Working with teaching assistants to implement consistent pedagogy can create a synergistic learning environment. Training TAs and following up with them consistently is possible, but it is complicated by the range of student TAs and their varying experience in these roles. Promoting intellectual capacity treats programming skill like a muscle that must be trained to improve. CS faculty have implemented this idea successfully as an athletic approach to software engineering (AthSE) [6]. Informing students about conferences and targeted support groups can help cultivate a sense of community, which helps students understand real-world applications of the concepts they learn and develop goals. Positive reinforcement gives students a sense of assurance and increases their self-confidence.

Being accessible to students involves both face-to-face contact and digital communication tools such as email. For many students, accessibility is judged by how quickly instructors respond through online tools. Forming student groups has benefits similar to those of attending conferences and support groups, because groups develop a sense of community. Student groups have the advantage of members’ proximity to one another, while professionals in organizations and at conferences can better help students develop long-term goals and understand how their education aligns with possible jobs.

Although these strategies have strong benefits, it can be difficult to embed them deeply in large-enrollment instruction. Since many CS units increased class sizes to absorb the influx of students, it is critical to identify instructional strategies that promote student development. A recent strategy called Flip-Flop, developed by Stelovska et al. [5], includes features that can support student development in larger classes.

1.2 Flip-Flop Methodology

Stelovska et al. [5] discuss the possibility of using their strategy in classes as large as massive open online courses. Flip-Flop was originally based on the flipped classroom model, which shifts traditional in-class activities such as lectures to homework completed in preparation for in-class sessions [7]. The in-class sessions are then used as work sessions in which students solve problems and interact with their teachers and peers. Students work with their peers to construct knowledge and receive support as needed, as opposed to completing assignments at home without immediate support.

Flip-Flop extends the flipped classroom model by adding a second component, referred to as Flop. Flop targets how students interact with lecture-based content while reviewing it in preparation for class. Stelovska et al. focused on flipped content in the form of video lectures. They posited that students may not be able to stay focused on a video lecture for its entire duration. Therefore, they required students to develop multiple-choice questions with time stamps to embed in the video. By developing questions, students may gain a deeper understanding of underlying concepts. Developing appropriate distractors (incorrect answers) is also part of the challenge and helps students identify possible misconceptions. Students also had the opportunity to compose hints and feedback for each distractor and for the correct response. Writing feedback for each incorrect response is similar to offering feedback to a student who makes a mistake in class. Their Flip-Flop development tool also allowed students to incorporate opinion polls and pinboards within each video lecture. Pinboards allowed students to mark points in the video where interspersed content, such as text describing an alternative approach or an image illustrating a point, could improve understanding of the material.
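The question format described above can be made concrete with a small data model. The Python sketch below represents one student-authored question as a time stamp into the video, a stem, exactly one correct option, one or more distractors, optional per-option feedback, and an optional hint. It also converts time stamps written as mm:ss (the form used in the study's own instructions, e.g. 10:23) into seconds. The names `FlopQuestion`, `Option`, and `parse_timestamp` are hypothetical; this is not the data format of Stelovska et al.'s Flip-Flop tool.

```python
from dataclasses import dataclass, field


def parse_timestamp(stamp: str) -> int:
    """Convert a video time stamp such as '10:23' or '1:02:03' to seconds."""
    parts = [int(p) for p in stamp.split(":")]
    if not 2 <= len(parts) <= 3 or any(p < 0 for p in parts):
        raise ValueError(f"bad time stamp: {stamp!r}")
    if any(p >= 60 for p in parts[1:]):
        raise ValueError(f"minutes/seconds out of range: {stamp!r}")
    seconds = 0
    for p in parts:
        seconds = seconds * 60 + p  # fold hours, minutes, seconds into one count
    return seconds


@dataclass
class Option:
    text: str
    correct: bool = False
    feedback: str = ""  # shown to a viewer who picks this option


@dataclass
class FlopQuestion:
    """Hypothetical model of one student-authored, time-stamped question."""
    timestamp: str  # where the question appears in the video
    stem: str
    options: list[Option] = field(default_factory=list)
    hint: str = ""
    seconds: int = field(init=False, default=0)

    def __post_init__(self) -> None:
        # Validate the time stamp, then require exactly one correct answer
        # plus at least one distractor.
        self.seconds = parse_timestamp(self.timestamp)
        if sum(o.correct for o in self.options) != 1:
            raise ValueError("a question needs exactly one correct option")
        if len(self.options) < 2:
            raise ValueError("a question needs at least one distractor")

    @property
    def distractors(self) -> list[Option]:
        return [o for o in self.options if not o.correct]


# Usage with placeholder text (not a question from the study).
q = FlopQuestion(
    timestamp="10:23",
    stem="<question about the concept explained at 10:23>",
    options=[
        Option("<correct answer>", correct=True, feedback="<why it is right>"),
        Option("<plausible distractor>", feedback="<misconception it targets>"),
    ],
    hint="<nudge toward the key idea>",
)
print(q.seconds, len(q.distractors))  # prints: 623 1
```

Storing the time stamp as seconds is one plausible design choice: it lets a video player seek directly to the point a question refers to, which mirrors how the experimental group later used time stamps to replay difficult passages.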

The final major component of Flip-Flop is peer evaluation. Students reviewed their peers’ questions, answers, and hints. This component helped students consider each question’s instructional effectiveness by examining its individual parts. Stelovska et al. believed that increased interaction through video lecture augmentation would improve students’ engagement with content and increase learning.

The theoretical components of the Flip-Flop methodology complement strategies used to improve retention rates. Of the approaches on Cerf and Johnson’s list, Flip-Flop can support students by providing consistent feedback and positive reinforcement, through peer review of the questions they develop and through instructor/TA interactions in class sessions. Since the technique is embedded in the course, TAs would likely also be well aware of the instructional strategy and able to support students both in class and during question development.

Duran also discussed the increased proficiency that results when students participate in the instructional process [8]. Although students enrolled in a course cannot be fully responsible for its instruction, they can take part in certain areas to improve learning. Duran discussed several ways to include students in instruction: helping develop educational materials, participating in lectures, using cooperative learning techniques, peer tutoring, peer assessment, and serving as co-teachers. Flip-Flop incorporates several of these strategies, including development of educational materials, participation in lectures, cooperative learning, and peer assessment.

Even though Flip-Flop incorporates strategies to improve academic achievement, others question the benefit of its foundation: multiple-choice questions. Shuhidan, Hamilton, and D’Souza discussed the issues of multiple-choice assessment [9]. They indicated that many multiple-choice questions assess lower-level learning, such as factual knowledge, and may not be optimal for promoting higher-level thinking. This perspective raises an important question: whether creating multiple-choice questions aids in developing conceptual knowledge.

1.3 Research Questions

The researcher was intrigued by the possibilities of the Flip-Flop methodology, since it incorporates many features that can improve learning and could scale with class size. Prior to implementing it fully in a live setting, the researcher conducted a pilot study to determine its initial effectiveness with students. The researcher posed the following questions:

1. Does Flip-Flop Methodology increase students’ task performance compared to traditional homework assignments?

2. How does Flip-Flop Methodology impact students’ approach to studying?

2 Methods

The research questions were addressed in three phases: (1) data collection tools and procedures, (2) participant recruitment, and (3) analysis.

2.1 Setting

Prior to discussing the three phases, it is important to understand the context of the study. The researcher previously taught a computer science service course that used a hybrid approach combining traditional lectures and online video lectures. Students met in the lecture hall once a week for a 75-min lecture. Afterward, they watched an approximately 25-min online video developed by the faculty member and completed a 10-question quiz based on the video content. This structure repeated each week with different topics, such as search sets, logic, financial functions, social computing, security, and information management. Students also met in the laboratory with teaching assistants twice a week for hands-on application of the topics covered in the lectures.

2.2 Phase 1: Data Collection Tools and Procedures

To determine the effectiveness of the Flip-Flop approach, the researcher used an experimental design comparing it with the original approach. The control group watched the video and completed the quiz, while the experimental group watched the video and developed questions with time stamps. The dependent variable was the score on a 5-question quiz based on the final examination questions. A replica of the social computing online lecture was used for the experiment. Two course sites were developed in the Laulima course management system (the University of Hawaii’s implementation of Sakai). The control site (see Fig. 2) emulated a typical week of class: students were asked to watch the video, take notes as if they were enrolled in the class, and complete the 10-question quiz (20 min in duration). After completing the 10-question quiz, students completed a 5-question quiz consisting of the final examination questions. Their specific instructions were:

- Step 1: Watch the video below and take notes as you would if you were taking ICS 101.

- Step 2: Complete the quiz “PART 1 Lecture Quiz (Social Computing)” [20 min in duration]. You may refer to your notes. The password to begin the quiz is part1.

- Step 3: Complete the quiz “PART 2 Lecture Quiz v2 (Social Computing)” [10 min in duration]. You may NOT refer to your notes or the video during this quiz. The password to begin the quiz is part2.

The experimental site (see Fig. 3) asked students to watch the video and develop 5 questions with time stamps. After creating the 5 questions, they submitted their file to the site and completed the 5-question quiz based on the final examination questions. Their instructions were:

- Step 1: Watch the video below and take notes as you would if you were taking ICS 101.

- Step 2: Develop and write 5 multiple choice questions in a MS Word document. Include the time stamp where you developed the question (e.g. 10:23). You may refer to your notes and the video. Questions must include the correct and incorrect responses. Mark the correct answers with yellow highlight in your document. Save your file with the following naming convention YourName_SocialComputing.docx where YourName is your first and last names (e.g. MichaelOgawa_SocialComputing.docx). Submit your file to the digital dropbox.

- Step 3: Complete the quiz “Lecture Quiz v2 (Social Computing)” [10 min in duration]. You may NOT refer to your notes or the video during this quiz. The password to begin the quiz is lecture.

After the experiment, the researcher conducted two focus group interviews with the participants to determine whether the Flip-Flop approach changed the students’ study habits. The focus group interviews used a semi-structured format to allow the conversation to flow naturally based on participants’ insights. The following open-ended questions served as the interview guide:

- How did you take notes during the video?

- How did you study for the quizzes?

2.3 Phase 2: Participant Recruitment

The researcher invited current students who were enrolled in at least one upper-division CS course to participate in the study. Students enrolled in CS classes were offered extra credit for their participation. A total of 14 students participated and were randomly assigned to the control or experimental group.
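The study reports only that the 14 participants were randomly assigned; the group sizes and the exact assignment procedure are not stated. One simple way to carry out such an assignment, sketched below under the assumption of equal-sized groups, is to shuffle the participant list with a seeded random number generator and split it in half. The participant IDs, the seed, and the function name `assign_groups` are hypothetical.

```python
import random
from typing import Optional


def assign_groups(participants: list[str], seed: Optional[int] = None) -> dict[str, list[str]]:
    """Randomly split participants into control and experimental groups.

    Assumes equal-sized groups; with an odd count, the experimental group
    receives the extra participant.
    """
    rng = random.Random(seed)  # seeded so the split can be reproduced
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}


# 14 hypothetical anonymized participant IDs, P01..P14.
ids = [f"P{i:02d}" for i in range(1, 15)]
groups = assign_groups(ids, seed=2018)
print({name: len(members) for name, members in groups.items()})
# prints: {'control': 7, 'experimental': 7}
```

Seeding the generator is a design choice that lets the assignment be audited or reproduced later; omitting the seed gives a fresh split on every run.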

2.4 Phase 3: Analysis

To answer the first research question, an analysis of variance (ANOVA) of the final examination question scores was used to determine whether the groups’ scores differed and whether the difference was statistically significant. To answer the second research question, the researcher coded the focus group interview data to identify themes in participants’ study habits.

2.5 Limitations

Two limitations are apparent in the study: (1) the small data set and (2) the examination of only two of the seven components of Flip-Flop. Since this is a pilot study, there were only 14 participants. Thus, the results are not generalizable to larger audiences but can serve as a starting point for research in this area. The research questions addressed the question development and distractor development components of the approach. Additional studies will be needed to examine the remaining components of Flip-Flop.

3 Results

3.1 RQ1: Task Performance

The researcher conducted a univariate analysis of variance to determine whether the scores of the control and experimental groups differed and whether the difference was significant. The ANOVA is summarized in Table 1.

With the final exam percentage scores as the dependent variable, the analysis found a statistically significant difference between the groups (F = 6.25, p < .05). The mean score was 80% for the control group and 94% for the experimental group.
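The F statistic is reported without degrees of freedom or an exact p-value. Assuming a one-way ANOVA with two groups and 14 participants, the degrees of freedom are 1 between groups and 12 within groups, regardless of how the participants were split between the groups (which is not reported). The standard-library Python sketch below computes a one-way ANOVA F statistic from raw scores and an upper-tail p-value for the F distribution, which can be used to check the reported result under those assumptions. It is not the researcher's analysis script; in practice a statistics package (for example, `scipy.stats.f_oneway`) would normally be used.

```python
import math


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    scores = [x for g in groups for x in g]
    grand_mean = sum(scores) / len(scores)
    means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def f_upper_tail_p(f: float, d1: int, d2: int, steps: int = 20_000) -> float:
    """P(F > f) for F ~ F(d1, d2), with d2 >= 2.

    Uses P(F > f) = I_x(d2/2, d1/2) with x = d2 / (d2 + d1*f), where I is the
    regularized incomplete beta function. The integral is evaluated with
    Simpson's rule and the beta function via math.lgamma.
    """
    if f <= 0:
        return 1.0
    if d2 < 2 or steps % 2:
        raise ValueError("need d2 >= 2 and an even number of steps")
    a, b = d2 / 2, d1 / 2
    x = d2 / (d2 + d1 * f)

    def integrand(t: float) -> float:
        return t ** (a - 1) * (1 - t) ** (b - 1)

    h = x / steps
    total = integrand(0.0) + integrand(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)  # Simpson weights 4, 2, 4, ...
    beta = math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))
    return (total * h / 3) / beta


# Reported F with assumed df: 2 groups and 14 participants give (1, 12).
p = f_upper_tail_p(6.25, d1=1, d2=12)
print(f"p = {p:.3f}")  # prints: p = 0.028
```

Under these assumptions, F(1, 12) = 6.25 corresponds to p ≈ .028, consistent with the reported p < .05. Because the design has only two groups, this test is equivalent to a pooled two-sample t-test with t = √6.25 = 2.5.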

3.2 RQ2: Study Approach

Four themes emerged in the control group’s (traditional approach) descriptions of their study habits. They focused their notes on facts and definitions, copied bulleted lists from slides, scrubbed the video for slides with text (using the navigation slider to jump to a section of the video), and multi-tasked while watching the video. Several participants noted that they had the video playing in one window while their email was open in another. These students stopped working on their email when they heard or saw an important point to write down as a study note.

The experimental group (Flip-Flop approach) indicated that they also took factual notes. However, they also attempted to understand concepts and developed notes with illustrations to aid in question development (see Fig. 4). Since they were required to note the time for each question, their notes were more detailed and included time stamps next to facts and concepts rather than content alone. This helped them revisit difficult concepts, because they knew where in the video to replay content instead of having to search for it. The Flip-Flop group also stayed focused on the task, because preparing questions required an active approach to note-taking. Most of the experimental group had difficulty creating appropriate distractors for their questions; they noted that writing distractors took more time than writing the questions. The themes for both groups are summarized in Table 2.

4 Discussion

4.1 Implications for Practice

Practitioners should consider using Flip-Flop methodology in their courses because of its benefits for task performance and engagement. Those already using a flipped classroom approach can implement Flip-Flop with minimal adjustments to their teaching methods by adding a question development task to the activities completed outside of class. This task could either augment or replace other tasks.

I caution educators to review their content to determine whether this method would work well for their objectives. In this study, the lecture video included factual and conceptual information and did not develop a practical skill. If the objective of an out-of-class video is to develop a practical skill rather than conceptual understanding, other types of practice problems may be more beneficial.

4.2 Implications for Future Research

Several possibilities for future research emerged from the study. The study was a pilot test of the Flip-Flop method with 14 participants. Increasing the number of participants and testing the method in a live course could improve the reliability of the findings. Additional themes regarding students’ study habits may also emerge with a larger data set, although open-ended surveys would likely be needed to collect data from that many participants.

The content assessed in the study came from a service course that was not targeted at CS majors. It will be important to assess the approach in a CS1 or CS2 course to determine whether it improves grades in these content areas.

Flip-Flop methodology includes several components: (1) creating questions, (2) appropriate distractors, (3) hints, (4) feedback, (5) polls, (6) pinboards, and (7) peer evaluation. The current study focused on the foundational components of question development and distractors. It would be valuable to evaluate the remaining components to determine their benefits and best practices.

5 Conclusion

In the pilot study, Flip-Flop methodology increased students’ engagement and task performance with video lectures. Students reported note-taking approaches that targeted concepts and focused on the content rather than multi-tasking. This strategy could be implemented in lower-level CS courses, where enrollment numbers tend to be higher. Including a scalable approach like Flip-Flop may improve student retention and achievement. The author believes that one of the most valuable aspects of Flip-Flop methodology is that, although Stelovsky’s software complements the approach, the underlying concepts work well on their own.

References

1. Guzdial, M.: ‘Generation CS’ drives growth in enrollments: undergraduates who understand the importance of computer science have been expanding the CS student cohort for more than a decade. Commun. ACM 60(7), 10–11 (2017)

2. Cerf, V.G., Johnson, M.: Enrollments explode! But diversity students are leaving. Commun. ACM 59(4), 7 (2016)

3. Fisher, L.M.: Booming enrollments. Commun. ACM 59(7), 17–18 (2016)

4. The CRA Taulbee Survey. https://cra.org/resources/taulbee-survey/. Accessed 28 Feb 2017

5. Stelovska, U., Stelovsky, J., Wu, J.: Constructive learning using Flip-Flop methodology: learning by making quizzes synchronized with video recording of lectures. In: Zaphiris, P., Ioannou, A. (eds.) LCT 2016. LNCS, vol. 9753, pp. 70–81. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-39483-1_7

6. Johnson, P., Port, D., Hill, E.: An athletics approach to software engineering education. IEEE Softw. 33(1), 97–100 (2015)

7. Hsu, T.: Behavioural sequential analysis of using an instant response application to enhance peer interactions in a flipped classroom. Interact. Learn. Environ. 26(1), 91–105 (2018)

8. Duran, D.: Learning-by-teaching. Evidence and implications as a pedagogical mechanism. Innov. Educ. Teach. Int. 54(5), 476–484 (2017)

9. Shuhidan, S., Hamilton, M., D’Souza, D.: Instructor perspectives of multiple-choice questions in summative assessment for novice programmers. Comput. Sci. Educ. 20(3), 229–259 (2010)

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Ogawa, MB. (2018). Evaluation of Flip-Flop Learning Methodology. In: Zaphiris, P., Ioannou, A. (eds) Learning and Collaboration Technologies. Learning and Teaching. LCT 2018. Lecture Notes in Computer Science(), vol 10925. Springer, Cham. https://doi.org/10.1007/978-3-319-91152-6_27

DOI: https://doi.org/10.1007/978-3-319-91152-6_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91151-9

Online ISBN: 978-3-319-91152-6

eBook Packages: Computer Science, Computer Science (R0)