Abstract

Robust cell detection in histopathological images is a crucial step in the computer-assisted diagnosis methods. In addition, recent studies show that subtypes play an significant role in better characterization of tumor growth and outcome prediction. In this paper, we propose a novel subtype cell detection method with an accelerated deep convolution neural network. The proposed method not only detects cells but also gives subtype cell classification for the detected cells. Based on the subtype cell detection results, we extract subtype cell related features and use them in survival prediction. We demonstrate that our proposed method has excellent subtype cell detection performance and our proposed subtype cell features can achieve more accurate survival prediction.

This work was partially supported by U.S. NSF IIS-1423056, CMMI-1434401, CNS-1405985.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Analysis of microscopy images is very popular in modern cell biology and medicine. In microscopic image analysis for computer-assisted diagnosis methods, automatic cell detection is the basis. However, this task is challenging due to (1) cell clumping and background clutter, (2) large variation in the shape and size of cells, (3) time consuming because of the high resolution in the histopathological images.

To solve these problems, Arteta proposes a general non-overlapping extremal regions selection (NERS) method [1], which achieves the state-of-the-art cell detection performance. Recently, to fully exploit the hierarchical discriminative features learned from deep neural networks, especially deep convolution neural networks (DCNN), many DCNN-based cell detection methods [7, 8, 11] are proposed. These methods regard DCNN as a two-class classifier to detect cells in a pixel-wise way.

Recent studies show that different cell types (tumor cells, stromal cells, lymphocytes) play different roles in tumor growth and metastasis, and accurately classifying cell types is a critical step to better characterization of tumor growth and outcome predictions [2, 9, 13]. However, to the best of our knowledge, there is no existing automatic microscopic subtype cell analysis method with DCNN.

In this paper, we propose a subtype cell detection method with accelerated deep convolution neural network. Our contributions are summarized as three parts: (1) Our work proposes a subtype cell detection method, which can detect cells in the histopathological images and give subtype cell information of the detected cells at the same time. To the best of our knowledge, this is the first study to report subtype cell detection using DCNN. (2) We introduce the d-regularly sparse kernels [6] to our method to elimination all the redundant computation and to speed up the detection process. (3) A new set of features based on the subtype cell detection results are extracted and used to give more accurate survival prediction.

2 Methodology

Our approach for subtype cell detection is to detect cells in histopathological images and give the subtype information of detected cells at the same time. To accomplish this, cell patches extracted according to their annotations are used to train two partially shared-weighted DCNN models for classification: one for cell/non-cell classification, the other for subtype cell classification. Then we apply sparse kernels in the two DCNN models to eliminate all the redundant computations so that detection of cells and subtypes for a tile can be done in one round. Then we integrate the two DCNN models into one subtype cell detection model for subtype cell detection. After that, we extract subtype cell features from the subtype cell detection results.

2.1 Training Two DCNNs for Classification

Given two sets of training data: the cell and non-cell patches \(\left\{ (x_c^i, y_c^i) \in (\mathcal {X}_c, \mathcal {Y}_c)\right\} _{i=1}^{\mathcal {N}_c}\) and subtype cell patches \(\left\{ (x_s^i, y_s^i) \in (\mathcal {X}_s, \mathcal {Y}_s)\right\} _{i=1}^{\mathcal {N}_s}\), we use them to train two deep convolution neural networks via

and

where L is the loss function, \(H_c\) and \(H_s\) are the outputs for cell/non-cell DCNN and subtype DCNN, \(\theta _{c1} \dots \theta _{cL}\), and \(\theta _{s1} \dots \theta _{sL}\) are the weights of layers indexed from 1 to L in these two DCNN models.

Since the training dataset \((\mathcal {X}_s, \mathcal {Y}_s)\) are for subtype cell patches and in real world application, it is harder to manually annotate the subtypes of cells, it is common that we have less subtype cell patches for training than cell/noncell patches, i.e. \(\mathcal {X}_s \subset \mathcal {X}_c\). In DCNN, the convolution layer and pooling layer operate together to extract hierarchical features from training images and the fully-connected layers and loss function layer mainly work for solving the classification task. To avoid the insufficiency and imbalance of subtype cell patches, meanwhile use the better convolution features learned from more cell/non-cell patch images, we use all convolution layer feature learned from Eq. (1) and keep them from changing when training the subtype DCNN model via optimization in equation Eq. (2). Suppose that all the convolution related layers are indexed from 1 to \(j-1\), then Eq. (2) becomes

We keep the weights in convolution layers unchanged in the whole DCNN training process. Thus, by Eqs. (1) and (3), we train our two DCNNs for cell/non-cell classification and subtype cell classification.

2.2 Accelerated Detection with d-Regularly Sparse Kernel

The traditional pixel-wise detection method requires the patch-by-patch sliding window scanning for every pixel in the image. It sequentially and independently takes cell patches as the inputs of DCNN model and the forward propagation is repeated for all the local pixel patches. However, this strategy is time consuming due to the fact that there exists a lot of redundant convolution operations among adjacent patches.

To eliminate the redundant convolution computation, we introduce the d-regularly sparse kernel technique [6] for convolution, pooling and fully-connected layers in our DCNN models. The d-sparse kernels are created by inserting all-zero rows and columns into the original kernels to make every two original neighboring entries d-pixel away. We apply the d-sparse kernel for all the original convolution, pooling and fully-connected layers.

After applying d-regularly sparse kernel into our model, our model can take a tile as input for subtype cell detection in one run instead of computing one patch at each time. In our subtype cell detection model and experiments, we have three subtype cells: lymphocyte, stromal cell and tumor cell, so the subtype cell DCNN model is a three-class classification model. The network structure used for our model is the same as basic LeNet [5] (Two convolution-pooling combinations and then two fully-connected layers) with input patch size as \(40 \times 40\) for training and \(551 \times 551\) for testing after padding.

2.3 Subtype Cell Detection

Our subtype cell detection model is shown in Fig. 1. Since we apply the d-regularly sparse kernel into our model, the tile image can be taken as the input of our model for processing at each time. After the shared convolution and pooling operations, we have two branches according to each DCNN that have been trained. The above branch is for cell detection. After the softmax layer, we have the cell probability of the tile image. The next operation can be any method which maps the probability map into the final detection result. In our model, we use the moment centroid based method to get our final cell detection result. The other branch is the DCNN for subtype cell classification. It gives the probability of all the subtypes for each pixel in the tile. In the end, the results of the two branches are merged by simply multiplying as the final subtype cell detection results.

2.4 Subtype Cell Features for Survival Prediction

According to recent studies, accurately classifying cell types is a critical step to better characterization of survival prediction. Thus, three groups of cellular features are extracted from our subtype cell detection result for survival prediction. These features, motivated by [12, 14], cover cell-level information (e.g., appearance and shapes) of individual subtype cells and also texture properties of background tissue regions.

Holistic Statistics: The four holistic statistics include overall information like the total area, perimeter, number and the corresponding ratio of each subtype cells. Geometry Features: Geometry properties including area, perimeter and so on are calculated from each detected subtype cell with its detection region in the prediction map. Zernike moments are also applied on each type of cells. When combine with different tiles, we calculate mean, median and std. of each feature. There are 564 features. Texture Features: This group of features contains Gabor “wavelet” features, Haralick, and granularity to measure texture properties of objects (e.g., cells and tissues), resulting in 1685 texture features.

3 Experiments

We evaluated our subtype cell detection model via two experiments: subtype cell detection and survival prediction with subtype cell features. All experiments are conduced on a workstation with Intel(R) Xeon(R) CPU E5-2620 v2 @ 2.10 GHz CPU, 32 gigabyte RAM and two NVIDIA Tesla K40c GPUs.

3.1 Subtype Cell Detection

The subtype cell detection performance of the proposed method is evaluated on the part of TCGA (The Cancer Genome Atlas) data portal. We use 300 \(512\times 512\) lung cancer tiles with subtype cell annotations as datasets, 270 for training and 30 for evaluation. All the tiles are annotated by a pathologist. For cell/noncell classification, we have 48562 patches for training and 5158 for testing. We have three subtypes cell in our dataset: lymphocytes, stromal cells and tumor cells. For subtype cell classification, we use 24281 patches (11671 lymphocytes, 10225 tumor cells and 2385 stromal cells) for training and 2579 patches (1218 lymphocytes, 1122 tumor cells and 239 stromal cells) for testing.

For cell/noncell detection, we compare our proposed method with NERS [1] and the robust lung cancer cell detection (RLCCD) method based on pixel-wise DCNN [8]. A detected cell centroid is considered to be a true positive (TP) sample if the circular area of radius 8 centered at the detected nuclei contains the ground-truth annotation; otherwise, it is considered as false positive (FP). Missed ground-truth dots are counted as false negatives (FN). The results are reported in terms of \(F_1\) score \(F_1 = 2PR/(P+R)\), where precision \(P=TP/(TP+FP)\) and recall \(R=TP/(TP+FN)\).

The average precision, recall, F1 score and time consuming on the testing tiles for the three methods are listed in Table 1. Among these methods, the proposed has higher precision, recall and F1 score. It demonstrates that the proposed method can ensure excellent detection performance. Among the time consumings, the proposed method is the fastest method. By applying d-regularly sparse kernel, the proposed method is around 80 times faster than pixel wise detection with DCNN. Our convolution kernel sizes in both training model and d-regularly sparse kernel are much larger than those in RLCCD [8]. If the proposed method uses the same kernel settings as RLCCD, it will be much faster.

Our subtype cell classification accuracy on the testing set is 88.64 %. 2286 of 2579 subtype cells in the testing dataset has been detected. If one subtype ground truth corresponds to multiple detection results, we choose the nearest detection and set its subtype result as the final result. Then for all the subtype detection results, the accuracy is 87.18 %. The accuracies for lymphocytes, tumor cells and stromal cells are 88.05 %, 87.39 % and 81.08 % respectively. It demonstrates that our method achieves impressive subtype cell detection performance. In addition, we show one of the subtype cell detection results in Fig. 2 with red points for lymphocytes, yellow points for tumors and green ones for stromal cells. Obviously, our detection results are close to the ground truth. To the best of our knowledge, this is the first study to report subtype cell detection using fast DCNN. Then we extract features from the subtype cell detection results with the corresponding probability map for survival prediction in order to further evaluate our subtype cell detection performance in the next subsection.

3.2 Survival Prediction

For survival prediction, we focused on the widely used lung cancer dataset NLST (National Lung Screening Trial). The NLST dataset contains complete patients’ pathology images. We collect data from 144 adenocarcinoma (ADC) and 113 squamous cell carcinoma (SCC) patients. To examine whether the features extracted from subtype cell detection from our proposed and trained model can achieve better predictions than traditional imaging biomarkers, we evaluated with the state-of-the-arts framework in lung cancer [10] which doesn’t use the subtype cell features.

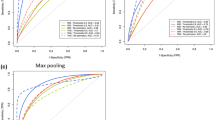

To test our proposed features, we randomly divided the whole NLST dataset into training (97 for ADC, 76 for SCC) and testing set (47 for ADC, 37 for SCC) and built multivariate Cox regression on the top 50 selected features for ADC and SCC, respectively. Figure 3 presents the predictive power on a partitioning into two groups on testing set ((a), (b) for ADC, (c), (d) for SCC). A significant difference (Wald-Test) in survival times can be seen in Fig. 3(a),(c). It demonstrates that our proposed features extracted from subtype cell detection results which cover subtype cell distributions and granularity are more associated with survival outcomes than traditional imaging biomarkers used in [10].

Kaplan-Meier survival curves of two groups on testing set. The x axis is the time in days and the y axis denotes the probability of overall survival. (a), (c) are from proposed framework while (b), (d) are from Wang’s method [10].

Then we randomly divide the whole set to 50 splits and use the concordance index (C-index) to show the prediction performances of two methods. The C-index is a nonparametric measurement to quantify the discriminatory power of a predictive model: 1 indicates perfect prediction accuracy and a C-index of 0.5 is as good as a random guess. Component-wise likelihood based boosting (CoxBoost) [3] and random survival forest (RSF) [4] are both applied as survival models on ADC and SCC cases. From Fig. 4, we can see the higher median C-index of the proposed method in both cases. This illustrates the robustness of the proposed subtype cell features since the subtype cell features are highly associated with tumor growth and survival outcomes.

4 Conclusion

In this paper, we propose a subtype cell detection method with an accelerated deep convolution neural network. The proposed method can detect the cells in the histological image and give the subtype cell information at the same time. By applying sparse kernel, the proposed method can detect subtype cells of the tile image in one round. We also present a set of features extracted from subtype cell detection results and use them in survival prediction to improve the prediction performance. Experimental results show that our proposed method can give good subtype cell detection and that the corresponding subtype features we extract are more associated with survival outcomes than traditional imaging biomarkers.

References

Arteta, C., Lempitsky, V., Noble, J.A., Zisserman, A.: Learning to detect cells using non-overlapping extremal regions. In: Ayache, N., Delingette, H., Golland, P., Mori, K. (eds.) MICCAI 2012. LNCS, vol. 7510, pp. 348–356. Springer, Heidelberg (2012). doi:10.1007/978-3-642-33415-3_43

Beck, A.H., Sangoi, A.R., Leung, S., Marinelli, R.J., Nielsen, T.O., van de Vijver, M.J., West, R.B., van de Rijn, M., Koller, D.: Systematic analysis of breast cancer morphology uncovers stromal features associated with survival. Sci. Transl. Med. 3, 108ra113 (2011)

Binder, H., Schumacher, M.: Allowing for mandatory covariates in boosting estimation of sparse high-dimensional survival models. BMC Bioinform. (2008)

Ishwaran, H., Kogalur, U.B., Blackstone, E.H., Lauer, M.S.: Random survival forests. Ann. Appl. Stat. 2(3), 841–860 (2008)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Li, H., Zhao, R., Wang, X.: Highly efficient forward and backward propagation of convolutional neural networks for pixelwise classification. arXiv preprint arXiv:1412.4526 (2014)

Liu, F., Yang, L.: A novel cell detection method using deep convolutional neural network and maximum-weight independent set. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 349–357. Springer, Heidelberg (2015). doi:10.1007/978-3-319-24574-4_42

Pan, H., Xu, Z., Huang, J.: An effective approach for robust lung cancer cell detection. In: Wu, G., Coupé, P., Zhan, Y., Munsell, B., Rueckert, D. (eds.) Patch-MI 2015. LNCS, vol. 9467, pp. 87–94. Springer, Heidelberg (2015). doi:10.1007/978-3-319-28194-0_11

Tabesh, A., Teverovskiy, M., Pang, H.Y., Kumar, V.P., Verbel, D., Kotsianti, A., Saidi, O.: Multifeature prostate cancer diagnosis and gleason grading of histological images. IEEE Trans. Med. Imaging 26(10), 1366–1378 (2007)

Wang, H., Xing, F., Su, H., Stromberg, A., Yang, L.: Novel image markers for non-small cell lung cancer classification and survival prediction. BMC Bioinform. 15, 310 (2014)

Xu, Z., Huang, J.: Efficient lung cancer cell detection with deep convolution neural network. In: Wu, G., Coupé, P., Zhan, Y., Munsell, B., Rueckert, D. (eds.) Patch-MI 2015. LNCS, vol. 9467, pp. 79–86. Springer, Heidelberg (2015). doi:10.1007/978-3-319-28194-0_10

Yao, J., Ganti, D., Luo, X., Xiao, G., Xie, Y., Yan, S., Huang, J.: Computer-assisted diagnosis of lung cancer using quantitative topology features. In: Zhou, L., Wang, L., Wang, Q., Shi, Y. (eds.) MLMI 2015. LNCS, vol. 9352, pp. 288–295. Springer, Heidelberg (2015). doi:10.1007/978-3-319-24888-2_35

Yuan, Y., Failmezger, H., Rueda, O.M., Ali, H.R., Gräf, S., Chin, S.F., Schwarz, R.F., Curtis, C., Dunning, M.J., Bardwell, H., et al.: Quantitative image analysis of cellular heterogeneity in breast tumors complements genomic profiling. Sci. Transl. Med. 4(157), 157ra143 (2012)

Zhu, X., Yao, J., Luo, X., Xiao, G., Xie, Y., Gazdar, A., Huang, J.: Lung cancer survival prediction from pathological images and genetic data - an integration study. In: IEEE ISBI, pp. 1173–1176, April 2016

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Wang, S., Yao, J., Xu, Z., Huang, J. (2016). Subtype Cell Detection with an Accelerated Deep Convolution Neural Network. In: Ourselin, S., Joskowicz, L., Sabuncu, M., Unal, G., Wells, W. (eds) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. MICCAI 2016. Lecture Notes in Computer Science(), vol 9901. Springer, Cham. https://doi.org/10.1007/978-3-319-46723-8_74

Download citation

DOI: https://doi.org/10.1007/978-3-319-46723-8_74

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46722-1

Online ISBN: 978-3-319-46723-8

eBook Packages: Computer ScienceComputer Science (R0)